Month: November 2019

Highlights From SEACON:UK 2019 – Enterprise Measurement, Enterprise Structuring for Outcomes

MMS • Douglas Talbot

Article originally posted on InfoQ. Visit InfoQ

Key themes that emerged from the SEACON:UK Study of Enterprise Agility this year were: Measuring success at enterprise level; Structuring your organisation for agility; The importance of culture in agility at scale; and the use of Cloud services in the enterprise.

Many of the case studies described how organisations shifted their focus to measuring Value and Outcomes, aligned their organisational structure to the matching value streams or products, and then instigated significant culture-change initiatives to support these new ways of working. This pattern resonated in talks from OVO, VW, and others, all available on the conference YouTube space.

SEACON:UK Study of Enterprise Agility is a conference that covers ideas, issues and insights into Enterprise Transformation, Entrepreneurial Leadership, Design, Agile and DevOps at scale. The 2019 edition was held on November 12th, 2019 at HereEast Innovation Centre (Plexal.com) in London. The event is organised and driven by Barry Chandler and is now in its third year. Senior leadership was well represented among both speakers and attendees. All the presentations are watchable here.

The main stage had key talks from Mik Kersten, Gabrielle Benefield & Tim Beattie, Simon Wardley, Jon Smart and Dan Terhorst-North.

Mik Kersten gave a talk introducing his recently published book Project to Product, explaining why enterprises need to avoid measurement based on activities and what he called the bad habit of ‘task ticking’. He recommended measurement focussed on value to the business, pointing out that wealth is not generated by project management or cost watching. Instead, he recommended a focus on product and value generation, and in his book he proposes a Flow Framework to describe how to make this move. This requires both organisational redesign and measuring success differently. (watch here)

Gabrielle Benefield, author, speaker and advisor, presented with Tim Beattie, Engagement Lead for Red Hat Open Innovation Labs. They also focussed on the theme of improving what we measure to drive organisational success. They said the key was to shift the focus of the organisation from outputs to outcomes and to create a culture of continuous improvement that allows the organisation to adapt as conditions change. (watch here)

Simon Wardley gave an introduction to Wardley mapping (watch here) demonstrating his method for mapping product / organisational strategy. Wardley mapping is growing in popularity as shown by the six hundred attendees at the MapCamp conference in October in London. Simon showed how the principles of mapping can allow insight for evaluation of organisational and product strategies. He also demonstrated ways in which an organisation could more clearly evaluate and measure the impact of business choices. To learn more, the Learn Wardley Mapping site is excellent.

Jon Smart, who leads on enterprise agility at Deloitte, spoke about working with enterprises to focus them on what they are really optimising for. He showed a model for measurement and delivery in the enterprise and the kinds of paradigms he sees that do and do not work. He advocated measuring the outcomes Better, Sooner, Safer and Happier. (watch here)

The final key talk was by Daniel Terhorst-North, who spoke about his latest case study at Jio, a very large startup in India, where he is using SWARMing, his model for the enterprise: ‘Scaling Without a Religious Methodology’. Dan is possibly best known as an originator of BDD (Behaviour Driven Development). (watch here)

MMS • Rags Srinivas

Article originally posted on InfoQ. Visit InfoQ

At the recently concluded KubeCon + CloudNativeCon 2019 conference in San Diego, attended by over 12,000 people, Helm, and in particular the release of Helm 3.0.0, stole the headlines.

Helm is a package manager for Kubernetes, akin to OS package managers such as yum, apt, and Homebrew, that makes it easier to install and manage packages and their dependencies. Helm’s history precedes the first KubeCon, as outlined in the Q&A with co-founder Matt Butcher. As a result, it had acquired some technical debt, including the controversial server-side component Tiller.

InfoQ caught up with Matt Fisher, software engineer at Microsoft and one of the maintainers of Helm and Draft, regarding the Helm 3.0.0 announcement.

Matt Fisher talks about the features of Helm 3.0.0, which is a major release, including why and how they overcame the technical debt, primarily related to tiller. He also talks about Helm’s commitment to backwards compatibility, which has always been important to the project. In this Q&A, he outlines a few key changes between Helm 2 and Helm 3.

InfoQ: Helm 3.0.0 is a significant release, correct? Before we get into tiller, can you provide a high level overview of the features in the new release?

Fisher: The most apparent change is the removal of Tiller.

Helm 3 uses a three-way merge patch strategy: Helm considers the previous release’s state, the “live” state, and the proposed state when generating a patch. Helm 2 used a two-way merge patch strategy.
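The difference between the two strategies can be illustrated with a toy model. The Python sketch below is not Helm's implementation; it assumes manifests are flat dictionaries, and the function names and the `DELETE` marker are hypothetical. It shows why considering the live state matters: a manual change made in the cluster is invisible to a two-way comparison but is picked up by a three-way one, while fields that exist only in the live state are left alone.

```python
DELETE = object()  # hypothetical marker meaning "remove this key"


def two_way_patch(previous, proposed):
    """Compare only the previous manifest with the proposed one."""
    patch = {}
    for key, value in proposed.items():
        if previous.get(key, DELETE) != value:
            patch[key] = value
    for key in previous:
        if key not in proposed:
            patch[key] = DELETE
    return patch


def three_way_patch(previous, live, proposed):
    """Also consider the live state when building the patch."""
    patch = {}
    # Anything the chart proposes that the cluster does not currently have.
    for key, value in proposed.items():
        if live.get(key, DELETE) != value:
            patch[key] = value
    # Remove only keys the chart used to own; keys that exist solely in the
    # live state (for example an injected sidecar) are not touched.
    for key in previous:
        if key not in proposed and key in live:
            patch[key] = DELETE
    return patch


previous = {"image": "nginx:1.16", "replicas": 3}
# Someone scaled the deployment down by hand and a sidecar was injected.
live = {"image": "nginx:1.16", "replicas": 0, "sidecar": "istio-proxy"}
proposed = {"image": "nginx:1.16", "replicas": 3}

print(two_way_patch(previous, proposed))          # nothing to change
print(three_way_patch(previous, live, proposed))  # restores replicas
```

In this toy example the two-way comparison sees identical manifests and does nothing, whereas the three-way comparison restores `replicas` and leaves the injected `sidecar` key in place.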

The release ledger for a release is now stored in the same namespace as the release itself. This means `helm install wordpress stable/wordpress` can be managed per-namespace.

Another change was the introduction of JSON Schemas, which can be imposed upon chart values. This ensures that values provided by the user follow the schema laid out by the chart maintainer, allowing users to catch errors earlier in the release process, and ensures that values are being provided as the chart maintainer expects.
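To make the idea of schema-checked values concrete, here is a minimal Python sketch. It is not Helm's validator: it handles only the `type`, `required` and `properties` keywords of JSON Schema, and the schema contents (`image`, `replicaCount`) are hypothetical examples of what a chart maintainer might declare.

```python
# Hypothetical schema; the property names are illustrative only.
SCHEMA = {
    "type": "object",
    "required": ["image", "replicaCount"],
    "properties": {
        "image": {"type": "string"},
        "replicaCount": {"type": "integer"},
    },
}

# Only a handful of JSON Schema types are covered in this sketch.
TYPES = {"object": dict, "string": str, "integer": int, "boolean": bool}


def validate(values, schema, path="values"):
    """Return a list of human-readable errors; an empty list means valid."""
    expected = schema.get("type")
    if expected is not None:
        matches = isinstance(values, TYPES[expected])
        # In Python, bool is a subclass of int; JSON Schema treats them apart.
        if expected == "integer" and isinstance(values, bool):
            matches = False
        if not matches:
            return [f"{path}: expected {expected}"]
    errors = []
    if isinstance(values, dict):
        for name in schema.get("required", []):
            if name not in values:
                errors.append(f"{path}.{name}: required value is missing")
        for name, subschema in schema.get("properties", {}).items():
            if name in values:
                errors.extend(validate(values[name], subschema, f"{path}.{name}"))
    return errors


print(validate({"image": "wordpress", "replicaCount": "two"}, SCHEMA))
```

The point of the sketch is the timing: a value such as `replicaCount: "two"` is rejected before anything is sent to the cluster, rather than surfacing later as a failed deployment.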

Some features have been deprecated or refactored in ways that make them incompatible with Helm 2.

`helm serve` was a useful tool when Helm 2 was first introduced, but other tools like chartmuseum have since superseded it, so `helm serve` was removed from the project.

Some new experimental features have also been introduced, including OCI support.

There are way more features available in this release, though. The FAQ in the documentation goes into more detail on what changed and why.

InfoQ: Let’s talk about tiller now. Can you briefly talk about what it is, why did you have to get rid of it and how did you get rid of it?

Fisher: It’s important to give a little background context here.

The first version of Helm (AKA Helm Classic) was introduced at the first KubeCon… Way back in November 2015. Kubernetes 1.1.1 was released earlier that month, and 1.0.0 shipped only 4 months prior to that in July 2015.

Back then, Kubernetes had no concept of a ConfigMap. ReplicationControllers were all the hype (remember those?). The Kubernetes API was changing rapidly.

When Helm 2 was being built, we needed an abstraction layer from the Kubernetes API to allow ourselves some room to guarantee backwards compatibility.

Tiller was created as that abstraction layer. It provided us with a layer where we could control the input (via gRPC), the output (also via gRPC), and provide some backwards compatibility guarantees to users.

We’re pretty proud of the fact that Helm has maintained a strong commitment to backwards compatibility since 2.0.0.

Over time, Kubernetes’ API layer has become more stable. Helm 3 is our opportunity to refactor out some of those protective layers we put in 4 years ago. Tiller being one of them.

InfoQ: Container security is front and center with respect to container adoption. What other security issues should developers and architects be aware of as they relate to Helm 3?

Fisher: A few weeks prior to Helm 3’s release, the CNCF funded Cure53 to perform a security audit of Helm 3. Cure53 has performed audits of other CNCF projects including Prometheus, Envoy, Jaeger, Notary, and others.

The entire report is worth reading as no summary can do it justice. Cure53 provided a conclusion as part of their summary which reads:

To conclude, in light of the findings stemming from this CNCF-funded project, Cure53 can only state that the Helm project projects the impression of being highly mature. This verdict is driven by a number of different factors described above and essentially means that Helm can be recommended for public deployment, particularly when properly configured and secured in accordance to recommendations specified by the development team.

Security audits are one of the benefits of CNCF projects and we are grateful for them and the analysis performed by Cure53. This analysis has provided some concrete areas we can work to improve and given us confidence in what we have.

InfoQ: Despite tools like helm2to3 there are breaking changes that would prevent migration from Helm 2 to Helm 3, correct? Would you summarize these changes and how to obviate the difficulty in the migration process? Also, would you recommend a mixed-mode development that uses both Helm 2 and Helm 3?

Fisher: Helm 3 is a major release. After years of maintaining backward compatibility with each minor and patch release of Helm 2, we have taken the opportunity to rewrite substantial parts of Helm for this release, and there are some backward compatibility breakages. Most of these changes relate to the storage backend in Kubernetes, or how we deal with certain CLI flags. Most of the work was wrapping our heads around how Helm 3 would look with Tiller being removed from the architecture.

Once that was complete, we needed to ensure a smooth migration path forward, which introduced the helm-2to3 plugin.

One of the big design goals we had in mind was to avoid making any breaking changes to charts. We wanted to ensure the community that has built their charts will not have to maintain two separate versions of the same chart: one that works for Helm 2, and one that works for Helm 3.

We’ve heard from community members adopting a strangler pattern for their clusters, where releases that were maintained with Helm 2 continue to use Helm 2, but new installations use Helm 3 going forward.

We strongly discourage users attempting to use Helm 2 and Helm 3 to manage the same release, though. It won’t work. The backend has seen some significant changes, and this is one of the places where the helm-2to3 migration tool comes in to assist with their migration efforts.

InfoQ: Can you briefly summarize the ecosystem that Helm plays in? In terms of tooling for Helm, what are the top tools that you recommend for developers and architects? Where are the major gaps?

Fisher: Helm is a package manager in the same line as apt, yum, homebrew, and others.

A package manager lets someone who has knowledge of how to run an application on a platform (e.g., postgresql on debian) package it up in a way that others who don’t have this knowledge can run an application without needing to learn it.

That’s why I can use `apt install` regularly without needing to know the business logic of running all those apps on my system.

Package managers let you compartmentalize and automate expertise.

In terms of tooling, you can’t go wrong with chartmuseum or Harbor for hosting your own chart repository. chart-releaser is another take on the chart repository API, relying on automation using Github Releases and Github Pages for hosting chart packages.

The Kubernetes extension for Visual Studio Code has some lovely visual tools for interacting with a Kubernetes cluster. I find myself using it more often for simple Kubernetes operations (like `kubectl edit`), or for when I’m trying to assemble a new Helm chart based on what’s currently installed in my cluster.

InfoQ: Finally, What’s the roadmap beyond Helm 3? Any other tips for those who have not used Helm or for those who want to contribute to the Helm community?

Fisher: Right now, we’re watching the dust settle and seeing where things land with the community. We are looking towards stability and enhancements to the existing feature set: moving experimental features (like the OCI registry integration) towards general availability and gathering feedback from users trying out the Go client libraries. Security fixes and patch releases are being scheduled as needed, of course.

For those who want to contribute, chatting with other community members in the #helm-users Kubernetes Slack channel is always a good first step. It’s also a good place to reach out if you’re hitting issues. I am constantly humbled by the community members who share their stories with others, or help others with certain issues they’ve seen before.

The core maintainers are also looking for feedback from community members integrating Helm (the CLI or the Go SDK) into their own projects. The #helm-dev Kubernetes Slack channel is great for reaching out to core maintainers about high-level topics.

In summary, more details of the Helm 3.0.0 release, which introduced some new features and got rid of the server component Tiller, are available at helm.sh.

More detailed documentation on Helm 3.0.0 is available in the docs. In addition, there were a number of sessions at the conference around Helm, including a Helm 3 Deep Dive.

MMS • Ben Linders Dimitris Andreadis

Article originally posted on InfoQ. Visit InfoQ

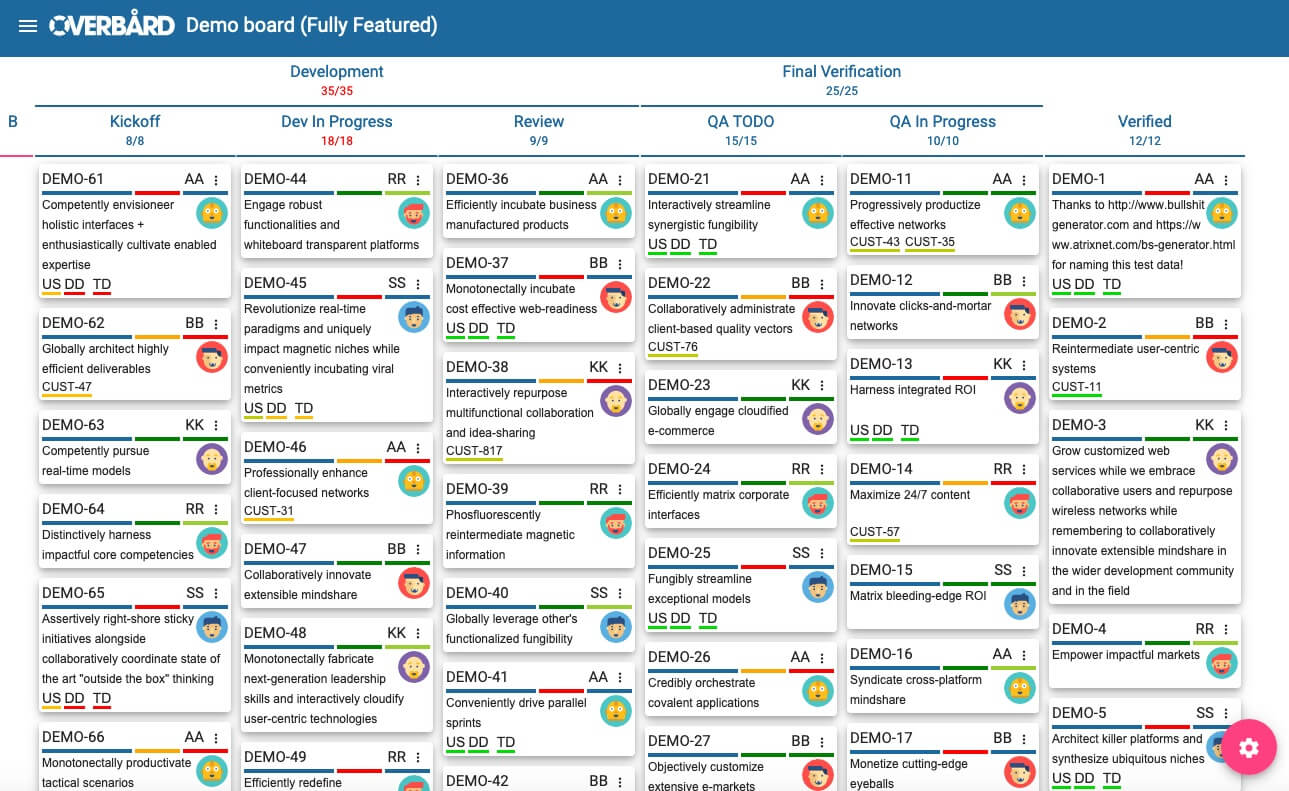

As planning the work for Red Hat JBoss EAP became harder and harder, Red Hat decided to adopt Kanban to make their development process more manageable, while maintaining a very high level of quality. They introduced Kanban in their distributed team and developed their own Jira add-on for visualizing the work, and added parallel tasks to their Kanban cards to simplify the workflow.

By Ben Linders, Dimitris Andreadis

MMS • Jan Stenberg

Article originally posted on InfoQ. Visit InfoQ

Organizations are moving more and more of their processes into software, Jay Kreps notes in a blog post, adding that businesses are increasingly defined in software: their core processes are specified and executed in software. To support this transition, he believes we have to move away from traditional databases and work with the concepts of events and event streams.

Kreps, CEO of Confluent and one of the co-creators of Apache Kafka, refers to an article by Marc Andreessen from 2011 where he described the world as being in the middle of a technological and economic shift with software companies taking over large parts of the economy. Kreps summarizes the article into the idea that any process that can be moved into software, will be.

Databases have been an extremely important part of application development, irrespective of how they store data, but Kreps notes that they follow a paradigm where data is passively stored, waiting for commands from an external party to read or modify it. Basically, these applications are CRUD-based, with business logic added on top of a process run by humans through a user interface (UI).

A problem with these CRUD style applications is that they commonly lead to an infrastructure with lots of ad-hoc solutions using messaging systems, ETL products and other techniques for integrating applications in order to pass data between them. Often code is written for specific integrations, and all this causes a mess of interconnections between applications in an organization.

To move from relying on humans working through a UI to a platform that can trigger actions and react to things happening in software, Kreps believes that the solution is events and event streams. They represent a new paradigm in which a system is built to support a flow of data through the business and to react in real time to the events occurring.

The core idea is that an event stream is a true record of what has happened. Any system or application can read the stream in real time and react to each event. Kreps compares this with a central nervous system, but for a software-defined company, and he points out that a digital organization needs the software equivalent of a nervous system to connect all its systems, applications, and processes.

For this to work, Kreps believes that we have to treat the streams of everything that is happening within an organization as data and enable continuous queries that process this data. In a traditional database, the data sits passively and an application or a user issues queries to retrieve data. In stream processing, this is inverted: data is an active and continuous stream of events and queries are passive, reacting to and processing the events in the stream as they arrive. Basically, this is a combination of data storage and processing in real time, and for Kreps a fundamental change in how applications are built.
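The inversion Kreps describes can be sketched in plain Python, without any streaming platform. In the example below the event shape (`type`, `customer`, `amount`) and the function name are assumptions made for illustration; a generator stands in for the continuous query, which sits passively and emits an updated result each time an event arrives.

```python
from collections import defaultdict


def running_totals(events):
    """A toy continuous query: for every order event that arrives,
    emit the customer's updated running total."""
    totals = defaultdict(int)
    for event in events:
        if event["type"] != "order_placed":
            continue  # the query only reacts to the events it cares about
        totals[event["customer"]] += event["amount"]
        yield event["customer"], totals[event["customer"]]


# A finite list stands in for an unbounded stream of events.
stream = iter([
    {"type": "order_placed", "customer": "ann", "amount": 10},
    {"type": "page_viewed", "customer": "bob"},
    {"type": "order_placed", "customer": "bob", "amount": 5},
    {"type": "order_placed", "customer": "ann", "amount": 7},
])

for customer, total in running_totals(stream):
    print(customer, total)
```

Unlike a database query, nothing here asks for the result; the result is recomputed and pushed out as a consequence of each new event.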

Other experts, however, believe there are benefits to using other types of messages besides events. In a presentation at this year’s Event-driven Microservices Conference in Amsterdam, Allard Buijze, CTO at AxonIQ, pointed out that services or applications communicate for different reasons. When only events are available, they also end up being used to indirectly request that something happen in another service. This often increases the coupling between services and can create a very entangled choreography. Therefore, besides events, Buijze thinks there is a need for two other types of messages: Commands, which represent an intent to change something, and Queries, which fulfil a need for information.
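The distinction between the three message types can be made explicit in code. The following Python sketch is only an illustration of the idea; the class names and the `OrderService` handler are hypothetical and are not taken from Axon or any other framework.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PlaceOrder:
    """Command: an intent to change something; it may be rejected."""
    order_id: str
    amount: int


@dataclass(frozen=True)
class OrderPlaced:
    """Event: a fact that has already happened."""
    order_id: str
    amount: int


@dataclass(frozen=True)
class GetOrderTotal:
    """Query: a request for information that changes nothing."""
    order_id: str


class OrderService:
    def __init__(self):
        self.events = []  # the record of what has happened

    def handle_command(self, command):
        # A command is validated first and can fail.
        if command.amount <= 0:
            raise ValueError("amount must be positive")
        event = OrderPlaced(command.order_id, command.amount)
        self.events.append(event)
        return event

    def handle_query(self, query):
        # A query only reads; it never appends events.
        return sum(e.amount for e in self.events if e.order_id == query.order_id)


service = OrderService()
service.handle_command(PlaceOrder("o1", 30))
print(service.handle_query(GetOrderTotal("o1")))
```

With separate types, a service that wants something done sends a command to the one service responsible, instead of publishing an event and hoping the right consumer reacts to it.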

In a joint meeting with people from Confluent and Camunda earlier this year, Bernd Rücker, co-founder of Camunda, argued that we should talk about record or message streams instead of event streams, and emphasized that the term used in the Kafka API is records, not events. For him, Kafka can be used for different types of messages, which include both events and commands. He also noted that when only events are used, it can be hard to get a picture of the overall flow from a business perspective, and referred to an article by Martin Fowler, who points out that although the event notification pattern can be useful, it also adds a risk of losing sight of the larger-scale flow.

MMS • Srini Penchikala

Article originally posted on InfoQ. Visit InfoQ

Eric Estabrooks from DataKitchen spoke at this year’s Data Architecture Summit 2019 Conference about how DevOps tasks should be managed for data architecture. DataOps is a collaborative data management practice and is emerging as an area of interest in the industry.

Similar to how DevOps resulted in a transformative improvement in software development and Lean has resulted in improvements in manufacturing, DataOps aims to create an agile data culture in organizations.

Currently, data teams at most organizations see a high number of errors in data management operations every month, involving factors like incorrect data, broken reports, late delivery, and customer complaints. Most companies are also slow to create new development environments and to deploy data analytic pipeline changes to production.

Estabrooks said that the hero mentality seen in some teams is actually career-ending and is bad for individual team members as well as for the team as a whole. Instead, he recommends that teams create repeatable processes for quality and predictable database builds and deployments. He referred to the book The Phoenix Project for best practices in the DevOps area.

A DataOps mindset change is needed to power an agile data culture; this includes moving from manual to automated operations and from a tool-centric to a code-centric approach, as well as building quality into product features from the earlier phases of the data management lifecycle.

Estabrooks discussed a seven-step process for diverse teams in organizations (data analysts, data scientists, and data engineers) to realize the DataOps transformation and deliver business value quickly and with high quality. The steps include the following:

- Orchestrate two journeys (orchestration of analytic processes and faster deployments to production)

- Add tests and monitoring

- Use a version control system

- Branch and merge (of any and all scripts related to the data lifecycle)

- Use multiple environments

- Reuse & containerize

- Parameterize your processing
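Two of the steps above, adding tests and parameterizing the processing, can be sketched in a few lines of Python. This is an illustrative example rather than anything shown in the talk: the function name, the row format and the `min_rows` threshold are all assumptions.

```python
def run_pipeline(rows, *, environment, min_rows=1):
    """Parameterized step: the same code runs in every environment,
    only the arguments change."""
    # Processing: drop rows that have no amount.
    cleaned = [row for row in rows if row.get("amount") is not None]

    # Data tests: fail loudly before anything is published.
    problems = []
    if len(cleaned) < min_rows:
        problems.append(f"expected at least {min_rows} rows, got {len(cleaned)}")
    if any(row["amount"] < 0 for row in cleaned):
        problems.append("negative amount found")
    if problems:
        raise ValueError(f"[{environment}] data tests failed: " + "; ".join(problems))

    return {"environment": environment, "total": sum(row["amount"] for row in cleaned)}


print(run_pipeline([{"amount": 5}, {"amount": None}, {"amount": 7}], environment="dev"))
```

Because the environment and thresholds are arguments rather than hard-coded values, the same script can be kept in version control, branched, and promoted unchanged from a development environment to production.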

He also explained the DataOps-centric architecture model and how it differs from a traditional canonical data architecture. A canonical data architecture considers only production, not the process of making changes to production. The DataOps data architecture, on the other hand, makes the steps for changing what’s in production a “central idea.” Teams think first about changes over time to their code, servers, and tools, and about monitoring for errors, as first-class citizens in the design.

He described the functional and physical perspectives of DataOps architecture, which include a DataOps platform consisting of separate components for storage, metadata, authentication, secrets management, and metrics.

Estabrooks concluded the presentation by listing the goals of DataOps architecture, which include updating and publishing changes to analytics within a short time without disrupting operations, discovery of data errors before they reach published analytics, and creating and publishing schema changes as frequently as every day.

MMS • Sergio De Simone

Article originally posted on InfoQ. Visit InfoQ

Apple’s latest iOS release, iOS 13, was affected by a number of bugs that drew disappointed reactions from users. In a story run by Bloomberg, sources familiar with Apple explained what went wrong in the iOS 13 release process and how Apple is aiming to fix this for the future.

In an internal meeting with software developers, writes Bloomberg, Apple top executives Craig Federighi and Stacey Lysik identified the instability of iOS daily builds as the main culprit for iOS 13 bugs. In short, Apple developers were pushing too many unfinished or buggy features to the daily builds. Since new features were active by default, regardless of their maturity level, testers had a hard time actually using their devices, which led to Apple’s buggy releases.

Now, releasing a new major version of an operating system, be it for the desktop or mobile devices, is a major endeavour that is often plagued by already known and unforeseen problems that early upgraders have to endure. Those include both ordinary users as well as developers trying to adapt their apps during the beta phase and beyond.

Apple’s operating systems are no exception to this rule, and buggy releases have drawn a number of complaints and critical voices over the last few years. Most famously, macOS and iOS developer Marco Arment, known for his podcast app Overcast and previously for the hugely successful Instapaper app and the blogging platform Tumblr, said iOS 13 was destroying his morale as a developer. Truth be told, Arment has been one of the most vehement critics of Apple’s software quality for a number of years now, having written a strong critique of macOS release quality as early as 2015:

We don’t need major OS releases every year. We don’t need each OS release to have a huge list of new features. We need our computers, phones, and tablets to work well first so we can enjoy new features released at a healthy, gradual, sustainable pace.

With iOS 13, though, Apple set a first in its history and announced the release of the first patch to the new OS version before iOS 13 had even shipped. Several people read this as a clear invitation not to upgrade iPhones and iPads to iOS 13 and to wait for iOS 13.1 instead. It goes without saying that iOS 13.1 itself was far from perfect.

Besides Arment’s morale, it is worth mentioning the opinion of TechCrunch editor Matthew Panzarino, who compared iOS 13 to iOS 3:

iOS 13 feels like I’m back on iOS 3. Keeps dropping apps out of ram in the background at nearly the pace of 1:1 apps launched-to-quit. Makes drilling down to content or links and then losing them is rage inducing. What a crap ass behavior.

As a way around this, Federighi suggested leaving all new features disabled by default, so testers can ensure no regressions make it into the latest build and avoid being impaired by new bugs. New features shall be enabled on-demand by testers using a new internal Flags menu, making it possible to test each new feature in isolation.
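The off-by-default approach is a familiar feature-flag pattern. The Python sketch below illustrates the idea only; it says nothing about Apple's internal tooling, and the class and feature names are hypothetical.

```python
class FeatureFlags:
    """Every known feature starts disabled; testers opt in one at a time."""

    def __init__(self, known_features):
        self._enabled = {name: False for name in known_features}

    def enable(self, name):
        if name not in self._enabled:
            raise KeyError(f"unknown feature: {name}")
        self._enabled[name] = True

    def is_enabled(self, name):
        # Unknown or unfinished features are treated as disabled.
        return self._enabled.get(name, False)


flags = FeatureFlags(["new_share_sheet", "dark_mode"])
flags.enable("dark_mode")  # a tester turns on just the feature under test

for feature in ("new_share_sheet", "dark_mode"):
    print(feature, flags.is_enabled(feature))
```

With every flag off, a daily build behaves like the previous stable baseline, so a regression can be attributed either to the baseline itself or to the single feature a tester has switched on.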

We will see whether this new approach brings any benefits to the overall quality and stability of iOS 14 next year, but it is surely not the only recipe available for a successful new OS release. As veteran Apple developer David Shayer wrote, there are more ways to improve the release process, including not packing in too many new features, correctly triaging which bugs to fix, not ignoring old bugs, and expanding automated testing. Those are worthy suggestions for any organization releasing software products.

MMS • Rob Winch Eleftheria Stein-Kousathana Filip Hanik


Presentation: RSocket Messaging with Spring

MMS • Rossen Stoyanchev Brian Clozel Rob Winch


Summary

Rossen Stoyanchev, Brian Clozel, Rob Winch cover the upcoming RSocket support in the Spring Framework, Spring Boot, and Spring Security.

Bio

Rossen Stoyanchev is a Spring Framework engineer, Pivotal. Brian Clozel is a Spring Team Member, Pivotal. Rob Winch is a Spring Security Lead, Pivotal.

About the conference

Pivotal Training offers a series of hands-on, technical courses prior to SpringOne Platform. Classes are scheduled two full days before the conference and provide you and your team an opportunity to receive in-depth, lab-based training across some of the latest Pivotal technologies.

MMS • Anthony Alford

Article originally posted on InfoQ. Visit InfoQ

Microsoft Research’s Natural Language Processing Group released the dialogue generative pre-trained transformer (DialoGPT), a pre-trained deep-learning natural language processing (NLP) model for automatic conversation response generation. The model was trained on over 147M dialogues and achieves state-of-the-art results on several benchmarks.

The team presented the details of the system in a paper published on arXiv. DialoGPT is built on the GPT-2 transformer architecture and trained using a dataset scraped from Reddit comment threads. The model was evaluated using two test datasets, the Dialog System Technology Challenges (DSTC-7) dataset and a new dataset of 6k examples also extracted from Reddit. For both datasets, the team used machine-translation metrics such as BLEU and Meteor to evaluate the performance of DialoGPT compared with Microsoft’s Personality Chat and with “Team B,” the winner of DSTC-7. DialoGPT outperformed the other models on all metrics. The team also used human judges to rank the output of DialoGPT against real human responses; the judges preferred DialoGPT’s response about 50% of the time.

The Transformer architecture has become a popular deep-learning model for NLP tasks. These models are usually pre-trained, using unsupervised learning, on large datasets such as the contents of Wikipedia. Pre-training allows the model to learn a natural language structure, before being fine-tuned on a dataset for a particular task (such as the DSTC-7 dataset). Even without fine-tuning, the large pre-trained models can achieve state-of-the-art results on NLP benchmarks. However, the DialoGPT team points out that many of these models are “notorious for generating bland, uninformative samples.” To address this, they implemented a maximum mutual information (MMI) scoring function that re-ranks the model’s outputs, penalizing “bland” outputs. The team also investigated using reinforcement learning to improve the model’s results, but found that this usually resulted in responses that simply repeated the source sentence.
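Re-ranking by mutual information can be sketched as follows. One common way to realize it, assumed here rather than taken from the article, is to score each candidate response by how well it predicts the source sentence back, so that a generic reply that could follow any input ranks low. The `toy_backward_log_prob` function is a crude word-overlap stand-in for a trained backward model, and all names are hypothetical.

```python
import math


def toy_backward_log_prob(source, response):
    """Stand-in for a trained backward model: the log of the smoothed
    fraction of source words that also appear in the response."""
    source_words = source.lower().split()
    response_words = set(response.lower().split())
    overlap = sum(1 for word in source_words if word in response_words)
    return math.log((overlap + 1) / (len(source_words) + 1))


def rerank_by_mmi(source, candidates, backward_log_prob):
    """Order candidates from most to least informative about the source."""
    return sorted(
        candidates,
        key=lambda response: backward_log_prob(source, response),
        reverse=True,
    )


source = "which city has the best coffee"
candidates = [
    "i do not know",
    "seattle has the best coffee in my view",
]
print(rerank_by_mmi(source, candidates, toy_backward_log_prob))
```

In this toy run the bland reply "i do not know" drops to the bottom because it carries no information about the question, which is the behaviour the re-ranking step is meant to encourage.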

Pre-trained models are especially attractive for conversational systems, due to a lack of high-quality training datasets for dialogue tasks. However, using natural dialogue information from Internet sites such as Reddit or Twitter poses risks that the model will be exposed to, and can learn from, offensive speech. Microsoft’s earlier experimental chatbot, Tay, produced output that was “wildly inappropriate and reprehensible” after conversing with users of Twitter. Microsoft’s Personality Chat cloud service attempts to address this by using a series of machine-learning classifiers to filter out offensive input before auto-generating responses. As a precaution, the DialoGPT team chose not to release the decoder that converts the model outputs into actual text strings. Similarly, OpenAI originally held back their fully-trained model due to concerns about “malicious applications of the technology.”

A user from Reddit did reverse-engineer the decoder and posted some results of using the model, along with the comment:

I’d say all of the generations are grammatically acceptable and quite impressive considering how little information it was given, about 1 out of 5 appeared to be very coherent and sometimes strikingly sarcastic (much [like] reddit). Those prompted with a clear defined topic certainly worked out better.

On Twitter, NLP researcher Julian Harris said:

One always needs to bear in mind in these reports that “close to human performance” is for the tested scenario only. Autogeneration of responses (NLG) is still a very new field and is highly unpredictable…As such deep learning-generated conversational dialogs currently are at best entertaining, and at worst, a terrible, brand-damaging user experience.

The DialoGPT code and pretrained models are available on GitHub.