Month: February 2021

MMS • RSS

Article originally posted on Data Science Central.

The new distributed energy market imposes new data and analytics architectures

Introduction

Part 1 provided a conceptual-level reference architecture of a traditional Data and Analytics (D&A) platform.

Part 2 provided a conceptual-level reference architecture of a modern D&A platform.

Part 3 highlighted the strategic objectives and described the business requirements of a TSO that modernizes its D&A platform as an essential step in the roadmap of implementing its cyber-physical grid for energy transition. It also described the toolset used to define the architecture requirements and develop the future state architecture of the TSO’s D&A platform.

This part maps the business requirements described in Part 3 into architectural requirements. It also describes the future state architecture and the implementation approach of the future state D&A platform.

TRANSPOWER Future State Architectural Requirements

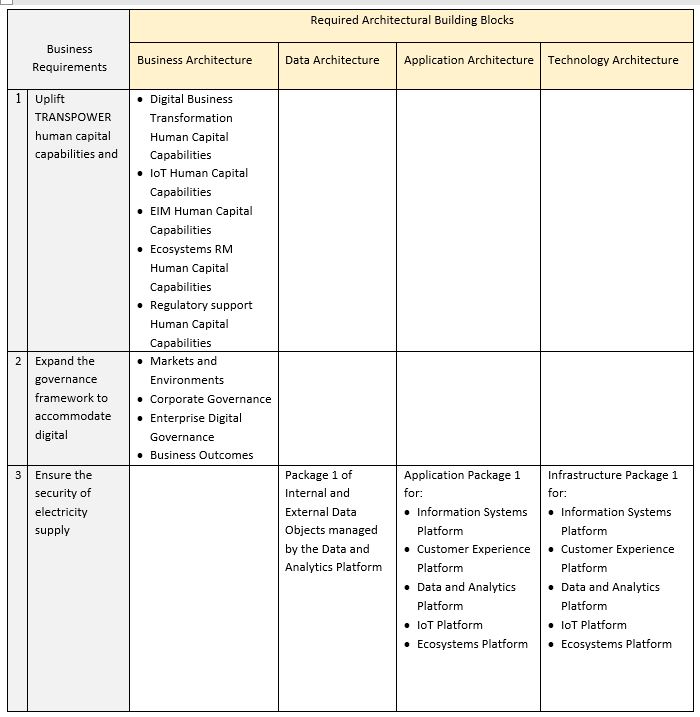

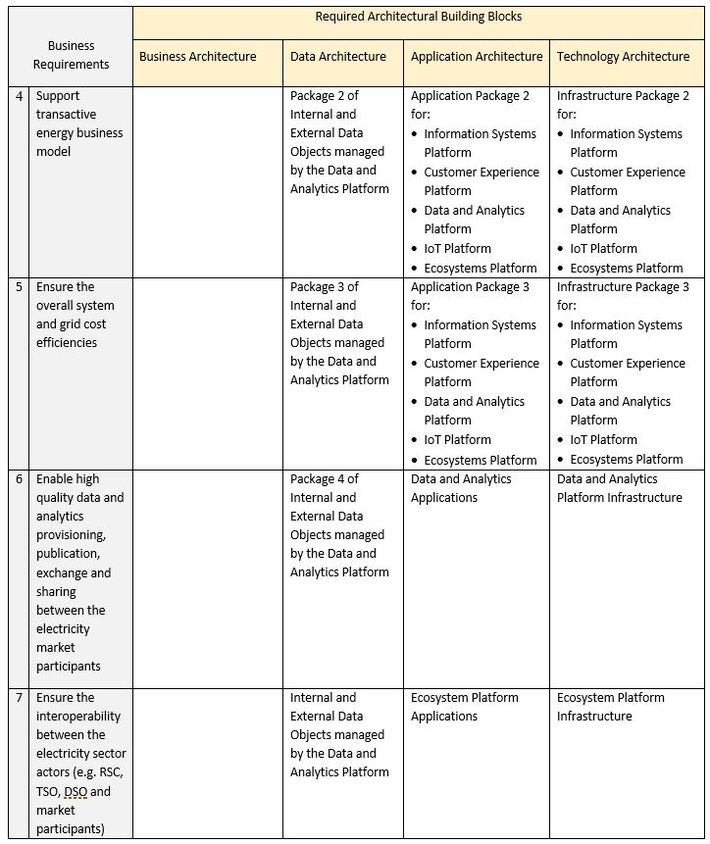

In order to develop the future state architecture, the business requirements described in Part 3 are first mapped into high-level architectural requirements. These architectural requirements represent the architectural building blocks that are missing or need to be improved in each domain of the TRANSPOWER enterprise architecture in order to realize the future state architecture. Table 1 shows TRANSPOWER’s high-level architectural requirements.

Table 1: TRANSPOWER high-level architectural requirements

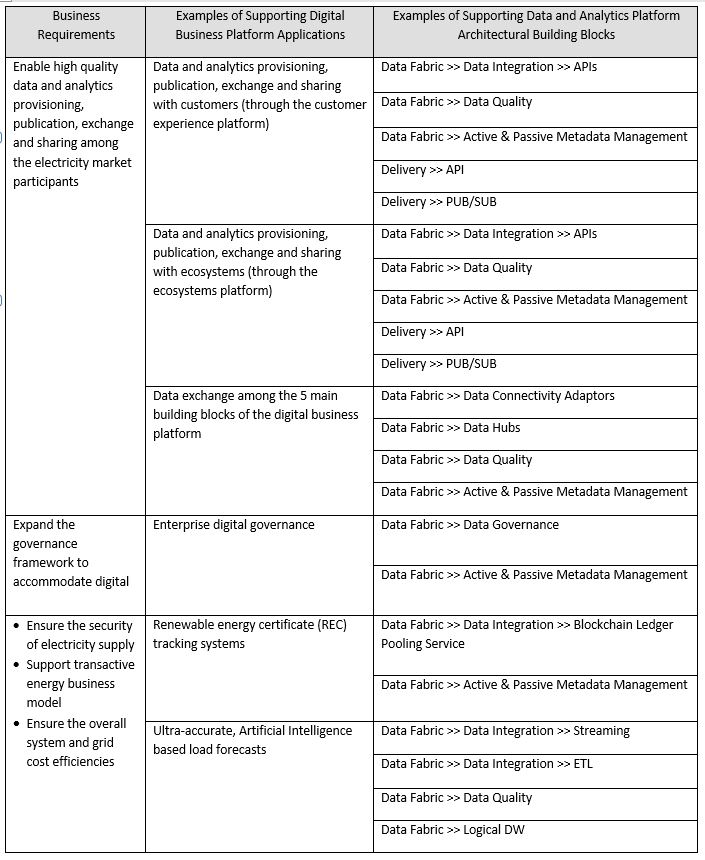

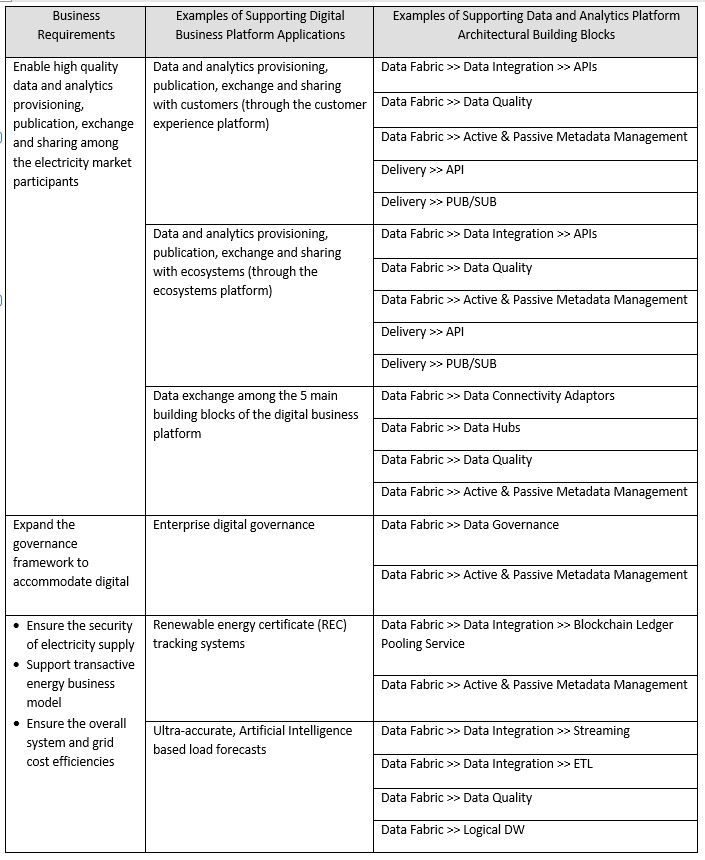

The Future State Architecture of TRANSPOWER Data and Analytics Platform

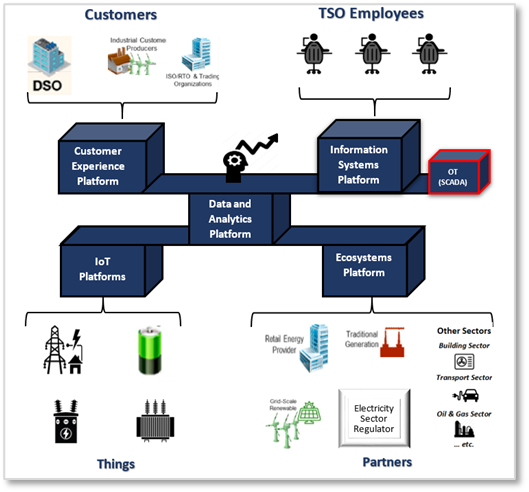

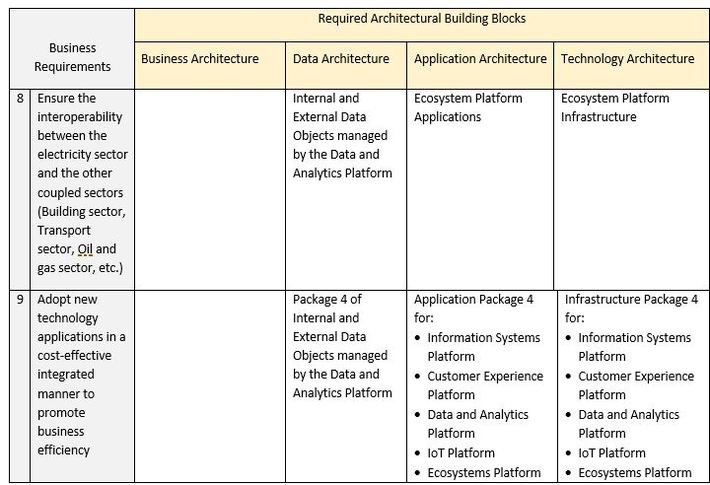

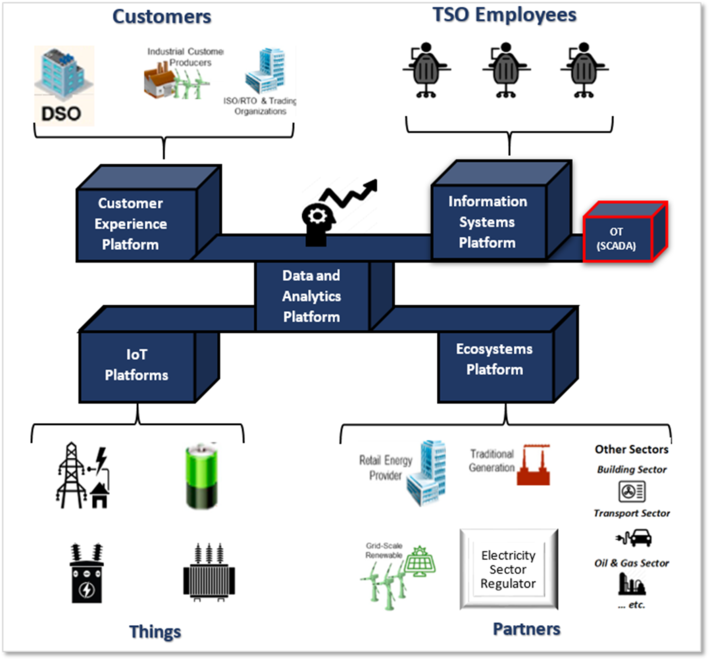

Figure 1 depicts the conceptual-level architecture of TRANSPOWER’s digital business platform. Modernizing the existing D&A platform is one of the prerequisites for TRANSPOWER to build its digital business platform. Therefore, TRANSPOWER used the high-level architectural requirements shown in Table 1 and the modern D&A platform reference architecture described in Part 2 to develop the future state architecture of its D&A platform. Table 2 shows some examples of TRANSPOWER business requirements, the digital business platform applications that support them, and the D&A platform architectural building blocks that support these applications. These D&A architectural building blocks are highlighted in red in Figure 2.

Figure 1. Conceptual-level architecture of TRANSPOWER digital business platform

Table 2: Examples of TRANSPOWER business requirements and their supporting digital business platform applications and D&A platform architectural building blocks

Figure 2: Examples of the new architectural building blocks
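The chain that Table 2 captures (business requirement, then supporting digital business platform applications, then D&A building blocks) can be treated as a small traceability structure. The sketch below is a minimal illustration under assumed data: the requirement IDs, application names, and building-block names are hypothetical placeholders rather than the contents of Table 2, and the `isNew` flag stands in for the blocks highlighted in red in Figure 2.

```typescript
// Hypothetical traceability model; every ID and name below is a placeholder,
// not the actual contents of TRANSPOWER's Table 2.
interface BuildingBlock {
  name: string;
  isNew: boolean; // true = missing or needs improvement (highlighted in red)
}

interface RequirementTrace {
  id: string;
  applications: string[];   // supporting digital business platform applications
  buildingBlocks: string[]; // D&A building blocks those applications rely on
}

const blocks: BuildingBlock[] = [
  { name: "Streaming ingestion", isNew: true },
  { name: "Data lake", isNew: true },
  { name: "Enterprise data warehouse", isNew: false },
];

const requirements: RequirementTrace[] = [
  { id: "BR-1", applications: ["App-A"], buildingBlocks: ["Streaming ingestion", "Data lake"] },
  { id: "BR-2", applications: ["App-B"], buildingBlocks: ["Enterprise data warehouse"] },
  { id: "BR-3", applications: ["App-C"], buildingBlocks: ["Data lake"] },
];

// For each new (red) building block, list the business requirements that depend on it.
function requirementsByNewBlock(
  reqs: RequirementTrace[],
  bbs: BuildingBlock[],
): Map<string, string[]> {
  const result = new Map<string, string[]>();
  for (const bb of bbs) {
    if (!bb.isNew) continue; // existing blocks need no new delivery work here
    const dependents = reqs.filter(r => r.buildingBlocks.includes(bb.name)).map(r => r.id);
    result.set(bb.name, dependents);
  }
  return result;
}

const impact = requirementsByNewBlock(requirements, blocks);
```

Here `impact.get("Data lake")` lists `BR-1` and `BR-3`, which is one simple way to see how many business requirements a new building block unlocks.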

The Implementation Approach

After establishing the new human capital capabilities required for the implementation of the digital business transformation program, TRANSPOWER started to partner with relevant ecosystem players and deliver the program.

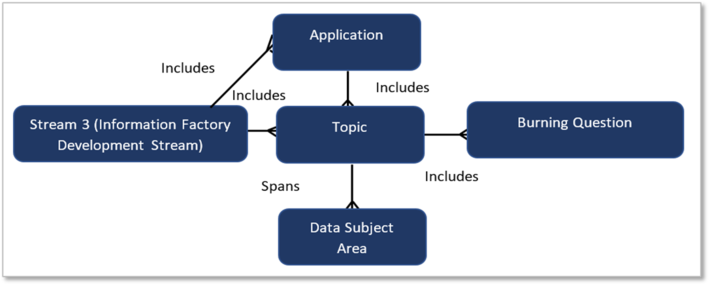

The implementation phase of the D&A platform modernization was based on the Unified Analytics Framework (UAF) described in Part 3. The new D&A applications and architectural building blocks described in Table 2 are planned and delivered using Part II of the UAF (including Inmon’s Seven Streams Approach). According to Inmon’s Seven Streams Approach, stream 3 is the “driver stream” that sets the priorities for the other six streams, and the business discovery process should be driven by the “burning questions” that the business has put forward as its high-priority questions. These are questions for which the business community needs answers so that decisions can be made and actions taken that will effectively put money on the company’s bottom line. Such questions can be grouped into topics, such as customer satisfaction, profitability, and risk. The information required to answer these questions is identified next. Finally, the data essential to manufacture the information that answers the burning questions (or even to automate actions) is identified.

It is worth noting that the Information Factory Development Stream is usually built topic by topic or application by application. Topics are usually grouped into applications, a topic contains several burning questions, and a topic often spans multiple data subject areas.

Figure 3 depicts the relationship between Burning Questions, Applications, Topics, Data Subject Areas, and the Information Factory Development Stream.

Figure 3. Relationship between Burning Questions, Applications, Topics, Data Subject Areas, and the Information Factory Development Stream
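The hierarchy described above (applications group topics, topics group burning questions, and a topic can span several data subject areas) maps naturally onto a small data model. The sketch below is an illustration only: the type names, the sample questions, and the rule "build first the application that answers the most burning questions" are assumptions, a deliberately simple stand-in for the business-driven prioritization of the driver stream, not Inmon’s method itself.

```typescript
// Hypothetical model of the Seven Streams hierarchy described above.
interface Topic {
  name: string;
  burningQuestions: string[];
  dataSubjectAreas: string[]; // a topic may span several subject areas
}

interface Application {
  name: string;
  topics: Topic[];
}

// Data subject areas an application needs, de-duplicated across its topics.
function subjectAreasFor(app: Application): string[] {
  const areas = new Set<string>();
  for (const topic of app.topics) {
    for (const area of topic.dataSubjectAreas) {
      areas.add(area);
    }
  }
  return Array.from(areas).sort();
}

// Number of burning questions an application answers.
function questionCount(app: Application): number {
  return app.topics.reduce((n, t) => n + t.burningQuestions.length, 0);
}

// Assumed ordering rule: applications answering more burning questions come first.
function buildOrder(apps: Application[]): string[] {
  return [...apps].sort((a, b) => questionCount(b) - questionCount(a)).map(a => a.name);
}

// Illustrative data only; not taken from the article.
const assetHealth: Application = {
  name: "Asset health",
  topics: [
    {
      name: "Risk",
      burningQuestions: ["Which transformers are most likely to fail next year?"],
      dataSubjectAreas: ["Assets", "Sensor readings"],
    },
    {
      name: "Profitability",
      burningQuestions: [
        "Which maintenance spend avoids the most outage cost?",
        "Which assets cost more to keep than to replace?",
      ],
      dataSubjectAreas: ["Assets", "Finance"],
    },
  ],
};

const customerInsight: Application = {
  name: "Customer insight",
  topics: [
    {
      name: "Customer satisfaction",
      burningQuestions: ["Which connection requests wait longest?"],
      dataSubjectAreas: ["Customers", "Work orders"],
    },
  ],
};
```

With this data, `subjectAreasFor(assetHealth)` yields the areas the Information Factory must deliver for that application (`Assets`, `Finance`, `Sensor readings`), and `buildOrder` puts "Asset health" ahead of "Customer insight".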

Conclusion

In many cases, modernizing the traditional D&A platform is one of the essential steps an enterprise should take to build its digital business platform, thereby enabling its digital business transformation and gaining a sustainable competitive advantage. This four-part series introduced a step-by-step approach and a toolkit that can be used to determine which parts of the existing traditional D&A capabilities are missing or need to be improved and modernized in order to build the enterprise digital business platform. The approach and the toolkit were illustrated with the example of a power utility company; however, they can be easily adapted and used in other vertical industries such as petroleum, transportation, and mining.

MMS • RSS

Article originally posted on Data Science Central.

Automation and the cutting edge of AI (Artificial Intelligence) are changing business like never before. They reduce the heavy workload of employers and employees: with these technologies in place, people can enjoy their work rather than feel stressed about getting every task done.

What Is The Meaning Of AI?

Artificial Intelligence is a computer technology that performs tasks in a human-like way while reducing the human errors that creep into manual operations. It reduces the workload of employees and employers, because tasks are handled by software rather than manually. It therefore saves time and labor costs and improves the accuracy of the operations that people carry out with its help.

What Is The Meaning Of Automation?

Automation covers almost any technology that reduces the workload of human beings. In a company’s human resources department, for example, automation helps the HR admin keep information secure by saving files into an integrated database that also supports the payroll process for the workforce. Every file is saved automatically in one central system, which reduces manual work.

What Is The Difference Between AI And Automation?

If you do not know much about artificial intelligence and automation, you might think the two are the same, because the main purpose of both is to reduce human intervention. That is not true. The differences between AI and automation are as follows:

| AI (Artificial Intelligence) | Automation |
|---|---|
| AI is a field of technology that solves challenging problems quickly, sometimes finding better solutions than humans can. | Automation uses technology that needs little or no human intervention to complete a task. |
| AI can work, learn, and even reason in ways that resemble humans. | Automation reduces the workload of human beings. |
| AI can take over some human work because it performs tasks the way humans do. | Automation still involves some human work, because it needs human commands to do anything. |

How Can HR Use AI And Automation To Transform The Workplace Environment?

The human resource department needs AI and automation to reduce its workload and to retain employees for longer. The following HR functions are simplified by AI and automated technology.

Recruitment And Onboarding

The primary task of the HR department is to recruit the best match for a vacant position. Earlier, HR staff selected people after reading each resume manually. Now they use automated, often AI-based, software called an ATS (Applicant Tracking System). With its help, they can classify eligible candidates’ data automatically in just one click.
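To make the screening step concrete, here is a minimal sketch of keyword-based resume screening, one simple way such software can classify candidates. Real applicant tracking systems use their own, often proprietary, parsing and ranking logic; the `Candidate` shape, the skill list, and the `minMatches` threshold below are hypothetical.

```typescript
// Hypothetical, simplified keyword screen; not how any particular ATS product works.
interface Candidate {
  name: string;
  resumeText: string;
}

interface ScreeningResult {
  name: string;
  matched: string[]; // required skills found in the resume
  eligible: boolean; // true when at least `minMatches` skills were found
}

function screenCandidates(
  candidates: Candidate[],
  requiredSkills: string[],
  minMatches: number,
): ScreeningResult[] {
  return candidates
    .map(c => {
      const text = c.resumeText.toLowerCase();
      // Case-insensitive substring match for each required skill.
      const matched = requiredSkills.filter(s => text.includes(s.toLowerCase()));
      return { name: c.name, matched, eligible: matched.length >= minMatches };
    })
    // Strongest keyword matches first.
    .sort((a, b) => b.matched.length - a.matched.length);
}

const shortlist = screenCandidates(
  [
    { name: "Ben", resumeText: "Retail sales associate" },
    { name: "Asha", resumeText: "5 years of payroll processing in Excel" },
  ],
  ["payroll", "excel", "sql"],
  2,
);
```

Substring matching is crude (it cannot tell "Java" from "JavaScript", for instance), which is one reason recruiters still review the shortlist rather than relying on the score alone.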

Onboarding begins after an employee has been selected. The older, manual onboarding process was time-consuming and not very effective. Technology simplifies onboarding and allows a newly joined employee to view and edit their personal and professional information by logging in with a personal application ID.

Employee Experience

Here I am not discussing your employees’ work experience in general; I am talking about the workforce’s experience of using HR technology. A better working experience means a more satisfied workforce, and employee satisfaction is the main ingredient of a high retention rate.

These outcomes are linked: a better working experience means satisfied employees; satisfied employees stay with an organization for longer; a higher retention rate improves the goodwill of the firm and the performance of the workforce; and higher employee productivity enhances the profitability of the business. It is therefore necessary to adopt technological tools that improve the employee working experience, which ultimately benefits the company’s growth.

Attendance Management

Automation and AI technologies benefit almost any industry, especially in attendance management. Earlier, the human resource department recorded employee attendance manually, and those records were hard to maintain over long periods. AI reduces the workload of keeping attendance. Nowadays, biometric punching or other automated methods track the real-time working hours of the workforce. They record punch-in and punch-out times, early departures (“early going”), late arrivals (“late coming”), and missed or incorrect punches (“mispunch”), and keep these records in an integrated HR database until the manager deletes them from the system.
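The punch categories above can be derived directly from raw punch times. The following is a minimal sketch, assuming a single 09:00 to 18:00 shift, a 10-minute grace period, and times stored as minutes after midnight; the record shape and all three constants are hypothetical, and real attendance systems make them configurable per shift.

```typescript
// Hypothetical punch record: times are minutes after midnight; a missing punch is null.
interface Punch {
  employeeId: string;
  punchIn: number | null;
  punchOut: number | null;
}

// Assumed shift rules (illustrative values only).
const SHIFT_START = 9 * 60; // 09:00
const SHIFT_END = 18 * 60;  // 18:00
const GRACE_MINUTES = 10;

type Flag = "late coming" | "early going" | "mispunch";

function classifyPunch(p: Punch): { workedMinutes: number; flags: Flag[] } {
  // A missing or out-of-order punch cannot be trusted, so flag it for HR review.
  if (p.punchIn === null || p.punchOut === null || p.punchOut < p.punchIn) {
    return { workedMinutes: 0, flags: ["mispunch"] };
  }
  const flags: Flag[] = [];
  if (p.punchIn > SHIFT_START + GRACE_MINUTES) flags.push("late coming");
  if (p.punchOut < SHIFT_END - GRACE_MINUTES) flags.push("early going");
  return { workedMinutes: p.punchOut - p.punchIn, flags };
}

// 09:25 in, 17:00 out: 455 minutes worked, flagged as both late coming and early going.
const day = classifyPunch({ employeeId: "E1", punchIn: 9 * 60 + 25, punchOut: 17 * 60 });
```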

Salary And Leave Management

An HR manager must keep employees’ leave data, which is used at the end of the month to calculate their salaries. Beyond salary calculation, leave management is essential for analyzing workforce performance: a record of each employee’s real-time working hours is needed to calculate KPIs (Key Performance Indicators). An HR admin can then compare each employee’s performance with their own past performance.
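As a worked illustration of how leave data feeds the month-end salary calculation, the sketch below assumes a common but by no means universal rule: the monthly salary is divided by the number of working days, and only unpaid leave days are deducted at that daily rate. Actual payroll rules vary by employer and jurisdiction; the function and field names are hypothetical.

```typescript
// Hypothetical leave record for one employee and one month.
interface LeaveRecord {
  paidLeaveDays: number;   // no deduction under the assumed rule
  unpaidLeaveDays: number; // deducted at the daily rate
}

// Assumed rule: daily rate = monthly salary / working days; deduct unpaid leave only.
function monthlyPay(monthlySalary: number, workingDaysInMonth: number, leave: LeaveRecord): number {
  if (workingDaysInMonth <= 0) {
    throw new Error("workingDaysInMonth must be positive");
  }
  const dailyRate = monthlySalary / workingDaysInMonth;
  // Never deduct more days than the month actually has.
  const deduction = dailyRate * Math.min(leave.unpaidLeaveDays, workingDaysInMonth);
  // Round to two decimals for currency display.
  return Math.round((monthlySalary - deduction) * 100) / 100;
}

// 30,000 over 25 working days is 1,200 per day; 3 unpaid days reduce pay to 26,400.
const pay = monthlyPay(30000, 25, { paidLeaveDays: 2, unpaidLeaveDays: 3 });
```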

Learning And Development

The human resource department must prepare employee development reports and, to enhance employees’ knowledge, arrange learning sessions for them, such as guest talks, retraining sessions, and doubt-solving sessions. AI and automation can remind HR after a specified interval to check on each employee’s development.
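A reminder of this kind can be as simple as a date comparison. The sketch below assumes that each employee has a recorded date of their last development review and that reviews are due after a fixed number of days; both the `ReviewRecord` shape and the interval are hypothetical.

```typescript
// Hypothetical record of each employee's last development review.
interface ReviewRecord {
  employeeId: string;
  lastReview: Date;
}

const MS_PER_DAY = 24 * 60 * 60 * 1000;

// Return the IDs of employees whose development check is due on `today`.
function reviewsDue(records: ReviewRecord[], intervalDays: number, today: Date): string[] {
  return records
    .filter(r => (today.getTime() - r.lastReview.getTime()) / MS_PER_DAY >= intervalDays)
    .map(r => r.employeeId);
}

const due = reviewsDue(
  [
    { employeeId: "E1", lastReview: new Date("2020-11-01T00:00:00Z") }, // 120 days earlier
    { employeeId: "E2", lastReview: new Date("2021-02-15T00:00:00Z") }, // 14 days earlier
    { employeeId: "E3", lastReview: new Date("2020-12-01T00:00:00Z") }, // exactly 90 days earlier
  ],
  90,
  new Date("2021-03-01T00:00:00Z"),
);
```

A scheduled job could run this check daily and send the resulting list to HR as the reminder.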

People Analytics

Analyzing employees’ work is essential to improving their performance. If the company still cannot reach its estimated profit level after hiring an efficient workforce, the fault may lie in the employees’ work or in the company’s management. Analyzing both is therefore essential to increasing the profitability of the firm.

What Is The Impact Of AI And Automation In An HR Department?

Artificial intelligence and automation have a considerable impact on the human resource department (HRD). They change every operation and eliminate paperwork from the organization. Listed below are the three main impacts of both technologies on the HRD:

Personalization

It allows the human resource department to work at its own convenience. Because AI can act and reason in human-like ways, it does not need someone to operate it and watch over it continually. This human-like automation reduces the workload of the HRD.

Lifelong Learning

After implementing AI and automation, the company must keep adopting further updates to the technology. Learning about the system is therefore not a one-time act; it is lifelong learning.

User-centric Design

AI and automation can also be customized. If the company wants additional features in its technology or computer-based software, it can have them built to its own requirements. This user-centric design is a primary benefit of the technology.

What Is The Future Of AI And Automation In An HRD?

Advanced technology will provide many benefits to both employees and employers: it reduces their workload and allows them to enjoy their work rather than feel stressed by it.

Employee Analytics

Employee analytics is essential for improving employee performance. It is necessary to identify the loopholes that hold back the company’s growth and employee performance. With performance measurement, the human resource department can identify employees’ mistakes and, based on the resulting report, focus on each employee’s particular weak points and help them grow.

AI and automation help HR identify the gaps in employees’ performance, so that both employee productivity and the company’s profitability can be improved.
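One simple way to surface such gaps is to compare each employee’s latest KPI score with their own earlier scores. The sketch below flags employees whose latest score falls more than a chosen fraction below the average of their previous scores; the data shape and the 10% threshold are assumptions, and real people-analytics tools typically use richer models.

```typescript
// Hypothetical KPI history: one score per review period, oldest first.
interface KpiHistory {
  employeeId: string;
  scores: number[];
}

// Flag employees whose latest score is more than `threshold` (0.1 = 10%)
// below the average of their own earlier scores.
function flagDeclines(histories: KpiHistory[], threshold: number): string[] {
  return histories
    .filter(h => h.scores.length >= 2) // need at least one earlier score as a baseline
    .filter(h => {
      const past = h.scores.slice(0, -1);
      const baseline = past.reduce((sum, s) => sum + s, 0) / past.length;
      const latest = h.scores[h.scores.length - 1];
      return latest < baseline * (1 - threshold);
    })
    .map(h => h.employeeId);
}

const flagged = flagDeclines(
  [
    { employeeId: "E1", scores: [80, 82, 84, 70] }, // baseline 82, latest 70: flagged
    { employeeId: "E2", scores: [75, 76, 77] },     // improving: not flagged
    { employeeId: "E3", scores: [90] },             // no history yet: skipped
  ],
  0.1,
);
```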

Digital HR And HRtech

Analytics on employee performance, employee growth, company growth, and so on are essential. HR analytics plays a vital role in the digital age. HR technology helps the human resource manager do more with less effort. It allows the employer to handle each operation easily and to maintain data automatically, without human errors.

Stakeholder Management

AI and automation also help manage the company’s stock on the NSE and BSE stock markets. A higher share price reflects the company’s strengths, while a lower share price reveals its weaknesses. Using the technology improves the profitability of the firm and attracts investors to invest more in the company’s stock.

Strategic Workforce Planning

Advanced technology allows the HR department to improve workforce planning. It clarifies each individual’s role in the workplace and to whom they report. It keeps work flowing on the premises and keeps the workforce disciplined.

Employee Engagement

The use of artificial intelligence and automated technology increases employee engagement. It reduces employees’ workload and allows them to work smarter, with less effort. Hard work is essential, but sloppy work is not acceptable in a race where everyone wants to win.

Increases Performance

The technology is used to reduce the workload of employees and employers. It allows them to do more with less effort, which increases employee performance and enhances the profitability of the organization.

Conclusion

From the above discussion, we can state that artificial intelligence and automated technology change the whole scenario of the human resource department and reduce paperwork, a method that is time-consuming, costly, and not very accurate. It is therefore fair to say that these technologies increase the company’s profitability ratio.

MMS • RSS

Article originally posted on Data Science Central.

Cyber-Physical Systems (CPS) are engineered systems that orchestrate sensing, computation, control, networking, and analytics to interact with the physical world (including humans), and enable safe, real-time, secure, reliable, resilient, and adaptable performance

Introduction

Part 1 provided a conceptual-level reference architecture of a traditional Data and Analytics (D&A) platform.

Part 2 provided a conceptual-level reference architecture of a modern D&A platform.

This part and Part 4 explain how these two reference architectures can be used to modernize an existing traditional D&A platform. They illustrate this with the example of a Transmission System Operator (TSO) that modernizes its existing traditional D&A platform in order to build a cyber-physical grid for Energy Transition. However, the approaches used in this example can be leveraged as a toolkit to implement similar work in other vertical industries such as transportation, defence, petroleum, water utilities, and so forth.

A Word About Cyber-Physical Systems

Cyber-Physical Systems (CPS) are engineered systems that orchestrate sensing, computation, control, networking, and analytics to interact with the physical world (including humans), and enable safe, real-time, secure, reliable, resilient, and adaptable performance.

CPS currently exist in a wide range of environments and span multiple industries, from military, aerospace and automotive, through energy, manufacturing operations and physical security information management, to healthcare, agriculture, and law enforcement.

CPS is part of a family of interconnected concepts that seek to optimize outcomes, from individual processes to entire ecosystems, by merging cyber/digital approaches with the physical world. Examples of these concepts are Operational Technology, Industrial Internet of Things, Internet of Things, Machine-to-Machine and Smart Cities/Industry 4.0/Made in China 2025.

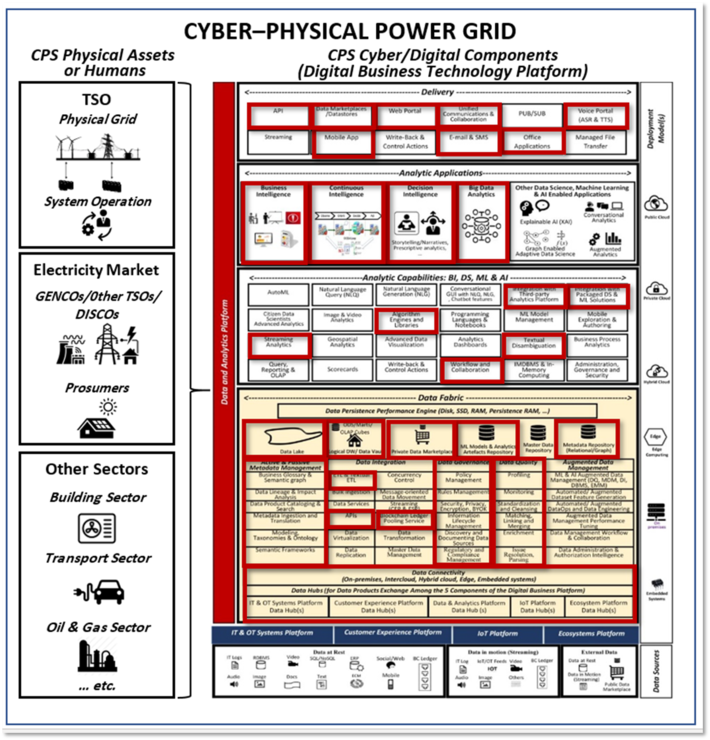

Table 1 shows the main purposes of these interconnected concepts. Figure 1 depicts that Digital Business Technology Platform(s) can be used to implement the cyber/digital components of modern Cyber-Physical Systems in different vertical industries.

Table 1: Main purposes of CPS and other interconnected concepts

Figure 1: Digital Business Technology Platform(s) can be used to implement the cyber/digital components of modern Cyber-Physical Systems

TRANSPOWER Transmission System Operator

TRANSPOWER is one of the nation’s largest Transmission System Operators (TSOs); it owns the power transmission assets and operates the system. TRANSPOWER was established 15 years ago, when the electricity market evolved from a vertically integrated style of ownership and control to a horizontally integrated style of ownership and control.

Figure 2 depicts that the initial state electricity market exhibits a vertically integrated style of ownership and control; and Figure 3 depicts that the current state electricity market exhibits a horizontally integrated style of ownership and control.

Figure 2: The initial state electricity market exhibits a vertically integrated style of ownership and control

- Genco i … Power Generation Company i

- Disco j … Power Distribution Company j

- TSO … Transmission System Operator

Figure 3: The current state electricity market exhibits a horizontally integrated style of ownership and control

The Future State Electricity Market

Recently, in response to the key requirements of the national climate and energy policies, the government set the following strategic objectives for the electricity sector to achieve within the next 10 years, and TRANSPOWER was mandated to lead the development and implementation of a digital business transformation program to achieve them:

- 30% cuts in greenhouse gas emissions

- 25% share for renewable energy

- 26% improvement in energy efficiency

In response to the confluence of these strategic objectives, and the key forces that will be driving transformation in the global electrical utility sectors for years to come (e.g. digitalization, decentralization, democratization and decarbonization), it has been decided to evolve the electricity market (once again) into a distributed energy market style. The sector actors of the distributed energy market will leverage sustainable energy provisioning business models that support transactive energy concepts (including Transactive Control). Figure 4 depicts that the future state electricity market exhibits a distributed energy market style.

Figure 4: The future state electricity market exhibits a distributed energy market style – adapted from the GWAC transactive energy diagram
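Transactive energy schemes coordinate many buyers and sellers of energy through price signals. As a rough illustration only, the sketch below clears a single trading interval with a simple double auction: buy bids are sorted from highest to lowest price, sell offers from lowest to highest, and quantities are matched while a buyer is still willing to pay at least the seller’s asking price. The double-auction mechanism, the `Order` shape, the kWh units, the assumption of positive quantities, and the midpoint pricing rule are all assumptions for illustration; the article does not specify how TRANSPOWER’s distributed energy market would clear.

```typescript
// Hypothetical order: a positive quantity in kWh and a price per kWh.
interface Order {
  id: string;
  quantityKWh: number;
  pricePerKWh: number;
}

interface ClearingResult {
  clearedKWh: number;
  clearingPrice: number | null; // null when no trade is possible
}

function clearDoubleAuction(bids: Order[], offers: Order[]): ClearingResult {
  // Copy so the caller's orders are not mutated; highest bids and cheapest offers first.
  const b = bids.map(o => ({ ...o })).sort((x, y) => y.pricePerKWh - x.pricePerKWh);
  const s = offers.map(o => ({ ...o })).sort((x, y) => x.pricePerKWh - y.pricePerKWh);

  let i = 0;
  let j = 0;
  let cleared = 0;
  let lastBid: number | null = null;
  let lastOffer: number | null = null;

  // Match while the best remaining buyer pays at least the best remaining seller's ask.
  while (i < b.length && j < s.length && b[i].pricePerKWh >= s[j].pricePerKWh) {
    const q = Math.min(b[i].quantityKWh, s[j].quantityKWh);
    cleared += q;
    lastBid = b[i].pricePerKWh;
    lastOffer = s[j].pricePerKWh;
    b[i].quantityKWh -= q;
    s[j].quantityKWh -= q;
    if (b[i].quantityKWh === 0) i++;
    if (s[j].quantityKWh === 0) j++;
  }

  // Assumed pricing rule: midpoint of the last matched bid and offer.
  const clearingPrice =
    lastBid !== null && lastOffer !== null ? (lastBid + lastOffer) / 2 : null;
  return { clearedKWh: cleared, clearingPrice };
}

const interval = clearDoubleAuction(
  [
    { id: "A", quantityKWh: 5, pricePerKWh: 0.3 },
    { id: "B", quantityKWh: 3, pricePerKWh: 0.2 },
  ],
  [
    { id: "X", quantityKWh: 4, pricePerKWh: 0.1 },
    { id: "Y", quantityKWh: 6, pricePerKWh: 0.25 },
  ],
);
```

In this example 5 kWh clear at about 0.275 per kWh; buyer B’s bid of 0.20 is below every remaining offer, so it is left unmatched for the interval.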

TRANSPOWER Digital Business Transformation

To realize the vision of the distributed energy market, TRANSPOWER decided to implement a cyber-physical power grid where the cyber components of this grid will be implemented in the form of a Digital Business Technology Platform. TRANSPOWER took this decision based on an initial study that explored and evaluated the business and technical dimensions of the new electricity market, and hence established a digital business transformation program that comprises the following steps:

1. Development of digital business transformation strategy and roadmap

   - Development of current state Operating Model and Business Capability Model

   - Conducting a survey on “digital-only” businesses and “born-digital” businesses relevant to the vision of the distributed energy market and to the new strategic objectives set by the government for the electricity sector

   - Reviewing the socioeconomic drivers and technology innovation trends driving the power utility industry toward a decarbonized, distributed, digital and democratized future, and their implications for TRANSPOWER’s Information Communication Technology (ICT) and Operational Technology (OT) plans

   - Identifying potential areas for delivering business value by rethinking, reinventing, reimagining and transforming the current business model to better leverage Social, Mobile, Information, Cloud and Internet of Things

   - Developing the future state operating model and business capability model

   - Reviewing TRANSPOWER’s organization chart and proposing new organizational capabilities and pathways for digital success

   - Defining the initiatives of the digital business program

   - Expanding the governance framework to accommodate digital

   - Synchronizing TRANSPOWER’s digital business strategy with its ICT and OT strategies

   - Developing a high-level implementation roadmap

2. Defining the architecture requirements and developing the future state architecture

3. Establishing the new human capital capabilities required for the implementation of the digital business transformation program

4. Partnering with relevant ecosystem players

5. Building the digital business technology platform

6. Implementing the digital business transformation program

Development of Digital Business Transformation Strategy and Roadmap

Some of the most important high-level deliverables generated from this step are shown in Figures 5 – 7.

Figure 5 depicts TRANSPOWER future state operating model. The future state operating model is used to align strategy with execution. The red text in Figure 5 indicates that some capability building blocks are missing or need to be improved and modernized to enable the implementation of the digital business transformation program.

One of the most important new capability building blocks shown in Figure 5 is the digital business transformation capability building block. This building block includes the new capabilities needed to create TRANSPOWER’s digital services, and it is highlighted by the red text on the left side of Figure 5.

Figure 6 shows a closer look at the digital business transformation capability building block.

Table 2 shows the most important business requirements related to TRANSPOWER future state operating model.

Figure 7 depicts TRANSPOWER digital business transformation program high-level implementation roadmap.

- CG … Corporate Governance

- ERM … Enterprise Risk Management

- IC … Internal Control

- EA … Enterprise Architecture

- EIM … Enterprise Information Management

- ICT … Information Communication Technology

- OT … Operational Technology

- IoT … Internet of Things

- CRM … Customer Relationship Management

- Ecosystem RM … Ecosystem Relationship Management

Figure 5: TRANSPOWER future state operating model

Figure 6: TRANSPOWER digital business transformation capabilities building block – A closer look

Table 2: The most important business requirements

Figure 7: TRANSPOWER digital business transformation program high-level implementation roadmap

Future State Architecture Toolkit

TRANSPOWER used a custom-made Unified Analytics Framework (UAF) as a tool to define the future state architectural requirements and develop the future state architecture of its D&A platform. This framework was developed to support both the Agile and the Waterfall approaches to architecture development and to program planning and delivery. The reference architecture of the modern D&A platform environment is an essential part of the UAF. However, the UAF is based on a wider set of underlying foundations, including other reference architecture models, best practices, and industry standards that were selected, combined, and tailored for this purpose. The UAF is suited to the utility industry, and it can be easily tailored to implement similar work in other asset-intensive industries such as heavy manufacturing, aviation, oil and gas, transportation, and mining. Figure 8 depicts the high-level structure of the UAF, and Table 3 lists the UAF’s underlying foundations. Figures 9 and 10 depict two of these foundations (the reference architecture of the modern D&A platform environment and Inmon’s Seven Streams Approach, respectively).

Figure 8: High-Level Structure of Unified Analytics Framework

Table 3: The UAF Underlying Foundations

Figure 9: Reference Architecture of Modern D&A Platform Environment

Stream 3 is the “driver stream” that sets the priorities for the other six streams

Figure 10: Inmon’s Seven Streams Approach for Analytical Program Planning and Delivery

Conclusion

This part described the business case and highlighted the strategic objectives of a TSO that modernizes its D&A platform as a part of implementing its cyber-physical grid for energy transition. The TSO used a toolset to define the architecture requirements and develop the future state architecture of the D&A platform. The most essential tools used by the TSO include the TSO operating model, the D&A environment reference architecture, and the seven streams approach for program planning and delivery. The next part describes the architecture requirements, the future state architecture, and the implementation approach of the modern D&A platform.

MMS • Arthur Casals

Article originally posted on InfoQ.

Earlier this week, the first edition of the .NET Conf: Focus series for 2021 took place, featuring Windows desktop development topics. The event targeted developers of all stripes, covering both existing functionality in .NET 5 and upcoming projects such as .NET MAUI and Project Reunion. The focus conferences are free, one-day live-streamed events featuring speakers from the community and .NET product teams.

The focus series is a branch of the original .NET Conf, an annual event organized by the .NET community and Microsoft that showcases the latest developments for the .NET platform. Each focus event targets a specific .NET-related technology, providing a series of in-depth, hands-on sessions aimed at .NET developers.

.NET Conf: Windows differed from past events in the series because it focused on a single operating system (OS), which may seem strange considering the ongoing unification plan toward a cross-platform, multi-OS .NET framework. However, the focus was justified, considering the importance of upcoming projects such as .NET MAUI and the ongoing efforts related to ARM64 development.

The conference started with an overview of the latest developments related to .NET and desktop development. In this context, Scott Hunter, director of program management at Microsoft, talked about existing .NET 5 features related to desktop app development, such as self-contained single executable files and ClickOnce deployment. He also talked about the latest performance improvements and features in Windows Forms and WPF, assisted by live demonstrations from Olia Gavrysh and Dmitry Lyalin, both at Microsoft.

The following session, presented by Cathy Sullivan (program manager at Microsoft), featured the preview release of the .NET Upgrade Assistant, an automated tool that helps developers upgrade existing .NET applications to .NET 5. Although it is not a complete upgrade tool (developers will still have to finish the upgrade manually), its GitHub repository includes a link to a free e-book on porting ASP.NET apps to .NET Core that covers multiple migration scenarios.

The remaining sessions were short (approx. 30 minutes), covering topics mentioned in the keynote (such as WPF and Windows Forms, including the recent ARM64 support released with .NET 6 Preview 1), app deployment with ClickOnce, and WebView2, Microsoft’s new embedded web browser control used by Windows Forms. Other interesting sessions included demonstrations on building real-time desktop apps with Azure SignalR services (presented by Sam Basu) and accessing WinRT and Win32 APIs with .NET 5 (presented by Mike Battista and Angela Zhang, both at Microsoft).

The last three sessions focused on features and projects expected to ship with .NET 6 later this year. Daniel Roth, program manager at Microsoft, talked about building hybrid applications with Blazor. Hybrid applications are native apps that use web technologies for the UI, and support for cross-platform hybrid apps is an important feature of both .NET 6 and .NET MAUI.

Zarya Faraj and Miguel Ramos explained the concepts behind Project Reunion, which provides a unified development platform that can be used for all apps (Win32, Packaged, and UWP) targeting all Windows 10 versions. The event closed with a presentation by Maddy Leger and David Ortinau (both at Microsoft) on the future of native application development in .NET 6, which focused on .NET MAUI.

A relevant takeaway from the conference is that recent efforts to develop native device applications targeting multiple platforms revolve around .NET MAUI. However, it is important to note that .NET MAUI does not represent a universal .NET client application development model that merges native and web applications. This distinction matters, especially in light of the many cross-references to Blazor Desktop, another highly anticipated feature in .NET 6. Richard Lander, program manager for the .NET team at Microsoft, recently addressed this topic in multiple comments and posts:

I think folks may be missing the narrative on Blazor desktop. It is intended as a compelling choice for cross-platform client apps that enable using web assets. […] Blazor Desktop and MAUI are intended to be separate. Blazor Desktop will be hosted via a MAUI webview. MAUI will provide the desktop or mobile application container. MAUI will enable using native controls if that is needed/desired.

The next focus events are still undefined. The complete recording of this event is already available on YouTube. Recordings of all .NET Conf and .NET Conf: Focus events are available in curated playlists on MSDN Channel 9.

MMS • Bruno Couriol

Article originally posted on InfoQ.

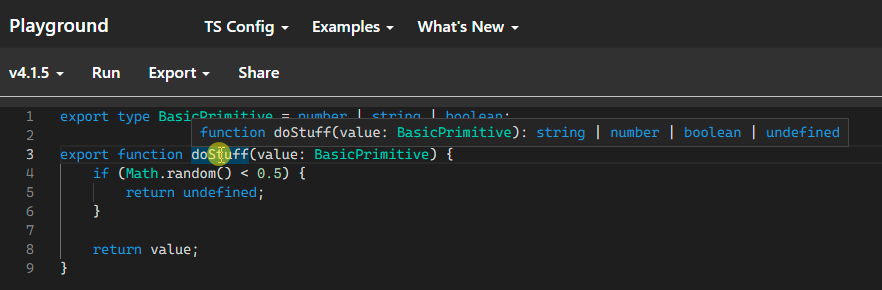

The TypeScript team announced the release of TypeScript 4.2, which features more flexible type annotations, stricter checks, extra configuration options, and a few breaking changes. Tuple types now allow rest elements in any position (instead of only in the last position). Type aliases are no longer expanded in type error messages, providing a better developer experience.

TypeScript 4.2 supports rest elements in any position in tuple types:

```typescript
type T1 = [...string[], number]; // Zero or more strings followed by a number
type T2 = [number, ...boolean[], string, string]; // Number followed by zero or more booleans followed by two strings
```

In previous versions, a rest element had to be in the last position (e.g., type T1 = [number, ...string[]];). It was thus not possible to strongly type functions with a variable number of parameters that ended with a fixed set of parameters:

```typescript
function f1(...args: [...string[], number]) {
  const strs = args.slice(0, -1) as string[];
  const num = args[args.length - 1] as number;
  // ...
}

f1(5);
f1('abc', 5);
f1('abc', 'def', 5);
f1('abc', 'def', 5, 6); // Error: only the final argument may be a number
```

The function f1 has an indefinite number of arguments of type string, followed by a parameter of type number. f1 can now be typed accurately. Multiple rest elements are not permitted. An optional element cannot precede a required element or follow a rest element. Types are normalized as follows:

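```typescript
type Tup3<T extends unknown[], U extends unknown[], V extends unknown[]> = [...T, ...U, ...V];

type TN1 = Tup3<[number], string[], [number]>;   // [number, ...string[], number]
type TN2 = Tup3<[number], [string?], [boolean]>; // [number, string | undefined, boolean]
type TN3 = Tup3<[number], string[], [boolean?]>; // [number, ...(string | boolean | undefined)[]]
type TN4 = Tup3<[number], string[], boolean[]>;  // [number, ...(string | boolean)[]]
type TN5 = Tup3<string[], number[], boolean[]>;  // (string | number | boolean)[]
```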
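A practical use of a rest element that is no longer last is an API taking a variable list of values followed by a callback. The following is a minimal sketch, assuming a hypothetical joinThen helper (it is not part of TypeScript or any library); the tuple type requires TypeScript 4.2 or later.

```typescript
// Hypothetical callback type used only for this illustration.
type Callback = (joined: string) => void;

// Any number of string parts, then exactly one trailing callback (TypeScript 4.2+).
function joinThen(...args: [...string[], Callback]): void {
  // The last element is the callback; everything before it is a string.
  const cb = args[args.length - 1] as Callback;
  const parts = args.slice(0, -1) as string[];
  cb(parts.join("-"));
}

let result = "";
joinThen("a", "b", "c", (s: string) => { result = s; });
console.log(result); // prints: a-b-c

// joinThen("a", 1, (s: string) => {}); // Type error: 1 is not a string
```

Because the callback's position is fixed by the type, callers get an error instead of a runtime surprise when they pass a non-string before it.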

TypeScript 4.2 provides a better developer experience when using type aliases:
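A minimal sketch of code affected by this change, modeled on the release notes: the alias BasicPrimitive comes from the text, while doStuff is a hypothetical function name. The types quoted in the comments are what an editor hover is expected to show, not program output.

```typescript
// Alias whose name TypeScript 4.2 now preserves in editor displays and errors.
type BasicPrimitive = number | string | boolean;

// Hypothetical function returning either its argument or undefined.
// Editor hover for the inferred return type:
//   TypeScript 4.1: string | number | boolean | undefined
//   TypeScript 4.2: BasicPrimitive | undefined
function doStuff(value: BasicPrimitive) {
  if (Math.random() < 0.5) {
    return undefined;
  }
  return value;
}
```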

In TypeScript 4.2, a type alias such as BasicPrimitive, which previous versions expanded (normalized) in some contexts (i.e., to number | string | boolean), is now preserved. The release notes emphasized the resulting improvements across several parts of the TypeScript experience:

You can avoid some unfortunately humongous types getting displayed, and that often translates to getting better .d.ts file output, error messages, and in-editor type displays in quick info and signature help. This can help TypeScript feel a little bit more approachable for newcomers.

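The abstract modifier can now be used on constructor signatures. An abstract class cannot be instantiated directly, so it cannot be assigned to a regular construct signature; marking the signature abstract accepts it and signals that the constructor is not meant to be called directly to create an instance.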

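```typescript
abstract class Shape {
  abstract getArea(): number;
}

// Error: cannot create an instance of an abstract class.
new Shape();

interface HasArea {
  getArea(): number;
}

// Error: cannot assign an abstract constructor type to a non-abstract constructor type.
let Ctor: new () => HasArea = Shape;

// Works: an abstract construct signature accepts an abstract class.
let AbstractCtor: abstract new () => HasArea = Shape;
```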

The new semantics for the abstract modifier allow writing mixin factories that support abstract classes.
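A minimal sketch of such a mixin factory, assuming hypothetical names (Shape, AbstractConstructor, withDescription, Square) chosen for illustration; it follows the general pattern from the TypeScript 4.2 release notes rather than any specific library.

```typescript
// Hypothetical abstract base class.
abstract class Shape {
  abstract getArea(): number;
}

// A constructor type that also accepts abstract classes (TypeScript 4.2+).
type AbstractConstructor<T> = abstract new (...args: any[]) => T;

// Mixin factory: adds describe() to any base class, abstract or not.
function withDescription<TBase extends AbstractConstructor<{ getArea(): number }>>(Base: TBase) {
  // The mixin class stays abstract, so it does not have to implement getArea() itself.
  abstract class Described extends Base {
    describe(): string {
      return `area=${this.getArea()}`;
    }
  }
  return Described;
}

// A concrete subclass must still implement the abstract getArea().
class Square extends withDescription(Shape) {
  constructor(private readonly side: number) {
    super();
  }

  getArea(): number {
    return this.side * this.side;
  }
}

console.log(new Square(3).describe()); // prints: area=9
```

Before 4.2, passing Shape to a factory constrained by a plain new (...args: any[]) => T signature was a type error, because abstract classes are not assignable to it.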

Destructured variables can now be explicitly marked as unused. Previously, some developers would write:

```typescript
const [Input, /* state */, /* actions */, meta] = input
```

for better maintainability and readability instead of

```typescript
const [Input, , , meta] = input
```

Those developers can now prefix unused variables with an underscore, which keeps them from being reported as unused under the noUnusedLocals flag:

```typescript
const [Input, _state, _actions, meta] = input
```

The new version of TypeScript also adds stricter checks for the in operator: "foo" in 42 now triggers a type error, because the right-hand operand cannot be a primitive. TypeScript’s uncalled function checks now also apply within && and || expressions. With the new noPropertyAccessFromIndexSignature flag set, properties that come from a string index signature can no longer be accessed with the dot operator (e.g., person.name) and must use element access instead (e.g., person["name"]). The explainFiles compiler flag (e.g., tsc --explainFiles) makes the compiler produce detailed information about resolved and processed files:

```
TS_Compiler_Directory/4.2.2/lib/lib.es5.d.ts
  Library referenced via 'es5' from file 'TS_Compiler_Directory/4.2.2/lib/lib.es2015.d.ts'
TS_Compiler_Directory/4.2.2/lib/lib.es2015.d.ts
  Library referenced via 'es2015' from file 'TS_Compiler_Directory/4.2.2/lib/lib.es2016.d.ts'

... More Library References...

foo.ts
  Matched by include pattern '**/*' in 'tsconfig.json'
```
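To make the in-operator check and the noPropertyAccessFromIndexSignature flag described above concrete, here is a minimal sketch. It assumes a tsconfig.json with "strict" and "noPropertyAccessFromIndexSignature" enabled; hasName, Options, and opts are hypothetical names.

```typescript
// Stricter `in` checks: `"foo" in 42` is rejected because the right-hand side is a
// primitive, so an unknown value is first narrowed to a non-null object.
function hasName(value: unknown): boolean {
  return typeof value === "object" && value !== null && "name" in value;
}

interface Options {
  verbose: boolean;       // declared property: dot access is always allowed
  [key: string]: boolean; // index signature: bracket access required under the flag
}

const opts: Options = { verbose: true, color: false };
const verbose = opts.verbose; // OK: declared property
const color = opts["color"];  // OK: element access to an index-signature key
// const color2 = opts.color; // Error under noPropertyAccessFromIndexSignature

console.log(hasName({ name: "x" }), hasName(42), verbose, color); // prints: true false true false
```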

TypeScript 4.2 also contains a few breaking changes. Type arguments in JavaScript files are not parsed as type arguments, meaning that the valid TypeScript code f<T>(100) is parsed in a JavaScript file as per the JavaScript spec, i.e., as (f < T) > (100). The .d.ts extension can no longer be used in import paths: import { Foo } from "./foo.d.ts"; may be replaced with any of the following:

```typescript
import { Foo } from "./foo.js";
import { Foo } from "./foo";
import { Foo } from "./foo/index.js";
```

TypeScript contains additional features and breaking changes. Developers are invited to read the full release notes. TypeScript is open-source software available under the Apache 2 license. Contributions and feedback are encouraged via the TypeScript GitHub project and should follow the TypeScript contribution guidelines and the Microsoft open-source code of conduct.

Presentation: Momentum > Urgency and Other Counter-intuitive Principles for Increasing Velocity

MMS • Elisabeth Hendrickson

Article originally posted on InfoQ. Visit InfoQ

Transcript

Hendrickson: My name is Elisabeth Hendrickson. Let’s talk about speed, more specifically, speed of software project delivery. It’s amazing: nobody ever says, “I want to figure out how to go slower and be more methodical and cautious.” Everyone I talk to typically wants to figure out how to go faster. This was true when I was a consultant. It’s been true in most of the jobs that I’ve held. Everyone wants to go faster, and yet our intuition is a terrible source of ideas for how to go faster. The most common thing I hear is, got to hold somebody’s feet to the fire. Fairly violent. Got to set deadlines, because that’s the way to create a sense of urgency. In my experience, these techniques tend to backfire. They don’t go quite the way people expect.

Pressuring Engineers to Deliver

In fact, let’s start with a story. This story takes place a few years ago, in an open office plan, back when we had those, before COVID. I want you to imagine, it’s me and one of my peers, the head of product management. The two of us are in a tense discussion. For our purposes, I’m going to call him Jay. That’s not his real name, but he needs a name in this story. Jay has been pressuring me for a while, not just on this particular day, about how to get the engineers to go faster. He’s frustrated with the pace of delivery. He has, after all, made commitments to our customers, and engineering is not holding up their end of the bargain. For the moment, I’m going to skip the fact that he made these commitments without actually consulting engineering, but that’s neither here nor there.

Jay and I are having a tense but still very professional discussion. Neither one of us is raising our voices. Neither one of us is losing it. We are clearly on opposite sides of a very critical issue. At this point in the conversation, Jay snaps. Jay says, “Come on, Elisabeth, you know developers. You have to light a fire under them to get anything done.” This does not go over well with me. In fact, you can probably imagine what’s going through my head. At this point, what I’m thinking is, what? No, I don’t know that. I don’t know that you have to light a fire under developers. The developers that I know all care deeply about delivering value. Nobody is malingering or being lazy. You don’t need to light a fire under them. In fact, what I do know is that we have too many top priorities in flight. We have too much work in progress. Nothing is getting all the way done, because we are attempting to do too much. Furthermore, your assumptions, Jay, about the duration that something should take are based largely on what you’re hearing from your product managers. I’m hearing from those same product managers. One of them cornered me to inform me that the particular chunk of work that was taking a couple of weeks was, in fact, the work of only a couple of days if we had competent developers, and that I should be firing anybody who couldn’t deliver said work in a couple of days. That conversation, incidentally, also did not go well.

I’m not saying this out loud to Jay. That would have been counterproductive. Inside my head, what I’m thinking is, you’re making assumptions about how long it should take to deliver based on estimates that somebody who is not the developer gave you, and the only people with valid estimates are the ones who are actually doing the work. Finally, I think that we’re probably confusing output and outcomes. You’re focused on output and on hurrying up delivery instead of on the outcomes that we actually want. We’re at risk of confusing speed and progress. You’re trying to manage by deadlines. The irony is that deadlines can ultimately make projects go slower. You see, this particular group was working on software that had been around for a while. There had been, over the course of the years, many people like Jay, who had tried to manage by deadline. As a result, there had been numerous circumstances where the developers had to make a trade-off, because something had to give. Sometimes the trade-off they made was against their own personal lives. They worked a lot of hours. The thing about working a lot of hours is that exhausted developers don’t produce good work. The code was incredibly complex and difficult to reason about.

Then the other thing that happens is when something has to give, inevitably, it often is in the code quality or in the tests. There were numerous places in the code where logic had been shoved in wherever it was expedient, instead of where it actually belonged. Of course, there had never been time to go clean things up and refactor to make the code easier to work with. Furthermore, writing good automated tests does take time. We had a set of tests, but they weren’t necessarily the right tests, and they didn’t run consistently. They tended to have flakes, tests that would fail for no good reason, causing our CI to go red. We couldn’t trust our CI. This was a natural outcome of having managed to deadlines, and now Jay was turning up the heat even harder on those deadlines. The engineers were in the impossible situation of having to figure out what trade-offs they could make. Frankly, we were pretty much out of trade-offs that we could make.

Risk Is Invisible

One of the most insidious things about this is that when a developer has to make a trade-off because of a deadline, what they’re really trading off is future risk against current velocity. That risk, it’s nearly invisible. People like Jay, who had put pressure on the development organization previously, probably didn’t stick around long enough to discover the implications of the decisions that the developers had made on their behalf. We found ourselves in a legacy code situation, and it was pretty difficult to dig out of it.

What Does Work?

I had to figure out how to frame a response to Jay. In my head, what I was thinking about was everything that I know, that did work. That’s what I really wanted to talk about today, what does work? I want to tell you a story. This is a good story. It’s a happy story. It’s a story of a project that was tremendously successful that delivered business value, where the engineering team was incredibly effective. We worked incredibly collaboratively with our product manager who was also the company founder. Once upon a time, back in 2007 or so, Drew McManus and Melissa Dyrdahl had formed a company called Bring Light. They engaged Pivotal Labs to build the website. Bring Light was a charitable giving app. This was long before GoFundMe was a thing. You can see from the UI, it’s a very 2007-ish website. This is a screenshot of the original Bring Light website. This website, it was a charitable giving website, which meant that it was financially critical. It had to take payments. It needed to stay up so that we could take payments.

It had a wealth of capabilities. A 501(c)(3) organization could register itself and, after going through a vetting process, could start setting up projects. Projects were the unit of funding. For example, in this particular screenshot, we see that the Humane Society of Silicon Valley had a project around raising funds that would save animals. There were all sorts of projects across all types of charities. Donors could not only donate to given projects and search for projects that matched their values and charitable giving goals, but could also organize themselves into giving groups. Then giving groups could challenge each other or challenge the giving group to raise a certain amount of money cumulatively.

As you see, there were a fair number of features. The team that delivered this was four engineers, and one product manager, Drew. There were just five of us, and we delivered it in four months. Furthermore, after the go-live date, there really wasn’t a whole lot more that needed to happen to realize the vision of what Bring Light was supposed to be. Ultimately, Drew ended up hiring a part-time developer for a few hours a month to do routine maintenance on the site. We went live with virtually no bugs, certainly none that were serious bugs. The maintenance cost was comparatively low. Eventually, Drew and Melissa sold Bring Light. Overall, I would say that this project was a phenomenal success, and we delivered quite quickly, as well as delivering with quality. At no time did Drew ever set deadlines. At no time did we feel pressured to cut corners.

Typical Sources of Friction

How did this work? Why was this such a success and so unusual for most software projects? Before I answer that, let’s take a look at some typical sources of friction on any given software project. From the time that someone has an idea to the time that it’s realized, delivered out in the real world, we’d like to believe that’s a straight shot, a straight path forward. It never is. These paths typically have twists and turns and speed bumps, which is what this illustration shows. What are typical sources of speed bumps? One is consistently slow feedback. Another is when you have to wait on someone else. When maybe there’s another engineering group that is responsible for maintaining the API that you have to rely on, and that group needs to add the endpoint for that API before you are able to do your work and deliver the new capability in the website. Or maybe that other group is an ops group, and you have to file a ticket and wait for your VM to be provisioned before you can stand up your staging site. Whatever it is, oftentimes, software development projects involve waiting.

Then once the waiting is over, there’s a whole bunch of manual work to do. It might be manual verification, or manual deployments, or mostly automated deployments except for those few little tiny things, or mostly automated everything except you have to go SSH into nodes in order to collect logs when something goes wrong. Whatever it is, manual work is often a source of friction. Then of course, after all of this, surprise, there are bugs. Because of course there are. You end up having to go back around and go through the entire cycle, run through all those speed bumps again, in order to deliver the fixes for the bugs. Excruciating.

Dedicated, Engaged, Self-Contained Team

We didn’t have any of that on Bring Light. None of it. We were a self-contained team with all the skills that we needed and full access to our staging environment and our production environment, full control of it. We did not have to file a ticket to get somebody else to go do a thing before we could proceed. We were all dedicated, specifically 100% to that project, except for Drew. Because remember, he was a founder of the company. He did have other things to do besides work the backlog with the engineers. The engineers were all 100% dedicated, no multitasking. We were all deeply engaged in the work. Drew, like I said, he was a special case. He split his time between being in the office with us, and making sure that we were all aligned on what we were building. Then going out into the wilds of Silicon Valley and trying to raise funds for his startup.

Single Flow of Work

We were working with a single flow of work. As is common with many agile projects, Drew, as the product manager, had the steering wheel. He got to say what we built and in what order. As engineers, we got to say how we were going to build it and how long it would take. It was a genuine partnership. There was none of this tension and negotiation between the groups. As soon as you start negotiating estimates, you’ve got a serious problem. Instead, we would give an estimate. Then, if Drew didn’t like the estimate, because it was more than he had mentally budgeted in terms of time for a given capability, we would have a conversation: if this is going to cost this much, what could we do for half the time? It never felt like a tense negotiation. It always felt like a partnership: we know that we only have so many hours total, so let’s figure out how to make the best possible use of them with the highest leverage from a business perspective. Drew was the one who could tell us about the business perspective.

Drew worked really hard to keep us all aligned by being so available to the engineers and so incredibly open to answering questions. He helped us calibrate what was and was not important to him. Consequently, we got really good at figuring out what kinds of bugs we should be looking for as we were developing and ensuring that they didn’t happen, versus what kinds of things he would eventually say he just didn’t care about. We would frequently go to him and say, “Drew, we noticed that when we do such-and-such on this site, we get this result. Is that something you care about?” Drew would say something like, “Yes, please just fix that,” or he would say, “No, I don’t care about that. Just move on.” Over time, we got much better at guessing what his response would be to a given thing.

Work In Small Shippable Units

Along the way, we were always working in small shippable units. This was a Rails app, and that did give us an advantage. It was relatively straightforward for us to deliver a capability that was all the way ready for production with nothing left to do. We had a shared definition of done. We worked to done, and we worked on small capabilities. A typical thing would take us somewhere between half a day and two or three days to deliver. We had fast feedback, because as we were working on those small shippable units, as developers, we were doing test-driven development. That meant that we were writing a little tiny executable unit test before we wrote the code that would make it pass. Then of course, we were doing the full cycle: red, green, clean. Write the test, watch it fail, write the code that makes it pass. Then clean up the code base to make it more malleable for the next little cycle of TDD.

Furthermore, Drew was amazing at giving us fast feedback. Even when Drew was out of the office, if he had an hour between meetings as he was trying to get VC funding, he would go sit in a Starbucks and hover over the project tracking software we were using, just waiting for us to deliver a feature, a new thing, so that he could try it out and accept or reject the work. We knew as engineers that he was hovering and waiting, and that if we delivered something he liked, there was a high probability that he would be demoing it in a demo half an hour from that point.

That is where I learned about momentum. Drew really is the one who, by example, taught me how important momentum is. We knew that minutes mattered to Drew. We never felt rushed. We never compromised our integrity with respect to engineering practices. We knew that we were in a partnership, and that we needed to deliver solid, high-quality code that would not put our ability to accept payments at risk, for example. At the same time, we also knew that Drew was waiting, never punitively, always eagerly waiting for the next thing. The way this played out was that there were several times when we were just inches away from being able to deliver something. If we hadn’t had that sense of momentum, that sense that Drew cares and wants to see this so that he can maybe demo it, we might have said, as lunchtime approached, “We’ll get back to this after lunch.” Instead, what we said was, “If we take 5 more minutes now, I think we could deliver this. Do you want to just take the 5 minutes and deliver this?” As a result, Drew got things sooner, and we got feedback sooner. It became this virtuous cycle. We were eager to get the feedback, so we were eager to deliver. I can’t emphasize enough the extent to which this was a sense of momentum and not a sense of pressure. Consequently, we never cut corners. We did make sure that we used every hour of every day as effectively as we possibly could.

What about Efficiency?

That is how this project, Bring Light, worked. That all sounds great. Don’t you want to live in that world? Sounds fabulous. It’s counterintuitive to so many people, who would say, but what about the efficiencies? It is a common thing to say that if we want to go faster, we should make the most efficient use possible of every single developer. That means that we want to ensure that the utilization of every developer is as close to 100% as possible, and that they are leaning into their specialties, where they’re going to have a much higher point of leverage. We’re going to create a system that’s really focused on efficient use of developer time. It’s a trap, incidentally. Eliyahu Goldratt describes that trap beautifully in “The Goal.” Our brains sometimes tell us that it seems intuitive: if we want to be efficient, we should make the most efficient use of everyone’s time. By contrast, on Bring Light we were focused on throughput through the entire system. We weren’t always operating in the most efficient way possible, yet the end result was quite effective.

The Importance of Shaving the Right Yak

I also want to take a quick pause to talk about yak shaving, and the importance of learning how to shave the right yaks. The other thing some people think about efficiency is that it will be more efficient if, while I am in this piece of code, I just go ahead and do all this other work that I know needs to happen anyway. Or that it will be more efficient if we hold off on delivering so that we can batch everything together, because that will mean less overhead. Part of what I learned on that project was the importance of shaving the right yak. That phrase probably seems a little bit weird to some of you, but it’s a common phrase in the software engineering field and elsewhere. It came out of the MIT AI Lab. Seth Godin has a lovely blog post describing his version of the yak story. I have my own. I’m going to tell it very briefly.

It’s a lovely Saturday afternoon, and you decide that it’s a perfect time to go trim your hedges. You go into the garage to get the hedge trimmers, and that’s when you realize, they’re not there. I lent them to the neighbor. I better go get them. You’re about to go over to your neighbor’s house to get your hedge trimmers, and that’s when you remember, the reason I haven’t asked for them back yet is that I still have their lawn furniture from the time that we did a socially distanced, small family gathering but needed enough seats outside for everybody. You head back into the garage to get the lawn furniture so that you can return it. That’s when you discover that the dog got to the cushions on the lawn furniture and they have all been ripped to shreds.

You’re about to go into the house to get the sewing kit so that you can re-stuff the cushions and sew them back up so that you can return the lawn furniture, so that you can get your hedge trimmers, so that you can trim your hedges because it’s the perfect day for it. That’s when you remember, your neighbor was bragging about how they had special ordered this from a catalog that specialized in fair trade, yak stuffed furniture. You realize that you can’t just re-stuff it with whatever poly-fill you happen to have on hand. That’s how you find yourself with trimmers and a bag packed in a suitcase heading to the airport hoping that despite COVID, you’ll be able to catch a flight to the Himalayas where you can shave a yak. Stuff the bag. Bring it back. Stuff the furniture. Sew it back up. Return it to the neighbor. Get your hedge clippers so that some number of weeks later, you would be able to trim your hedges.

Seth Godin, in describing the yak shaving problem, says, don’t shave the yak. Go to Lowe’s and get a new pair of hedge trimmers. I have a different take. My take is that the difference between a more senior engineer and a more junior engineer is that the senior engineer has learned which yaks to shave. It’s not that we never did the “while we’re here, let’s do this” thing on Bring Light. There were times that we did. It was always in service of the work that we were doing at the time. We were hyper-focused on delivering the capability that we were actually working on. When we chose to shave yaks, they were always the yaks that would do our near-term future selves a favor. We weren’t trying to future-proof for two years from that point. We knew that if we were going to have to go back and do this in the next couple of days anyway, and it would help things go faster now, it was the right digression. It was the right yak to shave.

Eliminate Friction

Ultimately, everything that we did on that project was all about eliminating friction, those speed bumps that get in the way. This wasn’t the only friction that I learned about at Labs. Eliminating friction involves identifying every source of an interrupt between idea and implementation. The things that I just talked about on Bring Light were really at a project level. This happens at a micro level every single day. I will admit that there are times when I get accustomed to certain speed bumps or sources of friction, and just start ignoring them. I think it’s a common thing.

In one particular case, I was working on a different project, not Bring Light, long before that, in the early 2000s, probably 2004 or so. I was pairing with Alex Chaffee. Alex taught me something so critical in that moment. I was relatively new to pairing. We were pairing on my personal laptop. He was helping me get something set up. My personal laptop was quirky. The mouse was a touchpad mouse, and the mouse had a tendency to move wherever it felt like on the screen. You’d be working along and things were going fine. Then all of a sudden, the mouse cursor would teleport to someplace else on the screen. You’d have to patiently get the mouse back where it was supposed to go.

As it was, Alex was exhibiting a fair amount of patience with me and my mousing, because he’s the person who memorizes keyboard shortcuts in order to eliminate that friction between the time he has an idea of how to express something in code and the actual writing of the code. Mousing does get in the way a bit on that. However, he was willing to put up with it. The first time he noticed that my mouse decided to teleport to some other part of the screen, he just looked at me and he said, “Can I get you an external mouse? We have external mice. An external mouse would be good right now.” I, being relatively inured to the pain of the mouse moving around, said, “No, I’m fine.” I was relatively new to pairing and was not picking up on the cues that my pair was giving me, but we’re going to gloss over that for the purposes of the story as well.

At this point, Alex’s knee started doing this. You can picture it. Somebody whose knee is bouncing. Then the mouse thing happened again, and Alex’s knee sped up a little bit. Alex turned to me and he said, “We have external mice. Would you like an external mouse? External mice are good.” I said, “No, it’s fine. This won’t take very long. It’ll take you longer to go get the mouse. Let’s just keep going.” We kept going. Alex’s knee is doing this. Alex suddenly shouted, “Three.” I looked around like, what? He wasn’t explaining. I just kept doing what I was doing. Alex’s knee. Then he says, “Four.” That’s when I realized he was counting the number of times that my quirky mouse had interrupted our flow. At that point, I said, “Ok, fine, go get an external mouse.”

Alex was right. In that moment, he taught me the value of seconds, and the cost of ignoring the sources of friction. It took less time to go get an external mouse and plug it in, and get it working. Even though that felt like a big interruption, it took less time to do that than it would have taken to recover each time the mouse got in the way. It’s an example of shaving the right yak. Alex taught me something very important about workflow as a developer. Ultimately, it’s about increasing momentum. At every step on the way, as we eliminate friction, we increase the possibility of momentum. Developing software goes from feeling like you’re driving a truck through sand to feeling like you’re skating across wonderful ice.

Epilogue

You may recall, we started this story with me standing with Jay in an open plan office having an intense discussion. What happened? He had just said, “Come on, Elisabeth, you know developers. You have to light a fire under them to get anything done.” I needed to frame a response. I took a very deep breath. I calmly said, “Jay, the pressure you’re putting on the team is going to backfire. We will end up going slower. Let’s work on establishing momentum, instead of increasing the pressure.” I really wish that I could tell you that in that moment, Jay had an epiphany and realized that I was right, and that we should work on momentum and a supportive culture that would help engineers do their best work. That is not exactly what happened. Jay and I really struggled to get along. He continued to put pressure on me. Honestly, I don’t remember what happened with the particular deadline that he was concerned about. In the grand scheme of things, that one particular deadline didn’t really matter.

Eventually, Jay decided that he wanted to go work on other things in the organization, and not have to deal with me and this particular engineering team. He moved on, and presumably put the same pressure on whatever group he moved on to. What happened to our group? You may recall, we were shipping enterprise software. It was fairly complex. It was enterprise software that had been around for a while, and had a number of circumstances where shortcuts had been made. We were slogging through the increased risk that had resulted from all of those shortcuts over time. This was ultimately why Jay was so frustrated with the pace of development, because there are no quick fixes and it does take time to address the underlying issues. The underlying sources of friction.

Over the course of the next two years, we managed to organize ourselves so that teams could focus. One of the differences between a fairly small-scope Rails web app and a honkin’ big piece of enterprise software is that the latter is not something you can develop with four people in four months. We did have multiple teams, and the teams had to coordinate, so we didn’t end up with fully self-contained teams. Still, we were able to structure ourselves to enable teams to focus. We changed our branching strategy. Historically, teams had worked in massive batch sizes. Instead of the ideal of small, incremental delivery, teams had historically had incredibly long-running branches that went well beyond even feature branches. There had been circumstances where we had lost huge amounts of work: it had ended up going to waste because, for whatever reason, the branch was never going to be merged back together with the other branches that were all being developed in long-running projects. We reduced the amount of work on any given branch and changed our branching strategy. We paid down a lot of technical debt. We invested heavily in test automation, in automating manual tasks, and in our CI.

Ultimately, I’m so proud of the team. We went from struggling to ship on an annual basis to being able to ship monthly, incredibly predictably. At that point, we could have trade-off discussions that weren’t about how we were going to make this deadline. They were more about: there’s going to be a bus leaving every month, so what do we want to have on it? Ultimately, these are the stories and the lessons that I have learned about how to speed up a team. It’s really hard to do this, and it’s incredibly valuable. Honestly, I think attempting to manage by the pressure of deadlines is an easy way out that doesn’t work all that well. Doing these things is how you speed up a team and get a sense of momentum.


MMS • Steef-Jan Wiggers

Article originally posted on InfoQ. Visit InfoQ

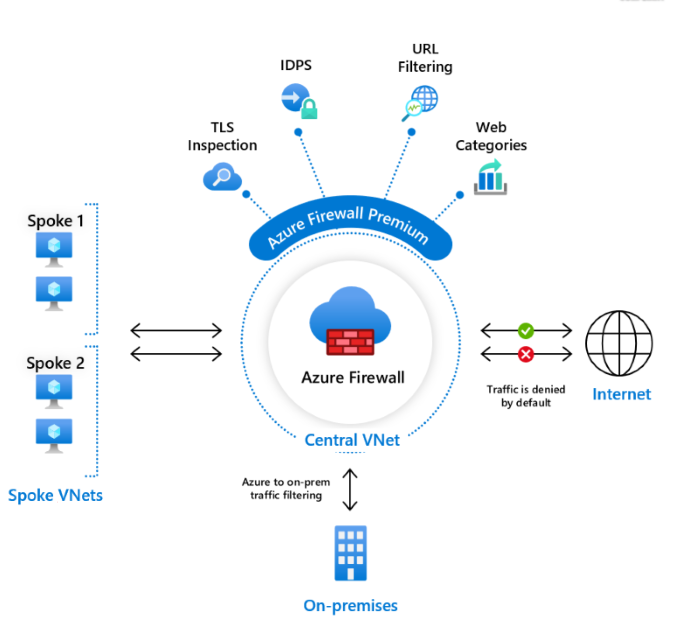

Microsoft Azure Firewall is a managed, cloud-based network security service that protects your Azure Virtual Network resources. The company recently announced a preview release of a premium version of the cloud-based network security service.

Azure Firewall became generally available during Ignite 2018 and has since received several updates, such as Threat Intelligence and Service Tags filters, Custom DNS, and IP Groups. It now has an additional Premium tier. According to the Azure documentation, the Premium tier includes the following features:

- TLS inspection – decrypts outbound traffic, processes the data, encrypts the data, and sends it to the destination.

- IDPS – a network intrusion detection and prevention system (IDPS) that allows users to monitor the network for malicious activity, log information about that activity, report it, and optionally attempt to block it.

- URL filtering – extends Azure Firewall’s FQDN filtering capability to consider an entire URL. For example, www.contoso.com/a/c instead of www.contoso.com.

- Web categories – administrators can allow or deny user access to website categories such as gambling websites, social media websites, etc.
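The difference between FQDN filtering and URL filtering is easier to see with a concrete example. The following minimal Python sketch shows the matching logic, along with a web-category check. It is a conceptual illustration only, not Azure Firewall's implementation or configuration API. The rule sets, the host-to-category table, and the functions `fqdn_allowed`, `url_allowed`, and `category_allowed` are all hypothetical.

```python
from urllib.parse import urlsplit

# Hypothetical rule tables for illustration; these are not Azure Firewall configuration objects.
FQDN_ALLOW = {"www.contoso.com"}        # FQDN rule: host name only
URL_ALLOW = {"www.contoso.com/a/c"}     # URL rule: host name plus path
CATEGORY_DENY = {"gambling", "social media"}
# Hypothetical host-to-category lookup; a real firewall would rely on a maintained categorization service.
SITE_CATEGORIES = {"casino.example": "gambling", "www.contoso.com": "business"}


def fqdn_allowed(url: str) -> bool:
    """FQDN filtering: compare only the host name and ignore the path."""
    return urlsplit(url).hostname in FQDN_ALLOW


def url_allowed(url: str) -> bool:
    """URL filtering: compare host name and path, so individual paths can be allowed."""
    parts = urlsplit(url)
    return f"{parts.hostname}{parts.path}" in URL_ALLOW


def category_allowed(url: str) -> bool:
    """Web-category filtering: deny when the host's category is on the deny list."""
    category = SITE_CATEGORIES.get(urlsplit(url).hostname, "uncategorized")
    return category not in CATEGORY_DENY


if __name__ == "__main__":
    for u in ("https://www.contoso.com/a/c", "https://www.contoso.com/b", "https://casino.example/"):
        print(u, fqdn_allowed(u), url_allowed(u), category_allowed(u))
```

The sketch highlights one design point. An FQDN rule compares only the host name, so it cannot distinguish `www.contoso.com/a/c` from any other path on the same site, whereas a URL rule also compares the path. For HTTPS traffic, the path is generally visible only after decryption, which is why full-URL matching ties in with the TLS inspection feature. The sketch simply assumes the URL is already available in clear text.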

Eliran Azulai, Principal Program Manager, Azure Networking, told InfoQ:

When it comes to network security, the key is to use cloud-native services to secure the network infrastructure and application delivery. To minimize attack surface, customers need network segmentation, threat protection, and encryption. Network segmentation helps prevent lateral movement and data exfiltration. Our customers can use Virtual Networks and Azure Firewall to perform network segmentation effectively. When it comes to threat protection, the most basic protection they must turn on is DDoS protection on all public IPs. We have added our unique intelligent threat protection to Azure Firewall to stay ahead of the attacks. Customers can also use IDPS to identify, alert and block malicious traffic. Finally, customers can encrypt communication channels across the cloud and hybrid networks with industry-leading encryption such as TLS.

Source: https://docs.microsoft.com/en-us/azure/firewall/premium-features

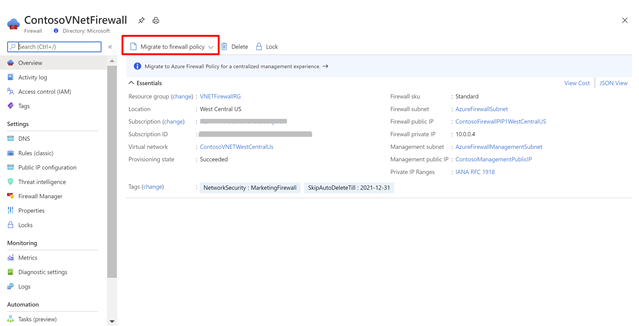

The premium release also includes a new Firewall Policy tier for configuring Firewall Premium. Standard-tier policies already existed, and Premium-tier policies can inherit from them. Moreover, Azure Firewalls configured with classic rules created before the premium release can easily be migrated to Firewall Policy using the Migrate to Firewall Policy option on the Azure Firewall resource page. This migration process doesn’t incur downtime.

Source: https://azure.microsoft.com/en-us/blog/azure-firewall-premium-now-in-preview-2/
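Policy inheritance can be pictured with a small model. The Python sketch below is a simplified, hypothetical illustration and is not the Azure Firewall Policy API. The `Policy` class, the rule names, and the tier check are all assumptions. The sketch also assumes that a child policy's effective rules are its parent's rules followed by its own. Going slightly beyond the text above, it further assumes that a policy may inherit only from a parent of the same or a lower tier.

```python
from dataclasses import dataclass, field
from typing import List, Optional

# Assumed tier ordering for this sketch: Premium ranks above Standard.
TIER_RANK = {"Standard": 0, "Premium": 1}


@dataclass
class Policy:
    """Hypothetical firewall policy with optional single-parent inheritance."""
    name: str
    tier: str
    rules: List[str] = field(default_factory=list)
    parent: Optional["Policy"] = None

    def __post_init__(self) -> None:
        # Assumption: a policy may inherit only from a parent of the same or lower tier,
        # e.g. a Premium policy inheriting from a Standard one.
        if self.parent is not None and TIER_RANK[self.tier] < TIER_RANK[self.parent.tier]:
            raise ValueError(
                f"{self.tier} policy '{self.name}' cannot inherit from "
                f"{self.parent.tier} policy '{self.parent.name}'"
            )

    def effective_rules(self) -> List[str]:
        # Assumption: inherited rules come before (take precedence over) the policy's own rules.
        inherited = self.parent.effective_rules() if self.parent else []
        return inherited + self.rules


if __name__ == "__main__":
    base = Policy("org-baseline", "Standard", ["allow-dns", "deny-known-bad"])
    app = Policy("app-premium", "Premium", ["allow-www.contoso.com/a/c"], parent=base)
    print(app.effective_rules())
```

Modeling inheritance as a parent reference, rather than copying rules into the child, means that a change to the base policy automatically shows up in every policy that inherits from it.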

Azure Firewall is not the only firewall offering available to Microsoft Azure customers. They can also provision other firewall solutions through the Azure Marketplace, such as Barracuda, Palo Alto, Fortinet, and Check Point. Marius Sandbu, a guild lead for Public Cloud at TietoEVRY, compared Azure Firewall Premium to the third-party offerings from Check Point, Palo Alto, and Cisco in a blog post:

- Azure Firewall is a managed service that runs as active/active and scales automatically depending on traffic flow. A third-party network virtual appliance (NVA), by contrast, requires a complex IaaS deployment, and its throughput depends on the size of the virtual machines.

- Azure Firewall is fully managed through Azure Resource Manager. If your environment has adopted a cloud-based operating model and is automated, you can change and update Azure Firewall using the same code structure/framework as the rest of the environment. This also makes deployment simpler than with third-party products.

- Much of the logic that differentiates firewall vendors lies in their rule engines and built-in threat intelligence. However, Microsoft can provide a somewhat comparable threat database using the Intelligent Security API.

- If the organization already uses supporting services such as Azure Monitor and Sentinel for SIEM/SOC, Azure Firewall makes more sense, since you can build on existing knowledge to create dashboards and monitoring points.

In addition, Azulai told InfoQ:

Azure Firewall Premium is a next-generation firewall with capabilities that are required for highly sensitive and regulated environments. As the world increasingly shifts toward digitization, it’s imperative that our customers are able to protect and rely on their virtual network infrastructures using products like Azure Firewall Premium from trusted companies like Microsoft.

More details about Azure Firewall are available on the documentation landing page. Lastly, pricing for the premium tier is discounted by 50% during the preview.

NoSQL Market Report 2021 – Global Industry Analysis, Business Prospect, Gross Margin Analysis …

MMS • RSS

Posted on nosqlgooglealerts. Visit nosqlgooglealerts

The global “NoSQL Market” report provides in-depth information about the NoSQL market. It covers a market overview, top vendors, key market highlights, product types, market drivers, challenges, trends, the market landscape, market size and forecast, a five forces analysis, and key leading countries/regions. The report offers an overview of revenue, demand, and supply data, future costs, and development analysis over the projected period 2021-2025. The NoSQL market report contains a comprehensive market and vendor landscape in addition to a SWOT analysis of the key vendors.

Get a Sample Copy of the Report – https://www.marketreportsworld.com/enquiry/request-sample/16363394

- Regarding the COVID-19 outbreak, Chapter 2.2 of this report analyzes the impact of COVID-19 on the global economy and the NoSQL industry.

- Chapter 3.7 covers the analysis of the impact of COVID-19 from the perspective of the industry chain.

- In addition, Chapters 7-11 consider the impact of COVID-19 on regional economies.

To understand how the COVID-19 impact is covered in this report, get a sample copy of the report at – https://www.marketreportsworld.com/enquiry/request-sample/16363394

The NoSQL market report offers detailed coverage of the market, including the industry chain structure, definitions, applications, and classifications. It offers a SWOT analysis of NoSQL market segments and provides helpful insights into all the leading trends of the NoSQL market. It delivers a comprehensive study of all the segments and shares information on the leading regions in the market. It also provides statistical data on all recent developments in the market. Its company profile section comprises a basic overview along with revenue and strategic analysis. The NoSQL market analysis covers international markets, including development trends, competitive landscape analysis, investment plans, business strategy, opportunities, and the development status of key regions. The report also states import/export consumption, supply and demand figures, cost, industry share, policy, price, revenue, and gross margins.

The top manufacturers listed for the global NoSQL market are:

- SAP

- MarkLogic

- IBM Cloudant

- MongoDB

- Redis

- MongoLab

- Amazon Web Services

- Basho Technologies

- MapR Technologies

- Couchbase

- ArangoDB

- DataStax

- Aerospike

- Apache

- CloudDB

- Oracle

- RavenDB

- Neo4j

- Microsoft

The NoSQL Market Analysis Report contains an analytical and statistical brief on the market overview, growth, demand, and forecast for the NoSQL industry. Highlights of the report include the key market dynamics of the sector. It gives various definitions and classifications of the industry's applications. It also describes the industry's chain structure, including upstream raw materials, sourcing strategy, and downstream buyers. The report profiles the key manufacturers in detail, with granular analysis of market share, production technology, market entry strategies, revenue forecasts, and regional analysis of the market. Key strategic activities in the market, including product developments, mergers and acquisitions, and partnerships, are also discussed.

Enquire before purchasing this report – https://www.marketreportsworld.com/enquiry/pre-order-enquiry/16363394

Market by Type:

- Key-Value Store

- Document Databases

- Column-Based Stores

- Graph Databases

Market by Application:

- Retail

- Online gaming

- IT

- Social network development

- Web applications management

- Government

- BFSI

- Healthcare

- Education

- Others

Market by Region:

- North America

- Europe

- Asia-Pacific

- South America

- Middle East & Africa

Points Covered in The Report:

- The report discusses the major players involved in the market, such as manufacturers, raw material suppliers, equipment suppliers, end users, traders, and distributors.

- Complete company profiles are provided. The report also includes each company's capacity, production, price, revenue, cost, gross, gross margin, sales volume, sales revenue, consumption, growth rate, imports, exports, supply, future strategies, and technological developments.

- The NoSQL market report contains a SWOT analysis of the market. Finally, the report contains a conclusion section that includes the opinions of industry experts.

- Data and information are provided by manufacturer, region, type, application, etc., and custom research can be added according to specific requirements.

- The growth factors of the market are discussed in detail, along with an explanation of the market's different end users.

Key questions answered in the report:

- What will the growth rate of the NoSQL market be in 2025?

- What are the key factors driving the global NoSQL market?

- Who are the key manufacturers in the NoSQL market space?

- What are the market opportunities, market risks, and market overview of the NoSQL market?

- What are the sales, revenue, and price analyses of the top manufacturers in the NoSQL market?

- Who are the distributors, traders, and dealers in the NoSQL market?

- What opportunities and threats do vendors face in the global NoSQL industry?

- What are the sales, revenue, and price analyses by type and application in the NoSQL market?

- What are the sales, revenue, and price analyses by region in the NoSQL industry?

Purchase this report (Price 3500 USD for single user license) – https://www.marketreportsworld.com/purchase/16363394

The NoSQL Market 2021 global industry research report is a professional, in-depth study of market size, growth, share, and trends, as well as an industry analysis. The report begins with an overview of the industry chain structure and describes the upstream segment. It then analyzes market size and forecasts by geography, type, and end-use segment. It also introduces an overview of competition among the major companies, along with company profiles. Market price and channel features are also covered. Furthermore, the report covers market size, the revenue share of each segment and its sub-segments, and forecast figures.

Research objectives:

- To understand the structure of the NoSQL market by identifying its various subsegments.

- To focus on the key global NoSQL manufacturers and to define, describe, and analyze their sales volume, value, market share, market competition landscape, SWOT analysis, and development plans for the next few years.

- To analyze the NoSQL submarkets with respect to individual growth trends, future prospects, and their contribution to the total market.

- To share detailed information about the key factors influencing the growth of the market (growth potential, opportunities, drivers, industry-specific challenges and risks).

- To project the consumption of NoSQL submarkets, with respect to key regions (along with their respective key countries).

- To analyze competitive developments such as expansions, agreements, new product launches, and acquisitions in the market.

- To strategically profile the key players and comprehensively analyze their growth strategies.

Years considered for this report:

- Historical Years: 2015-2021

- Base Year: 2021

- Estimated Year: 2021

- Forecast Period: 2021-2025

Detailed TOC of Global NoSQL Market Study 2021-2025

1 NoSQL Introduction and Market Overview

1.1 Objectives of the Study

1.2 Overview of NoSQL

1.3 Scope of The Study

1.3.1 Key Market Segments

1.3.2 Players Covered

1.3.3 COVID-19’s impact on the NoSQL industry

1.4 Methodology of The Study

1.5 Research Data Source

2 Executive Summary

2.1 Market Overview

2.1.1 Global NoSQL Market Size, 2015 – 2021

2.1.2 Global NoSQL Market Size by Type, 2015 – 2021

2.1.3 Global NoSQL Market Size by Application, 2015 – 2021

2.1.4 Global NoSQL Market Size by Region, 2015 – 2025

2.2 Business Environment Analysis

2.2.1 Global COVID-19 Status and Economic Overview

2.2.2 Influence of COVID-19 Outbreak on NoSQL Industry Development

3 Industry Chain Analysis

3.1 Upstream Raw Material Suppliers of NoSQL Analysis

3.2 Major Players of NoSQL

3.3 NoSQL Manufacturing Cost Structure Analysis

3.3.1 Production Process Analysis

3.3.2 Manufacturing Cost Structure of NoSQL

3.3.3 Labor Cost of NoSQL

3.4 Market Distributors of NoSQL

3.5 Major Downstream Buyers of NoSQL Analysis

3.6 The Impact of Covid-19 From the Perspective of Industry Chain