Month: December 2021

MMS • RSS

Posted on mongodb google news. Visit mongodb google news

MongoDB, Inc. (NASDAQ:MDB) was the target of unusually large options trading on Wednesday. Stock investors purchased 23,831 put options on the stock. This is an increase of 2,157% compared to the typical daily volume of 1,056 put options.

MongoDB, Inc. (NASDAQ:MDB) was the target of unusually large options trading on Wednesday. Stock investors purchased 23,831 put options on the stock. This is an increase of 2,157% compared to the typical daily volume of 1,056 put options.

Several brokerages have commented on MDB. UBS Group upped their price objective on MongoDB from $300.00 to $450.00 and gave the company a “neutral” rating in a research report on Friday, September 3rd. The Goldman Sachs Group upped their price objective on MongoDB from $475.00 to $545.00 and gave the company a “buy” rating in a research report on Wednesday, December 8th. Mizuho increased their price target on MongoDB from $475.00 to $490.00 and gave the stock a “neutral” rating in a research report on Tuesday, December 7th. Zacks Investment Research downgraded MongoDB from a “buy” rating to a “hold” rating in a research report on Friday, September 10th. Finally, Piper Sandler increased their price target on MongoDB from $525.00 to $585.00 and gave the stock an “overweight” rating in a research report on Tuesday, December 7th. Four research analysts have rated the stock with a hold rating and thirteen have given a buy rating to the company. According to data from MarketBeat, the stock has a consensus rating of “Buy” and an average target price of $539.59.

In related news, CRO Cedric Pech sold 279 shares of the stock in a transaction on Monday, October 4th. The stock was sold at an average price of $460.55, for a total transaction of $128,493.45. The transaction was disclosed in a document filed with the SEC, which is accessible through this hyperlink. Also, Director Charles M. Hazard, Jr. sold 1,667 shares of the stock in a transaction on Friday, October 1st. The shares were sold at an average price of $470.01, for a total value of $783,506.67. The disclosure for this sale can be found here. Insiders have sold a total of 76,223 shares of company stock worth $37,834,146 over the last ninety days. Insiders own 7.40% of the company’s stock.

Access our premier research platform that includes MarketBeat Daily Premium, portfolio monitoring tools, stock screeners, research tools, a real-time news feed, email and SMS alerts, the MarketBeat Idea Engine, proprietary brokerage rankings, extended data export tools and much more. Save 50% Your 2022 Subscription. Just $1.00 for the first 30 days.

A number of institutional investors and hedge funds have recently modified their holdings of MDB. Price T Rowe Associates Inc. MD boosted its stake in shares of MongoDB by 191.2% in the second quarter. Price T Rowe Associates Inc. MD now owns 5,766,896 shares of the company’s stock worth $2,084,848,000 after acquiring an additional 3,786,467 shares during the last quarter. Moors & Cabot Inc. purchased a new position in MongoDB during the third quarter worth approximately $601,000. Vanguard Group Inc. lifted its position in MongoDB by 7.9% during the second quarter. Vanguard Group Inc. now owns 5,378,537 shares of the company’s stock worth $1,944,448,000 after buying an additional 391,701 shares in the last quarter. Growth Interface Management LLC purchased a new position in MongoDB during the third quarter worth approximately $86,758,000. Finally, Caas Capital Management LP purchased a new position in MongoDB during the second quarter worth approximately $65,542,000. 88.50% of the stock is currently owned by institutional investors.

MongoDB stock traded down $5.66 during midday trading on Thursday, hitting $532.35. 222,557 shares of the company were exchanged, compared to its average volume of 820,014. The firm has a market capitalization of $35.54 billion, a PE ratio of -113.64 and a beta of 0.66. MongoDB has a fifty-two week low of $238.01 and a fifty-two week high of $590.00. The business has a fifty day simple moving average of $521.81 and a two-hundred day simple moving average of $449.41. The company has a debt-to-equity ratio of 1.71, a quick ratio of 4.75 and a current ratio of 4.75.

MongoDB (NASDAQ:MDB) last issued its quarterly earnings data on Monday, December 6th. The company reported ($0.11) EPS for the quarter, beating the consensus estimate of ($0.38) by $0.27. MongoDB had a negative return on equity of 101.71% and a negative net margin of 38.32%. The company had revenue of $226.89 million for the quarter, compared to analyst estimates of $205.18 million. During the same period last year, the company earned ($0.98) earnings per share. MongoDB’s quarterly revenue was up 50.5% compared to the same quarter last year. As a group, equities analysts expect that MongoDB will post -4.56 earnings per share for the current year.

MongoDB Company Profile

MongoDB, Inc engages in the development and provision of a general purpose database platform. The firm’s products include MongoDB Enterprise Advanced, MongoDB Atlas and Community Server. It also offers professional services including consulting and training. The company was founded by Eliot Horowitz, Dwight A.

See Also: What is the Bid-Ask Spread?

This instant news alert was generated by narrative science technology and financial data from MarketBeat in order to provide readers with the fastest and most accurate reporting. This story was reviewed by MarketBeat’s editorial team prior to publication. Please send any questions or comments about this story to [email protected]

Should you invest $1,000 in MongoDB right now?

Before you consider MongoDB, you’ll want to hear this.

MarketBeat keeps track of Wall Street’s top-rated and best performing research analysts and the stocks they recommend to their clients on a daily basis. MarketBeat has identified the five stocks that top analysts are quietly whispering to their clients to buy now before the broader market catches on… and MongoDB wasn’t on the list.

While MongoDB currently has a “Buy” rating among analysts, top-rated analysts believe these five stocks are better buys.

Article originally posted on mongodb google news. Visit mongodb google news

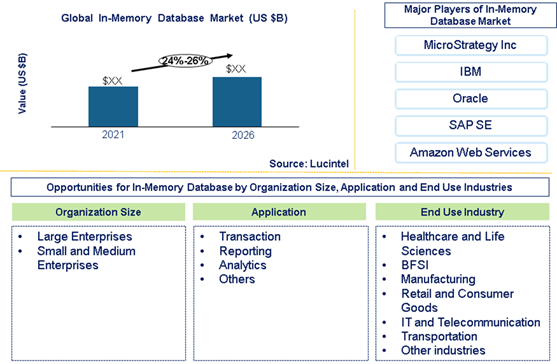

In-Memory Database Market is expected to grow at a CAGR of 19% to 21% from 2021 to 2026

MMS • RSS

Posted on nosqlgooglealerts. Visit nosqlgooglealerts

Trends, opportunities and forecast in In-memory database market to 2026 by data type (relational, NoSQL and NewSQL), application (transaction, reporting, analytics, and others), processing type (online analytical processing (OLAP), and online transaction processing (OLTP)), organization size (large enterprises and small and medium enterprises), end use industry , and region

Lucintel’s latest market report analyzed that in-memory database provides attractive opportunities in the healthcare and life science, BFSI, manufacturing, retail and consumer goods, IT and telecommunication, and transportation industries. The In-memory database market is expected to grow at a CAGR of 19% to 21%. In this market, NewSQL is the largest segment by data type, whereas BFSI is largest by end use industry.

Download Brochure of this report by clicking on https://www.lucintel.com/in-memory-database-market.aspx Based on data type, the in-memory database market is segmented into relational, NoSQL and NewSQL), application (transaction, reporting, analytics, and others. The NewSQL segment accounted for the largest share of the market in 2020 and is expected to register the highest CAGR during the forecast period, due to better performance to scale online transaction processing as compared to other in-memory database solutions.

Browse in-depth TOC on “In-Memory Database Market”

XX – Tables

XX – Figures

150 – Pages

The In-Memory Database Market is marked by the presence of several big and small players. Some of the prominent players offering in-memory database include Microsoft, IBM, Oracle, SAP SE, Teradata, Amazon Web Services, Tableau, Kognitio, VoltDB, and DataStax

This unique research report will enable you to make confident business decisions in this globally competitive marketplace. For a detailed table of contents, contact Lucintel at +1-972-636-5056 or click on this link helpdesk@lucintel.com.

About Lucintel

Lucintel, the premier global management consulting and market research firm, creates winning strategies for growth. It offers market assessments, competitive analysis, opportunity analysis, growth consulting, M&A, and due diligence services to executives and key decision-makers in a variety of industries. For further information, visit www.lucintel.com.

Media Contact

Company Name: Lucintel

Contact Person: Brandon Fitzgerald

Email: Send Email

Phone: 303.775.0751

Address:8951 Cypress Waters Blvd., Suite 160

City: Dallas

State: TEXAS

Country: United States

Website: www.lucintel.com

MMS • RSS

Posted on mongodb google news. Visit mongodb google news

If you’re looking for a VPN server to host in-house, look no further than the AlmaLinux/Pritunl combination. See how easy it is to get this service up and running.

Getty Images/iStockphoto

Pritunl is an open source VPN server you can easily install on your Linux servers to virtualize your private networks. This particular VPN solution offers a well-designed web UI for easy administration and management. All traffic between clients and server is encrypted and the service uses MongoDB, which means it includes support for replication.

I’ve walked you through the process of installing Pritunl on Ubuntu Server 20.04 and now I want to do the same with AlmaLinux 8.5. You should be able to get this VPN solution up and running in minutes.

SEE: Password breach: Why pop culture and passwords don’t mix (free PDF) (TechRepublic)

What you’ll need

To successfully install Pritunl on AlmaLinux, you’ll need a running/updated instance of the OS and a user with sudo privileges. You’ll also need a domain name that points to the hosting server (so users can access the VPN from outside your network).

How to configure the firewall

The first thing we’ll do is configure the AlmaLinux firewall. Let’s start by allowing both HTTP and HTTPS traffic in with the commands:

sudo firewall-cmd --permanent --add-service=http sudo firewall-cmd --permanent --add-service=https

Then, we’ll reload the firewall with:

sudo firewall-cmd --reload

How to install MongoDB

Next, we’ll install the MongoDB database. Create a new repo file with:

sudo nano /etc/yum.repos.d/mongodb-org-4.4.repo

Paste the following into the new file:

[mongodb-org-4.4] name=MongoDB Repository baseurl=https://repo.mongodb.org/yum/redhat/$releasever/mongodb-org/4.4/x86_64/ gpgcheck=1 enabled=1 gpgkey=https://www.mongodb.org/static/pgp/server-4.4.asc

Note: There’s a newer version of MongoDB (version 5), but I have yet to successfully get it to install on AlmaLinux. Because of that, I’m going with version 4.4.

Save and close the file.

Install MongoDB with:

sudo dnf install mongodb-org -y

Start and enable MongoDB with:

sudo systemctl enable --now mongod

SEE: VPN and mobile VPN: How to pick the best security solution for your company (TechRepublic Premium)

How to install Pritunl Server

Next, we’ll install Pritunl. Create the repo file with:

sudo nano /etc/yum.repos.d/pritunl.repo

In that file, paste the following:

[pritunl] name=Pritunl Repository baseurl=https://repo.pritunl.com/stable/yum/centos/8/ gpgcheck=1 enabled=1

Save and close the file.

Install the EPEL repository with:

sudo dnf install epel-release -y

Import the Pritunl GPG keys with:

gpg --keyserver hkp://keyserver.ubuntu.com --recv-keys 7568D9BB55FF9E5287D586017AE645C0CF8E292A gpg --armor --export 7568D9BB55FF9E5287D586017AE645C0CF8E292A > key.tmp; sudo rpm --import key.tmp; rm -f key.tmp

Install Pritunl with the command:

sudo dnf install pritunl -y

When the installation completes, start and enable the service with:

sudo systemctl enable pritunl --now

How to increase the Open File Limit

To prevent connection issues to the Pritunl server when it’s under a higher load, we need to increase the open file limit. To do this, issue the following commands:

sudo sh -c 'echo "* hard nofile 64000" >> /etc/security/limits.conf' sudo sh -c 'echo "* soft nofile 64000" >> /etc/security/limits.conf' sudo sh -c 'echo "root hard nofile 64000" >> /etc/security/limits.conf' sudo sh -c 'echo "root soft nofile 64000" >> /etc/security/limits.conf'

How to access the Pritunl web UI

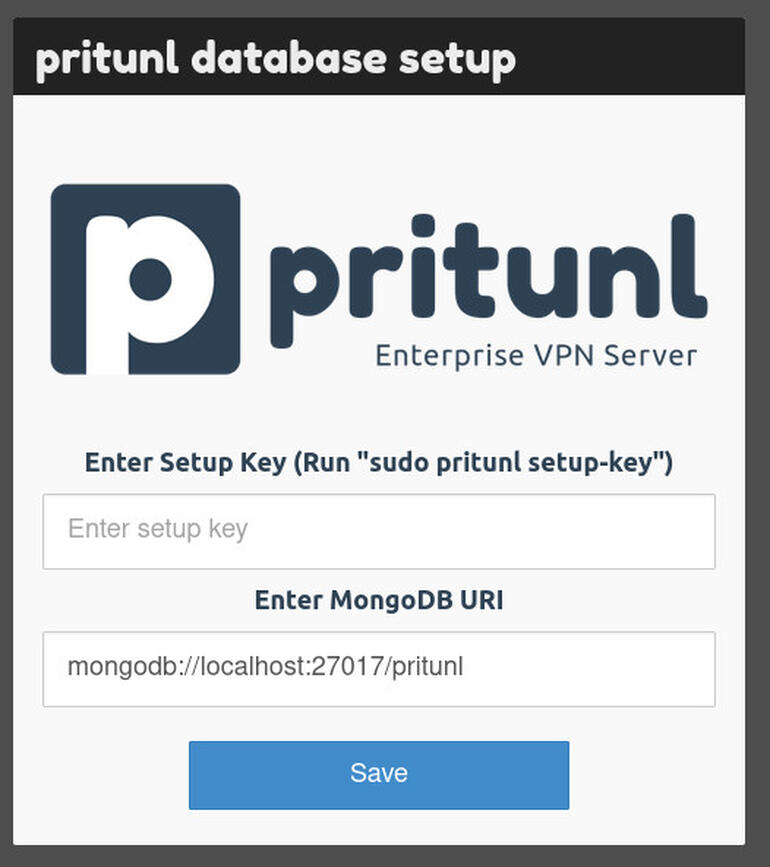

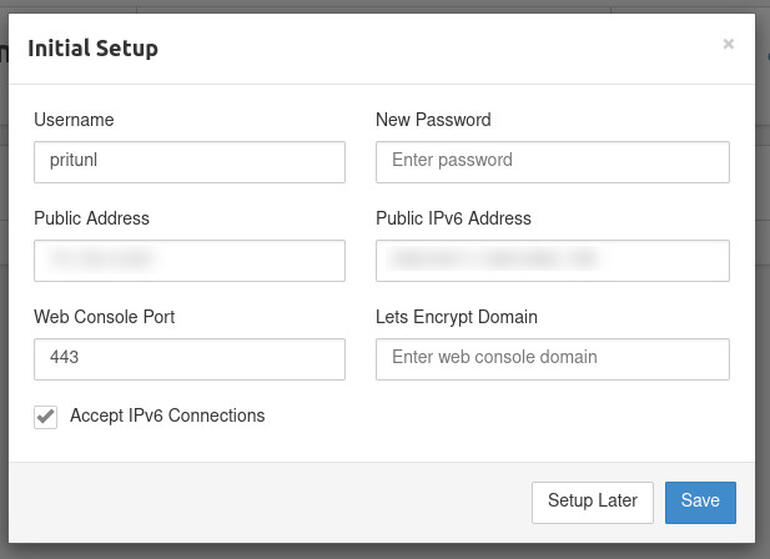

Give the service a moment to start and then point a web browser to https://SERVER (where SERVER is either the IP address or domain of the hosting server). You should be greeted by the Pritunl database setup window (Figure A).

Figure A

The Pritunl database setup window is ready for you to continue.

To continue, you must generate a setup key with the command (run on the hosting server):

sudo pritunl setup-key

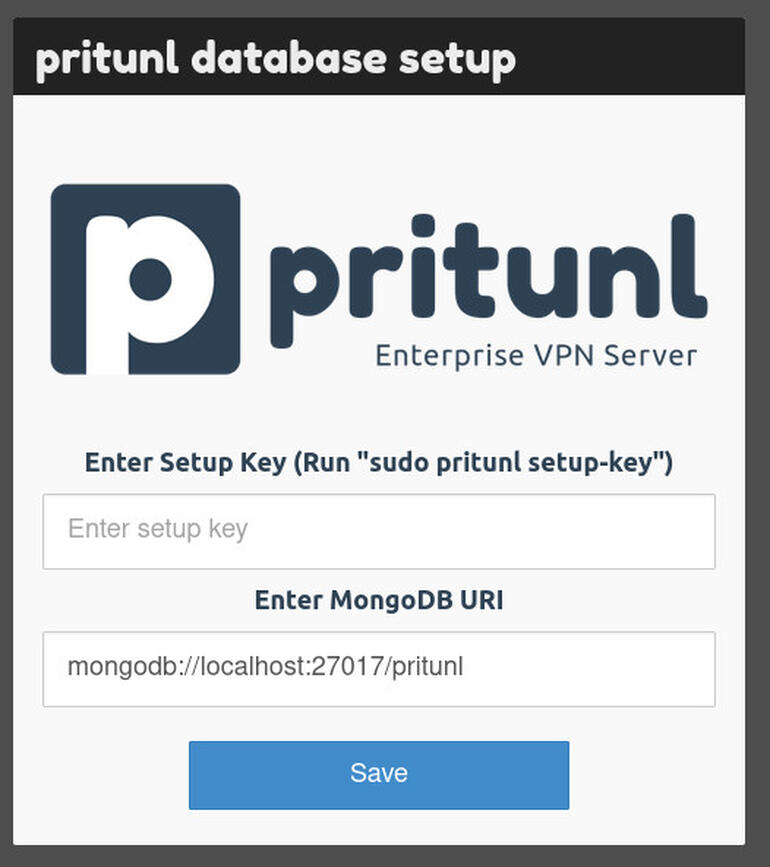

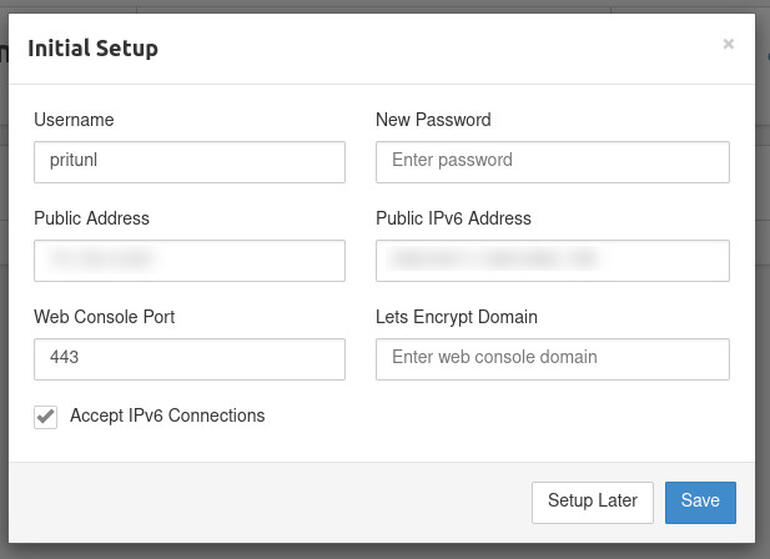

This will generate a random string of characters that you copy and paste into the Setup Key text area of the Pritunl database setup window. After pasting the key, click Save and wait for the database to be upgraded. You will then be presented with the Pritunl login window. Before you log in, you must retrieve the default login credentials with the command:

sudo pritunl default-password

The above command will print out both the username and password for you to use to log into the Pritunl web UI. Make sure to save those credentials. Once you’ve successfully logged in, you’ll be prompted to change the admin user’s password and complete the initial setup (Figure B).

Figure B

Completing the Pritunl initial setup

And there you go. You now have the Pritunl VPN server up and running on AlmaLinux 8.5. At this point, you can configure the server to meet the needs of your business and users.

Also see

Subscribe to TechRepublic’s How To Make Tech Work on YouTube for all the latest tech advice for business pros from Jack Wallen.

Article originally posted on mongodb google news. Visit mongodb google news

MMS • RSS

Posted on nosqlgooglealerts. Visit nosqlgooglealerts

New Jersey, United States,- The latest report published by Verified Market Research shows that the NoSQL Database Market is likely to garner a great pace in the coming years. Analysts examined market drivers, confinements, risks and openings in the world market. The NoSQL Database report shows the likely direction of the market in the coming years as well as its estimates. A close study aims to understand the market price. By analyzing the competitive landscape, the report’s authors have made a brilliant effort to help readers understand the key business tactics that large corporations use to keep the market sustainable.

The report includes company profiling of almost all important players of the NoSQL Database market. The company profiling section offers valuable analysis on strengths and weaknesses, business developments, recent advancements, mergers and acquisitions, expansion plans, global footprint, market presence, and product portfolios of leading market players. This information can be used by players and other market participants to maximize their profitability and streamline their business strategies. Our competitive analysis also includes key information to help new entrants to identify market entry barriers and measure the level of competitiveness in the NoSQL Database market.

Get Full PDF Sample Copy of Report: (Including Full TOC, List of Tables & Figures, Chart) @ https://www.verifiedmarketresearch.com/download-sample/?rid=129411

Key Players Mentioned in the NoSQL Database Market Research Report:

Objectivity Inc, Neo Technology Inc, MongoDB Inc, MarkLogic Corporation, Google LLC, Couchbase Inc, Microsoft Corporation, DataStax Inc, Amazon Web Services Inc & Aerospike Inc.

NoSQL Database Market Segmentation:

NoSQL Database Market, By Type

• Graph Database

• Column Based Store

• Document Database

• Key-Value Store

NoSQL Database Market, By Application

• Web Apps

• Data Analytics

• Mobile Apps

• Metadata Store

• Cache Memory

• Others

NoSQL Database Market, By Industry Vertical

• Retail

• Gaming

• IT

• Others

The global market for NoSQL Database is segmented on the basis of product, type, services, and technology. All of these segments have been studied individually. The detailed investigation allows assessment of the factors influencing the NoSQL Database Market. Experts have analyzed the nature of development, investments in research and development, changing consumption patterns, and growing number of applications. In addition, analysts have also evaluated the changing economics around the NoSQL Database Market that are likely affect its course.

The regional analysis section of the report allows players to concentrate on high-growth regions and countries that could help them to expand their presence in the NoSQL Database market. Apart from extending their footprint in the NoSQL Database market, the regional analysis helps players to increase their sales while having a better understanding of customer behavior in specific regions and countries. The report provides CAGR, revenue, production, consumption, and other important statistics and figures related to the global as well as regional markets. It shows how different type, application, and regional segments are progressing in the NoSQL Database market in terms of growth.

Get Discount On The Purchase Of This Report @ https://www.verifiedmarketresearch.com/ask-for-discount/?rid=129411

NoSQL Database Market Report Scope

| ATTRIBUTES | DETAILS |

|---|---|

| ESTIMATED YEAR | 2022 |

| BASE YEAR | 2021 |

| FORECAST YEAR | 2029 |

| HISTORICAL YEAR | 2020 |

| UNIT | Value (USD Million/Billion) |

| SEGMENTS COVERED | Types, Applications, End-Users, and more. |

| REPORT COVERAGE | Revenue Forecast, Company Ranking, Competitive Landscape, Growth Factors, and Trends |

| BY REGION | North America, Europe, Asia Pacific, Latin America, Middle East and Africa |

| CUSTOMIZATION SCOPE | Free report customization (equivalent up to 4 analysts working days) with purchase. Addition or alteration to country, regional & segment scope. |

Geographic Segment Covered in the Report:

The NoSQL Database report provides information about the market area, which is further subdivided into sub-regions and countries/regions. In addition to the market share in each country and sub-region, this chapter of this report also contains information on profit opportunities. This chapter of the report mentions the market share and growth rate of each region, country and sub-region during the estimated period.

• North America (USA and Canada)

• Europe (UK, Germany, France and the rest of Europe)

• Asia Pacific (China, Japan, India, and the rest of the Asia Pacific region)

• Latin America (Brazil, Mexico, and the rest of Latin America)

• Middle East and Africa (GCC and rest of the Middle East and Africa)

Key questions answered in the report:

1. Which are the five top players of the NoSQL Database market?

2. How will the NoSQL Database market change in the next five years?

3. Which product and application will take a lion’s share of the NoSQL Database market?

4. What are the drivers and restraints of the NoSQL Database market?

5. Which regional market will show the highest growth?

6. What will be the CAGR and size of the NoSQL Database market throughout the forecast period?

For More Information or Query or Customization Before Buying, Visit @ https://www.verifiedmarketresearch.com/product/nosql-database-market/

Visualize NoSQL Database Market using Verified Market Intelligence:-

Verified Market Intelligence is our BI-enabled platform for narrative storytelling of this market. VMI offers in-depth forecasted trends and accurate Insights on over 20,000+ emerging & niche markets, helping you make critical revenue-impacting decisions for a brilliant future.

VMI provides a holistic overview and global competitive landscape with respect to Region, Country, and Segment, and Key players of your market. Present your Market Report & findings with an inbuilt presentation feature saving over 70% of your time and resources for Investor, Sales & Marketing, R&D, and Product Development pitches. VMI enables data delivery In Excel and Interactive PDF formats with over 15+ Key Market Indicators for your market.

Visualize NoSQL Database Market using VMI @ https://www.verifiedmarketresearch.com/vmintelligence/

About Us: Verified Market Research®

Verified Market Research® is a leading Global Research and Consulting firm that has been providing advanced analytical research solutions, custom consulting and in-depth data analysis for 10+ years to individuals and companies alike that are looking for accurate, reliable and up to date research data and technical consulting. We offer insights into strategic and growth analyses, Data necessary to achieve corporate goals and help make critical revenue decisions.

Our research studies help our clients make superior data-driven decisions, understand market forecast, capitalize on future opportunities and optimize efficiency by working as their partner to deliver accurate and valuable information. The industries we cover span over a large spectrum including Technology, Chemicals, Manufacturing, Energy, Food and Beverages, Automotive, Robotics, Packaging, Construction, Mining & Gas. Etc.

We, at Verified Market Research, assist in understanding holistic market indicating factors and most current and future market trends. Our analysts, with their high expertise in data gathering and governance, utilize industry techniques to collate and examine data at all stages. They are trained to combine modern data collection techniques, superior research methodology, subject expertise and years of collective experience to produce informative and accurate research.

Having serviced over 5000+ clients, we have provided reliable market research services to more than 100 Global Fortune 500 companies such as Amazon, Dell, IBM, Shell, Exxon Mobil, General Electric, Siemens, Microsoft, Sony and Hitachi. We have co-consulted with some of the world’s leading consulting firms like McKinsey & Company, Boston Consulting Group, Bain and Company for custom research and consulting projects for businesses worldwide.

Contact us:

Mr. Edwyne Fernandes

Verified Market Research®

US: +1 (650)-781-4080

UK: +44 (753)-715-0008

APAC: +61 (488)-85-9400

US Toll-Free: +1 (800)-782-1768

Email: [email protected]

Website:- https://www.verifiedmarketresearch.com/

MMS • RSS

Posted on nosqlgooglealerts. Visit nosqlgooglealerts

The main objective of NoSQL databases is improved efficiency. It is somewhat achieved with the use of new technologies and thinking outside the box.

An example of the new technologies applied could be opting for solutions that require more storage space. In the past, we used to mind storage requirements more, but lately, it has become less of an issue as a result of the cost of this resource dropping significantly.

Now, for the metaphorical box, it can represent the strict limits of a SQL schema. We are opening it up to find out what else there is that can allow us to connect data in a meaningful whole, manipulate it and exploit it with the least friction.

What is a graph database?

A graph database certainly is outside that box. It focuses on relationships between pieces of data as much as it does on the data itself, thus managing to store data purposefully. Using a graph data model positively helps visualize data. It is highly appreciated as in the world of big data; it is always good to be able to make a quick sense of the data in front of you.

The elements

Based on the graph theory, these databases consist of nodes and edges. Nodes are the entities of a graph database. Simply put, they are the agents and objects of relationships and can be presented as answers to questions “who” and “whom.”

Each of the entities holds a unique identifier. They can also have properties consisting of key-value pairs and can have labels with or without metadata assigning a role of a particular node in a domain. There are also the incoming and outgoing edges. Think of them as different ends of an arrow showing you who is the agent and who is the object of a relationship.

Edges are equally as important as nodes because they hold a vital piece of information. They represent relationships between entities. A SQL database would likely have a designated table for each class of relationships. A graph database does not require such mediation because it connects its entities directly. Edges also have unique identifiers and, just like nodes, can have other properties apart from the defined type, direction and the starting and ending node.

Graph database models

There are two common graph database models: Resource Description Framework (RDF) graphs and Property graphs. They have their similarities but are built with a focus on different purposes. One focuses on data integration, whereas the other focuses on analytics.

RDF graphs focus on data integration. They consist of the RDF triple — two nodes and an edge that connects them (subject, predicate, object). Each of the three elements is identified by a unique resource identifier. You can find them in knowledge graphs, and they are used to link data together. RDFs are often used by healthcare companies, statistics agencies, etc.

Property graphs are much more descriptive and each of the elements carries properties, attributes that further determine its entities. They also consist of nodes and edges connecting the nodes and are better suited for data analysis.

Advantages

The emphasis placed on the edges of a graph database model means these databases represent a powerful way of getting to understand even the most complex relationships between data. The beauty of it is that this way of storing relationships also enables quick execution of queries.

With a clear representation of relationships in a graph database, it is easier to spot trends and recognize elements with the most influence.

Disadvantages

Graph databases share the common downfall of NoSQL databases — the lack of uniform query language. While this can be an obstacle for the use of a database, it does not affect the performance of this database type. Certain graph databases are more prominent than others; so are the languages they use. Some of the most common graph database languages are PGQL, Gremlin, SPARQL, AQL, etc.

Another downfall is the scalability of these databases because they are designed for one-tier architecture meaning that they are hard to be scaled across a number of servers.

As with all other NoSQL databases, they are designed to serve a specific purpose and excel at it. They are not a universal solution designed to replace all other databases.

Use cases and examples

Graph databases are designed with a focus on relationships and, coincidentally, so are social networks. A graph database is a great way to store all users of a certain social media platform and their engagements to analyze them. You can determine how “lively” or active a social media platform is based on the activity volume of its users. Furthermore, you can identify the “influencers,” analyze user behavior, isolate target groups for marketing purposes, etc.

By being able to track and map out the most complex networks of relationships, graph databases are a good tool for fraud detection. Connections between elements that are hard to detect with traditional databases suddenly become prominent with graph databases.

Some of the most popular graph databases — as well as multimodel databases including graph data models — are Neo4j suitable for a variety of business-related purposes and followed by the multimodel Microsoft Azure Cosmos DB, OrientDB, ArangoDB, etc.

Graph databases vs. relational databases

A major advantage of any NoSQL over a SQL database is the flexibility of storing data with NoSQL. Whenever there is a case of less structured data or highly complex data, there is room for NoSQL application. If you are considering introducing new relationship types and properties, to place them in a SQL database, depending on what it is, you would have to add new tables.

On the other hand, with a graph database, it is as simple as adding a new edge or a property. By tracing the edges between nodes, you can get to the depth of the most complex relationship between two nodes in a database.

The need for a graph database is recognized by the level of connectedness between data — where the data is highly connected, there could be room for a graph database. Furthermore, seeing how powerful these connections are, a graph database is a better choice for data analysis rather than simple data storage. Finally, if you want to be flexible with data that is changing often, a NoSQL graph database is likely the better option for you.

MMS • Ben Linders

Article originally posted on InfoQ. Visit InfoQ

Blockchain technology can be used to build solutions that can naturally deliver better software quality. Using blockchain we can shift to smaller systems that store everything in a contract. We have to understand our data needs and decide what is stored in the chain and what off-chain, and think about how requirements, defects and testing history can be built into the contract models.

Craig Risi spoke about testing in the blockchain at Agile testing Days 2021.

Risi mentioned that the key design principles that any Blockchain application should be designed around are data integrity, data transparency, scalability, reliability and availability. The trick is to start checking for all these attributes early in the design phase and have regular checks to ensure you are meeting them throughout the entire design process, Risi said.

In his talk, Risi provided several architecture design tips for working with Blockchain:

You want to work with small services and APIs to allow for the system to scale as needed and avoid single points of failure and build in redundancy so that your system remains highly available even when certain services are down.

To ensure your system meets these above requirements you want to spend a lot of time understanding your solution’s data needs; what is stored in the chain and what off-chain, how large the data elements that need to be stored are and how the encryption will happen.

Having clear requirements allows you to put automated tests in place that can verify the integrity of data in test environments, as you have the required information to mock and test effectively. Along with this functional test effort, effort should be invested in security to ensure that the endpoints that interact with smart contracts are incredibly secure.

Blockchain technology will improve the way we approach software design, Risi mentioned. He gave some suggestions for utilizing blockchain technology to build better quality solutions:

We will see a shift from big systems that work with millions of lines of data to smaller systems that store everything that is needed in a contract. This smaller focus, while it comes with its own problems, could make software development and test automation simpler, improving our ability to deliver features faster.

I also see traceability improvement, as requirements, defects and testing history can be built into the contract models, making it simpler for teams to have evidence built into their test contract, while also making debugging contracts easier if all evidence is stored on it and give a clearer picture of the impact code has on the greater system.

Lastly, some of the basic principles of Blockchain technology, which include distributed databases, transparency with pseudonymity and irreversibility of records, make the impacts of mistakes far more reaching than ever before. While this doesn’t have a direct impact on software quality, it is forcing teams to think about it more and place more emphasis on verification, which has an indirect impact on the overall quality of a software solution.

InfoQ interviewed Craig Risi about building quality in blockchain systems.

InfoQ: What advice would you give to companies wanting to develop Blockchain solutions?

Craig Risi: I think the key thing is not just jumping onto the Blockchain train because it’s popular. Too many companies want to build blockchain solutions to try and gain access to funding, but if you’re going to try and develop a new solution where Blockchain is simply just a replacement and doesn’t add value, you are unlikely to reap any benefit from it.

As an example, some of the work currently being done to move health records to the blockchain are working out because of the way we are making this data more legitimate and open to the public. If we are simply going to replace medical databases with blockchain technology but not put the control in the hands of consumers, we are simply moving data around to a format that isn’t suited for it.

It’s also important to be aware of the different international regulations and rules with regards to Blockchain and the handling of data. While the world is warming up to the technology, there are different rules that different countries require from a data perspective and it’s important that you cater for this in your final solution.

We see this with cryptocurrencies where different rules between countries affect how they are handled and taxed, with some countries blocking their use entirely. The work that is currently being done on medical records is facing this problem as certain countries require health practitioners to keep this data themselves and restrict where this data can be stored, limiting the effectiveness of a blockchain solution until regulations are adapted.

InfoQ: How blockchain will impact future software development?

Risi: One of the key fundamental changes that Blockchain introduces is the way we work with data and it could effectively bring about the end of the database as we know it, with all operations taking place directly on a contract rather than big database systems. This will remove one of the key complexities that generally affects software architectures and is a change in the way we work with data. This could also lead to an increase in the adoption of infrastructure as code or cloud computing more aggressively, as the data concerns are largely removed.

This affects things like data collection and machine learning, and companies may need to build models where certain data is stored on the blockchain and others in traditional data stores for AI/ML purposes.

Blockchains data ownership model can make a massive change to the tech industry as a whole. Think of medical applications around the world that interact with a common blockchain for patients, where a patient provides the needed access to their information and history, allowing them to have control over their data.

Blockchain technology can also be improved to act as a source of digital identity documents that can be used to store all your private details and even things like baking records, making transactions and even voting in elections. In fact, Blockchain contracts can be even integrated into social media sites, giving you the power to store your own data and choose when it is shared and with whom.

There is still a long way for this technology to go before it will become mainstream, but there is no doubt that it has the potential to drastically change the way we think about certain aspects of data and privacy and it holds remarkable potential to transform the way we interact with technology.

MMS • RSS

Article originally posted on Data Science Central. Visit Data Science Central

There are constant changes in the software development trends, but a few trends seem to be dominant in 2022. With the evolution of advanced technology, there has been a significant change in the software development landscape. Businesses need to keep up with these changes in order to compete in the next-gen world.

To help you be aware of the latest software trends, we have come up with a list of the top software development trends that will work in 2022 and beyond that in the software industry. Let’s take a look at the top software trends in 2022.

Major Software Development Trends in 2022

- Rigid software quality standards

With the growing demand for software, it will be required to follow software quality standards as proposed by ISO in the near future. We will see ISO certification in our day-to-day lives and in most of the devices we use as software solutions become a major part of our lives. Companies will see benefits such as improved quality, more efficient processes, and more respect following ISO certification.

- Code standards & guidelines

It is expected that companies will be employing style consistency and language conventions in the software development process to raise coding standards following clear guidelines. This will help both existing as well as new developers to write standard code with clear guidelines.

- Focus on cybersecurity

Cybersecurity is another most popular software development trend which is expected to grow more in 2022. Businesses will focus more on the modernization of their systems, applications, and technology stack with regular assessments for cybersecurity.

- The Internet of Things

The Internet of Things (IoT) is expected to create more than $6 trillion economic value by the end of 2022. When the Internet of Things (IoT) is combined with cloud computing and connected data, software development will be transformed. Mobile devices are likely to become even more specialized for vertical markets (such as healthcare or aerospace) in the future as sensors and analytics allow real-time control.

- Cloud computing

Cloud computing is another yet more growing software development trend, which is broadly used by many startups, businesses, institutions, and even by government organizations. In addition to this, the value of cloud computing technology can be seen in security offices, hospitals, and legal authorities.

We can expect a huge transition of cloud computing technology in various industries, businesses, and organizations around the world.

Tech giants like Microsoft, Google, and Amazon have already been providing cloud computing to individuals, businesses, and enterprises. It offers complete flexibility to businesses and allows them to scale as they grow.

- Rise of Python

Python is the most popular and ever-growing programming language widely used for creating complex and enterprise-grade web and app development applications to meet the modern needs of businesses and their customers.

It is widely known for addressing modern software needs and providing one-stop-solution for web development, mobile development, or enterprise projects.

Python provides developers with the ability to conduct complex mathematical processes, huge data analysis, machine learning, and more.

- JavaScript is still in the headline

Seeing the growing trend of software development, JavaScript is still on the rise and is a perfect language for building modern and innovative software development solutions. JS is assumed to be the growing software development trend in 2022 even after the introduction of AngularJS.

It is quite perfect and capable of handling many backend operations at the same time without requiring much load. The frameworks of JS are expected to be the next big surprise in the software development trend due to their compatibility and ease to use both ways: applicants and clients’ server-side.

- Cloud-Native Apps & Framework

Cloud-native apps and frameworks are expected to be the ever-growing software development trend in 2022, allowing developers to create highly efficient and robust cloud-native applications quickly and more efficiently.

With Node.js, you can create servers, data layers, applications, and web apps with JavaScript using a single platform. A cloud-native app can be built using many frameworks.

- DevOps

DevOps is a modern development approach used for creating custom software by combining software development with IT operations to streamline workflows and improve efficiency. DevOps also combines various Agile elements that seem to rise in top software development trends.

Considering both employees and user experiences, it is expected that companies will adopt a more agile and development DevOps methodology that can kill two birds with an arrow.

- Artificial Intelligence for Improved user experiences

Believe it or not, Artificial Intelligence (AI) is one of the most popular and fastest-growing software development trends used for modern and highly innovative technologies. With the evolution of artificial intelligence, there has been a dramatic change in deep learning, and artificial neural networks and are predicted to have a great impact on software development trends in 2022, and beyond.

Artificial Intelligence (AI) uses high technology for giving more accurate predictions about user behaviors, customer data, and human psychology. Businesses are assumed to use AI for giving predictions about industrial machinery maintenance, robotics, or other complicated systems.

- The Rise of Microservices Architecture

The rise of microservices architecture is significantly increasing as the new standards for the modern software development needs, resulting in the decline of monolithic architectures.

Microservices architecture is a hot topic in the top 10 software development trends, which provides a modular approach where small and independent components work together and can be adapted easily. Using microservice architecture can help businesses take competitive advantage of achieving greater results.

- Low-code and no-code development

Anyone can build applications using drag-and-drop or low-code editors, providing no programming experience is required. Some predict the beginning of a new era of no-code coding in which technology will soon reach the average person even more.

Bottom Line

In the software world, there are many factors influencing it, such as technologies, consumer preferences, and underlying factors. In order to develop modern and innovative applications, startups need to understand the latest software trends in 2022. With the ever-growing technologies, there will be more software development trends in the upcoming years.

Database Software Market Size, Analysis, Forecast to 2029 | Key Players – Industrial IT

MMS • RSS

Posted on mongodb google news. Visit mongodb google news

New Jersey, United States,- The latest report published by Verified Market Research shows that the Database Software Market is likely to garner a great pace in the coming years. Analysts examined market drivers, confinements, risks and openings in the world market. The Database Software report shows the likely direction of the market in the coming years as well as its estimates. A close study aims to understand the market price. By analyzing the competitive landscape, the report’s authors have made a brilliant effort to help readers understand the key business tactics that large corporations use to keep the market sustainable.

The report includes company profiling of almost all important players of the Database Software market. The company profiling section offers valuable analysis on strengths and weaknesses, business developments, recent advancements, mergers and acquisitions, expansion plans, global footprint, market presence, and product portfolios of leading market players. This information can be used by players and other market participants to maximize their profitability and streamline their business strategies. Our competitive analysis also includes key information to help new entrants to identify market entry barriers and measure the level of competitiveness in the Database Software market.

Get Full PDF Sample Copy of Report: (Including Full TOC, List of Tables & Figures, Chart) @ https://www.verifiedmarketresearch.com/download-sample/?rid=85913

Key Players Mentioned in the Database Software Market Research Report:

Teradata, MongoDB, Mark Logic, Couch base, SQLite, Datastax, InterSystems, MariaDB, Science Soft, AI Software.

Database Software Market Segmentation:

Database Software Market, By Type of Product

• Database Maintenance Management

• Database Operation Management

Database Software Market, By End User

• BFSI

• IT & Telecom

• Media & Entertainment

• Healthcare

The global market for Database Software is segmented on the basis of product, type, services, and technology. All of these segments have been studied individually. The detailed investigation allows assessment of the factors influencing the Database Software Market. Experts have analyzed the nature of development, investments in research and development, changing consumption patterns, and growing number of applications. In addition, analysts have also evaluated the changing economics around the Database Software Market that are likely affect its course.

The regional analysis section of the report allows players to concentrate on high-growth regions and countries that could help them to expand their presence in the Database Software market. Apart from extending their footprint in the Database Software market, the regional analysis helps players to increase their sales while having a better understanding of customer behavior in specific regions and countries. The report provides CAGR, revenue, production, consumption, and other important statistics and figures related to the global as well as regional markets. It shows how different type, application, and regional segments are progressing in the Database Software market in terms of growth.

Get Discount On The Purchase Of This Report @ https://www.verifiedmarketresearch.com/ask-for-discount/?rid=85913

Database Software Market Report Scope

| ATTRIBUTES | DETAILS |

|---|---|

| ESTIMATED YEAR | 2022 |

| BASE YEAR | 2021 |

| FORECAST YEAR | 2029 |

| HISTORICAL YEAR | 2020 |

| UNIT | Value (USD Million/Billion) |

| SEGMENTS COVERED | Types, Applications, End-Users, and more. |

| REPORT COVERAGE | Revenue Forecast, Company Ranking, Competitive Landscape, Growth Factors, and Trends |

| BY REGION | North America, Europe, Asia Pacific, Latin America, Middle East and Africa |

| CUSTOMIZATION SCOPE | Free report customization (equivalent up to 4 analysts working days) with purchase. Addition or alteration to country, regional & segment scope. |

Geographic Segment Covered in the Report:

The Database Software report provides information about the market area, which is further subdivided into sub-regions and countries/regions. In addition to the market share in each country and sub-region, this chapter of this report also contains information on profit opportunities. This chapter of the report mentions the market share and growth rate of each region, country and sub-region during the estimated period.

• North America (USA and Canada)

• Europe (UK, Germany, France and the rest of Europe)

• Asia Pacific (China, Japan, India, and the rest of the Asia Pacific region)

• Latin America (Brazil, Mexico, and the rest of Latin America)

• Middle East and Africa (GCC and rest of the Middle East and Africa)

Key questions answered in the report:

1. Which are the five top players of the Database Software market?

2. How will the Database Software market change in the next five years?

3. Which product and application will take a lion’s share of the Database Software market?

4. What are the drivers and restraints of the Database Software market?

5. Which regional market will show the highest growth?

6. What will be the CAGR and size of the Database Software market throughout the forecast period?

For More Information or Query or Customization Before Buying, Visit @ https://www.verifiedmarketresearch.com/product/database-software-market/

Visualize Database Software Market using Verified Market Intelligence:-

Verified Market Intelligence is our BI-enabled platform for narrative storytelling of this market. VMI offers in-depth forecasted trends and accurate Insights on over 20,000+ emerging & niche markets, helping you make critical revenue-impacting decisions for a brilliant future.

VMI provides a holistic overview and global competitive landscape with respect to Region, Country, and Segment, and Key players of your market. Present your Market Report & findings with an inbuilt presentation feature saving over 70% of your time and resources for Investor, Sales & Marketing, R&D, and Product Development pitches. VMI enables data delivery In Excel and Interactive PDF formats with over 15+ Key Market Indicators for your market.

Visualize Database Software Market using VMI @ https://www.verifiedmarketresearch.com/vmintelligence/

About Us: Verified Market Research®

Verified Market Research® is a leading Global Research and Consulting firm that has been providing advanced analytical research solutions, custom consulting and in-depth data analysis for 10+ years to individuals and companies alike that are looking for accurate, reliable and up to date research data and technical consulting. We offer insights into strategic and growth analyses, Data necessary to achieve corporate goals and help make critical revenue decisions.

Our research studies help our clients make superior data-driven decisions, understand market forecast, capitalize on future opportunities and optimize efficiency by working as their partner to deliver accurate and valuable information. The industries we cover span over a large spectrum including Technology, Chemicals, Manufacturing, Energy, Food and Beverages, Automotive, Robotics, Packaging, Construction, Mining & Gas. Etc.

We, at Verified Market Research, assist in understanding holistic market indicating factors and most current and future market trends. Our analysts, with their high expertise in data gathering and governance, utilize industry techniques to collate and examine data at all stages. They are trained to combine modern data collection techniques, superior research methodology, subject expertise and years of collective experience to produce informative and accurate research.

Having serviced over 5000+ clients, we have provided reliable market research services to more than 100 Global Fortune 500 companies such as Amazon, Dell, IBM, Shell, Exxon Mobil, General Electric, Siemens, Microsoft, Sony and Hitachi. We have co-consulted with some of the world’s leading consulting firms like McKinsey & Company, Boston Consulting Group, Bain and Company for custom research and consulting projects for businesses worldwide.

Contact us:

Mr. Edwyne Fernandes

Verified Market Research®

US: +1 (650)-781-4080

UK: +44 (753)-715-0008

APAC: +61 (488)-85-9400

US Toll-Free: +1 (800)-782-1768

Email: [email protected]

Website:- https://www.verifiedmarketresearch.com/

Article originally posted on mongodb google news. Visit mongodb google news

MMS • Chris Palmer

Article originally posted on InfoQ. Visit InfoQ

Transcript

Palmer: I’m Chris Palmer from the Chrome security team. I’m going to talk about getting the most out of sandboxing, which is a key defensive technique for software that faces the internet, like a browser or a server application, or any sorts of things. I’m going to talk about the limitations and benefits that we found while doing this. We’ve had about 10 years of experience. I’m going to talk about what else to do in addition to that once you’ve hit those limits, but you probably haven’t yet.

I’ve been on the Chrome security team for about nine-and-a-half years. Before that I was on the Android team. I’ve done a bunch of different things. I’ve done usability, HTTPS, and authentication stuff. These days I’m on what we call the platform security team, where we focus on under the hood, low-level security defense mechanisms, like memory safety, and sandboxing, obviously, and exploit mitigations. We try to make sure we’re making the best possible use of the operating system to get the maximum defensive value out of that.

What Is Sandboxing?

First, let me give you an idea of what sandboxing even is. I’ll talk about how we do it, what it means, and how you can use it. Then, I’ll talk about stuff that comes after that as well. Here’s a picture from Chromium’s website, chromium.org, where we have a simple picture of what sandboxing looks like for us. Chrome is a complex application, obviously. We break it up into multiple pieces, and each of those pieces runs in a separate process. We have like the browser process, here it’s called the broker, and that runs at full privilege. It has all the power of your user account when you’re logged into your machine. Then we fire off a bunch of other processes called renderer processes. Their job is to render the web for a given website. If you go to newyorktimes.com, there’s one renderer for that. Then when you go to Gmail, or Office 365, or Twitter, that’s three more renderer processes. They’re each isolated from each other, and they’re isolated from the browser process itself. They also have their privileges limited as much as we can manage in ways that are specific to each operating system. What that gives us is, if they crash, of course, the whole browser does not go down. If site A causes its renderer to crash, all your other sites are still up and running. You get reliability and stability. Also, if the process is compromised by malicious JavaScript, or HTML, or CSS that helps take over a website, usually it’s JavaScript or WebAssembly, then the damage is contained, we hope, in that renderer process. If site A gets compromised, sites B and C and D, have some protection against that compromise. The browser process itself up here, is protected against that compromise. The attacker to take over your whole computer, for example, would need to find additional bugs, whether in the browser process, in the operating system kernel. If they want to extend the reach of their compromise, they have to do more work. It’s a pretty darn good defensive mechanism, and we use it heavily.

Good Sandboxing is Table Stakes

I want to say, first of all, that sandboxing is table stakes for a browser, and every browser does some: Firefox, Safari, all the Chromium based browsers like Edge, Brave, Opera, Chrome itself, obviously. They all do sandboxing. It’s not just for browsers. I think you can get a lot of benefit from sandboxing in a wide variety of different application types. For example, the problem is, we are reading in complicated inputs, like JavaScript, HTML, images, videos, from anywhere on the internet, and there’s a risk there because we’re processing them in C++ or C. There’s all sorts of memory safety concerns. There’s buffer overflows, type confusion, use-after-free, bugs like that, that can allow an attacker to compromise the code that’s parsing and rendering these complicated formats. Any application that parses and renders and deserializes complicated stuff has the same problem, and it has to somehow defend against it. That includes your text messenger, a web server that takes in PDFs from users and processes them, for example. If you have a web application that converts images from one format to another, or takes videos from users or a font sharing website, or all sorts of things like that, you have the same basic problem. You don’t want to let a bug in your image decoder or your JavaScript interpreter, take over your whole server. You don’t want to let an attacker have that power.

In Chromium, we’ve been working on this for about 10 years. We think we’ve taken sandboxing just about as far as we can go with it. I’ll talk about how we face certain special limitations that you might not, depending on your application. You may be able to go further with it than we can, or maybe not as far as we can. It all depends on a bunch of design factors you have to take into consideration. I’ll talk about what those are. Again, in any case, sandboxing is like your step one of all the things you want to do to defend against bad inputs from the internet. I think it’s table stakes. You got to start there.

How to Build a Sandbox

How do you build a sandbox? It depends what you can do. It varies with each operating system. Android is very different than macOS, and Linux is a whole different thing. Windows is a whole different thing. They all provide different options to us, the defender, they give us different kinds of mechanisms. We have separate user accounts in Windows that are called SIDs, security identifiers. There’s various access tokens. You can take a token away from a process or give them a restricted token. That’s a Windows thing. You can filter the system calls that a process can call. We do a lot of that on Linux based systems, Chrome OS, Android, Linux desktop. You can do it on macOS with a Seatbelt system. We bend the rules and define our own Seatbelt policy, but Apple gives you some baked in ones with Xcode. I think these days the default is not to have full privilege anymore. They make it easy for you to reduce your own privilege with Seatbelt. That is very powerful, very good defense. Another idea is that you could segment memory within the same process. What if we had a zone that code could not escape from and then another zone that other code could not escape from? I’ll talk a bit more later about how we are looking at that thing, and Firefox people are also. It’s a very cool idea. For the most part, our basic building block is the process. Then we apply controls to different types of processes.

OS Mechanisms (Android)

On Android, it’s very different than Windows and Linux, the key mechanism is what is called the isolated process service. It’s a technique that they invented for us. We asked them, could we have this on Android, and then we can make Chrome better? They said, sure. They built it for us. It does a couple things. It runs a new process. It runs an Android service, in its own special separate process with a random user ID separate from your main application, and then you can talk to it and get data out, and parse data in. That’s our first line of defense on Android. Renderers run as isolated services. It works pretty good. There’s a couple things that come with it. There’s some system call filtering. It comes with a policy that Android platform people define for us. They also define a SELinux profile for us. SELinux stands for Security-Enhanced Linux. It’s an additional set of policies you can use to say, don’t let this process access these files, or, raise an alarm if it tries to do this or that. That’s useful for us too. It comes with the isolated process service. That’s number one on Android.

OS Mechanisms (Linux, Chrome OS)

On Linux and Chrome OS, we put together what we want by hand. We use Seccomp-BPF. Again, it’s a system call filtering mechanism. We can define whatever policy we want, so we have all sorts of finer-grained policies for different types of processes. We also use Linux’s user and PID and network namespaces feature, where you can create a little isolated world for a process, and it doesn’t get to see the whole system. It doesn’t get to see processes that it shouldn’t know about. It doesn’t get to see network stuff that it shouldn’t know about, and so on. Where we don’t have that, not all Linux systems enable that subsystem of the kernel. We have a set UID helper that does some of that stuff itself, and that it spawns children and takes away their privilege, and then those are the renderers. We consider that to be a bit of a legacy thing. We think the future and certainly our present is the namespaces idea. It’s not risk-free. Namespaces come with bugs of their own because they change the assumptions of other parts of the kernel. That’s why some distributions don’t turn it on, but we do on Chrome OS, certainly. We think it’s pretty useful.

Limitations and Costs

The key thing here is that you can’t sandbox everything. If you think about the most extreme form of sandboxing, you could sandbox every function call or sandbox every class. Especially if you had that segmented memory idea that I was talking about, you could give each component of your code its own little zone to live in, and it couldn’t escape, we hope. As it is now, for the most part, we have to pretty much create a new process for each thing that we want to sandbox. You’ll see in the pictures that are coming up, we use fairly coarse-grained form of sandboxing, because processes are our main thing, and processes are expensive. On Windows and Android, processes are a big deal, and threads are cheap. They want you to do that. The way the systems are designed, you’d have one process for your application, and many threads used during different things. That’s not enough of a boundary for us. We have to be careful in how we use them. Then starting up a new process on Android and Windows, a new process gives you a lot of stuff that you typically want, like all the Android framework stuff, and all the nice Windows libraries. It costs time and memory to create those things for us. They’re not quite as free on those two platforms. On Linux and Chrome OS, they’re very cheap, indeed. macOS also quite cheap to make a process. We face different headwinds on different platforms.

Site Isolation

A key thing I mentioned before, and this is going to be the introduction to how you should think about sandboxing for yourself, for your application, different sites are in their own renderers, and so we have to have a way of deciding when to create a new one. We would like to create a new process for each web origin, which is the principle in the web platform, the security principle. The origin is defined as the scheme, host, and port like HTTPS, google.com, Port 443, or HTTP, example.org, Port 80, things like that. Each of those should be separated. We can’t always afford to make that many new processes. Instead, we group several origins together, if they belong to what we call the site, and that is just the scheme, like HTTPS or HTTP, and just the second level domain after the first register or bold domain, like google.com, everything under google.com counts as one site. Example.org, everything under that counts as one site. It’s a bit of a tradeoff to save some time and memory, but if we had our way we’d isolate each origin in its own process.

Here’s a simple view. This is a bit like what the status quo is, but it’s a little trickier. We have the browser process with full privilege. It creates different renderers for different sites. We also have the graphics processing interface, the stuff that talks to the graphics system of your operating system. We put that in its own process to separate it. It might be crashy. We can reduce its privilege a little bit, so we do. It doesn’t need the full power of the browser process. We have coming up on most platforms, a separate process to handle all the networking too, all the HTTP, all the TLS, all the DNS, all that complicated stuff, we’re putting it in its own process. We’re, on each platform, gradually reducing the privilege of that process on each platform. It’s an ongoing adventure. It’s done on macOS. It’s starting to happen on Windows. We got a plan for Android.

Now, you might imagine that, if we had all the memory and time we wanted, we could make a separate networking process for each site, and then it would be linked to its renderer. That would be great. We would do that if we could, if we could afford it. Similarly, we’re creating a new storage process to support all the web APIs that handle storage, like the local storage cookies. There’s an IndexedDB database API for the web. That stuff is also complicated and makes sense to put in its own process, so that’s happening. You could imagine, we could have a separate storage process for each site also. Again, it would be linked to its renderer. Again, they would all be isolated from each other. Security would go up, resilience and reliability would go up. It would be cool. For us on the platforms that are popular for Chrome, mainly Android and Windows, it’s just too expensive, so we can’t do it. That might not be true for you, on for example, a Linux server, where processes are cheap and fast to start up. You might be able to have many different sandbox processes supporting different aspects of your user interface, or doing the things for your users that you need to do, whether it’s database processing, giving them their own frontend web server, maybe hosting some JavaScript for them, things like that. It depends.

Moving Forward: Memory Safety

That doesn’t get us everything we need, though, but it does get us a lot. The key thing you may have gotten so far is that we’re using sandboxes to contain the damage of the problems of memory unsafety, like C++, and C, are just hard to use. It’s all sorts of, out-of-bounds Reads, out-of-bounds Writes, objects leaking and they never get cleaned up when you’re not using them anymore. That can happen. Or you use them after they’ve been cleaned up, use-after-free. It’s an exploitable vulnerability, a lot of the time. Or type confusion if you have an animal, and it’s actually a dog, but you cast it to a cat, and then you call the Meow method on it. That’s not going to work, and trouble may ensue. C++ will let you do that a lot of the time, whereas other languages wouldn’t. Java, for example, would notice at runtime, this is a cat, and it would raise an exception. Not so necessarily in C++.

Containing memory unsafety is a key benefit of sandboxing. It also gives us some defense against stranger problems, like Spectre and Meltdown, the hardware bugs where, even with perfect memory safety, you could do strange things and the hardware would accidentally leak important facts about the memory that’s in your process. You can get a free out-of-bounds Reads, even if the code were perfect, which is pretty wild. We get some defense against that from sandboxing. However, the real fix for that is at the hardware level. As an application, I and we can only do so much. It’s a little tricky. There are variants of that problem that can in fact go across processes and even into the kernel. That’s pretty exciting. We have to just wait for better hardware to get rid of that problem. There’s a lot we can handle before we can get there, before that’s our biggest problem. C++ is dangerous. C is dangerous. Sandboxing helps a lot. To get all the way to Wonderland in software, we really would like to have a language that defends against memory unsafety, baked in.

Investigating Safer Language Options

You could think, obviously Java has a lot of that, Kotlin. Swift on macOS and iOS has a lot of safety baked in. Rust, that’s their selling point. WebAssembly has a chance to give us that isolated box inside a process so we could create little sandboxes without having to spawn new processes, and so we could have cheaper sandboxing and we could sandbox more stuff. We’re actually experimenting on that now this quarter. Firefox also is. They have a thing called RLBox. We call ours WasmBox, for WebAssembly Box. The basic idea is the same. There’s different efficiency tradeoffs and different technical ways of going about it. The basic idea is, you give a function or a component, a chunk of memory, and then you by various means enforce that it can never read or write outside that area. If it goes wrong, it’s at least mostly stuck in there. It might still give you a bad result, and you’ll have to evaluate the result for correctness. It can at least, hopefully, not destroy your whole process and compromise the process and take it over. We’ll see how that goes. We’re hoping to maybe ship something with it soon. It could be a very big deal for us, and perhaps for you.

Migrating to Memory-Safe Languages

We’d also like to migrate to a safer language to the extent that’s possible. No one’s saying we’re going to rewrite everything in Rust or replace all of our C++. That’s not possible. What we can do is find particular soft targets that we know attackers are attacking, and we can replace those particular things with a safer language. Like you could take your image processors, your JSON parser, your XML parser, for example, and get Java, or Kotlin, or Rust versions of those and put them in place. Then you could have a much smaller memory safety problem. That would be a complementary benefit to sandboxing. Neither one by itself gets you all the way there. I think they’re both necessary. Neither is sufficient on its own. Together, I think you have a really solid defense story, at least as far as software can go. That’s where we’re heading. Of course, hardware continues to be a difficulty.

There’s also the matter of the learning curve of a new language. To get everybody to learn C++ is hard. It’s a complicated language. Any language that can do what it does is likely to be at least as complex. Rust does a lot. It takes a while to learn it. Swift does a lot. It takes a while to learn it. There’s a way to use Java well. It takes a while to learn it. We’re asking more of our developers, but we’re thinking in the end that we’re going to get better performance, better safety, for sure. That it will be beneficial to the people who use our application.

Improving Memory-Unsafe Languages

Again, you can think along the same lines, don’t use C++ if you can avoid it. If you’re starting something new, have a plan to migrate away to the extent you can. Getting the two languages to work together is a key aspect of the problem, and it’s improving. In the last year alone, we made great headway with a thing called CXX and autocxx. It’s a way to get Rust and C++ to talk to each other in a more easy to use way. It’s very cool. We’re also doing things with C++ as much as we can, garbage collection, new types of smart pointer that know if the thing they own still exists or not. Then it’ll stop you from doing use-after-free, for example. Then there’s new hardware features coming that can help us with the memory safety problem. There’s memory tagging coming from ARM. Control-flow integrity, we’re already shipping some of that in Chrome now. We’re very happy about that, and there’s more coming. Generally, we can replace some of the undefined behavior that’s in C++’s libraries with our own. We’ve done a lot of that already too. For example, does operator brackets on a vector allow you to go out of bounds? In standard C, they don’t guarantee that it’s not, but we can define our own vector that does guarantee that it will not allow you to go out of bounds, and so we do.

These bugs are real and important, memory safety bugs, I should say. We have a wide variety. We have a lot of use-after-free, managing lifetimes is very hard. There’s other bugs too.

The Future

The future is, sandboxing is giving us 10 good years. We’re going to keep using it, of course. It’s great. We need to move to our next stage of evolution, which is adding strong memory safety on top of that.

Questions and Answers

Schuster: How much variance is there for different architecture builds of Chrome?

Palmer: For different architecture builds, I assume you mean like ARM versus Intel, that hardware architecture? We don’t yet have a huge variance, but we do expect to see a lot more coming in the future. For example, on Intel, there’s a feature called CET, Control-Flow Enforcement Technology. It helps you make sure that when you’re returning from a function, that you’re returning to the same function you came from, which turns out to be pretty important. Attackers love to change that. The hardware is going to help us make sure we always return back to the right place. That doesn’t exist in the same form on ARM. ARM has other mechanisms, for example, Pointer Authentication Codes, or PAC. It covers more and it covers things differently. It’s along the same lines of the basic idea of control-flow integrity. We do different things there.

Then, similarly, there’s a thing for ARM called Memory Tagging Extensions, where you can say, this area of memory is of type 12, and this other area is of type 9. Then it will raise a hardware fault if you try to take a pointer to type 9, and instead make it point to some memory of type 12. That’ll explode. Similarly, you get a little bit of type safety in a super coarse-grained way, not in the same way you would get from Java, where it’s class by class. You can get a pretty good protection against things like use-after-free, type confusion, and even can stop buffer overflows in certain cases. If you’re about to overflow from one type into another, that can in certain cases become detectable. That doesn’t exist on Intel. On ARM, we’re hoping to make good use of it. Increasingly, we’re seeing more protections of that kind from hardware vendors.

Someone also asked about memory encryption. Again, if we were to ever use that, it would be very different from one hardware vendor to another, and we have to do different things. As the future comes at us, I expect that our different hardware platforms may become, from a security perspective, as different operating systems. We can maybe have a roughly similar big picture, but the details are going to be totally different.

Schuster: You mentioned CET, which I think is being rolled out now. Has that been circumvented yet by the attacker community because it seems like every time some new hardware mitigation comes along, you think that’s it, all buffer overflows are now fine. Then they come along, and say, “No, it broke ASLR and all that stuff.”

Palmer: Yes. For example, there’s already ways of working around PAC on ARM. There’s three benefits to this. One is, they can make software defenses more efficient. Like for example, I mentioned earlier, a thing called MiraclePtr, where we’re inventing a type of smart pointer that knows whether or not the thing it points to is still alive. There’s another variant of the thing called StarScan, and that’s like a garbage collection process where it looks around on the heap to see if there’s any more references to the thing you’re about to destroy. The trouble with that is it can be slow, because it has to search the whole heap. With memory tagging, it can speed up the scan by a factor of however many tags there are. If you have 16 different memory tags, it’s weak type safety, but it speeds up scanning by a factor of 16, because you only have to even search for the particular one of 16 types on the heap. You don’t have to scan the whole heap, you scan 1/16th of the heap. It speeds up software defenses.

Two is, the various hardware defenses work best together. If you combine control-flow integrity like CET and software control-flow integrity for forward jumps, like calls and jumps, and you combine them with Data Execution Prevention, where you can stop a data page from being used as a code page, for example, and you combine that with tagging, it starts to cover more of the attack techniques. You’re probably never going to get all the way there, just by throwing hardware features at the problem. We can close the gap. We can speed up software based defenses. Then, performance, combining them to get a better benefit.

Then three, is, it really does make the attacker do more work, at least at first, but maybe even in continuing. It’s like, yes, some very smart people can work around PAC or maybe even break it. Doing so, can be anywhere from, they had to invent a technique to do it once, and then ever after it’s easy. Sometimes that happens, and that’s terrible. Sometimes they invent a technique, and then they have to reapply it every time they develop a new exploit, and it’s just a pain in the butt. It becomes an ongoing cost for attackers, and that’s what we want. Like ASLR, address space layout randomization is a terrible technique. It annoys me. It’s very silly. Attackers do have to have a way of bypassing it every time they write, or most of the time when they write an exploit. They often can, but they have to. Even though it annoys me, it’s still valuable, on 64-bit. On 32-bit, it’s maybe not.

Schuster: Yes, there’s not enough room to do it.

Why does it annoy you? Does it make anything more difficult to bug or anything like that?

Palmer: No, not really. What annoys me about it is just that it’s ugly. It’s a hack. What I want is something like a language that just doesn’t allow these problems in the first place. Why are we trying to hide things from the attacker? Like, “No, they might find where the stack is and then they’ll know where to overwrite the return pointer.” Why do we let them get to that point in the first place? That bothers me, because the software bug that let that happen, has no reason to exist. It’s just, you didn’t check the bounds on your buffer. Let’s fix that. Let’s fix that at scale by using a language that makes it easy rather than making every C programmer remember every single time. I want to fix the problem for real.

Schuster: There’s lots of legacy software, lots of C, C++.

Do you have a lot of legacy backwards compatibility versus security tradeoffs?