
Article originally posted on Data Science Central.

A smoothly running sensor data analytics tool can be just as difficult to manage as a symphony orchestra, because every musician in an orchestra, like every part of an IoT system, needs to work properly and ‘harmonize’ with the others. So how do conductors make their orchestras sound heavenly instead of producing a mismanaged cacophony? There’s a lot of practice involved, of course. But besides that, they know which pitfalls to avoid. That is why, if we’re talking about orchestrating sensor data, it’s important to know the 5 major sensor analytics challenges that you may face.

Challenge #1. Difficulty in choosing the right interval to collect and transmit data in real time

Each business, and even each task, may require a completely different approach to real-time analysis, so choosing the data collection and transmission interval that suits your specific purposes can be challenging. For instance, an oil drilling site’s big data solution may need control apps to send commands to actuators every second to optimize pumping pressure or the rotational speed of drills. This may mean collecting data every 80 milliseconds and transmitting it in packages every 800 milliseconds. By contrast, there is no need to collect and transmit solar power generation rates more often than once every 30 minutes to adjust a panel to the angle of the sun’s rays. Data analysis based on such intervals still counts as real time.

The right interval, one that is not too frequent but still lets you extract valuable insights in time, is specific to every enterprise, but:

Keep in mind that the longer the interval, the higher the risk of losing data, since during the wait it is held only in limited local memory. At the same time, the shorter the interval, the heavier the load on your system. So your solution needs to be ready to handle the data flow.

Even if your solution collects data very frequently, you should make sure it notices rapid changes in sensor readings that happen in between your data analysis jobs (if such changes are possible with your readings at all). Using sensors that can take readings faster would help here. Otherwise, it’s easy to be lulled by the seemingly normal performance of your equipment.
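To make the interval trade-off more tangible, here is a minimal Python sketch of the drilling example: readings taken every 80 milliseconds are grouped into packages sent every 800 milliseconds. The names `batch_readings` and `summarize` are hypothetical, and a real system would read from devices and send over a network rather than work on an in-memory list. Keeping the minimum and maximum of each package is one simple way to avoid losing a short spike to averaging.

```python
from typing import Dict, Iterable, Iterator, List, Tuple

Reading = Tuple[int, float]  # (timestamp in milliseconds, sensor value)


def batch_readings(readings: Iterable[Reading],
                   transmit_every_ms: int) -> Iterator[List[Reading]]:
    """Group time-ordered readings into packages, one per transmission interval."""
    batch: List[Reading] = []
    window_end = None
    for ts, value in readings:
        if window_end is None:
            # The first reading opens the first transmission window.
            window_end = ts + transmit_every_ms
        if ts >= window_end:
            # The current window is over: hand the package to the caller.
            if batch:
                yield batch
            batch = []
            # Skip ahead over any windows that received no readings at all.
            while ts >= window_end:
                window_end += transmit_every_ms
        batch.append((ts, value))
    if batch:
        yield batch


def summarize(batch: List[Reading]) -> Dict[str, float]:
    """Keep min and max next to the mean so a brief spike stays visible."""
    values = [value for _, value in batch]
    return {
        "min": min(values),
        "max": max(values),
        "mean": sum(values) / len(values),
    }


if __name__ == "__main__":
    # Illustrative data: 20 readings, one every 80 ms.
    readings = [(i * 80, float(i)) for i in range(20)]
    for package in batch_readings(readings, transmit_every_ms=800):
        print(len(package), summarize(package))
```

With 80 ms sampling and an 800 ms transmission interval, each package carries 10 readings. Everything still waiting in `batch` is exactly the data that would be lost if the device failed before the next transmission, which is the risk that grows with longer intervals.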

Challenge #2. Analytical noise created by poor sensor data quality

Eliminating erroneous data samples has always been a problem, and the core of that problem is poor data quality. To ensure adequate analysis results, you do need to deal with these:

Sensor malfunctions. Multiple sensors can turn out to be showing wrong readings that are, for instance, consistently 2.4 degrees higher than the actual temperature. If you’ve uncovered such a mishap, some of the data in your big data warehouse will be compromised. So you’ll need to clean it up (or at least mark the data within a given timeframe) and fix or replace the sensors. Alternatively, sensors can start to show consistently implausible readings, saying that, for example, the water temperature in the tank is 248°F when it is in fact barely warm. Although the gap between such readings and the real state of things is far bigger, these are much easier to uncover and eliminate than the first kind.

Sensor drift. Even if nothing out of the ordinary is happening with your sensors, you should always remember that sensors drift. With time, their sensitivity degrades and their readings start to ‘tilt’ and deviate. And the longer you ignore this problem, the more tangible it becomes, which adds to the importance of resolving it. That is why there are whole data sets dedicated to it.

Unknown anomalies. Everything may be working fine, but sometimes you can find that readings are missing for no apparent reason. Or, from time to time, sensors here and there transmit implausible values and then go back to normal. Such deviations can be irritating, and uncovering their root cause is a job for an experienced sensor specialist.
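Some of these issues can be marked up automatically before analysis. The sketch below is one possible approach, not a complete data quality pipeline: it labels readings that fall outside a plausible range (like the 248°F tank reading), marks missing values, reports gaps in the timestamps, and subtracts a known constant offset (like the 2.4 degrees above). The function names, the plausible range and the expected reporting step are all assumptions made for illustration.

```python
from typing import List, Optional, Sequence, Tuple

Reading = Tuple[int, Optional[float]]  # (timestamp in seconds, value or None)


def flag_readings(readings: Sequence[Reading], low: float, high: float,
                  expected_step_s: int) -> Tuple[List[str], List[Tuple[int, int]]]:
    """Label every reading and list the timestamp gaps between readings.

    Labels are 'ok', 'out_of_range' or 'missing'. A gap is reported whenever
    two consecutive readings are further apart than expected_step_s.
    """
    labels: List[str] = []
    gaps: List[Tuple[int, int]] = []
    prev_ts = None
    for ts, value in readings:
        if prev_ts is not None and ts - prev_ts > expected_step_s:
            gaps.append((prev_ts, ts))
        if value is None:
            labels.append("missing")
        elif not (low <= value <= high):
            labels.append("out_of_range")
        else:
            labels.append("ok")
        prev_ts = ts
    return labels, gaps


def correct_offset(values: Sequence[float], offset: float) -> List[float]:
    """Remove a known constant bias, e.g. a sensor that reads 2.4 degrees high."""
    return [value - offset for value in values]


if __name__ == "__main__":
    # Assumed plausible range for liquid water in an open tank, in °F.
    tank = [(0, 70.0), (60, 248.0), (120, None), (300, 71.0)]
    labels, gaps = flag_readings(tank, low=32.0, high=212.0, expected_step_s=60)
    print(labels)
    print(gaps)
```

Marking readings instead of deleting them keeps the original data available for the specialist who later investigates the root cause.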

Obviously, you need to look out for these sensor data quality issues to be able to get adequate, actionable insights out of your data. But you shouldn’t think that you need to eliminate every erroneous reading. Given the nature of big data analysis (applied to your sensor data), that is unnecessary, not to mention impossible.

Challenge #3. Complexity of uncovering tricky but meaningful patterns

In huge amounts of data, it can be difficult to spot truly meaningful patterns. For example, it is easy to notice dramatic rises in temperature, find the cause and eliminate it. This way, you can, say, somewhat prolong your equipment’s life. But this won’t bring any dazzling value compared to what you could gain from revealing the ‘hidden’ patterns in your data, for example, by uncovering what lies beneath slight rises and falls in temperature that happen more and more often. If you find a way to detect such abnormalities and understand their true meaning, it will push your production toward more sophisticated insights and greater savings.
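One simple way to look for slight rises and falls that happen more and more often is to count, window by window, how many times the signal leaves a narrow band around its normal level, and then check whether that count keeps growing. The sketch below assumes a known baseline and band width. Both values and the function names are illustrative, and a data scientist would likely reach for more robust statistical methods.

```python
from typing import List, Sequence


def excursions_per_window(values: Sequence[float], baseline: float,
                          band: float, window: int) -> List[int]:
    """Count how many times the signal leaves baseline ± band in each window.

    Windows are consecutive and non-overlapping; a trailing partial window is
    ignored. Each window is counted independently, so an excursion that spans
    a window boundary is counted again in the next window.
    """
    counts: List[int] = []
    for start in range(0, len(values) - window + 1, window):
        count = 0
        inside = True
        for value in values[start:start + window]:
            now_inside = abs(value - baseline) <= band
            if inside and not now_inside:
                count += 1  # the signal has just left the band
            inside = now_inside
        counts.append(count)
    return counts


def is_getting_more_frequent(counts: Sequence[int]) -> bool:
    """True if the counts never decrease and end higher than they started."""
    if len(counts) < 2:
        return False
    never_decreases = all(b >= a for a, b in zip(counts, counts[1:]))
    return never_decreases and counts[-1] > counts[0]


if __name__ == "__main__":
    # Made-up temperatures: small deviations around 70 that become more frequent.
    temps = [70, 70, 70.6, 70, 70, 70,
             70, 70.6, 70, 69.4, 70, 70,
             70.6, 70, 69.4, 70, 70.6, 70]
    counts = excursions_per_window(temps, baseline=70.0, band=0.5, window=6)
    print(counts, is_getting_more_frequent(counts))
```

None of the deviations in the made-up series would trip an alarm set for dramatic temperature rises, yet the count per window grows steadily, which is the kind of signal worth investigating.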

Looking for these patterns and interpreting them correctly is a statistical and mathematical task, and a highly complex one at that. Hiring a data analyst won’t do here, since their efforts may not be enough. Pushing your sensor data analytics further is a case for someone with more knowledge and skill: a data scientist.

Challenge #4. Troubles of putting sensor data into context

Even if you fish for the tricky patterns, looking at separate changes in sensor readings won’t be enough. To really understand what’s going on and see the bigger picture, you’ll need far more than that:

Searching for the interdependencies between readings. For instance, you may find that pressure rises after dramatic changes in temperature and in the concentration of a particular chemical in a substance. Obviously, to understand that, you’ll need to establish which parameters might interact. But you will also need to understand within what timeframe these interactions may manifest.

Combining sensor data with other data types. To really put sensor data into context, you should try to find other data that can help better analyze sensor readings. The foremost additional data type here is data about machinery configurations, sensor placements and – if your production management is (semi-)automated – commands issued to actuators. Other types of ‘assistant’ data can be: maintenance teams’ actions registry (text), substance colors (image) or the sounds of tapping a cheese wheel with a hammer (audio). All these can influence your yield, downtimes or defect prevention, which is why it’s useful to analyze them.
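For the first point, a common starting technique is lagged correlation: shift one series against the other and see at which delay the two move together most strongly. The sketch below uses the Pearson correlation coefficient on made-up temperature and pressure series that are sampled at the same moments. The function names and the data are assumptions for illustration, and correlation alone does not prove that one parameter causes the other.

```python
from math import sqrt
from statistics import mean
from typing import Sequence, Tuple


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation of two equally long series (0.0 if either is constant)."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = sqrt(sum((x - mx) ** 2 for x in xs))
    sy = sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0
    return cov / (sx * sy)


def best_lag(driver: Sequence[float], response: Sequence[float],
             max_lag: int) -> Tuple[int, float]:
    """Find the delay (in samples) at which response follows driver most closely.

    For each lag, driver[t] is compared with response[t + lag].
    Returns (lag, correlation) for the strongest absolute correlation.
    """
    best = (0, 0.0)
    for lag in range(max_lag + 1):
        xs = driver[:len(driver) - lag]
        ys = response[lag:]
        n = min(len(xs), len(ys))
        if n < 3:
            break  # too few overlapping samples to say anything
        r = pearson(xs[:n], ys[:n])
        if abs(r) > abs(best[1]):
            best = (lag, r)
    return best


if __name__ == "__main__":
    # Made-up data: pressure repeats the temperature pattern two samples later.
    temperature = [20, 20, 25, 20, 20, 30, 20, 20, 22, 20, 20, 20]
    pressure = [20, 20, 20, 20, 25, 20, 20, 30, 20, 20, 22, 20]
    print(best_lag(temperature, pressure, max_lag=4))
```

The lag that comes out of such a search gives a first estimate of the timeframe within which an interaction manifests, which can then be checked against what engineers know about the process.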

Only by putting your data into context and revealing hidden meaningful patterns can you drive your enterprise to new heights of operational efficiency, moving from descriptive and diagnostic methods (the simple forms of sensor data analysis) to predictive or even prescriptive analytics.

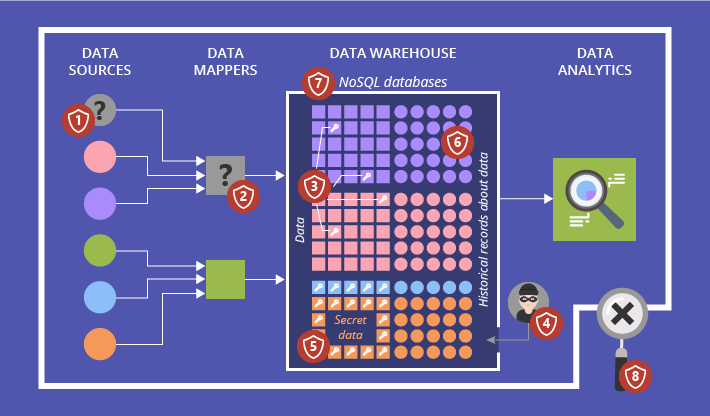

Challenge #5. Dangers of neglected data security

We all need to beware of overall big data security challenges, but there are points in sensor data analytics where data security is particularly important in its own way. Here, we’re talking not only about somebody spoiling your analytics results or stealing your sensor readings for some unclear purpose. The biggest dangers of neglected sensor data security threaten automated or semi-automated production management systems. These complex ecosystems can become dangerous because they use actuators. If fake sensor data is fed to an analytical tool, the tool can send wrong commands to actuators, which can bring chaos to your production. To make it more vivid, picture a situation often portrayed in movies: a person is stuck in a self-driving vehicle that is moving towards a deadly obstacle.

To avoid such horrors, your employees or consultants will need to work really hard on your system’s security so that it is safe without degrading the overall system’s performance.
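One common building block against fake readings is message authentication: each sensor signs its readings with a secret key, and the analytics side rejects anything whose signature does not match. The sketch below uses HMAC-SHA256 from Python’s standard library. It assumes a secret key already shared between the sensor and the receiver, and the function names and payload fields are hypothetical. It is an illustration of the idea only: it does not cover key distribution, replayed messages or encryption.

```python
import hashlib
import hmac
import json


def sign_reading(reading: dict, key: bytes) -> str:
    """Compute an HMAC-SHA256 signature over a canonical JSON form of the reading."""
    payload = json.dumps(reading, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def is_authentic(reading: dict, signature: str, key: bytes) -> bool:
    """Check a signature using a constant-time comparison."""
    expected = sign_reading(reading, key)
    return hmac.compare_digest(expected, signature)


if __name__ == "__main__":
    key = b"demo-key-for-illustration-only"  # assumption: provisioned securely in practice
    reading = {"sensor_id": "pump-7", "timestamp": 1700000000, "pressure": 4.2}
    signature = sign_reading(reading, key)

    # A genuine reading passes the check.
    print(is_authentic(reading, signature, key))

    # A reading altered in transit fails, so no command should be derived from it.
    tampered = dict(reading, pressure=9.9)
    print(is_authentic(tampered, signature, key))
```

A check like this adds a small, fixed cost per message, so it is one of the measures that can improve safety without a noticeable hit to overall performance.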

So, deadly or curable?

Within these challenges, there are plenty of ways for your analytical efforts to go to waste, for instance:

- You may choose too short an interval for data collection and transmission, and your solution will barely work because of overloads.

- You may have too much dirty data coming from sensors, and such noise won’t let you analyze anything properly.

- You may concentrate only on obvious patterns in your data and miss the more serious but less noticeable ones.

- You may analyze your data out of context and fail to understand how your manufacturing parameters and actions interact.

- You may neglect sensor data security and completely ignore the threat of external intrusions that can crash your system.

So, if you don’t manage to handle all of these challenges properly, they will obviously be deadly. That is why you should gain a solid understanding of sensor data, and maybe turn to professionals, before embracing sensor data and its challenges in your enterprise.