Article originally posted on Data Science Central.

Summary: Remember when we used to say data is the new oil? Not anymore. Now training data is the new oil. Acquiring training data is proving to be the single greatest impediment to the wide creation and adoption of deep learning models. We’ll discuss current best practice but, more importantly, new breakthroughs in fully automated image labeling that are proving to be superior even to hand labeling.

More and more data scientists are skilled in the deep learning arts of CNNs and RNNs, and that’s a good thing. What’s interesting, though, is that ordinary statistical classifiers like regression, trees, and SVMs, along with hybrids like XGBoost and ensembles, are still getting good results on image and text problems too, and sometimes even better ones.

I had a conversation with a fellow data scientist just this morning about his project to identify authors based on segments of text. LSTM was one of the algos he used, but to make the project work satisfactorily he had to stack the LSTM with XGBoost, logistic regression, and multinomial Naïve Bayes.

Would this have worked as well without the LSTM component? Why go to the cost in time and compute to spin up a deep neural net? What’s holding us back?

Working with DNN Algos is Getting Easier – Somewhat

The technology of DNNs itself is getting easier. For example, we have a better understanding of how many layers and how many neurons to use as a starting point. A lot of this used to be trial and error, but there are now some good rules of thumb to get us started.

There are any number of papers on this now, but I was particularly attracted to an article by Ahmed Gad that suggests a simple graphical diagramming technique can answer the question.

The trick is to diagram the data to be classified so that it shows you how many divisions of the data would be needed to segment the data using straight lines. In this diagram, even though the data is intermixed, Gad concludes the right number of hidden layers is four. At least it gets you to a good starting point.

And yes, the cost of compute on AWS, Google, and Microsoft is now somewhat less than it used to be, and faster to boot with the advent of not just GPUs but custom TPUs. Still, you can’t realistically do a DNN on the CPUs in your office. You need to spend not insignificant amounts of time and money with a GPU cloud provider to get successful results.

Transfer Learning (TL) and Automated Deep Learning (ADL)

Transfer Learning and Automated Deep Learning need to be seen as two separate categories with the same goal: to make DL faster, cheaper, and more accessible to the middle tier of non-specialist data scientists.

First, Automated Deep Learning has to fully automate the setup of the NN architecture, nodes, layers, and hyperparameters for full de novo deep learning models. This is the holy grail for the majors (AWS, Google, Microsoft) but the only one I’ve seen to date is from a relatively new entrant OneClick.AI that handles this task for both image and text. Incidentally their platform also has fully Automated Machine Learning including blending, prep, and feature selection.

Meanwhile, Transfer Learning is the low-hanging fruit offered to us by the majors along the journey to full ADL. Currently TL works mostly for CNNs. That used to mean just images, but recently CNNs are increasingly being used for text/language problems as well.

The central concept is to use a more complex but successful pre-trained DNN model to ‘transfer’ its learning to your more simplified problem. The earlier, shallower convolutional layers of the already successful CNN are the ones that have learned the features. In short, in TL we retain the successful front end layers and disconnect the backend classifier, replacing it with the classifier for your new problem.

Then we retrain the new hybrid TL model with your problem data, which can be remarkably successful with far less data: sometimes as few as 100 items per class (more is always better, so perhaps 1,000 is a more reasonable estimate).
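To make the retain-and-replace idea concrete, here is a deliberately tiny sketch in plain Python rather than a real deep learning framework. Everything in it is an assumption for illustration: `frozen_features` is a hypothetical stand-in for the retained front end layers (in a real CNN these would be pretrained convolutional layers), and the new classifier is just a logistic regression head. The point is only that training touches the head and never the feature extractor.

```python
import math
import random

def frozen_features(x):
    """Hypothetical stand-in for the retained, pretrained front end layers.

    It is a fixed feature map: nothing in training ever updates it.
    """
    a, b = x
    return [a + b, a - b, a * b]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_head(samples, labels, epochs=200, lr=0.1):
    """Train only the new classifier head (logistic regression) with SGD."""
    feats = [frozen_features(x) for x in samples]
    w = [0.0] * len(feats[0])
    b = 0.0
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b)
            err = p - y  # gradient of the log loss with respect to the logit
            w = [wi - lr * err * fi for wi, fi in zip(w, f)]
            b -= lr * err
    return w, b

def predict(x, w, b):
    f = frozen_features(x)
    return 1 if sigmoid(sum(wi * fi for wi, fi in zip(w, f)) + b) >= 0.5 else 0

random.seed(0)
# The "new problem": only 100 labeled items, label is 1 when a + b > 0.
samples = [(random.uniform(-1, 1), random.uniform(-1, 1)) for _ in range(100)]
labels = [1 if a + b > 0 else 0 for a, b in samples]

w, b = train_head(samples, labels)
accuracy = sum(predict(x, w, b) == y for x, y in zip(samples, labels)) / len(samples)
print(f"training accuracy of the new head: {accuracy:.2f}")
```

In a real project the frozen part would be the pretrained network's convolutional stack and the head a small dense classifier, but the division of labor is the same.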

Transfer Learning services have been rolled out by Microsoft (Microsoft Custom Vision Services, https://www.customvision.ai/) and Google (Cloud AutoML, in beta as of January).

These are good first steps but they come with a number of limitations on how far you can diverge from the subject matter of the successful original CNN and still have it perform well on your transfer model.

The Crux of the Matter Remains the Training Data

Remember when we used to say that data is the new oil? Times have changed. Now Training Data is the new oil.

It’s completely clear that acquiring or hand-coding millions of instances of labeled training data is costly and time consuming, and that it is the single constraint that causes many interesting DNN projects to be abandoned.

Since the cloud providers are anxious for us to use their services, the number of items needed to train successfully has consistently been downplayed in everything we read.

The reuse of large scale models already developed by the cloud providers in transfer models is a good start, but real breakthrough applications still lie in developing your own de novo DNN models.

In their 2016 book Deep Learning, Goodfellow, Bengio and Courville offered a rule of thumb: you can get ‘acceptable’ performance with about 5,000 labeled examples per category, BUT it takes a dataset of at least 10 million labeled examples to “match or exceed human performance”. My guess is that you and your boss are really shooting for that second one but may have badly underestimated the data necessary to get there.

Some Alternative Methods of Creating DNN Training Data

There are two primary thrusts being explored today in reducing the cost of creating training data. Keep in mind that your model probably needs not only its initial training data, but also continuously updated retraining and refresh data to keep it current in the face of inevitable model drift.

Human-in-the-Loop with Generated Labels

You could of course pay human beings to label your training data. There are entire service companies set up in low labor cost countries for just this purpose. Alternatively you could try Mechanical Turk.

But the ideal outcome would be to create a separate DNN model that would label your data, and to some extent this is happening. The problem is that the labeling is imperfect and using it uncorrected would result in errors in your final model.

Two different approaches are being used, one using CNNs or CNN/RNN combinations for predicting labels and the other using GANs to generate labels. Both however are realistic only if quality checked and corrected by human checkers. The goal is to maximize quality (never perfect if only sampled) while minimizing cost.
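As a minimal sketch of what that quality-check step might look like, the function below splits machine-generated labels into an auto-accept queue and a human-review queue. The confidence threshold, the audit rate, and the item names are all assumptions made up for illustration, not features of any particular platform. Low-confidence labels always go to a person, and a random sample of the confident ones gets audited too.

```python
import random

def route_for_review(predictions, threshold=0.9, audit_rate=0.1, seed=0):
    """Split predicted labels into auto-accepted and human-review queues.

    predictions: list of (item_id, label, confidence) tuples.
    threshold and audit_rate are hypothetical knobs for trading
    label quality against human checking cost.
    """
    rng = random.Random(seed)
    accepted, review = [], []
    for item_id, label, confidence in predictions:
        # Low confidence: always reviewed. High confidence: randomly sampled.
        if confidence < threshold or rng.random() < audit_rate:
            review.append((item_id, label))
        else:
            accepted.append((item_id, label))
    return accepted, review

predictions = [
    ("img_001", "cat", 0.98),
    ("img_002", "dog", 0.55),
    ("img_003", "cat", 0.91),
    ("img_004", "dog", 0.72),
]

# With auditing switched off, only the low-confidence items reach a human.
accepted, review = route_for_review(predictions, threshold=0.9, audit_rate=0.0)
print("accepted:", accepted)
print("needs review:", review)
```

Raising `audit_rate` buys more quality at more cost, which is exactly the trade-off described above: anything short of checking every label leaves some errors in place.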

The company Figure Eight (previously known as CrowdFlower) has built an entire service industry around its platform for automated label generation with human-in-the-loop correction. A number of other platforms have emerged that allow you to organize your own internal SMEs for the same purpose.

Completely Automated Label Generation

Taking out the human cost and time barrier by completely automating label generation for training data is the next big hurdle. Fortunately there are several organizations working on this, the foremost of which may be the Stanford DAWN project.

These folks are working on a whole portfolio of solutions to simplify deep learning, many of which have already been rolled out. In the area of training data creation they offer DeepDive and, most recently, Snorkel.

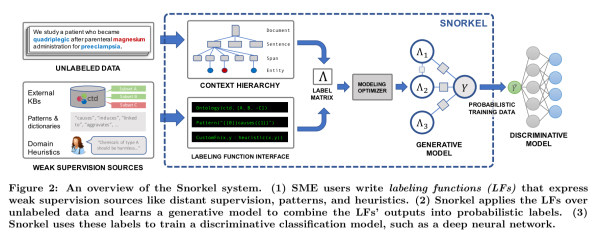

Snorkel is best described as a whole new category of activity within deep learning. The folks at Stanford DAWN have labeled it “data programming”. This is a fully automated (no human labeling), weakly supervised system. See the original study, Snorkel: Rapid Training Data Creation with Weak Supervision.

In short, SMEs who may not be data scientists are trained to write ‘labeling functions’ which express the patterns and heuristics that are expected to be present in the unlabeled data.

Source: Snorkel: Rapid Training Data Creation with Weak Supervision
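To give a feel for what an SME would actually write, here is a sketch of a few labeling functions in plain Python. This is not the Snorkel API. The task (flagging customer complaints), the function names, and the keyword heuristics are all hypothetical. Each function either votes for a label or abstains when its heuristic doesn't apply.

```python
ABSTAIN, NEGATIVE, POSITIVE = -1, 0, 1  # POSITIVE means "this is a complaint"

def lf_contains_refund(text):
    """Heuristic: asking for a refund suggests a complaint."""
    return POSITIVE if "refund" in text.lower() else ABSTAIN

def lf_contains_thanks(text):
    """Heuristic: thanking us suggests it is not a complaint."""
    return NEGATIVE if "thank" in text.lower() else ABSTAIN

def lf_many_exclamations(text):
    """Heuristic: two or more exclamation marks suggest a complaint."""
    return POSITIVE if text.count("!") >= 2 else ABSTAIN

LABELING_FUNCTIONS = [lf_contains_refund, lf_contains_thanks, lf_many_exclamations]

def apply_labeling_functions(texts):
    """Return one row of votes per text, one column per labeling function."""
    return [[lf(t) for lf in LABELING_FUNCTIONS] for t in texts]

texts = [
    "I want a refund now!!",
    "Thanks, the product works great.",
    "Where is my order?",
]
votes = apply_labeling_functions(texts)
for text, row in zip(texts, votes):
    print(row, text)
```

Note that the functions are allowed to be noisy, to overlap, and to disagree. No single heuristic has to be right on its own.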

Snorkel then learns a generative model from the different labeling functions so it can estimate their correlations and accuracy. The system then outputs a set of probabilistic labels that are the training data for deep learning models.
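The sketch below shows the flavor of that step, with heavy caveats. It is my own simplified stand-in, not Snorkel's actual generative model: it assumes binary labels, treats the labeling functions as independent (so it does not estimate their correlations), and uses a small EM-style loop to guess each function's accuracy from how well it agrees with the consensus. The vote matrix is made up for illustration.

```python
import math

ABSTAIN = -1

def probabilistic_labels(votes, iterations=10):
    """Turn a matrix of noisy votes into P(label = 1) for each item.

    votes[i][j] is labeling function j's vote on item i: 0, 1, or ABSTAIN.
    Returns (probabilities, estimated accuracy of each labeling function).
    """
    n_lfs = len(votes[0])
    acc = [0.7] * n_lfs  # starting assumption: each function beats chance
    probs = []
    for _ in range(iterations):
        # E-step: combine the votes, weighting each by its log-odds accuracy.
        probs = []
        for row in votes:
            log_odds = 0.0
            for v, a in zip(row, acc):
                if v == ABSTAIN:
                    continue
                weight = math.log(a / (1.0 - a))
                log_odds += weight if v == 1 else -weight
            probs.append(1.0 / (1.0 + math.exp(-log_odds)))
        # M-step: accuracy = expected agreement with the current soft labels.
        for j in range(n_lfs):
            agree, total = 0.0, 0.0
            for row, p in zip(votes, probs):
                if row[j] == ABSTAIN:
                    continue
                agree += p if row[j] == 1 else 1.0 - p
                total += 1.0
            if total:
                # Clip so the log-odds weight stays finite.
                acc[j] = min(max(agree / total, 0.05), 0.95)
    return probs, acc

votes = [
    [1, 1, ABSTAIN],
    [1, ABSTAIN, 1],
    [0, 0, 0],
    [ABSTAIN, 0, 1],
    [1, 1, 1],
]
probs, acc = probabilistic_labels(votes)
for row, p in zip(votes, probs):
    print(row, round(p, 3))
```

The output is a probability per item rather than a hard label, which is what makes it usable as training data for a downstream model that can weigh uncertain examples less.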

The results so far are remarkably good in terms of both efficiency and accuracy compared to hand labeling and other pseudo-automated labeling methods. A breakthrough of this sort could mean major cost and time savings, as well as the ability for non-specialists to produce more valuable deep learning models.

Other articles by Bill Vorhies.

About the author: Bill Vorhies is Editorial Director for Data Science Central and has practiced as a data scientist since 2001. He can be reached at: