MMS • Roland Meertens

Article originally posted on InfoQ.

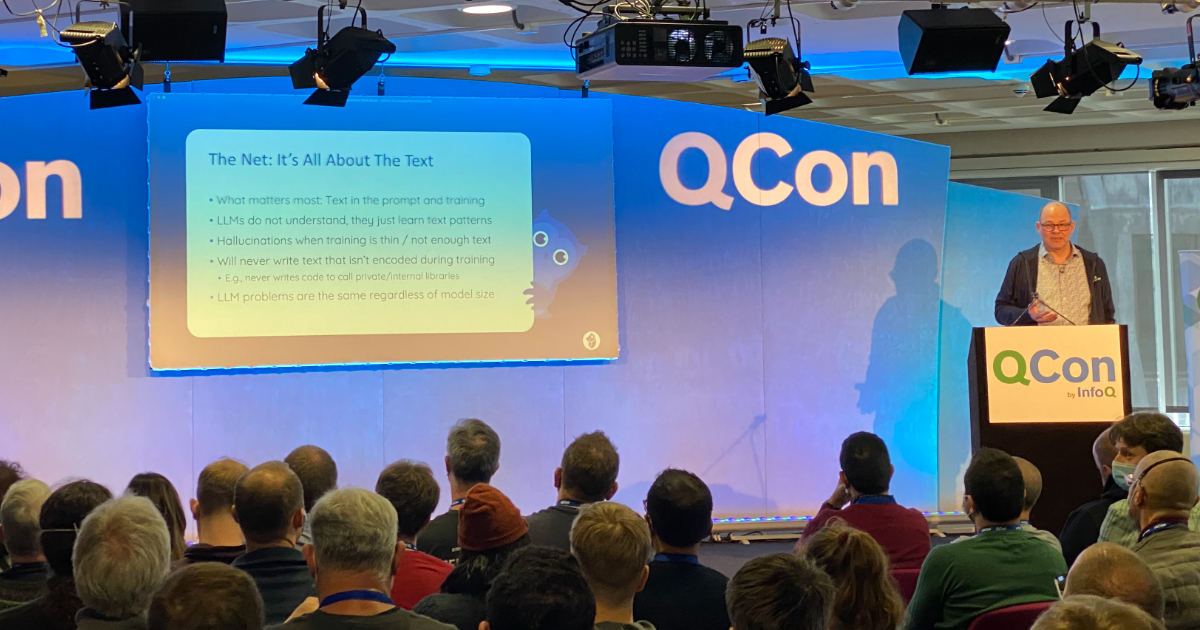

At the recent QCon London conference, Mathew Lodge, CEO of DiffBlue, gave a presentation on advances in artificial intelligence (AI) for writing code. Lodge highlighted the differences between Large Language Model and Reinforcement Learning approaches, emphasizing what each can and cannot do. The session gave an overview of the current state of AI-powered code generation and its future trajectory.

In his presentation, Lodge contrasted AI-powered code generation tools with unit test writing tools. Code generation tools like GitHub Copilot, TabNine, and ChatGPT primarily focus on completing code snippets or suggesting code based on the surrounding context. These tools can greatly speed up development by reducing the time and effort spent on repetitive tasks. Unit test writing tools such as DiffBlue Cover, on the other hand, aim to improve the quality and reliability of software by automatically generating test cases for a given piece of code. Both types of tools use AI to improve productivity and code quality, but they target different parts of the software development lifecycle.

Lodge explained how code completion tools, particularly those based on transformer models, predict the next word or token in a sequence from the text that precedes it. These models have evolved rapidly: GPT-2, one of the first open-source models of this kind, was released in February 2019. Since then, parameter counts have grown dramatically, from 1.5 billion in GPT-2 to 175 billion in GPT-3, the model family behind GPT-3.5, which powered ChatGPT when it launched in November 2022.
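To make next-token prediction concrete, here is a minimal sketch in Python. It assumes a toy bigram frequency table in place of a transformer: a real model scores every token in its vocabulary using the whole preceding context, whereas this stand-in looks only at the previous token. The decoding loop, which repeatedly appends the most likely next token and feeds the result back in, has the same shape. The names `train_bigram` and `generate` are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(tokens):
    """Count how often each token follows each other token (toy stand-in for a transformer)."""
    counts = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        counts[prev][nxt] += 1
    return counts


def generate(counts, start, max_new_tokens):
    """Greedy autoregressive decoding: append the most likely next token, then repeat."""
    out = [start]
    for _ in range(max_new_tokens):
        followers = counts.get(out[-1])
        if not followers:
            break  # this token was never seen with a successor, so there is nothing to predict
        out.append(followers.most_common(1)[0][0])
    return out


corpus = "for i in range ( n ) : total += i".split()
model = train_bigram(corpus)
print(" ".join(generate(model, "for", 5)))  # for i in range ( n
```

The model continues `for` with `i in range ( n` purely because those tokens followed each other in its training text; nothing in the loop checks whether the result is valid or correct code.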

OpenAI Codex, a model with approximately 5 billion parameters used in GitHub Copilot, was trained specifically on open-source code. This lets it excel at tasks such as generating boilerplate code from simple comments and calling APIs based on examples it has seen before. The one-shot prediction accuracy of these models has reached levels comparable to explicitly trained language models. Information about the development of GPT-4, however, remains undisclosed: neither its training data nor its number of parameters has been published, which makes it a black box.

Lodge also discussed the shortcomings of AI-powered code generation tools, highlighting that these models can be unpredictable and heavily dependent on prompts. Because they are essentially statistical models of textual patterns, they may generate code that looks reasonable but is fundamentally flawed. Models can also lose context or generate incorrect code that deviates from the existing code base, for example by calling functions or APIs that do not exist. Lodge showed an example of generated code for a perceptron model that contained two hard-to-spot bugs, which essentially made the code unusable.
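Lodge's perceptron code and its two bugs are not reproduced here. As a hypothetical reference point, the sketch below is a correct single-layer perceptron in plain Python, with comments at two places where a generated version could go wrong without raising any error: the sign of the weight update and the bias update. Both mistakes still yield code that runs, which is exactly what makes this kind of bug hard to spot.

```python
def predict(weights, bias, x):
    """Step activation: output 1 if the weighted sum plus bias is positive, else 0."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, labels, lr=1.0, epochs=20):
    """Classic perceptron learning rule on binary (0/1) labels."""
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            # error is +1, 0 or -1. Writing (prediction - label) instead would flip
            # the direction of every update, yet the code would still run silently.
            error = y - predict(weights, bias, x)
            weights = [w + lr * error * xi for w, xi in zip(weights, x)]
            # Forgetting this line is another easy-to-miss bug: without a learned
            # bias, the decision boundary is forced through the origin.
            bias += lr * error
    return weights, bias


# Logical AND is linearly separable, so the perceptron converges on it.
X = [[0, 0], [0, 1], [1, 0], [1, 1]]
y_and = [0, 0, 0, 1]
w, b = train_perceptron(X, y_and)
print([predict(w, b, x) for x in X])  # [0, 0, 0, 1]
```

A quick check against a known-separable function such as AND or OR is a cheap way to catch both kinds of mistake before trusting generated training code.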

GPT-3.5, for instance, incorporates reinforcement learning from human feedback, in which humans rank the model's answers so that it learns to produce better ones. However, subtle mistakes in these models' output remain hard to identify and can lead to unintended consequences, as in the ChatGPT incident involving the German geocoding company OpenCage, where ChatGPT told users that OpenCage's API could look up locations from phone numbers, a service the company does not offer.
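One common way to turn human rankings into a training signal, the approach OpenAI described for InstructGPT, is to fit a reward model with the pairwise loss `-log(sigmoid(r_better - r_worse))`, averaged over every pair of answers a labeler ranked. The sketch below assumes the reward model's scores are already available as plain numbers and shows only that loss; the function names are hypothetical, and this is not presented as GPT-3.5's actual training code.

```python
import math
from itertools import combinations


def pairwise_loss(r_better, r_worse):
    """-log(sigmoid(r_better - r_worse)), computed without overflow.

    Near zero when the human-preferred answer already scores much higher,
    large when the reward model ranks the pair the wrong way round.
    """
    margin = r_better - r_worse
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


def ranking_loss(scores_in_human_order):
    """Average pairwise loss over every (better, worse) pair implied by one ranking.

    scores_in_human_order[i] is the reward model's score for the answer a human
    ranked i-th (best first). combinations() keeps list order, so each pair is
    (better, worse).
    """
    pairs = list(combinations(scores_in_human_order, 2))
    return sum(pairwise_loss(b, w) for b, w in pairs) / len(pairs)


agrees = ranking_loss([2.0, 1.0, 0.0])     # scores agree with the human ranking
disagrees = ranking_loss([0.0, 1.0, 2.0])  # scores reverse the human ranking
print(agrees < disagrees)  # True
```

Minimizing this loss pushes the reward model to score answers the way humans ranked them; that learned reward is then used to fine-tune the language model itself.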

Additionally, Large Language Models (LLMs) do not possess reasoning capabilities; they can only predict the next piece of text based on their training data. Consequently, their limitations persist regardless of model size, as they cannot generate text beyond what was encoded during their training. Lodge stressed that these problems do not go away, no matter how much training data or how many parameters are used to train these models.

Lodge then shifted the focus to reinforcement learning and its application in tools like DiffBlue Cover. Reinforcement learning differs from the LLM approach by learning by doing, rather than relying on pre-existing knowledge. DiffBlue Cover uses a feedback loop in which the system predicts a test, runs it, and then evaluates its effectiveness based on coverage, other metrics, and the existing Java code. Iterating this loop lets the system generate tests with higher coverage and better readability, ultimately making testing more effective and efficient for developers. Lodge also mentioned that their representation of test coverage allows them to run only the tests relevant to a code change, cutting testing costs by about 50%.
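The predict-run-evaluate loop can be illustrated with a deliberately simplified sketch in Python. It is not DiffBlue Cover's implementation and not reinforcement learning proper: candidate inputs are proposed at random rather than by a learned policy, and the "reward" is simply whether a test reaches a branch that no earlier test reached. To keep it short, the hypothetical function under test returns a different value from each branch, so the return value stands in for real coverage instrumentation. `classify` and `generate_tests` are illustrative names.

```python
import random


def classify(n):
    """Hypothetical code under test: each return statement is a separate branch."""
    if n < 0:
        return "negative"
    if n == 0:
        return "zero"
    if n % 2 == 0:
        return "even"
    return "odd"


def generate_tests(func, candidate_inputs, budget, seed=0):
    """Propose a test, run it, and keep it only if it increases coverage."""
    rng = random.Random(seed)
    covered = set()
    tests = []  # (input, observed output) pairs, i.e. the asserts of a generated unit test
    for _ in range(budget):
        n = rng.choice(candidate_inputs)  # 1. propose a test input
        branch = func(n)                  # 2. run it (return value doubles as the branch id)
        if branch not in covered:         # 3. evaluate: reward only new coverage
            covered.add(branch)
            tests.append((n, branch))
    return tests, covered


tests, covered = generate_tests(classify, list(range(-5, 6)), budget=200)
for n, expected in tests:
    print(f"assert classify({n}) == {expected!r}")
```

Each kept pair becomes one assertion in the emitted test file. A production tool would also weigh other signals the talk mentioned, such as readability, and would replace random proposals with a policy that improves from the feedback it receives.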

To demonstrate the capabilities of DiffBlue Cover, Lodge gave a live demo featuring a simple Java application for finding owners. The application had four cases for which tests needed to be created. Running entirely on a local laptop, DiffBlue Cover generated the tests within 1.5 minutes. The resulting tests appeared in IntelliJ as a new file containing mock-based tests for scenarios such as a single owner being returned, two owners being returned, no owner, and an empty ArrayList.

In conclusion, advances in AI-powered code generation and reinforcement-learning-based testing, as demonstrated by tools like DiffBlue Cover, have the potential to significantly change how software is developed and tested. By understanding the strengths and limitations of these approaches, developers and architects can make informed decisions about how best to use these technologies to improve code quality, productivity, and efficiency while reducing the risk of subtle errors and unintended consequences.