
Article originally posted on Data Science Central.

Conversational user interface (UI) is changing the way we interact with technology. Intelligent assistants, chatbots, and voice-enabled devices, like Amazon Alexa and Google Home, offer a new, natural, and intuitive form of human-machine interaction and open up a whole new world for us as humans. Chatbots and voicebots ease, speed up, and improve daily tasks. They increase our efficiency and, compared to humans, they are also very cost-effective for the businesses employing them.

This article addresses the concept of conversational UIs by first exploring what they are, how they evolved, and what they offer. It provides an introduction to the conversational world: we will look at how UI has developed over the years, and at the difference between voice control, chatbots, virtual assistants, and conversational solutions.

What is conversational UI?

Broadly speaking, conversational UI is a new form of interaction with computers that tries to mimic a “natural human conversation”. To understand what this means, we can turn to the good old Oxford Dictionary and search for the definition of a conversation:

con·ver·sa·tion

/ˌkänvərˈsāSH(ə)n/ noun

A talk, especially an informal one, between two or more people, in which news and ideas are exchanged.

On Wikipedia (https://en.wikipedia.org/wiki/Conversation), I found some interesting additions. There, conversation is defined a little more broadly: “An interactive communication between two or more people…the development of conversational skills and etiquette is an important part of socialization.”

The development of conversational skills in a new language is a frequent focus of language teaching and learning. If we sum up the two definitions, we can agree that a conversation must be:

- Some type of communication (a talk)

- Between two or more people

- Interactive: ideas and thoughts must be exchanged

- Part of a socialization process

- Focused on learning and teaching

Now if we go back to our definition of conversational UI, we can easily identify the gaps between the classic definition of a conversation and what we define today as conversational UI.

Conversational UI, as opposed to the preceding definition:

- Doesn’t have to be oral: it could be in writing (for example, chatbots).

- Is not just between people, and is mostly limited to two sides: in conversational UI, at least one participant is a computer, and the conversation rarely involves more than two participants.

- Is less interactive, and it's hard to say whether ideas are truly exchanged between the two participants.

- Is claimed to be unsocialized, since we are dealing with computers and not people.

However, two of the main components of a conversation are already there. Conversational UI:

- Is a medium of communication that enables natural conversation between two entities.

- Is about learning and teaching, leveraging natural language understanding (NLU), artificial intelligence, machine learning, and deep learning, as computers continue to learn and grow their understanding capabilities.

The gaps that we identified above represent the future evolution of conversational UI. While there still seems to be a long way to go before we can truly replace human-to-human interaction, with today's and future technologies, those gaps will close sooner than we think. Before we try to predict the future, however, let's look at how human-computer interaction has evolved over the last 50 years.

The evolution of conversational UI

Conversational UI is part of a long evolution of human-machine interaction. The interface of this communication has evolved tremendously over the years, mostly thanks to technology improvements, but also through humans’ imagination and vision.

Science fiction books and movies have predicted different forms of humanized interaction with machines for decades (some of the best-known examples are Star Wars, 2001: A Space Odyssey, and Star Trek). However, computing power was long extremely scarce and expensive, so spending it on UIs wasn't a high priority. Today, when our smartphones have more computing power than the supercomputers of the past, human-machine interaction can become much more natural and intuitive. In this article, we will review the evolution of computer UI, from the textual through to the graphical and all the way to the conversational UI.

Textual interface

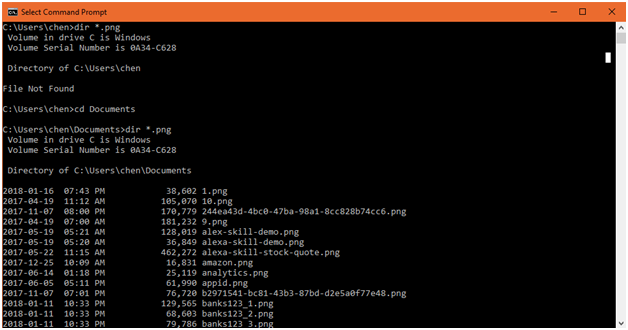

For many years, a textual interface was the only way to interact with computers. The textual interface used commands with a strict format and evolved into free natural language text.

Figure 1: A simple textual interaction based on commands

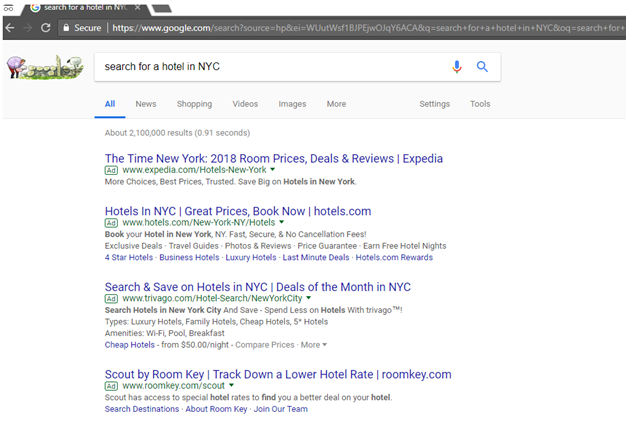

A good example of a common use of textual interaction is search engines. Today, if I type a sentence such as "search for a hotel in NYC" into Google or Bing (or any other search engine, for that matter), the search engine will provide me with a list of relevant hotels in NYC.

Figure 2: Modern textual UI: Google’s search engine.

Graphical user interface (GUI)

A later evolution of human-machine interface was the GUI. This interface mimics the way that we perform mechanical tasks in “real life” and replaces the textual interaction.

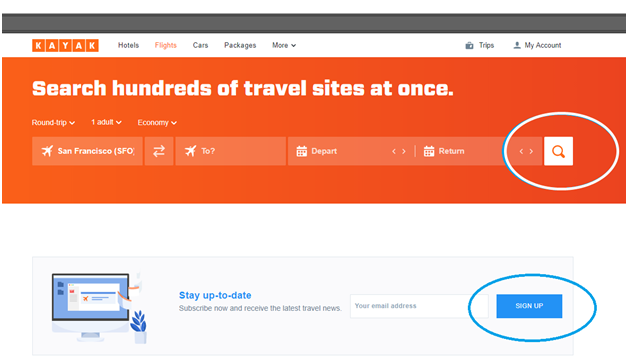

Figure 3: The GUI mimicking real-life actions.

With this interface, for example, to enable/disable an action or specific capability, we will click a button on a screen, using a mouse (instead of writing a textual command line), mimicking a mechanical action of turning on or off a real device.

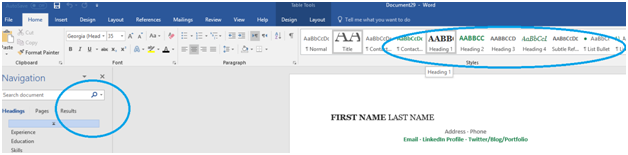

Figure 4: Microsoft Word is changing the way we interact with personal computers

The GUI became extremely popular during the 90s with the spread of Microsoft Windows, which became the most popular operating system for personal computers. The next evolution of GUIs came with touchscreen devices, which eliminated the need for intermediaries like the mouse and provided a more direct and natural way of interacting with a computer.

Figure 5: Touchscreens are eliminating the mouse

Figure 6: Touchscreens allow scrolling and clicking, mimicking manual actions

Conversational UI

The latest evolution of computer-human interaction is the conversational UI. As defined above, a conversational interaction is a new form of communication between humans and machines that includes a series of questions and answers, if not an actual exchange of thoughts.

Figure 7: The CNN Facebook Messenger chatbot

In the conversational interface, we experience, once again, a form of two-sided communication, where the user asks a question and the computer responds with an answer. In many ways, this is similar to the textual interface we introduced earlier (see the example of the search engine); however, in this case, the end user is not searching for information on the internet but is rather interacting in a one-to-one format with someone who delivers the answer. That someone is a humanized computer entity called a bot.

The conversational UI mimics a text or voice interaction with a friend or service provider. Though it is still not a true conversation as defined in the Oxford Dictionary, it provides a free and natural experience that comes closer to human-to-human interaction than anything we have seen yet.

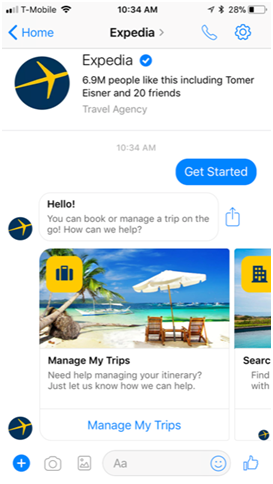

Figure 8: The Expedia Facebook Messenger chatbot

Voice-enabled conversational UI

A sub-category in the field of conversational UI is voice-enabled conversational UI. Whereas the shifts from textual UI to GUI, and then from GUI to conversational UI, are best described as evolution, conversational voice interaction is a full paradigm shift. This new way to interact with machines, using nothing but our voice, our most basic communication and expression tool, takes human-machine relationships to a whole new level.

Computers are now capable of recognizing our voice, “understanding” our requests, responding back and even replying with suggestions and recommendations. Being a natural interaction method for humans, voice makes it easy for young people and adults to engage with computers, in a limit-free environment.

Figure 9: Amazon Alexa and Google Home are voice-enabled devices that facilitate conversational interactions between humans and machines

The stack of conversational UI

The building blocks required to develop a modern and interactive conversational application include:

- Speech recognition (for voicebots)

- NLU

- Conversational level:

  - Dictionary/samples

  - Context

  - Business logic

In this section, we will walk through the “journey” of a conversational interaction along the conversational stack.

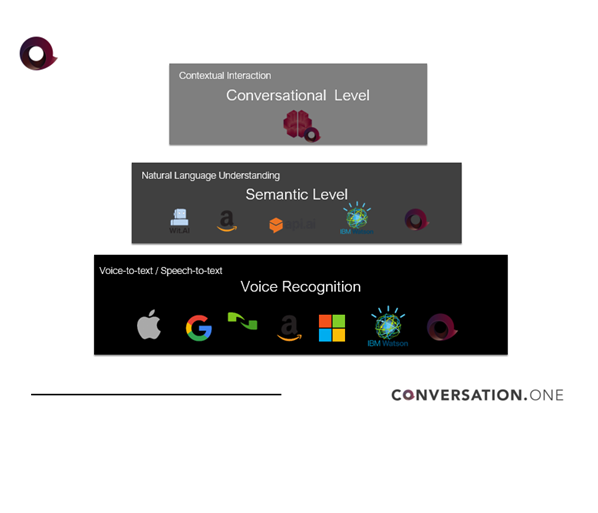

Figure 10: The conversational stack: voice recognition, NLU, and context
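To make the journey through the stack concrete, here is a minimal Python sketch that chains one function per layer. It rests on loud assumptions: `speech_to_text` is a stub that passes a transcript through (no real speech recognition service is called), `understand` is a naive keyword lookup standing in for a real NLU engine, and all names (`Context`, `speech_to_text`, `understand`, `respond`, `handle_turn`) are hypothetical, chosen only for illustration.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class Context:
    """Hypothetical per-user memory kept between turns."""
    last_intent: Optional[str] = None
    history: List[Tuple[str, Optional[str]]] = field(default_factory=list)


def speech_to_text(user_input: str) -> str:
    # Stub for the speech recognition layer (voicebots only). A real bot would
    # send audio to a speech-to-text service; here the input is already text.
    return user_input


def understand(text: str) -> Optional[str]:
    # Stand-in for the NLU layer: a naive keyword lookup for two intents.
    words = set(re.findall(r"[a-z']+", text.lower()))
    if words & {"flight", "fly"}:
        return "BookFlight"
    if words & {"hotel", "room"}:
        return "BookHotel"
    return None


def respond(intent: Optional[str], ctx: Context) -> str:
    # Business logic, using context to interpret follow-up utterances.
    if intent is None and ctx.last_intent is not None:
        return f"Noted, adding that to your {ctx.last_intent} request."
    ctx.last_intent = intent
    replies = {
        "BookFlight": "Where would you like to fly?",
        "BookHotel": "Which city do you need a hotel in?",
    }
    return replies.get(intent, "Sorry, I didn't understand that.")


def handle_turn(user_input: str, ctx: Context) -> str:
    text = speech_to_text(user_input)   # 1. speech recognition
    intent = understand(text)           # 2. NLU
    ctx.history.append((text, intent))  # 3. conversational level: context
    return respond(intent, ctx)         # 4. business logic


ctx = Context()
print(handle_turn("I need a flight", ctx))      # Where would you like to fly?
print(handle_turn("To New York, please", ctx))  # Noted, adding that to your BookFlight request.
```

The second turn carries no recognizable intent on its own; only the stored context lets the bot treat it as part of the flight request.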

Voice recognition technology

Voice recognition (also known as speech recognition or speech-to-text) transcribes voice into text. The computer captures our voice with a microphone and provides the text transcription of the words. Using a simple level of text processing, we can develop a voice control feature with simple commands, such as “turn left” or “call John”. Leading providers of speech recognition today include Nuance, Amazon, IBM Watson, Google, Microsoft and Apple.
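As a rough illustration of such "simple text processing", the sketch below matches an already-transcribed utterance against a tiny, hypothetical command grammar using Python's `re` module. It assumes the speech recognition step has already produced the text; the patterns and action names are invented for the example.

```python
import re
from typing import Optional, Tuple

# Hypothetical command grammar: each pattern must match the whole transcript.
COMMANDS = [
    (re.compile(r"turn (left|right)"), "turn"),
    (re.compile(r"call (\w+)"), "call"),
]


def parse_command(transcript: str) -> Optional[Tuple[str, str]]:
    """Return (action, argument) for a recognized command, else None."""
    text = transcript.strip().lower()
    for pattern, action in COMMANDS:
        match = pattern.fullmatch(text)
        if match:
            return action, match.group(1)
    return None


print(parse_command("Turn left"))                    # ('turn', 'left')
print(parse_command("Call John"))                    # ('call', 'john')
print(parse_command("Could you ring John for me?"))  # None
```

The last call shows the limitation: any phrasing outside the fixed grammar is simply not understood, which is why richer bots need an NLU layer.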

NLU

To achieve a higher level of understanding, beyond simple commands, we must include a layer of NLU. NLU fulfills the task of reading comprehension. The computer “reads the text” (in a voicebot it will be the transcribed text from the speech recognition) and then tries to grasp the user’s intent behind it and translate it into concrete steps.

An example is a travel bot. The system identifies two individual intentions:

- Flight booking – BookFlight

- Hotel booking – BookHotel

When a user asks to book a flight, the NLU layer is what helps the bot understand that the intent behind the user's request is BookFlight. However, since people don't talk like computers, and since our goal is to create a humanized experience (and not a computerized one), the NLU layer should be able to connect many differently phrased requests to the same intent.

Another example is when a user says "I need to fly to NYC". The NLU layer is expected to understand that the user's intent is to book a flight. A more complex request for our NLU to understand would be "I'm travelling again".

Here, too, the NLU should connect the user's sentence to the BookFlight intent. This is a much harder task, since the sentence contains neither the word flight nor a destination from a list of cities or states, so the bot has no explicit clue to rely on.
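The difference between the two requests can be seen with a deliberately naive, keyword-based intent detector. This is a hypothetical sketch, not how production NLU engines work: the keyword sets are assumptions made up for the travel example.

```python
import re
from typing import Optional

# Hypothetical keyword rules for the two travel-bot intents.
KEYWORDS = {
    "BookFlight": {"flight", "fly", "plane"},
    "BookHotel": {"hotel", "room", "accommodation"},
}


def keyword_intent(utterance: str) -> Optional[str]:
    """Return the first intent whose keywords appear in the utterance."""
    tokens = set(re.findall(r"[a-z']+", utterance.lower()))
    for intent, keywords in KEYWORDS.items():
        if tokens & keywords:
            return intent
    return None


print(keyword_intent("I need to fly to NYC"))  # BookFlight
print(keyword_intent("I'm travelling again"))  # None
```

The first request contains the keyword "fly"; the second contains no flight-related word at all, so a keyword approach cannot map it to BookFlight.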

Computer science considers NLU to be an "AI-hard" problem (Roman V. Yampolskiy, "Turing Test as a Defining Feature of AI-Completeness", in Artificial Intelligence, Evolutionary Computation and Metaheuristics (AIECM)), meaning that even with artificial intelligence (powered by deep learning), developers still struggle to provide a high-quality solution. Calling a problem AI-hard means that it cannot be solved by a simple, specific algorithm, because solving it involves dealing with the unexpected circumstances that come with any real-world problem. In NLU, those unexpected circumstances are the countless configurations of words and sentences in an endless number of languages and dialects. Some leading providers of NLU are Dialogflow (previously api.ai, acquired by Google), wit.ai (acquired by Facebook), Amazon, IBM Watson, and Microsoft.

Dictionaries/samples

To build a good NLU layer that can understand people, we must provide a broad and comprehensive sample set of concepts and categories in a subject area or domain. Simply put, we need to provide a list of associated samples or, even better, a collection of possible sentences for each intent (request) that a user can activate on our bot. If we go back to our travel example, we would need to build a comprehensive dictionary, as you can see in the following table:

| User says (samples) | Related intent |
|---|---|
| I want to book my travel; I want to book a flight; I need a flight | BookFlight |
| Please book a hotel room; I need accommodation | BookHotel |
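One simple way to use such a dictionary is to compare a new utterance with every sample and pick the intent of the most similar one. The sketch below does this with word-overlap (Jaccard) similarity. It is a toy stand-in for the statistical models real NLU engines use; the stop-word list and the 0.3 threshold are arbitrary assumptions for the example.

```python
import re
from typing import Dict, List, Optional, Tuple

# The sample dictionary from the table, as a plain data structure.
SAMPLES: Dict[str, List[str]] = {
    "BookFlight": ["I want to book my travel", "I want to book a flight", "I need a flight"],
    "BookHotel": ["Please book a hotel room", "I need accommodation"],
}

# Assumed, minimal stop-word list so that filler words don't dominate.
STOPWORDS = {"i", "a", "to", "my", "me", "the", "for"}


def tokens(text: str) -> set:
    return set(re.findall(r"[a-z']+", text.lower())) - STOPWORDS


def jaccard(a: set, b: set) -> float:
    # |A ∩ B| / |A ∪ B|, defined as 0.0 when both sets are empty.
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def classify(utterance: str, threshold: float = 0.3) -> Tuple[Optional[str], float]:
    """Return (intent of the most similar sample, score), or None below threshold."""
    words = tokens(utterance)
    best_intent, best_score = None, 0.0
    for intent, samples in SAMPLES.items():
        for sample in samples:
            score = jaccard(words, tokens(sample))
            if score > best_score:
                best_intent, best_score = intent, score
    if best_score < threshold:
        return None, best_score
    return best_intent, best_score


print(classify("I need a hotel room"))      # ('BookHotel', 0.4)
print(classify("Book a flight for me")[0])  # BookFlight
print(classify("I'm travelling again"))     # (None, 0.0)
```

Even with samples, "I'm travelling again" fails here, because "travelling" never literally matches "travel"; adding more samples, or a model that generalizes beyond exact words, is what closes that gap.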

Building these dictionaries, or sets of samples, can be a tough and Sisyphean task. It is domain specific and language specific and, as such, requires different configurations and tweaks from one use case to another, and from one language to another. Unlike the graphical UI, where the user is restricted to the options on the screen, the conversational UI is unique, since it offers the user an unlimited experience. As such, however, it is also very difficult to pre-configure to a level of perfection (see the AI-hard problem above). Therefore, the more samples we provide, the better the bot's NLU layer will be able to understand different requests from a user. Beware of the Catch-22 in this case: the more intents we build, the more samples are required, and all those samples can easily lead to overlapping intents. For example, when a user says "I need help", they might mean they want to contact support, but they might also need help using the app.
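One common mitigation for overlapping intents, sketched here under stated assumptions, is to compare the scores of the two best-matching intents and ask a clarifying question when they are too close. The intent names `ContactSupport` and `AppHelp`, their samples, and the 0.15 margin are all hypothetical; the scoring is the same toy word-overlap measure as before, not a real NLU engine.

```python
import re
from typing import Dict, List

# Hypothetical intents whose samples overlap, as in the "I need help" example.
SAMPLES: Dict[str, List[str]] = {
    "ContactSupport": ["I need help from support", "I need help with my order"],
    "AppHelp": ["I need help using the app", "How do I use this app"],
}


def tokens(text: str) -> set:
    return set(re.findall(r"[a-z']+", text.lower()))


def intent_scores(utterance: str) -> Dict[str, float]:
    """Best word-overlap (Jaccard) score of the utterance for each intent."""
    words = tokens(utterance)
    return {
        intent: max(len(words & tokens(s)) / len(words | tokens(s)) for s in samples)
        for intent, samples in SAMPLES.items()
    }


def resolve(utterance: str, margin: float = 0.15) -> str:
    """Return the top intent, or a clarifying question if the top two are close."""
    ranked = sorted(intent_scores(utterance).items(), key=lambda kv: kv[1], reverse=True)
    (top, top_score), (second, second_score) = ranked[0], ranked[1]
    if top_score - second_score < margin:
        # The samples overlap: ask the user instead of guessing.
        return f"Do you mean {top} or {second}?"
    return top


print(resolve("I need help"))            # Do you mean ContactSupport or AppHelp?
print(resolve("How can I use the app"))  # AppHelp
```

Asking the user trades one extra turn for not silently routing them to the wrong intent, which is usually the cheaper failure.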

Summary

Intelligent assistants, chatbots, voicebots, and voice-enabled devices, like Amazon Echo and Google Home, have stormed into our lives, offering many ways to improve daily tasks through natural human-computer communication. In fact, some of the applications that we use today already take advantage of voice- or chat-enabled interaction to ease our lives. Whether we are turning the lights in our living room on and off with a simple voice command or shopping online with a Facebook Messenger bot, conversational UI makes our interactions more focused and efficient.

Looking further ahead, we can assume that conversational UI, and more specifically voice-enabled communication, will replace all interactions with computers. In the movie Her (2013), written and directed by Spike Jonze, an unseen computer bot communicates with the main character using voice. This voicebot (voiced remarkably by Scarlett Johansson) assists, guides, and advises the main character on any possible matter. It is a personal assistant on steroids. Its knowledge is unlimited, it continues to learn all the time, it can hold a conversation (a true exchange of ideas), and in the end it can even understand feelings (although it still doesn't feel by itself). However, as we've seen above, with current technology, real-life conversational UI still lacks many of the components in Her and faces unsolved challenges and open questions. The experience is limited for the user, as it's still mostly uncontextual, and bots are far from understanding feelings or social situations.

Nevertheless, with all the limitations we experience today, creating a supercomputer that knows everything is more within reach than creating a super-knowledgeable person. Technology, whether in the form of advanced artificial intelligence, machine learning, or deep learning methodologies, will solve most of those challenges and make the progress needed to build successful bot assistants.

What might take a bit more time to transform is human skepticism: conversational UI is also limited because its users are still very skeptical of it. Aware of its limitations, we stick to what works best and tend not to challenge it too much. When comparing children's interactions with bots to those of adults, it is clear that while adults stay within specific boundaries of usage, children interact with the bot as if it were a real adult human, knowledgeable about almost everything. It might be a classic chicken-and-egg dilemma, but one thing is for sure: the journey has started and there's no going back.

You enjoyed an excerpt from Packt Publishing’s upcoming book “Chatbot and Voicebot Design”, written by Rachel Batish. If you want to create conversational interfaces for AI from scratch and with major frameworks this is the book for you.