Article originally posted on Data Science Central.

Summary: How about we develop an ML platform that any domain expert could use to build a deep learning model without help from specialist data scientists, in a fraction of the time and cost? The good news is that the folks at the Stanford DAWN project are hard at work on just such a platform, and the initial results are extraordinary.

Last week we wrote that sufficient labeled training data was the single greatest cost factor holding back wide adoption of machine learning. I stand corrected. It may be the most expensive, but it’s far from the only thing holding ML back.

The work of the Stanford DAWN project makes a broader case: its researchers propose that literally every step in the development process, from data acquisition to feature extraction to model training and all the way to productionizing the model, is deeply flawed, and that each step contributes significantly to holding back adoption of machine learning.

The Stanford DAWN project (Data Analytics for What’s Next) is a five-year project started just about a year ago by four well-known Stanford CS principal investigators, Peter Bailis, Kunle Olukotun, Christopher Re, and Matei Zaharia, plus their postdocs and Ph.D. students. With a team of 30-plus, there are plenty of academic and start-up chops to go around.

What’s Broken

What they say is wrong with the current process is pretty much what we all know.

- Training data is too limited and too expensive to create.

- Our algorithms are pretty much OK, but it still takes a substantial team of specialist data scientists, data engineers, domain experts, and supporting staff to get good results.

- And if the number and cost of expert staff were not deterrent enough, our understanding of how to optimize nodes, layers, and hyperparameters is still primitive. Plus, the rules seem to change based on the type of data being analyzed.

- Finally, moving models into production and keeping them updated is one last hurdle, since scoring with the model can frequently be done only on the same costly and complex architecture used for training.

Despite the huge improvements in GPU/TPU compute, most of that machine time is wasted on trial and error. Our development process has not kept up with our hardware.

In short, it just takes too many people too darn long to create useful models, much less those that rise to the level of human performance.

We’ve said it before, and the DAWN PIs say it too: this phase in our development looks a lot like the dawn of the PC, with lots of lab-coated specialists hovering around, all needed to keep this very young technology alive.

The major cloud providers, along with everyone else, understand this. The introduction of transfer learning in the last year or so is a kind of stopgap that provides some simplification while reusing many of the very large and expensive pre-trained models they’ve built.

Still, if we want to get from the earliest prototype PC to today’s simple and reliable laptop, we’ve got a lot of simplifying and standardizing to do.

Measuring Success

As they set out, the DAWN team, like all good data scientists, first had to decide how to tell whether they were actually making things better. They decided to focus on a single measure: reducing the time and cost of the end-to-end ML workflow. That led to these design goals:

- Simplify, standardize, and optimize the end-to-end ML workflow.

- Empower domain experts to build ML models without the need for specialist ML data scientists.

Interestingly, improving the accuracy of existing algorithms is not a goal. The DAWN PIs think that our existing algorithms are almost always good enough.

The Solution – At a High Level

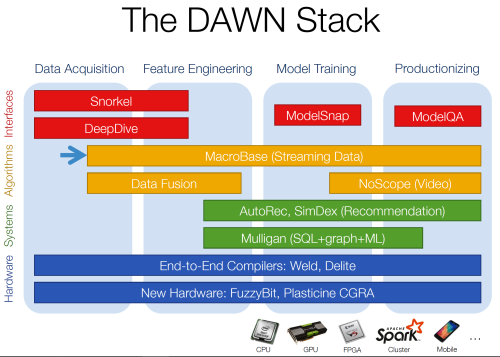

The work done over the last year has created a group of open source applications, many of which have already been implemented by DAWN’s commercial sponsors. The diagram above shows that group of solutions at a high level. (All the graphics in this article are drawn directly from the DAWN project.)

DAWNBench

Spanning the entire stack is DAWNBench, the benchmark suite against which the team measures its progress in reducing end-to-end time, cost, and complexity. DAWNBench uses a reference set of common deep learning workloads, quantifying training time, training cost, inference latency, and inference cost across different optimization strategies, model architectures, software frameworks, clouds, and hardware.
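To make the benchmark's core training metrics concrete, here is a minimal Python sketch. It assumes a run is logged as hypothetical (elapsed_seconds, validation_accuracy) checkpoints and billed at an assumed flat hourly price, then computes time-to-accuracy (how long a run takes to first reach a target validation accuracy, the kind of end-to-end measure DAWNBench ranks entries on) and the matching training cost. `TrainingRun`, `time_to_accuracy`, and `cost_to_accuracy` are illustrative names, not part of DAWNBench.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass
class TrainingRun:
    # Hypothetical log format: (elapsed_seconds, validation_accuracy) pairs in time order.
    checkpoints: Sequence[Tuple[float, float]]
    # Assumed flat price for the cloud instance(s) used, in USD per hour.
    hourly_price_usd: float


def time_to_accuracy(run: TrainingRun, target: float) -> Optional[float]:
    """Seconds until validation accuracy first reaches `target`, or None if it never does."""
    for elapsed, accuracy in run.checkpoints:
        if accuracy >= target:
            return elapsed
    return None


def cost_to_accuracy(run: TrainingRun, target: float) -> Optional[float]:
    """Training cost in USD up to the target accuracy, at the assumed hourly price."""
    seconds = time_to_accuracy(run, target)
    if seconds is None:
        return None
    return seconds / 3600 * run.hourly_price_usd


# Illustrative numbers only, not DAWNBench results.
run = TrainingRun(checkpoints=[(3600, 0.71), (7200, 0.88), (10800, 0.93)], hourly_price_usd=3.0)
print(time_to_accuracy(run, 0.93))  # 10800
print(cost_to_accuracy(run, 0.93))  # 9.0
```

Measuring to a fixed accuracy threshold, rather than for a fixed number of epochs, is what lets runs on different hardware, frameworks, and optimization strategies be compared directly.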

Here are some highlights of the major components.

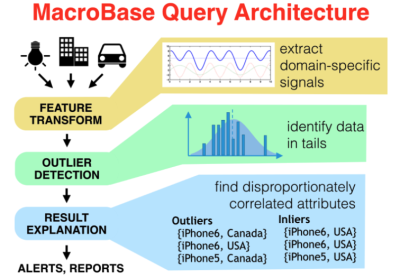

MacroBase

The problem with streaming data is that there is simply too much of it, arriving too fast. MacroBase is a new analytic engine specialized for one task: finding and explaining unusual trends in data. In keeping with the design goals, MacroBase requires no ML expertise, uses general models and tunes them automatically, and can be incorporated directly into production environments. It’s open source and available now on GitHub.
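MacroBase's published design pairs a classification step, which flags unusual points using robust statistics such as the median absolute deviation (MAD), with an explanation step, which looks for attribute values that are far more common among the flagged points than among the rest (a high risk ratio above a minimum support). The sketch below is a heavily simplified, batch-only illustration of that idea in plain Python. It assumes single-attribute explanations and hypothetical default thresholds; it is not MacroBase's API, and the real engine also handles streaming data and attribute combinations.

```python
from collections import Counter
from statistics import median
from typing import Dict, List, Tuple


def mad_outliers(values: List[float], threshold: float = 3.0) -> List[bool]:
    """Flag values more than `threshold` median absolute deviations from the median."""
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        # Degenerate case: most points are identical, so any deviation counts as unusual.
        return [v != med for v in values]
    return [abs(v - med) / mad > threshold for v in values]


def explain(rows: List[Dict[str, str]], is_outlier: List[bool],
            min_support: float = 0.1, min_risk_ratio: float = 3.0) -> List[Tuple[str, str, float]]:
    """Rank single (attribute, value) pairs by how much more often they occur among outliers."""
    total_out = sum(is_outlier)
    total_in = len(rows) - total_out
    out_counts: Counter = Counter()
    in_counts: Counter = Counter()
    for row, flagged in zip(rows, is_outlier):
        for pair in row.items():
            (out_counts if flagged else in_counts)[pair] += 1

    results = []
    for (attr, value), a_out in out_counts.items():
        if a_out / total_out < min_support:
            continue  # too rare among outliers to be a useful explanation
        a_in = in_counts[(attr, value)]
        b_out, b_in = total_out - a_out, total_in - a_in
        # Risk ratio: outlier rate with this value vs. outlier rate without it.
        rate_with = a_out / (a_out + a_in)
        rate_without = b_out / (b_out + b_in) if (b_out + b_in) else 0.0
        risk_ratio = float("inf") if rate_without == 0 else rate_with / rate_without
        if risk_ratio >= min_risk_ratio:
            results.append((attr, value, risk_ratio))
    return sorted(results, key=lambda r: r[2], reverse=True)


# Toy data: request latencies tagged with hypothetical device and region attributes.
latency_ms = [10, 11, 9, 10, 12, 10, 11, 95, 98, 97]
rows = [{"device": "A", "region": r} for r in ["us", "eu", "us", "eu", "us", "eu", "us"]]
rows += [{"device": "B", "region": r} for r in ["us", "eu", "us"]]
flags = mad_outliers(latency_ms)
print(explain(rows, flags))  # [('device', 'B', inf)]
```

Region appears among both normal and slow requests, so it is filtered out; device B accounts for every slow request and none of the normal ones, so it surfaces as the explanation.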

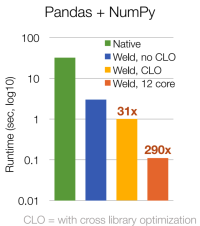

Weld: End to End Compilers

In the process of creating ML models, we use many processing libraries and functions, ranging from SQL to Pandas to NumPy to TensorFlow and the rest. These are all data-intensive, and as a result, data movement costs dominate on modern hardware.

Weld creates a common runtime for many of these libraries, with many more yet to come. The results are impressive: single-library speedups range from 4.5x for NumPy to 30x for TensorFlow (logistic regression).

Where Weld really shines is when an ML workload calls for cross-library computation. For example, tests on Pandas + NumPy showed a 290x speedup.

The list of supported libraries and functions is growing fast. Weld is currently available on GitHub.
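Weld's published design has each library express its work in a common intermediate representation, evaluates that work lazily, and optimizes the combined program before running it, for example by fusing loops that would otherwise pass intermediate results between libraries through memory. The toy Python sketch below illustrates only that fusion idea. `LazyVector` and `eager_pipeline` are hypothetical names, this is not Weld's API, and pure Python will not reproduce Weld's measured speedups.

```python
from typing import Callable, List, Optional

Op = Callable[[float], float]


class LazyVector:
    """Toy stand-in for a shared IR: records element-wise ops instead of running them."""

    def __init__(self, data: List[float], ops: Optional[List[Op]] = None):
        self.data = data
        self.ops: List[Op] = list(ops) if ops else []

    def map(self, fn: Op) -> "LazyVector":
        # Nothing is computed here; the op is just appended to the pending program.
        return LazyVector(self.data, self.ops + [fn])

    def sum(self) -> float:
        # Fused evaluation: a single pass applies every pending op to each element,
        # so no intermediate list is materialized between "library" calls.
        total = 0.0
        for x in self.data:
            for fn in self.ops:
                x = fn(x)
            total += x
        return total


def eager_pipeline(data: List[float]) -> float:
    doubled = [x * 2 for x in data]     # intermediate result #1 written to memory
    shifted = [x + 1 for x in doubled]  # intermediate result #2 written to memory
    return sum(shifted)


data = [1.0, 2.0, 3.0]
# Imagine the first map coming from one library and the second from another.
lazy = LazyVector(data).map(lambda x: x * 2).map(lambda x: x + 1)
print(lazy.sum(), eager_pipeline(data))  # 15.0 15.0
```

Both versions compute the same result, but the eager one writes two full intermediate lists to memory while the fused one makes a single pass. On large arrays, that avoided data movement is the kind of saving Weld targets.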

NoScope

CNNs are doing a great job of allowing accurate queries of visual data in real-time production. The problem is that processing a single video stream in real time requires a $1,000 GPU.

NoScope is a video query system that accelerates this process, with demonstrated speedups of 100x to 3,000x and an obvious reduction in resource use and cost.
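The NoScope paper describes cascading cheap filters in front of the expensive reference CNN: a difference detector that skips frames that have barely changed, and a small model specialized to one query and one video feed that answers the easy frames, with only the uncertain remainder sent to the full CNN. The sketch below illustrates that cascade under stated assumptions: frames are flat lists of floats, the "models" are toy functions, and the thresholds are hypothetical. It is not NoScope's code.

```python
from typing import Callable, List, Sequence, Tuple

Frame = Sequence[float]  # hypothetical: a frame as a flat list of pixel intensities in [0, 1]


def mean_abs_diff(a: Frame, b: Frame) -> float:
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cascade_query(frames: List[Frame],
                  cheap_model: Callable[[Frame], float],
                  reference_model: Callable[[Frame], bool],
                  diff_threshold: float = 0.05,
                  low: float = 0.2,
                  high: float = 0.8) -> Tuple[List[bool], int]:
    """Answer 'is the object present?' per frame, calling the expensive model as rarely as possible."""
    results: List[bool] = []
    reference_calls = 0
    last_frame, last_label = None, False
    for frame in frames:
        # Stage 1: difference detector. If the frame barely differs from the last
        # frame we actually evaluated, reuse that frame's answer.
        if last_frame is not None and mean_abs_diff(frame, last_frame) < diff_threshold:
            results.append(last_label)
            continue
        # Stage 2: cheap specialized model; accept its answer only when it is confident.
        p = cheap_model(frame)
        if p <= low:
            label = False
        elif p >= high:
            label = True
        else:
            # Stage 3: uncertain, so fall back to the full reference CNN.
            label = reference_model(frame)
            reference_calls += 1
        last_frame, last_label = frame, label
        results.append(label)
    return results, reference_calls


def toy_score(frame: Frame) -> float:
    # Hypothetical stand-in for a specialized model's confidence: mean brightness.
    return sum(frame) / len(frame)


frames = [[0.0, 0.0], [0.01, 0.0], [0.9, 0.9], [0.5, 0.5]]
labels, calls = cascade_query(frames, toy_score, lambda f: toy_score(f) > 0.4)
print(labels, calls)  # [False, False, True, True] 1
```

Of the four frames, one is skipped by the difference detector, two are settled by the cheap model, and only one reaches the expensive model; on real video, where most frames are easy, that ratio is where the savings come from.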

Snorkel

Snorkel was the focus of our article last week, so for detail we’ll let you look back. The goal of Snorkel is to create training data with a fully automated system, with no human-in-the-loop correction required.

Domain experts write ‘labeling functions’ that express the patterns and heuristics expected to be present in the unlabeled data. The system, characterized as weakly supervised, then produces labels with accuracy equal to human hand coding.
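To make labeling functions concrete, here is a minimal Python sketch for a hypothetical comment-spam task. Each function encodes one heuristic and either votes for a label or abstains. Snorkel itself combines the votes with a learned generative label model that estimates each function's accuracy and correlations; as a plainly simpler stand-in, this sketch uses an unweighted majority vote. The function names and label values are assumptions, not Snorkel's API.

```python
from collections import Counter
from typing import Callable, List

ABSTAIN, HAM, SPAM = -1, 0, 1  # label values chosen for this sketch


# Hypothetical labeling functions: each encodes one heuristic and returns a label,
# or ABSTAIN when the heuristic does not apply.
def lf_contains_link(text: str) -> int:
    return SPAM if "http" in text.lower() else ABSTAIN


def lf_check_out(text: str) -> int:
    return SPAM if "check out" in text.lower() else ABSTAIN


def lf_short_comment(text: str) -> int:
    return HAM if len(text.split()) < 5 else ABSTAIN


LFS: List[Callable[[str], int]] = [lf_contains_link, lf_check_out, lf_short_comment]


def majority_vote(text: str, lfs: List[Callable[[str], int]] = LFS) -> int:
    """Combine non-abstaining votes; ties and all-abstain cases stay unlabeled."""
    votes = [v for v in (lf(text) for lf in lfs) if v != ABSTAIN]
    if not votes:
        return ABSTAIN
    ranked = Counter(votes).most_common()
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return ABSTAIN
    return ranked[0][0]


for comment in ["Check out my channel http://example.com", "great song",
                "http://example.com lol", "I have played this album every day this week"]:
    print(majority_vote(comment))  # prints 1, 0, -1, -1 (one per line)
```

The noisy, overlapping, and sometimes conflicting votes are exactly what a learned label model is designed to reconcile; majority vote simply ignores how reliable each function is.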

We’ve been somewhat lulled to sleep on this by the rule of thumb that a DNN can reach acceptable performance with only about 5,000 labeled examples per category. But the same 2016 rule of thumb, from Goodfellow, Bengio, and Courville’s textbook Deep Learning, holds that to “match or exceed human performance” takes a dataset of at least 10 million labeled examples.

Results So Far

Keep in mind that the goal is to reduce the end-to-end time, cost, and complexity of producing an ML model. The DAWN team has been rigorous about benchmarking the progress of the entire DAWNBench against their baselines on different common ML problems.

As of this past April, the first anniversary of the DAWN Project, the team reported a number of impressive reductions in training time. Here’s the example for ImageNet:

Baseline without DAWNBench: 10 days, 10 hours, and 42 minutes to train, at a cost of $1,112.64 for public cloud instances.

With DAWNBench: 30 minutes with checkpointing and 24 minutes without checkpointing, using half of a Google TPUv2 Pod, representing a 477x speedup with similar accuracy.

It’s too early in the project to say whether we’ll ever see a fully integrated DAWNBench open-source platform rolled out for all of us to use. What’s much more likely is that the DAWN Project’s commercial sponsors, including Intel, Microsoft, Teradata, Google, NEC, Facebook, Ant Financial, and SAP, will take these advancements and incorporate them as they become available. We understand that this is already underway.

About the author: Bill Vorhies is Editorial Director for Data Science Central and has practiced as a data scientist since 2001. He can be reached at:

Bill@Data-Magnum.com or Bill@DataScienceCentral.com