How the incorporation of prior information can accelerate the speed at which neural networks learn while simultaneously increasing accuracy

MMS • RSS

Article originally posted on Data Science Central. Visit Data Science Central

Deep neural nets typically operate on “raw data” of some kind, such as images, text, time series, etc., without the benefit of “derived” features. The idea is that because of their flexibility, neural networks can learn the features relevant to the problem at hand, be it a classification problem or an estimation problem. Whether derived or learned, features are important. The challenge is in determining how one might use what one learned from the features in future work (staying inside the same typology, e.g. images, text, etc.).

By eliminating the need to relearn these features one can increase the performance (speed and accuracy) of a neural networks.

In this post, we describe how these ideas are used to speed up the learning process in image datasets while simultaneously improving accuracy. This work is a continuation of our collaboration with Rickard Brüel Gabrielsson

As we recall from our first and second installments we can, discover how convolutional neural nets learn and how to use that information to improve performance while allowing for the generalization of those techniques between data sets of the same type.

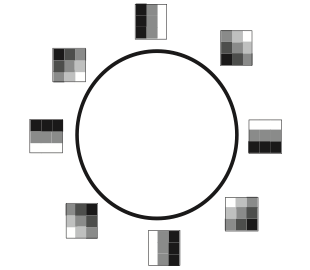

These findings drew from a previous paper where we analyzed a high density set of 3×3 image patches in a large database of images. That paper identified the patches visible in Figure 1 below and allowed them to be described algebraically. This permits the creation of features (for more detail, see the technical note at the end). What was found in the paper was that at a given density threshold (e.g. the top 10% densest patches) those patches were organized around a circle. The organization is summarized by the following image.

Figure 1: Patches of Features Organized Around a Circle

Figure 1: Patches of Features Organized Around a Circle

While the initial finding was via the study of a data set of patches, we have confirmed the finding through the study of a space of weight vectors in a neural network trained on data sets of images of hand-drawn digits. Each point on the circle is specified by an angle θ, and there is a corresponding patch Pθ.

What this allows us to do is to create a feature that is very simple to compute using trigonometric functions, that for each angle θ that measures the similarity of the given patch to Pθ. See the technical note at the end for a precise definition. By adjoining these features to two distinct data sets, and treating them as separate data channels, we have obtained improved training results for the two distinct data sets, one MNIST and the other SVHN. We’ll call this approach the boosted method. We are using a simple two layer convolutional neural net in both cases. The results are as follows.

MNIST

|

Validation Accuracy |

# Batch iterations Boosted |

# Batch iterations standard |

|

.8 |

187 |

293 |

|

.9 |

378 |

731 |

|

.95 |

1046 |

2052 |

|

.96 |

1499 |

2974 |

|

.97 |

2398 |

4528 |

|

.98 |

5516 |

8802 |

|

.985 |

9584 |

16722 |

SVHN

|

Validation Accuracy |

# Batch iterations Boosted |

# Batch iterations standard |

|

.25 |

303 |

1148 |

|

.5 |

745 |

2464 |

|

.75 |

1655 |

5866 |

|

.8 |

2534 |

8727 |

|

.83 |

4782 |

13067 |

|

.84 |

6312 |

15624 |

|

.85 |

8426 |

21009 |

The application to MNIST gives a rough speedup of a little under 2X. For the more complicated SVHN, we obtain improvement by a rough factor of 3.5X until hitting the .8 threshold, and lowering to 2.5-3X thereafter. An examination of the graphs of validation accuracy vs. number of batch iterations suggests, plotted to 30,000 iterations, that at the higher accuracy ranges, the standard method may never attain the results of the boosted method.

These findings suggest that one should always use these features for image data sets in order to speed up learning. The results in our earlier post also suggest that they contribute to improved generalization capability from one data set to another.

These results are only a beginning. There are more complex models of spaces of high density patches in natural images, which can generate richer sets of features, and which one would expect to enable improvement, particularly when used in higher layers.

In our next post, we will describe a methodology for constructing neural net architectures especially adapted to data sets of fixed structures, or adapted to individual data sets based on information concerning their sets of features. By using a set of features equipped with a geometry or distance function, we will show how that information can be used to inform the development of architectures for various applications of deep neural nets.

Technical Note

As stated above, each point on the circle is specified by an angle θ, and there is a corresponding patch Pθ, which is in turn a discretization of a real valued function ƒθ on the unit square of the form

(x,y) —–> xcos (θ ) + ysin (θ )

By forming the scalar product of a patch (which is a 9-vector of values, one for each pixel) with the 9-vector of values obtained by evaluating ƒθ on the 9 grid points, we obtain a number, which is the feature assigned to the angle θ. By selecting down to a finite discrete set of angles, equally spaced, we get a finite set of features we can work with.