MMS • Daniel Dominguez

Article originally posted on InfoQ.

Hugging Face, a leading provider of open-source machine learning tools, and AWS have partnered to broaden access to artificial intelligence (AI). Under the partnership, Hugging Face’s transformers and natural language processing (NLP) models will be made available to AWS customers, making it simpler for them to develop and deploy AI applications.

Hugging Face has become well-known in the AI community for its free, open-source transformers library, which is used by thousands of programmers all around the world to build cutting-edge AI models for a range of tasks, including sentiment analysis, language translation, and text summarization. By partnering with AWS, Hugging Face will be able to provide its tools and expertise to a broader audience.

“Generative AI has the potential to transform entire industries, but its cost and the required expertise puts the technology out of reach for all but a select few companies,” said Adam Selipsky, CEO of AWS. “Hugging Face and AWS are making it easier for customers to access popular machine learning models to create their own generative AI applications with the highest performance and lowest costs. This partnership demonstrates how generative AI companies and AWS can work together to put this innovative technology into the hands of more customers.”

AWS has also expanded its own generative AI offerings. For instance, it improved the forecasting feature of Amazon QuickSight Q so that it understands common phrases like “show me a forecast.” AWS’s Amazon CodeWhisperer, an AI programming assistant that autocompletes software code by extrapolating from a user’s initial input, competes with the Microsoft-owned GitHub Copilot, which uses models based on OpenAI’s Codex.

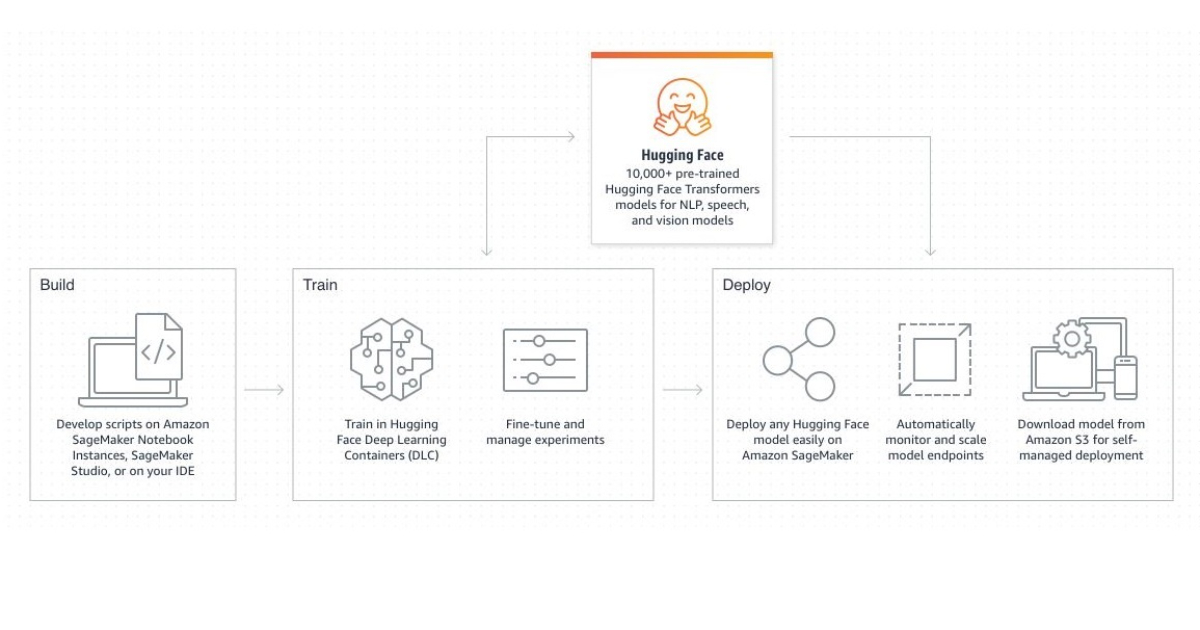

As part of the collaboration, Hugging Face’s models will be integrated with AWS services such as Amazon SageMaker, a platform for building, training, and deploying machine learning models. This will make it simpler for developers to create their own AI applications using pre-trained models from Hugging Face without needing extensive machine learning expertise.

“The future of AI is here, but it’s not evenly distributed,” said Clement Delangue, CEO of Hugging Face. “Accessibility and transparency are the keys to sharing progress and creating tools to use these new capabilities wisely and responsibly. Amazon SageMaker and AWS-designed chips will enable our team and the larger machine learning community to convert the latest research into openly reproducible models that anyone can build on.”

Hugging Face offers more than 10,000 Transformers models that you can run on Amazon SageMaker. With just a few lines of code, you can import, train, and fine-tune pre-trained NLP Transformers models such as BERT, GPT-2, RoBERTa, XLM, and DistilBERT, and then deploy them on Amazon SageMaker.
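To give a sense of what "a few lines of code" can look like, here is a minimal sketch that deploys a Hub model to a SageMaker endpoint with the SageMaker Python SDK's `HuggingFaceModel` class. It is an illustration under stated assumptions, not a snippet from the announcement: the helper names `hub_env` and `deploy_from_hub`, the instance type, and the container version strings are choices made for this example, and the version strings in particular should be checked against the combinations currently supported by the SDK.

```python
from typing import Dict


def hub_env(model_id: str, task: str) -> Dict[str, str]:
    """Build the environment variables that tell the Hugging Face
    inference container which Hub model to load and for which task."""
    return {"HF_MODEL_ID": model_id, "HF_TASK": task}


def deploy_from_hub(
    role_arn: str,
    model_id: str,
    task: str,
    instance_type: str = "ml.m5.xlarge",  # assumption: pick a type that fits the model
):
    """Deploy a Hub model to a real-time SageMaker endpoint.

    Requires the `sagemaker` package, AWS credentials, and an IAM role
    with SageMaker permissions. Calling this creates billable resources.
    """
    # Imported lazily so hub_env() can be used without the SDK installed.
    from sagemaker.huggingface import HuggingFaceModel

    model = HuggingFaceModel(
        env=hub_env(model_id, task),
        role=role_arn,
        # Assumed container versions; confirm a supported combination.
        transformers_version="4.26",
        pytorch_version="1.13",
        py_version="py39",
    )
    return model.deploy(initial_instance_count=1, instance_type=instance_type)
```

With a valid role ARN, calling `deploy_from_hub(role_arn, "distilbert-base-uncased-finetuned-sst-2-english", "text-classification")` would return a predictor, and `predictor.predict({"inputs": "I love this."})` would send a request to the endpoint. Remember to call `predictor.delete_endpoint()` afterwards to stop incurring charges.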

The partnership between Hugging Face and AWS is expected to have a significant impact on the AI market, since it will allow more companies and developers to use cutting-edge AI tools to build their own customer-facing solutions.