MMS • Daniel Dominguez

Mistral AI announced the release of Devstral, a new open-source large language model developed in collaboration with All Hands AI. Devstral is aimed at improving the automation of software engineering workflows, particularly in complex coding environments that require reasoning across multiple files and components. Unlike models optimized for isolated tasks such as code completion or function generation, Devstral is designed to tackle real-world programming problems by leveraging code agent frameworks and operating across entire repositories.

Devstral is part of a new class of agentic language models, which are designed not just to generate code, but to take contextual actions based on specific tasks. This agentic structure allows the model to perform iterative modifications across multiple files, conduct explorations of the codebase, and propose bug fixes or new features with minimal human intervention. These capabilities are aligned with the demands of modern software engineering, where understanding project structure and dependencies is as important as writing syntactically correct code.

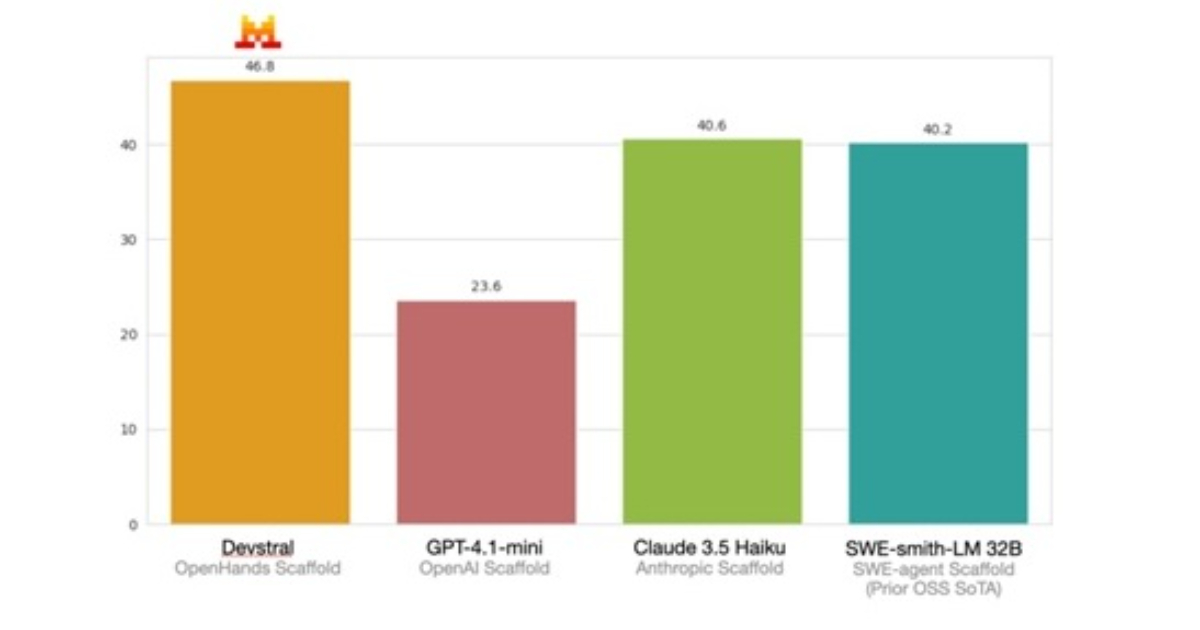

According to Mistral’s internal evaluations, Devstral scores 46.8% on SWE-Bench Verified, a benchmark composed of 500 manually screened GitHub issues. This places it ahead of previously published open-source models by more than six percentage points. The benchmark tests not only whether a model can generate valid code, but whether that code actually resolves a documented issue in a real project. When evaluated under the same OpenHands framework, Devstral outperforms significantly larger models such as DeepSeek-V3-0324, which has 671 billion parameters, and Qwen3 235B-A22B, highlighting the model’s efficiency.

Devstral was fine-tuned from the Mistral Small 3.1 base model. Before training, the vision encoder was removed, resulting in a text-only model optimized for code understanding and generation. It supports a context window of up to 128,000 tokens, allowing it to ingest large codebases or extended conversations in a single pass. With 24 billion parameters, Devstral is also relatively lightweight and accessible for developers and researchers. The model can run locally on a consumer-grade GPU such as the NVIDIA RTX 4090, or on Apple Silicon devices with 32GB of RAM. This lowers the barrier to entry for teams or individuals working in constrained environments or handling sensitive codebases.
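To put the hardware claim in perspective, the following back-of-the-envelope sketch estimates the memory needed just to hold 24 billion weights at a few numeric precisions. The precisions listed and the helper name `weight_memory_gb` are illustrative assumptions. The article does not say which precision local deployments use, and the estimate ignores the KV cache, activations, and runtime overhead.

```python
# Rough estimate of memory needed to store model weights only.
# Assumption: the listed precisions are illustrative; the article does not
# state which one is used for local deployment.

PARAMETERS = 24e9  # 24 billion parameters, as reported in the article


def weight_memory_gb(parameters: float, bits_per_weight: int) -> float:
    """Return the weight storage size in decimal gigabytes (1 GB = 1e9 bytes)."""
    bytes_total = parameters * bits_per_weight / 8
    return bytes_total / 1e9


if __name__ == "__main__":
    for bits in (16, 8, 4):
        size = weight_memory_gb(PARAMETERS, bits)
        print(f"{bits:>2}-bit weights: about {size:.0f} GB")
```

Under these assumptions, 16-bit weights alone come to about 48 GB, while 4-bit weights come to about 12 GB. This suggests that running on a 24 GB card such as the RTX 4090 likely relies on a reduced-precision (quantized) build, though the exact format is not specified here.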

Mistral has made Devstral available under the permissive Apache 2.0 license, which allows both commercial and non-commercial use, as well as modifications and redistribution. The model can be downloaded through various platforms including Hugging Face, LM Studio, Ollama, and Kaggle. It is also accessible via Mistral’s own API under the identifier devstral-small-2505.
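For readers who want to try the hosted model, here is a minimal sketch of a chat completion request using only the Python standard library. It assumes Mistral's chat completions endpoint and an API key stored in a `MISTRAL_API_KEY` environment variable. The helper names `build_request` and `ask_devstral` are hypothetical, and the response shape should be checked against Mistral's API documentation before relying on it.

```python
import json
import os
import urllib.request

# Assumption: Mistral's chat completions endpoint; verify against the API docs.
API_URL = "https://api.mistral.ai/v1/chat/completions"
MODEL_ID = "devstral-small-2505"  # identifier given in the article


def build_request(prompt: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for Devstral."""
    payload = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask_devstral(prompt: str, api_key: str) -> str:
    """Send the request and return the text of the first returned choice."""
    with urllib.request.urlopen(build_request(prompt, api_key)) as resp:
        body = json.load(resp)
    # Assumed response shape: choices[0].message.content
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    key = os.environ.get("MISTRAL_API_KEY")  # assumed variable name
    if key:
        print(ask_devstral("Explain what a race condition is in two sentences.", key))
```

A single prompt like this only exercises the model as a plain chat endpoint. The agentic, multi-file behavior described above depends on running it inside a code agent framework such as OpenHands.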

Community feedback reflects a mix of excitement and critical evaluation. Product builder Nayak Satya commented:

Another promising enhancement from Mistral. This company is silently building some great additions for AI space. Europe is not far behind in AI when Mistral stands tall. Meantime can it be added inside VS studio or any modern IDE’S folks?

On Reddit’s r/LocalLLaMA, users praised Devstral’s performance. User Coding9 posted:

It works in Cline with a simple task. I can’t believe it. Was never able to get another local one to work. I will try some more tasks that are more difficult soon!

Although Devstral is released as a research preview, its deployment marks a step forward in the practical application of LLMs to real-world software engineering. Mistral has indicated that a larger version of the model is already in development, with more advanced capabilities expected in upcoming releases. The company is inviting feedback from the developer community to further refine the model and its integration into software tooling ecosystems.