Article originally posted on Data Science Central. Visit Data Science Central

Summary: How about a deep learning technique based on decision trees that outperforms CNNs and RNNs, runs on your ordinary desktop, and trains with relatively small datasets? This could be a major disruptor for AI.

Suppose I told you that there is an algorithm that regularly beats the performance of CNNs and RNNs at image and text classification.

- That requires only a fraction of the training data.

- That you can run on your desktop CPU device without need for GPUs.

- That trains just as rapidly, and in many cases even more rapidly, and lends itself to distributed processing.

- That has far fewer hyperparameters and performs well on the default settings.

- And relies on easily understood random forests instead of completely opaque deep neural nets.

Well, there is one, just announced by researchers Zhi-Hua Zhou and Ji Feng of the National Key Lab for Novel Software Technology, Nanjing University, Nanjing, China. It’s called gcForest.

State of the Art

This is the first installment in a series of “Off the Beaten Path” articles that will focus on methods that advance data science but lie outside the mainstream of development.

The mainstream in AI for image and text classification is clearly deep neural nets (DNNs), specifically Convolutional Neural Nets (CNNs), Recurrent Neural Nets (RNNs), and Long Short-Term Memory nets (LSTMs). The reason is simple. These were the first techniques shown to work effectively on these featureless problems and we were completely delighted to have tools that work.

Thousands of data scientists are at this moment reskilling to be able to operate these DNN variants. The cloud giants are piling in because of the extreme compute capacity required to make them work, which will rely on their mega-clouds of high-performance specialty GPU chips. And many hundreds if not thousands of AI startups, not to mention established companies, are racing to commercialize these new capabilities in AI. Why these? Because they work.

Importantly, not only have they been proven to work in a research environment but they are sufficiently stable to be ready for commercialization.

The downsides, however, are well known. They rely on extremely large datasets of labeled data that for many problems may never be physically or economically available. They require very long training times on expensive GPU machines. The long training time is exacerbated because there are also a very large number of hyperparameters which are not altogether well understood and require multiple attempts to get right. It is still true that some of these models fail to train at all, losing all the value of the time and money invested.

Combine this with the estimate that there are perhaps only 22,000 individuals worldwide who are skilled in this area, most employed by the tech giants. The result is that many potentially valuable AI problems simply are not being addressed.

Off the Beaten Path

To some extent we’ve been mesmerized by DNNs and their success. Most planning and development capital is pouring into AI solutions based on where DNNs are today or where they will be incrementally improved in a year or two.

But just as there were no successful or practical DNNs just 5 to 7 years ago, there is nothing to say that the way forward is dependent on current technology. The history of data science is rife with disruptive methods and technologies. There is no reason to believe that those won’t continue to occur. gcForest may be just such a disruptor.

gcForest in Concept

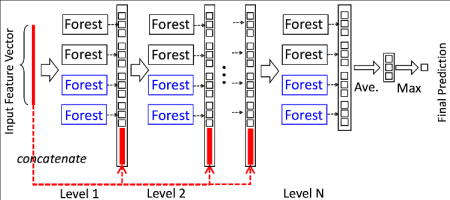

gcForest (multi-Grained Cascade Forest) is a decision tree ensemble approach in which the cascade structure of deep nets is retained but the opaque edges and node neurons are replaced by groups of random forests paired with completely-random tree forests, typically two of each for a total of four forests in each cascade layer.

Image and text problems are categorized as ‘feature learning’ or ‘representation learning’ problems where features are neither predefined nor engineered as in traditional ML problems. The basic rule in these feature discovery problems is to go deep, using multiple layers each of which learns relevant features of the data in order to classify them. Hence the multi-layer structure so familiar from DNNs is retained.

All the images and references in this article are from the original research report which can be found here.

Figure 1: Illustration of the cascade forest structure. Suppose each level of the cascade consists of two random forests (black) and two completely-random tree forests (blue). Suppose there are three classes to predict; thus, each forest will output a three-dimensional class vector, which is then concatenated for re-representation of the original input.

By using both random forests and completely-random tree forests the authors gain the advantage of diversity. Each completely-random tree forest contains 500 completely random trees, each allowed to split until every leaf node contains instances of a single class. This makes the growth self-limiting and adaptive, unlike the fixed and large depth required by DNNs.
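As a rough illustration of a single cascade level, the sketch below trains two random forests and two stand-ins for completely-random tree forests, then stacks their class vectors. It is not the authors' code. Several things here are assumptions made for illustration: scikit-learn is used, `ExtraTreesClassifier` with `max_features=1` approximates a completely-random tree forest, the data is synthetic, and the forests use 20 trees rather than 500 to keep the run short. The class vectors are also computed on the training data itself, which is a simplification.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import ExtraTreesClassifier, RandomForestClassifier

# Synthetic 3-class data stands in for a real dataset (assumption for illustration).
X, y = make_classification(n_samples=300, n_features=20, n_informative=6,
                           n_classes=3, random_state=0)


def make_level(n_trees=20, seed=0):
    """Build two random forests and two approximate completely-random tree forests."""
    forests = []
    for i in range(2):
        forests.append(
            RandomForestClassifier(n_estimators=n_trees, random_state=seed + i))
        # max_features=1 means each split considers one randomly chosen feature
        # with a random threshold, approximating a completely-random tree.
        forests.append(
            ExtraTreesClassifier(n_estimators=n_trees, max_features=1,
                                 random_state=seed + 10 + i))
    return forests


level = make_level()

# Each forest outputs a 3-dimensional class vector (estimated class
# distribution) per instance; trees grow until leaves are pure by default.
class_vectors = [forest.fit(X, y).predict_proba(X) for forest in level]

# Four forests times three classes gives a 12-dimensional level output.
level_output = np.hstack(class_vectors)
print(level_output.shape)  # (300, 12)
```

With four forests and three classes, every instance ends up with a 12-dimensional re-representation, matching the structure described in Figure 1.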

The estimated class distribution forms a class vector which is then concatenated with the original feature vector to be the input of the next level of the cascade. This is not dissimilar from CNNs.

The final model is a cascade of cascade forests. The final prediction is obtained by aggregating the class vectors and selecting the class with the highest aggregated score.
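The data flow between levels and the final decision can be sketched in a few lines of plain Python. The class vectors and the 10-dimensional feature vector below are made-up values for a single instance, and averaging is assumed as the aggregation step; the helper names `augment` and `predict` are hypothetical.

```python
# Hypothetical class vectors from the four forests of one cascade level,
# for one instance and three classes (numbers are made up for illustration).
class_vectors = [
    [0.2, 0.7, 0.1],
    [0.3, 0.5, 0.2],
    [0.1, 0.8, 0.1],
    [0.4, 0.4, 0.2],
]


def augment(features, vectors):
    """Concatenate the original features with every forest's class vector."""
    augmented = list(features)
    for vec in vectors:
        augmented.extend(vec)
    return augmented


def predict(vectors):
    """Average the class vectors; return (winning class index, averaged scores)."""
    n = len(vectors)
    scores = [sum(column) / n for column in zip(*vectors)]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best, scores


original = [0.5] * 10  # made-up 10-dim original feature vector

# Input to the next level: 10 original features + 4 forests * 3 classes.
next_input = augment(original, class_vectors)
print(len(next_input))  # 22

# At the last level, the averaged scores decide the predicted class.
label, scores = predict(class_vectors)
print(label)  # 1
```

Class 1 wins here because its averaged score (0.6) is the largest of the three.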

The Multi-Grained Feature

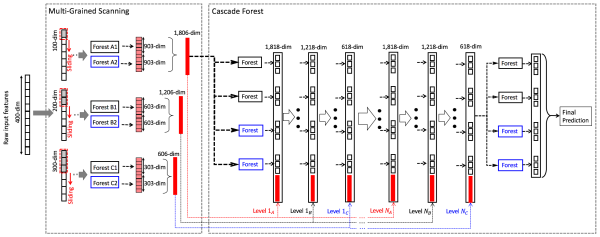

The multi-grained feature refers to the use of a sliding window to scan raw features. The number and sizes of the sliding windows (the grain) become a hyperparameter that can improve performance.

Figure 4: The overall procedure of gcForest. Suppose there are three classes to predict, raw features are 400-dim, and three sizes of sliding windows are used.

Replaces both CNNs and RNNs

As a bonus gcForest works well with either sequence data or image-style data.

Figure 3: Illustration of feature re-representation using sliding window scanning. Suppose there are three classes, raw features are 400-dim, and sliding window is 100-dim.
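The sliding-window scan in Figure 3 can be sketched as follows for sequence-style data. The stride of 1 and the choice of two forests scoring each window are assumptions for illustration, and `sliding_windows` is a hypothetical helper, not part of any released gcForest code.

```python
def sliding_windows(features, window, stride=1):
    """Return every contiguous slice of length `window`, stepping by `stride`."""
    last_start = len(features) - window
    return [features[i:i + window] for i in range(0, last_start + 1, stride)]


raw = list(range(400))            # placeholder for a 400-dim raw feature vector
windows = sliding_windows(raw, 100)
print(len(windows))               # 301 windows, each 100-dim

n_classes = 3
n_forests = 2                     # assumption: two forests score every window

# Each forest emits one class vector per window; concatenating them all
# gives the re-represented feature vector for this grain.
transformed_dim = len(windows) * n_classes * n_forests
print(transformed_dim)            # 1806
```

Repeating the scan with other window sizes and concatenating the results is what makes the approach multi-grained.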

Reported Accuracy

To achieve accurate comparisons the authors held many variables constant between the two approaches, so performance might have been improved with more tuning. Here are just a few of the reported performance results on several different standard reference sets.

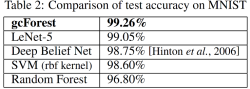

Image Categorization: The MNIST dataset [LeCun et al., 1998] contains 60,000 images of size 28 by 28 for training (and validating), and 10,000 images for testing.

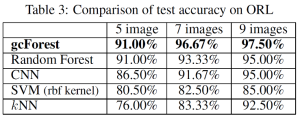

Face Recognition: The ORL dataset [Samaria and Harter, 1994] contains 400 gray-scale facial images taken from 40 persons. We compare it with a CNN consisting of 2 conv-layers with 32 feature maps of 3 × 3 kernel, and each conv-layer has a 2 × 2 maxpooling layer followed. We randomly choose 5/7/9 images per person for training, and report the test performance on the remaining images.

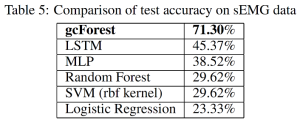

Hand Movement Recognition: The sEMG dataset [Sapsanis et al., 2013] consists of 1,800 records each belonging to one of six hand movements, i.e., spherical, tip, palmar, lateral, cylindrical and hook. This is a time-series dataset, where EMG sensors capture 500 features per second and each record is associated with 3,000 features. In addition to an MLP with input-1,024-512-output structure, we also evaluate a recurrent neural network, LSTM [Gers et al., 2001] with 128 hidden units and sequence length of 6 (500-dim input vector per second).

The authors show a variety of other test results.

Other Benefits

The run times on standard CPU machines are quite acceptable especially as compared to comparable times on GPU machines. In one of the above tests the gcForest ran 40 minutes compared to the MLP version which required 78 minutes.

Perhaps the biggest unseen advantage is that the number of hyperparameters is far smaller than for a DNN. In fact, DNN research is hampered because comparing two separate runs with different hyperparameters so fundamentally changes the learning process that researchers are unable to compare them accurately. In this case the default hyperparameters on gcForest run acceptably across a wide range of data types, though tuning in these cases will significantly improve performance.

The code is not fully open source but can be requested from the authors. The link for that request appears in the original study.

We have all been waiting for DNNs to be sufficiently simplified that the average data scientist can have them on call in the tool chest. The cloud giants and many of the platform developers are working on this but a truly simplified version still seems very far away.

It may be that gcForest, though still in research, is the first step toward a DNN replacement that is fast, powerful, runs on your ordinary machine, trains with little data, and is easy for anyone with a decent understanding of decision trees to operate.

P.S. Be sure to note where this research was done, China.

Other articles in this series:

Off the Beaten Path – HTM-based Strong AI Beats RNNs and CNNs at Prediction and Anomaly Detection

About the author: Bill Vorhies is Editorial Director for Data Science Central and has practiced as a data scientist since 2001. He can be reached at: