Article originally posted on Data Science Central.

This morning I read an article about a top Cornell food researcher who has had 13 studies retracted (see here). It prompted me to write this blog post, which is about data science charlatans and unethical researchers in academia who, once again, destroy the value of p-values by using a well-known trick called p-hacking to get published in top journals and obtain grant money or tenure. The issue is widespread, not just in academic circles, and it makes people question the validity of scientific methods. It also fuels the fake “theories” of those who have lost faith in science.

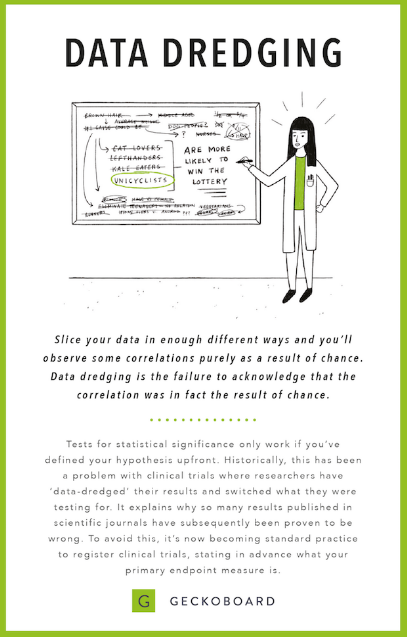

The trick consists of repeating an experiment enough times until the conclusions fit your agenda, cherry-picking the data you use, or even discarding observations deemed to hurt the conclusions. Sometimes causation and correlation are deliberately confused, or misleading charts are displayed. Sometimes the author simply lacks statistical acumen.
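Repeating an experiment until it “works” is more effective than it sounds. The minimal Python sketch below assumes each attempt is an independent experiment in which nothing real is going on (the null hypothesis is true), so each one has probability alpha of producing p < alpha by chance alone; the function name and the 0.05 default are illustrative choices, not taken from any study.

```python
def prob_spurious_success(num_tries: int, alpha: float = 0.05) -> float:
    """Probability that at least one of `num_tries` independent experiments,
    all run on a true null hypothesis, reaches p < alpha purely by chance."""
    if num_tries < 0 or not 0.0 <= alpha <= 1.0:
        raise ValueError("num_tries must be >= 0 and alpha must lie in [0, 1]")
    # Each try "finds nothing" with probability 1 - alpha; all tries must fail
    # for the researcher to come away empty-handed.
    return 1.0 - (1.0 - alpha) ** num_tries


if __name__ == "__main__":
    for k in (1, 5, 20, 50):
        print(f"{k:>3} tries -> {prob_spurious_success(k):.3f}")
```

Under these assumptions, 20 attempts give roughly a 64% chance of at least one “significant” result, even though there is no effect to find.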

Usually, these experiments are not reproducible. Even top journals sometimes accept these articles, due to

- Poor peer-review process

- Incentives to publish sensational material

By contrast, research that is truly aimed at finding the truth sometimes uses neither p-values nor classical hypothesis tests. For instance, my recent article, which tests whether two types of distributions are identical, does not rely on these techniques. Also, the theoretical answer there is known, so showing results that merely fit my gut feelings or intuition would amount to lying to myself. In some of my tests, I clearly state that my sample size is too small to draw a conclusion. The presentation style is simple, so that non-experts can understand it. Finally, I share my data and all the computations. You can read that article here. I hope it will inspire those interested in doing sound analyses.

Below are some extracts from the article I read this morning:

P-values of .05 aren’t that hard to find if you sort the data differently or perform a huge number of analyses. In flipping coins, you’d think it would be rare to get 10 heads in a row. You might start to suspect the coin is weighted to favor heads and that the result is statistically significant. But what if you just got 10 heads in a row by chance (it can happen) and then suddenly decided you were done flipping coins? If you kept going, you’d stop believing the coin is weighted.
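How rare is a streak of 10 heads? It depends on how many tosses you look at. This Python sketch, assuming a coin with a fixed probability of heads and independent tosses, computes the probability that a sequence contains at least one run of a given length, using dynamic programming over the length of the current streak; the function name and parameters are illustrative.

```python
def prob_head_run(n_flips: int, run_length: int, p_heads: float = 0.5) -> float:
    """Probability that n_flips independent tosses contain at least one run of
    `run_length` consecutive heads (dynamic programming over the current streak)."""
    if run_length < 1:
        raise ValueError("run_length must be at least 1")
    # streak[j] = P(no qualifying run yet AND the current streak of heads is j)
    streak = [0.0] * run_length
    streak[0] = 1.0
    hit = 0.0  # probability mass that has already produced a qualifying run
    for _ in range(n_flips):
        nxt = [0.0] * run_length
        for j, prob in enumerate(streak):
            nxt[0] += prob * (1.0 - p_heads)   # tails resets the streak
            if j + 1 == run_length:
                hit += prob * p_heads          # heads completes the run
            else:
                nxt[j + 1] += prob * p_heads   # heads extends the streak
        streak = nxt
    return hit


if __name__ == "__main__":
    print(prob_head_run(10, 10))    # 0.0009765625 (= 1/1024)
    print(prob_head_run(1000, 10))  # a run of 10 heads somewhere in 1,000 tosses
```

Ten heads in ten tosses is indeed rare (1 in 1,024), but a run of 10 heads somewhere in 1,000 tosses of a fair coin happens more than a third of the time. Stopping right after such a streak is exactly what turns chance into a “finding”.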

Stopping an experiment when a p-value of .05 is achieved is an example of p-hacking. But there are other ways to do it — like collecting data on a large number of outcomes but only reporting the outcomes that achieve statistical significance. By running many analyses, you’re bound to find something significant just by chance alone.
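The effect of stopping as soon as p < .05 can be checked with a short simulation. This Python sketch assumes a fair coin (so every “significant” result is a false positive), an exact two-sided binomial test, and a researcher who checks the p-value after every toss from toss 10 to toss 100; those parameters, the seed, and the function names are illustrative assumptions, not taken from the article.

```python
import math
import random


def two_sided_binom_p(heads: int, n: int) -> float:
    """Exact two-sided binomial test p-value for H0: P(heads) = 0.5.
    With p = 0.5 the distribution is symmetric, so doubling one tail is exact."""
    extreme = max(heads, n - heads)
    tail = sum(math.comb(n, i) for i in range(extreme, n + 1)) / 2 ** n
    return min(1.0, 2.0 * tail)


def false_positive_rate(peek: bool, n_sims: int = 2000, max_flips: int = 100,
                        min_flips: int = 10, alpha: float = 0.05,
                        seed: int = 0) -> float:
    """Fraction of simulated fair-coin studies that end up 'significant'.

    peek=True : test after every toss from min_flips on, stop at the first p < alpha.
    peek=False: test once, after exactly max_flips tosses (fixed design).
    """
    rng = random.Random(seed)
    # Precompute p-values for every (n, heads) pair that might be tested.
    pvals = [[two_sided_binom_p(k, n) for k in range(n + 1)]
             for n in range(max_flips + 1)]
    significant = 0
    for _ in range(n_sims):
        heads = 0
        for n in range(1, max_flips + 1):
            heads += int(rng.random() < 0.5)  # the coin really is fair
            look_now = (peek and n >= min_flips) or n == max_flips
            if look_now and pvals[n][heads] < alpha:
                significant += 1
                break
    return significant / n_sims


if __name__ == "__main__":
    print("fixed sample size :", false_positive_rate(peek=False))
    print("stop when p < .05 :", false_positive_rate(peek=True))
```

With a fixed sample of 100 tosses, the false-positive rate stays below 5% (the exact test is slightly conservative). Peeking after every toss and stopping at the first p < .05 pushes it far higher, even though the coin is fair.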

There’s a movement of scientists who seek to rectify practices in science like the ones that Wansink is accused of. Together, they basically call for three main fixes that are gaining momentum.

- Preregistration of study designs: This is a huge safeguard against p-hacking. Preregistration means that scientists publicly commit to an experiment’s design before they start collecting data. This makes it much harder to cherry-pick results.

- Open data sharing: Increasingly, scientists are calling on their colleagues to make all the data from their experiments available for anyone to scrutinize (there are exceptions, of course, for particularly sensitive information). This ensures that shoddy research that makes it through peer review can still be double-checked.

- Registered replication reports: Scientists are hungry to see whether previously reported findings in the academic literature hold up under more intense scrutiny. There are many efforts underway to replicate (exactly or conceptually) research findings with rigor.

There are other potential fixes too: There’s a group of scientists calling for a stricter definition of statistically significant. Others argue that arbitrary cutoffs for significance are always going to be gamed. And increasingly, scientists are turning to other forms of mathematical analysis, such as Bayesian statistics, which asks a slightly different question of data. (While p-values ask, “How rare are these numbers?” a Bayesian approach asks, “What’s the probability my hypothesis is the best explanation for the results we’ve found?”)
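To make the contrast concrete, here is a minimal Python sketch of one common Bayesian alternative: a Bayes factor for the coin example. It assumes two competing hypotheses, a fair coin (H0: P(heads) = 0.5) and a biased coin whose bias is uniformly distributed between 0 and 1 (H1). The uniform prior and equal prior odds are illustrative assumptions; other priors give different numbers, and this is only one of several Bayesian approaches.

```python
import math


def log_beta(a: float, b: float) -> float:
    """Natural log of the Beta function B(a, b)."""
    return math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)


def bayes_factor_biased_vs_fair(heads: int, n: int) -> float:
    """BF10 = P(data | H1) / P(data | H0) for `heads` heads in `n` tosses.

    H0: P(heads) = 0.5 exactly.
    H1: P(heads) ~ Uniform(0, 1), so P(data | H1) = B(heads + 1, n - heads + 1).
    The binomial coefficient appears in both likelihoods and cancels.
    """
    log_m1 = log_beta(heads + 1, n - heads + 1)
    log_m0 = n * math.log(0.5)
    return math.exp(log_m1 - log_m0)


def posterior_prob_biased(heads: int, n: int, prior_biased: float = 0.5) -> float:
    """P(H1 | data), assuming prior probability `prior_biased` for H1."""
    bf = bayes_factor_biased_vs_fair(heads, n)
    post_odds = bf * prior_biased / (1.0 - prior_biased)
    return post_odds / (1.0 + post_odds)


if __name__ == "__main__":
    print(bayes_factor_biased_vs_fair(10, 10))   # 1024 / 11, about 93
    print(bayes_factor_biased_vs_fair(50, 100))  # below 1: favors the fair coin
    print(posterior_prob_biased(10, 10))
```

Instead of asking how rare the data are, the Bayes factor asks which hypothesis explains them better. Under these assumptions, ten heads in ten tosses favor the biased coin by roughly 93 to 1, but a longer record with about half heads swings the evidence back toward a fair coin.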

Related articles

For related articles from the same author, click here or visit www.VincentGranville.com. Follow me on LinkedIn, or visit my old web page here.
