Article originally posted on Data Science Central. Visit Data Science Central

Summary: We’re stuck. There hasn’t been a major breakthrough in algorithms in the last year. Here’s a survey of the leading contenders for that next major advancement.

We’re stuck. Or at least we’ve plateaued. Can anyone remember the last time a year went by without a notable advance in algorithms, chips, or data handling? It was so unusual to go to the Strata San Jose conference a few weeks ago and see no new eye-catching developments.

As I reported earlier, it seems we’ve hit maturity and now our major efforts are aimed at either making sure all our powerful new techniques work well together (converged platforms) or making a buck from those massive VC investments in same.

I’m not the only one who noticed. Several attendees and exhibitors said very similar things to me. And just the other day I had a note from a team of well-regarded researchers who had been evaluating the relative merits of different advanced analytic platforms and had concluded there weren’t any differences worth reporting.

Why and Where are We Stuck?

Where we are right now is actually not such a bad place. Our advances over the last two or three years have all been in the realm of deep learning and reinforcement learning. Deep learning has brought us terrific capabilities in processing speech, text, image, and video. Add reinforcement learning and we get big advances in game play, autonomous vehicles, robotics and the like.

We’re in the earliest stages of a commercial explosion based on these advances: huge savings from customer interactions through chatbots; new personal convenience apps like personal assistants and Alexa; and level 2 automation in our personal cars like adaptive cruise control, accident avoidance braking, and lane maintenance.

TensorFlow, Keras, and the other deep learning platforms are more accessible than ever, and thanks to GPUs, more efficient than ever.

However, the known list of deficiencies hasn’t moved at all.

- The need for too much labeled training data.

- Models that take either too long or too many expensive resources to train and that still may fail to train at all.

- Hyperparameters, especially around nodes and layers, that are still mysterious. Automation or even well-accepted rules of thumb are still out of reach.

- Transfer learning that means only going from the complex to the simple, not from one logical system to another.

I’m sure we could make a longer list. It’s in solving these major shortcomings where we’ve become stuck.

What’s Stopping Us

In the case of deep neural nets the conventional wisdom right now is that if we just keep pushing, just keep investing, then these shortfalls will be overcome. For example, from the 80’s through the 00’s we knew how to make DNNs work, we just didn’t have the hardware. Once that caught up then DNNs combined with the new open source ethos broke open this new field.

All types of research have their own momentum. Especially once you’ve invested huge amounts of time and money in a particular direction you keep heading in that direction. If you’ve invested years in developing expertise in these skills you’re not inclined to jump ship.

Change Direction Even If You’re Not Entirely Sure What Direction that Should Be

Sometimes we need to change direction, even if we don’t know exactly what that new direction might be. Recently leading Canadian and US AI researchers did just that. They decided they were misdirected and needed to essentially start over.

This insight was verbalized last fall by Geoffrey Hinton, who gets much of the credit for starting the DNN thrust in the late 80s. Hinton, who is now a professor emeritus at the University of Toronto and a Google researcher, said he is now “deeply suspicious” of back propagation, the core method that underlies DNNs. Observing that the human brain doesn’t need all that labeled data to reach a conclusion, Hinton says “My view is throw it all away and start again”.

So with this in mind, here’s a short survey of new directions that fall somewhere between solid probabilities and moon shots, but are not incremental improvements to deep neural nets as we know them.

These descriptions are intentionally short and will undoubtedly lead you to further reading to fully understand them.

Things that Look Like DNNs but are Not

There is a line of research closely hewing to Hinton’s shot at back propagation that believes that the fundamental structure of nodes and layers is useful but the methods of connection and calculation need to be dramatically revised.

Capsule Networks (CapsNet)

It’s only fitting that we start with Hinton’s own current new direction in research, CapsNet. This relates to image classification with CNNs and the problem, simply stated, is that CNNs are insensitive to the pose of the object. That is, if the same object is to be recognized with differences in position, size, orientation, deformation, velocity, albedo, hue, texture etc. then training data must be added for each of these cases.

In CNNs this is handled with massive increases in training data and/or increases in max pooling layers that can generalize, but only by losing actual information.

The following description comes from one of many good technical descriptions of CapsNets, this one from Hackernoon.

Capsule is a nested set of neural layers. So in a regular neural network you keep on adding more layers. In CapsNet you would add more layers inside a single layer. Or in other words nest a neural layer inside another. The state of the neurons inside a capsule capture the above properties of one entity inside an image. A capsule outputs a vector to represent the existence of the entity. The orientation of the vector represents the properties of the entity. The vector is sent to all possible parents in the neural network. Prediction vector is calculated based on multiplying its own weight and a weight matrix. Whichever parent has the largest scalar prediction vector product, increases the capsule bond. Rest of the parents decrease their bond. This routing by agreement method is superior to the current mechanism like max-pooling.

CapsNet dramatically reduces the required training set and shows superior performance in image classification in early tests.
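
To make the routing-by-agreement idea a little more concrete, here is a minimal sketch in plain Python. It assumes the prediction vectors from each child capsule to each parent have already been computed (in a real CapsNet they come from learned weight matrices), and the toy numbers, function names, and iteration count are mine, not taken from Hinton's code. Treat it as an illustration of the mechanism rather than a working CapsNet.

```python
import math

def squash(s):
    """Shrink vector s to a length in [0, 1) while keeping its direction."""
    sq = sum(x * x for x in s)
    if sq == 0.0:
        return [0.0] * len(s)
    scale = sq / (1.0 + sq) / math.sqrt(sq)
    return [scale * x for x in s]

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def route(u_hat, iterations=3):
    """u_hat[i][j] is child capsule i's prediction vector for parent j."""
    n_child, n_parent, dim = len(u_hat), len(u_hat[0]), len(u_hat[0][0])
    logits = [[0.0] * n_parent for _ in range(n_child)]
    for _ in range(iterations):
        # Each child splits its vote across parents according to its logits.
        coupling = [softmax(row) for row in logits]
        outputs = []
        for j in range(n_parent):
            s = [sum(coupling[i][j] * u_hat[i][j][d] for i in range(n_child))
                 for d in range(dim)]
            outputs.append(squash(s))
        # Agreement step: a child's bond to a parent grows when its
        # prediction points the same way as that parent's output.
        for i in range(n_child):
            for j in range(n_parent):
                logits[i][j] += sum(a * b for a, b in zip(u_hat[i][j], outputs[j]))
    # coupling holds the weights used to build outputs in the last iteration.
    return outputs, coupling

# Hypothetical toy input: three children agree about parent 0, disagree about parent 1.
u_hat = [
    [[1.0, 0.0], [1.0, 0.0]],
    [[1.0, 0.0], [-1.0, 0.0]],
    [[1.0, 0.0], [0.0, 1.0]],
]
outputs, coupling = route(u_hat)
```

In this toy case every child ends up routing most of its output to parent 0, the parent its siblings agree on, and parent 0's output vector is the longer of the two.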

gcForest

In February we featured research by Zhi-Hua Zhou and Ji Feng of the National Key Lab for Novel Software Technology, Nanjing University, displaying a technique they call gcForest. Their research paper shows that gcForest regularly beats CNNs and RNNs at both text and image classification. The benefits are quite significant.

- Requires only a fraction of the training data.

- Runs on your desktop CPU device without need for GPUs.

- Trains just as rapidly and in many cases even more rapidly and lends itself to distributed processing.

- Has far fewer hyperparameters and performs well on the default settings.

- Relies on easily understood random forests instead of completely opaque deep neural nets.

In brief, gcForest (multi-Grained Cascade Forest) is a decision tree ensemble approach in which the cascade structure of deep nets is retained but where the opaque edges and node neurons are replaced by groups of random forests paired with completely-random tree forests. Read more about gcForest in our original article.

Pyro and Edward

Pyro and Edward are two new programming languages that merge deep learning frameworks with probabilistic programming. Pyro is the work of Uber and Google, while Edward comes out of Columbia University with funding from DARPA. In each case the result is a framework that allows deep learning systems to measure their confidence in a prediction or decision.

In classic predictive analytics we might approach this by using log loss as the fitness function, penalizing confident but wrong predictions. So far there’s been no counterpart for deep learning.
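
For readers who haven't worked with log loss, a short sketch shows why it punishes misplaced confidence. This is the standard binary log loss written out by hand; the function name and the example probabilities are just illustrative choices.

```python
import math

def log_loss(y_true, p_pred, eps=1e-15):
    """Mean binary log loss; p_pred[i] is the predicted probability of class 1."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1.0 - p))
    return total / len(y_true)

# The true class is 1 in both cases, and both predictions are on the wrong side of 0.5.
hedged_wrong = log_loss([1], [0.4])      # wrong, but unsure
confident_wrong = log_loss([1], [0.01])  # wrong and very sure
```

The confident miss costs several times more than the hedged one, which is exactly the behavior you want when a model's certainty matters as much as its answer.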

Where this promises to be of use, for example, is in self-driving cars or aircraft, allowing the control system to have some sense of confidence or doubt before making a critical or potentially catastrophic decision. That’s certainly something you’d like your autonomous Uber to know before you get on board.

Both Pyro and Edward are in the early stages of development.

Approaches that Don’t Look Like Deep Nets

I regularly run across small companies who have very unusual algorithms at the core of their platforms. In most of the cases that I’ve pursued they’ve been unwilling to provide sufficient detail to allow me to even describe for you what’s going on in there. This secrecy doesn’t invalidate their utility but until they provide some benchmarking and some detail, I can’t really tell you what’s going on inside. Think of these as our bench for the future when they do finally lift the veil.

For now, the most advanced non-DNN algorithm and platform I’ve investigated is this:

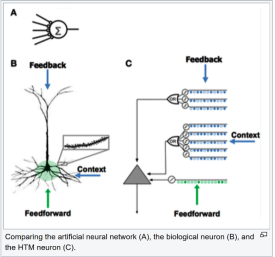

Hierarchical Temporal Memory (HTM)

Hierarchical Temporal Memory (HTM) uses Sparse Distributed Representation (SDR) to model the neurons in the brain and to perform calculations that outperform CNNs and RNNs at scalar predictions (future values of things like commodity, energy, or stock prices) and at anomaly detection.
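
A quick sketch may help with what a Sparse Distributed Representation is. Here an SDR is modeled as the set of active bit positions in a long, mostly-zero binary vector, and similarity is just the count of shared active bits. The vector size, number of active bits, and helper names are my assumptions for illustration; this is not Numenta's implementation.

```python
import random

def random_sdr(size=2048, active=40, rng=None):
    """Return an SDR as the set of active bit indices (about 2% of bits on)."""
    rng = rng or random.Random()
    return frozenset(rng.sample(range(size), active))

def overlap(a, b):
    """Similarity between two SDRs: how many active bits they share."""
    return len(a & b)

def add_noise(sdr, size, flips, rng):
    """Move `flips` active bits to random positions that were inactive."""
    kept = set(rng.sample(sorted(sdr), len(sdr) - flips))
    inactive = [i for i in range(size) if i not in sdr]
    kept.update(rng.sample(inactive, flips))
    return frozenset(kept)

rng = random.Random(0)
a = random_sdr(rng=rng)
b = random_sdr(rng=rng)                # an unrelated pattern
noisy_a = add_noise(a, 2048, 10, rng)  # the same pattern with a quarter of its bits moved
```

Even with a quarter of its bits moved, the noisy copy still shares 30 of 40 active bits with the original, while two unrelated random patterns share almost none. That gap is a large part of why this kind of representation tolerates noise.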

This is the devotional work of Jeff Hawkins of Palm Pilot fame in his company Numenta. Hawkins has pursued a strong AI model based on fundamental research into brain function that is not structured with layers and nodes as in DNNs.

HTM has the characteristic that it discovers patterns very rapidly, with on the order of as few as 1,000 observations. This compares with the hundreds of thousands or millions of observations necessary to train CNNs or RNNs.

Also the pattern recognition is unsupervised and can recognize and generalize about changes in the pattern based on changing inputs as soon as they occur. This results in a system that not only trains remarkably quickly but also is self-learning, adaptive, and not confused by changes in the data or by noise.

We featured HTM and Numenta in our February article and we recommend you read more about it there.

Some Incremental Improvements of Note

We set out to focus on true game changers but there are at least two examples of incremental improvement that are worthy of mention. These are clearly still classical CNNs and RNNs with elements of back prop but they work better.

Network Pruning with Google Cloud AutoML

Google and Nvidia researchers use a process called network pruning to make a neural network smaller and more efficient to run by removing the neurons that do not contribute directly to output. This advancement was rolled out recently as a major improvement in the performance of Google’s new AutoML platform.
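
The general idea of pruning can be sketched in a few lines. The example below drops hidden neurons whose outgoing weights are essentially zero. That selection rule is my own simplifying assumption and should not be read as the criterion the Google and Nvidia researchers use; the tiny network and its weights are hypothetical as well.

```python
def relu(x):
    return x if x > 0 else 0.0

def forward(x, w_hidden, w_out):
    """One hidden layer: w_hidden[h] are the inputs to neuron h, w_out[o][h] its outputs."""
    hidden = [relu(sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    return [sum(w * h for w, h in zip(row, hidden)) for row in w_out]

def prune_neurons(w_hidden, w_out, threshold):
    """Drop hidden neurons whose total outgoing weight magnitude is below threshold."""
    keep = [h for h in range(len(w_hidden))
            if sum(abs(row[h]) for row in w_out) >= threshold]
    new_hidden = [w_hidden[h] for h in keep]
    new_out = [[row[h] for h in keep] for row in w_out]
    return new_hidden, new_out

# Hypothetical weights: the middle neuron has no influence on the output.
w_hidden = [[1.0, 0.5], [-0.3, 0.8], [0.2, 0.2]]
w_out = [[0.9, 0.0, -0.7]]
small_hidden, small_out = prune_neurons(w_hidden, w_out, threshold=1e-6)

x = [1.0, 2.0]
before = forward(x, w_hidden, w_out)
after = forward(x, small_hidden, small_out)
```

The pruned network has one fewer neuron and gives the same answer on this input. Real pruning schemes use more careful importance measures and usually retrain afterward, but the payoff is the same: a smaller network that is cheaper to run.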

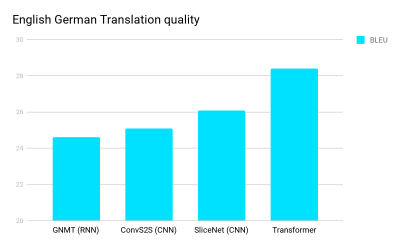

Transformer

Transformer is a novel approach useful initially in language processing such as language-to-language translation, which has been the domain of CNNs, RNNs and LSTMs. Released late last summer by researchers at Google Brain and the University of Toronto, it has demonstrated significant accuracy improvements in a variety of tests including this English/German translation test.

The sequential nature of RNNs makes it more difficult to fully take advantage of modern fast computing devices such as GPUs, which excel at parallel and not sequential processing. CNNs are much less sequential than RNNs, but in CNN architectures the number of steps required to combine information from distant parts of the input still grows with increasing distance.

The accuracy breakthrough comes from the development of a ‘self-attention function’ that reduces the work to a small, constant number of steps. In each step, it applies a self-attention mechanism which directly models relationships between all words in a sentence, regardless of their respective position.
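
Here is a bare-bones sketch of scaled dot-product self-attention, the core of that mechanism, in plain Python. The projection matrices would be learned in a real Transformer; the identity matrices and three-word toy input below are placeholders I chose for illustration, and the sketch leaves out multiple heads and positional encodings entirely.

```python
import math

def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, wq, wk, wv):
    """x holds one row per word; returns the new rows and the attention weights."""
    q, k, v = matmul(x, wq), matmul(x, wk), matmul(x, wv)
    scale = math.sqrt(len(k[0]))
    k_t = [list(col) for col in zip(*k)]  # transpose of k
    scores = matmul(q, k_t)               # one score per pair of words
    weights = [softmax([s / scale for s in row]) for row in scores]
    return matmul(weights, v), weights

# Hypothetical input: three "words", each a 2-dimensional embedding.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]  # stand-in for learned projections
out, weights = self_attention(x, identity, identity, identity)
```

Notice that the weights form a full word-by-word table computed in one pass: the first word is related to the last just as directly as to its neighbor, which is the property the paragraph above describes.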

Read the original research paper here.

A Closing Thought

If you haven’t thought about it, you should be concerned at the massive investment China is making in AI and its stated goal to overtake the US as the AI leader within a very few years.

In an article by Steve LeVine, who is Future Editor at Axios and teaches at Georgetown University, he makes the case that China may be a fast follower but will probably never catch up. The reason: US and Canadian researchers are free to pivot and start over anytime they wish. The institutionally guided Chinese could never do that. This quote is from LeVine’s article:

“In China, that would be unthinkable,” said Manny Medina, CEO at Outreach.io in Seattle. AI stars like Facebook’s Yann LeCun and the Vector Institute’s Geoff Hinton in Toronto, he said, “don’t have to ask permission. They can start research and move the ball forward.”

As the VCs say, maybe it’s time to pivot.

About the author: Bill Vorhies is Editorial Director for Data Science Central and has practiced as a data scientist since 2001. He can be reached at: