Month: August 2024

Google Cloud Launches C4 Machine Series: High-Performance Computing and Data Analytics

MMS • Steef-Jan Wiggers

Google Cloud recently announced the general availability of its new C4 machine series, powered by 4th Gen Intel Xeon Scalable Processors (Sapphire Rapids). The series offers a range of configurations tailored to meet the needs of demanding applications such as high-performance computing (HPC), large-scale simulations, and data analytics.

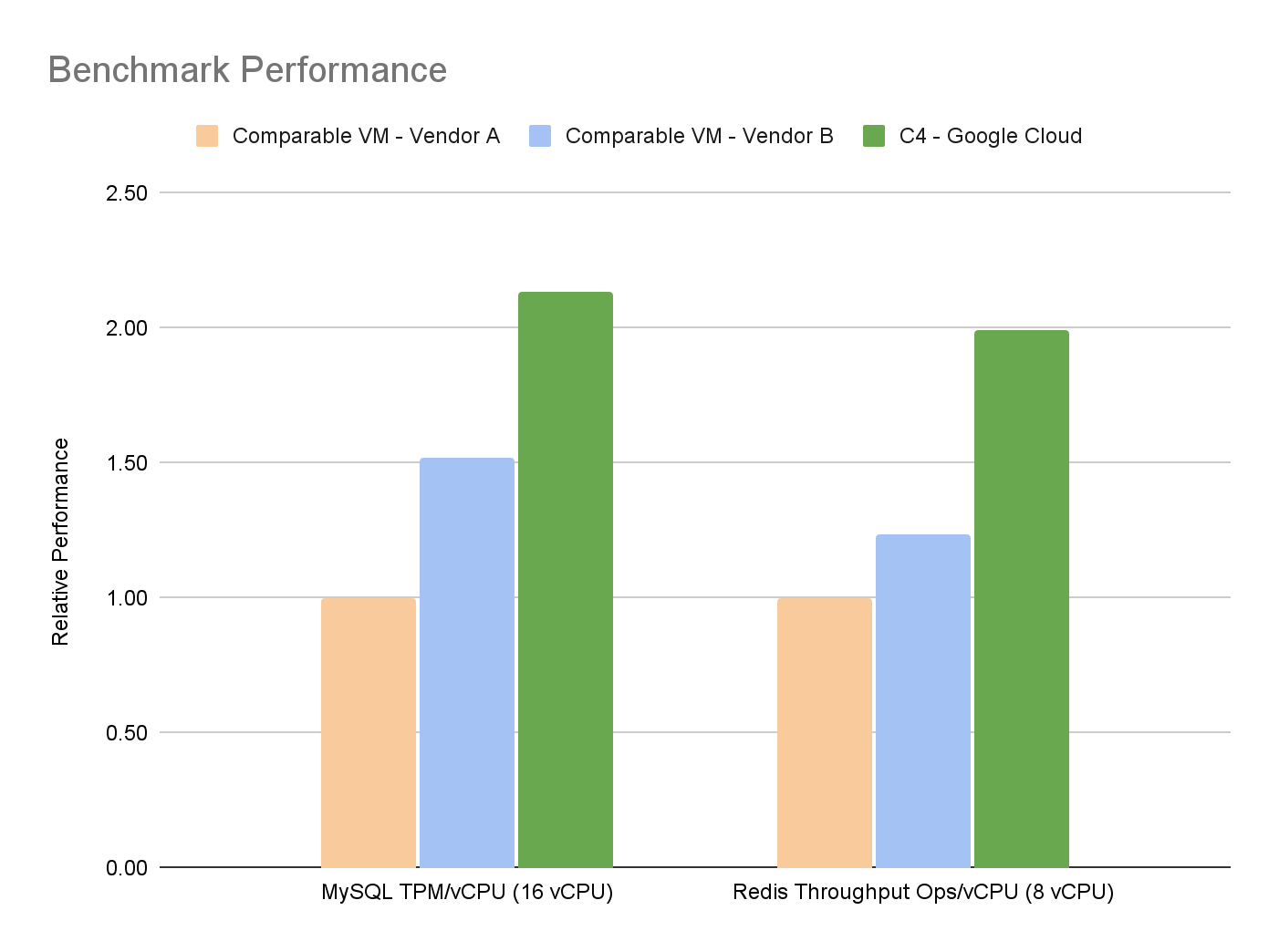

The C4 machine series is optimized to handle workloads that require substantial computational power. According to the company, it leverages Intel’s latest technology to provide up to 60% better performance per core than its predecessor, the C2 series. The machines in this series are equipped with Intel Advanced Matrix Extensions (AMX), which are instrumental in accelerating AI and machine learning tasks, particularly those involving large models and datasets. The machines are available in several configurations, ranging from 4 to 96 vCPUs, allowing businesses to choose the best setup for their workload requirements.

One of the features of the C4 series is its enhanced networking capabilities. Each C4 machine offers up to 200 Gbps of network bandwidth, enabling faster data transfer and reducing latency. This is particularly beneficial for applications that rely on distributed computing or real-time data processing. Integrating Google’s Virtual NIC (gVNIC) further improves network performance by offloading packet processing tasks from the CPU, thus freeing up resources for compute tasks.

The C4 series supports many use cases beyond traditional compute-intensive tasks. For instance, businesses engaged in AI and machine learning can leverage the AMX extensions to accelerate the training and inference of complex models. Meanwhile, companies involved in rendering and simulation can benefit from the series’ high performance and memory bandwidth to quickly run large-scale simulations and generate high-quality visual outputs.

Olivia Melendez, a product manager at Google Cloud, wrote:

C4 VMs provide the performance and flexibility you need to handle most workloads, all powered by Google’s Titanium. With Titanium offload technology, C4 delivers high performance connectivity with up to 200 Gbps of networking bandwidth and scalable storage with up to 500k IOPS and 10 GB/s throughput on Hyperdisk Extreme. C4 instances scale up to 192 vCPUs and 1.5TB of DDR5 memory and feature the latest generation performance with Intel’s 5th generation Xeon processors (code-named Emerald Rapids) offering predefined shapes in high-cpu, standard, and high-mem configurations.

In addition, Richard Seroter, chief evangelist, made a bold statement on X:

Yes, this new C4 machine type is rad / the bee’s knees / fire for database and AI workloads. But I particularly appreciated that we shared our test conditions for proving we’re more performant than other cloud offerings.

(Source: Google blog post)

The C4 series is now generally available in several Google Cloud regions, including the United States, Europe, and Asia-Pacific. Google has announced plans to expand availability to additional regions soon.

Pricing for the C4 series includes options for on-demand, committed use contracts, and sustained use discounts. This allows businesses to optimize their cloud spend based on specific usage patterns. Google also offers preemptible instances for the C4 series, providing a cost-effective option for workloads that can tolerate interruptions.

MMS • Rafiq Gemmail

Scrum.org recently published an article titled AI as a Scrum Team Member by its COO, Eric Naiburg. Naiburg described the productivity benefits for Scrum masters, product owners, and developers, challenging the reader to “imagine AI integrating seamlessly” as a “team member” into the Scrum team. Thoughtworks’ global lead for AI-assisted software delivery, Birgitta Böckeler, also recently published an article titled Exploring Generative AI, where she shared insights into experiments involving the use of LLMs (Large Language Models) in engineering scenarios, where they may potentially have a multiplier effect for software delivery teams.

Naiburg compared the role of AI tooling to that of a pair-programming collaborator. Using a definition of AI spanning from LLM integrations to analytical tools, he wrote about how AI can be used to reduce cognitive load across the key roles of a Scrum team. He discussed the role of the Scrum master and explained that AI provides an assistant capable of advising on team facilitation, team performance and optimisation of flow. Naiburg gave the example of engaging with an LLM for guidance on driving engagement in ceremonies:

AI can suggest different facilitation techniques for meetings. If you are having difficulty with Scrum Team members engaging in Sprint Retrospectives, for example, just ask the AI, “I am having a problem getting my Scrum Team to fully engage in Sprint Retrospectives any ideas?”

Naiburg wrote that AI provides developers with an assistant in the team to help decompose and understand stories. Further, he called out the benefit of using AI to simplify prototyping, testing, code-generation, code review and synthesis of test data.

Focusing on the developer persona, Böckeler described her experiment with using LLMs to onboard onto an open source project and deliver a story against a legacy software project. To understand the capabilities and limits of AI tooling, she used LLMs to work on a ticket from the backlog of the open-source project Bhamni. Böckeler wrote about her use of LLMs in comprehending the ticket, the codebase, and understanding the bounded context of the project.

Böckeler’s main tools comprised of an LLM using RAG (Retrieval Augmented Generation) to provide insights based on the content of Bhamni’s wiki. She offered the LLM a prompt containing the user story and asked it to “explain the Bhamni and health care terminology” which it mentioned. Böckeler wrote:

I asked more broadly, “Explain to me the Bahmni and healthcare terminology in the following ticket: …”. It gave me an answer that was a bit verbose and repetitive, but overall helpful. It put the ticket in context, and explained it once more. It also mentioned that the relevant functionality is “done through the Bahmni HIP plugin module”, a clue to where the relevant code is.

Speaking on the InfoQ Podcast in June, Meryem Arik, co-founder/CEO at TitanML, described the use of LLMs with RAG performing as “research assistant” being “the most common use cases that we see as a 101 for enterprise.” While Böckeler did not directly name her RAG implementation beyond describing it as a “Wiki-RAG-Bot”, Arik spoke extensively about the privacy and domain-specialisation benefits that can be gained from a custom solution using a range of open models. She said:

So actually, if you’re building a state-of-the-art RAG app, you might think, okay, the best model for everything is OpenAI. Well, that’s not actually true. If you’re building a state-of-the-art RAG app, the best generative model you can use is OpenAI. But the best embedding model, the best re-ranker model, the best table parser, the best image parser, they’re all open source.

To understand the code and target her changes, Böckeler wrote that she “fed the JIRA ticket text” into two tools used for code generation and comprehension, Bloop and Github Copilot. She asked both tools to help her “find the code relevant to this feature.” Both models gave her a similar set of pointers, which she described as “not 100% accurate,” but “generally useful direction.” Exploring the possibilities around autonomous code-generators Böckeler experimented with Autogen to build LLM based AI agents to port tests across frameworks, she explained:

Agents in this context are applications that are using a Large Language Model, but are not just displaying the model’s responses to the user, but are also taking actions autonomously, based on what the LLM tells them.

Böckeler reported that her agent worked “at least once,” however it “also failed a bunch of times, more so than it worked.” InfoQ recently reported on a controversial study by Upwork Research Institute, pointing at a perception from those sampled that AI tools decrease productivity, with 39% of respondents stating that “they’re spending more time reviewing or moderating AI-generated content.” Naiburg calls out the need to ensure that teams remain focused on value and not just the output of AI tools:

One word of caution – the use of these tools can increase the volume of “stuff”. For example, some software development bots have been accused of creating too many lines of code and adding code that is irrelevant. That can also be true when you get AI to refine stories, build tests or even create minutes for meetings. The volume of information can ultimately get in the way of the value that these tools provide.

Commenting on her experiment with Autotgen, Böckeler shared a reminder that the technology still has value in “specific problem spaces,” saying:

These agents still have quite a way to go until they can fulfill the promise of solving any kind of coding problem we throw at them. However, I do think it’s worth considering what the specific problem spaces are where agents can help us, instead of dismissing them altogether for not being the generic problem solvers they are misleadingly advertised to be.

Spring News Roundup: Milestone Releases for Spring Boot, Cloud, Security, Session and Spring AI

MMS • Michael Redlich

There was a flurry of activity in the Spring ecosystem during the week of August 19th, 2024, highlighting: point and milestone releases of Spring Boot, Spring Data, Spring Cloud, Spring Security, Spring Authorization Server, Spring Session, Spring for Apache Kafka and Spring for Apache Pulsar.

Spring Boot

The second milestone release of Spring Boot 3.4.0 delivers bug fixes, improvements in documentation, dependency upgrades and many new features such as: an update to the @ConditionalOnSingleCandidate annotation to deal with fallback beans in the presence of a regular single bean; and configure the SimpleAsyncTaskScheduler class when virtual threads are enabled. More details on this release may be found in the release notes.

Versions 3.3.3 and 3.2.9 of Spring Boot have been released to address CVE-2024-38807, Signature Forgery Vulnerability in Spring Boot’s Loader, where applications that use the spring-boot-loader or spring-boot-loader-classic APIs contain custom code that performs signature verification of nested JAR files may be vulnerable to signature forgery where content that appears to have been signed by one signer has, in fact, been signed by another. Developers using earlier versions of Spring Boot should upgrade to versions 3.1.13, 3.0.16 and 2.7.21.

Spring Data

Versions 2024.0.3 and 2023.1.9, both service releases of Spring Data, feature bug fixes and respective dependency upgrades to sub-projects such as: Spring Data Commons 3.3.3 and 3.2.9; Spring Data MongoDB 4.3.3 and 4.2.9; Spring Data Elasticsearch 5.3.3 and 5.2.9; and Spring Data Neo4j 7.3.3 and 7.2.9. These versions can be consumed by Spring Boot 3.3.3 and 3.2.9, respectively.

Spring Cloud

The first milestone release of Spring Cloud 2024.0.0, codenamed Mooregate, features bug fixes and notable updates to sub-projects: Spring Cloud Kubernetes 3.2.0-M1; Spring Cloud Function 4.2.0-M1; Spring Cloud OpenFeign 4.2.0-M1; Spring Cloud Stream 4.2.0-M1; and Spring Cloud Gateway 4.2.0-M1. This release provides compatibility with Spring Boot 3.4.0-M1. Further details on this release may be found in the release notes.

Spring Security

The second milestone release of Spring Security 6.4.0 delivers bug fixes, dependency upgrades and new features such as: improved support to the @AuthenticationPrincipal and @CurrentSecurityContext meta-annotations to better align with method security; preserve the custom user type in the InMemoryUserDetailsManager class for improved use in the loadUserByUsername() method; and the addition of a constructor in the AuthorizationDeniedException class to provide the default value for AuthorizationResult interface. More details on this release may be found in the release notes and what’s new page.

Similarly, versions 6.3.2, 6.2.6 and 5.8.14 of Spring Security have also been released providing bug fixes, dependency upgrades and a new feature that implements support for multiple URLs in the ActiveDirectoryLdapAuthenticationProvider class. Further details on these releases may be found in the release notes for version 6.3.2, version 6.2.6 and version 5.8.14.

Spring Authorization Server

Versions 1.4.0-M1, 1.3.2 and 1.2.6 of Spring Authorization Server have been released that ship with bug fixes, dependency upgrades and new features such as: a new authenticationDetailsSource() method added to the OAuth2TokenRevocationEndpointFilter class used for building an authentication details from an instance of the Jakarta Servlet HttpServletRequest interface; and allow customizing an instance of the Spring Security LogoutHandler interface in the OidcLogoutEndpointFilter class. More details on these releases may be found in the release notes for version 1.4.0-M1, version 1.3.2 and version 1.2.6.

Spring Session

The second milestone release of Spring Session 3.4.0-M2 provides many dependency upgrades and a new RedisSessionExpirationStore interface so that it is now possible to customize the expiration policy in an instance of the RedisIndexedSessionRepository.RedisSession class. Further details on this release may be found in the release notes and what’s new page.

Similarly, the release of Spring Session 3.3.2 and 3.2.5 ship with dependency upgrades and a resolution to an issue where an instance of the AbstractSessionWebSocketMessageBrokerConfigurer class triggers an eager instantiation of the SessionRepository interface due to a non-static declaration of the Spring Framework ApplicationListener interface. More details on this release may be found in the release notes for version 3.3.2 and version 3.2.5.

Spring Modulith

Versions 1.3 M2, 1.2.3, and 1.1.8 of Spring Modulith have been released that ship with bug fixes, dependency upgrades and new features such as: an optimization of the publication completion by event and target identifier to allow databases to optimize the query plan; and a refactor of the EventPublication interface that renames the isPublicationCompleted() method to isCompleted(). Further details on these releases may be found in the release notes for version 1.3.0-M2, version 1.2.3 and version 1.1.8.

Spring AI

The second milestone release of Spring AI 1.0.0 delivers bug fixes, improvements in documentation and new features such as: improved observability functionality for the ChatClient interface, chat models, embedding models, image generation models and vector stores; a new MarkdownDocumentReader for ETL pipelines; and a new ChatMemory interface that is backed by Cassandra.

Spring for Apache Kafka

Versions 3.3.0-M2, 3.2.3 and 3.1.8 of Spring for Apache Kafka have been released with bug fixes, dependency upgrades and new features such as: support for Apache Kafka 3.8.0; and improved error handling on fault tolerance retries. These releases will be included in the Spring Boot 3.4.0-M2, 3.3.3 and 3.2.9, respectively. More details on this release may be found in the release notes for version 3.3.0-M2, version 3.2.3 and version 3.1.8.

Spring for Apache Pulsar

The first milestone release of Spring for Apache Pulsar 1.2.0-M1 ships with improvements in documentation, dependency upgrades and new features: the ability to configure a default topic and namespace; and the ability to use an instance of a custom Jackson ObjectMapper class for JSON schemas. This release will be included in Spring Boot 3.4.0-M2. Further details on this release may be found in the release notes.

Similarly, versions 1.1.3 and 1.0.9 of Spring for Apache Pulsar have been released featuring dependency upgrades and will be included in Spring Boot 3.3.3 and 3.2.9, respectively. More details on these releases may be found in the release note for version 1.1.3 and version 1.0.9.

MMS • Sergio De Simone

Several strategies exist to apply the principles of zero-trust security to development environments based on Docker Desktop to protect against the risks of security breaches, Docker senior technical leader Jay Schmidt explains.

The zero-trust security model has gained traction in the last few years as an effective strategy to protect sensitive data, lower the risk of security breaches, get visibility into network traffic, and more. It can be applied to traditional systems as well as to container-based architectures, which are affected by image vulnerabilities, cyberattacks, unauthorized access, and other security risks.

The fundamental idea behind the zero-trust model is that both external and internal actors should be treated in the same way. This means going beyond the traditional “perimeter-based” approach to security, where users or machines within the internal perimeter of a company can be automatically trusted. In the zero-trust model, privileges are granted exclusively based on identities and roles, with the basic policy being not trusting anyone.

In his article, Schmidt analyzes how the core principles of the zero-trust model, including microsegmentation, least-privilege access, device access controls, and continuous verification can be applied to a Docker Desktop-based development enviroment.

Microsegmentation aims to create multiple protected zones, such as testing, training, and production, so if one is compromised, the rest of the network can continue working unaffected.

With Docker Desktop, says Schmidt, you can define fine-grained network policies using the bridge network for creating isolated networks or using the Macvlan network driver that allows containers to be treated as distinct physical devices. Another feature that allows to customize network access rule are air-gapped containers.

In keeping with the principle of least-privilege access, enhanced container isolation (ECI) makes it easier to ensure an actor has only the minimum privileges required to perform an action.

In terms of working with containers, effectively implementing least-privilege access requires using AppArmor/SELinux, using seccomp (secure computing mode) profiles, ensuring containers do not run as root, ensuring containers do not request or receive heightened privileges, and so on.

ECI enforces a number of desirable properties, such as running all containers unprivileged, remapping the user namespace, restricting file system access, blocking sensitive system calls, and isolating containers from the Docker Engine’s API.

Authentication and authorization using role-based access control (RBAC) is another key practice that Docker Desktop supports in various ways. These include Single Sign On to manage groups and enforce role and team membership at the account level, and Registry Access Management (RAM) and Image Access Management (IAM) to protect against supply chain attacks.

Another component of Docker Desktop security model is logging and support for software bills of materials (SBOM) using Docker Scout. Using SBOMs enables continually checking against CVEs and security policies. For example, you could enforce all high-profile CVEs to be mitigated, root images to be validated, and so on.

Finally, Docker security can be strengthened through container immutability, which ensures containers are not replaced or tampered with. To this aim, Docker provides the possibility to run a container using the --read-only flag or specifying the read_only: true key value pair in the docker-compose file.

This is just an overview of tools and features provided by Docker Desktop to enforce the zero-trust model. Do not miss the original article to get the full details.

MMS • Renato Losio

At the recent Cloud Next conference in Tokyo, Google announced Spanner Graph, a managed feature that integrates graph, relational, search, and AI capabilities within Spanner. This new database supports a graph query interface compatible with ISO GQL (Graph Query Language) standards while avoiding the need for a standalone graph database.

Spanner Graph now combines graph database capabilities with Cloud Spanner, Google Cloud’s globally distributed and scalable database service that provides horizontal scaling and RDBMS features without the need for sharding or clustering. One of the goals of the project is the full interoperability between GQL and SQL to break down data silos and lets developers choose the tool for the specific use case, without extracting or transforming data. Bei Li, senior staff software engineer at Google, and Chris Taylor, Google fellow, explain:

Tables can be declaratively mapped to graphs without migrating the data, which brings the power of graph to tabular datasets. With this capability, you can late-bind (i.e., postpone) data model choices and use the best query language for each job to be done.

While graphs provide a natural mechanism for representing relationships in data, Google suggests that adopting standalone graph databases leads to fragmentation, operational overhead, and scalability and availability bottlenecks, especially since organizations have substantial investments in SQL expertise and infrastructure. Taylor comments on LinkedIn:

Interconnected data is everywhere, and graph query languages are a fantastic way to understand and gain value from it. With Spanner Graph, you can have the expressive power and performance of native graph queries, backed by the reliability and scale of Spanner.

A highly anticipated feature in the community, Spanner Graph offers vector search and full-text search, allowing developers to traverse relationships within graph structures using GQL while leveraging search to find graph content. Li and Taylor add:

You can leverage vector search to discover nodes or edges based on semantic meaning, or use full-text search to pinpoint nodes or edges that contain specific keywords. From these starting points, you can then seamlessly explore the rest of the graph using GQL. By integrating these complementary techniques, this unified capability lets you uncover hidden connections, patterns, and insights that would be difficult to discover using any single method.

Among the use cases suggested for the new Spanner Graph, the cloud provider highlights fraud detection, recommendation engines, network security, knowledge graphs, route planning, data cataloging, and data lineage tracing. Eric Zhang, software engineer at Modal, comments:

Google’s new Spanner Graph DB looks pretty awesome and hints at a world where multi-model databases are the norm.

Rick Greenwald, independent industry analyst, adds:

By having graph, as well as structured data, search operations and vector operations all accessible within the same SQL interface, Spanner essentially removes the need for users to understand what database technology they need and implement it before they can get started solving problems. The range of options to derive value from your data expands, without undue overhead.

Previously, Neo4J was the recommended deployment option on Google Cloud for many use cases now covered by Spanner Graph. Google is not the only cloud provider offering a managed graph database: Microsoft offers Azure Cosmos DB for Apache Gremlin, while AWS introduced Amazon Neptune years ago, a service distinct from NoSQL Amazon DynamoDB, which was previously recommended for similar scenarios.

A codelab is now available to get started with Spanner Graph. During the conference, Google also announced new pricing models and GoogleSQL functions for Bigtable, the NoSQL database for unstructured data, and latency-sensitive workloads.

MMS • Julia Kreger

Transcript

Kreger: I want to get started with a true story of something that happened to me. Back in 2017, my manager messaged me and said, can you support an event in the Midwest? I responded as any engineer would, what, when, where? He responded with, the International Collegiate Programming Contest in Rapid City, South Dakota in two weeks.

I groaned, as most people do, because two weeks’ notice on travel is painful. I said, ok, I’m booking. Two weeks later, I landed in Rapid City, two days early. Our hosts at the School of Mines who were hosting the International Collegiate Programming Contest wanted us to meet each other. They actually asked for us to be there two days early. They served us home-style cooking in a conference hall for like 30 people. It was actually awesome, great way to meet people. I ended up sitting at a random table. I asked the obvious non-professor to my right, what do you do? As a conversation starter. He responded that he worked in a data center in Austin, which immediately told me he was an IBM employee as well.

Then he continued to talk about what he did. He said that he managed development clusters of 600 to 2000 bare metal servers. At which point I cringed because I had concept of the scale and the pain involved. Then he added the bit that these clusters were basically being redeployed at least every two weeks. I cringed more. The way he was talking, you could tell he was just not happy with his job. It was really coming through.

It was an opportunity to have that human connection where you’re learning about someone and gaining more insight. He shared how he’d been working 60-hour weeks. He lamented how his girlfriend was unhappy with him because they weren’t spending time together, and all those fun things. All rooted in having to deploy these servers with thumb drives. Because it would take two weeks to deploy a cluster, and then the cluster would have to get rebuilt.

Then he shifted gears, he realized that he was making me uncomfortable. He started talking about a toolkit he had recently found. One that allowed him to deploy clusters in hours once he populated all the details required. Now his girlfriend was no longer upset with him. How he was actually now happy, and how his life was actually better. Then he asked me what I did for IBM. As a hint, it was basically university staff, volunteers, and IBM employees at this gathering.

I explained I worked on open source communities, and that I did systems automation. I had been in the place with the racks of hardware, and that I knew the pain of bare metal. Suddenly, a few moments later, his body language shifted to pure elation. He was happy beyond belief. The smile on his face just blew me away, because he had realized something. He was sitting next to the author who wrote the toolkit that made his life better.

Personally, as someone who made someone’s life better and got to hear the firsthand story, how it made his life better, the look on his face will always be in my mind. It will always be inspiration for me, which is partly the reason I’m here, because I believe in automating these aspects to ensure that we don’t feel the same pain. We don’t have to spread it around.

Context

The obvious question is, who am I? My name is Julia Kreger. I’m a Senior Principal Software Engineer at Red Hat. I’m also the chair of the board of directors at The OpenInfra Foundation. Over the past 10 years now, I’ve been working on automating the deployment of physical bare metal machines in varying use cases. That technology is used in Red Hat OpenStack and Red Hat OpenShift, to enable the deployment in our customer use cases.

It’s actually really neat technology and provides many different options. It is what you make of it, and how much you leverage it. I’m going to talk about why bare metal and the trends driving the market at this point. Then the shift to computing technology, and the shift underway. Then I’m going to talk about three tools you can use.

Why Bare Metal, in the Age of Cloud?

We’re in the age of cloud. I don’t think that’s disputed at this point. Why bare metal? The reality is, the cloud has been in existence for 17 years, at least public cloud, as we know it today. When you start thinking about what is the cloud, its existing technologies, with abstractions, and innovations which help make new technologies, all in response to market demand on other people’s computers.

Why was it a hit? It increased our flexibility. We went through self-service on-demand. We weren’t ordering racks of servers, and waiting months to get the servers, and then having to do the setup of the servers anymore. This enabled a shift from a Cap-Ex operating model of businesses to an Op-Ex model for businesses. How many people actually understand what Cap-Ex and Op-Ex is? It is shorthand for capital expense and operational expense.

Capital expense is an asset that you have, that you will maintain on your books in accounting. Your auditors will want to see it occasionally. You may have to pay taxes on it. Basically, you’re dealing with depreciation of value. At some point, you may be able to sell it and regain some of that value or may not be able to depending on market conditions. Whereas Op-Ex is really the operational expense of running a business. Employees are operational expenses, although there are some additional categories there of things like benefits.

In thinking about it, I loaded up Google Ngrams, just to model it mentally, because I’ve realized this shift over time. One of the things I noticed was looking at the graph of the data through 2019, which is all it’s loaded in Google Ngrams right now, unfortunately, we can see delayed spikes of the various booms in the marketplace and shifts in the market response.

Where businesses are no longer focusing on capital expenditures, which I thought was really interesting, actually. Not everyone can go to the cloud, some businesses are oriented for capital expenses. They have done it for 100 years. They know exactly how to do it, and to keep it in such a way so that it’s not painful for them. One of the driving forces with keeping things local on-prem, or in dedicated data centers is you might have security requirements. For example, you may have a fence and the data may never pass that fence line. Or you may not be able to allow visitors into your facility because of high security requirements. Another aspect is governance.

You may have rules and regulations which apply to you, and that may be just legal contracts with your vendors or customers that prevent you from going to a cloud provider. Sovereignty is a topic which is interesting, I think. It’s also one of the driving forces in running your own data center, having bare metal. You may do additional cloud orchestration technologies on top of that, but you still have a reason where you do not trust another provider, or where data may leave that country. That’s a driving reason for many organizations. Then latency. If you’re doing high-performance computing, you’re doing models of fluid dynamics, you can’t really tolerate latency of a node that might have a noisy neighbor. You need to be able to reproduce your experiment repeatedly, so, obviously, you’re going to probably run your own data center if you’re doing that sort of calculation.

The motives in the marketplace continue to shift. I went ahead and just continued to play with Google Ngrams. I searched for gig economy and economic bubble. Not that surprising, gig economy is going through the roof because the economy we have is changing. At the same time, economic bubble is starting to grow as a concern. You can actually see a little slight uptick in the actual graph, which made me smirk a little bit.

I was like, change equals uncertainty. Then, I searched for data sovereignty, and I saw almost an inverse mirror of some of the graphing that I was seeing with Cap-Ex and Op-Ex. Then I went for self-driving and edge computing. Because if you can’t fit everything onto the self-driving car, and you need to go talk to another system, you obviously want it to be an edge system that’s nearby, because you need low latency.

Because if you need to depress the brakes, you have 30 milliseconds to make that decision. There are some interesting drivers that we can see in literature that has been published over the last 10 years where we can see some of these shifts taking place.

The Shift in Computing Technology Underway Today

There are more shifts occurring in the marketplace. One of the highlights, I feel, that I want to make sure everyone’s mentally on the same page for is that evolution is constant in computer. The computers are getting more complex every single day. Some vendors are adding new processor features. Some vendors are adding specialized networking chips. A computer in a data center hasn’t changed that much over the years. You functionally have a box with a network cable for the management, a network cable for the actual data path, and applications with an operating system.

It really hasn’t changed. Except, it is now becoming less expensive to use purpose-built, dedicated hardware for domain specific problems. We were seeing this with things like GPUs and FPGAs, where you can write a program, run it on that device, and have some of your workload calculate or process there to solve specific problems in that domain. We’re also seeing a shift in diversifying architectures, except this can also complicate matters.

An example is an ARM system might look functionally the same until you add an x86 firmware-based GPU, and all of a sudden, your ARM cores and your firmware are trying to figure out how to initialize that. The secret apparently, is they launch a VM quietly in the substrate that the OS can’t see. There’s another Linux system running alongside of the system you’re probably running on that ARM system, initializing the card.

There’s also an emerging trend, which are data processing or infrastructure processing units. The prior speaker spoke of network processing units, and ASICs that can be programmed for these same sorts of tasks. Except in this case, these systems are much more generalized. I want to model a network card mentally. We have a PCIe bus. We have a network card as a generic box. It’s an ASIC, we don’t really think about it.

The network cable goes out the back. What these devices are that we’re starting to see in servers, that can be added for relatively low cost, is they may have an AI accelerator ASIC. They may have FPGAs. They may have additional networking ASICs for programmable functions. They have their own operating system that you can potentially log in to with applications you may want to put on that card, with a baseboard management controller just like the host. Yes, we are creating computers inside of computers.

Because now we have applications running on the main host, using a GPU with a full operating system. We have this DPU or IPU plugged into the host, presenting PCIe devices such as network ports to the main host operating system. Meanwhile, the actual workload and operating system can’t see into the actual card, nor has any awareness of what’s going on there, because they are programming the card individually and running individual workloads on the card. The software gets even more complicated, because now you need two management network connections per host, at least. That is unless the vendor supports the inbound access standards, which are a thing, but it’s going to take time.

To paint a complete picture, I should briefly talk about the use cases where these devices are being modeled. One concept that is popular right now is to use these devices for load balancing, or request routing, and not necessarily thinking like a web server load balancer, but it could just be a database connection load balancer, or for database sharding. It could actually decide, I am not the node you need to talk to, I’ll redirect your connection.

At which point, the actual underlying host that the card’s plugged into and receiving power from, never sees an interrupt from the transaction. It’s all transparent to it. Another use case that is popular is as a security isolation layer, so run like an AI enabled firewall, and have an untrusted workload on the machine. Then also do second stage processing. You may have a card or port where you’re taking data in and you may be dropping 90% of it. Then you’re sending it to the main host if it’s pertinent and makes sense to process.

This is mentally very similar to what some of the large data processing operations do, where they have an initial pass filter, and they’re dropping 90% of the data they’re getting because they don’t need to act upon it. It’s not relevant, and it’s not statistically useful for them. What they do get through, then they will apply additional filtering, and they only end up with like 1% of that useful data. This could also be in the same case as like the cell networks.

You could actually have a radio and the OS only sees Ethernet packets coming off this card as if it’s a network port. The OS is none the wiser to it. With hidden computers that we’re now creating in these infrastructures, I could guarantee these cards exist in deployed servers in this city today, need care and attention as well. There are efforts underway to standardize the interfaces and some of the approaches and modeling for management. Those are taking place in the OPI project. If you’re interested, there’s the link, https://opiproject.org.

Automation is important because, what if there’s a bug that’s been found inside of these units, and there’s a security isolation layer you can’t program from the base host? Think about it for a moment, how am I going to update these cards? I can’t touch them. I’ll go back to my story for a moment. That engineer had the first generation of some of these cards in his machines.

He had to remove the card and plug into a special card and put a USB drive into it to flash the firmware and update the operating system. To him, that was extremely painful, but it was far and few between that he had to do it. What we’re starting to see is the enablement of remote orchestration of these devices through their management ports, and through the network itself. Because, in many cases, people are more willing to trust the network than they are willing to trust an untrusted workload that may compromise the entire host.

Really, what I’m trying to get at is automation is necessary to bring any sanity at any scale to these devices. We can’t treat these cards as one-off, especially because they draw power from the base host. If you shut down the host, the card shuts down. The card needs to be fully online for the operating system to actually be able to boot.

Tools You Can Use (Ironic, Bifrost, Metal3)

There are some tools you can use. I’m going to talk about three different tools. Two of them actually use one tool. What I’m going to talk about is the Ironic Project. It’s probably the most complex and feature full of the three. Then I’ll talk about Bifrost, and then Metal3. Ironic was started as a scalable Bare Metal as a Service platform in 2012 in OpenStack. Mentally, it applies a state machine for data center operations, and the workflows that are involved to help enable the management of those machines. If you think about it, if you wheel racks of servers into a data center, you’re going to take a certain workflow.

Some of it’s going to be dictated by business process, some of it is going to be dictated by what’s the next logical step in the order, in the intake process. We have operationalized as much of that into a service as possible over the years. One can use a REST API to interact with the service and their backend conductors. There’s a ton of functionality there. Realistically, we can install Ironic without OpenStack. We can install it on its own. It supports management protocols like DMTF Redfish, IPMI. It supports flavors of the iLO protocol, iRMC interface, Dell iDRAC, and has a stable driver interface that vendors can extend if they so desire.

One of the things we see a lot of is use of virtual media to enable these deployments of these machines in edge use cases. Think cell tower on a pole, as a single machine, where the radio is on one card, and we connected into the BMC, and we have asserted a new operating system. One of the other things that we do as a service is we ensure the machine is in a clean state prior to redeployment of the machine, because the whole model of this is lifecycle management. It’s not just deployment. It’s, be able to enable reuse of the machine.

This is the Ironic State Machine diagram. This is all the state transitions that Ironic is aware of. Only those operating Ironic really need to have a concept of this. We do have documentation, but it’s quite a bit.

Then there’s Bifrost, which happened to be the tool that that engineer that I sat next to in Rapid City had stumbled upon. The concept here was, I want to deploy a data center with a laptop. It leverages Ansible with an inventory module and playbooks to drive a deployment of Ironic, and drive Ironic through command sequences to perform deployments of machines. Because it’s written, basically, in Ansible, it’s highly customizable.

For example, I might have an inventory payload. This is YAML. As an example, the first node is named node0. We’re relying on some defaults here of the system. Basically, we’re saying, here’s where to find it. Here’s the MAC address so that we know the machine is the correct machine, and we don’t accidentally destroy someone else’s machine. We’re telling what driver to also use. Then we have this node0-subnode0 defined in this configuration with what’s called a host group label.

There’s a feature in Bifrost that allows us to one way run the execution. When the inventory is processed, it can apply additional labels to each node that you may request. If I need to deploy only subnodes, or perform certain actions on subnodes, say, I need to apply certain software to these IPU or DPU devices, then you can do that as a subnode in this configuration. It’s probably worth noting, Ironic has work in progress to provide a more formalized model of DPU management. It’s going to take time to actually get there. We just cut the first release of it, actually. Again, because these IPUs and DPUs generally run on ARM processors, in this example, we provide a specific RAM disk and image to write to the block device storage of the IPU.

Then we can execute a playbook. This is a little bit of a sample playbook. The idea here is we have two steps. Both nodes in that inventory are referred to as bare metal, in this case. When it goes to process these two roles, it will first generate configuration drives, which are metadata that gets injected into the machine so that the machine can boot up and know where it’s coming from, where it’s going to, and so on. You can inject things like SSH keys, or credentials, or whatever. Then, after it does that first role, it will go ahead and perform a deployment. It’s using variables that are expected in the model between the two, to populate the fields and then perform the deployment with the API. Then there’s also the subnode here, where because we defined that host group label, we are able to execute directly upon that subnode.

Then there’s Metal3. Metal3 is deployed on Kubernetes clusters and houses a local Ironic instance. It is able to translate cluster API updates, and bare metal custom resource updates, to provision new bare metal nodes. You’re able to do things like BIOS and RAID settings, and deploy an operating system with this configuration. You can’t really customize it unless you want to go edit all the code that makes up the bare metal operator in Metal3.

This is what the payload looks like. This is a custom resource update, where we’re making a secret, which is the first part. Then the second part is, we’re creating the custom resource update to say, the machine’s online. It has a BMC address. It has a MAC address. Here’s the image we want to deploy to it, checksum. For the user data, use this defined metadata that we already have in the system and go deploy. Basically, what will happen is the bare metal operator will interact with the custom resource, find out what we’ve got, and take action upon it, and drive Ironic’s API that it houses locally in a pod to deploy bare metal servers for that end user. You’re able to grow a Kubernetes cluster locally if you have one deployed, utilizing this operator, and scale it down as you need it, with a fleet of bare metal.

Summary

There’s a very complex future ahead of us with bare metal servers in terms of servers with these devices in them. We have to be mindful that they are other systems too, and they will require management. The more of these sorts of devices that appear in the field, the more necessity for bare metal management orchestration will be in play.

Questions and Answers

Participant 1: I work with a number of customers who do on-premise Kubernetes clusters. The narrow play is to spend a truckload of money on VMware under the hood. Then that’s how you make it manageable. It always felt to me kind of overkill for all the other elastic capabilities Kubernetes gives you if we could manage the hardware better. Do you really need that virtualization layer? Do you have any thoughts on that with the way these tools are evolving?

Kreger: It’s really, in a sense, unrelated to the point I want to get across regarding IPUs and DPUs. What we’re seeing is Kubernetes is largely designed to run in cloud environments. It’s not necessarily designed to run on-prem. Speaking with my Red Hat hat on, we put a substantial investment in to make OpenShift be based on Kubernetes and operate effectively, and as we expect, on-prem, without any virtualization layer. It wasn’t an easy effort in any sense of imagination. Some of the expectations that existed in some of the code were that there’s always an Amazon metadata service available someplace. It’s not actually the case.

Participant 2: What I understood from one of the bigger slides was either like Redfish, or IPMI, or one of the existing protocols for management was going to be interfacing to the DPU or IPU port management through an external management interface facilitated by the server? Is there any thought at all to doing something new instead of sticking with these older protocols that [inaudible 00:30:57]?

Kreger: The emerging trend right now is to use Redfish or consensus. One of the things that does also exist and is helpful in this is there’s also consensus of maybe not having an onboard additional management controller, baseboard management controller style device in these cards. We’re seeing some consensus of maybe having part of it, and then having NC-SI support, so that the system BMC can connect to it and reach the device.

One of the things that’s happening in the ecosystem with 20-plus DPU vendors right now, is they are all working towards slightly different requirements. These requirements are being driven by market forces, what their customers are going to them and saying, we need this to have a minimum viable product or to do the needful. I think we’re always going to see some variation of that. The challenge will be providing an appropriate level of access for manageability. Redfish is actively being maintained and worked on and improved. I think that’s the path forward since the DMTF has really focused on that. Unfortunately, some folks still use IPMI and insist on using IPMI. Although word has it from some major vendors that they will no longer be doing anything with IPMI, including bug fixes.

Participant 2: How do you view the intersection of hardware-based security devices with these IPU, DPU platforms, because a lot of times they’re joined at the hip with the BMC. How is that all playing out?

Kreger: I don’t think it’s really coming up. Part of the problem is, again, it’s being driven by market forces. Some vendors are working in that direction, but they’re not talking about it in community. They’re seeing it as value add for their use case and model, which doesn’t really help open source developers or even other integrators trying to make complete solutions.

See more presentations with transcripts

MMS • Mandy Gu Namee Oberst Srini Penchikala Roland Meertens Antho

Subscribe on:

Transcript

Srini Penchikala: Hello everyone. Welcome to the 2024 AI and ML Trends Report podcast. Greetings from the InfoQ, AI, ML, and Data Engineering team. We also have today two special guests for this year’s Trends report. This podcast is part of our annual report to share with our listeners what’s happening in the AI and ML technologies. I am Srini Penchikala. I serve as the lead editor for the AI, ML and Data Engineering community on InfoQ. I will be facilitating our conversation today. We have an excellent panel with subject matter experts and practitioners from different specializations in AI and ML space. I will go around our virtual room and ask the panelists to introduce themselves. We will start with our special guests first, Namee Oberst and Mandy Gu. Hi Namee. Thank you for joining us and participating in this podcast. Would you like to introduce yourself and tell our listeners what you’ve been working on?

Introductions [01:24]

Namee Oberst: Yes. Hi. Thank you so much for having me. It’s such a pleasure to be here. My name is Namee Oberst and I’m the founder of an open source library called LLMware. At LLMware, we have a unified framework for building LLM based applications for RAG and for using AI agents, and we specialize in providing that with small specialized language models. And we have over 50 fine-tuned models also in Hugging Face as well.

Srini Penchikala: Thank you. Mandy, thank you for joining us. Can you please introduce yourself?

Mandy Gu: Hi, thanks so much for having me. I’m super excited. So my name is Mandy Gu. I am lead machine learning engineering and data engineering at Wealthsimple. Wealthsimple is a Canadian FinTech company helping over 3 million Canadians achieve their version of financial independence through our unified app.

Srini Penchikala: Next up, Roland.

Roland Meertens: Hey, I’m Roland, leading the datasets team at Wayve. We make self-driving cars.

Srini Penchikala: Anthony, how about you?

Anthony Alford: Hi, I’m Anthony Alford. I’m a director of software development at Genesis Cloud Services.

Srini Penchikala: And Daniel?

Daniel Dominguez: Hi, I’m Daniel Dominguez. I’m the managing partner of an offshore company that works with cloud computing with the AWS Department Network. I’m also an AWS Community Builder and the machine learning too.

Srini Penchikala: Thank you everyone. We can get started. I am looking forward to speaking with you about what’s happening in the AI and ML space, where we currently are and more importantly where we are going, especially with the dizzying pace of AI technology innovations happening since we discussed the trends report last year. Before we start the podcast topics, a quick housekeeping information for our audience. There are two major components for these reports. The first part is this podcast, which is an opportunity to listen to the panel of expert practitioners on how the innovative AI technologies are disrupting the industry. The second part of the trend report is a written article that will be available on InfoQ website. It’ll contain the trends graph that shows different phases of technology adoption and provides more details on individual technologies that have been added or updated since last year’s trend report.

I recommend everyone to definitely check out the article as well when it’s published later this month. Now back to the podcast discussion. It all starts with ChatGPT, right? ChatGPT was rolled out about a year and a half ago early last year. Since then, generative AI and LLM Technologies feels like they have been moving at the maximum speed in terms of innovation and they don’t seem to be slowing down anytime soon. So all the major players in the technology space have been very busy releasing their AI products. Earlier this year, we know at Google I/O Conference, Google announced several new developments including Google Gemini updates and generative AI in Search, which is going to significantly change the way the search works as we know it, right? So around the same time, open AI released GPT-4o. 4o is the omni model that can work with audio vision and text in real time. So like a multi-model solution.

And then Meta released around the same time Llama 3, but with the recent release of Llama version 3.1. So we have a new Llama release which is based on 405 billion parameters. So those are billions. They keep going up. Open-source solutions like Ollama are getting a lot of attention. It seems like this space is accelerating faster and faster all the time. The foundation of Gen AI technology are the large language models that are trained on a lot of data, making them capable of understanding and generating natural language and other types of content to perform a wide variety of tasks. So LLMs are a good topic to kick off this year’s trend report discussion. Anthony, you’ve been closely following the LLM models and all the developments happening in this space. Can you talk about what’s the current state of Gen AI and LLM models and highlight some of the recent developments and what our listeners should be watching out for?

The future of AI is Open and Accessible [05:32]

Anthony Alford: Sure. So I would say if I wanted to sum up LLMs in one word, it would be “more” or maybe “scale”. We’re clearly in the age of the LLM and foundation models. OpenAI is probably the clear leader, but of course there are big players like you mentioned, Google, also Anthropic has their Claude model. Those are closed, even OpenAI, their flagship model is only available through their API. Now Meta is a very significant dissenter to that trend. In fact, I think they’re trying to shift the trend toward more open source. I think it was recently that Mark Zuckerberg said, “The future of AI is open.” So Meta and Mistral, their models are open weight anyway, you can get the weight. So I mentioned one thing about OpenAI, even if they didn’t make the model weights available, they would publish some of the technical details of their models. For example, we know that GPT-3, the first GPT-3 had 175 billion parameters, but with GPT-4, they didn’t say, but the trend indicates that it’s almost certainly bigger, more parameters. The dataset’s bigger, the compute budget is bigger.

Another trend that I think we are going to continue to see is, so the ‘P’ in GPT stands for pre-trained. So these models, as you said, they’re pre-trained on a huge dataset, basically the internet. But then they’re fine-tuned, so that was one of the key innovations in ChatGPT was it was fine-tuned to follow instructions. So this instruct tuning is now extremely common and I think we’re going to continue to see that as well. Why don’t we segue now into context length? Because that’s another trend. The context length, the amount of data that you can put into the model for it to give you an answer from, that’s increasing. We could talk about that versus these new SSMs like Mamba, which in theory don’t have a context length limitation. I don’t know, Mandy, did you have any thoughts on this?

Mandy Gu: Yes, I mean I think that’s definitely a trend that we’re seeing with longer context windows. And originally when ChatGPT, when LLMs first got popularized, this was a shortcoming that a lot of people brought up. It’s harder to use LLM at scale or as more as you called it when we had restrictions around how much information we can pass through it. Earlier this year, Gemini, the Google Foundation, this GCP foundational models, they introduced the one plus million context window length and this was a game changer because in the past we’ve never had anything close to it. I think this has sparked the trend where other providers are trying to create similarly long or longer context windows. And one of the second order effects that we’re seeing from this is around accessibility. It’s made complex tasks such as information retrieval a lot simpler. Whereas in the past we would need a multi-stage retrieval system like RAG, now it’s easier, although not necessarily better, we could just pass all those contexts into this one plus million context window length. So that’s been an interesting development over the past few months.

Anthony Alford: Namee, did you have anything to add there?

Namee Oberst: Well, we specialize in using small language models. I understand the value of the longer context-length windows, but we’ve actually performed internal studies and there have been various experiments too by popular folks on YouTube where you take even a 2000 token passage and you pass it to a lot of the larger language models and they’re really not so good at finding the lost in the middle problem for doing passages. So if you really want to do targeted information search, it’s still the longer context windows are a little misleading I feel like sometimes to the users because it makes you feel like you can dump in everything and find information with precision and accuracy. But I don’t think that that’s the case at this point. So I think really well-crafted RAG workflow is still the answer.

And then basically for all intents and purposes, even if it’s like a million token context lines or whatever, it could be 10 million. But if you look at the scale of the number of documents that an enterprise has in an enterprise use case, it probably still doesn’t move the needle. But for a consumer use case, yes, definitely a longer context window for a very quick and easy information retrieval is probably very helpful.

Anthony Alford: Okay, so it sounds like maybe there’s a diminishing return, would you say? Or-

Namee Oberst: There is. It really, really depends on the use case. If you have what we deal with, if you think about it like a thousand documents, somebody wants to look through 10,000 documents, then that context window doesn’t really help. And there are a lot of studies just around how an LLM is really not a search engine. It’s really not good at finding the pinpointed information. So I don’t really personally like to recommend longer context LLMs instead of RAG. There are other strategies to look for information. Having said that, where is it very, very helpful in my opinion, the longer context window? If you can pass, for instance, a really long paper that wouldn’t have fit through a narrow context window and ask it to rewrite it or to absorb it and to almost… What I love to use LLMs for is to transform one document into another, take a long Medium article and transform that to a white paper, let’s just say as an example, that would’ve previously been outside the boundaries of a normal context window. I think this is fantastic. Just as an example of a really great use case.

Anthony Alford: So you brought up RAG and retrieval augmented generation. Why don’t we look at that for a little bit? It seems like number one, it lets you avoid the context length problem possibly. It also seems like a very common case, and maybe you could comment on this, the smaller open models. Now people can run those locally or run in their own hardware or their own cloud, use RAG with that and possibly solve problems and they don’t need the larger closed models. Namee, would you say anything about that?

Namee Oberst: Oh yes, no, absolutely. I’m a huge proponent of that and if you look at the types of models that we have available in Hugging Face to start and you look at some of the benchmark testing around their performance, I think it’s spectacular. And then the rate and pace of innovation around these open source models are also spectacular. Having said that, when you look at GPT-4o and the inference speed, the capability, the fact that it can do a million things for a billion people, I think that’s amazing.

But if you’re looking at an enterprise use case where you have very specific workflows and you’re looking to solve a very targeted problem, let’s say, to automate a specific workflow, maybe automate report generation as an example, or to do RAG for rich information retrieval within these predefined 10,000 documents, I think that you can pretty much solve all of these problems using open source models or take an existing smaller language model, fine tune them, invest in that, and then you can basically run it with privacy and security in your enterprise private cloud and then also deploy them on your edge devices increasingly. So I’m really, really bullish on using smaller models for targeted tasks.

Srini Penchikala: Yes, I tried Ollama for a use case a couple of months ago and I definitely see open source solutions like Ollama that you can self-host. You don’t have to send all your data to the cloud and you don’t know where it’s going. So use these self-hosted models with RAG techniques. RAG is mainly for the proprietary information knowledge base. So definitely I think that combination is getting a lot of attention in the corporations. Companies don’t want to send the data outside but still be able to use the power.

Roland Meertens: I do still think that at the moment most corporations are starting with OpenAI as a start, prove their business value and then they can start thinking about, “Oh, how can we really integrate it into our app?” So I think it’s fantastic that you can so easily get started with this and then you can build your own infrastructure to support the app later on.

Srini Penchikala: Yes. For scaling up, right Roland? And you can see what’s the best scale-up model for you, right?

Roland Meertens: Yes.

Srini Penchikala: Yes. Let’s continue the LLM discussions, right? Another area is the multi-model LLMs, the GPT-4o model, the omni model. So where I think it definitely takes the LLMs to the next level. It’s not about text anymore. We can use audio or video or any of the other formats. So anyone have any comments on the GPT-4o or just the multi-model LLMs?

Namee Oberst: In preparation for today’s podcast, I actually did an experiment. I have a subscription to GPT-4o, so I actually just put in a couple of prompts this morning, just out of curiosity because we’re very text-based, so I don’t actually use that feature that much. So I asked it to generate a new logo for LLMware, like for LLMware using the word, and it failed three times, so it botched the word LLMware like every single time. So having said that, I know it’s really incredible and I think they’re making fast advances, but I was trying to see where are they today, and it wasn’t great for me this morning, but I know that of course they’re still better than probably anything else that’s out there having said that, before anybody comes for me.

Roland Meertens: In terms of generating images, I must say I was super intrigued last year with how good Midjourney was and how fast they were improving, especially the small size of the company. That a small company can just beat out the bigger players by having better models is fantastic to see.

Mandy Gu: I think that goes back to the theme, Namee was touching on it, where big companies like OpenAI, they’re very good at generalization and they’re very good at getting especially new people into the space, but as you get deeper, you find that, as we always say in AI machine learning, there’s no free lunch. You explore, you test, you learn, and then you find what works for you, which isn’t always one of these big players. For us, where we benefited the most internally from the multi-modal models is not from image generation, but more so from the OCR capabilities. So one very common use case is just passing in images or files and then being able to converse with the LLM against, in particular, the images. That has been the biggest value proposition for us and it’s really popular with our developers because a lot of the times when we’re helping our end users, where our internal teams debug, they’ll send us a screenshot of the stack trace or a screenshot of the problem and being able to just throw that into the LLM as opposed to deciphering the message has been a really valuable time saver.

So not so much image generation, but from the OCR capabilities, we’ve been able to get a lot of value.

Srini Penchikala: That makes sense. When you take these technologies, OpenAI or anyone else, it’s not a one-size-fits-all when you introduce the company use cases. So everybody has unique use cases.

Daniel Dominguez: I think it’s interesting that I think now we mentioned all the Hugging Face libraries and models that are right now, for example, I’m thinking and looking right now in Hugging Face, there are more than 800,000 models. So definitely it’ll be interesting next year how many new models are going to be out there. Right now the trendings are, as we mentioned, Llama, Google Gemma, Mistral models, Stability models. So definitely in one year, how many new models are going to be out there, not only on text, but also on images, also on video? So definitely there’s something that, I mean it will be interesting to know how many models were last year actually, but now it could be an interesting number to see how many new models are going to be next year on this space.

RAG for Applicable Uses of LLMs at Scale [17:42]

Srini Penchikala: Yes, good point. Daniel. I think just like the application servers, probably like 20 years ago, right? There was one coming out every week. I think a lot of these are going to be consolidated and just a few of them will stand out to last for a longer time. So let’s quickly talk about the RAG, you mentioned about it. So this is where I think the real sweet spot-for companies, to input their own company information, whether on-site or out in the cloud and run through LLM models and get the insights out. Do you see any real-world use cases for RAG that may be of interest to our listeners?

Mandy Gu: I think RAG is one of the most applicable uses of LLMs at scale, and I think they can be shaped, and depending on how you design the retrieval system, it can be shaped into many use cases. So for us, we use a lot of RAG internally and we have a tool, this internal tool that we’ve developed which integrates our self-hosted LLMs against all of our company’s knowledge sources. So we have our documentation in Notion, we have our code in GitHub, and then we also have public artifacts from our help center website and other integrations.

And we essentially just built a retrieval augmented generation system on top of these knowledge bases. And how we’ve designed this is that every night we would have these background jobs which would extract this information from our knowledge sources, put in our vector database, and then through this web app that we’ve exposed to our employees, they’d be able to ask questions or give instructions against all of this information. And internally when we did our benchmarking as well, we’ve also found this to be a lot better from a relevancy and accuracy perspective than just feeding all of this context window into something like the Gemini 1.5 series. But going back to the question primarily as a way of boosting employee productivity, we’ve been able to have a lot of really great use cases from RAG.

Namee Oberst: Well Mandy, that is such a classic textbook, but really well-executed project for your enterprise and that’s such a sweet spot for what the capabilities of the LLMs are. And then you said something that’s really interesting. So you said you’re self-hosting the LLMs, so did you take an open source LLM or do you mind sharing some of the information? You don’t have to go into details, but that is a textbook example of a great application of Gen AI.

Mandy Gu: Yes, of course. So yes, they’re all open source and a lot of the models we did grab from Hugging Face as well. When we first started building our LLM platform, we wanted to provide our employees with this way to securely and accessibly explore this technology. And like a lot of other companies, we started with OpenAI, but then we put a PII redaction system in front of it to protect our sensitive data. And then the feedback we got from our employees, our internal users was that this PII redaction model actually prevented the most effective use cases of generative AI because if you think about people’s day-to-day works, there’s a large degree of not just PII but sensitive information they need to work with. And that was our natural segue of, okay, instead of going from how do we prevent people from sharing sensitive information with external providers, to how do we make it safe for people to share this information with LLMs? So that was our segue from OpenAI to the self-hosted large language models.

Namee Oberst: I’m just floored Mandy. I think that’s exactly what we do at LLMware. Actually, that’s exactly the type of solution that we look to provide with using small language models chained at the back-end for inferencing. You mentioned Ollama a couple of times, but we basically have Llama.cpp integrated into our platform so that you can bring in a quantized model and inference it very, very easily and securely. And then I’m a really strong believer that this amazing workflow that you’ve designed for your enterprise, that’s an amazing workflow. But then we’re also going to see other workflow automation type of use cases that will be miniaturized to be used on laptops. So I really almost see a future very, very soon where everything becomes miniaturized, these LLMs become smaller and almost take the footprint of software and we’re all going to start to be able to deploy this very, very easily and accurately and securely on laptops just as an example, and of course private cloud. So I love it. Mandy, you’re probably very far ahead in the execution and it sounds like you just did the perfect thing. It’s awesome.

Mandy Gu: That’s awesome to hear that you’re finding similar things and that’s amazing the work you’re doing as well. You mentioned Llama.cpp, and I thought that’s super interesting because I don’t know if everyone realizes this, but there’s so much edge that quantized models, smaller models can give and right now when we’re in this phase, when we’re still in the phase of this rapid experimentation, speed is the name of the game. Sure, we may lose a few precision points by going with a more quantized models, but what we get back from latency, what we get back from being able to move faster, it’s incredible. And I think Llama.cpp is a huge success story in its own, how this framework created by an individual, a relative small group of people, how well it is able to be executed at scale.

AI Powered Hardware [23:03]

Namee Oberst: Yes, I love that discussion because like Llama.cpp though, Georgi Gerganov, amazing, amazing in open source, but it’s optimized for actually Mac Metal and works really well also in NVIDIA CUDA. So the work that we’re doing is actually to allow data scientists and machine learning groups in enterprises also on top of everything else to not only be able to deliver the solution on Mac Metal, but across all AI PCs. So using Intel OpenVINO using Microsoft ONNX so that, data scientists like to work on Macs, but then also be able to deploy that very seamlessly and easily on other AI PCs because MacOS is only like 15% of all the operating systems out there, the rest of the 85 are actually non-MacOS. So just imagine the next phase of all this when we can deploy this across multiple operating systems and access to GPU capabilities of all these AI PCs. So it’s going to be really exciting in terms of a trend in the future to come, I think.

Small Language Models and Edge Computing [24:02]

Srini Penchikala: Yes, a lot of good stuff happening there. You both mentioned about small language models and also edge computing. Maybe we can segue into that. I know LLMs, we can talk about them for a long time, but I want to hear your perspective on other topics. So regarding the small language models, Namee, you’ve been looking into SLMs at your company, LLMWare, and also a RAG framework you mentioned specifically designed for SLMs. Can you talk about this space a little bit more? I know this is a recent development. I know Microsoft is doing some research on what they call a Phi-3 model. Can you talk about this? How are they different? What our listeners can do to get up to speed with SLMs?

Namee Oberst: So we’re actually a pioneer in working with small language models. So we’ve been working and focused actually on small language models for well over a year, so almost like too early, but that’s because RAG as a concept, it didn’t just come out last year. You know that probably RAG was being used in data science and machine learning for probably the past, I’d say, three or four years. So basically when we were doing experimentation with RAG and we changed one of our small parameter models very, very early on in our company, we realized that we can make them do very powerful things and we’re getting the performance benefits out of them, but with the data safety and security and exactly for all the reasons that Mandy mentioned and all these things were top of mind for me because my background is I started as a corporate attorney at a big law firm and I was general counsel of a public insurance brokerage company.

So those types of data safety, security concerns were really top of mind. So for those types of highly regulated industries, it’s almost a no-brainer to use small language models or smaller models for so many different reasons, a lot of which Mandy articulated, but there’s also the cost reason too. A cost is huge also. So there’s no reason to really deploy these large behemoth models when you can really shrink the footprint of these and really bring down the cost significantly. What’s really amazing is other people have really started to realize this and on the small language model front they’re getting better and better and better. So the latest iteration by Microsoft Phi-3, we have RAG fine-tuned models and Hugging Face that are really specifically designed to do RAG. When we fine-tuned it using our proprietary datasets across which we’ve fine-tuned 20 other models the exact same way, same datasets so we have a true apples to apples comparison. The Phi-3 or Phi-3 model really broke our test. It was like the best performing model out of every model we’ve ever tested, including 8 billion parameter models.

So our range is from one to 8 billion and really just performed the highest in terms of accuracy just blew my mind. The small language models that they’re really making accessible to everyone in the world for free on Hugging Face are getting better and better and better and at a very rapid clip. So I love that. I think that’s such an exciting world and this is why I made the assertion earlier, with this rate and pace of innovation, they are going to become small, so small that they’re going to take on the footprint of software in a not-so-distant future and we are going to look to deploy a lot of this on our edge devices. Super exciting.

Srini Penchikala: Yes, definitely a lot of the use cases include a combination of offline large language model processing versus online on the device closer to the edge real time analysis. So that’s where small language models can help. Roland or Daniel or Anthony, do you have any comments on the small language models? What are you guys seeing in this space?

Anthony Alford: Yes, exactly. Microsoft’s Phi or Phi, I think first we need to figure out which one that is, but definitely that’s been making headlines. The other thing, we have this on our agenda and Namee, you mentioned that they’re getting better. The question is how do we know how good they are? How good is good enough? There’s a lot of benchmarks. There’s things like MMLU, there’s HELM, there’s the Chatbot Arena, there’s lots of leader boards, there’s a lot of metrics. I don’t want to say people are gaming the metrics, but it’s like p-hacking, right? You publish a paper that says you’ve beat some other baseline on this metric, but that doesn’t always translate into say, business value. So I think that’s a problem that still is to be solved.

Namee Oberst: Yes, no, I fully agree. Anthony, your skepticism around the public..

Anthony Alford: I’m not skeptical.

Namee Oberst: No, I actually, I’m not crazy about them. So we’ve actually developed our own internal benchmarking tests that are asking some common sense business type questions and legal questions, just fact-based questions because our platform is really for the enterprise. So in an enterprise you really care less so about creativity in this instance, but just about how well are these models able to answer fact-based questions and basic logic, basic math, like yes or no questions. So we actually created our own benchmark testing and so the Phi-3 result is off of that because I’m skeptical of some of the published results because, I mean, have you actually looked through some of those questions like on HellaSwag or whatever? I can’t answer some of that. I am not an uneducated person. I don’t know what the right or wrong answer is sometimes either. So we decided to create our own testing and the Phi-3 results that we’ve been talking about are based on what we developed and I’m not sponsored by Microsoft. I wish they would, but I’m not.

Srini Penchikala: Definitely, I want to get into LLM evaluation shortly, but before we go there, any language model thoughts?

Roland Meertens: One thing which I think is cool about Phi is that they trained it using higher quality data and also by generating their own data. For example, for the coding, they asked it to write instructions for a student and then trained on that data. So I really like seeing that if you have higher quality data and you select the data you have better, you also get better models.

Anthony Alford: “Textbooks Are All You Need”, right? Was that the paper?

Roland Meertens: “Textbooks Are All You Need” is indeed name of the paper, but there’s multiple papers coming out also from people working at Hugging Face around “SantaCoder: don’t reach for the stars!”. There’s so much interest into what data do you want to feed into these models, which is still an underrepresented part of machine learning, I feel.

Srini Penchikala: Other than Phi, I guess that’s probably the right way to pronounce. I know Daniel, you mentioned TinyLlama. Do you have any comments on these tiny language models, small language models?