Month: February 2025

MMS • RSS

Software companies are constantly trying to add more and more AI features to their platforms, and it can be hard to keep up with it all. We’ve written this roundup to share updates from 10 notable companies that have recently enhanced their products with AI.

OpenAI announces research preview of GPT-4.5

OpenAI is calling GPT-4.5 its “largest and best model for chat yet.” The newest model was trained using data from smaller models, which improves steerability, understanding of nuance, and natural conversation, according to the company.

In comparison to o1 and o3-mini, this model is a more general-purpose model, and unlike o1, GPT-4.5 is not a reasoning model, so it doesn’t think before it responds.

“We believe reasoning will be a core capability of future models, and that the two approaches to scaling—pre-training and reasoning—will complement each other. As models like GPT‑4.5 become smarter and more knowledgeable through pre-training, they will serve as an even stronger foundation for reasoning and tool-using agents,” the company wrote in a post.

Anthropic releases Claude 3.7 Sonnet and Claude Code

Anthropic made two major announcements this week: the release of Claude 3.7 Sonnet and a research preview for an agentic coding tool called Claude Code.

Claude Sonnet is the company’s medium cost and performance model, sitting in between the smaller Haiku models and the most powerful Opus models.

According to Anthropic, Claude 3.7 Sonnet is the company’s most intelligent model yet and the “first hybrid reasoning model on the market.” It produces near-instant responses and has an extended thinking mode where it can provide the user with step-by-step details of how it came to its answers.

The company also announced a research preview for Claude Code, which is an agentic coding tool. “Claude Code is an active collaborator that can search and read code, edit files, write and run tests, commit and push code to GitHub, and use command line tools—keeping you in the loop at every step,” Anthropic wrote in a blog post.

Gemini 2.0 Flash-Lite now generally available

Google has announced that Gemini 2.0 Flash-Lite is now available in the Gemini App for production use in Google AI Studio or in Vertex AI for enterprise customers.

According to Google, this model features better performance in reasoning, multimodal, math, and factuality benchmarks compared to Gemini 1.5 Flash.

Google to offer free version of Gemini Code Assist

Google also announced that it is releasing a free version of Gemini Code Assist, which is an AI-coding assistant.

Now in public preview, Gemini Code Assist for individuals provides free access to a Gemini 2.0 model fine-tuned for coding within Visual Studio Code and JetBrains IDEs. The model was trained on a variety of real-world coding use cases and supports all programming languages in the public domain.

The assistant offers a chat interface that is aware of a developer’s existing code, provides automatic code completion, and can generate and transform full functions or files.

The free version has a limit of 6,000 code-related requests and 240 chat requests per day, which Google says is roughly 90 times more than other coding assistants on the market today. It also has a 128,000 input token context window, which allows developers to use larger files and ground the assistant with knowledge about their codebases.

Microsoft announces Phi-4-multimodal and Phi-4-mini

Phi-4-multimodal can process speech, vision, and text inputs at the same time, while Phi-4-mini is optimized for text-based tasks.

According to Microsoft, Phi-4-multimodal leverages cross-modal learning techniques to enable it to understand and reason across multiple different modalities at once. It is also optimized for on-device execution and reduced computational overhead.

Phi-4-mini is a 3.8B parameter model designed for speed and efficiency while still outperforming larger models in text-based tasks like reasoning, math, coding, instruction-following, and function-calling.

The new models are available in Azure AI Foundry, HuggingFace, and the NVIDIA API Catalog.

IBM’s next generation Granite models are now available

IBM has released the next generation models in its Granite family: Granite 3.2 8B Instruct, Granite 3.2 2B Instruct, Granite Vision 3.2 2B, Granite-Timeseries-TTM-R2.1, Granite-Embedding-30M-Sparse, and new model sizes for Granite Guardian 3.2.

Granite 3.2 8B Instruct and Granite 3.2 2B Instruct provide chain of thought reasoning that can be toggled on and off.

“The release of Granite 3.2 marks only the beginning of IBM’s explorations into reasoning capabilities for enterprise models. Much of our ongoing research aims to take advantage of the inherently longer, more robust thought process of Granite 3.2 for further model optimization,” IBM wrote in a blog post.

All of the new Granite 3.2 models are available on Hugging Face under the Apache 2.0 license. Additionally, some of the models are accessible through IBM watsonx.ai, Ollama, Replicate, and LM Studio.

Precisely announces updates to Data Integrity Suite

The Data Integrity Suite is a set of services that help companies ensure trust in their data, and the latest release includes several AI capabilities.

The new AI Manager allows users to register LLMs in the Data Integrity Suite to ensure they comply with the company’s requirements, offers the ability to scale AI usage using external LLMs with processing handled by the same infrastructure the LLM is on, and uses generative AI to create catalog asset descriptions.

Other updates in the release include role-based data quality scores, new governance capabilities, a new Snowflake connector, and new metrics for understanding latency.

Warp releases its AI-powered terminal on Windows

Warp allows users to navigate the terminal using natural language, leveraging a user’s saved commands, codebase context, what shell they are in, and their past actions to make recommendations.

It also features an Agent Mode that can be used to debug errors, fix code, and summarize logs, and it has the power to automatically execute commands.

It has already been available on Mac and Linux, and the company said that Windows support has been its most requested feature over the past year. It supports PowerShell, WSL, and Git Bash, and will run on x64 or ARM64 architectures.

MongoDB acquires Voyage AI for its embedding and reranking models

MongoDB has announced it is acquiring Voyage AI, a company that makes embedding and reranking models.

This acquisition will enable MongoDB’s customers to build reliable AI-powered applications using data stored in MongoDB databases, according to the company.

According to MongoDB, it will integrate Voyage AI’s technology in three phases. During the first phase, Voyage AI’s embedding and reranking models will still be available through Voyage AI’s website, AWS Marketplace, and Azure Marketplace.

The second phase will involve integrating Voyage AI’s models into MongoDB Atlas, beginning with an auto-embedding service for Vector Search and then adding native reranking and domain-specific AI capabilities.

During the third phase, MongoDB will advance AI-powered retrieval with multi-modal capabilities, and introduce instruction tuned models.

IBM announces intent to acquire DataStax

The company plans to utilize DataStax AstraDB and DataStax Enterprise’s capabilities to improve watsonx.

“Our combined technology will capture richer, more nuanced representations of knowledge, ultimately leading to more efficient and accurate outcomes. By harnessing DataStax’s expertise in managing large-scale, unstructured data and combining it with watsonx’s innovative data AI solutions, we will provide enterprise ready data for AI with better data performance, search relevancy, and overall operational efficiency,” IBM wrote in a post.

IBM has also said that it will continue collaborating on DataStax’s open source projects Apache Cassandra, Langflow, and OpenSearch.

Read last week’s AI announcements roundup here.

MMS • RSS

For the week ending Feb. 28, CRN takes a look at the companies that brought their ‘A’ game to the channel including IBM, HashiCorp, DataStax, MongoDB, EDB, Intel and Alibaba.

The Week Ending Feb. 28

Topping this week’s Five Companies that Came to Win is IBM for completing its $6.4 billion acquisition of HashiCorp., a leading developer of hybrid IT infrastructure management and provisioning tools, and for striking a deal to buy database development platform company DataStax.

Also making this week’s list is cloud database provider MongoDB for its own strategic acquisition in the AI modeling technology space. EDB is here for a major revamp of its channel program as it looks to partners to help expand sales of its EDB Postgres AI database. Intel makes the list for expanding its Xeon 6 line of processors for mid-range data center systems. And cloud giant Alibaba got noticed for its vow to invest $53 billion in AI infrastructure.

IBM Closes $6.4B HashiCorp Acquisition, Strikes Deal To Buy DataStax

IBM tops this week’s Five Companies That Came to Win list for two strategic acquisitions.

On Thursday, IBM said it completed its $6.4 billion purchase of HashiCorp, a leading provider of tools for managing and provisioning cloud and hybrid IT infrastructure and building, securing and running cloud applications.

IBM will leverage HashiCorp’s software, including its Terraform infrastructure-as-code platform, to boost its offerings in infrastructure and security life-cycle management automation, infrastructure provisioning, multi-cloud management, and consulting and artificial intelligence, among other areas.

The deal’s consummation was delayed while overseas regulators, including in the U.K., scrutinized the acquisition.

Earlier in the week, IBM announced an agreement to acquire DataStax and its cloud database development platform in a move to expand the capabilities of the IBM Watsonx AI portfolio.

IBM said adding DataStax to Watsonx will accelerate the use of generative AI at scale among its customers and help “unlock value” from huge volumes of unstructured data.

“The strategic acquisition of DataStax brings cutting-edge capabilities in managing unstructured and semi-structured data to Watsonx, building on open-source Cassandra investments for enterprise applications and enabling clients to modernize and develop next-generation AI applications,” said Ritika Gunnar, IBM general manager, data and AI, in a blog post.

In addition to its flagship database offering, DataStax’s product portfolio includes Astra Streaming for building real-time data pipelines, the DataStax AI Platform for building and deploying AI applications, and an enterprise AI platform that incorporates Nvidia AI technology. Another key attraction for IBM is DataStax’s Langflow open-source, low-code tools for developing AI applications that use retrieval augmented generation (RAG).

MongoDB Looks To Improve AI Application Accuracy With Voyage AI Acquisition

Sticking with the topic of savvy acquisitions, MongoDB makes this week’s list for acquiring Voyage AI, a developer of “embedding and rerank” AI models that improve the accuracy and efficiency of RAG (retrieval-augmented generation) data search and retrieval operations.

MongoDB plans to add the Voyage AI technology to its platform to help businesses and organizations build more trustworthy AI and Generative AI applications that deliver more accurate results with fewer hallucinations.

“AI has the promise to transform every business, but adoption is held back by the risk of hallucinations,” said Dev Ittycheria, MongoDB CEO, in a statement announcing the acquisition.

MongoDB’s developer data platform, based on its Atlas cloud database, has become a popular system for building AI-powered applications. Last May the company launched its MongoDB AI Applications Program (MAAP), which provides a complete technology stack, services and other resources to help partners and customers develop and deploy at scale applications with advanced generative AI capabilities.

“By bringing the power of advanced AI-powered search and retrieval to our highly flexible database, the combination of MongoDB and Voyage AI enables enterprises to easily build trustworthy AI-powered applications that drive meaningful business impact. With this acquisition, MongoDB is redefining what’s required of the database for the AI era,” Ittycheria said.

EDB Boosts Channel Program Offerings As It Expands Data Platform Sales For AI, Analytical Tasks

EDB wins applause for expanding its channel program, including increasing investments to raise partners’ expertise and go-to-market capabilities, as the company looks to boost adoption of its Postgres-based database platform for more data analytics and AI applications.

The company also launched a new partner portal and “industry success hub” repository of vertical industry customer case studies that partners can draw on.

The upgraded EDB Partner Program is the company’s latest move as it looks to grow beyond its roots of providing a transaction-oriented, Oracle-compatible database to developing a comprehensive data and AI platform.

EDB is a long-time player in the database arena with its software based on the open-source PostgreSQL database. But EDB has been shooting higher for the last 18 months following the appointment of former Wind River CEO Kevin Dallas as the database company’s new CEO in August 2023.

The expanded channel program offerings tie in with last year’s launch of EDB Postgres AI, a major update of the company’s flagship database that can handle transactional, analytical and AI workloads.

Intel Debuts Midrange Xeon 6 CPUs To Fight AMD In Enterprise Data Centers

Intel showed off its technology prowess this week when it launched its new midrange Xeon 6 processors to help customers consolidate data centers for a broad range of enterprise applications.

Intel said the new processors provide superior performance and lower total cost of ownership than AMD’s latest server CPUs.

Using the same performance cores of the high-end Xeon 6900P Series, the new Xeon 6700P series scales to 86 cores on 350 watts while the new Xeon 6500P series reaches up to 32 cores on 225 watts, expanding the Xeon 6 family for a broader swath of the data center market. Intel said its Xeon 6 processors “have already seen broad adoption across the data center ecosystem, with more than 500 designs available now or in progress” from major vendors such as Dell Technologies, Nvidia, Hewlett Packard Enterprise, Lenovo, Microsoft, VMware, Supermicro, Oracle, Red Hat and Nutanix, “among many others.

Intel also debuted the Xeon 6 system-on-chips for network and edge applications and two new Ethernet product lines.

Alibaba Plans To Invest $53B In AI Infrastructure

Cloud computing giant Alibaba Group got everyone’s attention this week when it unveiled plans to invest $53 billion in its AI infrastructure and data centers over the next three years.

Alibaba looks to become a global powerhouse in providing AI infrastructure and AI models with CEO Eddie Wu declaring that AI is now the company’s primary focus.

Alibaba builds its own open-source AI large language models (LLMs), dubbed Qwen, while owning billions worth of cloud infrastructure inside its data centers. The Chinese company recently introduced the Alibaba Cloud GenAI Empowerment Program, a dedicated support program for global developers and startups leveraging its Qwen models to build generative AI applications.

Alibaba said its new $53 billion AI investment exceeds the company’s spending in AI and cloud computing over the past decade.

MMS • Robert Krzaczynski

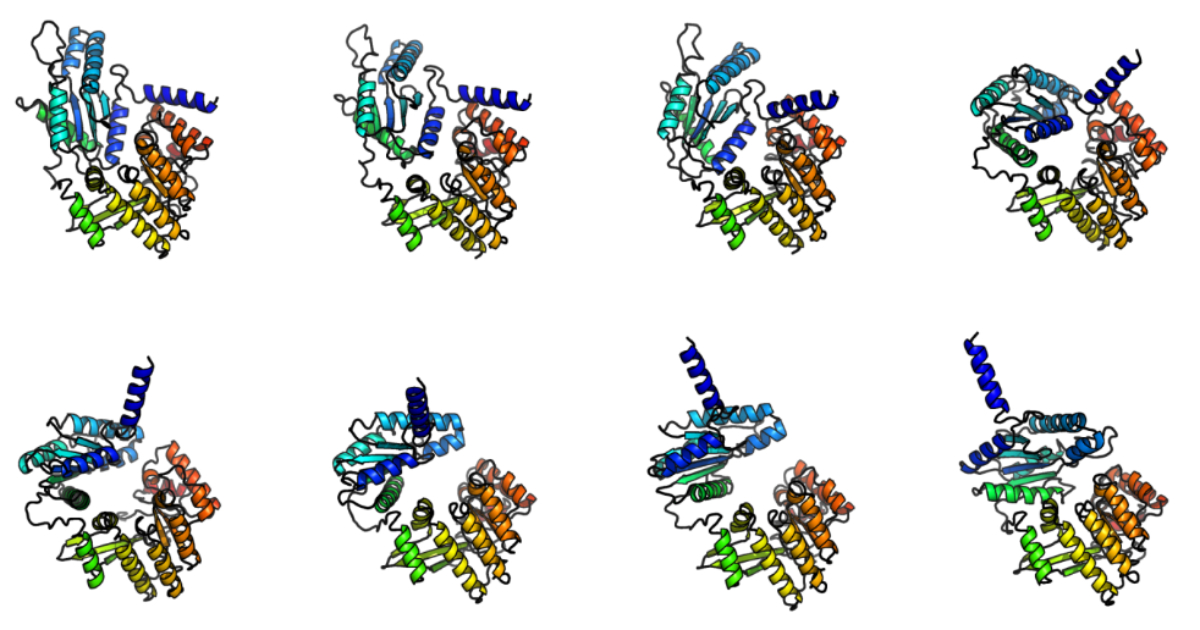

Microsoft Research has introduced BioEmu-1, a deep-learning model designed to predict the range of structural conformations that proteins can adopt. Unlike traditional methods that provide a single static structure, BioEmu-1 generates structural ensembles, offering a broader view of protein dynamics. This method may be especially beneficial for understanding protein functions and interactions, which are crucial in drug development and various fields of molecular biology.

One of the main challenges in studying protein flexibility is the computational cost of molecular dynamics (MD) simulations, which model protein motion over time. These simulations often require extensive processing power and can take years to complete for complex proteins. BioEmu-1 offers an alternative by generating thousands of protein structures per hour on a single GPU, making it 10,000 to 100,000 times more computationally efficient than conventional MD simulations.

BioEmu-1 was trained on three types of datasets: AlphaFold Database (AFDB) structures, an extensive MD simulation dataset, and an experimental protein folding stability dataset. This method allows the model to generalize to new protein sequences and predict various conformations. It has successfully identified the structures of LapD, a regulatory protein in Vibrio cholerae bacteria, including both known and unobserved intermediate conformations.

BioEmu-1 demonstrates strong performance in modeling protein conformational changes and stability predictions. The model achieves 85% coverage for domain motion and 72–74% coverage for local unfolding events, indicating its ability to capture structural flexibility. The BioEmu-Benchmarks repository provides benchmark code, allowing researchers to evaluate and reproduce the model’s performance on various protein structure prediction tasks.

Experts in the field have noted the significance of this advancement. For example, Lakshmi Prasad Y. commented:

The open-sourcing of BioEmu-1 by Microsoft Research marks a significant leap in overcoming the scalability and computational challenges of traditional molecular dynamics (MD) simulations. By integrating AlphaFold, MD trajectories, and experimental stability metrics, BioEmu-1 enhances the accuracy and efficiency of protein conformational predictions. The diffusion-based generative approach allows for high-speed exploration of free-energy landscapes, uncovering crucial intermediate states and transient binding pockets.

Moreover, Nathan Baker, a senior director of partnerships for Chemistry and Materials at Microsoft, reflected on the broader implications:

I ran my first MD simulation over 25 years ago, and my younger self could not have imagined having a powerful method like this to explore protein conformational space. It makes me want to go back and revisit some of those molecules!

BioEmu-1 is now open-source and available through Azure AI Foundry Labs, providing researchers with a more efficient method for studying protein dynamics. By predicting protein stability and structural variations, it can contribute to advancements in drug discovery, protein engineering, and related fields.

More information about the model and results can be found in the official paper.

MMS • RSS

MMS • RSS

Solutions Review Executive Editor Tim King curated this list of notable data management news for the week of February 28, 2025.

Keeping tabs on all the most relevant big data and data management news can be a time-consuming task. As a result, our editorial team aims to provide a summary of the top headlines from the last week in this space. Solutions Review editors will curate vendor product news, mergers and acquisitions, venture capital funding, talent acquisition, and other noteworthy big data and data management news items.

For early access to all the expert insights published on Solutions Review, join Insight Jam, a community dedicated to enabling the human conversation on AI.

Top Data Management News for the Week Ending February 28, 2025

Acceldata Announces Agentic Data Management

It augments and replaces traditional data quality, and governance tools, data catalogs, with a unified AI-driven platform, transforming how enterprises manage and optimize data. By leveraging AI agents, contextual memory, and automated actions, Agentic DM ensures data is always reliable, governed, and AI-ready—without human intervention.

Rohit Choudhary, Founder and CEO at Acceldata.io, said: “Acceldata Agentic Data Management enables true autonomous operations, which is intent-based and context-aware.”

Ataccama Releases 2025 Data Trust Report

The Data Trust Report suggests that organizations must reframe their thinking to see compliance as the foundation for long-term business value and trust. The report found that 42 percent of organizations prioritize regulatory compliance, but only 26 percent focus on it within their data teams. This highlights blind spots with real-world consequences like regulatory fines and data breaches that can erode customer trust, financial stability, and competitiveness.

BigID Announces New BigID Next Offering

With a modular, AI-assisted architecture, BigID Next empowers organizations to take control of their most valuable asset—data—while adapting to the fast-evolving risk and compliance landscape in the age of AI.

Couchbase Integrates Capella AI Model Services with NVIDIA NIM Microservices

Capella AI Model Services, powered by NVIDIA AI Enterprise, minimize latency by bringing AI closer to the data, combining GPU-accelerated performance and enterprise-grade security to empower organizations to seamlessly operate their AI workloads. The collaboration enhances Capella’s agentic AI and retrieval-augmented generation (RAG) capabilities, allowing customers to efficiently power high-throughput AI-powered applications while maintaining model flexibility.

Cribl Unveils New Crible Lakehouse Offering

With Cribl Lakehouse, companies can eliminate the complexity of schema management and manual data transformation while simultaneously delivering ultra-elastic scalability, federated query capabilities, and a fully unified management experience across diverse datasets and geographies.

Google Adds Inline Filtering to AlloyDB Vector Search

Inline filtering helps ensure that these types of searches are fast, accurate, and efficient — automatically combining the best of vector indexes and traditional indexes on metadata columns to achieve better query performance.

GridGain Contributes to Latest Release of Apache Ignite 3.0

Apache Ignite has long been the trusted choice for developers at top companies, enabling them to achieve unmatched speed and scale through the power of distributed in-memory computing. These contributions reflect GridGain’s continued commitment to the open-source distributed database, caching, and computing platform.

Paige Roberts, Head of Technical Evangelism at GridGain, said: “Completely rearchitecting the core of Apache Ignite 3 to improve stability and reliability provides real-time response speed on a foundation that just works. GridGain 9.1 is built on that foundation, plus things from 9.0 like on-demand scaling and strong data consistency that were needed to better support AI workflows. Any data processing and analytics platform that isn’t aimed at supporting AI these days is already obsolete.”

Hydrolix Joins AWS ISV Accelerate Program

The AWS ISV Accelerate Program provides Hydrolix with co-sell support and benefits to meet customer needs through collaboration with AWS field sellers globally. Co-selling provides better customer outcomes and assures mutual commitment from AWS and its partners.

IBM Set to Acquire DataStax

IBM says the move will help enterprises “harness crucial enterprise data to maximize the value of generative AI at scale.”

William McKnight, President at McKnight Consulting Group, said: “DataStax has a very strong vector database. In our benchmark, we found Astra DB ingestion and indexing speeds up to 6x faster than Pinecone, with more consistent performance. It also showed faster search response times both during and after indexing. Furthermore, Astra DB exhibited better recall (accuracy) during active indexing and maintained low variance. Finally, DataStax Astra DB Serverless (Vector) had a significantly lower Total Cost of Ownership (TCO).

IBM was supporting Milvus, but now they have their own vector capabilities.”

Robert Eve, Advisor at Robert Eve Consulting, said: “This seems like a win-win-win for DataStax customers, IBM customers, and both firms’ investors.

As we have seen many times in the database industry, specialized capabilities frequently find homes within broader offerings as market acceptance grows, installed bases overlap, and requirements for shared (common) development tools and operational functionality increase.

This is yet another example.”

MetaRouter Launches New Schema Enforcement Layer

Designed to address persistent challenges in data quality, consistency, and governance, this new tool ensures that data flowing through MetaRouter pipelines adheres to defined schema standards. MetaRouter’s Schema Enforcement tool tackles these challenges head-on by empowering data teams to define, assign, and enforce schemas, preventing bad data from ever reaching downstream systems.

MongoDB is Set to Acquire Voyage AI

Integrating Voyage AI’s technology with MongoDB will enable organizations to easily build trustworthy, AI-powered applications by offering highly accurate and relevant information retrieval deeply integrated with operational data.

Observo AI Launches New AI Data Engineer Assistant Called Orion

Orion is an on-demand AI data engineer, enabling teams to build, optimize, and manage data pipelines through natural language interactions, dramatically reducing the complexity and expertise traditionally required for these critical operations.

Precisely Announces New AI-Powered Features

These advancements address key enterprise data challenges such as improving data accessibility, enabling business-friendly governance, and automating manual processes. Together, they help organizations boost efficiency, maximize the ROI of their data investments, and make confident, data-driven decisions.

Profisee MDM is Now Available in Public Preview for Microsoft Fabric

This milestone positions Profisee as the sole MDM provider offering an integrated solution within Microsoft Fabric, empowering users to define, manage and leverage master data products directly within the platform.

Redpanda Releases New Snowflake Connector Based on Snowpipe Streaming

The Redpanda Snowflake Connector not only optimizes the parsing, validation code, and assembly for building the files that are sent to Snowflake, but also makes it easy to split a stream into multiple tables and do custom transformations on data in flight.

Reltio Launches New Lightspeed Data Delivery Network

Reltio Data Cloud helps enterprises solve their most challenging data problems—at an immense scale—and enables AI-driven innovation across their organizations. Reltio Data Cloud today processes 61.1B total API calls per year and has 9.1B consolidated profiles under management, 100B relationships under management, and users across 140+ countries.

Saksoft Partners with Cleo on Real-Time Logistics Data Integration

The partnership aims to empower Saksoft customers—particularly in the Logistics industry—with CIC, enabling them to harness EDI and API automation alongside real-time data visibility. This will equip them with the agility, control, and actionable insights necessary to drive their business forward with confidence.

Snowflake Launches Silicon Valley AI Hub

Located in the heart of Silicon Valley at Snowflake’s new Menlo Park campus, the nearly 30,000 square foot space plans to open in Summer 2025, and will feature a range of spaces designed for people across the AI ecosystem.

Expert Insights Section

Watch this space each week as our editors will share upcoming events, new thought leadership, and the best resources from Insight Jam, Solutions Review’s enterprise tech community where the human conversation around AI is happening. The goal? To help you gain a forward-thinking analysis and remain on-trend through expert advice, best practices, predictions, and vendor-neutral software evaluation tools.

Watch this space each week as our editors will share upcoming events, new thought leadership, and the best resources from Insight Jam, Solutions Review’s enterprise tech community where the human conversation around AI is happening. The goal? To help you gain a forward-thinking analysis and remain on-trend through expert advice, best practices, predictions, and vendor-neutral software evaluation tools.

InsightJam.com’s Mini Jam LIVE! Set for March 6, 2025

From AI Architecture to the Application Layer explores the critical transition of AI from its underlying architectures to its practical applications in order to illustrate the transformations occurring now in the Enterprise Tech workspace.

This one-day Virtual Event brings together leading experts across four thought leader Keynotes and four expert Panels to reveal the nuances of AI Development, from the design of AI to its integration of radical solutions in virtually every tech application.

NEW by Solutions Review Expert @ Insight Jam Thought Leader Dr. Joe Perez – Bullet Train Breakthroughs: Lessons in Data, Design & Discovery

Ford revolutionized automobile manufacturing by observing meatpacking lines and how they moved animal carcasses along their conveyor belts. Adapting that mechanization to his factory, Ford was able to streamline production from 12 hours for each car to a mere 90 minutes, a feat that would change the character of manufacturing forever.

NEW by Solutions Review Expert @ Insight Jam Thought Leader Nicola Askham – Do You Need a Data Strategy and a Data Governance Strategy?

With the increasing importance of data, many organizations are asking whether they need both a data strategy and a Data Governance strategy. I’ve been doing Data Governance for over twenty years now, and I’ll be honest – in the first fifteen years, no one even talked about a data strategy or a Data Governance strategy. But before we dive into the answer, let’s start by getting the basics straight.

NEW by Solutions Review Expert @ Insight Jam Thought Leader Dr. Irina Steenbeek – Key Takeaways from the Free Masterclass: Adapting Data Governance for Modern Data Architecture

I believe that data governance and data management follow a yin-yang duality. Data governance defines why an organization must formalize a data management framework and its feasible scope. Data management, in turn, designs and establishes the framework, while data governance controls its implementation.

What to Expect at Solutions Review‘s Spotlight with Object First & Enterprise Strategy Group on March 13

According to ESG research 81 percent of businesses agree immutable backup storage is the best defense against ransomware. However, not all backup storage solutions can deliver full immutability and enable the quick and complete recovery that is essential for ransomware resilience. With the next Solutions Spotlight event, the team at Solutions Review has partnered with leading Veeam storage provider Object First.

On-Demand: Solutions Review‘s Spotlight with Concentric AI & Enterprise Strategy Group

With the next Solutions Spotlight event, the team at Solutions Review has partnered with leading data security governance platform provider Concentric AI to provide viewers with a unique webinar called Modern Data Security Governance in the Generative AI Era.

Insight Jam Panel Highlights: Best Practices for Ensuring Data Quality & Integrity in the AI Pipeline

They address critical challenges with data provenance, bias detection, and records management while offering insights on legal compliance considerations. Essential viewing for security professionals implementing AI systems who need to balance innovation with data quality requirements.

For consideration in future data management news roundups, send your announcements to the editor: tking@solutionsreview.com.

MMS • RSS

Generali Investments Towarzystwo Funduszy Inwestycyjnych grew its position in MongoDB, Inc. (NASDAQ:MDB – Free Report) by 32.3% in the fourth quarter, according to its most recent 13F filing with the Securities and Exchange Commission. The fund owned 12,300 shares of the company’s stock after acquiring an additional 3,000 shares during the quarter. MongoDB makes up approximately 1.7% of Generali Investments Towarzystwo Funduszy Inwestycyjnych’s holdings, making the stock its 17th largest position. Generali Investments Towarzystwo Funduszy Inwestycyjnych’s holdings in MongoDB were worth $2,864,000 at the end of the most recent quarter.

A number of other institutional investors have also made changes to their positions in the company. Jennison Associates LLC increased its holdings in shares of MongoDB by 23.6% in the 3rd quarter. Jennison Associates LLC now owns 3,102,024 shares of the company’s stock valued at $838,632,000 after acquiring an additional 592,038 shares during the last quarter. Geode Capital Management LLC increased its holdings in shares of MongoDB by 2.9% in the 3rd quarter. Geode Capital Management LLC now owns 1,230,036 shares of the company’s stock valued at $331,776,000 after acquiring an additional 34,814 shares during the last quarter. Westfield Capital Management Co. LP increased its holdings in shares of MongoDB by 1.5% in the 3rd quarter. Westfield Capital Management Co. LP now owns 496,248 shares of the company’s stock valued at $134,161,000 after acquiring an additional 7,526 shares during the last quarter. Holocene Advisors LP grew its stake in MongoDB by 22.6% during the 3rd quarter. Holocene Advisors LP now owns 362,603 shares of the company’s stock worth $98,030,000 after buying an additional 66,730 shares during the last quarter. Finally, Assenagon Asset Management S.A. grew its stake in MongoDB by 11,057.0% during the 4th quarter. Assenagon Asset Management S.A. now owns 296,889 shares of the company’s stock worth $69,119,000 after buying an additional 294,228 shares during the last quarter. 89.29% of the stock is currently owned by institutional investors.

Insider Transactions at MongoDB

In other MongoDB news, insider Cedric Pech sold 287 shares of MongoDB stock in a transaction on Thursday, January 2nd. The shares were sold at an average price of $234.09, for a total transaction of $67,183.83. Following the sale, the insider now owns 24,390 shares in the company, valued at $5,709,455.10. The trade was a 1.16 % decrease in their ownership of the stock. The transaction was disclosed in a filing with the Securities & Exchange Commission, which is available at this link. Also, CAO Thomas Bull sold 169 shares of MongoDB stock in a transaction on Thursday, January 2nd. The stock was sold at an average price of $234.09, for a total transaction of $39,561.21. Following the sale, the chief accounting officer now owns 14,899 shares in the company, valued at $3,487,706.91. This represents a 1.12 % decrease in their position. The disclosure for this sale can be found here. Over the last quarter, insiders have sold 41,979 shares of company stock worth $11,265,417. Insiders own 3.60% of the company’s stock.

MongoDB Trading Down 2.2 %

Shares of MongoDB stock opened at $262.41 on Friday. The company’s fifty day simple moving average is $261.95 and its 200-day simple moving average is $274.67. The company has a market cap of $19.54 billion, a price-to-earnings ratio of -95.77 and a beta of 1.28. MongoDB, Inc. has a 1 year low of $212.74 and a 1 year high of $449.12.

MongoDB (NASDAQ:MDB – Get Free Report) last announced its quarterly earnings data on Monday, December 9th. The company reported $1.16 earnings per share (EPS) for the quarter, topping the consensus estimate of $0.68 by $0.48. The business had revenue of $529.40 million for the quarter, compared to the consensus estimate of $497.39 million. MongoDB had a negative net margin of 10.46% and a negative return on equity of 12.22%. MongoDB’s quarterly revenue was up 22.3% on a year-over-year basis. During the same quarter last year, the company posted $0.96 earnings per share. As a group, research analysts forecast that MongoDB, Inc. will post -1.78 earnings per share for the current fiscal year.

Analysts Set New Price Targets

A number of research analysts recently commented on MDB shares. Robert W. Baird upped their price objective on MongoDB from $380.00 to $390.00 and gave the stock an “outperform” rating in a research note on Tuesday, December 10th. Canaccord Genuity Group upped their price objective on MongoDB from $325.00 to $385.00 and gave the stock a “buy” rating in a research note on Wednesday, December 11th. Rosenblatt Securities assumed coverage on MongoDB in a research report on Tuesday, December 17th. They set a “buy” rating and a $350.00 price target for the company. JMP Securities reiterated a “market outperform” rating and set a $380.00 price target on shares of MongoDB in a research report on Wednesday, December 11th. Finally, DA Davidson boosted their price target on MongoDB from $340.00 to $405.00 and gave the stock a “buy” rating in a research report on Tuesday, December 10th. Two analysts have rated the stock with a sell rating, four have assigned a hold rating, twenty-three have assigned a buy rating and two have issued a strong buy rating to the stock. Based on data from MarketBeat.com, the stock currently has a consensus rating of “Moderate Buy” and an average price target of $361.00.

View Our Latest Research Report on MDB

About MongoDB

MongoDB, Inc, together with its subsidiaries, provides general purpose database platform worldwide. The company provides MongoDB Atlas, a hosted multi-cloud database-as-a-service solution; MongoDB Enterprise Advanced, a commercial database server for enterprise customers to run in the cloud, on-premises, or in a hybrid environment; and Community Server, a free-to-download version of its database, which includes the functionality that developers need to get started with MongoDB.

Recommended Stories

Want to see what other hedge funds are holding MDB? Visit HoldingsChannel.com to get the latest 13F filings and insider trades for MongoDB, Inc. (NASDAQ:MDB – Free Report).

This instant news alert was generated by narrative science technology and financial data from MarketBeat in order to provide readers with the fastest and most accurate reporting. This story was reviewed by MarketBeat’s editorial team prior to publication. Please send any questions or comments about this story to contact@marketbeat.com.

Before you consider MongoDB, you’ll want to hear this.

MarketBeat keeps track of Wall Street’s top-rated and best performing research analysts and the stocks they recommend to their clients on a daily basis. MarketBeat has identified the five stocks that top analysts are quietly whispering to their clients to buy now before the broader market catches on… and MongoDB wasn’t on the list.

While MongoDB currently has a Moderate Buy rating among analysts, top-rated analysts believe these five stocks are better buys.

Wondering what the next stocks will be that hit it big, with solid fundamentals? Enter your email address to see which stocks MarketBeat analysts could become the next blockbuster growth stocks.

Google Cloud Introduces Quantum-Safe Digital Signatures in Cloud KMS to Future-Proof Data Security

MMS • Steef-Jan Wiggers

Google recently unveiled quantum-safe digital signatures in its Cloud Key Management Service (Cloud KMS), aligning with the National Institute of Standards and Technology (NIST) post-quantum cryptography (PQC) standards. This update, now available in preview, addresses the growing concern over the potential risks posed by future quantum computers, which could crack traditional encryption methods.

Quantum computing, with its ability to solve problems exponentially faster than classical computers, presents a serious challenge to current cryptographic systems. Algorithms like Rivest–Shamir–Adleman (RSA) and elliptic curve cryptography (ECC), which are fundamental to modern encryption, could be vulnerable to quantum attacks.

One of the primary threats is the “Harvest Now, Decrypt Later” (HNDL) model, where attackers store encrypted data today with plans to decrypt it once quantum computers become viable. While large-scale quantum computers capable of breaking these cryptographic methods are not yet available, experts agree that preparing for this eventuality is crucial.

To safeguard against these quantum threats, Google integrates two NIST-approved PQC algorithms into Cloud KMS. The first is the ML-DSA-65 (FIPS 204), a lattice-based digital signature algorithm; the second is SLH-DSA-SHA2-128S (FIPS 205), a stateless, hash-based signature algorithm. These algorithms provide a quantum-resistant means of signing and verifying data, ensuring that organizations can continue to rely on secure encryption even in a future with quantum-capable adversaries.

Google’s decision to integrate these algorithms into Cloud KMS allows enterprises to test and incorporate quantum-resistant cryptography into their security workflows. The cryptographic implementations are open-source via Google’s BoringCrypto and Tink libraries, ensuring transparency and allowing for independent security audits. This approach is designed to help organizations gradually transition to post-quantum encryption without overhauling their entire security infrastructure.

The authors of a Google Cloud blog post write:

While that future may be years away, those deploying long-lived roots-of-trust or signing firmware for devices managing critical infrastructure should consider mitigation options against this threat vector now. The sooner we can secure these signatures, the more resilient the digital world’s foundation of trust becomes.

Google’s introduction of quantum-safe digital signatures comes at a time when the need for post-quantum security is becoming increasingly urgent. The rapid evolution of quantum computing, highlighted by Microsoft’s recent breakthrough with its Majorana 1 chip, raises concerns over the imminent risks of quantum computers. While these machines are not yet powerful enough to crack current encryption schemes, experts agree that the timeline to quantum readiness is narrowing, with NIST aiming for compliance by 2030.Top of Form

Phil Venables, a chief information security officer at Google Cloud, tweeted on X:

Cryptanalytically Relevant Quantum Computers (CRQCs) are coming—perhaps sooner than we think, but we can conservatively (and usefully) assume in the 2032 – 2040 time frame. Migrating to post-quantum cryptography will be more complex than many organizations expect, so starting now is vital. Adopting crypto-agility practices will mitigate the risk of further wide-scale changes as PQC standards inevitably evolve.

MMS • Shruti Bhat

Transcript

Bhat: What we’re going to talk about is agentic AI. I have a lot of detail to talk about, but first I want to tell you the backstory. I personally was at Oracle like eight years ago. I landed there through another acquisition. After I finished integrating the company, I said, let me go explore some new opportunities. I ended up leading AI strategy across all of Oracle’s products. Eight years ago, we didn’t have a lot of answers, and almost every CIO, CDO, CTO I spoke with, the minute we started talking about AI, they’d say, “Stop. I only have one question for you. I’ve been collecting all the semi-structured data, unstructured data, putting it in my data lake, like you guys asked me to, but that’s where data goes to die. How do I go from data to intelligent apps?”

Frankly, we didn’t have a great answer back then. That’s what took me on this quest. I was looking for startups we could acquire. I was looking for who was innovating. I ended up joining Rockset as a founding team member. I was the chief product officer over the last seven years there. We built this distributed search and AI database, which got acquired by OpenAI. Now, eight years later, I’m having a lot of very similar conversations where enterprises are asking, what we have today with AI, LLMs, are very interesting, ChatGPT, super interesting. Doesn’t write great intros, but it’s pretty good for other things. The question still remains, how do I go from my enterprise data to intelligent agents, no longer apps. That’s what we’re going to talk about.

GenAI Adoption

Before I get into it, though, let’s look at some data. Somebody asked me the other day if I believe in AI. I believe in God and I believe in data. Let’s see what data tells us. If you chart out the adoption of some of the most transformative technologies of our times, PCs, internet, and look at it two years out from when it was first commercialized. Like, what was the first commercially available version? For PCs, it goes back to 1981, when the IBM PC was commercialized. Then you plot it out two years later and see what was the rate of adoption. With GenAI, it’s almost twice that of what we saw with PCs and internet. That’s how fast this thing is taking over. That’s both incredibly exciting and creating a lot of disruption for a lot of people. When people now ask me if I believe in AI, I’m basically saying, here’s what the data says. I don’t want to be on the wrong side of history for this one.

GenAI vs. Agentic AI

The previous chart that I showed you, that was basically GenAI. We’re now talking about agentic AI. What’s the difference? The simplest way to think about or just internalize the main concept here is, with GenAI, we talk about zero-shot, and as you start getting better prompt engineering, you’d go into multi-shot. Really with agentic AI, we’re talking about multi-step. Think about how you and I work. If you’re writing code, you don’t just sit down and say, let me go write this big piece of code, and the first time you do it, end-to-end, assume that it’s going to be perfect. No, you take a series of steps. You say, let me go gather some more data around this. Let me go do some research. Let me talk to some other senior engineers. Let me iterate on this thing, test, debug, get some code reviews.

These are all the steps that you’re following. How can you possibly expect AI to just go in zero step and get it perfectly right? It doesn’t. The big advancement we’re seeing now is with multi-step and agentic AI. That brings us to the most simple definition of agent that there is. It can basically act. It’s not just generating text or not just generating images for you. It’s actually taking actions, so it has some autonomy. It can collaborate with other agents. This is very important. As we get into the talk here, you’ll see why the success depends on multi-agent collaboration. Of course, it has to be able to learn. Imagine an agent that’s helping you with your flight bookings. You might ask it to go rebook your tickets, and it might collaborate with a couple more agents to go look up a bunch of flights. It might collaborate with another agent to maybe accept your credit card payment.

Eventually, after it’s done the booking, it realizes it’s overbooked that flight. It has to learn from that mistake and do better next time. This is the most simple definition. We’re all engineers. We like precise definitions. The interesting thing that happened in the industry is this sparked a whole lot of debate on what exactly is the correct definition of an agent. Hien was literally debating against himself in the keynote, and that’s what we like to do. We like to debate. A very simple solution came out, which is, let’s just agree it’s agentic. We don’t really know what exactly an agent looks like, but now we can start looking at what agentic AI looks like.

I really like this definition. We’re not great at naming, but the definition makes sense, because the reality is that this lies on a spectrum. Agentic AI really lies on a very wide spectrum. That’s what your spectrum looks like. We talked a little bit about multi-step. In prompt engineering, what you’re doing is you’re just going zero-shot to multi-shot. You’re really trying to understand, how can I give a series of prompts to my LLM to get better outputs? Now with agentic AI, you’re very quickly saying, maybe I don’t want the same LLM to be called every single time. You might call the same LLM, or you might call a different LLM as you start realizing that different models do better on different dimensions.

Very quickly you realize that if, again, you think about a human, the most fundamental thing that a human does well is reasoning and memory. It’s not enough to have reasoning, you also need memory. You need to remember all the learnings that you’ve had from your past. That’s the long-term memory. Then you need memory between your steps, and that’s just the short-term working memory. The long-term memory typically comes from vector databases. In fact, Rockset, the database that I worked on over the last seven years, was an indexing database. What we did was we indexed all the enterprise data for search and retrieval.

Then, of course, added vector indexing so that you could now do hybrid search. Vector databases are great for long-term memory. There’s still a lot of new stuff emerging on the short-term working memory. That’s just one part of the equation. As you start adding memory, you want learning, and this is what reflection does. Reflection is basically where you say, I’m going to do the feedback loop iteratively and keep going and keep learning and keep getting better, until you get to a point where you’re not getting any more feedback. It can continue. Learning is endless. Every time something changes externally, the learning loop kicks in again, and you keep having more reflection. This is like a self-refined loop, and this is super important for agentic AI.

Planning and tool use is another big area that’s emerging. In fact, when you see LLMs doing planning, it’s quite mind boggling. As you get more senior in your role, you get really good at looking at a big problem and breaking it down into specific things. You think about, how do you chunk it up into smaller tasks? You think about, who do you assign it to? In what sequence does it need to be done? Again, an LLM is really good at doing this kind of planning and getting those steps executed in the right way. The tool use portion is just as important, because as it’s planning, it’s planning what tools to use as well. Those tools are supplied by you, so the quality of your output depends on what tools you’re supplying. Here I have some examples, like search, email, calendaring. That’s not all. You can think about the majority of the tools actually being code execution. You decide what it needs to compute, and you can give it some code execution, and those can be the functions that it calls and uses as tools.

Eventually, you can imagine agents using all these tools to do their work. Again, you control the quality of that output by giving it excellent tools. As you start thinking about this, you get into multi-agent collaboration really fast. When you think about the discussion around agents so far, the reason for this is the multi-agent collaboration gives you much better output. This actually is some really interesting data that was shared by Andrew Ng of DeepLearning.AI. HumanEval is a coding benchmark, so it basically has a series of simple programming prompts. Think of it like something you’d see on a basic software engineering interview, and you can use that to benchmark how well your GPT is performing.

In this case, 3.5 outperforms GPT-4 when you use agentic workflows. That’s really powerful. Most of you know that between 3.5 and 4, there was a drastic improvement. You can almost see that on the screen there. If you look at just zero-shot between 3.5 and 4, the improvement was amazing, but now 3.5 is already able to outperform GPT-4 if you layer on or wrap agentic workflows on top.

As you look at this, the next layer of complexity is, let’s get into multi-agent workflows, because there is absolutely no way an agent can do an entire role. The agent can only do specific tasks. What you really want to do is, you scope the task, you decide how small the task can be, and then you have one agent just focusing on one specific task. The best mental model I can give you is, think about microservices. Some of you may love it. Some of you may hate it. The reason you want to think about microservices is the future we’re looking at already is a micro agent future. You’re not going to have these agents that just resemble a human being. You’re going to have agents that do very specific tasks and they collaborate with each other.

Where Are We Headed? (Evolution and Landscape of Agentic AI)

If many of you have seen some of the splashy demos that came out earlier and then said, this simply does not work in production, this is the real reason that agents failed earlier this year. People tried to create these agents that would literally take on entire roles. I’m going to have an agent that’s going to be an SDR. I’m going to replace all these SDRs. I’m going to be an agent that replaces software engineers. It’s not happening. The reason is, going from that splashy demo to production is extremely hard. Today, the state of the art is very much, let’s be realistic. Let’s break it down to very small, simple tasks. Let’s narrow the scope as much as we possibly can, and let’s allow these micro agents to orchestrate, coordinate, and collaborate with each other. If you imagine that, you can envision a world where you have very specific things.

Going back to your software engineering example, you have an agent that starts writing code. You have another agent that is reviewing the code, all it does is code review. You have another agent that’s planning and scheduling and allowing them to figure out how they break down their tasks. You might even have a manager agent that’s overseeing all of them. Is this going to really replace us? Unlikely. Think of them as your own little set of minions that do your work for you.

However, like somebody asked me, does that mean now I have to manage all these minions? That’s the last thing I want to do. Not really, because this is where your supervisor agents come in, your planner agents, your manager agents come in. You will basically interface with a super minion that can handle and coordinate and assign tasks and report back to you. This is just like the right mental model, as you think about where are we headed and how do we think about the complexity that this is going to create if everybody in the organization starts creating a set of agents for themselves? This was my thesis, but I was really happy to see that this view of the world is starting to emerge from external publications as well.

This was actually McKinsey publishing their view of how you’re going to have all these specialist agents. They not only use the tools that you provide to them, they also query your internal databases. They might go query your Salesforce. They might go query wherever your data lives, depending on what governance you lay on, and what access controls you give these agents.

Agentic AI Landscape: Off-the-Shelf vs. Custom Enterprise Agents

That brings me to, what is the agentic AI landscape as of today? Some of these startups might actually be familiar to you. Resolve.ai had a booth at QCon. I’m by no means saying that these are the winning startups. All I’m saying is these are startups where I’ve personally spent time with the investors, with the CEOs, going super deep into, what are they building? What are the challenges? Where do they see this going? I’ll give you a couple of these examples. Sierra AI is building a customer service agent. SiftHub, which is building a sales agent, very quickly showed me a demo that says, “We’re not trying to create a salesperson. We’re just trying to automate one very specific task, and that is as specific as just responding to RFPs”. If you’ve ever been in a situation where your sales team is saying, “I need input from you. I need to go respond to this RFP, or I need input from you to go through the security review”.

That is a very specific task in a salesperson’s end-to-end workflow, and that’s really where SiftHub is focusing their sales agent. That’s how specific it gets. Resolve.ai is calling it an AI production engineer, but if you look at the demo, it shows you, basically, it’s in Slack with you, so basically, it’s exactly where you live. It’s an on-call support. When you’re on-call, it helps you to remediate. It’s very simple. It’s RCA remediation. It only kicks in when you’re on-call. This is where all the startups are focusing. When you look at enterprise platforms, and the enterprise companies are coming in saying, this is great. Startups are going in building these small, vertical agents.

If you look at an end-to-end workflow in any enterprise, there’s no way these startups are going to build all these little custom agents that they need, so might as well create a way for people to go build their own custom agents. Most of the big companies, Salesforce, Snowflake, Databricks, OpenAI are all starting to talk about custom enterprise agents. How do you build them? How do you deploy them, using the data that already lives on their platform? Each of them is taking a very different approach.

Salesforce coming at it from having all your customer data in there. To Databricks and Snowflake coming at it from a very different perspective, because they’re being your warehouse or your data lake. To OpenAI, of course, coming in with having both the LLM. This is really the choice that you have. Do you use off-the-shelf or do you build your own custom enterprise agents? The real answer is, as long as you can use an off-the-shelf agent, you want to do that. There’s just not going to be enough off-the-shelf agents. In that case, you might want to build a custom agent for yourself. It is really hard.

Custom AI Agents – Infra Stack and Abstractions

That’s what we’re going to talk about. What are the challenges in building these custom agents? Where is the infra stack today? What are the abstractions that you want to think about and layer on? How do you approach this problem space? I’m just going to simplify the infra building blocks here. Most of these are things that you’ve already done for your applications. This is where all the learning that you’ve had in building enterprise applications really transfers over. Of course, at the foundational level, you have the foundation models, that’s what’s giving you the reasoning.

Then you have your data layer, whether it’s your data lake, and of course, your vector database, giving you the memory. The context and memory and the reasoning give you the foundation. As you get into the many micro agents that we talked about, the real challenges are going to show up in the orchestration and governance layer. Because somebody was asking me, again, isn’t it easier for us to just wait for the reasoning to become so good that we don’t have to deal with any of this? Not really. Because if you think about how this is developing, I’m going to give you a real example, it’s not like, let’s say you’ve been hiring high school interns at work. They’re your equivalent of saying, I just have a simple foundational model that can do simple tasks using general knowledge.

As these models get better, it’s not like you’re suddenly getting a computer science engineer or you’re getting some PhD researcher. What you’re really getting is the IQ of that intern has gotten better and higher, but they’re still not super specialized, so you will have to give it the context. You will have to build in the specialization, irrespective of how much advancement you start seeing. You will see a lot more advancement on the reasoning side, but all these other building blocks are still with you. The governance layer here is really complicated. Again, a lot of the primitives that we’ve had on the application governance, it still carries over. How do you think about access controls? How do you think about privacy? How do you think about access to sensitive data? If an agent can act on your behalf, how do you make sure that these agents are carrying over the privileges that were assigned to the person who created the agent? There’s a lot of complexity that you need to think through.

Similarly, on the orchestration side too, when you start thinking about multi-agent workflows, it’s not simply, this agent is now passing information or passing the ball to another agent. What ends up happening is now you suddenly see distributed systems. Because, again, when LLM starts planning the work, it immediately starts distributing it. Now all the orchestration challenges that you’re very familiar with in distributed systems are going to show up here. It’s up to you and how you want to orchestrate all of this.

Then, on the top layer, you will see conspicuously missing, much talk about UIs. Why? Because the way you interact with an agent is going to be very different. Whether it’s conversational or whether it’s chat, you’re going to interact with it in the channels that you live today. You really don’t want to go to a bunch of different tools and log in, and forget the password every single time. You just have an agent, and that agent now is collaborating with a bunch of other agents to get the work done. The real top layer is going to be SDKs and APIs’ access to the tools that you are providing it. This is where it’s up to you to control which tools it can access. What is the quality of the tools that you’re providing it? How do you set up the infra building blocks in a way that is really scalable?

I want to spend a minute on specifically the context question, because you think about where we opened and said, we’re still figuring out how do you bring all of the enterprise context into agentic AI? There are a few different ways to go about it. We talked about prompt engineering. I always say, if you can get away with prompting, you want to stay there. It’s the simplest and most flexible way to get the job done. Many times, prompting is just not enough.

Then you look at more advanced techniques, RAG, or Retrieval Augmented Generation is the simplest, next best alternative. What you basically do with RAG is you’re indexing all your data. Imagine a vector database, Rockset. What we built is a great example here. Imagine you’re indexing your enterprise data so that now, as you’re building your agentic workflows, you’re building in the reasoning alongside your enterprise context. The challenge here is, it’s first very complicated, setting up your RAG pipeline, choosing your vector database, scaling your vector database, not that simple. The other big challenge you’re going to run into is, it’s extremely expensive.

Indexing is not cheap, as you know. Real-time indexing, which is what you run into when you really want agents that can act in real-time. Real-time indexing is very expensive, and you want to keep your data updated. Unless you really need it, you don’t want to go there. Fine-tuning is when you have much more domain-specific needs and training. I’d rather not go there, unless you absolutely have to, because as an enterprise, you’re much more in control of your data, your context, and much better to make a foundational model work for you. There are plenty of good ones out there.

Take your pick, open source, like really fast-paced development there. It’s very expensive too. Training your own model is extremely hard, extremely expensive, and just getting the talent to go train your own models in this competitive environment, forget about it. I think the first three are really the most commonly deployed. Training, yes, if you have extremely high data maturity and domain-specific needs.

The other thing to remember here is, all these building blocks we talked about are changing very fast. If you’re going to expose all of these to your end users, it’s going to be very hard. The best thing you can do is think about, what are the right abstractions from the user’s perspective? I have Salesforce as an example here because I thought they did a good job of really thinking about, what is the end user thinking about? How do they create agents? Really exposing those abstractions to everybody in the enterprise, and then decoupling your infra stack from how users create and manage their agents. The minute you do this decoupling, it gives you a lot more flexibility and allows you to move much faster as your infra stack starts changing.

Advancing Your Infrastructure for Agentic AI

With that, let’s talk more into what are the real challenges in your infra stack? I think we talked about the building blocks, but it looks very simple. It’s pretty complex under the hood. This statement, “Software is eating the world, but AI is going to eat software”, I couldn’t agree more. I’ve been having some conversations with some of the startups doing agentic AI and asking them how their adoption is going. The biggest finding for them is how quickly they’re able to go and show companies that they can go reduce their SaaS application licenses. You have 300 licenses of, name your favorite SaaS vendor, we’ll bring that down to 30 by the end of the year, and 5 the following year.

That’s the trajectory that you’re talking to customers about, and they’re already seeing that. They’re seeing customers starting to retire. One of the more famous ones was the Klarna CEO actually made a statement saying that they are now completely retiring Workday and Salesforce in favor of agentic AI. That is really bold and fast, if they’re able to get there that quickly. That is the thinking. Klarna was actually my customer while we were at Rockset. All of the innovation was happening in the engineering team. The engineers were moving incredibly fast, experimenting with AI, and before you know it, we have the Klarna CEO make a statement like this. This is going to be a very interesting world.

This tradeoff is one of the hardest to make. Having worked on distributed databases for the last few years, we spent so much time thinking about the price, performance tradeoff, and how do you give users more control so they can make their own tradeoffs? Now you have the cost, accuracy, latency tradeoff. Accuracy, especially like going from 95% to 98%, it’s like 10x more expensive. You really have to think deeply about what is the use case, and what tradeoff does it need? Latency, again, real-time is very expensive. Unless your agent needs to answer questions about flight booking and what seats are available right now, you might not need that much real-time.

Maybe you can make do with an hour latency, as long as you set up the guardrails in a way that this agent is going to be an hour behind. Cost, I had this really interesting experience. I was in the room. We were having this really heated debate between product and engineering. The product managers are like, I need this kind of performance. Why can’t you deliver? The engineers were like, you’re out of your mind. There’s no way we can ever get there at that scale. This is ridiculous. After 10 minutes of this, someone asked a very simple question, what is the implicit cost assumption we’re making here? That is really the disconnect between what product managers are saying and what the engineers are saying. Engineers know there’s a fixed budget, and the PMs are only thinking about, I can go charge the customer more, but just give me the performance. This disconnect is very real.

The only advice I have here is, think very deeply about your use case and what tradeoffs you’re making for your use case. Communicate it with all your stakeholders as early and often as you can, because these can’t be implicit assumptions, you have to make sure everybody’s on the same page. Then know that these tradeoffs will keep changing, especially given how fast the models are changing. You’re going to get advancements. These tradeoffs will change. If you made certain assumptions and bake them in, all hell will break loose when the next model comes out.

The previous one was about tradeoffs. Here there’s no or, this is an and. You absolutely need to layer on trust as one of the most important layers here. There are four key components. You’re thinking about transparency. Literally, nobody will adopt agentic AI, or any sort of AI if they cannot understand what was the thought process behind it. What were the steps? If you’ve even used GPT search, it now gives you links that tell you where am I getting this information from. If you want credibility, you want adoption, you have to build explainability right into it. I want to give a shout out to Resolve.ai for their demo, immediately, not only does it do an RCA, it tells you why. Why does it think this is the root cause, and gives you links to all of the information that it’s using to get there.

That’s explainability you want to bake in. Observability is hard. You know all the application logging and monitoring in everything that you’ve built in, how do you now apply that to agentic AI when agents are going in, taking all these actions on behalf of humans? Are you going to log every single action? You probably have to because you need to be able to trace back. What are you going to monitor? How are you going to evaluate? There’s just so many challenges on the observability side. This is where, as senior engineers, all the learnings that you’ve had from application domain, can transfer over here. This is still an emerging field. There aren’t a lot of easy answers. We’ve talked a little bit about governance.

How do you ensure that the agent is only accessing the data it’s supposed to? How do you ensure that it’s not doing anything that is not authorized? How do you make sure that it’s not getting malicious attacks, because anytime you create this new surface vector, you’re going to have more attacks? We haven’t even seen the beginning of what kind of cybersecurity challenges we’re going to run into once you start giving agentic AI more controls. The more you can get ahead of this now, think about these challenges, because this is moving so fast. Before you know it, you’ll have somebody in the company saying, yes, we’ve already built a couple of agents and deployed it here. What about all these other things?

The scalability concerns are also extremely real. When OpenAI was talking to us, I was looking at your scale, and it completely blew my mind. It was 100x more than anything I’d ever seen. That kind of scalability is by design. You have to bake it in from day one. The best approach here is to take a very modular approach. Not only are you breaking down your entire workflow into specific agents, you’re also thinking about, how do you break up your agent itself into a very modular approach so that each of them can be scaled independently, debugged independently?

We talked about the data stack, your foundational model. Make sure every single thing is modular, and that you have monitoring and evaluation built right into it. Because what’s going to happen is, you’re going to have a lot more advancements in every part of your stack, and you have to be able to swap that piece out depending on what the state of the art is. As you swap it out, you have to be able to evaluate the impact of this new change. You’re going to see a lot of regressions if GPT-5 comes out tomorrow, and you go, let me just swap out 4o and just drop in 5. I have no idea what is going to break. I’m speaking about GPT, but you can apply this to Claude. You can apply this to anything.

The next version comes out, you’re going to see a bunch of regressions because we just don’t know what this is going to look like. I think Sam Altman in one of his interviews made a really interesting statement, he said, the startups that are going to get obliterated very soon would be the ones that are building for the current state of AI, because everything is going to change. Especially, how do you think about this when maybe your development cycles are 6 to 12 months, whereas the advancements in AI are happening every month, or every other month.

Unknown Unknowns

That brings us to what we call the unknown unknowns. This is the most difficult challenge. When we were building an early-stage startup at Rockset, we used to say this, building a startup is like driving on a winding road at night with only your headlights to guide you. Of course, it’s raining, and you have no idea what unknown unknowns you’re going to hit. It’s kind of where we are today. The best thing to do is say, what things are going to change? Let’s bake in flexibility and agility into everything that we do. Let’s assume that things are going to change very fast. You can choose to wait it out. You might be thinking, if things are changing so fast, why don’t I just wait it out? You could, but then your competitor is probably not waiting it out. Other people in your company are not going to wait. Everybody is really excited. They start deploying agents, and before you know it, this is going rogue.

Lean Into The 70-20-10 (Successful Early Adopters Prioritize the Human Element)

This is really the most interesting piece as I’ve seen companies go through this journey. What ends up happening is, all of us, we love to talk about the tech, the data stack, the vector databases, which LLM, let’s compare all these models. Let’s talk about what algorithms, like what tools are we going to give to the agents. This is only less than 30%, ideally, of your investment. Where you want to focus is on the people and the process. Because what ends up happening, if you envision this new world where you’re going to have your own personal set of minions, not just you, but everybody in the company starts creating all of these agents or micro agents which are collaborating with each other, and with your agents, and maybe even some off-the-shelf agents that you’ve purchased, can you imagine how much that is going to disrupt the way we do work today?

This whole concept of, let’s open up this application, log in to this UI, and do all of these things, just goes away. That is going to be much harder than anything we can imagine on the agentic AI technology advancements, because ultimately you’re disrupting everything that people know. It was really fun to hear Victor’s presentation on multi-agent workflows. In one slide he mentioned, let’s make sure the agents delegate some of the tricky tasks to humans. I still believe, humans will be delegating work to agents, and not the other way round. How do you delegate tasks to agents? What should you delegate? How do you make sure that agents understand the difference in weights between all the different tasks that it can do? That is going to be really hard.

It is a huge opportunity to stop doing tedious tasks. It is a huge opportunity to get a lot more productive. It comes at the cost of a lot of disruption. It comes with people and processes having to really readjust to this new world of working. What we’re finding is that most of the leaders who are embracing this change are spending 70% of their time and energy and investment into people and processes, and only 30% on the rest. This is a really good mental model to have.

Conclusion

We haven’t talked at all about ethics. Eliezer is one of the researchers on ethics. I think the greatest danger really is that we conclude that we understand it, because we are just scratching the surface. The fun is just beginning.

See more presentations with transcripts

MMS • Courtney Nash

Transcript

Shane Hastie: Good day folks. This is Shane Hastie for the InfoQ Engineering Culture Podcast. Today I’m sitting down with Courtney Nash. Courtney, welcome. Thanks for taking the time to talk to us.

Courtney Nash: Hi Shane. Thanks so much for having me. I am an abashed lover of podcasts, and so I’m also very excited to get the chance to finally be on yours.

Shane Hastie: Thank you so much. My normal starting point with these conversations is who’s Courtney?

Introductions [00:56]

Courtney Nash: Fair question. I have been in the industry for a long time in various different roles. My most known, to some people, stint was as an editor for O’Reilly Media for almost 10 years. I chaired the Velocity Conference and that sent me down the path that I would say I’m currently on, early days of DevOps and that whole development in the industry, which turned into SRE. I was managing the team of editors, one of whom was smart enough to see the writing on the wall that maybe there should be an SRE book or three or four out there. And through that time at O’Reilly, I focused a lot on what you focus on, actually, on people and systems and culture.

I have a background in cognitive neuroscience, in cognitive science and human factors studies. And that collided with all of the technology and DevOps work when I met John Allspaw and a few other folks who are now really leading the charge on trying to bring concepts around learning from incidents and resilience engineering to our industry.

And so the tail end of that journey for me ended up working at a startup where I was researching software failures, really, for a company that was focusing on products around Kubernetes and Kafka, because they always work as intended. And along the way I started looking at public incident reports and collecting those and reading those. And then at some point I turned around and realized I had thousands and thousands of these things in a very shoddy ad hoc database that I still to this day maintain by myself, possibly questionable. But that turned into what’s called The VOID, which has been the bulk of my work for the last four or five years. And that’s a large database of public incident reports.

Just recently we’ve had some pretty notable ones that folks may have paid attention to. Things like when Facebook went down in 2021 and they couldn’t get into their data center. Ideally companies write up these software failure reports, software incident reports, and I’ve been scooping those up into a database and essentially doing research on that for the past few years and trying to bring a data-driven perspective to our beliefs and practices around incident response and incident analysis. That’s the VOID. And most recently just produced some work that I spoke at QCon about, which is how we all got connected, on what I found about how automation is involved in software incidents from the database that we have available to us in The VOID.