Month: February 2025

The Future of Cybersecurity: Moksha Investigates Hybrid ML Models for Critical Infrastructure

MMS • RSS

PRESS RELEASE

Published February 16, 2025

Moksha Shah: Cybersecurity Research Pioneer

The modern world today is interconnected, and cyber-based threats are evolving at a fast pace, making traditional mechanisms for security ineffective. It is Moksha Shah, an Information Technology-embedded researcher, who works day and night to ameliorate the situation. Cocking a keen eye at the crossroads of Cybersecurity and AI, Moksha investigates Hybrid Machine Learning (ML) models, which constitute the latest big thing employing diverse AI methodologies to create an intelligent, more dynamic Cybersecurity system.

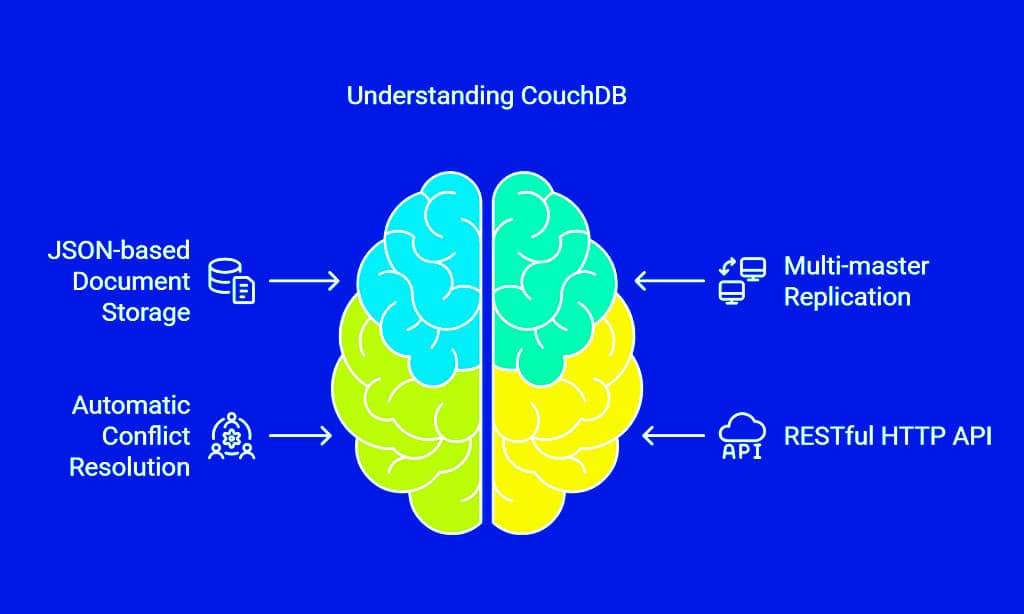

Moksha Shah is a Java developer with 5+ years of experience specializing in API development, backend solutions, and cybersecurity. She has designed and implemented RESTful and SOAP APIs using Java and frameworks like Spring Boot and JAX-RS. Moksha has extensive experience integrating APIs with databases like MySQL, SQL Server, Oracle DB, and MongoDB. She is proficient in developing responsive web applications using JavaScript, HTML5, CSS3, Angular.js, and JSTL. Additionally, Moksha is proficient in Python, applying it in the fields of cybersecurity, AI and Machine Learning. She has developed security solutions, automated tasks, and built AI models for threat detection and response. She is adept at using agile methodologies, including TDD and SCRUM, and has a solid understanding of building scalable, efficient, and secure web services. Moksha is also proficient in API testing and documentation tools such as Postman and Swagger, and has a strong background in backend technologies and cloud solutions.

Moksha’s research endeavor revolves around the protection of critical systems, including power grids, banks, healthcare service providers, and government networks. This is so because these Criticals are paramount to society, and their safety becomes a matter of national economy and security. With the onset of AI-enabled cyberattacks, ransomware, and nation-state attacks from within states, it remains glaringly evident that these traditional mechanics of security are fading. Moksha’s research activity intends to fill this gap with the application of Hybrid ML models capable of detecting, identifying, and blocking a cyber threat instantly.

The Latest Project: Hybrid ML to Secure Critical Systems

The latest project Moksha is investigating Hybrid Machine Learning Models that combine Supervised Learning, Unsupervised Learning, and Reinforcement Learning techniques to develop a multi-layered self-healing cybersecurity system. Compared to traditional rule-based security mechanisms, this system is to a greater extent advanced in that it combines AI intelligence that learns, adapts, and ensures its preservation.

Why Traditional Cybersecurity is Failing

The advancements in cybersecurity have not kept organizations immune to sophisticated attacks-that is, attacks that have successfully evaded traditional mechanisms of defense. Some of the trickiest deficiencies in traditional security include:

- Static Rule-Based Systems-Traditional security relies on a very predefined rule set and a signature set, which is easy for the attacker or any cybercriminal to evade.

- Slow Threat Detection- Most security systems work on an after-the-fact approach-they focus on detecting the threat after the attack has taken place; this, however, comes with a significant cost in terms of damage and downtime.

- Inability to Predict Future Attacks-Contrary to modern solutions, classic ones do not build in predictive intelligence, making it entirely impossible to anticipate future cyber threats.

How the Hybrid ML Model Resolves These Challenges

The hybrid ML model is leading the revolution in cybersecurity, overcoming the stated weaknesses with:

- Real-Time Threat Detection-In AI systems, continuous network behavior analysis assists in identifying anomalies that can indicate a cyberattack.

- Adaptive Defense Mechanisms-The hybrid ML model is therefore ever-evolving and learns to adjust its active defense mechanism against a new threat, unlike other systems that operate statically.

- Security Intelligence-Predictive models can determine if a vulnerability exists and whether it is likely to be exploited before an attacker exploits it. Predictive ML tools have better accuracy in determining cyber events.

- Automated Response System- Automated threat response within this system will now integrate AI-driven automated systems and action in real-time with the least possible intervention from humans.

Moksha’s research embodies deploying cutting-edge AI algorithms to envision and establish a proactive cybersecurity framework that stops attacks before they happen, rather than responding to them.

Main Uses of Hybrid ML in Cybersecurity

The hybrid ML models going through changing environments are creating waves in the world of cyberspace:

- Financial Institutions: Acts as a real-time fraud detection to prevent identity theft and protect banking systems from cyberattacks-driven AI.

- Health Systems: To protect against unauthorized access to patient information; furthermore, it secures IoT-enabled medical devices from being hacked.

- Government & Defense: Performing national strength in cybersecurity, preventing strategies against cyber-espionage, and automating security for critical systems.

- Smart Cities & IoT: To detect infrastructure anomalies, safeguard connected devices, and further develop cloud security for data from smart cities.

Reasons Why Hybrid ML Is the Cyber Security Tomorrow

Cybercriminals have been using AI to launch vigilant and evasive attacks. The ongoing AI-generated attacks compel immediate counteractions from the organizational side. A hybrid ML model addresses such counteractions:

- Proactive: It can forestall cyberattacks.

- Smart: It learns about new threats and adapts accordingly.

- Scalable: It can be implemented on large networks without compromising speed or efficiency.

Conclusion: Preparing for Tomorrow’s AI-Powered Cyber Threats

The future of cyber defense systems lies in advanced, AI-based mechanisms-Hybrid Machine Learning Models are for sure the driving engine behind this change. Moksha Shah’s research will bring forth new horizons for a world of cybersecurity in which organizations do not have to wait in reactive mode for an attack, responding with a self-learning AI-driven security, but can actually prevent such attacks.

With the evolution and magnification of cyber threats, businesses, governments, and critical infrastructure providers must turn towards AI-enabled cybersecurity frameworks in order to safeguard.

Track new cyber threats with Moksha Shah’s study on the latest in Hybrid ML-based cybersecurity.

Vehement Media

Java News Roundup: JDK 24-RC1, JDK Mission Control, Spring, Hibernate, Vert.x, JHipster, Gradle

MMS • Michael Redlich

This week’s Java roundup for February 10th, 2025 features news highlighting: the first release candidate of JDK 24; JDK Mission Control 9.1.0; milestone releases of Spring Framework 7.0, Spring Data 2025.0.0 and Hibernate 7.0; release candidates of Vert.x 5.0.0 and Gradle 8.13.0; and JHipster 8.9.0.

OpenJDK

The release of JDK Mission Control 9.1.0 provides bug fixes and improvements such as: the ability to use the custom JFR event types, i.e., those extending the Java Event class, in the JFR Writer API followed by registering those types; and the ability to use primitive types in converters. More details on this release may be found in the list of issues.

JDK 24

Build 36 remains the current build in the JDK 24 early-access builds. Further details may be found in the release notes.

As per the JDK 24 release schedule, Mark Reinhold, Chief Architect, Java Platform Group at Oracle, formally declared that JDK 24 has entered its first release candidate as there are no unresolved P1 bugs in Build 36. The anticipated GA release is scheduled for March 18, 2025 and will include a final set of 24 features. More details on these features and predictions for JDK 25 may be found in this InfoQ news story.

JDK 25

Build 10 of the JDK 25 early-access builds was also made available this past week featuring updates from Build 9 that include fixes for various issues. Further details on this release may be found in the release notes.

For JDK 24 and JDK 25, developers are encouraged to report bugs via the Java Bug Database.

Spring Framework

The second milestone release of Spring Framework 7.0.0 delivers new features such as: improvements to the equals() method, defined in the AnnotatedMethod class, and the HandlerMethod to resolve failed Cross-Origin Resource Sharing (CORS) configuration lookups; and a refinement of the GenericApplicationContext class that adds nullability using the JSpecify @Nullable annotation to the constructorArgs parameter listed in the overloaded registerBean() method. More details on this release may be found in the release notes.

Similarly, versions 6.2.3 and 6.1.17 of Spring Framework have also been released to provide new features such as: improvements in MVC XML configuration that resolved an issue where the handler mapping, using an instance of the the AntPathMatcher class, instead used an instance of the PathPatternParser class; and a change to the ProblemDetails class to implement the Java Serializable interface so that it may be used in distributed environments. These versions will be included in the upcoming releases of Spring Boot 3.4.3 (and 3.5.0-M2) and 3.3.9, respectively. Further details on this release may be found in the release notes for version 6.2.3 and version 6.1.17.

The first milestone release of Spring Data 2025.0.0 ships with new features such as: support for vector search for MongoDB and Cassandra via MongoDB Atlas and Cassandra Vector Search; and a new Vector data type that allows for abstracting underlying values within a domain model that simplifies the declaration, portability and default storage options. More details on this release may be found in the release notes.

Similarly, Spring Data 2024.1.3 and 2024.0.9, both service releases, ship with bug fixes, dependency upgrades and and respective dependency upgrades to sub-projects such as: Spring Data Commons 3.4.3 and 3.3.9; Spring Data MongoDB 4.4.3 and 4.3.9; Spring Data Elasticsearch 5.4.3 and 5.3.9; and Spring Data Neo4j 7.4.3 and 7.3.9. These versions will be included in the upcoming releases of Spring Boot and 3.4.3 and 3.3.9, respectively.

The release of Spring Tools 4.28.1 provides: a properly signed Eclipse Foundation distribution for WindowOS; and a resolution to an unknown publisher error upon opening the executable for Spring Tool Suite in Windows 11. Further details on this release may be found in the release notes.

Open Liberty

IBM has released version 25.0.0.2-beta of Open Liberty features the ability to configure the MicroProfile Telemetry 2.0 feature, mpTelemetry-2.0, to send Liberty audit logs to the OpenTelemetry collector. As a result, the audit logs may be managed with the same solutions with other Liberty log sources.

Micronaut

The Micronaut Foundation has released version 4.7.6 of the Micronaut Framework featuring Micronaut Core 4.7.14, bug fixes and a patch update to the Micronaut Oracle Cloud module. This version also provides an upgrade to Netty 4.1.118, a patch release that addresses CVE-2025-24970, a vulnerability in Netty versions 4.1.91.Final through 4.1.117.Final, where a specially crafted packet, received via an instance of the SslHandler class, doesn’t correctly handle validation of such a packet, in all cases, which can lead to a native crash. More details on this release may be found in the release notes.

Hibernate

The fourth beta release of Hibernate ORM 7.0.0 features: a migration to the Jakarta Persistence 3.2 specification, the latest version targeted for Jakarta EE 11; a baseline of JDK 17; improved domain model validations; and a migration from Hibernate Commons Annotations (HCANN) to the new Hibernate Models project for low-level processing of an application domain model. Further details on this release may be found in the release notes and the migration guide.

The release of Hibernate Reactive 2.4.5.Final features compatibility with Hibernate ORM 6.6.7.Final and provides resolutions to issues: a Hibernate ORM PropertyAccessException when creating a new object via the persist() method, defined in the Session interface, with an entity having bidirectional one-to-one relationships in Hibernate Reactive with Panache; and the doReactiveUpdate() method, defined in the ReactiveUpdateRowsCoordinatorOneToMany class, ignoring the return value of the deleteRows() method, defined in the same class. More details on this release may be found in the release notes.

Eclipse Vert.x

The fifth release candidate of Eclipse Vert.x 5.0 delivers notable changes such as: the removal of deprecated classes – ServiceAuthInterceptor and ProxyHelper – along with the two of the overloaded addInterceptor() methods defined in the ServiceBinder class; and support for the Java Platform Module System (JPMS). Further details on this release may be found in the release notes and deprecations and breaking changes.

Micrometer

The second milestone release of Micrometer Metrics 1.15.0 delivers bug fixes, improvements in documentation, dependency upgrades and new features such as: the removal of special handling of HTTP status codes 404, Not Found, and 301, Moved Permanently, from OkHttp client instrumentation; and a deprecation of the SignalFxMeterRegistry class (step meter) in favor of the OtlpMeterRegistry class (push meter). More details on these releases may be found in the release notes.

The second milestone release of Micrometer Tracing 1.5.0 provides dependency upgrades and features a deprecation of the ArrayListSpanProcessor class in favor of the Open Telemetry InMemorySpanExporter class. Further details on this release may be found in the release notes.

Piranha Cloud

The release of Piranha 25.2.0 delivers many dependency upgrades, improvements in documentation and notable changes such as: removal of the GlassFish 7.x and Tomcat 10.x compatibility extensions; and the ability to establish a file upload size in the FileUploadExtension, FileUploadMultiPart, FileUploadMultiPartInitializer and FileUploadMultiPartManager classes. More details on this release may be found in the release notes, documentation and issue tracker.

Project Reactor

Project Reactor 2024.0.3, the third maintenance release, providing dependency upgrades to reactor-core 3.7.3, reactor-netty 1.2.3, reactor-pool 1.1.2. There was also a realignment to version 2024.0.3 with the reactor-addons 3.5.2, reactor-kotlin-extensions 1.2.3 and reactor-kafka 1.3.23 artifacts that remain unchanged. Further details on this release may be found in the changelog.

Similarly, Project Reactor 2023.0.15, the fifteenth maintenance release, provides dependency upgrades to reactor-core 3.6.14, reactor-netty 1.1.27 and reactor-pool 1.0.10. There was also a realignment to version 2023.0.15 with the reactor-addons 3.5.2, reactor-kotlin-extensions 1.2.3 and reactor-kafka 1.3.23 artifacts that remain unchanged. More details on this release may be found in the changelog.

JHipster

The release of JHipster 8.9.0 features: dependency upgrades to Spring Boot 3.4.2, Node 22.13.1, Gradle 8.12.1, Angular 19.0.6 and Typescript 5.7.3; and support for plain time fields (Java LocalTime class) without it being tied to a date to the JHipster Domain Language (JDL). Further details on this release may be found in the release notes.

Gradle

The first release candidate of Gradle 8.13.0 introduces a new auto-provisioning utility that automatically downloads a JVM required by the Gradle Daemon. Other notable enhancements include: an explicit Scala version configuration for the Scala Plugin to automatically resolve required Scala toolchain dependencies; and refined millisecond precision in JUnit XML test event timestamps. More details on this release may be found in the release notes.

Distributed Multi-Modal Database Aerospike 8 Brings Support for Real-Time ACID Transactions

MMS • RSS

Aerospike has announced version 8.0 of its distributed multi-modal database, bringing support for distributed ACID transactions. This enables large-scale online transaction processing (OLTP) applications like banking, e-commerce, inventory management, health care, order processing, and more, says the company.

As Aerospike director of product Ronen Botzer explains, large-scale applications all require some horizontal scaling to support concurrent load and reduce latency, which inevitably brings the CAP Theorem into play.

The CAP theorem states that when a network partitions due to some failure, a distributed system may be either consistent or available. In contrast, both of these properties can be guaranteed in the absence of partitions. For distributed database systems, the theorem led to RDBMS usually choosing consistency via ACID, with NoSQL databases favoring availability following the BASE paradigm.

Belonging to the NoSQL camp, Aerospike was born as an AP (available and partition-tolerant) datastore. Later, it introduced support for ACID with its fourth release by allowing developers to select whether a namespace runs in a high-availability AP mode or a high-performance CP mode. CP mode in Aerospike is known as strong consistency (SC) and provides sequential consistency and linearizable reads, guaranteeing consistency for single objects.

While Aerospike pre-8.0 has been great at satisfying the requirements of internet applications […] limiting SC mode to single-record and batched commands left something to be desired. The denormalization approach works well in a system where objects are independent of each other […] but in many applications, objects actually do have relationships between them.

As Botzer explained, the existence of relationships between objects makes transactions necessary, and many developers had to build their own transaction mechanism on top of a distributed database. This is why Aerospike built native distributed transaction capabilities into Database 8, which meant providing strict serializability for multi-record updates and doing this without hampering performance.

Aerospike distributed transactions have a cost, which includes four extra writes and one extra read, so it is important to understand the performance implications they have. Tests based on Luis Rocha’s Chinook database showed results in line with those extra operations, meaning that smaller transactions are affected most while overhead is amortized in larger ones. All in all, says Botzer,

Transactions perform well when used judiciously together with single-record read and write workloads.

ACID transactions display properties designed to ensure the reliability and consistency of database transactions, i.e., atomicity, consistency, isolation, and durability. They guarantee that database operations are executed correctly. If there is any failure, the database can recover to a previous state without losing any data or impacting the consistency of the data. BASE systems opt instead for being Basically Available, Soft-stated, and Eventually consistent, thus giving up on consistency.

Distributed Multi-Modal Database Aerospike 8 Brings Support for Real-Time ACID Transactions

MMS • Sergio De Simone

Aerospike has announced version 8.0 of its distributed multi-modal database, bringing support for distributed ACID transactions. This enables large-scale online transaction processing (OLTP) applications like banking, e-commerce, inventory management, health care, order processing, and more, says the company.

As Aerospike director of product Ronen Botzer explains, large-scale applications all require some horizontal scaling to support concurrent load and reduce latency, which inevitably brings the CAP Theorem into play.

The CAP theorem states that when a network partitions due to some failure, a distributed system may be either consistent or available. In contrast, both of these properties can be guaranteed in the absence of partitions. For distributed database systems, the theorem led to RDBMS usually choosing consistency via ACID, with NoSQL databases favoring availability following the BASE paradigm.

Belonging to the NoSQL camp, Aerospike was born as an AP (available and partition-tolerant) datastore. Later, it introduced support for ACID with its fourth release by allowing developers to select whether a namespace runs in a high-availability AP mode or a high-performance CP mode. CP mode in Aerospike is known as strong consistency (SC) and provides sequential consistency and linearizable reads, guaranteeing consistency for single objects.

While Aerospike pre-8.0 has been great at satisfying the requirements of internet applications […] limiting SC mode to single-record and batched commands left something to be desired. The denormalization approach works well in a system where objects are independent of each other […] but in many applications, objects actually do have relationships between them.

As Botzer explained, the existence of relationships between objects makes transactions necessary, and many developers had to build their own transaction mechanism on top of a distributed database. This is why Aerospike built native distributed transaction capabilities into Database 8, which meant providing strict serializability for multi-record updates and doing this without hampering performance.

Aerospike distributed transactions have a cost, which includes four extra writes and one extra read, so it is important to understand the performance implications they have. Tests based on Luis Rocha’s Chinook database showed results in line with those extra operations, meaning that smaller transactions are affected most while overhead is amortized in larger ones. All in all, says Botzer,

Transactions perform well when used judiciously together with single-record read and write workloads.

ACID transactions display properties designed to ensure the reliability and consistency of database transactions, i.e., atomicity, consistency, isolation, and durability. They guarantee that database operations are executed correctly. If there is any failure, the database can recover to a previous state without losing any data or impacting the consistency of the data. BASE systems opt instead for being Basically Available, Soft-stated, and Eventually consistent, thus giving up on consistency.

MMS • Aditya Kulkarni

Slack recently integrated automated accessibility testing into its software development lifecycle to improve user experience for individuals with disabilities.

Natalie Stormann, Software Engineer at Slack, detailed the journey in Slack’s engineering blog, communicating the company’s ongoing adherence to Web Content Accessibility Guidelines (WCAG).

Slack has internal standards and the company further collaborates with external accessibility testers as well. These standards align with WCAG, an internationally recognized benchmark for web accessibility. While manual testing remains important for identifying nuanced accessibility issues, Slack recognized the need to augment these efforts with automation.

In 2022, Slack started incorporating automated tests into its development workflow to proactively address accessibility violations. The company chose Axe, a widely-used accessibility testing tool, for its flexibility and WCAG alignment. Integrating Axe into Slack’s existing test frameworks presented some challenges. Embedding Axe checks directly into React Testing Library (RTL) and Jest created conflicts, further complicating the development process.

Slack accessibility team envisioned using Playwright, a testing framework compatible with Axe via the @axe-core/playwright package. However, integrating Axe checks into Playwright’s Locator object posed its own set of challenges. To overcome these hurdles, Slack adopted a customized approach, strategically adding accessibility checks in Playwright tests after key user interactions to ensure content was fully rendered. Slack also customized Axe checks by filtering out irrelevant rules and focusing initially on critical violations. The checks were integrated into Playwright’s fixture model, and developers were given a custom function runAxeAndSaveViolations to trigger checks within test specifications.

The tech community on Hacker News took notice of this blog. One of the maintainers of axe accessibility testing engine with HN username dbjorge commented on the post,

It’s awesome to see such a detailed writeup of how folks are building on our team’s work…. It’s very enlightening to see which features the Slack folks prioritized for their setup and to see some of the stuff they were able to do by going deep on integration with Playwright specifically. It’s not often you are lucky enough to get feedback as strong as “we cared about enough to invest a bunch of engineering time into it.

To improve reporting, Slack included violation details and screenshots using Playwright’s HTML Reporter and customized error messages. Their testing strategy involved a non-blocking test suite mirroring critical functionality tests, with accessibility checks added to avoid redundancy. Developers can run tests locally, schedule periodic runs, or integrate them into continuous integration (CI) pipelines. The accessibility team further collaborates with developers to triage automated violations, using a Jira workflow for tracking.

Regular audits ensure coverage and prevent duplicate checks, with Slack exploring AI-driven solutions to automate this process.

Slack aims to continue balancing automation and manual testing. Future plans include developing blocking tests for core functionalities such as keyboard navigation, and using AI to refine test results and automate the placement of accessibility checks.

MMS • Avraham Poupko

Transcript

Thomas Betts: Hello, and welcome to another episode of The InfoQ Podcast. I’m Thomas Betts. Today I’m joined by Avraham Poupko to discuss how AI can be used as a software architect’s assistant. He likes teaching and learning about how people build software systems.

He spoke on the subject at the iSAQB Software Architecture Gathering in Berlin last month, and I wanted to continue the conversation, so I figured the InfoQ audience would also get some value from it. Avraham, welcome to The InfoQ Podcast.

Avraham Poupko: Thank you, Thomas. Thank you for inviting me. A pleasure to be here. The talk that I gave at the iSAQB conference in Berlin, it was really fascinating for me and I learned a lot preparing the talk. And as anybody that has given a talk knows, when you have a good talk, the hardest part is deciding what not to say. And in this talk that I gave, that was the hardest part. I learned so much about AI and about how AI and architects collaborate. It was painful, the amount of stuff I had to take out. Maybe we could make some of that up here in this conversation.

Architects won’t be replaced by AI [01:21]

Thomas Betts: That’d be great. So from that research that you’ve done, give us your quick prediction. Are the LLMs and the AIs coming for our jobs as software architects?

Avraham Poupko: They’re not coming for our jobs as software architects. Like I said in the talk, architects are not going to be replaced by AI and LLMs. Architects will be replaced by other architects, architects that know how to make good use of AI and LLM, at least in the near term. It’s not safe to make a prediction about the long term. We don’t know if the profession of a software architect is even going to exist, and it’s not a fundamental difference from the way it’s always been.

Craftsmen that make good use of their skill and their tools replace people that don’t. And the same goes for architects, and AI and LLM is just a tool. A very impressive tool, but it’s a tool, and we’re going to have to learn how to use it well. And it will change things for us. It will change things for us, but it will not replace us, at least not in the near future.

LLMs only give the appearance of intelligence [02:16]

Thomas Betts: So again, going back to that research that you’ve done, what is this tool? We talked about the artificial intelligence. There is an intelligence there, it’s doing some thinking. Is that what you found that these LLMs are thinking, or is it just giving the appearance of it?

Avraham Poupko: In our experience, the only entities capable of generating and understanding speech are humans. So once I have a machine that knows how to talk and knows how to understand speech, we immediately associate it with intelligence. It can talk, so it must be intelligence. And that’s very difficult for us to overcome. There are things that look like intelligence. There are things that certainly look like creative thinking or novel thinking, and as time goes on, these things are becoming more and more impressive.

But real intelligence, which is a combination of emotional intelligence and quantitative intelligence and situation intelligence and being aware of your environment, it’s not yet there. That’s where the human comes in. The main thing that AI is lacking is a body. We’ll get to that. Most AIs do not have a body. And because they don’t have a body, they do not have other things that bodies give us.

Humans learn through induction and deduction. LLMs only learn through induction [03:28]

Thomas Betts: Disregarding the fact they don’t have a body, most people are interacting with an LLM through some sort of chat window, maybe a camera or some other multimodal thing. But that gets to what’s the thinking once you give it a prompt and say, “Hey, let’s talk about this subject”. Compare the LLM thinking to human thinking. How do we think differently and what’s fundamentally going on in those processes?

Avraham Poupko: I could talk about the experience and I could talk about what’s going on under the hood. So let’s go under the hood first. We’ll talk a bit about under the hood. I am a product of my genetics, my culture and my experience. And every time that something happens, I am assimilating that into who I am and then that gives fruit to the thoughts and the actions that I do.

I do not have a very strong differentiation between my learning phase and my application phase, what we sometimes call the inference phase. I’m learning all the time and I’m inferring all the time. And I’m learning and applying knowledge, learning and applying knowledge, and it’s highly iterative and highly complex. Yes, I learned a lot as a child. I learned a lot in school, but I also learned a lot today.

LLMs, their model, even if they’re multimodal, their model is text or images. And they’re trained, and that’s when they learn, and then they infer, and that’s when they apply the knowledge. And because they are a model, they’re a model of either the language or the sounds or the videos or the texts. But my world model is comprised of many, many things. My world model is comprised of much, much more than language, than sounds. My world model is composed of my internal feelings, of what I feel as a body.

I have identity, I have mortality, I have sexuality, I have a certain locality. I exist here and not there. And that makes me into who I am and that governs my world experience. And I’m capable of things like body language, I’m capable of things like being tired, of being bored, of being scared, of growing old. And that all combines into my experience and that combines into my thinking. Can an architect, who has never felt the pain or humiliation of making a shameful mistake, can she really guide another architect in being risk-averse or in being adventure-seeking?

Only a person that has had those feelings themselves could guide others. And as architects, we bring our humanity, we bring our experience to the table. For instance, one of the things that typifies older architects as opposed to younger architects, older architects are much more tentative. They’ve seen a lot, they’re much more conservative. They understand that complexity, as Gregor Hohpe says, “Complexity is a price the organization pays for the inability to make decisions”.

And if you’re not sure what to do and you just do both, you’re going to end up paying a dear price for that. And the only way you know that is by having lived through it and done it yourself. So that’s all under the hood.

I’d like to talk a bit about how the text models work. The LLMs that we have today, the large language models, are mostly trained on texts, written texts. And these written texts, let’s say, the texts about architecture are typically scraped or gathered or studied or learned or trained on books, books written by architects, books written about architects.

And our human learning is done in a combination of induction and deduction. And I’ll explain. Induction means I learn a lot of instances, a lot of individual cases, and I’m able to extract a rule. We call that induction. So if I experience the experience once, twice, thrice, four times, five times, and I start recognizing a pattern, I will say, “Oh, every day or every time or often”, and I’ll recognize the commonality, and that is called induction.

Then deduction is when I experience a rule and I have a new case and I realized that it falls into the category, I will apply the generic rules in a deductive manner. And we’re constantly learning by induction and deduction, and that’s how we recognize commonalities and variabilities. And part of being intelligent is recognizing when things are the same and when they are different. And one of the most intelligent things that we can say as humans are, “Oh, those two things are the same. Let’s apply a rule. And those two things are different. We can’t apply the same rule”.

And one of the most foolish things we could say as humans are, “Aren’t those two the same? Let’s just apply the same rule. Or they’re not really the same, they’re different”. And our intelligence and our foolishness is along the lines of commonality and variability.

Now, when we write a book, the way we write a book, if anybody in the audience or anybody that’s written a book knows, you gain a lot of world experience over time, sometimes over decades. You aggregate all those world experiences into a pattern, and this is the inductive phase into a pattern, into something that you could write as a rule, something that you could write as a general application.

So architects that write books, they don’t write about everything they’ve ever experienced. That would make a very, very long and very boring book. Instead, they consolidate everything they’ve experienced. All their insights from all the experience, they consolidate it into a book. Then, when I read the book, I apply the rule back down to my specific case. So the architect experienced a lot and then she wrote a pattern. Then I read the pattern and I apply it to my case.

So writing a book, in some sense, is an inductive process. Reading a book and applying it is, in some sense, a deductive process. The LLMs, as I’ve seen yet, don’t know how to do that. They don’t know how to apply a rule that they read in a book or in a text and apply it in a novel way to a new situation. What I know as an architect, I know because of the books that I’ve read, but I also know it because of the things that I’ve tried.

I know myself as a human being. So I read the books, I tried it, I interacted, I failed, I made mistakes. And then I read the books again and I apply the knowledge in a careful, curated manner. The LLMs don’t yet have that. So it’s very, very helpful, just like books are very helpful. Books are extremely helpful. I recommend everybody should read books, but don’t do what it says in the book. Apply what it says in the book to your particular case, and then you’ll find the books to be useful and then you’ll find the LLMs to be useful.

LLMs lack the context necessary to make effective architectural decisions [10:20]

Thomas Betts: Yes, the standard architect answer is, “It depends”, right? And it depends is always context-specific. So that moving from the general idea to the very specific, “How does this apply to me?” And recognizing sometimes that thing you read in the book doesn’t apply to your situation, but you may not have known that. You could read the book and say, “Oh, I should always do this”.

Very few architecture guidelines say, “Thou shalt always do this thing”. It always comes down to, “In this situation, do this, and in this situation, do that and evaluate those trade-offs”. So you’re saying the LLMs don’t see those trade-offs?

Avraham Poupko: First of all, they will not see them unless they’ve been made explicit and even then, maybe not. So if I would ask the question, the architect’s question is, you said, Thomas, “What should I do? Should I do A or B?” And the answer always is, it depends. And if I would say, “It depends on what?” The answer is, it depends on context. Context being the exact requirements, functional and not functional, articulated and not articulated, the business needs as articulated and as not.

It depends on the capabilities of the individuals who are implementing the architecture. It depends on the horizon of the business, it depends on the nature of the organization. It depends on the nature of the problem you are solving and of the necessity or urgency or criticality or flexibility of the solution. If you are going to write all that into the prompt, that will be a very, very long prompt.

Now, the architects that do really well, are often the architects that either work for the organization that solves the problem, or who have spent a long amount of time studying the organization, embedded in the organization. That’s why the successful workshops are not one-day workshops. The successful workshops are one-week workshops because it takes time to get to know all the people and forces, and then you could guide them towards an architecture that is appropriate to all the “it depends” things.

And one of the things that LLMs are quite limited in, they do not establish a personal relationship. Now, by a personal relationship, the only thing the LLM knows about me is what I told it about me. But what you know about me is if you told me something, and I made a face or I sneered or I looked away or I focused at you very intently, you know some things interest me and some things bore me. And then that is part of our relationship as humans where we could have a trusting technical relationship.

We don’t have that with text-based LLMs, because they don’t have a body, they don’t have eye contact yet. They can’t look back. So I could stare the LLM in the camera, but the LLM can’t stare at me. Again, I’m saying all this yet. Who knows, maybe in 10 years there’ll be LLMs or not LLMs, but large models that know how to do eye contact, they know how to interpret eye contact, they know how to reciprocate eye contact and know how to think slow and know how to think fast, and know how to be deliberate and take their time and know how to initiate a conversation.

I have not yet had an LLM ever call me and say, “Avraham, remember that conversation we had a couple of days ago? I just thought of something. I want to change my mind because I didn’t fully think it out”. I’ve had humans do that to me all the time.

LLMs do not ask follow up questions [13:47]

Thomas Betts: Yes. I think going back to where you’re saying the LLM isn’t going to ask the next question. If you prompt it, “Help me make this decision. What do I need to consider?” then it knows how to respond. But its job, again, the fundamental purpose of an LLM is to predict the most likely next word or the next phrase.

And so if you give it a prompt and all you say is, “Design a system that solves this problem”, it will spit out a design and it’ll sound reasonable, but it has no basis in your context because you didn’t provide the context. And it didn’t stop to ask, “I need to know more information”, right? You’re saying the LLMs don’t follow up with that request for more information, and a human would do that.

Avraham Poupko: I’m saying exactly that. And humans, mature humans, accountable humans, if you ask me, “Design a system that does something”, I will know, in context, if the responsible thing for me is to design the system or if the responsible thing for me is to ask more questions and then not design. And an appropriate answer is, I’ve asked you a bunch of questions. You, Thomas, told me to design a system, and I asked you three or four questions. And then I come back and I say, “Thomas, I really don’t know how to do that”, or “I don’t feel safe doing that”, or “I’m going to propose a design, but I really would like you to check the environment in which the thing is going to work because I’m a little suspect of myself and I’m not as confident as I sound”.

Mature human beings know how to do that. Or I might say, “I actually did that before and it worked, and this is exactly what you should do. And I actually have a picture or a piece of code that I’ll be happy to share with you”. The LLM doesn’t have experience. It would never say, “Oh, somebody else asked me that question yesterday, so I’m going to just give you that same picture”, or something like that. It doesn’t have long-term memory.

Architects are responsible for their decisions, even if the LLM contributed [15:41]

Thomas Betts: But it sounds just as confident, whether it’s giving the correct answer, if you know what the correct answer is or an incorrect answer. And that’s what makes it difficult to tell, “Is that a good answer? Should I follow that guidance?” So if you aren’t an experienced architect, and let’s say you’re an architect who doesn’t know, the right answer is to ask another question. And you just ask a question and the LLM spits out a response, how do you even evaluate that if you don’t have enough experience built up to say, “I know what I should have done was ask more questions”?

Avraham Poupko: So I’ll actually tell you a tip that I give to coders. Coders have discovered LLMs. They love using LLMs and to generate code and it generates code blindingly fast. And the good coders will then take that code, let’s say, the C or the Python, and read it and make sure they understand it and only then use it. And the average coders, as long as it compiles, they use it. As long as it passes some basic sanity test or as long as it passes the unit test, they will use it.

And then you end up with a lot of what some people call very correct and very bad code. It’s code that is functionally correct but might not have some contextual integrity or might not have some mental or conceptual integrity. Now, the same thing goes with an architect. As an architect, just like I would never apply something I read in a book unchecked, I read it in a book, I would see if it makes sense for me. And I could read a book and say, “No, that doesn’t make any sense, or it doesn’t make sense in my particular context”.

Or I would read a book and say, “Yes, that does make a lot of sense”. Now, I do trust the experience of the author of a book. If she’s a good author that has had a lot of experience and designed very robust systems, I will trust their experience to an extent. The same thing goes with an LLM. When the LLM spits out an answer, that is, at most, a proposal. And I will use it as a proposal. I will read it, I will make sure I understand it, and then I will use it as an architecture or as a basis for an architecture or as a part of an architecture or as a proposal for an architecture or not.

But ultimately, I am accountable. So when the system fails and the pacemaker doesn’t work and the poor person gets a heart attack, I’m the one who’s accountable for that and I should do good architecture. I can’t roll it back on the LLM and say, “Why did you tell me that I should write a pacemaker in HTML? Why didn’t you tell me that you’re supposed to write a pacemaker in machine code, in highly optimized machine code?”

I should have known that because I’m the architect and I’m accountable for the performance decisions. But it’s an extremely helpful tool as such because it’s so fast and it gives such good responses, and many of them are correct and many of them are useful.

You can ask an LLM “why” it suggested something, but the response will lack context [18:35]

Thomas Betts: Again, looking at the interaction you’d have with another human architect, if someone came to me and says, “Here’s the design I came up with, I’d like you to review it”, I’m going to ask the, “Why did you decide that?” question. “Show me your decision making process. Don’t just show me the picture of the design. Show me what did you consider, what did you trade off?” Again, my interactions with LLMs, you ask them a question, it gives you an answer. How are they when you ask why? Because they don’t volunteer why, unless you ask them. But if you ask them, do they give a reasonable why behind the decision?

Avraham Poupko: But it’ll never be a contextual answer. That means if you give me a design and I say, “Why?” and you could say something like, “Well, last week I gave it to another organization and it worked well”, or “Last week I gave something else to the organization and it didn’t work well”. Or you’ll tell me a story. You’ll tell me, “I actually did see QRS in a previous role and I saw that it was too hard to explain to the people”, or “It ended up not being relevant”, or “I used the schema database, but then the data was so varied that it was a mistake, and I think that in your case, which is similar enough, we’re going to go schemaless”, or whatever it is.

So the why won’t always be a technical argument. It might be a business argument, it might be an experiential argument, it might be some other rhetorical device. The LLM will always give me a technical answer because technically, that’s the appropriate answer for the question. It’s a different why. And a good question to ask an architect, a very good question to ask an architect is not why, because the hard questions are questions that have two sides, right? I’m now dealing with an issue in an AWS deployment and I’m wondering, “Should I go EKS or should I go ECR? Should I go with the Amazon Container Registry or should I go with the Kubernetes?”

And each one has its pluses and minuses. And I know how to argue both ways. Should I go multi-tenant or single-tenant? The question should be, what, ultimately, convinced you? Not why, because I could give you a counter-argument. But given all the arguments for and against, why were you convinced to go multi-tenant? Why were you convinced to go Kubernetes? And you’ll have to admit and say, “Well, it’s not risk-free and the trade-offs to the other, and I know how to make the other argument, but given everything that I know about everything, this is what I decided is the most reasonable thing”.

Humans are always learning, while LLMs have a fixed mindset [20:57]

Thomas Betts: I like the idea of the human is always answering the question based on the knowledge I’ve had last week, previous project, a year ago. We have that, you mentioned earlier, that humans are always going through that learning cycle. It’s not a, “I learned and now I apply. I’m done with school”. But the LLM had that learning process and then it’s done. It’s baked. It’s a built-in model. Where else does that surface in the interactions you’ve had working with this? How does it not respond to more recent situations?

Avraham Poupko: So the experiences that we remember are those experiences that caused us pain or caused us joy. We’re not really statistical models in that sense. So if I made a bad decision and it really, really hurt me, I will remember that for a long time. I got fired because I made a bad decision. I got promoted because I made a brilliant decision, or everybody clapped their hands and said, “That was an amazing thing”. And they took my architecture and framed it on a wall in the hall of fame. I’ll remember that.

And if I made a reasonably good decision 10 times and the system sort of worked, that would be okay. And that’s my experience. You might have a different experience. What got me fired, got you promoted, and what got you promoted, got me fired. And that’s why we’re different. And that’s good. And one of the things that I encourage the people to do, the people that I manage or the people that I work with, is read lots of books and talk to lots of architects and listen to lots of stories.

Because those stories and those books that you read from multiple sources and multiple experience, read old books, read new books, read and listen to stories of successes from times of old and times of new, and you’ll create your own stories and notice these things. So when you, as an architect, do a good design and it’s robust and it works well and the system deploys, tell yourself a story and try to remember why that worked well and what you felt about that.

LLMs don’t know how to do that. They’re not natural storytellers. If something happens to me and I want to tell it to my friends or to my family or over on a podcast, I will spend some time deliberately rehearsing my narrative. As I’m going, as I’m preparing this story, I’m going to tell the story, “It was a dark night and I was three days late for the deadline and I was designing”. And it’s a narrative. I’m not thinking of it the first time I’m saying it. I thought of it a long time before. And part of me rehearsing the narrative, is me processing the story, is me experiencing the story.

And you know that good storytellers, if we give a story during a talk or during an event, they tell it with a lot of emotion, and you can tell that they’re experiencing it all over again. And they’ve spent a lot of time crafting words and body language and intonation. People that get this really, really well are stand-up comedians. Stand-up comedians are artists of the spoken word. If you would read a transcript of a stand-up comedy, it’s not funny. What makes a stand-up comedian so funny is their interaction with the audience, is there sense of timing, is there sense of story, is there sense of narrative, is there sense of context.

I haven’t yet seen an LLM be a stand-up comedian. It could tell a joke, and the joke might even be funny. It might be surprising, it might have a certain degree of incongruity or a certain degree of play on words or a certain degree of double meaning or ambiguity or all those things that comprise written humor. But most stand-up comedy is a story, and it’s a combination of the familiar and the surprising, the familiar and the surprising. So the stand-up comedian tells a story, and you say to yourself, “Yes, that happened to me too. It’s really funny”. Or he tells you something surprising and you say, “That never happened to me. I only live normal things”.

And that’s a very, very human thing. And part of it, of course, has to do with our mortality and the fact that we live in time and we’re aware of time. Our bodies tell us about time. We get tired, we get hungry, we get all kinds of other things. Time might even be our most important sense, certainly as adults, much more than being hot or cold is we’re aware of time. The reason that we are so driven to make good use of our lives, of our careers, of our relationships is because our time is limited. Death might be the biggest driver to be productive.

LLMs do not continue thinking about something after you interacted with them [25:28]

Thomas Betts: Then time is also one of those factors that we understand the benefits. Sometimes I think about a problem and I’m stuck in the cycle, and then next day in the shower or overnight, whatever it is, I’m like, “Oh, that’s the brilliant idea”. I just need to get away from the problem enough and think about it and let my brain do some work. And that’s not something that’s happening with the LLMs.

Going back to your example of the LLM never calls you up two days later and says, “Hey, I thought about that a little bit more. Let’s do this instead”. But that’s the thing. I keep working, that time keeps progressing, and every LLM is an interaction. Right now, this moment I engage, it responds, we’re done.

Avraham Poupko: That’s an interesting point, and I’d like to elaborate on that a bit. As a human, I know how I learn best and I know I’m stuck in this problem. I need to go take a walk because I know myself, I know how I learn. I’m stuck in this problem. I need to call up a friend and bounce it around, or I need to take a nap or I need to take a shower or I need to give it a few days. And the answer we say will come to me.

And it’s not a divine statement, but it means I know how my mind works. I know how my mind works. I’m very, very self-aware. I know how I learn well. And the good students know how they learn well and know how they don’t learn well. I have not yet seen an LLM that knows how to say, “I don’t know the answer yet, but I know how my mind works and I’m going to think about this”.

LLMs are trained on words; Humans are trained through experiences [26:52]

Thomas Betts: Yes, this is going back to, “Is it going to replace us?” And we don’t expect our tools to do this kind of thing. We expect humans to do human things, but I don’t expect my hammer or my drill to come back with a question, “Oh, I need a few minutes to think about pounding in that nail”. I expect to pick up the hammer and pound in the nail. And the fact that it is involving language is confusing, but there is no behavior, I guess is what we’re getting to.

The interaction model is, “I give you words, you give me words in response”. And if you think about it in just those terms, you have a better expectation of what’s going to happen and you just use it as a tool. But if you think, “I expect behavior”, it starts thinking like an architect, which is we’re placing human characteristics on this thing that has no human characteristics. That’s where we start to go into the slippery slope of we’re going to have assumptions that it’s going to do more than it’s supposed to do.

Avraham Poupko: That is exactly, exactly true. And it’s becoming more and more apparent to me that so much of the world wisdom and so much of my wisdom, it’s not written in words. Maybe it’s not even spoken in words. It’s written in experience, in the way I’ve seen other people behave that has never been put into written words or spoken words.

So when I watch a good architect moderate a workshop, she doesn’t say, “And now I am going to stop for a minute and let somebody else speak”. She just does it. Or, “Now I’m going to give a moment of silence”, or “Now I’m going to interrupt”, or “Now I’m going to ask a provocative question”. They just do that. And the good architects catch on that. And then, when they run workshops, they do the same because they learned it and they saw that it works. And so much of what we do is emulated behavior and not behavior that has found expression in text or in spoken word.

When we watch art, when we look at art, some art deeply moves us. Now, an art critic might be able to say what it was about the picture that moved us, what it was about the piece of music that moved us. And then the LLM will see the picture and see the words of the art critic, and it’ll see another picture with the other words of the art critic. And then the LLM will be able to become an art critic, and it’ll see a new picture and tell you what emotions that evokes.

But when the art critic tells you in words what the music or the picture made her feel, she’s not able to do it completely in words because they’re never complete. Otherwise, I wouldn’t go to the museum. I’d just read the art criticism of the museum. But I want to go to the museum, and I want to go to the concert, and I want to go to the concert with other people, and I want to experience the music. I don’t want to read about it. I want to experience. And there is a different experience in experiencing something than reading about it.

The best engineers and architects are curious; LLMs do not have curiosity [29:42]

Thomas Betts: Yes. I thought about this a few minutes ago in the conversation that you’re talking about the good architect is picking up on these behaviors and then going to start using those behaviors in the future and adapting them to new situations, but saying, “Oh, I’ve seen someone do this”. Going back to the LLM has the learning phase and then it’s done learning.

I’ve unfortunately interacted with a few architects and engineers who feel like I’ve done my learning. I went to school, I learned this thing, and that’s all the knowledge I need to have, and I’m just going to keep applying that. This is an extreme example. Maybe 10, 15 years ago, someone’s like, “I learned ColdFusion. That’s all I ever need to know to write websites”. And I don’t know anyone in 2024 that’s still using ColdFusion, but he was adamant that was going to carry him for the rest of his career.

And that idea that I’ve learned everything and all I need to know is going to get me through forever, doesn’t make you a good engineer, doesn’t make you a good architect. You might be competent, you might be okay, but you’re never going to be getting promoted. You’re never going to be at the top of your career. You’re never going to grow.

And I wonder if that’s analogous to some of these LLMs. I can use that person who has this one skill because they’re great at that one skill, but I’m only going to use them for that one thing. I’m never going to ask them to step outside their comfort zone. The LLM, I’m going to use them for the one thing that it’s good at.

Avraham Poupko: That’s a great analogy. If I’m a hiring manager, let’s say as a hypothetical example. And I have two candidates, one that thinks she knows everything or I would even say knows everything, and is not interested and doesn’t believe that there’s anything left to learn because I know it all. And the other candidate that admittedly doesn’t know everything but is curious, that is proactively curious and is constantly interested in learning and experiencing new things and new architectures.

Of course, it’s a matter of scale, like everything. But I would say I would give extra points to the one that’s curious and wants to learn new things. And if you’ve ever had a curious member on your team, either as a colleague or as a manager or as a managee, people that like learning are fun. And anybody that has children or grandchildren, one of the things we love about children is that they’re experiencing the world and they’re so curious and they’re so excited by new things.

And if it’s your own children or children that you’re responsible for, maybe nieces and nephews or grandchildren, you vicariously learn when they learn. It’s a lot of fun. You become, in some sense… The parents among us will know exactly what I mean. You become a child all over again when you watch your child learn new things and experience new things to the point that you say, “Oh, I wish I could re-experience that awe at the world and at the surprise”. And LLMs have not shown that feeling.

I have never seen an LLM say, “Wow, that was really, really interesting and inspiring. I am going to remember this session for a long time. I’m going to go back home and tell the other LLMs about what I just learned today or what I just experienced today. I have to make a podcast about this”. They don’t work like that. And humans, if you’ve done something fun and you come home energized, even though you haven’t slept for a while, and there’s a lot of brain fatigue going around, you think, “Yes, that was really, really cool. That was fun. I learned something”.

Thomas Betts: I like that. Any last-minute words you want to use to wrap up our discussion?

Avraham Poupko: Well, first of all, since there are lots of architects in our audience, learn. Learn a lot. And experiment with the LLMs, and experiment and do fun things and funny things. And we were going to talk about virtual think tanks and we were going to talk about some other ideas. We need that for another podcast.

But the main thing is experiment with new technologies, don’t be afraid of failing. Well, be a little afraid of failing, but don’t be too afraid of failing. And have fun. And Thomas, thank you so much for having me here. This has been a real pleasure and delight, and I hope we have another opportunity in the future.

Thomas Betts: Yes, absolutely, Avraham. It’s great talking to you again. Good seeing you on screen at least. And listeners, we hope you’ll join us again for a future episode of The InfoQ Podcast.

.

From this page you also have access to our recorded show notes. They all have clickable links that will take you directly to that part of the audio.

MMS • RSS

FerretDB has announced the first release candidate of version 2.0. Now powered by the recently released DocumentDB, FerretDB serves as an open-source alternative to MongoDB, bringing significant performance improvements, enhanced feature compatibility, vector search capabilities, and replication support.

Originally launched as MangoDB three years ago, FerretDB became generally available last year, as previously reported by InfoQ. Peter Farkas, co-founder and CEO of FerretDB, writes:

FerretDB 2.0 represents a leap forward in terms of performance and compatibility. Thanks to changes under the hood, FerretDB is now up to 20x faster for certain workloads, making it as performant as leading alternatives on the market. Users who may have encountered compatibility issues in previous versions will be pleased to find that FerretDB now supports a wider range of applications, allowing more apps to work seamlessly.

Released under the Apache 2.0 license, FerretDB is usually compatible with MongoDB drivers and tools. It is designed as a drop-in replacement for MongoDB 5.0+ for many open-source and early-stage commercial projects that prefer to avoid the SSPL license, a source-available copyleft software license.

FerretDB 2.x is leveraging Microsoft’s DocumentDB PostgreSQL extension. This open-source extension, licensed under MIT, introduces the BSON data type and related operations to PostgreSQL. The solution includes two PostgreSQL extensions: pg_documentdb_core for BSON optimization and pg_documentdb_api for data operations.

According to the FerretDB team, maintaining compatibility between DocumentDB and FerretDB allows users to run document database workloads on Postgres with improved performance and better support for existing applications. Describing the engine behind the vCore-based Azure Cosmos DB for MongoDB, Abinav Rameesh, principal product manager at Azure, explains:

Users looking for a ready-to-use NoSQL database can leverage an existing solution in FerretDB (…) While users can interact with DocumentDB through Postgres, FerretDB 2.0 provides an interface with a document database protocol.

In a LinkedIn comment, Farkas adds:

With Microsoft’s open sourcing of DocumentDB, we are closer than ever to an industry-wide collaboration on creating an open standard for document databases.

In a separate article, Farkas explains why he believes document databases need standardization beyond just being “MongoDB-compatible.” FerretDB provides a list of known differences from MongoDB, noting that while it uses the same protocol error names and codes, the exact error messages may differ in some cases. Although integration with DocumentDB improves performance, it represents a significant shift and introduces regression constraints compared to FerretDB 1.0. Farkas writes:

With the release of FerretDB 2.0, we are now focusing exclusively on supporting PostgreSQL databases utilizing DocumentDB (…) However, for those who rely on earlier versions and backends, FerretDB 1.x remains available on our GitHub repository, and we encourage the community to continue contributing to its development or fork and extend it on their own.

As part of the FerretDB 2.0 launch, FerretDB Cloud is in development. This managed database-as-a-service option will initially be available on AWS and GCP, with support for Microsoft Azure planned for a later date. The high-level road map of the FerreDB project is available on GitHub.

MMS • Renato Losio

FerretDB has announced the first release candidate of version 2.0. Now powered by the recently released DocumentDB, FerretDB serves as an open-source alternative to MongoDB, bringing significant performance improvements, enhanced feature compatibility, vector search capabilities, and replication support.

Originally launched as MangoDB three years ago, FerretDB became generally available last year, as previously reported by InfoQ. Peter Farkas, co-founder and CEO of FerretDB, writes:

FerretDB 2.0 represents a leap forward in terms of performance and compatibility. Thanks to changes under the hood, FerretDB is now up to 20x faster for certain workloads, making it as performant as leading alternatives on the market. Users who may have encountered compatibility issues in previous versions will be pleased to find that FerretDB now supports a wider range of applications, allowing more apps to work seamlessly.

Released under the Apache 2.0 license, FerretDB is usually compatible with MongoDB drivers and tools. It is designed as a drop-in replacement for MongoDB 5.0+ for many open-source and early-stage commercial projects that prefer to avoid the SSPL license, a source-available copyleft software license.

FerretDB 2.x is leveraging Microsoft’s DocumentDB PostgreSQL extension. This open-source extension, licensed under MIT, introduces the BSON data type and related operations to PostgreSQL. The solution includes two PostgreSQL extensions: pg_documentdb_core for BSON optimization and pg_documentdb_api for data operations.

According to the FerretDB team, maintaining compatibility between DocumentDB and FerretDB allows users to run document database workloads on Postgres with improved performance and better support for existing applications. Describing the engine behind the vCore-based Azure Cosmos DB for MongoDB, Abinav Rameesh, principal product manager at Azure, explains:

Users looking for a ready-to-use NoSQL database can leverage an existing solution in FerretDB (…) While users can interact with DocumentDB through Postgres, FerretDB 2.0 provides an interface with a document database protocol.

In a LinkedIn comment, Farkas adds:

With Microsoft’s open sourcing of DocumentDB, we are closer than ever to an industry-wide collaboration on creating an open standard for document databases.

In a separate article, Farkas explains why he believes document databases need standardization beyond just being “MongoDB-compatible.” FerretDB provides a list of known differences from MongoDB, noting that while it uses the same protocol error names and codes, the exact error messages may differ in some cases. Although integration with DocumentDB improves performance, it represents a significant shift and introduces regression constraints compared to FerretDB 1.0. Farkas writes:

With the release of FerretDB 2.0, we are now focusing exclusively on supporting PostgreSQL databases utilizing DocumentDB (…) However, for those who rely on earlier versions and backends, FerretDB 1.x remains available on our GitHub repository, and we encourage the community to continue contributing to its development or fork and extend it on their own.

As part of the FerretDB 2.0 launch, FerretDB Cloud is in development. This managed database-as-a-service option will initially be available on AWS and GCP, with support for Microsoft Azure planned for a later date. The high-level road map of the FerreDB project is available on GitHub.

MMS • RSS

abrdn plc lessened its stake in shares of MongoDB, Inc. (NASDAQ:MDB – Free Report) by 16.7% in the 4th quarter, according to its most recent disclosure with the SEC. The institutional investor owned 34,877 shares of the company’s stock after selling 6,998 shares during the period. abrdn plc’s holdings in MongoDB were worth $8,184,000 at the end of the most recent quarter.

Several other hedge funds and other institutional investors also recently bought and sold shares of MDB. B.O.S.S. Retirement Advisors LLC purchased a new stake in MongoDB during the fourth quarter worth $606,000. Aigen Investment Management LP bought a new position in shares of MongoDB during the 3rd quarter worth approximately $1,045,000. Geode Capital Management LLC boosted its stake in MongoDB by 2.9% in the third quarter. Geode Capital Management LLC now owns 1,230,036 shares of the company’s stock valued at $331,776,000 after acquiring an additional 34,814 shares in the last quarter. B. Metzler seel. Sohn & Co. Holding AG acquired a new stake in MongoDB in the third quarter valued at approximately $4,366,000. Finally, Charles Schwab Investment Management Inc. increased its position in shares of MongoDB by 2.8% during the third quarter. Charles Schwab Investment Management Inc. now owns 278,419 shares of the company’s stock worth $75,271,000 after purchasing an additional 7,575 shares in the last quarter. Institutional investors own 89.29% of the company’s stock.

Insider Transactions at MongoDB

In other news, CAO Thomas Bull sold 169 shares of the firm’s stock in a transaction that occurred on Thursday, January 2nd. The shares were sold at an average price of $234.09, for a total transaction of $39,561.21. Following the completion of the sale, the chief accounting officer now owns 14,899 shares in the company, valued at $3,487,706.91. This trade represents a 1.12 % decrease in their position. The sale was disclosed in a filing with the Securities & Exchange Commission, which is available at this hyperlink. Also, Director Dwight A. Merriman sold 3,000 shares of MongoDB stock in a transaction that occurred on Monday, February 3rd. The shares were sold at an average price of $266.00, for a total transaction of $798,000.00. Following the sale, the director now directly owns 1,113,006 shares in the company, valued at approximately $296,059,596. The trade was a 0.27 % decrease in their position. The disclosure for this sale can be found here. Over the last three months, insiders have sold 42,491 shares of company stock valued at $11,543,480. Corporate insiders own 3.60% of the company’s stock.

MongoDB Trading Down 0.1 %

MongoDB stock traded down $0.23 during midday trading on Friday, reaching $277.87. The company had a trading volume of 1,285,901 shares, compared to its average volume of 1,481,443. MongoDB, Inc. has a 12-month low of $212.74 and a 12-month high of $509.62. The firm’s fifty day simple moving average is $267.08 and its 200-day simple moving average is $270.55.

MongoDB (NASDAQ:MDB – Get Free Report) last issued its quarterly earnings results on Monday, December 9th. The company reported $1.16 earnings per share (EPS) for the quarter, topping analysts’ consensus estimates of $0.68 by $0.48. MongoDB had a negative return on equity of 12.22% and a negative net margin of 10.46%. The firm had revenue of $529.40 million for the quarter, compared to analyst estimates of $497.39 million. During the same period in the previous year, the company posted $0.96 earnings per share. The business’s revenue was up 22.3% on a year-over-year basis. On average, sell-side analysts predict that MongoDB, Inc. will post -1.78 EPS for the current fiscal year.

Wall Street Analyst Weigh In

A number of analysts have recently weighed in on the company. Guggenheim raised MongoDB from a “neutral” rating to a “buy” rating and set a $300.00 price objective for the company in a research report on Monday, January 6th. Tigress Financial lifted their price objective on shares of MongoDB from $400.00 to $430.00 and gave the stock a “buy” rating in a research note on Wednesday, December 18th. Citigroup upped their target price on MongoDB from $400.00 to $430.00 and gave the company a “buy” rating in a research report on Monday, December 16th. Rosenblatt Securities initiated coverage on MongoDB in a research report on Tuesday, December 17th. They set a “buy” rating and a $350.00 price target for the company. Finally, Royal Bank of Canada increased their price objective on MongoDB from $350.00 to $400.00 and gave the company an “outperform” rating in a report on Tuesday, December 10th. Two analysts have rated the stock with a sell rating, four have given a hold rating, twenty-three have issued a buy rating and two have issued a strong buy rating to the company. Based on data from MarketBeat, the company has a consensus rating of “Moderate Buy” and a consensus price target of $361.00.

Get Our Latest Research Report on MongoDB

MongoDB Company Profile

MongoDB, Inc, together with its subsidiaries, provides general purpose database platform worldwide. The company provides MongoDB Atlas, a hosted multi-cloud database-as-a-service solution; MongoDB Enterprise Advanced, a commercial database server for enterprise customers to run in the cloud, on-premises, or in a hybrid environment; and Community Server, a free-to-download version of its database, which includes the functionality that developers need to get started with MongoDB.

Recommended Stories

Before you consider MongoDB, you’ll want to hear this.

MarketBeat keeps track of Wall Street’s top-rated and best performing research analysts and the stocks they recommend to their clients on a daily basis. MarketBeat has identified the five stocks that top analysts are quietly whispering to their clients to buy now before the broader market catches on… and MongoDB wasn’t on the list.

While MongoDB currently has a “Moderate Buy” rating among analysts, top-rated analysts believe these five stocks are better buys.

As the AI market heats up, investors who have a vision for artificial intelligence have the potential to see real returns. Learn about the industry as a whole as well as seven companies that are getting work done with the power of AI.

MMS • RSS

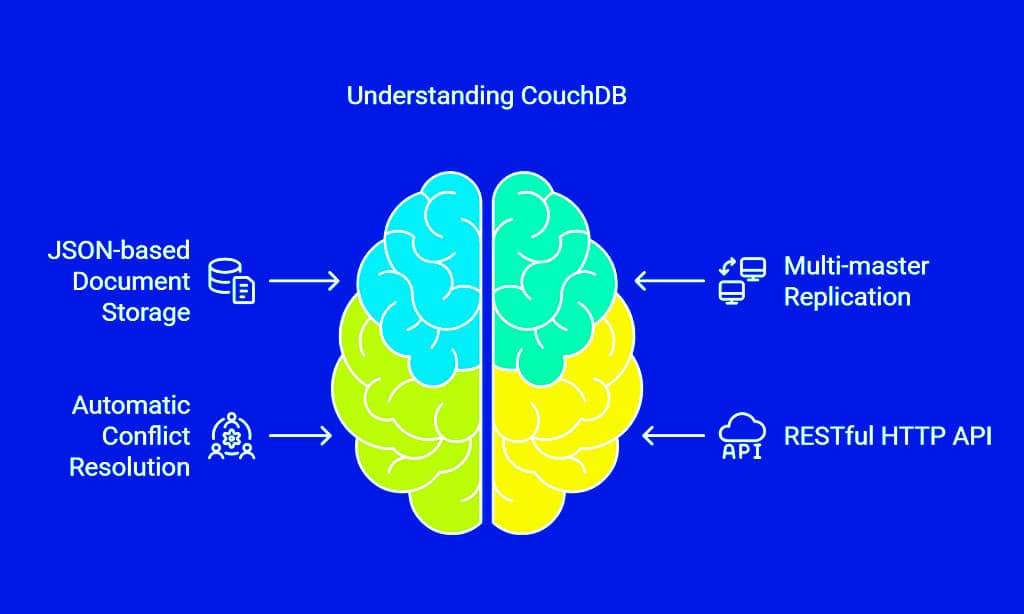

In today’s digital landscape, scalability is a crucial factor for web applications. As businesses expand, their data grows exponentially, making traditional relational databases less efficient for handling large-scale applications.

This is where NoSQL databases come into play. The best NoSQL databases for scalable web applications offer high performance, flexibility, and distributed architecture to meet modern development needs.

Unlike traditional relational databases that rely on structured schemas and SQL queries, NoSQL databases provide diverse data storage solutions that accommodate unstructured, semi-structured, and structured data.

These databases are designed to handle rapid growth, making them ideal for businesses dealing with massive datasets, real-time analytics, and cloud-based applications.

In this comprehensive guide, we will explore the 5 best NoSQL databases for scalable web applications, highlighting their features, use cases, and benefits. By the end of this article, you’ll have a clear understanding of which NoSQL database suits your specific needs.

What Makes a NoSQL Database Ideal for Scalable Web Applications?

Before diving into the best NoSQL databases for scalable web applications, it’s essential to understand what makes them suitable for scalability. NoSQL databases differ from traditional relational databases in several key ways:

High Performance & Speed

- NoSQL databases handle large volumes of data with low latency.

- They use optimized indexing and in-memory storage to enhance query speed.

Flexible Data Modeling

- Unlike relational databases, NoSQL allows dynamic schema changes.

- Supports a variety of data models: document, key-value, column-family, and graph.

Scalability & Horizontal Expansion

- NoSQL databases support horizontal scaling by distributing data across multiple nodes.

- This ensures the system remains highly available even under heavy workloads.

Fault Tolerance & Reliability

- Built-in replication and auto-failover mechanisms ensure high availability.

- Data is distributed across multiple servers to prevent single points of failure.

Now, let’s explore the 5 best NoSQL databases for scalable web applications and how they cater to different business needs.

The 5 Best NoSQL Databases for Scalable Web Applications

Selecting the right NoSQL database is essential for web applications that require high availability, low latency, and seamless scalability. As different NoSQL databases cater to different business needs, understanding their unique strengths is crucial. Below, we explore the best NoSQL databases for scalable web applications, each excelling in specific use cases.

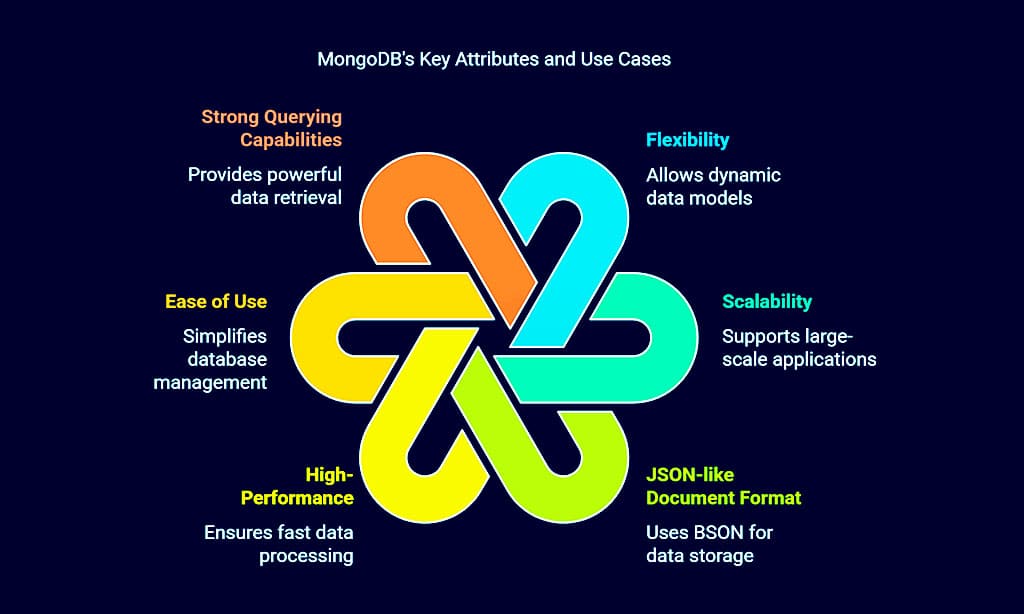

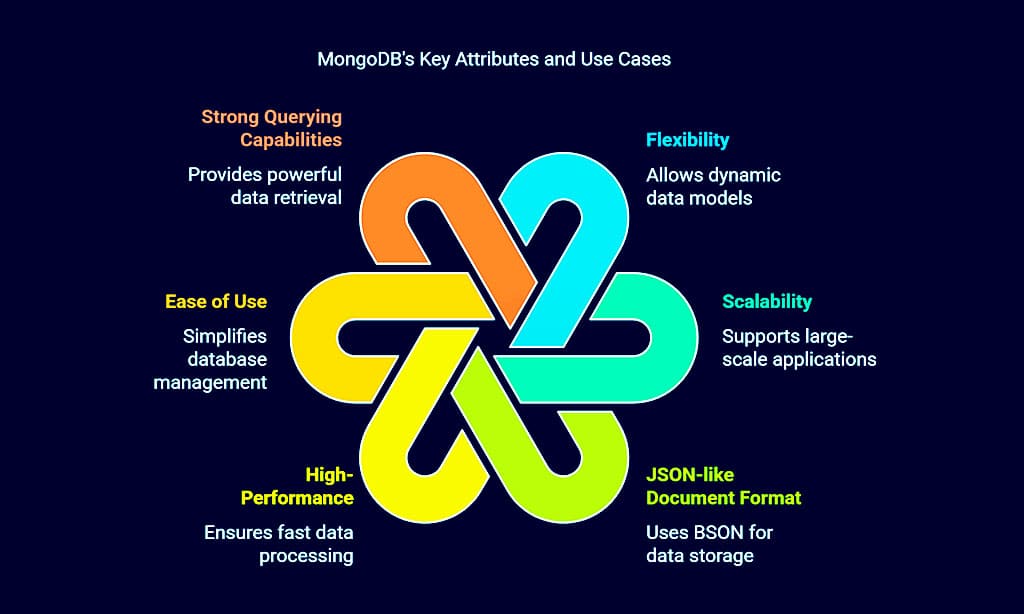

1. MongoDB – Best for General Purpose & High Scalability

MongoDB is one of the most popular NoSQL databases, designed for flexibility and high-performance scalability. It stores data in a JSON-like document format, making it an excellent choice for developers who require fast and efficient data retrieval. Organizations such as eBay, Forbes, and Adobe use MongoDB for its ease of scalability and strong querying capabilities.

Key Features:

- Document-oriented NoSQL database.

- Supports automatic sharding and replication.

- Schema-less design for flexible data modeling.

- Indexing and aggregation framework for fast queries.

Use Cases:

| Feature | MongoDB |

| Data Model | Document |

| Scalability | High |

| Performance | High |

| Best Use Case | General-Purpose Apps |

2. Cassandra – Best for High Availability & Distributed Applications

Apache Cassandra is a decentralized, highly available NoSQL database used by companies such as Netflix, Facebook, and Twitter. It is designed to handle massive amounts of data across multiple data centers, ensuring no single point of failure.

Key Features:

- Decentralized peer-to-peer architecture.

- Supports automatic replication across multiple data centers.

- Handles massive read and write operations efficiently.

- No single point of failure.

Use Cases:

- IoT and time-series data storage.

- High-traffic websites.

- Decentralized applications.

| Feature | Cassandra |

| Data Model | Column-Family |

| Scalability | Very High |

| Performance | High |