Month: June 2025

ScyllaDB X Cloud’s autoscaling capabilities meet the needs of unpredictable workloads in real time

MMS • RSS

The team behind the open-source distributed NoSQL database ScyllaDB has announced a new iteration of its managed offering, this time focusing on adapting workloads based on demand.

ScyllaDB X Cloud can scale up or down within a matter of minutes to meet actual usage, eliminating the need to overprovision for worst-case scenarios or deal with latency while waiting for autoscaling to occur. For example, the company says it only takes a few minutes to scale from 100K to 2M OPS.

According to the company, applications like retail or food delivery services often have peaks aligned with customer work hours and then a low baseline in the off-hours. “In this case, the peak loads are 3x the base and require 2-3x the resources. With ScyllaDB X Cloud, they can provision for the baseline and quickly scale in/out as needed to serve the peaks. They get the steady low latency they need without having to overprovision – paying for peak capacity 24/7 when it’s really only needed for 4 hours a day,” Tzach Livyatan, VP of product for ScyllaDB, wrote in a blog post.

ScyllaDB X Cloud also reaps the benefits of tablets, which were introduced last year in ScyllaDB Enterprise. Tablets distribute data by splitting tables into smaller logical pieces, or tablets, that are dynamically balanced across the cluster.

“ScyllaDB X Cloud lets you take full advantage of tablets’ elasticity. Scaling can be triggered automatically based on storage capacity (more on this below) or based on your knowledge of expected usage patterns. Moreover, as capacity expands and contracts, we’ll automatically optimize both node count and utilization,” Livyatan said.

Tablets also increase the maximum storage utilization that ScyllaDB can safely run at from 70% to 90%. This is because tablets can move data to new nodes faster, allowing the database to defer scaling until the last minute.

Support for mixed instance sizes also helps make 90% storage utilization practical. For example, a company can start with tiny instances and then replace them with larger instances later if needed, rather than needing to add the same instance size again.

“Previously, we recommended adding nodes at 70% capacity. This was because node additions were unpredictable and slow — sometimes taking hours or days — and you risked running out of space. We’d send a soft alert at 50% and automatically add nodes at 70%. However, those big nodes often sat underutilized. With ScyllaDB X Cloud’s tablets architecture, we can safely target 90% utilization. That’s particularly helpful for teams with storage-bound workloads,” Livyatan said.
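To make the effect of the utilization target concrete, the arithmetic can be sketched as below. The data size and per-node capacity are invented numbers for illustration, and `nodes_needed` is a hypothetical helper, not part of any ScyllaDB tooling.

```python
def nodes_needed(data_gb: int, node_capacity_gb: int, target_pct: int) -> int:
    """Smallest node count that keeps storage utilization at or below target_pct."""
    usable_per_node = node_capacity_gb * target_pct  # scaled by 100
    # Integer ceiling division avoids floating-point rounding surprises.
    return -(-(data_gb * 100) // usable_per_node)


DATA_GB = 100_000   # assumed data set size
NODE_GB = 10_000    # assumed per-node storage capacity

at_70 = nodes_needed(DATA_GB, NODE_GB, 70)
at_90 = nodes_needed(DATA_GB, NODE_GB, 90)
print(f"70% target: {at_70} nodes, 90% target: {at_90} nodes")
```

Under these assumed numbers, the higher target needs fewer nodes for the same data, which is the cost argument the quote is making.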

Other new features in ScyllaDB X Cloud include file-based streaming, dictionary-based compression, and a new “Flex Credit” pricing option, which combines the cost benefits of an annual commitment with the flexibility of on-demand pricing, ScyllaDB says.

MMS • RSS

Cloud Firestore—a flexible, scalable database for mobile, web, and server development from Firebase and Google Cloud—offers a variety of benefits for its developers, from flexible, hierarchical data structures to expressive querying and real-time updates. Now, Firestore developers can leverage MongoDB’s API portability in conjunction with Firestore’s differentiated serverless service, unlocking even more value.

Minh Nguyen, senior product manager, Google Cloud, and Patrick Costello, engineering manager, Google Cloud, joined DBTA’s webinar, Hands On: Firestore With MongoDB Compatibility in Action, to walk viewers through the wide array of advantages offered by Firestore with MongoDB compatibility, including multi-region replication with strong consistency, virtually unlimited scalability, high availability of up to 99.999% SLA, and single-digit milliseconds read performance.

To begin, Nguyen provided a brief overview of Firestore, Google’s cloud-first, serverless document database.

As “an enterprise-ready document database that allows you to build rich applications for your users,” said Nguyen, Firestore offers an advanced query engine with more than 120 capabilities supporting JSON data types; strongly consistent reads and ACID transactions; integrations with GCP governance; broad support for SDKs, drivers, and tools in more than 17 programming languages; batch and live-streaming migration; simple, cost-effective pricing based on the traffic you incur; and more.

Now, with MongoDB compatibility, Firestore helps “bring the database to your developers,” explained Nguyen. This integration enables developers to utilize their existing MongoDB app code, drivers, and tools directly within Firestore, benefitting from Firestore’s scalability and availability—with no code changes.

Data interoperability, where users can leverage Firestore and Datastore SDKs alongside MongoDB drivers, is coming later this year. According to Nguyen, “This enables developers to get started on Firestore with MongoDB compatibility, but then, furthermore, take advantage of Firestore’s innovative, real-time and offline caching SDKs.”

“Firestore brings together multiple, popular developer communities into one system,” emphasized Nguyen. “Firestore supports MongoDB tools, Google Cloud service integrations, and Firebase service integrations…allowing you to maximize these ecosystems.”

Firestore with MongoDB compatibility also delivers on security and compliance, offering features such as:

- Data encryption with Customer Managed Encryption Key (CMEK)

- IAM authentication and authorization

- Cloud Audit Logging for compliance

- Cloud Monitoring for insights and alerts

- Disaster recovery strategies ranging from backups to comprehensive options to ensure operational resilience

- Database Center, a unified view for Firestore fleet management with AI-powered optimization

- Firestore Query Explain, which provides query plan analysis

- Firestore Query Insights, which identifies and resolves query performance issues with detailed diagnostics

Following Nguyen’s overview, Costello offered more detailed explanations of how Firestore works “under the hood,” further aided by live demos of the product.

This is only a snippet of the full Hands On: Firestore With MongoDB Compatibility in Action webinar. For the full webinar, featuring more detailed explanations, demos, a Q&A, and more, you can view an archived version of the webinar here.

MMS • David Berg Romain Cledat

Transcript

Berg: I’m David, this is Romain. We’re going to be talking about the breadth of use cases from machine learning at Netflix. We’re not going to focus on the machine learning itself, but rather the infrastructure that we built to support all these diverse use cases. Just to give you an idea of some of the verticals that our team supports, the Metaflow team, we work on computer vision, something called Content Demand Modeling, which we’ll use as a use case and talk a little bit more detail about later. You’re obviously familiar with recommendations and personalization being a very key part of our business. Intelligent infrastructure is something that is over the past few years really becoming a big use case. This is things like systems that parse logging, or just, generally speaking, engineers starting to use more machine learning into their traditional engineering products. Payments and growth ads. We’ll talk about Content Knowledge Graph a little bit.

Throughout the talk, we’re going to refer back to a few specific examples to highlight some of the aspects of our architecture. This first one is identity resolution. We try to keep a knowledge graph of all actors, movies, and various entities in our space, and they have maybe different representations across different databases, maybe some missing data, different attributes, and things like that. This is a massively parallel computation matching problem to try to determine which entities belong to one another. We’re also going to talk about media processing.

On top of the Metaflow Hosting part of our product, we have a whole media processing system. This is building infra on top of our infra. We’re going to talk about content decision-making, and this is a complex set of models that try to predict the value or the demand for different content across the entire life cycle of the content. This goes all the way from when we first hear a pitch all the way to post-service where we have actual viewing behavior.

All of these models need to be orchestrated to give one complete view of the world. We’re also going to talk about a really interesting use case, which is meta-models. These are models that are used to explain other models. We’re going to talk about dependency management as a driving factor for this use case. To give you an idea of the size of our platform, here are some numbers. To put it into perspective for Netflix overall, we are not the only machine learning set of tooling in Netflix, but we are the Python-paved path. Metaflow is all about doing machine learning in Python. We have a whole separate Java, Scala ecosystem that has historically been used for personalization and recommendations. If you’re interested in that, we have lots of tech blogs, lots of Netflix research blogs on that topic as well.

Platform Principles

We want to focus on some of the principles that we used when designing Metaflow, and they might be a little bit different. Everybody knows why we have platforms. There’s obviously cost savings, consolidation of software, and a variety of business-related things.

Myself, I come from a neuroscience background, and so we started to think about what makes people productive, because machines are actually relatively cheap compared to people these days. We want to minimize the overall cognitive load that someone using our platform experiences so that they can focus more on the machine learning. What does that mean? It means reducing anxiety. It means having stable platforms that people feel comfortable operating, comfortable experimenting with. That helps them push their work, reducing the overall attentional load. This means building software that doesn’t draw attention to itself, but rather lets people focus their attention on their own work, unlike this young woman here whose production ranking model seems to be a small part of this system that she’s trying to wrangle. We also want to reduce memory. We want to handle complexity on behalf of the user as opposed to push complexity back onto them. We’ll go over a few principles that we’ve learned over the years.

One of them is the house of cards effect. No one would be comfortable building their own system on top of this rather shaky-looking house of cards. There’s another problem with that. Not everyone can stand on top of your system. You need a scaffolded system such that at any given point, they can tap off to do their own project. You can’t expect any machine learning platform to handle all use cases, but you certainly can allow people opportunity to build on top of your platform.

Another one that we’ve seen over the years is the puzzle effect. You may have seen that many platforms have components and they all fit together, but they fit together in really non-obvious ways and maybe only one way. That’s more of a puzzle. What we would like to build are Legos, things where people can combine them in novel ways. One thing that we do is have similar levels of abstraction and interface aesthetics. That gives you transfer of knowledge from one component to another. The waterbed effect is actually my favorite one. Engineers love to expose the complexity of their systems to other users.

If you don’t know exactly how people are going to use it, why not just give them all the options? Complexity is a bit of a fixed-size volume similar to a waterbed that has a fixed amount of water inside of it. If you push the waterbed down in one place, it pops up in another. We want to have that complexity pop up for us as the platform team, not for our users. You can see this example of an overly complicated request, some sort of a web request API here, or a very simple one where we just made some of the decisions on the user’s behalf.
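The slide itself is not reproduced in the transcript. A minimal sketch of the contrast, assuming a made-up request API (every name below is hypothetical), might look like this: the same set of knobs exists in both cases, but in the second one the platform team owns the defaults.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class RequestOptions:
    """Every knob exposed: whoever fills this in carries the complexity."""
    timeout_s: float
    retries: int
    backoff_s: float
    verify_tls: bool
    compression: str


def build_request(url: str, options: RequestOptions) -> dict:
    """The 'complicated' entry point: the caller must decide everything."""
    return {"url": url, **asdict(options)}


# Decisions made once by the platform team on the user's behalf.
PLATFORM_DEFAULTS = RequestOptions(
    timeout_s=10.0, retries=3, backoff_s=0.5, verify_tls=True, compression="gzip"
)


def fetch_plan(url: str) -> dict:
    """The 'simple' entry point: the user only supplies what they care about."""
    return build_request(url, PLATFORM_DEFAULTS)


plan = fetch_plan("https://example.com/data")
print(plan)
```

The complexity has not disappeared; it has moved into `PLATFORM_DEFAULTS`, which is where the waterbed is meant to pop up.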

Introduction to Metaflow

Cledat: My name is Romain. I’ll give you here a brief introduction of Metaflow. The goal isn’t to make you a Metaflow expert, but just to allow you to understand the rest of the presentation. At a very basic point, Metaflow just allows you to express computation. You have some input, you’ll do some computation on it, and it’ll produce some output. In Metaflow, this concept here that is represented is a step. We put these steps together in a graph, a directed acyclic graph, which we will call a flow. As you can see here, you have a couple of steps. It starts at the start step. Once the start step is done, A and B can execute in parallel if they want to. Once both of those are done, then the join step can execute.

Once that is done, the end step can execute. That representation of the graph that you see on one side of the screen, it’s coded up in Metaflow on the other side. We’ll go in detail over some of these concepts here in the next few slides. Suffice to say that this looks like very regular Python code that any Python developer would be comfortable coding. There’s no special DSL, no special constructs. It looks pretty natural for a Python developer. The important thing to note about how this graph executes is that in Metaflow, each of these steps will execute in an independent process or an independent node. This is very important for Metaflow because this allows Metaflow to execute this flow either entirely on your laptop using different processes or across a cluster of machines using different nodes with no change to the user code. The same code that’s on the right, will execute both on your laptop and on a cluster with no change in code.
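The slide code is not part of the transcript. The execution order described above can be sketched with the Python standard library alone; this is not Metaflow's API, only an illustration of how a start, A, B, join, end graph resolves into groups of steps that may run in parallel.

```python
from graphlib import TopologicalSorter

# Each step maps to the set of steps that must finish before it can run.
flow = {
    "start": set(),
    "a": {"start"},
    "b": {"start"},
    "join": {"a", "b"},
    "end": {"join"},
}


def execution_waves(graph):
    """Return successive groups of steps whose dependencies are all satisfied."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # also raises CycleError if the graph is not acyclic
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


waves = execution_waves(flow)
for number, steps in enumerate(waves, start=1):
    print(number, steps)
```

Steps that land in the same wave have no dependency on each other, which is what lets a scheduler place them in separate processes or on separate nodes.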

One of the main things that allows Metaflow to do this is how it handles data. You can see in the code here that you have this variable x that goes through from the different steps, from start to A to B to join. The value changes, but it’s accessible in each of these steps. The way Metaflow does this is it basically stores in a persistent storage system like S3, that’s the one we use at Netflix, the association between a pickled representation of the value and a hash of it, here in this case a4abb6, and the name of it, so here, x. It says, for the start step, x refers to this particular hash. It’ll do this for the rest of the steps and it’ll make it accessible effectively in all of the steps. We do this in a content-addressed manner like this so that we don’t store the same value multiple times.
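A minimal sketch of this idea, assuming an in-memory dictionary in place of S3 and SHA-1 as the hash (the talk does not say which hash is used), could look like the following. `ArtifactStore` is a hypothetical class for illustration, not Metaflow's datastore.

```python
import hashlib
import pickle


class ArtifactStore:
    """Toy content-addressed store: values are keyed by the hash of their pickle."""

    def __init__(self):
        self.blobs = {}  # hash -> pickled bytes (stands in for objects in S3)
        self.index = {}  # (step, name) -> hash

    def save(self, step, name, value):
        payload = pickle.dumps(value)
        digest = hashlib.sha1(payload).hexdigest()
        # Identical payloads share one blob, so a value is stored only once.
        self.blobs.setdefault(digest, payload)
        self.index[(step, name)] = digest
        return digest

    def load(self, step, name):
        return pickle.loads(self.blobs[self.index[(step, name)]])


store = ArtifactStore()
hash_start = store.save("start", "x", 0)
hash_a = store.save("a", "x", 2)
hash_b = store.save("b", "x", 0)  # same value as in start, so same hash

print(store.load("a", "x"), len(store.blobs))
```

Because the index is keyed by step and name, a question such as "what was x in the start step?" stays answerable after later steps have changed the value.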

This also means that effectively all the steps have access to the value and you can also refer to them later. You can say, what was the value of x in my start step for this particular flow? When you execute the flows, they will be transformed to runs. A run is an instantiation of a flow. We effectively give it a particular ID. Users can associate tags with it. They’re also separated out by users so that it’s very easy to collaborate across different people in the team and keep your runs separate, and also be able to refer to them later and figure out, what did this run do, and associate semantic tags to it.

I’ll go over some of the details of the code here. You can see that in the first part, you can specify dependencies. We’ll go over this a little bit later in this talk. David will talk about both scaling and orchestration, which are highlighted here in terms of your code where you can specify the resources that you need, as well as when you want your code to execute. We’ve already talked about the artifacts, those x. We’ve talked about parallelization, where you can see that A and B execute at the same time.

Another thing that is not shown in this slide but that is useful in the rest of the talk is you can have multiple instances of the same step in a foreach manner. You can iterate over different inputs in the same step. Going back to some of the principles that David highlighted, Metaflow tries to focus on the bottom layer of the ML stack. We want to allow the developer or the data scientist, and usually at Netflix is other teams in the platform teams that will do this, focus on the upper layers like model development, feature engineering, while we at the Metaflow team focus more on the infrastructure need.

The good thing here is that the data scientists don’t really care about what the infrastructure looks like as long as it does what they want it to do. We also don’t want to constrain the data scientists in their model development as long as we can provide the infrastructure that they need. This is even more important today with LLMs and other large compute where people are very interested in doing cool stuff with models and you need a lot of resources at the bottom.

To finish off this section I’ll just give a brief history of Metaflow. It started out in 2017 at Netflix. I came around 2019 when it was open sourced at AWS re:Invent at the end of 2019. At that time, we had effectively Netflix Metaflow and then an open source Metaflow, which were separate. We had to rewrite part of it to do this. In 2021 a company named Outerbounds was formed with some of the employees from Netflix that basically went out and created a company to support Metaflow in open source. It’s still around.

At that time, we still had two versions of Metaflow which was difficult for collaboration. We finally merged it at the middle of 2021, and this is the state we’re in right now where we have effectively a common core that we use at Netflix. The open source Metaflow that is out there on GitHub is the one we use at Netflix, and we add stuff to it. Other companies do too. We released some of the additional extensions that we have in 2023 and a bunch of these other companies use it including two known ones, like Zillow, MoneyLion that actually built their own set of extensions to support their infrastructure. To show what the infrastructure looks like, you have a core that basically runs the graph that I explained earlier that allows you to access artifacts.

Around it we build things like data support where we have S3 support, then you have Azure and Google Cloud. We don’t use those. We use S3 and we add our own extension called FastData. You have Compute where you can use Kubernetes or Batch. We don’t use those, we use Titus. Schedulers, Argo, Airflow, Step Functions. We use Maestro. Environment management, which we’ll talk about. There’s a PyPI conda decorator in open source. We use our own from the Netflix extensions. Outerbounds released FastBakery. All this to show that we can pick and choose different parts again with the Lego block abstraction that we’ve talked about that this allows you to build the right approach for Metaflow. We’ll talk about hosting which is another part. There are other components to Metaflow that we will not get into in this talk.

Orchestration and Compute

Berg: You can run all of these jobs as a local process on your machine, but when you’re scaling up and scaling out, that’s going to be insufficient, although it’s great for prototyping. We rely on two technologies that are also open source. One is Titus. If you’re interested in the details of Titus, you can look at some tech blogs we’ve produced over the years. It’s our in-house container and compute management system. It allows us to do something like shown here on my right, where I can request certain resources, number of CPUs, memory, and whatnot. We also need scheduling and orchestration, and that is handled by another OSS product called Maestro. When you’re going to production, you need to schedule this thing to run either on a time-based schedule, every hour or every day, or through an event triggering system. We’ll talk a little bit about how the event triggering is used. I do want to highlight one really important aspect of Metaflow, which is that from prototype to production, all of the code looks exactly the same.

Let’s take a look at this example here. It does some sort of a large computation. It’s just a very simple two-step graph. You’ll notice that at the very top here we have this schedule decorator. At some point we’re expressing that we want this to run hourly. We also have this resource decorator and we’re expressing that this start step here requires 480 gigs of RAM and 64 CPUs. The exact same code can run in these three different contexts. This is all just through the command line interface. If you just type your flow name run, it’ll run everything in subprocesses on your local machine. You’ll see logs in a variety of output.

If you do run with Titus, we’ll actually export Metaflow, land a code package in a container that was requested by the Titus system, expand the code package, and run your step on Titus, and the exact same code, nothing changed. Your dependencies that you specify and everything magically appear for you. You can even mix and match. Some steps can run locally on your laptop. Other steps can run on Titus. When you’re finally ready to schedule this thing, you can do maestro create. Now finally the schedule decorator will be respected. When you push it to the Maestro system, we will run this thing in an hourly cadence.
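The mechanism of "same code, three contexts" can be illustrated with plain Python decorators that only attach metadata, leaving it to the runner to decide what to honor. Open-source Metaflow provides `@schedule` and `@resources` decorators; the versions below are simplified stand-ins written for this sketch, and `plan` and its context names are hypothetical.

```python
def resources(cpu=1, memory_mb=4096):
    """Attach a resource request to a step function (simplified stand-in)."""
    def wrap(fn):
        fn.resources = {"cpu": cpu, "memory_mb": memory_mb}
        return fn
    return wrap


def schedule(hourly=False):
    """Attach a cadence to a flow class (simplified stand-in)."""
    def wrap(cls):
        cls.cadence = "hourly" if hourly else None
        return cls
    return wrap


@schedule(hourly=True)
class BigComputeFlow:
    @resources(cpu=64, memory_mb=480_000)
    def start(self):
        return sum(range(10))


def plan(flow_cls, context):
    """Describe how one unchanged flow definition maps onto each context."""
    request = flow_cls.start.resources
    if context == "local":
        return {"where": "local subprocess"}
    if context == "remote":
        return {"where": "container", **request}
    if context == "scheduled":
        # Only here is the cadence from the schedule decorator used.
        return {"where": "container", **request, "cadence": flow_cls.cadence}
    raise ValueError(f"unknown context: {context}")


plans = {c: plan(BigComputeFlow, c) for c in ("local", "remote", "scheduled")}
for name, value in plans.items():
    print(name, value)
```

The flow class is never edited between contexts; only the runner's interpretation of the attached metadata changes.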

As a way of understanding the complexity of the systems that we have at Netflix, we want to talk just briefly about Content Demand Modeling. This is about understanding the drivers for content across the life cycle, like I’d mentioned, all the way from pitch to post viewing behavior. What we want to impress upon you here is that this is a very complex system. It has many machine learning models to take into account aspects across the life cycle of the content. It also interacts with separate systems. Although you can’t see the details in this slide, the gray boxes are external data sources that we get from other places. The green boxes are Spark ETLs that are wrangling large amounts of data to organize them into tables for machine learning.

Then the blue boxes are all Metaflow flows that are actually doing machine learning and usually writing tables out as well. Maestro provides the underlying signaling capabilities to support this kind of a graph. Interestingly, we don’t really want to expose all the users to the complexities of Maestro. Just in the same notion as I had said earlier, from prototype to production, we developed some special syntax. This syntax actually works across whichever system you use. If you’re using the Kubernetes integration with Airflow in the OSS, the syntax here is the same. Again, back to this Lego plugin architecture. If you’re just connecting one flow to another, it’s very simple. We just have this trigger_on_finish decorator. Under the hood we expand this into the whole complex signaling system that Maestro supports. We have other mechanisms to listen to arbitrary signals, and they have a very similar syntax. That’s how you can create the complex graph that we showed previously.
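The chaining idea can be sketched in a few lines of plain Python. This is an in-process toy, not Maestro or Metaflow: `EventBus` and the three flow functions are hypothetical, and the decorator only mimics the shape of a trigger-on-finish declaration.

```python
from collections import defaultdict


class EventBus:
    """Toy signaling layer: finishing one flow starts the flows that listen to it."""

    def __init__(self):
        self.listeners = defaultdict(list)  # upstream flow name -> downstream flows
        self.log = []                       # order in which flows finished

    def trigger_on_finish(self, upstream):
        def wrap(fn):
            self.listeners[upstream].append(fn)
            return fn
        return wrap

    def run(self, fn):
        fn()
        self.log.append(fn.__name__)
        # Fan out to everything that declared a dependency on this flow.
        for downstream in self.listeners[fn.__name__]:
            self.run(downstream)


bus = EventBus()


def etl():
    pass


@bus.trigger_on_finish("etl")
def train():
    pass


@bus.trigger_on_finish("train")
def score():
    pass


bus.run(etl)
print(bus.log)
```

Each flow declares only its immediate upstream, and the larger graph emerges from those local declarations.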

FastData

We’re going to move along to a different component. This is one that I have worked on quite a bit and I’m quite proud of, it’s called FastData. Let’s go into some of the details. What is FastData? FastData is about getting data into memory in your Python process very quickly. Many companies, probably yours, have some sort of a data warehouse. There’s probably some SQL engine behind it, and that’s all great for all of your large-scale data wrangling and preparation. At some point, you need to actually do something in Python. You need to get that data into your Python process. The use cases here might be some last mile feature engineering or actually training your model. We provide two abstractions, Metaflow Table and Metaflow DataFrame. Metaflow Table is an abstraction that represents data in the data warehouse.

At Netflix, our data warehouse is in Apache Iceberg tables. Iceberg is a metadata layer on top of raw data files. It’s really great for us because it is independent of any engine. You can implement your own engine which is what we have done. The raw data is Parquet files, and those live in S3 like most everything at Netflix. Below that is the user space, so the table in memory; this is in your Python process. Here, we have a hermetically sealed dependency-free Parquet decoder built on Apache Arrow. We’ll go into some of the details of how that works in the coming slides. We’re not reinventing Parquet decoding. We’re using what is out in the open-source community. Our whole goal here is to get the data into your Python process as fast as possible.

Then, probably convert it into one of your favorite frameworks. We’re not super opinionated about which ones data scientists use. Here I’m showing pandas, or my new favorite framework, polars. Then, also we have a custom C++ layer as there are some operations that are specific to Netflix that we can just do better if we implement them ourselves. As I mentioned, the remote tables in the Netflix data warehouse are Iceberg tables. Here’s just some really simple syntax about how you can get some data into your Python process. We instantiate a table with a name, in this case, the namespace dse. The table is called my_table. I want to just grab data from one partition. This would be the equivalent SQL of a SELECT * from dse.my_table where dateint equals that date. We’re not actually running any query engine. We are parsing the Iceberg manifest files ourselves, finding the data files that correspond to this partition, downloading them directly to the machine, and then decoding them.
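The "no query engine" path can be sketched as a metadata lookup followed by direct file access. The manifest below is a deliberately simplified, made-up structure (real Iceberg manifests are separate metadata files with much richer content), and the paths and `files_for_partition` helper are hypothetical.

```python
# Simplified stand-in for table metadata: one entry per data file.
MANIFEST = [
    {"path": "s3://example-bucket/dse/my_table/dateint=20240101/part-0.parquet", "dateint": 20240101},
    {"path": "s3://example-bucket/dse/my_table/dateint=20240101/part-1.parquet", "dateint": 20240101},
    {"path": "s3://example-bucket/dse/my_table/dateint=20240102/part-0.parquet", "dateint": 20240102},
]


def files_for_partition(manifest, dateint):
    """Pick the data files belonging to one partition using metadata only."""
    return [entry["path"] for entry in manifest if entry["dateint"] == dateint]


to_download = files_for_partition(MANIFEST, 20240101)
for path in to_download:
    print(path)
```

Once the file list is known, the remaining work is downloading and decoding those files, which is why no SQL engine needs to be involved for a partition-level read.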

I just described the remote table representation. Now let’s take a look at the Metaflow DataFrame, the in-memory representation. Here in this little code snippet on top, we’re again using the Table object. This get_all function will just grab the whole table. Then, here we’re using one of those high-performance C++ operators that I had mentioned, this one’s called take. It’s very simple. It selects rows. In this case, we’re using the column is active, like if you’re an active member or not, to select those rows and create a new DataFrame.
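The semantics of a take-style operator can be shown in pure Python over a column-oriented dictionary. This is only a sketch of the behavior described, not the C++ implementation or the Metaflow DataFrame API; the column names and data are invented.

```python
def take(frame, mask):
    """Return a new column dict keeping only the rows where mask is true."""
    n = len(mask)
    if any(len(column) != n for column in frame.values()):
        raise ValueError("all columns must have the same length as the mask")
    keep = [i for i, flag in enumerate(mask) if flag]
    return {name: [column[i] for i in keep] for name, column in frame.items()}


members = {
    "account_id": [1, 2, 3, 4],
    "is_active": [True, False, True, False],
}

active = take(members, members["is_active"])
print(active)
```

The input frame is left untouched and a new one is returned, matching the "create a new DataFrame" behavior mentioned in the talk.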

Eventually, you can convert it to polars or pandas. What’s so special about this Metaflow DataFrame is that it’s completely sealed and dependency-free. What do I mean by that? I mean that we actually have our own version of Apache Arrow. It’s just their OSS version of Apache Arrow that we pinned to a particular version number. We control the entire compilation, add some of our own custom code in, and we create a very fat binary called metaflow-data.so. It’s a Linux shared object. We actually ship this along with Metaflow. This means that we don’t download any packages. We don’t have any dependencies on any external software. You might be asking, why did these people not just use Apache PyArrow? That would be the natural way of decoding Parquet in Python.

The problem is that our users might want to use Apache PyArrow, or the software that they’re using, like TensorFlow, may have a dependency on it, and we don’t want our dependencies to step on the dependencies of our users. We are isolating them from how we do the Parquet decoding. Interestingly, this means that we can change the implementation under the hood and no one will see a difference.

I want to give you a few numbers here about how this is used. You can see in 2023, we had quite a lot of usage of this piece of software, but more importantly, the throughput. In this example that I created here, we scanned 60 partitions, downloaded the Parquet, and did the parallel decoding using the Metaflow DataFrame. It was about 76 gigabytes uncompressed on a single machine, and I got throughput of about 1.7 gigabytes per second. That’s actually really close to the throughput of the NIC on this particular machine. I have some other performance examples here that we won’t go into too much. This top one is select account from user.table. It’s just showing that this fast downloading method of bypassing the query engine is much faster than what people were doing before, using Presto or Spark.
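For a sense of scale, the two quoted figures can be combined in a quick calculation. This assumes the 1.7 gigabytes per second applies to the 76 gigabytes of uncompressed data, which the talk does not state explicitly.

```python
UNCOMPRESSED_GB = 76        # figure quoted in the talk
THROUGHPUT_GB_PER_S = 1.7   # figure quoted in the talk

# Assumption: the throughput is measured against the uncompressed volume.
seconds = UNCOMPRESSED_GB / THROUGHPUT_GB_PER_S

# The same rate expressed in gigabits per second, the unit NICs are usually rated in.
gbit_per_s = THROUGHPUT_GB_PER_S * 8

print(f"{seconds:.1f} s to load, {gbit_per_s:.1f} Gbit/s")
```

Under that assumption the whole load takes well under a minute on one machine.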

The last two graphs are showing that our C++ implementation of filtering is much faster than what you would achieve if you were using pandas or something like that. We still encourage people to use pandas or polars or whatever software they want, but we provide a few heavy lifting operations that they can do before converting to those more convenient frameworks.

By way of demonstration, I want to talk about a Content Knowledge Graph. As I had mentioned earlier, this is a process that matches entities across different database representations, and they may have missing data, incorrect data, different attributes, whatnot, creates a bipartite matching graph problem. It requires daily, if that’s the frequency at which we want to run it, over 1 billion matches to be computed. It’s embarrassingly parallel, but quite high scale. Here’s a Metaflow graph that shows the overall architecture of this. We have an input table that is sharded by a reasonable quantity, like the hash of the rows. This gives us a bunch of different partitions. We can spread those partitions out in a Metaflow foreach.

Each one of these nodes in the center is getting a different subset of the matching problem. We use the Table object to read the data in, Metaflow DataFrame and whatever other software you want to process it. Then, similarly to reading, we have tools for writing back out to the Iceberg data warehouse. Again, all of this is done in compliance with our Netflix data warehouse, which is usually like the Spark system. Let me show you a little bit about what that code could look like. This is not the exact matching problem, but structurally, it’s the same as the graph I showed you. We have some ways of getting all of the Parquet shards as groups. In this case, we want 100 groups. That means that we are going to spin up 100 containers to run this process function, where we grab some data, load the Parquet. In this case, I’m just showing, grabbing the number of rows from the DataFrame, and then join the graph back together, and you can do something with all of the results that you just computed.
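The slide code is not included in the transcript. Structurally, the fan-out and join can be sketched with the standard library, using threads in place of containers and in-memory lists in place of Parquet shards. The helper names, the shard contents, and the choice of three groups instead of 100 are all assumptions made for this illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def group_shards(shards, num_groups):
    """Distribute shards round-robin into at most num_groups non-empty groups."""
    groups = [[] for _ in range(num_groups)]
    for i, shard in enumerate(shards):
        groups[i % num_groups].append(shard)
    return [group for group in groups if group]


def process(group):
    """Per-group work: here, just count the rows across the group's shards."""
    return sum(len(rows) for rows in group)


# Six fake shards with different row counts stand in for Parquet files.
shards = [[0] * n for n in (3, 5, 2, 7, 1, 4)]
groups = group_shards(shards, 3)

# Fan out one task per group, then join by combining the per-group results.
with ThreadPoolExecutor() as pool:
    counts = list(pool.map(process, groups))

total_rows = sum(counts)
print(counts, total_rows)
```

Because each group is processed independently, the same shape scales out by raising the number of groups, which is what makes the matching problem embarrassingly parallel.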

Metaflow Environments

Metaflow environments is the way that we can keep things consistent across all of these different compute substrates that we have.

Cledat: Metaflow environments. What is the problem we want to solve? The basic problem is that we want our data scientists to be able to have reproducible experiments. If they run something today, then a week later, they can get back to it, and they can run it again and observe the results, or even a month later, they can go into, what was the model like? What artifacts did I produce in this result? That’s great. Unfortunately, the world keeps moving, and so it makes it hard to do. The solution that data scientists had been using before to keep their environments the same was something like pip install my awesomepackage. That works until my awesomepackage decides to become less awesome and depend on a package that you didn’t depend on and break your whole system.

Environments basically solve this, and it’s just a fancy way of saying we want a good dependency management that is self-contained. If you look at what a reproducible experiment looks like, it’s a spectrum all the way from not reproducible at all to the holy grail. The first thing, you need to be able to store effectively your data, so your model in this case, for example. Metaflow already does this by storing it in Metaflow artifacts. It persists this to S3, which sticks around. Then you need your code, what you do with your data effectively. We already do this. Metaflow stores your code as a Metaflow package, again, in S3, so it’s accessible later in version control, so you can refer to it, which run you did. We have this already. We talked about it with Metaflow run. You have metadata, so you can refer to it, like, which type of hyperparameter did I use? We have tags to support this already with Metaflow. This part is about supporting libraries, which is effectively your environment that all of this code can run in.

There are two more things on top that we don’t talk about here. You have data, so you can refer to which data you actually need to use. If you use good hygiene here, which most people do, which means that you don’t overwrite your tables and you actually append new partitions, for example, you have this reproducible data that you can refer to later. Then, of course, there’s entropy. The keynote speaker spoke about cosmic rays. We don’t have nearly that problem here, but that’s what I’m talking about here. We can’t solve that completely.

How do we do it? We considered a few solutions: PyPI, Docker, Mamba. PyPI doesn’t really have runtime isolation because you depend on your platform, and it’s not really language agnostic. It does have a low overhead and it’s a very good solution for people. Docker is great for the first two, not so much for low overhead. It also is hard to use in our Netflix environment because we already run in a Docker container, so it makes it hard to use Docker in Docker. Conda, or specifically Mamba, answered all of these questions in the positive, and that is what we’ve been using. What does it look like? Effectively, users can just specify something like this, saying, I want to use PyTorch 2.1.0 and CUDA version 11.8, and that’s pretty much it.

Then after that, Metaflow will take care of making sure that this environment is available wherever your code runs, and reproducing it there. We also support things like requirements.txt, again, to lower the cognitive overhead for users, going back to one of the principles. That is something that they’re familiar with, so they can use that. We have externally resolved named environments, again, for the pyramid concept here, where other teams can build effectively environments for data scientists to use, and they don’t have to really think about them. You can think of named environments like just tags, like someone will create this PyTorch environment that you can then go ahead and use.

From a technical detail, how do we do this? An environment is actually defined by two quantities. The first one is effectively what the user requests, and the second one is effectively what the environment looks like at the end. If we have a set of these environments, and we have ones that are already solved, so that means that we know what the user requested and we know how exactly it was solved, we can take this and say, the user wants all these environments, we know all the ones that are already solved, now we need to solve these other ones. We take these other environments that we need to solve, we run them through sets of tools that are completely open source, we didn’t invent the wheel here, things like Pip, Poetry, Conda lock, Mamba, whatever. There’s a bunch of technology that runs under the hood, abstracted completely from the user. We figure out what packages we need, we will then effectively fetch all these packages from the web, upload them to S3, persist them. Why do we do this?

If you run at scale like we do at Metaflow, you realize that some of the sources for these packages will actually throttle you, or sometimes will go down, and this means that your environment is no longer reproducible. You can’t then fetch those packages from your sources. We put them in S3, which is a high bandwidth system, and which makes your environment much more reproducible. Once we have that, we also save effectively the metadata for the environment, saying, all these packages belong together in this environment. This is where we implement named environments as well, where we can refer to it by a particular alias. We give it back to the user as well, saying, here are all the environments that you have to solve.
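The bookkeeping described above (a request, its solved form, a cache of what is already solved, and aliases) can be sketched as follows. This is a minimal illustration under stated assumptions, not how Metaflow stores environments: `EnvironmentStore`, `request_id`, and `fake_resolver` are hypothetical names, the package names are placeholders, and a dictionary stands in for S3.

```python
import hashlib
import json

def request_id(request):
    """Stable identifier for what the user asked for (key order does not matter)."""
    canonical = json.dumps(request, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:12]

class EnvironmentStore:
    """Keeps solved environments so each request is only solved once."""

    def __init__(self, resolver):
        self._resolver = resolver
        self._resolved = {}  # request id -> resolved package list
        self._aliases = {}   # alias -> request id
        self.resolver_calls = 0

    def resolve(self, request):
        rid = request_id(request)
        if rid not in self._resolved:
            # Only unsolved requests reach the (expensive) resolver.
            self.resolver_calls += 1
            self._resolved[rid] = self._resolver(request)
        return rid, self._resolved[rid]

    def alias(self, name, request):
        rid, _ = self.resolve(request)
        self._aliases[name] = rid

    def by_alias(self, name):
        return self._resolved[self._aliases[name]]

def fake_resolver(request):
    """Hypothetical stand-in for a real solver: pins what was requested
    and appends a made-up transitive dependency."""
    pinned = [f"{name}=={version}" for name, version in sorted(request["packages"].items())]
    return pinned + ["example-transitive-dep==1.0.0"]

store = EnvironmentStore(fake_resolver)
first_id, packages = store.resolve({"packages": {"pytorch": "2.1.0", "cuda": "11.8"}})
second_id, _ = store.resolve({"packages": {"cuda": "11.8", "pytorch": "2.1.0"}})
store.alias("team/pytorch", {"packages": {"pytorch": "2.1.0", "cuda": "11.8"}})
```

The second `resolve` call asks for the same thing in a different order, so it hits the cache instead of invoking the resolver again.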

After this, we can effectively run your code. We can reproduce the environment. We can rehydrate it anywhere you run it. We can rehydrate it later. We can say, if you want to access the artifacts from this run, here’s the environment that you need, and we’ll give it to you.

One example of how people use this is through training explainers. In this case, we have flows that train models, and then we want to have another flow that trains another model on the model that was trained in the first flow. Each of these models that were trained, model A, B, and C, has a different set of dependencies. Each data scientist or data scientist group will use different sets of dependencies for their model; we leave them the choice there.

Now when you want to train the explainer for it, you need to not only run in the environment that the model was trained in, but you also need to add your own dependencies, for example, SHAP, to be able to train it. What the user did here, using Metaflow environments, is this: in their flow, the first step builds the new environment to train the model. In the second, you basically say, now I’m going to train the explainer for this model. This is a very simplified view of it, but this is what Metaflow environments allow you to do quite simply. Before this, this was not possible to do; you had to really hack around to figure out which dependencies the user had. This makes it very easy, and it takes very few lines of code to make this service run.
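The idea of extending a model’s environment with the explainer’s own dependencies can be sketched like this. It is an assumption-laden illustration: `extend_environment` is a hypothetical helper, and the package names and versions are placeholders rather than anything shown in the talk.

```python
def extend_environment(base_packages, extra_packages):
    """Return a new request: the model's packages plus the explainer's extras."""
    merged = dict(base_packages)
    for name, version in extra_packages.items():
        # Refuse to silently override a version the model was trained with.
        if merged.get(name, version) != version:
            raise ValueError(f"conflicting versions requested for {name}")
        merged[name] = version
    return merged

# Placeholder package names and versions, not taken from the talk.
model_a_env = {"example-model-lib": "1.2.0", "numpy": "1.26.0"}
explainer_env = extend_environment(model_a_env, {"shap": "0.44.0"})
```

The merged request would then be solved like any other environment, so the explainer runs with exactly the model’s dependencies plus its own.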

One more thing. You might think that this is great, if your environment is all nice and contained, then nothing can change. However, the world, unfortunately, doesn’t work quite that way. There are things like thick client, and we actually have use cases at Netflix, where you have an external service, like a gRPC image service, that also keeps trotting along, and so that service will nicely keep, effectively, the Netflix managed Docker up to date, saying, these two can talk to each other all the time.

Now, if you have your own thick client inside your environment that doesn’t move, because you’ve locked it in place, at some point, it may stop working, therefore breaking the reproducibility of your experiment. The solution for this is what we call the escape-hatch, which effectively runs a subprocess with the thick client on the side, and allows you to escape out of your conda environment. In this case, your thick client runs through this fake proxy escape, and will communicate with the base Python environment that runs on the main image, which moves along with the service itself.
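A rough sketch of the subprocess idea, assuming a JSON-over-stdin protocol that is invented here for illustration (the talk does not describe the actual proxy protocol). The function `call_outside_environment` and the snippet it runs are hypothetical; `sys.executable` stands in for the base image’s interpreter.

```python
import json
import subprocess
import sys

# Code run by the "outside" interpreter. A real thick client would call
# the external service here; this stand-in just echoes the payload.
CLIENT_SNIPPET = """
import json, sys
request = json.load(sys.stdin)
json.dump({"echo": request["payload"]}, sys.stdout)
"""

def call_outside_environment(payload, interpreter=sys.executable):
    """Run the client in a separate Python process and return its reply.

    `interpreter` would point at the base image's Python rather than the
    locked environment's; sys.executable is used so the sketch runs anywhere.
    """
    completed = subprocess.run(
        [interpreter, "-c", CLIENT_SNIPPET],
        input=json.dumps({"payload": payload}),
        capture_output=True,
        text=True,
        check=True,
    )
    return json.loads(completed.stdout)

reply = call_outside_environment("ping")
```

Because the client lives in the other process, it can be upgraded along with the service without touching the locked environment.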

Metaflow Hosting

Metaflow Hosting is the basis on which Amber, our media processing framework, works. I’ll explain some of the details here. Metaflow Hosting is, again, a very simple way of having the user be able to express a RESTful service. This is all that it takes to take the code that you have in the endpoint right here and put it into a RESTful service. It looks very much like Metaflow, so again, it looks very much like Python, and so this is something that you don’t need a lot of cognitive overhead to figure out how to do. I’ll go over both the initialize and the endpoint specification in the next few slides.

At a basic level, like I mentioned, you basically can do any code that you want in your endpoint, and you can load any artifact that you want, in this case, you can load something from Torch. This, as we’ll show in the next slide, is also tied to Metaflow, though. The typical use case is that you will train your model in a training flow, and then you will host it using this, and allow users to basically access it to do real-time inference.

Defining your Metaflow service. There are two main parts. The first one is the initialize part, where you basically say, what does my service want? In this case, going back to the dependency management that we just talked about, you can link it to an environment, saying, I want to use this environment. This is the same environment that you can use for training, for example, so this makes it very easy for users. We have region support. This is for internal use, since we run in multiple regions. You can specify resources that you want for your service. You can specify some scaling policies as well. We don’t need the user to tell us more than this, and with this we will take care of scaling their clusters up and down, depending on their usage. You can also specify cost-saving measures, or how long you want to keep it up. That’s pretty much all you need to specify your service.

The next thing is you need to specify what your computation does. It’s similarly simple. You specify a name and a description, which can appear in a Swagger page to let your users know what your service does. You specify the methods for the REST things that it needs to do, and some schemas that will be enforced and checked as well. Then, you can specify any arbitrary Python code, nothing special required. You return your payload, and that’s all that you need to do. Deploying it, if you tie it to a Metaflow, so this is a regular Metaflow flow, as we’ve seen it before, this will, for example, run on a daily schedule in a Maestro workflow. This is like, you can retrain your model and then deploy it every day. You specify your environment. This is your artifact, your model that you’re training.

Then, you specify, I want to deploy this model. You say, I want to deploy whatever happened in start. This is how long I want to wait for the deployment to happen. Then there are some audit functions, which allow you to verify that your model is actually valid. You can specify any arbitrary audit function here. Then, you say, now I make this visible to my user. That’s pretty much it. Users basically use this, and they can have an entire flow where they train their model, and then they deploy their service to endpoints. Then consumers can start using it right away. For users, this has been really easy because they really don’t have a lot to do, and all the infrastructure under the hood is completely hidden away and taken care of for them. Out of the box, they get request tracing. They get metrics. They get dashboards. They get autoscaling. They get Swagger. They get logging for free.
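To make the two parts of a service definition concrete, here is a toy sketch: settings given up front, then an endpoint with a name, a description, allowed methods, and a schema that is checked before arbitrary Python runs. None of this is the Metaflow Hosting API; `Service`, `endpoint`, `handle`, and the field names are assumptions made for illustration.

```python
class Service:
    """Toy stand-in for a hosted service; not the Metaflow Hosting API."""

    def __init__(self, name, environment=None, cpu=1, memory_mb=1024):
        self.name = name
        self.settings = {"environment": environment, "cpu": cpu, "memory_mb": memory_mb}
        self._endpoints = {}

    def endpoint(self, name, description, methods=("POST",), schema=None):
        """Register a function as an endpoint with its metadata and schema."""
        def register(fn):
            self._endpoints[name] = {
                "fn": fn,
                "description": description,
                "methods": tuple(methods),
                "schema": schema or {},
            }
            return fn
        return register

    def handle(self, name, method, payload):
        """Check the method and schema, then run the user's code."""
        spec = self._endpoints[name]
        if method not in spec["methods"]:
            return 405, {"error": "method not allowed"}
        for field, expected_type in spec["schema"].items():
            if not isinstance(payload.get(field), expected_type):
                return 400, {"error": f"field '{field}' must be {expected_type.__name__}"}
        return 200, spec["fn"](payload)

service = Service("scorer", environment="my-pytorch-env", cpu=2)

@service.endpoint("score", "Scores one input value.", methods=("POST",), schema={"x": float})
def score(payload):
    # Arbitrary Python goes here; a real service would call a loaded model.
    return {"score": 2.0 * payload["x"]}

status, body = service.handle("score", "POST", {"x": 1.5})
```

The user-written part is only the body of `score`; everything else is the kind of plumbing the platform hides.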

The example for this is effectively Amber. Amber is a system that is built on top of Metaflow Hosting, which sits here. Amber will take movies and then features that need to be computed on those movies and then send them over to Metaflow Hosting. Each feature will be running effectively as a service. Metaflow Hosting will take care of scaling the service up, getting the request from Amber to the movie, processing the request, and sending it back. One of the things that this slide shows is that Metaflow Hosting actually supports both with the exact same code that you saw earlier, synchronous request, as well as asynchronous request, which is what Amber uses here, because some of these requests can take a really long time to process, so they are done in an asynchronous fashion.

In-Flight Work

Berg: We started working on Metaflow Hosting, circa 2018, and back then, we already had this other idea called Metaflow Functions. What’s the problem that we want to solve here with Metaflow Functions? This is work that’s ongoing right now. It’s actually what I’m working on. This is not complete by any means, but we do hope to put it in the OSS. The idea is model relocation. You train a model in Metaflow, but then I actually need to use it in all these other contexts. It might be my own workflow that does some sort of bulk scoring, like I had shown during the data section. It might be another user’s workflow that doesn’t know or care about the details of your model, like the dependencies or anything like that. It might be some other Python environment, like a Jupyter Notebook, or it might even be the Metaflow Hosting. This problem of relocation gets even trickier if you are working in a large company like Netflix. It’s not just us working on a machine learning platform, there’s a large team.

In fact, some of that team implements their systems in different languages. We have a large Java, Scala stack to do feature engineering, model serving, and some offline inference. How do we share code from Metaflow with all of these other systems? The idea here is to make it as simple as possible. We just want the user to express one UX that binds the code, the artifacts, and the environment together, and builds a single encapsulation that can be used elsewhere. You might be wondering, I thought this is what microservices were for. We just talked about those in Metaflow Hosting. The problem with the microservices, it actually adds a new UX. It adds a communication protocol on whatever your core computation was, like REST in the case of Metaflow Hosting, or gRPC. That mixes two concepts together that we would like to be separate for the user. It’s also possibly inefficient. It’s choosing a networking runtime for you that may not be appropriate for all of those different relocatable scenarios.

Let’s take a step back and do something really simple. What if we allowed some syntax here with this @function decorator? The user is telling us that this function that just adds two numbers together is going to be a part of this system. What if we could export it someplace? This would, using all of the same mechanisms that Romain described earlier, package up the dependencies, package up the code, and create a little metadata file that describes this function. Any other system can read that metadata file, which tells you how to pull the package and recreate a little local runtime.
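A minimal sketch of what marking and exporting a function could look like. Since this work was described as unfinished, everything here is hypothetical: the `function` decorator, `export_metadata`, the metadata fields, and the example package URI and environment id are invented and do not reflect the eventual Metaflow Functions API.

```python
import inspect
import json

def function(fn):
    """Mark a plain Python function as exportable (hypothetical decorator)."""
    fn.is_exportable = True
    return fn

def export_metadata(fn, package_uri, environment_id):
    """Describe the function so another system could locate and rebuild it."""
    if not getattr(fn, "is_exportable", False):
        raise TypeError(f"{fn.__name__} is not marked with @function")
    return json.dumps(
        {
            "name": fn.__name__,
            "parameters": list(inspect.signature(fn).parameters),
            "package": package_uri,         # where the packaged code lives
            "environment": environment_id,  # which solved environment to rehydrate
        },
        indent=2,
    )

@function
def adder(a, b):
    return a + b

# The URI and environment id below are made-up placeholders.
metadata = export_metadata(adder, "s3://example-bucket/packages/adder.tgz", "env-1234")
```

A consumer only needs the metadata document: it names the code package and the environment, which is enough to recreate a local runtime for the function.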

After importing the function here below, you’re not actually getting the original function. You’re getting like a proxy object to it. That function is actually running in its own subprocess, which encapsulates the environment and all of the other properties that Romain had mentioned in the previous slides. What about more complicated scenarios? Don’t we want to take things that were trained from Metaflow? Let’s take another slightly different version of this adder function that takes a Metaflow task as one of its arguments. Below, we can use our client to say, grab the latest run from my flow, refer to one of the steps, and grab that task object.

That task object contains all of the data artifacts, like the model and all of the environment. We have this fun little syntax where we combined that task object to that function. It works like currying or other things that you’ve seen in other systems. It basically creates a new function back that removes the task, and you get that same adder function that you had seen previously. The user of your function is completely unaware that you have bound this task to it and provided some information that wasn’t available through the arguments.
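The binding step behaves much like partial application, which Python’s standard library already offers through `functools.partial`. The sketch below uses that as a stand-in; `FakeTask`, `bind`, and the `bias` artifact are hypothetical and are not the syntax shown in the talk.

```python
from functools import partial

class FakeTask:
    """Stand-in for a task object that carries data artifacts."""

    def __init__(self, data):
        self.data = data

def adder_with_task(task, a, b):
    # The task supplies information that is not part of the caller's arguments.
    return a + b + task.data["bias"]

def bind(fn, task):
    """Fix the task argument, returning a function of the remaining arguments."""
    return partial(fn, task)

task = FakeTask({"bias": 10})
adder = bind(adder_with_task, task)
result = adder(1, 2)
```

The caller of `adder` passes only `a` and `b`, exactly as with the plain version, and never sees the task.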

We’ll just give a little hint of the architecture. We rehydrate your function in its own conda environment. This is the user’s Python process. This is our internal Python process. We have a ring buffer shared memory interface, and we use Avro-based serializers and deserializers to pass the data back and forth. I had mentioned that this is also meant to work cross-platform. You can take that same diagram that I just showed, and you can add any other layer on top of it. If your language has good POSIX-compliant shared memory facilities, you can go ahead and implement a direct memory-mapped interface to this function, which is highly efficient. If that’s inconvenient, you can implement a REST, or a socket, or a gRPC communication layer on top.
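The ring buffer idea can be illustrated with a small, single-process sketch. Two substitutions are made to keep it self-contained, and neither reflects the real implementation: a `bytearray` stands in for the shared memory segment, and length-prefixed JSON stands in for Avro. The `RingBuffer` class is hypothetical.

```python
import json
import struct

class RingBuffer:
    """Fixed-size byte ring holding length-prefixed messages."""

    def __init__(self, size):
        self._buf = bytearray(size)  # stand-in for a shared memory segment
        self._size = size
        self._head = 0  # next write position
        self._tail = 0  # next read position
        self._used = 0  # bytes currently occupied

    def _write(self, data):
        for byte in data:
            self._buf[self._head] = byte
            self._head = (self._head + 1) % self._size  # wrap around at the end
        self._used += len(data)

    def _read(self, count):
        out = bytearray(count)
        for i in range(count):
            out[i] = self._buf[self._tail]
            self._tail = (self._tail + 1) % self._size
        self._used -= count
        return bytes(out)

    def put(self, message):
        """Serialize and enqueue a message; return False if it does not fit."""
        body = json.dumps(message).encode("utf-8")
        frame = struct.pack("<I", len(body)) + body  # 4-byte length prefix
        if len(frame) > self._size - self._used:
            return False
        self._write(frame)
        return True

    def get(self):
        """Dequeue and deserialize the oldest message, or None if empty."""
        if self._used == 0:
            return None
        (length,) = struct.unpack("<I", self._read(4))
        return json.loads(self._read(length).decode("utf-8"))

ring = RingBuffer(64)
accepted = [ring.put({"a": i, "b": 2}) for i in range(4)]
first = ring.get()
wrapped = ring.put({"a": 9, "b": 2})  # this frame wraps past the end of the buffer
rest = [ring.get() for _ in range(3)]
```

In the real design the writer and reader would be separate processes mapping the same memory, which is why any language with shared memory support can take part.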

Developer Experience

Cledat: The last section here on this talk is effectively developer experience. Metaflow has been really useful in getting from the laptop all the way to production, as David mentioned earlier. One of the things that users have been telling us is that still that first step of going from your Jupyter Notebook, for example, your ideation stage, to your Metaflow flow is not always the easiest. That’s what we want to try to improve. Again, this is very in-flight work. Some of this, you’ll see it appear in open source, most likely, and some will take a little longer. What we want to improve, though, is effectively the development cycle that users go through.

Usually, you will start developing your code, and particularly if you think of a Metaflow flow, you will probably focus on a particular step first. You will start developing your step, and then you will execute your step. Then you want to validate whether your execution is correct, and you’ll make progress, probably either iterate on that same step. At some point, you might move on to the next step. Once you have your whole flow that’s working, you’ll want to productionize that through some CI/CD pipeline. You’ll push out your flow, there you’ll have effectively some tests. You might have some configurations where you want your flow to be deployed in different manners. This is extremely common at Netflix, and I’m sure in other companies as well.

If you take, for example, the CDM example, the Content Demand Modeling, they will effectively have different metrics that they’re optimizing for that they need to calculate, but their flow looks very similar. It will use different tables. It might use different objectives, slightly different metrics, but most of the structure is the same. Ideally, they want to develop this flow once, and then configure it to different configurations. You’ll take all those and deploy multiple flows. We want to improve this whole cycle, both the first one, where you’re basically iterating and developing, and then the CI/CD part where you’re deploying. The two features that we have upcoming for this, the first one is called Spin. The name may change, we may spin on it a little bit. The idea is to iterate on a single step quickly with a fast local execution.

Then, once you’re done with that, you can iterate on your next step using the results of your previous step in that next step. You can build your flow step by step, and once you have the whole thing, you’ll be able to then ship it over to production. This will actually also be useful, if you think about it, just for debugging a particular step that has run. You’ll be able to say, I just want to debug this step, just execute it locally, make the changes quickly, and re-execute that step. Right now, that is not really possible. You have to re-execute the whole flow, so it’s annoying for our users. The last one that should be coming out soon is the configurations, and it will be very simple: you’ll have deploy-time, static parameters that you can define in some file, and those values can be used anywhere in your flow, in the decorators, in the parameters, in the steps.
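The configuration idea, one flow developed once and deployed in several variants from static values in a file, can be sketched as follows. This is not the Metaflow configuration feature: `FlowConfig`, `load_configs`, `deployment_plan`, and the table and metric names are all invented for illustration, and a string stands in for the file.

```python
import json
from dataclasses import dataclass

@dataclass(frozen=True)
class FlowConfig:
    """Static, deploy-time values for one variant of a flow."""
    name: str
    table: str
    metric: str
    schedule: str = "daily"

# Stand-in for a configuration file; all values are made up.
CONFIG_TEXT = """
[
  {"name": "demand_a", "table": "example_db.table_a", "metric": "metric_a"},
  {"name": "demand_b", "table": "example_db.table_b", "metric": "metric_b", "schedule": "weekly"}
]
"""

def load_configs(text):
    return [FlowConfig(**entry) for entry in json.loads(text)]

def deployment_plan(configs, flow_name="ExampleFlow"):
    """One deployment per configuration, all sharing the same flow structure."""
    return {f"{flow_name}.{config.name}": config for config in configs}

plan = deployment_plan(load_configs(CONFIG_TEXT))
```

Because the values are frozen at deploy time, each deployed variant stays fixed even if the file changes later.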

Decorators and Their Context

Betts: You had a slide about, I can run this locally, I can run on a server, and here’s where the instructions are for how many cores and memory or the scheduling. Does that all just get ignored when you’re running it locally? Those were only applied for Maestro?

Cledat: The decorators have different context, and they’re aware of their context, and they’ll apply themselves as needed in the correct context.

Betts: I was hoping my laptop was suddenly going to have 64 cores.

Cledat: No, we didn’t increase the RAM on your laptop.

Questions and Answers

Participant: Again, about resources and autoscaling. If I determine that my model needs a certain amount of resources, and that’s incorrect. I tell you it needs 64 cores, whatnot, and actually it needs 128, or it needs 2. What is abstracted away to increase performance for that throughput?

Cledat: There are actually two cases here. The first case is you tell us that you need more, and you actually need less. At that point, it’s not a functionality problem, it’ll be a resource problem. Netflix has become more concerned with resources, especially with large language models these days. We do try to surface this to the user and give them hints like, you asked for this many resources, but you actually only needed this much, so maybe tweak it around. We don’t prevent users from doing that. We try to provide a platform that can give them as much information for them to do their job.

In the other case, where, effectively, it won’t work, then unfortunately, it will break, and then users usually come to us and say, what happened here? We help them solve the problem. You’re right that effectively, we can’t abstract everything away, unfortunately. We did explore at some point whether or not we could auto-tune the resources, and there’s some research that’s going on there. There are some cases at Netflix where we do do that. Actually, there is one interesting blog post that you can find on the Netflix Medium blog that talks about tuning resources for Spark jobs, depending on their failure characteristics. Actually, the tuning algorithm that is used uses Metaflow, so it’s funny. It’s not Metaflow that’s tuning itself, but it’s Metaflow used to tune something else. Yes, unfortunately, not everything can be abstracted.

Berg: One thing that we do provide is some automatic visualizations. We have a Metaflow UI that can show you characteristics about your individual runs and tasks. In those visualizations, for instance, if you use a GPU, we automatically embed some GPU statistics in a graph, and warn you if you’re underutilizing it, for instance. We definitely have a notion of freedom. We would rather people do their work and use too many resources, because, again, I mentioned that people time is actually more costly than machine time. In the best case, a project is successful, and it’s fine if we go back and tune the resources a little bit manually.

MMS • RSS

Buyback capacity is moving up in a very big way for three stocks. Two tech stocks hoping to leverage and profit from AI are indicating that management has significant confidence in generating future returns. Additionally, an automobile components company can now buy back nearly a third of its shares.

MongoDB Expands Share Buyback Program to $1 Billion

On June 4, MongoDB (NASDAQ: MDB) reported earnings that ended its streak of disappointing results. The company also announced a big increase to its share buyback program. MongoDB raised its share repurchase authorization by $800 million.

MongoDB Today (as of 06/17/2025 04:00 PM Eastern)

- 52-Week Range: $140.78 to $370.00
- Price Target: $282.47

Now, its total buyback capacity is $1 billion. This is a substantial buyback capacity, equal to approximately 5.9% of the company’s market cap as of the June 13 close.

MongoDB’s stock has dropped dramatically over the past 16 months or so. The stock reached a share price of around $500 in February 2024, but now trades in the low $200 range. MongoDB has never actually spent on stock buybacks before, and the timing of the authorization suggests the company sees value in its stock near current levels.

This was a welcome surprise, especially when paired with the company’s Q1 financials that beat on sales and adjusted earnings per share (EPS). Shares rose approximately 13% in the day after the results. This comes after the company’s March report, when shares saw a post-earnings drop of 27%.

The company issued its full fiscal year outlook that quarter, and analysts considered it disappointing. It has been waiting to gain traction in AI application-building use cases, but progress has been slow. Still, the company’s subscription growth last quarter was strong at 22%.

Autoliv Launches $2.5 Billion Share Repurchase Program

Autoliv Today

- 52-Week Range: $75.49 to $112.27
- Dividend Yield: 2.54%
- P/E Ratio: 12.67
- Price Target: $114.00

Next up is Autoliv (NYSE: ALV). The company just announced a massive share repurchase program. The company’s $2.5 billion authorization is equal to around 30% of its market capitalization as of the June 13 close. The program will last through the end of 2029, or approximately 18 quarters. Autoliv would need to significantly accelerate its buyback pace to utilize this full capacity over that period.

Since the company began repurchasing stock back in 2022, it has averaged buyback spending of around $82 million per quarter. To use the full $2.5 billion over 18 quarters, that number would need to ramp up to around $139 million per quarter, an increase of nearly 70%.
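The pace figures above follow from simple division. A short Python check, using only the numbers quoted in this article:

```python
authorization_musd = 2500.0   # $2.5 billion authorization, in millions of dollars
quarters = 18                 # approximate length of the program
historical_pace_musd = 82.0   # average quarterly buyback spending since 2022

# Spending per quarter needed to use the full authorization.
required_pace_musd = authorization_musd / quarters

# Percentage increase over the historical pace.
increase_pct = (required_pace_musd / historical_pace_musd - 1.0) * 100.0

print(f"required pace: ${required_pace_musd:.0f}M per quarter")
print(f"increase over historical pace: {increase_pct:.0f}%")
```

The result is roughly $139 million per quarter, about 69% above the historical average, which matches the "nearly 70%" figure.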

Autoliv also raised its dividend by 21%. Its next $0.85 per share dividend will be payable on Sept. 23 to shareholders of record on Sept. 5. This quarterly figure implies an annual dividend payout of $3.40, giving the stock an indicated dividend yield of approximately 3.2%.

DocuSign Adds $1 Billion to Share Buyback Authorization

DocuSign (NASDAQ: DOCU), a software firm implementing AI, also just announced a substantial addition to its buyback authorization.

DocuSign Today (as of 06/17/2025 04:00 PM Eastern)

- 52-Week Range: $48.80 to $107.86
- P/E Ratio: 14.14
- Price Target: $89.77

The company’s additional share buyback authorization is worth $1 billion. This adds to the stock’s previous authorization, bringing its total buyback capacity to $1.4 billion. This total is equal to around 9.4% of the company’s market capitalization as of the June 13 close. DocuSign has really stepped up its buybacks recently, spending $700 million on repurchases over the last 12 months. From 2020 to 2023, its average annual spending was only around $300 million.

Shares have been moving on an upward trajectory, rising around 44% over the past 52 weeks. This strong performance comes even after the firm’s latest earnings, which caused shares to fall 19%. However, the company’s recent rise in buybacks indicates confidence in the generally improving outlook of its own business. Recent buyback activity and the sizeable new authorization suggest that the company thinks shares can keep rising.

DocuSign is in the process of releasing AI features for customers powered by its Iris AI engine. It plans to roll out AI contract agents later this year. This tool will analyze contracts in seconds, creating efficiencies over manual contract review.

These increases in share buyback capacity seem to indicate that management is confident in the direction of shares going forward. For MongoDB, this may be due more to the huge drop in its share price. Management may think the stock is hitting a bottom near current levels.

For DocuSign, management may be bullish on its coming AI features and believes that markets are undervaluing its growth prospects. For Autoliv, the sheer size of the new authorization suggests a strong commitment to shareholder returns and confidence in long-term performance, especially following its recent dividend increase.

MMS • RSS

An enterprise IT and cloud services provider, Tier 5 Technologies, has partnered with MongoDB, a database for modern applications, to harness Africa’s digital economy potential projected to reach $100 billion.

The partnership, also aimed at fostering the expansion of Tier 5 Technologies services into the West African market, specifically in Nigeria, was launched at a recent “Legacy Modernisation Day” event in Lagos.

Speaking at the event, Anders Irlander Fabry, the Regional Director for Middle East and Africa at MongoDB, described the partnership as a pivotal step in deepening their presence in Nigeria.

“Tier 5 Technologies brings a proven track record, a strong local network, and a clear commitment to MongoDB as their preferred data platform.

“They’ve already demonstrated their dedication by hiring MongoDB-focused sales specialists, connecting us with top C-level executives, and closing our first enterprise deals in Nigeria in record time. We’re excited about the impact we can make together in this strategic market,” he added.

The Director of Sales, Tier 5 Technologies, Afolabi Bolaji, stressed the importance of the partnership, describing it as a broader shift in how global technology leaders perceive Africa.

“This isn’t just a reseller deal; we have made significant investments in MongoDB because we believe it will underpin the next generation of African innovation. Many of our customers from nimble fintechs to established banks already rely on it. Now, they’ll have access to enterprise-grade features, local support, and global expertise.

“With surging demand for solutions in fintech, logistics, AI, and edtech across the continent, this partnership holds the potential for transformative impact and signals a long-term commitment to Africa’s technological future, helping to define how Africa builds its digital economy from the ground up,” he said.

MMS • RSS

Exchange Traded Concepts LLC raised its holdings in MongoDB, Inc. (NASDAQ:MDB) by 92.3% during the 1st quarter, according to its most recent disclosure with the Securities & Exchange Commission. The firm owned 15,571 shares of the company’s stock after buying an additional 7,472 shares during the period. Exchange Traded Concepts LLC’s holdings in MongoDB were worth $2,731,000 at the end of the most recent quarter.

A number of other hedge funds also recently made changes to their positions in MDB. Vanguard Group Inc. raised its stake in MongoDB by 0.3% in the 4th quarter. Vanguard Group Inc. now owns 7,328,745 shares of the company’s stock valued at $1,706,205,000 after acquiring an additional 23,942 shares during the period. Franklin Resources Inc. boosted its holdings in shares of MongoDB by 9.7% during the 4th quarter. Franklin Resources Inc. now owns 2,054,888 shares of the company’s stock worth $478,398,000 after buying an additional 181,962 shares during the period. Geode Capital Management LLC grew its stake in MongoDB by 1.8% in the 4th quarter. Geode Capital Management LLC now owns 1,252,142 shares of the company’s stock valued at $290,987,000 after buying an additional 22,106 shares during the last quarter. First Trust Advisors LP raised its holdings in MongoDB by 12.6% in the 4th quarter. First Trust Advisors LP now owns 854,906 shares of the company’s stock valued at $199,031,000 after acquiring an additional 95,893 shares during the period. Finally, Norges Bank acquired a new stake in MongoDB during the fourth quarter worth approximately $189,584,000. 89.29% of the stock is owned by institutional investors and hedge funds.

Insider Transactions at MongoDB

In other MongoDB news, insider Cedric Pech sold 1,690 shares of the firm’s stock in a transaction on Wednesday, April 2nd. The shares were sold at an average price of $173.26, for a total value of $292,809.40. Following the completion of the sale, the insider now directly owns 57,634 shares in the company, valued at approximately $9,985,666.84. This trade represents a 2.85% decrease in their ownership of the stock. The transaction was disclosed in a document filed with the SEC. Also, CAO Thomas Bull sold 301 shares of MongoDB stock in a transaction on Wednesday, April 2nd. The shares were sold at an average price of $173.25, for a total transaction of $52,148.25. Following the completion of the sale, the chief accounting officer now directly owns 14,598 shares of the company’s stock, valued at approximately $2,529,103.50. This trade represents a 2.02% decrease in their ownership of the stock. This sale was also publicly disclosed. In the last ninety days, insiders sold 49,208 shares of company stock valued at $10,167,739. Corporate insiders own 3.10% of the company’s stock.

Analyst Upgrades and Downgrades

Several research firms have recently commented on MDB. Wedbush reaffirmed an “outperform” rating and issued a $300.00 target price on shares of MongoDB in a research report on Thursday, June 5th. UBS Group increased their price objective on MongoDB from $213.00 to $240.00 and gave the stock a “neutral” rating in a research report on Thursday, June 5th. Piper Sandler boosted their target price on shares of MongoDB from $200.00 to $275.00 and gave the company an “overweight” rating in a research report on Thursday, June 5th. Cantor Fitzgerald upped their price target on shares of MongoDB from $252.00 to $271.00 and gave the company an “overweight” rating in a research note on Thursday, June 5th. Finally, Canaccord Genuity Group dropped their price objective on shares of MongoDB from $385.00 to $320.00 and set a “buy” rating for the company in a research note on Thursday, March 6th. Eight analysts have rated the stock with a hold rating, twenty-four have assigned a buy rating and one has issued a strong buy rating to the company’s stock. According to data from MarketBeat, the company has a consensus rating of “Moderate Buy” and an average price target of $282.47.

MongoDB Price Performance

Shares of MongoDB stock traded up $3.10 during midday trading on Tuesday, reaching $205.60. The company had a trading volume of 1,852,561 shares, compared to its average volume of 1,955,294. The stock has a fifty day moving average price of $182.98 and a 200 day moving average price of $223.93. MongoDB, Inc. has a fifty-two week low of $140.78 and a fifty-two week high of $370.00. The firm has a market cap of $16.69 billion, a PE ratio of -75.04 and a beta of 1.39.

MongoDB (NASDAQ:MDB) last issued its quarterly earnings results on Wednesday, June 4th. The company reported $1.00 earnings per share (EPS) for the quarter, topping analysts’ consensus estimates of $0.65 by $0.35. MongoDB had a negative net margin of 10.46% and a negative return on equity of 12.22%. The firm had revenue of $549.01 million for the quarter, compared to the consensus estimate of $527.49 million. During the same quarter in the previous year, the company earned $0.51 EPS. The company’s revenue for the quarter was up 21.8% on a year-over-year basis. As a group, sell-side analysts forecast that MongoDB, Inc. will post -1.78 EPS for the current year.

MongoDB Company Profile

MongoDB, Inc, together with its subsidiaries, provides general purpose database platform worldwide. The company provides MongoDB Atlas, a hosted multi-cloud database-as-a-service solution; MongoDB Enterprise Advanced, a commercial database server for enterprise customers to run in the cloud, on-premises, or in a hybrid environment; and Community Server, a free-to-download version of its database, which includes the functionality that developers need to get started with MongoDB.

MMS • RSS

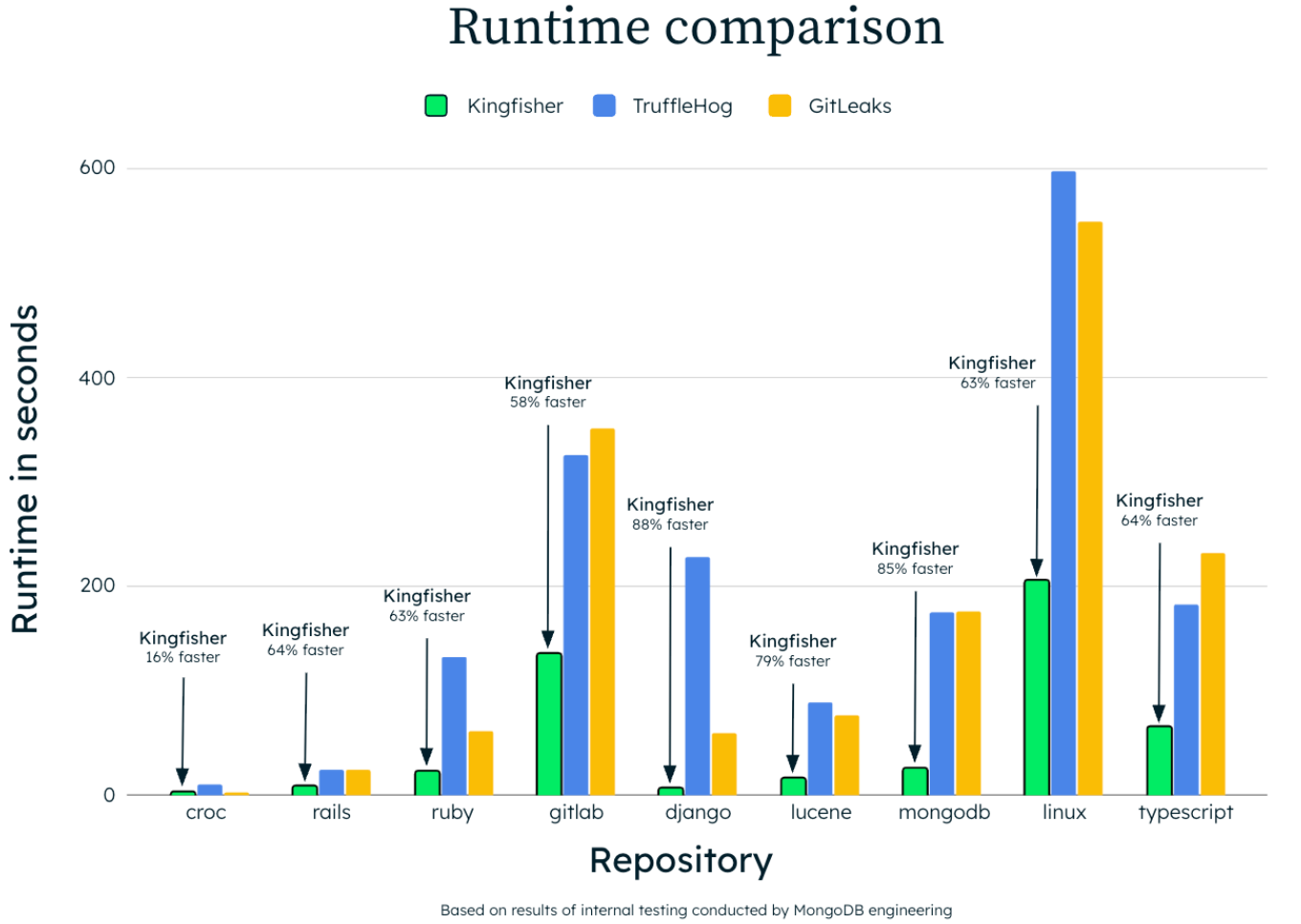

MongoDB has open-sourced a tool called Kingfisher that it uses internally to “rapidly scan and verify secrets across Git repositories, directories, and more”, publishing it on GitHub under an Apache 2.0 licence this week.

Unlike Wiz’s recent Llama-3.2-1B-based secret scanner, this one proudly uses some turbo-charged regex rather than generative AI to work.

Staff security engineer Mick Grove said he created Kingfisher at MongoDB after growing dissatisfied with the “array of tools, from static code analyzers to secrets managers” that he was using to detect and manage exposed secrets before they turned into security risks for the company.

One frustration was the range of false positives such tools generate.

Kingfisher, he said, actively validates the secrets it detects – claiming it is significantly faster than rival OSS tools like TruffleHog or Gitleaks. Users can run it for service‑specific validation checks (AWS, Azure, GCP, etc.) to confirm if a detected string is a live credential, the Kingfisher repo says; i.e. it can be used for testing database connectivity and calling cloud service APIs to confirm whether the secret is active and poses a risk.

It can parse source code across 20+ programming languages.

(The project was “initially inspired by and built on top of a forked version of” the Apache 2.0 licensed Nosey Parker tool developed by Praetorian for offensive security engagements. “Kingfisher re-engineers and extends its foundation with modern, high-performance technologies” said Grove.)

Kingfisher “combs through code repositories, Git commit histories, and file systems. Kingfisher performs this to rapidly uncover hard-coded credentials, API keys, and other sensitive data. It can be used seamlessly across GitHub and GitLab repositories, both remote and local, as well as files and directories on disk,” Grove wrote of the Rust-based toolkit.

It “combines Intel’s hardware‑accelerated Hyperscan regex engine with language‑aware parsing via Tree‑Sitter, and ships with 700+ built‑in rules to detect, validate, and triage secrets before they ever reach production.”
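The detect-then-validate flow described above can be illustrated with a minimal Python sketch. It is not Kingfisher's implementation (which is written in Rust and uses Hyperscan and Tree-sitter); the two rules, the `Finding` type, and the validator callback are hypothetical and chosen only to show how a live check on each match can separate real credentials from false positives.

```python
import re
from typing import Callable, Dict, List, NamedTuple, Optional


class Finding(NamedTuple):
    rule: str
    line_no: int
    match: str
    validated: Optional[bool]  # None when no validator is registered for the rule


# Hypothetical two-rule set for illustration; real scanners ship hundreds of rules.
RULES: Dict[str, "re.Pattern[str]"] = {
    "aws_access_key_id": re.compile(r"\b(?:AKIA|ASIA)[0-9A-Z]{16}\b"),
    "generic_api_key": re.compile(
        r"(?i)\bapi[_-]?key\s*[:=]\s*['\"]([A-Za-z0-9_\-]{20,})['\"]"
    ),
}


def scan_text(
    text: str,
    validators: Optional[Dict[str, Callable[[str], bool]]] = None,
) -> List[Finding]:
    """Run every rule over every line, then validate each candidate if possible."""
    validators = validators or {}
    findings: List[Finding] = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in RULES.items():
            for m in pattern.finditer(line):
                # Use the capture group when the rule has one, else the whole match.
                secret = m.group(m.lastindex or 0)
                check = validators.get(rule)
                validated = check(secret) if check else None
                findings.append(Finding(rule, line_no, secret, validated))
    return findings
```

In a real scanner the validator would call the relevant service API to test whether the credential is live; here any function from string to bool can be plugged in, for example `scan_text(source, {"aws_access_key_id": my_check})`.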

MMS • RSS

The mix of Java, AWS, and MongoDB is helping the fuel industry run more efficiently and serve customers better.

Sasikanth Mamidi

The fuel sector might not seem like the most obvious place to look for innovation, but technology is quietly reshaping how things work there, too. From managing fuel pumps to tracking tank levels and offering loyalty rewards, digital tools like Java, AWS, and MongoDB are playing an increasingly significant role.

Sasikanth Mamidi, a seasoned professional whose work spans cloud platforms and large-scale applications, has been central to these changes. As a Senior Software Engineer Lead with more than 15 years in the industry, he helped build a Spring Boot application that runs on AWS Lambda and uses Kinesis streams to move data quickly and securely. The system uses MongoDB Atlas to store real-time data and has helped reduce operating costs by 15% while keeping fuel dispensers running 95% of the time.
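To make the Lambda-plus-Kinesis pattern concrete, here is a minimal sketch of a handler that decodes a batch of Kinesis records. The system described in the article is a Java Spring Boot application; Python is used here only for brevity. The payload fields (`type`, `pump_id`) and the `pump_offline` event name are hypothetical, and the database write is left out. Only the event envelope, with base64-encoded data under `Records[].kinesis.data`, follows the format Lambda uses for Kinesis triggers.

```python
import base64
import json
from typing import Any, Dict, List


def decode_kinesis_records(event: Dict[str, Any]) -> List[Dict[str, Any]]:
    """Decode the base64-encoded JSON payload of each Kinesis record in the batch."""
    readings: List[Dict[str, Any]] = []
    for record in event.get("Records", []):
        payload = base64.b64decode(record["kinesis"]["data"])
        readings.append(json.loads(payload))
    return readings


def handler(event: Dict[str, Any], context: Any) -> Dict[str, int]:
    """Lambda entry point: decode the batch and count events that need attention."""
    readings = decode_kinesis_records(event)
    # Hypothetical event type; a real system would persist readings to a
    # database and raise alerts here.
    alerts = [r for r in readings if r.get("type") == "pump_offline"]
    return {"processed": len(readings), "alerts": len(alerts)}
```

Because Lambda delivers Kinesis records in batches, the handler processes a list rather than a single message, which is part of what lets this style of pipeline keep up with bursts of dispenser events.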

He shared how serverless computing, where capacity scales automatically and teams do not have to manage servers themselves, has been a key part of this shift. One platform built with AWS Lambda and Amazon API Gateway cut infrastructure costs by 20%. That means better performance for less money, something every business aims for. But the impact of these initiatives isn’t just about reducing costs. Real-time event handling is a major requirement in this space. “With [these] kinds of feature implementation with this tech stack, we saved millions of dollars from fuel thefts, almost 20% cost reductions in the maintenance of ATGs [automatic tank gauges],” he added. “We also achieved to deliver accurate reconciliation reports, also we can send fuel sales report in terms of volumes and dollars on demand for all the stores.”

Another big improvement came through a mobile application built to let customers authorize pumps, fuel their vehicles, and get receipts, all completely contact-free. “This was implemented at the Covid which helps the customers to reduce the contact to the pumps and also gave very exciting experience too,” he noted. The app also ties into a loyalty system where users earn points and get discounts based on how much fuel they buy. The company also replaced expensive third-party tracking devices with a cloud-based system. Before, it had to rent equipment just to read fuel tank data. Now, data is pulled directly from each location and stored in MongoDB, making it easy to generate reports, track inventory, and even detect unusual activity. This change alone led to a 15% cost saving, while also making maintenance easier and more efficient.
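One common way to detect unusual activity from tank data is a volume reconciliation: the measured closing level is compared with the level expected from the opening level, deliveries, and metered sales. The sketch below illustrates that arithmetic only. The article does not describe the company's actual method, and the field names and the 0.5% tolerance are assumptions.

```python
from dataclasses import dataclass


@dataclass
class TankPeriod:
    """Volumes for one tank over one reporting period, all in the same unit."""
    opening_volume: float
    deliveries_volume: float
    sales_volume: float
    closing_volume: float  # measured by the tank gauge at period end


def variance(p: TankPeriod) -> float:
    """Measured closing volume minus expected closing volume (negative = loss)."""
    expected_closing = p.opening_volume + p.deliveries_volume - p.sales_volume
    return p.closing_volume - expected_closing


def is_unusual(p: TankPeriod, tolerance_fraction: float = 0.005) -> bool:
    """Flag the period when the variance exceeds a fraction of throughput.

    The 0.5% default is an illustrative assumption, not an industry standard.
    """
    throughput = p.sales_volume + p.deliveries_volume
    allowed = tolerance_fraction * max(throughput, 1.0)
    return abs(variance(p)) > allowed
```

For example, a tank that opens at 10,000 units, receives 5,000, and sells 4,000 should close at 11,000. A measured 10,950 is a 50-unit shortfall, which exceeds the 45-unit allowance at 0.5% of 9,000 units of throughput, so the period would be flagged for review.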

Additionally, the loyalty system has turned out to be more than a bonus feature: it’s a way to better understand customers. The backend can analyze fuel usage, suggest personalized offers, and send real-time promotions that encourage people to head inside stores after fueling up. The goal is not just to sell fuel but to increase overall business.

Mamidi also stressed that none of this was easy. Before these systems were in place, most operations relied on manual monitoring or external vendors, which was costly and often inaccurate. Now, with cloud technology, the same tasks can be done faster, more reliably, and at a lower cost.

Looking ahead, there are even more possibilities. The next step could be adding AI to make fuel pumps smarter, turning them into kiosks where people can place orders, see personalized ads, or even pay for in-store items. With better cloud infrastructure, updates can roll out automatically, helping keep everything running smoothly across locations.

In short, the mix of Java, AWS, and MongoDB is helping the fuel industry run more efficiently and serve customers better. It’s a reminder that even industries that seem unchanged for decades are being reshaped by smart use of technology.

MMS • Daniel Curtis

Biome, the all-in-one JavaScript toolchain, has released v2.0 Beta. The beta introduces a number of new features that bring it closer to ESLint and Prettier: plugins for writing custom lint rules, domains for grouping lint rules by technology, and improved import sorting.

Biome supports JavaScript, TypeScript, JSX, TSX, JSON and GraphQL, and claims to be 35x faster than Prettier.

While ESLint and Prettier have long been the default choices for JavaScript and TypeScript projects, developers have had to maintain multiple configurations, plugins, and parsers to keep these tools working together. Biome’s goal is to consolidate all of that under one high-performance tool written in Rust. Although the goal is clear, Biome has still been missing features compared to ESLint and Prettier, as one Reddit user noted:

“I’ve replaced ESLint + Prettier in favor of Biome, however it does feel incomplete. YAML, GraphQL, import sorting and plugins are missing.”

Biome v2.0 aims to address these missing features. Plugins allow users to match custom code snippets and report diagnostics on them. They are a first step in extending the linting rules for Biome users, and the team has indicated in its release blog that it has “plenty of ideas for making them more powerful”.

The plugins should be written using GritQL, which is an open-source query language created by GritIO for searching and modifying source code. Plugins can be added into a project by adding a reference to all the .grit plugin files within a project’s configuration. It is noted in the documentation that not all GritQL features are supported yet in Biome, and there is a GitHub issue that tracks the status of feature support.

Domains are a way to organise all the linting rules by technology, framework or domain, and there are currently four domains: next, react, solid and test. It is possible to control rules for the full domain in the biome config. Biome will also automatically inspect a package.json file and determine which domains should be enabled by default.

The improved import organizer is generating excitement among early adopters. The organizer now merges imports from the same module or file into a single statement. Custom ordering configuration has also been added, so users can define their own import order. A common use case is grouping type imports at either the start or the end of the import chunk.
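The two behaviours described above, merging imports from the same module and placing type imports at one end of the chunk, can be sketched in a few lines of Python. This is an illustration of the idea only, not Biome's algorithm or configuration format; the `Import` type and the `organize` and `render` helpers are hypothetical.

```python
from typing import Dict, List, NamedTuple, Set, Tuple


class Import(NamedTuple):
    module: str
    names: Tuple[str, ...]
    type_only: bool = False


def organize(imports: List[Import], types_last: bool = True) -> List[Import]:
    """Merge imports that share a module, then group type imports at one end."""
    merged: Dict[Tuple[str, bool], Set[str]] = {}
    for imp in imports:
        merged.setdefault((imp.module, imp.type_only), set()).update(imp.names)
    result = [
        Import(module, tuple(sorted(names)), type_only)
        for (module, type_only), names in merged.items()
    ]
    # False sorts before True, so the first key component decides which group leads.
    result.sort(key=lambda i: (i.type_only if types_last else not i.type_only, i.module))
    return result


def render(imp: Import) -> str:
    """Render one merged import as a JavaScript/TypeScript import statement."""
    keyword = "import type" if imp.type_only else "import"
    return f'{keyword} {{ {", ".join(imp.names)} }} from "{imp.module}";'
```

Given separate imports of `useState` and `useEffect` from `react` plus a type import of `User` from `./types`, `organize` returns one merged `react` import followed by the type import, and passing `types_last=False` moves the type import to the front instead.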

Biome has a guide dedicated to developers looking to migrate from ESLint and Prettier over to Biome. It includes two separate commands for automatically migrating ESLint and Prettier configurations. The migration guide notes that Biome attempts to match Prettier’s output as closely as possible, but there may be differences due to Biome’s different defaults.

Originally forked from Rome, Biome was born and built by the open-source community. Since then, the project has grown with contributions from former Rome maintainers and new community members alike. The Biome GitHub repository is now actively maintained, and the project has continued to grow.

The full documentation for Biome v2.0 Beta is available on biomejs.dev, including rule references and setup instructions for various environments.
