Month: June 2025

MMS • RSS

MongoDB, Inc. (NASDAQ:MDB) saw some unusual options trading activity on Wednesday. Investors bought 36,130 call options on the stock. This represents an increase of approximately 2,077% compared to the average volume of 1,660 call options.
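The percentage quoted above follows directly from the two volumes in the report. A minimal sketch of that arithmetic; the function name `percent_increase` is an illustrative choice and does not come from the report:

```python
def percent_increase(observed: float, baseline: float) -> float:
    """Return how far `observed` sits above `baseline`, in percent."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (observed - baseline) / baseline * 100.0


# Figures quoted in the report: 36,130 calls bought vs. an average of 1,660.
calls_increase = percent_increase(36_130, 1_660)
print(f"{calls_increase:,.0f}%")  # prints "2,077%"
```

The increase is measured relative to the average volume, not as a ratio of the two numbers, which is why roughly 21.8 times the usual volume is reported as an increase of about 2,077%.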

Insider Buying and Selling at MongoDB

In related news, insider Cedric Pech sold 1,690 shares of the firm’s stock in a transaction that occurred on Wednesday, April 2nd. The shares were sold at an average price of $173.26, for a total transaction of $292,809.40. Following the transaction, the insider now owns 57,634 shares in the company, valued at $9,985,666.84. This trade represents a 2.85% decrease in their ownership of the stock. The transaction was disclosed in a legal filing with the Securities & Exchange Commission, which is available through the SEC website. Also, Director Hope F. Cochran sold 1,175 shares of the firm’s stock in a transaction that occurred on Tuesday, April 1st. The shares were sold at an average price of $174.69, for a total transaction of $205,260.75. Following the transaction, the director now owns 19,333 shares in the company, valued at $3,377,281.77. This represents a 5.73% decrease in their position. Over the last 90 days, insiders have sold 49,208 shares of company stock worth $10,167,739. Company insiders own 3.10% of the stock.
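The position-decrease percentages can be reproduced from the share counts in the paragraph above. The sketch below assumes the percentage is shares sold divided by the holding before the sale (shares sold plus shares still owned). That assumption matches the reported figures, but the report does not spell out its method, and the function name is hypothetical.

```python
def position_decrease_pct(shares_sold: int, shares_after: int) -> float:
    """Percent of the pre-sale holding that was sold (assumed definition)."""
    shares_before = shares_sold + shares_after
    if shares_before <= 0:
        raise ValueError("pre-sale holding must be positive")
    return shares_sold / shares_before * 100.0


# Share counts quoted in the report.
print(f"{position_decrease_pct(1_690, 57_634):.2f}%")  # Cedric Pech
print(f"{position_decrease_pct(1_175, 19_333):.2f}%")  # Hope F. Cochran
```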

Institutional Investors Weigh In On MongoDB

A number of institutional investors have recently modified their holdings of the stock. OneDigital Investment Advisors LLC boosted its stake in shares of MongoDB by 3.9% during the 4th quarter. OneDigital Investment Advisors LLC now owns 1,044 shares of the company’s stock valued at $243,000 after buying an additional 39 shares during the period. Avestar Capital LLC raised its holdings in shares of MongoDB by 2.0% during the 4th quarter. Avestar Capital LLC now owns 2,165 shares of the company’s stock valued at $504,000 after purchasing an additional 42 shares in the last quarter. Aigen Investment Management LP raised its holdings in shares of MongoDB by 1.4% during the 4th quarter. Aigen Investment Management LP now owns 3,921 shares of the company’s stock valued at $913,000 after purchasing an additional 55 shares in the last quarter. Handelsbanken Fonder AB raised its holdings in shares of MongoDB by 0.4% during the 1st quarter. Handelsbanken Fonder AB now owns 14,816 shares of the company’s stock valued at $2,599,000 after purchasing an additional 65 shares in the last quarter. Finally, O Shaughnessy Asset Management LLC raised its holdings in shares of MongoDB by 4.8% during the 4th quarter. O Shaughnessy Asset Management LLC now owns 1,647 shares of the company’s stock valued at $383,000 after purchasing an additional 75 shares in the last quarter. Institutional investors and hedge funds own 89.29% of the company’s stock.

MongoDB Stock Down 1.1%

Shares of NASDAQ:MDB opened at $210.60 on Thursday. The firm has a fifty day moving average of $179.38 and a two-hundred day moving average of $227.94. The firm has a market capitalization of $17.10 billion, a P/E ratio of -76.86 and a beta of 1.39. MongoDB has a 12-month low of $140.78 and a 12-month high of $370.00.

MongoDB (NASDAQ:MDB) last issued its earnings results on Wednesday, June 4th. The company reported $1.00 earnings per share (EPS) for the quarter, beating analysts’ consensus estimates of $0.65 by $0.35. The company had revenue of $549.01 million during the quarter, compared to analyst estimates of $527.49 million. MongoDB had a negative net margin of 10.46% and a negative return on equity of 12.22%. The company’s revenue for the quarter was up 21.8% on a year-over-year basis. During the same quarter last year, the business posted $0.51 EPS. Equities research analysts expect that MongoDB will post -1.78 earnings per share for the current fiscal year.

Wall Street Analysts Forecast Growth

A number of research analysts recently commented on MDB shares. JMP Securities reiterated a “market outperform” rating and set a $345.00 price objective on shares of MongoDB in a research note on Thursday, June 5th. Macquarie reiterated a “neutral” rating and set a $230.00 price objective (up from $215.00) on shares of MongoDB in a research note on Friday, June 6th. Wedbush reiterated an “outperform” rating and set a $300.00 price objective on shares of MongoDB in a research note on Thursday, June 5th. Morgan Stanley reduced their target price on shares of MongoDB from $315.00 to $235.00 and set an “overweight” rating for the company in a report on Wednesday, April 16th. Finally, KeyCorp cut shares of MongoDB from a “strong-buy” rating to a “hold” rating in a report on Wednesday, March 5th. Eight equities research analysts have rated the stock with a hold rating, twenty-four have given a buy rating and one has given a strong buy rating to the stock. Based on data from MarketBeat.com, the stock has a consensus rating of “Moderate Buy” and a consensus target price of $282.47.

About MongoDB

MongoDB, Inc., together with its subsidiaries, provides a general-purpose database platform worldwide. The company provides MongoDB Atlas, a hosted multi-cloud database-as-a-service solution; MongoDB Enterprise Advanced, a commercial database server for enterprise customers to run in the cloud, on-premises, or in a hybrid environment; and Community Server, a free-to-download version of its database, which includes the functionality that developers need to get started with MongoDB.

MMS • RSS

MongoDB, Inc. (NASDAQ:MDB) was the recipient of some unusual options trading on Wednesday. Investors purchased 23,831 put options on the stock. This is an increase of 2,157% compared to the average daily volume of 1,056 put options.

Insider Activity

In other news, insider Cedric Pech sold 1,690 shares of MongoDB stock in a transaction dated Wednesday, April 2nd. The stock was sold at an average price of $173.26, for a total transaction of $292,809.40. Following the completion of the sale, the insider now owns 57,634 shares in the company, valued at approximately $9,985,666.84. This trade represents a 2.85% decrease in their position. The sale was disclosed in a legal filing with the SEC. Also, Director Hope F. Cochran sold 1,175 shares of MongoDB stock in a transaction dated Tuesday, April 1st. The shares were sold at an average price of $174.69, for a total value of $205,260.75. Following the sale, the director now owns 19,333 shares of the company’s stock, valued at approximately $3,377,281.77. This trade represents a 5.73% decrease in their position. Insiders sold a total of 49,208 shares of company stock valued at $10,167,739 in the last ninety days. Insiders currently own 3.10% of the stock.

Institutional Inflows and Outflows

A number of institutional investors and hedge funds have recently made changes to their positions in the stock. Cloud Capital Management LLC purchased a new position in MongoDB during the 1st quarter worth $25,000. Hollencrest Capital Management purchased a new stake in MongoDB during the 1st quarter valued at about $26,000. Cullen Frost Bankers Inc. grew its stake in MongoDB by 315.8% during the 1st quarter. Cullen Frost Bankers Inc. now owns 158 shares of the company’s stock valued at $28,000 after purchasing an additional 120 shares during the last quarter. Strategic Investment Solutions Inc. IL purchased a new stake in MongoDB during the 4th quarter valued at about $29,000. Finally, NCP Inc. acquired a new position in shares of MongoDB in the 4th quarter valued at about $35,000. 89.29% of the stock is currently owned by hedge funds and other institutional investors.

MongoDB Stock Down 1.1%

Shares of NASDAQ:MDB opened at $210.60 on Thursday. The business has a fifty-day simple moving average of $179.38 and a two-hundred-day simple moving average of $227.94. The firm has a market cap of $17.10 billion, a PE ratio of -76.86 and a beta of 1.39. MongoDB has a twelve-month low of $140.78 and a twelve-month high of $370.00.

MongoDB (NASDAQ:MDB) last announced its quarterly earnings results on Wednesday, June 4th. The company reported $1.00 earnings per share (EPS) for the quarter, beating analysts’ consensus estimates of $0.65 by $0.35. MongoDB had a negative return on equity of 12.22% and a negative net margin of 10.46%. The business had revenue of $549.01 million for the quarter, compared to the consensus estimate of $527.49 million. During the same period last year, the business posted $0.51 EPS. The business’s revenue was up 21.8% compared to the same quarter last year. On average, research analysts expect that MongoDB will post -1.78 earnings per share for the current year.

Wall Street Analysts Weigh In

A number of equities analysts have commented on the company. Citigroup decreased their price target on MongoDB from $430.00 to $330.00 and set a “buy” rating on the stock in a research note on Tuesday, April 1st. Monness Crespi & Hardt upgraded MongoDB from a “neutral” rating to a “buy” rating and set a $295.00 price target on the stock in a research report on Thursday, June 5th. Canaccord Genuity Group dropped their price target on MongoDB from $385.00 to $320.00 and set a “buy” rating on the stock in a research report on Thursday, March 6th. Cantor Fitzgerald boosted their target price on MongoDB from $252.00 to $271.00 and gave the stock an “overweight” rating in a report on Thursday, June 5th. Finally, Needham & Company LLC reissued a “buy” rating and issued a $270.00 target price on shares of MongoDB in a report on Thursday, June 5th. Eight analysts have rated the stock with a hold rating, twenty-four have issued a buy rating and one has given a strong buy rating to the stock. Based on data from MarketBeat, the company presently has an average rating of “Moderate Buy” and an average target price of $282.47.

MongoDB Company Profile

MongoDB, Inc., together with its subsidiaries, provides a general-purpose database platform worldwide. The company provides MongoDB Atlas, a hosted multi-cloud database-as-a-service solution; MongoDB Enterprise Advanced, a commercial database server for enterprise customers to run in the cloud, on-premises, or in a hybrid environment; and Community Server, a free-to-download version of its database, which includes the functionality that developers need to get started with MongoDB.

MMS • RSS

MongoDB, Inc. (NASDAQ:MDB) was the target of some unusual options trading activity on Wednesday. Traders bought 36,130 call options on the stock. This represents an increase of 2,077% compared to the typical daily volume of 1,660 call options.

MongoDB Price Performance

MDB stock traded up $0.06 during trading hours on Thursday, reaching $210.66. The stock had a trading volume of 2,180,893 shares, compared to its average volume of 1,956,303. The stock has a 50-day simple moving average of $179.38 and a 200-day simple moving average of $227.94. The stock has a market capitalization of $17.10 billion, a PE ratio of -76.88 and a beta of 1.39. MongoDB has a fifty-two week low of $140.78 and a fifty-two week high of $370.00.

MongoDB (NASDAQ:MDB) last issued its quarterly earnings data on Wednesday, June 4th. The company reported $1.00 earnings per share (EPS) for the quarter, topping the consensus estimate of $0.65 by $0.35. MongoDB had a negative net margin of 10.46% and a negative return on equity of 12.22%. The firm had revenue of $549.01 million during the quarter, compared to the consensus estimate of $527.49 million. During the same period last year, the business posted $0.51 earnings per share. The business’s revenue for the quarter was up 21.8% compared to the same quarter last year. On average, sell-side analysts forecast that MongoDB will post -1.78 EPS for the current fiscal year.

Analyst Upgrades and Downgrades

A number of analysts have weighed in on the stock. Royal Bank of Canada reissued an “outperform” rating and issued a $320.00 price target on shares of MongoDB in a report on Thursday, June 5th. Guggenheim boosted their target price on MongoDB from $235.00 to $260.00 and gave the stock a “buy” rating in a report on Thursday, June 5th. The Goldman Sachs Group dropped their target price on shares of MongoDB from $390.00 to $335.00 and set a “buy” rating for the company in a research note on Thursday, March 6th. Truist Financial dropped their price objective on MongoDB from $300.00 to $275.00 and set a “buy” rating for the company in a report on Monday, March 31st. Finally, Cantor Fitzgerald lifted their price objective on MongoDB from $252.00 to $271.00 and gave the stock an “overweight” rating in a research note on Thursday, June 5th. Eight research analysts have rated the stock with a hold rating, twenty-four have assigned a buy rating and one has assigned a strong buy rating to the company. According to data from MarketBeat.com, the stock presently has a consensus rating of “Moderate Buy” and a consensus price target of $282.47.

Insider Activity

In related news, Director Hope F. Cochran sold 1,175 shares of MongoDB stock in a transaction on Tuesday, April 1st. The shares were sold at an average price of $174.69, for a total value of $205,260.75. Following the transaction, the director now owns 19,333 shares in the company, valued at $3,377,281.77. This trade represents a 5.73% decrease in their position. The sale was disclosed in a legal filing with the SEC. Also, CEO Dev Ittycheria sold 25,005 shares of the stock in a transaction that occurred on Thursday, June 5th. The shares were sold at an average price of $234.00, for a total value of $5,851,170.00. Following the sale, the chief executive officer now owns 256,974 shares of the company’s stock, valued at approximately $60,131,916. The trade was an 8.87% decrease in their position. Insiders have sold a total of 49,208 shares of company stock worth $10,167,739 in the last ninety days. Corporate insiders own 3.10% of the company’s stock.

Institutional Inflows and Outflows

Several hedge funds have recently made changes to their positions in MDB. Strategic Investment Solutions Inc. IL acquired a new position in MongoDB during the fourth quarter valued at approximately $29,000. Cloud Capital Management LLC bought a new stake in shares of MongoDB in the 1st quarter valued at about $25,000. NCP Inc. acquired a new stake in MongoDB during the 4th quarter valued at approximately $35,000. Hollencrest Capital Management bought a new position in MongoDB during the first quarter worth $26,000. Finally, Cullen Frost Bankers Inc. lifted its stake in shares of MongoDB by 315.8% in the 1st quarter. Cullen Frost Bankers Inc. now owns 158 shares of the company’s stock valued at $28,000 after purchasing an additional 120 shares during the period. 89.29% of the stock is owned by institutional investors.

About MongoDB

MongoDB, Inc., together with its subsidiaries, provides a general-purpose database platform worldwide. The company provides MongoDB Atlas, a hosted multi-cloud database-as-a-service solution; MongoDB Enterprise Advanced, a commercial database server for enterprise customers to run in the cloud, on-premises, or in a hybrid environment; and Community Server, a free-to-download version of its database, which includes the functionality that developers need to get started with MongoDB.

MMS • Robert Krzaczynski

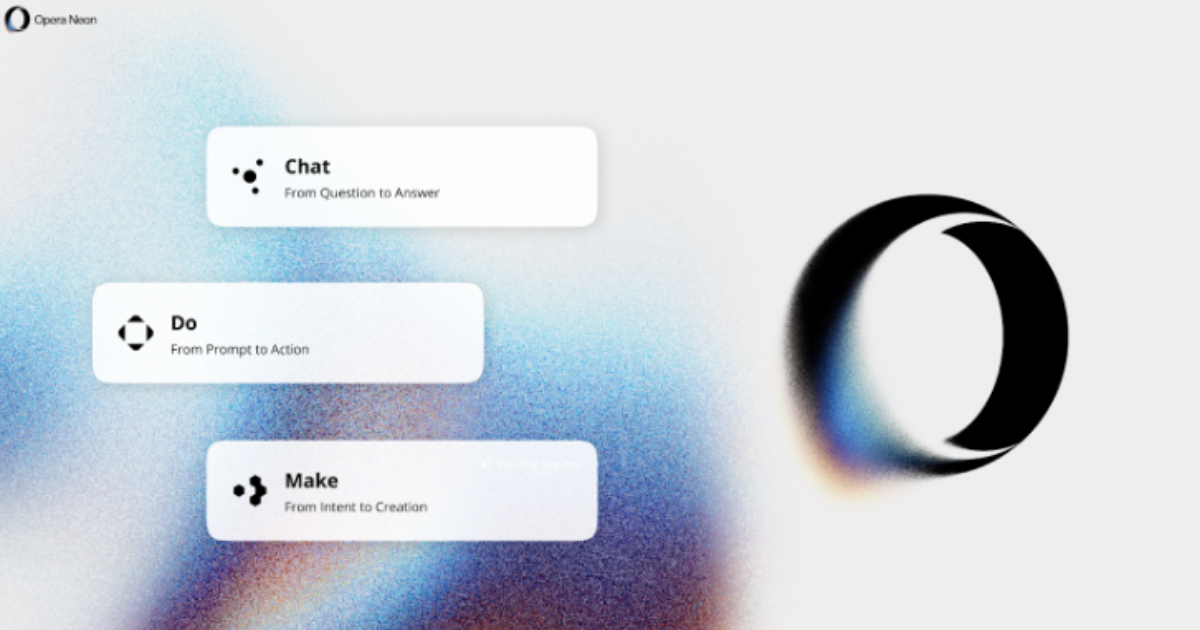

Opera has introduced Opera Neon, a new browser that goes beyond traditional web navigation by integrating AI agents capable of interpreting user intent, performing tasks, and supporting creative workflows. The launch reflects a move toward what Opera describes as “agentic browsing,” where the browser takes an active role in helping users accomplish goals, such as automating tasks or generating content, rather than simply displaying websites.

Neon is the result of several years of development and includes three core AI-driven functions: Chat, Do, and Make. The Chat function embeds a conversational AI assistant directly into the browser, enabling users to ask questions, look up information, or receive contextual summaries related to the page they’re viewing, without switching tabs or apps.

The Do agent, previously previewed under the name Browser Operator, is built to automate routine web tasks. It can fill out forms, search for travel bookings, or carry out online purchases by interpreting webpage structures and content. Importantly, Opera says these actions happen locally within the browser, reducing reliance on external servers and maintaining user privacy.

The third feature, Make, introduces generative capabilities. Users can ask Neon to create websites, reports, code snippets, or visual assets. These tasks are processed in a cloud-based virtual machine that runs independently, allowing projects to continue even if the user disconnects. This setup enables more complex, asynchronous workflows that traditional browsers are not equipped to handle.

The use of both local and cloud-based processing—local for basic tasks and cloud for more complex ones—has raised practical questions among users. A user on X asked:

Does the browser divide the AI agent into two operating modes? Can it be run locally or in a virtual machine?

Opera’s technical breakdown suggests that yes, the system uses both on-device and cloud-based agents depending on the task type and resource demands.

Henrik Lexow, a senior AI product director at Opera, framed the release as an invitation for experimentation:

We see it as a collaborative platform to shape the next chapter of agentic browsing together with our community.

The early response from the community is positive. Jitendra Gupta, a technical lead and AI enthusiast, wrote on LinkedIn:

Imagine your browser actually working for you instead of just waiting for commands. This feels like the start of a whole new era—Web 4.0—where our browser helps us think, create, and stay productive.

Opera Neon is offered as a premium subscription product, with early access now available via a waitlist at operaneon.com.

MMS • Artenisa Chatziou

QCon AI New York (December 16-17, 2025), from the team behind InfoQ and QCon, has announced its Program Committee to shape talks focused on practical AI implementation for senior software engineering teams. The committee comprises senior practitioners with extensive experience in scaling AI in enterprise environments.

The 2025 Program Committee includes:

- Adi Polak – director of advocacy and developer experience engineering at Confluent, and author on scaling machine learning. Adi brings expertise in data-intensive AI applications and the infrastructure challenges of production ML systems.

- Randy Shoup – SVP of engineering at Thrive Market, with previous leadership roles at eBay, Google, and Stitch Fix. Shoup’s background in building scalable, resilient systems will inform content on enterprise-grade AI architecture.

- Jake Mannix – technical fellow for AI & relevance at Walmart Global Tech, with experience at LinkedIn and Twitter. Mannix contributes expertise in applying AI at scale within large enterprise contexts.

- Wes Reisz – QCon AI New York 2025 chair, technical principal at Equal Experts, and 16-time QCon chair.

QCon AI will focus on practical strategies for software engineers and teams looking to implement and scale artificial intelligence within enterprise environments.

Many teams struggle to move AI from promising proofs of concept (PoCs) to robust, value-driving production systems. QCon AI is designed to help senior software engineers, architects, and team leaders navigate that transition.

The conference program will feature practitioner-led strategies and case studies from companies successfully scaling AI, with sessions covering:

- Strategic AI integration using proven architectural patterns

- Building production-grade enterprise AI systems for scale and resilience

- Connecting Dev, MLOps, Platform, and Data practices to accelerate team velocity

- Navigating compliance, security, cost, and technical debt constraints in AI projects

- Using AI for design, validation, and strategic decision-making

- Showing clear business impact and return on investment from AI initiatives

“QCon AI is specifically designed to help software engineers navigate these issues in AI adoption and integration, focusing on the applied use of AI in delivering software to production,” Reisz explained. “The goal is to help teams not only embrace these changes but ship better software.”

Early bird registration is available. Book your seat now.

GitLab 17.11 Enhances DevSecOps with Custom Compliance Frameworks and Expanded Controls

MMS • Craig Risi

On April 17, 2025, GitLab released version 17.11, introducing significant advancements in compliance management and DevSecOps integration. A standout feature of this release is the introduction of Custom Compliance Frameworks, designed to embed regulatory compliance directly into the software development lifecycle.

These frameworks allow organizations to define, implement, and enforce compliance standards within their GitLab environment. With over 50 out-of-the-box controls, teams can tailor frameworks to meet specific regulatory requirements such as HIPAA, GDPR, and SOC 2. These controls cover areas like separation of duties, security scanning, authentication protocols, and application configurations.

To create a custom compliance framework, as detailed in GitLab’s own post, users identify applicable regulations and map them to specific controls. Within GitLab’s Compliance Center, they can define new frameworks, add requirements, and select relevant controls. Once established, these frameworks can be applied to projects, ensuring consistent compliance across the organization.
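The mapping described above (regulations broken into requirements, each requirement tied to specific controls, and the resulting framework applied to projects) can be pictured as a small data model. The sketch below is purely illustrative: the class names, the `gaps` method, and the control identifiers are hypothetical and do not represent GitLab’s API or its built-in control names.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    """One regulatory requirement and the controls mapped to it."""
    name: str
    controls: list = field(default_factory=list)


@dataclass
class ComplianceFramework:
    """A named set of requirements that can be applied to projects."""
    name: str
    requirements: list = field(default_factory=list)

    def gaps(self, enabled_controls):
        """Return {requirement name: [controls the project has not enabled]}."""
        result = {}
        for req in self.requirements:
            missing = [c for c in req.controls if c not in enabled_controls]
            if missing:
                result[req.name] = missing
        return result


# Hypothetical framework; the control identifiers are made up for illustration.
framework = ComplianceFramework(
    name="Example SOC 2 baseline",
    requirements=[
        Requirement("Separation of duties", ["merge_request_approval_required"]),
        Requirement("Security scanning", ["sast_enabled", "dependency_scanning_enabled"]),
    ],
)

# Controls a hypothetical project currently has switched on.
project_controls = {"merge_request_approval_required", "sast_enabled"}
print(framework.gaps(project_controls))
```

Applying the same framework object to every project is what gives the consistency the article mentions: each project is checked against one shared definition, not a per-team checklist.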

Integrating compliance directly into the development workflow offers several key advantages. By automating compliance checks, teams can significantly reduce the manual effort typically required for tracking and documentation. This streamlining not only saves time but also ensures greater accuracy and consistency. Real-time monitoring of compliance status accelerates audit readiness, allowing organizations to respond quickly and efficiently to regulatory requirements. Furthermore, embedding compliance controls into every stage of development enhances the overall security posture, ensuring that security and regulatory standards are continuously enforced throughout the software delivery lifecycle.

With the release of Custom Compliance Frameworks, Ian Khor, a product manager at GitLab, highlighted the significance of this milestone, stating:

Big milestone moment – Custom Compliance Frameworks is now officially released in GitLab 17.11! This feature has been a long time coming, and I’m incredibly proud of the team that brought it to life.

Khor emphasized the collaborative effort across product, engineering, UX, and security teams to ensure that organizations can define, manage, and monitor compliance requirements effectively within GitLab.

Joel Krooswyk, CTO at GitLab, also expressed enthusiasm about the new features in GitLab 17.11, particularly the compliance frameworks:

Psst – hey – did you hear? GitLab 17.11 dropped today, and there are 3 huge things I’m excited to share. 1. Compliance frameworks. 50 of them, ready to pull into your projects.

In addition to compliance enhancements, GitLab 17.11 introduces over 60 improvements, including more AI features on GitLab Duo Self-Hosted, custom epic, issue, and task fields, CI/CD pipeline inputs, and a new service accounts UI. These updates aim to streamline development workflows and enhance overall productivity.

MMS • RSS

MongoDB, Inc. (MDB) popped following its Q1 FY26 earnings release, with shares surging more than 12% post-earnings. The beat-and-raise quarter came alongside stronger profitability and a bold $1 billion share buyback announcement, reigniting investor optimism in a stock that had recently struggled with macro and execution concerns.

This article will cover MongoDB’s business model, its competitive advantages, total addressable market (TAM), and the latest Q1 results to assess whether the recent performance supports or challenges my investment thesis.

What is MongoDB?

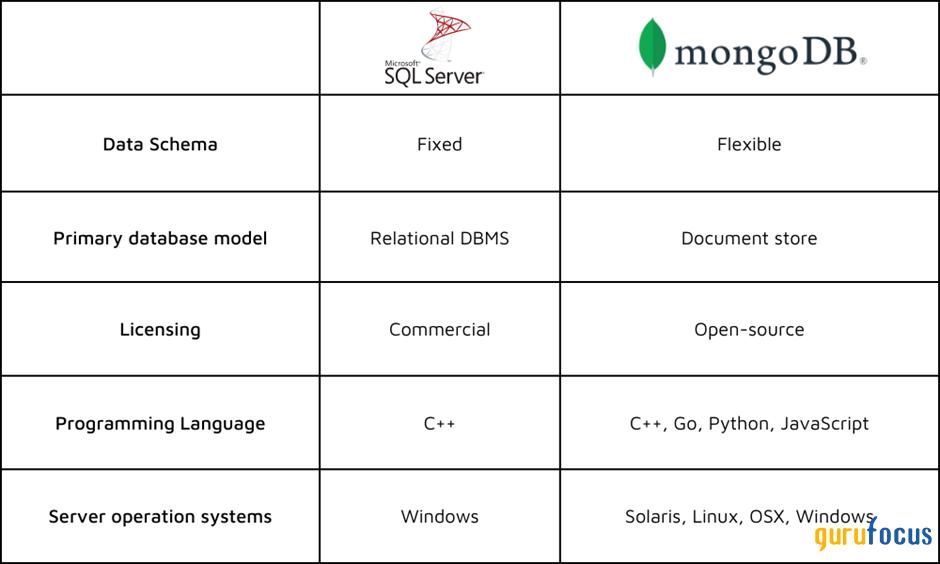

MongoDB is a leading provider of NoSQL database technology, offering a flexible, JSON-like document model instead of rigid relational tables. Its core products include MongoDB Atlas, a fully managed cloud database service, and MongoDB Enterprise Advanced, a self-managed on-premise solution for enterprises.

The company’s mission is to simplify and accelerate application development. By using MongoDB, developers can store and query diverse data types with ease, which speeds up project timelines and adapts to changing requirements better than traditional SQL databases. MongoDB’s platform has grown into a full-fledged developer data platform that includes capabilities like full-text search, analytics, mobile data sync, and now vector search for AI applications. As of the latest quarter, over 57,100 customers utilise MongoDB’s technology, reflecting its widespread adoption across various industries. In essence, MongoDB provides the plumbing behind modern applications, from web and mobile apps to IoT and AI systems, and its products aim to be the default database infrastructure for new software projects.

Competitive Advantage and TAM

Source: Ardentisys

MongoDB’s approach gives developers far more flexibility than traditional SQL databases. While traditional SQL databases follow a rigid model, where data is stored in structured tables with fixed schemas, MongoDB’s NoSQL model stores data in flexible, JSON-like documents. This difference defines how quickly teams can iterate, adapt, and scale.

In a SQL environment, changing the schema often requires complex migrations. MongoDB, on the other hand, allows for dynamic fields, nested arrays, and schema evolution with minimal disruption.
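To make the contrast concrete, here is a minimal sketch of schema evolution in a document model. It uses plain Python dictionaries and a list as stand-ins for documents and a collection, so it runs without a database; it is not the MongoDB driver API, and the `find` helper and field names are hypothetical.

```python
# Plain dicts stand in for JSON-like documents; a list stands in for a collection.
users = [
    {"_id": 1, "name": "Ada", "email": "ada@example.com"},
    # A later document adds a nested array and a new field.
    # Older documents are left untouched: no migration step is required.
    {
        "_id": 2,
        "name": "Grace",
        "email": "grace@example.com",
        "addresses": [{"city": "Arlington", "primary": True}],
        "loyalty_tier": "gold",
    },
]


def find(collection, **criteria):
    """Return documents whose top-level fields equal every given criterion."""
    return [
        doc for doc in collection
        if all(doc.get(key) == value for key, value in criteria.items())
    ]


# Querying a field that only some documents have simply skips the others.
gold_users = find(users, loyalty_tier="gold")
print([doc["name"] for doc in gold_users])  # prints "['Grace']"
```

In a relational table the equivalent change would typically mean adding a column and a separate addresses table before the first new record could be stored.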

Scalability is another key distinction. Relational databases typically scale vertically, requiring more powerful hardware to handle growing workloads. MongoDB was designed to scale horizontally, distributing data across multiple nodes to support large-scale, real-time applications in cloud environments.
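Horizontal scaling means spreading records across several nodes so that no single machine has to hold or serve all of the data. A minimal sketch of one common way to do that, hash-based partitioning on a key, is shown below. It illustrates the general technique only and is not MongoDB’s sharding implementation; the function names and keys are assumptions made for the example.

```python
import hashlib


def shard_for(key: str, num_shards: int) -> int:
    """Map a key to a shard index using a stable hash of the key."""
    if num_shards <= 0:
        raise ValueError("num_shards must be positive")
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def distribute(keys, num_shards: int) -> dict:
    """Group keys by the shard each one is assigned to."""
    shards = {index: [] for index in range(num_shards)}
    for key in keys:
        shards[shard_for(key, num_shards)].append(key)
    return shards


# Hypothetical customer IDs spread over three nodes.
customer_ids = [f"customer-{n}" for n in range(12)]
placement = distribute(customer_ids, num_shards=3)
for shard, members in placement.items():
    print(shard, len(members))
```

Because the hash is deterministic, any node can compute where a given key lives without consulting a central lookup table. The trade-off of this naive modulo scheme is that changing the number of shards reassigns most keys.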

These architectural differences make MongoDB more aligned with modern development needs, particularly in cases where speed, agility, and scalability are critical. As a result, NoSQL databases like MongoDB have become the preferred choice for a growing number of use cases, from web and mobile apps to AI and IoT platforms.

These differences matter because MongoDB’s competitive advantage stems from its modern architecture and developer-centric approach in a massive market. The database management system market is estimated at over $85 billion, yet much of it still relies on decades-old relational database technology. The global NoSQL market, at approximately $10 billion in 2024, is still small by comparison but is expected to grow at a CAGR of 29.50% between 2025 and 2034.
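The growth figure above can be turned into a rough projection with the standard compound-growth formula. This sketch assumes the 2024 figure of about $10 billion is the base and that the 29.50% rate compounds once a year for the ten years from 2025 through 2034. The article gives no projected end value, so the result is an illustration of the formula and not a sourced forecast.

```python
def project_market_size(base: float, cagr: float, years: int) -> float:
    """Compound `base` at an annual growth rate `cagr` for `years` years."""
    if years < 0:
        raise ValueError("years must be non-negative")
    return base * (1.0 + cagr) ** years


# Assumed inputs: $10B base (2024), 29.50% CAGR, 10 annual periods (2025-2034).
projected = project_market_size(base=10.0, cagr=0.295, years=10)
print(f"Projected NoSQL market size: ${projected:.1f}B")
```

Under these assumptions the market would end the period above the roughly $85 billion quoted for today’s overall database market, which shows how sensitive such projections are to the assumed rate and horizon.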

MongoDB’s document model natively handles both structured and unstructured data and maps more naturally to how developers think in code. This flexibility allows companies to represent the messiness of real-world data and evolve their schemas without costly migrations. Applications built on MongoDB can iterate faster and scale more easily because the database does not require rigid schemas or complex join operations. According to management, this fundamental architectural advantage translates to faster time-to-market, greater agility, and the ability to scale without re-architecting, which is why customers increasingly entrust MongoDB with mission-critical workloads.

Another pillar of MongoDB’s moat is its developer mindshare and ecosystem. The company has invested heavily in making its platform accessible, from an open-source foundation to a free-tier Atlas offering and a wide array of developer tools and integrations. This approach creates a self-reinforcing dynamic: the more developers adopt MongoDB, the richer its ecosystem becomes, through community-driven documentation, integrations, and support, which in turn attracts even more developers. Over time, these network effects deepen the platform’s defensibility.

Failing to attract developers can define a platform’s fate. A great example is Windows Phone, which failed to convince developers to build for it. As Ben Thompson said, The number one reason Windows Phone failed is because it was a distant third in a winner-take-all market; this meant it had no users, which meant it had no developers, which meant it had no apps, which meant it had no users. This was the same chicken-and-egg problem that every potential smartphone competitor has faced since, and a key reason why there are still only two viable platforms.

Each new generation of startups and IT projects choosing MongoDB adds to a virtuous cycle: those applications grow, require bigger paid deployments, and demonstrate MongoDB’s reliability at scale, attracting even more adoption. This bottom-up adoption complements MongoDB’s direct sales focus on enterprises, enabling it to grab market share in a large, under-penetrated market. Management frequently notes that MongoDB still has a relatively small fraction of the overall database market, leaving ample room for growth as organizations modernize their data infrastructure.

Crucially, MongoDB’s advantage is being reinforced as industry trends shift towards the company’s strengths. The rise of cloud-native computing, microservices, and AI-driven applications all favor flexible, distributed data stores. MongoDB’s platform was built for cloud, distributed, real-time, and AI-era applications, whereas many competitors are now scrambling to bolt on similar capabilities. In fact, some legacy database vendors have started retrofitting features like JSON document support or vector search onto their products as afterthoughts, which MongoDB’s CEO characterizes as a passive admission that MongoDB’s approach is superior.

New Technology

In keeping with its focus on staying at the forefront of modern application development, MongoDB has aggressively embraced the AI wave. A key development was the acquisition of Voyage AI, an AI startup specializing in embedding generation and re-ranking models for search. Announced in early 2025, the Voyage AI deal (a purchase of more than $200 million) was aimed at redefining the database for the AI era by baking advanced AI capabilities directly into MongoDB’s platform. By integrating Voyage’s state-of-the-art embedding and re-ranking technology, MongoDB enables its customers to feed more precise and relevant context into AI models, significantly improving the accuracy and trustworthiness of AI-driven applications. In practical terms, this means a company using MongoDB can now do things like generate vectors (embeddings) from its application data, perform semantic searches, and retrieve context for an AI model’s queries, all within MongoDB itself. Developers no longer need a separate specialized vector database or search system.

MongoDB is already showing progress from this integration. The company released Voyage 3.5, an updated set of AI models, which reportedly outperform other leading embedding models while reducing storage requirements by over 80%. This is a significant improvement in efficiency and accuracy, making AI features more cost-effective at scale for MongoDB users. It also helps solve the AI hallucination problem by grounding LLMs in a trusted database, thereby increasing output accuracy.

Beyond Voyage, MongoDB launched broader AI initiatives such as the MongoDB AI Innovators Program (in partnership with major cloud providers and AI firms) to help customers design and deploy AI-powered applications. Early pilot programs using MongoDB’s AI features have yielded promising results, dramatically cutting the time and cost needed to modernize legacy applications with AI assistance.

Financial Results (Q1 FY2026)

MongoDB’s Q1 FY2026 delivered strong results above expectations, regaining the company’s momentum. Revenue for Q1 came in at $549.0 million, a 22% increase year-over-year (YoY), and comfortably ahead of Wall Street’s $528 million consensus estimate. Atlas revenue grew 26% YoY and made up 72% of total revenue in Q1, reflecting strong usage trends. While other segments like Enterprise Advanced and services also posted growth, Atlas remains the primary driver of MongoDB’s momentum. The company added approximately 2,600 net new customers in the quarter, bringing the total customer count to over 57,100. This was the highest quarterly addition in six years, suggesting MongoDB’s strategy to focus on higher-value clients and strong self-service adoption is paying off. In the words of CEO Dev Ittycheria, we got off to a strong start in fiscal 2026 as MongoDB executed well against its large opportunity.

MongoDB has shown significant improvements in profitability and efficiency, although it’s still unprofitable on a GAAP basis. The company recorded non-GAAP operating income of $87.4 million in Q1, which represents a 16% non-GAAP operating margin. This is a jump from the 7% non-GAAP operating margin a year ago. Operating expenses grew more slowly than planned, particularly due to more measured hiring, which contributed to the margin outperformance. Non-GAAP net income was $86.3 million, or $1.00 per diluted share, doubling non-GAAP EPS from the prior-year period.

On a GAAP basis, MongoDB reported a net loss of $37.6 million ($0.46 per share) for the quarter, which is still an improvement from the $80.6 million loss ($1.10 per share) a year earlier. The GAAP loss was much narrower than expected, with analysts forecasting a loss of around $0.85 per share. Gross margins remain healthy but are shrinking. Q1 gross margin was 71.2%, 160 basis points lower than last year’s 72.8% due to the revenue mix.
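The margin figures in this section can be checked with a few lines of arithmetic. The sketch below uses only numbers quoted in the text; the function and variable names are illustrative.

```python
# Figures quoted in the text (USD millions and percentages).
revenue = 549.0
non_gaap_operating_income = 87.4
gross_margin_now = 71.2    # percent, Q1 FY2026
gross_margin_prior = 72.8  # percent, same quarter a year earlier


def margin_pct(numerator: float, denominator: float) -> float:
    """Return numerator as a percentage of denominator."""
    return numerator / denominator * 100


def change_in_basis_points(current_pct: float, prior_pct: float) -> float:
    """Convert a percentage-point change to basis points (1 pp = 100 bps)."""
    return (current_pct - prior_pct) * 100


operating_margin = margin_pct(non_gaap_operating_income, revenue)
gross_margin_change_bps = change_in_basis_points(gross_margin_now, gross_margin_prior)

print(f"Non-GAAP operating margin: {operating_margin:.1f}%")
print(f"Gross margin change: {gross_margin_change_bps:.0f} bps")
```

The operating margin rounds to about 16%, and the gross margin decline works out to 160 basis points.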

Despite MongoDB’s strong top-line performance, stock-based compensation (SBC) remains elevated, consuming 24% of total revenue in Q1. For a company whose revenue growth is expected to slow to the low double digits and that is still unprofitable on a GAAP basis, this level of dilution is concerning.

MongoDB’s cash flow generation and balance sheet also underscore its improving efficiency. Operating cash flow in Q1 rose to approximately $110 million, up from $64 million a year ago, while free cash flow nearly doubled to $106 million. The improvement was driven by higher operating profits and solid collections, leading MongoDB to end the quarter with $2.5 billion in cash and short-term investments and no debt. In fact, boosted by the quarter’s results, MongoDB’s Board of Directors authorized an additional $800 million in share repurchases, on top of the $200 million authorized last quarter, bringing the total program to $1.0 billion. This is a strong vote of confidence in the company’s future. It’s also a shareholder-friendly move to offset dilution from stock-based compensation and the Voyage deal. Due to a blackout period linked to the CFO transition, no shares were repurchased in Q1, but the company indicated buybacks would begin shortly. To put it in perspective, this share buyback is roughly 5% of MongoDB’s market capitalization.

Guidance

Looking ahead, MongoDB management struck an optimistic tone and raised their outlook for the full fiscal year. Citing a strong start to the year, the company increased its FY2026 revenue guidance by $10 million to a range of $2.25 to $2.29 billion. This implies roughly 13% YoY growth at the midpoint, and it incorporates some conservatism for potential macro headwinds in the second half (including an expected $50 million headwind from lower multi-year license revenue in FY26). Management also boosted its profitability outlook, raising the full-year non-GAAP operating income guidance by 200 basis points in margin. The updated guidance calls for FY26 non-GAAP operating income of $267 to $287 million and non-GAAP EPS of $2.94 to $3.12. Previously, the company had expected $210 to $230 million in non-GAAP operating income (EPS of $2.44 to $2.62) for the year, so this upward revision is substantial.

MongoDB appointed Mike Berry as its new Chief Financial Officer in late May. Berry, a seasoned executive with over 30 years of experience and prior CFO roles at NetApp, McAfee, and FireEye, replaces Michael Gordon, who stepped down earlier this year after nearly a decade with the company. Berry’s track record in scaling enterprise software businesses and driving operational discipline aligns well with MongoDB’s current phase of improving margins and shareholder returns.

Valuation

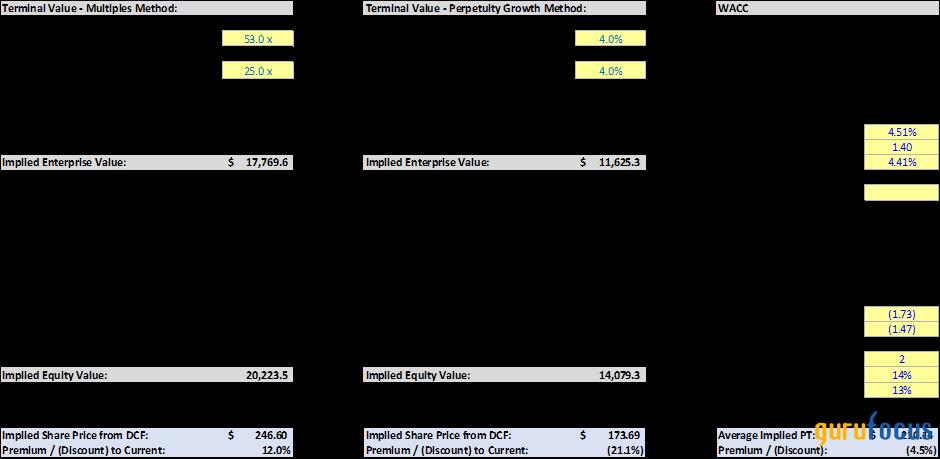

MongoDB’s stock price has rallied on the back of its strong Q1 report, reflecting renewed investor enthusiasm. Even after this jump, it’s still undervalued in some multiples compared to its peers.

Source: Author

Valuation multiples paint a mixed picture. MongoDB trades at the lowest price-to-sales and price-to-gross-profit ratios among its software peers, which could reflect its strong gross margins. However, it may also signal growing investor skepticism about the company’s long-term growth trajectory and ability to convert usage into durable profitability.

Meanwhile, MongoDB’s price-to-earnings-growth ratio is the highest in the group, primarily because its revenue growth is expected to decelerate into the low double digits. In contrast, peers like Snowflake (SNOW) and Datadog (DDOG) continue to command premium valuations, backed by faster top-line expansion and stronger free cash flow margins.

Another way to assess the opportunity is through a discounted cash flow analysis that blends multiple-based and perpetuity growth assumptions. I estimate a fair value of around $210 per share, suggesting the stock is fairly valued after the post-earnings rally.
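For readers unfamiliar with the mechanics, here is a minimal sketch of one way a discounted cash flow model can blend an exit-multiple terminal value with a perpetuity-growth terminal value. This is not the author's model: the equal weighting, the function names, and every input in the example call are hypothetical placeholders chosen for illustration.

```python
from typing import Sequence


def present_value(cash_flows: Sequence[float], discount_rate: float) -> float:
    """Discount year-end cash flows (year 1, 2, ...) back to today."""
    return sum(cf / (1 + discount_rate) ** year
               for year, cf in enumerate(cash_flows, start=1))


def blended_fair_value_per_share(
    cash_flows: Sequence[float],
    discount_rate: float,
    perpetual_growth: float,
    exit_multiple: float,
    net_cash: float,
    shares_outstanding: float,
    multiple_weight: float = 0.5,  # assumed 50/50 blend of the two methods
) -> float:
    """Blend two terminal-value estimates and return equity value per share."""
    if discount_rate <= perpetual_growth:
        raise ValueError("discount_rate must exceed perpetual_growth")
    final_cf = cash_flows[-1]
    # Perpetuity-growth (Gordon growth) terminal value.
    tv_perpetuity = final_cf * (1 + perpetual_growth) / (discount_rate - perpetual_growth)
    # Multiple-based terminal value.
    tv_multiple = final_cf * exit_multiple
    terminal_value = (multiple_weight * tv_multiple
                      + (1 - multiple_weight) * tv_perpetuity)
    pv_terminal = terminal_value / (1 + discount_rate) ** len(cash_flows)
    equity_value = present_value(cash_flows, discount_rate) + pv_terminal + net_cash
    return equity_value / shares_outstanding


# Hypothetical inputs in USD millions and millions of shares; not MongoDB data.
example = blended_fair_value_per_share(
    cash_flows=[100.0, 120.0, 140.0],
    discount_rate=0.10,
    perpetual_growth=0.03,
    exit_multiple=20.0,
    net_cash=500.0,
    shares_outstanding=50.0,
)
print(f"Illustrative fair value per share: {example:.2f}")
```

The result is highly sensitive to the discount rate, the terminal growth rate, and the exit multiple, which is why estimates like the one above are best read as a range rather than a point.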

Source: Author

Finally, in the first quarter of the calendar year, MongoDB saw the same number of guru sellers as buyers. Baillie Gifford, Jefferies Group, and Paul Tudor Jones reduced their positions in the stock, with some trimming up to 98%. On the other hand, Lee Ainslie created a new position, while Steven Cohen and PRIMECAP Management added to their positions significantly.

Risks

MongoDB’s long-term opportunity remains compelling, but several risks warrant attention. The most immediate is the sensitivity of Atlas revenue to macroeconomic conditions. Because Atlas follows a usage-based pricing model, any slowdown in customer consumption, whether from tighter IT budgets or reduced application traffic, can quickly translate into revenue deceleration. In fact, management acknowledged some softness in April before usage rebounded in May, prompting them to maintain a cautious full-year outlook.

A second area of concern lies in the company’s non-Atlas license revenue, which is expected to decline at a high single-digit rate this year. This includes a roughly $50 million headwind from multiyear license renewals that took place in the prior year. As customers continue shifting to cloud-based solutions, these traditional license revenues may remain volatile and difficult to predict, creating a drag on MongoDB’s overall subscription growth in the short term.

Lastly, competition from major cloud providers and open-source alternatives remains persistent. AWS DocumentDB, Google Firestore, and Postgres-based document stores represent credible threats. These platforms are often bundled with broader cloud services or offered at lower price points, creating pricing pressure. MongoDB’s advantage lies in its developer-friendly architecture and integrated tooling, but maintaining that lead will require ongoing innovation and execution.

Final Take

MongoDB delivered a strong quarter, regaining some favour with Wall Street following two disappointing earnings periods. The company added its highest number of net new customers in six years, demonstrating continued developer interest and adoption. However, management is forecasting a revenue slowdown, with low double-digit growth expected. SBC also remains elevated, a concern given MongoDB’s ongoing GAAP unprofitability. I want to see continued progress on profitability, margin expansion, and more disciplined equity compensation practices, particularly as the company matures. This quarter was a solid step in the right direction, but I need to see more. I’ll hold my position for another quarter or two before deciding whether MongoDB can sustainably deliver on its potential.

AWS Introduces Open Source Model Context Protocol Servers for ECS, EKS, and Serverless

MMS • Steef-Jan Wiggers

AWS has released a set of open-source Model Context Protocol (MCP) servers on GitHub for Amazon Elastic Container Service (Amazon ECS), Amazon Elastic Kubernetes Service (Amazon EKS), and AWS Serverless. These are specialized servers that enhance the capabilities of AI development assistants, such as Amazon Q Developer, by providing them with real-time, contextual information specific to these AWS services.

While Large Language Models (LLMs) within AI assistants typically rely on general public documentation, these MCP servers offer current context and service-specific guidance. Hence, developers can receive more accurate assistance and proactively prevent common deployment errors when building and deploying applications on AWS.
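To make the idea concrete, the sketch below shows the general shape of an MCP-style tool server. MCP messages are JSON-RPC 2.0, and a server answers `tools/list` and `tools/call` requests. This is not the AWS implementation: the `get_cluster_state` tool, its hard-coded data, and the in-process `handle_request` function are hypothetical, and a real server would use an MCP SDK and a transport such as stdio.

```python
import json

# Hypothetical, hard-coded stand-in for live service state.
FAKE_CLUSTER_STATE = {"demo-cluster": {"status": "ACTIVE", "running_tasks": 3}}


def get_cluster_state(cluster_name: str) -> str:
    """Return the (fake) state of a cluster as a JSON string."""
    state = FAKE_CLUSTER_STATE.get(cluster_name)
    if state is None:
        raise KeyError(f"unknown cluster: {cluster_name}")
    return json.dumps(state)


# Registry of tools the assistant may discover and call.
TOOLS = {
    "get_cluster_state": {
        "description": "Return current status for a named cluster.",
        "inputSchema": {
            "type": "object",
            "properties": {"cluster_name": {"type": "string"}},
            "required": ["cluster_name"],
        },
        "handler": get_cluster_state,
    }
}


def handle_request(request: dict) -> dict:
    """Answer a single JSON-RPC 2.0 request for tools/list or tools/call."""
    request_id = request.get("id")
    method = request.get("method")
    if method == "tools/list":
        result = {"tools": [
            {"name": name, "description": tool["description"],
             "inputSchema": tool["inputSchema"]}
            for name, tool in TOOLS.items()
        ]}
    elif method == "tools/call":
        params = request.get("params", {})
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return {"jsonrpc": "2.0", "id": request_id,
                    "error": {"code": -32602, "message": "Unknown tool"}}
        try:
            text = tool["handler"](**params.get("arguments", {}))
            result = {"content": [{"type": "text", "text": text}], "isError": False}
        except Exception as exc:  # report tool failures back to the assistant
            result = {"content": [{"type": "text", "text": str(exc)}], "isError": True}
    else:
        return {"jsonrpc": "2.0", "id": request_id,
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": request_id, "result": result}


response = handle_request({
    "jsonrpc": "2.0", "id": 1, "method": "tools/call",
    "params": {"name": "get_cluster_state",
               "arguments": {"cluster_name": "demo-cluster"}},
})
print(json.dumps(response, indent=2))
```

The assistant first lists the available tools, then calls one with arguments, and feeds the returned text into the model as context. That is how current, service-specific state can supplement what the model learned from public documentation.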

Hariharan Eswaran concluded in a Medium blog post:

The launch of MCP servers is about empowering developers with tools that keep up with the complexity of modern cloud-native apps. Whether you’re deploying containers, managing Kubernetes, or going serverless, MCP servers let your AI assistant manage infrastructure like a team member — not just a chatbot.

Furthermore, according to the company, these open-source solutions allow developers to accelerate application development by using up-to-date knowledge of AWS capabilities and configurations directly within their integrated development environment (IDE) or command-line interface (CLI). The key features and benefits include:

- Amazon ECS MCP Server: Simplifies containerized application deployment to Amazon ECS by configuring necessary AWS resources like load balancers, networking, auto-scaling, and task definitions using natural language. It also aids in cluster operations and real-time troubleshooting.

- Amazon EKS MCP Server: Provides AI assistants with up-to-date, contextual information about specific EKS environments, including the latest features, knowledge base, and cluster state. This enables more tailored guidance throughout the Kubernetes application lifecycle.

- AWS Serverless MCP Server: Enhances the serverless development experience by offering comprehensive knowledge of serverless patterns, best practices, and AWS services. Integration with the AWS Serverless Application Model Command Line Interface (AWS SAM CLI) streamlines function lifecycles and infrastructure deployment. It also provides contextual guidance for Infrastructure as Code decisions and best practices for AWS Lambda.

The announcement details practical examples of using the MCP servers with Amazon Q CLI to build and deploy applications for media analysis (serverless and containerized on ECS) and a web application on EKS, all through natural language commands. The examples showcase the AI assistant’s ability to identify necessary tools, generate configurations, troubleshoot errors, and even review code based on the contextual information provided by the MCP servers.

The announcement has already garnered positive attention from the developer community. Maniganda, commenting on a LinkedIn post, expressed enthusiasm:

The ability for AI to interact with AWS compute services in real-time will undoubtedly streamline operations and enhance efficiency. I’m looking forward to seeing how the open-source framework evolves and the impact it will have on Kubernetes management.

Users can get started by visiting the AWS Labs GitHub repository for installation guides and configurations. The repository also includes MCP servers for transforming existing AWS Lambda functions into AI-accessible tools and for accessing Amazon Bedrock Knowledge Bases. Deep-dive blogs are available for those wanting to learn more about the individual MCP servers for AWS Serverless, Amazon ECS, and Amazon EKS.

MMS • Sergii Gorbachov

Transcript

Shane Hastie: Good day, folks. This is Shane Hastie for the InfoQ Engineering Culture Podcast. Today I’m sitting down with Sergii Gorbachov. Sergii, welcome. Thank you for taking the time to talk to us today.

Sergii Gorbachov: Thank you. Thank you for inviting me.

Introductions [01:02]

Shane Hastie: We met because you recently gave a really interesting talk at QCon San Francisco. Do you want to just give us the high-level picture of that talk?

Sergii Gorbachov: Sounds good. The project that I presented was around code migration. It was a migration project that took about 10 months.

We moved from Enzyme to React Testing Library. Those are the libraries that help you create tests to test React.

The interesting piece there was that I combined a traditional approach, using an AST or Abstract Syntax Tree, with an LLM. With the traditional and new approaches together, I was able to save a lot of engineering hours and finish this project a lot faster than initially was planned.

Shane Hastie: Real-world, hands-on implementation of the large language models in production code bases. Before we go any further, who is Sergii?

Sergii Gorbachov: Sure, yes, I can talk more about myself. My name is Sergii Gorbachov, and I am a staff engineer at Slack.

I’m part of the Developer Experience Organization, and I’m a member of the front-end test frameworks team, so I deal with anything front-end testing related.

Shane Hastie: What got you interested even, in using the LLM models?

What Got You Into Using AI/LLMs? [02:30]

Sergii Gorbachov: Well, first of all, of course hype. You go on LinkedIn, or you go to any talks or conferences, you see that AI is very prominent. I think, everyone should probably learn about new technologies, so that was the initial push for me.

Also, before Slack, I worked at a fintech company, it was called Finn AI, where I built a testing framework for their chatbot.

I was already acquainted with some of the conversational systems that use artificial intelligence, not large language models, but regular more typical models.

Then of course, there is the reality of working as a front-end or software development engineer in test, working with front-end technologies that change quite often.

I think JavaScript is notorious for all of these changes, and the libraries change so drastically that there are so many breaking changes that you need to, in our case, rewrite 20,000 tests.

Doing it manually would take too long. We calculated it would be about 10,000 to 15,000 engineering hours if we went with the manual conversion and used developer time for that.

At that time, Anthropic came out with one of their LLMs, large language models, and one of the use cases that people were talking about was code generation and conversion, so that’s why we decided to try it.

Of course, at that time they were not very popular. There were not too many use cases that were successful, so we had to just try it out.

To be honest, we were desperate, because it was that much work, and we had to do it and had to help ourselves.

Shane Hastie: What were the big learnings?

Key Learnings from AI Implementation [04:18]

Sergii Gorbachov: I’d say, one of the biggest learnings is that AI by itself was not a very successful tool.

I saw that it was an over-hyped technology. Even when we used AI by itself to convert code A to code B, in our case from one framework to another framework in the same language, so the scope was large but not wildly large, it was still not performing well.

We had to control the flow. We had to collect all the context ourselves, and we also had to use the conventional, traditional approaches together with AI.

I think, that’s the main biggest learning, is that our traditional common things that we have been using for decades are still relevant, and AI does not displace them completely, it only complements them, it’s just another tool.

It’s useful, but it cannot completely replace what we’ve been doing before.
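As an illustration of combining a deterministic AST pass with an LLM, here is a minimal sketch. It is a Python stand-in, not the Enzyme-to-RTL tooling discussed in the interview, which targeted JavaScript tests. The rename table, the `NEEDS_LLM` set, the prompt text, and the `llm` callable are all hypothetical, and no real LLM API is called.

```python
import ast
from typing import Callable, List, Optional, Tuple

# Hypothetical one-to-one renames that a deterministic rule can handle.
KNOWN_RENAMES = {"assertEquals": "assertEqual", "assertNotEquals": "assertNotEqual"}
# Hypothetical old API names with no mechanical replacement.
NEEDS_LLM = {"assertItemsEqual"}


class RuleBasedMigrator(ast.NodeTransformer):
    """Apply safe renames and record the spots a rule cannot convert."""

    def __init__(self) -> None:
        self.unresolved: List[Tuple[int, str]] = []

    def visit_Attribute(self, node: ast.Attribute) -> ast.Attribute:
        self.generic_visit(node)
        if node.attr in KNOWN_RENAMES:
            node.attr = KNOWN_RENAMES[node.attr]
        elif node.attr in NEEDS_LLM:
            self.unresolved.append((node.lineno, node.attr))
        return node


def build_prompt(source: str, unresolved: List[Tuple[int, str]]) -> str:
    """Collect the context ourselves instead of leaving the model to guess."""
    notes = "\n".join(f"- line {line}: replace `{name}`" for line, name in unresolved)
    return f"Convert the remaining legacy calls.\n{notes}\n\nCode:\n{source}"


def migrate(source: str, llm: Optional[Callable[[str], str]] = None):
    """Run the AST pass first; hand only the leftovers to the LLM."""
    migrator = RuleBasedMigrator()
    tree = migrator.visit(ast.parse(source))
    converted = ast.unparse(tree)  # requires Python 3.9+
    if migrator.unresolved and llm is not None:
        converted = llm(build_prompt(converted, migrator.unresolved))
    return converted, migrator.unresolved


print(migrate("self.assertEquals(total, 3)"))
```

The design choice mirrors the point made above: the conventional pass does everything it can deterministically, and the model only sees the remainder along with explicit context about what still needs converting.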

Shane Hastie: What does it change? Particularly in how people interact and how our roles as developers change?

How Developer Roles Are Changing [05:29]

Sergii Gorbachov: Sure. In this specific example for Enzyme to RTL conversion, I’d say, the biggest part of AI was the generation part and large language models. Those are generative models, and that’s where we saw that there were no other tools available in the industry.

Usually that role is done by actual developers, where you, for example, convert something, you use those tools manually, and then you have to write the code.

That piece is now more automated, and in our case, for our project, was done by the LLMs. The developers’ role shifted more to reviewing, fixing, verifying, so it was more a validation part that was done by the developers.

Shane Hastie: One of the consistent things that I’m hearing in the industry, and in almost any role adopting generative AI, is that shift from author to editor.

That is a mindset shift. If you’re used to being the author, what does it take to become the editor, to be that reviewer rather than the creator?

Sergii Gorbachov: Well, that’s more of an extreme use case. Maybe it exists in real life, but I would say, realistically, you are a co-author.

Yes, you’re a co-author with the AI system, and you still have to write code, you definitely spend a lot more time reviewing the code and guiding the system, rather than writing everything yourself.

In that respect, I don’t think that I personally, in my development experience, I don’t miss that part, writing some of the scaffolding or code that is very straightforward, that I can just maybe copy and paste from other sources.

Now, I can just ask the LLM to do that easy bit for me, and go write the code myself that is more complex, where more thinking is necessary.

Yes, definitely the role of a developer, at least in my experience, has shifted to more of a co-author and a person who drives this process.

To a certain extent, it’s still also very empowering, because I’m in control of everything, but I’m not the person who does all the work.

Shane Hastie: We’ve been abstracting you further and further away from the underlying bare metal, so to speak. Is this just another abstraction layer, or is there something different?

AI as the Next Abstraction Layer [08:15]

Sergii Gorbachov: I think it’s definitely another abstraction layer on top of our regular work, or another layer, how we can interact with various coding languages or systems, but it could be the final one.

What is going above just natural language? Because that’s how we interact with the models. You use natural language to build tools, so the next step is just implanting it in our brains, and then controlling them with our minds, which I don’t think will happen.

The difference I think, with previous technologies that would change how developers work, is that this is the final level and we are able to use tools that we use in everyday life, like language or natural language, I guess.

This is the key that is so easy and sometimes it’s, I guess, it may be democratizing the process of writing software, because you don’t really need to know some of those hardcore algorithms, or maybe sometimes learn how a specific programming language works.

Rather, you need to understand the concepts, the systems, as something more abstract, and I guess intellectually challenging.

I see how many people changed in terms of how they write code, let’s say, managers, who typically do not write code, but they possess all of this very interesting information.

For example, what qualities of a system are important, so they can codify that knowledge and then an AI system will just code it for them.

Of course, there are some limitations of what an AI system can do, but the key here is that it enables some people, especially those who possess some of the knowledge, but they don’t know the programming language.

Shane Hastie: Thinking of developer education, what would you say to a junior developer today? What should they learn?

Advice for Junior Developers [10:16]

Sergii Gorbachov: My background is in social sciences and humanities, so maybe this whole shift fits me very well, because I can operate at a higher level where you think about, you take a system, you break it down, and when for example, in humanities, you always look at the very non-deterministic systems, let’s say languages or things that are more related to humans, and it’s very hard to pinpoint what’s going to happen next, or how the system behaves.

I would suggest focusing more on system analysis or understanding, I guess, some of the … Or taking maybe more humanities classes, that help you analyze very complex things that we deal with every day, like talking to other people or relationships, psychological courses.

Then, that would give the ability or this apparatus to handle something so non-deterministic, like dealing with an LLM, and being able to create the right prompts that would generate good code.

Shane Hastie: If we think of the typical CI pipeline series of steps, how does that change? What do we hand over to the tools, and what do we still control?

Impact on CI/CD and Development Workflows [11:47]

Sergii Gorbachov: I think, we’re still controlling the final product, and we still I think, have the ownership of the code that we produce, regardless of what tools we use, AI or no AI tools.

We still have to be responsible. We cannot just generate a lot of code, and today generation is the easiest part. The most difficult part is that you have generated so much code that you don’t know what it does, you don’t know how to validate it.

Long-term, it could be problematic, because there is a lot of duplication, some of the abstractions are not created. Long-term, I think we should still think about code quality, and control what we generate and what code is produced.

As for the whole experience, human experience, does it change? With CI systems in particular, they have served very well for us to automate certain tasks.

Let’s say you create a PR, you write your code and all of those tests and linters run there, and one of those, let’s say, linters on steroids, could be an AI system that would just check our code.

It would probably not change too much of our work today, but it will be able to provide us extra feedback that we should act on. That’s I think, the reality right now.

Long-term, for example, another initiative that I’ve been working on, is test generation, and test generation is a part that no other tools can do.

Let’s say, a developer or you or me, create a piece of code and then you do not cover it with tests.

In that case, you can hook up an AI system that would generate the tests, suggest it for you, and maybe create a background job that you would be able to come back later, in one day or two days, and fix those tests or add them.

Especially for features that are not production facing, let’s say, it’s a prototype. It changes how we might be doing our job, especially for testing, where it could switch how we view, for example, some of the tasks, if they can be outsourced to an AI system, and AI systems usually take longer than 10 to 20 minutes to produce some artifact.

Then, we would just change our way of working. Rather than doing everything right away in an iterative fashion, we would just create the bare bones and then ask all of those systems to do something for us in the cloud, in CI systems, and come back the next day or in two days and continue on them, or just validate and verify that what has been produced is of good quality or not.

Shane Hastie: One of the things that we found in the engineering culture trends discussion recently, was pull requests seem to be getting bigger, more code. That’s antithetical to the advice that’s been the core for the last, well, certainly since DevOps was a thing. How is that impacting us?

Challenges with Larger Pull Requests [15:19]

Sergii Gorbachov: I guess, one of the metrics that I sometimes look at is PR throughput, and definitely, if for example, your PR has a lot more code, then it would take a longer time for other people to review it, or for a developer to add code for that feature to be working.

AI systems definitely make it more possible for you to generate and create more code.

I’m not sure exactly what the future with this is, but the idea that everyone had, for example, was that AI systems would increase the PR throughput, merging a lot more code.

I mean, creating maybe more PRs is not the reality, because there is just too much code and we just maybe are using the wrong metrics here, and there is a mismatch between what has been before, versus the speed that AI allows us to produce that code with.

There is more code, but I think that maybe the final metrics should be slightly different, rather than what has been before, or what has been popular in DevOps such as PR size or PR throughput.

Shane Hastie: Again, a fairly significant shift. What are some of the important metrics that you look at?

Important Metrics and Tool Selection [16:51]

Sergii Gorbachov: I work at a more local level, and I come from a testing background, and I deal with front-end test frameworks.

Some of the important metrics for me specifically, is how long developers wait for our tests to run, so they can go to the next step, or before they get feedback.

Things like test suite runtime, test file or test case runtime, and those are our primary metrics for developers to be happy with … Creating the PR and getting some feedback.

I think that’s the main one for us. Wouldn’t look for … There’s probably a lot more other metrics, that people at my company are looking at, but for our team, for our level, that’s the main one.

Shane Hastie: There’s an abundance of tools, how do we choose?

Sergii Gorbachov: I think that at this time, especially in my work, I see us more as a reactive team, and when we see a problem, we sometimes cannot foresee it, but when we see a problem, we should probably use whatever tools are available for us at that time, rather than spending time and investing too much into building our own things to predict whatever is going to come next.

For example, for my previous work, for all these tools for test generation or Enzyme to RTL conversion, of course, we looked at all of the available tools, but there was nothing, because some of those tools are not created with an AI component in mind.

I think right now, a lot of my work, or people in the DevOps role, they spend a lot more time on building the infrastructure for all of these AI tools to consume that information.

Let’s say, in end-to-end testing, which is at the highest level of the testing pyramid, you sometimes get an error or a failed test, and it’s very difficult to understand what’s the root cause of this, because your product, your application might be so distributed and there are no clear associations between some of those errors.

Let’s say, you have three services and one service fails, but in your test you only see that an element, for example, did not show up.

It’s a great use case for an AI system that can intelligently identify the root cause. Without the ability to get all of the information from all of those services while the test is running, it would be impossible.

Those systems, those pipelines, they at this point do not exist. I see that, at my company, that sometimes we’re used to more traditional, typical use of, let’s say, logs, where you have very strict permission rules, so that you cannot get access to some of the information from your development environment, where tests are running.

Those are the things that I think, it makes sense to invest and build, in order to beef up these AI systems. The more context you provide for them, the better they are.

I think therefore, some of the tools that we’ve been building, that’s how our work has changed. We are building this infrastructure and these context collection tools, rather than using AI for all of that.

Calling, I think, an AI endpoint or using an AI tool is probably the easiest, but collecting all of that other stuff is usually 80% of the success in the project.

Shane Hastie: What other advice, what else would you tell your peers about your experience using the generative AI tools, in anger, in the real world?

Advice for Others on Using AI Code [20:58]

Sergii Gorbachov: I would say, one of the things that I saw among my peers and in other companies, or on LinkedIn, is that people are trying to build these very massive systems that can solve any problem.

I think, the best result that we got from our tools, was when the scope is so minimal, the smaller the better. Try to analyze the problem that you are trying to solve and break it down as much as you can, and then, try to solve and automate that one little piece.

In that case, it’s very easy to understand if it works or it doesn’t work. For example, if you can quantify the output, let’s say, in our case we usually count things like saving engineering hours. How long would it take me to do that one little thing? Let’s say, we save 30 seconds, but we run our tests 30,000 times a month.

There you go, that’s some metrics that you can go and tell to your managers or present to the company, so that you can get more time and more resources to build the system, and show that there is an actual impact.
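The back-of-the-envelope figure in that example (30 seconds saved per run, 30,000 runs a month) can be worked out as follows; the function name is illustrative.

```python
def hours_saved_per_month(seconds_saved_per_run: float, runs_per_month: int) -> float:
    """Convert a small per-run saving into engineering hours per month."""
    return seconds_saved_per_run * runs_per_month / 3600


saved = hours_saved_per_month(30, 30_000)
print(f"{saved:.0f} hours saved per month")  # 250 hours saved per month
```

A 30-second saving looks trivial in isolation, but at that run volume it adds up to 250 hours a month, which is the kind of number that can be presented to management.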

Go very, very, small, and then try to show impact. I think, those are two things. I guess, the third one is just, try it out. If it doesn’t work, then it’s all right, and if it’s a small project, then you wouldn’t waste too much time, and always there’s the learning part in it.

Shane Hastie: Sergii, lots of good advice and interesting points here. If people want to continue the conversation, where do they find you?

Sergii Gorbachov: They can find me on LinkedIn. My name is Sergii Gorbachov.

Shane Hastie: We’ll include that link in the show notes.

Sergii Gorbachov: Perfect, yes.

Shane Hastie: Thanks so much for taking the time to talk to us today.

Sergii Gorbachov: Thank you.


MMS • Soledad Alborno

Transcript

Alborno: My name is Soledad Alborno. I’m a product manager at Google. I’m here to talk about what to pack for your GenAI adventure. We’re going to talk about what skills can you reuse when building products, GenAI products, and what are the new skills and new tools that we have to learn to build successful products. I’m an information system engineer. I was an engineer for 10 years and then moved to product. I’m an advocate for equality and diversity. I’ve been building GenAI products for the last two years.

In fact, my first product in 2023 was Summarize. Summarize was the first GenAI product or feature for Google Assistant. We had a vision of building something that helped the user get the gist of long-form text. Have you ever received a link from a friend or someone that you know, saying, you have to read this, and you open it and it’s so long and you don’t have time? That’s the moment when you pull up Google Assistant and now Gemini, and you ask, give me a summary of this. It’s going to create a quick summary of the information you have on your screen. In this case, this is what it looks like. You tap the Summarize button and it generates a summary that you can see, in three bullet points. We will talk about how I started this product and what I learned at the end.

My GenAI Creation Journey

When I started building Summarize, I thought I was a very seasoned product manager, 10 years of experience. I can do any kind of product. Let’s start. That was good. I had a lot of tools in my backpack. On the way, I learned that I had to interact with this big monster, the non-deterministic monster that is LLM and GenAI, I call it here Moby-Dick. In my journey of building this product, I learned to treat and build datasets. I learned to create ratings and evals. I will share with you some tips on how we build those evals and datasets so it makes a successful product. I had to learn to deal with hallucinations, very long latencies and how to make this thing faster. Because you ask for a summary, you don’t want to wait 10 seconds to get the result. Trust and safety, so a lot of things related to GenAI are related to trust and safety, and we had to work with that.

Traditional Product Management Tools are Still Useful

I’m going to start with a few questions. Have you ever used any kind of LLM? Have you used prompt engineering to build a product? Just prompt engineering: you set up some roles for the model, you made it work. Are you right now building a GenAI product in your role? Have you ever fine-tuned a model? Do you design evals or analyze rating results for a product? Do you believe your current tools and skills are useful to create GenAI products? Whatever you know is very useful to create AI products.

My role is to be a product manager. In order to be a product manager, I need to know my users. I’m the voice of the user in my products. I need to know technology because I build technical solutions for my users. I need to know business because whatever product I build needs to be good for my business and be aligned to the strategy. That’s my role.

In my role, the tools and skills that I have are the ones that anyone who works in a startup or leads a project knows. The first one is business and market fit. I need to know, who are my competitors, what’s happening in this market, how to align to the business goal, how do we make money with this product? It’s still very relevant in the GenAI world. No changes there. I need to know, who are my target users? Are these professional users? Are they students, are they doctors? What is the age I’m targeting? Very important as well for generative AI products. You need to know your users because they will interact with the product. I need to know, what are the users’ pain points? What are the problems? What are the things that will help me understand how to build a solution for them and prove that my solution helped them to solve a real problem and bring real value to them? I need to know, then work with my teams and everyone to build a solution for those problems. To build a solution, I’m going to write the requirements.

These are still very relevant terms for any product, software product. There are little differences there that we will talk about. The last two things are metrics. We need to know how to measure this product to be successful. Go-to market, how are we going to push this product in the market, our marketing strategies, and so on? Everything relevant, all your skills, everything that you have in your backpack so far, everything is useful for GenAI. The difference is in the solution and requirements. There are very small differences there. This is where we need to help our engineering teams to pick the right GenAI stack. They will start asking, what type of model. Do I need a small model, a big model, a model on device? Does it need to be multimodal or text only? What is this model and how do we pick it?

Activity – Selecting the Right GenAI Stack

Next, I’m going to work on a little exercise that will help us to understand, what is the difference between a small model and a big model, and why do we care? The first thing I want to tell you is we care because small models are cheaper to run than bigger models. That’s the first thing why we care. Let’s see what is the quality of them. Who loves traveling here? Any traveler? Who can help me with this question? Lee, your task is super simple. Using only those words, adventure, new, lost, food, luggage, and beach, you have to answer three questions, only those words. Why do you love traveling?

Lee: Got leaner luggage. Food, and beach, and adventure, and new.

Alborno: Describe a problem you had when traveling.

Lee: Luggage, lost, food, adventure.

Alborno: What’s your dream vacation?

Lee: Adventure.

Alborno: As you can see, it’s a little hard to answer these very easy questions with restricted vocabulary. This represents a model with few parameters. It’s fast to run. It’s only a few choices, but it’s cheaper. The response is not that good. It doesn’t feel human. What happens when we add a few more words to the same exercise? It’s the same questions. Now we have extra words: adventure, new, lost, food, luggage, beach, in, paradise, the, we, our, airport, discover, relax, and sun. Why do you love traveling?

Lee: Adventure, discover, relax, currently paradise.

Alborno: Describe a problem you had when traveling.

Lee: Lost, new, the sun sometimes.

Alborno: What is your dream vacation?

Lee: Not in the airport. Beach, adventure, paradise, sun.

Alborno: We are getting a little better, getting a little longer responses. Still some hallucination in the middle. Hallucination means that the model is inventing stuff that is not in the intent of the response because it doesn’t have more information than what we have here in the vocabulary. The last one is, using all the words in the British dictionary and at least three words, we are introducing some prompt here, why do you love traveling?

Lee: I like the travel to be good. I like the adventure of going to new places and discovering the history and the culture of the people.

Alborno: Describe a problem you had when traveling.

Lee: The airport’s usually a free work zone. I lost money, being lost.

Alborno: What is your dream vacation?

Lee: Going somewhere new, that the people are friendly and inviting.

Alborno: You can see when the model has more information, more parameters, then it’s easier for the answers to be better quality and to sound more human. You can borrow this exercise to present this to anyone, any customer that doesn’t know how this works. It works very well. I did it a couple of times.

Requirements to Select the Right GenAI Stack

Let’s go back to the requirements. How do we make sure that we help the engineering team to define the right model or the right stack to use in my product? There are many things we need to define. Four things are very important. The first two are the input and the output, but not in a general way. We need to think about, what are the modalities? Is my system text only, text, images, voices, video, attachment, document? What is the input of my process and my product? What is the output? Is it text? Is it images? Are we generating images? Are we updating images? What is the output that we need from the model? The third is accuracy versus creativity. Is this a product to help people create new things? Is it a GenAI product for canvas or to create images for a social network, for TikTok, for Instagram? What is it? If it’s creative, then it’s ok. It’s very easy. LLMs are very creative.

If we need more accuracy, like a GenAI product to help doctors to diagnose illnesses, then we need accuracy. That’s a different topic. It’s a different model, different techniques. We will use RAG, or fine-tune it in a different way. The last one is, how much domain specific knowledge? Is this just a chatbot that talks about whatever the user will ask or is it a customer support agent for a specific business? In which case, you need to upload all the domain specific knowledge from that business. For instance, if it is doing recommendations to buy a product, you need to upload all the information for the product.

For Summarize, I have the following requirements. User input was text. We started thinking, we are going to use the text and the images from the article. Then, the responses were not improving. The model was slower, so we decided to use text to start, and the responses were good enough for our product. In the output, we said, what is the output? Here it was a little complicated because we all know what a summary is. We all have different definitions of a summary. I had to use user experience research to understand, what is a summary for my users? Is it like two paragraphs, one paragraph, two sentences, three bullet points? Is it 50 words or 300 words?

All of these matter when we decide what this product is going to do. We had to talk with a lot of people to understand this. At the end of the day, what we decided is that three bullet points was something that everyone would agree is a good summary. We went with that definition for my output. We wanted no hallucinations. Not in the typical sense of, do not add flowers in an article that is talking about the stock. It was no hallucination in the sense, do not add any information that is not in the original article, because LLMs were trained with all of this massive amount of information. They tend to fill in the gaps and the summaries will have information from other places. We needed to make sure the summary accurately represented the article. For that, we had to create metrics and numbers.

Actually, metrics, automated metrics. It’s a number that represents how well the summary represents the original article. We did that to improve the quality of our summaries, too. Last, we didn’t have any domain specific knowledge. We just had the input from the article we were trying to summarize.
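The talk does not say which automated metric the Summarize team used. As an illustration only, here is a minimal sketch of one very simple proxy: the share of summary words that also appear in the article. The names `tokenize` and `grounding_score` and the example texts are assumptions made up for this sketch. A real metric would need to handle paraphrase and meaning, not only word overlap.

```python
import re


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z0-9']+", text.lower())


def grounding_score(article: str, summary: str) -> float:
    """Fraction of summary tokens that also occur in the article (0.0 to 1.0).

    A crude lexical proxy: words missing from the article hint that the
    summary may contain information from somewhere else.
    """
    article_words = set(tokenize(article))
    summary_words = tokenize(summary)
    if not summary_words:
        return 0.0
    supported = sum(1 for word in summary_words if word in article_words)
    return supported / len(summary_words)


# Hypothetical example texts, not taken from the talk.
article = "The company reported higher revenue and raised its forecast."
faithful = "The company raised its forecast."
padded = "The company raised its forecast after layoffs."

print(grounding_score(article, faithful))  # 1.0
print(round(grounding_score(article, padded), 2))  # 0.71
```

The second summary scores lower because "after" and "layoffs" are not in the article, which is the kind of gap-filling the speaker wanted to catch.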

The Data-Driven Development Lifecycle

Once we have all the requirements, we say, how do we start developing this thing? The first thing was, create a good dataset. We started. When I work with my product teams, we work with a small, medium, and large dataset. The small dataset is 100 inputs that we can get from different previous studies, from logs of our previous system, or whatever. Sometimes the team makes the inputs. We create 100 prompts and we test. That’s a small dataset. It’s nice because in a small dataset, you can have different prompts, different results from different models for the same prompt. You can compare it with your eyes. You can feel what is better and feel where is the missing point for each of them. We have a medium dataset that I usually use with the raters and in the evals. That’s about 300 examples.

A large dataset, it’s like 3,000 examples or more, depending on the context, on the product. That big dataset is more for training and validation and fine-tuning. First, we create our datasets. Second, when I had the 100 examples dataset, we went to prompt engineering and just take the foundational model, do some prompting, just generate a summary for this, and get the results. Maybe do another one with generate a summary in three bullet points. Generate a summary that is shorter than 250 words. We try different prompts, so we can evaluate this thing.
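The prompt-engineering step described above can be sketched as follows. This is a minimal illustration, assuming a hypothetical `call_model` function that takes a prompt string and returns text. The `fake_model` placeholder only stands in for a real LLM call so the sketch runs on its own. The dataset sizes and prompt wordings come from the talk.

```python
from typing import Callable

# Dataset tiers mentioned in the talk (the large one is "3,000 examples or more").
DATASET_SIZES = {"small": 100, "medium": 300, "large": 3000}

# Prompt variants quoted in the talk.
PROMPT_VARIANTS = [
    "Generate a summary for this:",
    "Generate a summary in three bullet points:",
    "Generate a summary that is shorter than 250 words:",
]


def compare_prompts(
    articles: list[str], call_model: Callable[[str], str]
) -> list[dict[str, str]]:
    """Run every prompt variant on every article for side-by-side review."""
    rows = []
    for article in articles:
        row = {"article": article}
        for prompt in PROMPT_VARIANTS:
            row[prompt] = call_model(f"{prompt}\n\n{article}")
        rows.append(row)
    return rows


def fake_model(prompt: str) -> str:
    """Placeholder for a real model call: echoes the instruction line."""
    return "summary for: " + prompt.splitlines()[0]


rows = compare_prompts(["first article text", "second article text"], fake_model)
print(len(rows), len(rows[0]))  # 2 4
```

With the small dataset of about 100 inputs, a table like this is still short enough to compare by eye, which is the point the speaker makes about starting small.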

Then, in some of the cycles, we also have model customization. Here is when we use RAG or fine-tuning to improve the model, and the evaluation, which is actually using evaluation criteria. We send these examples to raters, people that will say, this is good or not, or more or less. Then, we can have patterns. We use these patterns to add more data to the dataset, and go again. This is a cycle. We keep going until we feel that the quality is good and the result of the evaluation is good.
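One way to picture the evaluation step of this cycle is the sketch below. It assumes each rating is a dictionary with a `rating` field ("good", "more_or_less", or "bad") and an optional `issue` label chosen by the rater. The field names, the labels, and the 0.9 quality bar are all hypothetical and are not described in the talk.

```python
from collections import Counter


def summarize_ratings(ratings: list[dict], quality_bar: float = 0.9) -> dict:
    """Aggregate rater feedback into a quality share and recurring issues."""
    good = sum(1 for r in ratings if r["rating"] == "good")
    share_good = good / len(ratings) if ratings else 0.0
    # Count issue labels on everything that was not rated "good".
    patterns = Counter(
        r["issue"] for r in ratings if r["rating"] != "good" and r.get("issue")
    )
    return {
        "share_good": share_good,
        "patterns": patterns.most_common(),
        "keep_iterating": share_good < quality_bar,
    }


# Hypothetical rater output for four examples.
ratings = [
    {"example_id": 1, "rating": "good"},
    {"example_id": 2, "rating": "bad", "issue": "added outside information"},
    {"example_id": 3, "rating": "more_or_less", "issue": "added outside information"},
    {"example_id": 4, "rating": "good"},
]

report = summarize_ratings(ratings)
print(report["share_good"], report["keep_iterating"])  # 0.5 True
```

The most frequent issues point to the kind of examples worth adding to the dataset before the next round.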