Month: June 2025

Java News Roundup: MicroProfile, Open Liberty, TomEE, JobRunr, LangChain4j, Apple SwiftJava

MMS • Michael Redlich

This week’s Java roundup for June 16th, 2025, features news highlighting: point releases of MicroProfile 7.1, Apache TomEE 10.1 and LangChain4j 1.1; the June edition of Open Liberty; the second beta release of JobRunr 8.0; and Apple’s new SwiftJava utility.

JDK 25

Build 28 of the JDK 25 early-access builds was made available this past week featuring updates from Build 27 that include fixes for various issues. More details on this release may be found in the release notes.

JDK 26

Build 3 of the JDK 26 early-access builds was also made available this past week featuring updates from Build 2 that include fixes for various issues. Further details on this release may be found in the release notes.

Jakarta EE 11

In his weekly Hashtag Jakarta EE blog, Ivar Grimstad, Jakarta EE Developer Advocate at the Eclipse Foundation, provided an update on Jakarta EE 11, writing:

Some of you may have noticed that the ballot for the Jakarta EE 11 Platform specification concluded this [past] week. And the even more observant may have noticed that the various artefacts are available on the website and in Maven Central.

There will be an official launch with press releases, analyst briefings, and celebrations to mark the release as officially released the upcoming week.

InfoQ will follow up with a more detailed news story.

Spring Framework

It was a busy week over at Spring as the various teams have delivered point releases of Spring Boot, Spring Security, Spring Authorization Server, Spring Session, Spring Integration, Spring Modulith, Spring REST Docs, Spring AMQP, Spring for Apache Kafka, Spring for Apache Pulsar, Spring Web Services and Spring Vault. More details may be found in this InfoQ news story.

Release trains for numerous Spring projects will also reach the end of OSS support on June 30, 2025.

MicroProfile

The MicroProfile Working Group has released version 7.1 of MicroProfile featuring updates to the MicroProfile Telemetry and MicroProfile Open API specifications.

New features in MicroProfile Telemetry 2.1 include: a dependency upgrade to Awaitility 4.2.2 to allow for running the TCK on JDK 23; and improved metrics from ThreadCountHandler class to ensure consistent text descriptions.

New features in MicroProfile Open API 4.1 include: the addition of a jsonSchemaDialect() method, defined in the OpenAPI interface, to render the jsonSchemaDialect field; and a minor improvements to the Extensible interface that adds the @since tag in the JavaDoc.

Open Liberty

The release of Open Liberty 25.0.0.6 features backporting compatibility of Microprofile Health 4.0 specification (mpHealth-4.0 feature) to the Java EE 7 and Java EE 8 applications; and the file-based health check mechanism as an alternative to the traditional /health endpoints, introduced in Open Liberty 25.0.0.4-beta, has been updated to include a new server.xml attribute, startupCheckInterval, and a corresponding environment variable, MP_HEALTH_STARTUP_CHECK_INTERVAL, that defaults to 100 ms if no configuration has been provided.

JobRunr

The second beta release of JobRunr 8.0.0 introduces Carbon Aware Job Processing, a new feature that optimizes the carbon footprint, that is, the lowest amount of CO2 being generated, when scheduling jobs. Other new features include: support for Kotlin serialization with a new KotlinxSerializationJsonMapper class, an implementation of the JsonMapper interface, for an enhanced experience when writing JobRunr applications in Kotlin; and improved synchronization with the @Recurring annotation. Further details on this release may be found in the release notes.

LangChain4j

The formal release (along with the first release candidate and seventh beta release) of LangChain4j 1.1.0 delivers modules: langchain4j-core; langchain4j; langchain4j-http-client; langchain4j-http-client-jdk and langchain4j-open-ai with the the remaining modules under the seventh beta release. For the first release candidate, new modules: langchain4j-anthropic; langchain4j-azure-open-ai; langchain4j-bedrock; langchain4j-google-ai-gemini; langchain4j-mistral-ai; and langchain4j-ollama are now available for developers to use in their applications. Version 1.2 of these new modules will be delivered in July. More details on this release may be found in the release notes.

Apple

At the recent Apple WWDC25 conference, SwiftJava, a new experimental utility providing interoperability between Java and Swift, was introduced to conference attendees. SwiftJava consists of: the Swift Package, a JavaKit containing Swift libraries and macros; a Java Library, a SwiftKit containing a Java library for Swift interoperability; and tools that include the swift-java command line utility and support for the Swift Package Manager and Gradle build tools.

Apache Software Foundation

The release of Apache TomEE 10.1.0 delivers bug fixes, dependency upgrades and alignment with MicroProfile 6.1 along with specifications MicroProfile Config 3.1, MicroProfile Metrics 5.1 and MicroProfile Telemetry 1.1. Further details on this release may be found in the release notes.

The release of Apache Log4j 2.25.0 ships with bug fixes and notable changes such as: support for embedded GraalVM reachability metadata in all Log4j extensions for seamless generation of native images; a refactor of the Pattern Layout API that resolves bugs and ensures consistent behavior across all exception converters; and an updated JMS Appender utility now supports the Jakarta Messaging specification. More details on this release may be found in the release notes.

Gradle

The first release candidate of Gradle 9.0.0 provides bug fixes and new features such as: a minimal JDK 17 version; the configuration cache is now enabled by default as it has been declared as the preferred mode of execution for developers; and support for Kotlin 2.1 and Groovy 4.0. Further details on this release may be found in the release notes.

MMS • Matt Foster

AWS has expanded GuardDuty’s threat detection capabilities on EKS clusters, introducing new runtime monitoring features that use a managed eBPF agent to detect container-level threats. The update allows customers to identify suspicious behavior such as credential exfiltration, reverse shells, and crypto mining by analyzing system calls directly from the Kubernetes data plane. GuardDuty joins a growing set of cloud-native security services that embed workload protection into infrastructure through managed integrations rather than user-deployed agents.

Traditional agent-based threat detection in Kubernetes has long faced criticism for adding complexity, requiring elevated privileges, and increasing the attack surface. Agents can be difficult to deploy in managed environments and often consume valuable node resources.

Vendors like Orca Security and Wiz pioneered agentless cloud security by integrating via cloud APIs and snapshots rather than runtime hooks. This approach provides broad visibility—across virtual machines, containers, storage, and IAM configurations—but can miss real-time behaviors requiring OS-level introspection.

GuardDuty takes a hybrid approach. While still using an agent—a DaemonSet deployed to the EKS cluster—it is fully managed by AWS. Customers do not have to install or maintain the agent, and it runs outside the application context, avoiding sidecar or in-container deployments. This approach allows for more granular runtime visibility at the container level.

Open-source projects like Falco and Cilium Tetragon have also explored eBPF-based threat detection, offering powerful capabilities but requiring manual deployment, tuning, and ongoing maintenance. GuardDuty abstracts that complexity for teams operating within the AWS ecosystem.

The service continuously consumes system-level telemetry, analyzing patterns for anomalous or malicious behavior, and publishes findings to the GuardDuty console and EventBridge for integration with incident response workflows.

This telemetry is streamed from the data plane, where it’s enriched with context (such as pod metadata, image IDs, and namespace) and analyzed by GuardDuty’s detection engine.

AWS claims the extended suite of telemetry can detect suspicious binary execution, known crypto-mining tools, network connections to threat actors, and potential credential exfiltration.

The feature is currently accessible to users when either EKS Protection or Runtime Monitoring is enabled.

GuardDuty’s EKS extension reflects a broader industry trend: cloud providers are embedding threat detection deeper into their managed infrastructure and offering built-in security capabilities that reduce the need for customer-deployed agents. Microsoft Defender for Containers supports agentless scanning of Azure Kubernetes Service (AKS), while Google Cloud’s Security Command Center includes Kubernetes threat detection through Event Threat Detection (ETD).

This shift is not coincidental. The 2024 State of Kubernetes Security Report highlights that complexity and configuration overhead remain key barriers to adopting Kubernetes security solutions. In this context, GuardDuty’s Extended Threat Detection signals a move toward embedded, opinionated defenses—designed to reduce friction while preserving deep runtime visibility.

MMS • Craig Risi

On April 23, 2025, the Cloud Native Computing Foundation (CNCF) announced the graduation of in‑toto, a framework designed to enforce supply chain integrity by ensuring that every step in the software development lifecycle, such as building, signing, and deployment, is properly authorized and verifiable. This move signifies that in‑toto has achieved full maturity and stability, joining other graduated CNCF projects that are widely adopted and ready for production at scale.

Developed primarily by researchers at NYU Tandon School of Engineering, in‑toto provides a declarative framework enabling organizations to define policies that ensure only authorized actors perform specific build steps in the correct sequence. It uses signed metadata to create a traceable record from source code through to the final software artifact. This model helps prevent tampering, unauthorized actions, and insider threats, addressing the rising number and sophistication of software supply chain attacks.

Chris Aniszczyk, CTO of CNCF, emphasized its timely impact:

in‑toto addresses a critical and growing need in our ecosystem, ensuring trust and integrity in how software is built and delivered. As software supply chain threats grow in scale and complexity, in‑toto enables organizations to confidently verify their development workflows, reducing risk, enabling compliance, and ultimately accelerating secure innovation.

The graduation follows in-toto’s journey from a Sandbox project in 2019 to Incubation in early 2022 to a stable 1.0 specification in mid-2023. It is backed by major US federal agencies, including the National Science Foundation, DARPA, and the Air Force Research Laboratory have contributed funding and research support.It is already in use by organizations like Autodesk and SolarWinds, and integrated with standards such as OpenVEX and SLSA, in‑toto is gaining traction across sectors.

Tools such as Witness and Archivista make in‑toto easier to implement for developers to adopt in-toto. Jesse Sanford, Software Architect at Autodesk, noted:

The fact that Witness and Archivista have reduced developer friction so significantly has really set the in‑toto framework apart for us… we can now run securely by default.

Graduation from the CNCF marks a significant moment: in‑toto is now recognized by them as production‑ready, offering a systematic way to defend against supply chain threats and meet regulatory standards via verifiable workflows. The CNCF plans to continue advancing the project, including enhancements to policy language support and developer experience.

Podcast: Building the Middle Tier and Doing Software Migrations: A Conversation with Rashmi Venugopal

MMS • Rashmi Venugopal

Transcript

Introduction [00:28]

Michael Stiefel: Welcome to the Architects Podcast where we discuss what it means to be an architect and how architects actually do their job. Today’s guest is Rashmi Venugopal, who has a track record of building and operating reliable distributed systems, which power mission-critical applications at scale.

Over the past decade, she has been instrumental in designing and developing complex software systems that enable consumers to effortlessly pay for their subscriptions at Netflix, calculate tier pricing for various ride options at Uber, and manage Azure Cloud networking configurations at Microsoft. With a master’s degree in information networking from Carnegie Mellon, Rashmi currently serves as staff software engineer at Netflix. In this role, she leads cross-functional initiatives aimed at driving subscription growth through payment orchestration for over 250 million members.

Becoming an Architect [01:27]

When she’s not immersed in code, you’ll find her outdoors either hiking, camping or at a local park reflecting on the impact of technology on the world. It’s great to have you here on the podcast, and I would like to start out by asking you how your interest in software architecture started. It’s not as if you woke up one morning and said, “Today I’m going to be an architect today, I’m going to do architecture”.

Rashmi Venugopal: Hey Michael, thank you so much for having me and I’m excited to be here. An interesting observation, just based on how you phrased your question around being an architect, is how less common the title of the architect has become now. That said the role of the architect is still alive and very much around and relevant for folks in senior or staff plus roles for sure. Yes, you’re right. It’s definitely not the case that I woke up one day and decided that I want to be an architect or a staff engineer, which is almost why I feel like my answer here is it was kind of an accident, and what I think I mean by that is that it represents a natural progression in my career, rather than a goal or an end state that I was actively pursuing. It is a function of seeking out new challenges and problems and questions for me to go after personally at an individual level.

But even organizationally, as you move up the proverbial ladder, the open questions that bubble up to you tend to become more complex and nuanced and it’s like, “Hey, the distributed systems challenges or software design questions”. Those are the ones that bubble up to you. And then when you spend more time thinking about that, and living and breathing that stuff, then that’s how you I guess, evolve into the role that I am in today.

Michael Stiefel: Did you ever think at some point consciously, or even unconsciously, that you were at a branch point? In other words, you could have become an architect, but maybe you could have stayed at an individual contributor or maybe you could have gone off into quality assurance. In other words, this evolution is partly under your control, and partly with the opportunities you get or the places you go to seek them out.

Rashmi Venugopal: Totally, and one branching point that I can think of now that you say it was, “Hey, did I want to become a manager? Or did I want to get into people-managing?” And that was an opportunity that came up in my organization as well, and that had me reflecting deeper like, “Hey, where do I find work satisfaction? Where does my sense of accomplishment come from?” And I made the explicit decision to stay as an individual contributor and not switch into people management.

Michael Stiefel: And no doubt you’re happier that you made that decision.

Rashmi Venugopal: Yes, I’m not saying that that door is closed always, but I definitely feel like I made the right decision at that time.

Michael Stiefel: Just thinking about this and maybe I have a biased perspective on this, you may turn out to be, if you do decide to go down the managerial role. You have a better idea of what your employees are going to go through having done it yourself.

Rashmi Venugopal: Yes.

Michael Stiefel: As opposed to somebody who just goes straight into the managerial role and does not necessarily understand. So I think that door not being closed, I think you may turn out to be a better manager if you decide to go down that route.

Rashmi Venugopal: Fair, fair and managers that have technical expertise, you can actually tell the difference when they’re the ones managing versus somebody that doesn’t. Yes.

Front-End, Middle-Tier, and Back-End Architecture [05:27]

Michael Stiefel: And hopefully they know enough to ask good questions, but not to dictate to you. So I’ve looked over some of the stuff that you’ve done and it’s been quite interesting. One of the things that I personally have found in my career of doing architecture and working in distributed systems or even going back to the days of service oriented architecture, even I’m old enough to remember the client server days. Hopefully I’m not dating myself too much, but one of the biggest problems I have found is that backend software architects and developers very often view the world very differently from front-end software architectures, and this disconnect can be quite difficult for an architect to grasp. What I mean by this is back-end software architects, you can tell me whether you think I’m right or wrong about this, obviously, tend to view the world much more in terms of individual events, transactions that we can roll back or compensate for.

I remember the days in client-server for example, where very often people used stored procedures, and that had a tremendous effect on the front-end. The front-end engineers think in terms of workflow, how users approach things. So for example, let’s say you have to fill out an application, you don’t have all the information you need right away. Supposing the social security number, I don’t have it. I need to get some sort of certification. I don’t have that right now. I don’t have the bank account number that I need to set up the payments or the credit card number. So if you took a very rigid backend view of the world and said, well, the database can’t have any nulls in it and we either have this information now or we don’t have it at all from a front-end, that’s foolish.

Now I think we’ve learned already how to deal with a situation like that, but I think that’s a very simple example of this disconnect and the fact that systems that don’t resolve this disconnect or handle it well have trouble evolving. And the question is how can we stop this from happening?

Rashmi Venugopal: Great question Michael, and a good one for me especially as somebody that works in the middle tier. So my team operates between the UI layer and the back-end layer, and I view our core capability as translating the APIs that the back-end teams provide and generate into packaging them into unified APIs or orchestrating between the UI interactions in the back-end. And this goes back to this almost a joke that people say that everything can be solved with an added layer of abstraction. That’s what this has come down to. How do we solve the mismatches or the challenges that we have, translating UI requirements to backend requirements. Hey, add a layer in the middle.

Michael Stiefel: If I can inject just a joke for a moment, I’ve often told people that I’ve done three things in my software consulting career, one trade off space and time, insert levels of indirection, and try to get my clients to tell me what they really want.

Rashmi Venugopal: The third one sounds like UI engineers trying to tell us, “Hey, this is what we want the user to do”.

Michael Stiefel: Yes. So I’m sorry for interrupting, but that’s sort of just this problem goes back a long way.

Rashmi Venugopal: Yes, and all those three points resonate with me as well. So in software topology we have, let’s say we have a three layered-system with front-end, middle tier, and back-end. The layers have separate responsibilities and they all exist for a reason and traditionally, as you said, backend engineers expose APIs, each of them are useful and meant to be used in a specific way.

The mid-tier is an orchestration engine that ties the backend and the front-end. They take the backend APIs and package them into atomic operations, and they know how to sequence these API calls based on an action that the user can take on the front-end side. In addition to all the challenges that you already called out with like creating a user workflow, I think our technology landscape has evolved to now people want to sign up or fill a form on the TV versus your iPad versus your mobile and just the presentation challenges can be so different across the platforms and now we expect our UI teams to be able to serve all types of platforms.

So the layers of abstractions are meant to help each of the layers to not think of anything that’s not part of their core competency or expertise. That said, if I boil it down to the core responsibilities for each, the way I think of it is: the backend is the source of truth for user state and user data, mid-tier can do experimentation or generate data for analytics purposes or provide some sort of standardization across different operations, and the UI of course, presentation across different platforms, user feedback that they’re optimized for user interactions.

When Reality Conflicts With Best Practices [11:13]

But there are of course obvious pitfalls that sometimes happen in the way we operate. What do I call a pitfall? If we have a scenario where there’s a tight project deadline and we have the backend API already exists, but we’re trying to create a user feature, sure enough, what we decide to do, especially as we’re AB testing something, we don’t know how well this is going to do when we put it out in the real world and we don’t know if a huge investment is worth it. So the initial stages are when the UI sidesteps the mid-tier and directly reaches out to the backend API, makes a call, and then that’s how they enable whatever this new feature is that they wanted to enable. And sure enough, the AB test did great, and now the product managers are like, when can we roll it out to everybody and fully productize this experience? How quickly can we do this because here’s the dollar amount associated with this feature.

This is like an example of how your ideal state, and your three tiered architecture then starts to get diluted, and the boundaries start dissolving, and then we deviate from the theoretical best practices in the real world, and this is something what I would call tech debt like we’ve done this once.

Michael Stiefel: Absolutely.

Understanding Tradeoffs [12:35]

Rashmi Venugopal: We’ve done this once, it’s worked well, but what does it mean for maintenance? What does it mean for extending this feature from its V zero version to a V two or a V three that’s even better. What does it mean for all of that, is something that is often overlooked when you take a very short term view. And I do want to say that at Netflix we’re pretty good at balancing the trade-offs and engineers have a say. And a lot of times I feel like some of these challenges are not obvious for everybody. So if you are the person that can foresee the pitfalls or the downsides of a certain trade-off, I would encourage them to be vocal about it.

Michael Stiefel: Two strains of conversation come to mind when you say that, but one that’s the most immediate to me is, do you find it easy or do you find people being receptive when you say “Yes”. Going directly from the front-end to the back there without the middle tier is good for the moment, but this is going to cost us in the long term and maybe we should do it now, but realize it’s going to cost. Are people receptive to that argument or does the pressure of the constant turnout functionality really force people not to think very clearly about this until it becomes unavoidable to do something about it?

Rashmi Venugopal: It depends. It depends on the scenario. Maybe I’ll give you an example of both cases. One example is there is a new policy or a regulation that’s come out and there’s a strict deadline that companies need to abide by to roll out the specific feature in order to continue operating. At that time, all bets are off. We’re trying to get this out the door as quickly as possible, but even then, I feel like there is a level of due diligence that is important to be done, which is in your proposal, call out the risks. It’s like, “Hey, be explicit about the trade-offs that you’re making and communicate and make sure that your stakeholders know that this is the trade-off that’s been made”. As opposed to silently make it and then being forced to eat the cost for it in the future. Nobody’s heard about the fact that the trade-off was even made.

So that’s one example. But in another case where the PMs or even the leadership is very invested in a feature because they see it evolving into the future, they think that it’s a big bet for the company and it’s aligned with the long-term strategic priorities for the company, then there’s more room to negotiate of, “Hey, what is this V zero version going to be and how much of an investment we’re going to make in that?”

Make Trade-off Decisions Explicit [15:34]

Michael Stiefel: I think that’s fair. And I guess it partially depends on how willing the engineers are. I think I’ve always done it in my career, and I think it’s very important to just state what is important because the worst decisions I have found that are made are the decisions that get made by default and where people don’t realize that they’re making a decision.

Rashmi Venugopal: And I want to add one more thing to that. Sometimes people hate the messenger.

Michael Stiefel: Yes absolutely. Yes, yes.

The Importance of Personality and Soft Skills [16:07]

Rashmi Venugopal: So as engineers, it’s important for you to have good relationships with all your cross-functional partners, and then it’s hard to do that. It starts from building the relationship, building some credit with that person to then be able to say that, “Hey, so you’ve built up that credibility”. So it’s a long way to build up to when you can push back and bring up something like this.

Michael Stiefel: I think that’s an excellent point because in the end, very often it’s personal relationships and very often cause technical decisions to be made one way or the other. I mean, I often had an advantage as being a consultant from the outside being called in. Sometimes, I got a little more leeway because I wasn’t going to necessarily be there for the longest term, to be a little more blunt. But even then, you have to be diplomatic. You have to care about people’s feelings, of the fact that someone may work on a feature that’s been a long time. and now you want to deprecate it. People are people at the end of the day.

Rashmi Venugopal: Yes. This reminds me of a common misconception that people have about the role of the architect, even the staff engineer role, is that they assume that it is a purely technical role. It is, but in my mind it is also a leadership role where your persuasion skills matter, your written communication matters, your ability to strategize and influence matters. Something to keep in mind.

Michael Stiefel: A theme on these podcasts has been sort of Gregor Hohpe’s elevator architect, where the architect has to be able to go from the boiler room to the boardroom, and be able to communicate business requirements to the technical people, and help the technical people understand that these business requirements are coming from real places and not because management woke up on a different side of the bed in the morning. Those are interpersonal skills, and I think too often people can think of architects as enterprise architects that exist in some on top of some mountain somewhere issuing edicts, and not the down and the depths of solution architects, the architects who have product responsibility, which is very much as you say, almost as much personality as well as the technical chops. You need them both.

Rashmi Venugopal: Totally.

Michael Stiefel: Or you stay as an individual contributor. And yes, where technical chops matter more. I think people are more forgiving of someone who has technical expertise and not the best personality, than someone who’s an architect.

Rashmi Venugopal: The reverse.

Michael Stiefel: Yes.

Rashmi Venugopal: I agree.

Michael Stiefel: And of course, as we talked about before, sometimes if you have too much personality, you can be too convincing, and be technically wrong. I’m sure we’ve all come across those people.

Rashmi Venugopal: Sometimes it feels like if you have the right kind of relationships and you’re able to convince the right people, you get to get your way.

Architecting the Middle-Tier [19:19]

Michael Stiefel: Yes, yes. So I want to come back to the sort of the second thing that when you were talking about the middle tier, and I found it very interesting what you were saying. What occurred to me is very often people want to think in terms of orchestration, or choreography, or event driven solutions. Do you have any feeling about how to use those in the middle tier? Because the way you portray the middle tier, and I think it’s valid, is that’s the view that the front-end has of the back-end and it’s the view that the back-end has of the front-end. This analogy has occurred to me, the backend is the raw cooking ingredients. The front-end is sort of like the servers, the waiter, the waitresses, and the middle tier is actually the cook where the meal gets created.

Rashmi Venugopal: That’s a great analogy because that reminds me of a state machine, where the state machine knows when to add an ingredient, and how long to bake something and when to stir something. And when to add the next ingredient. And some of it is driven by the feedback from the users from the front-end, which is user initiated actions, but some others are driven by the response from the backend. Let’s say we make a call to the backend API and it fails. Now the state machine is like, “Okay, what do I do when it succeeds versus what do I do when it fails?” So definitely having a state machine is a good way to think about solving this specific example that you were talking about.

Michael Stiefel: When I think about the middle tier and I think of all the technologies and ideas that people have, know state machines, choreography, orchestration, event-driven, what is it that’s most important to make the middle tier successful?

Rashmi Venugopal: That is a great question Michael, and I feel like as engineers we focus on enabling. Building to enable features and enable solving problems, and it’s equally important to know when to get out of the way as well sometimes. So I feel like if you know you’re working in the middle tier, looking at both of those aspects are equally important. How can we enable the UI to do something but at the same time get out of the way when it is a presentation related concern? Another thing that I think of is scaling the mid-tier.

So for example, if you don’t have a way to templatize some of the common interaction patterns between the UI and the mid-tier, then you find yourself rebuilding very similar versions of interactions and how to handle those interactions over and over again because you haven’t invested the time to templatize that or make that component that you have in the mid-tier more reusable. And another prerequisite for the mid-tier to be successful would be the right level of abstraction at the backend.

For example, if we’ve had premature consolidation of APIs at the backend, it becomes very difficult at the mid-tier to be like there’s an API explosion because we have very specific APIs for different scenarios. So when do we use what? Ideally we want to keep collaboration effective, but also at a minimum to do something new. So I feel like having the right level of abstractions at the backend is the third thing that I would say is a prerequisite for a successful mid-tier system.

Michael Stiefel: That sounds in accordance with my experience as well. And when you talk about the replication of efforts, if things are not defined properly, you raise the question of scaling and if you don’t have the right middle tier, in other words, the middle tier has to scale as well as the backend, appropriately, and where do you scale what? As you say, you have to get the abstraction right.

Rashmi Venugopal: And at Netflix we have this engineering guiding principle that says build only when necessary. And I almost mapped that to Martin Fowler’s rule of three where he says, “Hey, refactor the third time you come across that”. Where if you have three different scenarios that have the same common interaction pattern and now is a good time to templatize that and abstract that away to be reused.

Learning From Other People’s Mistakes [24:06]

Michael Stiefel: That makes a lot of sense. I wish I had some of this advice when I first got started many, many years ago because I remember as I started out with client-server where there was no middle tier, and we watched this evolution, and we’ve learned a lot of these principles the hard way. Hopefully people will take from this podcast that they don’t have to make the same mistakes that we already made.

Rashmi Venugopal: True. And I feel the same way. I’m sure five years from now I would wish that I knew more things than I did now. So that’s a never ending cycle.

Michael Stiefel: Well, that is one of my motivations for liking to do these kinds of podcasts, is to get people to focus on things maybe while they’re in their car or maybe they have free time whenever they’re listening to the podcast, to be exposed to something that puts something in their mind that says, hopefully when they come across this, they don’t have to rethink the problem from scratch and understand that people have shed blood and toil before them on these problems.

Rashmi Venugopal: Definitely. And that’s something that I feel like is more and more important as I progress in my career, is not just about learning from my own experiences, but maximizing my learnings by learning from other people’s experiences.

Michael Stiefel: That’s a form of intellectual scale because if you can learn from other people’s experiences, however you learn from them, you’re scaling in a personal way and personal experience. So there’s an interesting segue to the other thing that I find very interesting about your work is we talked about software improvement and technical depth and refactoring and renovation. And so that leads me to the question: when you start a software company, a software company is very small, as you said before, you just want to get things done, and you don’t think very carefully about the middle tier.

Success Causes the Inevitable Software Migration [26:13]

You put just enough in the back-end and the front to get your minimal viable product, but then someday, if you’re successful and you have customers, so you just can’t throw your old product away and start from scratch, how do you evolve your software? I mean, is it inevitable that you’re going to have to reinvent things, and start things over again? I guess it’s the question that you’ve thought a bit about is why are software migrations so difficult? They seem inevitable as the price of success, but is there anything we can do to make them easier?

Rashmi Venugopal: You’re right that it is inevitable, especially in companies that are successful and growing and expanding their scope and the range in which they operate. It is definitely inevitable. I think they are hard to get right for a few reasons because if you think about it, software migrations don’t happen overnight. It takes the time that it takes, but you are also changing the plane while flying it because company priorities are constantly evolving. The world around us is constantly evolving.

Michael Stiefel: There’s turbulence as you would say, as it could go with your analogy.

Rashmi Venugopal: There totally is turbulence. That’s one. The second thing is as your company gets bigger and bigger, and as you add these layers, there is no one person that understands everything end to end. So now you are all individual experts in your own area and you’re trying to come together to see, “Hey, how do we go from where we are today to where we want to go?” And often I would even consider it a success if we can even align on a common idea of where we want to go, because even that’s different sometimes and takes time to find the common ground.

Michael Stiefel: Especially if the market is evolving quickly.

Rashmi Venugopal: Yes. And unlike traditional architect roles in construction where you plan your building, end to end you have the whole plan and then you start building. That doesn’t work for software engineering because your plan is just a direction. It’s good to get started and corral people and get us to move in the same way, but you quickly come across unknown unknowns and challenges and you find out that this particular technology doesn’t work exactly the way you thought it was going to, or this other thing is important for you. Suddenly it’s like, “Hey, security is now more important now versus previously we were just focused on performance”. So the fact that surprises will come your way means that your migration process needs to stay nimble and evolvable and you need to be able to pivot as and when you find issues that need to be solved. So that’s the third thing that I think makes some of this challenging.

The fourth reason why this is challenging is, yes, you’re off to go build this new thing that will be great when it’s done, but hey, we still have product features that we want to innovate on and roll out, and there’s this whole roadmap for the year that we still want to hit while we go do this other thing. So I view that as how do we balance innovation with renovation, and is it a good idea to do both at the same time? When are you going to start building product features in your new systems versus when do you want to still build that in your existing systems to get it out the door sooner?

And how do you isolate some of the changes? For example, let’s say you rolled out a feature in your migration process and you’re like, “This is not doing as well as our previous product did”. How do you even start pinpointing what went wrong? Is it because we have innovated upon it since and added new things, or is it because we changed the underlying technology as part of the migration. So isolating a regression becomes very challenging.

Michael Stiefel: Do you ever see a situation, I mean there are two things that come to mind when you say this, and I’ll mention both of them and then you can just react as you want. One of them is that I often find that tools are not quite where you want them to be, because unlike, for example, you mentioned before architecture of buildings where people understand when you rip out a room, there’s a cost to it. But on the other hand, there are civil engineers who can have the job of building the same bridge over and over again. The fact of the matter is in software, we only do something because we want to do something new. If I want another copy of Microsoft Word, I just copy the bits.

The Inadequacy of Software Engineering Tools [31:37]

So the problem is our tools are backwards facing. They’re automating what we knew how to do yesterday, but not necessarily what we need to do tomorrow. I mean, you think of how people take for granted today with java, .NET or any of the other technologies that people use, of having software libraries. For those of us who have been in the field for a while realize it took decades for us to come up with enough common functionality and enough common areas to have libraries, debuggers, compilers. These were all invented at one point in time, and were all very controversial when they were invented.

The Problem of Data Migration [32:24]

So this is one tension that I see in the software migration, is the tools might not be there where we want to go. The other problem that comes to mind is of course, the one of databases or stores, for example, if you’re doing a migration, you try to get the data model right first, and then move both systems to the new data model and then evolve things. Or do you just create a new system, and then migrate the backend at some point and cross your fingers and hope it all works?

Rashmi Venugopal: Definitely not the latter.

Michael Stiefel: Yes.

Rashmi Venugopal: So if you have a data migration involved, it is definitely important to get the data model right. And even validation once you have the data model. How can we know for a fact that we have a good way of comparing both the systems to validate that, “Hey, the new system actually does everything that we want to, and the way it responds to some of the queries are equivalent to what we used to have”. And how do you abstract that away from say, impacting the UI in this case or the consumers of that data. And you also have to plan around having both the new and the old systems be operational concurrently for a small period of time while you’re switching over because it’s likely not a cut over at midnight situation as opposed to-

Michael Stiefel: Which means you have that back annotation going on.

Rashmi Venugopal: So you’re likely dual writing in both for some time.

Michael Stiefel: Right. Yes.

Rashmi Venugopal: And then validating, using dual reads and then be like, “Okay, now I have confidence, so let me fully switch over and replicate”.

Michael Stiefel: Right, I have never enjoyed those situations. It’s one thing to have a new system and mess up, but when you mess up the old system in the process of moving to the new system, you don’t win very many friends.

Rashmi Venugopal: Oh, no, totally not. And regardless of how many times you do this, I feel like there’s always a new challenge next time you do something that you are having to solve from scratch.

Michael Stiefel: Are there any things that pop up into your mind about what we’ve talked about or things that you want to explore before I move into what the architect’s questionnaire that I like to ask everybody?

The Value of Legacy Systems [34:49]

Rashmi Venugopal: The one thing that I wanted to call out is people sometimes have a tendency to hate on legacy systems. They’re like, “Oh, this is old. This doesn’t work the way I wanted to. And this is built on old technology”. And forget the fact that that was maybe the bread winning system or it lets you do what you wanted to do. And there is something to be said for a system that’s lasted a while because incrementally every month, every year we’ve made improvements that is hard to get right by just switching to a rewrite and like, “Hey, let me do this thing again and expect it to be as stable or be as easily understandable as the old system that’s been around for so long”.

Michael Stiefel: Somebody, I forget who it was, maybe it was David Chappell, I forget, said that “The definition of a legacy system is a system that’s making money now”.

Rashmi Venugopal: Yes, yes, yes.

Michael Stiefel: Which captures exactly what you’ve said, and I think it’s very important because yes, it’s true that some systems do live beyond their useful life, but people are still paying for them. They’re paying your salaries and they’re giving you the opportunity to do the next thing.

The Architect’s Questionnaire [36:16]

One of my favorite parts of the podcast is to humanize the architects that I have on, and get a little more insight into what it means to be an architect.

What is your favorite part of being an architect?

Rashmi Venugopal: I will say though that there is never a dull moment at work. I like the way my role challenges me and keeps me on my toes. And a big part of this is the variety that comes with the staff role at Netflix. The aspect of being an architect is definitely one facet of it, but it also includes other responsibilities like captaining a large cross-functional project, like a tech lead for example. And I like the ability to switch focus every once in a while and have that breadth of avenues to hone my skills against.

Michael Stiefel: What is your least favorite part of being an architect?

Rashmi Venugopal: Oh, I think increasingly we’ve grown to expect, maybe I’m speaking for myself here, I’ll say I have grown to expect fast feedback loops for effort and reward and instant gratification, which is what we have with technology now. But that kind of instant gratification is hard to come by in an architect or a staff role because the feedback loop, if you think about it, the feedback loop for effort to then seeing the impact of your effort come to life only goes up as you move up the ladder. So the feedback loop has gone from being days when you are discovering a bug and putting a fix out to months when you are leading a project or to quarters and years in the staff role. So it takes the time that it takes for your impact to be visible, and it requires a level of patience that I currently do not have, but I’m working towards building that muscle.

Michael Stiefel: Patience is something you can learn, but it takes a lot. It took me a lot of time for me to learn patience because there’s something intoxicating about programming a computer because it works or it doesn’t work. The algorithm, even if you take some time to get it, you get that instant feedback or gratification that it works or doesn’t work. And with being an architect, even the negative feedback that you want, so you learn and sometimes it doesn’t get there in time for you to stop the mistake.

Rashmi Venugopal: Yes. Oh my god. Yes. This resonates with me and Michael. It’s encouraging to know that I have a future in which I will be able to get the level of patience I need.

Michael Stiefel: Yes, yes. Is there anything creatively, spiritually, or emotionally compelling about architecture or being an architect?

Rashmi Venugopal: I think the role requires you to not just focus on your contributions at an individual level, but also think about the influence and impact that you can have by enhancing the productivity and effectiveness of those around you. So your radius of impact then goes beyond your own work as you empower others to achieve more. We call that the multiplier effect internally. So that’s something that I find compelling about the role.

Michael Stiefel: What turns you off about architecture or being an architect?

Rashmi Venugopal: When something is obviously flawed to me, but is not as obvious to others. I find that to be a frustrating situation to be in sometimes. Because I’m like, here I am spending time on trying to convey the problem where this time could have been spent towards solutioning the problem. And I think that’s primarily because I have a bias for action, which has served me well in the past. There’s no denying that, but I feel like right now I find that getting in the way sometimes. So I’m working on changing my perspective and telling myself sometimes that the way I add value is different from how it was in the past.

Michael Stiefel: Yes, I certainly feel that way, especially the further you get from actually touching code. You just have to accept the fact that somebody who has not been down the road you’ve been down, sometimes just has to learn from their own experience.

Rashmi Venugopal: Yes.

Michael Stiefel: Do you have any favorite technologies?

Rashmi Venugopal: It’s just asking if you have a favorite child, Michael. No jokes aside though. I view technology as a means to an end. And we spoke about this earlier too, it’s important to pick the right tool set to solve your problem. So sometimes I feel like being attached to a technology doesn’t serve me well because then I have a bias that keeps me from picking something that might be right for the problem or the scenario that we’re trying to solve. Though. If your question was reversed, I did have an answer if you did ask me, do I have a technology that I dislike?

Michael Stiefel: Well two questions from now I’ll ask you that or maybe I’ll ask it now. What technologies do you hate?

Rashmi Venugopal: Just to have an answer only because of a bad experience in the past. So I actually dislike Scala. I think it’s a great language if you’re trying to do data processing or data oriented things. But I think we… Back when I was at Uber, my tech lead at the time made the decision that we were going to write a data serving app in Scala, and the reason was, “Hey, we’ll have succinct code”. And that was true. We did have, our classes were much smaller than they would have been in say, Java, even Python. But it was so unreadable. It’s like a single line that would do 10 lines worth of stuff in Python or Java.

And added to the fact that at Uber while Go, Java and Python were considered first class programming languages that had support. Scala wasn’t one of them. So we had to solve some of our deploy challenges and operational challenges ourselves that made it even worse. So ever since then, I have had a bias against Scala.

Michael Stiefel: But you also raise an interesting question because I was always, when I wrote code, thought of code as expository literature, which should be clear that one step comes after another and it’s you’re making a technical challenge. In fact, Knuth wrote a very famous essay, I know people are familiar with the computer scientist Knuth who invented TeX, and he wrote an essay on literate programming. It’s a very famous essay, you really should read it. It talks about the importance of code clarity because the thing you have to realize is code is more often read than it’s written.

Rashmi Venugopal: Oh, yes.

Michael Stiefel: Because you write code, you forget about it, but many other people will look at your code and try to figure out what you did. So if you’re writing concise code that no one else can understand, I don’t think you’re doing the maintenance program –

Rashmi Venugopal: Any favors.

Michael Stiefel: … which also leads to one of my dislikes of people who write comments, but the comments don’t evolve as the code changes. I have been misled more than once in my career by reading comments, thinking this is what the code does, and then when I actually read the code and figure out what’s going on, no, that’s not what the code does.

Rashmi Venugopal: And this is assuming documentation exists in the first place.

Michael Stiefel: Yes. Of which is one of the reasons why you want to write readable code, because very often the code is the best documentation.

Rashmi Venugopal: True. And I feel like the other thing that I’ve heard, and I think that’s true, is that code lives longer than you think it’s going to live for when you’re writing it.

Michael Stiefel: That was a saying that was around when I was doing programming, but it just does. Sometimes it lives longer than you at the company.

Rashmi Venugopal: Oh, totally. There are a lot of times when I look up who to blame and I’m like, who is this person?

Michael Stiefel: Yes, yes, yes, yes. Sometimes you don’t always have the nicest feelings about that person.

Rashmi Venugopal: And then that leaves me wondering what people are going to think about me after I leave.

Michael Stiefel: What about architecture do you love?

Rashmi Venugopal: I definitely enjoy the problem solving aspect, which is both challenging and rewarding at the same time. I also like the thousand-foot view that you sometimes are forced to take to then understand the big picture, put all the pieces together and peel back the layers of the onion one by one to then gather the context that you need to go back and think about how to solve a problem.

Michael Stiefel: What about architecture do you hate?

Rashmi Venugopal: This is easy, context switching. So there’s like two types of context switching. One is switching between topics. Sometimes you’re going from this fire to this other fire and sometimes you’re like, as you said in the elevator example of going from the boardroom to the boiler room where you’re zooming in and zooming out pretty constantly. And it takes me some time to reorient myself. So I end up dedicating a day to this and then the next day to that versus if I try to switch between multiple topics on a single day, then I find that I am very ineffective.

Michael Stiefel: I’ll tell you a little secret. As somebody who’s been in this business for a while, it gets harder as you get older.

Rashmi Venugopal: Oh, wow. I’m glad I have heads up so I know what to expect.

Michael Stiefel: What profession, other than being an architect would you like to attempt?

Rashmi Venugopal: This one is interesting because when I think of a profession, I’m like, “Oh, it has to be something I enjoy”. And then I think about, okay, what do I enjoy? I really enjoy dancing, specifically Indian classical dancing, but I’ve never pursued it professionally before. So maybe that will be my answer, but I’d also be wary of the whole “losing your hobby by turning it into a profession” thing. I hope that doesn’t happen to me.

Michael Stiefel: Do you ever see yourself not being an architect anymore?

Rashmi Venugopal: In the short term? No. But in the long term, yes. I don’t think I want to retire in my current role. And with GenAI, it does make you think it’s like can human architects and staff engineers be replaced with a GenAI product? TBD you know it remains to be seen.

Michael Stiefel: I wouldn’t worry about it too much – for your generation – maybe the next generation. But I think first of all, we don’t really understand how GenAI actually works.

Rashmi Venugopal: True.

Michael Stiefel: And as you well know, the GenAI architect will utter truth and stupidity with the same degree of competence.

Rashmi Venugopal: True, true.

Michael Stiefel: And if you look at most of the successes in artificial intelligence, and I hate using the term artificial intelligence, is really in focused applications of machine learning where you have good data and qualified data, and that’s where the successes are, and people tend to extrapolate that to GenAI, which I think is false.

Rashmi Venugopal: Fair.

Michael Stiefel: So I think for the next 10, 15 years, maybe I’m wrong, I don’t think you have to worry about it. Maybe beyond that, as people come to grips with the problems of LLMs? In fact, I was working with ChatGPT this morning and I still found that I had to really revise my prompts over and over again. Because there are things that would have been obvious to you in having a conversation between the two of us, I had to really still explicitly tell the LLM.

Rashmi Venugopal: That’s fair. And maybe this is just a sign of me overestimating the impact of GenAI in the short term, but I know that humans also have a tendency to underestimate impact in the long term.

Michael Stiefel: Correct, correct. Absolutely. I mean, there are some people who will have some good ideas on how to proceed in this area, but really I think we’re slowly reaching a dead end with pure LLMs, and we’re going to need some other trick to get to where we need to be. Because these models don’t understand the real world very well. I mean, think of it this way, that was the third thing I said about when I did software consulting is get your clients to tell you what you want. There’s so much ambiguity that you have to tease out with requirements, or even doing algorithms that LLMs just don’t understand now.

Rashmi Venugopal: I hear you.

Michael Stiefel: Yes.

Rashmi Venugopal: Encouraging.

Michael Stiefel: Yes, and the final question I like to ask is, when a project is done, what do you like to hear from the clients or your team?

Rashmi Venugopal: This is a great question. It’s of course, basically all the usual stuff is like well executed, well done. But what I would really want is for our clients to walk away with the feeling that they trust the processes that were behind the successful execution of a project. Starting from, “Hey, this way of gathering requirements and validating the needs of the users worked well, let’s use this”.

The fact that we did a pre-mortem to understand the risks that was valuable, let’s keep doing that the way we identified milestones and spread out the initiative that process was effective and how we tested and added observability and rolled out and added all the safety features. I would want the clients to walk away with the feeling that they trust that the success is replicable and not just focus on the fact that, “Hey, this one initiative was successful”. So that then they keep coming back and then you build that long-term trust with them.

Michael Stiefel: I really enjoyed doing this podcast very much. You’re very engaging and also very honest as we talked about things. I want to thank you very much for willing to be on the podcast.

Rashmi Venugopal: Thank you for having me, Michael.

Mentioned:

.

From this page you also have access to our recorded show notes. They all have clickable links that will take you directly to that part of the audio.

MMS • Michael Redlich

There was a flurry of activity in the Spring ecosystem during the week of June 16th, 2025, highlighting: the first milestone release of Spring Vault 4.0; and point releases of of Spring Boot, Spring Security, Spring Authorization Server, Spring Session, Spring Integration, Spring Modulith, Spring REST Docs, Spring AMQP, Spring for Apache Kafka, Spring for Apache Pulsar and Spring Web Services.

Release trains for numerous Spring projects will also reach the end of OSS support on June 30, 2025.

Spring Boot

Versions 3.5.1, 3.4.7 and 3.3.13 of Spring Boot (announced here, here and here, respectively) all deliver bug fixes, improvements in documentation and dependency upgrades. New features include: the ability to customize instances of the ConfigData.Options class that are set on the ConfigDataEnvironmentContributors class; and an upgrade to Apache Tomcat 10.1.42 which has introduced limits for part count and header size in multipart/form-data requests. These limits can be customized using the server.tomcat.max-part-count and server.tomcat.max-part-header-size properties, respectively.

Versions 3.5.3 and 3.5.2 (announced here and here) were unscheduled releases to address a difficult regression that was inadvertently introduced in version 3.5.1.

More details on these releases may be found in the release notes for version 3.5.3, version 3.5.2, version 3.5.1, version 3.4.7 and version 3.3.13.

Spring Security

Spring Security 6.5.1, 6.4.7 and 6.3.10 all deliver bug fixes, dependency upgrades and new features such as: a new migration guide that describes the transition from the now defunct Spring Security SAML Extension to built-in support for SAML 2.0; and support for the AsciiDoc include-code extension. Further details on these releases may be found in the release notes for version 6.5.1, version 6.4.7 and version 6.3.10.

Spring Authorization Server

The release of Spring Authorization Server 1.5.1, 1.4.4 and 1.3.7 all ship with bug fixes, dependency upgrades and a new feature that improves logging from the doFilterInternal() method, defined in the OAuth2ClientAuthenticationFilter class, to report on issues with client credentials. More details on these releases may be found in the release notes for version 1.5.1, version 1.4.4 and version 1.3.7.

Spring Session

Spring Session 3.5.1 and 3.4.4 provide dependency upgrades and a resolution to a ClassCastException due to a race condition from integration tests that use the Redis SessionEventRegistry class as it assumes there is only one event type for each session ID. Further details on these releases may be found in the release notes for version 3.5.1 and version 3.4.4.

Spring Integration

Spring Integration 6.3.11 ships with dependency upgrades and a resolution to a NullPointerException from the private obtainFolderInstance() method, defined in the AbstractMailReceiver class, to use the getDefaultFolder() method from the Jakarta Mail Store class if the URL is not provided or null. More details on this release may be found in the release notes.

Spring Modulith

Spring Modulith 1.4.1 and 1.3.7 provide bug fixes, dependency upgrades and improvements: the addition of missing reflection metadata in the JSONPath lookup for application module identifiers when converting to native image with GraalVM; and a resolution to prevent application module misconfiguration from the getModuleForPackage() method, defined in the ApplicationModules class, depending on the order of values stored in an instance of the Java Map interface that may return invalid additional packages. Further details on these releases may be found in the release notes for version 1.4.1 and version 1.3.7.

Spring REST Docs

Spring REST Docs 3.0.4 ships with improvements in documentation and notable changes: support for the Spring Framework 6.2 release train as default version due to the Spring Framework 6.1 release train reaching end of OSS support on June 30, 2025; and a workaround to resolve a breaking change with asciidoctor-maven-plugin 3.1.0 that no longer uses relative paths to build documentation. More details on this release may be found in the release notes.

Spring AMQP

Spring AMQP 3.1.12 provides dependency upgrades and resolutions to issue such as: removal of the cancelled() method from the logic within the commitIfNecessary() method, defined in the BlockingQueueConsumer class that had been causing anomalies in the shutdown process; and an instance of the default Spring Framework ThreadPoolTaskScheduler class created in the doInitialize() method, defined in the DirectMessageListenerContainer class, does not properly shut down when the container is destroyed. Further details on this release may be found in the release notes.

Spring for Apache Kafka

The release of Spring for Apache Kafka 3.3.7 and 3.2.10 delivers bug fixes, dependency upgrades and one new feature that now propagates the trace context when asynchronously handling Kafka message failures. More details on these releases may be found in the release notes for version 3.3.7 and version 3.2.10.

Spring for Apache Pulsar

Spring for Apache Pulsar 1.2.7 and 1.1.13 ship with improvements in documentation and notable respective dependency upgrades such as: Spring Framework 6.2.8 and 6.1.21; Project Reactor 2024.0.7 and 2023.0.19; and Micrometer 1.14.8 and 1.13.15. Further details on these releases may be found in the release notes for verizon 1.2.7 and version 1.1.3.

Spring Web Services

The release of Spring Web Services 4.0.15 features dependency upgrades and a resolution to the SimpleXsdSchema class that references an instance of the Java Element interface, which is not thread safe, that has caused issues when multiple clients have simultaneously requested the schema file. More details on this release may be found in the release notes.

Spring Vault

The first milestone release of Spring Vault 4.0.0 features: an alignment with Spring Framework 7.0; support for JSpecify for improved null safety; and a new ClientConfiguration class that adds support for Reactor, Jetty and JDK HTTP implementations of the SpringFramework ClientHttpRequestFactory interface. Further details on this release may be found in the release notes.

End of OSS Support

Release trains for all of these Spring projects (plus Spring Framework), with links to their respective timelines, will reach end of OSS support on June 30, 2025:

End of enterprise support for all of these projects will reach end of life on June 30, 2026.

MMS • RSS

Upskilling platform Multiverse has appointed Donn D’Arcy as chief revenue officer to support its continued global expansion.

Donn joins from MongoDB, where he led EMEA operations and helped scale revenue to $700 million.

He brings over a decade of experience in high-growth tech companies, including senior roles at BMC Software.

Donn said: “Enterprise AI adoption won’t happen without fixing the skills gap.

“Multiverse is the critical partner for any company serious about making AI a reality, and its focus on developing people as the most crucial component of the tech stack is what really drew me to the organisation.

“The talent density, and the pathway to hyper growth, means the next chapter here is tremendously exciting.”

Donn’s appointment follows a series of recent hires at Multiverse and supports its mission to accelerate AI adoption through workforce upskilling.

The company now works with more than a quarter of the FTSE 100, alongside NHS trusts and local councils.

Multiverse has doubled its revenue over two years and recently pledged to deliver 15,000 new AI apprenticeships by 2027.

Euan Blair, founder and chief executive of Multiverse, added: “Truly seizing the AI opportunity requires companies to build a bridge between tech and talent – both within Multiverse and for our customers.

“Bringing on a world-class leader like Donn, with his incredible track record at MongoDB, is a critical step in our goal to equip every business with the workforce of tomorrow.”

Looking to promote your product/service to SME businesses in your region?

Find out how Bdaily can help →

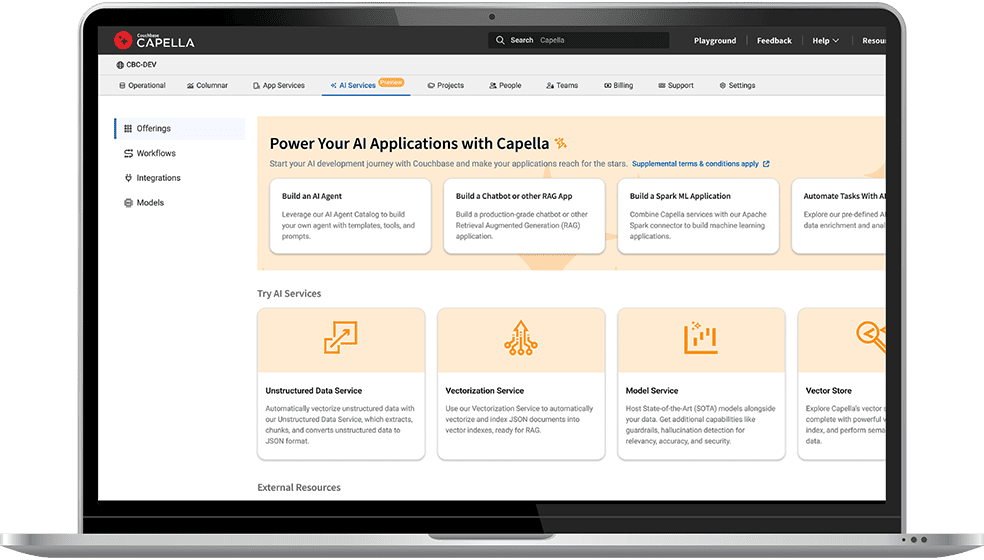

Couchbase enters definitive agreement to be acquired by Haveli for $1.5B – SiliconANGLE

MMS • RSS

NoSQL cloud database platform company Couchbase Inc. has entered a definitive agreement to be acquired by Haveli Investments LP in an all-cash transaction valued at approximately $1.5 billion.

Under the terms of the deal, Couchbase shareholders will receive $24.50 per share in cash, a premium of 67% compared to the company’s closing stock price on March 27 and up 29% compared to Couchbase’s share price on June 18, the last full day of trading before the acquisition announcement.

The proposal does have a go-shop period, a period where Couchbase can solicit other offers; however, that period ends at 11:59 p.m. on Monday, June 23.

Founded in 2011, Couchbase is a distributed NoSQL database company that offers a modern data platform for high-performance, cloud-native applications. The company’s architecture combines JSON document storage with in-memory key-value access to allow enterprises to build scalable and responsive systems across different industries.

Couchbase’s platform offers support for multimodel workloads, including full-text search, real-time analytics, eventing and time-series data, which are all accessible through a SQL-like language called SQL++. The platform is also designed for horizontal scalability, high availability and global data replication, allowing for operations across hybrid and multi-cloud environments.

The company offers both self-managed and fully managed deployments through Couchbase Server and Couchbase Capella, its Database-as-a-Service. It also extends functionality to the edge with Couchbase Mobile, a mobile service that offers offline-first access and synchronization for mobile and IoT applications.

Couchbase is also keen on artificial intelligence, with coding assistance for developers, plus AI services for building applications that include retrieval-augmented generation-powered agents, real-time analytics and cloud-to-edge vector search.

The company has a strong lineup of customers, including Cisco Systems Inc., Comcast Corp., Equifax Inc., General Electric Co., PepsiCo Inc., United Airways Holdings Inc., Walmart Inc. and Verizon Communications Inc.

The acquisition will see Couchbase return to private ownership, after the company went public on the Nasdaq in July 2021. According to Reuters, Haveli Investments already held a 9.6% stake in Couchbase before making its takeover offer.

“The data layer in enterprise IT stacks is continuing to increase in importance as a critical enabler of next-gen AI applications,” said Sumit Pande, senior managing director at Haveli Investments, in an announcement statement. “Couchbase’s innovative data platform is well positioned to meet the performance and scalability demands of the largest global enterprises. We are eager to collaborate with the talented team at Couchbase to further expand its market leadership.”

If another would-be acquirer doesn’t emerge before Tuesday, the deal is still subject to customary closing conditions, including approval by Couchbase’s stockholders and the receipt of required regulatory approvals.

Matt McDonough, senior vice president of product and partners at Couchbase, spoke with theCUBE, SiliconANGLE Media’s livestreaming studio, in March, where he discussed how platforms must not only process vast volumes of real-time data, but also support emerging AI models and applications without compromising performance.

Image: Couchbase

Support our open free content by sharing and engaging with our content and community.

Join theCUBE Alumni Trust Network

Where Technology Leaders Connect, Share Intelligence & Create Opportunities

11.4k+

CUBE Alumni Network

C-level and Technical

Domain Experts

15M+

theCUBE

Viewers

Connect with 11,413+ industry leaders from our network of tech and business leaders forming a unique trusted network effect.

SiliconANGLE Media is a recognized leader in digital media innovation serving innovative audiences and brands, bringing together cutting-edge technology, influential content, strategic insights and real-time audience engagement. As the parent company of SiliconANGLE, theCUBE Network, theCUBE Research, CUBE365, theCUBE AI and theCUBE SuperStudios — such as those established in Silicon Valley and the New York Stock Exchange (NYSE) — SiliconANGLE Media operates at the intersection of media, technology, and AI. .

Founded by tech visionaries John Furrier and Dave Vellante, SiliconANGLE Media has built a powerful ecosystem of industry-leading digital media brands, with a reach of 15+ million elite tech professionals. The company’s new, proprietary theCUBE AI Video cloud is breaking ground in audience interaction, leveraging theCUBEai.com neural network to help technology companies make data-driven decisions and stay at the forefront of industry conversations.

MMS • Sergio De Simone

Anthropic has recently introduced support for connecting to remote MCP servers in Claude Code, allowing developers to integrate external tools and resources without manual local server setup.

The new capability makes it easier for developers to pull context from their existing tools, including security services, project management systems, and knowledge bases. For example, a developer can use the Sentry MCP server to get a list of errors and issues in their project, check whether fixes are available, and apply them using Claude, all within a unified workflow.

Additional integration examples include pulling data from APIs, accessing remote documentation, working with cloud services, collaborating on shared team resources, and more.

Before Claude Code natively supported remote MCP server, developers had to set up a local MCP server to integrate it with their existing toolchain.

Remote MCP servers offer a lower maintenance alternative to local servers: just add the vendor’s URL to Claude Code—no manual setup required. Vendors handle updates, scaling, and availability, so you can focus on building instead of managing server infrastructure.

For authentication, Claude Code supports OAuth 2.0 over HTTP or SSE, allowing developers to authenticate directly through the terminal without needing to provide an API key. For example, here’s how you can connect Claude Code to GitHub MCP:

$ claude mcp add --transport sse github-server https://api.github.com/mcp

>/mcp

The /mcp command, executed within Claude Code, opens an interactive menu that provides the option to authenticate using OAuth. This launches your browser to connect automatically to the OAuth provider. After successful authentication through the browser, Claude Code store the received access token locally.

Several Reddit users commented on Anthropic’s announcement, downplaying its significance and noting that, while convenient, this feature is far from being a game changer.

Others, however, emphasized the importance of Claude Code gaining support for streamable HTTP as an alternative to stdio for connecting to MCP servers.

According to Robert Matsukoa, former head of product engineering at Tripadvisor and currently CTO at Fractional, this is not just a convenient upgrade, but one that “alters the economics of AI tool integration”:

Remote servers eliminate infrastructure costs previously required for local MCP deployments. Teams no longer need to provision servers, manage updates, or handle scaling for MCP services.

However, Matsukoa notes that using MCP servers tipically increases costs by 25-30% due to the larger contexts pulled from external sources and that remote MCPs, by making this task easier, can actually compound those costs. So, careful consideration is required as to where it makes sense:

MCP’s advantages emerge in scenarios requiring deep contextual integration: multi-repository debugging sessions, legacy system analysis requiring historical context, or workflows combining multiple data sources simultaneously. The protocol excels when Claude needs to maintain state across tool interactions or correlate information from disparate systems.

Conversely, for workflows based on CLIs and standard APIs, he sees no need to go the MCP route.

Anthropic published a list of MCP servers developed in collaboration with their respective creators, but a more extensive collection is available on GitHub.

MMS • RSS

Cherry Creek Investment Advisors Inc. raised its position in shares of MongoDB, Inc. (NASDAQ:MDB – Free Report) by 529.0% in the first quarter, according to its most recent disclosure with the Securities and Exchange Commission. The institutional investor owned 8,058 shares of the company’s stock after buying an additional 6,777 shares during the quarter. Cherry Creek Investment Advisors Inc.’s holdings in MongoDB were worth $1,413,000 at the end of the most recent quarter.

Other hedge funds also recently bought and sold shares of the company. HighTower Advisors LLC lifted its stake in MongoDB by 2.0% during the fourth quarter. HighTower Advisors LLC now owns 18,773 shares of the company’s stock valued at $4,371,000 after buying an additional 372 shares in the last quarter. Jones Financial Companies Lllp increased its holdings in shares of MongoDB by 68.0% in the 4th quarter. Jones Financial Companies Lllp now owns 1,020 shares of the company’s stock valued at $237,000 after purchasing an additional 413 shares during the period. Smartleaf Asset Management LLC increased its holdings in shares of MongoDB by 56.8% in the 4th quarter. Smartleaf Asset Management LLC now owns 370 shares of the company’s stock valued at $87,000 after purchasing an additional 134 shares during the period. 111 Capital acquired a new position in shares of MongoDB in the 4th quarter valued at about $390,000. Finally, Park Avenue Securities LLC increased its holdings in shares of MongoDB by 52.6% in the 1st quarter. Park Avenue Securities LLC now owns 2,630 shares of the company’s stock valued at $461,000 after purchasing an additional 907 shares during the period. 89.29% of the stock is owned by hedge funds and other institutional investors.

Insiders Place Their Bets

In other news, Director Hope F. Cochran sold 1,175 shares of the firm’s stock in a transaction on Tuesday, April 1st. The stock was sold at an average price of $174.69, for a total value of $205,260.75. Following the completion of the sale, the director now directly owns 19,333 shares in the company, valued at approximately $3,377,281.77. This trade represents a 5.73% decrease in their ownership of the stock. The sale was disclosed in a filing with the SEC, which is available through this link. Also, insider Cedric Pech sold 1,690 shares of the firm’s stock in a transaction on Wednesday, April 2nd. The shares were sold at an average price of $173.26, for a total value of $292,809.40. Following the completion of the sale, the insider now owns 57,634 shares of the company’s stock, valued at approximately $9,985,666.84. This trade represents a 2.85% decrease in their ownership of the stock. The disclosure for this sale can be found here. Insiders sold 50,382 shares of company stock worth $10,403,807 over the last three months. 3.10% of the stock is currently owned by corporate insiders.

Analyst Ratings Changes

A number of equities analysts have recently weighed in on the company. Royal Bank Of Canada reiterated an “outperform” rating and issued a $320.00 target price on shares of MongoDB in a report on Thursday, June 5th. Guggenheim upped their target price on MongoDB from $235.00 to $260.00 and gave the company a “buy” rating in a report on Thursday, June 5th. DA Davidson reiterated a “buy” rating and issued a $275.00 target price on shares of MongoDB in a report on Thursday, June 5th. Macquarie reiterated a “neutral” rating and issued a $230.00 target price (up previously from $215.00) on shares of MongoDB in a report on Friday, June 6th. Finally, Piper Sandler increased their price objective on MongoDB from $200.00 to $275.00 and gave the stock an “overweight” rating in a report on Thursday, June 5th. Eight investment analysts have rated the stock with a hold rating, twenty-four have given a buy rating and one has assigned a strong buy rating to the company’s stock. According to MarketBeat.com, the stock presently has a consensus rating of “Moderate Buy” and an average target price of $282.47.

Get Our Latest Stock Analysis on MongoDB

MongoDB Stock Down 1.3%