Transcript

Rajput: I come from a hardware background, and we want to optimize. We run benchmarks, and, as you know, benchmarks are of limited use. I always wanted to understand what customers are doing and what their environments are running. At QCon in the 2018 and 2019 timeframe, I found that the number one topic was Java. I think 70% to 80% of attendees were Java enterprise developers solving related problems, and underneath, most of those deployments ran on CPUs. That’s my experience.

Then, suddenly, COVID happened. At the time I was at Intel, and from Intel I moved to AMD. Now I’m back at QCon, and this is what has happened since then: the CPU has become a tiny part of the conversation and everything is about the GPU. The conference is no longer about Java; it’s all about LLMs. I’m trying to change with that. The topic we bring you is not exactly the same: it’s LLMs, and in particular Llama running on the CPU. Hold your GPU questions, because we plan to talk about CPUs.

How many folks are aware of CPU architecture? I wanted to check because many of the optimizations we want to discuss here come down to software-hardware synchronization. When we talk about performance, we hear from many customers who are on-prem or moving to the cloud, and their goals are things like: I want to save 10% to 20% of TCO, or I want to reduce latency. Sure, a lot of that comes from the architecture of the application, but you can get significant performance improvements just from understanding the underlying hardware and leveraging it as well as possible. We want to show you a concrete example of how to be aware of the hardware you will deploy on, and design or architect accordingly. This involves both roles: some decisions are made at deployment time, and others when you’re writing the code or the application.

Hardware Focused Platform Features

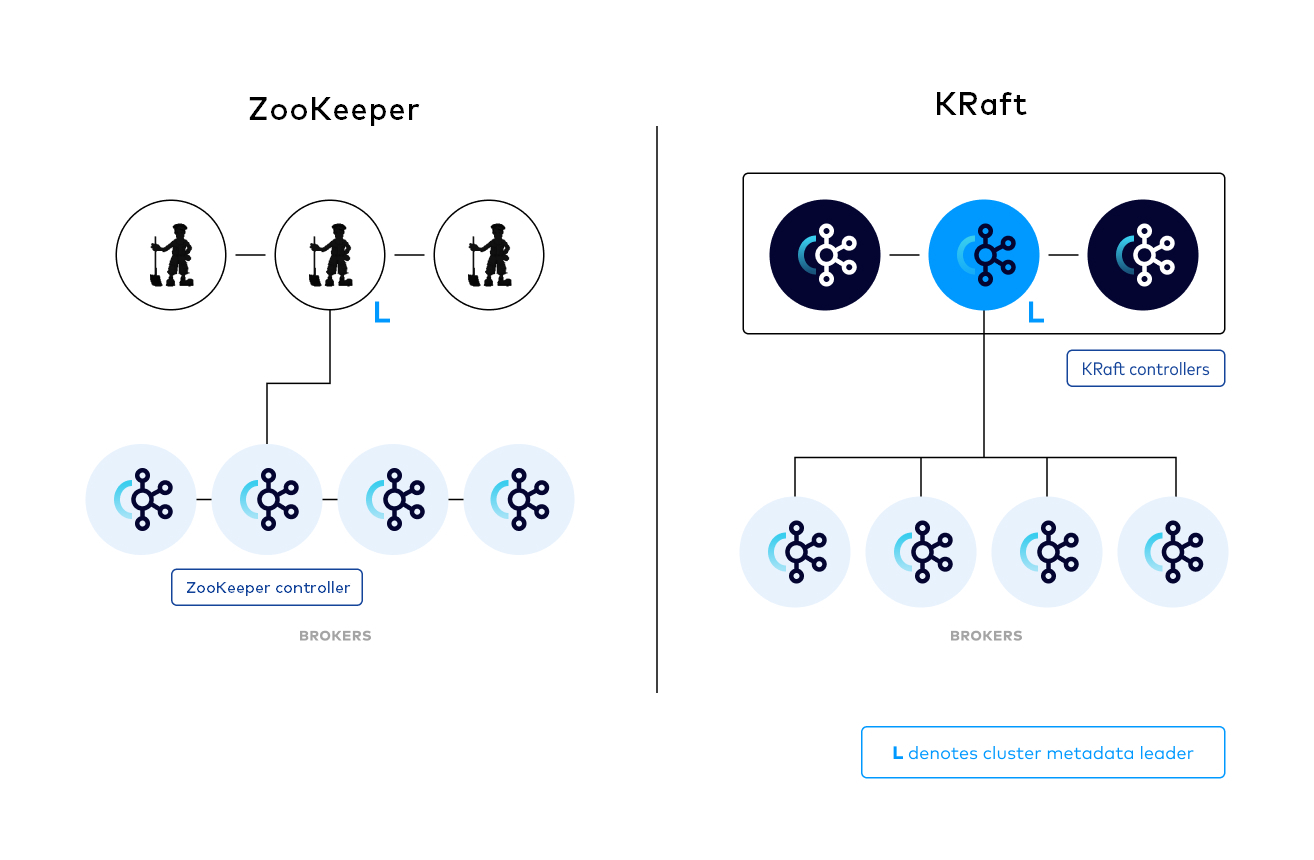

We’re not talking about the GPU or CPU-plus-GPU interactions, because that analysis becomes quite different, depending on how data flows between them. This is mostly about a Llama model, or similar models, deployed on a CPU platform. Let me list a few components, and I’ll talk about each of them: CPUs, cores, simultaneous multithreading (SMT, which Intel calls Hyper-Threading), and the different kinds of caches. On caches in particular, I’ll show later how a chiplet architecture differs from a unified one. That’s a big change in deployments over the last three or four years.

Then there’s memory, and when we talk about memory there are two things: memory capacity and memory bandwidth. Memory latency matters too, but you don’t have to worry about it as much; it’s usually capacity and bandwidth. Let me start with the CPU side, with one CPU and with two CPUs. It’s good to know about this because many platforms have two CPUs, which we call dual socket, and they appear as two NUMA nodes. You want to avoid spreading an application across both CPUs, because that means cross-socket communication. The only time you need that is for something like a large database that needs the memory capacity of both sockets.

Otherwise, you want to keep each process and its memory local. On a 1P (single-socket) platform you don’t need to worry, but on a 2P platform you want to be a little more aware. The I/O side also gets more interesting: it matters which CPU an I/O device is attached to, and it’s usually much better to keep your process on that socket than on the other one. We are not going into I/O here. If you work with disks or network cards, they are usually attached to one of the sockets, and how that processing happens is a separate talk. Just be aware of whether the platform you’re using is 1P or 2P.

Let me go into a little more detail. In a CPU you typically see a lot of cores. Each core has its own L1 and L2 caches, and the L3 cache sits underneath. Then, of course, you have memory and I/O or NIC cards. That’s a typical design. Within a core you have the SMT threads, the L1 cache, and the L2 cache, and of these, the SMT thread is the only thing you need to think about. Do you need to worry about it?

Only to the extent that it gives you twice the number of threads. When you size a thread pool on the application side, say for an N-core system with SMT on, make sure your thread pool and settings are not hardcoded: check how many vCPUs are available and set them accordingly. I have seen this kind of mistake; we found, even in MongoDB and similar software, that the core count was hardcoded to 32 or 16. When you deploy on a scale-up model, it suddenly doesn’t scale, and then you find that the programmer hardcoded it.
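As a minimal sketch of that advice, assuming a Python service on Linux, the pool size can be derived from the CPUs the process is actually allowed to use instead of a hardcoded 16 or 32. The helper names `available_cpus` and `thread_pool_size` are hypothetical; `os.sched_getaffinity` is Linux-only, so the sketch falls back to `os.cpu_count()` elsewhere.

```python
import os
from concurrent.futures import ThreadPoolExecutor
from typing import Optional


def available_cpus() -> int:
    """Logical CPUs (vCPUs, including SMT siblings) this process may run on."""
    if hasattr(os, "sched_getaffinity"):
        # Linux: honors taskset/numactl/cpuset restrictions on this process.
        return len(os.sched_getaffinity(0))
    # Other platforms: total logical CPUs reported by the OS.
    return os.cpu_count() or 1


def thread_pool_size(per_cpu: int = 1, cap: Optional[int] = None) -> int:
    """Pool size derived from the visible CPUs rather than a hardcoded constant."""
    n = available_cpus() * per_cpu
    return min(n, cap) if cap is not None else n


if __name__ == "__main__":
    with ThreadPoolExecutor(max_workers=thread_pool_size()) as pool:
        print(sum(pool.map(lambda x: x * x, range(10))))
```

Affinity reflects cpuset restrictions but not CPU quotas, so a container limited by quota rather than by cpuset may still need an explicit cap.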

Now, since I mentioned chiplet architecture, let me show you the difference between a unified L3 and a chiplet architecture. Most Intel Xeon systems have a unified L3; GNR (Granite Rapids), coming next, will be Intel’s first chiplet-type design. In everything before it, all the cores in a socket share the same L3. On the chiplet side, we create groups of cores, each associated with its own L3. That is the chiplet architecture. It has many benefits at the hardware level, such as yield, but it also has a benefit on the software side. Let me show you what it is; I like to show it this way for a little more clarity. Because each L3 is associated with one chiplet, no single chiplet can consume the whole memory bandwidth. With a unified L3, a noisy application running on a few cores could consume all of the memory bandwidth, leaving little for the other programs, and latency suddenly degrades when the noisy neighbor comes in.

So one benefit of the chiplet architecture is that a single chiplet cannot consume the full memory bandwidth. For example, if a platform has 400 GB/s of memory bandwidth, one chiplet can’t do more than 40 to 60 GB/s. Number one, that protects you from the noisy-neighbor scenario. Number two, there is a BIOS setting called NPS, NUMA nodes Per Socket, which you can set up to four. With NPS4, the memory channels are divided into four groups. Most cloud providers run with NPS1 because they don’t want to manage memory scheduling. On-prem, you can create four clean NUMA nodes, each with its own share of the memory bandwidth.
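To check how NPS or a dual-socket layout appears to software, here is a sketch, assuming Linux, that reads the standard sysfs NUMA topology (`/sys/devices/system/node/nodeN/cpulist`). With NPS1 on a 2P system you would expect two nodes; with NPS4, four per socket. The function names are hypothetical.

```python
import glob
import os
import re
from typing import Dict, List


def parse_cpulist(text: str) -> List[int]:
    """Expand a Linux cpulist string such as '0-3,8,10-11' into CPU ids."""
    cpus: List[int] = []
    for part in text.strip().split(","):
        if not part:
            continue  # memory-only nodes have an empty cpulist
        if "-" in part:
            lo, hi = part.split("-")
            cpus.extend(range(int(lo), int(hi) + 1))
        else:
            cpus.append(int(part))
    return cpus


def numa_nodes(sysfs: str = "/sys/devices/system/node") -> Dict[int, List[int]]:
    """Map each NUMA node id to its CPUs; empty dict if sysfs is unavailable."""
    nodes: Dict[int, List[int]] = {}
    for path in glob.glob(os.path.join(sysfs, "node[0-9]*")):
        node_id = int(re.search(r"node(\d+)$", path).group(1))
        with open(os.path.join(path, "cpulist")) as f:
            nodes[node_id] = parse_cpulist(f.read())
    return dict(sorted(nodes.items()))


if __name__ == "__main__":
    for node, cpus in numa_nodes().items():
        print(f"node {node}: {len(cpus)} CPUs")
```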

In this scenario, say you deploy one application on two chiplets, so two L3s, and another application in another NUMA node. They don’t collide on the same memory bandwidth from the channels, and each application gets its own L3. You get consistent performance, because the applications aren’t consuming each other’s memory or memory bandwidth or clashing in the L3. Those are the benefits. A unified L3 does have one advantage: if applications need to exchange data through the L3, that is faster. Other than that, the benefits of the chiplet architecture outweigh it. That’s why you will see GNR following a very similar chiplet path. As caches grow and core counts increase, you cannot keep everything in one unified L3; it becomes an architectural limitation once you have a huge number of cores.

Focus: Software and Synchronization (AI Landscape and LLMs)

Rema will talk about LLMs, and Llama specifically: what role SMT (simultaneous multithreading) plays and what you need to think about to leverage it best. Likewise for cores: as core counts increase, how do you leverage them and what do you need to be aware of? Caches play a very important role, and from EDA tools and other areas I can tell you that you could get a 20% to 30% improvement. When you partition your problem, in the LLM space as elsewhere, the difference between fitting in the cache and not fitting is not 4% or 5%; it’s 20% to 30%. For a particular architecture, especially for high-frequency trading or other response-time- or throughput-sensitive work, profiling tools can show you whether your data fits in the cache, how much is missing, and what you need to adjust. Those architecture-specific optimizations are very important.
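A first step toward “does it fit?” is knowing the L3 size a core actually sees. This sketch, assuming Linux sysfs (`/sys/devices/system/cpu/cpuN/cache/indexM/`), finds the level-3 entry and compares it with a working-set size. On a chiplet part this is the L3 of the chiplet that CPU belongs to, not the whole socket. The 80% headroom is a hypothetical rule of thumb, and the function names are illustrative.

```python
import glob
import os
from typing import Optional


def parse_cache_size(text: str) -> int:
    """Convert a sysfs cache size such as '32768K' into bytes."""
    text = text.strip().upper()
    units = {"K": 1024, "M": 1024 ** 2, "G": 1024 ** 3}
    if text and text[-1] in units:
        return int(text[:-1]) * units[text[-1]]
    return int(text)


def l3_size_bytes(cpu: int = 0) -> Optional[int]:
    """Size of the L3 seen by `cpu`, or None if sysfs doesn't report one."""
    pattern = f"/sys/devices/system/cpu/cpu{cpu}/cache/index*"
    for index_dir in sorted(glob.glob(pattern)):
        with open(os.path.join(index_dir, "level")) as f:
            if f.read().strip() != "3":
                continue
        with open(os.path.join(index_dir, "size")) as f:
            return parse_cache_size(f.read())
    return None


def fits_in_cache(working_set_bytes: int, cache_bytes: int, headroom: float = 0.8) -> bool:
    """Hypothetical rule of thumb: leave ~20% of the cache for everything else."""
    return working_set_bytes <= cache_bytes * headroom
```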

Then there’s memory capacity and bandwidth. Rema will show you when LLMs are compute bound and when they are memory-bandwidth bound, what decisions you can make, and how memory capacity comes into it. I wanted to give you these details first because Rema will use them extensively in her talk: this is my analysis, I’m memory-bandwidth bound here, I’m trying to fit in the cache or the chiplet, and so on. To put it in perspective, if you look at the bigger picture, LLMs are a tiny and very complex piece. I’m sure you all know where LLMs and ChatGPT fit, and also that this area is changing exponentially over time. We are in exciting times.

Llama

Hariharan: Let’s talk Llama. I’m excited to be talking about this Llama, sitting on top of the Andes. Let’s talk about what we are all interested in. Why Llama in the first place? Why not other models? There are so many GPT models we are all aware of. One of the main reasons we use Llama for benchmarking and workload analysis is that it is a small model, which matters particularly when running on a CPU. It comes in multiple sizes, but small variants are available.

Relative to other GPT models, it’s one of the smaller ones. Not only that: Llama was trained on publicly available data, and its weights are openly released. That protects us in whatever usage we have. A lot of our customers like to train it further for their own domains, and because it was trained on publicly available data, that keeps them more protected; there’s less to worry about in terms of lawsuits. So: it’s a small model, it’s open, and it can be trained.

Let’s look at Llama in action. How does it actually work? There are two phases: the prefill phase and the decoding phase. In the prefill phase, the model is loaded along with your input, which in this case is what you typed, “Computer science is”, and the model predicts the next word, or token. The whole model works on everything you have typed. Here it’s just three words, but you could be sending a whole book as input. The model processes all of the data you submitted and then produces the very first token. Strictly, the output is a probability distribution over tokens, but without loss of generality, let’s say one token comes out. This portion of the work is extremely compute intensive. That’s the prefill phase, the first phase.

The second phase is the decoding phase. The model takes the previous token, plus the KV cache and whatever else was built so far, and produces the next token, then the next, and so on, until it reaches the end of the sentence. In this phase you are effectively loading the model over and over again, once per token. I said the model is small, but it’s still not small enough to fit in our caches, so you have to pull the weights from memory, a portion at a time, every time. That involves a lot of memory traffic, so the decoding phase is highly memory-bandwidth intensive.
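A back-of-the-envelope way to see why decoding is bandwidth bound: if every decode step streams all the weights from memory once, the step rate cannot exceed bandwidth divided by model size. This sketch assumes weight traffic dominates (it ignores KV-cache reads and any cache hits), and the 400 GB/s figure is an illustrative bandwidth, not a measurement.

```python
def decode_tokens_per_sec_bound(params: float, bytes_per_param: float,
                                mem_bw_gb_s: float, batch: int = 1) -> float:
    """Upper bound on decode throughput when weight streaming dominates.

    Each decode step reads all weights once and yields one token per sequence
    in the batch, so larger batches amortize the same weight traffic.
    """
    bytes_per_step = params * bytes_per_param
    steps_per_sec = mem_bw_gb_s * 1e9 / bytes_per_step
    return steps_per_sec * batch


# 8B parameters at 2 bytes each over 400 GB/s: about 25 steps (tokens) per second.
print(decode_tokens_per_sec_bound(8e9, 2, 400))
```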

Basically, the prefill phase covers tokenization, embedding, encoding, and so on. In the decode phase you iterate through the model over and over again, either deterministically or probabilistically. As I said, each step produces a probability distribution over a set of tokens. You can set configuration parameters to be greedy, picking the most probable token and moving forward. That’s the fast way to do it, but there are other ways, such as sampling. Without loss of generality, let’s say these are the two stages and we take one token at a time.
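To make the greedy versus probabilistic choice concrete, here is a minimal sketch of picking the next token from a vector of logits. The logits are made-up numbers, and `temperature` is the common sampling knob, used here as an assumption rather than as any specific framework’s parameter.

```python
import math
import random
from typing import List, Optional


def softmax(logits: List[float]) -> List[float]:
    """Turn raw scores into a probability distribution (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def greedy_pick(logits: List[float]) -> int:
    """Deterministic: always take the most probable token."""
    return max(range(len(logits)), key=lambda i: logits[i])


def sample_pick(logits: List[float], temperature: float = 1.0,
                rng: Optional[random.Random] = None) -> int:
    """Probabilistic: draw a token; lower temperature sharpens toward greedy."""
    rng = rng or random.Random()
    probs = softmax([x / temperature for x in logits])
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]


logits = [0.1, 2.0, 0.5]  # hypothetical scores for a 3-token vocabulary
print(greedy_pick(logits))  # always 1
print(sample_pick(logits, temperature=1.0, rng=random.Random(42)))  # 0, 1, or 2
```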

Now let’s look at Llama’s internals. Think of driving a car: to make sure your car runs well, you need a good engine, a good transmission, and so on. Here, when Llama runs, there is a lot of matrix multiplication; that’s one of the key operations. Dot products are another. Then there’s scaling and softmax computation, weighted sums, and, last but not least, passing through multiple layers and aggregating everything. These are the primitives that make up Llama’s internals.
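These primitives come together in attention. The following is a minimal, pure-Python sketch of scaled dot-product attention for a single head, showing the dot products, scaling, softmax, and weighted sum. It deliberately omits what a real Llama layer adds, such as causal masking, rotary position embeddings, multiple heads, and optimized BLAS kernels.

```python
import math
from typing import List

Matrix = List[List[float]]


def dot(a: List[float], b: List[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(row: List[float]) -> List[float]:
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """softmax(Q K^T / sqrt(d)) V for one head, no masking."""
    d = len(q[0])
    out = []
    for qi in q:
        # Dot product of this query with every key, scaled by sqrt(d).
        scores = [dot(qi, kj) / math.sqrt(d) for kj in k]
        weights = softmax(scores)
        # Weighted sum of the value rows.
        out.append([sum(w * vj[c] for w, vj in zip(weights, v))
                    for c in range(len(v[0]))])
    return out


q = [[1.0, 0.0]]
k = [[1.0, 0.0], [0.0, 1.0]]
v = [[1.0, 2.0], [3.0, 4.0]]
print(attention(q, k, v))  # the query favors the first key, so output leans toward [1, 2]
```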

To get the best performance, we need to optimize these primitives. General optimization happens through the BLAS and MKL libraries that have already been written, but we also need to optimize for a given hardware platform. Some optimizations are common; others are specific to a particular hardware. So we need to be aware of the latest libraries that optimize these primitives for the hardware we are running on.

Next, let’s talk about metrics. Metrics come from the user: the user decides what matters to them. I’m showing pretty much the same diagram as before, placed slightly differently. In any LLM, not just Llama, you give the input. If you’ve typed into ChatGPT, which all of us do, you wait for a while, especially if the input is long. The cursor goes blink, blink, blink, and I don’t know whether my words are lost in the ether or what happened. That’s when the prefill phase is working on it. At the end of it comes the first token: something is happening, and I’m happy.

After that, all the other tokens follow. When the output is large, you can see more arriving as you read; it doesn’t all appear in one shot. A token is not exactly a word, but tokens are usually converted to words; I won’t go into the details here. So what are the metrics? The time from when I gave my input to when something started appearing on my screen is the time to first token (TTFT). Then the remaining tokens appear until the end, and the total latency is very important: I care whether I got my entire response. Throughput is essentially the inverse: for a single request, one divided by the latency. Throughput is super important for all of us. We are willing to wait a little longer if we’re asking it to write an essay, but if I’m just asking a yes-or-no question, it had better be quick.
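Here is a minimal sketch of how these metrics could be computed from client-side timestamps, assuming you log when the prompt was sent and when each token arrived. The class and function names are hypothetical, and the timestamps in the example are invented for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class RequestTiming:
    submit_s: float  # when the prompt was sent
    token_times_s: List[float] = field(default_factory=list)  # arrival time of each output token

    def ttft(self) -> float:
        """Time to first token: dominated by the prefill phase."""
        return self.token_times_s[0] - self.submit_s

    def latency(self) -> float:
        """Time until the last token of the response arrived."""
        return self.token_times_s[-1] - self.submit_s

    def tokens_per_sec(self) -> float:
        """Per-request throughput: output tokens over total latency."""
        return len(self.token_times_s) / self.latency()


def system_tokens_per_sec(requests: List[RequestTiming]) -> float:
    """Aggregate throughput across concurrent requests, as the operator sees it."""
    start = min(r.submit_s for r in requests)
    end = max(r.token_times_s[-1] for r in requests)
    return sum(len(r.token_times_s) for r in requests) / (end - start)


r = RequestTiming(0.0, [0.5, 0.6, 0.7, 0.8, 0.9])
print(r.ttft(), r.latency(), r.tokens_per_sec())
```

The per-request view is what the user feels; the aggregate view reflects how well the system as a whole is being used.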

Basically, throughput is the real measure of performance: it reflects how well the system is being used. TTFT is also super important. It results from the compute-intensive prefill phase, and it can often be reduced quite a bit with specialized hardware; for example, AMX definitely helps reduce TTFT, and so do GPUs. Throughput, on the other hand, is mostly governed by memory bandwidth, because the model is loaded over and over again. The longer the output, and the more tokens are pumped through, the more memory bandwidth controls throughput.

Deployment Models – How Are Llamas Deployed?

Now let’s talk about deployment models. I think it’s important to show where CPUs fit, whether you’re using GPUs or just CPUs. Smaller models in particular can run very well on CPUs alone. Even when you’re running on GPUs, a CPU is involved, connected to the GPUs; that setup typically suits larger models and allows for mixed precision, better parallelism, and so on. With GPUs you also see deployments built from a network of GPUs, where how the GPUs are connected matters: they need fast connections such as NVLink or InfiniBand between the GPUs, and between the CPU and the GPUs as well.

Typically, the CPU connects to a GPU through PCIe. It can get even more complex: not just a network of GPUs, but different kinds of input, such as audio and video, processed differently and fed into the model, with layers handled by different sets of GPUs. You can make this as complex as you want. We won’t get into those complexities; life is difficult enough even in the simple case, so let’s stick to it.

Llama Parameters

Let’s get into the details. First, let’s get familiar with some jargon. It’s not really jargon, we’re all familiar with it, but let’s get it on the board. The three main parameters we’ll talk about are input tokens, output tokens, and batch size. You’ll hear these in any benchmark publication or workload description, not just for Llama but for LLMs in general. Input tokens are what you type on the screen: the input you provide, whether a paragraph or a quick set of prompts. Output tokens are what the model produces. Again, tokens are not the words you see, but they are related to them. Batch size can go from one up to whatever you want. What does that look like? If you give one prompt at a time, you wait for the response and then give the next prompt. You say, tell me a story, and Llama tells you a story.

Then, tell me a scary story, and it tells you another one, and so on. You can also give multiple prompts at the same time, as in the batch size of 4 example on the slide, and they are all processed together. Some prompts may finish earlier than others, so the parallelism doesn’t stay the same throughout; people are working on things like dynamic batching for that. We won’t get into those details here. We’ll keep it simple: with a batch size of 4, four prompts go in, a batch of four outputs comes out, then the next four, and so on. What is a Llama instance, the first item on the slide? A Llama instance is the Llama program you’re running. You don’t have to run just one; you can run multiple instances on the same system, and we’ll talk about how that scales. Each instance is an instantiation of the Llama program.

Selecting the Right Software Frameworks

Let’s talk about selecting the right software framework. Everything started with PyTorch, the base framework. It has good community support, but nothing specific to any particular hardware; it’s not optimized, so consider it the baseline. Then came TPP (Tensor Processing Primitives), created by Intel. Much of TPP is optimized for Intel, but even so it works pretty well on AMD, and we get a good gain going from the baseline to TPP.

Then came IPEX (Intel Extension for PyTorch), which was built on top of TPP and incorporates it. Again, it was done by Intel, and it benefits Intel a little more than it benefits AMD. Last but not least is our favorite, ZenDNN. How many of you have used ZenDNN? It was released recently. ZenDNN builds on top of what was already there, and it gives a good performance boost, particularly on AMD hardware. Let’s look at some numbers, since I’ve been saying this is better than that.

Here I’m plotting the various software optimizations, baseline, TPP, IPEX, and finally ZenDNN, with the baseline marked as 1. Going from baseline to TPP is more than a factor of 2. These results are all on our own hardware; there are no competitive benchmarking numbers in this talk. Going from baseline to IPEX, you get nearly 3x. ZenDNN then gives an even bigger boost on top of IPEX. One thing I should say: ZenDNN’s advantage keeps increasing as the batch size increases. Number one, it is optimized to benefit from our higher core counts.

Number two, it takes advantage of our large L3 caches. A lot of code refactoring and optimization went into it, and the benefit grows with batch size. With the two batch sizes I’ve shown, 1 and 16, it starts at a 10% advantage over IPEX and then goes a little higher. I haven’t included those graphs, but the benefit keeps increasing as the batch size increases.

Hardware Features and How They Affect Performance Metrics

Let’s come to the core of this talk. Here are various hardware features, and the question is: how do they affect my performance metrics, and how do we optimize to use them well? Let’s first talk about cores. This graph shows how Llama scales with a single instance of size 16, 32, 64, and 128 cores. That’s a factor of 8 from leftmost to rightmost, but the gain is less than 50%. The software does not scale, for multiple reasons we can get into.
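One way to quantify “the software does not scale” is parallel efficiency: speedup divided by the increase in cores. This sketch plugs in the approximate figures from the talk (under 1.5x from 8x the cores, and roughly 10x to 12x from 16 parallel 16-core instances); the function name is hypothetical.

```python
def scaling_efficiency(base_cores: int, base_perf: float,
                       cores: int, perf: float) -> float:
    """Fraction of ideal linear speedup achieved (1.0 = perfect scaling)."""
    speedup = perf / base_perf
    return speedup / (cores / base_cores)


# One instance grown from 16 to 128 cores, gaining at most 50%:
print(scaling_efficiency(16, 1.0, 128, 1.5))  # 0.1875, i.e. under 19%
# Sixteen 16-core instances delivering ~12x a single instance:
print(scaling_efficiency(16, 1.0, 16 * 16, 12.0))  # 0.75
```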

Basically, the performance you get with size 16 seems mostly good enough. Maybe I should run multiple 16-core instances rather than one large instance. What the previous graph showed was throughput. Throughput doesn’t scale much, and as someone trying to get the most out of the system, throughput is what I’m interested in. The user, though, also cares about TTFT. TTFT does benefit somewhat from a larger instance: going from 16 to 128 cores, it dropped by about 20%. So the parallelism and CPU capacity you throw in do help a little.

Moral of the story: additional cores offer only incremental value, though TTFT also benefits. These two have to be considered together because a customer may have a TTFT requirement: I want my first token to appear within so many milliseconds or seconds. Keep that in mind when asking whether you can make each instance really small and run a whole lot of them. There’s another consideration too: each instance consumes memory, so with too many instances you may not have enough memory. I’ll come to that later.

Next, let’s talk about SMT, simultaneous multithreading. Each physical core runs two hardware threads, a thread and its sibling. Are you going to benefit from using the SMT sibling? Let’s take a look. The blue lines show the performance improvement; I’m plotting only the improvement, no raw numbers. This is a single instance of size 16 with nothing else running on the system. Remember, my CPU has 128 cores; these runs are all on our Turin. I’m using only 16 of the 128 cores, so the background is very quiet. Nobody else is using memory bandwidth, so we are not bandwidth constrained at all.

The only constraint comes from the core itself; it’s CPU bound. There, you get a good boost from the SMT thread: if you run with and without it, you can see the gain. The orange line, on the other hand, shows the boost when you’re running everything, all the 16-core instances together. Then memory bandwidth becomes the constraint, and there’s really no advantage or disadvantage. As you can see, the percentages are in single digits; that’s just statistical variation.

Moral of the story again: SMT doesn’t hurt, even in the fully loaded case, and it gives you a lot of benefit when the background is quiet. That matters because, say you’re running in the cloud, on AWS or similar, you can’t assume everybody else is running Llama. You take an instance of size 16 and run there, and most likely everybody else is quiet or doing very little. You’ll get that benefit, so use SMT there.

This is the most important one, where you’ll see a big difference: memory bandwidth. What role does it play? Our Turin system runs its memory at 6,000 megatransfers per second (MT/s). I clocked it down to 4,800 MT/s, a 20% reduction in bandwidth, and asked how much that would affect overall performance. Remember, the prefill phase is mostly governed by the CPU, and the decoding phase by memory bandwidth. The difference is substantial, and the pattern is the reverse of the SMT case. There are two things plotted here.
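The 20% figure follows from how theoretical peak DRAM bandwidth is computed: channels × 8 bytes per transfer × transfer rate. The sketch assumes 12 DDR5 channels per socket, which is an assumption about the platform rather than a number from the talk, and it gives theoretical peaks; sustained bandwidth is lower.

```python
def peak_bw_gb_s(channels: int, mt_per_s: int, bytes_per_transfer: int = 8) -> float:
    """Theoretical peak bandwidth in GB/s for one socket (64-bit DDR channels)."""
    return channels * bytes_per_transfer * mt_per_s / 1000


fast = peak_bw_gb_s(12, 6000)  # 576.0 GB/s per socket (assumed 12 channels)
slow = peak_bw_gb_s(12, 4800)  # 460.8 GB/s
print(fast, slow, 1 - slow / fast)  # the reduction is 20% regardless of channel count
```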

The first is a single instance, the dark brown one. When I run a single instance, the memory speed doesn’t matter, 6,000 or 4,800; I have plenty of bandwidth for one instance. When I run all the instances, the reduced bandwidth really hits hard and performance suffers badly. The moral of the story: use as much bandwidth as you can get. If the cloud constrains how much bandwidth you can use, it’s worth paying for extra bandwidth if you have to. I know the bandwidth each instance gets can be controlled.

Next, the role of caches. I don’t have a graph, but I can talk through it. Caching is important: we are constrained on memory bandwidth, so anything we can get from cache is better. However, you cannot fit the whole model into cache, so the model gets loaded over and over again. By the nature of this workload, it’s a use-and-throw pattern: you load the weights, use them to compute something, and that’s it; you don’t reuse them. The only way to reuse them is with a higher batch size. If you process 64 sequences together, all 64 use the same weights for their computation.
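One way to see why batch size is the lever for reuse is arithmetic intensity, the FLOPs performed per byte moved. For one weight matrix multiplied by a batch of B activation vectors in 16-bit precision, the weights are read once per step but used B times. The 4096 × 4096 shape below is a hypothetical layer size, and the estimate ignores any caching of activations.

```python
def matmul_intensity(d_in: int, d_out: int, batch: int, bytes_per_elem: int = 2) -> float:
    """FLOPs per byte for y = W x over a batch (one multiply-add = 2 FLOPs)."""
    flops = 2 * d_in * d_out * batch
    weight_bytes = d_in * d_out * bytes_per_elem  # read once per step
    act_bytes = batch * (d_in + d_out) * bytes_per_elem  # inputs read, outputs written
    return flops / (weight_bytes + act_bytes)


for b in (1, 16, 64, 128):
    print(b, round(matmul_intensity(4096, 4096, b), 1))
```

At batch 1 this is about 1 FLOP per byte, so the cores mostly wait on memory; intensity grows almost linearly with B, which is consistent with the large throughput gains the talk reports for higher batch sizes.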

Otherwise, with a batch size of 1, it’s use-and-throw, and you see a very large L3 miss rate. Using a higher batch size is crucial for making good use of the caches. Earlier I talked about scaling up a single instance. Now let’s look at what happens when you change the number of instances: how does performance scale as you add more? Of the two bars here, the blue one is the basis, a single 16-core instance. The orange ones are sixteen 16-core instances running in parallel.

If everything were ideal, the orange bar would be 16 times as tall, but other constraints come into play, and memory bandwidth is a big one. You don’t get 16x; you get roughly 10x to 12x overall, compared with a single instance that has no memory bandwidth constraint. This holds across different scenarios: chat is short input, short output; essay is short input, long output; summary is long input, short output; and translation is long input, long output. In all cases you get at least a 10x improvement over the baseline. So running parallel instances is pretty much the way to go.

I’ve talked about batches a lot and said to use higher batch sizes. What’s the return? Look at throughput: going from batch size 1 to 128, I got more than 128x the performance. The reason is much higher L3 hit rates: I’m getting data from the cache instead of going to memory, which cuts latency and keeps the cores much busier. But nothing is free, and the place it hurts is TTFT. TTFT goes up, which is not good, but it makes sense.

If I’m working on 20 projects at the same time, everybody will complain. All my customers will complain that I’m not giving them a solution, even though I’m working day and night. Still, that’s what matters to us as users of computers: we want our machines busy day and night, delivering maximum throughput. After a month, everybody will have their answer from my 20 projects, but probably not the next day. That’s what we see here: throughput comes at the cost of TTFT, which grows as you run more in parallel.

To recap what I’ve already said: avoid larger instances if you can, and use more instances instead. To harvest performance, use larger batch sizes. And the whole thing is largely a balancing act between TTFT and overall memory needs. Let me get into memory needs right away. TTFT is a requirement a customer will place on you. Memory needs grow as the number of parallel instances increases and as batch sizes increase. There are formulas out there, but fundamentally the memory need comes from three factors. Number one, the model itself: the larger the model, the more you load. With an 8-billion-parameter model, each parameter takes 2 bytes at 16-bit precision, so about 16 GB for the weights. The next thing that adds to memory is activations.

Last but not least is the KV cache, which keeps growing as you process more tokens. The total requirement is the sum of all three. With multiple instances, it multiplies. Let's say I computed my memory need as 41 gigabytes per instance; if I start 32 instances in parallel, that's 41 times 32. I said 32 instances because on AMD systems we typically like to keep each instance on one CCD, or Core Complex Die, which has 8 cores. We have 32 of them, so 8 times 32 cores is our whole system. That comes to about 1.3 terabytes, which is really close to the total memory on the system, 1.5 terabytes. When you have too many instances, this is where you start seeing swapping, and you don't want swapping. We urge you to do this calculation to get a good idea of how to get the maximum out of the system.
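The fit check above (41 GB × 32 instances ≈ 1.3 TB against 1.5 TB installed) can be sketched as a small helper. It assumes you reserve some memory for the OS and framework overhead; the `reserve_gb` parameter and its value are illustrative assumptions, not figures from the talk. The optional `max_by_cores` cap reflects the one-instance-per-CCD placement described above.

```python
import math
from typing import Optional


def max_instances(total_mem_gb: float, per_instance_gb: float,
                  reserve_gb: float = 0.0, max_by_cores: Optional[int] = None) -> int:
    """How many instances fit in memory without swapping.

    reserve_gb: memory held back for the OS, framework overhead, etc. (assumed).
    max_by_cores: optional cap, e.g. one instance per CCD (32 CCDs in the talk).
    """
    usable = total_mem_gb - reserve_gb
    n = math.floor(usable / per_instance_gb) if usable > 0 else 0
    if max_by_cores is not None:
        n = min(n, max_by_cores)
    return n


per_instance = 41.0                     # GB, the talk's example estimate
print(per_instance * 32)                # 1312.0 GB for 32 instances
print(max_instances(1500, per_instance, max_by_cores=32))
print(max_instances(1500, per_instance, reserve_gb=200))
```

Holding back 200 GB in this hypothetical drops the count to 31, which shows how little headroom 32 instances actually leave on a 1.5 TB system.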

I know that was a back-of-the-envelope calculation, and most of the time it comes out slightly under. It depends on which framework you are using. With ZenDNN, it's pretty close in most cases, as you can see. IPEX used even more memory. I have seen this go the other way as well, not for Llama but for some of the other use cases we have run, where ZenDNN takes more memory. I'm not making a statement about either framework here. The point is that this is only a back-of-the-envelope calculation, and you have to look at what your framework is actually using.
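One way to see what the framework is actually using, on Linux, is to read the resident set size (`VmRSS`) and swapped-out memory (`VmSwap`) from `/proc/<pid>/status` for each running instance and compare it with the estimate. This is a minimal sketch under that assumption (Linux `/proc` layout, values reported in kB); it is not the measurement tooling the speakers used, and the example PID is hypothetical.

```python
def parse_status_kb(status_text: str, field: str) -> int:
    """Extract a kB value such as 'VmRSS:\t  123456 kB' from /proc/<pid>/status text."""
    for line in status_text.splitlines():
        if line.startswith(field + ":"):
            return int(line.split()[1])
    raise KeyError(field)


def process_memory_gb(pid: int) -> tuple[float, float]:
    """Return (resident GB, swapped GB) for a process. Linux only."""
    with open(f"/proc/{pid}/status") as f:
        text = f.read()
    rss_kb = parse_status_kb(text, "VmRSS")
    swap_kb = parse_status_kb(text, "VmSwap")
    return rss_kb * 1024 / 1e9, swap_kb * 1024 / 1e9


def estimate_error(measured_gb: float, estimated_gb: float) -> float:
    """Relative error of the back-of-the-envelope estimate; positive means it came out under."""
    return (measured_gb - estimated_gb) / estimated_gb


# Example (hypothetical PID of one inference instance):
# rss, swap = process_memory_gb(12345)
# print(f"RSS {rss:.1f} GB, swap {swap:.1f} GB, error {estimate_error(rss, 41.0):+.0%}")
```

A nonzero `VmSwap` on any instance is a direct sign that the instance count or batch size has pushed past physical memory.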

Whether you are using ZenDNN or IPEX, check how much total memory each instance is going to use. One more thing I want to talk about is free-floating versus dedicated: please pin your instances. What I'm showing here is probably the worst case, and it happened at least once. It doesn't always happen, but when you pin, each instance runs on a different set of cores, and you get the returns proportionately. When you don't pin, there is no telling. They may all run on the same bunch of cores, or they will be context switching back and forth. Either way, you pay the penalty. It's usually not as bad as this worst case, but it can be.
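Pinning one instance per CCD can be done from a launcher script with the standard Linux `taskset -c` utility. This is a minimal sketch that assumes 8 cores per CCD and that each CCD's cores have contiguous logical IDs (0-7, 8-15, and so on); real numbering depends on the machine and SMT settings, so check it (for example with `lscpu -e`) before using these ranges. The `serve.py` command is a hypothetical placeholder for whatever starts one inference instance.

```python
import subprocess

CORES_PER_CCD = 8  # assumption: 8 cores per CCD, as described in the talk


def ccd_core_range(ccd_index: int, cores_per_ccd: int = CORES_PER_CCD) -> str:
    """CPU list for one CCD, assuming contiguous core IDs per CCD."""
    first = ccd_index * cores_per_ccd
    last = first + cores_per_ccd - 1
    return f"{first}-{last}"


def pinned_commands(base_cmd: list[str], n_instances: int,
                    cores_per_ccd: int = CORES_PER_CCD) -> list[list[str]]:
    """Prefix each instance's command with taskset so instance i stays on CCD i."""
    return [["taskset", "-c", ccd_core_range(i, cores_per_ccd), *base_cmd]
            for i in range(n_instances)]


def launch(commands: list[list[str]]) -> list[subprocess.Popen]:
    """Start every pinned instance; callers should wait() on the returned processes."""
    return [subprocess.Popen(cmd) for cmd in commands]


# Hypothetical usage: 32 instances, one per CCD
# procs = launch(pinned_commands(["python", "serve.py"], 32))
```

Keeping each instance inside one CCD also keeps its working set in that CCD's L3 cache, which is the same cache effect that made larger batches pay off.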

Summary

Recommendations for optimization: the initial part of the run (processing the prompt) is core bound, and the second part (generating tokens) is memory bound, so get more memory bandwidth if you can. Parallelism helps. Use the best software available for your hardware; for Zen, we definitely recommend zentorch. These things will evolve over time, so do your due diligence and homework to identify the software that best fits your case. Pin instances as much as possible.

Questions and Answers

Participant 1: How are you capturing these metrics? What specific metrics and tools do you use for observability?

Hariharan: We know how many tokens we are sending. When we run Llama, we know our input and output tokens. The output tokens are the total number of tokens produced by the model, and we know how long the run took. That's what we use to compute the throughput. For TTFT, for any particular input token size, we set the number of output tokens to 1, run it, and measure how long that takes. That is the time the user is simply waiting.
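The measurement method described in this answer can be sketched as two timing helpers. The `generate` callable is a hypothetical stand-in for whatever framework call runs a batch of prompts and returns the generated tokens per prompt; it is not a real library API. Counting the tokens actually returned, rather than assuming `max_new_tokens`, matters because real models can stop early. The injectable `clock` exists only to make the sketch testable.

```python
import time
from typing import Callable, Sequence

# Hypothetical interface: (batch of prompt token lists, max_new_tokens) -> output token lists
Generate = Callable[[Sequence[Sequence[int]], int], Sequence[Sequence[int]]]


def measure_throughput(generate: Generate, prompts: Sequence[Sequence[int]],
                       max_new_tokens: int, clock=time.perf_counter) -> float:
    """Output tokens per second: total generated tokens divided by wall-clock time."""
    start = clock()
    outputs = generate(prompts, max_new_tokens)
    elapsed = clock() - start
    total_out = sum(len(o) for o in outputs)
    return total_out / elapsed


def measure_ttft(generate: Generate, prompts: Sequence[Sequence[int]],
                 clock=time.perf_counter) -> float:
    """Approximate TTFT by timing a run that produces exactly one output token."""
    start = clock()
    generate(prompts, 1)
    return clock() - start
```

Running `measure_ttft` once per input length of interest gives the same TTFT curve the answer describes, while `measure_throughput` with the full output length gives the throughput side of the trade-off.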

Participant 1: How about CPU and other physical metrics, especially on cloud providers? Are you using hardware counters? How are you measuring swapping?

Hariharan: I have not run it on the cloud yet. On bare metal, we have our regular tools to measure utilization, the hardware counters, and everything else. We use our own software and general-purpose software as well.
