Month: January 2023

Critical Control Web Panel Vulnerability Still Under Exploit Months After Patch Available

MMS • Sergio De Simone

A 9.8 severity vulnerability in Control Web Panel, previously known as CentOS Web Panel, allows an attacker to remotely execute arbitrary shell commands through a very simple mechanism. Although a patch has been available for months, security organizations report that the vulnerability is being actively exploited.

The unauthenticated remote code execution vulnerability affecting Control Web Panel (CWP) was discovered by Numan Türle of Gais Cyber Security and patched in version 0.9.8.1147, released on October 25, 2022. The vulnerability remained undisclosed until the beginning of 2023 to ensure CWP users had enough time to patch their systems.

According to Türle, the vulnerability allows an attacker to run arbitrary Bash commands by sending a maliciously crafted payload to the login endpoint. For example, you could send an HTTP POST request including the string $(whoami) in a URL query parameter to have the Linux shell command whoami executed when the request payload is written to the log.

The vulnerability appears to be the result of missing user input validation, which should always be applied to prevent command injection, coupled with the direct use of shell redirection to append a string to a file. At the source code level, the vulnerability manifests itself in the use of double quotes in the appending command, which makes command substitution possible, as seen in the above example. The use of single quotes would have prevented the most trivial attack schemes, yet it would not have prevented all of them. In fact, it appears that offloading such a simple task as appending to a file to the shell was hardly a justified choice in terms of security.
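
To make the difference concrete, here is a minimal Python sketch, not CWP's actual code. The function names and the log file name are hypothetical. The first function only builds the kind of shell command described above, without running it, to show that attacker-controlled text ends up inside double quotes where the shell still expands `$(...)`. The second appends the same text using ordinary file I/O, so no shell ever parses it.

```python
import os
import tempfile


def unsafe_log_command(entry: str, log_path: str) -> str:
    """Build (but never run) the vulnerable pattern: inside double quotes
    the shell still expands $(...), so attacker text becomes a command."""
    return f'echo "{entry}" >> {log_path}'


def append_log(entry: str, log_path: str) -> None:
    """Append the entry with plain file I/O; no shell ever parses it."""
    with open(log_path, "a", encoding="utf-8") as log_file:
        log_file.write(entry + "\n")


def demo() -> str:
    """Write a hostile-looking entry and return what landed in the file."""
    with tempfile.TemporaryDirectory() as tmp_dir:
        log_path = os.path.join(tmp_dir, "login.log")
        append_log("failed login for $(whoami)", log_path)
        with open(log_path, encoding="utf-8") as log_file:
            return log_file.read()
```

With the file-based version, the string `$(whoami)` is stored literally in the log instead of being evaluated.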

Türle publicly disclosed the vulnerability on January 3, 2023, additionally posting a video showing how easy it is to exploit. It took only a couple of days for GreyNoise to detect attacks attempting to exploit the vulnerability, and it reported five distinct IP addresses as their origin.

Control Web Panel is Linux server administration software that specifically targets enterprise Linux distros. While its popularity is not in the top tier, it is used by over 35k servers worldwide. All organizations using it should ensure they are running version 0.9.8.1147 or higher.

MMS • Tejas Shikhare

Transcript

Thomas Betts: GraphQL can be a great choice for client to server communication, but does require some investment to maximize its potential. Netflix operates a very large federated GraphQL platform. Like any distributed system, this has some benefits but also creates additional challenges. Today I’m joined by Tejas Shikhare, who will help explain some of the pros and cons you might want to consider if you try to follow their lead in scaling GraphQL adoption. Tejas is a senior software engineer at Netflix where he works on the API systems team. He has spent the last four years building Netflix’s federated GraphQL platform and helped to migrate Netflix’s consumer-facing APIs to GraphQL. Aside from GraphQL, he also enjoys working with distributed systems and has a passion for building developer tools and education. Tejas, welcome to the InfoQ podcast.

Tejas Shikhare: Thank you so much for having me, Thomas.

Quick overview of GraphQL [01:11]

Thomas Betts: It’s been a while since we’ve talked about GraphQL on the podcast. Our listeners are probably familiar with it, but let’s do a quick overview of what it is and what scenarios it’s useful for.

Tejas Shikhare: GraphQL has gained a lot of popularity over the last few years, and one of the most common scenarios that it’s useful for is building out… If your company has UIs and clients that are product-heavy and they aggregate data from many different sources, GraphQL allows you to not only act as an aggregation layer but also as a query language for your APIs so that the client can write a single query to fetch all the data that it needs to render. And we can build this into a GraphQL server and then connect to all these different sources of data and get together and return them back to the client. That’s the primary scenario with GraphQL, but at the end of the day it’s just an API protocol similar to REST, GRPC, etc., with the added layer of being a deep query language.

Thomas Betts: Yeah, I think there’s a couple of common scenarios. About a year ago we had the API Showdown on the podcast and we talked about REST versus GraphQL versus GRPC, and I remember over-fetching, and there’s a couple different scenarios, like this is a clear case where GraphQL makes it easier than calling a bunch of different APIs.

Tejas Shikhare: Exactly, and I think what GraphQL gives you is the ability to fetch exactly the data you want, and not more, not less, because you can ask for what data, so you can ask for every single field you want and that’s the only fields that server will give you back. And sometimes in REST, the way it works is you have an endpoint and it returns a set of data, and it might return more data than you need, and so you’re sending those bytes over the wire when the client doesn’t need them, so over-fetching is also another big problem that GraphQL solves.
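
The idea of asking for exactly the fields you need can be sketched in a few lines of Python. This is an illustration only, not a GraphQL implementation: the record, the field names, and the dictionary-based selection format are all made up for the example.

```python
from typing import Any, Dict

# A selection maps a field name to True (leaf) or to a nested selection.
Selection = Dict[str, Any]


def select_fields(record: Dict[str, Any], selection: Selection) -> Dict[str, Any]:
    """Return only the requested fields, recursing into nested objects."""
    result: Dict[str, Any] = {}
    for field, sub_selection in selection.items():
        value = record[field]
        if isinstance(sub_selection, dict):
            result[field] = select_fields(value, sub_selection)
        else:
            result[field] = value
    return result


# Hypothetical backend record; a fixed REST endpoint might return all of it.
MOVIE = {
    "id": "m1",
    "title": "Example Movie",
    "synopsis": "A long description the list view never shows.",
    "director": {"id": "p1", "name": "Example Director", "bio": "..."},
}

# Roughly what the query `{ title director { name } }` asks for.
LIST_VIEW = {"title": True, "director": {"name": True}}
```

Calling `select_fields(MOVIE, LIST_VIEW)` leaves out `synopsis` and `bio`, which is the over-fetching a fixed endpoint would otherwise send over the wire.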

Why Netflix adopted GraphQL [02:49]

Thomas Betts: What were some of the reasons Netflix decided to use it? I’m assuming you haven’t always had GraphQL, this was an adoption, something you chose to do after you’d had traditional REST APIs.

Tejas Shikhare: We have a pretty rich history on graph APIs in general. GraphQL came out I think in 2014, 2015, was open-sourced and then it started gaining popularity. But even before that, Netflix has already started thinking about something like GraphQL, so we open-sourced our own technology called Falcor, and it’s open source, you can find it on GitHub, but it’s very similar to GraphQL in spirit. And really where these technologies came from, it’s the problem space, and the problem space here lies around building rich UIs that collect data from many different sources and display them.

For us, it was the TV UI. When we started going into the TV streaming space and started building applications for the TV, that’s when we realized there is so much different data that we can show there that something like GraphQL would become really powerful, and that’s where Falcor came in. And we are still using Falcor in production, but GraphQL has gained so much popularity in the industry, it’s community-supported, there’s a lot of open source tooling, and that’s really when we decided, “Okay, why should we maintain something internally when we can use GraphQL, which is getting much more broader support, and then we can get in all the fixes and move with the community?” That’s the reason why we moved to GraphQL.

Benefits of GraphQL [04:10]

Thomas Betts: Gotcha. I like the idea that you had the problem and said, “We need a graph solution,” built your own and then you evolved away from it because there’s a better solution. It’s always hard for companies to admit that what they’re doing in-house isn’t always the best, and sometimes it is better to go get a different… Well, you made Falcor open source, but a different open source solution. Has that made it easier to hire more engineers onto your team working on building out GraphQL or get people who know how to use it?

Tejas Shikhare: The benefits are a lot, because firstly, GraphQL engineers, all over the place that you know you can hire. A lot of people have experience now today with GraphQL, so that’s great, but also the number of languages that support GraphQL. The framework itself has been implemented by the community in many different languages. We use GraphQL Java mostly, but then we also have a Node.js architecture internally that we could easily bring GraphQL onto, so that’s a big advantage, so your technology stack broadens as well, hiring is easier, and really I think you can work with the community to improve GraphQL in the ways that you want to. And that’s also another big win because we have members of our team who are actively involved in the GraphQL working group and advocating for features that we want in GraphQL in front of the open source community.

The history of GraphQL at Netflix [05:24]

Thomas Betts: You recently spoke at QCon San Francisco and QCon Plus about GraphQL at Netflix. It was a follow-up to a presentation, I think about two years ago, of some of your coworkers. Is that when Netflix started using GraphQL, that was the advent of using federated GraphQL? What was happening then and what’s been happening the last two years?

Tejas Shikhare: Let me go into a little bit of a history. In Netflix, we have three main domains in engineering. Obviously the Netflix product is a big domain of engineering, but in the last few years we’ve also started investing heavily in our studio ecosystem, so think about people who are working on set, working on making the movies, they need applications too, that are backed by the servers. There’s so much complexity in that space. In fact, the data model is way more complex for the studio ecosystem than it is for the actual product, and that’s fairly new, so that started about 2018 timeframe, the investments there. And GraphQL was already thriving at that time, and that’s when we decided, “Okay, why don’t we start using GraphQL for our studio ecosystem?” And a lot of different teams were pretty excited about it, and that’s where GraphQL really got its grounding at Netflix and that’s where it shined.

And we realized very quickly, even within our studio ecosystem, we had 100s of applications, over 100 services, and that’s when we started thinking about we can’t have one GraphQL team maintain the API for all of these applications, so that’s where I think we started thinking about federated architecture, which allows you to break apart and distribute your GraphQL API to many different teams. That allowed us to scale, so really it picked up in our studio ecosystem, but then at the same time we paired up with the Netflix API team, which is responsible for the API for the product, which was still running in Falcor, as I mentioned, at the time. We started investigating how GraphQL could help in that area, and over time we started extending the federated architecture.

Two years ago when we first did the talk, we had mostly launched it for all of studio, but then over the last two years we started launching it for our Netflix product, so if you pull out an iOS or Android phone, that’s using GraphQL, and our goal is to have a lot more canvases on GraphQL over time. And additionally, we are also using it for our internal tools. You might be familiar with all these applications like Spinnaker, which allows us to do deployments, and we have a lot of internal UI applications that developers use, customer support applications. We are starting to move those to GraphQL as well. Really just all across the company.

Federated GraphQL [07:49]

Thomas Betts: The keyword you keep coming back to is federated, and you said that the federated model allowed more people to work on it. And what’s traditional GraphQL? Is it a monolith?

Tejas Shikhare: Traditionally, even when we first started, think of GraphQL as a BFF. It’s providing a backend for front end where you can aggregate data from many different sources, so if you have a microservice architecture, you can aggregate data from many different sources, put it all together and so the client can build a query against it. Traditionally what we do is we write the schema and for each field in the schema we write data fetchers, and the data fetchers actually fetch the data from the clients and then we solve the n plus one problem with data loaders so that we don’t have inefficient APIs.
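
A data loader, as mentioned above, avoids the n plus one problem by collecting the keys that individual data fetchers ask for and resolving them in a single batched call. The following is a simplified, synchronous Python sketch of that idea. The class, the `fetch_directors` backend, and the field names are hypothetical, and real data loader libraries are typically asynchronous.

```python
from typing import Callable, Dict, List


class BatchLoader:
    """Collects keys, then resolves them with one batched backend call."""

    def __init__(self, batch_fn: Callable[[List[str]], Dict[str, str]]) -> None:
        self._batch_fn = batch_fn
        self._pending: List[str] = []
        self._cache: Dict[str, str] = {}

    def request(self, key: str) -> None:
        """Register interest in a key without calling the backend yet."""
        if key not in self._cache and key not in self._pending:
            self._pending.append(key)

    def get(self, key: str) -> str:
        """Flush any pending keys in one call, then return the cached value."""
        if self._pending:
            self._cache.update(self._batch_fn(self._pending))
            self._pending = []
        return self._cache[key]


# Records every backend call so the batching is visible.
BACKEND_CALLS: List[List[str]] = []


def fetch_directors(ids: List[str]) -> Dict[str, str]:
    """Hypothetical backend lookup that accepts many ids at once."""
    BACKEND_CALLS.append(list(ids))
    return {director_id: f"Director {director_id}" for director_id in ids}


def director_names(movie_director_ids: List[str]) -> List[str]:
    """Resolve the director of each movie with a single backend call."""
    loader = BatchLoader(fetch_directors)
    for director_id in movie_director_ids:
        loader.request(director_id)
    return [loader.get(director_id) for director_id in movie_director_ids]
```

Without the loader, a list of n movies would trigger n separate director lookups on top of the one query that fetched the list.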

That’s how traditionally GraphQL is implemented, but what you quickly observe is if you have a very big company, big organization, you have a lot of data, you have a lot of APIs, and the schema starts to grow pretty rapidly, and the consumers of the schema are also, you probably have more than a handful of applications that the consumers of the schema also start to grow. The central team becomes a bottleneck, so every time you want to add a new feature, the backend teams will add it first to the backend, then it adds it to the GraphQL server, and then the client team consumes from it. That becomes like the waterfall model for creating those APIs.

And what federation allows you to do, essentially, is it allows you to split up the implementation. You still have this unified API, the one schema, but the schema is split across many different teams, and each of those teams then implement the data fetchers for their particular part of the schema.

And then these data fetchers essentially do the same thing, really talk to the database or talk to another service in the backend and get the data, but now you split them up across many services, so you split up the operational burden of those data fetchers, you split up the implementation. And also then as soon as one backend team implements it, it’s directly available for the clients to use, so you don’t have to go through another layer to build it up. That’s where the federation gives you some of the advantages on top of doing the classic monolithic way.

Breaking up the API monolith [09:59]

Thomas Betts: It’s somewhat similar to a move from a monolith to microservices architecture then, that you’re saying. We’re going to have a lot of services because this one monolith is too hard for all of our developers to work in one place. We aren’t building new features fast enough, so we’re going to spread it out. Is that kind of a good analogy?

Tejas Shikhare: And that’s what inspired it, moving to federation, using that kind of a thing. We already did this with our monolith 10 years ago, and realized now we have a new monolith, which is the API monolith because that’s what we ended up building. And now federation allows us to split up the API monolith into smaller sub-graph services and that, but then you also run into the similar kind of challenges as you go from monolith to microservices. It’s not all roses, there are challenges involved, so there are also similar set of challenges when you move from the monolith GraphQL to a federated one.

Thomas Betts: Yeah, let’s dive into that. What’s the first thing? You say, “Okay, we’re going to take it from 1 team to 2 teams, and then 10 teams are going to be contributing to the one API,” because you said there’s still one graph, but now we’re going to have multiple people contribute to that. How does that work when it’s creating your first microservice? How do you create your first federated GraphQL instance?

Tejas Shikhare: In our case, our first federated GraphQL service was the monolith itself. In federated GraphQL… Our schema was exactly the same, so we exposed the monolith as the first sub-graph service in the federated ecosystem, so as far as the client is concerned, they can still make all of the same queries and do all that. Now we started to then reach out to a certain set of teams, so initially we bootstrapped this. Since we were maintaining the monolith initially, we went to the teams that would potentially own a sliver of the functionality of the monolith, went to their team and helped them build a sub-graph service. And basically the idea here is to not affect the clients at all, so clients can still make the same set of queries, and so we had this directive in GraphQL to make this kind of migration possible. It’s an override directive which allows us to specify to the…

In the federated architecture we have a gateway, so let me step back a little bit, and then the gateway talks to the sub-graph services and the gateway is responsible for doing the query planning and execution. And as part of the query planning, it checks each field that was requested in your query and sees which service it comes from, and then it looks at each child field and sees which service it comes from, and then based on that it builds a query plan.
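
The planning step described here can be pictured as grouping the requested fields by the service that owns them. The Python sketch below is a deliberately simplified illustration, not how any real gateway is implemented: the ownership table, the service names, and the flat "Type.field" notation are assumptions made for the example.

```python
from typing import Dict, List

# Hypothetical ownership table: "Type.field" -> sub-graph service name.
FIELD_OWNERS: Dict[str, str] = {
    "Movie.title": "movie-service",
    "Movie.talent": "movie-service",
    "Talent.name": "talent-service",
    "Talent.agency": "talent-service",
}


def plan_query(requested_fields: List[str]) -> Dict[str, List[str]]:
    """Group the requested fields by the service that provides each one."""
    plan: Dict[str, List[str]] = {}
    for field in requested_fields:
        service = FIELD_OWNERS[field]
        plan.setdefault(service, []).append(field)
    return plan
```

A real query planner also has to order the fetches, because the talent service can only be called once the movie service has returned the keys of the talent involved.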

Now what we can do is we have this one monolith, GraphQL. Let’s say we have three different domains within it, like movie, talent and production domain. This is our studio ecosystem. Now let’s say I want to pull out the talent domain and make it into its own sub-graph service, so I’ll identify the types that are specific to the talent and I’ll mark them with the key directive that tells them that this particular type can be federated.

Now, I can redefine that type, I can extend that type in the sub-graph service using the same key directive, so that’s something that they have to agree, and then I can slowly say, “Oh, these are the fields within, say the talent type.” And I can start saying that now for these fields, go to my sub-graph service, the new talent sub-graph service, and you can mark those as override. That tells the gateway, the router, that, oh, for this particular field, we know that this original service can provide it, but also this new service can provide it. And then the future query plan takes into account that, okay, we are going to send it to this new service. That’s how we started, so we did that for one service, the next service, and we slowly started pulling out until our monolith GraphQL became an empty shell and we got rid of it. It took about a whole year to do that because it had a lot of APIs in there, but that’s how we started.
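
The field-by-field migration described above can be modeled as an override table that the gateway consults before falling back to the original owner. The Python sketch below is illustrative only; the field and service names are hypothetical. The comment shows how the key and override directives look in Apollo Federation 2 syntax, which is an assumption about notation rather than a statement of what Netflix's schema contains.

```python
from typing import Dict, List

# In Apollo Federation 2 syntax, the new sub-graph might declare roughly:
#   type Talent @key(fields: "id") {
#     id: ID!
#     name: String @override(from: "monolith")
#   }

# Every field starts out owned by the monolith sub-graph (hypothetical names).
ORIGINAL_OWNERS: Dict[str, str] = {
    "Talent.name": "monolith",
    "Talent.agency": "monolith",
    "Movie.title": "monolith",
}


def owner_of(field: str, overrides: Dict[str, str]) -> str:
    """An override wins; otherwise the original service still serves the field."""
    return overrides.get(field, ORIGINAL_OWNERS[field])


def fields_left_in_monolith(overrides: Dict[str, str]) -> List[str]:
    """List the fields that still route to the monolith, in sorted order."""
    return sorted(
        field for field in ORIGINAL_OWNERS if owner_of(field, overrides) == "monolith"
    )
```

The migration is finished when `fields_left_in_monolith` returns an empty list, which corresponds to the monolith becoming an empty shell.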

Thomas Betts: Yeah, it sounds like a strangler fig pattern. You can build a new thing and then you start moving it over, so it’s again, following the patterns for how to move to microservices, the same thing for moving to federated.

Moving to GraphQL from Falcor or REST [13:51]

Thomas Betts: Let’s back all the way up though. I wanted to get into, you said that you were using Falcor for a while because you had the need for a graph, but then you had to switch to GraphQL. How is that different for we have a graph architecture for our APIs, versus somebody who doesn’t have that in place and they’re just getting started? You started in a different place than I think most people will be coming to GraphQL.

Tejas Shikhare: The example I described of migration earlier was all GraphQL in our studio ecosystem, because it was already GraphQL. In our consumer’s ecosystem we had Falcor APIs and then we had to migrate them to GraphQL, which is I think what you alluded to. And then also, what would someone who has no GraphQL or no Falcor would do? I hit the first one already, so I’m going to hit the second one, which is how did we do from Falcor to GraphQL, real quickly? As far as Falcor is concerned, it has similar concepts as GraphQL, but really moving from Falcor to GraphQL is a lift. It’s as good as moving from REST or GRPC to GraphQL because there’s not really that much in common in how it works, but conceptually it’s still similar, so it was a little bit easier, but not that easy.

The way we did the Falcor migration is we built a service on top of the Falcor service, a GraphQL monolithic service, a thin layer, and then we mapped the data fetchers for GraphQL data fetchers to call the Falcor routes, and that was additional engineering effort we had to put in, because that allowed us to convert. And then now that we had the GraphQL monolith service, then we applied the same pattern to move it to different services, which actually we haven’t completed yet, so now we are at a stage where we’ve just moved to GraphQL and there’s only one service, but eventually our goal is to move it out to different services. Let’s say if you don’t have Falcor, it’s more conceptually different, you have REST APIs in your ecosystem, and you’re thinking about, “Oh, GraphQL is great for all of these things and I want to use it.”

In that case, I would follow a similar pattern where I’ll set up a new service, like a GraphQL service, and then start building the schema and implement the data fetcher so that it calls my existing REST endpoints. If you have a monolith, then maybe you can just build it within your monolithic service, so you have your REST API sitting alongside your GraphQL API, you can put it on a different port, and then have the GraphQL call into either your existing functions, your business logic. You still implement the data fetchers, but then they call into either your existing APIs or business logic. That’s the way I would start, and then once you have this GraphQL API that works and clients start using it, then obviously if you’re a big company, you want to start thinking about federation because you have a lot of services and you can grow and scale that GraphQL API, but really you want to see if that’s working well, maybe just keep going with that for a while. And we did that too for almost a year and a half before we even considered federation.
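
A data fetcher that wraps an existing REST endpoint, as described above, can be very thin. The Python sketch below is one way it might look. The `/movies/{id}` path, the payload field names, and the `fake_rest_get` stand-in are all hypothetical, and the HTTP call is injected as a function so the example runs without a network.

```python
from typing import Any, Callable, Dict

# A REST client is modeled as a function from a path to decoded JSON.
RestGet = Callable[[str], Dict[str, Any]]


def make_movie_fetcher(rest_get: RestGet) -> Callable[[str], Dict[str, Any]]:
    """Build a data fetcher for a hypothetical `movie(id)` field that
    delegates to an existing REST endpoint and reshapes the payload."""

    def fetch_movie(movie_id: str) -> Dict[str, Any]:
        payload = rest_get(f"/movies/{movie_id}")
        # Map REST field names onto the names the GraphQL schema exposes.
        return {"id": payload["movie_id"], "title": payload["display_title"]}

    return fetch_movie


def fake_rest_get(path: str) -> Dict[str, Any]:
    """Stand-in for a real HTTP call so the sketch runs offline."""
    return {
        "movie_id": path.rsplit("/", 1)[-1],
        "display_title": "Example Movie",
        "internal_flag": True,
    }
```

Because the fetcher only depends on a function, the same code can later call business logic directly instead of going through HTTP.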

Thomas Betts: Yeah, I like how you talked about we had Falcor, we couldn’t just jump to GraphQL, we weren’t going to do a full replacement. Again, it was almost that strangler pattern. We’re going to put in an abstraction layer to help us with the transition rather than a big bang approach. You were able to make iterative things.

Why use GraphQL Federation? [16:44]

Thomas Betts: And then let’s go into what you were just saying about when do you get big enough that you say, “Hey, this is becoming hard to maintain.” What are the pros and cons of moving to federation and why would somebody say, “Hey, it’s worth the extra work that we have to do?” And what is the extra work when you get to federation? You don’t get all that for free.

Tejas Shikhare: First, let’s talk about the pros. Remember how I talked about earlier that the GraphQL service could become the central bottleneck? The first thing is you don’t have to implement the feature in the backend service, in the GraphQL service before the client can use it, so that is one of the big problems that federation solves, so you can just have the owning team implement the feature and it’s already available in the graph.

The second one, big pro is operational burden. You can split the operational burden instead of one central team being on call and the first line of support for all your APIs, you can kind of split that up and scale that a little bit better. We’ve seen that the more scale you have, you can see that part of the team, and I’ve known people who have worked on this team for a long time, that you can have some serious burnout on the engineering side. It’s just hard to be on call for frontline services all the time, and it’s stressful too. And you can hire more people to split up the on-call, but ultimately I think splitting up the support burden is very nice. That’s another win from moving to federation.

And then the third benefit, I think it’s a lot of companies, they’ll have these legacy applications that you don’t really develop actively, but you still have to maintain them, you have to expose those APIs, and what federation allows you to do is you can convert those existing legacy applications into a sub-graph that you can contribute to the overall GraphQL API. It really allows you to modernize your legacy applications that you don’t really maintain, but then expose it to the graph and then the clients can start using the GraphQL API, so that’s a nice one. You can also do it in the monolith, but it always falls behind. It’s not something that’s a priority, but then the team owning it can modernize their own legacy application. I think it’s a nice little win from federation.

Yeah, we covered the wins, but obviously it comes with some of the challenges, and I think that was your primary question, and the challenges are many too, because one of the big things is that now everyone has to implement their GraphQL APIs. Everyone has to learn GraphQL, because you’re federating the APIs, so each team is exposing a sub-graph service that’s a GraphQL API, and GraphQL has some added complexity over REST or GRPC. With REST or GRPC, it’s action handlers that you implement and then you call into your business logic. In GraphQL you get a query that can fetch multiple different kinds of data and then you learn how to use data fetchers, and then understand data loaders, so there is some complexity and learning curve there, which can be challenging if your entire company has to do it.

The second big challenge is I think the health of the API, like when we are designing an API, it’s easier to collaborate when you’re one team and building it by yourself, but it becomes very challenging when you have multiple teams, in our case 100s of them designing them in their own silo. And then does it combine together nicely to form a well-designed API that’s actually useful to the client? Because ultimately you’re building the API so that the client can consume from it, but if you just build something that’s not what the client needs, then you’re not really solving a problem, so that’s a big challenge with federation and really those are the two things that we’ve been focusing on improving, making GraphQL developer education better, but also making schema development easy.

Tooling to help with federation [20:04]

Thomas Betts: It sounds like you’ve had to have a lot of people working on being able to scale the effort of federation, like you said, learning and coming up with the training tools. Do you also have tools that you’re using to help monitor and learn what’s in the graph and study that? How many people are working on the tooling and the platform compared to how many developers are now using it?

Tejas Shikhare: We’ve been working on a lot of different tools to make implementing federation a little bit better. I’m going to put that into a few different buckets. We have observability, which is an important aspect of any server-side development, and that’s an important… We have a ton of tools that are federation-aware and GraphQL-aware that we’ve done. Then also the schema development and making the schema better, and then also feature development for the backend owners to make that easier. Roughly on the platform side, across these many buckets, my team focuses on GraphQL. We have about 6 or 7 people doing GraphQL-focused tooling, but then we work with say, the observability team, the Java platform team to make the Java platform easier, so maybe a total of 20 individuals from across all the different domains. And then I think on the developer side, we have over 1,000 at this point that are actually building and implementing sub-graphs, because we have the internal tools, the studio applications, the Netflix product API, the new games initiative, all of that we’ll build with GraphQL.

On the observability side, we focused on making distributed tracing easier with GraphQL, so essentially you can track how each request is planned and where it’s spending time. This is really good because it allows client developers to optimize their query by requesting fields that they feel like they need to render early versus render later, so they can see, but also allow backend developers to see where they might be introducing inefficiency in their system. That’s really powerful, and it is aware of these data fetchers that I was talking about earlier that it can track that in the distributed trace.

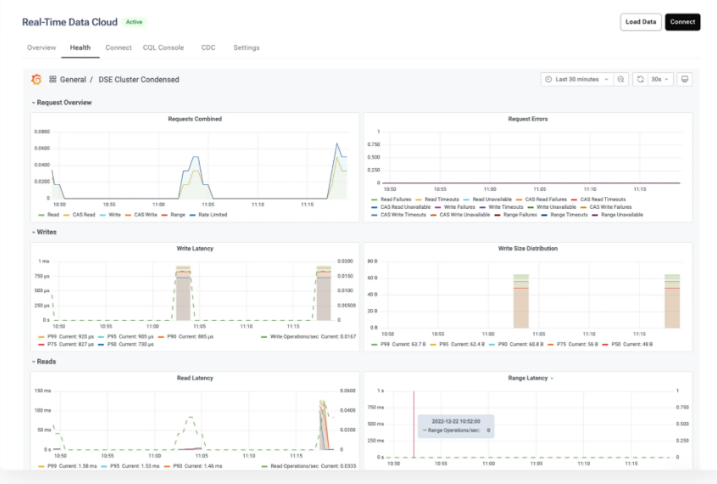

Then we also have metrics, GraphQL-aware metrics. Normally if you have a REST API or GRPC API, you’d create the success scenario and then you’d have all these different kinds of errors, like 400 and all those kind… And then you send it to your metric server.

But GraphQL is a little bit tricky because in GraphQL you can have partial failures and responses, so we had to make GraphQL-aware metrics that we do, and we map them onto the existing metrics that we have so that you can create these charts when there’s an outage to see, oh, what kind of error is happening? And you can track that up.
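
Partial failures are possible because a GraphQL response can carry both a `data` entry and an `errors` entry at the same time. One way to map that onto conventional success and error counters is sketched below in Python. The three outcome labels and the function names are assumptions for illustration, not Netflix's actual metric names.

```python
from collections import Counter
from typing import Any, Dict, List


def classify_response(response: Dict[str, Any]) -> str:
    """Label a GraphQL response as success, partial, or failure."""
    has_errors = bool(response.get("errors"))
    has_data = response.get("data") is not None
    if not has_errors:
        return "success"
    # Errors alongside usable data mean only part of the query failed.
    return "partial" if has_data else "failure"


def count_outcomes(responses: List[Dict[str, Any]]) -> Counter:
    """Aggregate outcome labels, as a metrics backend might chart them."""
    return Counter(classify_response(response) for response in responses)
```

Counting `partial` separately matters because the transport-level request usually still succeeds in that case, so a status-code-based metric alone would hide the problem.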

Really focusing on observability was important, and on top of that, for the schema development, one of the challenges I talked about is here. Observability was just table stakes. It was something we had before and we needed to have almost the exact same experience with GraphQL and not anything harder.

But with schema design, it was one team doing it before and now we have multiple teams doing it, and firstly we needed a way to track schema health. We started tracking that, but then we realized people were doing too many things and it was impossible to do that, so we created the schema working group where people can come and ask for, showcase their schema, get a review done, and also discuss schema best practices.

And then once we had the schema best practices, we needed a way to enforce them. Enforce is a strong word, but really make people aware. We built this tool called Graph Doctor, which allows teams to get PR comments about what best practices they’re not following in their schema design, so that would come directly on their pull request, and also a lot of sample code for how to do things the right way and then point them to that so that they can go look at it and then just start doing it. Those are the two things that help with schema design.
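
The kind of check a schema linter might run can be sketched in Python as follows. This is not Graph Doctor's implementation. The single naming rule, the simplified schema representation (type name mapped to field names), and the comment wording are all hypothetical.

```python
import re
from typing import Dict, List

# Hypothetical best practice: field names are lowerCamelCase.
CAMEL_CASE = re.compile(r"^[a-z][a-zA-Z0-9]*$")


def lint_schema(types: Dict[str, List[str]]) -> List[str]:
    """Return one review comment per field that breaks the naming rule."""
    comments: List[str] = []
    for type_name, fields in types.items():
        for field in fields:
            if not CAMEL_CASE.match(field):
                comments.append(
                    f"{type_name}.{field}: field names should be lowerCamelCase"
                )
    return comments
```

Each returned string would correspond to one comment on the pull request that changes the schema.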

Then the last part is when you move to microservice, you have to make the development of the service easier with feature branches and things like that, so we had to do a similar thing with GraphQL where we have this overall API, but your team is responsible for this small part of the API, but you don’t want to just push everything to production before… And you test in production, so you need a way to have your part of the graph merge with the rest of the graph in production and then give it to someone to test, so sort of like an isolated feature environment for people to use. That was another thing that we had to build, because previously the API team could just do it in the central monolith, but with this distributed ecosystem that was hard to do, and one of the big challenges. Those are the main key tools we focused on.

Schema-first development [24:25]

Thomas Betts: My question was a little vague and you covered everything I wanted you to say. Going back, you said you’ve got maybe 20 people, but that’s empowering 1,000 developers and that GraphQL, it’s how you’re doing work, but you basically have to treat it as a product that you manage internally and you’re constantly getting, I’m sure feature requests and hey, how can we improve this? How can we improve this part of the developer experience? Your last bit about the schema and the schema working group, I think that’s a whole nother conversation we could have. I believe in your QCon talk you’d said schema-first development was what you proposed. Can you describe what that is?

Tejas Shikhare: Another way to say it is API-first development, so starting with the API, so starting with the needs of the client, identifying the problem you’re trying to solve, working with the product manager, working with the client developers, and coming up with an API together that works for everyone. Because what we often tend to do, and I’ve been a backend engineer and guilty of this many times, is we implement something and we create an API for it, and then we say, “Oh, here’s the API, use it.” And that’s great if you’ve put a lot of thought into the API, you’ve thought of all the different use cases, but that’s not always the case, and what makes that easier is having a schema-first approach or an API-first approach where… Or design-first approach, another way to say it, is you really understand the needs, product needs, client needs, and then working backwards from there and figuring out the API.

And once you do that, you might realize the stuff you have in the backend doesn’t fit quite nicely into that, and that’s when you’ve built a good API and you have to now start making the, or saying, “Oh, we maybe can provide this,” and then you start taking things out of the API so that it fits with your backend. But then you’ve really done the homework, and that ultimately leads to better APIs, more leverage for the company because these APIs then become reusable for other product features. Yeah, so that’s really what API-first design in my mind means.

Thomas Betts: One of my wonderings about GraphQL is that I could have all these APIs that were created and none of them met my needs as a consumer or the product manager, but I realize if I call three or four of them, I can get what I need, but now I have performance issues. Oh, in comes GraphQL, and it says, “Oh, I can just ask each of those for just the bit that I need and I’ve created this super API.” But you’re now talking about GraphQL as the primary way of doing things, and that that should influence the API development because people are writing those connecting layers and they’re always thinking about the final use of their service, not just, well, we need something that is the talent database here, I’ll just put all the data on one… Fetch one person by name or by id.

Tejas Shikhare: Yeah, exactly. I think that you nailed it there.

Thomas Betts: For non-GraphQL, when we just have REST APIs, one of the approaches is contract-driven development where you write the contract first, you write your OpenAPI spec or whatever it is, and then the consumer and the producer both agree to it, which is different than one side versus the other has to use it. There’s different ways you can test this to say, “Hey, I as a producer meet the spec and I as a consumer expect the backend to do that.” Is contract-driven development similar to the schema-first approach you’re doing or is that a different scenario?

Tejas Shikhare: Yes, exactly. I think it’s very similar. I think that’s yet another way to just say the same thing, because ultimately you’re building an API that works for the client and that the producer can provide, and schema is the contract in GraphQL, so oftentimes we refer to it as schema-first development, but really I think conceptually they’re very similar.
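To make the two-sided check from the question concrete, here is a minimal sketch in Python. It is not how Netflix or any GraphQL tooling does it; the `CONTRACT` dictionary, the field names, and both helper functions are hypothetical and only illustrate the idea that the producer is tested against the contract while the consumer is tested against what the contract promises.

```python
# Hypothetical contract: field name -> expected Python type.
# In GraphQL the schema plays this role; in REST an OpenAPI spec would.
CONTRACT = {"id": str, "name": str, "titles": list}


def producer_violations(response: dict, contract: dict) -> list:
    """Return problems in a producer response; an empty list means it meets the contract."""
    problems = []
    for field, expected in contract.items():
        if field not in response:
            problems.append(f"missing field: {field}")
        elif not isinstance(response[field], expected):
            problems.append(f"wrong type for {field}: expected {expected.__name__}")
    return problems


def consumer_unmet_needs(needed_fields: set, contract: dict) -> set:
    """Return fields the consumer relies on that the contract never promised."""
    return needed_fields - set(contract)


# Producer side: "I as a producer meet the spec."
good = {"id": "42", "name": "Ada", "titles": []}
bad = {"id": 42, "name": "Ada"}
print(producer_violations(good, CONTRACT))
print(producer_violations(bad, CONTRACT))

# Consumer side: "I as a consumer expect the backend to do that."
print(consumer_unmet_needs({"id", "email"}, CONTRACT))
```

Both checks run against the same contract, which is why agreeing on it first lets the client and the backend work in parallel.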

The evolution of APIs at Netflix [27:44]

Thomas Betts: You’ve been doing this project for a few years, we talked about some of the migration challenges, and I like that you focused on it as a project that you had to migrate and it’s still ongoing. Where are you at now in that evolution? When do you expect to be done, and what does done look like?

Tejas Shikhare: Where we are, I think we have a lot of people using GraphQL at Netflix for a lot of different things, and it’s almost a little bit chaotic where we are trying to tame the chaos a little bit, and we are in the phase where we are taming the chaos, because people are so excited they started using it and we saw some of those issues and we’re starting to tame the chaos. And really the next step is to migrate our core product APIs, because they experience a lot of scale challenges along with moving to a new kind of technology. It’s almost akin to changing parts of a plane mid-air because we have so high RPS on our product APIs, and really we need to maintain all of the engagement and all that stuff, so I suspect that will take us about a year to two to really move a lot of the core components onto GraphQL, while also making our GraphQL APIs healthier and better, instilling all these best practices, making the developer tools, and one day everything is in place and people are just developing APIs in this ecosystem.

And that’s when I think it’ll be in a complete space. We have the nice collaboration workflow that I talked about, schema-first development between the client and the server, and all the platform tooling exists to enable that, and we have a lot of best practices built up that are enforced by the schema linting tool and things like that. I think we are probably around maybe a midway point in our journey, but probably still quite a ways to go.

For more information [29:29]

Thomas Betts: Well, it sounds like you’re definitely pushing the boundaries with what GraphQL can do and how you’re using it. I’m sure there’ll be a lot of interesting things to look for in the future. Where can people go if they want to know more about you or what Netflix is doing with GraphQL?

Tejas Shikhare: I think for me, you can reach out to me on Twitter. My handle is tejas26. I love reading about GraphQL and engaging with the community there, so definitely reach out to me if you have questions about GraphQL and where we are going with it at Netflix. We have tons of stuff that we’ve published. We have a series of blog posts. We have open sourced the DGS framework, which is a way to do GraphQL in Spring Boot with Java, which is what we are using internally. We have a couple of QCon talks, and even GraphQL Summit talks from coworkers, so if you just search Netflix federated GraphQL in Google, some of these resources will come up.

Thomas Betts: Tejas, thank you again for joining me today.

Tejas Shikhare: Thank you so much Thomas.

Thomas Betts: And thank you for listening and subscribing to the show, and I hope you’ll join us again soon for another episode of the InfoQ podcast.


From this page you also have access to our recorded show notes. They all have clickable links that will take you directly to that part of the audio.

Google Storage Transfer Service Now Supports Serverless Real-time Replication Capability

MMS • Steef-Jan Wiggers

Google recently announced preview support for an event-driven transfer capability in its Storage Transfer Service (STS), which allows users to move data from AWS S3 to Cloud Storage and copy data between multiple Cloud Storage buckets.

STS is a service in the Google Cloud that allows users to quickly and securely transfer data between object and file storage across Google Cloud, Amazon, Azure, on-premises, and other storage solutions. In addition, the service now includes a preview capability to automatically transfer data that has been added or updated in the source location based on event notifications. This type of transfer is event-driven, as the service listens to event notifications to start a data transfer. Currently, these event-driven transfers are supported from AWS S3 or Cloud Storage to Cloud Storage.

In a Google Cloud blog post, authors Ajitesh Abhishek, Product Manager, and Anup Talwalkar, Software Engineer, both working at Google Cloud, explain:

For performing the event-driven transfer, STS relies on Pubsub and SQS. Customers must set up the event notification and grant STS access to this queue. Using a new field – “Event Stream” – in the Transfer Job, customers can specify the event stream name and control when STS starts and stop listening for events from this stream.

STS begins consuming the object change alerts from the source as soon as the Transfer Job is created. Any upload or change to an object now results in a change notification, which the service uses to transfer the object to the destination in real-time.
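As an illustration of the “Event Stream” field described in the quote, the following Python sketch builds a request body for an event-driven transfer between two Cloud Storage buckets. It only constructs and prints the JSON; it does not call Google Cloud. The field names (`transferSpec`, `gcsDataSource`, `gcsDataSink`, `eventStream`) reflect my reading of the STS REST API and should be checked against the current reference, and the project, bucket, and subscription names are placeholders.

```python
import json


def event_driven_job_body(project_id: str, source_bucket: str,
                          sink_bucket: str, subscription: str) -> dict:
    """Build a request body for an event-driven bucket-to-bucket transfer job.

    Assumption: the key names mirror the STS REST API's TransferJob resource.
    """
    return {
        "projectId": project_id,
        "description": "event-driven replication (illustrative)",
        "status": "ENABLED",
        "transferSpec": {
            "gcsDataSource": {"bucketName": source_bucket},
            "gcsDataSink": {"bucketName": sink_bucket},
        },
        # The event stream replaces a schedule: STS listens on this
        # Pub/Sub subscription (an SQS queue for S3 sources) for object changes.
        "eventStream": {"name": subscription},
    }


# Placeholder identifiers, not real resources.
body = event_driven_job_body(
    project_id="example-project",
    source_bucket="example-source",
    sink_bucket="example-destination",
    subscription="projects/example-project/subscriptions/example-object-changes",
)
print(json.dumps(body, indent=2))
```

Note that the job carries no schedule: transfers start when notifications arrive on the stream, which is what makes the list operation against the source unnecessary.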

The new STS capability provides several benefits. Aman Puri, a consultant at Google Cloud, explains them in a Medium blog post:

Because event-driven transfers listen for changes to the source bucket, updates are copied to the destination in near-real time. As a result, the Storage Transfer Service doesn’t need to execute a list operation against the source, saving time and money.

Use cases include:

• Event-driven analytics: Replicate data from AWS to Cloud Storage to perform analytics and processing.

• Cloud Storage replication: Enable automatic, asynchronous object replication between Cloud Storage buckets.

• DR/HA setup: Replicate objects from source to backup destination on the order of minutes.

• Live migration: Event-driven transfer can power low-downtime migration, on the order of minutes of downtime, as a follow-up step to one-time batch migration.

Microsoft provides a similar capability in Azure with Event Grid, allowing event-driven data transfer from a storage container to various destinations. By leveraging a system topic on the storage account and subscribing to blobCreated events through an Azure Function, data from the storage container can be copied to a destination such as another storage container, an AWS S3 bucket, or a Google Cloud Storage bucket. Alternatively, an event could trigger a Data Factory pipeline.

Currently, the event-driven capability is available in various Google Cloud regions, and pricing details of STS are available on the pricing page.

MMS • Renato Losio

Since January 5th, Amazon S3 has encrypted all new objects by default with AES-256 to protect data at rest. S3 automatically applies server-side encryption using Amazon S3-managed keys (SSE-S3) to each new object, unless a different encryption option is specified.

The cloud provider claims that the change puts a security best practice into effect without impacts on performance: S3 buckets that do not use default encryption will now apply SSE-S3 as the default setting. Server-side encryption with customer-provided keys (SSE-C) and server-side encryption with AWS Key Management Service (SSE-KMS) are not affected by the change.
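To tell the three server-side options apart on an existing object, one can look at the encryption fields returned with the object's metadata. The sketch below is a plain Python helper that works on a dictionary shaped like a HeadObject response; it does not call AWS. The key names `ServerSideEncryption` and `SSECustomerAlgorithm` follow the boto3 response format as I understand it, and the function name and return labels are my own.

```python
def encryption_mode(head: dict) -> str:
    """Classify the server-side encryption reported in HeadObject-style metadata."""
    # SSE-C objects report the customer-supplied algorithm instead of a managed mode.
    if "SSECustomerAlgorithm" in head:
        return "SSE-C"
    sse = head.get("ServerSideEncryption")
    if sse == "AES256":
        # S3-managed keys: the new default for buckets without their own setting.
        return "SSE-S3"
    if sse is not None and sse.startswith("aws:kms"):
        return "SSE-KMS"
    return "not reported"


# Hand-written sample metadata, not real API output.
print(encryption_mode({"ServerSideEncryption": "AES256"}))
print(encryption_mode({"ServerSideEncryption": "aws:kms"}))
print(encryption_mode({"SSECustomerAlgorithm": "AES256"}))
```

Objects uploaded before the change may report no encryption at all, which is the case the final branch covers.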

The S3 Default Encryption feature has been available since 2017 as an optional setting to enforce encryption for every object uploaded. Going forward, S3 will automatically apply SSE-S3 for all buckets without any customer-configured encryption setting. Sébastien Stormacq, principal developer advocate at AWS, explains why the change is significant:

While it was simple to enable, the opt-in nature of SSE-S3 meant that you had to be certain that it was always configured on new buckets and verify that it remained configured properly over time. For organizations that require all their objects to remain encrypted at rest with SSE-S3, this update helps meet their encryption compliance requirements without any additional tools or client configuration changes.

The encryption status for new object uploads and S3 Default Encryption configuration is available in CloudTrail logs providing an option to validate that all new data uploaded to S3 is encrypted. To explain the changes, AWS published a Default encryption FAQ, clarifying that S3 only encrypts new object uploads. To encrypt existing objects, the cloud provider suggests using S3 Batch Operations. While no changes are required to access objects, it is no longer possible to disable encryption for new uploads and client-side encrypted objects will now have an additional layer of encryption. Angelica Phaneuf, CISO of Army Software Factory, writes:

This is an amazing release by AWS and will progress the security posture of everyone using their cloud.

Segev Eliezer, penetration tester at LIFARS, comments:

Now they should configure IMDSv2 by default on EC2 instances and update GuardDuty’s IAM findings.

Security blogger Mellow Root thinks that disk encryption in AWS is close to useless and potentially harmful, claiming it is security theater:

I suggest spending your time on IAM permissions, backups, disaster recovery, appsec, or pretty much anything else before disk encryption.

Corey Quinn, chief cloud economist at The Duckbill Group, writes:

This is a clear win for customers. Personally, I find the idea of encrypting objects in S3 at rest to be something of a checkbox requirement and nothing more, but if that box gets checked by default for the rest of time I’m not going to complain any.

The S3 change applies to all AWS regions and there are no costs associated with using server-side encryption with SSE-S3.

MMS • Karsten Silz

Transcript

Silz: My name is Karsten Silz. I want to answer one question in my talk, which is, can we build mobile, web, and desktop frontends with Flutter and one codebase? The answer is yes, we can. I’ll show you. We shouldn’t, because I can only recommend Flutter on mobile. I’ll tell you why.

First, why and how can you build cross-platform frontends? How does Flutter work? I’ll tell you about my Flutter experiences, and then give you some advice on when to use which framework. Who made me the expert here? I did in a sense, because I built a Flutter app that’s in the app stores. I’m also a Java news editor at InfoQ. I help organize the QCon London and QCon Plus tracks on Java and frontend. I know what’s going on in the industry. I’ve also been a Java developer for 23 years. Even though I use Java, Angular, and Flutter, I’m not associated with these projects. I don’t try to sell you books, training courses. I’m not a developer advocate. I’d like to think that I give you options, but in the end, you decide.

Why Cross-Platform Frontends?

Why do we need cross-platform frontends? The reason is because our users are multi-platform. Thirty years ago when I started, I just needed to build a Windows application. These days, we need Mac and Linux, and of course, iOS and Android on mobile. We could try to build with the native SDKs, but that’s too expensive. What we want to do instead is have one framework and one language, and we call that cross-platform. We need cross-platform frontends because they’re cheaper, and they’re good enough. Good enough means good enough for enterprise and consumer apps. I’m not talking about games here. They’ve got separate frameworks. That’s why we need cross-platform frontends because they are cheaper.

How to Build Cross-Platform Frontends

Now that we know why, how can we build them? The answer should be web. That’s our default stack for cross-platform. Why? Because it’s the biggest software ecosystem we ever had, and it’s got the most developers. Granted, it’s a bit hard to learn. You’ve got HTML and CSS, and JavaScript and TypeScript, and Node.js, and npm, and Webpack and stuff. There are a couple of different frameworks out there, React, Angular, Vue are the most popular ones. What does it look like? We use HTML and CSS for the UI, and JavaScript and TypeScript. Case closed? No, unfortunately not because we’ve got issues on mobile. First of all, compared to native applications, we’ve got some missing functionality, mostly on iOS. We’ve got no push notifications, and background sync doesn’t work. We’re also somewhat restricted compared to native applications. We can’t store as much data and don’t have access to all hardware functionality. The apps are often slower and less comfortable in the browser, and they’re missing that premium feel of the native look and feel.

Let me give you an example of why I think native look and feel has advantages. It’s a German online banking app that I use. I had recently started to move from their web version on the left to a native app here on iOS. Why is the native app better here? First of all, on the web version, you need to tap the Hamburger Menu. It’s hard to reach and it’s not obvious, and it’s always two taps, one for the menu, and then one for functionality. The nav menu on mobile is easy to reach, obvious, and it’s just one tap for the most used functionality. The web application also has these little twisties. I’m not sure my mom would recognize that she could actually tap on them. I’m sure she could tap on those big cards on the right-hand side. I think the native app just looks nicer. A native look and feel is important because it looks nicer, and it’s easier to use, because it works like all the other apps on your phone. The Google Apps team agrees with me, because on iOS, they are moving from Material-UI to native iOS UI elements. The responsible Google manager thinks that only with a native look and feel can apps feel great on Apple platforms. If we do that, we actually have two cross-platform frameworks to juggle, we’ve got web and native, because we keep web for PC, and now we’ve got native for mobile.

I’ve been saying native, but that’s really three different things to me. Number one is it runs natively. It’s in the app stores, and it’s an app. Number two, it’s got a native look and feel. Number three, it has access to native platform functionality. Given those criteria, what native cross-platform frameworks are out there? There’s a ton and you can find some more on my web page. I looked for open-source, Java-like ones. I’m a Java guy, and I came up with these four: Flutter from Google, React Native from Meta/Facebook, and then Xamarin from Microsoft, which is currently being rewritten as .NET MAUI. It’s supposed to be out in Q2 2022. Then finally, we’ve got JavaFX, which used to be part of Java, but then got open sourced, and it’s now mostly maintained by a company called Gluon.

Which Framework Is Popular?

Which of these frameworks is popular? Why do I care about popularity? A couple of reasons. Number one, a popular technology is easier to use, easier to learn. There’s more tutorials, more training material out there, and more questions and answers on Stack Overflow. It’s also easier to convince your boss and your teammates. I think popularity can make a difference in two situations. If your options mostly score the same, then you could say, let’s go for the most popular one. Or if something is really unpopular, then you could opt to not use that technology. As it just happens, I measure technology popularity in four different ways. First of all, I look at employer popularity. I do that by looking at how often technologies are mentioned in job ads at Indeed. Indeed is the biggest job portal in the world, and I search across 62 countries. Why is that important? If you go ahead and propose new technology in your team, your teammates rightfully worry, can I find a job? They go to a job portal, and see how many jobs are out there that look for that technology. On the other hand, your boss is worried, can I hire developers for this technology? He also goes and looks in a job portal, because if there aren’t any job ads out there, there may be something wrong with that technology.

Here are the mentions in job ads, going back to August, where React Native had 19,200 mentions, and it now has 25,800. You see these percentages underneath? What do they mean? I use Flutter, the runner-up, as the baseline at 100%. Flutter went from 8,400 to 10,400. We’ve got Xamarin as well going from 5,200 to 5,800. It increased a bit as well, roughly still at 60% of Flutter’s volume. Then JavaFX dropping to about 6% of Flutter’s volume with 600 mentions worldwide. I think the initial two numbers were inflated artificially by wrong measurements on my side. The takeaway here is that React Native added 6,600 ads, whereas Flutter only 2,000. Now I’m looking at developer popularity: how many courses are bought at Udemy, one of the biggest online training sites? Money being spent on courses is another good indicator. It goes back to March of last year. There, Flutter leads, going from 1.3 million students to 2.1 million. Here, React Native is the runner-up, going from 812,000 to 1.1 million. Xamarin here is a lot less compared to React Native, just about a quarter of its volume.

Then JavaFX, recently plateauing at about 16% of React Native’s volume. We see Flutter increased by nearly 800k, React Native only by 320k. Another developer popularity measure is Google searches. Starting here, JavaFX peaking in December 2008, and Xamarin the third most popular one peaking in March 2017. React Native as the number two, peaking in July 2019, and Flutter peaking in March 2022. We can see that Flutter has about twice the search volume that React Native has. Last one for developer popularity is questions at Stack Overflow. We can see here JavaFX being number four. It peaked in the end of 2018. Xamarin peaked at the end of 2017. React Native peaked at the end of 2021. Flutter just peaked at the beginning of 2022. We can see that Flutter has about twice the number of questions as React Native. If anything, React Native should probably have more questions, because Flutter is a batteries included framework, and React Native gives you a lot more options and leaves you a lot more freedom. Naturally, you would expect more questions just out of general use, but still Flutter, twice as many questions as React Native. If you want to sum up popularity, employers love React Native, where React Native leads by 2.5x, and it’s pulling away from Flutter. Developers love Flutter, where Flutter leads by 2x, pulling away from React Native. If you’re interested in more technology popularity measurements, in my newsletter, I also measure JVM languages, frameworks, databases, and web frameworks.
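The job-ad percentages and the 2.5x lead can be reproduced from the latest counts quoted in the talk. The short Python sketch below does only that arithmetic; the function name is mine, and the counts are the rounded figures as spoken, so the results are approximate.

```python
# Latest job-ad mentions as quoted in the talk (rounded figures).
job_ads = {"React Native": 25_800, "Flutter": 10_400, "Xamarin": 5_800, "JavaFX": 600}


def relative_to(baseline: str, counts: dict) -> dict:
    """Express every count as a whole-number percentage of the baseline's count."""
    base = counts[baseline]
    return {name: round(100 * n / base) for name, n in counts.items()}


# Flutter is the 100% baseline, as in the talk.
shares = relative_to("Flutter", job_ads)
for name, pct in shares.items():
    print(f"{name}: {pct}%")

# React Native's lead over Flutter as a multiple.
lead = round(job_ads["React Native"] / job_ads["Flutter"], 1)
print(f"React Native leads by {lead}x")
```

With these inputs Xamarin comes out at 56% and JavaFX at 6% of Flutter's volume, in line with the "roughly 60%" and "about 6%" mentioned, and the React Native ratio rounds to the quoted 2.5x.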

The summary is that web is the biggest ecosystem we ever had with most developers. It’s a bit hard to learn, but we’ve got React, Angular, and Vue as the leading frameworks. For native, meaning running natively, native look and feel, and access to native functionality, we need that on mobile. Flutter is loved by developers, but employers prefer React Native. We also have .NET MAUI and JavaFX, which are less popular. That’s how we can build cross-platform frontends.

How Flutter Works

How does Flutter work? This is Dash, the mascot for Flutter and Dart. Flutter is a Google project, so of course the question on everybody’s mind is, will Google kill Flutter? That’s a hard earned reputation. We’ve got entire websites dedicated to how many projects and services Google killed, here this website counts 266. What’s the answer? Will Google kill Flutter? We don’t know. What’s bad is that Flutter has in-house competition, for instance, Angular. You may not think it, but if you look at the Angular web page, it says it is for web, mobile web, native mobile, and native desktop. That clearly is very similar to Flutter. It’s confusing. Should I use Angular or should I use Flutter? Then we’ve got Jetpack Compose, which is Flutter for Android. On the plus side, there’s external commitment to Flutter. For instance, Toyota will use Flutter to build car entertainment systems. Canonical uses Flutter to rebuild Ubuntu Linux apps. We also saw that it’s popular with developers, and in second place of popularity with employers. That’s also a plus.

Flutter supports multiple platforms. How long have they been stable? Mobile went first, more than three years ago, then web in March of last year. Then PC, Windows, we saw that earlier this year. Linux and Mac are hopefully becoming stable, somewhere towards the end of this year. Flutter uses a programming language, Dart. It isn’t really used anywhere else, so it’s probably unknown to you if you haven’t done Flutter. That’s why I’d like to compare to Java, because Java is a lot more popular. Here’s some Java code with a class, some fields, and a method. What do we need to change to make it into Dart code? You saw there wasn’t really a whole lot, just a different way of initializing a list. That’s on purpose. Dart is built to be very similar to Java and C#. Although this is Dart, this is not how you would write Dart. This is what concise Dart looks like, simpler variable declaration and simpler methods. For comparison, this is what Java looks like, so Java is more verbose. Dart was originally built for the browser and didn’t succeed there, but it did succeed with Flutter. You could say it’s a simplified Java for UI development, but it has features that Java doesn’t, like null safety, sound null safety, so fewer null pointer exceptions, and async/await borrowed from JavaScript to handle concurrency. Like many UI frameworks, it’s got one main thread, and you can also create your own threads, which are called isolates. In my app, I didn’t use that so far, and UI is still pretty fluid. Doing stuff on the main thread here works in Flutter.

Dart is really only used in Flutter. They both get released together, which means that Google has a chance to tune Dart for Flutter. Let me give you an example of syntactic sugar that Google put into Dart. Here, we’ve got some Dart code, Flutter code to be precise. We’ve got a column with three text fields. If you look closely, then you see we’ve got this, if isAdmin, and it does what you probably expect it does. Only if that isAdmin flag is true, you actually see the password fields, only admins see the password field. Of course, you could do that differently, you could define a variable and then have an if statement. This is more concise, because it’s not separate. It’s used in line here in the declaration. That means it’s more concise, less boilerplate code. Again, that’s an example of syntactic sugar that Google can put into Dart and Flutter.

Libraries are called plugins in Flutter. There’s this portal that you can go to and you see there are about 23,000. Most of them are for mobile, not all are for web and desktop. Most of these plugins are open source. There is a good plugin survival ratio. I use many in my app, and over the last year and a half, none of them got abandoned. There’s even a team of developers that takes care of some important plugins if they actually do get abandoned. If something is wrong with a plugin, if you see a bug, or if you want to change it, then you also have the option of just forking it, and then putting the Git repo URL directly in your build file, so it makes it very easy to use a fork of a plugin. UI elements are called widgets. The most important feature here is that widgets are classes. You don’t have a graphical UI builder. There’s no CSS, no XML files. It’s just classes, everything is just attributes and classes and code. That’s really good for backend developers, because that’s the kind of code they’ve always written.

You’ve got to configure widgets. You configure the built-in widgets, mostly, but you can also create your own widgets. The important feature here is that Flutter doesn’t use the native UI element. Instead, it emulates them with a graphic engine called Skia. Skia is used in Chrome, Firefox, and Android. Again, no native SDK UI elements are used because Flutter paints pixels. For look and feel, you get widget sets, and there’s three different categories. The first one is the stuff that you can use everywhere. You’ve got container, row, column, text images. You will use these widgets no matter what platform you’re on. Then you’ve got two built-in widget sets, the Material Design which you can use everywhere, and that’s the native look and feel on Android, and you’ve got the iOS widget set. Then through third party, you get the look and feel on macOS, Windows, and Linux.

Sample Flutter App

I created a sample Flutter app, which has five native look and feels with one codebase. Here’s the sample Flutter app. Here, my sample application is running in an iOS emulator, it is an iOS UI. If I start typing something, then you can see the label disappearing. I got an iOS dialog, but because Flutter just paints pixels, I can switch this over here to look like Android. Now here, as you can see, I can type my name, the label remains and the dialog boxes look like Android. Now I’ve got the sample application running in a web browser, meaning that I’ve got Material-UI with a Hamburger Menu. I’ve got the Material-UI form here with the Material-UI dialogs. Let me do the same thing here. I want to switch over to a Windows look and feel, so now you see on the left-hand side a Windows look and feel and the form fields look different. You can see there’s a different dialog here too. Now I want to switch over to a macOS look and feel. You see it doesn’t work perfectly. There’s something here, a bug, I think it’s a Flutter bug. Now the form here looks different, more like iOS, and the dialog looks different too. That’s all possible because Flutter just paints pixels.

Let me recap what we just saw. We saw five native look and feel, and we could switch between that. We can switch between a look and feel because Flutter just paints pixels, it doesn’t use the native UI elements. You can see that if you dive into the web application, you see everything on the left, you’ve got buttons and a form field. On the right when you look, it’s really just a canvas, which you see down there. Flutter paints pixels. The sample app is on GitHub. How does that switch work? It works probably the way that you expect it to. I created my own widgets. Then each of these widgets has a switch statement, and depending on which platform I’m running, then I create either an iOS, an Android, or macOS, or Windows widget.
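The switch described above can be sketched in a few lines. The real sample app is written in Dart with Flutter widgets; the Python below is only a language-neutral illustration of the pattern, and every name in it (`Platform`, `Button`, `adaptive_button`, the style strings) is hypothetical rather than taken from the sample app or from Flutter.

```python
from dataclasses import dataclass
from enum import Enum


class Platform(Enum):
    ANDROID = "android"
    IOS = "ios"
    MACOS = "macos"
    WINDOWS = "windows"


@dataclass(frozen=True)
class Button:
    """Stand-in for a platform-specific widget; only records which style was chosen."""
    style: str
    label: str


def adaptive_button(platform: Platform, label: str) -> Button:
    """One wrapper widget that picks the platform-specific widget at build time."""
    if platform is Platform.IOS:
        return Button("ios", label)
    if platform is Platform.MACOS:
        return Button("macos", label)
    if platform is Platform.WINDOWS:
        return Button("windows", label)
    # Material is the fallback, since it is the widget set usable everywhere.
    return Button("material", label)


# The same call site yields a different look and feel per platform.
for p in Platform:
    print(p.value, "->", adaptive_button(p, "Save").style)
```

Because the framework paints every widget itself, the platform value can be overridden at runtime, which is what makes switching the look and feel inside one running app possible.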

Architectural Choices

When you build a Flutter app, you have to make four architectural choices. The first one is, how do you handle global state in your application? The default way is something called provider. I use Redux, known from the web world in my app. Second is, what kind of widget set do you want to use? Do you use Material, do you use native, do you use custom, or do you mix them? I use native iOS and Android. Routing, there’s a simple router built in called navigator, which is fine for most cases. There’s a more complex one, which you probably don’t need. I use the simple one, the navigator. When it comes to responsive layout, that means to adapt to different screen sizes orientation, there’s nothing built into Flutter. You have to resort to a third party plugin. I use a plugin that takes the bootstrap grid, and applies it to Flutter.

Native integration means two different things. Number one is it means using Flutter in native apps, which works on iOS and Android. The WeChat app is an example here. WeChat is that Chinese app that does everything. A couple of screens were added to this app, and they were built with Flutter, whereas the rest of the application remained in native. That doesn’t currently work for web and desktop. At least for web, it’s under construction. What’s more common is the other way, to use native code in Flutter. You mostly do that through plugins. Stuff like camera, pictures, locations, you don’t have to write native code, because you use a Flutter plugin. Now on mobile, you can also show native screens, and the Google Maps plugin uses that to show you the native iOS and Android screens. You can also have a web view and show web pages in your Flutter app. Finally, you’ve got on mobile, at least a way to communicate with native code through channels, which is asynchronous publish and subscribe. Your Flutter code, your Dart code can call into iOS and Android code, and the other way around. Then there’s even a C API for some more hardcore cases.

Flutter apps run natively. How do they do that? They rely on the platform tool chains. That means if you want to build Android, you need to use Android Studio. If you want to build for iOS and macOS, you have to run Xcode on macOS. If you want to build for Windows, you have to run Visual Studio, the real Visual Studio for Windows; the Community Edition will do. The engine gets compiled down to JavaScript and C++ on the web, and C++ everywhere else. Your app gets converted, compiled into a native ARM library on mobile, JavaScript on the web, C++ on Windows and Linux, and Objective-C on macOS. Code build and deploy is important to Flutter. Why? Because they’re striving for an excellent developer experience. I think they’re succeeding, and I believe that’s part of the reason why developers prefer Flutter over React Native.

If we look at the code, what IDEs can we use? Two are officially supported, IntelliJ/Android Studio and Visual Studio Code. Flutter has developer tools, and has a couple of them. The first one is the inspector, which shows you the layout of your application. Then we’ve got the profiler for memory, CPU, and network helping you debug your application. A debugger is there as well. We even have a tool for jank diagnosis, which means if your application doesn’t run as fluid, doesn’t hit 60 frames per second, you get some help there. The important part is that all of these are not just in an IDE, they also run when you launch your application from the terminal, because they’re built as a Flutter web application so they’re always available.

Project structure is a monorepo, so you get the code for all the platforms in one Git project. You’ve got one folder for Dart, and then one folder per platform. Some of these folders actually contain projects for other IDEs. macOS and iOS are Xcode projects. Android is an Android Studio project. Then launching a native application happens through shell files, so you get an app delegate Swift file, or a main activity Kotlin file that kicks off the application.

Flutter has a fast build and deploy. That’s important especially on mobile, because there it’s slow. Deploying your iOS or Android app could easily take 30 seconds, a minute, or even more, just to see your application changes live in an emulator or on the phone. Flutter, on the other hand, uses a virtual machine during development. That allows it to do something that’s called hot restart, where within 3 seconds, the entire application gets set back to its starting point. More important is hot reload, where within 1 second, your changes are live and running. That’s really something that keeps you very productive with Flutter, because you can see your changes live, instantly. You don’t have to wait 30 seconds or a minute until your changes are active, like you sometimes do with native development. I think that’s one of the main reasons why developers like Flutter so much, because within one second, your changes are live.
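To put those numbers in perspective, here is a small back-of-the-envelope calculation in Python. The 30-second and 1-second figures are the ones quoted above. The 100 changes per day is purely an assumption for illustration, not a number from the talk, and `waiting_minutes` is a made-up helper name.

```python
def waiting_minutes(changes, seconds_per_change):
    """Total time spent waiting for changes to go live, in minutes."""
    return changes * seconds_per_change / 60


# ASSUMPTION: 100 code changes per day is a hypothetical workload.
CHANGES_PER_DAY = 100

native_deploy = waiting_minutes(CHANGES_PER_DAY, 30)  # 30 s per native deploy
hot_reload = waiting_minutes(CHANGES_PER_DAY, 1)      # 1 s per hot reload

if __name__ == "__main__":
    print(f"native deploy: {native_deploy:.1f} min, hot reload: {hot_reload:.1f} min")
```

Under that assumption, the waiting time drops from 50 minutes a day to under 2 minutes, and the gap widens further when a native deploy takes a minute or more.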

Flutter Platforms

Let’s take a look at the Flutter platforms. On mobile, I give it a thumbs up. Why? Because you get two apps for the price of one. You get native look and feel. You get access to native functionality. You’re even faster with build and deploy than native applications. On the web, I give Flutter a thumbs down. Why? Because the only UI elements we have are Material-UI, versus hundreds or thousands of component libraries and skins on the web. You also don’t have access to the native libraries, which on the web would be all these JavaScript libraries. Because of that, on the web, you just have a tiny number of libraries, just the subset of those 23,000 Flutter libraries that works for web as well, versus hundreds of thousands or millions of JavaScript libraries out there. On the desktop, I’m also giving Flutter a thumbs down. Why? First of all, why don’t you use a web application? Why do you want to build a desktop application? Users are used to web applications on the desktop, and web browsers have fewer restrictions there than on mobile. On the desktop, only Windows is stable, and only just barely, for a couple of months. Mac and Linux are currently not stable, so you couldn’t really use them in production right now. You do have access to native platform functionality through C++. Unfortunately, the UI sets for Windows and macOS are incomplete. For instance, there is a dropdown missing on the macOS side. I’m not sure that all of these widget sets will be accurate and maintained going forward, because that’s a lot of work.

Let me sum up here. Dart is a simpler Java tuned for UI. Plugins are the libraries. We’ve got a decent amount, and they’re easy to work with. Widgets are the UI elements. You can configure the built-in ones or create your own, and they’re emulated. Flutter doesn’t use the native UI elements. Widget sets give you the look and feel. Material and iOS ship with Flutter. We’ve got more, like macOS or Windows, through third parties. On mobile, you can embed Flutter into native apps or use native screens and code in Flutter. Flutter runs natively because it compiles to native code using the platform toolchain. Flutter has great DevTools and gives you a monorepo for all platforms, and has a fast build and deploy on mobile. In the platform check, Flutter only really shines on mobile, less so on web and desktop. That’s how Flutter works.

My Flutter Experiences

On to my Flutter experiences. I am the co-founder of a startup. We are a B2B software as a service for Cat-Sitters. Our value proposition is that our apps remove friction and save time. I wrote all the code. Here is what it looks like under the hood. The backend is Java with Spring and a relational database. I use Firebase for authentication and file storage. The frontend is an Angular application for the manager, and a Flutter application for the Cat-Sitters. What’s the business case for Flutter? Why did I choose to use Flutter? Number one is I wanted to have unlimited storage and push notifications, so no restrictions here. I wanted the app to be as fast and easy to use as possible, and so I needed a native UI for them. Then when I looked at my prototype, and looked at Dart, which is similar to Java, and the fast code build and deploy cycle, I realized I could be very productive with Flutter. That’s why I picked Flutter. Flutter on mobile gets a thumbs up from me. It works as designed, you get two apps for the price of one. It has some minor quibbles, like the simulator: it doesn’t always stop the app, so you have to kill it. That’s ok. If something goes wrong, then it’s usually Apple breaking stuff. For instance, I haven’t been able to paste from the clipboard into the Flutter app in the simulator for a couple of months now. Apple broke it, and I’m not sure when this will be fixed.

What’s good is that the Flutter team actually listens. A year or two ago, there was some concern about bugs piling up, so Flutter started fixing a lot more bugs. They also have quarterly dev surveys where you can give them feedback. Flutter paints pixels. It emulates, it doesn’t use native UI elements. It works well on iOS and Android, at least. It has some quibbles on iOS. For instance, the list tile doesn’t exist as a widget, so I cobbled together my own using some plugins. The one thing that’s a bit annoying is that when you deal with native UIs, Flutter doesn’t really give you help coordinating that, because the Flutter team, when you ask them, says you shouldn’t use native UI, you should customize Material-UI instead. It’s manageable.

Native Look and Feel: iOS vs. Android

Let me give you some examples of the native look and feel in my app, iOS versus Android. First, we’ve got iOS on the left and Android on the right. If you know your way around these two platforms, then you can see that on iOS, we have the button in the upper part of the screen, whereas on Android, we have a floating action button. iOS has a list indicator, whereas Android doesn’t have that. Here are some detailed screens from iOS. This one here is a recent addition, the animated segment control that took a couple of months until it showed up in Flutter because it needed to be added to the library. Then down here, I told you that there is no built-in list tile for iOS, so this is the custom control that I created based on some plugins and some customization of my own. Then finally, here, we see some buttons. They are the same on Android. This is a place where I use my own design, not using native buttons.

I also give a thumbs up to Firebase. What is Firebase? Firebase is Google’s backend as a service on mobile, web, and server. It’s got mostly free services like authentication and analytics, and some paid features like database or file storage. Especially on mobile, it’s helpful because it gives you one service instead of two. For instance, for crash logging and test version distribution, both iOS and Android have their own version. Instead of using these two separate services, you just use the one Firebase service. It works well, and has good Flutter integration. You’ll find some more stuff on my talk page, for instance, how to use the power of mobile devices, how to keep UI cracked with one source of UI truth, how you could be consistent between your web app and your mobile app, and how back to basics also applies to mobile apps. These are my Flutter experiences.

Summary

Let’s just sum up what we’ve heard so far. Why do we need cross-platform frontends? We need them because they are cheaper, and good enough. How can we build cross-platform frontends? The default answer is web because it’s the biggest software ecosystem ever with the most developers. React, Angular, Vue are popular frameworks. We need native: running native, native look and feel, and access to native functionality, especially on mobile. Developers like Flutter, employers prefer React Native. Then there’s .NET MAUI and JavaFX, which are a lot less popular. We also talked about how Flutter works. Dart is a simpler Java tuned for UI. Plugins are the libraries, easy to work with. Widgets are the UI elements, they’re emulated. The widget sets give us the look and feel. Material and iOS ship with Flutter. We can get others through third party plugins. Native means access to all native functionality on mobile, which is a plus. Flutter runs natively because it compiles to native code with platform toolchains. It’s got great DevTools, a monorepo for all platforms, and fast build and deploy on mobile. Flutter really only shines on mobile, less so on web and desktop. As for my Flutter experiences, the business case was to overcome restrictions, have a fast UI, and be productive. Flutter works, two apps for the price of one. Flutter paints pixels, which also works. Firebase is also good because it offers free and paid services, which means one service instead of two separate ones on mobile. It also works.

Flutter vs. World

Let’s compare Flutter versus the world in two cases here. Number one, React Native versus Flutter, the arch enemy here. React Native uses JavaScript. Flutter uses Dart. React Native is a bit slower because it’s interpreted JavaScript at runtime. Flutter compiles to native and that’s why it’s faster. React Native uses the native UI elements, but Flutter paints pixels, emulates stuff. With React Native, we’ve got two separate projects, one for the web, and one for mobile. With Flutter, we get a monorepo for all supported platforms. Desktop support in React Native is unofficial for macOS and Windows, but with Flutter, it’s official: macOS, Windows, and Linux. Number two, I think Flutter fits Java very well. Why? Because it’s much more mature and popular than JavaFX. You’ve seen that here. Even though Dart is a different language, it’s similar to Java. It’s a simpler Java. You write UI as code with classes, and you can keep two of the three IDEs in Java for Flutter. The big question: when to use which native cross-platform framework? I go by developer experience. If you’re a web developer, then use React Native. If you’re a .NET developer, then use .NET MAUI. For everybody else, I recommend Flutter.
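The rule of thumb at the end of that comparison is simple enough to write down as a tiny Python function. This is only a sketch of the speaker's stated recommendation. The function name `recommend_framework` and the background labels `"web"` and `".net"` are invented for this example.

```python
def recommend_framework(background):
    """Map a developer background to the framework recommended in the talk.

    The labels "web" and ".net" are hypothetical keys chosen for this sketch.
    Any other background falls through to Flutter.
    """
    key = background.strip().lower()
    if key == "web":
        return "React Native"
    if key == ".net":
        return ".NET MAUI"
    return "Flutter"


if __name__ == "__main__":
    for who in ("web", ".NET", "Java"):
        print(who, "->", recommend_framework(who))
```

Flutter is the default branch rather than a special case, which matches the way the recommendation is phrased: two specific backgrounds get their own framework, and everybody else gets Flutter.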

Can we build mobile, web, and desktop frontends with Flutter and one codebase? The answer is yes, we can, but we shouldn’t. I’m really only recommending mobile here, not web or desktop. Why? Because on mobile, we get two apps for the price of one. We can do everything that native apps can do with a faster build and deploy. On the web, we only have Material-UI as the UI elements, and we’ve got very few libraries compared to the JavaScript ecosystem. On the desktop, we probably shouldn’t be building a desktop app to begin with, and only Windows is stable there.

Resources

If you want to find the slides and the videos, additional information, the native UI sample app, want to get started with Flutter, links to tutorials and other information, or you want to get feedback, subscribe to my newsletter, then you head to this link, bpf.li/qcp.

Questions and Answers

Mezzalira: Have you ever played with Dart, server side?

Silz: I have not. I’m a Java guy. That’s just a much bigger ecosystem there. No comparison. I think there are some that do Dart on the server side, but there are also people that use Dart for other frameworks. I wouldn’t recommend it. There are much better options on the backend.

Mezzalira: Knowing the power of Java and the Java community I can understand.

You talked about how Flutter is gaining traction at the developer level. What about the organization level? Can you share your point of view on how the C-suite, and especially the technical department, would think about Flutter, given that it has good penetration among developers?

Silz: I think there are probably three things here. Why do people want to hire React Native developers a lot, but when you look at developers, they prefer Flutter? I think there are three reasons here. Number one is that if you’re using React on your project, and a lot of people do, then I think React Native as a mobile framework does make sense. That’s what I’m recommending. I think there’s some push from that perspective. The second thing is, I think people are just wary about Google stuff. I hear that often when I talk about Google or a Google project. They say, when are they going to abandon it? There’s a reason why these web pages are out there. I think people are a little bit wary. Then they look at Facebook, and they say, Facebook has React and React mobile, so they can’t kill it, because then they can’t build their apps anymore. The third reason is that Flutter explicitly says, we want to have a great dev experience. Smartly, they published their roadmap for the year and they said, nobody has to use Flutter, because there’s the built-in base options there. There are other frameworks out there. We have to give developers a great experience so that they actually want to use Flutter. That’s why they put a lot of money into tools. I think that pays off. I think the tool side is probably stronger than what you get on React Native.

Mezzalira: How do you feel about Flutter supporting all the feature changes from the UI style on iOS and Android?

Silz: The background is, as I said, Flutter doesn’t use the native UI set. It uses emulation, so it has to paint everything itself. I think the answer there is threefold. Number one, I’m feeling really good about Material, because the Material stuff is Google’s own design language. They recently went through some changes. It used to be called Material Design. Now it’s Material You, and there are some changes. The Flutter team already said, we’re bringing these changes in there. I think there’s no worry about the Google Material Design language. It’s got the home turf advantage. Number two, the iOS UI set, or widget set on iOS and iPadOS, is ok. There hasn’t really been a big change there. They only made some smaller changes, and those came into the widget set as well. For instance, there was an animated segment control. In the past, you had the static one, and then came the animated one where the slider moves around. That took a couple of months, but it’s there. Now, if iOS ever makes a big UI redesign, will that come to Flutter? I think probably, yes, as well. It may take a while. What I’m most worried about is the third party ones, the macOS and Windows ones. Those are built by hobbyists. They’re currently not complete. On macOS, there’s no dropdown in those widget sets. That’s what I’m most worried about. Will they ever be complete, and will they remain up to date? Because both of these UI sets, the macOS and Windows ones, are somewhat in flux. That Fluent, modern design of Windows is still developing, and macOS is still going through some changes there, too.

Mezzalira: Does Flutter repaint the entire UI for a small change? If yes, wouldn’t this be a costly operation?