Month: March 2023

MMS • Peter Pilgrim

Article originally posted on InfoQ. Visit InfoQ

Transcript

Pilgrim: My name is Peter Pilgrim. I’m going to be talking to you about this, software engineering, sustainable empathic capacities, an excursion on the lived experience to minor creative genius. I am a Java champion, a senior manager at Cognizant. Previously, I was an independent contractor. I had this expansive view of software engineering. The first school of thought is that I would give an autobiographic view of my lived experience in software engineering. The second school of thought is that we are journeying, at least in my humble opinion, to empathy, to express empathy within our discipline.

Remote Sacking/Firing

First a corporate statement. The company has made a decision that the future of its vessels going forward will be crewed by a third-party crew provider. Therefore, I’m sorry to inform you that your employment is terminated with immediate effect on the grounds of redundancy. Your final day of employment is today. Remote sackings and firings have happened very recently. British people might not have heard of better.com, but Americans and Canadians have definitely heard of Vishal Garg, who fired over 900 employees over Zoom, on the 7th of December. How heartless and lacking in empathy was this, in the month of December? Yes, that’s it. Then on the 17th of March, 2022, in Britain, on these shores, P&O Ferries dismissed 800 members of its shipping staff, primarily from the Port of Dover. The British government didn’t take too well to this, and the ramifications are still happening now.

What Is Empathy?

What is this thing that we are talking about? What is empathy? Where does it come from? This is a picture of the Homo sapiens brain. It turns out, it’s a concoction. It’s from a German word, Einfühlung, which is a German psychological term, literally meaning feeling-in, inner, inside, intrinsic, the inner feeling. Germans are famous for inventing words by putting two separate words together, so combining Ein, which is inner, and fühlung, which is feeling, to have this feeling in, or what we translate now, thanks to two scientists at Cambridge and Cornell University in 1908, as Empathos. Em is for in. Pathos is for feeling. That has been the early translation. This is why we have the word empathy in the English language. Thanks to Susan Lanzoni of Cambridge University Press.

Now for the technical details, or rather, the medical details. What seems to happen is we have an organ deep inside our brain, called the amygdala. This thing that looks like a grape or a squashed grape, and we actually have two of these, because this picture is a cross section of the brain. We have our famous gray matter here, or the lobes, the frontal and parietal lobe, and the occipital lobe here, and the famous cerebellum, where we do a lot of our processing at the back of our brain. What seems to happen is that we have five senses, and I will concentrate just on one, the visual sense. When a photon hits the retina, a signal is transferred straight through our brain from left to right. Reaching in and passing through the hypothalamus, which is this orange-yellow bit that looks like a bug’s head, looks like a squashed potato, it reaches the back of the occipital lobe here. It enters the cerebellum, and then magically, these signals are transferred via the hippocampus to the amygdala.

What happened recently, it’s been known since the 1960s, is that these signals hit the amygdala very quickly, so quickly they’re known as microexpressions or microsignals. They are the result of, I suppose, voluntary and involuntary emotional response conflicting with another human being. If you think about it, this is the very essence of communication. Here’s another human being: is he threatening me, is he friend, is he foe? What is it? We have to develop these social behaviors. This is because in the purple section here, we have an ancient part of our brain from when we first evolved through the millennia, or millions of years, from amphibians that first crawled out of the sea. We had lizard brains. What has happened through evolution is that we’ve kept on extending this brain structure, which we need.

It seems the amygdala is the heart of fear and flight anxiety. It’s part of our lizard brain, the limbic system. It’s responsible for connections, emotional learning, and memory regulation. That’s where the cerebellum comes in. It’s very complicated, and scientists are still trying to work out what is going on there. Essentially, emotions and empathy, and any chance of empathy comes from this little organ that looks like a grape or two grapes. Because we have one on the left and one on the right side of our brainstem, in our brains. We have two of them, just like two kidneys.

This is Labi Siffre, the famous singer, songwriter. I was listening to him a couple of days ago in fact, on a radio program, an interview he gave. I just quoted him here. “Humans tend to hate nuance.” I wonder why, especially in 2022. “We want to put things in a box, we want to compartmentalize. However, the reality is that there’s nothing simple about anything we do with human beings.” I love this next part. “We are complexity upon complexity upon paradox on toast.” That describes us. We have toast, the amygdala, and it’s pure. Because we have these lobes and this interpretation, we mix it all up, together. This is from the brilliant Labi Siffre talking about his musical performance, and what he thinks about musical performance. That is from the BBC, Colin Murray show, Midnight Meets, from the 4th of May, 2022.

I love this quote from Vex King. “It is your subconscious mind that is responsible for your beliefs. All that you perceive is a result of what you accept as true in the conscious mind.” You can choose to bury certain facts about the world, or have a totally different, independent view of the world from most human beings and what is deemed as reality. I would get vexed by Vex King, but you must live in a real world. How many people have had that said to them? The real world is what you actually believe to be true. You never suffer bullies or people when they force or coerce you into accepting something else that you know is true. That is not the way to live.

For me, as I was talking in the QCon London conference there, I had this observation in my life that, a change of contradictions, a series of disruptions that invalidates the assumptions or surroundings is a rug pull. I learned from my physics teacher who said, any news of the world that breaks the illusion, a system in a steady state condition is a whirlpool. My old physics teacher is proven right time after time. This isn’t me doing a somersault or the backflip, but it’s the start of my lived experience. I went to a secondary school called Spencer Park in South London. Just like Harry Potter, we wore blazers to school, in a secondary school. I have this coat of arms, Dieu défend le droit, God defends the right, on my blazer here.

Feeling for Another’s Suffering/Vulnerability

I think at 13 years old, I finally figured out what I wanted to be in life. The first thing that happened is that I discovered Research Machines 380Z in the 1980s, because I joined the electronics club, [inaudible 00:10:55] and I, and Pete went to the computer club, and learned BASIC. What got me into computer club was there was a friendly face here, an empathic face. A face that I could ask, how does a computer work? I went to a parents’ evening where you could get the kids into extra-curricular subjects and you can stay behind after school and try certain things. I happened to talk to Mark and ask him, he was also in my school year, how does this computer work? He showed me. From that friendly face, this is why I’m talking to you now. This is proof beyond doubt that empathy does work, at least for me. Eventually, I persuaded my mum to get me an Acorn Electron. What I really wanted was the top right here, the BBC Micro Model B, but that cost £400. I ended up with the lower left, Acorn Electron. In the end, because the Acorn Electron is a cut-down BBC Micro, I was still able to program primitive games, learn assembly language, and write code like this that showed graphics. I honed my chops, just like a guitarist really, or a musician. I started coding. I really wanted to know more about it. I knew at 13 years old I was going to be something to do with computing. I didn’t know if it was going to be a software engineer or architect, I knew this was my vocation. I didn’t know how I was going to get there.

What I’m talking about here, I’m talking about the feelings for another, which is the seventh empathic capacity, which is, we express vulnerability when we’re learning. It takes somebody with compassion, and maybe even pity, and somebody who is informed enough to give us guidance. Mark could have told me to just go bugger off, or he could have been in a bad mood. Because he was there on that parents’ evening. Because I can imagine, I can play this game called perceptual positions, and imagine standing in Mark’s shoes, and a young black kid comes up to him and says, show me how this computer works. Maybe I was rude. I could have been good. I could have asked him. I cannot remember. I owe it to Mark because he set me on the road.

Why Are You Here?

Fast forward. This would have happened to you as well. If you perform and close your eyes, you will know how you found this mentor yourself. Once you always know, because you’re here watching this program, you can make it work for you always, just by using your cerebellum and imagining that you could get back to that moment when someone shows you your first code, your first project, your first laptop, your first ideas, or pointed you in a direction to become who you are or will be tomorrow.

Dealing with Restructuring

Let’s move on with a story. Graduated, ended up working in Germany. Came back to Britain in the mid-1990s just in time for Blur versus Oasis, discovered that. In fact, instead of working in the outskirts of London, the heart of the matter, or the technology was in central London in investment banking. At the same time, I knew about Bjarne Stroustrup and C++. I wanted to be just like the next Stan Lippman. I already became a Linux system admin here, almost did. Then, I happened to chance on to and stumble onto Java. I had stumbled onto Java a year before, but it didn’t click with me. When I joined banking, I said, this is Java? Then my life had changed. I was still interested in C++ a bit, but then Java definitely took over, by the millennium.

At the turn of the millennium, there was Deutsche Bank, which was the first investment bank that I worked for. This is the office environment that I worked in, like hundreds of people with the old cathode ray tube monitors, maybe a few flat screens in this. This is before the financial bubble burst. Then, everything was hunky-dory, just like you’re using the best technology in the summer of the year 2000, and that lasted for another year to 2001. There was Java. There were all these Dotcoms, fabulous technologies. Java 2 was just about to come out. We still didn’t have broadband yet, if I remember, still had a bit of dial-up to get through. I was happy to hack on the bank’s computers there because they had the most up to date technologies there. It’s easy to be trapped in that environment. This is not less about empathy, but you can get trapped in a moment, the rewards of the environments that exist now, that means you can forget about tomorrow. September 11th happened, and the Dotcom bubble burst. I wish I was back at 1988. Life was a little bit simpler.

This is the biography continuing. Ten months later, my job was gone. There was Summer in the City. I became very lazy and very despondent. Ended up watching the football World Cup. This is a guy called David Beckham, who’d take fabulous free kicks. Years later, I discovered agility and retrospectives, nearly 10 years later. If I could write or mark this on the board right then in the summer of 2001 and 2002 with a purple marker, this would be my positivity and how I felt. Because restructuring took it all out of me really. I was feeling really chipper, because I got money in compensation. Then everything trended down from there. You might wonder, what has this got to do with empathy? This is George Clooney and Anna Kendrick in Up in the Air, the movie, which does talk about restructuring and job loss. When you hit a downturn, you will become depressed, unless you’re one of these very lucky people who managed one week later to be rescued or find the next gig immediately. Banks then, especially Deutsche Bank, they paid for outplacement, a decent compensation, half a year’s salary, and help to kickstart a new job search and a new CV. A very good movie to watch, if you’re going through this.

Then, years later, I realized that everybody is sharing this shared pain, so feeling as another does in the shared circumstance. It’s birth, marriage, bereavement, war, famine, hunger, success, winning the world cup. Those are shared circumstances that you can share, that others can share with you. That is an empathy connection, which is useful in teams, for those of you who are managing, or thinking of managing. The first rule about restructuring, my advice, no matter, because it will happen to you. Your job is made redundant, you are never redundant. Say that again and say it well, your job is made redundant, you are never redundant. If you remember that phrase when it does eventually happen to you, because nobody ever escapes.

What do you have control of? You have control over you, your influence, and your reach. If you set fire to the school, with pupils in, you are influencing and harming students, as well as the teachers and the caretakers, so you have control and influence, and you have reach. The reach is to connect people to the wider community. It’s a very negative example, I know. Then let’s be Afrika Bambaataa about this in hip hop, turn that negative into a positive. That’s what I did with myself. I needed to feel restructuring. I needed that disappointment to lose the semifinal, or quarterfinal, if not the final itself in the tournament. Because I then asked questions about myself like a sportsperson. Where’s my community? What do I want to do? Should I stay with computing or leave it? I had empathetic thoughts. I was feeling these feelings but couldn’t coin a name for them, or couldn’t put my finger on to what I was feeling. Then, I happened to be contracting. I fell into contracting really. Then technology in the times changed, Java EE became big, Spring framework came out, financial services still had that rebound until 2008. Then it crashed again.

Imagining Another’s Thoughts and Feelings

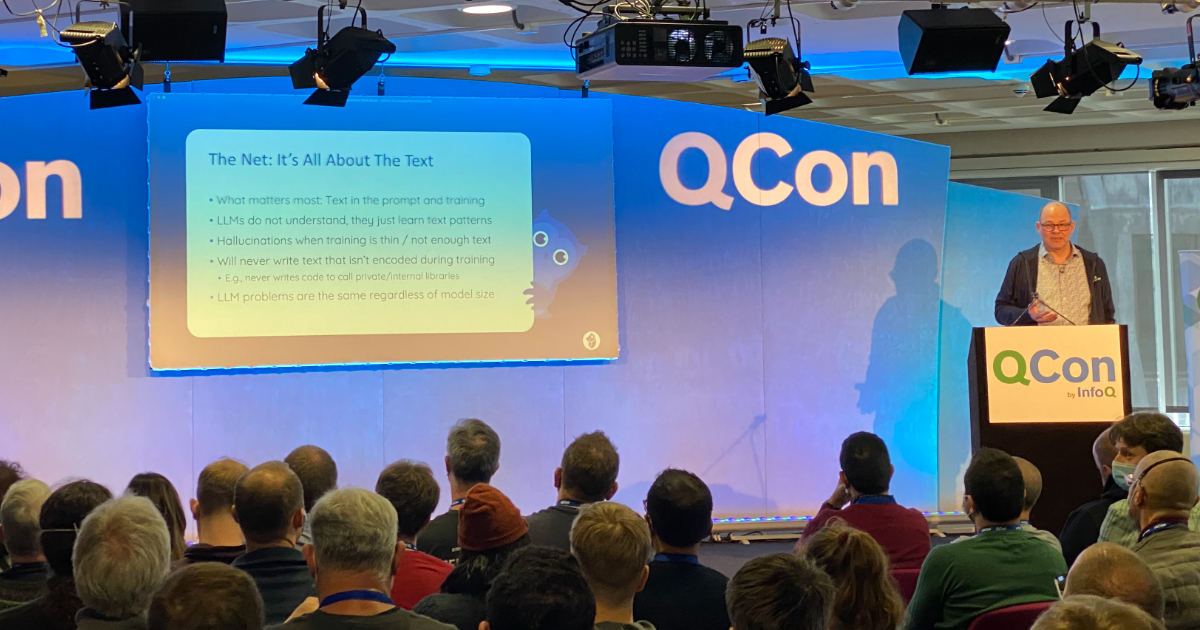

Imagining another’s thoughts and feelings. I had dreams. What about other people in London, and also, working on technology? They had dreams, desires. I wanted to communicate. I wanted to learn and better myself. It just so happened that I finally did it. I didn’t destroy the world or blow up the world, I created this thing called the Java web user group, a user group because I asked a question, are there other independent engineers who are willing to travel with me to JavaOne 2004? It turns out not only I had the money because I was a contractor, but because I started this mini-community, I got good at the MPV parts of it as facilitating, connecting, organizing speakers, getting in contact with Wendy Devolder of Skills Matter. Making presentations, as well as at QCon, and eventually at JavaOne. Getting involved with other people in the wider community to do with Scala, and Groovy, Spring Boot, Hibernate, HTML5, and even JavaFX. There are 20 other technologies that I could list including OSGi, that I could throw in here. Eventually, in 2007, I became a Java champion. It’s because I was a contractor, I had money. I was able to travel and afford to see the greater and wider community that I became one myself. It’s not something that you force yourself or apply to join, other people have to look at your contributions to the wider Java community. This Java Champion program, that was created by Aaron Houston, once of Sun Microsystems, he was the global outreach for Sun Microsystems. He realized that this technology is just not a panacea. It is driven by the people who believe passionately that Java and technologies around Java can help in the world. That’s a huge lesson. He’s still out there somewhere.

At the same time, I was reading Malcolm Gladwell, about connectors: people who seem to know everybody. You find connectors in every walk of life. They’re sociable, gregarious, naturally skilled at making and keeping in contact with friends and acquaintances. What Malcolm was talking about was the first genuine influencers, not the influencers that broadcast, but the influencers that facilitate, a facilitating influencer instead of taking money. In order to inspire interest, though, as a user group leader, I tried to be as fair as I could. Be that connector between groups of diverse people and the audience. Remember to facilitate the conversation. I tried to do that and I did that well for much of my time as well in that user group.

Adopting Another’s Posture for Achievement

What I really did is seeing these disparate speakers and how they presented at not only the user group, the Java web user group, but also at conferences. I learned by watching Neal Gafter, and Joshua Bloch in Java, or Mark Reinhold, or Brian Goetz in Java, by adopting and learning and modeling their accents, and the way they attenuated their tone. They spoke. They informed. They were passionate. They drilled down. They were respectful. They had empathy. That’s who I wanted to be. I wanted to be like them. It’s like Gianfranco Zola, the Italian footballer. He was lucky enough to play with Diego Armando Maradona at Napoli. He always goes on. Zola always goes on, “I was so lucky to see Maradona train.” That’s a sporting, I suppose symbology works in technology too. If you can model excellence in your heroes and heroines, it is a good way to be. In order to do that, you need to understand empathy, which is what this talk is about.

Switching Careers

Hunky-dory came, you know what comes next. The huge financial crisis of Lehman Brothers. Eventually, contracting ended for me. I became full time. I survived the Lehman Brothers financial meltdown. I knew many people at Lehman Brothers who were fantastic. On a Friday, sometime in September, everything’s hunky-dory, and over the whole weekend, an investment bank, Lehman Brothers, suddenly gone. Incredible. This was a big meltdown. I don’t think we’ve had the boom time since 2008. Have you had boom times for the general population? Maybe Elon Musk had some boom times. We haven’t seen it, the ordinary folk in the trough. I stayed with investment banking. I tried up until 2012, I think, Olympic year. Then I’d had enough. Sometimes you do have to switch rails. My partner says, change it, leave it, or accept it. She is an NLP Master Practitioner. She has advised me time and time again, and other people have said the same thing. If you can’t change your organization then change your organization. In order to change the organization, you need empathy. That has to come from you. That’s the secret to that quote.

We get into the penultimate stage here. I left banking. This is the biographical part continuing, I became digital. That was the new way of earning money as a contractor. I stumbled into and I accepted digital web agency development. My head was blown. I heard about this user research and user centric web design, where you have call to action, the serious stuff, copywriting, content generation, structure of English, strategy, content strategy, all these new words. Here it is, including responsive website design. Suddenly, the frontend was taking off. Full stack hadn’t come quite in by 2013. I think full stack is a horrible term, it’s a lack of empathy, because people who are full stack, have a weakness. They may be good at frontend, but they may not be good at the backend, or in the Java side. I’ve never known someone to know all of the platform stack, including DevOps. It’s impossible. Computing subject matter is too big. If you want to get things done, you’re going to have to have empathy. You’ll find out next.

It is because at the same time, the government was realizing that its digital websites were old, they were antiquated. They were broken, disparate. This lady here, Dame Martha Lane-Fox submitted a paper to Prime Minister David Cameron in 2010, to form gov.uk. This is basically to adopt agile and become saving space. Here, code is a communication vessel of the external intent and behavior, between two or more different human beings. Code itself had changed from being unmaintainable to definitely maintainable. Somebody has to look at your code, and be able to compile it, or to transpile it, years from now. We have to be agile, because now things were changing.

I’m so ashamed that my first experience of XP was, I think, 2014, or 2015, where I actually paired with a lead developer who was better than I, in terms of the web, JavaScript side. I had to learn pairing with him and her. Then I stumbled into Santander and started programming, became a Spring Boot developer. What I discovered in the Santander project, which was 100% pairing in 2017, is that it really requires empathy. I didn’t have all the times then, but you have to be inclusive. I know this, people are racially inclusive. When you’re coding with a junior, you have to allow juniors to make mistakes. Likewise, if you’re coding with someone better than you, then you are in the vulnerable state. The other thing about pairing, every two or three days, we would swap pairs, we would follow the Extreme or the Pivotal way of coding. No pair worked on the same story for more than two or three days, we would all swap pairs. Then we’d get knowledge sharing. That increases knowledge within the team. You’ll see that you quickly need to know each other’s thoughts and feelings for the win in order to get that agile performance in the team. That’s why people were using pair programming, and finally I got it.

What I discovered is that in the industry, we do have this negative gamification, which is contempt. It’s a feeling of superiority over people, putting people down. What is required for high performance teams, and if you want to interview for a great team, you need compassion. It is required for effective agility. Then, when I dug deeper into the agile manifesto, one day, I was shocked to read that they’ve been talking about this since 2001. This type of situation goes on every day: marketing, or management, or external customers, internal customers, and yes, even developers don’t want to make hard tradeoff decisions, so they impose irrational demands through the imposition of corporate power structures. This isn’t merely a software development problem, it runs throughout Dilbertesque organizations. Whoever wrote that text was suffering because of lack of empathy. They were annoyed at what goes on in their organization. This is in 2001.

Interconnected, Empathetic Software Engineering

I joined that digital development. I had proper daily standups since January 2013. We are in 2020, and the world changed. I enjoyed my contracting days, but suddenly things changed. We had remote communication, remote repositories, remote deployment from being in remote laptops or positions, virtual private network issues. It was a whole smorgasbord, and then some. What’s my opinion of high-quality software engineering? A healthy codebase, systems and application looks like a model of communication between people who exude compassion, and empathy. For interconnected, empathetic software engineering, there are two sides to it. There’s the technical side, the maintainable side where we want less bit rot. We want testable code. We want adaptable code. We want code that is reusable, maybe, that is exchangeable, is that really required? Resilience? This is where we have the non-technical side. We want ethics, teamwork. We want to be open and seen. We want to be inclusive. We definitely need psychological safety, to ensure we also have fairness in the workplace. Because if people aren’t treated fairly, then you’re going to get attrition. We pair in a high-performance team, a high caliber team. Definitely, the people that I’ve worked with in those teams, they have synchronized and composed relationships. They get on. They have that trust and support. They collaborate. Sometimes that leads to innovation. If you want reusable fairness and innovation, get those things right.

Empathetic Toolset

What you need is this empathetic toolset, which first starts with actively listening. Then this is about the culture of contempt, or addressing it. You definitely want to be actively listening, observing, and having that kinesthetic, that feeling. That is touching on the amygdala, listening. There are three sides to every story. Everybody has strengths and weaknesses. We want to show that we comprehend others and other people. We want to avoid indignation. We want to show people are included. We can do that in the same way we date other people or interview. We mirror. We have rapport. We don’t want platitudes. We want to show that we are self-aware. We are sentient. We are nonjudgmental. We also cultivate. We encourage. We collaborate. Then, if we are really lucky, and this is impossible, because even I have a big head, try to be egoless as much as possible. I know Kent Beck and the Scrum XP people proclaim this a lot.

High-Performance Teams

Evidence of high-performance teams. This comes from the Mind Gym, and less so about empathy, but really, is about it. Because the last bit of the sentence, the mixed gender groups consistently outperformed the single gender groups. This was a study about diversity, which is relevant. What’s even more relevant is Kelsey Hightower, who is a Kubernetes [inaudible 00:38:53], Developer Relations at Google. He talks about running a customer empathy session, where he puts Kubernetes engineers through the same challenges that his and her customers face. He’s still having fun. If you think you can avoid empathy, it’s there in the FAANG baby, in those FAANG companies, at least in one of them. It’s coming to a software developing engineering workshop and store that you are in right now. There is no escape, at least.

Empathic Capacities

Daniel Batson, who came up with the empathic capacities, American social psychologist, well known for the empathy-altruism hypothesis. Number one is knowing another’s thoughts and feelings. That is the feeling that we have almost when an event in a circumstance is happening, or when we think, just like the other person. Imagining another’s thoughts and feelings. This is slightly substantively different in that we are in a different plane. We are thinking of what it’s like to occupy that person’s corner of the universe. Adopting the posture of another person, the kind of method acting here. This is really useful for coaching. I believe this is true. Because if you want to learn from the best, then work with the best and model excellence. Feeling as another person does. This is the circumstances shared where you are in that same circumstance. You might be in Ukraine, in Mariupol right now, or luckily, you aren’t, you’re here with us, and you can definitely feel what is going on there.

Number five, imagining how one would feel or fit, or think in another’s place. Again, slightly related to number two and one, but this how is projecting into the future, this one here. If you cause somebody harm, or you put ice down that girl’s back, as you did as a child, what stops you doing that? That is not a nice feeling to do, because maybe that happened to you, someone squirted you with water, and you understand pleasure as well as pain. You can imagine how one would feel in another’s place. Feeling distress at another’s suffering. This is an easy one. Bereavement, somebody’s pet has died, a family member receives bad medical news. Somebody has taken sick at work. The announcement of terminal cancer is a really severe one, and you can treat it with platitude. If you have a similar distress, or you know a family member that’s had that similar distress, you will know number six. Feeling for another’s suffering, which is the charitable way. That is more to do with pity and compassion. That means you’re not actively involved, but you can feel. It is closely related to five, really, I think. You can feel without actually taking aboard all their emotions. Number eight is the classic projecting oneself into another situation, the actor. Imagine yourself emoting, being in that same person’s shoes, as one says.

Conclusion

An inclusive environment is necessary to reap the benefits of employing a diverse group of people. I’m going to leave you with empathic leadership tips here. For those of you who are leaders, or want to become leaders, you want this seat right now that I’m sitting in, you definitely want to look at and study the things in yellow boxes, which is the blame culture, the unblocking of encumbrances, the sanctuary, and the flexible communication. I’m going to leave you with Bob Odenkirk from Breaking Bad, and Better Call Saul. He says you’re not owed a career just because you want one. How true is that? Empathy. We continuously practice empathy even when we don’t have a lot of it to start with. We share in everyone else’s lived-experiences in order to build that better future for all of us, so say we all.


MMS • Mehrnoosh Sameki

Article originally posted on InfoQ. Visit InfoQ

Transcript

Sameki: My name is Mehrnoosh Sameki. I’m a senior program manager and technical lead of the Responsible AI tooling team at Azure Machine Learning, Microsoft. I’m joining you to talk about operationalizing Responsible AI in practice. First, I would like to start by debunking the idea that responsible AI is an afterthought, or responsible AI is a nice-to-have. The reality is, we are all on a mission to make responsible AI the new AI. If you are putting an AI there, and it’s impacting people’s lives in a variety of different ways, you have the responsibility to ensure the world that you have created is not causing harm to humans. Responsible AI is a must-have.

Machine Learning in Real Life

The reason why it matters a lot is, besides the very fact that in a traditional machine learning lifecycle, you have data, then you pass it to a learning phase where that learning algorithm takes out patterns from your data. Then that creates a model entity for you. Then you use techniques like statistical cross-validation, accuracy, to validate and evaluate that model and improve it, leading to a model that then spits out information such as approval versus rejection for loan scenarios. Despite the fact that this lifecycle matters, and you want to make sure that you’re doing it as reliably as you could, there are lots of human personas in the loop that need to be informed in every single stage of this lifecycle. One persona, or many of you, ML professionals, or data scientists, they would like to know what is happening in their AI systems, because they would like to understand if their model is any good, whether they can improve their model, what features of their models should they use in order to make reliable decisions for humans? The other persona are business or product leaders. Those are people who would like to approve the model, should we put it out there? Is it going to put us on the first page of the news another day? They ask a lot of questions from data scientists regarding, is this model racist? Is this biased? Should I let it be deployed? Are these predictions matching some domain experts’ insights that I’ve got from surgeons, doctors, financial experts, insurance experts?

The other persona is end-users, or solution providers. By that I mean either the banking person who works at a bank and is providing people with that end result of approved versus rejected on their loan, or a doctor who is looking at the AI results and is providing some diagnosis or insights to the end user or patient, in this case. Those are people who deal with the end user. Or they might be the end user themselves. They might ask, why did the model say this about me, or about my patient or my client? Can I trust these predictions? Can I make some actionable movements based on that or not? One persona that I’m not showing here, but is overseeing the whole process, are the regulators. We all have heard about the recent European regulations and GDPR’s right to explanation, or California act. They’re all adding lots of great lenses to the whole equation. There are risk officers, regulators who want to make sure that your AI is following the regulations as it should.

Microsoft’s AI Principles

With all of these great personas in the loop, it is important to ensure that your AI is being developed and deployed responsibly. However, even if you are a systematic data scientist or machine learning developer and really care about this area, truth be told, the path to deploying responsible and reliable machine learning is still unpaved. Often, I see people using lots of different fragmented tools, or a spaghetti of visualizations or visualization primitives together, in order to evaluate their models responsibly. Our team’s mission is to help you operationalize responsible AI in practice. Microsoft has six principles to inform your AI development and deployment. Those are fairness, reliability and safety, privacy and security, and inclusiveness, underpinned by two more foundational ones: transparency and accountability. Our team specifically works on the items that are shown in blue, which are fairness, reliability and safety, inclusiveness, and transparency. The reason why we work on them is because they have a theme. All of these are supposed to help you understand your model better, whether through the lens of fairness, through the lens of how it’s making its predictions, through the lens of reliability and safety and its errors, or whether it’s inclusive to everyone. Hopefully, they help you build trust in your model, improve it, debug it further, and get actionable insights.

Azure Machine Learning – Responsible AI Tools

Let’s go through that ecosystem. In order to guide you through this set of tools, I would like to first start with a framing. Whenever you have a machine learning lifecycle, or even just data, you would like to go through this cycle. First, you would like to take your model and identify all the issues, such as fairness issues and errors, that are happening inside it. Without an identification stage, you don’t know exactly what is going wrong. Next, another important step is to diagnose why that thing is going wrong. The diagnosis piece might look like this: I understand that there are some issues or errors in my model, and now I diagnose that an imbalance in my data is causing them. The diagnosis stage is quite important, because it discovers the root cause of the issue. That’s how you can take more efficient, targeted mitigations in order to improve your model. Naturally, you then move to the mitigation stage where, thanks to your identification and diagnosis skills, you can mitigate the issues that are happening. One last step that I would like to highlight is take action. Sometimes you would like to inform a customer or a patient or a loan applicant about, for instance, what they can do so that next time they get a better outcome. Or, you want to inform your business stakeholders about what you can offer some of the clients in order to boost sales. Sometimes you want to take real-world actions; some of them are model driven, some of them are data driven.

Identification Phase

Let’s start with identify and the set of open source tools and Azure ML integrated tools that we provide for you to identify your model issues. Those two tools are the error analysis and fairness tools. Starting with error analysis, the whole motivation behind us putting this tool out there is the fact that we see people often use one metric to talk about their model’s goodness. They say, my model is 73% accurate. While that is a great proxy for model goodness and model health, it often hides an important piece of information: error is not uniformly distributed in your data. There might be some erroneous pockets of data, like this pocket of data that is only 42% accurate. In contrast, this other pocket of data is getting all of the right predictions. If you go with one number, you’re losing this very important information that your model has some erroneous pockets, and that you need to investigate why that cohort is getting more errors. We released a toolkit called error analysis, which helps you validate different cohorts, understand and observe how the error is distributed across your dataset, and see a heat map of your errors as well.
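To make the point about a single accuracy number concrete, here is a minimal Python sketch. The records, cohort names, and function names are invented for illustration and are not the error analysis toolkit’s API; the sketch only shows how an acceptable overall accuracy can hide a much weaker cohort.

```python
from collections import defaultdict


def overall_accuracy(records):
    """Fraction of records whose prediction matches the label."""
    return sum(r["label"] == r["prediction"] for r in records) / len(records)


def accuracy_by_cohort(records, cohort_key):
    """Return {cohort: (accuracy, record_count)} for a list of record dicts."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for r in records:
        cohort = r[cohort_key]
        total[cohort] += 1
        correct[cohort] += int(r["label"] == r["prediction"])
    return {c: (correct[c] / total[c], total[c]) for c in total}


# Hypothetical toy data: 4 records in one cohort, 6 in another.
records = (
    [{"cohort": "old_large", "label": 1, "prediction": 0}] * 3
    + [{"cohort": "old_large", "label": 1, "prediction": 1}] * 1
    + [{"cohort": "new_large", "label": 1, "prediction": 1}] * 6
)

overall = overall_accuracy(records)
per_cohort = accuracy_by_cohort(records, "cohort")

print(f"overall accuracy: {overall:.2f}")
for cohort, (acc, n) in sorted(per_cohort.items()):
    print(f"{cohort}: accuracy={acc:.2f} over {n} records")
```

In this toy data the overall number looks tolerable, while one cohort is wrong most of the time, which is the kind of gap a cohort-level view is meant to surface.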

Next, we worked on another tool called Fairlearn, which is also open source. It helps you understand your model’s fairness issues. It focuses on two different types of harm that AI often gives rise to. One is harm of quality of service, where AI provides a different quality of service to different groups of people. The other is harm of allocation, where AI allocates information, opportunities, or resources differently across different groups of people. An example of harm of quality of service is a voice detection system that might not work as well for, say, females versus males or non-binary people. An example of harm of allocation is a loan allocation AI or a job screening AI that might be better at picking candidates among white men compared to other groups. The whole hope behind our tool is to ensure that you are looking at the fairness metrics through the lens of group fairness, so how different groups of people are getting this treatment. We provide a variety of different fairness and performance metrics and rich visualizations, in order for you to observe the fairness issues as they occur in your model.
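As a rough illustration of the group fairness lens, the sketch below computes two per-group numbers in plain Python: the selection rate (related to harm of allocation) and the accuracy (related to harm of quality of service). The data, group labels, and function names are assumptions made up for this example; Fairlearn has its own metrics API, which this sketch does not use.

```python
def group_rates(y_true, y_pred, groups):
    """Per-group selection rate and accuracy for binary predictions."""
    stats = {}
    for truth, pred, group in zip(y_true, y_pred, groups):
        s = stats.setdefault(group, {"n": 0, "selected": 0, "correct": 0})
        s["n"] += 1
        s["selected"] += int(pred == 1)     # favorable outcome, e.g. approved
        s["correct"] += int(truth == pred)
    return {
        g: {"selection_rate": s["selected"] / s["n"],
            "accuracy": s["correct"] / s["n"]}
        for g, s in stats.items()
    }


def max_gap(rates, metric):
    """Largest difference in a metric between any two groups."""
    values = [r[metric] for r in rates.values()]
    return max(values) - min(values)


# Hypothetical toy data: two groups of four applicants each.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
y_true = [1, 0, 1, 0, 1, 0, 1, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]

rates = group_rates(y_true, y_pred, groups)
selection_gap = max_gap(rates, "selection_rate")
accuracy_gap = max_gap(rates, "accuracy")
print(rates, selection_gap, accuracy_gap)
```

Here both groups see the same accuracy, yet one group is selected three times as often, so a check on accuracy alone would not reveal the allocation gap.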

Both of these support a variety of different model formats: Python models using the scikit-learn predict convention, such as scikit-learn, TensorFlow, PyTorch, or Keras models. They also support both classification and regression. An example of a company putting our fairness tool into production is Philips Healthcare. They put fairness in production for their ICU models. They wanted to make sure that the ICU models they have out there are performing uniformly across patients with different ethnicities and gender identities. Another example is Ernst & Young, in a financial scenario, where they used this tool in order to understand how their loan allocation AI is providing the opportunity of getting a loan across different genders and different ethnicities. They were also able to use our mitigation techniques.

Diagnosis Phase

After the identification phase, you know where the errors are occurring, and you know your fairness issues. You move on to the diagnosis piece. I will cover two of the most important diagnosis capabilities: interpretability, and perturbations and counterfactuals. One more thing to touch on briefly is that we’re also in the process of releasing a data exploration and data mitigation library. The diagnosis piece right now entails the more basic data explorer. I will show that to you in a demo. It also includes interpretability, the module that tells you what the top key factors impacting your model predictions are, that is, how your model is making its predictions. It covers both global explanation and local explanation: how the model is making its predictions overall, and how the model has made its prediction for an individual data point.

We do have different packages under Interpret ML capabilities that we have. It’s a collection of black box interpretability techniques that can literally cover any model that you bring to us, no matter if it’s Python, or Scikit, or TensorFlow, PyTorch, Keras. We also have a collection of glassbox models that are intrinsically interpretable models, if you have the flexibility of basically changing your model and training an interpretable model from scratch. An example of that is Scandinavian Airlines. They basically used our interpretability capabilities via Azure Machine Learning to build trust with their fraud detection model of their loyalty program. Of course, you can imagine that in such cases, you want to reduce and minimize and remove mistakes, because you don’t want to tell a very loyal customer that they’ve done some fraudulent activity, or flag their activity by mistake. That is a very bad customer experience. They wanted to understand how their fraud detection model is making their predictions, and so they used interpretability capabilities to understand that.
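To show what a black-box technique looks like, here is a small Python sketch of permutation feature importance, one well-known method that needs only a predict function. It is offered as a generic illustration and not as the toolkit’s implementation; the stand-in model, the feature names, and the data are all hypothetical.

```python
import random


def permutation_importance(predict, rows, labels, feature, repeats=20, seed=0):
    """Mean drop in accuracy when one feature's column is shuffled."""
    rng = random.Random(seed)

    def accuracy(data):
        return sum(predict(r) == y for r, y in zip(data, labels)) / len(labels)

    baseline = accuracy(rows)
    drops = []
    for _ in range(repeats):
        column = [r[feature] for r in rows]
        rng.shuffle(column)  # break the link between this feature and the label
        shuffled = [dict(r, **{feature: v}) for r, v in zip(rows, column)]
        drops.append(baseline - accuracy(shuffled))
    return sum(drops) / repeats


def predict_above_median(house):
    """Hypothetical stand-in model: uses only overall quality."""
    return int(house["overall_quality"] >= 6)


# Hypothetical toy data: labels follow overall quality exactly.
rows = [{"overall_quality": q, "garage_cars": q % 3} for q in range(1, 11)]
labels = [int(r["overall_quality"] >= 6) for r in rows]

quality_importance = permutation_importance(
    predict_above_median, rows, labels, "overall_quality")
garage_importance = permutation_importance(
    predict_above_median, rows, labels, "garage_cars")
print(quality_importance, garage_importance)
```

Because the technique treats the model as a function from inputs to predictions, the same loop would work for any model format; a feature the model ignores gets an importance of zero.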

Another important diagnosis piece is counterfactuals and perturbations. You can do lots of freeform perturbations, do what-if analysis, change features of a data point, and see how the model predictions change for it. You can also look at counterfactuals, which simply tell you the bare minimum changes to a data point’s feature values that could lead to a different prediction. Say Mehrnoosh’s loan is getting rejected: what is the bare minimum change that I can apply to her features so that the AI predicts approved next time?
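The counterfactual idea can be sketched as a brute-force search: try alternative values for one feature at a time, keep those that flip the prediction, and rank them by how far they move from the original. This is a simplified illustration, not the method used by the dashboard; the scoring rule, feature names, candidate grids, and scales below are all assumptions.

```python
def predict_approved(applicant):
    """Hypothetical stand-in model: approve when a hand-written score reaches 100."""
    score = (applicant["income_k"]
             + 10 * applicant["credit_history_years"]
             - 30 * applicant["open_debts"])
    return score >= 100


def single_feature_counterfactuals(predict, instance, candidates, scales):
    """List (cost, feature, value) changes that flip the prediction, cheapest first."""
    original = predict(instance)
    found = []
    for feature, values in candidates.items():
        for value in values:
            if value == instance[feature]:
                continue
            changed = dict(instance, **{feature: value})
            if predict(changed) != original:
                # Normalize the change so features on different scales are comparable.
                cost = abs(value - instance[feature]) / scales[feature]
                found.append((cost, feature, value))
    return sorted(found)


applicant = {"income_k": 60, "credit_history_years": 3, "open_debts": 1}
candidates = {
    "income_k": range(0, 201, 10),
    "credit_history_years": range(0, 41),
    "open_debts": range(0, 6),
}
scales = {"income_k": 100, "credit_history_years": 40, "open_debts": 5}

counterfactuals = single_feature_counterfactuals(
    predict_approved, applicant, candidates, scales)
best_cost, best_feature, best_value = counterfactuals[0]
print(best_feature, best_value)
```

The choice of scales decides what counts as a “minimum” change, so in practice it should reflect which changes are realistic for the person affected.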

Mitigate, and Take Action Phase

Finally, we go to the mitigation stage, and also the take action stage. We cover a class of unfairness mitigation algorithms that could literally encompass any model. They have different flexibilities. Some of them are just post-processing methods that adjust your model predictions in order to improve them. Some of them are reductions methods, a combination of pre-processing and in-processing. They can update your model’s objective function in order to retrain your model so that it not only minimizes error, but also puts a control on a fairness criterion that you specify. We also have pre-processing methods that readjust your data by better balancing it and better representing the underrepresented groups. Then, hopefully, the model that is trained on that augmented data is going to be a fairer model. Last, we realized that a lot of people are using our Responsible AI model insights for decision making in the real world. We all know models sometimes pick up on correlations rather than causation. We wanted to provide you with a tool that works on your data, just historic data, and uses a technique called double machine learning in order to understand whether a certain feature has any causal effect on a real-world phenomenon. Say, if I provide a promotion to a customer, would that really increase the sales that the customer will generate for me? Causal inference is another capability we just released.
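The core idea behind double machine learning can be sketched in a few lines: predict both the treatment and the outcome from the confounders, then regress the outcome residuals on the treatment residuals. The sketch below is a deliberately simplified version that uses ordinary least squares as the nuisance model, a single confounder, two-fold cross-fitting, and synthetic data with a known effect of 2.0. All names and numbers are assumptions for illustration, not the dashboard’s implementation.

```python
import random


def fit_line(xs, ys):
    """Ordinary least squares for y = a + b * x; returns (a, b)."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def residuals(train_x, train_y, test_x, test_y):
    """Fit on one fold, return prediction errors on the other fold."""
    a, b = fit_line(train_x, train_y)
    return [y - (a + b * x) for x, y in zip(test_x, test_y)]


def double_ml_effect(x, t, y):
    """Residual-on-residual estimate of the effect of t on y, controlling for x."""
    half = len(x) // 2
    first, second = slice(0, half), slice(half, None)
    t_res, y_res = [], []
    for train, test in [(first, second), (second, first)]:
        t_res += residuals(x[train], t[train], x[test], t[test])
        y_res += residuals(x[train], y[train], x[test], y[test])
    return sum(a * b for a, b in zip(t_res, y_res)) / sum(a * a for a in t_res)


# Synthetic data: the confounder x drives both the treatment and the outcome.
rng = random.Random(0)
x = [rng.gauss(0, 1) for _ in range(2000)]
t = [xi + rng.gauss(0, 1) for xi in x]
y = [2.0 * ti + 3.0 * xi + rng.gauss(0, 1) for ti, xi in zip(t, x)]

naive_effect = fit_line(t, y)[1]         # ignores the confounder
dml_effect = double_ml_effect(x, t, y)   # should land near the true 2.0
print(round(naive_effect, 2), round(dml_effect, 2))
```

The naive slope absorbs the confounder’s influence and overstates the effect, while the residual-based estimate recovers something close to the value used to generate the data. In a realistic setting the two nuisance models would be flexible machine learning models with many confounders.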

Looking forward, one thing that I want to mention is that, while I went through the different parts of identify, diagnose, and mitigate, we have brought every single tool I just presented under one roof, and that is called the Responsible AI dashboard. The Responsible AI dashboard is a single pane of glass, bringing together a variety of these tools under one roof, with the same set of APIs and a customizable dashboard. You can do both model debugging and responsible decision making with it, depending on how you customize it and what you pass to it. Our next steps are to expand the portfolio of Responsible AI tools to non-tabular data and to enable Responsible AI reports for non-technical stakeholders. We have some exciting work on PDF reports you can share with your regulators, risk officers, and business stakeholders. We are working on enabling model monitoring at scoring time, to bring all these capabilities beyond evaluation time and make sure that, as the model sees unseen data, you can still detect some of these fairness, reliability, and interpretability issues. We’re also working on a compliance infrastructure, because we all know that nowadays there are so many stakeholders involved in the development, deployment, testing, and approval of an AI system. We want to provide the whole ecosystem to you.

Demo

We believe in the potential of AI for improving and transforming our lives. We also know there is a need for tools to assist data scientists, developers, and decision makers to understand and improve their models to ensure AI is benefiting all of us. That’s why we have created a variety of tools to help operationalize Responsible AI in practice. Data scientists tend to use these tools together in order to holistically evaluate their models. We are now introducing the Responsible AI dashboard, which is a single pane of glass, bringing together a number of Responsible AI tools. With this dashboard, you can identify model errors, diagnose why those errors are happening, and mitigate them. Then, provide actionable insights to your stakeholders and customers. Let’s see this in action.

First, I have here a machine learning model that can predict whether a house will sell for more than the median price or not, and provide the seller with some advice on how best to price it. Of course, I would like to avoid underestimating the actual price, as an inaccurate price could impact seller profits and the ability to access finance from a bank. I turn to the Responsible AI dashboard to look closely at this model. Here is the dashboard. I can do, first, error analysis to find issues in my model. You can see it has automatically separated the cohorts with error counts. I found out that large old houses have a much higher error rate, almost 25%, in comparison with large new houses, which have an error rate of only 6%. This is an issue. Let’s investigate that further. First, let me save these two cohorts. I save them as new and old houses, and I go to the model statistics for further exploration. I can take a look at the accuracy, false positive rate, and false negative rate across these two different cohorts. I can also look at the prediction probability distribution and observe that older houses have a higher probability of getting a prediction of less than median. I can further go to the Data Explorer and explore the ground truth values behind those cohorts. Let me set that to look at the ground truth values. First, I will start from my new houses cohort. As you can see here, most of the newer homes sell for a higher price than the median. It’s easy for the model to predict that and get a higher accuracy for that. If I switch to the older houses, as you can see, I don’t have enough data representing expensive old houses. One possible action for me is to collect more of this data and retrain the model.

Let’s now look at the model explanations and understand how the model has made its predictions. I can see that the overall finish quality, above ground living room area, and total basement square footage are the top three important factors that impact my model’s predictions. I can further click on any of these, like overall finish quality, and understand that a lower finish quality impacts the price prediction negatively. This is a great sanity check that the model is doing the right thing. I can further go to the individual feature importance, click on one or a handful of data points, and see how the model has made predictions for them. Further, when I come to the what-if counterfactuals, what I am seeing here is that, for any of these houses, I can understand the minimum change I can apply so that the model predicts the opposite outcome. Take, for instance, this particular house, which actually has a high probability of getting the prediction of less than median. Looking at the counterfactuals for this one, only if the house had a higher overall quality, going from 6 to 10, would the model predict that this house would sell for more than median. To conclude, I learned that my model is making predictions based on factors that make sense to me as an expert, and that I need to augment my data in the expensive old house category, and even potentially bring in more descriptive features that help the model learn about an expensive old house.

Now that we understood the model better, let’s provide house owners with insights as to what to improve in these houses to get a better price ask in the market. We only need some historic data of the housing market to do so. Now I go to the causal inference capabilities of this dashboard to achieve that. There are two different functionalities that could be quite helpful here. First, the aggregate causal effect which shows how changing a particular factor like garages, or fireplaces, or overall condition would impact the overall house price in this dataset on average. I can further go to the treatment policy to see the best future intervention, say switching it to screen porch. For instance, here I can see for some houses, if I want to invest in transforming a screen porch, for some houses, I need to shrink it or remove it. For some houses, it’s recommending me to expand on it. Finally, there’s also an individual causal effect capability that tells me how this works for a particular data point. This is a certain house. First, I can see how each factor would impact the actual price of the house in the market. I can even do causal what-if analysis, which is something like if I change the overall condition to a higher value, what boost I’m going to see in the housing price of this in the market.

Summary

We looked at how these tools help you identify and diagnose error in a house price prediction model and make effective data-driven decisions. Imagine if this was a model that predicted the cost of healthcare procedures or a model to detect potential money laundering behavior, identifying, diagnosing, or making effective data-driven decisions would have even higher consequences on people’s lives there. Learn more about the tool on aka.ms/responsibleaidashboard, and try it on Azure Machine Learning to boost trust in your AI driven solutions.

Questions and Answers

Breviu: Ethics in AI is something I’m very passionate about. There’s so much harm that can be done if it’s not thought about. I think it’s important to show the different tools and the different kinds of thought processes that you have to go through in order to make sure that you’re making models that are not only going to predict well for accuracy, but are also not going to cause harm.

Sameki: That is absolutely true. I feel like the technology is not going to slow down. We’re just starting with AI and we’re expanding on its capabilities and including it in more aspects of our lives, from financial scenarios, to healthcare scenarios, to even retail, our shopping experience and everything. It’s even more important to have technology that is accompanying that fast growth of AI and is taking care of all those harms in terms of understanding them, providing solutions or mitigations to them. I’m quite excited to build on these tools and help different companies operationalize this super complicated buzzword in practice, really.

Breviu: That’s true. In so many companies, they might want to do it, but they don’t really know how. I think it’s cool that you showed some of the different tools that are out there. There was that short link that you provided that was to go look at some of the different tools. You also mentioned some new tooling that is coming out, some data tooling.

Sameki: There are a couple of capabilities. One is, we completely realized that the model story is incomplete without the right data tools, or data story. Data is always a huge part, probably the most important part of a machine learning lifecycle. We are also accompanying this with more sophisticated data exploration and data mitigation library, which is going to land under the same Responsible AI toolbox. That will help you understand your data balances, and that also provides lots of APIs that can rebalance and resample parts of your data that are underrepresented. Besides this, at Microsoft Build, we’re going to release a variety of different capabilities of this dashboard integrated inside our Azure Machine Learning. If your team is on Azure Machine Learning, you will get easy access, not just to this Responsible AI dashboard and its platform, but also a scorecard, which is a report PDF, summarizing the insights of this dashboard for non-technical stakeholders. It was quite important for us to also work on that scorecard because there are tons of stakeholders involved in an end-to-end ML lifecycle. Many of those are not super data science savvy or super technical. There might be surgeons. There might be financial experts. There might be business managers. It was quite important for us to also create that scorecard to bridge the gap between super technical stakeholders and non-technical stakeholders in an ML lifecycle.

Breviu: That’s a really good point. You have the people that understand the data and how to build the model, but they might not understand the business application side of it. You have all these different people that need to be able to communicate and understand how their model is being understood. It’s cool that these tools can do that.

You talked about imbalanced data as well. What are some of the main contributing factors to ethical issues within models?

Sameki: Definitely, imbalanced data is one of them. That could mean many different things. You are completely underrepresenting a certain group in your data, or you are representing that group, but that group in the training data is associated with unfavorable outcomes. For instance, you have a certain ethnicity in your loan allocation AI dataset, however, all of the data points that you have from that ethnicity happen to have rejection on their loans. The model creates that association between that rejection and belonging to that ethnicity. Either not representing a certain group at all, or representing them but not checking whether they are represented well in terms of the outcome that is affiliated with them.

There are some other interesting things as well, after the data, which is probably the most important issue. Then there is the issue of problem definition. Sometimes you’re rushing to train a machine learning model on a problem, and so you’re using the wrong proxies as a predictor for something else. To give you a tangible example, imagine you have a particular model that you’re training in order to assign different risk scores to neighborhoods, like security scores. Then you ask, how is that model trained? Imagine that model is trained on data that is coming from the arrest records of the police. Just using arrest records as a proxy for the security score of a neighborhood is a very wrong assumption to make, because we all know that policing practices, at least in the U.S., are quite unfair. It might be the case that there are more police officers deployed to certain areas where certain ethnicities live, and far fewer police officers in other areas where other ethnicities reside. Just because there are more police officers there, there might be more reporting, even of misdemeanors, or of something that a police officer didn’t like, or whatever. That will bump up the number of arrest records. Using that purely as a proxy for the safety score of that neighborhood has the dangerous outcome of creating an association between the race residing in that neighborhood and the security of that neighborhood.

Breviu: When those kinds of questions come up, I think about, are we building a model that even should be built? Because there’s two kinds of questions when it comes to ethics in AI. It’s, is my model ethical? Then there’s the opposite, is it ethical to build my model? When you’re talking about arrest records, and that kind of thing, and using that, I start worrying about, what is that model going to actually do? What are they going to use that model for? Is there even a fair way to build the model on that type of data?

Sameki: I absolutely agree. A while ago, there was this project from Stanford, it was called Gaydar. It was a project that trained machine learning models on top of a bunch of photos that had been captured from the internet and from different public datasets. The goal was to predict whether the person belongs to the LGBTQ community or not, gay or not. At that time, when I saw that, I was like, who is supposed to use this and for what reason? I think that started getting a lot of attention in the media: we know that maybe AI could do things like that, questionable, but maybe. What is the point of this model? Who is going to use it? How are we going to guarantee that this model is not going to be used to perpetuate biases against the LGBTQ community, who are historically marginalized? There are tons of deep questions that we have to ask about whether machine learning is an appropriate thing to do for a problem, and what type of consequences it could have. If we do have a legitimate case for AI, it could be helpful to make processes more efficient, or to expedite certain super lengthy processes. Then we have to accompany it with enough checks and balances, scorecards, and also terms of service for how people use that model. We have to make sure that we have a means of hearing other people’s feedback in case they observe this model being misused in bad scenarios.

Breviu: That’s a really good example of one that just shouldn’t have happened. It always tends to be the marginalized or oppressed parts of society that are hurt the most, and oftentimes they aren’t the ones that are even involved in building it, which is one of the reasons why having a diverse set of engineers matters for these types of models. Because I guarantee you, if you had somebody that was part of that community building that model, they probably would have said, this is really offensive.

Sameki: They would catch it. I always knew about the focus of the companies on the concept of diversity and inclusion before I joined this Responsible AI effort, but now I understand it from a different point of view that, it matters, that we have representation from people who are impacted by that AI in the room to be able to catch these harms. This is an area where growth mindset is the most important. I am quite sure that even if we are systematic engineers that truly care about this area and put all of these checks and balances, stuff happens still. Because this is a very sociotechnical area where we cannot fully claim that we are debiasing a model. This is a concept that has been studied by philosophers and social scientists for centuries. We can’t come up suddenly out of the tech world and say, we’ve found a solution for it. I think progress could be made to figure out these harms, catching it early on, diagnosing why those happen. Mitigating them based on your knowledge, and documenting what you could not resolve and put some diverse groups of people in the decision making to catch some of those mistakes. Then, have a very beautiful feedback loop where you capture some thoughts from the audience and you are able to act fast and also very solid monitoring lifecycle.

Breviu: That’s actually a good point, because it’s not only just the ideation of it, should I do this? Ok, I should. Now I’m building it, now, make sure that it’s ethical. Then there’s the data drift and models getting stale and needing to monitor what’s happening in [inaudible 00:35:32], so make sure that it continues to be able to predict well, and do that.

Any of these AI tools that you’ve been showing, are they able to be used in a monitoring format as well?

Sameki: Yes. Most of these tools could be. Take, for instance, interpretability. We have support for scoring time interpretability, which allows you to call the deployed model, get the model predictions, and then call the deployed explainer and get the model explanations for those predictions at runtime. The fairness and error analysis pieces are a little trickier. For fairness, you can specify the favorable outcome, and you can keep monitoring the distribution of that favorable outcome across different ethnicities, different genders, different sensitive groups, whatever that means to you. For the rest of the fairness metrics, or error analysis, and things like that, you might need to periodically upload some labeled data based on your new data: take a piece, maybe use crowdsourcing or human labelers to label it, and then pass it in. The general answer is yes. There are some caveats. We’re also working on a very strong monitoring story that works around these caveats and helps you monitor during runtime.
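The label-free part of that answer, tracking the favorable outcome across groups at scoring time, can be sketched as a small rolling monitor. This is an illustrative Python sketch only; the class name, window size, threshold, and group labels are assumptions and do not correspond to any Azure Machine Learning API.

```python
from collections import defaultdict, deque


class FavorableOutcomeMonitor:
    """Tracks the recent rate of a favorable prediction per sensitive group."""

    def __init__(self, favorable, window=100, max_gap=0.2):
        self.favorable = favorable
        self.max_gap = max_gap
        # Keep only the most recent `window` predictions for each group.
        self.recent = defaultdict(lambda: deque(maxlen=window))

    def record(self, group, prediction):
        self.recent[group].append(prediction == self.favorable)

    def rates(self):
        return {g: sum(w) / len(w) for g, w in self.recent.items() if w}

    def alert(self):
        """True when the gap between the best and worst group exceeds max_gap."""
        rates = self.rates()
        if len(rates) < 2:
            return False
        return max(rates.values()) - min(rates.values()) > self.max_gap


# Hypothetical stream of scored requests.
monitor = FavorableOutcomeMonitor(favorable="approved", window=10, max_gap=0.2)
for prediction in ["approved"] * 8 + ["rejected"] * 2:
    monitor.record("group_a", prediction)
for prediction in ["approved"] * 3 + ["rejected"] * 7:
    monitor.record("group_b", prediction)

current_rates = monitor.rates()
should_alert = monitor.alert()
print(current_rates, should_alert)
```

No ground-truth labels are needed for this check, which is why it is easier to run at scoring time than accuracy-based fairness metrics or error analysis.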

Breviu: Another example that makes me uncomfortable, and one that actually happens a lot, is machine learning models as part of the interview process. There are already so many microaggressions and unconscious biases in the interview process, and I’ve read so many stories about how quickly a model like this becomes biased, even just on resumes. How do you feel about that particular type of use case? Do you think these tools can work on that type of problem? Do you think we could solve it enough to where it would be ethical to use it in the interviewing process?

Sameki: I have seen both with some external companies, they’re using AI in candidate screening, and they have been interested in using the Responsible AI tools. LinkedIn is now also part of Microsoft family. I know LinkedIn is also very careful about how these models are trained, tested. I actually think these models could be great initial proxies to figure out some better candidates. However, it’s quite important that if you want to trust the top ranked candidates, it’s super important to understand how the model has picked that, and so look at the model explainability, because often, there has been this case of associations.

There are two examples that I can give you. I remember that once there was this public case study from LinkedIn, they had trained a model for job recommendations, how you go to LinkedIn and it says, apply for this and this. Then they realized early on that one of the ways that LinkedIn algorithm was using the profiles in order to match them with the job opportunities was the fact that the person was providing enough description about what they are doing, what are they passionate about? How you have a bio section and then you have your current position, which you can add text to. Then there was a follow-up study by LinkedIn which was mentioning that women tend to have less details shared there, so in a way women tend to market themselves in a less savvy way compared to men. That’s why men were getting better quality recommendations and a lot more matches compared to women or females, non-male, basically: females, non-binary. That was a very great wake-up call for LinkedIn, that, ok, this algorithm is doing this matching, we have to change it in order to not put too much emphasis. It’s great that they have this extra commentary and whatever. First of all, we have to maybe provide some recommendations to people who have not filled those sections as your profile is this much complete, how they give you signals as go and add more context.

Also, we have to revisit our algorithms to really look at the bare minimum stuff, like the latest position posted, experiences. Even then, women go on maternity leave and family care leaves all the time. I still feel like when we have these companies receiving so many candidates and resumes, there is some role that AI could play to bring some candidates up. However, before deploying it in production, we have to look at the examples. We have to also have a little team of diverse stakeholders in the loop to get those predictions and try to take a look at that from the point of view of diversity and inclusion, from the point of view of explainability of the AI, and interfere with some human rules in order to make sure it’s not unfair to some underrepresented candidates.

Breviu: That speaks to the interesting thing that you said at the beginning, about how the errors are not evenly distributed throughout the data. This is an example where your model might get really great accuracy, but it took a closer look to realize that on the non-male side, for women and non-binary people, it had a very high error rate. That’s a really good example of the point that you made in the beginning, which I found really interesting. Many times when we’re building these models, we’re looking at our overall accuracy rate and our validation and loss scores. Those look at the model as a whole, not necessarily at individual groups.

Sameki: It’s very interesting, because many people use platforms like Kaggle to learn about applied machine learning. Even on those platforms, we often see scoreboards where one factor is used to pick the winner, like accuracy of the model, area under the curve, whatever that might be. That implicitly gives the impression that, ok, there are a couple of proxies, and if they’re good, great, go ahead and deploy. I think that’s the mindset that we would love to change in the market through this type of presentation. It’s great to look at your model goodness, accuracy, false positive rate, all those metrics that we’re familiar with for different types of problems. However, they’re not sufficient to tell you about the nuances of how that model is truly impacting underrepresented groups. They’re also not going to give you the blind spots. It’s not even always about fairness. Imagine your model is 89% accurate or 95% accurate, but you realize that those errors happen every single time this autonomous car AI is driving when the weather is foggy, dark, and rainy, and the pedestrian has a darker skin tone and is wearing dark clothes. Ninety-nine percent of the time the pedestrian is missed. That’s a huge safety and reliability issue that your model has. That’s a huge blind spot that is potentially killing people. If you go with one score about the goodness of the model, you’re missing that important information that your model has these blind spots.
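
The gap between one overall score and the error rate inside a specific cohort can be shown with a small sketch. It is illustrative only: the record fields (`cohort`, `actual`, `predicted`), the cohort names, and the counts are hypothetical and do not come from the talk or from the Responsible AI dashboard. It assumes a labeled evaluation set in which each record can be assigned to a cohort.

```python
from collections import defaultdict


def error_rates_by_cohort(records):
    """Return (overall_error_rate, {cohort: error_rate}) for labeled predictions."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for record in records:
        cohort = record["cohort"]
        totals[cohort] += 1
        if record["predicted"] != record["actual"]:
            errors[cohort] += 1
    overall = sum(errors.values()) / sum(totals.values())
    per_cohort = {cohort: errors[cohort] / totals[cohort] for cohort in totals}
    return overall, per_cohort


def make_records(cohort, n_correct, n_missed):
    """Build hypothetical pedestrian-detection records (1 = pedestrian present)."""
    hits = [{"cohort": cohort, "actual": 1, "predicted": 1} for _ in range(n_correct)]
    misses = [{"cohort": cohort, "actual": 1, "predicted": 0} for _ in range(n_missed)]
    return hits + misses


# Hypothetical evaluation set: 1000 detections, 50 errors in total (95% accurate).
records = make_records("clear_daylight", 949, 1) + make_records("foggy_night", 1, 49)

overall_error, cohort_errors = error_rates_by_cohort(records)
print(f"overall error rate: {overall_error:.3f}")
for cohort, rate in sorted(cohort_errors.items()):
    print(f"{cohort}: {rate:.3f}")
```

With these made-up counts the model is 95% accurate overall, yet almost every error falls in the small `foggy_night` cohort. That concentration is exactly what a single leaderboard metric hides.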

Breviu: I think your point about Kaggle also shows that ethics was an afterthought in a lot of this, and that’s why these popular platforms don’t necessarily have those tools built in, as Azure Machine Learning does. As we progress, people are also realizing more about data privacy. As data scientists, we’ve always understood the importance of data privacy, and I think now it’s becoming more mainstream. That, together with understanding ethics more, will really change the way that people build models and think about building models. AI is going to keep moving forward exponentially, in my opinion. It needs to move forward in an ethical, fully thought-out way.

Sameki: We build all of these tools in the open source first, to help everyone explore these tools, augment them with us, build on them, bring their own capabilities and components, and put them inside that Responsible AI dashboard. If you’re interested, check out our open source offering and send us a GitHub issue, send us your request. We are quite active on GitHub, and we’d love to hear your thoughts.

MMS • Rebecca Parsons Rafiq Gemmail Craig Smith Shaaron A Alvares

Article originally posted on InfoQ. Visit InfoQ

Transcript

Shane Hastie: Good day folks. This is the whole InfoQ culture team and special guest for recording our podcast for the Trend Report for 2023. I’m Shane Hastie, I’m the lead editor for Culture & Methods on InfoQ. I’m going to shepherd our conversation today, but we’ll start and I’ll go around our virtual room and ask people to introduce themselves. We’ll start with our special guest, Rebecca Parsons.

Rebecca, thank you so much for joining us. Would you mind just telling us very briefly who’s Rebecca?

Introductions [00:36]

Rebecca Parsons: Well, thank you, Shane, for having me. As you said, my name is Rebecca Parsons. I’m the chief technology officer for Thoughtworks. We’re a software consulting company, so we basically write software for other people.

Shane Hastie: Welcome.

Rebecca Parsons: Thank you.

Shane Hastie: Craig Smith.

Craig Smith: I’m the business agility practice lead for SoftEd, and I work with you as well Shane. Glad to be a part of the team.

Shane Hastie: And Raf Gemmail.

Raf Gemmail: Hi. Yes, I’m Raf. I am an engineering manager with Marigold in the MarTech space, and I have spent many years technical coaching Agile teams.

Shane Hastie: Thanks Raf. Last but certainly not least, Shaaron.

Shaaron A Alvares: Thank you, Shane, for having me. Very excited to be here and to be with all of you. I’m Shaaron Alvares. I’m located in Seattle, Washington, and I am director of GT Agile Transformation and Delivery at Salesforce. I’m working closely with executives to help them get better. I’m supporting them and their teams in operations and engineering at GT.

Shane Hastie: Welcome folks. This is our motley crew of editors, reporters, and contributors for the InfoQ Culture and Methods space. It’s wonderful to get us all together in one virtual space for a change. It doesn’t happen often, but what’s happening with the Culture and Methods trends for 2023? We were having a bit of a chat earlier and I think the big elephant in the room is the economy and the layoffs and everything that we’ve seen in that space.

What do people think? Shaaron, can we start with you?

The economy and layoffs in tech companies [02:11]

Shaaron A Alvares: Yeah, I’m happy to kick us off. You’re right, Shane, with this new recession, there are a lot of changes happening in organizations, and priorities are shifting. Executives are more concerned at the moment about financial results, which led to a number of layoffs globally.

We’ve seen a lot of layoffs globally, in the US as well. That then led to prioritizing high-performance teams, productivity, and efficiency. There is a huge impact, I want to say, on the culture aspect of organizations, and on delivery as well, but above all on culture, and maybe a lost understanding of how important psychological safety is for organizations and teams.

Shane Hastie: Well, we’ve just come through two years where we’ve been focused on wellness and suddenly we’ve shifted. What’s happened?

Rebecca, what have you seen?

Rebecca Parsons: Well, I think when the boom was happening, the tech talent was very clearly in the driver’s seat. You add that to the pressures coming from the war in Ukraine, the global pandemic, increased visibility of these extreme weather events, the earthquake. All of these things have been contributing to a tremendous amount of stress. I think the response from many of the employers, both of the technology companies themselves, but also the enterprises who are trying to build up their own technology competency is they knew they had to fight to get the talent that they wanted.

Thinking about people-care was an opportunity to appeal to the needs of some of the people that they were trying to hire. As the macroeconomic pressures have changed, we’ve heard this from some of the enterprises that we talk to that are not digital natives or technology companies: “I don’t have to fight with Facebook anymore for talent, because Facebook’s just laid off all of these people,” or insert name of technology company here.

I think one of the interesting things that is particularly relevant from a culture and methods perspective is, okay, yes, you’ve got these people who are on the market, but are you going to actually be able to hire them as an enterprise? Even if you can hire them, are you going to be able to retain them when the market does turn around?

I’m reminded of a talk that Adrian Cockcroft gave several years ago, where somebody from a more traditional enterprise said, “Well, yes, you can do all these things because you’ve got these wonderful people.” And his answer was, “But I hired those people from you. I just gave them an environment within which they could be productive.”

I do think we are going to see much more of a focus on how do we actually create an environment where we can get efficiencies and effectiveness, but we don’t impact time to market, because I don’t think we’re going to go back to that era around the turn of the century, where so many places still considered IT as a cost center and that meant you had to stabilize and you had to standardize and you had to lock things down. Consumers are not going to accept that, even in this downturn situation.

Shane Hastie: Thank you. Craig, what are your thoughts on this?

Craig Smith: Firstly, to anyone who’s listening who’s been through that, I think we share their pain and the things that they’re going through. What I’m seeing from the outside, though, is that for a lot of organizations this is a little bit of a right-sizing that they needed to do, and that there’s actually a lot of waste that we see in organizations. Whilst all those people were effectively working on things, the size of the organization probably couldn’t sustain that.

What worries me, though, is whether the leaders responsible for doing this actually took the people from the right places. In other words, rather than just chopping off a whole side of a place and moving along, what I’m seeing in some of the organizations that I’ve been working with is that they’ll just say, “Look, we’re just going to get rid of these six teams over here,” but those weren’t the right places to cut.

The actual waste was in the actual mechanics of the organization. I think there’s also going to be a realization of that moving through, but you can argue for a lot of those tech companies, particularly in Silicon Valley and the like, maybe they were just too big for what they needed. I think the way we’re seeing some of these things play out in the media, whilst the way … as Ben was talking about … the way that’s played out and the real culture of the company may start to shine through, it’s also going to be interesting to see how this actually plays out in the end.

For some of those organizations, is the much smaller size they have now actually what they should have been in the first place? Were there just too many people doing too many things for the organization anyway?

Shane Hastie: Raf, your thoughts.

Raf Gemmail: I’ve been listening to the points about the culture coming out and the discrepancy in styles of letting people go. One thing I’ve been reflecting on is that back in the mid-2000s, I was in the environment of US banks and there would be culls every now and then, where people would just pop, pop, pop away from their desks. After that, I saw us go through a transformation where there was a lot more humanism in the workplace. Looking at what’s happened externally and to the people I know, the change management around letting people go in a more respectful fashion for some reason seems to be disappearing.

Yes, there may be an element of right-sizing in all of this, but I completely agree with Craig. Maybe I’m projecting or inferring here, but when you’re doing things with such haste, the knock-on impact, which I have seen, is that you are left with teams which may not reflect the capabilities, the topologies, and the communication channels you’d intentionally tried to create in the first place. You will have a consequence of that.

I’m looking at it from the perspective that we’ve been through one big shift with COVID, and maybe it took us in a particular direction, which I think was potentially positive. Now there’s this fear which is dollar driven, this financial fear, a realizable fear. Maybe to some extent that is compromising the humanism of organizations, which have generally been trying to become more humanistic. That disturbs me a little bit.

Shane Hastie: Rebecca, can I put you on the spot? In your role at Thoughtworks, I’m assuming that you’ve had these conversations internally, have we got the right people and so forth? How do you keep that humanistic perspective at the fore?

Keeping workplaces humanistic when faced with pressure [08:29]

Rebecca Parsons: Well, I definitely agree it is one of those fundamental cultural aspects of companies. From the beginning, we have looked at our people as the most important asset that we have. If you truly believe that, and a lot of companies say it, but if you truly believe that, then you know that you have to look at things like capability and capacity and policies from that more humanistic perspective. I often get into debates with people when they’re looking at diversity programs and they want to go immediately to the business case.

Yes, there’s a very strong business case for this. There is a strong business case for having a strong employee value proposition, because turnover is expensive and replacing people is expensive. If you treat people well, you can make all of these wonderful business case arguments, but you can also look at it as it’s just the right thing to do. If you truly believe that your people are your most valuable asset, what do you do with valuable assets?

You invest in maintaining them and nurturing them and helping them grow. You have to look at it from that humanistic perspective. Even now, particularly given the options that are available to technologists, if you treat somebody poorly in a layoff, and it sounds like that is happening in many organizations right now, based on the froth that you hear in the social media realm, you’re just dooming yourself in terms of your ability to hire. There’s only so much credibility you can get back when push comes to shove and, okay, we have to do a layoff, and then you’ve got all of these people going off on Twitter and LinkedIn about just how wrong and how needlessly cruel the process was.