Transcript

Atkinson: I’m Astrid Atkinson. I’m going to be talking about applying tech to what is, in my mind, the greatest problem of our generation and of the world as it stands today. Some of you may have heard of climate change. It’s been becoming more popular in the news. I think within the last 5 or 10 years, we’ve really seen a transition from a general conception of climate change from being an issue that might potentially affect our grandchildren maybe 50 or 100 years from now, to one that is immediately affecting our grandparents, happening today. Bringing about increasingly dramatic impacts to everyday life. This is obviously a really big problem. I talk a lot with folks who are looking to figure out how to direct their careers and their work towards trying to address it. I do also talk a lot with people who have pretty much already given up. I think it’s easy when you look at a problem of this magnitude, and one on which we’re clearly not quite yet on the right track to take a look, shake your head and say, maybe it’s already too late. Maybe the change is already locked in. In my mind, there’s basically two futures ahead of us. In one of them, we get it right. We do the work. We figure out what’s required. It’s technology. It’s policy. It’s politics. It’s people. We put in the technology, and the policy, and the political, and the economic investment that’s required to effectively bend the curve on climate change and keep our warming within about 2 degrees, which is a generally agreed on limit for a livable planet.

Our future is perhaps not the same as our past, but not substantially worse, it’s a recognizable world. I get to hang out with my grandkids, maybe we even get to go skiing. Most of the world gets to continue with something that looks like life today. In the other version of the future, we don’t do that. We cease to take action. We fail to do any better than we’re currently doing. We say that the policy change that’s in place will be good enough. We go on with life as usual, and we get the 3 to 4 degrees or more of change that’s locked in for plans as they stand today. That is potentially catastrophic. We don’t necessarily get to keep the civilization that we have in this model. Maybe some of us do, but definitely not all of us, and it doesn’t look great. When I think about this, personally, I would rather spend my entire life and my whole career working for the first version of the future. Because if we don’t choose to do that, the second one is inevitable. That’s where I stand. That is why I’m in front of you here talking about using technology applications and using technology talent to attempt to address this existential problem.

How to Decarbonize Our Energy System

When we think about decarbonization, about two-thirds of the problem is our energy system. The rest is things like land use, industrial use, those sorts of things. Those are important too. Energy is a really good place to focus, because it’s a really large part of the problem. The really oversimplified 2-step version of how we decarbonize our energy system is: step 1, we electrify everything, so that we can use energy sources that are clean and efficient. Step 2, we decarbonize the grid, which is our delivery system for electricity. Now we have two problems. Just really quickly, on the change that’s going to be required: this is an older view of energy flowing through just the U.S.’s energy systems. You can see that we have energy coming in from a number of clean sources. Generally speaking, everything that’s currently a fossil source needs to move into that orange box up there. That’s a pretty large change.

In any version of how this goes, the grid needs to do a lot more work. We need to be distributing a lot more energy around. There are plenty of versions of this too where there’s a lot more disconnected generation and so forth. Just to put a frame around this problem, I’m using two sets of numbers on this slide. One is from the International Energy Agency’s Net Zero by 2050 plan, which is effectively the international plan of record. One thing to note in that 2050 plan is that buried in the details is the surprising note that industrialized nations (U.S., UK, Australia, Western Europe) are supposed to be fully decarbonized by 2035. I’m not sure it’s quite on anybody’s radar to the extent that it needs to be today. The other set of numbers here is from an NREL study on what it would take to decarbonize our electrical system, and therefore our energy system, by 2035, just looking at the U.S. Of course, this is an international problem, but these numbers are nicely contained and related in that way.

They have multiple scenarios in this view of what the future could look like, ranging from high nuclear to brand new technologies. Any version of this solution set means that we need a lot more renewables. We potentially need a couple of new or expanded baseload technologies. We need a lot of load to be flexible. We need a lot of interconnection between the places where generation can happen and the places where energy is used. It’s this last one that’s actually really tricky, because any version of this plan relies on somewhere between a 2x and 5x increase of our existing transmission network capacity. I’ll talk about the transmission network and the distribution network in a little bit. You could effectively think of the transmission network as like the internet backbone of the grid. It’s the large wires that connect across very long distances. In general, it’s primarily responsible for carrying energy from the places where it’s generated by large generators to local regions. Any version of this requires very large expansion in that capacity. That’s a really big problem, because it typically takes 5, 10, 15, 30 years to build one new line. That’s a pretty big hidden blocker in any such transition plan. It makes the role of the network as it stands today increasingly important.

What Role Can Software Play?

Now, also, any version of that plan involves bringing together a lot of different types of technologies. There are multiple types of generation technologies. There’s solar. There’s wind. There’s hydro, nuclear, geothermal, biomass. There are also a lot of demand side technologies, and any brief encounter with grid technologies will show you a whole ton of these. In general, all of these demand side technologies, everything from controllable in-house heat pumps, to electric vehicle chargers, to batteries, fall under the general heading of distributed energy resources. All that really means is energy resources that are in your home or business. They’re at the edges of the grid. They’re located very close to, or are part of, the demand fabric of the grid. They’re something that is growing in importance in the grid of today and will be a cornerstone of the grid of the future, because the grid needs to do a slightly different job than it does today. The grid of today is basically responsible for delivering energy from the faraway places where it’s generated to the places where it’s used, and it must always be completely balanced in real-time for supply and demand. For the grid of the future, those things are all still true, but we also need to use it to balance and move energy around over time. We need to be able to get energy from the time and place where it’s produced to the time and place where it’s needed. That means moving supply and demand around. That means storage. It also means management. I’m going to talk about what the technology applications around that look like.

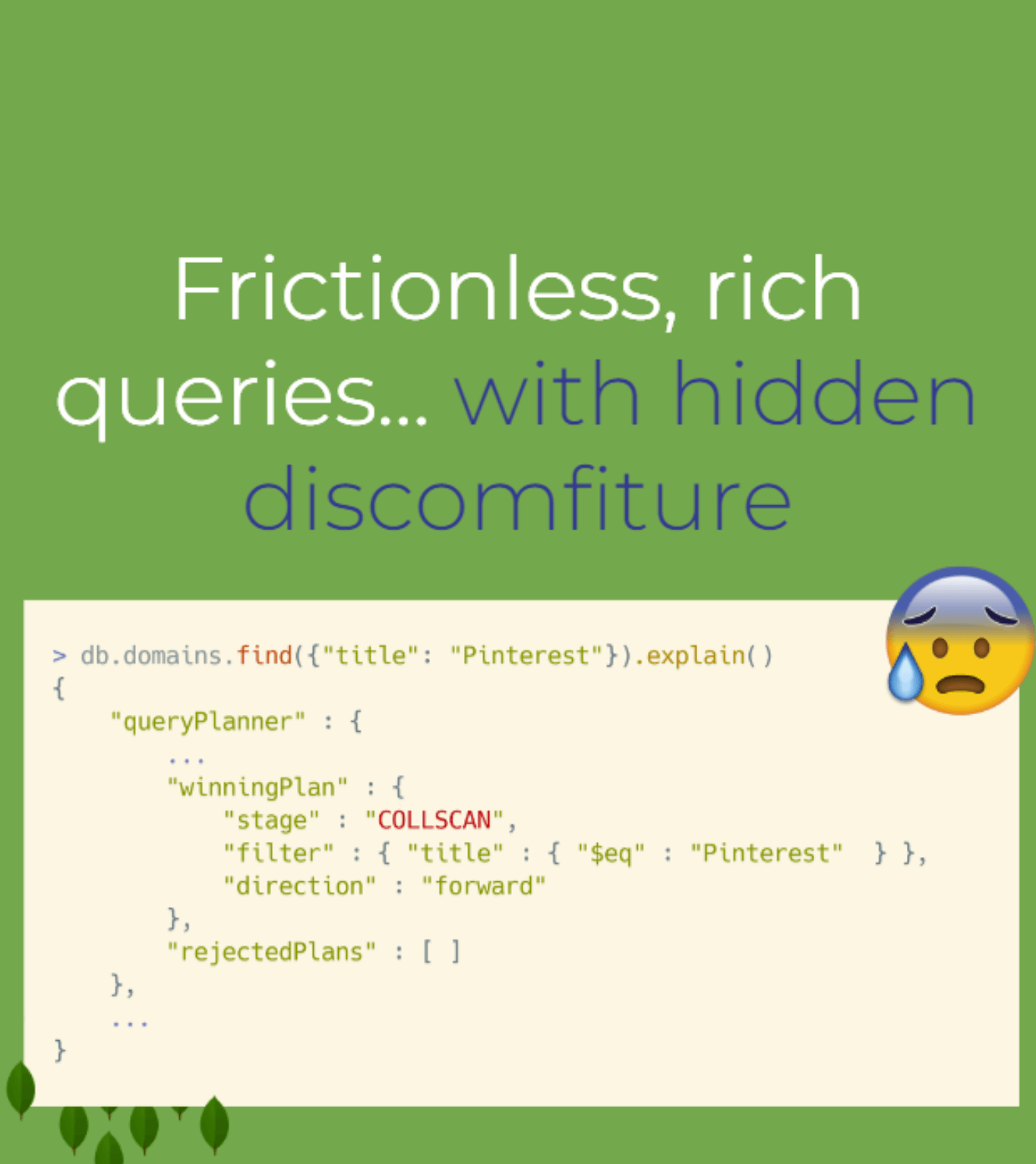

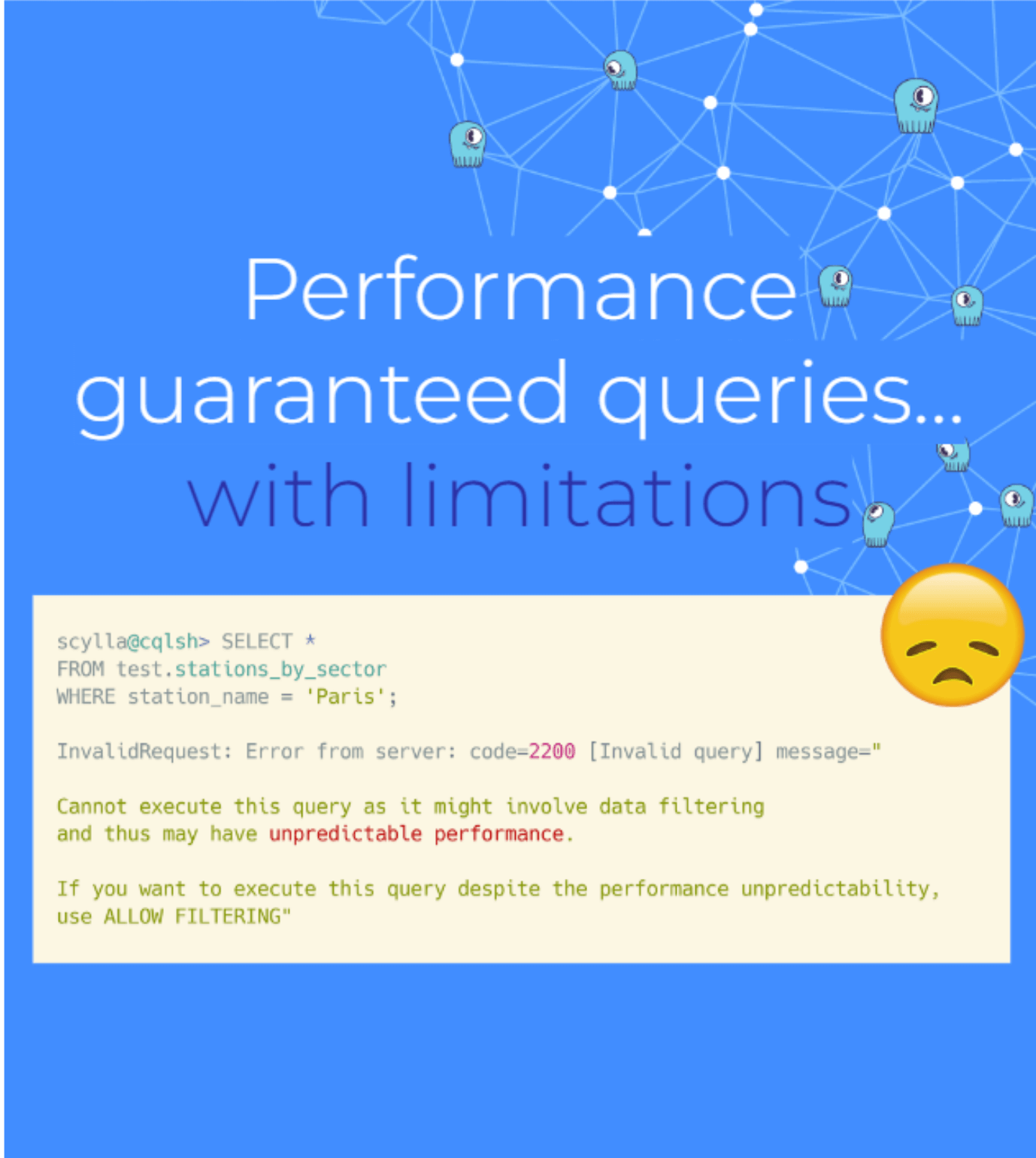

The big catch to all of this is that technology in the grid landscape is pretty outdated. There are a few reasons for this. One is that when you are responsible for keeping things up and running, keeping the lights on, as many folks will have experience with, there’s an innate conservatism to that. You don’t want to mess with it. Another is just that this is a slow-moving legacy vertical with a lot of money and a lot of pressure on it. For various reasons, a lot of them being conservatism around things like cybersecurity, almost all grid technology today is on-prem. That means that utilities don’t have access to the compute scale that you would need to handle real-time data from the grid. They think that data coming off the grid from something like a 15-minutely read on a smart meter is a lot. Anyone here knows that that’s really not the case. It’s perhaps medium data, at best. It’s really not a great amount of data by distributed systems standards. If you’re running on-prem with just one computer, though, it gets to be a lot.

The current state of the art in the grid space is basically fairly data sparse, not easily real-time for the majority of data sources. Really dependent on the idea of feeding in data from a small set of sources, to a physics model which emulates the grid, and then solves to tell you what’s happening at any particular point of it. This is nice, but it’s not at all the same thing as real-time monitoring. It is not the foundation for driving massive change, because the only thing you can know in that is the model. You cannot know the system. As anyone who’s worked with a distributed system knows, the data about the real-time system is the foundation of everything else that we do. That is how you know if things are working. It’s how you know how things are working. It’s how you plan for the future in an adaptive way.

Camus Energy – Zero-Carbon Grid Orchestration

That was really the genesis for me of founding my current company, which builds grid management software for people who operate grids, and that’s typically utilities. Suffice to say that, I think that there’s a really important and in fact urgent application of distributed systems technologies in this space, because we need these systems to be cloud native and we need that yesterday. We don’t need it because the cloud is someone else’s computer, we need it because the cloud is global scale or hyperscale computing. We need to be able to process large amounts of real-time data. We need to be able to solve complex real-time balancing optimization, machine learning AI type problems. We need to be able to do that in a very large scale and a very rapid way. That is the reason. That is the technology change that needs to happen in the grid space.

Distribution System vs. Distributed System

I’m going to talk a little bit about some terminology confusion here. I think of myself as a distributed systems engineer, or my work is distributed systems engineering. This is super confusing in the grid space, because people who work on the grid are used to thinking about the distribution system, which is the part of the grid that connects to your house, or your business, or whatever. Utilities like PG&E, ComEd, ConEd, SDG&E, are distribution utilities primarily. Whenever you say the word distribute or distributed, all they’re thinking about is a whole bunch of wires connecting to your house. Because of that, we’ve started to refer to what we do in the software space as hyperscale computing or cloud computing, just because it’s confusing. At its base, the technology change, and the transition that we need to make for the grid is really going from a centralized model of how the grid is operated and engineered, to a distributed model in which a lot of small resources play critical parts. Really, what we’re talking about here is making a distributed system for the distribution system. Again, confusing, but here we are. In fact, many of the terminology, many of the concepts and so forth, are surprisingly common. That’s really what I’m going to talk about, is the ways that we can apply lessons that we’ve learned from distributed systems design, architecture, engineering, and bring that into the grid space to accelerate the pace of change.

Background

I was at Google from about 2004. That was a really important and critical time for both Google but also for the industry. I was originally hired to work on Google’s Cloud Platform about two years before cloud platform was a thing. According to Wikipedia, the term the cloud was first used by Eric Schmidt in a public conference in 2006. In 2004, Google was rolling out its internal cloud and making a really significant transition from that centralized computing model with a few big resources to a highly distributed model of spreading work across millions of computers. This was something that was very new in the industry. We couldn’t hire anyone who had done it before. We were learning as we went. That was a really exciting time. This meme always makes me smile, because it’s from my time at Google. Also, because I put the flamingos on the dinosaur in about 2007 with other folks who are also working on this very large-scale distributed systems transition. It was actually intended to be a distributed systems joke. It was actually, like all the little systems coming to eat the dinosaur system. It was an April Fool’s Day joke. It was one that ended up having pretty significant legs. Years later, I would see someone come bringing flamingos to the dinosaur and just like popping on another flamingo. I love that because I think it also is like a nice metaphor for how systems become self-sustaining and evolve over time, once you see these really big paradigm shifts.

Reliability

The story of how you get from a few big servers to a bunch of little servers is a pretty well told one. Suffice to say that in any model, we’re going from a small number of reliable servers to a large number of distributed servers. There’s a fabric of the system that distributes work across those machines, takes care of failures, and lets you move work around under simple operator control in a way that is aware of how that’s working within the system as a whole. That fabric is what lets you get better reliability out of the system than you can get out of any individual piece of it. In the Google distributed systems context, and this is generally true for most cloud computing, there’s this set of backbone capabilities. I think of monitoring as being the foundation of all of them, because it is always the foundation of how to build a reliable system. There are also ideas around orchestration, like getting work to machines, or getting work to places where work can be done in terms of load assignment, lifecycle management, fleet management. That’d be a container orchestration type system today. For Google it was Borg, but Kubernetes or an equivalent technology, I think, fits into that bucket. Load balancing, the ability to route work to the capacity locations where it can be executed, is a fundamental glue technology of all distributed systems. As you get to larger systems, finding ways to introduce flexibility to that distribution of work really increases the reliability of the system overall.

As we were going through this process for Google, maintaining Google’s reliability at utility grade, five nines or better, was a core design requirement of all of the changes that we made. Thinking about how you carefully swap out one piece at a time in a system which has tens of thousands of microservice types, not just microservices or instances, and millions to billions of instances, is a high demand process, and one in which you need good foundations, and you need really good visibility. This isn’t just about the technology. It’s also about the tools which keep the system as a whole comprehensible and simple for operators, and allow a small group of operators to engage with it in a meaningful way.

Astrid’s Theory of Generalized Infra Development

Before we go on to talk about the grid, I just want to talk about patterns for how you build infrastructure in a generalizable way in this type of environment. This matters for the grid, because it’s actually very difficult to build systems without a utility. It’s also very difficult to build systems with a utility. In general, anytime you’re building a large-scale infrastructure system, you’re going to be working with real customers. You want to start with more than one, less than five. Very early in the lifecycle of an infrastructure development project, you want to go as big as you possibly intend to serve. Because if you don’t do it very early, you will never reach that scale. That was the successful repeatable pattern that I saw as we went through development of dozens to hundreds of infrastructure services at Google. It is also the one that we’re using in the grid landscape today.

What’s Hard About the Grid Today?

Let’s talk about the grid. Monitoring is a fairly fundamental part, I think, of any reliable and evolving distributed system. That’s actually one of the things that is really challenging in today’s grid landscape. I’ll just run through what that looks like in practice today. Here’s an example distribution grid. We’ve got basically a radial network that goes out to the edges. There’s a few different kinds of grid topologies, but this is a common one and the most cliched and complicated one. As we look at the parts of the grid, there’s the transmission network, which is the internet backbone of the grid today. A transmission network is actually fairly simple, it’s a mesh network, it does not have many nodes. It has thousands, not hundreds of thousands. It’s really well instrumented. It has real-time visibility. It is real-time operated today. Today’s independent system operators like CAISO, or MISO, or whoever, have pretty good visibility into what’s happening with very large-scale substations in a very large-scale network. Out here on the edges, there are some resources that participate in that ecosystem and those markets today, but they tend to be just a few. They’re really big, typically, commercial and industrial customers. They are also required to provide full scale real-time visibility to the transmission network in order to participate.

As we move forward, there’s a goal to have these distributed energy resources that are located at customer locations become part of this infrastructure. There are no telemetry requirements yet, so it’s hard to see exactly what might be happening. It’s hard to say from anybody’s perspective what’s happening when they do this. This is the next step from the transmission network’s perspective. The recent Federal Energy Regulatory Commission Order 2222 mandates that this should happen, it just doesn’t say how. It doesn’t say anything about telemetry, network management, or integration. It’s still a really good step, because forcing the outcome helps to force everybody to think about the mechanism.

On the distribution side, the data story is less good. Most distribution operators today do mostly have smart meters, but they typically can only see what was happening at any particular meter 2 to 24 hours ago. This is due to slowness of data collection. They don’t typically have direct instrumentation on any part of the line below the substation. They also don’t necessarily have accurate models of connectivity for the meters to transformers, feeders, phases. Running that physics model I mentioned is rather difficult if you don’t have either the data or an accurate connectivity model. This is a really big blocker for adding any more stuff to the edges of the grid. It makes it very difficult to feel safe about any change you might possibly make, because you can’t see what is going on.

There’s lots of questions. Everything ok? What’s happening out there? Up until the last probably 5 or 10 years, planning and operations on the distribution network was literally forecasting load growth for the next 10 years. Then overbuilding the physical equipment of the grid by 10x. Then waiting for someone to call you, if anything caught on fire or the power was out. That was literally distribution operations. Obviously, if you’ve got a lot of stuff happening at the edges, that’s not so great. That is not necessarily sufficient. That’s not the model that we need if we want to be able to add a lot of solar, add a lot of battery, add a lot of EVs, whatever, but it is what we have had. The first step to being able to make any significant changes, is basically taking the data that we have, and starting to figure out like what can we do with it. This is the first place where that cloud scale, hyperscale distributed computing approach becomes really relevant. This also happens to be the first place where I’m going to talk about machine learning.

There is a lot of data out there, it’s just not very real-time. It’s not very comprehensive. For most utilities today, it’s also not correlated. When we look at the grid, being able to take the data that’s out there and get something like real-time is actually a really good application for machine learning technologies. There’s a bunch of things we can do. We can forecast and get a nowcast of what’s happening at any individual meter, both the demand and for solar generation. Once we have an accurate model of end loads, we can calculate midline loads. We can pull in third-party telemetry from the devices that are out there. Tesla have really good telemetry on their devices, and the ability to manage them in a large-scale way.
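
To make the step from end loads to midline loads concrete, here is a minimal sketch. It assumes a purely radial network, ignores line losses, and uses a made-up topology and made-up meter estimates; none of the names below come from a real utility system or from the talk.

```python
from collections import defaultdict

def midline_loads(parent, meter_kw):
    """Sum estimated meter loads up a radial tree.

    parent: maps each node to its upstream node (the root maps to None).
    meter_kw: estimated load in kW at each meter (negative = net export).
    Returns the total downstream load seen at every node, ignoring losses.
    """
    totals = defaultdict(float)
    for meter, kw in meter_kw.items():
        node = meter
        # Walk from the meter up to the root, adding its load at each hop.
        while node is not None:
            totals[node] += kw
            node = parent[node]
    return dict(totals)

# Hypothetical feeder: substation -> feeder -> two transformers -> meters.
parent = {
    "substation": None,
    "feeder_1": "substation",
    "xfmr_a": "feeder_1",
    "xfmr_b": "feeder_1",
    "meter_1": "xfmr_a",
    "meter_2": "xfmr_a",
    "meter_3": "xfmr_b",
}
# Hypothetical nowcast values; meter_3 is exporting rooftop solar.
meter_kw = {"meter_1": 2.5, "meter_2": 4.0, "meter_3": -1.5}

loads = midline_loads(parent, meter_kw)
print(loads["xfmr_a"], loads["xfmr_b"], loads["feeder_1"])
```

In practice the per-meter values would come from a forecast or nowcast model rather than a hard-coded dictionary, and a real calculation would account for losses and phases.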

Then we can do a lot to patch together something usable out of the fabric that’s out there today. As we move forward, we need to do better. We need to get hardware instrumentation out there to the edges. We need real-time data from the meters. We need a lot of things. If we have to start there, we’re screwed because any project like that for a utility takes like 5 to 10 years. We need to start with the data that we have while we get the other stuff in place. As I talk about technology applications, yes, these will all be better with better data. We have to start with what we have. Figuring out how to start with what we have for the grid of today, and help take those steps that get us to the grid of tomorrow is nearly 100% of the work because, again, we want to be able to do this work on the grid as it stands by 2035. As far as grid technology goes, that is tomorrow.

The Promise of a 2-Way Grid

What are we building towards? Today’s distribution grid has a little bit of dynamism to it. The biggest factor that’s driving change in the last 5 to 10 years is the role of solar. Solar generation is not necessarily a large part of most grids today, but in some places like Australia and Hawaii, and so forth. Australia is actually the world leader on this, along with surprisingly Germany, where they have really a lot of rooftop solar. Energy supply provided by local rooftop solar is sometimes more than 50% of what’s used during the daytime, sometimes up to 80% or 90%. At that point, it causes really significant problems. The short thing is that as soon as you get above 10% or so, you start to see this curve and the pink line up here, this is called the duck curve. The more solar you get eating into the daytime portion of this curve, the deeper the back of the duck. In Australia they call it an Emu curve because Emus are birds with really long necks, also heard it called the dinosaur curve. This is the grid as it stands today. The steeper those ramps, in the morning and the evening, the larger the problem for ramping up and down traditional generation to fill in those gaps. Because, while those plants are considered to be reliable baseload and theoretically they’re manageable, and so forth, they also can take a day to ramp up and down, so they’re not very flexible. The other thing is that you start to move around things like voltage and frequency and so forth, as you get more of this solar present. The response from most utilities today has been like, slow down. We have an interconnection queue, we’ll do a study, or some version of no.
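
The shape of the duck curve falls out of simple arithmetic: net load is demand minus solar, and the ramp is the change in net load between samples. The sketch below uses made-up numbers at 4-hour intervals purely to illustrate that relationship; it is not data from any real grid.

```python
def net_load(demand_mw, solar_mw):
    """Load that must be met by non-solar generation at each sample."""
    return [d - s for d, s in zip(demand_mw, solar_mw)]

def max_ramp(series):
    """Largest increase between consecutive samples."""
    return max(b - a for a, b in zip(series, series[1:]))

# Hypothetical samples at 00:00, 04:00, 08:00, 12:00, 16:00, 20:00 (MW).
demand = [60, 55, 70, 75, 80, 95]
solar_low = [0, 0, 5, 10, 5, 0]     # a little rooftop solar
solar_high = [0, 0, 30, 50, 25, 0]  # a lot of rooftop solar

net_low = net_load(demand, solar_low)
net_high = net_load(demand, solar_high)

# More solar deepens the midday dip and steepens the evening ramp.
print(min(net_low), max_ramp(net_low))
print(min(net_high), max_ramp(net_high))
```

With these illustrative numbers, adding solar lowers the midday minimum and doubles the steepest evening ramp, which is the problem for slow-ramping conventional plants.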

The grid of tomorrow needs to look quite different. We need to be supplying about three times the demand. We need a lot of that load to be flexible. We need a large role for battery. We need a lot of generation to be coming up locally, because we’re going to have trouble building all the transmission that we need. The more stuff happening locally, the better off we are. We need it to be manageable. We need it to be visible. We need it to be controllable. This is a really big step ahead of where we are today. If we’re talking about, how do we take steps to get from the grid as it stands to the grid as it needs to be? You can start with a few questions like, what’s out there? What’s it up to? Today, there’s not a lot. That’s really in large part, because this change is still starting to happen, because many utilities have pushed back on the addition of a lot of rooftop solar, or battery, or end user technology. It’s also because one of the big factors driving this change is going to be electric vehicles, and that’s really just starting to be a thing in a big way. For the grid as it stands today, there’s actually not that many network problems. Everyone’s been very successful in overbuilding the network, planning really effectively, keeping change slow enough that the pace of change within the utility can keep up with it.

As you start to get a little bit more, you do start to see places where individual parts of the network get stressed. In this picture, this little red triangle is a transformer connecting a couple of different locations where there are smart devices, let’s say EV services, and sometimes that transformer gets overloaded. We can replace it. It’s all right. The more devices we get out there, the more that starts to happen. This is particularly likely to be driven by EVs, but batteries are enough. Most houses run about 6-kilowatt peak load today. The kilowatt peak load of a Ford F-150 Lightning bidirectional charger is 19 kilowatts, which is about 3 to 4 houses worth of load all at once. This is enough to blow smoke out of transformers and catch them on fire.
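
The arithmetic here is easy to check. The sketch below uses the two figures from the talk (about 6 kW peak per house, 19 kW for the charger) and a hypothetical 25 kW transformer rating, assuming unity power factor and a worst case where everything peaks at once.

```python
HOUSE_PEAK_KW = 6.0           # typical house peak load, from the talk
CHARGER_PEAK_KW = 19.0        # bidirectional charger peak, from the talk
TRANSFORMER_RATING_KW = 25.0  # hypothetical rating, assumes unity power factor

def transformer_loading(house_count, charger_count):
    """Worst-case load if every house and charger peaks at the same time."""
    return house_count * HOUSE_PEAK_KW + charger_count * CHARGER_PEAK_KW

def is_overloaded(load_kw, rating_kw=TRANSFORMER_RATING_KW):
    return load_kw > rating_kw

houses_only = transformer_loading(3, 0)
with_one_charger = transformer_loading(3, 1)
houses_per_charger = CHARGER_PEAK_KW / HOUSE_PEAK_KW

print(houses_only, is_overloaded(houses_only))
print(with_one_charger, is_overloaded(with_one_charger))
print(round(houses_per_charger, 1))
```

Under these assumptions, three houses fit comfortably on the transformer, and a single charger on top of them pushes it well past its rating.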

Now we’ve got this question that utilities are starting to ask, which is, how do I stop that from happening? That is the first thing that they tend to come to. Then there’s, how do I know about this? How do I deal with it? Then you’ve got all these software companies, like mine showing up saying, “This is an easy problem for software, can totally manage this. We’ll just schedule in the vehicle’s charge, it’ll be fine.” A utility operator or engineer looks at this and they ask this question, “Software is great for software but these transformers can explode. If they explode, they catch stuff on fire.” This is true all the way up and down the grid. What you have here is not necessarily just a software problem, but now you have a systems problem, a culture problem, one that’s going to be familiar to anyone who’s worked on large scale systems transitions. This is also a trust problem.

Once you know what’s out there, you start to have some more questions, like, can I manage those resources? Could I schedule that EV charging? Could I get them to provide services to me? Can they help with the balancing or the frequency or the voltage problems that I might have? What’s the value of those services? Because I know from thinking about it for me, if I install some batteries, and PG&E comes knocking and they’re like, I want to manage your batteries. I’m like, get out. If they show up, and they’re like, “I have a program where we can automatically pay you for usage of those batteries, sometimes in a way that’s nondisruptive to your usage of that battery,” and I have a good feeling about PG&E, which is a separate question. I might say yes to that. That’s not an unreasonable thing. Batteries are expensive, I might want to defray the cost. Any model, when I talk about control, bear in mind that probably a very large part of this is also price signal driven. Or you’re ultimately going to be offering money to owners of resources, or aggregators of resources in order to get services from those resources. It’s a little bit of a secondary or an indirect operational signal. Nonetheless, a part of the fabric of the grid of the future.

Introducing Reliable Automation

We have a trust problem. I like to think of trust as a ladder. I’ve done a lot of automation of human-driven processes. This is something we had a lot of opportunity to do at Google as things were growing 10x, 100x, 1,000x, a million x, because the complexity of the system keeps scaling ahead of ability of humans to reason about it. There’s a lot of value to having a few simple controls that an operator can depend on, can use in a predictable way, understands the results of. Anytime you’re adding to that or automating something that they already do, that’s not something where you just go in and you’re like, “I solved it for you. Totally automated that problem.” If anyone here has done that, you know what response you get from the operations team, they’re like, get out. You have to come in a piece at a time. You need to keep it simple. You need to keep it predictable. Each step needs to be comprehensible, and it needs to be trustworthy.

Coming back to our little model of the grid, if we know a little bit about what’s happening, we’ve got something that we can start to work with. Like, do I know what’s happening at that transformer? Do I know what’s happening at the load points that are causing the problems? How much of it’s controllable? Do I have some levers? This is something that we can start to work with. Just keep in mind that as we go through this, the goal of automation is not necessarily just to automate things, or to write software, or whatever. The goal of it is to help humans understand the system and continue to be able to understand the system as it changes. Adding automation in ways that is layered and principled also tends to really map to the layers of abstraction, or it can map to layers of abstraction that help to build comprehensible systems as well. Automation is always best when it is doing that.

We can move up this ladder of automation or ladder of trust for an individual device. I can say like, ok, battery, I trust you to do your local job and back up the grid here. The easiest, most trustworthy way to have that happen is to say, I did an interconnection study for this battery, and I know that it is not capable of pushing more energy out at once than that transformer can manage. That’s what most utilities do today to keep a grip around it. That’s a default safety setting. However, as we start to get more of those, and those upgrades become very expensive, you need to start doing something a little bit smarter than that. You need to know what is your available capacity allocation at any particular point on the grid. This can be very simple. This can be like, I added up the size of all those batteries and I know that I have just this much left. It can also be something more sophisticated, and this is where being able to process our real-time data becomes important. Because if I can get real-time data about the transformer or synthesize it, I can get an idea of capacity allocation that is generally safe, outside of peak times, and then I can start to divide that up amongst the people who need to use it. Now I can safely give the operators the ability to call on these devices, in addition to the day-to-day work that the devices are doing. The simplest model for this is just to be able to get energy out or put energy in at a certain point in time. It’d be like, please charge during solar peak, please discharge in the evening during load peak. Once you have a default safe assumption that the device, when you call on it, won’t blow anything up, you begin to have the ability to get it to do other stuff for you. It is more trustworthy now.
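
The two capacity allocation approaches described here can be sketched side by side. This is an illustration under stated assumptions, not how any particular utility or product does it: the function names, the fixed safety margin, and the proportional split are all hypothetical choices.

```python
def static_headroom_kw(rating_kw, device_nameplates_kw):
    """Simple version: rating minus the summed nameplate size of every device."""
    return rating_kw - sum(device_nameplates_kw)

def dynamic_allocation_kw(rating_kw, current_load_kw, requests_kw, margin_kw=5.0):
    """Divide the measured (or synthesized) headroom among device requests.

    Requests are granted in full if they fit; otherwise each is scaled down
    proportionally so the total never exceeds the available headroom.
    """
    available = max(0.0, rating_kw - margin_kw - current_load_kw)
    total = sum(requests_kw.values())
    if total <= available:
        return dict(requests_kw)
    scale = available / total
    return {device: kw * scale for device, kw in requests_kw.items()}

# Hypothetical 50 kW transformer with two batteries behind it.
remaining = static_headroom_kw(50.0, [10.0, 13.5])

# Off-peak, the transformer is carrying 25 kW, leaving 20 kW after the margin.
grants = dynamic_allocation_kw(
    rating_kw=50.0,
    current_load_kw=25.0,
    requests_kw={"battery_1": 10.0, "ev_1": 30.0},
)
print(remaining, grants)
```

The static number never changes, so it is easy to trust but wastes capacity. The dynamic version depends on real-time or synthesized load data, which is why the monitoring foundation comes first.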

That lets you start to look at it not just at the device level, but also at the system level. Now we know, what’s my capacity all the way upline, from the transformer to the conductor, which is the line, to the upline transformers, to the feeder head, to the substation. I can provide a dynamic allocation that basically lets me begin to virtualize my network capacity. This is not what we do in the grid today, but it is very much what we hope to do in the near future. This is the next step, being able to manage those devices and call upon them in a way that is respectful of limits all the way up and down the line. Then to be able to do that in a way that manages the collective capacity of the grid while also calling on services. This is when we can start to do things like orchestration. I really think of orchestration as being collective management to achieve a goal. It’s not just, can I turn a device on and off, or can I stop it from blowing up a transformer? It’s really, can I collectively look at this set of assets and call on it in a way that optimizes something about the system that I want to optimize, cost, carbon, in these particular cases, while maintaining reliability. This is the fundamental unit of automation that we need for the future grid.
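
One way to picture respecting limits all the way upline: the capacity available to a device is bounded by the tightest element between it and the substation. The sketch below assumes a radial path and made-up ratings and loads; the node names are hypothetical.

```python
def upline_headroom_kw(node, parent, rating_kw, load_kw):
    """Smallest spare capacity on the path from a node up to the substation."""
    headroom = float("inf")
    while node is not None:
        headroom = min(headroom, rating_kw[node] - load_kw[node])
        node = parent[node]
    return headroom

# Hypothetical path: transformer -> conductor -> feeder head -> substation.
parent = {
    "xfmr": "conductor",
    "conductor": "feeder_head",
    "feeder_head": "substation",
    "substation": None,
}
rating_kw = {"xfmr": 50, "conductor": 200, "feeder_head": 500, "substation": 2000}
load_kw = {"xfmr": 30, "conductor": 190, "feeder_head": 400, "substation": 1500}

limit = upline_headroom_kw("xfmr", parent, rating_kw, load_kw)
print(limit)
```

In this example the local transformer has 20 kW to spare, but the conductor above it only has 10 kW, so the conductor is the binding constraint. Recomputing this as loads change is one simple reading of a dynamic allocation.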

Coming back to our question, yes, of course software can be trustworthy. We do this every day. This is the work of most of the people in this room. We know that it can be, with caveats. It just means doing the work. It’s not just doing the technical work though, and that’s the really important part here. It also means doing the systems work. One important side note is that whenever you’re doing systems level work in systems that have a very low tolerance of failure, and this is true for things like self-driving cars, for aerospace applications, self-landing rockets, all of these sorts of things, the role of the simulation environment goes up in importance. If you can’t test safely in the wild, you have to test in sim. This is something that was a really big lesson in transitioning from an environment like Google, where in general, you can test in the wild, to something like rocketry or the grid. I actually spent quite a lot of time talking to a friend who worked on aerospace applications to understand the appropriate role for simulation in guaranteeing safety in a dangerous physical system.

Once you have that, the process of rolling out change in a large-scale physical system like the grid is very similar to rolling out change in any distributed system software environment. You’re going to test it. You’re going to canary it. You’re going to deploy it slowly. You’re going to monitor it. Now you have a repeatable pattern that lets you begin to engage with change at a large scale, in a software driven grid management environment. If you’re curious where we’re at with this, we have several grids that are up and running with control that is in this model. This has proven to be really useful in the grid landscape, and something that I think is a really important learning that we brought in with us from the distributed system space.
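The test, canary, deploy slowly, monitor pattern can be sketched as a small staged rollout loop. This is an illustrative outline only: `simulate`, `apply_change`, and `healthy` are hypothetical callables supplied by the caller, and the stage fractions are assumed values.

```python
def staged_rollout(change, sites, simulate, apply_change, healthy,
                   stages=(0.05, 0.25, 1.0)):
    """Roll a change out in widening stages, stopping at the first unhealthy check.

    Returns (status, deployed_sites). Rollback is deliberately left out of
    this sketch; a real system would need it.
    """
    # Test in simulation first, since testing in the wild is not safe.
    if not simulate(change):
        return "rejected_in_sim", []

    deployed = []
    for fraction in stages:
        # The first stage is the canary; later stages widen the deployment.
        target = max(1, int(len(sites) * fraction))
        for site in sites[len(deployed):target]:
            apply_change(site, change)
            deployed.append(site)
        # Monitor everything deployed so far before moving on.
        if not all(healthy(site) for site in deployed):
            return "halted", deployed
    return "complete", deployed
```

For example, with 20 sites the stages above reach 1 site, then 5, then all 20, and an unhealthy canary halts the rollout with only that one site changed.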

The Self-Driving Grid: Reliable High-Scale Automation

What this opens up is the opportunity to really start to look at very large-scale automation. Ultimately, this will be big. It isn’t today, but it needs to be in order to solve the problem that is ahead of us. We need millions of devices. We need a lot of load growth. We need a lot of flexibility. We need it to be simple. As we start to look at that, thinking about the kinds of patterns that make scale predictable, reliable, manageable, and ultimately simple for distributed systems, you’re looking at the unit of a cluster deployment or a data center, or a region, or a service spread across multiple regions. You’re starting to think about, what are those units of self-management or resilience that let you start to think about this, not as a bunch of junk parts, but as a system that you can reason about. Where you could basically set some pieces of it, and expect them to run in a roughly self-sustaining way over a period of time requiring only occasional operator intervention. Starting to think of regions within the grid as being effectively like a cluster level deployment of a service. Where they’re able to operate under a consistent policy within the local set of resources, is a way of thinking about grid scale, that I think maps pretty well from the distributed systems environment up to the full grid environment. This isn’t just me, either. This is a pretty active line of inquiry amongst grid researchers, utilities. This is the model that we think can and will work, is this idea of a fractal grid or a hierarchical grid.

Critical to having the flexibility required to get useful outputs from all of these disparate services, though, is some easily operator controllable flexibility resource. This is where I want to come back to the idea of caching. As we look at what it took to scale up our distributed systems in the web services, or the internet services space, we did start with a very centralized model of capacity allocation, or serving capacity. You’ve got a big data center, you put all the load there. You might have a second one as a backup. When you start thinking about multiple data center locations, you’re also thinking about things like n plus 1 capacity, failover capacity. At first, you’re going to start with something relatively simple. This is basically where the grid is today. A few big generators, n plus 1. Planning for n plus 1 and n plus 2 is a really big thing in the grid as it is for distributed systems. You’re going to forecast load in the grid today and turn generation up and down as needed.
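The n plus 1 idea can be illustrated with a short check: can the remaining units still cover peak load after the largest ones are lost? The function name and the example sizes are assumptions for illustration, and real planning involves far more than this arithmetic.

```python
def survives_loss_of(units_mw, peak_load_mw, k=1):
    """True if peak load is still covered after losing the k largest units.

    k=1 corresponds to n plus 1 planning, k=2 to n plus 2.
    """
    ordered = sorted(units_mw)
    remaining = ordered[:-k] if k > 0 else ordered
    return sum(remaining) >= peak_load_mw
```

The same check reads equally well for data centers serving traffic or generators serving load: with units of 500, 300, and 300 and a peak of 600, the system survives losing its largest unit but not its two largest.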

Coming back to our distributed systems example, as you get more demand, the first step is adding more capacity locations, more data centers, more computers. The next step, almost immediately after that, or alongside that, is the idea of caching, being able to have some lightweight compute cost optimal resources that can serve some fraction of your traffic. At the point where the constraint is not CPU or serving resource, but rather the network itself, the role of the cache changes. This is where at Google, in our early days, when we were looking at scaling problems, we were really dealing with capacity constrained scaling, because the constrained resource was compute for web search. The bandwidth piece of that is very small, a web search request or response is not terribly large. As soon as YouTube came along, suddenly, we had a different problem. Now it’s a network cost problem. For Google, this was 100% driven by reliably serving cat videos, which is very important to all of us. I also like dog videos, too.

This is the point at which we started to need to look at a distributed edge caching layer. What this gave Google was basically the ability to not just use caching to defray network costs, to stop that request from traversing all the way across the network, but also to start to use caching as a point of flexibility that increased the reliability of the system from the user’s perspective. If you think about it from the user’s perspective, your connection to the network might sometimes be down but you can get a result from your browser cache. Your ISP’s connection to the network might be down, or Google may be down or flickering for a second or something, it could occasionally happen, but you can get a response from a local cache if there’s a response available there. It’s not something that replaces the reliability of the central resources, but it is something that substantially augments it. Starting to put these edge caching layers in place let us get from a point at Google where we were maintaining basically a central serving system at five nines, including the network, to a point where the system was ultimately better than six nines reliable and effectively 100% from a user perspective. There’s no reason that it must stay that way, but that was an outcome of this system.
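A rough way to see how a cache can lift user-perceived reliability above that of the central system: a request fails only when the central path is down and the cache cannot answer it. The sketch below assumes those two events are independent and uses an illustrative 90% cache hit ratio; neither the model nor the hit ratio comes from the talk.

```python
import math


def perceived_availability(central_availability, cache_hit_ratio):
    """Availability seen by users when a cache can answer some requests.

    Assumes cache hits are independent of central outages.
    """
    return 1.0 - (1.0 - central_availability) * (1.0 - cache_hit_ratio)


def nines(availability):
    """Express an availability as a count of nines, e.g. 0.999 is about 3."""
    return -math.log10(1.0 - availability)
```

Under these assumptions, a five nines central system fronted by a cache that answers 90% of requests behaves like roughly a six nines system from the user's side.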

If we start to look at this in the grid perspective, what’s a cache? A battery is a cache. Thinking about the role of batteries in the future grid, it’s not just about energy storage, and being able to store that solar and use it again later. It’s also about providing the flexibility that allows us to use the network a lot more efficiently, and also provide a lot more control and a lot more margin for error for the control that we provide. This is something that I think will be really transformative for surprising resources. This is something that we start to see in the field today. In the limit, we start to have a model of the future grid that looks familiar. We’ve got a bunch of independent serving locations, that are potentially getting policies or updates or whatever, to provide an effective collective management at the system level. Perhaps they can even entirely isolate themselves sometimes or much of the time. Hopefully, they can also provide network services, if paid to do so.
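To make the battery-as-cache analogy concrete, here is a small sketch in which a battery behind a constrained line charges when there is spare line capacity and discharges when local load would exceed the limit. The one-hour time step, the absence of efficiency losses, and all of the numbers are simplifying assumptions.

```python
def shave_peaks(load_kw, line_limit_kw, capacity_kwh, max_power_kw, soc_kwh=0.0):
    """Return (grid_flow_kw per step, final state of charge in kWh).

    Uses one-hour steps, so a kW of power for one step moves one kWh.
    """
    grid_kw = []
    for load in load_kw:
        if load > line_limit_kw:
            # Serve the excess locally, like answering from a cache.
            discharge = min(load - line_limit_kw, max_power_kw, soc_kwh)
            soc_kwh -= discharge
            grid_kw.append(load - discharge)
        else:
            # Use spare line capacity to refill the battery.
            charge = min(line_limit_kw - load, max_power_kw, capacity_kwh - soc_kwh)
            soc_kwh += charge
            grid_kw.append(load + charge)
    return grid_kw, soc_kwh
```

With a load of 2, 2, 8, 8 kW, a 5 kW line limit, and a 6 kWh battery rated at 3 kW, the flow over the line is a flat 5 kW in every step, so the same line serves a peak it could not carry on its own.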

I say, hopefully, because there is a model where this all falls apart as well, and the economics of the collective grid become crushed under people’s desire to go and just defect and build their own power plants locally. That would be bad, from a tragedy of the commons perspective, because when you look at a model of the grid that is not providing any form of economic central connectivity, you’ve got a whole bunch of people who are no longer connected and can no longer effectively receive services. We like the model of the grid being available, because it lets us optimize a lot of things. It optimizes the use of the network. It optimizes the use of the resources. It lets us move energy from places where the wind is blowing to where it’s not, where the sun is shining to where it’s not. It lets us move resources around and provide a substantial efficiency boost for the generation and the network resources. It isn’t a default outcome, we have to build this if we want it to happen.

Just bringing it back around to, why are we talking about this in the first place? This is not an academic exercise. It’s not just because we want people to be able to get paid for use of their batteries. It’s not even just because we want transformers not to blow up. It’s because we really need the flexibility that customers and end users provide to be that last 20% of decarbonization of the grid and of our energy supply. If we can do that, we have a really good chance of making our deadlines. We might make it by 2035. We might make it by 2040. 2040 is not bad. 2030 would be the best. Every day that we make it is another day that we’re not emitting the carbon that we have been in the past, and it’s one step towards a better future.

Is This a Job for AI?

As a closing thought, I’m just going to touch on whether or not we will eventually have a giant AI to operate the grid. My short answer to that is no one giant AI, probably, for a couple of reasons. One is that predictability and transparency are really important in a system like this, where there are many participants. It needs to be comprehensible, predictable, and it also needs to be something where particularly the financial implications of any action taken are very clear. Having said that, it is a very complex problem. There are a lot of opportunities for ML and AI to make it better. In the limit, I do expect a very large role for AI in optimization and scheduling, and in continuing to understand and effectively manage the changing patterns of energy usage, to do all of the things that are on this slide. My happy message is that that is something we can evolve as we go. We can start from the tools that we have. There is an opportunity for everyone to find really interesting technical work in the fields that you’re interested in engaging with the grid, whether it’s simply from a systems and operations perspective, or whether you’re an AI and ML researcher, and you see ways to really dramatically improve the operations of these systems.

Conclusion

There’s a couple of other resources that are really helpful for engineers who are looking to make a change into the climate space. We are not the only company working in this space, there are a number of very fine ones. I would really encourage you to consider moving in the climate and clean tech direction as you think about where your career goes in the future. Because there is no more important work that we can be doing today.
