Month: June 2023

MMS • RSS

The Internet of Things (IoT) has become an integral part of our daily lives, with billions of devices connected to the internet, collecting and sharing data. This data explosion has led to a growing need for efficient and scalable data management solutions. One such solution that has emerged as a game-changer in this space is Distributed SQL.

Distributed SQL is a new breed of databases that combines the best of both worlds: the scalability and fault tolerance of NoSQL databases, and the strong consistency and transactional capabilities of traditional relational databases. This powerful combination enables organizations to manage their IoT data at scale, while ensuring data integrity and consistency.

One of the key challenges faced by organizations in the IoT space is the sheer volume of data generated by connected devices. Traditional single-node databases, which were designed for smaller, more predictable workloads, struggle to keep up with the demands of IoT data. This is where Distributed SQL comes in, offering a scalable solution that can handle ever-increasing data volumes.

Distributed SQL databases are designed to scale horizontally, meaning that they can easily accommodate more data by adding more nodes to the system. This makes them an ideal choice for IoT applications, which often require the ability to store and process massive amounts of data. Additionally, Distributed SQL databases are built to be fault-tolerant, ensuring that data remains available even in the face of hardware failures or network outages. This is particularly important for IoT applications, where data loss or downtime can have significant consequences.
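To make horizontal scaling concrete, the following Java sketch shows one way rows can be spread across nodes: a consistent-hash ring that maps device IDs to nodes. This is an illustrative assumption rather than how any particular Distributed SQL product shards data (many use range or hash sharding with automatic rebalancing), and the class name and the 100 virtual nodes per server are hypothetical. What it shows is that adding a node moves only the keys that now land on the new node.

```java
import java.nio.charset.StandardCharsets;
import java.security.MessageDigest;
import java.security.NoSuchAlgorithmException;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical consistent-hash ring mapping device IDs to database nodes. */
class ConsistentHashRing {
    private final TreeMap<Long, String> ring = new TreeMap<>();
    private final int virtualNodesPerNode;

    ConsistentHashRing(int virtualNodesPerNode) {
        this.virtualNodesPerNode = virtualNodesPerNode;
    }

    /** Places several virtual points per node on the ring to even out the load. */
    void addNode(String node) {
        for (int i = 0; i < virtualNodesPerNode; i++) {
            ring.put(hash(node + "#" + i), node);
        }
    }

    /** Returns the owner of the first ring position at or after the key's hash, wrapping around. */
    String nodeFor(String key) {
        if (ring.isEmpty()) throw new IllegalStateException("no nodes");
        Map.Entry<Long, String> owner = ring.ceilingEntry(hash(key));
        return (owner != null ? owner : ring.firstEntry()).getValue();
    }

    /** First 8 bytes of an MD5 digest; used only for placement, not for security. */
    static long hash(String s) {
        try {
            byte[] d = MessageDigest.getInstance("MD5").digest(s.getBytes(StandardCharsets.UTF_8));
            long h = 0;
            for (int i = 0; i < 8; i++) h = (h << 8) | (d[i] & 0xFF);
            return h;
        } catch (NoSuchAlgorithmException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        ConsistentHashRing ring = new ConsistentHashRing(100);
        for (String n : new String[] {"node-a", "node-b", "node-c"}) ring.addNode(n);
        int keys = 10_000;
        String[] before = new String[keys];
        for (int i = 0; i < keys; i++) before[i] = ring.nodeFor("device-" + i);

        ring.addNode("node-d"); // scale out by one node
        int moved = 0;
        for (int i = 0; i < keys; i++) {
            if (!ring.nodeFor("device-" + i).equals(before[i])) moved++;
        }
        System.out.println("moved keys: " + moved + " of " + keys);
    }
}
```

Because every key that moves goes to the newly added node, scaling out reshuffles roughly a 1/N share of the data, whereas simple modulo hashing would move most keys.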

Another challenge faced by organizations in the IoT space is the need for real-time data processing and analysis. IoT devices generate data continuously, and this data often needs to be processed and analyzed in real-time to enable timely decision-making and action. Distributed SQL databases are well-suited to this task, as they are designed to support high-performance, low-latency transactions.

Moreover, Distributed SQL databases offer strong consistency guarantees, ensuring that data remains accurate and up-to-date across all nodes in the system. This is particularly important for IoT applications, where data consistency is critical for accurate decision-making and analytics. In contrast, many NoSQL databases sacrifice consistency in favor of availability and partition tolerance, which can lead to data inconsistencies and inaccuracies.
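Strong consistency in Distributed SQL systems is commonly built on consensus replication such as Raft or Paxos, where a write counts as committed only after a majority of replicas acknowledge it. The toy Java model below, with a hypothetical QuorumStore class, captures just the majority rule and the reason it works (any two majorities overlap); leader election, replicated logs and failure recovery are deliberately left out.

```java
import java.util.Set;

/** Hypothetical model of majority-quorum replication; not any specific database. */
class QuorumStore {
    private final long[] versions;
    private final String[] values;

    QuorumStore(int replicas) {
        versions = new long[replicas];
        values = new String[replicas];
    }

    private boolean isMajority(Set<Integer> replicas) {
        return replicas.size() > versions.length / 2;
    }

    /** Commits only if a majority of replicas is reachable; otherwise nothing is written. */
    boolean write(String value, long version, Set<Integer> reachable) {
        if (!isMajority(reachable)) return false;
        for (int r : reachable) {
            versions[r] = version;
            values[r] = value;
        }
        return true;
    }

    /** Reads from a majority and returns the value carrying the highest version seen. */
    String read(Set<Integer> reachable) {
        if (!isMajority(reachable)) throw new IllegalStateException("no quorum");
        long best = -1;
        String result = null;
        for (int r : reachable) {
            if (versions[r] > best) {
                best = versions[r];
                result = values[r];
            }
        }
        return result;
    }

    public static void main(String[] args) {
        QuorumStore store = new QuorumStore(5);
        store.write("temp=21.5", 1, Set.of(0, 1, 2));
        // Any two majorities of five replicas share at least one replica (here, replica 2).
        System.out.println(store.read(Set.of(2, 3, 4)));               // temp=21.5
        System.out.println(store.write("temp=22.0", 2, Set.of(3, 4))); // false: only 2 of 5
    }
}
```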

In addition to these technical benefits, Distributed SQL databases also offer several operational advantages for organizations managing IoT data at scale. For instance, they typically require less manual intervention and maintenance than traditional databases, as they are designed to automatically handle tasks such as data distribution, replication, and recovery. This can help organizations reduce the operational overhead associated with managing large-scale IoT data.

Furthermore, Distributed SQL databases are often cloud-native, meaning that they are designed to run seamlessly in cloud environments. This enables organizations to take advantage of the flexibility, scalability, and cost-efficiency offered by cloud computing, while still maintaining the performance and consistency guarantees of traditional relational databases.

In conclusion, Distributed SQL is a powerful solution for organizations looking to manage their IoT data at scale. By combining the scalability and fault tolerance of NoSQL databases with the strong consistency and transactional capabilities of traditional relational databases, Distributed SQL offers a robust and efficient way to store, process, and analyze the massive amounts of data generated by IoT devices. As the IoT continues to grow and evolve, Distributed SQL databases are poised to play an increasingly important role in helping organizations harness the full potential of their IoT data.

MMS • Matt Campbell

AWS has released AWS Signer Container Image Signing (AWS Signer) to provide native AWS support for signing and verifying container images in registries such as Amazon Elastic Container Registry (Amazon ECR). AWS Signer manages code signing certificates, public and private keys, and provides lifecycle management tooling.

Additional features include cross-account signing, signature validity periods, and profile lifecycle management. Cross-account signing allows signing profiles to be created and managed in restricted accounts, reducing the number of individuals with those permissions. Permission to sign artifacts can then be granted to other accounts as needed. AWS CloudTrail can be used to provide audit logs of activities within both accounts.

Signature validity periods can be used to create self-expiring profiles. When creating a signing profile, a validity period can be specified; if no validity period is provided, a default value of 135 months (the maximum value) is used.

```shell
aws signer put-signing-profile \
  --profile-name my_container_signing_profile \
  --platform-id Notation-OCI-SHA384-ECDSA \
  --signature-validity-period value=10,type=MONTHS
```
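As a rough illustration of how the validity period bounds a signature's lifetime, this hypothetical Java helper computes an expiry date from a signing date, applying the 135-month default and maximum described above. It assumes the period is counted in whole months from the signing date and is not part of the AWS Signer API or SDK.

```java
import java.time.LocalDate;

/** Hypothetical helper modelling signing-profile validity periods (months only). */
class SignatureValidity {
    static final int MAX_MONTHS = 135; // maximum, and default, per the announcement

    /** Returns the date on which a signature created on signedOn stops being valid. */
    static LocalDate expiresOn(LocalDate signedOn, Integer validityMonths) {
        int months = (validityMonths == null) ? MAX_MONTHS : validityMonths;
        if (months < 1 || months > MAX_MONTHS) {
            throw new IllegalArgumentException("validity must be 1.." + MAX_MONTHS + " months");
        }
        return signedOn.plusMonths(months);
    }

    public static void main(String[] args) {
        LocalDate signed = LocalDate.of(2023, 6, 15);
        System.out.println(expiresOn(signed, 10));   // 2024-04-15
        System.out.println(expiresOn(signed, null)); // 2034-09-15 (default of 135 months)
    }
}
```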

Profiles can also be canceled or revoked. A canceled profile can no longer be used to generate new signatures. Revoking a signing profile invalidates any signatures created after the revocation occurs. This differs from revoking an individual signature, which causes validation to fail when attempting to deploy a container signed with that signature. Note that revocation is irreversible. The following example shows how to revoke a signature using the AWS SDK for Java:

```java
package com.examples;

import com.amazonaws.auth.profile.ProfileCredentialsProvider;
import com.amazonaws.services.signer.AWSSigner;
import com.amazonaws.services.signer.AWSSignerClient;
import com.amazonaws.services.signer.model.RevokeSignatureRequest;

public class RevokeSignature {

    public static void main(String[] s) {
        String credentialsProfile = "default";
        String signingJobId = "jobID"; // ID of the signing job whose signature is revoked
        String revokeReason = "Reason for revocation";

        // Create a client; replace "region" with the AWS Region of the signing profile.
        final AWSSigner client = AWSSignerClient.builder()
                .withRegion("region")
                .withCredentials(new ProfileCredentialsProvider(credentialsProfile))
                .build();

        // Revoke the signature produced by the signing job (this cannot be undone).
        client.revokeSignature(new RevokeSignatureRequest()
                .withJobId(signingJobId)
                .withReason(revokeReason));
    }
}
```
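The cancel and revoke rules described above can be summarized as a small decision model. The following Java sketch is a hypothetical, local illustration (SigningRules and its nested types are not AWS Signer types) and it ignores signature expiry; it only encodes that canceled or revoked profiles cannot sign, that a revoked signature always fails validation, and that signatures created at or after a profile's revocation time are rejected.

```java
import java.time.Instant;

/** Hypothetical model of the cancel/revoke rules; not the AWS Signer API. */
class SigningRules {
    enum ProfileStatus { ACTIVE, CANCELED, REVOKED }

    /** revokedAt is only meaningful when status is REVOKED. */
    record Profile(ProfileStatus status, Instant revokedAt) {}

    record SignedArtifact(Instant signedAt, boolean signatureRevoked) {}

    /** Canceled and revoked profiles cannot produce new signatures. */
    static boolean canSign(Profile profile) {
        return profile.status() == ProfileStatus.ACTIVE;
    }

    /** Deploy-time validation check. */
    static boolean isValid(SignedArtifact artifact, Profile profile) {
        if (artifact.signatureRevoked()) {
            return false; // a revoked signature fails validation outright
        }
        if (profile.status() == ProfileStatus.REVOKED
                && !artifact.signedAt().isBefore(profile.revokedAt())) {
            return false; // signed at or after the profile was revoked
        }
        return true;
    }

    public static void main(String[] args) {
        Profile revoked = new Profile(ProfileStatus.REVOKED, Instant.parse("2023-06-20T00:00:00Z"));
        SignedArtifact before = new SignedArtifact(Instant.parse("2023-06-19T12:00:00Z"), false);
        SignedArtifact after = new SignedArtifact(Instant.parse("2023-06-21T12:00:00Z"), false);
        System.out.println(canSign(revoked));         // false
        System.out.println(isValid(before, revoked)); // true
        System.out.println(isValid(after, revoked));  // false
    }
}
```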

AWS Signer is integrated with Notation, an open-source tool from the Cloud Native Computing Foundation (CNCF) Notary project. As described on the Notation GitHub page, Notation can be viewed as providing “similar security to checking git commit signatures, although the signatures are generic and can be used for additional purposes”. Notation uses Open Container Initiative (OCI) distribution features to store signatures and artifacts in the registry alongside their associated images.

AWS Signer also integrates with AWS Lambda, enabling workflows in which signed code packages are produced and then verified by AWS Lambda. The workflow requires source and destination S3 buckets: AWS Signer pulls code packages from the source bucket, signs them, and deposits the signed packages into the destination bucket.
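The platform ID Notation-OCI-SHA384-ECDSA indicates SHA-384 digests combined with ECDSA signatures. The Java sketch below uses only the JDK's java.security APIs to sign a code package's bytes and to show that verification fails once a single byte changes. It is a generic illustration, not how AWS Signer or Lambda implement signing; in particular, the in-memory key pair stands in for the keys and certificates that AWS Signer manages, and the class name is hypothetical.

```java
import java.nio.charset.StandardCharsets;
import java.security.GeneralSecurityException;
import java.security.KeyPair;
import java.security.KeyPairGenerator;
import java.security.PrivateKey;
import java.security.PublicKey;
import java.security.Signature;

/** Generic SHA-384/ECDSA sign-and-verify sketch using only JDK APIs (not AWS Signer). */
class CodePackageSigner {
    private static final String ALGORITHM = "SHA384withECDSA";

    /** AWS Signer manages keys for you; here a key pair is simply generated in memory. */
    static KeyPair newKeyPair() {
        try {
            KeyPairGenerator gen = KeyPairGenerator.getInstance("EC");
            gen.initialize(384); // selects the NIST P-384 curve
            return gen.generateKeyPair();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    static byte[] sign(byte[] artifact, PrivateKey key) {
        try {
            Signature signer = Signature.getInstance(ALGORITHM);
            signer.initSign(key);
            signer.update(artifact);
            return signer.sign();
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    /** Returns false if the artifact was altered after signing. */
    static boolean verify(byte[] artifact, byte[] signature, PublicKey key) {
        try {
            Signature verifier = Signature.getInstance(ALGORITHM);
            verifier.initVerify(key);
            verifier.update(artifact);
            return verifier.verify(signature);
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        KeyPair keys = newKeyPair();
        byte[] pkg = "function.zip contents".getBytes(StandardCharsets.UTF_8);
        byte[] sig = sign(pkg, keys.getPrivate());
        System.out.println(verify(pkg, sig, keys.getPublic())); // true
        pkg[0] ^= 1; // tamper with a single bit
        System.out.println(verify(pkg, sig, keys.getPublic())); // false
    }
}
```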

More details about AWS Signer can be found in the release blog post. At the time of writing, AWS Signer can be used with AWS Lambda, Amazon FreeRTOS, AWS IoT Device Management, Amazon ECR, Amazon EKS, AWS Certificate Manager, and AWS CloudTrail. A list of supported regions is available in the AWS documentation.

GitLab 16: Value Stream Dashboards, Remote Development Workspaces, and AI-Powered Code Suggestions

MMS • Aditya Kulkarni

GitLab has recently announced version 16, which introduces several enhancements. These include Value Streams Dashboards, code suggestions powered by generative artificial intelligence (AI), and workflow features such as remote development workspaces (in beta).

Kai Armstrong, Senior Product Manager at GitLab, summarised the updates in a blog post. GitLab 16 introduces Value Stream Dashboards, which support continuous improvement of software delivery workflows by measuring value stream lifecycle and vulnerability metrics. The value stream lifecycle metrics build on value stream analytics and include the four DevOps Research and Assessment (DORA) metrics. The Value Streams Dashboard is a highly customizable interface that improves visibility by displaying trends, patterns, and opportunities for digital transformation improvements.
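The four DORA metrics are deployment frequency, lead time for changes, change failure rate, and time to restore service. The following Java sketch computes them from a list of deployment records; the record shape, the made-up sample data, and the choice of an upper median for lead time and a mean for restore time are assumptions for illustration, and GitLab's own calculations may differ.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.List;

/** Hypothetical calculation of the four DORA metrics; GitLab's formulas may differ. */
class DoraMetrics {
    /** restoredAt is null when the deployment did not cause a failure. */
    record Deployment(Instant committedAt, Instant deployedAt, boolean failed, Instant restoredAt) {}

    /** Deployment frequency: deployments per day over the reporting period. */
    static double deploymentsPerDay(List<Deployment> ds, int periodDays) {
        return (double) ds.size() / periodDays;
    }

    /** Lead time for changes: upper median of commit-to-deploy durations. */
    static Duration medianLeadTime(List<Deployment> ds) {
        List<Duration> leads = ds.stream()
                .map(d -> Duration.between(d.committedAt(), d.deployedAt()))
                .sorted()
                .toList();
        return leads.get(leads.size() / 2);
    }

    /** Change failure rate: share of deployments that caused a failure. */
    static double changeFailureRate(List<Deployment> ds) {
        return (double) ds.stream().filter(Deployment::failed).count() / ds.size();
    }

    /** Time to restore service: mean deploy-to-restore duration of failed deployments. */
    static Duration meanTimeToRestore(List<Deployment> ds) {
        List<Deployment> failed = ds.stream().filter(Deployment::failed).toList();
        if (failed.isEmpty()) return Duration.ZERO;
        Duration total = failed.stream()
                .map(d -> Duration.between(d.deployedAt(), d.restoredAt()))
                .reduce(Duration.ZERO, Duration::plus);
        return total.dividedBy(failed.size());
    }

    /** Builds a deployment committed on day `day`; restoreHours is null if it did not fail. */
    private static Deployment deployment(int day, int leadHours, Integer restoreHours) {
        Instant committed = Instant.parse("2023-06-01T00:00:00Z").plus(Duration.ofDays(day));
        Instant deployed = committed.plus(Duration.ofHours(leadHours));
        boolean failed = restoreHours != null;
        return new Deployment(committed, deployed, failed,
                failed ? deployed.plus(Duration.ofHours(restoreHours)) : null);
    }

    /** Four made-up deployments in one week, two of which failed. */
    static List<Deployment> sample() {
        return List.of(deployment(0, 2, null), deployment(1, 4, 1),
                deployment(2, 1, null), deployment(3, 3, 3));
    }

    public static void main(String[] args) {
        List<Deployment> ds = sample();
        System.out.println("deployments/day: " + deploymentsPerDay(ds, 7));
        System.out.println("lead time:       " + medianLeadTime(ds));    // PT3H
        System.out.println("failure rate:    " + changeFailureRate(ds)); // 0.5
        System.out.println("time to restore: " + meanTimeToRestore(ds)); // PT2H
    }
}
```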

Value streams are gaining increased traction within organizations as they also align with the shift in mindset from project management and waterfall approaches to product management.

To improve efficiency and productivity across GitLab features, updates have been made to GitLab Duo, a suite of AI capabilities that leverage generative AI. Included in GitLab Ultimate, GitLab Duo provides features such as Summarising Merge Requests, Suggest Reviewers, and Explain This Vulnerability to assist throughout the software development lifecycle. Code Suggestions has also been improved and now supports 13 programming languages; it is currently available in VS Code via the GitLab Workflow Extension.

AI and ML systems help organizations discover new opportunities and products. According to Zorina Alliata, senior software managers should understand the basics of AI and ML. Although roles like data scientist, data engineer, and ML engineer may not exist in current software engineering teams, leaders should be prepared to hire and train for these positions.

In addition to various other enhancements, GitLab 16 introduced a beta release of Remote Development Workspaces, which are ephemeral development environments in the cloud. Based on the AMD64 architecture, each workspace is equipped with its own set of dependencies, libraries, and tools.

Omnibus GitLab, a method of packaging the necessary services and tools for running GitLab, has received updates that simplify installation for most users by eliminating the need for complex configuration. The update has garnered attention, as highlighted in a Reddit post whose original poster, omenosdev, mentioned in the comments: “…but I know some people have been waiting a long while to be able to deploy GitLab on RHEL 9…”

Armstrong thanked the wider GitLab community for the 304 contributions they made to GitLab 16 and invited readers to follow upcoming releases via the Product Kickoff Review page, which is updated on the 22nd of each month. As of this writing, GitLab 16.1 has been released.

Microsoft Azure Event Grid MQTT Protocol Support and Pull Message Delivery Are Now in Public Preview

MMS • Steef-Jan Wiggers

Microsoft recently announced the public preview of bi-directional communication via MQTT version 5 and MQTT version 3.1.1 protocols for its Azure Event Grid service.

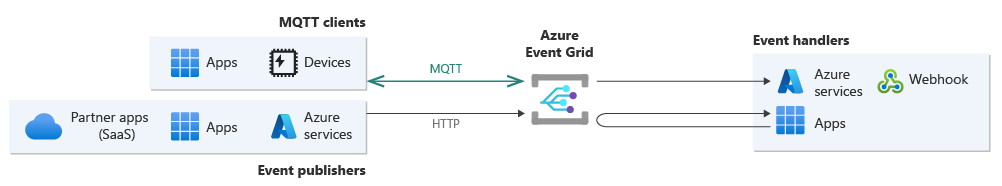

Azure Event Grid is Microsoft’s cloud-based event-routing service that enables customers to create, manage, and route events from various sources to different destinations or subscribers. It allows them to build data pipelines with device data, integrate applications, and build event-driven serverless architectures.

Microsoft has expanded the Event Grid capabilities to enhance system interoperability by introducing support for MQTT v3.1.1 and v5.0 protocols. This allows customers to publish and subscribe to messages for Internet of Things (IoT) solutions. This new support, currently available in public preview, complements the existing support for CloudEvents 1.0.

Ashita Rastogi, a Product Manager at Microsoft, explains in a tech community blog post:

With this public preview release, customers can securely connect MQTT clients to Azure Event Grid, get fine-grained access control to permit pub-sub on hierarchical topics, and route MQTT messages to other Azure services or 3rd party applications via Event Grid subscriptions. Key features supported in this release include MQTT over Websockets, persistent sessions, user properties, message expiry interval, topic aliases, and request-response.
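Fine-grained access control over hierarchical topics relies on MQTT topic filters, where `+` matches exactly one topic level and `#` matches all remaining levels, including the parent level. This Java sketch implements those standard MQTT wildcard rules; it is a simplified illustration rather than Event Grid's implementation, the class name is hypothetical, and it omits the special handling of topics that start with `$`.

```java
/** Sketch of MQTT topic-filter matching: '+' = one level, '#' = all remaining levels. */
class MqttTopicMatcher {
    static boolean matches(String filter, String topic) {
        String[] f = filter.split("/", -1); // -1 keeps empty levels such as "a//b"
        String[] t = topic.split("/", -1);
        for (int i = 0; i < f.length; i++) {
            if (f[i].equals("#")) {
                // '#' is only valid as the last level; it also matches the parent ("a/#" matches "a").
                return i == f.length - 1;
            }
            if (i >= t.length) return false;                               // topic is shorter than filter
            if (!f[i].equals("+") && !f[i].equals(t[i])) return false;     // literal level mismatch
        }
        return f.length == t.length; // no wildcard consumed the rest, so lengths must agree
    }

    public static void main(String[] args) {
        System.out.println(matches("vehicles/+/telemetry", "vehicles/truck1/telemetry")); // true
        System.out.println(matches("vehicles/#", "vehicles/truck1/engine/temp"));         // true
        System.out.println(matches("vehicles/+", "vehicles/truck1/engine"));              // false
    }
}
```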

Source: https://learn.microsoft.com/en-us/azure/event-grid/overview

In a YouTube video on the new MQTT broker functionality in Azure Event Grid, George Guirguis, a Product Manager of Azure Messaging at Microsoft, described what users can do with the MQTT messages:

This will enable you to leverage your messages for data analysis storage or visualization, among other use cases.

Furthermore, Microsoft has responded to customer demands for processing events in highly secure environments by introducing additional “pull” capabilities (also in public preview) to the service. Alongside the MQTT support, these capabilities eliminate the need for configuring a public endpoint and provide customers with enhanced control over message consumption rates, volume, and throughput.

Customers can use the new MQTT support and pull delivery capabilities through a new resource called Event Grid Namespace. A Namespace simplifies resource management and provides both an HTTP and an MQTT endpoint. This release explicitly supports pull delivery for topics within the Namespace resource, and the Namespace also encompasses MQTT-related resources such as clients, certificates, client groups, and permission bindings.

Amazon EventBridge and Google Eventarc offer capabilities similar to Azure Event Grid, allowing companies to build event-driven architectures and enable communication and coordination between different components of their applications or services. Both cloud providers also offer other messaging services, such as Amazon SNS, Amazon SQS, and Google Pub/Sub.

Clemens Vasters, a Principal Architect of Messaging Services and Standards at Microsoft, commented in a LinkedIn post:

Azure Messaging supports OASIS AMQP 1.0 as its primary messaging protocol across the fleet, we support the open protocol of Apache Kafka, we support HTTP and WebSockets, and we now pick up OASIS MQTT 5.0/3.1.1 with a full implementation. We literally have no proprietary protocol in active use.

Currently, the public preview of the new capabilities of MQTT support and pull delivery is available in East US, Central US, South Central US, West US 2, East Asia, Southeast Asia, North Europe, West Europe, and UAE North Azure regions.

Lastly, Azure Event Grid uses a pay-per-event pricing model; its pricing details are available on the pricing page.

MMS • Renato Losio

AWS recently announced support for dead-letter queue redrive in Amazon SQS using the AWS SDK or the AWS Command Line Interface (CLI). The new capability allows developers to move unconsumed messages out of an existing dead-letter queue and back to their source queue.

When errors occur, SQS moves the unconsumed message to a dead-letter queue (DLQ), allowing developers to inspect messages that are not consumed successfully and debug their application failures. Sébastien Stormacq, principal developer advocate at AWS, explains:

Each time a consumer application picks up a message for processing, the message receive count is incremented by 1. When ReceiveCount > maxReceiveCount, Amazon SQS moves the message to your designated DLQ for human analysis and debugging. You generally associate alarms with the DLQ to send notifications when such events happen.
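The receive-count rule in the quote can be simulated in a few lines. This Java model is hypothetical (DlqSimulation is not an SQS class) and simplifies SQS considerably: a failed message is put straight back on the queue instead of waiting out a visibility timeout.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;

/** In-memory model of an SQS redrive policy (hypothetical; not the SQS API). */
class DlqSimulation {
    private final Deque<String> source = new ArrayDeque<>();
    private final Deque<String> deadLetter = new ArrayDeque<>();
    private final Map<String, Integer> receiveCount = new HashMap<>();
    private final int maxReceiveCount;

    DlqSimulation(int maxReceiveCount) { this.maxReceiveCount = maxReceiveCount; }

    void send(String msg) { source.add(msg); }

    /** Each receive increments the count; once it exceeds the limit, the message goes to the DLQ. */
    String receive() {
        while (!source.isEmpty()) {
            String msg = source.poll();
            int count = receiveCount.merge(msg, 1, Integer::sum);
            if (count > maxReceiveCount) {
                deadLetter.add(msg); // kept for inspection instead of being delivered again
                continue;
            }
            return msg;
        }
        return null;
    }

    /** Consumer failed to process the message, so it becomes available again. */
    void fail(String msg) { source.add(msg); }

    int deadLetterCount() { return deadLetter.size(); }

    int pendingCount() { return source.size(); }

    public static void main(String[] args) {
        DlqSimulation q = new DlqSimulation(3);
        q.send("order-42");
        int deliveries = 0;
        for (String m = q.receive(); m != null; m = q.receive()) {
            deliveries++;
            q.fail(m); // simulate a consumer that always fails
        }
        System.out.println(deliveries + " deliveries, " + q.deadLetterCount() + " in DLQ"); // 3 deliveries, 1 in DLQ
    }
}
```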

Once the failed message has been debugged or the consumer application is available to consume it, the new redrive capability moves the messages back to the source queue, programmatically managing the lifecycle of the unconsumed messages at scale in distributed systems.

Previously, redrive was only available as a manual operation in the AWS Console, prompting Jeremy Daly, CEO and Founder of Ampt, to write at the time:

It’s not a feature, it’s not an API, it’s an “experience” only available in the AWS Console. Do I want it? Yes! Do I want to log in to the AWS Console to use it? Absolutely not.

To reprocess DLQ messages, developers can use the following API actions: StartMessageMoveTask, to start a new message movement task from the dead-letter queue; CancelMessageMoveTask, to cancel a message movement task; and ListMessageMoveTasks, to get the most recent message movement tasks (up to 10) for a specified source queue.

The feature has been well received by the community with Tiago Barbosa, head of cloud and platforms at MUSIC Tribe, commenting:

This is a nice improvement. One of the things I never liked about using DLQs was the need to build the mechanism to re-process the items that ended up there.

Benjamen Pyle, CTO at Curantis Solutions, wrote an article on how to redrive messages with Golang and Step Functions.

In the configuration of a DLQ, it is possible to specify if the messages should be sent back to their source queue or another queue, using an ARN for the custom destination option. Luc van Donkersgoed, lead engineer at PostNL and AWS Serverless Hero, tweets:

Just redrive to the original queue would have been nice. This is EXTRA nice because it allows us to specify any destination queue. That’s a whole class of Lambda Functions… POOF, gone.

The documentation highlights a few limitations: SQS supports dead-letter queue redrive only for standard queues, and it does not support filtering or modifying messages while reprocessing them. Furthermore, a DLQ redrive task can run for a maximum of 36 hours, with a maximum of 100 active redrive tasks per account. Some developers have also questioned the lack of support in Step Functions.

SQS does not create a DLQ automatically; the queue must be created and configured before it can receive unconsumed messages.
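The redrive flow and the 36-hour task limit can be illustrated with a small Java model. It is a hypothetical sketch, not the StartMessageMoveTask API: the destination deque can stand for either the original source queue or any other queue, and the per-second move rate is an assumed, fixed input used only to estimate whether a backlog fits in one task.

```java
import java.time.Duration;
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.List;

/** Hypothetical model of a DLQ redrive task; not the SQS StartMessageMoveTask API. */
class RedriveSketch {
    static final Duration MAX_TASK_DURATION = Duration.ofHours(36); // documented task limit

    /** Moves every message, oldest first, to the destination (source queue or any other queue). */
    static int redrive(Deque<String> deadLetter, Deque<String> destination) {
        int moved = 0;
        while (!deadLetter.isEmpty()) {
            destination.add(deadLetter.poll());
            moved++;
        }
        return moved;
    }

    /** Rough check: can `messages` be moved at an assumed `perSecond` rate within 36 hours? */
    static boolean fitsInOneTask(long messages, long perSecond) {
        long secondsNeeded = (messages + perSecond - 1) / perSecond; // ceiling division
        return secondsNeeded <= MAX_TASK_DURATION.toSeconds();       // 129,600 seconds
    }

    public static void main(String[] args) {
        Deque<String> dlq = new ArrayDeque<>(List.of("m1", "m2", "m3"));
        Deque<String> source = new ArrayDeque<>();
        System.out.println(redrive(dlq, source) + " moved, source=" + source); // 3 moved, source=[m1, m2, m3]
        System.out.println(fitsInOneTask(1_000_000, 10)); // true  (100,000 s)
        System.out.println(fitsInOneTask(2_000_000, 10)); // false (200,000 s)
    }
}
```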

MMS • Michael Redlich

Day Three of the 9th annual QCon New York conference was held on June 15th, 2023 at the New York Marriott at the Brooklyn Bridge in Brooklyn, New York. This three-day event is organized by C4Media, a software media company focused on unbiased content and information in the enterprise development community and creators of InfoQ and QCon. It included a keynote address by Suhail Patel and presentations from these four tracks:

Morgan Casey, Program Manager at C4Media, and Danny Latimer, Content Product Manager at C4Media, kicked off the day three activities by welcoming the attendees. They introduced the Program Committee, namely: Aysylu Greenberg, Frank Greco, Sarah Wells, Hien Luu, Michelle Brush, Ian Thomas and Werner Schuster; and acknowledged the QCon New York staff and volunteers. The aforementioned track leads for Day Three introduced themselves and described the presentations in their respective tracks.

Keynote Address: The Joy of Building Large Scale Systems

Suhail Patel, Staff Engineer at Monzo, presented a keynote entitled, The Joy of Building Large Scale Systems. On his opening slide, which Patel stated was also his conclusion, he asked why the following is true:

Many of the systems (databases, caches, queues, etc.) that we rely on are grounded on quite poor assumptions for the hardware of today.

He characterized his keynote as a retrospective of where we have been in the industry. As the title suggests, Patel stated that developers have “the joy of building large scale systems, but the pain of operating them.” After showing a behind-the-scenes view of the required microservices for an application in which a Monzo customer uses their debit card, he introduced: binary trees, a tree data structure where each node has at most two children; and a comparison of latency numbers, as assembled by Jonas Bonér, Founder and CTO of Lightbend, that every developer should know. Examples of latency data included: disk seek, main memory reference and L1/L2 cache references. Patel then described how to search a binary tree, insert nodes and rebalance a binary tree as necessary. After a discussion of traditional hard drives, defragmentation and comparisons of random and sequential I/O as outlined in the blog post by Adam Jacobs, Chief Scientist at 1010data, he provided analytical data of how disks, CPUs and networks have been evolving and getting faster. “Faster hardware == more throughput,” Patel maintained. However, despite the advances in CPUs and networks, “The free lunch is over,” he said, referring to a March 2005 technical article by Herb Sutter, Software Architect at Microsoft and Chair of the ISO C++ Standards Committee, that discussed the slowing down of Moore’s Law and how the drastic increases in CPU clock speed were coming to an end. Sutter maintained:
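The binary tree operations Patel walked through can be sketched in Java as an unbalanced binary search tree. Rebalancing (as done by AVL or red-black trees) is intentionally omitted, and the class name is illustrative; the height() method instead shows why rebalancing matters, since inserting keys in sorted order degenerates the tree into a linked list.

```java
/** Minimal, unbalanced binary search tree: each node has at most two children. */
class BinarySearchTree {
    private static final class Node {
        final int key;
        Node left, right;
        Node(int key) { this.key = key; }
    }

    private Node root;

    /** Walks left for smaller keys and right for larger ones until an empty slot is found. */
    void insert(int key) {
        root = insert(root, key);
    }

    private Node insert(Node n, int key) {
        if (n == null) return new Node(key);
        if (key < n.key) n.left = insert(n.left, key);
        else if (key > n.key) n.right = insert(n.right, key);
        return n; // duplicate keys are ignored
    }

    /** Each comparison discards one subtree, so a balanced tree needs O(log n) steps. */
    boolean contains(int key) {
        Node n = root;
        while (n != null) {
            if (key == n.key) return true;
            n = key < n.key ? n.left : n.right;
        }
        return false;
    }

    /** Number of levels; grows to n for sorted inserts, which is what rebalancing prevents. */
    int height() { return height(root); }

    private int height(Node n) {
        return n == null ? 0 : 1 + Math.max(height(n.left), height(n.right));
    }

    public static void main(String[] args) {
        BinarySearchTree tree = new BinarySearchTree();
        for (int k : new int[] {50, 30, 70, 20, 40}) tree.insert(k);
        System.out.println(tree.contains(40) + " " + tree.contains(60) + " " + tree.height()); // true false 3

        BinarySearchTree skewed = new BinarySearchTree();
        for (int k = 1; k <= 10; k++) skewed.insert(k);
        System.out.println(skewed.height()); // 10
    }
}
```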

No matter how fast processors get, software consistently finds new ways to eat up the extra speed. Make a CPU ten times as fast, and software will usually find ten times as much to do (or, in some cases, will feel at liberty to do it ten times less efficiently).

Since 2005, cloud computing has revolutionized the industry. As Patel explained:

We have become accustomed to the world of really infinite compute and we have taken advantage of it by writing scalable and dist software, but often focused on ever scaling upwards and outwards without a ton of regard for perf per unit of compute that we are utilizing.

Sutter predicted back then that the next frontier would be in software optimization with concurrency. Patel discussed the impact of the thread-per-core architecture and the synchronization challenge, comparing the shared-everything architecture, in which multiple CPU cores access the same data in memory, with the shared-nothing architecture, in which each CPU core accesses its own dedicated memory space. A 2019 white paper by Pekka Enberg, Founder and CTO at ChiselStrike, Ashwin Rao, Researcher at University of Helsinki, and Sasu Tarkoma, Campus Dean at University of Helsinki, found a 71% reduction in application tail latency using the shared-nothing architecture.
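A shared-nothing design can be approximated in Java by giving each shard its own single-threaded executor and its own HashMap, and routing every request for a key to the owning shard. This is a hypothetical sketch: unlike Seastar, plain Java threads are not pinned to CPU cores, so it only illustrates the lock-free ownership model, not the full thread-per-core architecture.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

/** Hypothetical shared-nothing counter store: one thread per shard, no shared mutable state. */
class ShardedCounters implements AutoCloseable {
    private final List<ExecutorService> workers = new ArrayList<>();
    private final List<Map<String, Long>> shards = new ArrayList<>();

    ShardedCounters(int shardCount) {
        for (int i = 0; i < shardCount; i++) {
            workers.add(Executors.newSingleThreadExecutor());
            shards.add(new HashMap<>()); // only ever touched by the matching worker thread
        }
    }

    private int shardOf(String key) {
        return Math.floorMod(key.hashCode(), workers.size());
    }

    /** Routes the update to the owning shard's thread, so a plain HashMap needs no locks. */
    Future<Long> increment(String key) {
        int s = shardOf(key);
        return workers.get(s).submit(() -> shards.get(s).merge(key, 1L, Long::sum));
    }

    /** Reads go through the same thread, so they see all earlier updates to that key. */
    long getBlocking(String key) {
        int s = shardOf(key);
        try {
            return workers.get(s).submit(() -> shards.get(s).getOrDefault(key, 0L)).get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e);
        }
    }

    @Override
    public void close() {
        for (ExecutorService w : workers) w.shutdown();
    }

    public static void main(String[] args) {
        try (ShardedCounters counters = new ShardedCounters(4)) {
            for (int i = 0; i < 1000; i++) counters.increment("sensor-" + (i % 10));
            System.out.println(counters.getBlocking("sensor-3")); // 100
        }
    }
}
```

Because each single-threaded executor processes its tasks in submission order, a read routed to a shard always observes every update previously submitted to that shard, without any locks on the maps.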

Patel then introduced solutions to help developers in this area. These include: Seastar, an open-source C++ framework for high-performance server applications on modern hardware; io_uring, an asynchronous I/O interface to the Linux kernel that can potentially benefit networking; the emergence of programming languages such as Rust and Zig; a faster CPython with the recent release of version 3.11; and eBPF, a toolkit for creating efficient kernel tracing and manipulation programs.

As an example of human and machine working together, Patel cited racing driver Sir Jackie Stewart, who coined the term mechanical sympathy to describe caring about and deeply understanding a machine in order to extract the best possible performance.

He maintained there has been a cultural shift in writing software to take advantage of the improved hardware. Developers can start with profilers to locate bottlenecks. Patel is particularly fond of Generational ZGC, a Java garbage collector that will be included in the upcoming GA release of JDK 21.

Patel returned to his opening statement and, as an addendum, added:

Software can keep pace, but there’s some work we need do to yield huge results, power new kinds of systems and reduce compute costs

Optimizations are staring us in the face, and Patel “longs for the day that we never have to look at the spinner.”

Highlighted Presentations: Living on the Edge, Developing Above the Cloud, Local-First Technologies

Living on the Edge by Erica Pisani, Sr. Software Engineer at Netlify. Availability zones are defined as one or more data centers located in dedicated geographic regions provided by organizations such as AWS, Google Cloud or Microsoft Azure. Pisani further defined: the edge as data centers that live outside of an availability zone; an edge function as a function that is executed in one of these data centers; and data on the edge as data that is cached, stored or accessed at one of these data centers. This improves performance, especially for users located far from an availability zone.

After showing global maps of AWS availability zones and edge locations, she then provided an overview on the communication between a user, edge location and origin server. For example, when a user makes a request via a browser or application, the request first arrives at the nearest edge location. In the best case, the edge location responds to the request. However, if the cache at the edge location is outdated or somehow invalidated, the edge location must communicate with the origin server to obtain the latest cache information before responding to the user. While there is an overhead cost for this scenario, subsequent users will benefit.
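The request flow Pisani described (answer from the edge when possible, otherwise fetch from the origin and cache the result for subsequent users) can be sketched as follows. The EdgeCache class, its time-to-live policy and the origin function are assumptions for illustration; real CDNs rely on HTTP caching headers and far richer invalidation.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.HashMap;
import java.util.Map;
import java.util.function.Function;

/** Hypothetical edge-location cache that falls back to the origin on a miss or stale entry. */
class EdgeCache {
    private record Entry(String body, Instant fetchedAt) {}

    private final Map<String, Entry> cache = new HashMap<>();
    private final Function<String, String> origin; // stands in for a request to the origin server
    private final Duration ttl;
    private int originFetches = 0;

    EdgeCache(Function<String, String> origin, Duration ttl) {
        this.origin = origin;
        this.ttl = ttl;
    }

    String get(String path, Instant now) {
        Entry e = cache.get(path);
        if (e != null && now.isBefore(e.fetchedAt().plus(ttl))) {
            return e.body(); // best case: answered by the edge location
        }
        originFetches++; // miss or stale entry: one slower round trip to the origin
        String body = origin.apply(path);
        cache.put(path, new Entry(body, now)); // later users near this edge benefit
        return body;
    }

    /** Explicit invalidation, e.g. after a new deploy. */
    void invalidate(String path) { cache.remove(path); }

    int originFetches() { return originFetches; }

    public static void main(String[] args) {
        EdgeCache edge = new EdgeCache(path -> "<html>" + path + "</html>", Duration.ofSeconds(60));
        Instant t0 = Instant.parse("2023-06-15T12:00:00Z");
        edge.get("/pricing", t0);                  // miss: fetched from the origin
        edge.get("/pricing", t0.plusSeconds(10));  // hit: served from the edge
        edge.get("/pricing", t0.plusSeconds(120)); // stale: origin again
        System.out.println(edge.originFetches());  // 2
    }
}
```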

Pisani discussed various problems and corresponding solutions for web application functionality on the edge using edge functions. These were related to: high-traffic pages that need to serve localized content; user session validation taking too much time in the request; and routing a third-party integration request to the correct region. She provided an extreme example of a user located far from the two origin servers used for authentication; installing an edge server close to the remote user eliminated the initial latency.

There is an overall assumption of reliable Internet access, but that isn’t always true. Pisani then introduced the AWS Snowball Edge device, a physical device that brings cloud computing to places with unreliable or non-existent Internet access, or serves as a way of migrating data to the cloud. She wrapped up her presentation by enumerating some limitations of edge computing: less available CPU time; advantages that may be lost when a network request is made; limited integration with other cloud services; and smaller caches.

Developing Above the Cloud by Paul Biggar, Founder and CEO at Darklang. Biggar kicked off his presentation with an enumeration of how computing has evolved over the years in which a simple program has become complex when persistence, Internet, reliability, continuous delivery, and scalability are all added to the original simple program. He said that “programming used to be fun.” He then discussed other complexities that are inherent in Docker, front ends and the growing number of specialized engineers.

With regards to complexity, the conventional wisdom is that developers should build software from “simple, understandable tools that interact well with our existing tools,” following the UNIX philosophy of “do one thing, and do it well.” However, Biggar claims that this is a fallacy and is itself the problem, because it leads to the complexity found in software development today, built up “one simple, understandable tool at a time.”

He discussed incentives within companies, in which engineers don’t want to build new greenfield projects that solve all the complexity; instead, they are incentivized to add small new things to existing projects that solve problems. Therefore, Biggar maintained that “do one thing, and do it well” is also the problem. This is why the “batteries included” approach, taken by languages such as Python and Rust, delivers all the tools in one package. “We should be building holistic tools,” Biggar said, leading up to the main theme of his presentation on developing above the cloud.

Three types of complexity: infra complexity; deployment complexity; and tooling complexity, should be removed for an improved developer experience. Infra complexity includes the use of tools such as: Kubernetes, ORM, connection pools, health checks, provisioning, cold starts, logging, containers and artifact registries. Biggar characterized deployment complexity with a quote from Jorge Ortiz in which the “speed of developer iteration is the single most important factor in how quickly a technology company can move.” There is no reason that deployment should take a significant amount of time. Tooling complexity was explained by demos of the Darklang IDE in which creating things like REST endpoints or persistence, for example, can be quickly moved to production by simply adding data in a dialog box. There was no need to worry about things such as: server configuration, pushing to production or a CI/CD pipeline. Application creation is reduced down to the abstraction.

At this time, there is no automated testing in this environment, and adoption of Darklang currently stands at “hundreds of active users.”

Offline and Thriving: Building Resilient Applications With Local-First Techniques by Carl Sverre, Entrepreneur in Residence at Amplify Partners. Sverre kicked off his presentation with a demonstration of the infamous “loading spinner” as a necessary evil to inform the user that something was happening in the background. He argued that this kind of latency doesn’t need to exist and defined offline-first as:

(of an application or system) designed and prioritized to function fully and effectively without an internet connection, with the capability to sync and update data once a connection is established.

Most users don’t realize that many of their phone apps, such as WhatsApp, email apps and calendar apps, are offline-first applications, or how much these apps have improved over the years.

Sverre explained his reasons for developing offline-first (or local-first) applications. Latency can be solved by optimistic mutations and local storage techniques. Reliability is crucial because the Internet can be unreliable, with issues such as dropped packets, latency spikes and routing errors. Collaboration features can leverage offline-first techniques and data models. He said that developers “gain the reliability of offline-first without sacrificing the usability of real time.” Finally, development velocity improves by removing complexity from software development.
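Optimistic mutations with local storage, one of the techniques Sverre mentioned, can be sketched as a local store plus an outbox: writes apply locally at once and are queued for the server. This Java sketch is hypothetical; the server is modeled as a function that returns false while offline, and conflict resolution is left out.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.HashMap;
import java.util.Map;
import java.util.function.BiPredicate;

/** Hypothetical local-first store: writes apply locally at once and sync later. */
class LocalFirstStore {
    record Mutation(String key, String value) {}

    private final Map<String, String> local = new HashMap<>();
    private final Deque<Mutation> outbox = new ArrayDeque<>();

    /** Optimistic mutation: the UI can read the new value immediately, with no spinner. */
    void put(String key, String value) {
        local.put(key, value);
        outbox.add(new Mutation(key, value));
    }

    String get(String key) { return local.get(key); }

    int pending() { return outbox.size(); }

    /** Sends queued mutations in order; stops at the first failure and keeps the rest queued. */
    int sync(BiPredicate<String, String> server) {
        int sent = 0;
        while (!outbox.isEmpty()) {
            Mutation m = outbox.peek();
            if (!server.test(m.key(), m.value())) break; // offline or error: retry on the next sync
            outbox.poll();
            sent++;
        }
        return sent;
    }

    public static void main(String[] args) {
        LocalFirstStore store = new LocalFirstStore();
        store.put("todo:1", "Buy milk");
        store.put("todo:2", "Book flights");
        System.out.println(store.get("todo:1"));         // Buy milk (no network needed)
        System.out.println(store.sync((k, v) -> false)); // 0, still offline
        System.out.println(store.sync((k, v) -> true));  // 2, back online
        System.out.println(store.pending());             // 0
    }
}
```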

Case studies included: WhatsApp, the cross-platform, centralized instant messaging and voice-over-IP service, uses techniques such as end-to-end encryption, on-device messages and media, message drafts and background synchronization; Figma, a collaborative interface design tool, uses techniques such as real-time collaborative editing, a Conflict-Free Replicated Data Type (CRDT) based data model, and offline editing; and Linear, an alternative to JIRA, uses techniques such as faster development velocity, offline editing and real-time synchronization.
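CRDTs, such as the one underlying Figma's data model according to the talk, let replicas edit independently and still converge when they merge. The grow-only counter (G-Counter) below is one of the simplest textbook CRDTs, shown in Java to illustrate the merge property; it is not Figma's actual data structure.

```java
import java.util.HashMap;
import java.util.Map;

/** Grow-only counter (G-Counter), one of the simplest state-based CRDTs. */
class GCounter {
    private final String replicaId;
    private final Map<String, Long> counts = new HashMap<>();

    GCounter(String replicaId) { this.replicaId = replicaId; }

    /** Each replica only ever increments its own slot, so there are no write conflicts. */
    void increment() { counts.merge(replicaId, 1L, Long::sum); }

    long value() { return counts.values().stream().mapToLong(Long::longValue).sum(); }

    /** Merge takes the per-replica maximum: commutative, associative and idempotent. */
    void merge(GCounter other) {
        other.counts.forEach((id, n) -> counts.merge(id, n, Long::max));
    }

    public static void main(String[] args) {
        GCounter phone = new GCounter("phone");
        GCounter laptop = new GCounter("laptop");
        phone.increment();
        phone.increment();  // edits made offline on the phone
        laptop.increment(); // concurrent edit on the laptop
        phone.merge(laptop);
        laptop.merge(phone);
        laptop.merge(phone); // merging again changes nothing
        System.out.println(phone.value() + " " + laptop.value()); // 3 3
    }
}
```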

Sverre then demonstrated the stages for converting a normal application to an offline-first application. However, trade-offs to consider for offline-first application development include conflict resolution, eventual consistency, device storage, access control and application upgrades. He provided solutions to these issues and maintained that, despite these tradeoffs, this is better than an application displaying the “loading spinner.”

MMS • Frank Yu

Transcript

Yu: We’ll be talking about leveraging determinism, or in more words, trusting deterministic execution to stabilize, scale, and simplify systems. My name is Frank. I’m on the engineering team that built and runs the Coinbase Derivatives Exchange. I’ve got two things I’d like you to come away with. First, I’ll try to make the case that if you’ve got important logic, make it deterministic. You’ll be happy you did. If you’ve already got deterministic logic, don’t be afraid to rerun it anywhere in your stack, in production, even, for efficiency and profit. As a disclaimer, we’ll largely be going over practices directly from our experience running financial exchanges. We don’t rigorously prove any of the concepts we present here. Any references to the canon or any prior art we’re bringing together are for inspiration, and as a case study for what we’ve done. Just remember, n equals 1 here; it’s all anecdotal.

About Us and Our Problems

Let’s talk about us and our problems. We built and run what is now the Coinbase Derivatives Exchange, formerly a startup called FairX, which built a CFTC-regulated trading exchange for folks to trade financial contracts with each other. You can submit us an order to buy or sell something for some price, and we’ll let you and the market know asynchronously when you submit that order, and when you trade, and what price you get. Think of exchanges as financial infrastructure. Everyone who participates in finance relies on us to provide the real prices of things traded on our platform, and relies on us to fairly assign trades between folks who want to enter into financial transactions. The first thing to think about when running an exchange is mission-critical code. A bug in our system could result in catastrophic problems for both ourselves and all of the clients on our platform, because we’re dealing directly with up to millions of dollars of client funds. Potential losses we risk are multiple orders of magnitude more than any revenue we might make from a transaction. In a previous life running a currency exchange, our clients would trust us with millions of dollars for each request, and maybe we’d be happy to earn 0.00001%, give or take a zero on that transaction. Business logic has got to be rock solid. Exchanges absolutely have to be correct.

The second thing about running a financial exchange is that many of our partners expect us to operate as close to instantaneously as possible, and also to be as consistent as possible. If we’re the ground that folks stand on for financial transactions, we’ve got to be predictable and fast. Specifically, we expect our 99th percentile response latencies to stay comfortably under a millisecond. Finally, we’ve got to keep records. We need to allow regulators, customers, and debuggers like me to reconstruct the state of the market or the system exactly as it was at any given microsecond, for the last seven years. All of this adds up to a super high bar of quality on our core business logic. You might ask, if the risks are so high, correctness bugs are catastrophic, and performance bugs get noticed instantly, how do we actually add features to the system in any reasonable timeframe? We’ve got to make sure our core logic stays simple. In principle, we want to minimize the number of arrows and boxes in our architecture diagram. We don’t want a lot of services that can fail independently in a transaction and leave us with a partially failed system. When we handle financial transactions, we handle them atomically and transactionally. We also avoid concurrency in our logic at all costs. In the last 10 to 20 years, multiprocessor systems have offered programs an interesting deal with the devil. Run stuff in parallel and you get more throughput and performance. You’ve got multiple CPUs, so why not assign work to all of them at the same time? You just have to make sure you don’t have any race conditions: that your logic is correct for every possible interleaving of every line of code in your concurrent system. Then, if you’re trying to reproduce a bug by running code over again, you can’t count on it happening, because you’ve left things up to the OS scheduler.
Here, I claim that deterministic execution will get you simplicity, and that it can also get you more performance and throughput than concurrent logic.

Deterministic Execution

Let’s describe deterministic execution a bit. You can largely consider a traditional program, with its variables set to various values and its program counter set to some line of code, as a state machine. The idea is this: inputs come into the system, come into contact with the state of your system, and may change it. Inputs may also result in some new data or output getting created. In practice, we’ll talk about inputs as requests into the system, such as API calls, and the changed state given a request as the new state of the system. For example, if Alice deposits $1,000 into her account, then the new state of the system would reflect an additional $1,000 in her balance. We also talk about the output of a system as events. For example, when Alice submits an order, we have to asynchronously send a push notification to the rest of the market, informing them of the new bid for a given thing at some given price. You’ll hear me use inputs and requests, and outputs and events, interchangeably here. We’re drawing out that equivalency. A system is deterministic if, given an exact set of inputs in the same order, we get the exact same state and outputs. In practice, if you take all of your requests, sequence them all into a log, and then apply determinism here, [inaudible 00:07:01], we’ll effectively get replicated state and output events. Again, if we sequence Alice first depositing $1,000, then submitting an order to buy something using that $1,000, our deterministic system should never have Alice using money for an order before she deposited it, even if I rerun my service 1,000 times at any point.
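To make the state machine framing concrete, here is a minimal sketch, assuming a toy ledger rather than the exchange’s real logic. `Deposit`, `BuyOrder`, `Ledger`, and `replay` are hypothetical names. The point it shows is that `replay` is a pure function of the ordered log: the same log always yields the same balances and the same events, and a different order can yield a different (but still reproducible) outcome.

```python
from dataclasses import dataclass, field

# Hypothetical request types for illustration; amounts are whole dollars so the arithmetic is exact.
@dataclass(frozen=True)
class Deposit:
    account: str
    amount: int

@dataclass(frozen=True)
class BuyOrder:
    account: str
    cost: int

@dataclass
class Ledger:
    balances: dict = field(default_factory=dict)

    def apply(self, request):
        """Apply one request: update state and return the output events it produces."""
        if isinstance(request, Deposit):
            self.balances[request.account] = self.balances.get(request.account, 0) + request.amount
            return [("deposited", request.account, request.amount)]
        if isinstance(request, BuyOrder):
            balance = self.balances.get(request.account, 0)
            if balance < request.cost:
                return [("rejected", request.account, "insufficient funds")]
            self.balances[request.account] = balance - request.cost
            return [("order_accepted", request.account, request.cost)]
        raise TypeError(f"unknown request: {request!r}")

def replay(log):
    """Run an ordered request log through a fresh state machine; return (state, events)."""
    ledger = Ledger()
    events = [event for request in log for event in ledger.apply(request)]
    return ledger.balances, events

log = [Deposit("alice", 1000), BuyOrder("alice", 1000)]
state, events = replay(log)
# Same log in the same order: identical state and events on every rerun.
assert all(replay(log) == (state, events) for _ in range(1000))
```

Because nothing in `apply` reads a clock, a random source, or shared mutable state outside the ledger, any process holding a copy of `log` reconstructs exactly the same result.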

Benefits of Determinism

For some of you, replicated state machines might ring a bell, and you might think about consensus. This is a segue to some of the benefits of having a replicated state machine through deterministic execution. The first one here is high availability. We all have high availability concerns these days. Let’s say I’ve got my service running in production, and then some hardware failure crashes my service. Now I’m concerned. I’ve got an outage. I’m getting paged in the middle of the night. I’ve got to restart stuff and recover lost data. It’s no fun. It wasn’t even necessarily my code that caused that hardware failure. If I have a deterministic system, I can replicate my log of incoming requests to other services running the same logic, and they’ll have the exact same state. If, inevitably, my service goes down through no fault of my code, I’m still fine. I can pick a new primary service. Good thing we have redundancy through determinism. That said, replicating every request into our system is a ton of work. The Raft algorithm says, don’t even start changing your system until you’re sure that the request has been replicated to a majority of your machines. If we’re going to operate at microsecond timescales, we’ll need an implementation of consensus that’s really fast. Thanks to Todd Montgomery and Martin Thompson of Real Logic, we’ve got a very fast open source Raft cluster that we can put our deterministic logic on and get all these nice high availability properties with negligible performance overhead. Hopefully, the availability benefits of determinism are clear.
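The “replicated to a majority” rule can be sketched in a few lines. This is a simplified illustration, not Raft itself and not the open source cluster mentioned above. `majority_commit_index` and `apply_committed` are hypothetical helpers, and real Raft additionally requires the entry at the commit index to come from the leader’s current term. The sketch shows why failover is safe: every node applies only the committed prefix through the same deterministic logic, so a follower holding that prefix (Raft’s election rules ensure a new leader does) lands in the same state.

```python
def majority_commit_index(match_index):
    """Highest log index known to be stored on a majority of nodes.

    match_index holds, for every node including the leader, how many log
    entries that node has persisted. Simplified: real Raft also requires the
    entry at that index to come from the leader's current term.
    """
    ranked = sorted(match_index, reverse=True)
    return ranked[len(ranked) // 2]

def apply_committed(log, commit_index):
    """Fold only the committed prefix through deterministic logic (a running balance)."""
    balance = 0
    for amount in log[:commit_index]:
        balance += amount
    return balance

# Leader has 5 entries, follower A has all 5, follower B has only 3.
leader_log = [1000, -200, 50, -300, 75]
follower_a = leader_log[:5]
follower_b = leader_log[:3]
commit = majority_commit_index([len(leader_log), len(follower_a), len(follower_b)])

# If the leader crashes, follower A can take over with an identical state.
leader_state = apply_committed(leader_log, commit)
new_primary_state = apply_committed(follower_a, commit)
```

For a cluster of k nodes, the value at position k // 2 of the descending list is held by at least k // 2 + 1 nodes, which is exactly a majority.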

Let’s talk performance. Another nice thing when your logic is deterministic and serialized is that you can run everything in one thread and make use of the consistency and throughput you get from single-threaded execution. One barrier to consistent performance is giving up control to the operating system scheduler and hoping that you won’t get context-switched out when you start handling a client request. Again, in the vein of keeping things simple, let’s tell the operating system to pin our business logic thread directly to a physical core or a hyperthreaded core, so our logic isn’t preempted for anything else, and remove the complication of having to think about OS scheduling when developing for performance.
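On Linux, pinning is a single system call. Python’s `os.sched_setaffinity` exposes it, so here is a minimal sketch using that real, Linux-only API. Python is only for illustration: a microsecond-latency system would do this from its own runtime, and keeping *other* work off the chosen core usually also needs OS configuration (for example, the Linux `isolcpus` boot parameter), which this sketch does not cover. `pin_to_core` and `run_business_loop` are hypothetical names.

```python
import os
from typing import Optional, Set

def pin_to_core(core: int) -> Optional[Set[int]]:
    """Restrict the calling thread to a single CPU core (Linux only).

    Returns the previous affinity set so the caller can restore it, or None
    when the platform does not expose sched_setaffinity (e.g. macOS, Windows).
    """
    if not hasattr(os, "sched_setaffinity"):
        return None
    previous = os.sched_getaffinity(0)  # pid 0 means "the caller"
    os.sched_setaffinity(0, {core})
    return previous

def run_business_loop(requests, handle):
    """Handle every request strictly in order on the current (pinned) thread."""
    return [handle(request) for request in requests]
```

A typical use is to pin once at startup, before entering the loop, and never yield the thread to blocking I/O afterwards.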

Changing tack, it should go without saying that a deterministic program is easier to test than a non-deterministic program. That’s especially true with concurrency, where the space of tests you need to write grows with the number of possible interleavings of the lines of code running in parallel. There are some other nice things you can do if you’ve got one deterministic monolith that handles every request as a transaction. Each of your user stories basically turns into a test case. You can make use of time travel to forward your system to a very specific date, so you don’t crash or have issues every leap year or something like that. Despite all that testing, something can still seem weird with the system. Let’s say I’m in prod again, or staging, or anywhere, and something weird starts happening on my service. If I’m in prod, I’m concerned. I don’t know what’s going on. I wish I had added log lines for this case. I hope this doesn’t impact too many people. If I’ve got deterministic logic, when the weird behavior starts, I can copy the request log and run it on my laptop. When the problem comes up, maybe I’m running the system in a debugger. Now I know exactly why my program got into that weird state, because I’m inspecting all the state in memory and figuring out the problem. I’ve still got some weird behavior in prod, but at least I have a plan for how to deal with it. This ability to take a system running in production and rerun it, patched with extra log lines, in your development environment is a superpower. It also can’t be overstated how useful it is for finding pathological performance situations or tracing bugs. If your system is deterministic like this, you rarely say “it works on my machine” again for logic bugs.
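To put a number on the concurrency testing burden: for two threads with n and m atomic steps, the number of distinct interleavings is the binomial coefficient C(n + m, n), which grows much faster than n·m. The sketch below enumerates the interleavings by brute force and checks the count against `math.comb`. `interleavings` is a hypothetical helper, and treating each line as one atomic step is itself a simplifying assumption.

```python
from itertools import combinations
from math import comb

def interleavings(a, b):
    """Yield every merge of sequences a and b that preserves each one's internal order."""
    total = len(a) + len(b)
    for positions in combinations(range(total), len(a)):
        chosen = set(positions)            # slots taken by thread a's steps
        merged, ai, bi = [], iter(a), iter(b)
        for slot in range(total):
            merged.append(next(ai) if slot in chosen else next(bi))
        yield merged

runs = list(interleavings(["a1", "a2", "a3"], ["b1", "b2", "b3"]))
```

Two 3-step threads already have 20 interleavings, and two 10-step threads have C(20, 10) = 184,756, each a potential test case. A deterministic single-threaded design has exactly one.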

Business Logic

All this stuff: great, sold. We’ve built this. What you’re seeing on the screen here is our business logic running in Raft consensus, in blue, surrounded by gateways or API endpoints, like an HTTP JSON endpoint or special message endpoints that you send finance messages to; those are all in green. Here’s a simple message flow to illustrate our system. Clients send us requests through our gateways, like Alice wanting to buy 2 nano-Bitcoin contracts for $20,000. That request gets forwarded to our business logic service, the Raft monolith, and we output events via multicast to clients that connect to our bare metal data center. This is where the latency-sensitive nature of our system impacts our architecture. For the non-latency-sensitive, high-throughput, or cold storage use cases, we send events over some transport to the cloud, then forward them to regulators sitting behind a gateway, or to a database, so we can keep track of what happened long term. In other stacks, you might think of this big, red pipe as something like Kafka or a message queue. In general, you’ve got requests coming in to your business logic in blue, and then events coming out of the system, sometimes over a big transport to another region.

Here’s one thing we noticed. At this point in time, we’re in production. We’re a startup, and we noticed something interesting about our requests. That request, the tuple of Alice buying 2 Bitcoin contracts, is really 5 pieces of data. It’s maybe 100 bytes coming in, but what comes out after we handle that API call might be huge. What if Alice successfully buys 2 Bitcoin contracts, and let’s say she trades with 2 different people? What are we sending over that big, red pipe? First, we’re sending the event that we successfully handled Alice’s order. Then, let’s say she trades 1 Bitcoin contract with Bob. We tell Alice that she bought a Bitcoin contract from Bob. We tell Bob that he sold a Bitcoin contract to Alice. If Alice also trades with Charlie in that request, we’ve got to tell Alice that she traded with Charlie, and we’ve got to tell Charlie that they sold to Alice. One request to buy 2 Bitcoin contracts suddenly becomes a mouthful of events once it goes through our logic. Here’s the kicker. We’re sending events from our bare metal data center over to AWS. We found that AWS’s compute charges were fairly reasonable, but the network ingress and egress costs were nuts: we were being charged for our data bandwidth. At the time we were a starving startup, or at least burn-rate conscious, and we weren’t super happy about lighting money on fire sending all this data. So the big question here: can we optimize? What’s our problem?
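The fan-out described above can be reproduced with a toy matching engine. This is a minimal sketch, assuming simple price-time priority, and it is not Coinbase’s matching logic. `RestingSell` and `submit_buy` are hypothetical names. One buy that trades against k resting orders produces 1 + 2k events: an acknowledgment, plus a notice to each side of each trade.

```python
from dataclasses import dataclass

@dataclass
class RestingSell:
    """A resting sell order on the book (hypothetical shape for illustration)."""
    trader: str
    qty: int
    price: int

def submit_buy(buyer, qty, limit, book):
    """Match a buy against resting sells, best price first, and return every event it causes."""
    events = [("order_accepted", buyer, qty, limit)]
    # sorted() is stable, so orders at the same price keep their time priority.
    for ask in sorted(book, key=lambda order: order.price):
        if qty == 0 or ask.price > limit:
            break
        fill = min(qty, ask.qty)
        qty -= fill
        ask.qty -= fill
        events.append(("bought", buyer, ask.trader, fill, ask.price))   # tell the buyer
        events.append(("sold", ask.trader, buyer, fill, ask.price))     # tell the seller
    book[:] = [order for order in book if order.qty > 0]  # drop fully filled orders
    return events

book = [RestingSell("bob", 1, 20_000), RestingSell("charlie", 1, 20_000)]
events = submit_buy("alice", 2, 20_000, book)
```

Alice’s one small request becomes five events here; an order that sweeps a deep book multiplies that further.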

What we’re saying here is that in our situation, and in many situations in general, business logic is fast and cheap, but reading and writing the data is what’s actually slow and expensive. How does the fact that our business logic is deterministic help here? Here’s a proposal. When handling requests, instead of sending a ton of events over this expensive network connection and lighting money on fire, we replicate only the request over the network to our downstream services. On its own, that doesn’t really work: our downstream services want to see the impacts of that request, not the request itself. So let’s just rerun our business logic on the same machines as our downstream services, and send our big red blasts of events over IPC, or interprocess communication. That effectively means we’ve now elided all the network costs of egress events: way less network usage, and less burning money.

Let’s catch our breath here. The first time, we had to blink a few times. We’re saying that instead of sending fat events everywhere from one little request message, we can just send the requests everywhere? How does this work? It works because everything is deterministic. Our monolith is just a function that turns requests into their output events. It does the same thing every time, as long as the ordering of requests is the same. If we didn’t have multicast in the data center, if our data center on the left here was actually just in the cloud, we could apply the same trick. We could replicate requests to a monolith that is colocated with our gateways, and send all the events through IPC. What this opens up is a whole axis of tradeoffs, where you can now replicate compute instead of data in some cases.
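“Replicate compute instead of data” fits in a short sketch: the downstream node receives only the serialized request log, reruns the same deterministic function locally, and gets identical events without any of them crossing the network. The toy matching rules (market orders only; unfilled buy quantity is simply dropped), JSON as the wire format, and the names `run_monolith` and `wire_bytes` are all assumptions for illustration.

```python
import json

def run_monolith(request_log):
    """Hypothetical deterministic logic: an ordered request log in, output events out."""
    asks = []      # resting sell orders as [trader, qty], in time priority
    events = []
    for req in request_log:
        events.append({"event": "accepted", **req})
        if req["side"] == "sell":
            asks.append([req["trader"], req["qty"]])
            continue
        remaining = req["qty"]  # buys match immediately; any unfilled remainder is dropped
        for ask in asks:
            fill = min(remaining, ask[1])
            if fill:
                remaining -= fill
                ask[1] -= fill
                events.append({"event": "bought", "trader": req["trader"], "from": ask[0], "qty": fill})
                events.append({"event": "sold", "trader": ask[0], "to": req["trader"], "qty": fill})
        asks = [a for a in asks if a[1] > 0]
    return events

def wire_bytes(messages):
    """Bytes needed to ship these messages as JSON."""
    return sum(len(json.dumps(m).encode()) for m in messages)

log = [
    {"trader": "bob", "side": "sell", "qty": 1},
    {"trader": "charlie", "side": "sell", "qty": 1},
    {"trader": "alice", "side": "buy", "qty": 2},
]
upstream_events = run_monolith(log)
received_log = json.loads(json.dumps(log))         # only the requests cross the network
downstream_events = run_monolith(received_log)     # rerun locally, then fan out over IPC
```

The tradeoff is CPU on every replica in exchange for shipping `wire_bytes(log)` instead of `wire_bytes(upstream_events)`. That is worth it when, as in the talk, the business logic is cheap and the bandwidth is not.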

Replay Logic to Scale and Stabilize

Why does this work? Here’s what we noticed about inputs and requests: they’re often smaller than outputs. When we receive an order or an API call, one of the first things we do when we handle it is stick an ID on it, because we want to identify the state of that request. We’re sticking a bunch of state on it. We’re contextualizing that request with our own service’s business context. Here’s another interesting thing we noticed: inputs can be more consistent than our output events. You all know about 99th percentile latencies. How often do we think about our 99th percentile network load given some request? Here’s an example. Let’s say Alice sends us one request to sell 1,000 Bitcoin contracts at any price, because she just wants to cash out. Those 100 bytes coming into your system are going to cause possibly thousands of events, because she’s going to trade with the entire market. What you get here is a ton of events coming out of one request. If you’ve got an event-driven system, you’ve got a positive feedback loop in there somewhere, where you’re creating more events from handling events. This is how you get what’s called a thundering herd. This is how massive autoscaling systems, in data centers and in the cloud, can go down. Your network buffers completely fill up because you’re blasting tons of events everywhere. Your services time out, and they can’t keep health checks up, or things like that.

Why are we saying here that input requests can be more predictable, and outputs can’t be? The size and rate of inputs sequenced into your log can be validated, and inputs can be rejected. Alice sending one API request is actually not a lot of network usage. If a client had a bug and was just spamming us with tons of requests, we could validate their input rate with rate limits and throttles. Your API gateways and load balancers should actually be doing this for you. As for the size of inputs, if there’s malformed data, you can validate that before it gets into your business logic. It’s not quite so easy with outputs and events. An event is, in some sense, a fact: a change in your system because of some request. You don’t reject the effect of a request. You can, but it’s pretty difficult. Also, it’s often unclear when a request might cause a landslide of events to come out. Those are what you would call pathological cases. You’ve got to analyze them to be able to predict and mitigate them. If your entire network utilization is just your input rate, validated by all of your external endpoints, then you’ll get pretty consistent network utilization for all of your replication. You can protect against a thundering herd by not sending blasts of events. Replaying your logs in production is how you’ll stabilize your system. Then, because it means less network utilization, it’ll also help you scale.
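Here is a minimal sketch of the gateway-side input validation described above, assuming a token bucket for the rate limit and a fixed maximum payload size. The talk doesn’t say which limiter the gateways use: the token bucket is one common technique, swapped in here. `TokenBucket`, `admit`, and `MAX_REQUEST_BYTES` are hypothetical. Time is passed in explicitly so the check itself stays deterministic and testable.

```python
from dataclasses import dataclass

MAX_REQUEST_BYTES = 512  # hypothetical size band for one API request

@dataclass
class TokenBucket:
    rate: float       # tokens added per second
    capacity: float   # maximum burst size
    tokens: float     # tokens currently available
    last: float       # time of the last refill, in seconds

    def allow(self, now: float) -> bool:
        """Refill for elapsed time, then spend one token if available."""
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

def admit(payload: bytes, bucket: TokenBucket, now: float) -> bool:
    """Reject oversized or over-rate requests before they are sequenced into the log."""
    return len(payload) <= MAX_REQUEST_BYTES and bucket.allow(now)
```

With one bucket per client (for example, a dict keyed by client ID), the worst-case bytes entering the log are bounded by the number of clients × capacity × `MAX_REQUEST_BYTES` per burst, which is what makes replicating inputs predictable.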

Here’s a third benefit. When you replicate compute, you can also simplify your downstream code. All those blue boxes you saw on that diagram, monolith, monolith, monolith, are all the same code. It’s deduplicated. That means you don’t have a lot of endpoints where you might be reimplementing your business logic just so you can materialize it or get a better view of it. That said, deduplication is an optimization here; it’s not architecture. We’re not offering up our firstborn at the altar of DRY, but it’s still pretty cool. Let’s take a look at it in action. Again, here’s my system, with monoliths everywhere. A common use case for any given system is accepting user queries. If Alice wants to get her active orders, she’ll send the request through one of our endpoints. Traditionally, you would forward that query to your datastore; in our case, that’s the Raft monolith. The datastore responds with the data you’re asking for, so blue coming in, red coming out, and that’s why these arrows are purple.

These requests might come in from all over the world. You might have clients in different regions, so there might be another gateway talking to your datastore to fetch data. We try to solve some of the scaling problems here by introducing caches in the middle. That means you still have to keep that cache up to date. What are you doing? Are you sending data to that cache? Are you doing some lazy LRU-type thing? It’s all just a lot of complication. There’s a fairly obvious optimization to be made, given that we’ve got monoliths everywhere. Instead of going to our main piece of business logic, our main datastore, we can make an IPC call to the local monolith running alongside our endpoints, get the data back super quickly, and get fantastic query performance, because everything is localized. We can remove a box from our architecture diagram.

What if we have no choice? We’ve built this great system, we got monoliths everywhere, and then a lot of new features keep getting added: new query opportunities, new gateways, new endpoints, new functionality. Alice wants to go get her balances, and a bunch of other things. You’ve got all these requests coming in from the outside. To scale, we might need a cache again. A really great cache, in this case, is actually our monolith. What we can do is, instead of replicating events, or updating maps, key-value stores, we can actually just replicate that same sequence log of requests. Now that monolith can actually answer queries over the network to all of these gateways who don’t necessarily need to be running the monolith in the same machine. Here’s an example of maybe going a little more in the middle of the spectrum between replicating all the compute and replicating none of the compute.
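A query-serving replica can be sketched as another consumer of the same sequenced log. This is a minimal illustration under assumptions: `ReadReplica` is hypothetical, requests are simple tuples, and the gap check stands in for whatever recovery a real replica would do (such as requesting a replay). The replica answers reads from its own memory, and `applied_seq` tells a gateway exactly how fresh an answer is.

```python
from collections import defaultdict

class ReadReplica:
    """Hypothetical query-serving replica: applies the sequenced log, answers reads locally."""

    def __init__(self):
        self.applied_seq = 0
        self.open_orders = defaultdict(dict)  # trader -> {order_id: qty}

    def apply(self, seq, request):
        """Apply log entries strictly in sequence; a gap means we missed something."""
        if seq != self.applied_seq + 1:
            raise ValueError(f"gap in log: expected {self.applied_seq + 1}, got {seq}")
        kind, trader, order_id, qty = request
        if kind == "place":
            self.open_orders[trader][order_id] = qty
        elif kind == "cancel":
            self.open_orders[trader].pop(order_id, None)
        self.applied_seq = seq

    def active_orders(self, trader):
        """Read-only query: never touches the leader or the network."""
        return dict(self.open_orders.get(trader, {}))

log = [
    (1, ("place", "alice", "o1", 2)),
    (2, ("place", "alice", "o2", 1)),
    (3, ("cancel", "alice", "o1", 0)),
]
replica = ReadReplica()
for seq, request in log:
    replica.apply(seq, request)
```

Nothing here is a cache to invalidate. The replica is the same kind of state machine as the leader, just fed the same log.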

Challenges and Considerations

What are some things we’ve got to think about when we’ve built a system like this? Here’s a listicle. One, make sure you replicate well-tested code, or else your bugs will replicate too. This architecture assumes that you’ve got battle-tested, hardened logic that’s really important to your business, and that you’ll actually test it and make sure it is stable. If you don’t, you’ll just have bugs everywhere. Two, when you’ve got a deterministic system, you’ve got to make sure that all of your replaying services are actually deterministic; they cannot drift. A common source of drift is changing your business logic. Three, you’ve got to respect old behavior when replaying inputs. Let’s say on Thursday you had some undesirable behavior in your system while handling some requests, and then you fixed it on Friday and deployed it. If your downstream monoliths are replaying logs from Thursday, they need to reproduce that incorrect, undesirable behavior until the point on Friday where the new behavior is actually enabled. That means deploying code should not change the behavior of your system: you’ve got to decouple the two and enable behavior changes with an API request to your business logic.
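“Enable behavior changes with an API request, not a deploy” can be sketched as a flag that only a sequenced request can flip. The fee schedule and the `("enable", "fee_fix")` request are hypothetical. Because the switch lives in the log, new code replaying Thursday’s entries still produces Thursday’s (undesirable) fees, and the fix takes effect at exactly the same log position on every replica.

```python
def run(log):
    """Replay a log; new behavior activates only when a sequenced request enables it."""
    fee_fix_enabled = False  # flipped by a request in the log, never by a deploy
    fees = []
    for request in log:
        if request == ("enable", "fee_fix"):
            fee_fix_enabled = True
        elif request[0] == "trade":
            notional = request[1]
            if fee_fix_enabled:
                fees.append(notional // 1000)  # hypothetical corrected fee: 0.1%
            else:
                fees.append(notional // 100)   # hypothetical old (undesirable) fee: 1%
    return fees

thursday = [("trade", 50_000), ("trade", 20_000)]
friday = thursday + [("enable", "fee_fix"), ("trade", 50_000)]
```

Both versions of the behavior have to stay in the code for as long as logs from before the switch may still be replayed.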

Four, we often need randomness in systems. It gives us uniform distributions, fairness, load balancing, and maybe some security benefits. As it turns out, you can do randomness deterministically. There are tons of deterministic pseudorandom algorithms out there that you pass a seed into. Then all of your systems that are replicating the input log get the same nicely distributed, random-looking output, and it’s all exactly the same. Five, and this one’s fairly subtle: if you’ve got a single-threaded deterministic system, then any long task is going to block every other task in your input log. This is called the convoy effect. If you’ve got requests that might explode into more work within a transaction, consider breaking that computation up into multiple stages, allowing more request messages to come in while you track the state of that computation. This is, of course, a tradeoff between more consistent performance, by dealing with the convoy effect, and the complexity of managing additional state, because you’ve now split your computation into multiple stages.
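Deterministic randomness only needs a PRNG whose algorithm you control and a seed that comes from the log (for example, written by the sequencer or derived from a request’s sequence number), never from `os.urandom` or the clock. The sketch below uses SplitMix64, a small, well-known 64-bit generator, swapped in for illustration; the talk doesn’t name an algorithm. Spelling the algorithm out, rather than relying on a library’s internals, keeps replicas identical across library versions and languages.

```python
MASK64 = (1 << 64) - 1

class SplitMix64:
    """Small, portable 64-bit PRNG: identical output on every replica given the same seed."""

    def __init__(self, seed: int):
        self.state = seed & MASK64

    def next_u64(self) -> int:
        self.state = (self.state + 0x9E3779B97F4A7C15) & MASK64
        z = self.state
        z = ((z ^ (z >> 30)) * 0xBF58476D1CE4E5B9) & MASK64
        z = ((z ^ (z >> 27)) * 0x94D049BB133111EB) & MASK64
        return z ^ (z >> 31)

    def below(self, n: int) -> int:
        """Value in [0, n) via modulo reduction (slightly biased; fine for a sketch)."""
        return self.next_u64() % n

# Two replicas seeded from the same (hypothetical) log field draw the same sequence.
replica_a, replica_b = SplitMix64(42), SplitMix64(42)
draws_a = [replica_a.below(100) for _ in range(5)]
draws_b = [replica_b.below(100) for _ in range(5)]
```

This generator is not cryptographically secure, and every replica can reproduce its output, so it suits fairness and load spreading rather than secrets.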

Also, if you want a fast Raft service, everything that you’re persisting between requests must fit in memory. That said, there’s a lot of commodity RAM out there now; you’d be surprised. You can fit quite a lot of customer data into hundreds of gigabytes of RAM, especially if you’re judicious about how you store it. If you’re storing data in fixed-length fields, and you’re avoiding arbitrarily nested and variable-length data, you can easily store millions of accounts’ worth of information: each 64-bit integer field costs only about 8 megabytes per million accounts. Also, you’d be very surprised what fast single-threaded code running on one core can get done. If you’re not writing to disk with each message and you’re not IO-bound, you can keep the processing time of your API calls perhaps even under a microsecond. That gets you a throughput of a million messages per second, all on one box. Really think about that when you come to the decision of whether to shard, because sharding introduces tons of complexity into your system.
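The memory arithmetic is easy to check with fixed-width records. This sketch assumes a hypothetical four-field account record packed with Python’s `struct` module. Each 64-bit field is 8 bytes, so a million accounts cost 8 MB (about 7.6 MiB) per field, and a 32-byte record for five million accounts fits in roughly 153 MiB.

```python
import struct

# Hypothetical fixed-width account record: account id, cash balance, position, open orders.
# "<qqqq" = four little-endian signed 64-bit integers, no padding.
ACCOUNT = struct.Struct("<qqqq")

def footprint_bytes(num_accounts, record=ACCOUNT):
    """Bytes needed to hold num_accounts fixed-width records back to back."""
    return num_accounts * record.size

# Records live in one preallocated buffer; record i starts at i * ACCOUNT.size.
accounts = bytearray(footprint_bytes(3))
ACCOUNT.pack_into(accounts, 1 * ACCOUNT.size, 1, 1_000, 2, 0)
print(ACCOUNT.unpack_from(accounts, 1 * ACCOUNT.size))  # (1, 1000, 2, 0)
print(footprint_bytes(5_000_000) / 2**20)               # 152.587890625 (MiB)
```

Fixed offsets also mean no pointer chasing or allocation on the hot path, which helps the sub-microsecond processing times mentioned above.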

Nine, keep your 99s and 99.9s down. With a single-threaded service, the response time for everything is affected by your slowest requests. When running in this manner, if you want consistent performance, you’ve really got to apply some craft to avoid pathological performance cases, and to make sure you’re not slowing down all the other requests by iterating through too many things or something like that. Last, our inputs are more consistent if we validate them to a band of acceptable sizes. You’ve got to make sure that your log and your monolith are protected from any client that suddenly goes nuts, because a single haywire client can easily cause a denial of service for your entire system if you’re not applying rate throttles.
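A small queueing simulation shows why one slow request hurts the tail so badly on a single thread. The workload is a hypothetical one: a request arrives every 2 µs and takes 1 µs, except one pathological request that takes 500 µs. This illustrates FIFO head-of-line blocking; it is not a model of the authors’ system. `fifo_latencies` and `percentile` are hypothetical helpers.

```python
def fifo_latencies(arrivals, service_times):
    """Response time of each request when one thread handles requests strictly in arrival order."""
    clock, latencies = 0, []
    for arrive, service in zip(arrivals, service_times):
        start = max(clock, arrive)  # wait for everything ahead of us to finish
        clock = start + service
        latencies.append(clock - arrive)
    return latencies

def percentile(values, p):
    """Nearest-rank percentile for an integer p in (0, 100]."""
    ranked = sorted(values)
    rank = -(-p * len(ranked) // 100)  # ceil(p * n / 100) using integer arithmetic
    return ranked[max(rank, 1) - 1]

n = 1000
arrivals = [2 * i for i in range(n)]   # one request every 2 µs
steady = [1] * n                       # each request takes 1 µs of work
convoy = steady.copy()
convoy[10] = 500                       # one pathological 500 µs request
```

With steady load the p99 is 1 µs. With the single 500 µs request, 499 of the 1,000 requests wait behind it and the p99 jumps to 490 µs, even though every other request is just as cheap as before. Bounding per-request work, or splitting it into stages as in point five, is what keeps those numbers down.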

Simplicity

All this determinism is motivated by a call to simplicity. In general, we say that simplicity gets you stability, performance, and development speed. I’m sure you’ve all seen plenty of talks and had engineering mentors talk about the virtues of keeping it simple. Hopefully, I don’t need to sell simplicity to you all. What I’m hoping I got across is that deterministic execution helps keep things simple. Again, if you’ve got some important logic, make it deterministic. If you’ve got deterministic logic, trust it, replay it, and run it anywhere: in production, on your laptop, all over the place.

Questions and Answers

Montgomery: In order for this to work, you need the same state everywhere, that is, no external state. How do you do that? I suspect having all the market data at hand with prices changing very frequently is too large to hold in your local data storage.

Yu: If you’re going to run everything in a Raft monolith, you are relying on the fact that your performance and everything is basically all in memory, and you rely on a persisted, append-only log of the requests to give you all that state. That means any external state that you want persisted, and that you want access to between your messages, has got to fit in memory. I think we addressed this one in the listicle. Basically, if you avoid variable-length data, so avoiding strings, using lots of fixed-length fields, and sticking to registers and primitives, you can store quite a lot of data in memory in one process. The question is, when do you shard? The complexity of sharding, setting up some broker to demultiplex, and all that, makes it a pretty huge decision. In general, cloud systems tend to reach for sharding and go looking for shard axes maybe a little too quickly, because having fewer boxes really does help a lot. Yes, this does not work if all of your market data does not fit in memory, but your market data should ideally be maybe three or four numbers, so that’s 32 bytes, 64 bytes. You can fit quite a lot of market data in your systems.

Montgomery: I think there’s also one difference that’s very common between a lot of the financial systems that operate in this space and a lot of more consumer-facing systems. You’re not storing names, and you’re not storing a whole bunch of other static data in those systems, because you’re not actually operating on them. How often does someone change their address? Does your business logic at that point need to know the address, for example? You can get a lot more useful information into a system’s memory when you take a look at it and go, “I don’t care about some of this external stuff, because it’s never going to actually change my business logic.” There is an assumption that some information that’s normally available in other systems is usually not available here, because the state is pared down.

Montgomery: How do you keep determinism when the same input is run at different times during replication? On a stateful system, the market changes over time.

Yu: This piggybacks off an important piece of architecture called the sequencer architecture, where you rely on one leader, the leader of your Raft monolith, to timestamp and order the requests that come in. The problem is that a gigantic consensus pool, where you’re expecting to shard outward to get easy reads, can quickly backpressure your leader. Rerunning monoliths outside of Raft consensus, as an optimization, separates the writes, which go to the sequencer, from the reads, which are queries of your state machine. The timestamps stay the same. Say Alice’s request comes in and is timestamped Monday, 11:59 p.m. That request is replicated over to a monolith that may be replaying some historical stuff, maybe days later on Wednesday, but that message still has the timestamp of Monday, 11:59 p.m.

Montgomery: In other words, you can say that time is actually injected into the log, you’re not grabbing time some other way. It’s just a function of the log itself.
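“Time is injected into the log” can be sketched as a sequencer that stamps each request once, plus business logic that reads time only from the entry. `Entry`, `Sequencer`, `business_time`, and `to_us` are hypothetical names. The same injection is what enables the “time travel” testing mentioned earlier: a test supplies a fixed clock, such as a leap day, instead of the wall clock.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

@dataclass(frozen=True)
class Entry:
    seq: int
    timestamp_us: int  # stamped once by the sequencer, then replicated with the request
    payload: str

class Sequencer:
    """Hypothetical leader-side sequencer: assigns order and time to each request."""

    def __init__(self, clock_us):
        self.clock_us = clock_us  # injected clock: wall time in prod, a fixed value in tests
        self.next_seq = 1

    def stamp(self, payload):
        entry = Entry(self.next_seq, self.clock_us(), payload)
        self.next_seq += 1
        return entry

def business_time(entry):
    """Replicas derive 'now' from the log entry, never from their own clock."""
    return datetime.fromtimestamp(entry.timestamp_us / 1_000_000, tz=timezone.utc)

def to_us(dt):
    """Microseconds since the epoch for an aware datetime."""
    return int(dt.timestamp() * 1_000_000)

monday = datetime(2023, 6, 5, 23, 59, tzinfo=timezone.utc)
leader = Sequencer(lambda: to_us(monday))
entry = leader.stamp("alice: buy 2")
# Replayed on Wednesday (or years later), the entry still says Monday 23:59.
```

Because every replica reads the same `timestamp_us`, there is exactly one clock in the system and no clock skew to reconcile.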

Yu: Agreed. If you’re comfortable relying on that one clock, you also avoid things like clock skew. Fundamentally, if you have clocks running on multiple machines, that’s another distributed consensus problem. Ideally, you rely on your solution to the consensus problem to give you the things you need to keep things simple.

Montgomery: This can only work if there is no external state, that is, if services rely purely on compute and nothing else. That’s not really how services exist; services rely on external state. Is there a version of this that works with services with external state?

Yu: Just refining what we mean by external state, I think of that as a source of truth, or something that feeds information into your state machine. Let’s say we’ve got some clients who have programs interacting with our system. Strictly, the state of their programs is external to our system. Then, how does the state in their system come to us? It comes to us in the form of requests. That means if you want any state to end up in your state machine, you make that an explicit part of the state machine’s API. That’s how the state comes in. When you need to reconcile, it’s an application-level protocol, not a data reconciliation protocol. It’s fundamentally business logic that needs to reconcile, so you end up opening APIs to support it: say, passing in a snapshot of the old state, or asking to be replayed the stuff that came before. You’ve already got that, because we’re replaying. That’s how the world actually is: state comes into a state machine through the state machine’s external API. The state machine itself is the source of truth for all the things it is keeping track of and taking snapshots of. Obviously, systems don’t exist in a vacuum; they only have meaning with regard to their interaction with external systems. If you’ve got some external state that is updating, you pass that into your state machine via API calls. You’ve got to expect that your state machine is fast enough to handle all of those changes in external state.

Montgomery: To go back to the idea of an account address, maybe your service does accept changing an address. Could it in a way, execute that by sending that off to something else, and then it comes back in through the log of events that says, this has been updated? In other words, it doesn’t really do it itself, it passes it off to something else, and then it comes back in through the input sequence. Would that work?

Yu: Yes, this works for everything that isn’t secret. I think static data can pass through a system. The throughput of data that comes in, isn’t really read, and just passes back through is some function of your network card, your disk storage, or whatnot. Secrets, I think, don’t really fit. We try not to put secrets into our Raft monolith. That means when a secret changes, the business domain impact of that change should be expressed in some feature. If a password has changed and been invalidated, or if we’ve invalidated some API key, what gets passed into the Raft monolith is not the new password or anything, but rather the fact that this thing is now invalidated. If your business logic says to validate for a valid API key, it should then reject requests that are keyed off that API key. I think this model of computation isn’t well suited to being the source of truth for secrets, or for things that you don’t want spreading everywhere.
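Here is a minimal sketch of “pass in the invalidation, not the secret”, assuming a gateway has already authenticated the caller and stamps only a key ID into each request. `ApiKeyState` and the request shapes are hypothetical. The replicated log, and therefore every replica, only ever sees key IDs and the fact that a key was invalidated. Rejections then follow deterministically from log order.

```python
class ApiKeyState:
    """Hypothetical state machine slice: tracks key IDs and validity, never key material."""

    def __init__(self):
        self.revoked = set()

    def apply(self, request):
        kind, key_id = request[0], request[1]
        if kind == "invalidate_key":
            # The secret itself stays outside the log; only its business impact comes in.
            self.revoked.add(key_id)
            return ("key_invalidated", key_id)
        if kind == "order":
            if key_id in self.revoked:
                return ("rejected", key_id, "api key invalidated")
            return ("accepted", key_id, request[2])
        raise ValueError(f"unknown request kind: {kind}")

sm = ApiKeyState()
before = sm.apply(("order", "key-7", "buy 1"))
sm.apply(("invalidate_key", "key-7"))
after = sm.apply(("order", "key-7", "buy 1"))
```

Any replica replaying this log makes the same accept/reject decisions without ever holding a credential.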

Montgomery: Is it safe to say, then, that those things are really external services, and whether they influence the logic or not, they influence it in such a way that you can send something into the service to change its behavior, like you were saying, invalidating a key? You’re just sending in that invalidation to give its current impact on the state logic. What you need to do then is make sure that you don’t have a lot of leakage of those kinds of things.

Yu: Absolutely.

Montgomery: Are there other places you’ve thought about, since you made the presentation, where you could push your logic that are not obvious? Since you found this approach of pushing logic into other places, are you looking at certain problems a little differently now?

Yu: I think in general you want to separate out functional logic that is mission critical. I think the reason we’ve spent a lot of time treating a big portion of our logic as mission critical is that, when running exchanges, almost all of it is. This is quite a big hammer, and you don’t want everything to look like a nail. Going back to any system, say you’re running something that keeps track of customer funds, or keeps track of an important case where you have highly concurrent access, like scheduling: there, determinism is incredibly useful. We try to localize this particular high-throughput, low-latency approach to only the things that you intend to have very good test coverage for, and that would really benefit from an explicit ordering of all the events that come in. When you have massively parallel systems that are highly independent from each other, you don’t really get as much benefit from having one sequenced, deterministic stream of requests.


MMS • Mykyta Protsenko

Transcript

Protsenko: My name is Mykyta. I work at Netflix. My job is basically making sure that other developers don’t have to stay at work late. I call it a win when they can leave at 5 p.m. and still be productive. I work in the platform organization, namely in productivity engineering, where we try to abstract toil away for the rest of the engineers, so that they can focus on solving business problems instead of dealing with the same boring technical issues over and over again.

Let me ask you a few questions. How many of you work at companies that practice the “you build it, you run it” philosophy? How many of you are happy that you don’t have any gatekeepers between you and production, so that you can deliver features and fixes faster? How many of you have ever been in the middle of a production incident, at a loss for what to do, wishing it were somebody else’s problem? Let’s be honest, because I’ve been there quite a few times myself. Yet I don’t want to go back to the times of having a big old wall between development, QA, and operations, where you write the code and throw it over the wall to QA, who find bugs. Then they throw it back to you, and so on, until you both agree it’s good enough. Then you throw the code over another wall to operations, and then you expose it to users. Then your users find bugs. This whole process repeats, and it is usually really painful.

Accelerate: State of DevOps 2021

It’s not just my opinion: the State of DevOps survey highlights year after year that companies with a higher degree of DevOps adoption perform better. They have a better change failure rate. Basically, their deployments fail less often, even though they’re doing many more deployments in general. However, this win comes with a price, because operating your own code in production means that you have to wear a pager. You need to be ready to respond to incidents. You may need to actually communicate with real customers, who may provide valuable feedback but also require time and attention. As a result, you can definitely ship code faster, and your code quality improves, but the burden of operational duties on top of that is non-negligible. As the shift-left movement gains traction, we see even more load being added to developers’ plates.

Shifting Left

How does it happen? Let’s review it using the example of shifting testing left. The traditional software development lifecycle has testing as one of the very last steps: the majority of testing happens after the code is written. By using things like test-driven development and by automating our tests, we can shift the majority of testing activities to earlier phases, and that reduces the number and the impact of bugs, because the earlier we catch a bug, the less expensive it is. A bug caught in the development phase means that no users have to suffer from it. A bug caught in the planning phase means that we don’t even have to waste time and resources writing code that is faulty, defective by its very design.

Of course, it’s not free. To achieve that, developers have to learn testing frameworks and testing methodologies. JUnit, test-driven development, the Jenkins pipeline DSL, GitHub Actions: these are just a few of the things that have now become an everyday part of developers’ work. Yet shifting left doesn’t stop with testing. The next step may be shifting security left, because, yes, on one hand we need to care about security, especially as we give developers the freedom to design, deploy, and operate their code in production. On the other hand, it means that developers and security specialists now have to work together, and developers have to learn and take care of security-related things like static code analysis and vulnerability scanning. Shifting left doesn’t even stop there; more processes are being shifted left as we speak, data governance, for example. So we have a problem. On one hand, DevOps as a concept introduced shifting left to all these different practices. It removes barriers. It lets us deliver code faster and with more confidence. On the other hand, we’ve got ourselves a whole new set of headaches. We now need to navigate between two extremes. We don’t want to introduce a process so rigid that it gets in the way of developing and shipping code, yet we cannot avoid the price we pay to ensure this constant flow of changes. We can, and we should, try to minimize this price and make it more affordable. Let’s talk about how we can do it. What can we do to make the complexity of DevOps, the complexity of shifting left, more manageable? What problems may we face along the way?

Looking At History

First, let’s take a look at the history of the problem to see if we can identify patterns and come up with solutions. I’m going to pay attention to several things as I review each step of our DevOps journey. I’m going to look at communication: how do the problems we face affect the way we interact? Who is interacting with whom in the first place? I’m going to look at tooling: what tools work best for each step of the journey, and how do we even set those tools up? I’m going to look at the cognitive load that arises during bootstrapping, during the creation of new projects, and during migration. Basically, I’m going to see how easy it is to set things up and how easy it is to make changes. Where I can, I’ll also illustrate the journey with examples from my day-to-day work, to make sure that what I’m talking about is not just hand-wavy stuff.

Ad-Hoc DevOps

How does DevOps adoption start? You may have a small company, or a startup, or maybe a separate team in a larger company, where people realize they want to ship things fast instead of building artificial barriers. They want to identify, automate, and eliminate repetitive work, whatever that work is in their case. Now we may have a cross-functional team that works together on a handful of services. The members of this team exchange information mostly in informal conversations. Even if documentation exists, it’s usually pretty simple: a Google Doc or a Confluence page that people can just edit as needed. Tooling and automation are also pretty much ad hoc.

Here’s a quick example. We might start by setting up a few GitHub repositories to store the code. We can create a Jenkins instance to monitor the changes in those repositories and run build jobs that create Docker images and publish them to Artifactory. We may set up another set of Jenkins Jobs to test the code, and another set to deploy it to our Kubernetes cluster. At this stage, we can totally get away with managing those Jenkins Jobs and Kubernetes clusters manually or semi-manually, because it’s not a big effort. It’s literally a matter of copy-pasting a few lines of code or settings here and there. At this point, the goal is more about finding the pain points and automating the toil, removing manual work from the software development process. The few scripts and jobs you set up provide an obvious win: you have an automated build and deployment process, which is so much better than deploying things manually. Moreover, automating the management of Jenkins and Kubernetes themselves may take more time than those small manual changes do. After all, at this point it’s just a few projects and a few people working together. When something is deployed, everybody knows what happened. It’s not like you have hundreds of projects to watch, and investing a lot of time into automating something experimental, something you’re not even sure will work for you in the long run, may not be worth it at all.

What about making changes, say, a migration? Sure, let’s spice things up. What if we decide that regular Kubernetes deployments are not enough? What if we want advanced canary analysis and decide to use Spinnaker instead of Jenkins? Spinnaker provides canary support out of the box, so let’s go ahead and use it. It’s a great tool. Things are still pretty straightforward. We can run an experiment: choose one of the projects and keep tinkering until we get everything right, then proceed with the rest of the services. We can keep disabling Jenkins Jobs and enabling Spinnaker pipelines, project by project, because the risk of something going wrong, the risk of something being left in a broken or incomplete state, is low. You can literally count the number of places where you need to make changes on one hand, and you can make the change in one sitting. If you have five microservices and you need to upgrade a pipeline for each of them, it’s not too hard to find the owners. It may be just a couple of people in your team or company. As for tooling, basically anything works as long as it solves the problem. It doesn’t matter much yet how you set it up. Yes, there is some additional work for all involved parties, but it’s not overwhelming yet, because the size of the effort itself is limited. There is an immediate, noticeable win. The developers don’t have to worry about manual steps and repetitive tasks; they can concentrate on coding. That approach reduces cognitive load for developers at this stage, when you have just a handful of services.

Here’s a real-life example. Even at Netflix, with all the best practices and all the automation, there is still room for solutions that are managed manually. For example, this particular application has caches that have to be warmed up: the application must make sure its caches are warm before it can start accepting production traffic. If we don’t do that, its performance drops dramatically. This pipeline was created manually by the team that owns the application, and its main feature is a set of custom delays before the application starts accepting live traffic. Since this is a single pipeline serving a single application, maintenance and support are not a big deal; the pipeline gets updated or tuned once a quarter or less. Since the team that manages it is small, it’s not hard to find the people responsible for maintaining it. Updating it is also pretty straightforward, because the pipeline is essentially self-contained. It doesn’t have any external dependencies that people need to worry about, so they can just make whatever changes they need. This manually maintained pipeline provides an immediate benefit: it solves the performance issues caused by cold caches, and it removes the cognitive load created by shifting those operational duties left.
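The pipeline on the slide is configured in Spinnaker, and its exact stages aren’t shown, so the following is only a minimal sketch of the gating logic such a pipeline encodes, written in Python under stated assumptions: `wait_until_warm`, `is_warm`, and the delay and timeout parameters are hypothetical names, and `is_warm` stands in for whatever signal the service exposes (for example, a health check reporting that its caches are populated). The gate waits for a minimum delay and for a warm signal, and gives up after a timeout so the rollout can be aborted rather than sending live traffic to cold caches.

```python
import time
from typing import Callable


def wait_until_warm(
    is_warm: Callable[[], bool],
    min_delay_s: float,
    timeout_s: float,
    poll_s: float = 1.0,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Hypothetical gate: True once min_delay_s has passed AND caches report warm.

    Returns False if timeout_s elapses first, so the caller can abort the
    rollout instead of routing live traffic to an instance with cold caches.
    """
    start = clock()
    while True:
        elapsed = clock() - start
        # Both conditions are required: a fixed minimum delay and a warm signal.
        if elapsed >= min_delay_s and is_warm():
            return True
        if elapsed >= timeout_s:
            return False
        sleep(poll_s)
```

Injecting `clock` and `sleep` keeps the function testable with a fake clock; in a real deployment the defaults (`time.monotonic`, `time.sleep`) would be used.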

What’s the Next Step?

However, as the number of applications keeps growing and they become more complex, we face new challenges, because it’s now harder to keep track of what users want. We may have a few applications written in Ruby and the rest written in Java. Some of the Java applications may be on Java 11, and others may already be on Java 17. On top of that, you have more people managing those applications. Even bootstrapping gets harder: when new developers want to create a new service, it’s not easy for them to find the right example, whatever “right” means in their particular context. Even finding the right person to talk to about it may be challenging.

The maintenance of applications becomes more problematic as well, because if I have an improvement that I need to share across the whole fleet, I need to track down all the services and all their owners. That’s not easy anymore. For example, take our migration scenario, where we set up Spinnaker pipelines with canary deployments and keep disabling the old Jenkins Jobs. It looks doable at first glance. However, when we start scaling this approach, we quickly run into our next issue. Once you’re trying to migrate dozens or hundreds of applications, you cannot just keep doing this manually, because you have too many things to keep in mind and too many things that can go wrong. When you have hundreds of applications, somebody will forget to do something. Somebody will mess up a setting. Somebody will start a migration, get distracted, leave things in an incomplete, broken state, and then forget about it. Imagine that a production incident happens and you need to figure out what state your application’s infrastructure is in. People will be just overwhelmed by this amount of work. Just tracking all the changes and coordinating all the efforts is no longer an easy problem. That’s exactly the problem we started with: we have created more cognitive load for the developers by shifting those operational duties left, and that cognitive load doesn’t bring any immediate benefit.
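To make that bookkeeping concrete, here is a small illustration of the problem rather than a tool from the talk: a sketch, with hypothetical names throughout, of the minimum per-service state someone would have to track during the Jenkins-to-Spinnaker migration, plus two queries for the half-migrated services and overall progress. Even this toy version only stays accurate if whoever performs each migration step remembers to update it, which is exactly the manual burden that stops scaling at hundreds of services.

```python
from enum import Enum
from typing import Dict, List


class MigrationStep(Enum):
    # Hypothetical per-service states for a Jenkins-to-Spinnaker migration.
    NOT_STARTED = "not_started"        # still deploying via Jenkins only
    PIPELINE_ADDED = "pipeline_added"  # Spinnaker pipeline exists, Jenkins job still enabled
    DONE = "done"                      # Spinnaker pipeline live, Jenkins job disabled


def stuck_midway(status: Dict[str, MigrationStep]) -> List[str]:
    """Services left in the half-migrated state, sorted for stable reporting."""
    return sorted(
        name for name, step in status.items() if step is MigrationStep.PIPELINE_ADDED
    )


def progress(status: Dict[str, MigrationStep]) -> float:
    """Fraction of services fully migrated (0.0 for an empty fleet)."""
    if not status:
        return 0.0
    done = sum(1 for step in status.values() if step is MigrationStep.DONE)
    return done / len(status)
```

For a made-up fleet where `billing` is done, `search` and `auth` have a new pipeline but an active Jenkins job, and `ads` hasn’t started, `stuck_midway` reports `["auth", "search"]` and `progress` returns `0.25`.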

The Paved Path