Transcript

Gamble: My name is Ben Gamble. I currently work at a place called Aiven, in the developer relations team, as a bit of an open source software sommelier. I’m here to pair you some Kafka, some Postgres, and maybe some cheese to go with that. My history is a bit of a checkered past, taking in everything from MMO game development, from the core networking layers upwards, to medical device development for actual point-of-care diagnostics. I’ve dotted about the place a bit. I’ve actually written production Pascal within the last 12 months, for MQTT streaming at a previous employer. I’m currently learning Rust, so I can tell better crustacean jokes. There’s this thing that has been going on in the world for, I think, the last few million years, and it’s called carcinization. This is where nature repeatedly evolves things into crabs. It’s a real, documented phenomenon, and you can find out about it on the computer if you look it up. Nature loves crabs and wants everything to be one. This is why I work at Aiven, because it has a lovely crab logo. Eventually you might all use us as well. Aiven is an open source hosting platform for your data needs. We do things like Postgres at scale and Kafka at scale. We’re there to make open source software easy to access.

Monoliths

The big theme here is that those who cannot remember the past are condemned to repeat it. This is an old quote from George Santayana, from The Life of Reason, published in 1905. The painting here is from the Smithsonian. The point is that the great ideas of humanity are often just the same thing over again. You can see this theme in everything from art to life, and very much in software engineering: more often than not, we’re doing the same thing again, ideally better. With that in mind, let’s think about APIs, and let’s go and remember monoliths. Remember those things? They were lovely. They were big systems you could stand up and they’d just run. They were a single structure, carved from stone, a bit like that one from the movie. The key thing is that there was nearly always something between your system and your customers, often a load balancer. Inside, there was a series of tools built out. Below it all, you nearly always had a relational database. Depending on which era you were from, this could be anything from MS SQL to Oracle to Db2, and in more recent times MySQL and Postgres, plus some more modern variants of these. The nice thing was that there was really only one big thing in the middle. As you scaled up, you just added more RAM sticks or faster CPUs. Particularly back in the day, you could literally upgrade your CPU every year and you’d probably be just fine.

The SOAP Protocol, and REST

When we talk about the APIs of these systems, we remember SOAP. Unlike in the movie, we’re going to talk about SOAP. So what really is SOAP? SOAP was a protocol. It defined how systems spoke to each other, and with extensions such as WS-Security it even covered encryption. It defined a way to lay out your data over the wire, in this case as XML. Because it described every step along the way, it was a rather complete thing. SOAP was a wonderful stepping stone in the evolution of APIs, particularly for talking over the web. It allowed us to build structured applications that understood where their data was coming from. It grew out of earlier CORBA-style RPC frameworks, but was really geared around sharing data. It was also complex and heavyweight. Implementing SOAP interfaces took time and required a lot of moving parts. It wasn’t agile enough to cope with the growing boom of modern software. With that in mind, it was time for REST, Representational State Transfer. It emerged as the alternative to SOAP, was seen as a big step forward, and until relatively recently was the de facto way to do things online. The best thing about REST is that it’s simple to make and simple to launch. That’s what drove the boom of web 1.0 and 2.0: the ability to throw up REST APIs really quickly with stacks like the original LAMP stack, Linux, Apache, MySQL, and often Perl, PHP, or Python. The classic LAMP stack, usually with PHP, spawned the modern web. Then, imitation being the sincerest form of flattery, we got systems like Ruby on Rails, Django in Python, and Node.js with Express. These were the kinds of things that really exploded the web as we know it. As with anything like this, they were simple to make, simple to launch, and hell to scale. Some of this is simply due to the single-threaded nature of most of the software they’re built on. That’s not the whole story, though. The real problem comes up when you actually start trying to do this in practice.

You start out scaling with best practices. You have your REST API, with some frontend talking to it. You think, I’ve got contention on my database here, so I’m going to spawn more copies of this API and have a highly available database. Now I’ve got lots of APIs, which are all exactly the same. But as I scale up my databases, I can only move the read load somewhere else; the writes still go to one place. Next, things are still slow, because the database is nearly always the first thing to crunch under load. So you go, let’s add some caching, that makes everything better. Redis is like the dark magic of the web world: you add it and problems seem to go away, but really it’s just moving them. Next, as features increase, your API becomes more complicated, because different types of users are using it. The mobile experience is very different from the web experience, because there are different expectations and different network conditions. There’s also a concrete form-factor problem: different screens need different information. As this happens, you end up specializing how you serve data, whether by exposing a GraphQL endpoint or by having specialized data services behind the scenes, so you’re now speaking more APIs and exposing more stuff. You think, “This is getting complicated. Let’s put something in between to help.” You separate out the APIs. Maybe one system does one set of things and another system does another. Maybe one serves your inventory and catalog and the other handles login, and you shove an API gateway in front. This is the early stage of working out what you want to do.
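A minimal sketch of the cache-aside pattern described above. A plain dict stands in for Redis and a lambda stands in for the database; the class name, TTL, and keys are hypothetical. It shows caching taking reads off the database while quietly introducing staleness, which is the problem being moved rather than removed.

```python
import time
from typing import Callable, Dict, Tuple


class CacheAside:
    """Cache-aside reads: check the cache, fall back to the database on a miss, then populate."""

    def __init__(self, load_from_db: Callable[[str], str], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._load = load_from_db
        self._ttl = ttl_seconds
        self._clock = clock
        # key -> (value, expires_at); this dict stands in for Redis.
        self._entries: Dict[str, Tuple[str, float]] = {}
        self.db_reads = 0

    def get(self, key: str) -> str:
        now = self._clock()
        cached = self._entries.get(key)
        if cached is not None and cached[1] > now:
            return cached[0]  # cache hit: fast, but possibly stale until the TTL runs out
        self.db_reads += 1  # miss or expired entry: this read lands on the database
        value = self._load(key)
        self._entries[key] = (value, now + self._ttl)
        return value


catalog_db = {"sku-1": "in stock"}
cache = CacheAside(lambda key: catalog_db[key], ttl_seconds=30)
print(cache.get("sku-1"), cache.get("sku-1"), cache.db_reads)
```

Until the TTL expires, readers keep seeing the old value even after the database changes; that is the trade being made.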

At this stage you don’t do much more than say, this system serves one set of purposes and that system serves another, which is essentially the service-oriented architecture of the mid-2000s. You shove it all behind an API gateway, which also acts as your load balancer. This gets you a really long way. You can go from an approach where everything has to live inside one machine to one where you can share almost anything in real time. You can separate out these API planes so that the person building the top API doesn’t necessarily need to know about the person building the bottom one. As you build this out, you find more you can do, because you suddenly see you can offer not just search but actionable insight: you have an API plane that can do anything, because you can just add more bits behind it. With systems behind the scenes, you can surface this data to the people who need it. It’s about preemptively improving user experiences. What we have now is a facade in front of everything we’re doing, and the ability to throw processing engines behind it to start serving real-time, or simply more appropriate, data over the web.
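As a rough illustration of the gateway’s routing job, this sketch sends request paths to backend services by longest matching prefix. The service names and URLs are hypothetical, and a real gateway would also handle authentication, rate limiting, and health checks.

```python
from typing import List, Tuple


class ApiGateway:
    """Routes each request path to the backend registered for the longest matching prefix."""

    def __init__(self) -> None:
        self._routes: List[Tuple[str, str]] = []

    def register(self, prefix: str, backend: str) -> None:
        self._routes.append((prefix, backend))
        # Longest prefix first, so "/catalog/search" wins over "/catalog".
        self._routes.sort(key=lambda route: len(route[0]), reverse=True)

    def route(self, path: str) -> str:
        for prefix, backend in self._routes:
            # Match whole path segments only, so "/catalogue" does not hit "/catalog".
            if path == prefix or path.startswith(prefix.rstrip("/") + "/"):
                return backend
        raise LookupError(f"no backend registered for {path!r}")


gateway = ApiGateway()
gateway.register("/catalog", "http://inventory-service")
gateway.register("/login", "http://auth-service")
print(gateway.route("/catalog/items/42"))
```

Callers only ever see the gateway’s paths, which is what lets the teams behind each prefix evolve independently.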

This is where we get the idea of the right microservices with the right data. This is where that microservice thing I hinted at earlier came from: a service that does one thing and has the data to go with it. This is the modern data stack you keep hearing about. If you’ve watched some of the other talks on this track, you’ll have heard about choosing the right data tool to match the needs of your microservices. However, this is not the end of the story. You’ve got all these services, and some of them talk over a wonderful data plane like Kafka, or service meshes, or almost anything else. What’s not really shown in a big way is how many internal APIs there are. Literally every edge of this diagram has an API, and every box, even the inside of those boxes, talks between its parts. You’ve got numerous things talking SQL, some things talking Redis, lots of things talking Kafka, everything talking REST at one level or another, and still other things talking CQL. You start asking, how many APIs do I really have? Is it just the one at the top, or the ones between my API gateway and my services, or do I have to consider all of them? Then remember that the next thing you add might have a different database, a different caching policy, an entirely different API, or even more rules. What happens when you add a compliance service that can only be operated inside Azerbaijan due to some legal requirement? Now this API has to carry additional rules.

To put this in context, let’s tell a story, related to something I used to do at my previous employer. You’ve got a platform as a service; in this case, big streaming systems. We wanted to create an API to allow programmatic configuration. Previously, customers got really far with a wonderful dashboard: they configured their applications, made them go, and the applications grew and scaled automatically. But as the infrastructure-as-code movement ran onwards, and because the system was highly flexible, it became imperative to be able to drive changes to your setup remotely. Of course, the first thing you think of is the OpenAPI Initiative, a Linux Foundation consortium that maintains the OpenAPI Specification for describing REST APIs. You think, I can just specify it as a REST API. REST APIs are great, because everyone knows what they do. There are idempotent verbs in there: a GET doesn’t change anything, so you can repeat it safely, and a PUT leaves the resource in the same state whether you send it once or many times. POST, which isn’t idempotent, is the one you really have to worry about, and it’s also the most common. More importantly, REST is widely adopted, and OpenAPI is an open specification, so nothing stops you from using it. You think, yes, I’ve got this platform, I’m going to add an API to it. I’m going to make it happen, and everyone can drive it however they want.
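The difference between PUT and POST is easy to see with a toy in-memory store. The class and resource names are hypothetical and no real HTTP is involved. RFC 9110 defines PUT, DELETE, and the safe methods such as GET as idempotent; POST carries no such guarantee.

```python
import itertools
from typing import Dict


class ClusterConfigStore:
    """Toy resource store contrasting PUT and POST semantics (no HTTP involved)."""

    def __init__(self) -> None:
        self.resources: Dict[str, dict] = {}
        self._ids = itertools.count(1)

    def put(self, resource_id: str, body: dict) -> dict:
        # PUT replaces the resource at a client-chosen identifier,
        # so sending it once or ten times leaves the same state.
        self.resources[resource_id] = dict(body)
        return self.resources[resource_id]

    def post(self, body: dict) -> str:
        # POST asks the server to create something new; every retry creates another one.
        resource_id = f"cluster-{next(self._ids)}"
        self.resources[resource_id] = dict(body)
        return resource_id


store = ClusterConfigStore()
for _ in range(3):
    store.put("orders-stream", {"partitions": 12})
for _ in range(3):
    store.post({"partitions": 12})
print(sorted(store.resources))
```

This is why a client retrying a timed-out POST is the dangerous case: it cannot tell whether the first attempt already created the resource.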

What Could Go Wrong – Leaky Abstractions

Let’s talk about what can go wrong, because we tried this and it turned out to be quite complicated. The first and biggest problem was leaky abstractions. What had happened was that our whole platform had become a microservice in everyone else’s platforms. As people built up their systems of microservices, they were bringing in external systems like ours as separate services with an API. Leaky abstractions get quite serious here, because the first time round we had a very leaky API that exposed details of production systems. The endpoints were not grouped semantically, according to what they actually did, but by when they were built and added to the backend, because that’s how they were grouped there, or by what piece of internal technology they hung off. Without a lot of inside knowledge, you ended up with a problematic system that was very hard to understand. Also, because someone had simply mirrored one of the internal APIs, we had a largely uncontrolled attack surface, with no real way of knowing which of these endpoints would be hit. That followed naturally: it was just a mirror, and nobody had stopped to think about whether it was right.
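One way to avoid mirroring internals is an explicit allowlist that projects internal records onto a public contract. This is a sketch under assumed names: `InternalService`, its fields, and `PUBLIC_FIELDS` are hypothetical, not the platform’s actual model.

```python
from dataclasses import dataclass
from typing import Any, Dict


@dataclass
class InternalService:
    # Hypothetical internal record: roughly what a mirrored internal API would leak.
    name: str
    plan: str
    state: str
    backend_host: str
    provisioner_version: str
    build_batch: int


# Explicit allowlist, chosen by meaning rather than by how the backend is organized.
PUBLIC_FIELDS = ("name", "plan", "state")


def to_public(service: InternalService) -> Dict[str, Any]:
    """Project an internal record onto the public contract; unlisted fields stay private."""
    return {field: getattr(service, field) for field in PUBLIC_FIELDS}


svc = InternalService("orders", "business-4", "RUNNING", "10.0.3.17", "2.8.1-rc", 42)
print(to_public(svc))
```

Adding a field to the internal record then changes nothing externally until someone deliberately adds it to the allowlist.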

The other end of the spectrum is an API that’s way too abstract. I’ve seen a few of these; this one was at a different company again. We built a magical one-shot button API: do a thing. In this case, it configured an engine that could do address verification. Setting up the address verification engine basically meant saying, give me an engine, and set its setting to 5. What’s good about that is that it’s very simple to do. What’s terrible is that you don’t really have any control, because the API was designed with the user in mind rather than the system. A user might be used to turning a few dials to the right settings, but that doesn’t reflect how the internals work in any meaningful way. It’s too abstract. If I turn this up to 11, what really happens? Is 11 one more point than 10, or is it 100 times more? You end up needing very long explanatory documentation to get people into the right mindset to actually use your software.

So you don’t want to leak implementation details, but you do want to project implementation realities. If setting 1 is just 1 machine and setting 3 is actually 30 machines, you might want to rename those to something more appropriate to what’s going on inside. And if there’s never a setting between the two, you definitely want to expose it in a way that makes it obvious there are no in-between settings. Getting this right is an endless challenge. In the case of the very leaky abstraction the first time round, we ended up having to rearchitect it a lot to match where we were going. However, people already had it in production, and suddenly you can’t really remove certain leaky abstractions. We had to plow on ahead and make it work. We plugged the holes, constrained the attack surface, and added more behind the scenes, but the API was out. Once something’s out, it will be used and depended on.
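Projecting implementation realities can be as simple as exposing named tiers instead of a free-form dial. In this hypothetical sketch the tier names are invented and the machine counts reuse the 1-machine and 30-machine example above; anything outside the enum is rejected with a message listing what is allowed.

```python
from enum import Enum


class EngineSize(Enum):
    """Hypothetical named tiers: each name says what you get, with no implied in-between."""
    SINGLE_NODE = 1
    CLUSTER = 30  # deliberately nothing between 1 and 30 machines

    @property
    def machines(self) -> int:
        return self.value


def parse_engine_size(raw: str) -> EngineSize:
    try:
        return EngineSize[raw.upper()]
    except KeyError:
        allowed = ", ".join(size.name.lower() for size in EngineSize)
        raise ValueError(f"unknown engine size {raw!r}; expected one of: {allowed}") from None


print(parse_engine_size("cluster").machines)
```

A request for "11" now fails loudly with the list of valid options, instead of silently mapping onto some internal scale.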

Availability Issues

The next real problem was availability. With a platform as a service, you’ll often offer a very fine SLA, some number of nines. In this case, five nines was the lowest we offered, which is a fun thing to call the lowest. However, your dashboard and the services on your control plane are not always held to the same standard, because provisioning something new is a very different matter from operating it. Being able to say, give me a new instance of X, Y, Z in region ABC, is very different from being able to access that instance once it’s there. You have to plan for the fact that different parts of the system are often not hardened to the same level. What’s really worrying is that, a lot of the time, the frontend of your website is all one thing. This is increasingly less true now, but for a while it was common, and I’ve dealt with a lot of systems where it came up. What happened was that you’d coupled your API surface to your blog, and because you’d gone monolithic in some places and not in others, your blog now had to be as robust as your core service. That’s not necessarily a problem in itself, but it does change where your actual uptime issues come from. Is it OK that your five-nines service, which doesn’t actually get that many hits, might suddenly get swamped because a Stack Overflow comment, perfectly legitimately, goes viral? The answer is no. You’ve really got to think hard about this.
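The arithmetic behind the coupling worry is worth making concrete. This sketch converts an availability figure into downtime per year (using a 365.25-day year) and multiplies availabilities for components that must all be up, assuming failures are independent. The 99.9% figure for the blog is a hypothetical example.

```python
from math import prod

MINUTES_PER_YEAR = 365.25 * 24 * 60


def downtime_minutes_per_year(availability: float) -> float:
    return (1.0 - availability) * MINUTES_PER_YEAR


def serial_availability(*components: float) -> float:
    """Availability of a path that needs every component up (assumes independent failures)."""
    return prod(components)


api_only = 0.99999                              # five nines
coupled = serial_availability(0.99999, 0.999)   # API plus a hypothetical three-nines blog
print(round(downtime_minutes_per_year(api_only), 2), "minutes/year")
print(round(downtime_minutes_per_year(coupled) / 60, 2), "hours/year")
```

Five nines allows roughly 5.26 minutes of downtime a year. Coupled in series with a three-nines blog, the path drops to about 99.899%, or roughly 8.9 hours of downtime a year: the weakest component sets the ceiling.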

The answer here, of course, was to decouple it, in a way that doesn’t break things, whether that means making sure nothing shares the same resource pool, separating out your load balancers, or having an API gateway that can deal with this for you. To a certain extent it’s dealer’s choice. The key point is the story. If you have a system that things depend on, it has become part of your product, so your product SLA applies to those APIs. APIs are products. Whether or not you think of your product as an API or as some service, fundamentally people interact with it through an API, so the API itself is a product. If you offer configuration programmatically, that is now part of the product as well. That bit us pretty hard. It was rectified, and it was a great piece of work to see done. Making a Rails API that was originally just a monolith in one region suddenly run in the 15 to 20 regions we needed was really problematic the first time around. It was doable and fine, but suddenly we were scaling things we wouldn’t normally scale.
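One way to keep things out of the same resource pool is the bulkhead pattern: each dependency gets its own bounded concurrency budget, so a traffic spike on one cannot exhaust capacity for another. This is a minimal, in-process sketch with hypothetical pool names and limits; production systems usually get the same effect with separate deployments, connection pools, or gateway rate limits.

```python
import threading
from contextlib import contextmanager
from typing import Dict, Iterator


class Bulkheads:
    """Separate concurrency budgets per dependency, so one hot path cannot starve the others."""

    def __init__(self, limits: Dict[str, int]) -> None:
        self._pools = {name: threading.BoundedSemaphore(n) for name, n in limits.items()}

    @contextmanager
    def slot(self, pool: str) -> Iterator[None]:
        semaphore = self._pools[pool]
        # Fail fast instead of queueing: a swamped blog sheds load rather than piling up.
        if not semaphore.acquire(blocking=False):
            raise RuntimeError(f"{pool} pool exhausted; shedding load")
        try:
            yield
        finally:
            semaphore.release()


pools = Bulkheads({"blog": 50, "control-plane": 10})
with pools.slot("control-plane"):
    print("provisioning request admitted")
```

However hard the blog pool is hit, the control-plane budget is untouched.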

What About Internal APIs?

What about internal APIs? We’ve just been talking about external ones. This is where we get to the real challenges, because there are always more of them. In general, you only present a few specific APIs to the world. Internally, though, even in that brief example, you saw how many APIs we really had. The obvious option is, we know how to do REST, so we’ll use REST internally. We have ways to specify it, we can spec out what goes back and forth, and we can have all these systems talking to each other. This can work, and it can work really well, but you’re using REST APIs, Representational State Transfer again. You’re now making a call every time. If you’re not on HTTP/2 or reusing connections, you may be doing a full TCP handshake (SYN, SYN-ACK, ACK) for every call. This is quite heavyweight, and you’re now reliant on blocking request chains through your system. Do you really want load balancers and caches inside your firewall? Think about it: your external services might be hit rather hard, but requests propagate across internal systems. You might have 1,000 internal systems, and every external call might pass through 50 to 150 of them. Suddenly every call is amplified 30 times, or 100 times, or even 1,000 times, so you may now need load-balancing groups for your internal services. Is this what you really want? You’re coupling all this stuff together. You can do it, but I really don’t recommend it. You can do it for some things but not others, and some systems are built to work this way. In general, though, you’re in a world where you have to ask, are you sure you want to couple things that tightly?
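A back-of-the-envelope sketch of why blocking internal REST chains hurt. The 1,000 requests per second, 100 internal calls, 5 ms per hop, and 99.9% per-hop success rate are hypothetical inputs; the point is the shape: load multiplies, sequential latencies add, and success probabilities multiply down.

```python
from typing import Sequence


def internal_request_rate(external_rps: float, internal_calls_per_request: int) -> float:
    # Every external request fans out into this many internal calls.
    return external_rps * internal_calls_per_request


def blocking_chain_latency_ms(hop_latencies_ms: Sequence[float]) -> float:
    # In a synchronous chain each caller waits on its callee, so latencies add up.
    return sum(hop_latencies_ms)


def chain_success_probability(per_hop_success: float, hops: int) -> float:
    # The request only succeeds if every hop does (assuming independent failures).
    return per_hop_success ** hops


print(internal_request_rate(1_000, 100))
print(blocking_chain_latency_ms([5.0] * 50))
print(round(chain_success_probability(0.999, 100), 4))
```

At 1,000 external requests per second and 100 internal calls each, the internal network sees 100,000 requests per second, and a chain of 100 hops that each succeed 99.9% of the time completes only about 90.5% of the time.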

Pub/Sub

Let’s talk about Pub/Sub. We’re not talking about nautically themed drinking establishments. This is where we come to the next evolutionary step in what APIs have really become. We have this swarm of microservices; this diagram is from Netflix, and it shows requests going through their web tier to all their backend services, so you can see the routes they all trace. Of course, you want to plug it all together. Plugging APIs together is not too bad, but you want to unify it, so that lots of things talk using the same technology and you don’t have 100 different things to maintain. That’s where the Pub/Sub pattern comes in. The Pub/Sub pattern is basically just a decent way to decouple things. There’s a publisher, a broker, and a subscriber.
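A minimal in-process sketch of the three roles, with a hypothetical `Broker` class: publishers only know topic names, subscribers register handlers, and neither knows about the other. A real broker adds network transport, durability, and delivery guarantees; this shows only the shape of the decoupling.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

Handler = Callable[[dict], None]


class Broker:
    """Minimal in-process broker: publishers address topics, never subscribers."""

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, message: dict) -> int:
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(message)
        return len(handlers)  # number of subscribers that received the message


broker = Broker()
broker.subscribe("jobs", lambda msg: print("router got job", msg["id"]))
broker.subscribe("jobs", lambda msg: print("audit log got job", msg["id"]))
broker.publish("jobs", {"id": 7})
```

Adding a third subscriber requires no change to the publisher, which is the whole point of the pattern.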

There are various ways to do this in various technologies. The core concept is that you’re decoupling the act of making something happen from the act of listening to it happen. Particularly with microservices, this means you publish a message saying, do a job, do an action, make something so. Any number of systems can pick it up afterwards, with no dependency on the first system still being alive. You loosely couple those systems together with an intermediary built specifically to be robust. Common choices here are RabbitMQ, which is a lovely example of how you never need to go above version 0.9 to be successful, its core protocol being AMQP 0-9-1. NATS, with JetStream, is a lovely, fun CNCF project showing that if you rewrite things enough times you’ll eventually get something quite cool: it originally started as a very fire-and-forget service in Ruby, and is now almost the same as Pulsar. Pulsar, another top-level Apache project, is the logical end point of what these big streaming systems are about, with more distributed systems than the rest of your network put together, as it is really three (brokers, BookKeeper, and ZooKeeper), not one. Then, last of all, we’ve got Kafka. It’s that turning-the-database-inside-out way of thinking. It is heavily write-optimized, designed to be robust from day zero, and relatively simple, which belies how much you can do with it.
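The log-based approach that Kafka takes can be sketched as an append-only list where each consumer group tracks its own read offset. This is a toy model of a single partition with hypothetical names, not Kafka’s client API; it shows why a consumer can read after the producer has gone away, and why two groups can read the same records independently.

```python
from typing import Dict, List


class Log:
    """Append-only log in the style of a single partition; consumer groups own their offsets."""

    def __init__(self) -> None:
        self._records: List[dict] = []
        self._committed: Dict[str, int] = {}  # consumer group -> next offset to read

    def append(self, record: dict) -> int:
        self._records.append(record)
        return len(self._records) - 1  # offset of the new record

    def poll(self, group: str, max_records: int = 10) -> List[dict]:
        start = self._committed.get(group, 0)
        batch = self._records[start:start + max_records]
        self._committed[group] = start + len(batch)  # auto-commit after reading, for brevity
        return batch


log = Log()
for job in range(3):
    log.append({"job": job})
print(log.poll("billing"), log.poll("analytics", max_records=2))
```

Records are never removed by reading them, so a new consumer group can replay history from offset 0.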

Is It Time to Start Stream Processing?

So the question is, is it time to start streaming? We’ve now built up these ordered systems that send messages around in logs or queues. Is it time to start doing stream processing? In that context, let me tell you a story from way back in my time as a CTO. Say you build a SaaS platform, perhaps for delivery and logistics, like one I built previously. You’ve got a loosely connected network of microservices using queues and streams. In our case, we had a very big, computationally expensive task: computing routes. This meant solving the full vehicle routing problem, which is NP-hard, so you can guess how much CPU it needed. We could have moved it onto GPUs, but that would have needed a lot more experience than we had at the time, and a lot of code we just didn’t have available. Instead, we had a bunch of very large, CPU-intensive machines that could pull work off a work queue. Originally we tried to do this with some of the existing systems, but we ended up with semi-custom queueing with logs attached, because we wanted fair load balancing combined with stream processing. This worked great. We went from a REST-powered system with uncontrolled bursts of unknown amounts of work to a system that really handled the throughput. It scaled great, until it didn’t.
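The work-queue setup described here is the competing-consumers pattern: workers pull the next job only when they are free, so less busy machines naturally take more work. This sketch uses Python threads and a stand-in `solve` function in place of the route solver (both hypothetical); for genuinely CPU-bound work in Python you would use processes or separate machines, as in the story.

```python
import queue
import threading
from typing import Callable, Dict, List


def run_workers(jobs: List[int], solve: Callable[[int], int], workers: int) -> Dict[int, int]:
    """Competing consumers: each worker takes the next job only when it is free."""
    work: "queue.Queue[int]" = queue.Queue()
    for job in jobs:
        work.put(job)
    results: Dict[int, int] = {}
    lock = threading.Lock()

    def worker() -> None:
        while True:
            try:
                job = work.get_nowait()
            except queue.Empty:
                return  # queue drained; this worker exits
            answer = solve(job)  # stand-in for the expensive routing computation
            with lock:
                results[job] = answer

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results


print(len(run_workers(list(range(20)), lambda n: n * n, workers=4)))
```

Because workers pull rather than being pushed to, a burst of work simply lengthens the queue instead of overloading any single machine.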

We were onboarding clients pretty successfully, but what we didn’t realize was that behind the scenes their data was quite fragmented. We hit the problem that one JSON file and another JSON file are not necessarily the same. One has dimensions in mass. One has dimensions in centimeters. One has a typo in it. It’s a simple problem: everything upstream is REST, it flows downstream into streaming systems designed for computationally heavy workloads, and the two are not the same. We ended up with a bunch of poison-pill messages flowing through our APIs that we didn’t fully know about. Down the line, we did some work to add more verification upstream. The problem, once again, was that once you expose an API to the world, people are going to use it, and that means they’re going to depend on it. Often, they don’t even have full control over what else is in their own systems. In this case, these objects were basically just big binary BLOBs to them, because they’d come from other third-party systems. They’d fall into our magical processing network, which would try to solve them.
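A sketch of the kind of upstream verification described here: validate at the edge and emit one canonical shape, rejecting anything that does not fit before it reaches the compute-heavy workers. The field names, accepted units, and `RejectedMessage` type are hypothetical.

```python
from typing import Any, Dict

LENGTH_TO_CM = {"cm": 1.0, "m": 100.0, "in": 2.54}  # hypothetical set of accepted units
REQUIRED_FIELDS = {"id", "length", "length_unit"}


class RejectedMessage(ValueError):
    """Raised at the edge so a bad payload never becomes a poison pill downstream."""


def normalize_parcel(raw: Dict[str, Any]) -> Dict[str, Any]:
    fields = set(raw)
    missing, unknown = REQUIRED_FIELDS - fields, fields - REQUIRED_FIELDS
    if missing or unknown:  # catches typos such as "lenght" as well as absent fields
        raise RejectedMessage(f"missing={sorted(missing)} unknown={sorted(unknown)}")
    unit = raw["length_unit"]
    if unit not in LENGTH_TO_CM:
        raise RejectedMessage(f"unsupported unit {unit!r}")
    length = raw["length"]
    if isinstance(length, bool) or not isinstance(length, (int, float)) or length <= 0:
        raise RejectedMessage(f"length must be a positive number, got {length!r}")
    # One canonical shape downstream: a string ID and a length in centimeters.
    return {"id": str(raw["id"]), "length_cm": float(length) * LENGTH_TO_CM[unit]}


print(normalize_parcel({"id": 1, "length": 2, "length_unit": "m"}))
```

The rejection happens where the client can still be told about it, rather than deep in a pipeline where nobody is listening.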

What made it really bad was that we’d never had an item fail in this particular way before. It would be dead-lettered at various points along the way, and the dead-letter handling would ask, is this a real message? It would decide, yes, this is a real message, and the message would keep coming back into the system at different stages. Eventually, messages were falling off the end of dead-letter queues that were never supposed to be dead-letter queues, because something couldn’t read them well enough to put them back in the right place, or even log them. Once again, this is the stuff you learn when you realize that an unconstrained API surface is really dangerous, and that even a relatively constrained one doesn’t make the same assumptions all the way through. We had systems in Python that were generally a bit, that’s probably going to work, and things in Clojure designed to just handle and interpret whatever messages they came across. Then, when those messages hit some of the C++ systems at the backend, they said, no, we’re rejecting that: we’re either going to throw an exception and crash your server, or just discard it. We came up against a simple fact: we could not match impedances across our network.
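One common guard against messages bouncing around forever is to carry an attempt count with each message and move it to a terminal parking area once a retry budget is spent, so it is never re-injected automatically. This is a hypothetical, in-memory sketch; `MAX_ATTEMPTS`, `Envelope`, and `Pipeline` are illustrative names, not any broker’s API.

```python
from dataclasses import dataclass, field
from typing import Callable, List

MAX_ATTEMPTS = 3  # hypothetical retry budget


@dataclass
class Envelope:
    payload: dict
    attempts: int = 0  # travels with the message, so every stage sees the same count


@dataclass
class Pipeline:
    handler: Callable[[dict], None]
    retry_queue: List[Envelope] = field(default_factory=list)
    parked: List[Envelope] = field(default_factory=list)  # terminal: for humans, never re-injected

    def process(self, envelope: Envelope) -> None:
        try:
            self.handler(envelope.payload)
        except Exception:
            envelope.attempts += 1
            if envelope.attempts >= MAX_ATTEMPTS:
                self.parked.append(envelope)
            else:
                self.retry_queue.append(envelope)

    def drain(self) -> None:
        while self.retry_queue:
            self.process(self.retry_queue.pop(0))
```

The key property is that the loop terminates: a poison pill ends up parked after a bounded number of attempts instead of cycling back in.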

Schemas

This is where we wanted specs. You’re thinking, what can we do about this? API specs are all well and good, but they only protect us so far, because most of what is being sent in is JSON, and it has to be, to maintain flexibility for the outside world. So you want schemas. The first question is, what is a message? JSON Schema, at the top here, is basically a way of saying at least we know what these fields are. But think about it: what is a JSON file? It’s text. How much precision is in a float? Is it a double? The answer is, you don’t know. You also end up with a lot of cases where not everyone uses JSON in the same formal way. Even running a verification on it could cause some coercion you just can’t know about unless you’re being exactly the same all the way through, particularly once you start using different deserializers. You can never quite be sure what’s going to happen next.
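The float-precision point is easy to demonstrate with Python’s standard `json` module. The same JSON text decodes to different values depending on the deserializer: Python keeps integers exact, a parser that treats every number as an IEEE 754 double (as JavaScript’s `JSON.parse` does) cannot represent 2^53 + 1, and `Decimal` keeps the literal digits. The field names are hypothetical.

```python
import json
from decimal import Decimal

# 2**53 + 1: the smallest positive integer an IEEE 754 double cannot represent exactly.
RAW = '{"parcel_id": 9007199254740993, "mass_kg": 9007199254740993.0}'

as_python = json.loads(RAW)                       # ints stay exact, floats become doubles
as_doubles = json.loads(RAW, parse_int=float)     # mimic a parser that makes every number a double
as_decimal = json.loads(RAW, parse_float=Decimal) # keep the literal digits of floats

for name, decoded in (("python", as_python), ("doubles", as_doubles), ("decimal", as_decimal)):
    print(f"{name:8} parcel_id={decoded['parcel_id']!r} mass_kg={decoded['mass_kg']!r}")
```

Three consumers reading the same bytes now disagree about the data, and no error is raised anywhere.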

Then we get to the next options: Protocol Buffers (protobuf) from Google, and Avro from the Apache Software Foundation. Protocol Buffers, now best known as the encoding behind gRPC, are the classic schema-driven approach: we’ll generate some code for you and it’ll just work. And they do. They’ve become very popular because gRPC is incredibly useful. However, they’re not without cost. I worked at a place where we had very high-speed messages, all encoded in protobuf, that needed to be decoded within single-digit milliseconds, in batches that could contain various different schemas. What you find quite rapidly there is that the underlying protobuf implementation, where it does the allocations to lay objects out in the new format, along with that thunk/unthunk step, is quite problematic and causes massive heap fragmentation, because it’s just not designed to go at that speed. gRPC is great, but it’s much more, do a thing, go away, done. Occasionally it’ll stream a few of exactly the same thing in a row, and that’s OK, so the arena allocators hold up.

As you go faster and stress the system a bit, you rapidly find it doing allocations per item. I don’t just mean against a pool; I mean in an arena of its own. It doesn’t even get to the jemalloc-style behavior, or snmalloc if you’re being clever, where the allocator says, I’ve already got a pool for this, don’t worry. That just won’t happen, because it’s a custom arena, so it will simply thrash your arena. Apache Avro is a similar kind of thing, but from the Hadoop era and more focused on schema evolution. Again, it’s very similar in operation, much smaller in actual data over the wire, and a bit slower on encode and decode. I’m quite a fan of this format, because it understands precision really well and has amazing interoperability with most of the Kafka ecosystem. Yes, I work heavily with Kafka. Where it slips up a bit is that people have implemented things on top of it, so there are a few different flavors of Avro flying around, which can cause issues. Particularly with Kafka, schema registries add some magic bytes to the front of messages, which will make your life hard.
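The “magic bytes” are concrete in the widely used Confluent Schema Registry wire format: one magic byte (0), then a 4-byte big-endian schema ID, then the serialized Avro body. This sketch frames and unframes that header only; the payload bytes are placeholders, and actually decoding them requires fetching the writer’s schema by ID. Other registries or Avro flavors may frame messages differently.

```python
import struct
from typing import Tuple

MAGIC_BYTE = 0
HEADER = struct.Struct(">BI")  # 1-byte magic, 4-byte big-endian schema ID (5 bytes, no padding)


def frame(schema_id: int, avro_payload: bytes) -> bytes:
    return HEADER.pack(MAGIC_BYTE, schema_id) + avro_payload


def unframe(message: bytes) -> Tuple[int, bytes]:
    if len(message) < HEADER.size:
        raise ValueError("message shorter than the registry header")
    magic, schema_id = HEADER.unpack_from(message)
    if magic != MAGIC_BYTE:
        # Typically plain Avro (or another framing) arriving where registry framing was expected.
        raise ValueError(f"unexpected magic byte {magic}; not registry-framed")
    return schema_id, message[HEADER.size:]


print(unframe(frame(42, b"\x06abc")))
```

A consumer expecting raw Avro will misread the first five bytes of a framed message as data, and a registry-aware consumer will reject raw Avro; both failures look like corrupt messages.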

On the other side, we have binary formats that are friendlier still. FlatBuffers is a logical evolution of protocol buffers, and I strongly recommend looking at it if you’re a fan of protocol buffers. FlatBuffers is designed so that data can be accessed directly in its serialized form, without a separate unpacking step into end-user objects. It was built from the idea of making protocol buffers work for games, and games have much tighter bandwidth and latency requirements than most SaaS software. Then there’s MessagePack, which basically says, we want JSON, but we want it binary. It’s beautiful: schemaless, still really flexible, and often a smaller wire packet than protobuf. We keep that flexibility, and because it’s binary, values keep their exact bit patterns, so you maintain the precision of an object at the other end. However, once again, without a schema you still need something else to provide formal verification, so you’re making a big tradeoff: I might still need a JSON schema to go with my MessagePack. I fall heavily into the use-Avro-and/or-MessagePack camp. I strongly recommend against protobufs most of the time, and suggest FlatBuffers as the better version of them. Other systems like Cap’n Proto are great. They work. I have no strong opinions on them, because I’ve never had to implement them.
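To show what “JSON, but binary” means at the byte level, here is a deliberately partial MessagePack encoder covering nil, booleans, small and 64-bit integers, float 64, short strings, and small maps, following the type codes in the MessagePack specification. It is a teaching sketch, not a replacement for a real library; floats are written as their exact 8-byte IEEE 754 representation, so precision survives the round trip.

```python
import struct
from typing import Any


def pack(value: Any) -> bytes:
    """Encode a small subset of MessagePack; not always the most compact encoding."""
    if value is None:
        return b"\xc0"                              # nil
    if value is True:
        return b"\xc3"                              # true (checked before int: bool is an int)
    if value is False:
        return b"\xc2"                              # false
    if isinstance(value, int):
        if 0 <= value <= 0x7F:
            return bytes([value])                   # positive fixint
        return b"\xd3" + struct.pack(">q", value)   # int 64
    if isinstance(value, float):
        return b"\xcb" + struct.pack(">d", value)   # float 64: exact IEEE 754 bits
    if isinstance(value, str):
        data = value.encode("utf-8")
        if len(data) < 32:
            return bytes([0xA0 | len(data)]) + data  # fixstr
        if len(data) < 256:
            return b"\xd9" + bytes([len(data)]) + data  # str 8
    if isinstance(value, dict) and len(value) < 16:
        out = bytes([0x80 | len(value)])            # fixmap: key/value pairs follow
        for key, item in value.items():
            out += pack(key) + pack(item)
        return out
    raise TypeError(f"value outside this sketch's subset: {value!r}")


print(pack({"id": 7, "mass_kg": 0.1}).hex())
```

The float comes back bit-for-bit identical on the other side, but nothing in these bytes says what `mass_kg` is supposed to mean, which is the schema gap described above.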

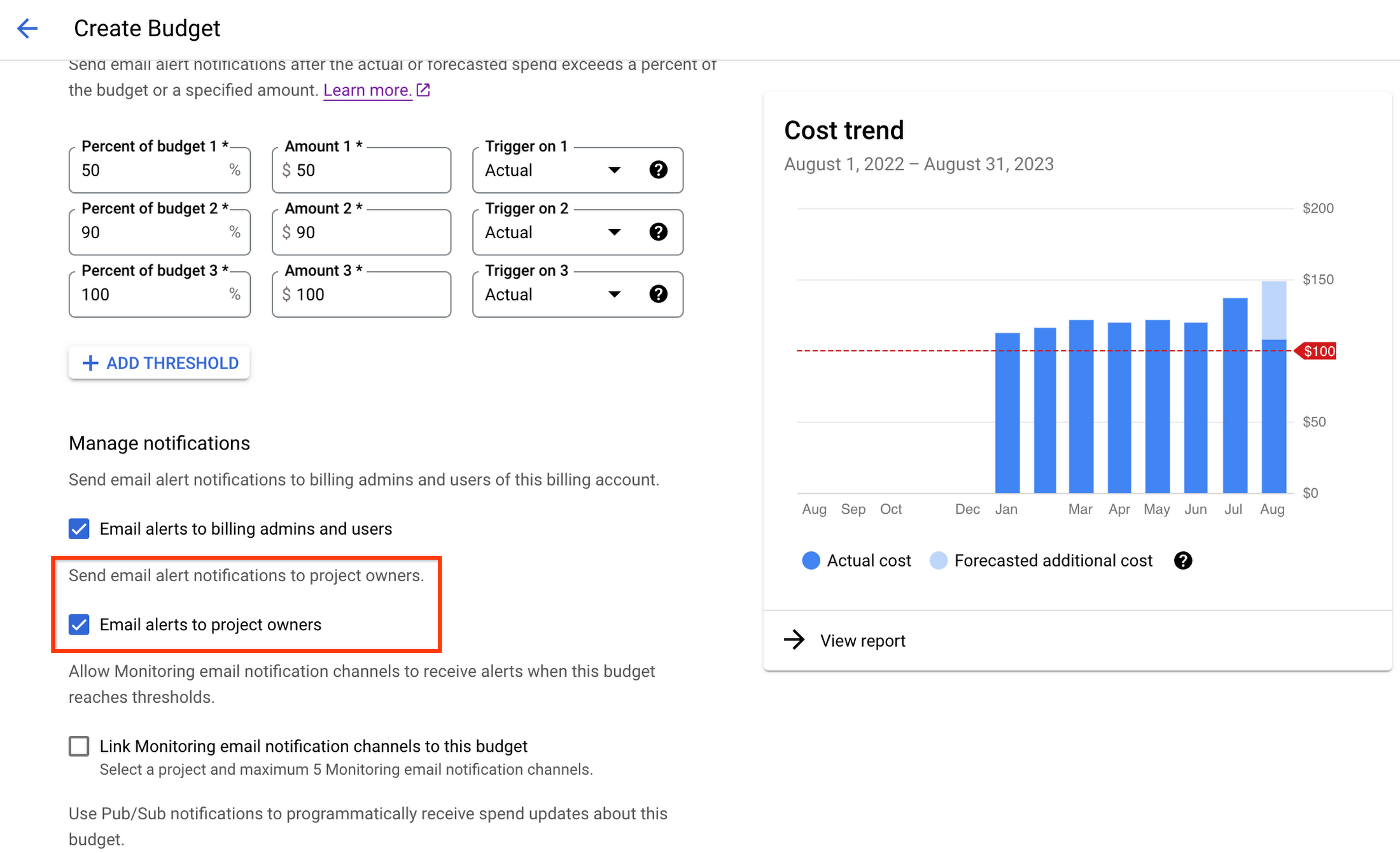

Then, when we start dealing with this stuff, there are actually answers. In about the last three years, the Linux Foundation has onboarded a project called AsyncAPI, which takes ideas from REST specification systems like OpenAPI and RAML and uses them to specify asynchronous APIs: things like MQTT, WebSockets, and Kafka. These are systems that don’t just do fire-and-forget; instead, they often open streams over longer-lived connections. The specification is quite detailed, because it describes the broker as well as the endpoints, so you actually know more about what’s going on. It also, of course, has JSON Schema attached, so you can specify your payloads, and because it’s JSON Schema, you can translate it fairly easily into other formats with the right extensions. Tools like SpecMesh are starting to appear now, which make these specs really useful for consumers: you spec out your system as a data catalog and say, “This is my data catalog. This is the spec for it. This is how I access it.” Other tools, like LinkedIn’s data catalog tooling, don’t go quite this far. Aiven’s own tool, Klaw, which does governance on Kafka, used alongside things like Karapace, also doesn’t go quite as far as SpecMesh does. They’re all heading towards the same thing, though: the idea that we need specifications that cover all kinds of outcomes. It’s really fun to see this evolve in front of me. I first ran into it recently at a meetup and thought, this is insane, why has no one done this before? Which is the best thing to think, because it means you don’t have to go and build it. These are, of course, all fully open source.

Should APIs Be Services on Their Own?

The real question this comes round to is, should APIs be their own services? We’re in this distributed world with many different devices, all connected up, so why aren’t APIs things in their own right? This keeps coming round, because if you think about it, I want to ask you: are we repeating ourselves? We’ve now got these things that need to talk, we need to specify how they talk, and we need to define what they do at those edges. Look at the word itself: API stands for application programming interface. If we ask ourselves about repetition, we look at this and think, if an API is just an interface, then microservices are objects, and we’ve done this before. That’s wonderful, because it means we can borrow stuff. Think about the SOLID principles of object-oriented design. This whole idea hinges on the observation that we’ve basically recreated object-oriented design, with everything from observer patterns in streaming microservices to SOLID design principles in API design itself. The key thing to do, though, is to look at SOLID and ask, how does this map? Really quite closely. We’ve got single responsibility: this API does one thing. It might be, I expose my other APIs to the world. It might be, I manage an OpenSearch cluster. It might be, I handle payroll.

Then, the open/closed principle is really the idea of versioning, which is a huge thing in APIs. You must be open to adding new versions and new features, but you can never deprecate things that others actually rely on; hence open/closed. We’re going to ignore the substitution principle for the important reason that it doesn’t hold here. This is one of the few areas of SOLID I’m not a big fan of, because although these are objects, the concept of inheritance doesn’t exist. You can inherit bits and pieces from other APIs, like authentication, but your API is specific to its role. This is the only part that doesn’t hold. Next, interface segregation maps to the idea of the external interface: your API is the external interface to the system itself, so you expose only what’s supposed to be exposed rather than what all the internals do. Dependency inversion is really about making the specification a first-class citizen in your system: you have a specification and you live with it. If you don’t, you end up with tightly coupled services where the API is tightly coupled to its internals, and there’s no longer any option to separate them. This is what interfaces are for. You use interfaces to decouple the accessor from the actual implementation behind the scenes, by making the dependency point to the interface rather than to the implementation.
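The dependency-inversion and versioning points map directly onto a structural interface in Python. In this hypothetical payroll sketch, callers depend only on the `PayrollApi` protocol; v2 adds a capability alongside v1 rather than changing or removing what v1 offered, and no inheritance between the versions is needed. All names and figures are illustrative.

```python
from typing import Dict, Protocol


class PayrollApi(Protocol):
    """The published contract: callers depend on this, not on any concrete backend."""

    def net_pay(self, employee_id: str) -> int: ...


class PayrollV1:
    def net_pay(self, employee_id: str) -> int:
        return 1000  # hypothetical figure, in minor currency units


class PayrollV2:
    # Open for extension: v2 keeps the v1 contract and adds a new operation beside it.
    def net_pay(self, employee_id: str) -> int:
        breakdown = self.net_pay_breakdown(employee_id)
        return breakdown["gross"] - breakdown["tax"]

    def net_pay_breakdown(self, employee_id: str) -> Dict[str, int]:
        return {"gross": 1200, "tax": 200}


# Closed for modification: v1 stays published while clients still rely on it.
VERSIONS: Dict[str, PayrollApi] = {"v1": PayrollV1(), "v2": PayrollV2()}


def monthly_report(api: PayrollApi, employee_id: str) -> str:
    # Depends only on the interface, so either version can be plugged in.
    return f"{employee_id}: {api.net_pay(employee_id)}"


for version, api in VERSIONS.items():
    print(version, monthly_report(api, "e-1"))
```

Existing callers of `net_pay` see identical results from both versions, while new callers can opt into the breakdown.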

SAFE API Design

If you reword all these things a little and ask what else we’ve got, you arrive at what I call the philosophy of SAFE API design: Single responsibility, API versioning, First-class specifications, and an External interface. The ordering isn’t quite perfect, but the word looks good, as with the best acronyms, so this is where I’m landing. In a distributed system this is even more important, because as you build out various blocks, it doesn’t really matter how many you add, as long as each one only does one thing. You should never really have two different APIs for payments unless they’re substantially different. You have to version your APIs. You have to have first-class specifications if you want to build at scale without things exploding on you. And you should design an external interface so your API is usable. When we add more layers, like observer patterns and everything else we’ve learned from software design, and treat API blocks as if they were just objects in your normal code, you start to see many more ways to extend this with many more letters. For the sake of a nice picture, I’m leaving it as-is. Having looked at this and thought about repeating ourselves, the next question you’ll ask is whether there’s more. Let’s throw one more in, just for fun: if APIs are interfaces and microservices are objects, I posit that AWS Lambda and all the other function services are monads.
