Deno introduced Queues to support offloading parts of an application or scheduling future work, plus AI plays by Cloudflare and MongoDB.

Sep 30th, 2023 4:00am by

Photo by Cátia Matos via Pexels.

JavaScript runtime Deno launched a new tool this week called Deno Queues to support offloading parts of an application or scheduling future work to run asynchronously.

Queues is designed to guarantee at-least-once delivery, a Deno post stated. It’s built on Deno KV, a global database that’s currently in open beta. Queues leverages two new APIs that require zero configuration and no infrastructure to maintain. The APIs are:

- .enqueue(): Pushes new messages into the queue for delivery immediately or in the future; and

- .listenQueue(): A handler used for processing new messages from the queue.

Queues uses SQLite when running locally but FoundationDB when running on Deno Deploy.
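As a rough illustration of how the two calls fit together, here is a minimal TypeScript sketch. The `QueueLike` interface, the `ReminderMessage` type and the helper names are assumptions made for this example, not part of Deno's API. On Deno, the object returned by `await Deno.openKv()` is expected to satisfy `QueueLike`, and the `delay` option is given in milliseconds.

```typescript
// Minimal shape of the two queue methods described above (an assumption for
// this sketch). On Deno, `await Deno.openKv()` is expected to satisfy it.
interface QueueLike {
  enqueue(value: unknown, options?: { delay?: number }): Promise<unknown>;
  listenQueue(handler: (value: unknown) => Promise<void> | void): Promise<void>;
}

// Hypothetical message type used only in this example.
interface ReminderMessage {
  kind: "reminder";
  to: string;
  text: string;
}

// Milliseconds from `now` until `when`, clamped so past dates deliver immediately.
function delayUntil(when: Date, now: Date = new Date()): number {
  return Math.max(0, when.getTime() - now.getTime());
}

// Push a reminder onto the queue for delivery at `when`.
function scheduleReminder(
  kv: QueueLike,
  msg: ReminderMessage,
  when: Date,
  now: Date = new Date(),
): Promise<unknown> {
  return kv.enqueue(msg, { delay: delayUntil(when, now) });
}

// Queue payloads arrive untyped, so check the shape before acting on it.
function isReminder(value: unknown): value is ReminderMessage {
  if (typeof value !== "object" || value === null) return false;
  const v = value as Record<string, unknown>;
  return v["kind"] === "reminder" &&
    typeof v["to"] === "string" &&
    typeof v["text"] === "string";
}

// Register a handler that forwards valid reminders to `send` and ignores the rest.
function startReminderWorker(
  kv: QueueLike,
  send: (msg: ReminderMessage) => void,
): Promise<void> {
  return kv.listenQueue((value) => {
    if (isReminder(value)) send(value);
  });
}
```

In a Deno program, the wiring would look something like `const kv = await Deno.openKv(); startReminderWorker(kv, sendEmail);`, where `sendEmail` is a hypothetical function supplied by the application.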

“You can also combine Queues with KV atomic transactions primitives, which can unlock powerful workflows,” the post stated. “You can also update Deno KV state from your listenQueue handler.”
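One practical consequence of at-least-once delivery is that a handler may receive the same message more than once, so handlers are usually written to be idempotent. The sketch below shows the idea with an in-memory `Set`; the `dedupe` helper and the `id` field on messages are assumptions for illustration. In a real deployment the "seen" marker would need to be durable, for example a Deno KV key written as part of an atomic transaction, which is presumably the kind of workflow the post has in mind.

```typescript
// Wrap a handler so that each message id triggers its side effects at most once.
// Returns true if the message was processed, false if it was a duplicate.
function dedupe<T extends { id: string }>(
  handler: (msg: T) => void,
  seen: Set<string> = new Set<string>(),
): (msg: T) => boolean {
  return (msg: T): boolean => {
    // A redelivered message is acknowledged without repeating the work.
    if (seen.has(msg.id)) return false;
    handler(msg);
    // Mark the id only after the handler succeeded, so a failed attempt
    // is still processed when the queue retries it.
    seen.add(msg.id);
    return true;
  };
}
```

Passed to `.listenQueue()`, a wrapped handler like this would let a webhook or email job tolerate retries without sending duplicates, as long as the set of seen ids survives restarts.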

Queues can be useful when scaling applications because it allows servers to offload async processes and schedule future work. The post discusses three use case examples:

- Scheduled email notifications

- Reliable webhook processing

- Slack reminder bot

Cloudflare Launches Three New AI Solutions

On Wednesday, content delivery network Cloudflare unveiled three new AI products, including Workers AI, an AI inference-as-a-service platform that will allow developers to run well-known AI models on Cloudflare’s global network of GPUs.

AI inference is the process of using a trained model to make predictions on new data. AI inference has a wide range of applications, including natural language processing, image recognition and fraud detection.

Workers AI works seamlessly with Workers + Pages but is platform-agnostic. It’s available via a REST API.
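To give a sense of what calling the service over REST might look like, here is a hedged TypeScript sketch that only builds the request. The URL pattern, the bearer-token header and the `{ prompt }` body reflect our understanding of Cloudflare's public API at the time of writing and should be checked against the current documentation; `HttpRequestSpec` and `buildWorkersAiRequest` are hypothetical names for this example.

```typescript
// Plain description of an HTTP request, kept free of runtime-specific types.
interface HttpRequestSpec {
  url: string;
  method: "POST";
  headers: Record<string, string>;
  body: string;
}

// Build (but do not send) a text-generation request for Workers AI.
// Assumption: models are addressed as /accounts/{account_id}/ai/run/{model}.
function buildWorkersAiRequest(
  accountId: string,
  apiToken: string,
  model: string,
  prompt: string,
): HttpRequestSpec {
  const base = "https://api.cloudflare.com/client/v4";
  return {
    // Model names such as "@cf/meta/llama-2-7b-chat-int8" contain slashes,
    // so only the account id is URL-encoded.
    url: `${base}/accounts/${encodeURIComponent(accountId)}/ai/run/${model}`,
    method: "POST",
    headers: {
      "Authorization": `Bearer ${apiToken}`,
      "Content-Type": "application/json",
    },
    body: JSON.stringify({ prompt }),
  };
}
```

The resulting object can be handed to any HTTP client, for example `fetch(spec.url, spec)` in a runtime that provides `fetch`.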

“Through significant partnerships, Cloudflare will now provide access to GPUs running on Cloudflare’s hyper-distributed edge network to ensure AI inference can happen close to users for a low latency end-user experience,” the company stated in a press release. “When combined with our Data Localization Suite to help control where data is inspected, Workers AI will also help customers anticipate potential compliance and regulatory requirements that are likely to arise as governments create policies around AI use.”

It includes a model catalog to help developers jump-start their AI app with use cases such as LLM, speech-to-text, image classification and sentiment analysis.

The company also launched:

- Vectorize, a vector database that allows developers to build full-stack AI applications on Cloudflare; and

- AI Gateway, which provides observability features to understand AI traffic and help developers manage costs with caching and rate limiting.

MongoDB Rolls out AI to Improve Developer Experience

MongoDB is leveraging generative AI to offer new capabilities it says will improve developer productivity and accelerate application modernization.

“By automating repetitive tasks, AI-powered tools and features can help developers save significant time and effort and deliver higher-quality applications faster,” Sahir Azam, chief product officer at MongoDB, said in a prepared statement.

MongoDB announced new capabilities and data streaming integration for its Atlas Vector Search that promise to make it faster and easier for developers to build their own generative AI applications. The search simplifies aggregating and filtering data and improves semantic information retrieval. It also purports to reduce hallucinations in AI-powered applications.

The company said that new performance improvements can reduce the time to build indexes by up to 85%, which would shorten application development time. MongoDB Atlas Vector Search is integrated with fully managed data streams from Confluent Cloud to make it easier to use real-time data from a variety of sources to drive AI applications.
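For readers wondering what a filtered vector query looks like in practice, the following TypeScript sketch builds an aggregation pipeline around the `$vectorSearch` stage. The stage fields shown (`index`, `path`, `queryVector`, `numCandidates`, `limit`, `filter`) follow our reading of the Atlas documentation; the function name, option names and the check that `numCandidates` is at least `limit` are assumptions made for this example.

```typescript
// Options for the sketch; index and field names are supplied by the caller.
interface VectorSearchOptions {
  index: string;          // name of the Atlas Vector Search index
  path: string;           // document field that stores the embedding
  limit: number;          // number of results to return
  numCandidates: number;  // nearest-neighbor candidates to consider
  filter?: Record<string, unknown>; // optional pre-filter on indexed fields
}

interface VectorSearchStage {
  $vectorSearch: VectorSearchOptions & { queryVector: number[] };
}

interface ProjectStage {
  $project: Record<string, unknown>;
}

// Build a two-stage pipeline: vector search, then expose the similarity score.
function buildVectorSearchPipeline(
  queryVector: number[],
  opts: VectorSearchOptions,
): [VectorSearchStage, ProjectStage] {
  if (opts.numCandidates < opts.limit) {
    throw new Error("numCandidates should be at least as large as limit");
  }
  return [
    {
      $vectorSearch: {
        index: opts.index,
        path: opts.path,
        queryVector,
        numCandidates: opts.numCandidates,
        limit: opts.limit,
        // Include the filter only when the caller provided one.
        ...(opts.filter ? { filter: opts.filter } : {}),
      },
    },
    // Drop the raw embedding and attach the score as metadata.
    { $project: { [opts.path]: 0, score: { $meta: "vectorSearchScore" } } },
  ];
}
```

A pipeline built this way would be passed to a collection's `aggregate()` call in the MongoDB driver, with `queryVector` produced by whichever embedding model the application uses.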

As part of the Connect with Confluent partner program, developers can also use Confluent Cloud data streams within MongoDB Atlas Vector Search to provide generative AI applications with accurate, real-time information that reflects current conditions from a variety of sources across the business. Configured with a fully managed connector for MongoDB Atlas, developers can make applications more responsive to changing conditions and provide end-user results with greater accuracy.

Four MongoDB products are also offering new AI-powered features:

- MongoDB Compass will now generate queries and aggregations from natural language to speed and simplify building data-driven applications;

- MongoDB Atlas Charts can now create data visualizations from natural language to speed up dashboard creation and business intelligence;

- MongoDB Documentation has incorporated a new AI chatbot to provide answers to technical questions and help troubleshoot applications; and, finally,

- MongoDB Relational Migrator can convert SQL to MongoDB Query API syntax by using AI to help automate migrations from relational databases.

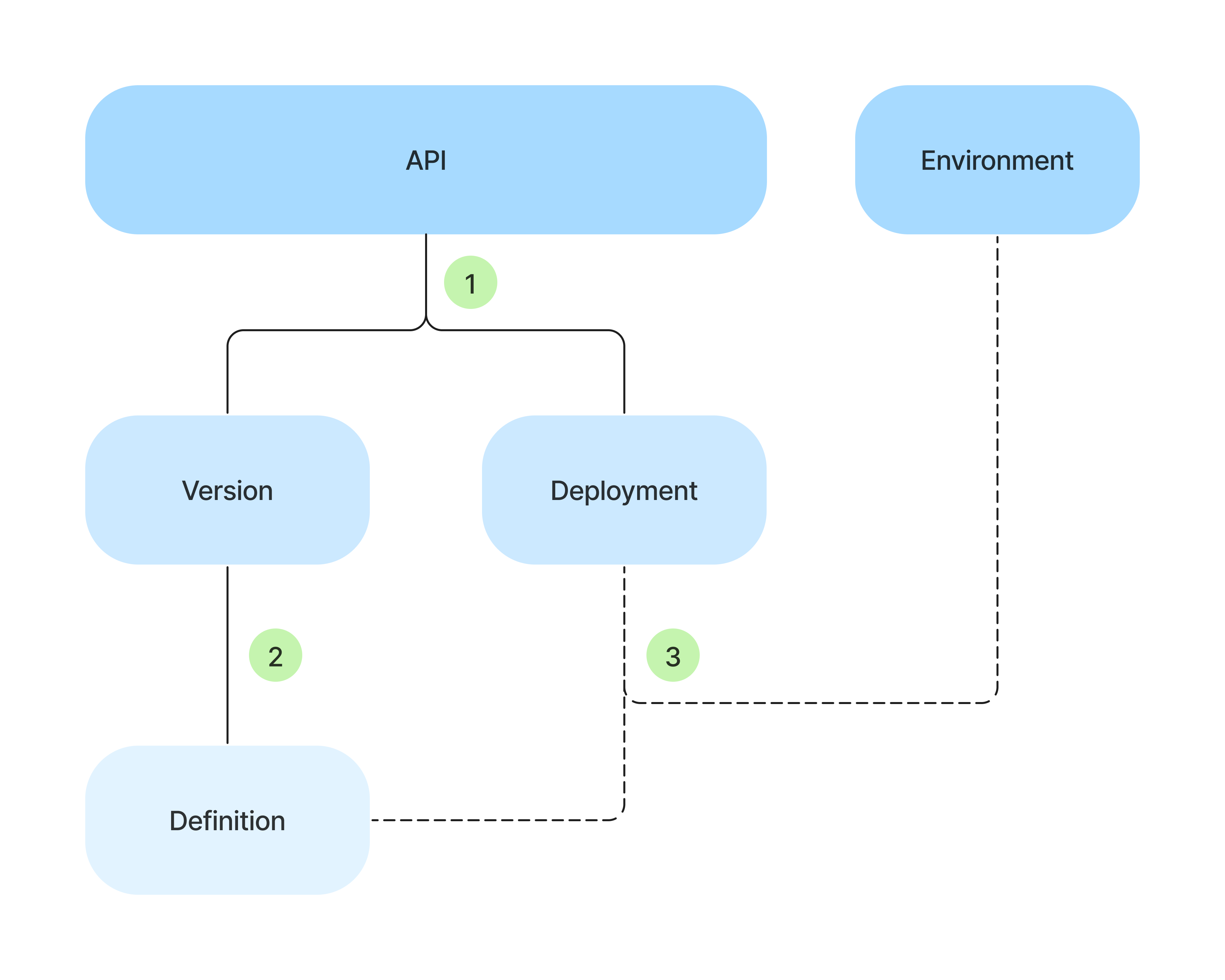

In other news, MongoDB officially launched its command-line interface built specifically for MongoDB Atlas called, descriptively enough, Atlas CLI. It allows developers to interact with their Atlas database deployments and Atlas Search and Vector Search from the terminal with intuitive commands. The tool can be used to set up, connect to and automate common management tasks.

“Developers can now use the Atlas CLI to connect to local development environments to develop, prototype, and test with Atlas, and then deploy their locally built applications to the cloud quickly and efficiently,” the press release explained. “This eliminates the need to set up a cloud environment to quickly prototype and test applications on laptops or other self-managed hardware.”


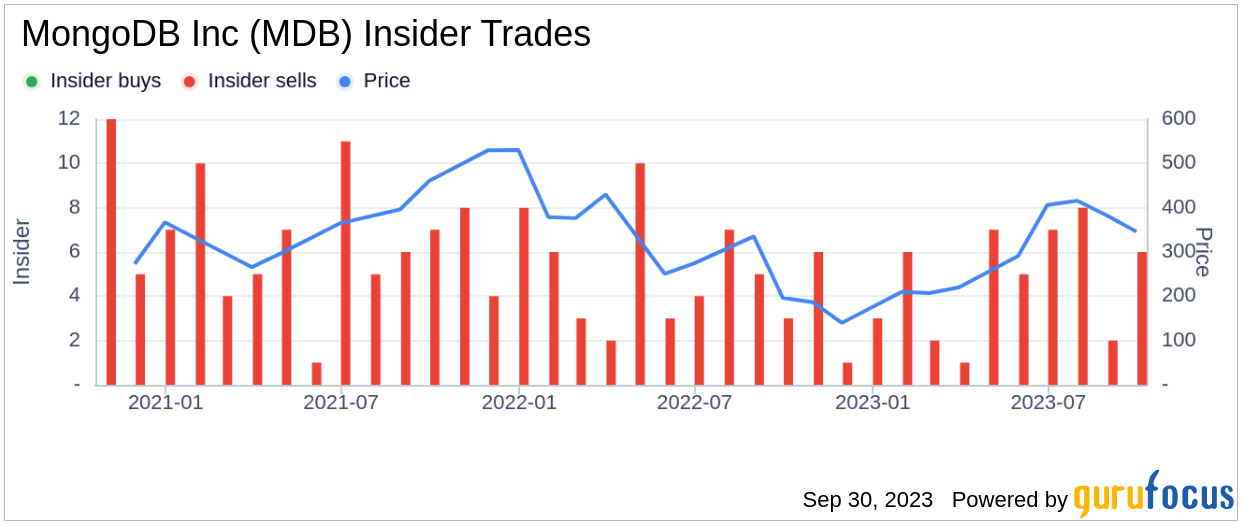

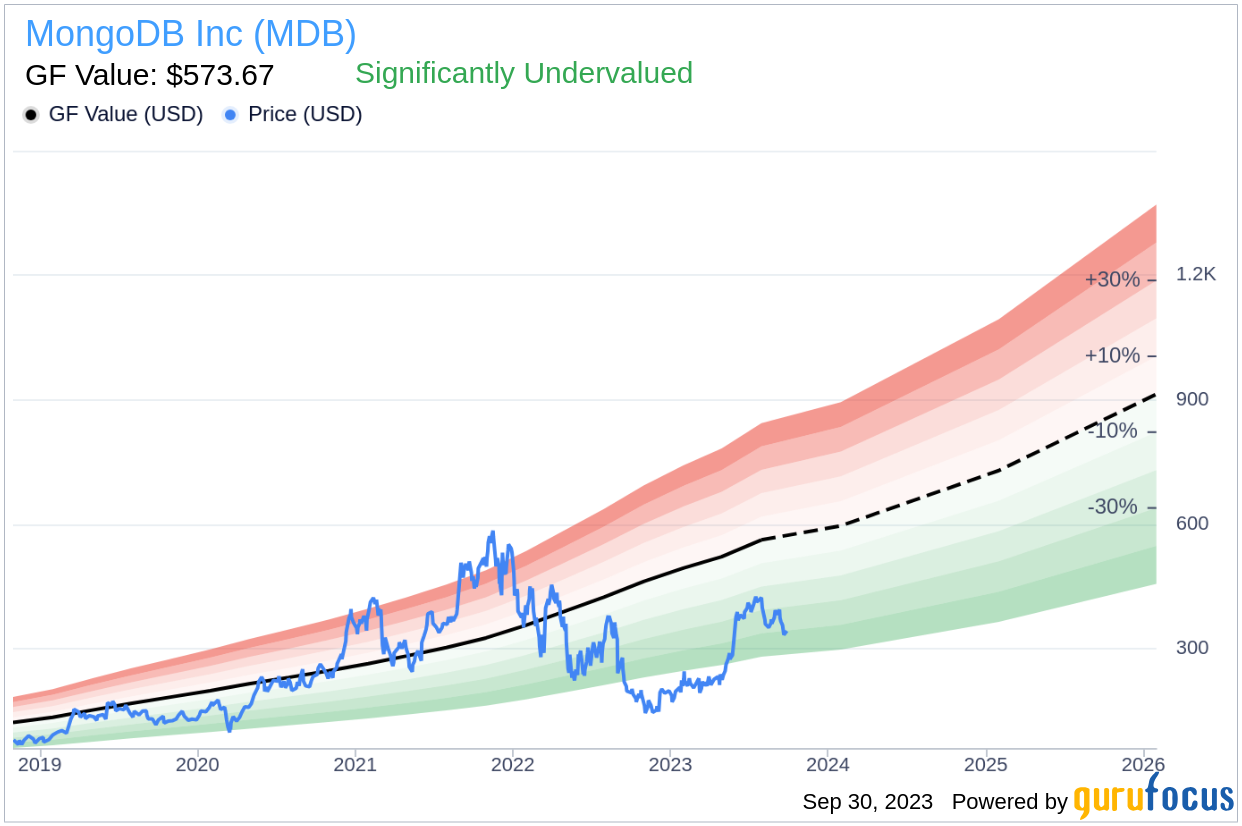
