Month: January 2024

MMS • Anthony Alford

Google Research recently published their work on VideoPoet, a large language model (LLM) that can generate video. VideoPoet was trained on 2 trillion tokens of text, audio, image, and video data, and in evaluations by human judges its output was preferred over that of other models.

Unlike many image and video generation AI systems that use diffusion models, VideoPoet uses a Transformer architecture that is trained to handle multiple modalities. The model can handle multiple input and output modalities by using different tokenizers. After training, VideoPoet can perform a variety of zero-shot generative tasks, including text-to-video, image-to-video, video inpainting, and video style transfer. When evaluated on a variety of benchmarks, VideoPoet achieves “competitive” performance compared to state-of-the-art baselines. According to Google,

Through VideoPoet, we have demonstrated LLMs’ highly-competitive video generation quality across a wide variety of tasks, especially in producing interesting and high quality motions within videos. Our results suggest the promising potential of LLMs in the field of video generation. For future directions, our framework should be able to support “any-to-any” generation, e.g., extending to text-to-audio, audio-to-video, and video captioning should be possible, among many others.

Although OpenAI’s ground-breaking DALL-E model was an early example of using Transformers or LLMs to generate images from text prompts, diffusion models such as Imagen and Stable Diffusion soon became the standard architecture for generating images. More recently, researchers have trained diffusion models to generate short videos; for example, Meta’s Emu and Stability AI’s Stable Video Diffusion, which InfoQ covered in 2023.

With VideoPoet, Google returns to the Transformer architecture, citing the advantage of re-using infrastructure and optimizations developed for LLMs. The architecture also supports multiple modalities and tasks, in contrast to diffusion models, which according to Google require “architectural changes and adapter modules” to perform different tasks.

The key to VideoPoet’s support for multiple modalities is a set of tokenizers. The Google team used a video tokenizer called MAGVIT-v2 and an audio tokenizer called SoundStream; for text they used T5’s pre-trained text embeddings. From there, the model uses a decoder-only autoregressive Transformer model to generate a sequence of tokens, which can then be converted into audio and video streams by the tokenizers.

VideoPoet was trained to perform eight different tasks: unconditioned video generation, text-to-video, video prediction, image-to-video, video inpainting, video stylization, audio-to-video, and video-to-audio. The model was trained on 2 trillion tokens, from a mix of 1 billion image-text pairs and 270 million videos.

The research team also discovered that the model exhibited several emergent capabilities when several operations are chained together. For example, VideoPoet can use image-to-video to animate a single image, then apply stylization to add visual effects. It can also generate long-form video, maintain consistent 3D structure, and apply camera motion from text prompts.

In a Hacker News discussion about VideoPoet, one user wrote:

The results look very impressive. The prompting however, is a bit weird – there’s suspiciously many samples with an “8k”-suffix, presumably to get more photorealistic results? I really don’t like that kind of stuff, when prompting becomes more like reciting sacred incantations instead of actual descriptions of what you want.

The VideoPoet demo site contains several examples of the model’s output, including a one-minute video short-story.

MMS • Sichen Zhao Shane Andrade

Transcript

Zhao: Have you watched a tennis game before, or have you played a tennis serve before? What makes a perfect tennis serve? It mainly needs two things, speed and accuracy. Speed is intuitive. You need to hit the ball hard enough so that the opponent has very little time to react to it. That gives you a better chance to score. How about accuracy? The ball needs to land in bound of the serving box, and not right in the middle of the serving box, but on the edge of the serving box or on the lines, so that even if the opponent player has a very fast reaction, they need to move one step or two steps aside in order to reach the ball. It gives you a better chance to score.

Why am I talking about tennis in a QCon architecture track? The reason is, in our mind, building a ClickHouse Cloud service from ClickHouse open source database is very similar to a perfect tennis serve. First, we need speed. In this competitive business world, we need to build something fast. Speed is essential. Second, we need accuracy as well. We need to build something that really meets the customer need and solves the customer pain points. That’s not only including features, but also scalability, security, reliability as well. That makes building ClickHouse Cloud even harder than making a perfect tennis serve. In tennis, you still get the lines in the field, so the player can aim at the right spot in order to get the accuracy. In the business world, those lines are blurry. There’s no lines, sometimes. That means we need to talk with many customers multiple times in order to listen to what they really want us to build.

Outline, and Background

In today’s talk, we will go through our journey of building ClickHouse Cloud from the ClickHouse open source database. I’ll first introduce what ClickHouse is: the database and some of its common use cases. Then, we’ll dive deep into the ClickHouse Cloud architecture. We’ll share some of the big decisions we made to build ClickHouse Cloud. Then we will look back on our timeline and key milestones and share what we did, when, and how. Finally, we’ll summarize with key takeaways and lessons learned in the retrospective.

My name is Sichen. I’m a Senior Software Engineer at ClickHouse.

Andrade: I’m Shane Andrade. I’m a Principal Software Engineer at ClickHouse.

What is ClickHouse?

Zhao: What is ClickHouse? ClickHouse is an OLAP database. It’s good for analytics use cases, good with aggregation queries, and good for supporting data visualizations. It’s good with mostly immutable data. It has been in development since 2009 and was open sourced in 2016. Since then, it has gained a lot of popularity. It’s a distributed OLAP database. It supports replication, sharding, multi-master, and cross-region, so it’s production ready as well. Finally, it’s fast. That’s the thing that is most important about ClickHouse. That is the thing which makes ClickHouse special. How fast is ClickHouse? Let’s take a look at this query. This is a simple SQL query, running against a ClickHouse Cloud instance, that aggregates all the new videos uploaded between August 2020 and August 2021. It aggregates by month and calculates the sum of the view counts for each month, and also the percentage of those videos that have subtitles. The bottom line shows how much data was processed by this query alone. It’s more than 1 billion rows processed and more than 11.75 gigabytes of data touched. Let’s have a guess how long this query will take. I’ll give you three options: A, 1 to 2 minutes; B, 1 to 60 seconds; and C, under 1 second. It’s under 1 second: 0.823 seconds to process this much data.
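The shape of that aggregation can be sketched in plain Python. This is only an illustration of the grouping logic, not the SQL from the slide; the field names and the sample rows are assumptions made up for the example.

```python
from collections import defaultdict

# Hypothetical sample rows: (upload_month, view_count, has_subtitles).
videos = [
    ("2020-08", 1200, True),
    ("2020-08", 300, False),
    ("2020-09", 50, True),
    ("2020-09", 150, True),
]

def monthly_summary(rows):
    """Group by month; return {month: (total_views, percent_with_subtitles)}."""
    totals = defaultdict(lambda: [0, 0, 0])  # views, subtitled videos, all videos
    for month, views, has_subtitles in rows:
        bucket = totals[month]
        bucket[0] += views
        bucket[1] += 1 if has_subtitles else 0
        bucket[2] += 1
    return {
        month: (views, 100.0 * subtitled / count)
        for month, (views, subtitled, count) in sorted(totals.items())
    }

summary = monthly_summary(videos)
```

Note that the function only ever touches three fields per video, which is the property the columnar layout discussed next takes advantage of.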

How is it possible? Why is ClickHouse so fast? In a traditional database, all the data is organized in rows. For queries like the one on the previous slide, even if the query is interested in only three columns (month, view, and has_Subtitles), the aggregation query needs to retrieve not only those three columns, but also all the other columns that are present in the same rows. That creates a lot of waste. In ClickHouse, on the other hand, we organize data by columns. For an aggregation query like the one on the previous slide, we only need to retrieve and compute over the three columns that the query is interested in. That makes ClickHouse fast. From the animation you can also tell.

The high-level columnar architecture makes ClickHouse fast, but there are a lot of other columnar databases out there in the market. What makes ClickHouse even faster than those columnar competitors? For that I need to mention bottom-up optimization. This is just one example I picked out of hundreds or even thousands of examples in the ClickHouse open source code. If you know C or C++, you must know memcpy. It basically copies a piece of memory from source to destination. Mostly it’s provided by the standard library itself, as shown on the right-hand side. In ClickHouse, after running memcpy in a loop many times for those big aggregation queries, we figured that there might be a better way of implementing memcpy; that’s on the left. On a high level, it adds a little bit of right padding to each memcpy, so that when we use this left-hand side version for small copies in a loop, we can load a little bit more data each time. That allows us to use SIMD (Single Instruction, Multiple Data) optimization at the CPU level: a little bit of parallelism each time we go through the loop.
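The padding idea can be sketched as follows. This is not the ClickHouse implementation, and Python does not issue SIMD instructions; the sketch only shows the control flow that padding makes possible, namely copying in whole fixed-size chunks with no byte-by-byte tail loop. The 16-byte chunk size and the function names are assumptions.

```python
CHUNK = 16  # assumed SIMD register width in bytes

def padded_buffer(size):
    """Allocate a buffer with CHUNK - 1 spare bytes of right padding."""
    return bytearray(size + CHUNK - 1)

def copy_small(dst, dst_off, src, src_off, n):
    """Copy n bytes in whole CHUNK-sized steps, overrunning into the padding.

    The last step may copy up to CHUNK - 1 extra bytes. That is safe only
    because both buffers were allocated with right padding.
    """
    copied = 0
    while copied < n:
        s = src_off + copied
        d = dst_off + copied
        dst[d:d + CHUNK] = src[s:s + CHUNK]
        copied += CHUNK

src = padded_buffer(20)
src[:20] = bytes(range(20))
dst = padded_buffer(20)
copy_small(dst, 0, src, 0, 20)
```

Copying 20 bytes here takes two 16-byte steps; the second step reads and writes 12 bytes of padding, which is the price paid for never handling a partial chunk.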

I just want to show this as one example out of hundreds or even thousands of bottom-up optimizations we did in ClickHouse. For special cases like this, we have our own implementations in place of the C++ standard library ones. We also have special implementations of hashing and compression, and sometimes optimizations at the compiler level or for specific hardware as well.

Accumulating all of these optimizations together, we’re able to achieve faster speed compared to the competitors. As a result, over the years of ClickHouse’s lifespan, we have attracted different use cases and different customers as well. Because ClickHouse is fast, real-time data processing naturally kicks in as a common use case. Also, because ClickHouse is able to process a large amount of data, is good with aggregation, and is good for supporting data visualization, BI is a good use case as well. Also, as you see, ClickHouse supports reads of billions of rows per second and inserts of millions of rows as well. For logging and metrics, with those large amounts of machine-generated data, ClickHouse performs well as a tool too. Also, recently, we added vector database functionality. Along with the aggregation and big data processing mechanisms we already supported, ML and data science use cases are starting to come to ClickHouse as well.

ClickHouse Cloud Architecture

Andrade: As you saw, we had this wildly successful open source database. We knew at this point we wanted to put it into the cloud and offer it as a service for people to use. That’s great, but we had to actually ask ourselves this question: how do we actually take something that’s in open source and put it into the cloud? Think about that for a second. Because the answer isn’t obvious and it wasn’t obvious to us. We actually had a lot of decisions to make. We didn’t start with any architectures or any designs, but we did have a lot of decisions, especially a lot of technical decisions. Within AWS, for example, there’s a variety of services that we’re able to use, and this is just a subset of them. For compute, we have things like Kubernetes, we have EC2. Even within EC2, we have different flavors of that: we have VMs, we have bare metal. On the more managed side of services, we have things like Lambda, Amazon ECS, and Fargate. For storage, there’s a number of options. We have things like Amazon S3 object storage, we also have AWS EBS volumes, and even some EC2 instances have SSD disks attached to them. For networking, there’s a variety of options as well. VPCs themselves can be configured in a variety of ways. There’s a variety of load balancers to choose from, including classic load balancers, ALBs and NLBs.

Knowing that we had to make all these decisions, how did we do so knowing that we were making the right decisions? To do that, we had to actually come up with some guiding principles. These guiding principles, you can think of them almost like the bumpers that you would find in a bowling lane, the ones that you would put in the gutters. These bumpers are going to push your ball down the lane further and keep it centered within the lane so it’s not going to veer off course and miss the target at the end of the lane, the pins. This is exactly how we treated our guiding principles. They were a way for us to navigate these decisions and let us reach our goal of being able to deliver this cloud product. What were these guiding principles? The first one is we knew that we wanted to offer our customers a serverless experience. This was very important to us from the beginning, because we knew that a lot of people coming into our cloud were not going to be people who were familiar with ClickHouse, and had probably never seen it before. We wanted to make it as easy as possible for them to get started. Ideally, as simple as pushing a button. In order to do this, we wanted to remove the barriers to entry to get started. No having to learn about all the different ClickHouse server configurations or figure out the right sizing for your cluster. We wanted to make this really simple.

The second guiding principle was performance. ClickHouse is known to be extremely fast, and we wanted to keep that same level of performance that our ClickHouse users were already used to and offer that in the cloud as well. The third guiding principle was around this idea of separation of compute and storage. By doing this, we knew that it would unlock a few things for us, that we would be able to do, such as being able to temporarily pause the compute while still maintaining the data that’s stored within the cluster. It would also allow us to scale these two resources independently of each other. We saw we had a variety of different use cases, and we had to be able to support all those different use cases on the cloud. Those different use cases are going to have different scaling properties, each for compute and storage. Having them separated lets us scale those independently. The fourth guiding principle was around tenant isolation and security. Being a SaaS company and having users trust us with their data and upload it to us, we knew that we had to keep that data safe and secure. We knew that we needed to prevent situations such as one cluster being able to access the data of another cluster. Our last guiding principle was around multi-cloud support. We knew that we wanted to be wherever our customers were, meaning that if they’re running workloads on AWS, we should have a presence there. If they’re running workloads on GCP, we should have a presence there as well. Same for Azure. In order to support multiple clouds, we knew that we needed to make architectural decisions that were going to be portable across these different clouds.

With these guiding principles in place, how exactly did we use them? One early decision we had to make was what kind of compute we wanted to run our cloud on. As you saw on one of the previous slides, there were a number of options for compute just within Amazon. Using these guiding principles, we can actually already discard a few of them. For example, the bare metal option just wasn’t going to work for us because it didn’t align with our serverless vision. Some of the managed services such as Lambda, ECS, and Fargate are specific to Amazon, so they’re not going to be multi-cloud. That really only left us with two viable options: we have Kubernetes, and we have virtual machines. As you can see, Kubernetes actually checks more of the boxes for us. It provides a better serverless experience. It’s basically an abstraction over the hardware. We’re dealing with pods instead of the actual hardware and servers. It also gives us better separation of compute and storage with built-in primitives like persistent volume claims. Lastly, it also provides better multi-cloud support, in that it’s much more portable across clouds, again with that hardware abstraction that it provides for us.

Now that we’re able to actually start making decisions, we can actually come up with an architecture. This is a very high-level overview of what our cloud looks like. At a high level, there’s two primary components, we have the control plane at the top and the data plane at the bottom. The control plane you can think of as essentially our customer facing piece of our architecture. It’s essentially what our users interact with when they log into our website, or if they do write any automation against our API that’s going to interact with the control plane. The control plane offers a number of services and features for our customers, including things like cluster and user management. It provides the authentication into the website. It also handles user communication, such as emails and push notifications. This is also where the billing takes place. On the other side of things, we have the data plane. This is essentially where the ClickHouse clusters themselves reside, as well as the data that belongs to those clusters. The data plane provides a number of sub-components that offer additional features for ClickHouse in our cloud, including things like autoscaling and metrics. This is also where our Kubernetes operator lives.

When users want to connect with their cluster, they do so through a load balancer that’s provided by the data plane. This allows them to connect directly to the data plane rather than going through the control plane, saving on a few network hops. When the control plane needs to communicate with the data plane, one such example would be when a user wants to provision a new cluster, the control plane has to reach out to the data plane, and it will talk to the data plane via the API that it exposes. It’s just a simple REST API. The data plane will start working on that provisioning process. Once that’s done, it’s going to communicate back to the control plane via an Event Bus and send it an event. The control plane can then consume that event and update its own internal state of the cluster once it’s completed, and also inform the user that their cluster is ready for use.
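The round trip between the two planes can be sketched with an in-memory queue standing in for the Event Bus and a plain function call standing in for the REST API. All names, the event shape, and the status strings are assumptions for illustration only.

```python
import queue

event_bus = queue.Queue()   # stand-in for the Event Bus
control_plane_state = {}    # cluster_id -> status, as seen by the control plane

def data_plane_provision(cluster_id):
    """Stand-in for the data plane REST endpoint: provision, then emit an event."""
    # Real provisioning work would happen here.
    event_bus.put({"type": "cluster_provisioned", "cluster_id": cluster_id})

def control_plane_request_cluster(cluster_id):
    """Control plane records the request and calls the data plane API."""
    control_plane_state[cluster_id] = "provisioning"
    data_plane_provision(cluster_id)

def control_plane_consume_events():
    """Control plane drains the bus and updates its own view of each cluster."""
    while not event_bus.empty():
        event = event_bus.get()
        if event["type"] == "cluster_provisioned":
            control_plane_state[event["cluster_id"]] = "ready"

control_plane_request_cluster("demo-cluster")
status_before = control_plane_state["demo-cluster"]
control_plane_consume_events()
status_after = control_plane_state["demo-cluster"]
```

The point of the split is that the request path is synchronous while completion is reported asynchronously, so the control plane never has to poll the data plane.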

Using that same example, I want to take you through what actually happens on the data plane when someone provisions a cluster. Once the control plane calls the API of the data plane, the API is going to drop a message into a queue. This queue is going to be consumed by our provisioning engine which resides on the data plane. The provisioning engine has a number of responsibilities during this process. The first thing it’s going to do is it’s going to start updating some cloud resources. In AWS, it’s going to start creating some IAM roles and update our Route 53 DNS entries. We use IAM here, going back to that tenant isolation principle, this is one of the ways we enforce that. These IAM roles are created specifically for this cluster, and it allows only this cluster to access the location in S3 where this cluster’s data will reside. We also have to update Route 53 because each cluster that gets created in our cloud gets a unique subdomain associated with it. In order to properly route things, of course, we have to make some DNS entries for that.
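A minimal sketch of the per-cluster resources described above, assuming a simple prefix-based access rule. It does not call any AWS API; the bucket name, DNS zone, role naming, and path layout are all hypothetical.

```python
def provision_cloud_resources(cluster_id, bucket="example-bucket", zone="example.com"):
    """Return the per-cluster resources the engine would create (names hypothetical)."""
    return {
        "role": {
            "name": f"role-{cluster_id}",
            "allowed_prefix": f"s3://{bucket}/{cluster_id}/",
        },
        "dns_name": f"{cluster_id}.{zone}",
    }

def role_can_access(role, path):
    """Tenant isolation check: a role may only touch paths under its own prefix."""
    return path.startswith(role["allowed_prefix"])

a = provision_cloud_resources("cluster-a")
b = provision_cloud_resources("cluster-b")
```

Here `role_can_access(a["role"], ...)` succeeds only for paths under `cluster-a/`, which mirrors the rule that only one cluster can reach the location holding its own data.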

The second thing provisioning engine will do is it will update some routing in Istio. Istio is a Kubernetes based service mesh that is built on top of Envoy. We use this to do all of our routing from our external requests coming from our users, using that unique subdomain that was generated as part of their cluster, and route things accordingly. It’s basically going to put some routing rules in there so Istio knows where to send requests for that subdomain to. Lastly, provisioning engine is going to then actually start provisioning resources in Kubernetes, specifically the compute resources. We do this through a pattern called the operator pattern in Kubernetes. The operator pattern in Kubernetes, essentially, lets us define and describe a ClickHouse cluster in a declarative manner using something like YAML. The operator knows how to interpret this YAML file and create the corresponding cluster that reflects that specification or configuration for that cluster. In this YAML, you’re going to have things that are specific to this cluster, such as the number of replicas, the size of each replica, so the amount of CPU and memory. Also, other things that are specific to ClickHouse like different ClickHouse server configurations, for example.
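The operator pattern can be sketched as a reconcile function that compares a declarative spec with what is currently running and returns the actions needed to close the gap. This is a simplified model, not the ClickHouse operator; the spec fields and action names are hypothetical, and a Python dataclass stands in for the YAML document.

```python
from dataclasses import dataclass, field

@dataclass
class ClusterSpec:
    """Declarative description of a cluster; field names are hypothetical."""
    name: str
    replicas: int
    cpu: int
    memory_gib: int
    server_settings: dict = field(default_factory=dict)

def reconcile(spec, running):
    """Return the actions that move `running` toward the desired spec.

    `running` maps replica name -> (cpu, memory_gib).
    """
    desired = {
        f"{spec.name}-{i}": (spec.cpu, spec.memory_gib)
        for i in range(spec.replicas)
    }
    actions = []
    for replica, size in desired.items():
        if replica not in running:
            actions.append(("create", replica))
        elif running[replica] != size:
            actions.append(("resize", replica))
    for replica in running:
        if replica not in desired:
            actions.append(("delete", replica))
    return actions

spec = ClusterSpec(name="demo", replicas=3, cpu=4, memory_gib=16)
actions = reconcile(spec, {"demo-0": (4, 16), "demo-1": (2, 8), "demo-3": (4, 16)})
```

Because the spec describes the end state rather than the steps, running the same reconcile again after the actions are applied yields an empty list.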

Now that we’re able to actually provision a cluster and it’s up and running, how does the user actually connect to it? They do so through the load balancer that I mentioned on the previous slide. The load balancer is shared per region: all the traffic for all the clusters in that region goes to the same load balancer. All it does is hand off the request to Istio, which was already updated with the routing rules when the cluster was provisioned, so Istio knows exactly where to send it. It’ll hit the underlying cluster in Kubernetes. Once that request hits ClickHouse, it’s probably going to have to start interacting with some data. Depending on whether you’re doing an insert or a select, it’s going to either write or read some data. To do that, it’s going to go out to S3. S3 is where we keep all of our clusters’ data. It’s our persistent and durable storage for all of our customers’ cluster data.

Going back to our guiding principles before, we had this idea of separation of compute and storage, and this is one such example of that. With open source ClickHouse, this wasn’t the case, we actually had local disks with open source ClickHouse. This is something we had to build specially for our cloud within ClickHouse to be able to support this separation, and be able to store this data on S3. That being said, this also introduced some network latency, because we’re no longer going to a local disk, we have to go out to S3 and fetch that data. We have two layers of caching on top of that. We have an EBS volume, and we also have an SSD. We only use instance types that have SSDs attached to them. The reason for that is because EBS volumes, you can think of them as essentially network attached storage devices. Because they are network attached, there is still a network latency involved there. They’re not as fast as a hardware attached SSD, so we’ve also introduced a second layer of caching the SSDs. With this setup here, we essentially get more or less the same performance as you would with a self-hosted ClickHouse in our cloud.

If you heard me mention that we have a shared load balancer, you might be wondering, how does that align with our guiding principle of having this tenant isolation, if we’re having shared infrastructure? We actually didn’t start with that, we actually started with something like this, where we had a dedicated load balancer per cluster. The provisioning engine instead of updating Istio, which wasn’t in place at the time, we actually provisioned a brand-new load balancer every time someone requested to provision a cluster, and each cluster had a load balancer. There were a few problems with this approach. The first problem is around the provisioning times. These load balancers on AWS could take anywhere up to 5 minutes sometimes, which wasn’t a great experience for our customers, because the cluster was completely unusable until that was provisioned. The second problem with this was around cost. Because we have a load balancer per cluster, this was essentially eating into our margins. The third problem was around potential limits we could run into with AWS, for example. I think by default AWS has a pretty conservative limit on the number of load balancers you can have per account, something like 50. As we were approaching beta and GA, we knew that we were going to be provisioning hundreds, if not thousands of these clusters. This just wasn’t going to be a scalable approach for us to having to constantly reach out to AWS support to increase our service limits for load balancers. That’s why we actually decided to bypass the tenant isolation principle, but this was a compromise or a tradeoff here to provide better user experience and also reduce some of the costs and stuff.

That being said, we still want to offer things like tenant isolation, so we did so using Cilium. Cilium is essentially a Kubernetes network plugin that provides things like network policies that you can use within Kubernetes. This is coupled with the logical isolation we already get from Kubernetes in the form of namespaces: all of a cluster’s resources within Kubernetes belong to that cluster’s own namespace. With the network policies on top of that, we can actually prevent cross-namespace traffic, so one cluster cannot call into the network of another cluster running in the same Kubernetes cluster. If you want to read more about how we use Cilium, there’s a link there at the bottom. There’s a white paper from the CNCF, that’s the Cloud Native Computing Foundation. You can read more about our use case and how we use Cilium.

We have this architecture that we’re able to provision clusters with. Now we need to start scaling those out. Because we’re offering the serverless experience, we don’t have any ways for customers to be able to tell us how much resources they need or how big of a cluster they need. We need to do this in some automated fashion. We do this with something called autoscaling. There are two types of autoscaling that we have. We have vertical and horizontal. On the vertical side, this refers to the size of the individual replicas, so the amount of CPU and memory that they each have. There are some technical complications with this. The first is that it can be disruptive. Because we’re using Kubernetes, when we have to resize a Kubernetes pod, we actually have to terminate the old pod. That means any workloads that are running on that pod that is the target of a vertical autoscaling operation, we either have to wait for that to finish, or we have to terminate it and kill it. Not a great experience for users if that’s maybe an important query that’s running. Also, not a great experience if there are important system processes such as backups running on that pod. Because we have automated backups in our ClickHouse Cloud, we didn’t want to be interrupting backups in emergency situations when customers might actually need them. We don’t want to interrupt their queries or their backups.

The second problem with vertical autoscaling is around cache loss. I showed how we have two layers of cache. We have the EBS volumes and the SSD disks. Because the SSD disks are actually attached to the underlying hardware, if a pod gets autoscaled, or if a cluster gets autoscaled, when the pod moves, there’s no guarantee from Kubernetes that that pod is going to be rescheduled on the same node, meaning that it may not have access to that same SSD cache that it had before. In that situation, the way we actually get around that is by the use of the EBS volumes. I mentioned that they are network attached. They can actually follow the pod when they get rescheduled, so if they end up on another node, we can just reattach that EBS volume and provide it a warm cache instead of a hot cache that the SSD might provide.
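The two cache layers and the effect of a reschedule can be sketched as a read-through lookup. The talk does not say how the caches are populated, so the promote-on-read behavior here is an assumption, as are the class and method names; dictionaries stand in for the SSD, the EBS volume, and S3.

```python
class TieredReader:
    """Read-through lookup: local SSD cache, then EBS cache, then object storage."""

    def __init__(self, object_store):
        self.ssd = {}                     # fastest tier, lost if the pod changes node
        self.ebs = {}                     # network-attached tier, can follow the pod
        self.object_store = object_store  # durable source of truth (S3 in the talk)
        self.hits = []                    # records which tier served each read

    def read(self, key):
        if key in self.ssd:
            self.hits.append("ssd")
            return self.ssd[key]
        if key in self.ebs:
            self.hits.append("ebs")
            value = self.ebs[key]
        else:
            self.hits.append("s3")
            value = self.object_store[key]
            self.ebs[key] = value
        self.ssd[key] = value             # promote into the fastest tier
        return value

    def reschedule_to_new_node(self):
        """Simulate a pod move: the SSD cache is gone, the EBS cache is reattached."""
        self.ssd = {}

reader = TieredReader({"part-1": b"rows"})
reader.read("part-1")            # cold: served from object storage
reader.read("part-1")            # hot: served from SSD
reader.reschedule_to_new_node()
reader.read("part-1")            # warm: served from EBS
```

After the simulated move, the read is served from the EBS tier rather than going back to object storage, which is the warm-versus-hot distinction made above.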

On the other side, we have horizontal autoscaling. This actually refers to the size of the cluster itself, so how many replicas it has. By default, our clusters come with three replicas. Horizontal autoscaling can either increase or decrease that number. There are some technical challenges around horizontal autoscaling. The first is around this idea of data integrity. This has to do with the way you can specify your write level in ClickHouse. When you’re inserting data into ClickHouse, you can specify what quorum level you want. You can ask for a full quorum, meaning that all replicas have to acknowledge that write before you consider it successful. At the other end of the spectrum, you can say, I just want to insert it, I don’t really care if anyone else acknowledges it, and that’s fine. What can happen in that no-quorum case is that the data is only going to reside on the replica that received the insert until it’s replicated in the background to the other nodes. Until that happens, if that replica is the target of a horizontal downscale and gets removed, that data would be lost. The second problem with horizontal autoscaling has to do with the way ClickHouse clusters work. Each replica has to be aware of every other replica, so that they can communicate properly. What can happen is, if you remove a replica without unregistering it, the other replicas will still try to talk to it. This is a problem for certain commands. For example, when you’re trying to create a table, all the other replicas have to be aware of that change. If the statement cannot reach one of the replicas, it will fail. If you don’t properly unregister the replica during the horizontal downscale, then you’re going to end up in that situation and your CREATE TABLE will not execute. With these challenges in place for horizontal autoscaling, we realized that these are actually pretty risky things. We decided to launch beta and GA with just the vertical autoscaling piece. We’re still working through some of the kinks, these ones specifically for horizontal autoscaling, but it should be out shortly.
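The two downscale hazards can be modeled in a few lines. This is a toy model and not how ClickHouse tracks replication or membership; the class, its fields, and the check that blocks removal are assumptions used to make the failure modes concrete.

```python
class Cluster:
    def __init__(self, replica_names):
        self.registered = set(replica_names)  # replicas every peer knows about
        # Number of inserted parts on each replica not yet copied to its peers.
        self.unreplicated = {name: 0 for name in replica_names}

    def can_remove(self, name):
        """A replica is safe to remove only once its data exists elsewhere."""
        return self.unreplicated[name] == 0

    def remove_replica(self, name):
        if not self.can_remove(name):
            raise RuntimeError(f"{name} still holds unreplicated data")
        self.registered.discard(name)  # unregister so peers stop contacting it
        del self.unreplicated[name]

    def create_table(self, reachable):
        """DDL succeeds only if every registered replica is reachable."""
        return self.registered <= set(reachable)

cluster = Cluster(["r1", "r2", "r3"])
cluster.unreplicated["r3"] = 2            # a no-quorum insert landed on r3
blocked = not cluster.can_remove("r3")    # removing r3 now would lose data
cluster.unreplicated["r3"] = 0            # background replication caught up
cluster.remove_replica("r3")
ddl_ok = cluster.create_table(["r1", "r2"])
```

If `r3` were taken away without the `remove_replica` step, `create_table(["r1", "r2"])` would return `False`, which corresponds to the failing CREATE TABLE described above.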

We did launch with vertical autoscaling, and I want to go into the details of that and show you how we solved the disruptive problem for vertical autoscaling. With vertical autoscaling, we want this to be done in an automated fashion. In order to automate this, we have to really understand what’s happening on the cluster. To do that, we have to publish some metrics to some central metric store. These usage metrics include things that are internal to ClickHouse, so metrics that are already generated by ClickHouse, we ship those to the metric store. We also ship operating system level metrics, so for example, the memory and CPU utilization. Some of the other signals that we publish are going to be things that are going to tell us, for example, how many active running queries are happening on that cluster, as well as number of active backups that are currently happening. Now that we’ve captured those metrics, we’re able to make smarter decisions about whether or not it’s safe to proceed with a vertical autoscaling operation. The second piece of vertical autoscaling is this scale controller. A controller in Kubernetes is essentially a control loop that watches for Kubernetes resources and is able to react to them. What is the scale controller watching for? It’s actually watching for these resources called recommendations coming from this recommender component. The recommender will wake up every so often and take a look at the metrics store and basically generate recommendations based off of the metrics coming out of those clusters, and send those to the scale controller. The scale controller at that point can make the decision whether or not to scale up or scale down based off the current state of the cluster and the incoming recommendation. This is how we’re actually able to provide vertical autoscaling.
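The recommender and scale controller split can be sketched as two small functions. The thresholds, the doubling and halving rule, and the choice to defer while queries or backups are running are assumptions; the talk only states that those signals feed the decision.

```python
from dataclasses import dataclass

@dataclass
class Metrics:
    cpu_utilization: float      # 0.0 to 1.0
    memory_utilization: float   # 0.0 to 1.0
    active_queries: int
    active_backups: int

def recommend(current_cpu, metrics, high=0.8, low=0.3):
    """Recommender: suggest a CPU count from utilization (thresholds assumed)."""
    peak = max(metrics.cpu_utilization, metrics.memory_utilization)
    if peak > high:
        return current_cpu * 2
    if peak < low and current_cpu > 1:
        return current_cpu // 2
    return current_cpu

def scale_decision(current_cpu, recommended_cpu, metrics):
    """Scale controller: act only when restarting the pod would not interrupt work."""
    if recommended_cpu == current_cpu:
        return "no-op"
    if metrics.active_queries > 0 or metrics.active_backups > 0:
        return "defer"
    return "scale-up" if recommended_cpu > current_cpu else "scale-down"

busy = Metrics(cpu_utilization=0.9, memory_utilization=0.5, active_queries=3, active_backups=0)
decision = scale_decision(4, recommend(4, busy), busy)
```

Keeping the recommendation separate from the decision means the safety checks live in one place, regardless of how the recommendation was computed.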

Now that we’re able to scale these individual clusters, how do we scale our cloud? One of the things that companies often run into is cloud provider limits and service quotas. How did we get around this, again, knowing that we were going to be provisioning hundreds, if not thousands of these clusters? Taking a look at our architecture again, we were able to identify a distinct line that could be drawn between two pieces of our infrastructure. We have a more static side and a more dynamic side. The top part in yellow is our static piece, meaning that it doesn’t really change based off of the number of clusters running in our cloud. The other piece is dynamic and does change and grow based off of the number of clusters we have running. Let’s give these the names management and cell, draw a more simplified version of this, and now think of these as individual AWS accounts, so we have a management account and a cell account. All the resources on the previous page were in their corresponding accounts. Once we start running up against limits on the cell account, because that’s the part that grows as the number of clusters grows, we can just add another cell account and register that with the management account. We can continue to do that without running into individual limits. This is how we were able to scale our cloud. This is called a cellular architecture.
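As a rough illustration of the cell idea, the sketch below models a management account that places clusters into cell accounts and registers a new cell once the existing ones are full. The class names, the `cell-N` naming scheme, and the single `cluster_limit` number standing in for real cloud provider quotas are all assumptions for the example; no actual AWS API is involved.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class CellAccount:
    name: str
    cluster_limit: int  # hypothetical stand-in for per-account provider quotas
    clusters: List[str] = field(default_factory=list)

    def has_capacity(self) -> bool:
        return len(self.clusters) < self.cluster_limit


@dataclass
class ManagementAccount:
    cell_limit: int  # limit applied to every cell this account registers
    cells: List[CellAccount] = field(default_factory=list)

    def register_cell(self) -> CellAccount:
        """Add a new cell account and register it with the management account."""
        cell = CellAccount(f"cell-{len(self.cells) + 1}", self.cell_limit)
        self.cells.append(cell)
        return cell

    def place_cluster(self, cluster_id: str) -> str:
        """Place a cluster in the first cell with room, adding a cell if needed."""
        cell = next((c for c in self.cells if c.has_capacity()), None)
        if cell is None:
            cell = self.register_cell()
        cell.clusters.append(cluster_id)
        return cell.name
```

With a limit of two clusters per cell, placing five clusters fills `cell-1` and `cell-2` and causes a third cell to be registered, while the management side stays the same size throughout.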

To wrap up the architecture portion, I just want to bring it back to the guiding principles, because that’s what allowed us to get to this point. We wanted to offer our users a serverless experience to make things as easy as possible to get started. We also wanted to maintain that same level of performance that users were already used to with ClickHouse in our cloud. We wanted to offer this separation of compute and storage to be able to scale them independently and be able to support a variety of workloads. We also wanted to provide tenant isolation and security so that our users trust us with their data. Lastly, we knew that we wanted to have multi-cloud support in AWS, Google, and Azure. The architecture I showed earlier was actually built in AWS and we were able to port it successfully to Google, which is going to be launching soon. We’re also already looking at Azure using the same architecture.

Timeline and Key Milestones

Zhao: Now that you all know about our architecture, and all of those hard decisions that we made along the way to build ClickHouse Cloud, how did we fit all of this software development work within just one year? Let me share with you. Using the initial tennis metaphor, now you know our techniques as a player, how did we end up getting the trophy, which is launching ClickHouse Cloud within one year? It all started at the end of 2021, when our co-founder got initial funding and started the company. From the beginning of 2022, we set ourselves a very aggressive timeline: launching a cloud service within just one year. We broke this one-year goal into three key milestones: private preview in May of 2022, public beta in October, and then GA in December. What did we do for each of these milestones? For the private preview, since ClickHouse is already an open source database with a lot of existing customers, we invited those existing customers to try ClickHouse Cloud. We built a basic cloud offering. This offering was limited in terms of functionality. We didn’t have autoscaling in place. We didn’t have metering, because we were not going to charge customers for private preview, but we had all the other essentials in place, including SOC 2 Type 1 compliance. We thought about security from the very beginning, as those customers were real customers with real customer data, and we didn’t want to leak any of it. We wanted to be responsible even for our private preview. Also, we had a fully managed system, so that customers could come and self-serve. They had an end-to-end experience of the cloud service, so they didn’t need to ask us to provision for them manually. On top of that, we also had well-defined and well-developed monitoring, alerting, and on-call systems.
We had full reliability practices in place, so that customers could self-serve and everything was monitored. We took care of whatever clusters they created in the cloud during private preview. Internally, we also prioritized automation through infrastructure as code and CI/CD. That allowed us to move quickly before private preview, and again in the second half of the year, when, after we got the private preview feedback, we needed to speed up and build even more than we had initially planned.

What did we do for public beta? Initially, we planned mainly two things. One is autoscaling, which is very hard to do, as you saw in the previous slides where Shane talked about all those challenges. It takes a lot of time. Then metering as well. We were going to start charging customers in public beta, so we needed to build metering. On top of these two, because of the private preview feedback we got, we also added enhanced security features like private link, IP filtering, and auditing, which all of those enterprise customers asked us for. Also, because public beta is public, it was going to be our first appearance in the general market. We did rounds of reliability and scalability testing before the public beta launch. That’s when we decided to adopt the cellular architecture internally as well.

After public beta, we were heading towards GA with just two months to go. What did we do there? The first two items were mainly driven by customer feedback from public beta. After we launched public beta, we heard feedback that our SQL console did not have enough functions. We built our enhanced cloud console within just two months. Then, after public beta, we also realized that the public beta customers were a little bit different from our private preview customers. The private preview customers were mostly enterprise customers, requesting features like the ones you saw in the public beta phase: all the security features and compliance that big companies worry about. After public beta, we got a lot of traffic from smaller, individual developers who use ClickHouse to build their own projects or to learn ClickHouse as a database. For those individual customers, all the fancy security features, durability, reliability, and high availability are not as appealing as a low charge on their credit card. As a result, we started to support a Developer Edition at general availability. Also, we kept going with our reliability and security work, supporting an uptime SLA for GA, and also SOC 2 Type 2 compliance.

Key Takeaways

Looking back, in retrospect, to our initial question: how did we fit all of that software development work within just one year? We think there are three key things that helped us build something quickly and accurately to solve customer problems. The first is milestone-driven development. We set ourselves a very aggressive timeline from the very beginning. This helped the entire company, across different teams, to share the same goal. We also set three milestones at different times of that year. That made the goal clear to everybody in the company, that’s one. Also, we respected the timeline, regardless of what was going on. Maybe sometimes we underestimated some of the projects, and sometimes some of the projects slipped. We respected the timeline. We never changed the timeline. We kept a long prioritization list and kept changing the priorities, so that when something happened, we were able to move around the priority of the different projects we were targeting, and cut scope, instead of postponing the date. That made sure the date was always met, and that everybody believed we were going to launch ClickHouse Cloud within just one year.

Second, reliability and security are features as well. From the beginning, as you saw, even before the private preview, we invested a lot in reliability and security features. That’s because, through our years of experience building cloud services in different places, we have found that it’s easy to track features, but it’s hard to track and prioritize security and reliability. On the other hand, as a cloud service provider, we need customers’ trust for them to put their valuable data with us. Reliability and security are the foundation of our company’s success. We started early on reliability and security, so that we built a lot of reliability and security features ahead of time, before the final crunch. Also, we continued to invest in reliability and security for public beta, and also GA, to further harden the reliability and security offering we had for our customers. That makes sure our final product is production ready for big enterprise customers, as well as small customers. Finally, listening to users early and often is also key to building an accurate product that solves customer pain. As you saw in our previous slides, in both private preview and public beta, we heard customer feedback and changed our priorities based on it. We added enhanced security features for public beta. We added the enhanced console and Developer Edition for GA. Both times, it proved that by acting fast and reacting to customer feedback, we could really delight customers and earn more customer trust, so that they are willing to put more workloads onto our cloud service as a result.

Network Latency Difference

Andrade: With the SSDs there’s not going to be a network latency involved. They’re much speedier, and we did actually see a pretty big performance increase once we introduced those. They weren’t part of our original design. They came a little bit later, once we saw EBS volumes weren’t going to be enough for us. With the local SSDs, we’re able to essentially match what we get in terms of performance with self-hosted ClickHouse.

Zhao: The difference is huge. It’s a difference of a few times.

ClickHouse’s Istio Adoption

Participant: I’m just curious about your adoption of Istio and whether you’re using it for any other things besides load balancing and traffic management, and if you have any hurdles or stories there to share.

Andrade: We use it only in one place. Another feature we get with Istio is we can actually idle our instances using Istio. What that means is if there’s a period of inactivity on our clusters, we can actually suspend them to free up the compute and save us some costs, and our customers some cost as well. We use Istio to essentially intercept the calls coming in. If the underlying cluster is in an idle state, Istio will hold that connection while it triggers the steps in order to bring that cluster back up and serve that connection. That’s another use case we use for it. It’s the same instance of Istio.
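The idling behavior can be pictured with a small simulation. This does not use any Istio API; `Cluster`, `IdleAwareProxy`, and their methods are hypothetical names, and the wake-up is modeled as an immediate synchronous call, whereas a real proxy would hold the connection while the cluster is being brought back up.

```python
from enum import Enum


class State(Enum):
    RUNNING = "running"
    IDLE = "idle"


class Cluster:
    """Hypothetical stand-in for a database cluster that can be suspended."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.state = State.RUNNING
        self.wake_count = 0

    def suspend(self) -> None:
        # Free the compute after a period of inactivity.
        self.state = State.IDLE

    def wake(self) -> None:
        # Bring the cluster back up; instantaneous in this simulation.
        self.wake_count += 1
        self.state = State.RUNNING

    def execute(self, query: str) -> str:
        if self.state is not State.RUNNING:
            raise RuntimeError("cluster is idle")
        return f"{self.name} ran: {query}"


class IdleAwareProxy:
    """Intercepts incoming calls and wakes an idle cluster before forwarding."""

    def __init__(self, cluster: Cluster) -> None:
        self.cluster = cluster

    def handle(self, query: str) -> str:
        if self.cluster.state is State.IDLE:
            # The caller's request waits here while the cluster resumes.
            self.cluster.wake()
        return self.cluster.execute(query)
```

From the client's point of view the first query after an idle period simply takes longer; later queries go straight through because the cluster is already running.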

MMS • RSS

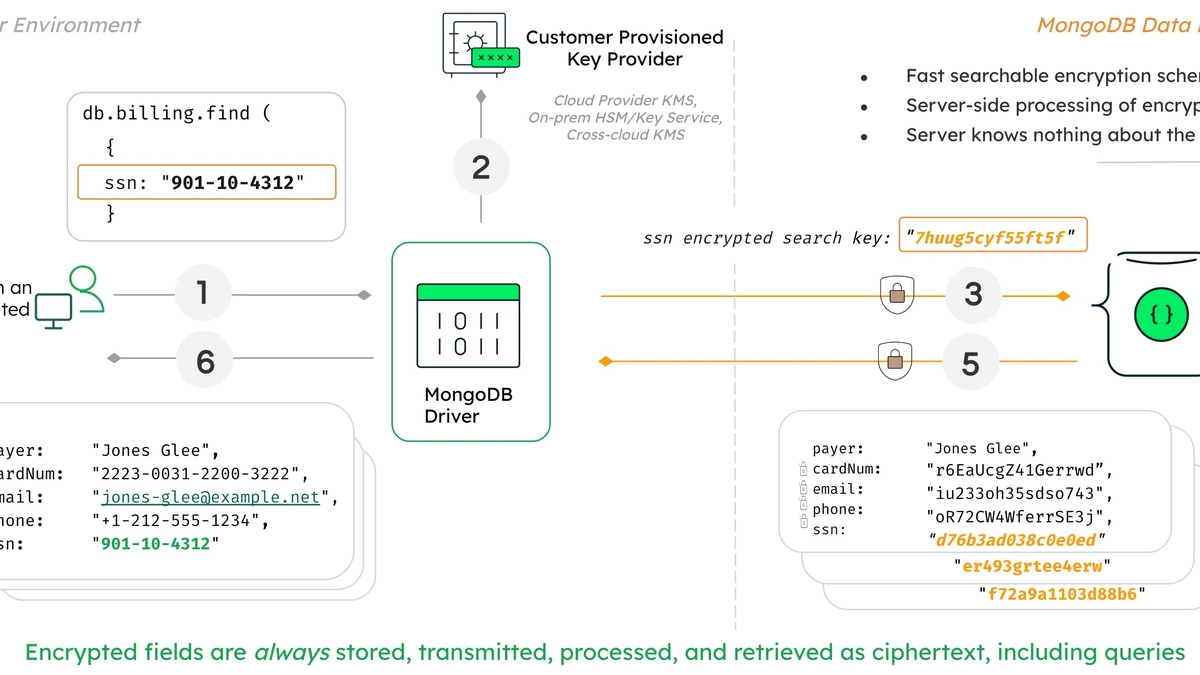

MongoDB Unveils Developer Data Platform and Queryable Encryption: An Interview with CTO Mark Porter

Leading database platform, MongoDB, has unveiled a state-of-the-art developer data platform aimed at fulfilling the contemporary prerequisites of scalability, speed, and security, crucial for today’s developers. The platform, MongoDB Atlas, is designed to be perpetually accessible, scalable on demand, and user-friendly, enabling developers to focus on addressing business issues rather than grappling with database complexities.

Empowering Developers in India

MongoDB is committed to endowing developers in India with critical tools and support for crafting top-notch scalable software and applications. The company is actively engaged in hosting local events, workshops, webinars, hackathons, and other initiatives to foster a vibrant developer community in India.

AI Integration for Enhanced Development Cycle

Moreover, MongoDB is striving to enhance developers’ experience throughout the entire application development cycle by infusing AI into its products and services. This integration includes features like natural language processing in MongoDB Compass, AI-propelled data visualizations in Atlas Charts, and the automated conversion of SQL queries to MongoDB Query API syntax within MongoDB Relational Migrator.

Partnership with Amazon for Next-Gen Applications

MongoDB is also charting plans to amalgamate MongoDB Atlas Vector Search with Amazon Bedrock. This strategic move is to empower organizations to construct next-generation applications on Amazon Web Services (AWS) and their leading cloud infrastructure.

A Conversation with MongoDB’s CTO, Mark Porter

In a recent interview, MongoDB’s CTO, Mark Porter, delved into his technology background, the importance of data platforms, MongoDB’s unique market proposition, the crucial role of scalability, and the game-changing impact of Queryable Encryption. Porter’s counsel for organizations contemplating a shift from traditional relational databases to modern architectures is to initiate on a small scale and progressively migrate critical business components. He underscores that a gradual approach is more efficacious than attempting a large-scale immediate transition.

In essence, MongoDB is dedicated to offering tools and services that not only keep abreast with industry trends but also equip developers to steer innovation in India’s rapidly evolving technology landscape.

MMS • RSS

Sumitomo Mitsui Trust Holdings Inc. trimmed its stake in shares of MongoDB, Inc. (NASDAQ:MDB – Free Report) by 2.1% during the 3rd quarter, according to the company in its most recent Form 13F filing with the Securities & Exchange Commission. The institutional investor owned 174,093 shares of the company’s stock after selling 3,721 shares during the period. Sumitomo Mitsui Trust Holdings Inc. owned 0.24% of MongoDB worth $60,212,000 at the end of the most recent quarter.

Other institutional investors have also modified their holdings of the company. GPS Wealth Strategies Group LLC acquired a new stake in MongoDB in the 2nd quarter worth about $26,000. KB Financial Partners LLC purchased a new position in shares of MongoDB in the second quarter valued at approximately $27,000. Capital Advisors Ltd. LLC boosted its stake in shares of MongoDB by 131.0% in the second quarter. Capital Advisors Ltd. LLC now owns 67 shares of the company’s stock worth $28,000 after acquiring an additional 38 shares during the last quarter. Bessemer Group Inc. purchased a new stake in shares of MongoDB during the fourth quarter worth approximately $29,000. Finally, BluePath Capital Management LLC acquired a new position in MongoDB in the 3rd quarter valued at $30,000. Institutional investors and hedge funds own 88.89% of the company’s stock.

MongoDB Trading Down 0.3%

NASDAQ:MDB opened at $391.59 on Monday. The company has a debt-to-equity ratio of 1.18, a current ratio of 4.74 and a quick ratio of 4.74. MongoDB, Inc. has a one-year low of $179.52 and a one-year high of $442.84. The firm has a 50-day simple moving average of $397.23 and a 200-day simple moving average of $380.81.

MongoDB (NASDAQ:MDB – Get Free Report) last announced its quarterly earnings data on Tuesday, December 5th. The company reported $0.96 earnings per share (EPS) for the quarter, beating the consensus estimate of $0.51 by $0.45. The business had revenue of $432.94 million for the quarter, compared to analysts’ expectations of $406.33 million. MongoDB had a negative return on equity of 20.64% and a negative net margin of 11.70%. The company’s revenue for the quarter was up 29.8% compared to the same quarter last year. During the same period last year, the firm earned ($1.23) earnings per share. As a group, equities analysts forecast that MongoDB, Inc. will post -1.64 earnings per share for the current year.

Insider Activity

In related news, CAO Thomas Bull sold 359 shares of the firm’s stock in a transaction that occurred on Tuesday, January 2nd. The shares were sold at an average price of $404.38, for a total value of $145,172.42. Following the sale, the chief accounting officer now owns 16,313 shares of the company’s stock, valued at $6,596,650.94. The sale was disclosed in a filing with the SEC. In other news, CEO Dev Ittycheria sold 100,500 shares of the firm’s stock in a transaction dated Tuesday, November 7th. The stock was sold at an average price of $375.00, for a total transaction of $37,687,500.00. Following the sale, the chief executive officer now owns 214,177 shares of the company’s stock, valued at approximately $80,316,375. The sale was disclosed in a filing with the Securities & Exchange Commission. In the last quarter, insiders sold 147,029 shares of company stock worth $56,304,511. 4.80% of the stock is currently owned by company insiders.

Analyst Ratings Changes

Several analysts recently issued reports on the stock. Capital One Financial raised shares of MongoDB from an “equal weight” rating to an “overweight” rating and set a $427.00 price objective for the company in a research note on Wednesday, November 8th. UBS Group reaffirmed a “neutral” rating and issued a $410.00 price target (down from $475.00) on shares of MongoDB in a report on Thursday, January 4th. Needham & Company LLC boosted their price objective on MongoDB from $445.00 to $495.00 and gave the stock a “buy” rating in a research note on Wednesday, December 6th. Truist Financial reaffirmed a “buy” rating and issued a $430.00 target price on shares of MongoDB in a research note on Monday, November 13th. Finally, Barclays lifted their price target on MongoDB from $470.00 to $478.00 and gave the stock an “overweight” rating in a report on Wednesday, December 6th. One equities research analyst has rated the stock with a sell rating, three have assigned a hold rating and twenty-one have given a buy rating to the stock. According to data from MarketBeat, the stock currently has an average rating of “Moderate Buy” and an average price target of $430.41.

MongoDB Profile

MongoDB, Inc. provides a general-purpose database platform worldwide. The company offers MongoDB Atlas, a hosted multi-cloud database-as-a-service solution; MongoDB Enterprise Advanced, a commercial database server for enterprise customers to run in the cloud, on-premise, or in a hybrid environment; and Community Server, a free-to-download version of its database, which includes the functionality that developers need to get started with MongoDB.

Visa Hiring Graduates, MBA, Post Graduates for Senior Software Engineer Post – Studycafe

MMS • RSS

Overview:

Visa is hiring an experienced Senior Software Engineer – Java Platform Development at their Bangalore location.

The complete details of this job are as follows:

Eligibility Criteria:

Basic Qualifications

- 2+ years of relevant work experience and a bachelor’s degree, OR 5+ years of relevant work experience

Preferred Qualifications

- 3 or more years of work experience with a Bachelor’s Degree or more than 2 years of work experience with an Advanced Degree (e.g. Masters, MBA, JD, MD)

- Knowledge of MVC design pattern

- Experience of programming in Java, J2EE

- Experience with web services standards and related technologies (HTTP, Spring, XML, JSON, REST)

- Experience with Big Data clusters (Solr, Kafka, etc.)

- Solid understanding of database technologies and SQL.

- Knowledge of NoSQL Databases is a plus.

- Strong foundation in computer science, with strong competencies in data structures and algorithms.

- Proven problem-solving skills and an ability to respond resourcefully to new demands, priorities and challenges

- Solid coding practices including good design documentation, unit testing, source control (Git, SVN, etc.)

- Experience with build tools (Maven, Gradle, etc.).

- Ability and desire to learn new skills and take on new initiatives.

Roles and Responsibilities:

- Develop next generation payment applications, write solid code following best development practices.

- Work as part of a scrum team executing the product requirements, working with architects, product management and other teams in an agile manner.

- Develop web framework based on best practices.

- Execute POCs for visionary initiatives.

Disclaimer: The Recruitment Information provided above is for informational purposes only. The above Recruitment Information has been taken from the official site of the organization. We do not provide any Recruitment guarantee. Recruitment is to be done as per the official recruitment process of the company or organization posted the recruitment Vacancy. We don’t charge any fee for providing this Job Information. Neither the Author nor Studycafe and its Affiliates accepts any liabilities for any loss or damage of any kind arising out of any information in this article nor for any actions taken in reliance thereon.

Podcast: 2023 Year in Review: AI/LLMs, Tech Leadership, Platform Engineering, and Architecture + Data

MMS • Thomas Betts Shane Hastie Srini Penchikala Wesley Reisz Dani

Welcome to the InfoQ podcast year in review! Introductions

Daniel Bryant: Hello, it’s Daniel Bryant here. Before we start today’s podcast, I wanted to tell you about QCon London 2024, our flagship conference that takes place in the heart of London next April 8th to 10th. Learn about senior practitioners’ experiences and explore their points of view on emerging trends and best practices across topics like software architectures, generative AI, platform engineering, observability, and the secure software supply chain. Discover what your peers have learned, explore the techniques they’re using and learn about the pitfalls to avoid.

I’ll be there hosting the platform engineering track. Learn more at qconlondon.com. I hope to see you there. Hello, and welcome to the InfoQ podcast. My name is Daniel Bryant, and for this episode, all of the co-hosts of the InfoQ podcast have got together to reflect on 2023 and look ahead to the coming year. We plan to cover a range of topics across software delivery, from culture to cloud, from languages to LLMs. Let’s do a quick round of introductions. Thomas, I’m looking in your direction. I’ll start with you, please.

Thomas Betts: Hi, I’m Thomas Betts. In addition to being one of the hosts of the podcast, I’m the lead editor for Architecture and Design at InfoQ. This year, I got to actually be a speaker at QCon for the first time. I’ve been a track host before and done some of the behind-the-scenes work. That was probably my highlight of the year, getting out to London, getting up on stage and meeting some of the other speakers in that capacity. Over to Shane.

Shane Hastie: Good day, folks. I’m Shane Hastie. I host the Engineering Culture podcast. I’m the lead editor for Culture and Methods on InfoQ. I would say QCon London was also one of my highlights for this year. It was just a great event, I track-hosted one of the people tracks and was just seeing some of the interesting stuff that’s happening in the people and culture space. Over to Srini.

Srini Penchikala: Thanks, Shane. Hello, everyone. I am Srini Penchikala. I am the lead editor for Data Engineering, AI, and ML at InfoQ. I also co-host a podcast in the same space, Data and AI and ML. I’m also serving as a program committee member for the second year in a row for QCon London. Definitely, it has become one of my favorite QCon events, so looking forward to that very much and also, looking forward to discussing the emerging trends in the various technologies in this podcast. Thank you.

Wes Reisz: Hi, I’m Wes Reisz. I am one of the co-chairs here on the InfoQ podcast. I haven’t done a whole bunch this year, so I’m excited to actually get back in front of the mic. I had the privilege of chairing QCon San Francisco last year, and I also hosted the “Architectures you’ve always wondered about” track. Had some amazing talks there, which I’m sure we’ll talk a bit about. My day job, I work for ThoughtWorks. I’m a technical principal, where I work on enterprise modernization cloud.

Daniel Bryant: Fantastic. Daniel Bryant here, longtime developer and architect, moved more into, dare I say, the world of go-to-market and DevRel over the last few years, but still very much keeping up-to-date with the technology. Much like yourselves, my highlights of the year revolve around QCons, for sure. Loved QCon London. I think I got to meet all of you. That’s always fantastic, meeting up with fellow InfoQ folks. I also really enjoyed QCon New York, and met a bunch of folks there. I hosted the platform engineering track at QCon SF, and that was a highlight for me.

Is cloud native and platform engineering all about people and processes [03:02]

Daniel Bryant: Yeah, it actually leads nicely onto the first topic I wanted to discuss. Because the platform engineering track really turned into a people and leadership track. We all know the tech is easy, the people are hard. I’d love to start and have a look at what folks are seeing in regard to teams and leadership. Now, I know we’ve talked about Team Topologies before.

Wes Reisz: I’ll jump in. First off, that track at QCon San Francisco that you mentioned that you ran, I really enjoyed that track because it did focus on the people side of platform engineering. I consider myself a cloud native engineer. When I introduce myself, I talk about being a cloud native engineer. Over the last couple of years, for every single client that I’ve worked with, it seems that the problem that we’re really solving is more of a people problem. As you rightfully mentioned just a second ago with Team Topologies, which, just to make sure everyone is level-set, is a book written by Matthew Skelton and Manuel Pais, both also InfoQ editors, who, if you read the intro, first talked about the idea for this book at QCon London many years back.

Regardless, Team Topologies is a book about organizing engineering teams for fast flow, removing friction, and removing handoffs to be able to help you deliver software faster. Back to your question, Daniel, where you talked about platform engineering, and you talked about the importance of people on it. I really enjoyed that track at QCon San Francisco because it really truly did focus on the people challenges that are inside building effective platform teams. I really think that shows that we’re not just talking about the needs of having a platform team, but how to build platform teams more effectively. I found that a really super interesting track. What was your reasoning for putting together the track? What made you lean that way?

Daniel Bryant: It actually came from a discussion with Justin Cormack, who’s the CTO of Docker now, and he was championing the track, he’s on the QCon SF PC. He was saying that a lot of focus at the moment on platform engineering is very much on the technology. I love technology. I know you do, Wes, as well. The containers, the cloud technologies, infrastructure as code, and all that good stuff. He was saying that in his work, and I’ve seen this in my work as well, the hardest thing often is the vision, the strategy, and the people: the management, the leadership. He was like, can we explore this topic in more depth? I thought it was a fantastic idea. I reached out to a few folks in the CNCF space, and explored the topic in a bit more depth. Super lucky to chat with Hazel Weakly, David Stenglein, Yao Yue, Smruti Patel, and of course, Ben Hartshorne from Honeycomb as well.

Those folks did an amazing job on the track. I was humbled. I basically stood there and introduced them, and they just rocked it, as in fantastic coverage from all angles. From Hazel talking about the big picture leadership challenges. David went into some case studies, and then Yao did a use case of Twitter.

What came through all the time was the need for strong leadership for a clear vision. Things like empathy for users, which sounds like a no-brainer. You’re building a platform for users, for developers, and you need to empathize with them. Definitely, in my consulting work, even 10 or more years ago, I would see platforms being built, someone recreating Amazon but not thinking about the internal users. They build this internal Amazon within their own data centers and are all happy, but then no one would use it because they never actually asked the developers, what do you want? How do you want to interact with that?

What’s new in the world of software delivery leadership? [06:28]

Thoroughly enjoyed that QCon SF track. It was genuinely a privilege to be able to put the ideas together and orchestrate the folks there. Again, the actual speakers do all the fantastic work. I encourage folks to check out the QCon SF write-ups on InfoQ, and check out the videos on QCon when they come out as well.

Shane, as our resident culture and methods expert on InfoQ. In general, I’d love to get your thoughts on this, are you seeing a change of leadership? Is there a different strategy, different vision, or more product thinking? What are the big questions? I’d love to get your take on that, please.

Shane Hastie: “Is it a generational shift?” is one of the questions that sits at the back of my head in terms of what’s happening in the leadership space, in particular. We’re seeing the boomers resigning and moving out of the workforce. We’re seeing a demand for purpose. We’re seeing a demand for ethics, for values that actually make sense from the people who we’re looking to employ. As a result of that, is organizational leadership changing? I honestly think yes, it is. Is it as fast as it could be? Maybe not.

There is a fundamental turning the oil tanker, I think, going on in leadership that is definitely bringing to the fore social responsibility, sustainability, values, and purpose; developer experience touches it. Just treating people like people. On the other side, we’ve had massive layoffs. Anecdotally, we are hearing that those have resulted in lots more startups that are doing quite well.

That was a hugely disruptive period that seems to have settled now. Certainly, I would say the first six, maybe even nine months of 2023 were characterized by that disruption, and by the hype (or is it hype?) around programmer productivity. The McKinsey article blew up, and everybody objected: just what do we mean by programmer productivity? How do we measure programmer productivity? Should we measure programmer productivity? Then, are we actually getting some value out of the AI tools in the developer space? Certainly, I can say for myself, yeah, I’ve started using them, and I’m finding value. There’s not a lot of hard data yet, but the anecdotal stories are that AI makes good programmers great. It doesn’t make bad programmers good.

I think one of the things that scares me or worries me – and I see this not just in programming, but in all of our professions – is that good architects get great because they’ve got the tools at their fingertips. Good analysts get better because they’ve got the tools at their fingertips.

One of the things that I think we run the risk of losing out on is how we build those base competencies upon which a good architect can then leverage the AI tools, and upon which a good programmer can then use Copilot to really help them get faster. There’s that learning that needs to happen right at the beginning as we build up early career folks. Are we leaving them in a hole? That’s my concern. A lot going on there.

What is the impact of AI/LLM-based copilots on software development? [10:08]

Daniel Bryant: There are a few things that tie together there, Shane, as well, in terms of what we talked about, I think, last year on the podcast: hybrid working, and how not being in the office impacts exactly what you are saying.

I mean, Thomas, on your teams, are you using Copilot? Are you using things like that? To Shane’s point, how do you find the junior folks coming on board? Do you bootcamp them first and then give them AI tools, or what’s going on, I guess?

Thomas Betts: We took a somewhat cautious approach. We had a pilot of Copilot, and a few people got to use it because we’re trying to figure out how this fits into our corporate standards. There are concerns about data: Copilot is taking my code, sending it up, and generating a response, so where is my code going, and is it leaking out there?

It’s just one of those general concerns people have about these large language models: what are they built on? Is it going to get used? For that reason, we’re taking it cautiously. The results are pretty promising, and we’re figuring out when it makes sense. There’s a cost associated with it, but if you look at the productivity gains, it’s like, well, we pay for your IDE licenses every year. This is just one more part of that. It does get to how you teach somebody to be good at it.

I think there are a couple of really good use cases for Copilot and tools like this, like generating unit tests and helping you understand code. You throw a junior developer at a code base, there are 10,000 lines, and their eyes just glaze over. They don’t know what it means, and they might not be comfortable asking every person every day, what does this do? What does this do? I can now just highlight it and say, “Hey, Copilot, what does that do?” It explains it.

Everyone needs to understand that it’s only mostly correct, and that it doesn’t have the same institutional knowledge as the developer who’s been around for 20 years and knows how every bit of the system works. But for “what does the code do?”, it can help you understand that and give that junior person a little bit of an extra step-up, so that they can then ask, how do I feel confident about modifying this?

Can I do this? How would I interject this code? Even the experienced people get caught. I’ve got 20-plus years of development, and I’ve fallen into the trap when it says, just “add this method”. I’m like, great. Then it’s like, that doesn’t exist. It’s like, yep, I made that up. The hallucinations get us all.

There’s still time to learn how all this works, but I still go back to the days before the Internet when you had to go to the library and look stuff up in a card catalog. Now, we have Google. Google makes me a much better researcher because I have everything at my fingertips. I don’t have to have all that knowledge in my brain. I don’t have to have read 10,000 books. Should I not have Google and search engines? No. It’s another tool that you get to use to be better at your job.

Daniel Bryant: I love it. Thomas, love it. Srini, I know we’re going to go into this, the foundations and some of the tech behind this later on with you. You did a fantastic trend report for us earlier last year. Srini, is your team using things like Copilot, and do you personally think there’s value in it? Then what are you thinking of doing with that kind of tech over the coming year?

Srini Penchikala: I agree with Shane and Thomas. These are tools that will make programmers better programmers, but they’re not going to solve your problems for you. You need to know what problems you need to solve. I look at this as another form of reuse. For example, let’s say I need to connect to a Kafka broker, whether in Java or Python. I can use a library that’s available or write something by myself, or now I can ask ChatGPT or GitHub Copilot, “Hey, give me the code snippet on how to connect to Kafka using Python or Java.”

It’s another form of reuse if we use it properly and get better at being productive programmers. Definitely, I see this being used, Daniel, Copilot, especially. ChatGPT is not there yet in terms of mass adoption in the companies. Copilot is already there because of the Microsoft presence right there. Definitely, I think these tools are going to help us become more productive in the areas that they are good candidates to help.

Wes Reisz: Daniel, when people ask me about gen AI and large language models, what I try to go back to is how my car helps me drive these days. That really, in my opinion, is what these tools are doing for us. It’s helping developers drive our code. It’s augmenting what we’re doing. I think there’s quite a bit of hype out there about what’s happening. At the base of it, the core of what we do as software developers is think about problems and solve them. Gen AI is not replacing how we think about problems. We still need to understand and be able to solve these problems. What it’s doing is really just helping us work at a higher level of abstraction. It’s augmenting. I think Thomas just mentioned that. This is what gen AI is really about, in my opinion. Now, that’s not to say there are not some amazing use cases out there that could be done, but it’s a higher level of abstraction.

Thomas Betts: Copilot is for developers, but I think the large language models in ChatGPT are useful for other people in the software development landscape. I like seeing program managers and product managers who are trying to figure out how to write better requirements.

How do I understand what I’m trying to say? Our UX designers are going out and saying, how do I do discovery? What are the questions I should ask? What are some possible design options? It’s the rubber duck. I like having the programmer ask a question too. Everybody can benefit from having that assistant. Especially, going back to we’re all hybrid, we’re remote. I can’t just spin my chair around and ask the guy next to me or the woman over there or find someone on Slack because I’m working at 2:00 in the morning. It doesn’t sleep. I can ask ChatGPT anytime to help me out with something. It’s always available. If you find those good use cases, don’t replace my job, but augment it. It can augment everybody’s job in some different way. I think that makes software development accelerate a little bit better.

Wes Reisz: The rubber duck is a great example. ChatGPT truly is a great rubber duck.

Shane Hastie: I’ve certainly seen a lot of that happening in the product management space, in the UX, in that design space. There are dedicated tools now, with ChatGPT being the true generalist tool. One I’ve been using quite a bit is Perplexity, because one of the great things for me as a researcher is that it gives you its sources. It tells you where it found this, and you can then make a value judgment about whether this is a credible source or not. Then you’ve not got that truly black box.

Daniel Bryant: Fantastic. One thing that touches on something you said as well, Thomas: I was actually reading a bunch of newsletters, catching up on everyone’s predictions for the year, and Ed Sim, who is a Boldstart VC, said AI moves beyond GitHub Copilot and coding to testing, ops, and AIOps, that kind of thing. I know we all hate the term AIOps, but it’s something you said there, Shane, that made me think of that, too, in terms of referencing. As we move into the more operational space, you’ve got to be right pretty much all the time.

You’ve also got to say, the reason why I think these things are going wrong in production or the reason why I think you should look here is because of dot, dot, dot. I do think taking the next step for a lot of operational burdens being eased by AI, we are going to need to get better at explaining the actions and actually referencing why the system recommends taking the following actions.

Wes Reisz: Daniel, I think it’s more than even just a nice to have, it’s a legal requirement. If you think about GDPR in the EU, the General Data Protection Regulation that came out a few years ago, it talks specifically about machine learning models. One of the requirements of it is explainability. When we’re using LLMs, even setting aside for a second where the data comes from and how it’s collected, there’s a whole range of areas around that. The explainability that you mention is a legal requirement that GDPR requires systems to be able to have.

How are cloud modernization efforts proceeding? Is everyone cloud native now? [17:48]

Daniel Bryant: Switching gears a little bit, Wes, when you and I last caught up at QCon SF and KubeCon Chicago as well, you mentioned that you’ve been doing a lot more work in the cloud modernization space. What are you seeing in this space, and what challenges are you running into?

Wes Reisz: Thanks, Daniel. Quite a bit of the work that I’ve done over the last year has been in the space of enterprise cloud modernization. I’ll tell you what’s been interesting. We go to QCons, we get into an echo chamber, and we think cloud is just a foregone conclusion, that everybody is there. I’ve been finding over the last couple of years that there are quite a few shops out there that are still adopting, migrating, and moving into a cloud. I guess the first thing to point out in this space is that when we talk about the cloud, it’s not really a destination. Cloud is more of a mindset, it’s more of a way of thinking. If you look at the CNCF ecosystem, it really has nothing to do with whether a particular set of software is running on a certain cloud service provider.

It has nothing to do with that. It has to do with the mindset of how we build software. I think Joe Beda, one of the creators of Kubernetes, when he defined the cloud operating model, defined it as self-service, elastic, and API-driven. Those were the characteristics of a cloud. When we really talk about cloud migration and modernization, we should first focus on the fact that it’s not a destination, it’s not a location. It’s really a way of thinking. A way of thinking means we’re talking about making things ephemeral, we’re talking about making things very elastic, leveraging global scale. It doesn’t necessarily mean it involves a particular location from a CSP. One of the things that I found really successful as we’re talking about cloud modernization this year is revisiting the seven Rs that I think AWS originally came out with.

If you remember, that was retire, replatform, refactor, rearchitect, and so on. I think those are fantastic, but a lot of what I’ve been talking about this year goes a little bit beyond just rearchitecting, to actually talking about reimagining and resetting. What I mean by that is it’s not enough just to rearchitect an application. Sometimes you have to really reimagine.

Say you’re going from a database that runs in your data center to a global scale database, something like AWS Aurora, Azure’s Cosmos DB, or even Cloudflare’s D1. Cloudflare has an edge-based relational database now that’s designed for Cloudflare Workers. When you move to something designed like that, it changes how you think about databases. I think it requires a bit more of a reimagining of your system, how you do DR, and how you think about things like blue/green. All of those change when you start talking about a global scale database. It’s not just databases, either. It has to do with how you incorporate things like serverless and the myriad other cloud functions.

Again, it’s a trap just to think about the cloud as a location. Because while I just mentioned serverless, and it certainly originated from cloud service providers, you don’t have to have a cloud itself to be able to operate in the cloud native way. It’s critical to make sure we understand that cloud is a way of thinking, not a destination. Reimagining is one that I think is really important when you start considering cloud migrations and modernization work today. Then the other one, resetting, hearkens back to your first question there at the very beginning, where you were asking about Team Topologies.

A lot of times, when you’re making that move into a more cloud-native-based ecosystem, there’s a bit of a cultural shift that has to happen. That cultural reset is, I think, super important. If you don’t do that reset, then you continue the practices that were in place before you were working in a cloud native way. I think that causes a lot of antipatterns to exist. Two things that I really think about in this space today are reimagining and resetting when it comes to thinking about how you do cloud modernizations.

Daniel Bryant: Big plus one to everything you said there, Wes. I’m definitely seeing this shift, if you like, with the KubeCons, in particular. I’ve been going to KubeCon pretty much since version zero. I was involved in organizing the London KubeCon back in the day, and shout out to Joseph Jacks and the crew that put that one together. The evolution has been very much from the really innovative, tech-focused type folks, like myself (we’re all in that space to some degree), towards the late adopters now. The late adopters, the late majority, have a different set of problems. They just want to get stuff done. They’re not perhaps so interested in the latest tech. I’m super bullish on things like eBPF and Wasm.