Transcript

Chen: My name is Will. I’m a director and tech fellow at BlackRock. I work on the Aladdin Wealth product. We do portfolio analysis at massive scale. I’m going to talk about some of those key lessons we’ve learned from productizing and from scaling up portfolio analysis as part of Aladdin. First off, just to establish a little bit of context, I want to talk about what we do at BlackRock. BlackRock is an asset manager. Our core business is managing money, and we do it on behalf of clients across the globe. Those assets include retirement funds for teachers, for firefighters, nurses, and also for technology professionals too, like you and me. We also advise global institutions like sovereign wealth funds and central banks too. We also built Aladdin. Aladdin is a comprehensive, proprietary operating system for investment management. It calculates and models sophisticated analytics on all securities, and all portfolios across all asset classes. It’s also a technology product. We provide it as a service to clients and we get paid for it. We have hundreds of client firms that are using it today. It’s a key differentiator for BlackRock within the asset management and finance industry.

Then, finally, we have Aladdin Wealth. Aladdin Wealth is a distinct commercial offering within Aladdin, and it’s targeted towards wealth managers and financial advisors. We operate at massive scale. Every night, we compute a full portfolio analysis on more than 15 million portfolios that are modeled on our system. These portfolios are real portfolios that are held by people like you and me, or your parents and your siblings. They’re held at one of our client firms. These portfolio analyses that we produce, they’re an important part of managing these portfolios, and also helping us achieve our financial goals. On top of those 15 million portfolios, we also process more than 3 million portfolio analyses during the day through API calls. We also get sustained surges of more than 8000 portfolios in a single minute. These API calls are also real portfolios, but we’re getting them through our system integrations with client firms. We’re providing them an instant portfolio analysis on-demand as an API. During the course of this talk, another 300,000 to 500,000 portfolios are going to have gone through our system for analysis.

Example: Portfolio Factor Risk

To give you a sense of what is a portfolio analysis, there’s a lot of stuff that goes into it. It’s a broad range of analytics. It’s a lot of complex calculations. They’re all an important part of understanding what’s in your portfolio, how it’s positioned, and how it’s going to help you achieve your goals. I do want to dive in on one particular number, though, a very important number that we calculate, and that one is portfolio factor risk. I’m going to spend a little bit of time explaining what it is, why it’s important, and also how we actually go about and calculate this thing. Risk is a measure of the uncertainty in your portfolio’s value, and how big the potential losses can be. If your portfolio’s got a risk of 10%, what that means is that we expect it to lose less than 10% of its value over a 1-year period. We have a one standard deviation confidence of that. If you’re going to take your portfolio’s returns on a forward-looking basis, and chart them out over a year, you’ll get that histogram on the left over here. That purple x I’ve got over there, that would be what your portfolio risk is. If you have a lot of risk, that x is going to be big, distribution will be very wide. Whereas if you have very low risk, your portfolio’s distribution is going to be very narrow. Knowing what that x is, is very important. Because if you don’t know what that x is, and the distribution is wider than you think, you could be set up to take on a lot more losses than you’d expect, or that you can tolerate financially given your circumstances.

To calculate that x, we need the stuff on the right, which is, we need factors and factor modeling. Factors are your long-term drivers of returns on securities and portfolios. They’re going to be things like interest rates, spreads, FX rates, oil, gold, all sorts of things like that. When we calculate risk, we use more than 3000 factors to do that. That lets us tell you not just what your portfolio’s total risk is, what that x is, but we also tell you how that x is broken up into all these different factors and can tell you where it’s coming from. To calculate this on the portfolio level, like I mentioned, there’s a lot of data required, there’s a lot of modeling required. At the portfolio level, it fundamentally boils down to that equation I put down there, which is a matrix vector equation. It’s E, C, and E, which is exposure, which is a vector, C is a covariance matrix, and E is another exposure vector. That covariance matrix in the middle, when you deal with factor risk, is going to have 3000 rows and 3000 columns in it, one for every factor, and it’s going to be a dense matrix in here. There are about 9 million entries in that matrix. We have to compute this value hundreds, or maybe even thousands of times to calculate a portfolio analysis for one portfolio.
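
As a sketch of the equation being described, assuming both exposure vectors are the same portfolio-level factor exposure: the symbols $e$, $C$, $n$, and $\sigma_p$ are names chosen here rather than taken from the slide, and taking the square root to get from variance to risk is the usual convention, not something stated in the talk.

$$
\sigma_p^2 \;=\; e^{\top} C \, e \;=\; \sum_{i=1}^{n} \sum_{j=1}^{n} e_i \, C_{ij} \, e_j , \qquad \sigma_p = \sqrt{e^{\top} C \, e}
$$

With $n = 3000$ factors, $C$ has $3000 \times 3000 = 9{,}000{,}000$ entries, which matches the "about 9 million" figure above.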

1. Trim Your Computational Graph (Key Insights from Scaling Aladdin Wealth Tech)

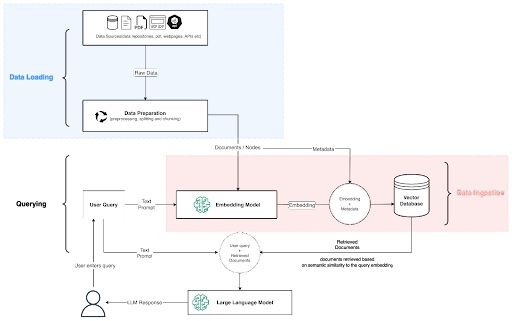

How have we achieved this level of scale? First of all, languages matter, frameworks matter. Writing good and efficient code is, of course, very important. We’ve also found that other higher-level factors matter more. I’m going to talk about six of them. The first insight we’ve learned is to trim down your computational graph. What I mean here is that if you think about any financial analytic that you may deal with in your day-to-day work, it usually depends on evaluating a complex computational graph, that graph is going to have a lot of intermediate nodes and values that have to be calculated. It’s also going to depend on a ton of inputs at the very tail end. For the purposes of this example, I’m going to be talking about the calculations we do, so portfolio risk and portfolio scenarios, which are marked in purple. I’ve marked them in purple over here. This is really a generic approach for a generic problem, because a lot of the business calculations that you’re going to deal with are basically just graphs like this. If it’s not a graph, if it’s just a page or a pile of formulas that have been written out by a research or Quant team at some point, you can actually try to write it out as a graph like this so you can apply this technique. Over here we have this simplified graph of how to compute portfolio risk and portfolio scenarios. To actually find out how to get that number, you have to walk backwards from the purple output node, all the way back to your inputs. If you look at portfolio scenarios, for example, portfolio scenarios at the bottom over there, it’s got two arrows coming out of it: it’s got position weights and security scenarios. Then your security scenarios itself has two more arrows. One arrow comes from factor modeling at the top in orange, and then another arrow comes from security modeling on the left. Then both of these nodes are going to come from a complex network of calculations. 
These things are going to have a whole host of inputs and dependencies like prices, reference data, yield curve modeling, risk assumptions, and factors. If you were asked to calculate one of these portfolio scenarios with pen and paper, or even with a computer, it would take you minutes, or it could even take you hours, to do that even once.

To apply this principle, this insight here of trimming down the graph, you look at all the different nodes and see what you can actually lock down, or what you can reuse across your different calculations. In the case of risk, jumping to that other purple node, it’s got three nodes leading into it. The first node is the position weights. That’s something that you can’t lock down, because every portfolio is going to look different. You might not even know the position weights of your portfolio until right before you need to actually calculate it. That’s not something you can do anything about, so you have to leave that one as a true variable here. Then you can move over to the orange side at the top. If you can lock down your risk parameters, which are the assumptions and approaches that drive your factor modeling, and you can lock down your factor levels, to say, I know what the interest rates are, I know what the price of gold and all these other things are, that lets you lock down the covariance between factors, because if you lock down the two input nodes, everything underneath can be locked down. Then you can treat factor covariance as if it’s a constant, and you can just instantly fetch it and reuse it across all of your calculations.

Then the same approach also applies on the left over here with the security exposure. You can basically saw off most of that graph, as long as you can lock down equity ratios (that’s a stand-in for all reference data), prices, and your yield curve, because when those are constants, everything to the right of them just becomes a constant as well. What you’re left with is a much simpler graph, in that there are only three inputs. The maximum calculation depth of this graph, the maximum number of steps you have to walk backwards from a purple node, is just one. When you have a graph like this, that lets you memoize. You can memoize these three intermediate nodes that have these locks on them, meaning you can take the results of these calculations, which are expensive to calculate, store them in a cache somewhere, and key them based on the inputs that came in at the very left, the very tail nodes. That lets you reuse them across these calculations. It can reduce the work required to calculate one of these things from minutes and hours down to less than a second. One of the issues with doing this, if you just memoize these nodes on demand, is that the first time you calculate one of these things, it’s going to be prohibitively slow and lead to a bad experience for everyone.
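
A minimal sketch of this kind of memoization, in Python. Everything here is an assumption made for illustration: the function names, the toy two-factor numbers, and the use of `functools.lru_cache` as the cache are not from the talk. It only shows the shape of the idea: expensive intermediate nodes are keyed by their locked-down inputs, and a warm-up step fills the cache ahead of time so the first real request is not the slow one.

```python
from functools import lru_cache

# Counts how often the (pretend) expensive models actually run.
CALLS = {"covariance": 0, "exposure": 0}


@lru_cache(maxsize=None)
def factor_covariance(as_of_date: str, risk_params: str) -> tuple:
    """Hypothetical expensive factor model, keyed only by its locked-down inputs."""
    CALLS["covariance"] += 1
    # Toy 2-factor covariance matrix; the talk describes roughly 3000 x 3000.
    return ((0.04, 0.01), (0.01, 0.09))


@lru_cache(maxsize=None)
def security_exposure(security_id: str, as_of_date: str) -> tuple:
    """Hypothetical expensive security model, keyed by security and date."""
    CALLS["exposure"] += 1
    toy = {"BOND_A": (1.0, 0.0), "STOCK_B": (0.2, 1.1)}
    return toy[security_id]


def portfolio_variance(weights: dict, as_of_date: str, risk_params: str) -> float:
    """The only true variable is `weights`; everything else comes from the cache."""
    cov = factor_covariance(as_of_date, risk_params)
    n = len(cov)
    e = [0.0] * n
    for sec, w in weights.items():
        exposure = security_exposure(sec, as_of_date)
        for i in range(n):
            e[i] += w * exposure[i]
    return sum(e[i] * cov[i][j] * e[j] for i in range(n) for j in range(n))


def warm_cache(security_ids, as_of_date: str, risk_params: str) -> None:
    """Pre-populate the cache (for example overnight) so no request pays the cold cost."""
    factor_covariance(as_of_date, risk_params)
    for sec in security_ids:
        security_exposure(sec, as_of_date)
```

After `warm_cache(...)` has run once for a given date and parameter set, any number of `portfolio_variance` calls with different weights reuse the same cached covariance and exposures.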

The other part to think about here is when you can lock these down, not just whether or not you can lock them down. If you have a reactive system for running your financial models, something that can trigger downstream processes when the price of a security gets updated or when risk assumptions are modeled, and that can map all of that out, this can be very easy to do. In many cases, though, and this is true in our case as well, financial models are run on batch systems that tend to be a little bit older and hard to operate. In these scenarios, you have to be practical about what you can do and try to capture as much of the benefit as you can with the least amount of operational complexity. For us, it’s easy to just lock things down after the market closes, after the economy is modeled, and then we can run things overnight. Then they can be processed at the security or factor level well before the business day starts.

The last point on the slide is to not be afraid of challenging the status quo when you’re doing this. When you’re looking at one of these computational graphs, chances are you didn’t create that one. Tons of smart people have worked on making this graph the way it looks today, so that it can calculate everything it needs to, so that it can be as powerful, as fast and efficient, and so on. If your job is to make this scale up by orders of magnitude, if your job is to make this faster, if your job is to make the tail latency a lot better, you fundamentally have to give something up. There’s no free lunch here. For us, the thing to give up is really that variability and flexibility. In our situation, that’s actually a feature, because when we lock down our risk assumptions, it lets us compare things apples to apples across portfolios. That flexibility is not something that our business users actually needed or wanted. That’s an easy tradeoff for us to make.

2. Store Data in Multiple Formats

The second insight is to store your data in multiple formats. What I mean by this is that you can take copies of the data that you generate from your analytical factory, from your models, and all that stuff, and you can store multiple copies of it in a bunch of different databases. Then when you actually need to query the data out, you can pick the best database for what you’re trying to do with it, best meaning cheapest, fastest, or otherwise most appropriate. For example, if you need to fetch out a single row based on a known key, you could go to your large-scale join engine, like Hive or Snowflake or something like that, and you can get that row back out, and you’ll get the right answer. It’ll be very slow and very inefficient to actually do that, though, because it’s got to run a table scan in most cases. If you have a second copy of the data inside of a key-value store like Cassandra, you can go straight to Cassandra and get it out at very low cost. It will happen very fast, and it’ll be reliable and cheap as well.

On the flip side, if you need to do a large-scale join across two large datasets, you technically could run that off of your key-value store, as long as you have a way to iterate out the keys that you need. That would be a very foolish way to do it, because your application-side join would be brittle. You might as well take advantage of the copy of the data that you have in Hive instead. In Aladdin Wealth, we basically progressively added more copies of the data as our use cases have grown. We started off by using Hive as our one database. Hive was great. It was incredibly scalable and could handle a lot of data, but it had a couple of tradeoffs associated with it. One was that it was very slow and actually unstable when you had a lot of concurrent usage. The second was that it was just not as fast as we needed it to be when we were looking at a small slice of the data, or when we wanted to just take a single row out. Most of our use cases were one of those two things. To accommodate that, we introduced Solr as a replacement. Solr can do both of those things very well using Lucene indices. Things were great. It was handling concurrency very well. Then we also had to introduce Cassandra after that, because we had to deliver an external API with very strict SLAs and financial implications for breaching them. We needed something that was even faster and, more importantly, more reliable, with a much tighter tail. After that, we actually had to add Hive back into the mix when we had to do some large-scale joins. That has really been our journey: adding more copies of the data.

One of the tradeoffs with this approach is that when you have a lot of different databases, and your data exists in a lot of different places, it can potentially become inconsistent. That sounds bad. It’s not always the end of the world, because there are a couple of things that you can do to deal with it. First of all, you can introduce data governance and controls. What I mean by that is you can introduce processes to make sure that you at least attempted to write data to all of the databases that you have copies in. You can also introduce reconciliation processes to make sure that what actually ended up there converged on the same set at some eventual point in time. You can also have monitoring of things like dead letters: if stuff fails to write, you should make sure somebody is looking at it. There’s all sorts of stuff you could do there.
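
The controls just described can be sketched roughly as follows, in Python. This is an illustration under assumptions, not the system described in the talk: `DictStore` is a hypothetical in-memory stand-in for a database, the store names are labels only, and treating one store as the source of truth for reconciliation is a design choice made here for simplicity.

```python
class DictStore:
    """Hypothetical in-memory stand-in for one database copy."""

    def __init__(self, name, fail_keys=()):
        self.name = name
        self.rows = {}
        self.fail_keys = set(fail_keys)  # keys this store will refuse, to simulate failures

    def write(self, key, value):
        if key in self.fail_keys:
            raise IOError(f"{self.name} rejected {key}")
        self.rows[key] = value


def write_everywhere(stores, key, value, dead_letters):
    """Attempt the write on every store; record failures instead of hiding them."""
    for store in stores:
        try:
            store.write(key, value)
        except IOError as err:
            dead_letters.append((store.name, key, value, str(err)))


def reconcile(source_of_truth, replica):
    """Return the keys whose value in the replica is missing or different."""
    return sorted(
        key
        for key, value in source_of_truth.rows.items()
        if replica.rows.get(key) != value
    )


def repair(source_of_truth, replica, keys):
    """Copy the listed rows from the source of truth into the replica."""
    for key in keys:
        replica.rows[key] = source_of_truth.rows[key]
```

In use, the dead-letter list is what someone would monitor, and `reconcile` followed by `repair` is what would run periodically so the copies converge.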

A second approach is to actually isolate your sensitive use cases, meaning that you should look at all of your workloads and see what really, truly cannot tolerate any degree of inconsistency, even for a moment. In our experience, within our domain, there have been very few of those use cases. Whenever you ask your business users, can data be inconsistent? Of course, it sounds bad to say that data is inconsistent. The real question that you want to ask your business users is, what are the real-world tangible commercial implications if I have two APIs, and they come up with a different answer over a 1-second period, over a 1-minute period, or over a 1-hour period? When you do that, you might find that it’s actually not as bad as you think. Then you can also start to have the more interesting conversations about, how am I going to find out if one of these things is inconsistent? If I find out one of these things is inconsistent, do I have a way to fix it? We like to call that detection and repair, and making sure that you have a detection method and a repair method for these things. Then the final way to deal with inconsistency is to not care at all. I think there can be some cases where that works, and is appropriate. In most situations, I think data governance and controls can cost very little. They’re very cheap to implement. They can go a long way towards making things feel more consistent.

3. Evaluate New Tech on a Total Cost Basis

The third insight is to evaluate new tech on a total cost basis. What I mean by this is that when you’re looking at the languages, the frameworks, and the infrastructure that are going to make up your software system, make sure to think about it from a total cost of ownership perspective, within the context of your enterprise. If you think about existing tech at your firm, it’s going to be enterprise ready by definition, because it exists in your enterprise. There’s prior investment in it. It’s integrated with all the other stuff that’s running at your company. You’ve got operational teams that are staffed and trained to operate and maintain it. Then you have developers that know how to code against it, too. On the flip side, enterprise ready tech tends to be on the older side, and not very cool. By cool, I mean having a lot of developer interest outside of your company or even within your company. Coolness does matter, because it affects your ability to hire developers, to inspire developers, to retain and train them, and all that stuff. There’s a real consideration here. When you’re thinking about onboarding new tech into your enterprise, you need to weigh that enterprise readiness against that coolness factor and whatever other tangible benefits you believe it’ll bring. It could be faster, or more scalable, or more reliable. You have to weigh that against, I have to put in the work to make this integrate better with my systems, I have to get my operational teams onboarded and trained on maintaining this, and so on. Just make sure to weigh both sides carefully.

On the slide here, I’ve laid out the technology landscape from the perspective of my enterprise, or at least within Aladdin Wealth. We have enterprise ready stuff on the right, and then we have less enterprise ready stuff on the left. This picture is going to look very different for every enterprise. For us, we’ve stuck mostly with the enterprise ready side, so the right side of the graph, but we did make a choice to onboard something that wasn’t quite enterprise ready, and that was Spark in the middle here. That was the right choice for us, because at the time, the alternative to Spark to do some distributed computing work was just very hard to code against. It was also that we had a Hadoop enterprise installation at the firm that was geared towards research workloads. We had something existing that we could just invest a little bit to fully operationalize and make it fully production ready to run our stuff. The last benefit of Spark here was also that it’s independent of resource managers. In theory, we could port this workload across a lot of different clusters. This landscape is what it looks like for us. It is going to look different for other enterprises. I do want to highlight, Cassandra is something that I personally think is very cool. It’s also very enterprise ready, because we have DBA teams operating it, and we have years of experience with it. It may not be enterprise ready tech at your enterprise. On the flip side, I put Kubernetes and gRPC there. This isn’t brand new tech either. It could very well be production ready at your enterprise, but for us, it just is not.

4. Use Open Source, and Consider Contributing

The next insight is to use open source and to consider contributing. Many hard problems have already been solved in open source. If you find something in open source that does almost everything you need, but not quite everything, because it’s missing a key piece of functionality, you’ve got two choices as an enterprise. Choice one is to get a bunch of developers together and try to build an internally developed equivalent of that open source product. Your development teams will probably have a lot of fun doing this, because developers love to write code, and they love to build complex things. On the flip side, it’s going to take a lot of time, and it’s going to take a lot of money. Then, what you end up with at the end is not going to do everything that the open source product does. It’s going to be missing all these triangles and squares of functionality in there, and it probably just isn’t going to be as good either. On top of that, your enterprise then has to go and maintain this forever.

The other approach, which is path 2 here, is to follow your firm’s open source engagement policy. If you have an OSPO, that would be a good place to start. Work with the community and engage with the maintainers of those projects to see if you can contribute that missing functionality back in, as that purple box over there. This doesn’t always work out. It’s not a guarantee. It could fail for many reasons. It could be that it’s a trade secret. It could be that the purple box doesn’t align with the direction of the open source project. When it does work out, it’s a win-win for everybody involved. The community benefits from your contribution, and your company benefits in so many ways, because your company can do this with less time and less money. The product you end up with is even better than what you could have gotten before, since the open source community tends to enforce higher standards on code than internal code gets. Your firm also doesn’t have to invest in maintaining this by itself forever. It can invest as part of the community in keeping this thing alive. Then, finally, for the developers themselves, there’s a key benefit here, which is that you get some fame and recognition, because you get a public record of your contribution. It’s good for everybody when you can follow path 2.

In Aladdin Wealth, we of course use tons of open source software. We use Linux, Java, Spring, Scala, Spark, all sorts of stuff like that. We also tend to follow path 1 more than path 2, but we want to be doing more of path 2, and be contributing more in open source. I do want to share one story, which is about matrix vector multiplication. That is something that’s very much core to our business, like I described earlier. We’ve actually taken both path 1 and path 2 for matrix operations. We started off our business and our product by using netlib BLAS. That is an open source, state of the art library from the ’70s. It’s written in Fortran. Everybody is using that for matrix operations. We used that to bootstrap our product and bootstrap our business. We achieved a significant level of scale with that, and things were great. What we found out was that as we expanded to other regions, as we expanded our capabilities and took on more complex portfolios, and added more functionality, BLAS was actually not fast enough for us. It was running out of memory in some cases. That seems like a surprise, given how old and battle tested this library is. That’s fundamentally because we did not have a general-purpose matrix problem, and BLAS is highly optimized for dense vector, dense matrix operations. Our matrix is dense, and it’s also positive definite and symmetric, because it’s a covariance matrix. Our vectors, though, are almost always a little sparse, or extremely sparse. We weren’t able to take advantage of that problem formulation with BLAS. What we did is we gradually unwound more of our invocations of BLAS methods and replaced them with internal equivalents that were optimized for our use cases, with these sparse vectors and dense matrices. It got to the point where we were only using the open source library for the interface of a class that defines, what’s a matrix? How do I iterate the entries, those kinds of things? It’s not a great situation to be in, because you’re pulling in a bunch of code just for an interface.
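
To make the sparse-vector, dense-symmetric-matrix point concrete, here is a small Python sketch. It is an illustration only, not the internal implementation or the EJML code mentioned in this talk; the function names and the dictionary representation of the sparse vector are assumptions. With k non-zero exposures, it touches on the order of k² matrix entries instead of all n² of them, and it uses symmetry to count each off-diagonal pair once.

```python
def quadratic_form_sparse(exposure: dict, cov: list) -> float:
    """Compute e^T C e where e is sparse (index -> value) and C is dense and symmetric."""
    items = sorted(exposure.items())
    total = 0.0
    for a, (i, ei) in enumerate(items):
        row = cov[i]
        total += ei * row[i] * ei  # diagonal term
        for j, ej in items[a + 1:]:
            # C[i][j] == C[j][i], so visit each off-diagonal pair once and double it.
            total += 2.0 * ei * row[j] * ej
    return total


def quadratic_form_dense(e: list, cov: list) -> float:
    """Reference implementation that visits every entry of C."""
    n = len(e)
    return sum(e[i] * cov[i][j] * e[j] for i in range(n) for j in range(n))
```

The dense version is included only as a cross-check; both should agree whenever `cov` is symmetric.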

What we did is we looked out at the open source matrix library landscape in Java, and we found that there was one library, EJML, which did most of what we needed. It even had one of the optimizations that we had added. It was optimized for a slightly different setup, in that you could have a sparse matrix and a dense vector, but we actually needed the reverse. We also needed one that exploited the symmetry as well. What we did is we worked with the maintainer through GitHub, by raising an issue, by raising a PR, by exchanging comments. We were able to add that optimization in as a very small piece of code, an additional method on top of a very comprehensive, very fully featured library within EJML. Then we were able to pull that code back, and we’re going to be able to retire a bunch of internal code as a result of that. That’s the value of open source. We’re getting to retire our internal code, and we’re also contributing back to the community. The story gets even better, because when we raised our PR with our optimization, the maintainer took a look at it, and when he was reviewing it, he made another optimization on top of that, and that optimization actually yielded a 10% throughput increase in certain cases. That extra 10% is something that we would never have benefited from, or even found out about, if we hadn’t first taken the step to open source our own, not-that-bespoke use case. We’ve got a post on the BlackRock Engineering blog at engineering.blackrock.com, which talks about this in detail.

5. Consider the Dimensions of Modularization

The next insight I want to talk about is about the dimensions of modularization. There are three of them that I want to talk about, there’s change, capabilities, and scale. I’m going to go through these in detail over the next couple of slides. There are a couple of different approaches to drawing module boundaries when you’re designing a software system. One of the very classic approaches dates back to a 1971 paper by David Parnas. His key insight, his conclusion at the end of that paper was that you should consider difficult design decisions or design decisions which are likely to change. Then your design goal becomes to hide those decisions, ideally inside of a single module. Then that lets you change those design decisions later on, just by modifying that one module, and you don’t have to touch everything else. The very common example of this is going to be your database. If your software system lives for a long time, if it’s successful, you may want to change out the database. It could be because of commercial reasons, you want to swap out your vendor. Or it could just be that you want to upgrade to a non-compatible version, or you want to take your code and run it somewhere else with a totally different database. To apply this principle, you would draw boundaries around your database access. That way, you can just swap out that one module later on when you actually need to do this, and you don’t have to rewrite your calculation logic for your pipelines or anything else like that. That was the dimension of change.
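
A minimal Python sketch of drawing a boundary around database access so the backend can be swapped later. All names here (`PositionStore`, the two store classes, `total_weight`) are hypothetical and chosen for illustration; the point is only that the calculation depends on a small interface and never on a specific database.

```python
from typing import Protocol


class PositionStore(Protocol):
    """The only thing calculation code is allowed to know about storage."""

    def positions(self, portfolio_id: str) -> dict: ...


class InMemoryPositionStore:
    """One hypothetical backend: a plain dictionary."""

    def __init__(self, data: dict):
        self._data = data

    def positions(self, portfolio_id: str) -> dict:
        return dict(self._data[portfolio_id])


class CsvPositionStore:
    """A second hypothetical backend: 'portfolio,security,weight' lines of text."""

    def __init__(self, text: str):
        self._rows = [line.split(",") for line in text.strip().splitlines()]

    def positions(self, portfolio_id: str) -> dict:
        return {sec: float(w) for pid, sec, w in self._rows if pid == portfolio_id}


def total_weight(store: PositionStore, portfolio_id: str) -> float:
    """Calculation logic: unchanged no matter which backend sits behind the interface."""
    return sum(store.positions(portfolio_id).values())
```

Swapping the database then means writing one new class that satisfies `PositionStore`; `total_weight` and anything like it stays untouched.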

The next dimension is capabilities. What I mean here is that you should think about breaking up your modules by their functional capabilities. These define what they do. Make sure that those capabilities are high level enough that they’re real, tangible things that product managers and business users can describe. They can tell you in plain language what they do, how they interact, and why they’re important in their daily lives. When you do that, your modules can become products themselves, with their own product lifecycle and their own product managers. Even better, they become building blocks that product managers can then combine in a lot of different ways to build many different products, depending on whatever use case they’re trying to target. In the wealth space, our capabilities, our building blocks, are going to be things like portfolios, securities, and portfolio analysis. A product manager could reasonably take those modules, those services and APIs, and say, “I want to build a portfolio supervision app. I want to be able to monitor portfolios, check when they’re within tolerance, make sure that they’re going to achieve my end client’s goals.” They can just combine those building blocks with a compliance alert building block, and they’ve got a product right there. Then another product manager can go and take those same building blocks and build a portfolio construction app, something that’s geared towards making changes to portfolios, analyzing those changes and making sure they’re appropriate, and using optimization to do that. You can do that with those same building blocks, and you can layer on optimization capabilities, householding data about your customers, all sorts of stuff like that. The best part about this is that, if you’ve done your job right, your product managers don’t need you for that layering, that construction out of these little building blocks. Your product managers can do that without you. That frees you up to think about the harder engineering problems that you need to be dealing with.

When you do that, if you take change and capabilities as your dimensions, that lets you treat batch, long-running, and interactive as just different deployments of the same capability. What I mean here is that if you’ve taken the business logic of a capability and created it as a pure library that has no dependencies on where it’s running, so it has no idea what the database is, or anything like that, you can tailor the deployment of that capability based on what you want. If you take a capability like portfolio analysis, you may want to run it tens of millions of times on batch infrastructure, but you also need to be able to provide it as an API. There, you need to be able to handle one portfolio very quickly and very reliably. You can’t fail on that one, whereas in batch you can fail and retry it in 30 minutes or something like that. To do that, you would take your library and bind it to reliable databases and to RPC or API gateways, stuff that’s geared towards that reliability, and you can deliver it as an API. Then you can take that same library, bind it to Spark, use files in HDFS for I/O, stuff that’s really geared towards throughput, and run the same capability across tens of millions of these portfolios. The nice thing about this approach is that because your library is totally stateless, you can use the same code in both places. You can be sure that the numbers you’re calculating are analytically consistent between the two deployments. You also get the benefit that if I add a new feature, if I add a new analytic, I get it for free on both sides, because I’ve created this as a library. If I make things faster, if I swap out this EJML library, it’s going to be faster on both sides.
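
The library-plus-bindings idea can be sketched like this in Python. It is a toy under assumptions: `analyze`, `handle_api_request`, and `run_batch` are hypothetical names, the "analysis" is just a weighted sum of factor exposures, and plain function calls stand in for the API gateway and for Spark.

```python
def analyze(weights: dict, exposures: dict) -> dict:
    """Pure, stateless capability: no I/O and no knowledge of where it runs."""
    factor_totals = {}
    for sec, w in weights.items():
        for factor, x in exposures[sec].items():
            factor_totals[factor] = factor_totals.get(factor, 0.0) + w * x
    return factor_totals


def handle_api_request(request: dict, exposure_lookup) -> dict:
    """Interactive binding: one portfolio in, one answer out, via a low-latency lookup."""
    weights = request["weights"]
    exposures = {sec: exposure_lookup(sec) for sec in weights}
    return {
        "portfolio_id": request["portfolio_id"],
        "analysis": analyze(weights, exposures),
    }


def run_batch(portfolios: dict, exposures: dict) -> dict:
    """Throughput binding: many portfolios; failures are collected so they can be retried."""
    results, retry = {}, []
    for portfolio_id, weights in portfolios.items():
        try:
            results[portfolio_id] = analyze(weights, exposures)
        except KeyError:
            retry.append(portfolio_id)  # e.g. a security with no exposure data yet
    return {"results": results, "retry": retry}
```

Because both bindings call the same `analyze`, a given portfolio gets identical numbers whether it arrives through the API path or the batch path.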

One of the interesting things, though, is that when you’re actually in your IDE, trying to do this, when you’re actually going through your code and modules, you’ll find that you’re adding interfaces that feel like they shouldn’t exist. It feels like a lot of extra work. It’s going to feel like a lot of extra complexity just for the sake of this, just to unchain your logic from your database, from your RPC, and all that stuff. At the end of the day, we’ve found that it’s really worth it to put that extra complexity in there, because it lets you unchain your capability from how it’s running. Then you can stamp out all the deployments you need. We’ve talked about here being an API deployment, a batch deployment. You can also have ones that are more geared, further skewing towards the reliability end, or whatever. There could be three or four other deployments you’ve got here. We actually didn’t follow this guidance to begin with. We actually did the obvious thing, which was to code everything inside of our Spark job in Scala. We had all of our calculation logic in there tightly bound to our Spark jobs at first. We had to rewrite the whole thing when we had to build an API. Now we know and we’re going to apply that to everything else we do.

The final dimension on the modularization is scale requirements. The way I think about this is that you may have two workloads that are fundamentally related, they deal with the same set of data, they deal with the same domain, but one of them has a very different scale profile from the other. You might be tempted to put them in as one module, but you should consider drawing the line between them to make them two separate modules. For example, portfolio analysis, the one I keep talking about, is going to be that purple box here. It’s a very calculation heavy capability, and it needs to run at very large scale, but it fundamentally only ever reads from a database. It’s very lightweight on your database. You can accelerate that with caches, and all that stuff. Then on the other hand, we also have low scale workloads, like when someone is making a trade on a portfolio, or someone’s updating a portfolio. It’s still dealing with portfolios but it’s at a much lower scale. It also writes to a database, and the writes tend to be more expensive than your reads. If you were to couple those two things in one module, then you’d have to scale them up together too. To scale up your purple boxes, you’d have to scale up your orange boxes in the same way. If you draw a line in between them, and you have two separate capabilities and two separate modules here, you can run them as separate services, and you can scale them up exactly the way you need. You end up with one orange and three purples instead of three oranges and three purples. This isn’t a hard and fast rule. I use the example of reads versus writes from databases, but there are plenty of cases where you actually don’t want to draw that boundary, even if there is that big scale difference.
For example, if you have a CRUD service, chances are a lot of people are calling the read call much more often than the rest, but you’re not going to split a CRUD service into separate services just to achieve that. We like to call this splitting between stateless services and stateful services, even though technically they’re all stateless, because they’re not maintaining their own state. As much as possible, we try to break things up into stateless things and try to carve out the stateless part of a workload so that we can scale it up. To summarize this fifth point here, we found that it’s valuable to consider multiple dimensions all at once when you’re drawing your module boundaries. There’s a dimension of change from Parnas’s paper, and there’s a dimension of capability, so basically trying to think about what your product building blocks are. Then there’s also the dimension of scale: thinking about what your scale requirements are. There’s no playbook, and there’s no universal right answer here. This is just something to think about, considering all these things all at once when you’re designing your system.
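The "one orange and three purples" arithmetic can be sketched as follows. The load and capacity figures are made-up numbers chosen only to reproduce the picture from the talk, and the function names are hypothetical.

```python
import math
from typing import Dict


def replicas(load_per_sec: float, capacity_per_instance: float) -> int:
    """Instances needed to serve a load, with at least one instance running."""
    return max(1, math.ceil(load_per_sec / capacity_per_instance))


def coupled(analysis_load: float, update_load: float,
            analysis_cap: float, update_cap: float) -> Dict[str, int]:
    """One module: both workloads ship together, so both scale to the larger need."""
    n = max(replicas(analysis_load, analysis_cap),
            replicas(update_load, update_cap))
    return {"analysis": n, "update": n}


def split(analysis_load: float, update_load: float,
          analysis_cap: float, update_cap: float) -> Dict[str, int]:
    """Two modules: each workload scales to its own need."""
    return {"analysis": replicas(analysis_load, analysis_cap),
            "update": replicas(update_load, update_cap)}


# Illustrative numbers: heavy read-only analysis, light write-heavy updates.
together = coupled(300, 5, analysis_cap=100, update_cap=20)
apart = split(300, 5, analysis_cap=100, update_cap=20)
```

With these numbers the coupled module needs three copies of everything, while the split deployment needs three analysis instances and a single update instance.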

6. No Backsies Allowed

The final insight I want to talk about now is no backsies allowed. The point here is that you need to be careful about what you expose externally, what you expose outside of your walls. Because once you expose something, your callers, your customers, they’re going to expect it to always work. They’re going to depend on it. They’re going to create these key critical workflows that require that. You’re never going to be able to take that back. A very common way this happens is that you add some features that are hard to maintain, they’re complex, and you don’t expect them to be that useful, but then one person, one client firm happens to use it, and you’re stuck with that. You have to carry it on forever. The other way this takes shape is with SLAs. If you’re overly permissive with your SLAs, if you set your limits too high, or if you accidentally don’t enforce them, you’re going to be in a lot of trouble. Being too permissive will reduce your agility. It’s going to increase the fragility of your system. An example of that would be if you get a calculation request, you get an analysis request that’s super large, or super complex in some way, and you happen to be able to process that successfully within a certain amount of time. The only reason that happened was because there was no other traffic going on, and so all of the typical bottlenecks were just totally free for that to happen. Your customers don’t care that that was the case. Your callers are going to say, it worked once, it’s going to work a second time, and they’re going to keep going on and on. You’re going to be stuck with that. This can also happen with retention, so data retention requirements. If you said that you’re going to purge data or not make data available after a certain amount of time, and then you don’t do that, and someone is able to fetch out their old data, they’re going to quietly start depending on that too.
In both of these SLA cases, these are real things that have happened to us, and your only choice is to figure out how you’re going to make that work. There’s at least two ways you can do that. One way is you can spend money to make it work. You can spend money on hardware, whether it’s the memory, or the disk, or CPU cores, and you can make sure that you can continue that quality of service. The other way that this can happen is that your developers can be so inspired by these challenges that they try to find more optimizations and performance out of their code. That’s actually a good thing. You want your engineers to be challenged to innovate and deliver for your clients. It’s still a cost that you didn’t have to pay at that time. The key is that you should be conservative about your throttles, and make sure you have throttles on everything you do. That way you don’t get into this situation. If you have service readiness checklists as part of deployments, you should think about adding a throttle check, and reasonable validation of that throttle, to that service readiness check. Also, yes, don’t expose any features that you don’t plan to support forever, because, otherwise, you’re stuck with these things, they’re going to be like those anchors on the page here. They’re going to prevent you from getting things done, and otherwise slow you down.
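One way to picture "throttles on everything" is a small admission check in front of the analysis call. This is a sketch under stated assumptions, not the mechanism used in Aladdin: the `Throttle` class, the limit on positions per request, and the token-bucket rate limit are all hypothetical choices for illustration.

```python
import time
from typing import Callable


class Throttle:
    """Rejects oversized requests and caps the request rate with a token bucket."""

    def __init__(self, max_positions: int, rate_per_sec: float, burst: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.max_positions = max_positions
        self.rate = rate_per_sec
        self.capacity = float(burst)
        self.tokens = float(burst)
        self.clock = clock
        self.last = clock()

    def admit(self, position_count: int) -> bool:
        # Size limit: refuse even when the system is idle, so callers never
        # come to depend on a request that only works without other traffic.
        if position_count > self.max_positions:
            return False
        # Refill tokens for the time elapsed since the last check, up to burst.
        now = self.clock()
        self.tokens = min(self.capacity,
                          self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


# A fake clock makes the behavior deterministic for a readiness check.
fake_now = [0.0]
throttle = Throttle(max_positions=1000, rate_per_sec=1.0, burst=2,
                    clock=lambda: fake_now[0])
decisions = [throttle.admit(5000), throttle.admit(10),
             throttle.admit(10), throttle.admit(10)]
fake_now[0] = 1.0
after_wait = throttle.admit(10)
```

Because the clock is injectable, the same check can be exercised in a service readiness test without waiting on real time.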

Summary

We’ve achieved a lot of scale with Aladdin Wealth. We’ve got more than 15 million portfolios analyzed on our system every night. We’ve got more than 3 million API calls that we do throughout the day. We’ve got surges of more than 8000 portfolios per minute. To achieve this level of scale, these have been some of the key insights that we’ve learned along the way. I also want to leave you with one other point here, which is that writing great code, profiling it with all sorts of tools, and just generally being passionate about the performance of your applications and your customer experience is all going to be very important to being successful. On top of that, people: hiring, retaining, and developing the people on your team is going to be a key part of whether or not you can achieve this.

Questions and Answers

Participant 1: I was curious about like, when you want to break things out into modules and dissolve them into their own products. You’ve done this a few times, it sounds like. Have you been taking more of like an incubator approach where you know ahead of time that some service is going to be used by multiple teams, and so you build it upfront with that way in mind, like not committing to any particular use case? Or, are you still starting off with one pure use case, it seems like it has a lot of potential broad applicability, and then you bring it out into your platform once it’s proven itself in one case.

Chen: I think we try to do the former. We try to build capabilities first, and so make sure that we map out what we think a capability needs to be. That it’s not very specific to one, I think you’d call it a hero use case, and we try to shape it and all that stuff. We are also an enterprise B2B business, and so sometimes we do have to really over-index on one use case versus another. It’s a balance, in that you need your product managers to get a little bit more technical to actually do this. They also need to think from a platform-first approach and think about the platform as being the product itself, not the actual experience of the end users being your product.

Participant 2: You mentioned the business problem, saying the business may be ok if data is not matching between the formats. There is a lot of data replication here because you’re showing us four different data streams. As an enterprise, is your policy ok with that kind of data replication?

Chen: I did mention data inconsistency as a tradeoff, but the other one is cost, because there’s going to be a lot of copies of these things there. That is something that you’ll have to weigh in terms of what is the benefit you’re getting out of that. For example, if most of your Solr use cases can be satisfied with Snowflake or Hive, and the reasonable expectations of performance from the user do not demand what you get from Solr, then there’s a cost dimension here that you can say, I choose to remove one copy of this data, save on storage, but then you suffer a little bit somewhere else. That’s the story of that. This is a tradeoff between cost and user experience here.

Participant 2: It’s not more from the cost perspective, which one is the certified data? If you’re feeding the data to a downstream system or to an external party, what is the criteria you’re using?

Chen: You do have to pick one of those things as being your source of truth. That source of truth when you’re dealing with analytical data can be something like files, like something that you’re going to archive. If you have a factory that’s generating the stuff overnight, you can say, I’m going to snapshot at this point, this is what I’m going to put into files. In our line of business, that’s actually pretty helpful, because we also need to deliver large-scale extracts to analyze to say like, this is what we actually saw. This is what we computed. This is what the universe looks like. This is the stuff that you can go and warehouse in your data warehouse.

Participant 3: I want to hear, since you talked about a couple of migrations there, just about the role of testing, and how you kind of thought of that, especially dealing with these fairly advanced linear algebra problems and making sure that you’re getting those same numbers before the migration, after the migration, and going into the future. Maybe just hear a little bit about that side of things.

Chen: Like I mentioned, analytical consistency across the deployments is very important, and so is consistency after releases. I think that’s definitely an evolving story for us on an ongoing basis. I will talk about one of those migrations, which was when we moved from our Scala-based risk engine to our Java one. The testing for that took months. We built specialized tooling to do that, to make sure every single number we calculated across all of our client firms was the same afterwards. It actually wasn’t the same all the time. It turns out we had a couple weird cases or modeling treatments that had to get updated. You do have to build tooling and invest in that to make sure that’s the case. Because when you’re dealing with these models, your client firms trust that you’re right. It’s complex to calculate these things, but if they also shift, and there’s some bias that’s introduced, that’s a huge deal. We also have checks and controls in place at all levels. When you think about that factory and those nodes, your yield curve model is going to have its own set of backtesting results after every change and all that. Every aspect of that graph will get validated.
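The talk does not describe the comparison tooling itself, so the following is only a rough sketch of what a before-and-after check between two engines might look like. The function name, the keyed-by-portfolio result layout, and the tolerances are all assumptions; `math.isclose` is used so that harmless floating-point noise is not reported as a difference.

```python
import math
from typing import Dict, List, Optional, Tuple

Diff = Tuple[str, Optional[float], Optional[float]]


def compare_results(old: Dict[str, float], new: Dict[str, float],
                    rel_tol: float = 1e-9, abs_tol: float = 1e-12) -> List[Diff]:
    """Return every key whose value is missing on one side or differs."""
    diffs: List[Diff] = []
    for key in sorted(old.keys() | new.keys()):
        a, b = old.get(key), new.get(key)
        if a is None or b is None:
            diffs.append((key, a, b))  # present in only one engine's output
        elif not math.isclose(a, b, rel_tol=rel_tol, abs_tol=abs_tol):
            diffs.append((key, a, b))  # a real numerical difference
    return diffs


# Illustrative outputs from a hypothetical old and new engine.
old_engine = {"p1": 0.1 + 0.2, "p2": 1.0, "p3": 5.0}
new_engine = {"p1": 0.3, "p2": 1.01, "p4": 2.0}
report = compare_results(old_engine, new_engine)
```

Each reported difference then needs a human decision: either a bug in the new engine or, as mentioned above, a modeling treatment that had to get updated.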

Participant 4: I work in AgTech, and so we have, at a smaller scale, but a similar scenario like what you outline where you’ve got an analysis engine. It’s stateless, so you take some inputs, you generate a whole bunch of analyses outputs. Even though the engine is technically stateless, it does have an extra dependency on some public data that’s published by the government. Some of the parameters that feed into the engine are pulled dynamically by the engine out of that database. That’s not part of the inputs that are going in. If your system has this kind of extra database dependency, is it still viable to have that multi-deployment that you described where you’ve got the engine here in an API, you’ve got the engine over here in batch? Is it practical and easy to move around that way when you’ve got an external dependency like this? Is that maybe a problem that you have encountered.

Chen: We do have external dependencies. I do think it depends very much on the circumstances and the nature of what that external dependency is. We do have some of those things that we depend on, for example, maybe a security model that is slow and unpredictable, and that we don’t control within the rest of Aladdin. What we do there is we evaluate to see if we should either rebuild that thing or have a specialized cache for that, and then take ownership of that, basically creating a very clean boundary. Think about the modularization, like drawing a module around there. You might have to add some code to do that. That’s something that we have done. We’ve chosen to like, we’re going to make a copy of these things and make it available on the outside.
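As a sketch of drawing a module boundary around a slow external dependency, here is a small cache that owns a local copy of the values it has fetched. The class name, the time-to-live policy, and the `slow_security_model` stand-in are hypothetical; the talk does not say how the specialized cache is built.

```python
import time
from typing import Callable, Dict, Tuple


class CachedDependency:
    """Clean boundary around an external lookup: callers only ever see get()."""

    def __init__(self, fetch: Callable[[str], float], ttl_seconds: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self._fetch = fetch
        self._ttl = ttl_seconds
        self._clock = clock
        self._entries: Dict[str, Tuple[float, float]] = {}  # key -> (value, fetched_at)

    def get(self, key: str) -> float:
        now = self._clock()
        entry = self._entries.get(key)
        if entry is not None and now - entry[1] < self._ttl:
            return entry[0]  # fresh local copy, no external call
        value = self._fetch(key)  # slow or unpredictable external call
        self._entries[key] = (value, now)
        return value


# Count external calls to show that the cache absorbs repeated lookups.
calls = []


def slow_security_model(security_id: str) -> float:
    calls.append(security_id)
    return 42.0


fake_now = [0.0]
model = CachedDependency(slow_security_model, ttl_seconds=60.0,
                         clock=lambda: fake_now[0])
first = model.get("SEC1")
second = model.get("SEC1")
calls_before_expiry = len(calls)
fake_now[0] = 61.0
third = model.get("SEC1")
```

The analysis library can take `model.get` as its data-access function, so the same boundary works in both the API and the batch deployment.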

Participant 4: It sounds like you take the dependencies, and you just go ahead and copy them over, and as far as you’re concerned, for your use case, it’s worth it. That’s just a choice we have to make.

Participant 5: I imagine that you work with hundreds of thousands of customers that are all different, no two customers are alike. When you’re designing an engineering system, so how do you account for that? To what extent, do you try to have a system that works for at least most of the customers? Do you treat them as different channels, give them different instances, or the pipeline, or the architecture, different data storage? I’m really interested in your perspective.

Chen: I think for the most part, we like to be building one platform and one set of technology to back it, and all sorts of stuff like that. I’m going to show the computational graph here. We do like to build one product and one thing, but when you have clients across the globe, and you have client firms that are using this for tons of different people with a lot of different circumstances, you’re right, they’re going to have a lot of different things. Doing business in Europe is very different. It’s not just GDPR, it’s also how they manage money. They don’t even use the term financial advisor over there. They have very different business models. When you’re global, you have to be able to adapt your system to all of these different markets if you want to succeed. I talked about locking these things down. You also have to open up certain points of configuration, but you want to be very intentional about which parts you’re unlocking because you’re trading off your scale as a result. For example, every client firm is going to have a different set of risk assumptions. They may choose to weight with different half-lives. They may choose to do different overlaps, when you think about some of these data analysis techniques. They may have different wants, and so you have to be able to allow them to vary them, but it’s one set for that one enterprise, and that’s one of the beauties of a B2B enterprise business. You can lock it down at that point. In the same way, a client firm’s view on what is an opportunity, what is a violation, all these kinds of things, like compliance alerts, need to be specific to your customers as well. Also, one key thing about Aladdin is that we don’t have any investment views ourselves, we have to be able to take investment views from our customers. That’s another point of configuration. Each one of those things you do, you have to weigh those tradeoffs again. What is the value?
What is the commercial impact of opening that up versus the cost of how are you going to be able to scale there? Really requires very close partnership.
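To make the half-life example concrete, here is a sketch of one intentional point of configuration: a per-firm half-life feeding a standard exponential-decay weighting, where an observation that is one half-life old gets half the weight of the newest one. The firm names, the configuration layout, and the assumption that the half-lives in the talk refer to this kind of weighting are all illustrative.

```python
from typing import Dict, List


def half_life_weights(n_obs: int, half_life: float) -> List[float]:
    """Normalized exponential-decay weights; index 0 is the most recent observation."""
    raw = [0.5 ** (age / half_life) for age in range(n_obs)]
    total = sum(raw)
    return [w / total for w in raw]


# Hypothetical per-firm risk assumptions: one locked-down set per enterprise.
CLIENT_RISK_CONFIG: Dict[str, Dict[str, float]] = {
    "firm_a": {"half_life_days": 60.0},
    "firm_b": {"half_life_days": 250.0},
}


def weights_for(client_id: str, n_obs: int) -> List[float]:
    """Look up the firm's configured half-life and build its weights."""
    return half_life_weights(n_obs, CLIENT_RISK_CONFIG[client_id]["half_life_days"])


example = half_life_weights(3, half_life=1.0)  # raw weights 1, 0.5, 0.25
firm_a = weights_for("firm_a", 100)
firm_b = weights_for("firm_b", 100)
```

A shorter half-life puts more weight on recent observations, so the two firms get different numbers from the same engine while the calculation code stays identical.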
