Month: August 2024

Allspring Global Investments Holdings LLC Lowers Holdings in MongoDB, Inc. (NASDAQ:MDB)

MMS • RSS

Allspring Global Investments Holdings LLC trimmed its holdings in MongoDB, Inc. (NASDAQ:MDB – Free Report) by 20.5% in the 2nd quarter, according to its most recent filing with the SEC. The institutional investor owned 387,176 shares of the company’s stock after selling 99,967 shares during the quarter. Allspring Global Investments Holdings LLC owned about 0.53% of MongoDB worth $96,778,000 at the end of the most recent reporting period.

Allspring Global Investments Holdings LLC trimmed its holdings in MongoDB, Inc. (NASDAQ:MDB – Free Report) by 20.5% in the 2nd quarter, according to its most recent filing with the SEC. The institutional investor owned 387,176 shares of the company’s stock after selling 99,967 shares during the quarter. Allspring Global Investments Holdings LLC owned about 0.53% of MongoDB worth $96,778,000 at the end of the most recent reporting period.

Other large investors also recently bought and sold shares of the company. Transcendent Capital Group LLC bought a new stake in MongoDB in the 4th quarter worth about $25,000. MFA Wealth Advisors LLC bought a new stake in shares of MongoDB during the 2nd quarter valued at about $25,000. YHB Investment Advisors Inc. bought a new stake in shares of MongoDB during the 1st quarter valued at about $41,000. GAMMA Investing LLC bought a new stake in shares of MongoDB during the 4th quarter valued at about $50,000. Finally, Sunbelt Securities Inc. grew its stake in shares of MongoDB by 155.1% during the 1st quarter. Sunbelt Securities Inc. now owns 125 shares of the company’s stock valued at $45,000 after purchasing an additional 76 shares during the period. 89.29% of the stock is currently owned by institutional investors.

MongoDB Price Performance

MDB opened at $242.69 on Tuesday. MongoDB, Inc. has a 12-month low of $212.74 and a 12-month high of $509.62. The company has a current ratio of 4.93, a quick ratio of 4.93 and a debt-to-equity ratio of 0.90. The business’s fifty day simple moving average is $245.72 and its 200-day simple moving average is $320.26. The company has a market capitalization of $17.80 billion, a price-to-earnings ratio of -87.54 and a beta of 1.13.

MongoDB (NASDAQ:MDB – Get Free Report) last posted its quarterly earnings results on Thursday, May 30th. The company reported ($0.80) earnings per share (EPS) for the quarter, hitting analysts’ consensus estimates of ($0.80). MongoDB had a negative return on equity of 14.88% and a negative net margin of 11.50%. The business had revenue of $450.56 million for the quarter, compared to the consensus estimate of $438.44 million. On average, equities research analysts anticipate that MongoDB, Inc. will post -2.67 earnings per share for the current fiscal year.

Insider Buying and Selling

In related news, CAO Thomas Bull sold 138 shares of the company’s stock in a transaction on Tuesday, July 2nd. The stock was sold at an average price of $265.29, for a total transaction of $36,610.02. Following the completion of the sale, the chief accounting officer now directly owns 17,222 shares in the company, valued at approximately $4,568,824.38. The sale was disclosed in a document filed with the Securities & Exchange Commission, which is available at the SEC website. In other MongoDB news, Director John Dennis Mcmahon sold 10,000 shares of the stock in a transaction dated Monday, June 24th. The stock was sold at an average price of $228.00, for a total value of $2,280,000.00. Following the completion of the sale, the director now directly owns 20,020 shares in the company, valued at $4,564,560. The transaction was disclosed in a document filed with the SEC, which is available at this link. Also, CAO Thomas Bull sold 138 shares of the stock in a transaction dated Tuesday, July 2nd. The shares were sold at an average price of $265.29, for a total value of $36,610.02. Following the completion of the sale, the chief accounting officer now owns 17,222 shares of the company’s stock, valued at $4,568,824.38. The disclosure for this sale can be found here. Insiders sold 30,179 shares of company stock worth $7,368,989 in the last three months. 3.60% of the stock is owned by insiders.

Analysts Set New Price Targets

MDB has been the subject of a number of recent research reports. Scotiabank cut their price target on MongoDB from $385.00 to $250.00 and set a “sector perform” rating for the company in a research note on Monday, June 3rd. JMP Securities cut their price objective on MongoDB from $440.00 to $380.00 and set a “market outperform” rating for the company in a research note on Friday, May 31st. Stifel Nicolaus cut their price objective on MongoDB from $435.00 to $300.00 and set a “buy” rating for the company in a research note on Friday, May 31st. Citigroup cut their price objective on MongoDB from $480.00 to $350.00 and set a “buy” rating for the company in a research note on Monday, June 3rd. Finally, Bank of America cut their price objective on MongoDB from $500.00 to $470.00 and set a “buy” rating for the company in a research note on Friday, May 17th. One research analyst has rated the stock with a sell rating, five have given a hold rating, nineteen have assigned a buy rating and one has given a strong buy rating to the company. According to data from MarketBeat.com, MongoDB presently has an average rating of “Moderate Buy” and a consensus target price of $355.74.

Check Out Our Latest Research Report on MDB

MongoDB Profile

MongoDB, Inc, together with its subsidiaries, provides general purpose database platform worldwide. The company provides MongoDB Atlas, a hosted multi-cloud database-as-a-service solution; MongoDB Enterprise Advanced, a commercial database server for enterprise customers to run in the cloud, on-premises, or in a hybrid environment; and Community Server, a free-to-download version of its database, which includes the functionality that developers need to get started with MongoDB.

See Also

Receive News & Ratings for MongoDB Daily – Enter your email address below to receive a concise daily summary of the latest news and analysts’ ratings for MongoDB and related companies with MarketBeat.com’s FREE daily email newsletter.

MMS • RSS

NEW YORK, Aug. 28, 2024 /PRNewswire/ — MongoDB, Inc. (NASDAQ: MDB) today announced that it will present at three upcoming conferences: the Citi 2024 Global TMT Conference in New York, NY, the Goldman Sachs Communacopia + Technology Conference in San Francisco, CA, and the Piper Sandler Growth Frontiers Conference in Nashville, TN.

- Michael Gordon, Chief Operating Officer and Chief Financial Officer, and Serge Tanjga, Senior Vice President of Finance, will present at the Citi Conference on Wednesday, September 4, 2024 at 3:50 PM Eastern Time.

- Dev Ittycheria, President and Chief Executive Officer, and Michael Gordon, Chief Operating Officer and Chief Financial Officer, will present at the Goldman Sachs Conference on Monday, September 9, 2024 at 8:50 AM Pacific Time (11:50 AM Eastern Time).

- Michael Gordon, Chief Operating Officer and Chief Financial Officer, and Serge Tanjga, Senior Vice President of Finance, will present at the Piper Sandler Conference on Wednesday, September 11, 2024 at 9:00 AM Central Time (10:00 AM Eastern Time).

A live webcast of each presentation will be available on the Events page of the MongoDB investor relations website at https://investors.mongodb.com/news-events/events. A replay of the webcasts will also be available for a limited time.

About MongoDB

Headquartered in New York, MongoDB’s mission is to empower innovators to create, transform, and disrupt industries by unleashing the power of software and data. Built by developers, for developers, MongoDB’s developer data platform is a database with an integrated set of related services that allow development teams to address the growing requirements for today’s wide variety of modern applications, all in a unified and consistent user experience. MongoDB has tens of thousands of customers in over 100 countries. The MongoDB database platform has been downloaded hundreds of millions of times since 2007, and there have been millions of builders trained through MongoDB University courses. To learn more, visit mongodb.com.

Investor Relations

Brian Denyeau

ICR for MongoDB

646-277-1251

ir@mongodb.com

Media Relations

MongoDB

press@mongodb.com

![]() View original content to download multimedia:https://www.prnewswire.com/news-releases/mongodb-inc-to-present-at-the-citi-2024-global-tmt-conference-the-goldman-sachs-communacopia–technology-conference-and-the-piper-sandler-growth-frontiers-conference-302233087.html

View original content to download multimedia:https://www.prnewswire.com/news-releases/mongodb-inc-to-present-at-the-citi-2024-global-tmt-conference-the-goldman-sachs-communacopia–technology-conference-and-the-piper-sandler-growth-frontiers-conference-302233087.html

SOURCE MongoDB, Inc.

MongoDB, Inc. to Present at the Citi 2024 Global TMT Conference, the Goldman … – IT News Online

MMS • RSS

Copyright 2024 PR Newswire. All Rights Reserved

2024-08-28

NEW YORK, Aug. 28, 2024 /PRNewswire/ — MongoDB, Inc. (NASDAQ: MDB) today announced that it will present at three upcoming conferences: the Citi 2024 Global TMT Conference in New York, NY, the Goldman Sachs Communacopia + Technology Conference in San Francisco, CA, and the Piper Sandler Growth Frontiers Conference in Nashville, TN.

- Michael Gordon, Chief Operating Officer and Chief Financial Officer, and Serge Tanjga, Senior Vice President of Finance, will present at the Citi Conference on Wednesday, September 4, 2024 at 3:50 PM Eastern Time.

- Dev Ittycheria, President and Chief Executive Officer, and Michael Gordon, Chief Operating Officer and Chief Financial Officer, will present at the Goldman Sachs Conference on Monday, September 9, 2024 at 8:50 AM Pacific Time (11:50 AM Eastern Time).

- Michael Gordon, Chief Operating Officer and Chief Financial Officer, and Serge Tanjga, Senior Vice President of Finance, will present at the Piper Sandler Conference on Wednesday, September 11, 2024 at 9:00 AM Central Time (10:00 AM Eastern Time).

A live webcast of each presentation will be available on the Events page of the MongoDB investor relations website at https://investors.mongodb.com/news-events/events. A replay of the webcasts will also be available for a limited time.

About MongoDB

Headquartered in New York, MongoDB’s mission is to empower innovators to create, transform, and disrupt industries by unleashing the power of software and data. Built by developers, for developers, MongoDB’s developer data platform is a database with an integrated set of related services that allow development teams to address the growing requirements for today’s wide variety of modern applications, all in a unified and consistent user experience. MongoDB has tens of thousands of customers in over 100 countries. The MongoDB database platform has been downloaded hundreds of millions of times since 2007, and there have been millions of builders trained through MongoDB University courses. To learn more, visit mongodb.com.

Investor Relations

Brian Denyeau

ICR for MongoDB

646-277-1251

ir@mongodb.com

Media Relations

MongoDB

press@mongodb.com

![]() View original content to download multimedia:https://www.prnewswire.com/news-releases/mongodb-inc-to-present-at-the-citi-2024-global-tmt-conference-the-goldman-sachs-communacopia–technology-conference-and-the-piper-sandler-growth-frontiers-conference-302233087.html

View original content to download multimedia:https://www.prnewswire.com/news-releases/mongodb-inc-to-present-at-the-citi-2024-global-tmt-conference-the-goldman-sachs-communacopia–technology-conference-and-the-piper-sandler-growth-frontiers-conference-302233087.html

SOURCE MongoDB, Inc.

MongoDB, Inc. to Present at the Citi 2024 Global TMT Conference, the Goldman … – Stock Titan

MMS • RSS

NEW YORK, Aug. 28, 2024 /PRNewswire/ — MongoDB, Inc. (NASDAQ: MDB) today announced that it will present at three upcoming conferences: the Citi 2024 Global TMT Conference in New York, NY, the Goldman Sachs Communacopia + Technology Conference in San Francisco, CA, and the Piper Sandler Growth Frontiers Conference in Nashville, TN.

- Michael Gordon, Chief Operating Officer and Chief Financial Officer, and Serge Tanjga, Senior Vice President of Finance, will present at the Citi Conference on Wednesday, September 4, 2024 at 3:50 PM Eastern Time.

- Dev Ittycheria, President and Chief Executive Officer, and Michael Gordon, Chief Operating Officer and Chief Financial Officer, will present at the Goldman Sachs Conference on Monday, September 9, 2024 at 8:50 AM Pacific Time (11:50 AM Eastern Time).

- Michael Gordon, Chief Operating Officer and Chief Financial Officer, and Serge Tanjga, Senior Vice President of Finance, will present at the Piper Sandler Conference on Wednesday, September 11, 2024 at 9:00 AM Central Time (10:00 AM Eastern Time).

A live webcast of each presentation will be available on the Events page of the MongoDB investor relations website at https://investors.mongodb.com/news-events/events. A replay of the webcasts will also be available for a limited time.

About MongoDB

Headquartered in New York, MongoDB’s mission is to empower innovators to create, transform, and disrupt industries by unleashing the power of software and data. Built by developers, for developers, MongoDB’s developer data platform is a database with an integrated set of related services that allow development teams to address the growing requirements for today’s wide variety of modern applications, all in a unified and consistent user experience. MongoDB has tens of thousands of customers in over 100 countries. The MongoDB database platform has been downloaded hundreds of millions of times since 2007, and there have been millions of builders trained through MongoDB University courses. To learn more, visit mongodb.com.

Investor Relations

Brian Denyeau

ICR for MongoDB

646-277-1251

ir@mongodb.com

Media Relations

MongoDB

press@mongodb.com

![]() View original content to download multimedia:https://www.prnewswire.com/news-releases/mongodb-inc-to-present-at-the-citi-2024-global-tmt-conference-the-goldman-sachs-communacopia–technology-conference-and-the-piper-sandler-growth-frontiers-conference-302233087.html

View original content to download multimedia:https://www.prnewswire.com/news-releases/mongodb-inc-to-present-at-the-citi-2024-global-tmt-conference-the-goldman-sachs-communacopia–technology-conference-and-the-piper-sandler-growth-frontiers-conference-302233087.html

SOURCE MongoDB, Inc.

MMS • RSS

NEW YORK, NY / ACCESSWIRE / August 28, 2024 / Leading securities law firm Bleichmar Fonti & Auld LLP announces a lawsuit has been filed against MongoDB, Inc. (Nasdaq:MDB) and certain of the Company’s senior executives.

If you suffered losses on your MongoDB investment, you are encouraged to submit your information at https://www.bfalaw.com/cases-investigations/mongodb-inc.

Investors have until September 9, 2024 to ask the Court to be appointed to lead the case. The complaint asserts claims under Sections 10(b) and 20(a) of the Securities Exchange Act of 1934 on behalf of investors in MongoDB securities. The case is pending in the U.S. District Court for the Southern District of New York and is captioned John Baxter v. MongoDB, Inc., et al., No. 1:24-cv-05191.

What is the Lawsuit About?

The complaint alleges that the Company misrepresented the purported benefits stemming from the restructuring of its sales force. This includes how the restructuring helped reduce friction in acquiring new customers and increased new workload acquisition among existing customers.

These statements were allegedly materially false and misleading. In truth, MongoDB’s sales force restructuring resulted in a near total loss of upfront customer commitments, a significant reduction in actionable information gathered by the sales force, and hindered enrollment and revenue growth.

On March 7, 2024, the Company allegedly announced that due to the sales restructuring, it experienced an annual decrease of approximately $40 million in multiyear license revenue, anticipated near zero revenue from unused Atlas commitments (one of its core offerings) in fiscal year 2025, and provided a disappointing revenue growth forecast that trailed that of the prior year. This news caused the price of MongoDB stock to decline $28.59 per share, or about 7%, from $412.01 per share on March 7, 2024, to $383.42 per share on March 8, 2024.

Then, on May 30, 2024, the Company again announced significantly reduced growth expectations, this time cutting fiscal year 2025 growth projections further, again attributing the losses to the sales force restructuring. On this news, the price of MongoDB stock declined $73.94 per share, or nearly 24%, from $310.00 per share on May 30, 2024, to $236.06 per share on May 31, 2024.

Click here if you suffered losses: https://www.bfalaw.com/cases-investigations/mongodb-inc.

What Can You Do?

If you invested in MongoDB, Inc. you have rights and are encouraged to submit your information to speak with an attorney.

All representation is on a contingency fee basis, there is no cost to you. Shareholders are not responsible for any court costs or expenses of litigation. The Firm will seek court approval for any potential fees and expenses. Submit your information:

https://www.bfalaw.com/cases-investigations/mongodb-inc

Or contact us at:

Ross Shikowitz

ross@bfalaw.com

212-789-3619

Why Bleichmar Fonti & Auld LLP?

Bleichmar Fonti & Auld LLP is a leading international law firm representing plaintiffs in securities class actions and shareholder litigation. It was named among the Top 5 plaintiff law firms by ISS SCAS in 2023 and its attorneys have been named Titans of the Plaintiffs’ Bar by Law360 and SuperLawyers by Thompson Reuters. Among its recent notable successes, BFA recovered over $900 million in value from Tesla, Inc.’s Board of Directors (pending court approval), as well as $420 million from Teva Pharmaceutical Ind. Ltd.

For more information about BFA and its attorneys, please visit https://www.bfalaw.com.

https://www.bfalaw.com/cases-investigations/mongodb-inc

Attorney advertising. Past results do not guarantee future outcomes.

SOURCE: Bleichmar Fonti & Auld LLP

View the original press release on accesswire.com

Microsoft Releases Prompty: New VS Code Extension for Integrating LLMs into .NET Development

MMS • Robert Krzaczynski

Microsoft released a new Visual Studio Code extension called Prompty, designed to integrate Large Language Models (LLMs) like GPT-4o directly into .NET development workflows. This free tool aims to simplify the process of adding AI-driven capabilities to applications. The official release post includes a practical example demonstrating how Prompty can be used in real-world scenarios.

Prompty is available for free on the Visual Studio Code Marketplace and offers .NET developers an intuitive interface to interact with LLMs. Whether creating chatbots, generating content, or enhancing other AI-driven functionalities, Prompty provides an easy way to integrate these capabilities into existing development environments.

While Prompty has been well-received for its innovative approach to integrating AI into .NET development, some community members have expressed concerns about its availability. On LinkedIn, Jordi Gonzalez Segura expressed disappointment that Prompty is not accessible to those using Visual Studio Professional.

Using Prompty in Visual Studio Code involves several steps:

- Installation: Developers start by installing the Prompty extension from the Visual Studio Code Marketplace.

- Setup: After installation, users configure the extension by providing API keys and setting up necessary parameters to connect to the LLM, such as GPT-4o.

- Integration: Prompty integrates into the development workflow by allowing users to create new files or modify existing ones with embedded prompts. Commands and snippets are provided to easily insert prompts and handle responses from the LLM.

- Development: Developers can write prompts directly in the codebase to interact with the LLM. Prompty supports various prompt formats and offers syntax highlighting, making it easier to read and maintain prompts. These prompts help in generating code snippets, creating documentation, or troubleshooting by querying the LLM for specific issues.

- Testing: Prompty enables rapid iteration and testing, allowing developers to refine prompts to improve response accuracy and relevance.

Bruno Capuano, a principal cloud advocate at Microsoft, prepared a real-world use case demonstrating how Prompty can enhance a .NET WebAPI project. In this example, Prompty generates detailed weather forecast descriptions, transforming the standard output into richer and more engaging content. Developers can dynamically produce detailed weather summaries by defining prompts within a .prompty file and setting up the necessary configurations to interact with the LLM. This practical application leverages the Semantic Kernel to automate the generation of descriptive text, improving the quality and informativeness of the application’s output.

Additional information about Prompty, its features, and integration into development workflows can be found on the Prompty Visual Studio Code extension page or in the Prompty source code available on GitHub.

MMS • Heather VanCura

Transcript

VanCura: I’ll highlight some of the things that were shared, but not go into too deep with details, but really talk about how can you take those lessons and take that back to your environment and your work place, and really be the leaders to continue to innovate and collaborate within your own company based on some of the things that you’ve learned here.

I work for Oracle, so I do have some forward-looking things here. Primarily, I want to talk about how you can get involved and how you can bring forward your group and the Java improvements that we’ve talked about. We even talked about in the unconference session on, how do you keep up with the latest versions of Java? How do you innovate and collaborate? That’s really what my role is all about, as chair of the JCP.

That’s really the perspective that I’m speaking to you from, is in that role leading the Java Community Process. I’ve been involved with the Java developer ecosystem for over two decades. I’ve seen quite a few changes come. I’m really excited about the future. The future is really bright for Java. I’m going to show you some of the reasons why in my slides.

JVM Trends

We talked about the things that we learned here. I think Netflix and LinkedIn really shared some interesting examples of the performance improvements that they’ve been able to achieve by migrating past Java 8, so especially migrating to Java 17, all the productivity improvements and performance improvements that they’ve been able to see. What I’d like to do here is show you how you could also do that in your teams when you go back to work. I think what you see when we talk about things in the community, you see that working together we achieve more.

There’s an African proverb that I like to talk about in my work, which is, if you want to go fast, go alone, but if you want to go far, go together. I think that the Java community is really unique in that perspective. LinkedIn talked about how easy they thought the migration was. I think that’s a great example. We have something to learn from LinkedIn here. Alex from LinkedIn was sharing some of the things that you can do. I’m going to share some of those things with you here.

Java continues to be the number one choice for developers and for enterprises. What I’ve seen over the last 5 years since we moved to the 6-month release cadence with a new version of Java every 6 months, is that the innovation pipeline has never been richer or stronger. You may or may not be aware that we did move to this release cadence back in 2017, where we have a new version of Java every 6 months. Not only have we seen more innovation, but we’ve also seen more collaboration.

That really is the topic of what we’re talking about here, and why I selected that topic for my talk, because we’re seeing more innovation in Java, and we’re seeing more collaboration. As a result of the way that you can contribute to not only consume the new innovations and the new features that are put out to the Java platform every 6 months, but how you can contribute to it so that your fixes and your innovations can become part of the Java platform. We have continued to focus on these five main tenets of Java, and that also ties in today, the performance of the platform, along with the stability and the security, and ensuring that we have compatibility throughout the ecosystem. Java is unique, in that we have such a rich ecosystem, with so many different companies being able to provide their implementations that give you as developers and enterprises choice, but also continuing to have code that’s maintainable in your projects.

When we assess the landscape, and we look forward to the next 25 years, as I showed, we’re celebrating 25 years of the evolution of the Java Community Process program, but 28 years of Java. As we look forward to the future of Java, it’s important to understand that there’s two delicate forces at play. There’s this thing that we want fast innovation, and we want to adopt the industry trends. Then we also want to ensure that we have a stable platform, and that we keep existing programs running without breaking them, and also have this extremely low tolerance for incompatibility.

We try to balance both of those things with the evolution. We support that through having new ports for hardware, as well as software architectures, while remaining compatible and offering stability. That’s what we’ve been able to continue to do with this new release model is that we have innovations every 6 months, which are digestible for developers. You can really get a sense of what’s in every release, but also that it’s predictable and stable for enterprises. We’ve really been able to achieve the best of both worlds through some of these innovations that we’ve done over the last 5 years.

Moving Java Forward

Moving Java forward, what we try to do is continue to build that trust, and also continue to offer that predictability, and continue to bring forward the innovation so that Java continues to be one of the top languages overall, and we continue to see JVMs running in the cloud, and all over in different environments. I think IBM’s talk was just focused on the cloud. Increasingly, the focus is on the cloud. If you look at the numbers, those numbers continue to become closer together. You also see Java on billions of other devices all over on the edge, in addition to in the cloud.

Really, the focus of the platform is to continue to maintain Java as the number one platform of choice in a cloud environment. As I mentioned, the 6-month release cadence really does deliver innovations, but also predictability. If you look at this chart, you can see on the left where we had those feature releases, where you pick one big main feature, and you wait until that feature is ready, and the release comes out maybe every 3 to 4 years. In those times, like for example, with JDK 9, we had 91 new features in that release. That’s not only a lot for developers and enterprises to digest, but it also makes it really difficult to migrate.

That’s one of the things that we talked about in the unconference session, as well as what Netflix and LinkedIn talked about in terms of being able to migrate between versions. When you have fewer new features, it’s much easier to migrate in between versions. What you see now is since we moved to a 6-month release cadence versus having new releases every 3 to 4 or so years, you have no idea when the release is going to happen. It gets pushed out based on when this huge feature is going to be ready. Then it’s a huge release. Now you can see, on average, the lowest release had 5 new features, and at most, we had 17 new features going into each release of the Java platform. You can see with this last release, we had 15 new features coming out with Java 21.

Moving Java forward, we continue to invest in long-term innovation. While we do have this release model of a new release every 6 months, whatever is ready at that time goes into it. If you want to see a new project sooner, try to contribute and give feedback to that release. While we have these releases that are time based on a train model, we still have the projects going on in OpenJDK. One of the big projects that came out of Java 21 came out of Project Loom, but that doesn’t mean that Project Loom is over. One part of Project Loom that’s virtual threads, came out and was available in Java 21.

Within OpenJDK, we continue to have these projects where the innovations of the future happen. I picked just a few of the projects. I couldn’t list all the projects in OpenJDK on one slide. What I’ve done is I picked out a few of the projects where we’re continuing to see some integrations that go back to those tenets that I talked about: the predictability, productivity, security, those types of things are coming out of these projects. Project Amber is one that over the last 5 years, we’ve seen continuous innovations coming out around developer productivity with small incremental language changes.

A lot of people have been talking about reducing startup times. I even talked to several people about Project Leyden. Definitely check that one out. Panama, I think Monica in her talk about hardware was talking about Project Panama, and connecting JVM to native code, and making JNI better. ZGC is one that not only Netflix and LinkedIn talked about, but at a panel I hosted, I had maybe 10 people on the panel, I asked them to talk about what their favorite things were. Many of them, in addition to Loom and virtual threads, brought up ZGC, so generational ZGC coming out. That’s part of Java 21, as well. These are some of the key projects that we’re investing in for the long term, that are open and transparent and available for you to follow. OpenJDK is where you’re going to get the vision of what’s happening long term, not just in the next 6 months, but over the next 3 to 5 years.

JDK 21

Java 21 is available now. It was available as of September 19th. It had 15 JEPs in it. Also, thousands of performance and security improvements, just like every 6-month feature has. Just looking at the areas of Java 21, there were many new improvements. I’ll highlight just a few of them for you and some of the key areas. Obviously, we had language features, performance improvements. We had new features for preview. One thing that you might want to do when you download the new versions of Java is notice what features are available for preview. As of 2017, we introduced preview features.

That means that they’re fully specified and implemented, but they’re not turned on by default. Why they’re preview features is we’re looking for more feedback from people who are using them. What we’ve typically seen now since 2017, is that if a language feature is designated as a preview feature, it will be there two or three times, there’s no designated amount, but just until we collect enough feedback. There are some features that are preview features. There’s library improvements, like virtual threads. There’s also some additional library improvements that are there as preview features.

A couple of the language features that I’ll highlight came out of Project Amber. These are features that make it easier to maintain code and also makes it easier for you to code. One of them was record patterns. Java 21 adds record patterns, which make it easier to deconstruct records and operate on their components. Also, pattern matching for switch. Pattern matching for switch bring switch expressions and statements. Using this feature, we can test an expression against a number of different patterns. We also had some library improvements. The Java libraries are one of the reasons why Java has become so popular. It’s also one of the reasons why you have maybe some things that aren’t ready yet and why it makes your life a little harder when you’re trying to migrate in between versions. If you follow some of the lessons that were talked about, you’ll be able to overcome those hurdles.

A couple of the things that were in this category were of course, the virtual threads. That’s coming out of Project Loom. Virtual threads has had a lot of early adoption already. Netflix talked about it in terms of Spring, having support for Java 21 already with Spring 3.2. Also, IntelliJ has that out already. Virtual threads are one of the most highly anticipated features. This was mentioned as well, since Java 8 with Lambda expressions. Virtual threads are a really exciting technology that I think is really going to be pushing people to adopt and migrate to newer versions of Java. Also included in Java 21 is sequenced collections.

The collection framework was lacking a collection type to represent a sequence of elements with a defined encounter order. We’re lacking a uniform set of operations that applies to all such collections. We included that with Java 21. In addition, we added some performance improvements, so just like before, but only better. The one that I’m going to highlight for you here is generational ZGC. Generational ZGC is not the default when you download Java 21. You have to configure it to be a default. We’re looking towards the future, whereas generational ZGC will be the default in a future release. Generational ZGC is available as a regular feature in Java 21. Initially, generational ZGC is available alongside non-generational ZGC.

OpenJDK: Issues Fixed in JDK 21 per Organization

That was just a very quick overview of what’s in Java 21, as I transition now to talking about how you can contribute. What we’ve seen, as I mentioned earlier in my talk, was that as we have this faster release cadence every 6 months, this red part of this chart has gotten gradually smaller. The red part is contributions by Oracle. You can see that Oracle is still the number one contributor and leader and supporter of the Java ecosystem. What we see over time is that we have increasing contributions from other people, and contributors in the community.

This is ideally what I’d like to see, is continue to move this chart to the left side, so we have fewer contributions from Oracle and more from the community. I think with Java 21, we’ve had a record which is 2585 Jira issues marked as fixed, and over 700 of them were contributed by other members of the community. What you can see here is you have not only vendors, but also users of the technology in addition to hardware vendors. People like Arm and Intel are contributing to bug fixes to enable OpenJDK to be better on all the different hardware ports. I think that started also after Java 9. This new release model, what it really does is it motivates people to contribute their fixes, because they’re not going to have to wait 3 or 4 years to see their fix being put into a platform release.

It’s going to be coming out every 6 months. We continue to see this increase in the number of contributions into OpenJDK. Also, we have this long-term support model now a part of the Java releases. That means that every 2 years at this point, we have one of the releases designated as long-term support releases. I talked about new releases coming out every 6 months. Java 21 is a release that will be offered as long-term support. There won’t be another long-term support release until Java 25. There are interim releases, which happen every 6 months. One thing that’s important to note is that regardless of whether a release is going to be offered as long-term support or not, it’s treated technically the same in terms of the features that go into it. Every release is production ready, and it can be used in production.

It’s just a matter of whether Oracle or another vendor wants to provide long-term support for that release. What we’ve seen in the Java ecosystem is that, typically, if one vendor is offering long-term support releases, most of the other vendors follow the same cadence. For instance, Java 8 was designated as available for long-term support. Java 11 was a long-term support release. Java 17 was a long-term support release. Now Java 21 is a long-term support release. What we’ve seen is that other providers other than Oracle have offered that same cadence of offering long-term support, but people can offer support in any way they choose. It does seem that the community tends to adopt the long-term support releases, but there really is technically no reason why you couldn’t go ahead and adopt every release as it comes out every 6 months.

Java Developer Community

There’s strength in the numbers. What we see now is that we’re growing our Java developer community. We also have user groups, Java champions. What I found with the Java ecosystem is that there’s this rich support in the community. I encourage you, if you’re local, to take advantage of that with the San Francisco Java user group. There’s close to 400 Java user groups all over the world. No matter where you are, if you’re visiting from a different place, you can probably find a Java user group that you can go to. One of the things that are done in the Java user groups is people share their experience.

They share about the things that they’re working on. They share about some of the new projects that are being worked in OpenJDK or in other open source projects around the community. It’s a way where you can find support for the work that you’re doing. It’s also a way that you can grow your network and your career. It’s also a way that you can learn of other people’s success.

Just like we heard here, some of the people’s success, and talking about some of the performance improvements that they’ve been able to achieve by migrating past Java 8, and talking about how easy it was and how they’re getting their libraries and dependencies updated. You can do that in your local community that meet on average, once a month, and share some of the work that you’re doing. You can also enhance a lot of your critical communication skills, which sometimes are harder to practice, and you practice those skills in person. Those are all things that you can do in the Java community.

Now I’m going to transition a little bit to talk about another community, which is the JCP. Much of what we’ve talked about in terms of innovation that is happening in the Java ecosystem as a result of some of the foundation that’s been established in programs like the JCP and through OpenJDK. This is also how you can set your path for migrating in the future to new versions of Java. We’re celebrating 25 years of the JCP this year. Does anyone know what the JCP is? The JCP is the organization that defines the Java specification and language and platform. It operates as an organization.

I act as the chair of the Java Community Process, or JCP program. We have an executive committee. The bulk of the work is done by specification leads or spec leads who lead JSRs. Basically, the JCP is where that work happens. The work is led by specification leads. Oracle is the specification lead for the Java platform. Oracle doesn’t do that work alone. Contributions come in from the community, and every JSR has to have a spec lead and an expert group, and feedback from the community.

Reviewing this work is how you can get ready to know what’s coming next in future releases of Java to enable you to be ready to migrate to new versions of Java. Members of the JCP can serve on these JSRs. We have members who are companies, nonprofit groups, Java user groups, as well as individual developers. The majority of our members are now individual developers all over the world. There are nonprofits such as universities and groups like the Apache Foundation and the Eclipse Foundation, who are members.

In addition to just our general membership, we have an executive committee. This is one of our first face to face meetings that we had earlier this year. We were in New York. We did a panel at BNY Mellon, they hosted us there. On the executive committee, we also have that cross representation. We have Java user groups, companies, users. BNY Mellon is a user of the technology, as well as a couple of individuals. They’re there to represent the needs of the general ecosystem, but they also vote on all JSRs. I know a lot of you have heard of a JSR. How does that work? We have JCP members, and we have JSRs. JSRs are actually three things.

It’s the specification, the reference implementation, which is the code, and the test suite. When I say this is the foundation, this is really what goes back to some of the tenets that I talked about earlier around compatibility and ecosystem choice. This is really the foundation that establishes the ability to have choices in your implementation, and also that rich ecosystem of frameworks and libraries that you have to choose from. We have the Java specification request, the membership, which also consists of the executive committee. Then we have an expert group serving on the JSRs.

Those three things really work together. We have a specification, that’s essentially your documentation. That’s required by the JCP. That’s part of the reason why Java is so well documented. Then we have the reference implementation or the code that implements the specification. Then a test suite to ensure that we can have multiple implementations. Not just one implementation, but multiple implementations that creates that ecosystem of choice.

Those three deliverables work together to provide the foundation of the work that we do in the JCP. Every JSR gets voted on by the executive committee. Every JSR provides multiple drafts of the specification, as well as the reference implementation. I’ll talk about later how you can download and test the early access builds. We need feedback on all of these things as it goes through the process. You’re free to provide feedback whether you’re a member of the JCP or not.

Java is unique, in that it’s standard and open source. We believe that we need both. We have the reference implementation being developed in OpenJDK, which is an open source project. Then we have the JCP ratifying the specification. We continue to maintain that we need both. The implementation is developed in OpenJDK, collaboratively with an open source license. It’s also available on GitHub now as well. We have a mirror on GitHub, where you can find all the projects, since most developers are hanging out on GitHub for most of their other projects. There’s also a mirror of OpenJDK on GitHub now as well. That was part of one of the earlier Java platform releases a couple of years ago.

Early Access Builds

Now down to one of the keys that I talked about with you earlier, in terms of how are you going to innovate and collaborate and be able to migrate your applications to new versions of Java. The key really is here in the early access builds. How many of you are aware that there are early access builds of Java every two weeks? There are early access builds of the JDK put out every two weeks. We’ve had several already put out now for Java 22. LinkedIn and Netflix talked about how they’ve been testing out Java 21 for 4 or 5 months, and they’re almost ready to migrate to Java 21 now, because they were actually actively participating in this program.

It’s not actually a program where you have to sign up for it, anyone can do it. The builds are put out there every two weeks. You don’t have to download them every two weeks, but they’re available for you there to download every two weeks. When they’re early access builds, that means that you can’t use them in production. You can download them and run your applications against them to find out what dependencies you’re going to have and what changes you’re going to need to make to migrate in between new versions. As I talked about earlier, when I showed you the chart with the new features, and how many are in each new release, if you’re on Java 8, you are going to have quite a few changes that you might need to make to migrate past Java 8.

If you’re on a version after Java 8, you saw with my chart that we have 10 to 15 new features put into every release. Once you’re past Java 8, it’s going to become part of your software development lifecycle to migrate in between versions, because you can build this early access testing into your software development lifecycle. Instead of making Java migrations a month-long project, it just becomes part of your everyday workflow.

Whereas you’re downloading early access build, running your applications against it, identifying any dependencies and fixing those, and migrating very close to the release of the next version of Java, whether you choose to migrate every 6 months, or you choose to migrate to long-term support versions, so like migrating from Java 17 to Java 21.

The builds are there every two weeks. You can download and test and also provide feedback and make adjustments. Very few people actually do that part of providing the feedback. If you want to make sure that your comments or needs are addressed, I encourage you to just share your feedback in OpenJDK, because you will be recognized and remembered if you’re one of the few people who are doing that.

Rather than just consuming the technology, like we talked about, the theme is collaborate. That counts as collaboration and a contribution. Sharing what happened when you downloaded the early access build, and you tested your application against it. Share with us what happened. That’s what makes the Java releases better. It also helps you to migrate in between new versions of the Java platform.

If you haven’t seen this page before, I just showed an example. This is for JDK 21. Every Java release has a page that’s exactly the same. I mentioned some of the 15 JEPs that went into Java 21. I just gave you a very brief overview of a few of them, not all of them. If you wanted to go and read about every JEP that’s included in JDK 21, you could do that on this page. Every platform release has this exact same page. It gives you the dates of the releases, and it gives you links to every JEP or Java Enhancement Proposal. Every release of the Java platform is made up of a collection of JEPs or Java Enhancement Proposals.

That’s the term that OpenJDK uses. The JEPs come out of those projects, like Project Amber, Project Loom, Project Valhalla. Once they have a prototype ready, and the work gets far enough along, they put them into JEP proposals. Then, every 6 months, they collect the JEPs that they think are going to be ready for the next platform release. Then, every JDK release is a collection of Java Enhancement Proposals, usually around 10 or 15, at this point. Those are then put into a JSR. That’s what’s voted on by the executive committee. One JSR, and on average, at this point, 10 to 15 JEPs that make up every Java platform release.

If you want to go and dig into any of those features that I talked about, they’re available on OpenJDK, and you can read all about the JEP. You can also find links to those pages from jcp.org. That’s the home of the Java Community Process. This is the page for JSR 396, that’s Java 21. You can find the link to the project page on OpenJDK as well as the issue tracker and the mailing list. I’ll talk a little bit more about the mailing list, but I mentioned it when I talked about downloading the early access builds. The mailing lists are where you can share your feedback. You can find links to that on jcp.org, as well as on OpenJDK.

There’s already a page for JSR 397, which is Java SE 22. There’s already a few JEPs that have been identified. The schedule is there, and you can bookmark this page to find out what other work is going to be targeted for this release. That will be in flux for the next several months. Public review time is when that set of JEPs typically gets frozen. Then the work continues to happen on those before the JCP executive committee votes to ratify the specification and it goes to final release.

Quality Outreach Group (OpenJDK)

Also, going back to the dependencies, in OpenJDK, we have a group that’s called the quality outreach group. The quality outreach group is a collection of free and open source projects that are not supported by companies. Often, people when you’re downloading the early access builds and testing your application, you’ll probably identify a few dependencies, depending on how many open source libraries you’re using. According to LinkedIn, they didn’t have any. I know people who’ve had thousands. In the quality outreach group, you can find a collection of 200 free open source projects or FOSS projects.

The idea here is that we have a wiki where you can see what’s the status, so where is this project in terms of migrating to the newest release of Java. Also, who you can contact if you wanted to contribute a bug fix to one of these projects. I’ve worked with several of the project leads here, and they’ve actually been able to identify some easy early entry bug fixes that might be good for somebody who’s looking to get experience in an open source project. Obviously, if this is a dependency of yours to be able to migrate to a newer version of Java, you also could volunteer.

Just because it’s a collection of FOSS projects, doesn’t mean that companies can’t have individuals contributing to them. This is a great place and a great resource for you to go for a couple of different things, like I said, for those dependencies that you may have identified, but also, if you wanted to get involved with an open source project. These are all projects that are looking to actively migrate to the latest release of Java and, as I mentioned, oftentimes will have identified bugs. If you’ve found something as you’re doing your testing, you can also contact them and go ahead and enter it in their issue tracker.

I encourage you to take a look at that. As I mentioned, that’s a project in OpenJDK, so you go to OpenJDK, and then just look for the quality project. On the left-hand side is where there’s the nav bar, there are projects listed in alphabetical order, quality outreach, and go ahead and take a look at that. You can also join the project if you’re maintaining an open source library. You’ll find all that information there.

Java in Education

Lastly, I just want to highlight another thing that we’re focused on in the JCP, which is bringing Java to the next generation of developers. I talked about in the beginning that we want to continue to see Java be the number one programming language. We believe that the key to doing this is also outreaching to that younger generation of developers and ensuring that they’re learning Java, and are aware of the benefits. We’ve organized a program that’s available off of jcp.org, where we talk about how you could host a workshop.

Right now, we have three different presentations that you could deliver to a group of students, how you can reach out to universities, some best practices for doing that, as well as how you might offer mentorship. The idea behind this program is basically collecting together the resources where students and younger professionals can learn about Java, not that you would actually teach them Java, but that you would give them an example of what it’s like to work in the ecosystem, and you would point them to the resources that are available. Because, as I mentioned, in the beginning, there’s this rich ecosystem of materials that are available through Oracle University, Oracle Academy, as well as so many other providers that teach Java to students.

The idea behind this program was really to show younger professionals what it’s like to work in industry. If you’re interested in getting involved with that program, that’s been super motivational and inspiring to me to be able to see the excitement. Even one of the standalone specifications that we have for Java now is JSR 381, which is a visual recognition specification, so, basically, machine learning using Java and not Python. That’s been really cool to talk about that with the students. While it’s not part of the Java platform, it’s an optional standalone package that’s led by some individuals that have a deep interest in AI and machine learning, and to be able to show that to students who think that Java isn’t the language for the future with AI and machine learning. It’s a real eye opener. That’s been exciting.

Getting Involved with the Java Ecosystem

I’m going to close with a few ways for you to get involved if anything I’ve said has piqued your interest and motivated you to get more involved in the Java ecosystem. You can join the JCP in multiple ways. If you wanted to join as an individual, you would do that on jcp.org, as an associate member. If you’re part of a nonprofit university, or a Java user group, you would join as a partner member. Then we have the full members, which is really designed for companies. We have new companies joining every year. Actually, Microsoft is one that joined most recently, and Amazon a few years before that.

We’re always eager to have new perspectives come into the JCP. That’s really the full members. We also have some consultants and university professors who join as full members. Then, of course, you can join OpenJDK. You can follow OpenJDK on Twitter. One of the most important things that you can do is join and follow the OpenJDK mailing lists. There are many mailing lists on OpenJDK. What I encourage you to do is share your feedback in the adoption group. That’s where you can share your feedback when you download those early access builds, so join that mailing list. There’s also a mailing list for the quality outreach.

Every one of those projects that you might be interested in that I mentioned, like Amber, or Leyden, or Valhalla, or Panama, they also have their own mailing list. There was someone that was asking me about Panama, and I encouraged him to share his feedback on the mailing list. While conferences like these are a great way to have discussions and share ideas, if you really want to make an impact, share it on the mailing list. Make sure you join the mailing list of your interest. If you want to consume information, we have a couple cool new websites where you can learn more about the technologies, and those are dev.java and inside.java. There, you could get more deep dives on some of the technical features of the new release, as well as contributions from the community. With Java 21, we now have community contributors on articles on both of those websites.

Giving feedback and collaborating, you’re going to find that you are exponentially increasing your network in terms of collaboration. Giving feedback is going to help you to not only grow your career, but establish you as a leader and help you to migrate to the new versions of Java and learn about the new features of Java before anyone else has had that opportunity. Really, that’s one of the most valuable things about getting involved in some of these projects that I talked about is being able to share your knowledge. That’s what I find the most inspiring about the Java ecosystem is that everything’s there and available for you, in a transparent way.

It’s really a matter of you taking the time to go and research the information, learn about it, and then share what you’ve learned. It could be as simple as sharing on social media, that you downloaded the new early access build of Java 22, and this is what happened, or even Java 21 just came out, so you download it and checked it out, and what’s your favorite new feature. There’s always a different perspective that you can bring, and you can share that not only on social media, but within your team as well.

That’s one of the things that I’ve talked with some community members about is having that as a regular part of some of their team meetings, just sharing that they went and checked out this JEP or this project, and this is really cool, and this is going to be coming next. It’s a way to establish yourself as a professional and a leader, as well as have fun meeting people who are in a different environment than you are. Doing these types of activities, I’ve met people from all over the world, even though I don’t see them all over the world. I see them online. I’m able to see how they’re using Java and what their unique perspectives are.

Resources

In all my work with developers, I just recently published a book. It’s called, “Developer Career Masterplan.” That is a book about how to move your career from junior to senior and beyond. It’s all the things that you might not think about when you think about your career. All the things that really can add that extra bit of visibility into your career.

Questions and Answers

Beckwith: I think one of the things that was really interesting, and I know it because I was a part of the JCP at Arm and [inaudible 00:44:22]. Of course, I like reading JSRs also.

VanCura: More people should. Actually, you can differentiate yourself if you’re one of the few people who reads a specification. You don’t have to read the whole thing. You can just read a part of it and you can provide a comment on that part. Or if you refer to a specification and maybe something wasn’t clear, then you could provide some feedback just on that part, “I refer to this part.”

Beckwith: It’s just the first one that you read, it may get overwhelming, but once you get used to it, it’s easier. You know what to skip and where to get to if you need some information, because there’s a wealth of information there.

VanCura: I met with a professor a few weeks ago, and he was actually asking me for pointers. He was wanting to know where he could read the specification. It’s good to know that it’s there if you need it. Like I said, one of the reasons why it’s always there is because it’s required by the JCP. Every specification has to be complete, and it has to be fully documented in the specification.

Beckwith: I was going to say that JSR for 9 was probably one of the most read specifications ever, because we had the module system that was in the JPMS. Every company, every JCP member I know has read that.

VanCura: Yes, the JCP members.

Participant 1: There’s just like, people run stuff in the cloud, and then to lower cost there’s always this question about maybe Arm, and how is it going to perform, or is it going to have any issues on Arm. Who’s responsible for making sure that Java runs without introducing bugs on a platform like Arm?

VanCura: Arm was in my chart as one of the bigger contributors, so they had their own square of contributions. They contribute fixes and bug issues that would address those things that are specific to their port. They would contribute those in OpenJDK. Then, of course, they are a company and they would have things that they do outside of OpenJDK, but I’m just speaking specifically to OpenJDK.

Participant 1: There’s multiple companies, there’s Ampere. There’s just different companies that license the ISA. Is it Arm?

VanCura: Arm is one of the biggest contributors to OpenJDK. That’s why they’re on the executive committee. Obviously, Ampere is an Arm provider.

Participant 1: Probably when you’re running in the cloud you’re running on Ampere, [inaudible 00:47:56]?

VanCura: Ampere could decide to run in the executive committee and make contributions. I just noticed when I look at the chart, Arm is one of the biggest contributors. Intel is also a really big contributor on the hardware side. Those are the two biggest, Arm and Intel.

Beckwith: Arm does the IP. One of the things they also help with is enablement. Like, for example, I was talking about the vector unit, they don’t want SVE or SVE2 to fall behind. I was working at Arm too, so we used to make sure that we are visible in the OpenJDK community because we do want people to come and have a say, [inaudible 00:48:41]. Ampere and ThunderX2 systems, Marvell, there were lots of people that actually do have an endline product that’s based on Arm ISA and different versions.

Then they enable the stack from there onwards. There are multiple others. I’ve had, for example, for Linux on Arm, they are the number one contributor, probably very close to Arm as well, because [inaudible 00:49:13]. I was talking in my talk about the OpenJDK port Windows on Arm. Microsoft did that. Microsoft helped with the OpenJDK. Mac at one point as well. It’s a community effort. The reason that we helped with the M1 port was because we did the porting to Windows. It was easier for us to enable the hooks already. It was easier for us to enable it for M1 as well.

VanCura: Microsoft, definitely you’re a big contributor.

Beckwith: It seems surprising, but we are a part of the OpenJDK community, and that’s how it works. If you work on the OpenJDK, and you find a bug, somebody will jump on it. If you are the best person to fix it, or at least to provide more details on it, then you should continue doing that, because we welcome any help we get.

VanCura: Microsoft is also on the executive committee. You’re definitely called out in the chart as a big contributor to OpenJDK, so is Netflix. What you see is when you do start contributing, it just becomes easier to migrate. You become the leaders in the ecosystem. That’s that connection there. It’s interesting that people that I see at JVMLS are people who are speaking here and saying, “Yes, we migrated. It was so easy.”

Beckwith: That’s very true. We have to keep up in understanding what the problems are. Sometimes we see, there’s a solution already, so all we need to do is move over here. We’re the early adopters also, in our curve.

Participant 2: I have a question related to the JDK, and then seeking the suggestions, recommendations of JDK upgrade. We’re behind in upgrading. Say if we are in JDK 8 in production, what would be the next optimal version upgrade to, like JDK 11, JDK 17? What would be the most, like smoother path for us?

VanCura: I think in the unconference people were saying that they would recommend to migrate to 11 first, but I’ve talked to other people who say just go straight to 17 or straight to 21. I think that’s more common.

Participant 1: We went straight to 17, and now 21. Actually, the best thing is, because we do real-time streaming, the ZGC benefit was amazing. It is like 1 millisecond pause time. That was great.

Participant 2: It’s backward compatible?

Participant 1: No, we did make some changes and upgrade Spring into libraries. It took a little bit of work, but it was absolutely worth it.

Participant 2: There are other libraries, possibly?

Participant 1: It depends what stack you are.

Participant 2: They also are Spring Boot.

Participant 1: We had to upgrade Spring Boot and the dependencies in our application. That is where we began. It was pretty straightforward.

VanCura: I definitely have heard from more people to do it that way, which is migrate to the latest version. If you’re on 8, just go straight to the latest version.

See more presentations with transcripts

MMS • Steef-Jan Wiggers

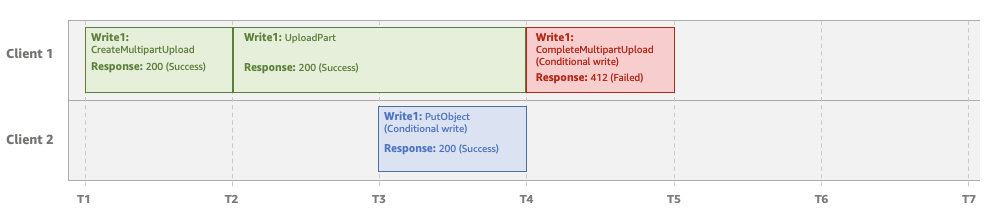

AWS recently announced support for conditional writing in Amazon S3, allowing users to check for the existence of an object before creating it. This feature helps prevent overwriting existing objects when uploading data, making it easier for applications to manage data.

Conditional writes simplify how distributed applications with multiple clients can update data in parallel across shared datasets. Each client can write objects conditionally, ensuring that it doesn’t overwrite any objects already written by another client. This means there’s no need to build client-side consensus mechanisms to coordinate updates or use additional API requests to check for the presence of an object before uploading data.

Instead, developers can offload such validations to S3, which improves performance and efficiency for large-scale analytics, distributed machine learning, and highly parallelized workloads. To use conditional writes, developers can add the HTTP if-none-match conditional header along with PutObject and CompleteMultipartUpload API requests.

A put-object using the AWS CLI to upload an object with a conditional write header using the if-none-match parameter could look like this:

aws s3api put-object --bucket amzn-s3-demo-bucket --key dir-1/my_images.tar.bz2 --body my_images.tar.bz2 --if-none-match "*"

On a Hacker News thread, someone asked if most current systems requiring a reliable managed service for distributed locking use DynamoDB. Are there any scenarios where S3 is preferable to DynamoDB for implementing such distributed locking? With another one answering:

Using only s3 would be more straightforward, with less setup, less code, and less expensive

The conditional write behavior is as follows, according to the company’s documentation:

- When performing conditional writes in Amazon S3, if no object with the same key name exists in the bucket, the write operation succeeds with a 200 response.

- If an existing object exists, the write operation fails with a 412 Precondition Failed response. When versioning is enabled, S3 checks for the presence of a current object version with the same name.

- If no current object version exists or the current version is a delete marker, the write operation succeeds.

- Multiple conditional writes for the same object name will result in the first write operation succeeding and subsequent writes failing with a 412 Precondition Failed response. Additionally, concurrent requests may result in a 409 Conflict response.

- If a delete request to an object succeeds before a conditional write operation completes, the delete request takes precedence. After receiving a 409 error with PutObject and CompleteMultipartUpload, a retry may be needed.

412 Precondition Failed response (Source: Conditional Requests Documentation)

Paul Meighan, a product manager at AWS, stated in a LinkedIn post:

This is a big simplifier for distributed applications that have the potential for many concurrent writers and, in general, a win for data integrity.

Followed by a comment from Gregor Hohpe:

Now, that’s what I call a distributed system “primitive”: conditional write.

Currently, the conditional writes feature in Amazon S3 is available at no additional charge in all AWS regions, including the AWS GovCloud (US) Regions and the AWS China regions. In addition, samples are available in a GitHub repository.

Presentation: Applying AI to the SDLC: New Ideas and Gotchas! – Leveraging AI to Improve Software Engineering

MMS • Tracy Bannon

Transcript

Bannon: I’ve been navigating the city. It really got me thinking about something. It got me thinking about the fact that I could use my phone to get anywhere I needed to go. It got me to think about how ubiquitous it is that we can navigate easily anywhere we want to go. It’s built into our cars. I rode bicycle, and I have a computer on my road bike. We always know where I am. You can buy a little chip now and you can sew it into the back of your children’s sweatshirts, and things, and always know where they’re at. It’s really ubiquitous. It didn’t start out that way.

When I learned to drive, I learned to drive with a map. As a matter of fact, I was graded on how well I could refold the map, obviously a skill that I haven’t worried about since then. I was also driving during the digital transition when all of that amazing cartography information was digitized. Somebody realized, we can put a frontend on this, and we can ask people where they’re starting, where they’re going. Then we can give them step by step, a place to go. They still had to print it out. If you happened to be the first person who was in the passenger seat, you got to be the voice, “In 100 meters, take a left, the ramp onto the M4.”

It wasn’t long until we had special hardware. Now we had a Garmin, or we had a TomTom. It was mixing the cartography information. It was mixing the voice aspect, and was mixing that hardware together. It was fantastic. When my children started to drive, they started with a TomTom, but I made them learn to read a map, because if you can see what it says there, the signal was lost. Now, it’s everywhere. It is ubiquitous for us. In 2008, the iPhone was released, the iPhone 3G, and it had that sensor in it. Now everywhere that we went, we have the ability to tell where we are. We can track our packages. We can track when the car is coming to pick us up. We can track all sorts of different things. We’ve just begun to expect that. What does that have to do with AI, with software engineering? That’s because I believe that this is where we’re at right now. I think we’re at the digital transition when it comes specifically to generative AI and leveraging that to help us to build software.

My name is Tracy Bannon. I like word clouds. I am a software architect. I am a researcher now. That’s been something newer in my career over the last couple of years. I work for a company called the MITRE Corporation. We’re a federally funded research and development. The U.S. government realized that they needed help, they needed technologists that weren’t trying to sell anything. I get paid to talk straight.

AI in Software Engineering

Let’s go back in time everybody, 2023, where were you when you heard that 100 million people were using ChatGPT? I do remember that all of a sudden, my social feed, my emails, newsletters, everything said AI. Chronic FOMO. It’s almost as though you expect to go walking down the aisle in the grocery and see AI sticker slapped on the milk and on the biscuits and on the cereal, because obviously it’s everywhere, it’s everything. Please, don’t get swept up in the hype. I know here at QCon and with InfoQ, we prefer to talk about crossing the chasm. I’m going to use the Gartner Hype Cycle for a moment.

The words are beautiful. Are we at the technology trigger when it comes to AI in software engineering? Are we at the peak of inflated expectations, the trough of disillusionment? Have we started up the slope of enlightenment yet? Are we yet at the plateau of productivity? Where do you think we are? It’s one of the few times that I agree with Gartner. We are at the peak of inflated expectations. Granted, Gartner is often late to the game. By the time they realize it, oftentimes I believe that we’re further along the hype cycle. What’s interesting here is, 2 to 5 years to the plateau of productivity.

How many people would agree with that? Based on what I’m seeing, based on my experience, based on research, I believe that’s correct. What we do, as software architects, as software engineers is really complex. It’s not a straight line in any decision that we’re making. We use architectural tradeoff. I love the quote by Grady Booch. The entire history of software engineering is one of rising levels of abstraction. We’ve heard about that. We’ve heard about the discussions of needing to have orchestration platforms of many different layers, of many different libraries that are necessary to abstract and make AI, generative AI in specific, helpful.

Where Can AI Be Used with DevSecOps?

I have the luxury of working with about 200 of the leading data scientists and data engineers in the world. I sat down with a couple of them and said, “I’m going to QCon. This is the audience. How would you explain to me all of the different types of AI that exist, the ML universe beyond generative AI?” Did we draw frameworks? We had slide after slide. I came back too and said, let’s take this instead like Legos and dump them on the table. What’s important to take away from this slide, is that generative AI is simply one piece of a massive puzzle.

There are many different types of AI, many types of ML, many different types of algorithms that we can and should be using. Where do you think AI can be used within DevSecOps, within the software development lifecycle? The first time I published this was in October of last year, and there are at least a half a dozen additional areas that have been added to that during this time. What’s important is that generative AI is only one piece of the puzzle here. We’ve been using AI, we’ve been using ML for years. How do we get after digital twins, if we’re dealing with cyber-physical systems? We’re not simply generating new scripts and new codes. We’re leveraging deterministic algorithms for what we need to do. Remember that generative AI is non-deterministic. With it, though, it has groundbreaking potential, generative AI in specific, groundbreaking potential. It has limitations and it has challenges.

Treat generative AI like a young apprentice. I don’t mean somebody who’s coming out of college. I mean that 15-year-old, brings a lot of energy, and you’re excited to have them there. Occasionally they do something right, and it really makes you happy. Most of the time, you’re cocking your head to the side and saying, what were you thinking? We heard that with stories in the tracks especially around AI and ML. Pay very close attention.

I’m going to take you back for a moment, and just make sure that I say to you that this is not just my opinion. This is what the research is showing. There are service providers who have provided AI capabilities who are now making sure that they have all kinds of disclaimers, and they have all kinds of advice for you that they’re providing guidance that says, make sure you have humans in the loop. Do you think that generative AI contradicts DevSecOps principles? It does. When I think about traceability, if it’s being generated by a black box that I don’t know, that’s much more difficult.

How about auditability? That’s part of DevSecOps. How am I going to be able to audit something that I don’t understand where it came from, or the provenance for it? Reproducibility? Anybody ever hit the regenerate button? Does it come back with the same thing? Reproducibility. Explainability, do you understand what was just generated and handed to you? Whether it’s a test, whether it’s code, whether it’s script, whether it’s something else, do you understand? Then there’s security. We’re going to talk a lot about security.

There was a survey of over 500 developers, and of those 500 developers, 56% of them are leveraging AI. Of that 56%, all of them are finding security issues in the code completion or the code generation that they’re running into. There’s also this concept of reduced collaboration. Why? Why would there be reduced collaboration? If you’re spending your time talking to your GAI (Generative AI) friend, and not talking to the person beside you, you’re investing in that necessary prompting and chatting.

It has been shown so far, to reduce the collaboration. Where are people using it today for building software? We’ve spent a lot of time talking about how we can provide it as a capability to end users, but how are we using it to generate software, to build the capabilities we deliver into production? I don’t ignore the industry or the commercial surveys, because if you’re interviewing or serving hundreds of thousands of people, even tens of thousands of people, I’m not going to ignore that as a researcher. Yes, Stack Overflow friends.

Thirty-seven thousand developers answered the survey, and of that, 44% right now are attempting to use AI for their job. Twenty-five additional percent said they really want to. Perhaps that’s FOMO, perhaps not. What are they using it for, of that 44% that are leveraging it? Let me read you some statistics. Eighty-two percent are attempting to generate some kind of code. That’s a pretty high number. Forty-eight percent are debugging. Another 34%, documentation. This is my personal favorite, which is explaining the code base. Using it to look at language that already exists. Less than a quarter are using it for software testing.

AI-Assisted Requirements Analysis

This is a true story. This is my story from the January timeframe about how I was able to leverage with my team, AI, to assist us with requirements analysis. What we did was we met with our user base, and we got their permission. “I’m going to talk with you. I’m going to record it. We’re going to take those transcriptions, are you ok if I leverage a GPT tool to help us analyze it?” The answer was yes. We also crowd sourced via survey. It was freeform, by and large.

Very little was rationalized using like, or anything along that line. When we fed all of that in through a series of very specific prompts, we were able to uncover some sentiments that were not really as overt as we had thought. There were other things that people were looking for in their requirements. When it comes to requirements analysis, I believe it is strong use of the tool, because you’re feeding in your language and you’re extracting from that. It’s not generating on its own. Things to be concerned about. Make sure you put your prompt into your version control.

Don’t just put the prompt into version control, but keep track of what model or what service that you’re posting it against. Because as we’ve heard, as we know, those different prompts react differently with different models. Why would I talk about diverse datasets? The models themselves have been proven to have issues with bias. It’s already a leading practice for you to make sure that you’re talking to a diverse user group when you’re identifying and pulling those requirements out. Now you have that added need that you have to make sure that you are balancing the potentiality that the model has a bias in it. Make sure that your datasets, make sure that the interviews, make sure the people you talk to represent a diverse set. Of course, rigorous testing, humans in the loop.

AI-Assisted Testing Use Cases, and Testing Considerations