Transcript

Bento: I think the first thing most people think when talking about monorepos is the opposite, which is the poly-repo setting. I’m going to start comparing both approaches. Here you can see some setups that you may recognize from diagrams that you may have in your company, in your organization. The white boxes there are the representation of a repo, and the blue cubes are representing artifacts that these repos produce. It can be like Docker images, or binaries, JARs, anything that you get out of the code that you have can be understood as the blue cube.

The first example here, you have a bunch of repos that have a very clearly defined dependency relationship, and they all together work to produce a single artifact. This is, I think, very common, where people have multiple repos to build a monolith. No mystery there. Then we move on to the next one, where we have a single repo, but we also produce a single artifact. That already has a question mark here from my side, because, is that a monorepo or not? Then, moving on, we have a more complicated setting, where you have multiple repos that have more dependency relationship. You have code sharing between them.

In the end, the combination is three artifacts. That, to me, is very clearly a poly-repo setting. Then the last one is what to me is also very clearly a monorepo setting. You have a single repo, and after a build, you have multiple artifacts. Then, there are other complicated cases where it’s not so obvious whether it’s a monorepo or a poly-repo setting. For example, here you have repos that in a straight line produce a single artifact, and they are not connected with each other in any way. Is that a poly-repo, if you don’t have sharing of code between multiple artifacts that, in the end, get produced? Conversely, if you have a single repo and the internal modules do not share any code, it’s the analog of this situation. Can you say that this is a monorepo, or is it just a big repo?

Then, most of us work in a setup that is very similar to this, where you have everything mixed together and you cannot tell whether or not you’re using poly-repo or monorepo, or both, or neither. A simple way to understand them, in my view, what defines if a setting of repos that produce artifacts can be understood as a poly-repo or a monorepo setting is the way you share code between these pieces that you have to have to produce the artifacts. When you add the arrows that represent the dependency relationship between the repos that make artifacts share code, I think you can very clearly say that this is a poly-repo setting. The same is true for a monorepo setting where now you have code sharing.

Monorepos (Overview)

Giving a definition of what a monorepo can be understood as, in this presentation, and in my view, you can say that monolithic applications do not always come from monorepos. Also, putting code together in the same repo does not characterize a monorepo either, because if you don’t have the sharing relationship, then it’s just a bunch of modules that are disjoint and produce artifacts from the same place. Of course, to have a monorepo, we have to have a very well-defined relationship between them, so you can very distinctly draw the diagram that represents your dependency relationships.

Also, very important is that the modules on a monorepo are part of the same build system. Most of us, I think, come from a Java background, or a Go background here, and you’re very familiar with Maven builds. We have a lot of modules that are part of the same reactor build. This produces a single artifact in the end, or multiple smaller artifacts that may or may not be important to you, as far as publication to a registry goes, or for third parties to consume, or even first parties to consume. Then, in the blue box are things that are not very well defined when talking about monorepos, but I wanted to give you my opinion and what I understand as a monorepo.

Monorepos are not necessarily big in the sense of, there’s a lot of code there, a mess everywhere. Nobody knows what depends on what. No, that’s not the case. Also, for me, monorepos are repos that contain code that produces more than one artifact that is interesting to you after the build. If you’re publishing, for example, two Docker images from one single repo, in my view, that’s a monorepo already. Of course, there’s the code sharing. You need to have the ability for a single module in your monorepo to be reused by the final artifacts that you have. That’s the definition. We’ll build from there.
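The definition above can be written down as a tiny check. This is only a sketch of the two criteria given in the talk (more than one interesting artifact, plus code sharing); the `Repo` type and the repo names are hypothetical and exist purely for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Repo:
    name: str
    # Artifacts you actually care about after a build (images, JARs, ...).
    artifacts: list[str]
    # Internal modules that are reused by more than one of those artifacts.
    shared_modules: set[str] = field(default_factory=set)


def looks_like_monorepo(repo: Repo) -> bool:
    """Apply the two criteria: several artifacts, plus code sharing."""
    return len(repo.artifacts) > 1 and len(repo.shared_modules) > 0


shop = Repo("shop", ["api-image", "web-image"], {"common-auth"})
big_repo = Repo("big-repo", ["tool-a", "tool-b"])  # disjoint modules
monolith = Repo("monolith", ["app.jar"], {"common-auth"})

print(looks_like_monorepo(shop))      # True
print(looks_like_monorepo(big_repo))  # False: just a big repo
print(looks_like_monorepo(monolith))  # False: a single artifact
```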

Right off the bat, you can make this claim. You can tell me, yes, but nobody has a monorepo, everyone has multiple repos. That is true. It’s very rare to see a company that has everything inside the same repository. That’s not the case at all. When we talk about monorepos, we actually are not talking about putting everything inside the same repo. I’m going to try to explain the way I understand that and how you can benefit from it. Conversely, using the definition that I just gave, you can also say that everyone has a monorepo, because if you think about a repository that has many libraries in it that get published, you have one single repository that publishes multiple artifacts that are interesting after the build. If you have a multi-module Go repo, or if you have a multi-module Maven repo, for me, you have a monorepo.

This session will try to answer this question, should you have a monorepo? The way I’ll try to do that is talking a little bit about code base structure, and how teams operate together, how people do software, and how code reuse is understood and implemented in the industry. In the end, I hope that by looking at the examples and my personal experience that I’m going to share with you, you’ll be able to make a more informed decision of whether or not you can benefit from a monorepo or if you should stick to a more traditional poly-repo setting.

Example 1 – Microservice E-commerce

Let’s see an example where our code base is composed of these repos. We have five microservices at the top, followed by two Backend for Frontends repos. Then we have two frontend applications. Then we have five repos that contain common code. In the end, we have the end-to-end tests repository. Everyone can relate in some way to this example, although oversimplified. The experience I had multiple times is very closely related to this, where you present something very simple, and all of a sudden everyone has an opinion of what should be done, of what’s a better way to do that, or even like personal taste: I like it, I don’t like it, whatever.

The first thing I wanted to talk about is, if you’re in this situation, chances are you’re not ready to have a conversation about whether or not to pursue a poly-repo or a monorepo setting. Because you have very fragmented opinions, and you don’t have people going in the same direction, even if they agree or not. My advice would be trying to get everyone moving in the same way so that everyone can be exposed to the same problems, understand what comes after them, in case of library building, or in case of the ops team deploying heavy Docker images or something. That is the way that this conversation starts. This conversation is very slow, very lengthy, and very complex.

It’s not something you can decide in a one-hour meeting when you put all the stakeholders together, or the architects, or whatever, and then you say, ok, voting, monorepo or not? It’s not going to go like that. Very important, people, teams, and your uniqueness, the uniqueness of your operating dynamics, come before choosing what to do. Then, even if you do decide what to do, having everyone align and understanding why you’re doing something. Put that in writing. Document it. Make presentations. Make people watch them. Whatever works for you comes before actually pursuing that change, implementing monorepo or poly-repo.

Let’s continue with our little e-commerce setup with the repos I showed before. Let’s break it down in teams. We can look at the repos, and we can see some affinity between them and the way that I as the architect or CTO or whatever, understand how people should be working together. This is valid. It’s something that someone is doing somewhere. Also, you could have something like this, where we have different teams, we have different names, we have different operating dynamics. This is very unique to your organization. Nobody is doing software exactly the same way. Sure, we’re borrowing from colleagues and people from other companies, tech presentations, but in reality, the operations are very distinct wherever you look. This is also valid.

Interesting, we have this now, where three teams are collaborating on the same repo. Also, here, we have all teams collaborating on the end-to-end tests. That’s pretty normal. Everyone owns the tests, so nobody does. Also, this is a very valid possibility where you have a big code base, you have your repos there. You have two main teams doing stuff everywhere, helping everybody out. Then you have a very focused team here doing only security, so they only care about security. You have another very focused team that only cares about tests. This is also valid. Why am I saying all this? Because you as individuals, as decision makers, as multipliers, you know how your team operates much better than me or anyone else that gives advice on the internet, or whatever.

By looking at your particular case and evaluating both techniques, both monorepo and poly-repo, you can see where you could benefit from each one of them. You don’t have to choose. You can pick monorepo for a part of your org, or poly-repo for another part.

So far, list of repos. Everybody had opinions. We sorted that out. Now we have our little team separation here. We know that this is important. We didn’t talk about the dependency relationship between the repos. It’s somewhat obvious from the names, but even so, people can understand them differently. This is a possibility. I know there’s a lot of arrows, but yes. I have the microservices there depending on a lot of common library repos here. Then they get used by the end-to-end tests.

Then you have the frontends, which is like separate, and they have their own code sharing with the design system, or very nasty selects that you implemented. This can be something that someone draws in your company by looking at the repos. Or, you can see someone understand it like this, where the BFFs actually depend on the user service as a hard dependency, and not just like API dependency or something. This is a valid possibility too. More than that, these are only the internal arrows that you would have if you would draw this diagram for your company in this example. There’s a lot of other arrows, which are third-party dependencies that every repo is depending on. You cannot build software without depending on them.

To make things more complicated, this is also something that many of us are doing. We’re versioning our own libraries and publishing them so that they can be consumed by our services. You can see this becomes very complicated very quickly, because it’s hard to tell what libraries are being used and what are not, and what versions of services are using what versions of libraries. It can be that you see this and you solve it like this.

Every time a library publishes a release, we have to update all the services to have everyone on the same page using the same version, no dependency conflicts. Everything works. Or, you can go one step further and you say, every time we make a change on a repo, this gets automatically published to the services. They rebuild, redeploy, do everything. In this case, why do we have repos then? We could put everything inside the same repo and call them modules. It’s a possibility.

I wanted to go through this example to highlight that making software inherently has these problems. If you haven’t, go watch this talk, “Dependency Hell, Monorepos and beyond”. It’s 7 years old, but it’s still current. It’s very educational to understand how software gets built and published. Very great material. We can all identify with these problems here. At some point, we had multiple releases just to consume a tiny library, or we had one person depending on a version of a library, and then this version has a security vulnerability, so you have to run and update everything.

That’s one thing I wanted to say, like making software is hard, even if you were in complete isolation, just you and your text editor, pushing characters there is already difficult. Then, if you start pointing to third-party dependencies, it gets more difficult, because now we have to manage the complexity of upgrading them and tracing them and see if they are reliable or not. Then, if you want to make your software be reusable by others, then that’s even harder, because now you have to care about who’s using your software. If you’re doing all at the same time, which is everyone, then this is the hardest thing ever. It’s really complicated.

Example 2 – Upstream vs. Downstream

Taking a step back, back to the monorepo and poly-repo conversation. How can we define them, after all this? Poly-repo is usually understood as very small repos that contain software for a very specific purpose, and they produce a single artifact, or like very few. Every time you publish something from a repo on a poly-repo setting, you don’t care about who’s using it. It’s their responsibility to get your new version and upgrade their code.

On a monorepo, you have multiple modules that may or may not be related. The way you reuse code internally is by just pointing to a local artifact. On purpose I put that line there saying that builds can be fast on a monorepo, because if you build it right you can always filter the monorepo and build exactly the part that you want without having to build everything else that’s in there. That’s how you scale a monorepo.
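To make "filter the monorepo and build exactly the part that you want" concrete, here is a minimal sketch. It assumes the module graph is available as a plain mapping from each module to the modules it depends on; the module names are hypothetical, and a real build tool would read this graph from its own manifests.

```python
from graphlib import TopologicalSorter

# Hypothetical module graph: module -> modules it depends on.
DEPS = {
    "common-logging": set(),
    "common-auth": {"common-logging"},
    "user-service": {"common-auth"},
    "orders-service": {"common-auth", "common-logging"},
    "design-system": set(),
    "web-app": {"design-system"},
}


def build_plan(target: str, deps: dict[str, set[str]]) -> list[str]:
    """Return the target plus its transitive dependencies, in build order."""
    needed: set[str] = set()
    stack = [target]
    while stack:
        module = stack.pop()
        if module not in needed:
            needed.add(module)
            stack.extend(deps[module])
    # Only the needed slice of the graph is sorted and built.
    subgraph = {module: deps[module] for module in needed}
    return list(TopologicalSorter(subgraph).static_order())


print(build_plan("user-service", DEPS))
# ['common-logging', 'common-auth', 'user-service']
print(build_plan("web-app", DEPS))
# ['design-system', 'web-app']
```

Building `user-service` never touches `web-app` or `design-system`, which is the property that keeps build times bounded as the repo grows.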

I think we can move to the second example where we have a better understanding of the way I’m presenting poly-repo versus monorepo. This next example has to do with the concepts of upstream and downstream. Many of you may be familiar with it already, especially if you’re in a poly-repo setting. That’s repos in purple, artifacts in blue. Let’s imagine you’re there all the way to the right. What you call upstream is whatever comes before you in the build pipeline. Libraries you use, services you consume, that’s upstream. Everything that depends on you, your libraries, your Docker images, your services, that’s downstream.
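The upstream and downstream definitions can be sketched as two graph traversals. The repo names below are hypothetical, and the graph is assumed to be a mapping from each repo to the repos it depends on.

```python
# Hypothetical graph: repo -> repos it depends on.
DEPS = {
    "core-lib": set(),
    "http-lib": {"core-lib"},
    "billing-service": {"http-lib"},
    "billing-image": {"billing-service"},
}


def reachable(start: str, edges: dict[str, set[str]]) -> set[str]:
    """Collect every node reachable from start by following edges."""
    seen: set[str] = set()
    stack = list(edges.get(start, ()))
    while stack:
        node = stack.pop()
        if node not in seen:
            seen.add(node)
            stack.extend(edges.get(node, ()))
    return seen


def invert(deps: dict[str, set[str]]) -> dict[str, set[str]]:
    """Turn 'depends on' edges into 'is depended on by' edges."""
    inverted: dict[str, set[str]] = {name: set() for name in deps}
    for name, needs in deps.items():
        for dep in needs:
            inverted.setdefault(dep, set()).add(name)
    return inverted


def upstream(repo: str) -> set[str]:
    return reachable(repo, DEPS)  # everything that comes before you


def downstream(repo: str) -> set[str]:
    return reachable(repo, invert(DEPS))  # everything that depends on you


print(sorted(upstream("billing-service")))  # ['core-lib', 'http-lib']
print(sorted(downstream("core-lib")))
# ['billing-image', 'billing-service', 'http-lib']
```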

In this example, we have one repo that’s upstream and one repo that’s downstream. Pretty easy. If you’re someone making changes to this repo, now your downstream looks like this, which is a lot of stuff. In this cut of the code base structure, that’s the entirety of your publishable artifacts. Changing something in that particular repository has implications in all artifacts you produce. Let’s go through the most common setup to share code, which is publishing libraries using semantic versioning. You can have this setup and you make a change to the very first one there where the red arrow is. What happens is you make a change, then you publish a new version.

Then, people have to upgrade their dependencies to use that new version. If you want to continue that and release the new artifacts using that new version of that library, then you have to continue that chaining, and you have a new version of this repo, and you publish a new version of this one. Then they update the version that they’re consuming. Then you publish a new version of those repos that represent the artifacts, and then you push the artifacts somewhere. This is how many of us are doing things. Depending on who’s doing what, this may be very wasteful. If there’s the same team managing all these repos, why are they making all those version publications, if their only purpose was to update the artifacts in the first place?
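The chain of publications described here can be counted with a small sketch. It assumes semantic versions of the form `major.minor.patch` and, as a simplification that is not from the talk, that each downstream repo publishes a patch release when it picks up a new upstream version. Repo names and version numbers are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical chain: repo -> repos it depends on.
DEPS = {"lib-a": set(), "lib-b": {"lib-a"}, "service": {"lib-b"}}
VERSIONS = {"lib-a": "1.2.3", "lib-b": "0.4.0", "service": "2.0.1"}


def bump(version: str, part: str) -> str:
    """Bump one part of a major.minor.patch version string."""
    major, minor, patch = (int(piece) for piece in version.split("."))
    if part == "major":
        return f"{major + 1}.0.0"
    if part == "minor":
        return f"{major}.{minor + 1}.0"
    if part == "patch":
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown version part: {part}")


def cascade(changed: str, part: str) -> list[tuple[str, str, str]]:
    """List every (repo, old, new) release needed to reach the artifacts."""
    releases = []
    affected: set[str] = set()
    for repo in TopologicalSorter(DEPS).static_order():
        if repo == changed:
            affected.add(repo)
            releases.append((repo, VERSIONS[repo], bump(VERSIONS[repo], part)))
        elif DEPS[repo] & affected:
            # Assumption: picking up a new upstream version is a patch release.
            affected.add(repo)
            releases.append((repo, VERSIONS[repo], bump(VERSIONS[repo], "patch")))
    return releases


for release in cascade("lib-a", "minor"):
    print(release)
# ('lib-a', '1.2.3', '1.3.0')
# ('lib-b', '0.4.0', '0.4.1')
# ('service', '2.0.1', '2.0.2')
```

One change at the bottom of the chain turns into three publications, which is the overhead being questioned when a single team owns all three repos.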

We can argue that, let’s use a monorepo then and put every component there in a module inside the same repo under the same build system, and then I make a change, and then I release. That’s not all good things, because on the last setup, the team doing changes here did their change, published their versions, continue to the next task. Then the other teams started upgrading their code. Now you can have something like this where you have a very big change to make, and you have to make this change all at once, which can take weeks especially if you’re doing a major version bump or something. You make the changes and then you can release.

This is the part where I take the responsibility out of me a little bit and hand it over to you, because you know what you’re doing, what your code looks like, what your teams prefer, and everything related to the operating dynamics of your code base. If you look at all this and you say, who’s responsible for updating downstream code? Each one of us will have a different answer. That answer has impact on whether or not you should be using a monorepo. Another question would be, how do you prefer to introduce changes? Where on a poly-repo, you do it incrementally. A team updates a library. This library gets published, and slowly everyone adopts it. Whereas on a monorepo, the team that’s changing the library may have to ask for help updating downstream code because they may not have the knowledge, so it may take longer.

Once you do it, you do it for everyone, so you know that the new artifacts are deployed aligned. This is my take. I don’t think poly-repo and monorepo are either/or. I think they’re complementary. It depends on where you are and what you’re doing and who you’re doing it with. They’re both techniques that can coexist in the same code base, and the way you structure it has to be very tightly coupled with the way you operate it, and the skill set that people have, and everything related to your day-to-day. Some parts of your organization are better understood and operated as a detached unit.

For example, if you have a framework team, or you’re doing a CLI team, where you have a very distinct workflow, they need to be independent to innovate and publish new versions, and you don’t need to force everyone to use the latest version of this tool. That happens. That exists. It’s a valid possibility. The other part is true as well. Some parts of your organization are better understood and operated as a unified conglomerate, where alignment and synchronicity are mandatory, even for success. For me, monorepos or poly-repos is not a question of one or the other. It’s a question of where and when you should be using each one of those.

Apache KIE Tools

I wanted to talk a little bit more about my experience working on a monorepo. We have a fairly big one. That’s the project I work on every day. It’s called Apache KIE Tools. It’s specialized tools for business automation, authoring and running and monitoring and everything. Some stats, our build system is pnpm. We are almost 5 years old. We have 200 packages, give or take. Each package is understood like a little repo. Each package has a package.json file, borrowed from the JavaScript ecosystem, that contains a script that defines how to build it. This package.json file also defines the relationship between the modules themselves.

It’s really easy for me to, with a simple command, say, I want to build this section of the monorepo, and select exactly what part of the tree I want to build. We have almost 50 artifacts coming from that monorepo, ranging from Docker images to VS Code extensions and Maven modules and Maven applications, and examples that get published, and everything. The way we put everyone under the same build system was by using standardized script names. Each package that has a build step has two commands called build:dev and build:prod, and packages that can be developed standalone have a start command.
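A convention like this is easy to check mechanically. The sketch below is not the project's actual tooling; it only assumes that each package.json has a `scripts` object and flags packages that define one of `build:dev` / `build:prod` without the other. The package names and script bodies are hypothetical.

```python
import json

BUILD_SCRIPTS = ("build:dev", "build:prod")


def convention_errors(package_json_text: str) -> list[str]:
    """Report a package that defines only one of the two build scripts."""
    manifest = json.loads(package_json_text)
    name = manifest.get("name", "<unnamed>")
    scripts = manifest.get("scripts", {})
    present = [script for script in BUILD_SCRIPTS if script in scripts]
    if len(present) == 1:
        missing = next(s for s in BUILD_SCRIPTS if s not in scripts)
        return [f"{name}: has '{present[0]}' but is missing '{missing}'"]
    return []


good = """{"name": "@example/editor",
           "scripts": {"build:dev": "tsc", "build:prod": "tsc && webpack",
                       "start": "webpack serve"}}"""
bad = """{"name": "@example/utils", "scripts": {"build:dev": "tsc"}}"""

print(convention_errors(good))  # []
print(convention_errors(bad))
# ["@example/utils: has 'build:dev' but is missing 'build:prod'"]
```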

Then, all the configuration is done through environment variables, borrowing from the Twelve-Factor App manifesto. We have built an internal tool that manages this very big amount of environment variables to configure things like logo pass, or whether or not to turn on the optimizer or minifier, or run tests or not. Everything that we do is through environment variables. Every time we need to make a reference to another package, so, for example, I’m building a binary that puts together a bunch of libraries, we do that through the node_modules directory there, also borrowed from the JavaScript ecosystem.
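As a rough illustration of configuration through environment variables, here is a minimal sketch. It is not the internal tool mentioned above: the `EnvVar` type, the variable names, and the defaults are all hypothetical. The defaults target local development, so nothing needs to be set to get started.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional


@dataclass(frozen=True)
class EnvVar:
    name: str
    default: str  # development-friendly default
    description: str


# Hypothetical variables; a real repo would declare these per package.
VARIABLES = [
    EnvVar("EXAMPLE_MINIFY", "false", "Minify bundles during the build"),
    EnvVar("EXAMPLE_RUN_TESTS", "true", "Run tests as part of the build"),
    EnvVar("EXAMPLE_API_URL", "http://localhost:8080", "Backend to talk to"),
]


def resolve(environ: Optional[Mapping[str, str]] = None) -> dict[str, str]:
    """Read every declared variable, falling back to its default."""
    source = os.environ if environ is None else environ
    return {var.name: source.get(var.name, var.default) for var in VARIABLES}


def as_bool(value: str) -> bool:
    return value.strip().lower() in {"1", "true", "yes"}


dev = resolve({})
prod = resolve({"EXAMPLE_MINIFY": "true", "EXAMPLE_API_URL": "https://api.example.com"})

print(as_bool(dev["EXAMPLE_MINIFY"]))   # False
print(as_bool(prod["EXAMPLE_MINIFY"]))  # True
print(dev["EXAMPLE_API_URL"])           # http://localhost:8080
```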

Through symbolic links and the definitions we have in package.json, we can safely only reference things that we declare as a dependency. We’re not having a problem where we are just going back to directories and entering another package without declaring it as a dependency. This is something that we did to prevent ourselves from making mistakes, and during builds that select only a part of the monorepo, forget to build something.
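The rule "only reference what you declare" can also be expressed as a check. The sketch below is an illustration, not the mechanism used in the project (which relies on symbolic links and package.json): it takes a package directory, its declared dependencies, and a list of import specifiers, and flags both undeclared package imports and relative paths that walk out of the package. All names and paths are hypothetical.

```python
import posixpath


def package_of(specifier: str) -> str:
    """Extract the package name from an import specifier."""
    parts = specifier.split("/")
    # Scoped packages look like '@scope/name/sub/path'.
    return "/".join(parts[:2]) if specifier.startswith("@") else parts[0]


def undeclared_references(
    package_dir: str,
    declared: set[str],
    imports: list[tuple[str, str]],  # (source file, import specifier)
) -> list[str]:
    problems = []
    for source_file, specifier in imports:
        if specifier.startswith("."):
            # Relative import: it must stay inside the package directory.
            resolved = posixpath.normpath(
                posixpath.join(posixpath.dirname(source_file), specifier)
            )
            if not resolved.startswith(package_dir + "/"):
                problems.append(f"{source_file}: '{specifier}' leaves the package")
        elif package_of(specifier) not in declared:
            problems.append(f"{source_file}: '{specifier}' is not a declared dependency")
    return problems


problems = undeclared_references(
    "packages/editor",
    {"@example/utils"},
    [
        ("packages/editor/src/index.ts", "./local"),
        ("packages/editor/src/index.ts", "@example/utils/lib"),
        ("packages/editor/src/index.ts", "../../utils/src/helpers"),
        ("packages/editor/src/index.ts", "lodash"),
    ],
)
for problem in problems:
    print(problem)
# packages/editor/src/index.ts: '../../utils/src/helpers' leaves the package
# packages/editor/src/index.ts: 'lodash' is not a declared dependency
```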

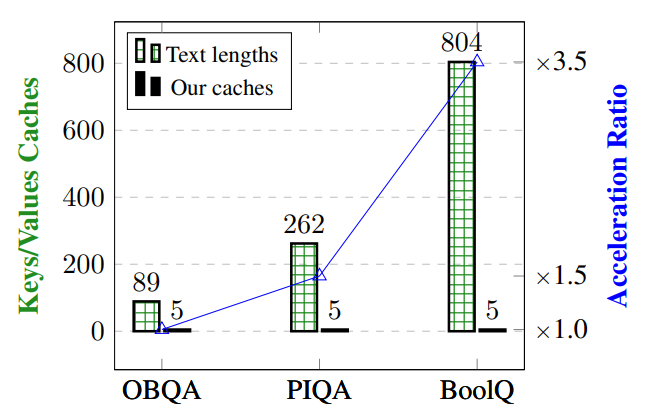

Then, one thing that we have that’s very helpful, is the ability to partially build the monorepo in PR checks. Depending on the files you changed, we have scripts that will figure out what packages need to be rebuilt, what packages need to be retested, and things like that. The stats there are, for a run that changed only a few files in a particular module, the slowest partition built in 16 minutes, and all the other ones were very fast. For a full build, you can compare the times. Having the ability to split your builds in partitions and in sections of the tree is very important if you want to have speed on a monorepo that can scale very well.
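A simplified version of "figure out what packages need to be rebuilt from the files you changed" could look like the sketch below. It is not the project's script: it assumes a flat layout where each package lives in its own directory, treats any changed file outside a package as a reason to rebuild everything, and uses hypothetical package names.

```python
from typing import Optional

# Hypothetical flat layout: package -> directory, package -> dependencies.
PACKAGE_DIRS = {
    "utils": "packages/utils",
    "editor": "packages/editor",
    "docs": "packages/docs",
}
DEPS = {"utils": set(), "editor": {"utils"}, "docs": set()}


def owning_package(path: str) -> Optional[str]:
    """Find the package whose directory contains the changed file."""
    for name, directory in PACKAGE_DIRS.items():
        if path.startswith(directory + "/"):
            return name
    return None


def affected_packages(changed_files: list[str]) -> set[str]:
    directly_changed: set[str] = set()
    for path in changed_files:
        owner = owning_package(path)
        if owner is None:
            # A root-level file changed: fall back to a full build.
            return set(PACKAGE_DIRS)
        directly_changed.add(owner)

    # Invert the graph so we can walk from a package to its dependents.
    dependents: dict[str, set[str]] = {name: set() for name in PACKAGE_DIRS}
    for name, needs in DEPS.items():
        for dep in needs:
            dependents[dep].add(name)

    affected: set[str] = set()
    stack = list(directly_changed)
    while stack:
        name = stack.pop()
        if name not in affected:
            affected.add(name)
            stack.extend(dependents[name])
    return affected


print(sorted(affected_packages(["packages/utils/src/a.ts"])))  # ['editor', 'utils']
print(sorted(affected_packages(["packages/docs/readme.md"])))  # ['docs']
print(sorted(affected_packages(["pnpm-lock.yaml"])))  # ['docs', 'editor', 'utils']
```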

Also, to make things more complicated, we have many languages in there. We are a polyglot monorepo. We have Java libs and apps building with Maven. We have TypeScript, same thing. We have Golang. We have a lot of container images as well. Another thing, and that’s all optimization, is that we have sparse checkout ability, so you can clone the repo and select only a portion of it that you want. Even if it gets really big, you can say on the git clone that you only want these packages, and they will be downloaded, and everything else is going to be ignored. The build system will continue working normally for you.

Apache KIE Tools (Challenges)

Of course, not everything is good. We have some challenges and some things that we are doing right now. One of those things is that we’re missing a user manual. A lot of the knowledge we have is in people’s heads and private messages on Slack, or Zulip chat for open source, and that’s not very good. We’re writing a user manual with all the conventions that we have, the reasoning behind the architecture of the repo and everything. Then, we’re also improving the development experience for Maven based packages, especially with importing them in IDEs and making sure that all the references are picked up, and things become red when they’re wrong, and things like that.

Then we have a very annoying problem, which is, if you change the lockfile, the top level one, our partitioning system doesn’t understand which modules are affected. We have a fix for it. We’re researching how to roll that out. I’m glad that we found a solution there. If that works the way it should, we’re never going to have a full build whenever our code changes, unless it’s like a very root-level file, like the top-level package.json or something. Then we’re also, pending, trying a merge queue. Merge queue is when you press the merge button, the code doesn’t go instantly to the target branch. It goes to a queue where it simulates merges, and when a check passes, you can merge automatically. You can take things out of the queue if they’re going to break your main branch.
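The merge queue idea can be simulated in a few lines. This is a toy model, not how any particular hosting service implements it: changes are plain strings, and `passes_checks` is a hypothetical stand-in for the CI run against the simulated merge result.

```python
from collections import deque
from typing import Callable


def run_merge_queue(
    main: list[str],
    queue: list[str],
    passes_checks: Callable[[list[str]], bool],
) -> tuple[list[str], list[str]]:
    """Check each queued change on top of everything merged before it."""
    merged = list(main)
    rejected: list[str] = []
    pending = deque(queue)
    while pending:
        change = pending.popleft()
        candidate = merged + [change]  # simulated merge result
        if passes_checks(candidate):
            merged = candidate
        else:
            rejected.append(change)  # taken out of the queue
    return merged, rejected


# Two changes that each pass alone but break the tests together.
CONFLICT = {"rename-helper", "use-old-helper"}


def checks(state: list[str]) -> bool:
    return not CONFLICT <= set(state)


merged, rejected = run_merge_queue(
    ["base"], ["rename-helper", "use-old-helper", "fix-typo"], checks
)
print(merged)    # ['base', 'rename-helper', 'fix-typo']
print(rejected)  # ['use-old-helper']
```

Both conflicting changes would pass checks against `base` alone; the queue catches the problem because the second one is checked on top of the first.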

That’s a very cool thing to prevent semantic conflicts from happening, especially when they break tests or something. We’re pending trying that. Also, we’re pending having multiple cores available for each package to build. We can do parallel builds, but we don’t have a way to say, during your build, you can use this many cores. We don’t have that. We’re probably going to use an environment variable for that. Next one is related to this. You saw we have two commands to build, build:dev and build:prod, and sometimes there’s duplication in these commands, and it’s very annoying to maintain. One thing we’re researching is how we can use the environment variables to configure parameters that will distinguish a prod build from a dev build.

For example, on webpack, you can say the mode, and it will optimize your build or not. The last one, which I think is the most exciting, is taking advantage of turborepo, also a task runner that will understand package.json files. It has a very nice ability, which is caching, so you can, in theory, download our monorepo and start an app without building anything. You can see how powerful that is for development and for welcoming new people in a code base. Of course, if you look at a poly-repo, you don’t have the caching problem because you’re publishing everything, so you don’t have to build it again: tradeoffs.
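The general idea behind build caching can be sketched as follows. This is a generic content-hash cache, not Turborepo's actual implementation: the key of a package is derived from its own sources plus the keys of its dependencies, and a build is skipped when that key is already in the cache. Package names and file contents are hypothetical.

```python
import hashlib

DEPS = {"lib": set(), "app": {"lib"}, "docs": set()}
ORDER = ["lib", "app", "docs"]  # dependencies before dependents


def cache_key(name: str, sources: dict[str, dict[str, str]], memo: dict[str, str]) -> str:
    """Hash a package's files together with its dependencies' keys."""
    if name not in memo:
        digest = hashlib.sha256()
        for path in sorted(sources[name]):
            digest.update(path.encode() + b"\0")
            digest.update(sources[name][path].encode() + b"\0")
        for dep in sorted(DEPS[name]):
            digest.update(cache_key(dep, sources, memo).encode())
        memo[name] = digest.hexdigest()
    return memo[name]


def build_all(sources: dict[str, dict[str, str]], cache: dict[str, str]) -> list[str]:
    """Build only the packages whose key is missing from the cache."""
    built = []
    memo: dict[str, str] = {}
    for name in ORDER:
        key = cache_key(name, sources, memo)
        if key not in cache:
            cache[key] = f"artifact-of-{name}"  # stand-in for real output
            built.append(name)
    return built


sources = {
    "lib": {"index.ts": "export const a = 1"},
    "app": {"main.ts": "import 'lib'"},
    "docs": {"readme.md": "hi"},
}
cache: dict[str, str] = {}

first = build_all(sources, cache)
second = build_all(sources, cache)
sources["lib"]["index.ts"] = "export const a = 2"
third = build_all(sources, cache)

print(first)   # ['lib', 'app', 'docs']
print(second)  # []
print(third)   # ['lib', 'app']
```

If the cache is shared between machines, a fresh clone sees the same keys and can skip straight to starting the app, which is the onboarding benefit described above.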

Using a Monorepo (Yourself)

I wanted to close with some advice of, how can you build a monorepo yourself, or even improve existing monorepos that you might have? The first one is, if you’re starting a monorepo now, if you think this is for you, if you’re doing research, if you’re doing a POC or something, then don’t start big. Don’t try to hug every part of the code base. Start small. Pick a few languages or one. Choose one build tool, and go from there. You’re going to make mistakes. You’re going to learn from them. You’re going to incorporate the way your organization works. You’re going to have feedback. Start small. Don’t plan for the whole thing.

Then, the third bullet point there is, choose some defaults. Conventionalize from the beginning, like this is the way we do it. It doesn’t matter if it’s good or bad, you don’t know. You’re just starting. The important thing is that everyone is doing the same, and everyone is exposed to the exact same environment so they can feel the same struggles, if they happen. Chances are, there will be some. Then, fourth is, make the relationship between the modules easy to visualize. It’s really easy to get lost when you have a monorepo, because you have very small modules, and if you don’t plan them accordingly, you’ll have everyone depending on everything. This is not good. That also happens with a poly-repo.
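One inexpensive way to make module relationships easy to visualize is to emit the dependency graph in Graphviz DOT format and render it with any DOT viewer. The sketch below assumes the graph is already available as a mapping; the module names are hypothetical.

```python
# Hypothetical graph: module -> modules it depends on.
DEPS = {
    "common-auth": set(),
    "user-service": {"common-auth"},
    "orders-service": {"common-auth"},
}


def to_dot(deps: dict[str, set[str]]) -> str:
    """Render the dependency graph as Graphviz DOT text."""
    lines = ["digraph modules {"]
    for name in sorted(deps):
        lines.append(f'  "{name}";')
        for dep in sorted(deps[name]):
            lines.append(f'  "{name}" -> "{dep}";')
    lines.append("}")
    return "\n".join(lines)


print(to_dot(DEPS))
# digraph modules {
#   "common-auth";
#   "orders-service";
#   "orders-service" -> "common-auth";
#   "user-service";
#   "user-service" -> "common-auth";
# }
```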

Number five, be prepared to write some custom tools. Your build necessities are very unique too. Maybe your company has a weird setup because of network issues, or maybe you’re doing code in a very old platform that needs a very special tool to build, and you need to fetch it from somewhere and use an API key. Be prepared to write custom scripts that look like they are already made for you, tailored for your needs. This is something that’s really valuable. Sixth, be prepared to talk about the monorepo a lot, because this is a controversial topic, and people will have a lot of opinions, and you will have to explain why you’re doing this all over again multiple times.

Then, number seven is, optimize for development. That comes from my personal experience. It is much nicer for people to clone and start working right away, rather than having a massive configuration step. Our monorepo has everything turned off by default, everything targeting development, localhost, all the way, no production names, no production references, nothing. Everything is made to be run locally without dependencies on anything. Then some don’ts, which are equally important, in my opinion. Don’t group by technology.

Don’t look at your code base and think, yes, let’s get everything that is using Rust and put on the same monorepo. Because, yes, everything’s Rust. We have a build system built for it. No. Group by operating dynamics, by affinity of teams. Talk to people, see how they feel about interacting with other teams more often. Maybe they don’t like it, and maybe this is not for them, or maybe you’re putting two parts of your code base that are using the same technology but have nothing to do with each other. Don’t do that. Don’t group by technology, group by team affinity, by the things that you want to build and the way people are already operating.

Then, number nine, don’t compromise on quality. Be thorough about the decisions you make and why you’re making them, because otherwise you can become a big ball of mud very quickly. Say no. If some people want to put a bunch of code in your monorepo just to solve an immediate problem that they might be having, think about it. Structure it. Plan. Make POCs. Simulate what your day-to-day is going to be like if the code was there. Have patience. Number 10 is, don’t do too much right away. I mentioned many things like partial build, sparse checkouts, caching, and unified configuration mechanism. You don’t have to have all these things to have a monorepo. Maybe your monorepo is small in the beginning, and it’s fine if you have to build everything every time, maybe.

Eleventh, I think, is the most important one, don’t be afraid if the monorepo doesn’t work out for you. Reevaluate, incorporate feedback. Learn from your mistakes, and hear people out. Because the goal of all this is to extract the most out of people’s time. There’s no reason for you to put code in a monorepo if this is going to make people’s lives harder. That’s true for the opposite direction too. There’s no reason to split just because it’s more beautiful.

Questions and Answers

Participant 1: Do you have any preference on the build tools? Because we have suffered a lot by choosing one build tool and then we quickly get lost from there.

Bento: You mean like choosing Maven over Bazel or Gradle?

Participant 1: You mentioned a lot of tools, so I was wondering which one is your preferred choice, like Gradle or Maven?

Bento: This is very closely related to your team’s preference and skill set. On my team, it was and it still is, very hard to get people to move from the Maven state of mind to a less structured approach that we have with like package.json and JavaScript all over the place. I don’t have a preference. They all are equally good depending on what you’re doing. It will depend.

Participant 2: Do you have any first-hand opinion on coding styles between the various modules of a monorepo? I’m more interested in a polyglot monorepo, if possible, like how the code is organized, various service layers, and all that.

Bento: I’m a very big sponsor of flat structures on the monorepo. I don’t like too much nesting, because it’s easier to visualize the relationship between all the internal modules if you don’t have a folder that hides 20, 100 modules. I always tell people, give the package name a very nice prefix, and don’t be afraid of creating as many as you want. Like the monorepo that we built is built for thousands of packages. We are prepared to grow that far. IDE support might suffer a little bit, but depending on what IDE you’re using, too. This is the thing that I talk about the most. Like flat structures at the top, easy visualization. If you go to the specifics of each language, then you go to the user manual and you see the code style there.

Participant 3: I’m from one of those rare companies that does pretty much use a monorepo for the entire code. There’s lots of pros and cons there, of course. We’re actually in the process of trying to unwind that. I just wanted to add maybe one don’t, actually, to your list, which is, try to avoid polyglot repos. Because you can easily get into a situation where you have dependency hell resulting from that, if you have downstream dependencies from that and upstream dependencies. You have a Java service that’s depending on a Python library being built, or something like that.

Bento: I don’t necessarily agree with that don’t, but it is a concern. Polyglot monorepos are really fragile and really hard to build. You can look at stories that people who use Bazel will tell you, and it can become very messy very quickly. For our use case, for example, we really do have the need of having this cross-language dependency because we’re having like a VS Code extension that is TypeScript depending on JARs, and these JARs usually depend on other TypeScript modules. The structure that we created lets us navigate this crazy dependency tree that we have in a way that allows us to stay in synchrony.

Participant 3: I was talking about individual repos having multiple languages in them. Maybe it’s specific to our company. We have pretty coarse-grained dependencies, so it’s like code base.

Bento: This is a good example, like your organization, it didn’t work out for you, so you’re moving out of it. That’s completely fine. Maybe a big part of your modules are staying inside a monorepo, which is fine too. You’re deciding where and when.

Participant 4: When you were talking about your own company’s problems, one of the bullets said that the package lockfile would change, or the pnpm-lock file would change, and it was causing downstream things to build, and you fixed that. Why is it a bad thing for when dependencies change, for everything downstream to get rebuilt?

Bento: It’s not a bad thing. It’s actually what we’re aiming for. We want to build only downstream things when a dependency changes. Our problem is that when the lockfile which is in the root folder changes, the scripting system that we have to decide what to build will understand that a root file changed so everything gets built. Now we have a solution where we leverage the turborepo diffing algorithm to understand which packages are affected by the dependencies that changed inside the lockfile. You’re right, like building only downstream when a dependency changed is a good thing.
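To illustrate the idea of looking inside the lockfile instead of treating it as one opaque root file, here is a simplified stand-in. It is not the turborepo diffing algorithm: it assumes the old and new lockfiles have already been parsed into dictionaries with an `importers` section keyed by workspace package path (as pnpm lockfiles have), and it only compares each package's direct entries. Package paths and versions are hypothetical.

```python
def importers_with_changed_deps(old_lock: dict, new_lock: dict) -> set[str]:
    """Return workspace packages whose resolved dependencies differ."""
    old_importers = old_lock.get("importers", {})
    new_importers = new_lock.get("importers", {})
    changed = set()
    for path in old_importers.keys() | new_importers.keys():
        if old_importers.get(path) != new_importers.get(path):
            changed.add(path)
    return changed


old_lock = {
    "importers": {
        "packages/editor": {
            "dependencies": {"react": {"specifier": "^18.2.0", "version": "18.2.0"}}
        },
        "packages/utils": {
            "dependencies": {"lodash": {"specifier": "^4.17.0", "version": "4.17.20"}}
        },
    }
}
new_lock = {
    "importers": {
        "packages/editor": {
            "dependencies": {"react": {"specifier": "^18.2.0", "version": "18.2.0"}}
        },
        "packages/utils": {
            "dependencies": {"lodash": {"specifier": "^4.17.0", "version": "4.17.21"}}
        },
    }
}

print(importers_with_changed_deps(old_lock, new_lock))  # {'packages/utils'}
```

Only `packages/utils` and whatever is downstream of it would then need to be rebuilt, instead of the whole repo.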
