Month: September 2022

MMS • Bruno Couriol

Article originally posted on InfoQ. Visit InfoQ

The Preact JavaScript framework recently released Signals, a new set of reactive primitives for managing application state. Like other frameworks (e.g., Svelte, VueJS), the React-compatible framework lets developers associate parts of the user interface with state variables independently of the UI’s component tree. Alleged benefits of the extra 1.6KB: fast re-renders that are independent of the shape of the UI component tree and excellent developer ergonomics.

The Preact team explains the rationale behind the new API as follows:

Over the past years, we’ve worked on a wide spectrum of apps and teams, ranging from small startups to monoliths with hundreds of developers committing at the same time. […] We noticed recurring problems with the way application state is managed.

[…] Much of the pain of state management in JavaScript is reacting to changes for a given value, because values are not directly observable. […] Even the best solutions still require manual integration into the framework. As a result, we’ve seen hesitance from developers in adopting these solutions, instead preferring to build using framework-provided state primitives.

We built Signals to be a compelling solution that combines optimal performance and developer ergonomics with seamless framework integration.

Most popular JavaScript frameworks have adopted a component-based model that allows building a user interface as an assembly of parts, some of which are intended to be reused and contributed by open-source enthusiasts and other commercial third parties. In the early years of React, many developers credited component reusability and ergonomics (JSX, simplicity of conceptual model) for its fast adoption.

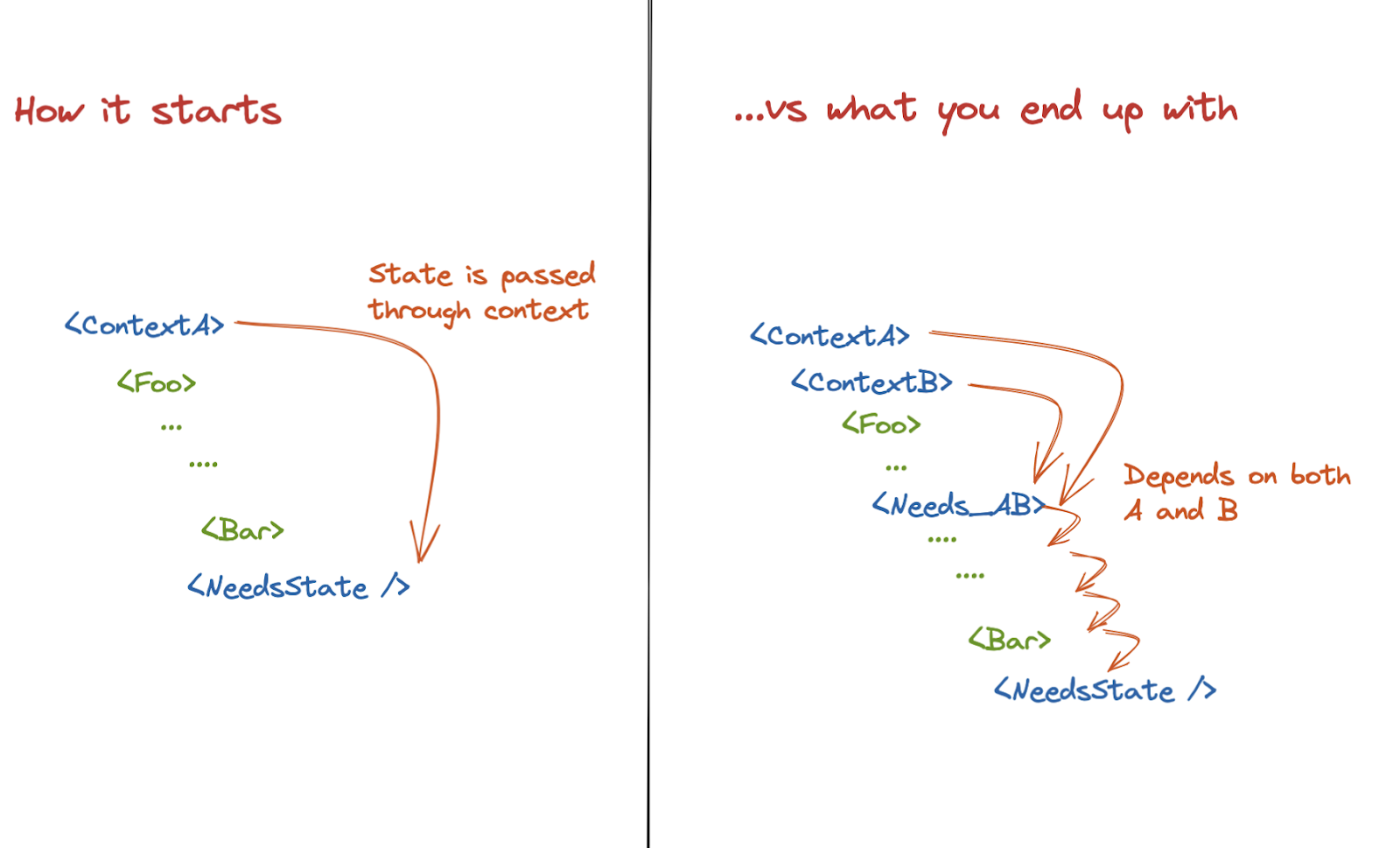

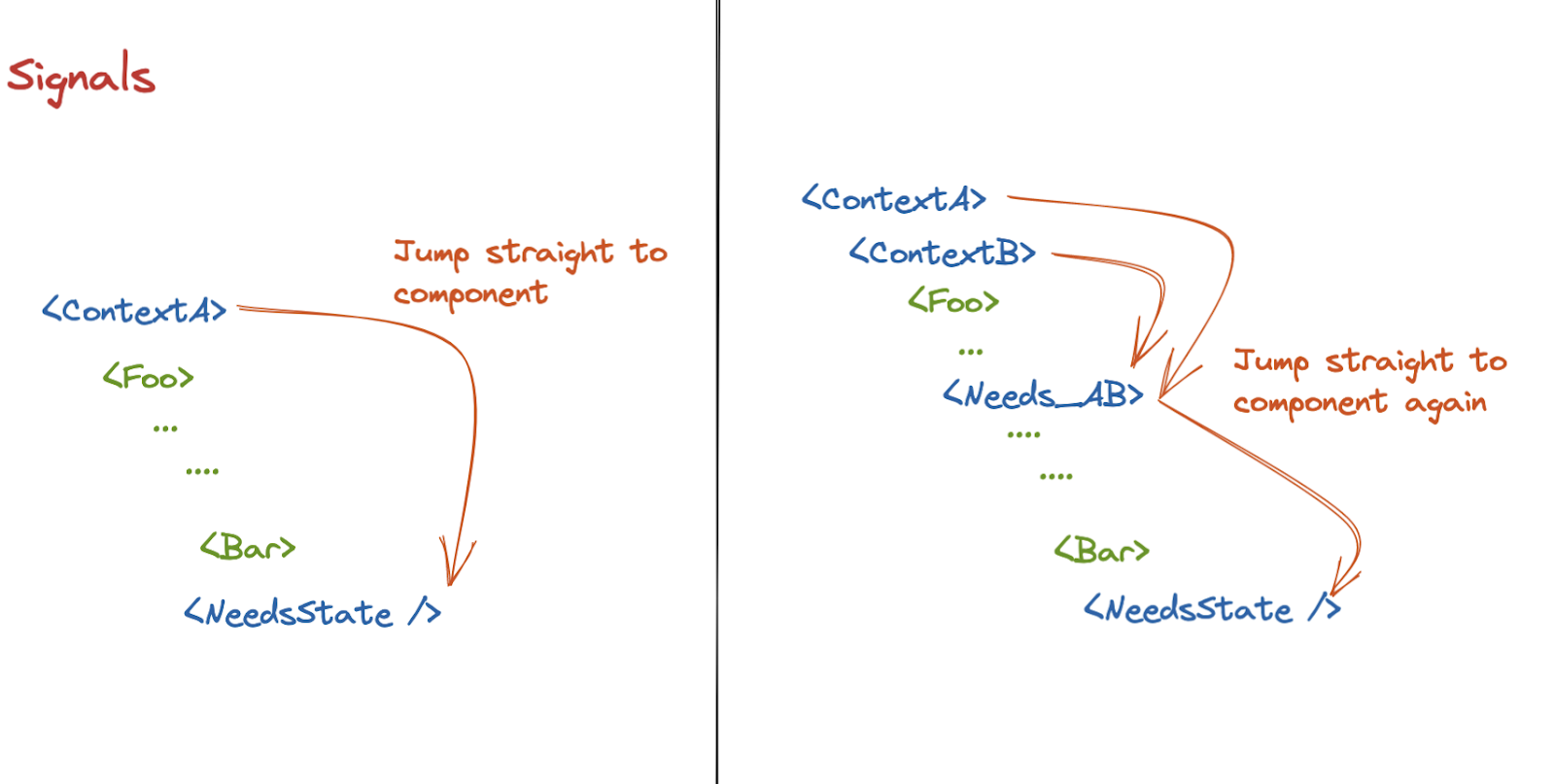

In large-enough applications, some pieces of state are often required by unrelated components of the user interface component tree. A common solution is to lift a given piece of state above all components that depend on it. That solution and the corresponding API (often called Context API) however may result in unnecessary rendering computations. The number of components which must be synchronized with a piece of context state may be fairly small when compared with the size of the component tree to which the context state is passed. A change in context state will however trigger the recomputation of the whole component tree:

(Source: Preact’s blog)

In some cases (e.g., very large component trees, expensive component renders), the unnecessary computations may lead to performance issues. Preact’s Signals API seeks to eliminate any over-rendering:

Beyond framework integration, the Preact team also claims excellent developer ergonomics. The blog article provides the following implementation of a todo list application (cf. code playground):

import { render } from "preact";

import { signal, computed } from "@preact/signals";

const todos = signal([

{ text: "Write my first post", completed: true },

{ text: "Buy new groceries", completed: false },

{ text: "Walk the dog", completed: false },

]);

const completedCount = computed(() => {

return todos.value.filter(todo => todo.completed).length;

});

const newItem = signal("");

function addTodo() {

todos.value = [...todos.value, { text: newItem.value, completed: false }];

newItem.value = "";

}

function removeTodo(index) {

todos.value.splice(index, 1)

todos.value = [...todos.value];

}

function TodoList() {

const onInput = event => (newItem.value = event.target.value);

return (

<>

<input type="text" value={newItem.value} onInput={onInput} />

<button onClick={addTodo}>Add</button>

<ul>

{todos.value.map((todo, index) => {

return (

<li>

<input

type="checkbox"

checked={todo.completed}

onInput={() => {

todo.completed = !todo.completed

todos.value = [...todos.value];

}}

/>

{todo.completed ? <s>{todo.text}</s> : todo.text}{' '}

<button onClick={() => removeTodo(index)}>❌</button>

</li>

);

})}

</ul>

<p>Completed count: {completedCount.value}</p>

</>

);

}

render(<TodoList />, document.getElementById("app"));

While the provided example does not showcase how reactive primitives eliminate over-rendering, it nonetheless showcases the key new primitives. The signal primitive declares reactive pieces of state. The computed primitive declares a reactive piece of state as computed from other reactive pieces of state. The value of the reactive pieces of state may be accessed with .value.

Developers debated on reddit the importance of performance in today’s web applications and compared ergonomics with that of React, other frameworks’ reactivity primitives (e.g., Vue 3, Solid), and other libraries (e.g., Redux, mobX, jotai, recoil).

Others worried that with this new API Preact was straying farther away from React. One developer said:

Hooks and Classes are the supported and encouraged architecture to utilize and Preact’s Signals move intentionally away from that, further defining them as a unique framework, NOT a “React add-on”.

Another developer on reddit mentioned the need for guidance and additional examples:

The problem here is that you’re going to get a lot of confusion around how to performantly utilize signals and so people are going to use them wrong all the time, unfortunately.

Preact self-describes as the “fast 3kB alternative to React with the same modern API”. Preact is an open-source project under the MIT license. Contributions are welcome and should follow the contribution guidelines and code of conduct.

MMS • Ben Linders

Article originally posted on InfoQ. Visit InfoQ

Working in outdated ways causes people to quit their work. Pim de Morree suggests structuring organizations into networks of autonomous teams and creating meaningful work through a clear purpose and direction. According to him, we can work better, be more successful, and have more fun at the same time.

Joost Minnaar and Pim de Morree from Corporate Rebels will speak about unleashing the workplace revolution at Better Ways 2022. This conference will be held in Athens on September 23, 2022.

According to de Morree, the way we currently work is outdated. This is caused by using an outdated idea of how work should be done:

100+ years ago we created structures to manage work in a certain way. That worked really well, but times have changed. However, our way of working has remained largely the same.

While the world is changing at a much faster pace, most organizations continue to rely on bureaucracy, top-down control and predictability. And that’s not working.

As a result, a huge amount of people are disengaged, burned out, or bored out of their mind at work, de Morree argued. It’s not that surprising that people are quitting in droves.

De Morree suggests structuring organizations into networks of teams where teams have skin in the game. Split the organization up into small teams (mostly 10-15 people) that are highly autonomous:

Think of giving teams the power to make decisions around strategy, business models, reward distribution, hiring, firing, and so on. Power to the people, to the teams I should say.

This is not an easy thing to do. But, according to de Morree, if done well it has a huge impact on motivation and success.

Pioneering organizations are changing their way of working. Many are focusing on networks of teams, transparency, distributed decision-making, and supportive leadership, de Morree claimed. They focus on creating meaningful work through a clear purpose and direction. They reduce hierarchy and unleash autonomy. They provide freedom and responsibility while allowing people to work on things they love to do.

The benefits that de Morree has seen include increased engagement, productivity, innovation and reduced sickness, mistakes, and attrition.

InfoQ interviewed Pim de Morree about exploring better ways to work.

InfoQ: What is keeping organizations from changing the way they work?

Pim De Morree: It´s a lack of understanding of how things can be done differently. It’s hard to reimagine things when you’re very much used to doing things a certain way. 99.9% of our education system, our institutions and our businesses are organized in traditional ways and therefore it’s hard to envision a different reality.

However, there are pioneers who are showing that things can look very different. In a very good way.

InfoQ: How can organizations foster freedom and trust, and what benefits can this bring?

De Morree: First of all, it’s important to understand that with freedom comes responsibility. It doesn’t make sense to just give employees freedom. To unleash true motivation, it’s important to also provide lots of responsibility.

Create clarity around the purpose of the organization, the goals of each and every team, the guiding principles to take into account, and the constraints that people have to deal with. If you set the framework properly, it’s much more interesting for teams to self-organize within that framework.

InfoQ: What can be done to establish a culture of radical transparency?

De Morree: As the term suggests, radical transparency is about opening up all kinds of information. Think of creating transparency around the company financials, important documents, team financials, salary levels, and so on. The more information people have about the company, the more they are able to make great decisions. Otherwise, they might have authority to make decisions, but no information to do so properly.

MMS • Kate Wardin James Stanier Miriam Goldberg James McNeil

Article originally posted on InfoQ. Visit InfoQ

Transcript

Verma: We are here for a panel discussion on remote and hybrid teams.

We’re mostly tech people here and we should just recognize that this is such a privilege for us to be remote. Although like, last couple years, we’ve felt like we’re forced into this remote work, but we should recognize that there are other people who were bound by their duty and motivated by their compassion to continuing being in-person, and definitely thankful for all of that.

Background, and Perks of Working Remote vs. In-Person

I’m Vishal. I’m an engineering manager at Netflix. I have some remote working experience. As we introduce everybody, I wanted to hear a little bit about your background, especially in the context of remote and hybrid teams. Why don’t you, as you introduce yourself, tell us a perk that you miss from working in-person, or a perk that you enjoy because of being remote now that we are in this world.

McNeil: I’m James McNeil. I’m an SRE at Netlify. I’ve been there for a bit over a year now. That role has been fully remote. Netlify is a fully remote company. Before that, though, I was at Pivotal and I was working on a hybrid team. Two of my teams were in different countries in different time zones, and because Pivotal was the place where you pair that also involved fully remote pairing, which was a very interesting experience. There’s one thing that I absolutely do not miss is commuting. I don’t think people should have to commute ever. It makes no sense to me. There’s a lot of very interesting stuff about how cities were developed, and why commute. It’s not a natural state of being. I do miss free breakfasts for those of us who had them.

Stanier: I’m James. I’m Director of Engineering at Shopify. I’ve been at Shopify for not long, two months. Prior to that I was a seed engineer at a startup. Then over the course of 10 years, we grew it and exited. Remote for a couple of years now. I previously didn’t think remote working was for me, but the pandemic changed all that. The perk now is that we’ve relocated to be near all of our family, which is the best possible thing that could have happened to us. I agree with James, I do miss snacks and free food and that kind of thing, but being close to family is a bit better. That’s all good.

Wardin: Kate Wardin. I’m an engineering manager at Netflix. I am in Minneapolis. What I love about working remote is of course taking care of my house plants during the day. That has been a wonderful new hobby for me. Also, of course being closer to family, getting to have lunch with my daughter and my husband, and take my dog on a walk when it’s not brutally cold, is wonderful. I’ve been working remote since March of 2020, as a lot of us have. For those of you who have visited Minneapolis, I worked downtown and we had skyways, and so you would go on one on one walks through the skyways. You could walk the equivalent of miles all inside so you don’t have to put on your jacket, go to a good bunch of different restaurants and stuff. I really miss the skyway walks. That was so fun and just a great way to support local businesses too. I miss that and I miss seeing people of course, but I’m happy to be home.

Verma: That’s so sweet, the walks. I can almost picture people walking or myself walking. It’s such a wonderful experience.

Goldberg: I’m Miriam. I’m an engineering manager at Netflix. I manage our Federated GraphQL team. I’ve been at Netflix for about seven months, and I am fully remote out of Philadelphia. I started working remotely in 2018. When I was working at Braintree, I had started in-person, and then moved back to Philly, went remote, switched into management. It was a lot of changes. What I really miss, honestly, is having a beer with my co-workers after work. I miss that a lot. I also love that I get to be in my hometown, just like you Kate. I love Philly. I love that I can be here again and work in an industry that I love. It’s a great perk.

Verma: Again, now, imagining glasses clink with co-workers or friends. That’s obviously one thing I miss. You’re right, so many good things, so many things we miss also. I miss an espresso machine so much. I’ve considered buying it, obviously, but I think it works better when you have it at a company.

Interesting Developments around the Concept of Location

The big thing I want to start with is, the concept of location used to be so important. There’s been so much written about it. We know that people have migrated countries over centuries to be in the same place at the same time. I was recently reading about Leonardo da Vinci, how he moved to Florence and he did a lot of his important work there. We all know about Silicon Valley, people migrating there. The bigger question I have for all of you, is that location used to be so important, and now we’re saying that it’s not anymore. We’re at least saying that. What are some interesting developments you are seeing towards the concept of either location ceasing to be important anymore, news from around the world?

Stanier: This one’s interesting to me, because part of going remote and relocating was that I now live in a very rural remote part of the UK. This is where my family grew up. This is where her family are from. I think the really positive thing is that when she was 18, and she was leaving home, she had to go to London six hours, seven hours away in order to have a chance at a career. I think, obviously, the whole mentorship and seniority thing in our industry, remote is hard and that’s another conversation topic. I think now at least the possibility that anyone can grow up anywhere and have the same possibility of a career is incredible. The next generation of engineers could be out here in the west of Cumbria, for all I know, it used to be the universities, the cities. I think that’s a really exciting prospect.

Verma: How about you, Kate, why don’t you add your lens on how are you seeing the industry evolve, and do you think this is for real, like these changes for you?

Wardin: First, James, I love what you said. I think this change does make a career in tech a lot more accessible to people who might not have had an opportunity to get into it before. Also, for folks who might need to do something a little bit more like part time or that freelance opportunities first, there are so many more opportunities to network and make connections if you don’t live in these major hubs. In fact, I know a lot of organizations are strategically and intentionally looking at outside of those core cities that were traditionally where people would source a lot of that technology talent. I think that’s wonderful in so many different ways.

I think this is a change for good. I think there’s too many people in the industry who are like, now I’ve moved and I’m loving it, and I’m not going back. A lot of people are going to be picky on where they’re choosing to work. I think that it is here to stay, at least for a lot of organizations, just because of the talent that we can reach and the opportunities that we can provide to people who wouldn’t have had those before. I think there’s a lot of positives.

I’ll speak to a negative. I even think about Minneapolis, and just how some of those small businesses are struggling, if their proximity was servicing folks who commuted downtown, like parking ramps, restaurants, bars. We have to figure out ways to make sure that we’re still keeping them in business, too, and so I think getting creative about that. Of course, we know the success stories, and unfortunately, some of the downfalls of that. There’s pros and cons.

Verma: Excellent point about empathy with people who might be seeing the negatives of that. Definitely not as much talked about. Actually, while I take that context, what you said to Miriam and James McNeil. James McNeil and Miriam, you’ve been doing remote before the pandemic. Going back to the bigger question of remote like, I want to ask the same thing, what are you seeing from your point of view? Is this thing real? How different is it from before when you were doing remote?

McNeil: It’s real. I can speak for myself. I work for a company that doesn’t even actually have a registered entity in the UK, and I wouldn’t have that opportunity unless they were open to remote work. In fact, not dissimilar to James, I’ve within the past week moved to a much smaller city on the west side of the country, because my partner is from Wales, which is just across the border. I think that freedom and openness to different geographies, and to people working in different places so long as they are able to work is definitely here to stay. From our perspective, I’ve seen, and just looking at the makeup of our company, we’ve definitely hired much more internationally since the pandemic. I think we were a remote company but once we took the brakes off and went fully all in, suddenly, we’re like, we can hire people from all over the world. There’s so much talent out there that doesn’t want to go to Silicon Valley or can’t. One of the two. I think that companies that are open to that and that realize that are profiting from that.

I think one thing, though, is that, it’s going to be very important to realize that location is not setting. We’ve I think in all of our talks talked about, it’s a privilege to be able to work from home, both like financially and with having the right employer who will let you do that. I could totally see myself working from a co-working space in Bristol, and I’m sure at points in my life, I will have to, just because of not having the space where I’m living. I haven’t done it personally. We definitely offer a stipend if not pay entirely for our colleagues’ co-working spaces. I think that model, sort of a WeWork plus where there are places where you can go that have the right space, but also the right security setup from an IT perspective and from an infrastructure perspective, and people’s employers, for them to feel comfortable working there. Because it’s not necessarily about working from home, it’s about working where suits you.

Goldberg: Building on what you were saying, James, about co-working spaces. When I first went remote, I worked out of a co-working space that happens to be two blocks from my house. I had no commute. It was fantastic. The co-working space shut down for COVID. They’ve since reopened, but I’ve chosen to continue to work from home. I think it depends on the co-working space. When I transitioned into management I began talking more over the course of the day, and shortly before COVID I was getting a couple of stinky looks every now and then. That I was making too much noise in this space, and maybe I should hire a private office within the co-working space and spend more time in phone booths. At that point, I was like, I make a lot of noise. I talk a lot, so I should just work from home. I think that co-working spaces are great. It was nice to have a community of people even if they were not my direct colleagues.

I think opening up these opportunities around the world and in areas where the tech industry isn’t super well established is very exciting. I lived in San Francisco for 10 years working in tech there. Moving back to Philly, I just remember my first week back here, I went to a coffee shop. I was shocked that there were people over the age of 50, drinking coffee and talking about things that were not tech. It hadn’t even occurred to me that that would be unusual, but living in the mission of San Francisco, that’s what it was. You go to a coffee shop, and everyone’s just talking about their startup. I’m hopeful that that may have some effects on diversifying the industry, getting people to think outside of industry bubbles, and get a little bit more creative and make things that are relevant to people outside of that.

Verma: The co-working space story or angle is so interesting. Normally, we would see co-working spaces in particular cities. I wonder if the trend is going to now take co-working spaces to pretty much everywhere, especially the more scenic places maybe, like where people would want to live. If anybody has any co-working space trends they want to share, I know it’s still COVID, probably maybe not taking off as much.

Wardin: The athletic club is big here, and so you’re seeing the co-working spaces like attached to a gym so you can go to a workout class, and then have your co-working space. They just opened a new one downtown Minneapolis, and then there’s one right by my house, so having it in proximity to a workout facility.

Verma: That’s such a good idea. I did not think about that. Now I’m thinking what other things you can add on to a location to make it even better for people. James Stanier, do you have any observations?

Stanier: Interestingly, just related to this, there’s a startup called Patch. Patch.work is their website. They’re now wanting to open up co-working spaces everywhere, and you register your interest, and then they try and acquire a coffee shop or a building in your location. I think they’ve launched, I saw on Twitter the other day, but the whole idea is it’s, work near home becomes a thing. I live in a very rural place, there’s not really a coffee shop here I can work from, but if there was, I would totally be there. In the same way like in the UK, the pub is like your other room in your house and you go to the pub quite a lot to hang out. Again, it’s like another extension of the home in your community, which I think is great. It drives revenue into these communities as well, that may not necessarily have had lots of people there.

Verma: Definitely the business models will evolve with where the demand goes. This is definitely a new area where all those businesses Kate was talking about are suffering, will find newer avenues. People do want to be out there and do things, and be part of the community.

Goldberg: Yes, about co-working spaces, and the move to remote. I think in the run-up to my going remote, one of the biggest gripes of the office that I worked in was that it was an open office plan. We were in a shop that pair programed full time, which was wonderful. I love pairing and I love collaborating, but there were times when it was really hard to do work because there was so much crosstalk. It was really noisy, people are walking through. I think that there are a lot of things about the office environment that are broken, particularly for ICs and people who are doing work that requires deep thought and consideration and focus that in some cases is solved by working from home.

I think that there’s an interesting impedance mismatch because the people who are making the decisions about whether you can work from home are upper management and leadership who don’t do that work. You get into the burden of space planning and making a space that is really productive for software engineers. Sometimes working from home is better. Even with my team now, we have the option to work out of the office at Netflix, and my team has settled on going in once a week to collaborate and see each other in person and build those connections. When they need to sit down and knock out some code, unanimously, they agreed that they prefer to do it from home.

Learnings and Adjustments from Pre-Pandemic Remote Work to Now

Verma: Some of you have experienced this before the pandemic started. The question is around, what adjustments did you make when you moved from pre-pandemic remote work to now, or what are some learnings that you’ve applied there?

McNeil: I think pre-pandemic, I wasn’t working fully remotely. It was, I think, two days a week or something like that. In a sense, I didn’t really take it seriously. I was working. It was like an attachment to my work week, where it was like something we’re trying out. In a way, it was for the benefit of my colleagues who were working remotely, because that’s an important muscle to flex essentially, and to understand where some of the rough edges of remote work are. I hadn’t properly set up a desk. I had a place where I worked with a screen, but it wasn’t my office. I think also, and to what Miriam was saying about offices, this is, I think, a point that we don’t quite have with co-working spaces yet, or home offices, if we don’t have the space, was that my partner and myself were in the same room. We both talk a lot for work, but in very different ways. When I’m talking something very bad is happening and I’m on a call with someone. She’s running conferences and talking to colleagues, let’s say in more of a business setting. Once the pandemic started, she left the room, and we just figured out, given the space that we had, where her office was and where my office was.

I think that what we don’t have from co-working spaces yet, because a lot of them are coffee shops and modified spaces, are places for people to either work quietly, or talk without it being those sort of cubicles that started popping up in offices, because everything was open plan, and you closed the last door, and you’re hermetically sealed away. I think that there’s probably something in the design of these co-working spaces or offices, where we could probably get away from the giant open plan with the huge table. That’s what I’ve noticed. You need a room of one’s own, to quote Virginia Woolf.

Verma: The infrastructure basically getting in your way, like whether you’re in a rural place where you’re not able to have a good internet connection, or a co-working space that haven’t quite caught up to the need for us to be talking all the time. I wonder if the technology will come in and step in and solve these problems. I almost remember seeing a tweet sometimes like if somebody could invent an AI based mic that just filters out everybody else, like just turns on itself when needed and only listens to you, that will be like a billion dollar company. It seems like a lot of things need to happen here and a lot of opportunity coming in terms of what we could do in the future.

Team Building and Personalization

I know that many of you are leaders, you hire people, you’re managers, or directors. I want to touch the aspect of the team building. I want to hear from both you as an employee and a manager. One of my theories is that as we are going into this new world, people are going to have more freedom to personalize the kind of teams they are in. They’ll affect the culture of the team much more than they were able to. James McNeil touched on some teams having international people, other teams might be more localized, so I feel like people have a lot more freedom. As you are building your team, if you’re a manager, let’s talk about like, how are you personalizing your team to be good at the job they do?

Wardin: The last question you asked is, how do you personalize the experience being on the team so that they’re good at what they are expected to do, being remote? I lead a dev productivity team. One thing that they noticed pretty quickly after not being co-located, is that a lot of those, like forums where they would receive some of the more organic feedback from the developers that we support, even just like walking by cubes, and you’re hearing someone complain about the time it takes to deploy, or build something. They’ll be like, how can we help? Where you just be a fly on the wall in some discussions. We’ve had to be really intentional being remote. This quarter, we’re trying this experiment of having developer productivity champions. Each team that we support has a dedicated champion, so they attend their demos, their retrospective, so that they can pick up on some of those things that they are missing from not being in the office. That’s one thing.

Also, surveys, but a lot of people have survey fatigue. We’re trying to find other creative ways to get feedback, so that we can understand like, how do we drive our priorities and know that we’re working on the right things. I know that that’s a pretty unique use case being a dev productivity team, as opposed to a product team. Those have so far worked for us. Also, just ways to personalize knowing that we have folks from all over the world potentially in our teams. I mentioned the photo the weekend thread. I love to say, show me something fun you did this weekend. In Slack, just post a picture that helps people get to know each other, and that trust, that bonding is going to make us a better team. We’ll do an icebreaker question. Maybe you say, tell me something cool about your hometown. Just ways to again, humanize our colleagues, get to know each other, build that camaraderie and that trust so that we can be as best effective as possible.

Intentional Things in Personalizing Teams for Remote and Hybrid Work

Verma: I want to hear also from James Stanier, since you are building a team in Europe, how are you going about it? What are some intentional things you are doing in personalizing your team for remote and hybrid work?

Stanier: The interesting thing for us is like, there’s not many Shopify engineers in Europe, in our area of the company. We’re pretty much like rolling the road out in front of us as we are going. I’m new, my leads are new. I’m hiring leads, they’re hiring their teams. We’re all in it together. One is being very open about the uncertainty and not really knowing the answers to anything goes a long way. One thing that we’re trying to do is every week, try and meet with three to five people from different parts of the organization, have a coffee, or have a chat with some of the other principal engineers, with other directors and the VPs, just talk. Because I think if you do that every single day, every single week, you start to build up a network graph in your brain of like, how does this company do things? Who does what? Then that’s great for your team as well. We could say, “We’re doing this new technical thing. Last week, I spoke to one of the other principal engineers, he spends 30% of his week mentoring people. He’s probably got some time to pair with you, just go and have a chat.” You have to be much more intentional and proactive, as Kate was saying, with your connections. You have to think of the office metaphor of corridors of unintentional bumping into people, a fly on the wall, and then convert those metaphors into intentional actions in the asynchronous and internet based world for sure.

Culture Personalization as a Remote or Hybrid Worker

Verma: That’s insightful. We’re already seeing a contrast. Kate, your team is keying on certain factors. James Stanier is talking about certain other factors, because the journey his team is in, maybe social connections are much more important right now. That speaks to how during a journey of a team, like different knobs will become more important, and we cannot personalize even during the lifetime of a team, different elements. Let’s talk to James McNeil. You talk in an SRE specific environment. You’ve already done things to make sure things work well. What are some other things you would do to personalize the culture of your org or your team as a remote worker or a hybrid worker?

McNeil: I think one thing that’s going to be interesting from an organizational perspective to look back on the past couple years, is that, we, I imagine are going to see a different interpretation of Conway’s Law. Because there’s so much more fluidity to the org structure and there are no hallways, or water coolers. Teams aren’t geolocated, and that’s going to probably break down some of the barriers that you got around, your systems reflect your organizational structure. It also creates other ones, to some of the points that some of the others have made. Some of the stuff that we do as parts of onboarding, would be essentially trying to reach out to as many people who are adjacent to the work that you’re doing. This is something that the engineering manager will put together, essentially a package for every new joiner, of these are the people on the team. This is roughly the people we work with. This is the SRE that’s associated with that team. Then, it’s essentially up to that new joiner to contact them, to put in some time in their calendar, and to get those introductions going. Because one thing that we don’t have is a lot of that informal conversation unless you make that happen.

There’s a really interesting book by a woman named Marie Le Conte, about the way that the UK Parliament works, called, “Haven’t You Heard?” Her thesis is that all of UK politics is based on gossip. Actually like being in the halls of power and seeing people in, I think there was like 10 pubs or something like that, has a big influence on the way that things work. I think that, in a colloquial sense, encouraging gossip, encouraging people to talk amongst themselves and not just get either the messages from on high or their track of work, is very important in an organization that’s fully remote. Because that organic transfer of knowledge is sometimes where some of the best ideas come from, sometimes where some of the thorniest problems get solved, because people address things in different ways. I don’t know if I’ve given too many solutions, but ways of encouraging people to just have informal chats I think are incredibly important.

Verma: I think, more solutions, the better. Right now we’re all trying to figure things out and there are so many different situations. Maybe Miriam you can add your perspective, like you worked in payments industry also. I was wondering, does this work for everybody, like every industry or like some industry, just like not, like just inimical to this thing.

Goldberg: When I went remote, we were hybrid. At Braintree, our two largest offices were in San Francisco and Chicago. Then we had a smattering of independently remote people mostly around North America. We made it work. I think it worked pretty well, because we were already broken up across offices. We had good VC. We were used to working across time zones, and making sure that we were including people who weren’t in the room on decisions. I think of a lot of the stuff that James McNeil has been saying because in payments, SRE in offices is pretty intense. That stuff is always remote, because you can’t just plan to be paged when you’re in the office. It always seems to happen at 3:00 in the morning. Yes, we did it.

I think that one thing that we haven’t been able to do since COVID, when I moved to Philadelphia, I worked really closely, for instance, with the Docs team, because I was on APIs. We worked a lot with our Docs team to document our public APIs. They were mostly based in Chicago. Some of that was very creative, open ended, like how are these things going to work together, and we flew to Chicago before COVID. I could hop on a plane at 6 a.m., and be home by 10 p.m. the same day. I would do that every couple of months if I needed to, or spend the night, or whatever. That obviously hasn’t really been an option for most people in the last couple of years. It certainly is not an option if you’re talking about cross country or transatlantic travel. I am excited to start peppering our remote work with some more in-person travel as things loosen up a bit. It’s definitely valuable. I just don’t think you need to over-index on it.

Sustainability of Remote/Hybrid Work

Verma: Since we have all taken on this, I want to talk about sustainability of this thing. We’ve taken on remote and hybrid work, we want to make it last. Obviously, so far what I’ve heard, I’m not hearing that there’s a definite point in future where we’re going to switch. Seems like we’re leaning more into this. I want to talk about sustainability. What are the things people should be doing, companies should be doing, teams and managers should be doing to make it last?

Stanier: There’s a lot to unpack in this particular area. I think a lot of it comes from managers and leadership trying to set the tone for how you make this a marathon and not a sprint. It’s so easy when you’re working remotely to check all of your messages all evening, maybe even wake up in the middle of the night and reflexively check them on your phone. You have to be much more attentive to how you spend your time and your attention. I think it’s making sure people don’t work too long hours, and the leaders of the company being very vocal about that. There’s this concept of leaving loudly, which came out of this thing, like the Australian branch of Pepsi or something. It was a program they had in their leadership where anyone who’s like C level, VP level, you leave at the end of the day, reasonable time, and you tell everyone what you’re doing. It is like, “3:30, I’m off to pick up the kids. I’m out. See you.” You embed the culture that, no, it’s not right to be checking all your emails all night, because that makes you better or you’re not missing out. You have to really prioritize that thing. At Shopify, we’ve already had the email saying, basically, we’re closed over Christmas, unless you have critical work. That’s the expectation. It really sets the tone. I think there’s a lot to unpack in this area with regards to burnout, and mental health, and FOMO, and all these kinds of things, especially if you’re in a different time zone.

Verma: Such a great point about being explicit, and leading by example, or setting the tone so others can be comfortable doing it. I want to maybe touch a little bit, of how company culture plays a role here. Maybe Miriam and Kate, since you are at Netflix, I want to talk about, like Netflix gives people a lot of freedom in terms of how they want to take time off. We don’t even have an official calendar. How are you navigating this, making it sustainable for your teams, this concept of remote work?

Goldberg: As a manager, I am always telling people to take time off. Like, you have a tickle in your throat, go home and sleep. Your kids are a lot today, go hang out with them. I have never had a manager who has really hovered over me and expected me to put in hours, and I certainly don’t want to do that to anyone else. It feels inhumane and counterproductive. I don’t know if this is true, I have a theory, just from observation and my own experience, that going remote at a company that you worked at in-person for, sets you up to have a harder time setting boundaries. If you join a company fully remote, it’s easier to set those boundaries. I think possibly some of it is just the social bonding that happens when you work in-office with people and perhaps with the company. Maybe a little bit of emotional transference that you have in those situations where you really do feel like duty bound to show up all the time for work, that maybe you don’t build those bonds. I think maybe that’s much healthier with your employee.

Going back to, I think James McNeil you mentioned the HashiCorp guy, was like you’re not going to make friends. I really like my co-workers at Netflix a lot. I enjoy working here. It is different than companies where I started in-person, and that’s ok. I think the people who I manage now all started in-person at Netflix, actually, with one exception, we’re very bonded to the work, and bonded to each other, and bonded to our mission. Just reminding them that there’s a whole world outside of work for themselves and their families and their lives, and just go do that. Go pay attention to it and nurture it.

Wardin: James Stanier, mirroring what you said, like leaving loudly. I wrote that and I love that. I think it is up to the leader to lead by example. To Miriam’s point, there is life outside of work. I’m going to take care of X, Y and Z priority, and making that ok, and actually encourage to do that. Miriam, you described so beautifully. That is exactly how it is. Of course, I was at Target before this, and I just felt this obligation. From being in the office together 40 hours a week, every single day, I felt this obligation to show up, and all the time be there. Whereas when I joined Netflix, I was like, there’s not this expectation. I’m getting my work done, and then I’m done.

An additional thought to make this sustainable, is to make sure that we have an equitable experience for folks who are remote, as equitable as the folks who are maybe going back on site. James McNeil, you spoke to, things get done, those water cooler chats. The fact that we do have to simulate those in-person interactions, those people are literally getting those interactions in-person, how do we make sure that it is equitable for folks online? Even as simple as, if you’re in a meeting room with three people in the room, and then two people online, please just turn your cameras on on your laptop so that you’re not like an ant and I can’t even see your expressions. Things like that can make a huge difference if you are intentional, or do just like call out if the experience isn’t equitable, being remote if there are folks in-person.

See more presentations with transcripts

MMS • Bryan Stallings

Article originally posted on InfoQ. Visit InfoQ

Key Takeaways

- Certain bad habits and collaboration anti-patterns that have been a part of in-office work for a long time are exacerbated by the dynamics of working remotely or hybrid.

- The impact of not addressing these habits is deteriorating team morale, lack of trust and connection required to do meaningful collaboration, and more silos between roles.

- Learn specific strategies for recognizing and addressing four such habits, including optimizing for in-office employees, over-reliance on desk-side chats, and backchannel gossip.

- Leaders can utilize better facilitation skills and best practices to help eliminate bad habits and improve the way their team collaborates.

- By addressing the bad habits carried over from in-person work, leaders can help remote and hybrid teams experience stronger psychological safety and inclusion, creating more engaged and innovative teams.

If you’re a leader, you’ve likely spent significant time thinking about how work—the way we communicate, share information, hold meetings, ship code, make decisions, and resolve conflict—has changed as a result of increasing remote and hybrid work.

You’ve had to learn how to deal with entirely new etiquette questions as a result of remote work, from how to deal with a constant barrage of “pings” from your corporate chat app, to whether or not it’s considered rude to have your camera off during Zoom calls. And you’ve had to adjust to all of these on the fly.

But what about all the bad work habits we should have tackled a long time ago?

It’s time to clean up our collaboration habits

Certain bad habits that were already important to address when in-office work was the standard are now exacerbated by hybrid and remote work. Instead of continuing to tolerate and accept them as the status quo, perhaps now is an opportunity instead to finally check them off your to-do list.

Why is it so important to address these patterns now, rather than later?

- Bad habits kill collaboration: The accumulated grime of bad work habits can hold your team back from feeling safe, comfortable, and valued at work—all essential components of being able to collaborate and be creative as a team. The good news? You’re holding the power washer and just need to turn it on. Set an example as a leader that you are committed to continually changing for the better—and that you want a culture where it’s okay to have hard conversations about things that need to change.

- Employees are asking for change: In a recent study on the ways companies and teams collaborate, 80% of surveyed knowledge workers said that virtual meetings are an essential part of their jobs—yet 67% of people still prefer in-person meetings. There is a clear opportunity (and need) for virtual meetings to improve, and creating better collaborative experiences for attendees is a huge piece of the puzzle.

- The stakes are incredibly high: As businesses face both recession and widespread resignation, any cultural habits that don’t serve collaboration and innovation are ultimately going to be detrimental to your bottom line. The impact of not addressing these habits is deteriorating team morale, increased burnout, and a growing lack of trust and connection required to collaborate in meaningful ways.

Four bad collaboration habits you can tackle today

So, what are the habits we need to address to improve collaboration in today’s hybrid world?

Here are four, along with recommended solutions, that I’ve observed during two decades of Agile coaching, professional facilitation, and management consulting.

Bad habit #1: Disorganized flow of information

It used to be relatively easy to pop over to a colleague’s desk to touch base on a task, quickly troubleshoot an issue, or follow up on an earlier conversation. And because of close physical proximity, it was also much easier to include other relevant parties.

This kind of impromptu collaboration is invaluable for fast-moving teams, but it’s been hard to find an effective replacement within a remote team without contributing to meeting overload. Any off-the-cuff chat between two remote employees tends to require finding spare time on a calendar. And if any part of that chat becomes relevant to someone not in the meeting, it’s not easy to pull them in because they may be in a different meeting.

All of this contributes to a very disjointed flow of information for remote teams. To combat it, teams often try to focus on cutting down on meetings in the name of communicating the most important information in larger group meetings. But this in turn can hamper teams from moving quickly by bottlenecking important information until “everyone is in the same room.”

For teams to be able to collaborate effectively, they need the right information at the right moment—and they shouldn’t have to twiddle their thumbs or schedule three separate meetings to get it.

Solution: Invest in better documentation and asynchronous coordination

If people are constantly having to schedule follow-up meetings to catch up on missed details, learn technical engineering processes, or understand the structure of a marketing program, it presents an opportunity to improve your documentation—and save yourself and others from having to schedule another meeting down the road.

If that sounds overwhelming, it doesn’t have to be! Documentation doesn’t need to be as formal as in the past. Instead of feeling like you have to invest a lot of time and energy creating documentation, you can use the approach of building an innovation repository.

With better documentation, instead of imposing on someone to find a time on their calendar to have a chat to resolve a problem, the onus can be on you to review the documentation and then follow up asynchronously with any gaps or additional questions you might have.

Bad habit #2: “One size fits all” collaboration

From the same study, a majority of the respondents felt that virtual meetings—especially those attended by both remote and in-person workers—are dominated by the loudest and most active voices. And we could see this during in-person meetings, too.

For example, many teams default to holding “brainstorming sessions” the same way each time: You have a loose topic you want to discuss, so you open it up to free-wheeling discussion for 30 or 60 minutes. The person who scheduled the meeting might be taking notes, or they might not. The team converges around the ideas of the people who spoke up first or most confidently (especially if they are in a leadership position), deferring to their vocal command of the room.

Yikes! For teams to collaborate effectively, we need to learn to not associate extroversion with engagement (or good business sense) and realize that there is more than one way to collaborate and participate as a teammate.

Solution: Make space for all voices to contribute

One effective way to avoid one-size-fits-all collaboration is to accommodate for common collaboration styles:

- Expressive: Some team members like to see ideas sketched out with drawings, graphics, and sticky notes, and are likely to express themselves with GIFs and emojis. Expressive collaborators may have trouble engaging in hybrid meetings dominated by text-heavy documents and rely more on unstructured discussion to feel their most creative.

- Relational: These collaborators gravitate toward technology that enables direct, human-to-human teamwork and connection. Fast-paced virtual meetings can feel draining to relational collaborators, so more intimate activities like team exercises or breakout sessions can help them surface their best ideas.

- Introspective: These naturally-introverted collaborators like to collect their thoughts before offering a suggestion, and gravitate toward more deliberate approaches to collaboration. They may be frustrated with virtual meetings that appear aimless or poorly facilitated, and they prefer to have a clear agenda and formalized processes for documenting follow-up.

Not everyone fits cleanly into one of these three categories, but the principle at hand is the same: You need to be open to different collaboration styles and check in regularly to make sure you’re not skewing these interactions toward one style over the others.

Bad habit #3: Optimizing meetings for in-office employees

Once when my siblings and I visited my parents, I noticed an interesting phenomenon. After a family dinner, we migrated toward the living room to chat and play games, but half an hour later, we paused and asked, “Wait, where’s mom?” Only then we realized she was cleaning up in the kitchen alone. Even though we were adults who knew better, we were unintentionally behaving like our once-teenager selves!

A similar thing often happens with hybrid teams: remote team members often get unintentionally left behind from social experiences that in-office employees are enjoying together.

This can happen in a variety of ways, especially with how teams approach collaboration. For example:

- Those physically present in a meeting overlook the need to adapt the conversation to equitably include those joining remotely. A meeting paced to in-office participants might move on from topics before someone who is remote has a chance to unmute and chime-in.

- Remote employees may miss the camaraderie-building chat that happens before and after meetings, such as discussion around where everyone is going for lunch, what they did that weekend, or a funny inside joke.

- In-office employees have the benefit of physical proximity to interpret body language, while remote employees may miss out on those more subtle cues or reactions.

When these things happen, “in groups” and “out groups” unintentionally form, making it hard to collaborate as an aligned, unified team.

Solution: Thoughtful facilitation and inclusion

Good facilitation helps create equal footing for all participants in collaborative meetings and can prevent remote employees from feeling like second-class citizens. To improve collaboration, facilitators might:

- Take responsibility to record the meeting for those who were unable to attend or may want to listen to the discussion again.

- Host a shared whiteboard or simple document for collaborative note-taking where participants may offer input and questions without coming off mute.

- Monitor the pacing of the meeting, making sure to pause when necessary, ask someone to repeat a comment that remote employees may not have caught, or seek engagement from someone that has yet to participate.

- Provide context and “room resets” to those joining late, or those who missed a pre-meeting chat, so that no one feels excluded.

It may not seem like a big deal, but going above and beyond in these ways is an important aspect of helping people feel like part of a whole—and that feeling helps them feel safe enough to contribute their best ideas.

Bad habit #4: Backchannel chat and gossip

Bias is at the core of much of the gossip that happens in offices. We make assumptions on the barest of information because we’re separated and only see faces across screens. When we’re all busy and exhausted, it becomes easy to turn a misunderstanding between coworkers into full-blown contempt for that person, quickly undermining the morale and connection of distributed teams.

And because it’s so easy to Slack someone a sarcastic comment during a company all-hands, or send that eye-roll emoji during a team meeting, we can easily pull people into our negativity, which isn’t fair to them or the person we’re creating gossip about.

Solution: Create a psychologically-safe culture that addresses conflict

It doesn’t matter whether it’s someone reheating fish in the office microwave or you feel a team member dropped the ball on a project– any conflict has the potential to derail a team’s interconnectivity and their ability to collaborate effectively.

The way to cut through that is to resist venting to our work friend. We need to address things head on! One easy place for this to happen is during retrospectives and post-mortems. With facilitation and a solid agenda in place, everyone on the team can air their frustrations and bring narratives into the light instead of keeping them in Slack DMs.

By treating each other courteously, and acknowledging that conflict is a natural part of working together, we can move past those issues and create stronger teams as a result.

Enacting change to improve collaboration

As a leader, it’s within your power to enact change. Making changes to cultural habits and patterns that have long plagued organizations is not a waste of time; it not only improves your team’s collaboration dynamics, but has an impact on your bottom line, as better collaboration means faster innovation.

By helping your teams have psychological safety, comfortability, and confidence that they can share their ideas and be treated as an equal on a team, you also give them permission to move quickly, trust their ideas, execute with autonomy, and grow your business.

MMS • Renato Losio

Article originally posted on InfoQ. Visit InfoQ

AWS recently announced that Event Ruler, the component managing the routing rules of Amazon EventBridge, is now open source. The project is a new option for developers in need to match lots of patterns, policies, or expressions against any amount of events in near real-time.

Written in Java, Event Ruler offers APIs for declaring pattern-matching rules, presenting data records (events) and finding out at scale which rules match each event, allowing developers to build applications that can match any number of rules against events at several hundred thousand events per second.

Events and rules are JSON objects, but rules additionally can be expressed through an inbuilt query language that helps describe custom matching patterns. For example, a JSON event of an image:

{

"Image" : {

"Width" : 800,

"Height" : 600,

"Title" : "View from 15th Floor",

"Thumbnail" : {

"Url" : "http://www.example.com/image/481989943",

"Height" : 125,

"Width" : 100

},

"Animated" : false,

"IDs" : [116, 943, 234, 38793]

}

}

can be filtered by a JSON rule that filters for static images only:

{

"Image": {

"Animated" : [ false ]

}

}

Source: https://aws.amazon.com/blogs/opensource/open-sourcing-event-ruler/

Rishi Baldawa, principal software engineer at AWS,,explains:

This offers a novel solution for anyone in need to match lots of patterns, policies, or expressions against any amount of events without compromising on speed. Whether the events are in single digits or several hundred thousand, you can route, filter, or compare them in near real-time against any traffic. This speed is mostly independent of the number of rules or the patterns you define within these rules.

Event Ruler is in production in multiple Amazon services, including Amazon EventBridge, the serverless event bus service that helps developers and architects to connect applications with data from a variety of sources. The new open source project includes features that are not yet available on the AWS managed service. Nick Smit, principal product manager for Amazon EventBridge at AWS, tweets:

You’ll notice it has some features such as $or, suffix match, and Equals-ignore-case, which are not yet in EventBridge. We plan to include those in future. Excited to see the new ideas the community will bring!

Talking about use cases for the new project in a “Hello, Ruler” article, Tim Bray, formerly VP and distinguished engineer at AWS and one of the developer behind Event Ruler, writes:

The software is widely used inside AWS. Will it be useful outside the cloud-infrastructure world? My bet is yes, because more and more apps use loosely-coupled event-driven interconnections. For example, I think there are probably a lot of Kafka applications where the consumers could be made more efficient by the application of this sort of high-performance declarative filtering. Yes, I know there are already predicates, but still.

A year after announcing OpenSearch, there are different new AWS open source projects and initiatives, mostly covered in the AWS Open Source Blog and the AWS open source newsletter, with many believing that AWS is improving its open source reputation. Bray adds:

AWS has benefited hugely from the use of open-source. So it is nice to see them giving something back, something built from scratch, something that does not particularly advantage AWS.

Event Ruler is available on GitHub under the Apache 2.0 license.

MMS • Suhail Patel

Article originally posted on InfoQ. Visit InfoQ

Transcript

Patel: I want to talk about curating the best set of tooling to build effective and delightful developer experiences. Here’s a connected call graph of every one of our microservices. Each edge represents a service calling another service via the network. We rely heavily on standardization and automated tooling to help build, test, deploy, and monitor, and iterate on each of these services. I want to showcase some of the tools that we’ve built to help us ship these services consistently and reliably to production.

Background Info

I’m Suhail. I’m one of the staff engineers from the platform group at Monzo. I work on the underlying platform powering the bank. We think of all of the complexities of scaling our infrastructure and building the right set of tools, so engineers in other teams can focus on building all the features that customers desire. For all those who haven’t heard about Monzo, we are a fully licensed and regulated UK bank. We have no physical branches. You manage all of your money and finances via our app. At Monzo, our goal is to make money work for everyone. We deal with the complexity to make money management easy for all of you. As I’m sure many will attest, banking is a complex industry, we undertake the complexity in our systems to give you a better experience as a customer. We also have this really nice and striking coral debit cards, which you might have seen around. They glow under UV light.

Monzo Chat

When I joined Monzo in mid-2018, one of the initiatives that was kicking off internally was Monzo chat. We have quite a lot of support folks to be a human point of contact for customer queries for things like replacing a card, reporting a transaction, and much more. We provided functionality within the Monzo app to initiate a chat conversation, similar to what you have in any messaging app. Behind the scenes, the live chat functionality was previously powered by Intercom, which is a software as a service support tool. It was really powerful and feature rich and was integrated deeply into the Monzo experience. Each support person had access to a custom internal browser extension, which gave extra Monzo specific superpowers as a sidebar within Intercom. You can see it on the right there showing the transaction history for this test user directly and seamlessly integrated into the Intercom interface. With the Monzo chat project, we were writing our own in-house chat system and custom support tooling. Originally, I was skeptical. There’s plenty of vendors out there that provided this functionality really well with a ton of customizability. It felt like a form of undifferentiated heavy lifting, a side quest that didn’t really advance our core mission of providing the best set of banking functionality to our customers.

Initially, the Monzo chat project invested heavily in providing a base level experience wrapped around a custom UI and backend. Messages could be sent back and forth. The support folks could do all of the base level actions they were able to do with Intercom, and the all useful sidebar was integrated right into the experience. A couple years in and on the same foundations, Monzo chat and the UI you see above, which we now call BizOps, has allowed us to do some really innovative things like experiment and integrate machine learning to understand the customer conversation and suggest a few actions to our customer operations staff, which they can pick and choose. Having control of each component in the viewport allows us to provide contextual actions for our customer operations folks. What was previously just a chat product has become much more interactive. If a customer writes in to say that they’ve lost their card, we can provide a one click action button to order a new card instantly and reassure the customer. We had this really nice UI within the BizOps Hub, and the amazing engineers that worked on it spent a bunch of time early on writing modularity into the system.

Each of the modules is called a weblet, and a weblet forms a task to be conducted within BizOps. The benefit of this modular architecture is that weblets can be developed and deployed independently. Teams aren’t blocked on each other, and a team can be responsible for the software lifecycle of their own weblets. This means that a UI and logic components can be customized, stitched together, and hooked up to a backend system. We’ve adopted the BizOps Hub for all sorts of internal back office tasks, and even things like peer reviewing certain engineering actions and monitoring security vulnerabilities. What was a strategic bet for more efficient customer operations tool has naturally become a centralized company hub for task oriented automation. In my mind, it’s one of the coolest products that I’ve worked with, and a key force multiplier for us as a company.

Monzo Tooling

You’re going to see this as a theme. We spent a lot of time building and operating our own tools from scratch, and leveraging open source tools with the deep integrations to help them fit into the Monzo ecosystem. Many of these tools are built with modularity in mind. We have a wide range of tools that we have built and provide to our engineers, things like service query, which analyzes a backend service and extracts information on the various usages of that particular service. Or this droid command that Android engineers have built to help with easier testing and debugging of our Android app during development.

Monzo Command Line Interface

One of the most ubiquitous tools across Monzo is our Monzo command line interface, or the PAN CLI as it’s known internally. The Monzo CLI allows engineers to call our backend services, manage service configuration, schedule operations, and interact with some of our infrastructure, and much more. For example, I can type a find command with a user ID, and get all the information relating to that particular user from our various internal APIs. I don’t need to go look up what those APIs are, or how they’re called, or what parameters are needed. Here, I’ve used the same find command, but with a merchant ID, and I automatically get information about the merchant. The CLI has all of that knowledge baked in on what IDs are handled by which internal API sources. Engineers add new internal API sources all of the time, and they are integrated automatically with the PAN CLI.

These tools don’t just function in isolation, behind the scenes, a lot of machinery kicks in. On our backend, we explicitly authenticate and authorize all of our requests to make sure that only data you are allowed to access for the scope of your work is accessible. We log all of these actions for auditing purposes. Sensitive actions don’t just automatically run, they will create a task for review in our BizOps Hub. If you were inclined, you could construct these requests done by the CLI tool by hand. You can find the correct endpoint for the service, get an authentication token, construct the right card request. To this day, I still need to look up the right card syntax, parse the output, and rinse and repeat. Imagine doing that in the heat of the moment when you’re debugging a complex incident.

Using the CLI tooling, we have various modules to expose bits of functionality that might constitute a chain of requests. For example, configuration management for our backend microservices is all handled via the PAN CLI. Engineers can set configuration keys and request peer review for sensitive or critical bits of configuration. I see many power users proud of their command line history of adaptable shell commands, however, write a small tool and everyone can contribute and everyone benefits. We have many engineering adjacent folks using the PAN CLI internally because of its ease of use.

Writing Interactive Command Line

Writing one of these interactive command line tools doesn’t need to be complicated. Here’s a little mini QCon London 2022 CLI tool that I’ve written. I wanted to see all of the amazing speaker tracks at QCon on offer. I’m using the built in command package within Python 3, which provides a framework for line oriented command interpreters. This gives a fully functioning interactive interpreter in under 10 lines of code. The framework is doing all of the heavy lifting, adding more commands is a matter of adding another do underscore function. It’s really neat. Let’s add two more commands to get the list of speakers and the entire schedule for the conference. I’ve hidden away some of the code to deal with HTML parsing of the QCon web page, but we can have a fully functional interactive command line interpreter in tens of lines of code. We have the entire speaker list and schedule accessible easily right from the command line. A sense of competition for the QCon mobile app. If you’re interested in the full code for this, you can find it at this link, https://bit.ly/37LLybz. Frameworks like these exist for most programming languages. Some might be built in like the command library for Python 3.

The Shipper CLI

Let’s move on to another one of our CLI tools. This is a tool that we call shipper. We deploy hundreds of times per day, every day. Shipper helps orchestrate the build and deployment step, providing a CLI to get code from the engineer’s fingertips into production. Engineers will typically develop their change and get it peer reviewed by the owning team for that particular service or system. Once that code is approved and all the various checks pass, they can merge that code into the mainline and use shipper to get it safely into production. Behind the scenes, shipper is orchestrating quite a lot of things. It runs a bunch of security pre-checks, making sure that the engineer has followed all of the process that they need to and all the CI checks have passed. It then brings the service code from GitHub into a clean build environment. It builds the relevant container images, pushes them to a private container registry, and sets up all the relevant Kubernetes manifests. Then kicks off a rolling deployment and monitors that deployment to completion. All of this gives confidence to the engineers that the system is guiding them through a rollout of their change. We abstract away all of the infrastructure complexity of dealing with coordinating deployments, dealing with things like Docker and writing Kubernetes YAML, behind a nice looking CLI tool. We can in the future change how we do things behind the scenes, as long as we maintain the same user experience.

We see the abstraction of infrastructure as a marker for success. Engineers can focus on building the product, knowing that the tooling is taking care of the rest. If you’re building CLI tools, consider writing them in a language like Go or Rust, which gives you a Binary Artifact. Being able to ship a binary and not have to worry about Python or Ruby versioning and dependencies, especially for non-engineering folks means there’s one less barrier to entry for adoption. There’s a large ecosystem for CLI tools in both languages. We use Go for all of our services, so naturally, we write our tools in Go too.

Monzo’s Big Bet on Microservices

Monzo has betted heavily on microservices, we have over 2000 microservices running in production. Many of these microservices are small and context bound to a specific function. This allows us to be flexible in scaling our services within our platform, but also within our organization as we grow teams and add more engineers. These services are responsible for the entire operation of the bank. Everything from connecting to payment networks, moving money, maintaining a ledger, fighting fraud and financial crime, and providing world class customer support. We provide all of the APIs to make money management easier, and much more. We integrate with loads of payment providers and facilitators to provide our banking experience, everything from MasterCard, and the Faster Payments scheme, to Apple and Google Pay. The list keeps growing as we expand. We’ve been at the forefront of initiatives like open banking. We’re expanding to the U.S., which means integrations with U.S. payment networks. Each of these integrations is unique and comes with its own set of complexities and scaling challenges. Each one needs to be reliable and scalable based on the usage that we see.

We have such a wide variety of services, you need a way to centralize information about what services exist, what functionality they implement, what team owns them, how critical they are, service dependencies, and even having the ability to cluster services within business specific systems. We’re quite fortunate. Early on, we standardized on having a single repository for all of our services. Even so, we were missing a layer of structured metadata encoding all of this information. We had CODEOWNERS defined within GitHub, system and criticality information as part of a service level README, and dependencies tracked via our metric system.

The Backstage Platform

Eighteen months ago, we started looking into Backstage. Backstage is a platform for building developer portals, open sourced by the folks at Spotify. In a nutshell, think of it as building a catalog of all the software you have, and having an interface to surface that catalog. This can include things like libraries, scripts, ML models, and more. For us to build this catalog, each of our microservices and libraries was seeded with a descriptor file. This is a YAML file that lives alongside the service code, which outlines the type of service, the service tier, system and owner information, and much more. This gave us an opportunity to define a canonical source of information for all this metadata that was previously spread across various files and systems. To stop this data from getting out of sync, we have a CI check that checks whether all data sources agree, failing if corrections are needed. This means we can rely on this data being accurate.

We have a component in our platform that slaps up all the descriptor files and populates the Backstage portal with our software catalog. From there, we know all the components that exist. It’s like a form of service discovery but for humans. We’ve spent quite a lot of time customizing Backstage to provide key information that’s relevant for our engineers. For example, we showcase the deployment history, service dependencies, documentation, and provide useful links to dashboards and escalation points. We use popular Backstage plugins like TechDocs to get our service level documentation into Backstage. This means all the README files are automatically available and rendered from markdown in a centralized location, which is super useful as an engineer.

One of the features I find the coolest is the excellent score. This is a custom feature that we’ve developed to help grade each of our services amongst some baseline criteria. We want to nudge engineers in setting up alerts and dashboards where appropriate. We provide nudges and useful information on how to achieve that. It’s really satisfying to be able to take a piece of software from a needs improvement score to excellent with clear and actionable steps. In these excellent scores, we want to encourage engineers to have great observability of their services. Within a microservice itself at Monzo, engineers focus on filling in the business logic for their service. Engineers are not rewriting core abstractions like marshaling of data or HTTP servers, or metrics for every single new service that they add. They can rely on a well-defined and tested set of libraries and tooling. All of these shared core layers provide batteries included metrics, logging, and tracing by default.

Observability

Every single Go service using our libraries gets a wealth of metrics and alarms built for free. Engineers can go to a common fully templated dashboard from the minute their new service is deployed, and see information about how long requests are taking, how many database queries are being done, and much more. This also feeds into our alerts. We have automated alerts for all services based on common metrics. Alerts are automatically routed to the right team, which owns that service, thanks to our software catalog feeding into the alerting system. That means we have good visibility and accurate ownership across our entire service graph. Currently, we’re rolling out a project to bring automated rollbacks to our deployment system. We can use these service level metrics being ingested into Prometheus to give us an indicator of a service potentially misbehaving at a new revision, and trigger an automated rollback if the error rate spikes. We do this by having gradual rollout tooling, deploying a single replica at a new version of a service, and directing a portion of traffic to that new version, and comparing against our stable version. Then, as we continue to roll out the new version of the service gradually, constantly checking our metrics until we’re at 100% rollout. We’re only using RPC based metrics right now, but we can potentially add other service specific indicators in the future.

Similarly, we’ve spent a lot of time on our backend to unify our RPC layer, which every service uses to communicate with each other. This means things like trace IDs are automatically propagated. From there, we can use technologies like OpenTracing and OpenTelemetry, and open source tools like Jaeger to provide rich traces of each service level hop. Our logs are also automatically indexed by trace ID into our centralized logging system, allowing engineers to filter request specific logging, which is super useful across service boundaries. This is important insight for us because a lot of services get involved in critical flows. Take for example, a customer using their card to pay for something. Quite a few distinct services get involved in real time whenever you make a transaction to contribute to the decision on whether a payment should be accepted. We can use tracing information to determine exactly what those services and RPCs were, how long they contributed to the overall decision time, how many database queries were involved, and much more info. This tracing information is coming back full circle back into the development loop. We use tracing information to collect all of the services and code paths involved for important critical processes within the company.

When engineers propose a change to a service, we indicate via an automated comment on their pull request if their code is part of an important path. This indicator gives a useful nudge at development time to consider algorithm complexity and scalability of a change. It’s one less bit of information for engineers to mentally retain, especially since this call graph is constantly evolving over time as we continue to add new features and capabilities.

Code Generation