Month: December 2022

MMS • Sam Newman

Article originally posted on InfoQ. Visit InfoQ

Transcript

Newman: Welcome to this virtual presentation of me talking about the pitfalls associated with getting the most out of microservices, and the cloud, or cloud native microservices, as we now call them. I’ve written books about microservices. You probably don’t care about that, because you’re all here to hear about interesting pitfalls, and also some tips about how to avoid the nasty problems associated with adopting microservices on the cloud. Also, some guidance about the good things that you can do to get the most out of what should be a fantastic combination. This combination of cloud native and microservices. Microservices which offer us so many possibilities. Many of you are making use of microservices to enable more autonomous teams able to operate without too much coordination and contention. Teams that own their own resources can make decisions about when they want to release a new version of their software. Reducing the release coordination in this way helps you go faster. It can improve your sense of autonomy, which in turn has benefits in terms of motivation, and employee morale, even. This allows us to ship software and get it into the hands of the users more quickly, and to get fast feedback about whether or not these things are working. These models nowadays are shifting towards a world where we’re looking for our teams to have full ownership of their IT assets: you build it, you run it, you ship it. It’s amazing how developers that have to also support their own software end up creating software that tends to page the support team much less frequently.

Why Have Cloud Native and Microservices Not Delivered?

Cloud native and microservices should have been a wonderful combination. It should have been brilliant. It should have been like Ant and Dec, or Laurel and Hardy, or Crypto and Bros. Instead, it’s ended up being much more like Boris and Brexit. Something that’s been really well marketed, but unfortunately has failed to deliver on some of its promises, has ended up costing us a lot more money than we thought, and left many of us feeling quite sick. Why have cloud native and microservices often not delivered on some of the promises that we might have for them? Many of these big enterprise initiatives you may have been involved with around these transformations have somehow failed to deliver. Part of this, I think, is about confusion. Let’s look at the CNCF landscape, and it’s easy to poke a degree of fun at this. This is a sign of success, really. This is all the different types of tools and products in the different sectors that the CNCF manages. This is a sign of success, but it’s a bewildering sign of success. How do you navigate this to pick out which pieces of the big CNCF toolkit you could use? It’s ok, because help is at hand: there is an interactive website now where you can navigate this space and try and find the thing you want. Although whenever I read this disclaimer, I have some other view in my head. Namely, this is going to cost me a lot of money, isn’t it? In terms of money, the CNCF landscape also talks about money quite a bit. It talks about how much money has been pumped into this sector. If we just look at the funding of $26 billion, where does all that money go? Obviously, a lot of that money goes into creating excellent and awesome products. A lot of that money also goes into marketing, which leads to confusion, dilution of terms, misunderstanding of core concepts, and increased confusion.

Undifferentiated Heavy Lifting – AWS (2006)

Back in 2006, AWS launched, in a way that was interesting. At the time, we didn’t really realize what was going to come, because at this point, we were dealing with our own physical infrastructure, we were having to rack and cable things ourselves. Along came AWS and said, no, we can do that for you. You can just rent out some machines. When you actually spoke to the people behind AWS about why they were doing this and why they thought it was beneficial, this term, undifferentiated heavy lifting, would come up again and again. This describes how Amazon thought about their world internally. We want autonomous teams to be able to focus on the delivery of their product, and we don’t want them to have to deal with busy work. It’s still heavy work, the heavy lifting, but your ability to do that work, to rack up servers, doesn’t differentiate what you’re doing compared to anybody else. Can we create something whereby you can offload a lot of that heavy lifting to other people that can do it better than you can? If you think about what AWS gave us, it gave us, hopefully, these capabilities, in the same way that Google Cloud does, or Azure, or a whole suite of other public cloud providers.

APIs

Really, when you distill down the important part of a lot of this, it was around the APIs they gave us. A few years ago, I went up to the north of England. It’s not necessarily quite as cold and inhospitable as this, but it definitely has better beer than the south. I was chatting to some developers at a company up there. I was doing a bit of a research visit, which I do every now and then. I was talking to them about what improvements they wanted to see happening in terms of the development environment that would make them more productive. This was just because I’m interested in what developers want. Also, I was going to feed some information back to CIOs, so they could get a sense of key things they could do to improve. These developers said, what we want is we’d love access to the cloud, we’d like to get onto the cloud, so that we don’t have to keep sending tickets. I went and spoke to the CIO, and the CIO says, we already have the cloud. I went back to the developers, I said, developers, you already have the cloud. They were confused by this.

I dug a bit deeper, and it turned out that this company had embraced the cloud in a rather interesting manner. What happens is, a developer, if they wanted to access a cloud resource, or spin up a VM, what they would actually do would be to raise a ticket using Jira. That Jira ticket would be picked up by someone in a sysadmin team, who would then go and click some buttons on the AWS console, and then send you back the details of your VM. Although the sysadmin side of this organization had adopted the public cloud for some value of the word adopted, from the point of view of the developers, nothing had changed. This is an odd thing to do. Using public cloud services, which give us such great ability around self-service, in this fashion is really bizarre. It’s like putting a wheel clamp on a hypercar. You could do it. Should you really do it?

Why Do Private Clouds Fail?

I’m a great fan of cherry picking statistics and surveys that confirm my own biases. I was very glad to find this survey done by Gartner at their data center conference several years ago. This is from people who went to a data center conference run by Gartner. That’s already an interesting subsection of the overall IT industry. They were asking, what is going wrong with your private clouds? Are your private cloud installs working, or are they not working? They found that only 5% of people thought it was going quite well. Most people found significant issues with how they were implementing private cloud. Really interestingly, the biggest finding amongst people who went to a data center conference run by Gartner was an acceptance that they’d been focusing on the wrong things. That by implementing a private cloud, they had been focusing on cost savings, rather than focusing on agility. Also, as part of that, not changing anything around how they operate, or how the funding models work. Thinking back to that previous example, this seemed to tie up. You adopted the public cloud, but didn’t really want to change any of the behaviors around it.

Pitfall 1: Not Enabling Self-Service

This leads to our very first pitfall around this whole story, and that’s not enabling self-service. If you want to create an organization, where teams have more autonomy, have the ability to think and execute faster, you need to give them the ability to self-service provision things like the infrastructure they’re going to run on. You need to empower teams to make the decisions and to get things done. AWS’s killer feature wasn’t per hour rented managed virtual machines, although they’re pretty good. It was this concept of self-service. A democratized access via an API. It wasn’t about rental, it was about empowerment. You only saw that benefit if you really changed your operating models.
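To make the contrast with the ticket queue concrete, here is a minimal sketch of self-service provisioning behind an API. Everything in it is hypothetical: `SelfServicePlatform`, `provision_vm`, the size names and the quota are illustrative assumptions, not any real cloud provider's API. The point is only that a team calls the API and gets a resource back straight away, with the guardrails checked in code rather than by a person working through a ticket.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class SelfServicePlatform:
    """Hypothetical in-memory stand-in for a self-service provisioning API."""

    allowed_sizes: tuple = ("small", "medium", "large")  # assumed guardrail
    quota_per_team: int = 5  # assumed guardrail
    _vms: dict = field(default_factory=dict)  # vm_id -> owning team
    _ids: count = field(default_factory=lambda: count(1))

    def provision_vm(self, team: str, size: str) -> str:
        """Create a VM record for the team immediately; no ticket involved."""
        if size not in self.allowed_sizes:
            raise ValueError(f"unknown size {size!r}; choose from {self.allowed_sizes}")
        owned = [vm for vm, owner in self._vms.items() if owner == team]
        if len(owned) >= self.quota_per_team:
            raise RuntimeError(f"team {team!r} has reached its quota of {self.quota_per_team}")
        vm_id = f"vm-{next(self._ids)}"
        self._vms[vm_id] = team
        return vm_id


platform = SelfServicePlatform()
vm_id = platform.provision_vm(team="payments", size="small")
print(vm_id)
```

The guardrails here are deliberately small. They stand in for whatever limits an organization genuinely needs, and they fail fast with a message the caller can act on.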

Top Tip: Trust Your People

A lot of the reasons I think that people don’t do this and don’t allow for self-service really come down to a simple issue: you need to trust your people. This is difficult, because for many of us, we’ve come from a more traditional IT structure, where you have very siloed organizations with very small, defined roles. You had to live in your box: the QA did the QA things, the DBA did the DBA things, the sysadmins did the sysadmin things. Then there’d be some yawning chasm of distrust over to the business who we were creating the software for. In this world of siloed roles and responsibilities, the idea of giving people more power is a bit odd. It doesn’t really fit. We’re moving away from this world. We’re breaking down these silos. We’re moving to more poly-skilled teams that can have full ownership over the software we’ve delivered.

Stream Aligned Teams

I urge all of you to go read the book, “Team Topologies,” which is giving us some terms and the vocabulary around these new types of organizations in the context of IT delivery. In the “Team Topologies” book, they talk about these ideas of stream aligned teams. Instead of thinking about short-lived project teams, you create a long-lived product oriented team who owns part of your domain. They are focused on delivering a valuable stream of work. Their work cuts across all those traditional layers of IT delivery. They’re not thinking about data in isolation from functionality, or thinking about a separate UI from the backend, you’re thinking holistically about the end-to-end delivery of functionality to the users of your software. This long-lived view is really important, because you get domain expertise, in terms of what you own. This is why microservices can be so valuable, because you can say, these microservices are owned by these different streams. That strong sense of ownership is also what gives you the ability to make decisions and execute without having to constantly coordinate with loads of other teams.

The issue, obviously, with this model is that there are lots of other things that need to happen. There’s other work that needs to be done to help these teams do their jobs. Traditionally, those kinds of roles would be done by separate siloed parts of the organization, and there was often a functional handoff. I had a need for a security review. I need a separate Ops team to provision my test environment or deploy into production. That work still needs to be done, and we can’t expect these teams to do all that work as well. Where does all that extra work go?

Enabling Teams

This is where the “Team Topologies” authors introduced the idea of enabling teams. You’ve got your stream aligned teams, and they’re your main path to focusing on delivery of functionality. What we need to do is to create teams that are going to support these stream aligned teams in doing their jobs, so we might have a cross-cutting architecture function, maybe frontend design, maybe security, or maybe the platform. These enabling teams exist to help the stream aligned teams do their job. We want to do whatever we can to remove impediments to help them get things done. This isn’t about creating barriers or silos. It’s about enablement. At this point, many of you who have got microservices are sitting there nodding, going, but that’s ok because I’ve got a platform.

Amazon Web Services (2009)

Let’s go back in time a bit further. Back in 2009, I found myself accidentally involved in helping create the first ever training courses for AWS. At this point, AWS itself were just saying, here’s our stuff, use it. They weren’t putting any effort into helping you use these tools well. I remember myself and Nick Hines, a colleague at the time, were having a chat with them. The AWS view was, “We’re like a utility. We just sell electricity.” Nick turned to them and said, “Yes, that’s all well and good, but your customers are constantly getting electrocuted.” Without good guidance about how to use these products well, you can end up making mistakes, and you may then end up blaming the tool itself. It’s amazing that still in 2022, I meet people that run entirely out of one availability zone, for example. To be fair to AWS, they spotted this gap and have plugged it very well. If you go along to the training and certification section that they run, and this is the same story for GCP, and Azure as well, you’ll see a massive ecosystem of people able to give you training on how to use these tools well. Because all of these vendors recognize that without that training and guidance, you won’t get the best out of them.
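The single availability zone mistake is the kind of thing a platform team can surface automatically. As a minimal sketch, assuming you can export an inventory of (service, zone) pairs from wherever your deployments are recorded, the hypothetical helper below lists the services that run in only one zone. The function name, the inventory shape, and the service and zone names are all made up for illustration.

```python
from collections import defaultdict


def single_zone_services(instances):
    """Return the names of services whose instances all sit in one zone.

    `instances` is an iterable of (service_name, availability_zone) pairs.
    """
    zones_by_service = defaultdict(set)
    for service, zone in instances:
        zones_by_service[service].add(zone)
    return sorted(name for name, zones in zones_by_service.items() if len(zones) < 2)


# Hypothetical inventory; the service and zone names are made up.
inventory = [
    ("checkout", "zone-a"),
    ("checkout", "zone-b"),
    ("search", "zone-a"),
    ("search", "zone-a"),
]
print(single_zone_services(inventory))  # ['search']
```

A report like this works best as guidance with a link to how to fix it, not as a gate that blocks deployment.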

Pitfall 2: Not Helping People Use the Tools Well

This is a lesson that we need to learn for our own tools that we provide to our developers. Having cool tools is not enough. You’ve got to help people use them. This is another common pitfall I see, people bring in all these tools and technology, here is some Kubernetes, and here is some Prometheus. Unless you’re doing some work to help people get the most out of those tools, you’re not really enabling them. If you’re somebody working on the platform team, your job isn’t just to build the platform. It’s actually to create something that enables other people to do their job. Are you spending time working with the teams using your platform? Are you listening to their needs? Are you giving them training when they need it? Are you actually spending time embedded with them on day-to-day delivery to understand where the friction points are? Because if you’re not doing these things, the platform can end up just becoming another silo.

Top Tip: Treat Your Microservices Platform like a Product

This leads me to my next tip, you should treat your platform like a product. Any good product that you create is going to involve outreach, is going to involve chatting to your customers, understanding what they need and what they want. This is the same thing with a platform. Inside an organization, talk to your developers. Talk to your testers. Talk to your security people. What is it that you need to do in whatever platform you deliver to help them do their job? This is all about creating a good developer experience. Although maybe the term developer experience should be delivery experience, because obviously, there are many more stakeholders than just the developers. Yes, that’s right, developers, there are people other than you out there. Think about that delivery experience if you’re the person helping drive the development of the platform team. I actually think this is a great place to have a proper full time product owner. Have a person who has got product management experience or product owner experience. Have them head that team up, and drive your roadmap based on the needs of your customers.

If you are the person providing that platform into your organization, it is your job to navigate this mess. Again, I don’t mean to beat up the CNCF at all. Absolutely not. This is a sign of success, but it is bewildering, trying to navigate this world. If we go and look at the public cloud vendors, we also see a huge array of new products and services being released all the time. AWS is in many ways the worst culprit. Again, a sign of success. It’s interesting that the easiest way to keep up with all the different things that AWS are launching is actually to go to a third party site. This particular site tracks the various different service offerings that are out there. I took this screenshot probably about three months ago. The number of products offered by AWS is constantly growing. As of 2021, they had as many as 285 individual services. That’s 285 individual services, many of which overlap with each other. Each of those services can have a vast array of different features. How are you supposed to navigate this landscape?

Top Tip: It’s Ok To Provide a Curated Experience

There is this idea that if I’m going to allow you to use a public cloud provider, like Azure, GCP, or AWS, and I want to let you do that in a self-service fashion, that I should just throw you in at the deep end and say, just go for it. No, you should train and support your people in using those tools well. It is also true that it’s ok to provide a curated experience. If you’re somebody working as one of the experts in that platform team that’s providing these services to the people building your microservices, you are the person who should be responsible for helping them navigate this landscape and curating the right platform for them. If you’re going to build your own private cloud, which is something I don’t tend to advise you to do, you might start with Kubernetes as your core. Then you’re going to have to pick the right pieces to create the right platform for your team, for your organization.

Governance

Another potential issue around the platform, though, comes in the shape of governance. Governance often gets a bad name. Partly, I feel, not because of what governance is, but because of how governance is often implemented. We see governance as a barrier. On the face of it, governance is an entirely acceptable and sensible thing that we should be doing. Governance is, simply put, deciding on how things should be done, and making sure that they are done. I think this is totally fine. In many small teams, you’re doing governance without ever realizing it. In a large scale organization, you have different governance that needs to take place. This is all governance is. How should things be done, and making sure that they are done. The problem comes when people, especially people who are more familiar with more centralized command and control models and who are being dragged kicking and screaming into the world of independent autonomous teams, ask, how do I use my skills to operate in this environment? Then they see the platform, and they go, I can use the platform to decide what people can do.

Pitfall 3: Trying To Implement Governance through Tooling

This leads to another pitfall. Suppose you say, what we’re going to do is basically use the platform as a way of enforcing what people do. We’re going to limit what you do. We’re going to stop you doing things. We’re going to do that through the platform, through the tools that we give you. The issue with that mindset is that the moment you say that, the next thing follows, which is you say, because if you use the platform, we know you’re doing the right thing, therefore, you must use the platform. Because if we enforce that you use the platform, we know you’ll be doing the right thing, and my job is done. This is another real problem with people who are adopting platforms. Enforcement of tools in this fashion really undermines the whole mindset around this. If you force people to use a platform, it’s not about enablement, it’s about control. Here’s the reality. If you make it hard for people to do their jobs, people are either going to bypass your controls into the world of shadow IT, or they’re going to leave. There are also the other people that will just put up with it. Often, those people have already been beaten down by other problems and issues in the organization.

I remember Werner Vogels telling a story many years ago. He was going into these Fortune 500 companies, early days of AWS, and they were trying to encourage these big U.S. companies to take AWS seriously. The CIOs would say things like, we’re never going to use a bookseller to run our compute. He said, “You already are.” He pulled out a list of all the people that worked at that company that were already being billed by AWS, paying for things on their corporate credit cards. People used AWS as a way of getting their job done without having to go through the traditional corporate controls. A lot of people are horrified by this idea. This is what we now call shadow IT. IT that isn’t centrally provisioned and isn’t centrally managed. Shadow IT is massive now and is growing. People want to get their jobs done, they find a way to get their job done. It’s almost like a logical manifestation of the world of SaaS. SaaS has made it so easy to provision software services that people are cutting out the middlemen.

In an environment like this, you can try and say, you’ve got to use the platform, and this is a way of stopping you doing stuff. Is it really going to stop people anyway? Because here’s the thing, those people that actually bypass your controls to get the job done, they’re motivated. They’ve gone out of their way to find a better solution to solve the problems that they’ve got, because they want to do the job. They’re motivated. These are the people you probably want to promote, or at the very least listen to and help. You don’t want to sideline them, or make it so difficult for them to do their job that they become demotivated and just go somewhere else. Governance is about deciding what should be done and making sure it is done. Part of that is being clear and communicating as to why you’re doing things in a certain way. If you explain why you want things done in a certain way, it’s going to be much easier for people to make the right decisions. It’s also completely appropriate for you to make it as easy as possible to do the right thing.

Top Tip: Provide a Paved Road

I love this metaphor of the paved road. It means creating an experience for your delivery teams that makes doing the right thing as easy as possible. We’ve laid a path out in front of you; if you just do these things, everything’s going to be absolutely fine and rosy. If you realize that the path isn’t quite right for you, you can head off into the woods yourself, but you’re going to have to do a bunch of work yourself. You’re still obligated to follow what should be done. You’re still accountable for the work you do, but you’re going to be a bit more on your own. In many situations, the paved road is all going to be gravy, but you can justify going into the woods in niche situations, and that’s ok.

Provide that paved road. Create an experience that makes it easy for people to do the right thing. If you make it easy for people to do the right thing and explain why those things are done in a certain way, you’ll also find that when people do need to go off that beaten path, they can be much more aware of what they’re doing and how that fits in. When you identify that people aren’t using your paved road, that becomes feedback back into your platform. What was it about what we gave them that didn’t help them do their job? Why did they go to this third party service? Is that actually something we’re not bothered about, and actually, their situation is niche enough that we don’t have to worry? Or does that speak to a gap in what we’re offering our customers? Our customers in this case being our fellow developers, and testers, and sysadmins, and everything else.

There are some great examples of companies doing things like this, creating not only a paved road, but also something that doubles as an educational tool. Sarah Wells has spoken before about the use of BizOps inside the “Financial Times,” which on the face of it looks almost like a service registry, but it goes further than that. It talks about certain levels of criticality, and what microservices and other types of software products need to deliver to reach those levels of criticality. If you want to be a platinum service you have to do this and this. A lot of those checks are automated. There are links you can go to, to find out how to solve those problems. At a glance, you can see what you should be doing, what you are doing, and get information about how to address those discrepancies. This isn’t the big stick. This is the paved road with guidance, with a map.
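The idea of criticality levels backed by automated checks and links to guidance can be sketched in a few lines. This is not how BizOps is implemented; it is a minimal illustration under stated assumptions. The tier names other than platinum, the check names, the `find_gaps` helper, and the example.com links are all hypothetical.

```python
# Hypothetical tiers: each one adds checks on top of the tier below it.
TIER_REQUIREMENTS = {
    "bronze": ["has_owner"],
    "gold": ["has_owner", "has_runbook"],
    "platinum": ["has_owner", "has_runbook", "multi_region"],
}

# Hypothetical guidance links; example.com is a placeholder domain.
GUIDANCE = {
    "has_owner": "https://example.com/guides/ownership",
    "has_runbook": "https://example.com/guides/runbooks",
    "multi_region": "https://example.com/guides/multi-region",
}


def find_gaps(service: dict, tier: str) -> dict:
    """Map each check the service fails for the target tier to its guidance link."""
    return {
        check: GUIDANCE[check]
        for check in TIER_REQUIREMENTS[tier]
        if not service.get(check, False)
    }


# Hypothetical registry entry for one service.
service = {"name": "article-api", "has_owner": True, "has_runbook": False}
for check, link in find_gaps(service, "platinum").items():
    print(f"{check}: see {link}")
```

Each failed check comes back paired with a link, so the report tells a team what to do next as well as what is missing.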

Top Tip: Make the Platform Optional

This leads us on to maybe one of the more controversial tips that I’m going to give you. This is actually a piece of advice that comes straight from the “Team Topologies” book. Many of you who run a platform might be quite worried by this idea, but it’s this, you should make the platform you give to your microservice teams, optional. This is scary. Why would I make it optional? Partly, because of reasons I’ve already talked about. We don’t want to just put arbitrary barriers in front of people. If we really want to create independent, autonomous teams that are focused on delivering their functionality, they might have real needs that aren’t met by the platform. If we force them to use the platform, and all aspects of the platform, we’re actually effectively undermining their ability to make decisions that are best for them. That’s part of it. Absolutely.

There’s another thing which is a little bit more insidious. If you make the platform optional, it means that the owners of the platform are going to be focused on ease of use. If everyone has to use a platform, and that’s mandated, it’s very easy for the platform team to stop caring about what it’s like to use, about that delivery experience. Whereas if the platform is optional, then one of the key things that’s going to be driving how successful your platform is viewed is how many people are using it. By making it optional, you will go out of your way to make sure that it’s easy to use and easy to adopt. It triggers you into doing that outreach, aside from also, of course, enabling this self-service where it’s warranted.

Summary (Pitfalls)

The big pitfall we started off with was giving people these awesome tools, these awesome platforms, without enabling self-service. The second, once you’ve given people these tools that maybe do allow for self-service, is not helping people use those tools well. Then, finally, we talked about the challenges of trying to implement governance, or enforcement around governance, through the tooling, and all the pitfalls associated with that.

Summary (Top Tips)

Firstly, and really importantly, you’ve got to understand that you need to trust your people. This might be difficult, but fundamentally, this is where a lot of the journey starts from: trust your people. I didn’t say verify. Verification is also useful. You should start with trusting. You need to treat your platform like a product. You need to treat the people that use that product like users. Understand what they want, do the outreach. As part of that platform, it is ok to deliver a curated experience. Make it easy for people to navigate their world. This lines up really nicely with this idea of the paved road. The paved road helps deliver the things that people need most of the time. Finally, but maybe controversially, make the platform optional. Making the platform optional signals that it is ok to use alternative products where warranted. Also, it makes sure the team that builds a platform is going to be focused on making that platform as easy to use as possible.

If we need to distill all of it down, when I’ve looked back at these different companies I’ve worked at and people I’ve chatted to, it still feels that so many people are using the cloud without really using it. Many of us have bought the hypercar and stuck the wheel clamp on it. Taking that wheel clamp off all starts with trusting your people.

Resources

There’s more information about what I do over at my website, https://samnewman.io, including information about my latest book, the second edition of, “Building Microservices.”


Article: The Most Common Developer Challenges That Prevent a Change Mindset—and How to Tackle Them

MMS • Shree Mijarguttu

Article originally posted on InfoQ. Visit InfoQ

Key Takeaways

- Encourage a culture of being hungry to learn: When guiding an engineering team, you’ve got to encourage constant growth and learning, like exploring different tech stacks.

- Larger companies and corporations may not expect developers to master several tech stacks—but startups do. What can we learn from those environments?

- Give yourself and your team a window of time to work out certain problems, then move on. Developers work for long periods of time on difficult tasks with slow progress.

- Believe in an approach where everyone in the organization is responsible for developing and maintaining a culture of opportunity.

- Build a business-impact-first mindset, which means treating technology as an enabler, not a driving force.

Some 83% of developers stated they are feeling burnout, and that’s because many aspects of their jobs are preventing a flexible mindset.

The truth is, being a software developer is synonymous with the continuous cycle of tight deadlines. Programming is a creative profession, yet much of our time is spent working on difficult tasks that slow down productivity: It takes away the joy from day-to-day projects, resulting in frustration.

There’s often pressure to solve last-minute defects and stick to set timeframes, but another stress is the ever-growing digital world.

New technologies entering the space are becoming essential for software developers overnight. So, to succeed in this fast-paced industry, they better stay on top of the latest tools.

This is where chief technology officers (CTOs) or senior leaders overseeing developer teams can help shift old patterns, ignite a growth mentality, and teach developers to be flexible and agile. In other words, a change mindset.

This buzzword is used across many industries and spoken about in publications like HBR and Forbes, among others.

The idea is that we have to stop waiting to be forced into change, and instead be ahead of the game, when we live in a rapidly evolving world. In his book Think Again, Adam Grant writes:

“… We need to spend as much time rethinking as we do thinking.”

So, here’s what obstacles software engineering teams face and what to do to cultivate a change mentality.

The common pitfalls

A phrase that particularly rings true with software engineering when talking about mindset is:

“Your team is only as strong as its weakest member.”

One negative team member slacking can throw a spanner in the works, demoralizing and demotivating the rest of the team. Watch out for team members who make excuses for not getting work done, don’t accept their own shortcomings, and make others doubt their own work – they could quickly lower productivity.

It’s also common knowledge that the more automation a company has, the more likely it will free up developers’ time to focus on digital innovation that benefits customers. This is one of the ideas behind BOS Framework, a product that I am proud to be one of the engineers of.

Still, today, developers spend forever doing manual tasks, especially when troubleshooting. There are multiple tools out there that enterprises are slow to embrace, like code editors, defect trackers, and even Kubernetes, an open-source system helping automate applications’ deployment and operations.

And another factor is unrealistic deadlines, which impact software engineers’ work-life balance, and their motivation can plummet as a result. The best investment for developers is to invest in their own development, especially in this ever-evolving technical landscape. But when working overtime and bogged down with manual tasks, it’s no wonder developers lack the motivation to take up new skills.

All these aspects impact dev teams’ change mindset. So what can team leads do about it?

Encourage a culture of being hungry to learn

Satya Nadella, CEO at Microsoft, initiated the “tech giant’s cultural refresh with a new emphasis on continuous learning” to change employees’ behavioral habits. He called it moving from being a group of “know-it-alls” to “learn-it-alls.”

When fresh talent appears every second, those “oldies but goodies” in the industry with 10+ years of developer experience may get left behind if they don’t up their game too.

Therefore, when guiding an engineering team, you’ve got to encourage constant growth and build learning into the daily routine. Start with quizzes, guides, and games and end with offering developers opportunities to work on projects with different tech stacks – a forcing mechanism to expand their knowledge.

For example, if an employee says they have become an AWS-certified architect, that’s wonderful. At our company, we suggest they also look at other cloud platforms to be more grounded in their architectural ability. This, of course, needs to be a mutual agreement between the two parties and not forced on the employee.

Ultimately, if your team focuses too much on certain tools without looking at other options, you could quickly become outdated and stop your company from innovating. In companies that build a culture of hunger for learning, developers stay ahead of the curve and find the best solutions for users and stakeholders.

Learn from startup environments

Larger companies and corporations may not expect developers to master several tech stacks simultaneously – but startups do. So what can we learn from those environments?

Steve Jobs was already ahead of the game by organizing Apple like a startup for improved collaboration and teamwork. He referred to Apple as the “biggest startup on the planet,” where you could trust colleagues to come through on their side of the bargain without watching them all the time or having corporate committees.

The biggest advantage of working in a startup or smaller company is that you can wear many hats and learn about every aspect of the company. I joined my current job right out of college as a Software Engineering Intern and have been with them since. Over the past 10 years, I worked with many technologies, from web and mobile development to databases, IoT applications, and data science projects. From this experience, I quickly built a base of knowledge for various technology stacks and noticed the nuances of each one.

Developers often get attached to specific tools and want to apply them to everything. But if you just have a hammer, you treat everything like a nail. Working at a startup is an exciting opportunity for developers to build from scratch, pitch crazy ideas, and be part of a team attempting to solve complex problems.

It’s not like being a regular employee; you are part of something bigger and crafting a company’s future. Developers in these environments can often stop being lone rangers and learn to communicate effectively across various departments.

Therefore, when building a change mindset in a dev team, look to startups for inspiration and view startup experience on a CV as a plus. And if you already are a startup, learning is limitless – so look to competitors.

Work on problems for a certain time

Give yourself and your team a window of time to work out certain problems, then move on to something new. Developers’ change mindset is often inhibited as they work for hours and hours on difficult tasks or get stuck on bugs with slow progress.

“It’s part of the job,” they’d say. However, I have a clear rule: If your team spends more than four hours on a problem, get them to take a walk. Then, they can come back to it with a mindset of change. If they spend another four hours on something with little traction, it’s time for them to reach out to their peers; somebody is bound to have an answer. If developers learn to open themselves up to other team members’ advice, it will stimulate – yet again – a change in mindset.

This is also why developers and engineers should never fall in love with only one programming language or technology; they’ve got to keep moving. The sky is the limit if they always investigate alternative solutions – that’s the basic expectation of engineers.

Believe in an approach where everyone is responsible

Everyone in a dev team should believe in the mission and vision of the organization where they work. But they should also be equally concerned about building and maintaining a culture of opportunity and transformation. This is a shared responsibility that relies on every single developer’s contribution.

The unofficial agreement is that companies are responsible for giving employees opportunities on a plate. I’ll always be thankful to my seniors for pushing me to test myself and try new things, like training airmen in software development and data science at the Department of Defense.

There are always appropriate moments to force incredible opportunities onto team members.

However, a top-down approach to building culture doesn’t always work: I believe in creating an organizational culture where everyone shares accountability. Culture should be adaptable, not just established by leaders. And employees must also show they are willing to explore and be adventurous; this is the difference between a good employee and an outstanding one.

Before going remote, every Friday at the workplace, we would pick a team member to choose a topic – anything in the field of software development, technology, cloud, and CI/CD – and educate the entire team. It put developers out of their comfort zone and helped other team members learn something new every week.

Tech team leaders shouldn’t just expect developers to follow a culture set in stone. Instead, they should allocate resources to ensure employees understand it, vet it, uphold its principles, and add to it. That way, developers are encouraged to think for themselves and criticize, boosting that much-needed change mindset.

Build a business-impact-first mindset

Change in an engineering team also comes from cultivating a business-impact-first frame of mind – one of the main pillars of success at BOS Framework and the philosophy on which I was trained. In other words, it means an ingrained culture where developers speak both business and engineering languages, viewing technology as a tool to achieve business outcomes.

This is because engineers with an entrepreneurial mindset will love to get it right while getting it done. Obviously, a stark over-exaggeration, but engineers are mostly perfectionists, while entrepreneurs don’t have time to overthink, are better at delegating, and learn things just in time. Engineering teams must not forget that their projects should have a commercial end.

Engineering leads must build a business case for every project that allows non-technical and business stakeholders to weigh in and inform decisions. Meanwhile, developers must start communicating with business stakeholders, peers, and other departments in corporate jargon to deliver tech-enabled business impact. Bridging the gap between product stakeholders and development teams helped me progress in my career and adapt to new roles more easily.

Developers can get stuck in an endless loop where they can’t remember the last time they learned new things at their job or impacted company culture. That’s why building a change-ready mindset in your company is essential to help break career plateaus: It puts team members at the core of processes, says no to staying with one tech stack for too long, and encourages constant progression.

MMS • Steef-Jan Wiggers

Article originally posted on InfoQ. Visit InfoQ

Recently TriggerMesh, a cloud-native integration platform provider, announced Shaker, a new open-source AWS EventBridge alternative project that captures, transforms, and delivers events from many out-of-the-box and custom event sources in a unified manner.

The Shaker project provides a unified way to work with events using the CloudEvents specification. It can be used with event sources and targets in AWS, Azure and GCP, Kafka, or HTTP webhooks. In addition, it includes a transformation engine based on a simple DSL, which can be controlled via code-based transformations if necessary.
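To make the CloudEvents framing concrete, here is a minimal Python sketch of an event envelope. The four required context attributes (`specversion`, `id`, `source`, `type`) come from the CloudEvents 1.0 specification. The helper name `make_cloud_event`, the source URI, and the event type used in the usage note are made up for illustration and are not taken from TriggerMesh's documentation.

```python
import json
import uuid
from datetime import datetime, timezone


def make_cloud_event(source, event_type, data):
    """Wrap a payload in a CloudEvents 1.0 envelope (JSON structured mode)."""
    return {
        "specversion": "1.0",                            # required
        "id": str(uuid.uuid4()),                         # required, unique per source
        "source": source,                                # required, a URI-reference
        "type": event_type,                              # required
        "time": datetime.now(timezone.utc).isoformat(),  # optional
        "datacontenttype": "application/json",           # optional
        "data": data,                                    # the domain payload itself
    }


def to_json(event):
    """Serialize the envelope so it can be sent to any HTTP or Kafka target."""
    return json.dumps(event, sort_keys=True)
```

For example, `to_json(make_cloud_event("/orders/service", "com.example.order.created", {"orderId": 42}))` yields one self-describing JSON document that a router can filter on `type` or `source` without knowing which cloud produced it.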

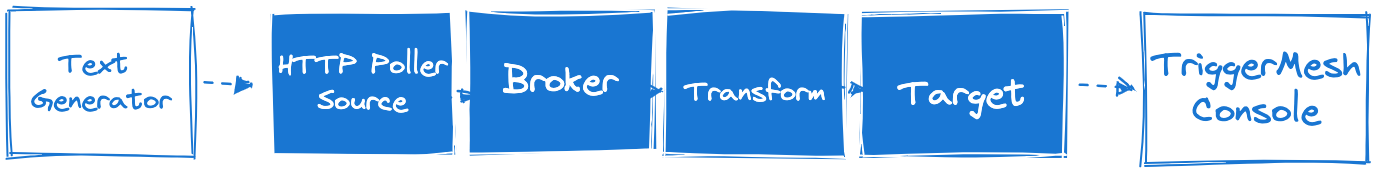

With TriggerMesh’s command line interface, tmctl, developers can create, configure and run TriggerMesh on a machine with Docker. Next, they optionally leverage TriggerMesh’s Bumblebee transformation component and route to an external target.

Source: https://docs.triggermesh.io/get-started/quickstart/

Jonathan Michaux, a Product Manager at TriggerMesh, explains in a blog post:

TriggerMesh is designed to be cloud-agnostic, in fact, it works brilliantly to connect different clouds as well as on-premises together. It can run anywhere because all the functionality is provided as containers that can be declaratively configured. tmctl makes it easy to run these containers on Docker, and the TriggerMesh CRDs and controllers will run them natively on any Kubernetes distribution. This means Shaker is easy to embed into existing projects, such as internal developer platforms or commercial SaaS software, and can be operated in the same way as any other containerized workloads.

With Shaker, the company is aiming for DevOps, SREs, and platform engineers looking for a one-stop shop to produce and consume events to build real-time applications. It is similar to AWS EventBridge capabilities; however, it is open-source and can run anywhere that supports Docker or Kubernetes. In addition, it is designed to capture events from all cloud providers and SaaS or custom applications.

TriggerMesh co-founder and CEO Sebastien Goasguen told InfoQ:

As AWS announced AWS EventBridge Pipes for point-to-point integration, TriggerMesh Shaker provides an open-source alternative that can run on GCP, Azure, or Digital Ocean with a set of event producers and consumers from each of those major Cloud providers.

In addition, Kate Holterhoff, an analyst at RedMonk, said in a press release:

The paradigm of event-driven architecture has become increasingly important to the process of application development and enterprise modernization. The Shaker project from TriggerMesh is an open-source solution for developers and platform teams to unify events across disparate sources and connect their own platforms to new event sources.

MMS • Sergio De Simone

Article originally posted on InfoQ. Visit InfoQ

Part of the upcoming Grafana Tempo 2.0, TraceQL is a query language aiming to make it simple to interactively search and extract traces. This will speed up the process of diagnosing and responding to root causes, says Grafana.

Distributed traces contain a wealth of information that can help you track down bugs, identify root cause, analyze performance, and more. And while tools like auto-instrumentation make it easy to start capturing data, it can be much harder to extract value from that data.

According to Grafana, existing tracing solutions are not flexible enough when it comes to searching traces if you do not know exactly which traces you need or if you want to reconstruct the context of a chain of events. That is why TraceQL has been designed from the ground up to work with traces. The following example shows how you can find traces corresponding to database insert operations whose average duration exceeded one second:

{ .db.statement =~ "INSERT.*"} | avg(duration) > 1s

TraceQL can select traces using spans, timing, and durations; aggregate data from the spans in a trace; and use structural relationships between spans. A query is built as a set of chained expressions that select or discard spansets, e.g.:

{ .http.status = 200 } | by(.namespace) | count() > 3
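As a rough mental model only, the three pipeline stages of that query can be mimicked in plain Python over the spans of a single trace. This is not how Tempo evaluates TraceQL; the span dictionaries, their attribute keys, and the function name `filter_spansets` are assumptions made for this sketch.

```python
from collections import defaultdict


def filter_spansets(spans, min_count=3):
    """Mimic `{ .http.status = 200 } | by(.namespace) | count() > 3` for one trace."""
    # Stage 1: the span selector keeps only spans whose attribute matches.
    selected = [span for span in spans if span.get("http.status") == 200]

    # Stage 2: by(.namespace) splits the spanset into one spanset per value.
    grouped = defaultdict(list)
    for span in selected:
        grouped[span.get("namespace")].append(span)

    # Stage 3: count() > 3 discards spansets that are too small.
    return {ns: group for ns, group in grouped.items() if len(group) > min_count}
```

Each stage takes spansets in and hands spansets on, which is why the stages can be chained with `|` in the query language.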

It supports attribute fields, expressions including fields, combining spansets and aggregating them, grouping, pipelining, and more. The next example shows how you can filter all traces that crossed two regions in a specific order:

{ .region = "eu-west-0" } >> { .region = "eu-west-1" }

TraceQL is data-type aware, meaning you can express queries in terms of text, integers, and other data types. Additionally, TraceQL supports the new Apache Parquet-compatible storage format in Tempo 2.0. Parquet is a columnar data file format that is supported by a number of databases and analytics tools.

As mentioned, TraceQL will be part of Tempo 2.0, which will be released in the coming weeks, but it can also be previewed in Grafana 9.3.1.

MMS • Phil Estes

Article originally posted on InfoQ. Visit InfoQ

Introduction

Estes: This is Phil Estes. I’m a Principal Engineer at AWS. My job is to help you understand and demystify the state of APIs in the container ecosystem. This is maybe a little more difficult task than usual because there’s not one clear, overarching component when we talk about containers. There’s runtimes. There’s Kubernetes. There’s OCI and runC. Hopefully, we’ll look at these different layers and make it practical as well that you can see where APIs exist, how vendors and integrators are plugging into various aspects of how containers work in runtimes, and Kubernetes.

Developers, Developers, Developers

It’s pretty much impossible to have a modern discussion about containers without talking about Docker. Docker came on the scene in 2013, with hugely increased interest and use in 2014, mainly among developers. Developers loved the concept, and the abstraction that Docker had put around this varied set of Linux kernel capabilities, and really fell in love with the simplicity of its command line: docker build, docker push, docker run. Of course, again, command lines can be scripted and automated, but it’s important to know that this command line has always been a lightweight client.

The Docker engine itself is listening on a socket, and clearly defines an HTTP based REST API. Every command that you run in the Docker client is calling one or more of these REST APIs to actually do the work, whether that is to start your container or to pull or push an image to a registry. Usually, this is local. Again, to many early users of Docker, you just assumed that your docker run was instantly creating a process on your Linux machine or cloud instance, but it was really calling over this remote API. Again, on a Linux system that would be local, but it could be remote over TCP, or a much better way was added more recently to tunnel that over SSH if you really need to be remote from the Docker engine. The important fact here is that Docker has always been built around an API. That API has matured over the years.
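To illustrate that the command line is only a client, here is a minimal Python sketch that speaks HTTP to the engine over its Unix socket using nothing but the standard library. It assumes the common default socket path `/var/run/docker.sock` and the `GET /containers/json` endpoint of the Docker Engine API; the class and function names are invented for this example, and an official SDK would normally be the better choice.

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that talks over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=5.0):
        # "localhost" only fills the Host header; the socket path picks the peer.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def engine_get(path, socket_path="/var/run/docker.sock"):
    """Issue a GET against the engine API and decode the JSON body."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        return response.status, json.loads(response.read())
    finally:
        conn.close()


def summarize(containers):
    """Reduce a /containers/json payload to (short id, image, state) tuples."""
    return [(c["Id"][:12], c["Image"], c["State"]) for c in containers]
```

On a host with a running engine and permission to read the socket, `summarize(engine_get("/containers/json")[1])` returns roughly the information that `docker ps` prints.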

APIs are where we enable integration and automation. It’s great to have a command line, developers love it. As you mature your tooling and your security stack and your monitoring, the API has been the place where there have been other language clients created, Python API for Docker containers, and so on. Really, much of the enablement around vendor technology and runtime security tools, all these things have been enabled by that initial API that Docker created for the Docker engine.

What’s Behind the Docker API?

It will be good for us to understand the key concepts that were behind that API. There are three really key concepts that I want us to start to understand, and we’ll see how they affect even higher layer uses via other abstractions like Kubernetes today. The first one is what I’m going to call the heart of a container, and that’s the JSON representation of its configuration. If you ever use the docker inspect command, you’ve seen Docker’s view of that. Effectively, you have things like the command to run, maybe some cgroups resource limits or settings, various things about the isolation level. Do you want its own PID namespace? Do you want the PID namespace of the host? Are you going to attach volumes, environment variables? All this is wrapped up in this configuration object. Around that is an image bundle. This has image metadata, the layers, the actual file system.

Many of you know that if you use a build tool or use something like docker build, it assembles layers of content that are usually used with a copy-on-write file system at runtime to assemble these layers into what you think of as the root file system of your image. This is what’s built and pushed and pulled from registries. This image bundle has references to this configuration object and all the layers and possibly some labels or annotations. The third concept is not so much an object or another representation, but the actual registry protocol itself. This is again separate from the Docker API. There’s an HTTP based API to talk to an image registry to query or inspect or push content to a remote endpoint. For many, in the early days, this equated to Docker Hub. There are many implementations of the distribution protocol today, and many hosted registries by effectively every cloud provider out there.

The Open Container Initiative (OCI)

The Open Container Initiative was created in 2015 to make sure that this whole space of containers and runtimes and registries didn’t fragment into a bunch of different ideas about what these things meant, and to standardize effectively around these concepts we just discussed that relate to the Docker API and the Docker implementation. That configuration we talked about became the runtime spec in the OCI. The image bundle became the core of what is now the image spec. That registry API, which wasn’t part of the initial charter of the OCI, has more recently been formalized into the distribution spec. You’ll see that even though there are many other runtimes than Docker today, almost all of them are conformant to these three OCI specifications.

There’s ways to check that and validate that. The OCI community continues to innovate and develop around these specifications. In addition, the OCI has a runtime implementation that can parse and understand that runtime spec and turn it into an isolated process on Linux. That implementation, many of you would know as runC. runC was created out of some of the core underlying operating system interfaces that were in the Docker engine. They were brought out of the engine, contributed to the OCI, and became runC today. Many of you might recognize the term libcontainer, most of that libcontainer code base is what became runC.
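As a sketch of what that configuration object looks like once standardized, the Python below assembles a trimmed-down document in the shape of the OCI runtime spec's `config.json`, which a runtime such as runC reads from a bundle directory. The field names (`ociVersion`, `process`, `root`, `linux.namespaces`, `linux.resources.memory.limit`) follow the runtime spec; the helper name and its parameters are invented here, and a real config carries many more fields than shown.

```python
import json


def build_runtime_config(args, env=None, own_pid_namespace=True, memory_limit_bytes=None):
    """Assemble a minimal, illustrative OCI runtime-spec style configuration."""
    namespaces = [{"type": "mount"}]
    if own_pid_namespace:
        # Omitting this entry means the container shares the PID namespace
        # of whatever process invoked the runtime (typically the host's).
        namespaces.append({"type": "pid"})

    config = {
        "ociVersion": "1.0.2",
        "process": {"args": list(args), "env": list(env or []), "cwd": "/"},
        "root": {"path": "rootfs"},  # root file system, relative to the bundle
        "linux": {"namespaces": namespaces},
    }
    if memory_limit_bytes is not None:
        # cgroup memory limit, expressed in bytes.
        config["linux"]["resources"] = {"memory": {"limit": memory_limit_bytes}}
    return config


def render(config):
    """Serialize the configuration the way it would sit on disk as config.json."""
    return json.dumps(config, indent=2)
```

The questions from earlier in the talk (which command, which namespaces, which resource limits) each map onto one key of this document, which is what lets different runtimes consume the same description.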

What about an API for Containers?

At this point, you might say, I understand about the OCI specs and the standardization that’s happened, but I still don’t see a common API for containers. You’d be correct. The OCI did not create a standardized API for container lifecycle. The runC command line may be a de facto standard. There have been other implementations of the runC command line, therefore allowing someone to replace runC at the bottom of a container stack and have other capabilities. That’s not really a clearly defined API for containers. So far, all we’ve seen is that Docker has an API, and we now have some standards around those core concepts and principles that allow there to be commonality and interoperability among various runtimes. Before we try and answer this question, we need to go a bit further in our journey and talk a little bit more than just about container runtimes.

We can see that Docker provided a solid answer for handling the container lifecycle on a single node. Almost as soon as Docker became popular, the use of containers in production showed that really at scale, users needed ways to orchestrate containers. Just as fast as Docker had become popular, now there are a bunch of popular orchestration ideas, everything from Nomad, to Mesos, to Kubernetes, and Docker even creating Docker Swarm to offer their own ideas about orchestration. Really, at this point, we have to dive into what it means to orchestrate containers, and not just talk about running containers on a single node.

Kubernetes

While it might be fun to dive in and try and talk about the pros and cons of various ideas that were hashed around during the “orchestration wars,” effectively, we only have time to discuss Kubernetes, the heavyweight in the room. The Cloud Native Computing Foundation was formed around Kubernetes as its first capstone project. We know the use of Kubernetes is extremely broad in our industry. It continues to gain significant amounts of investment from cloud providers, from integrations of vendors of all kinds. The CNCF landscape continues to grow dramatically, year-over-year. Our focus is going to be on Kubernetes just given that and the fact that we’re continuing to dive into what are the common APIs and API use around containers.

When we talk about orchestration, it really makes sense to talk about Kubernetes. There’s two key aspects since we’re talking about APIs that I’d like for us to understand. One coming from the client side is the Kubernetes API. We’re showing one piece of the broader Kubernetes control plane known as the API server. That API server has an endpoint that listens for the Kubernetes API, again, a REST API over HTTP. Many of you, if you’re a Kubernetes user would use it via the kubectl tool. You could also curl that endpoint or use other tools, which have been written to talk to the Kubernetes API server.
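Because the API is plain REST, the URL layout is worth seeing once. The following Python sketch builds resource paths the way the API server lays them out: the core group under `/api/v1`, named groups under `/apis/<group>/<version>`. The function name `resource_path` and its parameters are made up for illustration; only the path conventions come from Kubernetes.

```python
def resource_path(resource, namespace=None, name=None, group="", version="v1"):
    """Build the REST path for a Kubernetes resource collection or object."""
    # The legacy "core" group lives under /api; every named group under /apis.
    parts = ["/api", version] if group == "" else ["/apis", group, version]
    if namespace is not None:
        # Namespaced resources are nested under their namespace.
        parts += ["namespaces", namespace]
    parts.append(resource)
    if name is not None:
        parts.append(name)
    return "/".join(parts)
```

`resource_path("pods", namespace="default")` gives `/api/v1/namespaces/default/pods`, which is the same path a `kubectl get pods` in that namespace ends up requesting, and the same one you could pass to curl together with the cluster's address and credentials.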

At the other end of the spectrum, I want to talk a little bit more about the kubelet, this node specific daemon that’s listening to the API server for the placement of actual containers and pods. We’re going to talk about how the kubelet talks to an actual container runtime, and that happens over gRPC. Any container runtime that wants to be plugged into Kubernetes implements something known as the container runtime interface.

Kubernetes API

First, let’s talk a little bit more about the Kubernetes API. This API server is really a key component of the control plane, and how clients and tools interact with the Kubernetes objects. We’ve already mentioned, it’s a REST API over HTTP. You probably recognize if you’ve been around Kubernetes, or even gone to a 101 Kubernetes talk or workshop, there are a set of common objects, things like pods, and services, and daemon sets, and many others, and these are all represented in a distributed database. The API is how you handle operations: create, update, and delete. The rest of the Kubernetes ecosystem is really using various watchers and reconcilers to handle the operational flow for how these deployments or pods actually end up on a node. The power of Kubernetes is really the extensibility of this declarative state system. If you’re not happy with the abstractions given to you, some of these common objects I just talked about, you can create your own custom resource objects, and they’re going to live in that same distributed database. You can create custom controllers to handle operations on those.
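The watcher-and-reconciler idea can be reduced to a few lines. The Python below is a toy reconcile step, not Kubernetes controller code: it compares a desired state with an observed state, both modeled here as plain dictionaries keyed by object name, and emits the create, update, and delete operations needed to converge. All names in it are assumptions made for this sketch.

```python
def reconcile(desired, observed):
    """Return the operations that move `observed` toward `desired`."""
    actions = []
    for name in sorted(desired):
        if name not in observed:
            actions.append(("create", name, desired[name]))
        elif observed[name] != desired[name]:
            actions.append(("update", name, desired[name]))
    # Anything still running that nobody declared gets removed.
    for name in sorted(set(observed) - set(desired)):
        actions.append(("delete", name, None))
    return actions


def apply(observed, actions):
    """Apply the operations to a copy of the observed state."""
    result = dict(observed)
    for verb, name, spec in actions:
        if verb == "delete":
            result.pop(name)
        else:
            result[name] = spec
    return result
```

Running `reconcile` again after `apply` returns an empty list, which is the property that makes the declarative model robust: a controller can be restarted at any point and simply recompute what is left to do.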

Kubernetes: The Container Runtime Interface (CRI)

As we saw in the initial diagram, the Kubernetes cluster is made up of multiple nodes, and on each node is a piece of software called the kubelet. The kubelet, again, is listening for state changes in the distributed database, and is looking to place pods and deployments on to the local node when instructed to do so by the orchestration layer. The kubelet doesn’t run containers itself, it needs a container runtime. Initially, when Kubernetes was created, it used Docker as the runtime. There was a piece of software called the dockershim, part of the kubelet, that implemented this interface between the kubelet and Docker. That implementation has been deprecated and will be removed in the upcoming release of Kubernetes later this month. What you have left is the container runtime interface, created several years ago as a common interface so that any compliant container runtime could serve the kubelet.

If you think about it, the CRI is really the only common API for runtimes we have today. We talked about this earlier that Docker had an API. Containerd, the project I’m a maintainer of, we have a Go API as well as the gRPC API to our services. CRI-O, Podman, Singularity, there are many other runtimes out there across the ecosystem. CRI is really providing a common API, although truly, the CRI is not really used outside of the Kubernetes ecosystem today. Instead of being a common API endpoint that you could use anywhere in the container universe, CRI really tends to only be used in the Kubernetes ecosystem and pairs with other interfaces like CNI for networking and CSI for storage. If you do implement the CRI, say you’re going to create a container runtime and you want to plug into Kubernetes. It’s not just enough to represent containers, there’s the idea of a pod and a pod sandbox. These are represented in the definition of the CRI gRPC interfaces. You can look those up on GitHub, and see exactly what interfaces you have to implement to be a CRI compliant runtime.
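To show why a pod sandbox is part of the interface, here is an in-memory Python stand-in for a runtime. The three methods mirror the CRI runtime service calls `RunPodSandbox`, `CreateContainer`, and `StartContainer` by name only; the real interface is defined in protobuf and served over gRPC with much richer request and response messages. `FakeRuntime` and `start_pod` are hypothetical names, and nothing here executes a container.

```python
import itertools


class FakeRuntime:
    """In-memory stand-in for a CRI runtime; nothing is actually executed."""

    def __init__(self):
        self._ids = itertools.count(1)
        self.sandboxes = {}
        self.containers = {}

    def run_pod_sandbox(self, pod_name, namespace):
        # The sandbox holds what the pod's containers share (e.g. networking).
        sandbox_id = f"sandbox-{next(self._ids)}"
        self.sandboxes[sandbox_id] = {"name": pod_name, "namespace": namespace}
        return sandbox_id

    def create_container(self, sandbox_id, name, image):
        if sandbox_id not in self.sandboxes:
            raise KeyError(f"unknown pod sandbox: {sandbox_id}")
        container_id = f"container-{next(self._ids)}"
        self.containers[container_id] = {
            "sandbox": sandbox_id, "name": name, "image": image, "state": "created",
        }
        return container_id

    def start_container(self, container_id):
        self.containers[container_id]["state"] = "running"


def start_pod(runtime, pod_name, namespace, containers):
    """Drive the runtime in the order a kubelet-like caller would."""
    sandbox_id = runtime.run_pod_sandbox(pod_name, namespace)
    container_ids = []
    for name, image in containers:
        container_id = runtime.create_container(sandbox_id, name, image)
        runtime.start_container(container_id)
        container_ids.append(container_id)
    return sandbox_id, container_ids
```

The ordering is the point: the sandbox exists before any container does, and every container is created inside it, which is how several containers end up behaving as one pod.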

Kubernetes API Summary

Let’s briefly summarize what we’ve seen as we’ve looked at Kubernetes from an API perspective. Kubernetes has a client API that reflects this Kubernetes object model. It’s a well-defined API that’s versioned. It uses REST over HTTP. Tools like kubectl use that API. When we talk about how container runtimes are driven from the kubelet, this uses gRPC defined interfaces known as the container runtime interface. Hearkening back to almost the beginning of our talk, when we actually talk about containers and images that are used by these runtimes, these are OCI compliant. That’s important because fitting into the broader container ecosystem, there’s interoperability between these runtimes because of the OCI specs. If you look at the pod specification in Kubernetes, some of those flags and features that you would pass to a container represent settings in the OCI runtime spec, for example. When you define an image reference, how that’s pulled from a registry uses the OCI distribution API. That summarizes briefly both ends of the spectrum of the Kubernetes API that we’ve looked at.

Common API for Containers?

Coming back to our initial question, have we found that common API for containers? Maybe in some ways, if we’re talking in the context of Kubernetes, the CRI is that well defined common API that abstracts away container runtime differences. It’s not used outside of Kubernetes, and so therefore, we still have other APIs and other models of interacting with container lifecycles when we’re not in the Kubernetes ecosystem. However, the CRI API is providing a valuable entry point for integrations and automation in the Kubernetes context. For example, tools maybe from Sysdig, or Datadog, or Aqua Security or others can use that CRI endpoint. Similar to how in the pre-Kubernetes world they might have used the Docker Engine API endpoint, to gather information about what containers are running or provide other telemetry and security information, coalesce maybe with eBPF tools or other things that those agents are running on your behalf. Again, maybe we’re going to have to back away from the hope that we would find a common API that covers the whole spectrum of the container universe, and go back to a moniker that Docker used at the very dawn of the container era.

Build, Ship, Run (From an API Perspective)

As you well know, no talk on containers is complete without the picture of a container ship somewhere. That shipping metaphor has been used to good effect by Docker throughout the last several years. One of those monikers that they’ve used throughout that era has been build, ship, and run. It’s a good representation of the phases of development in which containers are used. Maybe instead of trying to find that one overarching API, we should think about for each of these steps in the lifecycle of moving containers from development to production, where do APIs exist? How would you use them? Given your role, where does it make sense? We’re going to take that aspect of APIs from here on out, and hopefully make it practical to understand where you should be using what APIs from the container ecosystem.

Do APIs Exist for Build, Ship, and Run?

Let’s dive in and look briefly at build, ship, and run as they relate to APIs or standardization that may be available in each of those categories. First, let’s look at build. Dockerfile itself, the syntax of how Dockerfiles are put together, has never been standardized in a formal way, but effectively has become a de facto standard. Dockerfile is not the only way to produce a container image. It might be the most traditional and straightforward manner, but there’s a lot of tooling out there assembling container images without using Dockerfiles. Of course, the lack of a formal API for build is not necessarily a strong requirement in this space, because teams tend to adopt tools that match the requirements for that organization.

Maybe there’s already a traditional Jenkins cluster, maybe they have adopted GitLab, or are using GitHub Actions, or other hosted providers, or even vendor tools like Codefresh. What really matters is that the output of these tools is a standard format. We’ve already talked about OCI and the image format and the registry API, which we’ll talk about under ship. It really doesn’t matter what the inputs are, what those build tools are, the fact that all these tools are producing OCI compliant images that can be shipped to OCI compliant registries is the standardization that has become valuable for the container ecosystem.

Of course, build ties very closely to ship, because as soon as I assemble an image, I want to put it in a registry. Here, we have the most straightforward answer. Yes, the registry and distribution protocol is an OCI standard today. We talked about that, and how it came to be coming out of the original Docker registry protocol. Pushing and pulling images and related artifacts is standardized, and the API is stable and well understood. There’s still some unique aspects to this around authentication that is not part of the standard. At least the core functionality of pushing an image reference and all its component parts to a registry is part of that standard.
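For a feel of how small the standardized surface is, the Python sketch below builds the two requests a pull revolves around: fetching a manifest and fetching a blob. The `/v2/<name>/manifests/<reference>` and `/v2/<name>/blobs/<digest>` paths and the manifest media type come from the OCI distribution and image specs. The function names and the registry host in the usage note are placeholders, and authentication is left out entirely because, as noted above, it is not part of the standard.

```python
import urllib.request

OCI_MANIFEST = "application/vnd.oci.image.manifest.v1+json"


def manifest_request(registry, repository, reference):
    """Build (but do not send) the request for an image manifest.

    `reference` may be a tag such as "1.0" or a digest such as "sha256:...".
    """
    url = f"https://{registry}/v2/{repository}/manifests/{reference}"
    return urllib.request.Request(url, headers={"Accept": OCI_MANIFEST}, method="GET")


def blob_url(registry, repository, digest):
    """Each layer and the image config are fetched as blobs by their digest."""
    return f"https://{registry}/v2/{repository}/blobs/{digest}"
```

Passing the result of `manifest_request("registry.example.com", "team/app", "1.0")` to `urllib.request.urlopen` would perform the fetch against a registry that allows anonymous pulls; most hosted registries will first answer with an authentication challenge.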

When we talk about run, we’re going to have to really talk in two different aspects. When we talk about Kubernetes, the Kubernetes API is clearly defined and well adopted by many tools and organizations. When we step down to that runtime layer, as we’ve noted, only the formats are standardized there, so the OCI runtime spec and image spec. We’ve already noted the CRI is the common factor among major runtimes built around those underlying OCI standard types. That does give us commonality in the Kubernetes space, but not necessarily at the runtime layer itself.

Build

Even though I just said that using a traditional Dockerfile is not the only way to generate a container image, this use of base images and Dockerfiles, and the workflow around that, remains a significant part of how people build images today. This is encoded into tools like docker build and BuildKit, which is effectively replacing docker build with its own implementation, but is also used by many other tools. Buildah from Red Hat and many others continue to provide and enhance this workflow of Dockerfiles, base images, and adding content. The API in this model is really that Dockerfile syntax. BuildKit has actually been providing revisions of the Dockerfile, in effect its own standard, and adding new features. There are interesting new innovations that have been announced even in the past few weeks.

If you’re looking for tools that combine these build workflows with Kubernetes deployments and development models, there are definitely more than the few in this list. You can look at Skaffold, or Tekton, or Kaniko. Again, there are many other vendor tools that integrate ideas like GitOps and CI/CD with these traditional build operations of getting your container images assembled. There are a few interesting projects out there that may be worth looking at, such as ko. If you’re writing in Go, maybe writing microservices where you just want static Go binaries on a very slim base, ko can do that for you, even build multi-arch images, and it integrates push and works with many other tools.

Buildpacks, which has been contributed to the CNCF, coming out of some of the original work in Cloud Foundry, brings interesting ideas about replacing those base layers without having to rebuild the whole image. BuildKit has been adding some interesting innovations. Actually, they just had a recent blog post about a very similar idea using Dockerfile. Then, dagger.io, a new project from Solomon Hykes and some of his early founders from Docker, is looking at providing some new ideas around CI/CD, again, integrating with Kubernetes and other container services, and providing a pipeline for build, CI/CD, and update of images.

Ship

For ship, there’s already a common registry distribution API and a common format, the OCI image spec. Many build tools handle the ship step already by default. They can ship images to any OCI compliant registry. All the build tools we just talked about support pushing up to cloud services like ECR or GCR, an on-prem registry or self-hosted registry. The innovations here will most likely come via artifact support. One of the hottest topics in this space is image signing. You’ve probably heard of projects like cosign and sigstore, and the Notary v2 efforts.
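The common format mentioned above can be made concrete with a small sketch. It builds a minimal OCI image manifest and computes the content digest a registry would use to address it. The media types and field names come from the OCI image spec; the config and layer bytes are placeholders invented for illustration, not a real image.

```python
import hashlib
import json


def descriptor(media_type: str, content: bytes) -> dict:
    """Build an OCI content descriptor: media type, sha256 digest, and size."""
    return {
        "mediaType": media_type,
        "digest": "sha256:" + hashlib.sha256(content).hexdigest(),
        "size": len(content),
    }


# Placeholder bytes (assumptions) standing in for a real config and layer tarball.
config_blob = json.dumps({"architecture": "amd64", "os": "linux"}).encode()
layer_blob = b"placeholder bytes for a gzipped layer tarball"

manifest = {
    "schemaVersion": 2,
    "mediaType": "application/vnd.oci.image.manifest.v1+json",
    "config": descriptor("application/vnd.oci.image.config.v1+json", config_blob),
    "layers": [
        descriptor("application/vnd.oci.image.layer.v1.tar+gzip", layer_blob),
    ],
}

# A manifest is addressed by the digest of the exact bytes that were pushed.
manifest_bytes = json.dumps(manifest, sort_keys=True).encode()
manifest_digest = "sha256:" + hashlib.sha256(manifest_bytes).hexdigest()
print(manifest_digest)
```

Because every tool agrees on this structure and on content addressing, an image pushed by one build tool can be pulled and verified by any other.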

There’s a lot of talk about secure supply chain, and so a software bill of materials is another artifact type that aligns with your container image. Then there are ideas about bundling. It’s not just my image, but Helm charts or other artifacts that might go along with my image. These topics are being collaborated on in various OCI and CNCF working groups. Hopefully, this will lead to common APIs and formats, and not a unique set of tools that all operate slightly differently. Again, ship has maybe our clearest sense of common APIs and common formats, and that continues to be the case even with some of the innovations around artifacts and signing.

Run – User/Consumer

For the run phase, we’re going to split our discussion along two axes, one as a user or a consumer, and the other as a builder or a vendor. On the user side, your main choice is going to be Kubernetes, or something else. With Kubernetes, you’ll have options for additional abstractions: not just whether you depend on a managed service from a cloud provider or roll your own, but even higher-layer abstractions around PaaSes like Knative, or OpenFaaS, or Cloud Foundry, which is also built around Kubernetes.

No matter your choice here, the APIs will be common across these tools, and there’ll be a breadth of integrations that you can pick from because of the size and scale of the CNCF and Kubernetes ecosystem. Maybe Kubernetes won’t be the choice based on your specific needs. You may choose some non-Kubernetes orchestration model, maybe from one of the major cloud providers, like Fargate or Cloud Run, or maybe cycle.io, or HashiCorp’s Nomad. Again, these are ideas that aren’t built around Kubernetes, but provide some of those same capabilities. In these cases, obviously, you’ll be adopting the API and the tools and the structure of that particular orchestration platform.

Run – Builder/Vendor

As a builder or vendor, again, maybe you’ll have the option to stay within the Kubernetes or CNCF ecosystem. You’ll be building or extending or integrating with the Kubernetes API and its control plane, again, giving you a common API entry point. The broad adoption means you’ll have lots of building blocks and other integrations to work with. If you need to integrate with container runtimes, we’ve already talked about the easy path within the Kubernetes context of just using the CRI.

The CRI has already abstracted you away from having to know details about the particular runtime providing the CRI. If you need to integrate at a lower point, for more than one runtime, we’ve already talked about there not being any clean option for that. Maybe there’s a potential for you to integrate at the lowest layer of the stack, runC or using OCI hooks. There are drawbacks there as well, because maybe there’ll be integration with microVMs like Kata Containers or Firecracker, which may prevent you from having the integration you need at that layer.
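The abstraction the CRI provides can be illustrated with a toy sketch. The method names below loosely mirror CRI calls such as RunPodSandbox, CreateContainer, and StartContainer, but `RuntimeService`, `FakeRuntime`, and `launch` are hypothetical names invented here; the real CRI is a gRPC API with far more surface area.

```python
from typing import List, Protocol


class RuntimeService(Protocol):
    """Hypothetical, heavily simplified stand-in for the CRI runtime service."""

    def run_pod_sandbox(self, name: str) -> str: ...
    def create_container(self, sandbox_id: str, image: str) -> str: ...
    def start_container(self, container_id: str) -> None: ...


class FakeRuntime:
    """Toy backend; a real one would be containerd or CRI-O behind gRPC."""

    def __init__(self, runtime_name: str) -> None:
        self.runtime_name = runtime_name
        self.started: List[str] = []
        self._next_id = 0

    def _new_id(self, kind: str) -> str:
        self._next_id += 1
        return f"{self.runtime_name}-{kind}-{self._next_id}"

    def run_pod_sandbox(self, name: str) -> str:
        return self._new_id("sandbox")

    def create_container(self, sandbox_id: str, image: str) -> str:
        return self._new_id("container")

    def start_container(self, container_id: str) -> None:
        self.started.append(container_id)


def launch(runtime: RuntimeService, pod: str, image: str) -> str:
    """Caller code depends only on the interface, never on the backend."""
    sandbox_id = runtime.run_pod_sandbox(pod)
    container_id = runtime.create_container(sandbox_id, image)
    runtime.start_container(container_id)
    return container_id


for backend in (FakeRuntime("containerd"), FakeRuntime("cri-o")):
    print(launch(backend, "web", "example.com/app:1.0"))
```

The same `launch` function works against either backend, which is the property an integrator gets from targeting the CRI instead of a specific runtime.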

Decision Points

Hopefully, you’ve seen some of the tradeoffs and pros and cons of decisions you’ll need to make either as someone building tools for the space or needing to adopt a platform, or trying to understand how to navigate the container space.

Here’s a summary of a few decision points. First of all, the Docker engine and its API are still a valid single-node solution for developers. There are plenty of tools and integrations. It’s been around for quite a while. We haven’t even talked about Docker Compose, which is still very popular, and has plenty of tools built around it, so much so that Podman from Red Hat has also implemented the Docker API and added compose support. Alternatively, containerd, which really was created as an engine to be embedded, without really a full client, now has a client project called nerdctl that has also been adding compose support and providing some similar client experiences without the full Docker engine.

Of course, we’ve already seen that Kubernetes really provides the most adopted platform in this space, both for tools and having a common API. This allows for broad standardization, so, tools, interoperability, used in both development and production. There’s a ton going on in this space, and I assume, and believe that will continue. It’s also worth noting that even though we’ve shown that there’s no real common API outside of the Kubernetes ecosystem for containers, most likely, as you know, you’re going to adopt other APIs adjacent even to your Kubernetes use, or container tools that you might adopt. You’re going to choose probably a cloud provider, an infrastructure platform. You’re going to use other services around storage and networking. There will always be a small handful of APIs, even if we could come into a perfect world where we defined a clear and common API for containers.

The API Future

What about the future? I think it’s pretty easy to say that significant innovation around runtimes and the APIs around them will stay in Kubernetes because of the breadth of adoption, and the commonality provided there. For example, SIG-Node, the special interest group in Kubernetes focused on the node, which includes the kubelet software and its components, and the OCI communities are really providing innovations that cross up through the stack to enhance capabilities. For example, there have been Kubernetes enhancement proposals still in flight for user namespaces, checkpoint/restore, and swap support.

As these features are added, they drive this commonality up through being exposed in the CRI, and also implemented by the teams managing the runtimes themselves. You get to adopt new container capabilities all through the common CRI API and the runtimes and the OCI communities that deal with the specifications, do that work to make it possible to have a single interface to these new capabilities.

There will probably never be a clear path to commonality at the runtimes themselves. Effectively at this moment, you have two main camps. You’ve got Docker, dependent on containerd and runC, and you have CRI-O, and Podman, and Buildah, and crun, and some other tools used in OpenShift and by Red Hat customers via RHEL and other OS distros. There are different design ideologies between these two camps, and it really means it’s unlikely that there will be an absolutely common API for runtimes outside of that layer above, in the container runtime interface in Kubernetes.

Q&A

Wes Reisz [Track Host]: There was the nerdctl and containerd approach. Does it use the same build API as the Dockerfile syntax?

Estes: Yes, so very similar to how Docker has been moving to using BuildKit as the build engine when you install Docker. That’s available today using the Docker Buildx extensions. Nerdctl adopts the exact same capability, it’s using BuildKit under the covers to handle building containers, which means it definitely supports Dockerfile directly.

Reisz: You said there towards the end, no clear path for commonality at the runtime, almost kind of CRI-O, Podman, Buildah versus Docker, containerd. Where do you see that going? Do you see that always being the case? Do you think there’s going to be unification?

Estes: I think because of the abstraction where a lot of people aren’t building around the runtime directly today, if you adopt OpenShift, you’re going to use CRI-O, but was that a direct decision? No, it’s probably because you like OpenShift the platform and some of those platform capabilities. Similarly, containerd is going to be used by a lot of managed services in the cloud, already is.

Because of those layers of platform abstraction, again, personal feeling is there’s not a ton of focus on, I have to make a big choice between CRI-O or do I use Podman for my development environment, or should I try out nerdctl? Definitely in the developer tooling space, there’s still potentially some churn there. I try and stay out of the fray, but you can watch on Twitter, there’s the Podman adherents promoting Podman’s new release in RHEL. It’s not necessarily the level of container wars as when we saw Docker and Docker Swarm and Kubernetes.

I think it’s more in the sense of the same kinds of things we see in the tooling space where you’re going to make some choices, and the fact that I think people can now depend on interoperability because of OCI. There’s no critical sense in which we need to have commonality here at that base layer, because I build with BuildKit. I run on OpenShift, and it’s fine. The image works. It didn’t matter the choice of build tool I used, or my GitHub Actions spits out an OCI image and puts it in the GitHub Container Registry. I can use that with Docker on Docker Desktop. I think the OCI has calmed any nervousness about that being a problem that there’s different tools and different directions that the runtimes are going in.

Reisz: I meant to ask you about ko, because I wasn’t familiar with it. I’m familiar with Cloud Native Buildpacks and the way that works. Is ko similar just from a Go perspective? It just doesn’t require a Dockerfile, creates the OCI image from it. What does that actually look like?

Estes: The focus was really that simplification: I’m in the Go world, I don’t really want to think about base images, and whether I’m choosing Alpine or Ubuntu or Debian. I’m building Go binaries that are fully isolated. They’re going to be static, they don’t need to link to other libraries. It’s a streamlined tool when you’re in that world. They’ve made some nice connection points where it’s not just building, but it’s like, I can integrate this as a nice one-line ko build, and push to Docker Hub. You get this nice, clean, very simple tool if you’re in that Go microservice world. Because Go is easy to cross-compile, you can say, throw an AMD64, an Arm, and a PowerPC 64 image all together in a multi-arch image named such and such. It’s really focused on that Go microservice world.
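For readers who have not looked inside a multi-arch image, here is a rough sketch of what one looks like at the format level. It builds an OCI image index with one entry per platform and picks the entry matching a given platform. The media types and field names follow the OCI image spec; the digests and sizes are placeholders, and `select_manifest` is a hypothetical helper, not something ko itself provides.

```python
from typing import Optional

MANIFEST_TYPE = "application/vnd.oci.image.manifest.v1+json"


def entry(architecture: str, placeholder: str) -> dict:
    """One per-platform manifest descriptor; digest and size are placeholders."""
    return {
        "mediaType": MANIFEST_TYPE,
        "digest": "sha256:" + placeholder * 64,
        "size": 1024,
        "platform": {"architecture": architecture, "os": "linux"},
    }


# Architecture names follow Go's GOARCH values, as the OCI image spec requires.
index = {
    "schemaVersion": 2,
    "mediaType": "application/vnd.oci.image.index.v1+json",
    "manifests": [entry("amd64", "a"), entry("arm64", "b"), entry("ppc64le", "c")],
}


def select_manifest(image_index: dict, os_name: str, architecture: str) -> Optional[dict]:
    """Return the descriptor whose platform matches, or None if there is none."""
    for candidate in image_index["manifests"]:
        platform = candidate.get("platform", {})
        if platform.get("os") == os_name and platform.get("architecture") == architecture:
            return candidate
    return None


print(select_manifest(index, "linux", "arm64"))
```

A runtime pulling the image by name performs this kind of selection for its own platform, which is why a single tag can serve all three architectures.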

Reisz: Have you been surprised or do you have an opinion on how people are using, some might say misusing, but using OCI images to do different things in the ecosystem?

Estes: Daniel and a few co-conspirators have done hilarious things with OCI images. At KubeCon LA last fall, they wrote a chat application that was using layers of OCI images to store the chat messages. It was taking something to the extreme to show that an OCI image is just a bundle of content, and I could use it for whatever I want.

As for the artifact work in OCI, if people haven’t read about that, search on artifact working group or OCI artifacts, and you’ll find a bunch of references. The fact is that it makes sense that there is a set of things that an image is related to. If you’re thinking object oriented, you know this object is related to that one. A signature is a component of an image, or an SBOM, a software bill of materials, is a component of an image. It makes sense for us to start to find ways to standardize this idea of what refers to an image.

There’s a new part of the distribution spec being worked on called the Referrers API. You can ask a registry, I’m pulling this image, what things refer to it? The registry will hand back, here’s a signature, or here’s an SBOM, or here’s where you can go find the source tarball, if it’s open source software and it’s under the GPL. I’m definitely on board with expanding the OCI, not the image model, but the artifact model that goes alongside images to say, yes, the registry has the capability to store other blobs of information. They make sense because they are actually related to the image itself. There’s good work going on there.
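The question "what things refer to this image?" can be sketched with a toy in-memory model. `ToyRegistry` and its methods are hypothetical names, the artifact types are made-up example values, and the digests are placeholders. It illustrates the idea of looking up artifacts by the image they point at, not the actual wire protocol, which was still being specified at the time.

```python
from collections import defaultdict
from typing import Dict, List, Optional

# Made-up example artifact types (assumptions, not registered media types).
SIGNATURE_TYPE = "application/vnd.example.signature"
SBOM_TYPE = "application/vnd.example.sbom"


class ToyRegistry:
    """Hypothetical in-memory stand-in for a registry; not a real client."""

    def __init__(self) -> None:
        # Maps an image digest (the subject) to descriptors that refer to it.
        self._referrers: Dict[str, List[dict]] = defaultdict(list)

    def push_artifact(self, subject_digest: str, artifact_type: str, artifact_digest: str) -> None:
        """Store an artifact and record which image it refers to."""
        self._referrers[subject_digest].append(
            {"artifactType": artifact_type, "digest": artifact_digest}
        )

    def referrers(self, subject_digest: str, artifact_type: Optional[str] = None) -> List[dict]:
        """List artifacts referring to an image, optionally filtered by type."""
        found = self._referrers.get(subject_digest, [])
        if artifact_type is None:
            return list(found)
        return [d for d in found if d["artifactType"] == artifact_type]


registry = ToyRegistry()
image = "sha256:" + "1" * 64  # placeholder image digest
registry.push_artifact(image, SIGNATURE_TYPE, "sha256:" + "2" * 64)
registry.push_artifact(image, SBOM_TYPE, "sha256:" + "3" * 64)

print(registry.referrers(image))
print(registry.referrers(image, SBOM_TYPE))
```

The design point is that the image itself never changes: signatures and SBOMs are separate blobs that name the image as their subject, so they can be added after the image is pushed.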

Reisz: What’s next for the OCI? You mentioned innovating up the stack. I’m curious, what’s the threads look like? What’s the conversation look like? What are you thinking about the OCI?

Estes: I think a major piece of that is the work I was just talking about. The artifact and Referrers API are the next piece that we’re trying to standardize. The container runtime spec and the image spec, as you’d expect, are things that people have built whole systems on, and they’re no longer fast-moving pieces. You can think of small tweaks, making sure we have in the standards all the right media types that reference new work, like encrypted layers, or new compression formats. These are things that are not like, that’s the most exciting thing ever, but they’re little incremental steps to make sure the specs stay up with where the industry is. The artifacts and Referrers API are the big exciting things because they relate to hot topics like secure supply chain and image signing.

Some of the artifact work is about how people are going to build tools, and that’s already happening. You have security vendors building tools. You have Docker, which released a new beta of its SBOM generator tool. The OCI piece of that will be, ok, here’s the standard way that you’re going to put an SBOM in a registry. Here’s how registries will hand that back to you when you ask for an image’s SBOM. The OCI’s piece will again be standardizing, making sure that whichever tools you use from the handful of security vendors out there, they’ll hopefully all use a standard way to associate that with an image.


MMS • Michael Hausenblas

Article originally posted on InfoQ. Visit InfoQ

Pros and Cons of a Distributed System