Month: January 2023

MMS • RSS

Enterprises are creating huge amounts of data and it is being generated, stored, accessed, and analyzed everywhere – in core datacenters, in the cloud distributed among various providers, at the edge, in databases from multiple vendors, in disparate formats, and for new workloads like artificial intelligence. In this fast-evolving space, a database vendor that is aiming for a broad reach is going to have to adapt quickly.

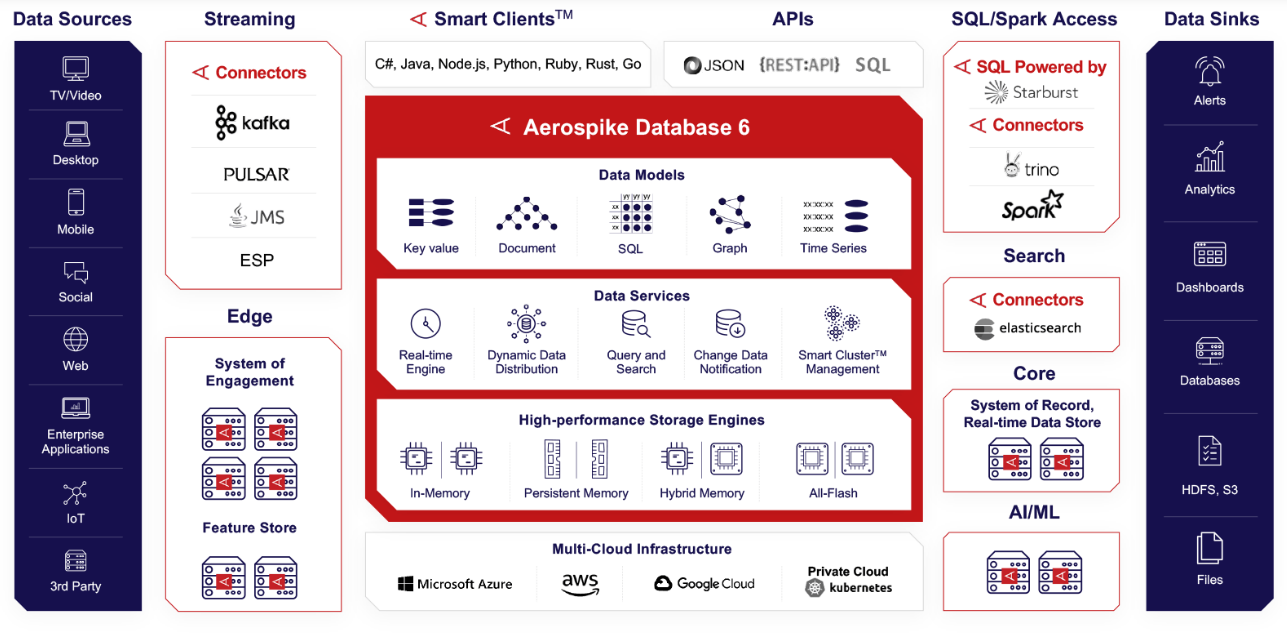

Aerospike is one of those vendors who has been constantly adapting. The company – going on 14 years, after starting off in 2009 as Citrusleaf before taking its current name in 2012 – offers its eponymous database that is the foundation of its Aerospike Real-Time Data Platform. The platform lets organizations to instantly sprint through billions of transactions, power real-time application at sub-millisecond speeds, climb to petabyte scale, and do so while reducing the server footprint by as much as 80 percent.

As we’ve mentioned in previous coverage, the goal is low latency for high throughput jobs.

At the same time, the company understands that if it wants as many transactions as possible to run through its flash-based NoSQL platform and to pull into its gravity as many organizations as possible, it needs to be able to reach into and support as many data sources as possible.

“We have supported strong consistency, which is a little rare in the SQL database,” Lenley Hensarling, Aerospike’s chief product officer, tells The Next Platform. “We find that some people actually move back to an Oracle or DB2 and this is one of the reasons that PostgreSQL has kept growing. But in the NoSQL world, we have strong consistency. We have the ability to do that for up to 12 billion transactions a day and we have some people doing more than that. But all of that data gets captured by Aerospike. As that has happened, more and more customers have said, ‘We need to make use of this data and provide this data wherever it can be used.’”

Over the years, Aerospike has moved down multiple avenues to ensure its platform is covering those bases, including through its growing portfolio of connectors, used to integrate the Aerospike Database with open-source frameworks, including Spark, Kafka, Pulsar, and Trino. The company this month unveiled Connect for Elasticsearch, an open-source search and analytics tool that will let data scientists, developers, and others to rapidly run full-text searches of the real-time data in the vendor’s database.

“We built a connector for that,” Hensarling says. “We could have built textual search into our database, but one of the things we pride ourselves on is the efficiency in handling transactions, both read and write, and being able to handle that massive ingestion. We’re very cognizant that it’s a distributed application and it can connect to other distributed applications or infrastructure like Elasticsearch.”

The Elasticsearch connector dovetails with the change data notification and change data capture abilities that first appeared in Aerospike Database 5 and has continued on in Database 6, which was released last spring.

<>

The Aerospike technology is “key to streaming data,” he says. “It’s key to being able to push data off to where it can be used best. It’s also, in this case, used to update the indices in Elastic and to put the data in Elastic to use that textual, very flexible, fuzzy search capability. We push only those fields that are necessary to do that over into Elastic. We accompany that with the digest, which is essentially the address in our database. That’s one hop back to the actual record in Aerospike. If you want to do a textual search for all the things – and sometimes companies do it not just for what’s in Aerospike, but what might be in other databases, too – and they get back those results from Elastic. But for our data, they can go directly back using that digest to the actual record. And it’s incredibly fast to do that. … We have a number of customers who said, ‘We’re using Elasticsearch. We want to make your data accessible through Elasticsearch.’ That’s what we’ve done.”

Database 6 introduced highly parallel secondary indexes, giving such queries the same speed and efficiency found in primary indexes, and also supports SQL through the Spark and Trino integrations.

The Elasticsearch Connector followed on other moves over the past year to cast a wide net over what the real-time platform and database can do and support. In Database 6, the company included support for JSON document models regardless of scale and enhanced support for Java programming models, including JSONPath for storing and searching datasets and workloads. Aerospike for four or five years has support document and object-style records, but JSON helped the company continue to push into the mainstream, according to Hensarling.

Also last year, the vendor partnered with Trino-based Starburst to launch Aerospike SQL Powered by Starburst, an integrated distributed SQL analytics engine in Aerospike Database 6 based on Starburst Enterprise and leveraging Trino. The revamped secondary indexes in Database 6 gave Aerospike better search capabilities, which Hensarling told The Next Platform the company “can support through a push down from the Starburst worker and the connector that’s in that Starburst worker. That model allows us to do a lot of search and analytic capability through Starburst and make that open up our data to our customers, to new constituencies, like data engineers, compliance people, audit people.”

All these steps – from Elasticsearch and Starburst to the other connectors and more features in Database 6 – let Aerospike extend its reach into more datasets and data sources that enterprises are using and to better compete with the likes of other NoSQL databases like CouchDB and MongoDB.

“People talk about a data pipeline,” he says. “There’s not a data pipeline in any companies. There are literally hundreds or thousands of data pipelines for different users, and we can distribute the right data to the right places in some definition of real time. The global data mesh winds up being in sync, in real time to some extent. That’s our picture of the world and how we sit in that. More and more companies are starting to see that they’re being overrun with data, but we can’t just say we’re only going to take certain things. We have to handle it all and all at the same time, by some definition. It might be milliseconds. For some it might be seconds, for others it might be you go off and do machine learning training for hours someplace else.”

The vendor is seeing some momentum behind its efforts. Aerospike last February said 2021 saw worldwide sales double and that its roster of customers – which already included PayPal, Wayfair, and Yahoo – added others like Critero in France, India-based Dream11, and Riskified in Israel. The first half of 2022 continued the trends, according to the company.

One number that stood out in 2021 was the 450 percent jump in year-over-year recurring revenue for Aerospike’s Cloud Managed Service. Hensarling says that, like other tech vendors, the company’s cloud services got a boost from the COVID-19 pandemic, when enterprises had to rapidly accelerate their cloud efforts. He describes the managed services as the “high-end production workload thing.”

The company this year is taking a deeper step into the cloud with its Database 6-based Aerospike Cloud database-as-a-service running on Amazon Web Services (AWS). Aerospike in November announced early availability and Hensarling says it will be in trials with early customers into the early part of the second quarter, with general availability later in the quarter. Customers were telling the vendor that more of their projects were starting in the cloud.

“That’s what’s driving this, being able to have an idea, go implement it, not have to think about buying cycles for hardware or availability, operations staff in your company and things like that,” he says. “That is something that we see as really critical to growth going forward.”

Aerospike this year will expand its capabilities among other data models, including graph databases. Enterprises are using more models as they triangulate to get more answers from the data. There is the push for “multi-modal database capabilities from one vendor,” Hensarling says. “That’s driven some of our investments. For 30 years, we converged on relational databases and that was the answer. Then all of a sudden we said, ‘No, there are other things. There are reasons to be more elastic and more scalable.’ So NoSQL started happening. But there’s also these new capabilities like graph databases to get different kinds of answers. But people don’t want to deal with five, six, seven different vendors.”

Similarly, they don’t want to be beholden to a single cloud provider, which means companies like Aerospike – which also supports Microsoft Azure, Google Cloud, and Kubernetes-based private clouds – will have to continue to be cloud-agnostic and expand to meet customer demands, he says.

MMS • Kai Waehner

Transcript

Waehner: This is Kai Waehner from Confluent. I will talk about resilient real-time data streaming across the edge and hybrid cloud. In this talk, you will see several real world deployments and very different architectures. I will cover all the pros and cons so that you would understand the tradeoffs to build a resilient architecture.

Outline

I will talk a little bit about why you need resilient enterprise architectures and what the requirements are. Then cover how real-time data streaming will help with that. After that, I cover several different architectures, and use real world examples across different industries. I talk about automotive, banking, retail, and the public sector. The main goal is to show you a broad spectrum of architectures I’ve seen in a real world so that you can learn how you architect your own architecture to solve problems.

Resilient Enterprise Architectures

Let’s begin with the definition of a resilient enterprise architecture, and why we want to do that. Here’s an interesting example from Disney. Disney and their theme parks, Disney World in the U.S., had a problem. There was an AWS Cloud outage. What’s the problem with the theme parks, you might wonder? The theme parks are still there, but the problem is that Disney uses a mobile app to collect all the customer data and provide a good customer experience. If the cloud is not available anymore, then you cannot use the theme park, you cannot do rides, you cannot order in the restaurants anymore. I think this is a great example why resilient architectures are super important, and why they can look very differently depending on the use case. That’s what I will cover, show you edge, hybrid, and cloud-first architectures with all their pros and cons. In this case, maybe a little bit of edge computing and resiliency might help to make the customers happy in the theme parks.

There’s very different reasons why you need resilient architectures. This is a survey, one of my former colleagues and friends Gwen Shapira did, actually the query is from 2017 but it’s still more or less up to date. The point is to show there’s very different reasons why people build resilient architectures. One DC might get nuked, yes, and disaster recovery is a key use case. Sometimes it’s also about latency and distance or implications of the cost regarding where you replicate your data. Sometimes it also is legal reasons. There’s many reasons why resilient architectures are required. If we talk about that, there’s two terms we need to understand. It doesn’t matter what technology you use, like we today talk about real-time streaming with Kafka. In general, you need to solve the problem of the RPO and the RTO. The RPO is the Recovery Point Objective. This defines how much data you will lose in case of a downtime or a disaster. On the other side, the RTO, the Recovery Time Objective means, what’s the actual recovery period before we are online, again, and your systems work again? Both of these terms are very important. You always need to ask yourself, what do I need? Initially, many people say, of course, I need zero downtime and zero data loss. This is very often hard to architect, as we have seen in the Disney example before, and what I will show you in many different examples. Keep that in mind, always ask yourself, what downtime or data loss is ok for me? Based on that, you can architect what you need.

In the end, zero RPO requires synchronous replication. This means even if one node is down, if you’re replicated synchronously, it guarantees that you have zero data loss. On the other side, if you need zero downtime, then you need a seamless failover. It’s pretty easy definitions, but it’s super hard to architect that. That’s what I will cover. That also shows you why real-time data is so important here, because if you have replication in a batch process from one node to another, or from one cloud region to another, that takes longer. Instead, if you replicate data in real-time, even in case of disaster, you lose much less data with that.

Real-Time Data Streaming with the Apache Kafka Ecosystem

With that, this directly brings me to real-time data streaming. In the end, it’s pretty easy. Real-time data beats slow data. Think about that in your business for your use cases. Many of the people in this audience are not business people, but architects or developers. Nevertheless, think about what you’re implementing. Or when you talk to your business colleagues, ask yourself and your colleagues, if you want to get this data and process it, is it better now or later? Maybe later could be in a few minutes, hours, or days. In almost all use cases, it’s better to process data continuously in real-time. This can be to increase the customer experience, to reduce the cost, or to reduce the risk. There’s many options why you would do that. Here’s just a few examples. The point is, real-time data almost always beats slow data. That’s important for the use cases. Then also, of course, for the resiliency behind the architectures, as we discussed regarding downtime and data loss, which directly impacts how your customer experience is or how high the risk is for your business.

When we talk about processing data in real-time, then Apache Kafka is the de facto standard for that. There’s many vendors behind it. I’ve worked for Confluent, but there’s many other vendors. Also even companies that don’t use Kafka, but another framework, they at least use the Kafka protocol very often, because it became a standard. While this presentation is about success stories and architectures around Apache Kafka, obviously, the same can be applied to other technologies, like when you use a cloud native service from a cloud provider, like AWS Kinesis, and so on, or maybe you’re trying to use Apache Pulsar or whatever else you want to try. The key point, however, is that Kafka is not just a messaging platform, or ingestion layer, like many people think. Yes, it’s real-time messaging at any scale, but in addition to that, a key component is the storage. You store all the events in your infrastructure, within the event streaming platform. With this, you achieve true decoupling between the producers and consumers. You handle the backpressure automatically, because often consumers cannot handle the load from the producer side. You can also replay existing data.

If you think about these characteristics of the storage for the decoupling and backpressure handling, that’s a key component you can leverage for building a resilient architecture. That’s what we will see in this talk about different approaches, no matter if you’re at the edge, in the cloud, or in a hybrid architecture. In addition to that, Kafka is also about data integration with Kafka Connect, and data processing, in real-time, continuously, with tools like Kafka Streams, or KSQL. That in the end is what Kafka is. It’s not just a messaging system. This is super important when we talk about use cases in general, but then also about how to build resilient architectures, because messaging alone doesn’t help. It’s about the combination of messaging and storage, and integration, and processing of data.

Kafka is a commit log. This means it’s append only from the producer side. Then consumers consume it whenever they need or can, in real-time, in milliseconds, in near real-time, in seconds, or maybe in batch, or in an interactive way with a request. It’s very flexible, and you can also replay the data. That’s the strength of the storage behind the streaming log. With that, Kafka is a resilient system by nature. While you can deploy a single broker of Kafka, and that’s sometimes done at the edge, but in most cases you’ll deploy Kafka as a distributed system, and with that, you get the resiliency out of the box. Kafka is built for failure. This means a broker can go down, the network can go down, a disk can break. This doesn’t matter because if you configure Kafka correctly, you can still guarantee zero downtime and zero data loss. With that, that’s the reason why Kafka is not just used for analytics use cases, but also for mission critical workloads. Without going into more detail, keep in mind, Kafka is a highly available system. It provides other features like rolling upgrades and backwards compatibility between server and client so that you can continuously run your business. That’s what I understand under a resilient system. You don’t have downtime from maintenance, or if an issue occurs with the hardware.

Here’s an example for a Kafka architecture. In this case, I’m showing a few Confluent components, obviously working for Confluent. Even if you use open source Kafka or maybe another vendor, you glue together the right components from the Kafka ecosystem. In this case, the central data hub is the platform, these are the clusters. Then you also use Kafka Connect for data integration, like to a database or an IoT interface, and then you consume all this data. However, the messaging part is not really what adds the business value, the business value is to continuously process the data. That’s what stream processing does. In this case, we’re using Kafka native technologies like KSQL and Kafka Streams. Obviously, you could also use something like Apache Flink, or any other stream processing engine for that. Then of course, there’s also data sink, so Kafka Connect can also be used to ingest data into a data lake or database, if you don’t need or don’t want to process data only in real-time. This is then the overall architecture, which has different components that work together to build an integration layer, and to build business logic on top of that.

Global Event Streaming

With that then, if we once again think a little bit about bigger deployments, you can deploy it in very different flavors. Here’s just a few examples of that, like replication between different regions, or even continents. This is also part of the Kafka ecosystem. You build one infrastructure to have a highly scalable and reliable infrastructure. That even replicates data across regions or continents, or between on-prem and the cloud, or between multi-cloud. You can do this with open source Kafka using MirrorMaker 2.0, or with some more advanced tools like at Confluent, we have cluster linking that directly connects clusters together on the Kafka protocol as a commercial offering, no matter what you choose for the replications. The point is, you can deploy Kafka very differently depending on the SLAs. This is what we discussed in the beginning about the RPO and RTO. Ideally, you have zero downtime, and no data loss, like in the orange cluster, but you stretch one cluster across regions. That’s too hard to deploy for every use case, so sometimes you have aggregation clusters, where some downtime might be ok, especially in analytics use cases, like in yellow. With that, keep in mind, you can deploy Kafka and its ecosystem in very different architectures. It has always pros and cons and different complexity. That’s what you should be aware of when you think about how to architect a resilient architecture across edge, hybrid, and multi-cloud.

Here’s one example for that. This is now a use case from the shipping industry. Here now you see what’s happening in the real world. You have very different environments and infrastructures. Let’s get started on the top right. Here you see the cloud. In this case now, ideally you have a serverless offering, because in the cloud, in best case, you don’t have to worry about infrastructure. It has elastic scaling, and you have consumption based pricing for that. For example, with Confluent Cloud in my case, again. Here you have all your real-time workloads and integrate with other systems. In some cases, however, you still have data on-prem. On the bottom right, you’ll see where a Kafka cluster is running in the data center, connecting to traditional databases, old ERP systems, the mainframe, or whatever you need there. This also replicates data to the cloud and the other way back. Once again, as I said in the beginning, real-time data beats slow data. It’s not just true for business, but also for these kinds of resiliency and replication scenarios. Because if you replicate data in real-time, in case of disaster, you’re still not falling behind much and don’t lose much data, because it’s replicated to the cloud in real-time. Then on the other side, on the left side, we have edge use cases where you deploy a small version of Kafka, either as a single node like in the drone, or still as a mission critical cluster with three brokers on a ship, for edge analytics, disconnected from the cloud, or from the data center. This example is just to make clear, there’s many different options how to deploy Kafka in such a real world scenario. It always has tradeoffs. That’s what I will cover in the next sections.

Cloud-First and Serverless Industrial IoT in Automotive

Intentionally, I chose examples across different industries, so that you see how that is deployed. Also, this is now very different kinds of architectures with different requirements and setups. Therefore, the resiliency is also very different depending on where you deploy Kafka and why you deploy it there. Let me get started with the first example. This is now about cloud-first and serverless. This is where everybody’s going. Ideally, everything is in the cloud, and you don’t have to manage it, you just use it. That’s true, if it’s possible. Here is an example where this is possible. This might surprise some people. This is why I chose this use case. Actually, it is a use case where BMW is running event streaming in the cloud as a serverless offering on Azure. However, they are actually directly connecting to their smart factories at the edge. In this case, BMW’s goal is to not worry about hardware or infrastructure if you don’t need to, so they deploy in the cloud first, what’s possible. In this case, they leverage event streaming to consume all the data from the smart factories, machines, PLCs, sensors, robots, all the data is flowing into the cloud in real-time via a direct connection to the Azure Cloud. Then the data is processed in real-time at scale. A key reason why BMW chose this architecture is that they get the data into the cloud once. Then they provide it as a data hub with Kafka to every business unit and application that needs access to it, tap into the data. With Kafka, and because it’s also, as I discussed, a storage system, it doesn’t matter what technology consumes the data. It doesn’t matter what communication paradigms consumes the data. It’s very flexible. You can connect to that from your real-time consumer, Kafka native maybe. You can consume from it from your data lake, more near real-time or batch. You can connect to it via a web service and REST API from a mobile app. This is the architecture that BMW chose here. This is very interesting, cloud only, but still connecting to data at the edge. The cloud infrastructure is resilient. Because it also has a direct connection to the edge, this works well from an SLA perspective for BMW.

Let me go a little bit deeper into the stream processing part, because that’s also crucial when you want to build resilient architectures for resilient applications. When we talk about data streaming, then we get sensor data in all the time. Then we can build applications around that. An application can be anything, like in this case, we’re doing condition monitoring on temperature spikes. In this case, we’re using Java code with Kafka Streams. Here, you see every single event is processed by itself. That’s pretty straightforward. This scales for millions of events per second. This can run everywhere where a Kafka cluster is. In this case, if we deploy that in a use case like BMW, this is running in the cloud, and typically, next to the serverless environment for low latency. There can also be advanced use cases where you do stateful processing. In this case, you do not just process each event by itself, but different events, and correlate them for whatever the use case is. In this case, we are creating a sliding window and continuously monitor the last seconds or minutes, or in this case, hour, to detect a spike in this case of a temperature for continuous anomaly detection. As you can see here, you’re very flexible with use cases and deploy them. Still, it’s a resilient application, because it’s based on Kafka, with all the characteristics Kafka has. That’s also true for the application, not just for the server side. This code, in this case, it’s KSQL now, automatically handles failover. It handles latency issues. It handles disconnectivity, outages of hardware, because it’s all built into the Kafka protocol to solve these problems.

Then you can even do more advanced use cases, like in this case, we’ve built a user defined function to embed a TensorFlow model. In this case, we are doing real-time scoring of analytic models applied against every single event, or against a stateful aggregation of events, depending on your business logic. The point here is that you can build very resilient real-time applications with that. In the same way, this is also true for the replication you do between different Kafka clusters, because it’s also using the Kafka protocol. It is super interesting if you want to replicate data and process it even across different data centers.

Multi-Region Infrastructure for Core Banking

This was an example where we see everything in the cloud. However, reality is, sometimes resiliency needs even more than that. Sometimes you need multi-region deployments. This means even in case of disaster, a cloud goes down or a data center goes down, you need business continuity. This is in the end where we have an RTO of zero, where we have no downtime, and an RTO and RPO of zero, no data loss. Here’s an example from JPMorgan. This is financial services. This is typically in most critical deployments with compliance and a lot of legal constraints so that you need to guarantee that you don’t lose data even in case of disaster. In this case, JPMorgan deploys a separate independent Kafka cluster to two different data centers. Then they replicate the data between the data centers using real-time replication. They also handle the switchover, so if one data center is down, they also switch the producers and the consumers to the other data center. This is obviously a very complex approach, so you need to get that right, including the testing and all these things. As you can see the link here, JPMorgan Chase talked about it in 45 minutes, just about this implementation. It’s super interesting to learn how the end users deploy such an environment.

However, having said that, this example is still not 100% resilient. This is the use case where we replicate data between two Kafka clusters asynchronously. In this case, it’s done with Confluent Replicator, but the same would be true with Mirrormaker if you use open source. The case is, if there is disaster, you still lose a little bit of data, because you’re replicating data asynchronously between the data centers. It’s still good enough for most use cases, because it’s super hard to do synchronous replication between data centers, especially if they are far away from each other, because then you have latency problems and inconsistency. Always understand the tradeoffs between your requirements and how hard it is to implement them.

I want to show you another example, however. Here now, we’re really stretching a single Kafka cluster across regions. With this solution then, you have synchronous replication. With that, you can really guarantee zero data loss even in case a complete data center goes down. This is a feature of Confluent platform. This is not available in open source. This shows how you can then implement your own solution or buy something where you can then solve the problems that are even harder to do. In this case, how this works is that, as discussed, you need synchronous replication between data centers, to guarantee zero data loss. However, because you get latency issues and inconsistencies, then, what we did here, we provide the option so that you can decide which topics are replicated synchronously between the brokers within a single stretch Kafka cluster. As you see in the picture on the left side, the transactional workloads, that’s what we replicate in a synchronous way, zero data loss even in case of disaster. For the not so relevant data, we replicate it asynchronously within the single Kafka cluster. In this case, here is data loss in case of disaster. Now you can decide per business case what you replicate synchronously, so you still have the performance guarantees, but still also can keep your SLAs for the critical datasets. This is now a really resilient data center. We battled tested this before the GA across the U.S., with U.S.-West, Central, and East. This is really across hundreds of miles, so not just next to each other. This is super powerful, but therefore much harder to implement, and to deploy. That’s the tradeoff of this.

On the other side, here’s another great open source example. This is Robinhood. Their mission is to democratize finance for all. What I really like about their use cases using Kafka is that they’re using it for everything. They’re using it for analytical use cases, and also for mission critical use cases. In their screenshots from their presentation, you see that they’re doing stock trading, clearing, crypto trading, messaging and push notifications. All these critical applications are running in real-time across the Kafka cluster between the different applications. This is one more point to mention again, so still too many people in my opinion think about Kafka just for data ingestion into a data lake and for analytical workloads. Kafka is ready to process transactional data in mission critical scenarios without data loss.

Another example of that is Thought Machine. This is just one more of the examples to building transactional use cases. This is a core banking platform and a cloud native way for transactional workloads. It’s running on top of Kafka, and provides the ecosystem to build core banking functionalities. Here, you see the true decoupling between the different consumers’ microservices, and not all of them need to be real-time in milliseconds, you could also easily connect a batch consumer. Or you can also easily connect Python clients so that your data scientist can use his Jupyter Notebook and replay historical data from the Kafka cluster. There are many options here. This is my key point, you can use it for analytics, but also for transactional data, and resilient use cases like core banking.

Even more interestingly, Kafka has a transaction API. That’s also what many people don’t know. The transaction API actually is intentionally called Exactly-Once Semantics. Because in distributed systems, transactions work very differently than in your traditional Oracle or IBM MQ integration where you do a two-phase commit protocol. Two-phase commit doesn’t scale, so it doesn’t work in distributed systems. You need to have another solution. I have no idea how this works under the hood. This is what the smart engineers of the Kafka community built years ago. The point is, as you see in the left side, you have a transaction API to solve the business problem to guarantee that each message that is produced once from a producer is durable and consumed exactly once from each consumer, and that’s what you need in a transaction business case. Just be aware that this exists. This has not much performance impact. This is optional, but you can use it if you have transactional workloads. If you want to have resiliency end-to-end, then you should use it, it’s much easier than removing duplicates by yourself.

Hybrid Cloud for Customer Experiences in Retail

After we talked a lot about transactional workloads, including multi-region stretch clusters, there is more resilient requirements. Now let’s talk about hybrid cloud architectures. I actually choose one of the most interesting examples, I think. This is Royal Caribbean, so cruise ships for tourists. As you can imagine, each ship has some IT data. Here, it’s a little bit more than that. They are running mission critical Kafka clusters on each ship, for doing point of sale integration, recommendations and notifications to customers, edge analytics, reservation, loyalty platform. All these things you need to do in such a business. They are running this on each ship, because each ship has very bad internet connection, and it’s very expensive. They need to have a resilient architecture at the edge for real-time data. Then, when one of the ships comes back to the harbor, then you have very good internet connection for a few hours, then you can replicate all the data into the cloud. You can do this for every ship after every tour, and in the cloud. Then you can integrate with your data lake for doing analytics. You can integrate with your CRM and loyalty platform for synchronizing the data. Then the ship goes on the next tour. This is a very exciting use case for hybrid architectures.

Here is how this looks like in more general. You have one bigger Kafka cluster. This is maybe running in the cloud, like here, or maybe in your data center, where you connect to your traditional IT systems like a CRM system, or a third party payment provider. Then also, you see in the bottom, we also integrate with the small local Kafka clusters, they are required for the resilient edge computing in the retail store or on the ship. They also communicate with the central big Kafka cluster, all of that in real-time in a reliable way, using the Kafka protocol. If you go deeper into this edge, like in this case, the retail store, or in the example from before from the ship, here, now you can do all the edge computing, whatever that is. For example, you can integrate with the point of sale and with the payment. Even if you’re disconnected from the cloud, you can do payments and sell things. Because if this doesn’t work, then your business is down. This happens in reality. All of this happens at the edge. Then if there’s good internet connectivity, of course, you’re replicated back to the cloud.

We have customers, especially now in retail in malls, where during the day, the Wi-Fi is very bad, so they can’t replicate much. During the night, when there is no customers, then they replicate all the data from the day into the cloud. This is a very common cloud infrastructure or hybrid infrastructure. Kafka is so good here, because it’s not just a messaging system, it’s also a storage system. With this storage, you truly decouple the things, so you can still do the point of sale, but you cannot push it to the cloud where your central system is. You keep it in the Kafka log, and automatically when there is Kafka connection to the cloud, then it starts replicating. It’s all built into the framework. This is how you build resilient architectures easily by leveraging the tools for that and not building that by yourself because it’s super hard.

With that, we see how omnichannel works much better also with Kafka. Again, it’s super important to understand that Kafka is a storage system that truly decouples things and stores things for later replay. Like in this case, we had a newsletter first, 90 and 60 days ago. Then, 10 and 8 days ago, we used our Car Configurator. We configured a car and changed it. Then at day zero, we’re going into the dealership, and here the salesperson already knows all historical and real-time information about me. He knows real-time because it’s a location with service with my app, I’m walking into the store. In that moment, the data about me is replayed from the Kafka log historical data. In some cases, even better, it’s advanced analytics, where the salesperson even gets a recommendation from the AI engine in the backend, recommending a specific discount because of your loyalty and because of your history, and so on. This is the power of building omnichannel, because it’s not just a messaging system, but it’s a resilient architecture within one platform where you do messaging in real-time, but also storage for replayability, and data integration and data processing for correlation at the right context at the right time.

Disconnected Edge for Safety and Security in the Public Sector

Let me go even deeper into the edge. This is about safety critical, and cybersecurity, and these kinds of use cases. There’s many examples. The first one here is, if you think about a train system. The train is on the rails all the time. Here, you also can do data processing at the edge, like in a retail store. This has a resilient architecture, because the IT is small, but it’s running on computers in the train. With that, also, it’s very efficient while it’s resilient. You don’t need to connect to the cloud all the time when you want to understand, what is the estimated time of arrival? You get this information once pushed from the cloud into the train, and each customer on the train can consume the data from a local broker. The same is when you, for example, consume any other data or when you want to do a seat reservation in the restaurant on the train. This train is completely decoupled from another train, so it can be local edge processing. Once again, because it’s not just messaging, but a complete platform around data processing and integration, you can connect all these other systems, no matter if they’re real-time, or a file based legacy integration, like in a train you often have a Windows Server running. That’s totally flexible how you do that.

Then this is also more about safety and about criticality for cyber-attacks. Here’s an example, where we have Devon Energy, in the oil and gas business. Here, on the left side, you see these small yellow boxes at the edge. This is one of our hardware partners where we have deployed Confluent platform to do edge computing in real-time. As you see in the picture, they’re collecting data, but they’re also processing the data. Because in these resilient architectures, not everything should go to the cloud, it should be processed at the edge. It’s much more cost efficient. Also, the internet connection is not perfect. You do most of the critical workloads at the edge, while you still replicate some of the aggregated data into the cloud from each edge site. This is a super powerful example about these hybrid architectures, where the edge is often disconnected and only connects from time to time. Or sometimes you really run workloads only at the edge and don’t connect them at all to the internet for cybersecurity reasons. That’s then more an air-gapped environment. That’s what we also see a lot these days.

With that, my last example is also about defense. Really critical and super interesting. That’s something where we need a resilient streaming architecture even closer to the edge. You see the command post at the top, this is where a mission critical Kafka cluster is running for doing compute and analytics around this area in the military, for the command post. Each soldier actually also has installed a very small computer, which also is running a Kafka broker, not a cluster here, it’s just a single broker, it’s good enough. This is collecting sensor data, when the soldier is moving around. Like he’s making pictures, he’s collecting other data, whatever. Even if he’s outside of the internet wide area, he can continue collecting data, because it’s stored on the Kafka broker. Then when he’s going back into the Wide Area Network, the Kafka automatically starts replicating the data into the command post. From there, you can do the analytics in the command post for this region. You can also replicate information back to Confluent Cloud in this case, where we collect data from all the different command posts to make more central decisions. With this example, I think it has shown you very well how you can design very different architectures, all of them resilient, depending on the requirements you have, on the infrastructure you have, and the SLAs you have.

Why Confluent?

Why do people work with us? Many people are using Kafka, and it’s totally fine. It’s a great open source tool. The point is, it’s more like a car engine. You should ask yourself, do you want to build your own car, or do you want to get a real car, a complete car that is safe and secure, that provides the operations, the monitoring, the connectivity, all these things. Then, that’s why people use Confluent platform, the self-managed solution. Or if you’re in the cloud, and you’re even more lucky, because there we’re providing the self-driving car level 5, which is the only truly fully managed offering of Kafka and its ecosystem in the cloud. In this case, across all clouds, including AWS, Azure, Google, and Alibaba in China. This is our business model. That’s why people come to us.

Questions and Answers

Watt: One of the things I found really interesting was the edge use cases that you have. I wanted to find out, like when you have the edge cases where you’ve got limited hardware, so you want to try and preserve the data. There’s a possibility that the edge clusters or servers won’t actually be able to connect to the cloud for quite some time. Are there any strategies for ensuring that you don’t lose data by virtue of the fact that you just run out of storage on the actual edge cluster?

Waehner: Obviously, at the edge, you typically have limited hardware. It will of course depend on the setup you have. Like in a small drone, you’re very limited. Last week, I had a call with a customer who was working with wind turbines. They actually expect that they are sometimes offline for a complete week. That really means high volumes of data. Depending on this setup, of course, you need to keep storage because without storage, you cannot keep it in a case of disaster, like disconnectivity. Therefore, of course, you have to plan depending on the use case. The other strategy, however, is then, if you really get disconnected longer than you expect, maybe not just a week in this case, but then really a month, because this is really complex to fix, sometimes in such an environment.

Then the other strategy is a little bit of workaround because even if you store data at the edge, in this case, there are still different kinds of value in data. In that case, you can embed a very simple rules engine by saying, for example, if you’re disconnected longer than a week, then only store data, which is XYZ, but not ABC. Or the other option is instead of then storing all the data, in this case now we pre-process the data at the edge and only store the aggregations of the data, or filter it out in the beginning already. Because reality is in these high volume datasets, like in a car today, you produce a few terabytes per day, and in a wind turbine even more, and in these cases, anyway, most of the data is not really relevant. There is definitely workarounds. This again, like I discussed in the beginning, always depends on the SLAs you define, how much data can you lose? If a disaster strikes, whatever that is, what do you do then? You also need to plan for that. These are some of the workarounds for that kind of disaster then.

Watt: That’s really interesting, actually, because I think sometimes people will think, I just have to have a simple strategy, like first-in, first-out, and I just lose all the last things. Actually, if you have that computing power at the edge, you can actually decide based on the context, so whether it’s a week or a month, maybe you discard different data and only send up.

How good is the transactional feature of Kafka? Have you used it in any of your clients in production?

Waehner: People are always surprised when they see this feature, actually. I’ve worked for Confluent now for five years. Five years ago, this feature was released. Shortly after I started, exactly once a month, things were introduced into Kafka, including that transaction API. The API is more powerful than most people think. You can even send events to more than a single Kafka topic. You open your [inaudible 00:38:28], you say, send messages to topic one, to topic two, to topic three. Either you send all of them end-to-end, or none of them. It’s transactional behavior, including rollback. It’s really important to understand that how this is implemented is very different from a transactional workload, from a traditional system like an IBM MQ connecting to a mainframe, or to an Oracle database. That is typically implemented with two-phase commit transactions, a very complex protocol that doesn’t scale well. In Kafka, it works very differently. For example, now you use idempotent producers, a very good design pattern in distributed architectures. End-to-end, as an end user, you don’t have to worry, it works. It’s battle tested. Many customers are using it, and so you really don’t have to worry about that. Many customers have this in production, and this is super battle tested in the last years.

Watt: We’ve got some clients as well actually, that take advantage of this. As you say, it’s been around for a little while. It has certain constraints, which you need to be aware of, but it certainly is a good feature.

Waehner: In general, data streaming is a different paradigm. If you’re in an Oracle database, you typically do one transaction, and that’s what you think about. If you talk about data streaming, you typically have come data in, then you correlate it with other data, then you do the business transaction, and you do more data. The real added value of the transactional feature is not really just about the last consumer but it’s about the end-to-end pipeline with different Kafka applications. If you use transactional behavior within this end-to-end pipeline where you have different Kafka applications in the middle, then with transactional behavior, you don’t have to check for duplicates in each of these locations, and so on. Again, because the performance impact is very low, it’s really something like only 10%. This is a huge win if you build real stream processing applications. You really also not just have to think differently about how the transactions work under the hood, but also that a good stream processing application uses very different design patterns than a normal web server and database API application.

Watt: Another question is asking about eventual consistency and real-time, and what the challenges are that you see from that perspective.

Waehner: That’s another great point because of eventual consistency. That’s, in the end, the drawback you have in such a distributed system. It depends a little bit on the application you build. The general rule of thumb is that also, you simply build applications differently. In the end, it’s ok if you do receive sometimes a message not after 5 milliseconds, but sometimes if you have a spike of p99, and it’s 100 millisecond, because if you have built the application in the right way, this is totally ok for most applications. If you really need something else, there is other patterns like that. I actually wrote a blog post on my blog about comparing the JMS API for message queues compared to Kafka. One key difference is that a JMS API, out of the box, provides a request-reply pattern. That’s something what you also can do in Kafka, but it’s done very differently. Here again, there’s examples for that. Also, if you check my blog, there’s a link to the Spring Framework, which uses the Spring JMS template that even can implement synchronous request-reply patterns with Kafka.

The key point here to understand is, don’t think about your understanding from MQ, or ESPs, or traditional transactions and try to re-implement it with Kafka, but really take a look at a design pattern on the market, like from Martin Fowler, and so on for distributed systems. There you learn, if you do it differently, you build applications in another way, but that’s how this is expected then. Then you can build the same business logic, like in the example I brought up about Robinhood. Robinhood is building the most critical transactional behavior for trading applications. That’s all running via Kafka APIs. You can do it, but you need to get it right. Therefore, the last hint for that, the earlier you get it right in the beginning, the better. I’ve seen too many customers that tried it for a year by themselves, and then they asked for review. We told them, this doesn’t look good. The recommendation is really that in the early stages, ask an expert to review the architecture, because stream processing is a different pattern than request-reply, and then transactional behavior. You really need to do it the right way from the beginning. As we all know, in software development, the later you change something or have to change it, the more costly it is.

Watt: Are there cases actually where Kafka is not the right answer? Nothing is perfect. It’s not the answer to everything. What cases is this not really applicable?

Waehner: There’s plenty of cases. It’s a great point because many people use it wrongly or try to use it wrongly. A short list of things. Don’t use it as a proxy to thousands or hundreds of thousands of clients. That’s where REST proxy is good, or MQTT is good, or similar things are good. Kafka is not for embedded systems. Safety critical applications are C, C++, or Rust. Deterministic real-time, that’s not Kafka. That’s even faster than Kafka. Also, Kafka is not, for example, an API management layer. That’s big if I use something like MuleSoft, or Apigee, or Kong these days, the streaming engines get in the direction. Today, if you want to use API management for monetization, and so on, that’s not Kafka. There is a list of things it does not do very well. It’s not built for that. I have another blog post, which is really called, “When Not to Use Apache Kafka.” It goes through this list in much more detail, because it’s super important at the beginning of your project to also understand when not to use it and how to combine it with others. It’s not competing with MQTT. It’s not competing with a REST proxy. Understand when to use the right tools here and how to combine them.

See more presentations with transcripts

MMS • Rob Zuber

Subscribe on:

Transcript

Shane Hastie: Hey, folks, QCon London is just around the corner. We’ll be back in person in London from March 27 to 29. Join senior software leaders at early adopter companies as they share how they’ve implemented emerging trends and best practices. You’ll learn from their experiences, practical techniques, and pitfalls to avoid, so you get assurance you’re adopting the right patterns and practices. Learn more at qconlondon.com. We hope to see you there.

Good day, folks. This is Shane Hastie for the InfoQ Engineering Culture podcast. Today, I’m sitting down with Rob Zuber. Rob is the CTO of CircleCI, and has been there for about eight years, I believe, Rob.

Rob Zuber: Yeah, that’s right. A little over.

Shane Hastie: Well, first of all, thank you very much for taking the time to talk to us today.

Rob Zuber: Thanks for having me. I’m excited.

Shane Hastie: And let’s start with who’s Rob.

Introductions [00:57]

Rob Zuber: So as you said, CTO of CircleCI. I’ve been with the company quite a while now, joined through an acquisition, but it was a very different company at the time, small company acquiring small company sort of thing. And so prior to that was also doing some CI and CD related activities. Specifically around mobile, was the CTO of three of us. We didn’t even really have titles. It’s kind of silly at that point, but many startups before that, so have been, I guess both a practitioner from a software development perspective, a leader. And then now as you know, we’re specifically in the space of CI/CD, trying to help organizations be great at software delivery.

I have this really fun position of thinking about how we do it, but also thinking about how everyone else does it and looking at, oh, well that’s interesting. I’d be interested to learn more from this customer. Or going and meeting customers and sharing with them and understanding their problems and talking to them about how we do things. So for me, it’s having worked in many different spaces, from telco to consumer marketplaces to embedded devices. Selling to me, I guess is the best way to think about building a product for me as the customer, both as a leader and an engineer, it’s just been a really, really fun ride. I’ve always enjoyed software and it’s so fun to just be at the core of it.

Shane Hastie: So what does it mean to be great at delivering software?

What does it mean to be great at delivering software? [02:09]

Rob Zuber: Oh, wow. At the highest level, I think being great at delivering software comes down to a couple things. And this is not necessarily what we would put in our stats or the DORA metrics or whatever, but feeling like you have confidence that you can put something out in front of your customers and you can learn from it successfully. And I don’t mean feeling like everything you build is going to be perfect and it’s going to be what customers want. Because I think that’s never true. What’s true is that you’ll get to what they want if you feel really comfortable moving quickly, trying things and putting things in front of your customers in a way that doesn’t throw off their day, but gives you real feedback about what it is they’re trying to do. Whether the thing that you’ve done most recently is helping them and really kind of steer towards and adapt towards the real customer problem.

I’ll say customers, but I’m a customer of many different platforms that this is so that I’m including myself in this. We’re sort of notoriously bad at expressing what it is that we actually, the problem we want to solve. We sort of say, “Oh, it would be really cool if you had this, or it’d be really cool if you had this,” but we don’t understand what it is we’re talking about 50% of the time.

I think designers are on the receiving end of this more than anybody. Everybody’s a designer, but the best they can come up with is, “I’ll know it when I see it.” And so feeling like you can really move quickly so you have all the confidence in terms of the quality of your software and knowing what you’re building and that you’re building it correctly, but you being able to use that to really adapt and learn and solve customer problems. If you’re not solving customer problems that are real and are important and are a pain point, and ideally doing that faster than your competition, then there’s areas where you could be better, I guess is the best way I would say.

Shane Hastie: Within CircleCI, how do you enable your teams to do this?

Building a culture of safety and learning [03:50]

Rob Zuber: One of the things that I called out in there is having the confidence that you could do that. And a lot of that stems from tooling. Unsurprisingly, we use our own tooling, we think about the customer problem, try to orient ourselves around the customer problem. That’s all important. But that confidence piece is also in how you behave. Building that model that says, that mental model that says we are trying to learn. What we’re trying to do here is we’re trying to learn, we want to put things out. Those things are going to be small. There’s not an expectation that we’re going to be perfect and know everything in advance, but rather we are learning by testing. We’re starting with a hypothesis, we’re validating that hypothesis and we’re building on top of it. And so I have my own podcast and on that, we spent the last season of it talking about learning from failure.

And I think that’s a really, really important part of this is, A, how you frame failure in the first place. There’s a big difference between, we thought we were going to be perfect and it didn’t work out versus, we’re trying an experiment, we have a hypothesis, and we know going in that we’re trying to learn. It creates a safe space to learn and move quickly. And then how you react when you actually did expect something to go well. And that might be maybe it was an operational issue or something else like that. And creating the space where the response to something going poorly, let’s say, is great. What can we learn from this? How can we be better next time? Versus how could an individual have done this thing? Sadly, this comes up probably in all engineering organizations, but you’ll see the difference. The issues come up everywhere.

The difference between you should have known better and how on Earth does the system allow you to be in a place where this could happen? Those are very, very different questions. And my belief at this point is, if you allow humans to do things in systems manually as just as an example, you’ve set yourself up to have a problem. And it’s certainly not the person who did the manual thing and finally made the mistake that everyone knew was coming sort of thing. So A, it’s that overall cultural picture. And then as you start to identify those things, you say, “Okay, cool. How do we fix the system? How do we create a system where it’s safe for us to experiment and to try?” The culture has to be safe and the systems have to be safe. You need to have risk mitigations basically to allow you to try things, whether it’s the product or shipping new capabilities.

Shane Hastie: How do you make sure that you do that when things go wrong?

Leaders need to model safety and curiosity, especially when things are going wrong [06:11]

Rob Zuber: It’s an excellent question. I think if you’re asking me personally, and there’s two levels to this, how do you do that personally and then how do you build that in an organization? I think the best thing that I can do as a leader is signal. My behaviors, unfortunately for me, carry more weight than other behaviors in the organization. If an individual engineer says, “Why’d you do that?” They’re peer individual engineers, like, “You don’t understand how hard this is. Whatever, I’m going to get on with my day.” When a CTO shows up and says, “Why would you do that?” That carries a lot more weight. So really, it’s hard, particularly if we’re talking about, we’ll call them the catastrophic failures. Hey, we were experimenting, we knew this. That’s easy. It’s easy to set up, it’s easy to structure. We knew that in advance. The catastrophic failures actually, we allowed this project to run for nine months and it turns out it was a complete waste of money or something happened and we were operating with the best information that we had at the time, but that information wasn’t very good.

And now we’ve got a big problem on our hands. Those are usually crisis moments. And I would say people’s guards are down. You’re not carefully crafting every word. And so it’s practice. That has to be the habit. And I will say it’s been a long time and nobody’s perfect. I would say I’m not perfect, but acknowledging and recognizing the impact. And so still speaking to the leader point of literally everything you say and do and taking that moment to stop and think, “Okay, what is the opportunity here? What is the learning opportunity? How do I help people get through?” Often it’s the crisis in the first place. How do I get through this situation? And then, how do we learn from it? And also, I mean if we’re talking about specifically those crisis moments, usually there’s crisis remediation and then sort of learning and acknowledging and separating those things, I think it’s easy.

I’ve seen this behavior before to show up in… We’re still solving the crisis and start asking questions, how could this ever have happened? And that is just not important right now. We need to get through that moment and then we need to make sure we have a long look to say, “How did we get ourselves into this situation? What is it about?” I mean, the reality in the situation… I’ve used examples, just this required human intervention and humans ultimately are going to make a mistake. It just happens, right? I don’t care how well you document something, how careful you ask someone to be. It doesn’t help. You need a system in place. So there’s that. But if you look at other more complex scenarios, what you’ll find is they’re complex. Someone else has been asking for something or creating a sense of urgency around something that maybe wasn’t as urgent or something was misinterpreted.

Backing your way through all of that is challenging. And I think again, for me, it’s about curiosity. If you’re truly curious, if you’re genuinely curious about what is it in this organization that’s leading to these types of scenarios, well, I guess that’s number one and then if you act. Right? If I say, “I’m open to your feedback, what’s going on?” And then you give me some feedback, I’m like, “No, no, that’s not it.” Are you going to give me more feedback? I doubt it. Right? That was just a waste of your time. And it was. You’re like, “No, I’m literally seeing this problem.” If I say, “Oh, tell me more about that, let’s sit down and think through it. What could we do differently? How would this turn out differently next time?” And then go, I mean you’re not always going to succeed, but go try to implement some change around that so it’s safe and the action gets taken, then that’s where you start to have people offer up ideas. Right?

And come out and say, “Actually, I was in this scenario where I made this mistake or whatever. I didn’t understand the…” I mean I’m using sort of specific examples, but it could be anything from I decided to launch this feature that it turns out wasn’t helpful and we invested money in it. We had a production issue where we, whatever, pick any example, it maps anywhere. We honestly, openly, curiously explore the issue, right? Sure, we can say this person took this action, but when the reason we’re asking, understanding that is, what did that person know? What could they have known? Why were they prevented from knowing that thing, et cetera. Then that’s an open, honest, just curious exploration versus I need names, whatever. And so all of that, I guess I’ll just stop at signaling. At a high level, if your leaders don’t behave in that way, the rest is a waste of time to talk about, honestly.

Shane Hastie: Looking back over your career, and we can start the progression from developer through to senior exec today, what would you share? What advice would you give others who are looking at the same pathway when they’re along that journey?

Advice for aspiring leaders [10:26]

Rob Zuber: The first piece of information I usually give is don’t take my advice. Mostly because my progression is not at all a progression. So maybe there’s something in there. I started out working in an electronics factory actually doing process engineering because that was related to what I had studied in school, which was not software. Built some software to kind of understand our systems. Enjoyed that. Went to work at a startup, late ’90s, it was the time and the rest is history. And then did a bunch of small startups. So I didn’t really have a clear progression, but it’s actually one of the things I talk about with a lot of people getting into the field or even considering what field they want to get into, which is that you don’t have to have a straight progression. You can bring value to yourself in terms of this expression from the book Range, “match quality”, the thing that you end up ultimately doing, being a really good match for the strengths that you bring to the table, the most likely way that’s going to happen is not by filling out some form before you apply to college.

It’s actually going and doing a bunch of things and saying, “Wow, I’m really good at this and I’m really bad at this.” And just being able to say, “I’m actually really not that good at this,” whatever. I mean, I was a head of business development in an organization for a brief stint. I have a ton of empathy for people who do business development and sales. I think that makes me a better executive, but I was absolutely garbage at that job. I was like, “Oh, we’re doing business development. I should probably write some code to get this done.” The idea of cold calling, trying to build relationships with business, it’s not my thing. So that’s fine, good to have done it because it helps me understand where my strengths are and it helps me understand what other people might do. And so I think a lot of people get on this, I need to be a developer and then a senior developer and then a staff and then this, and then that.

And then if I’m not moving forward, I’m moving backwards, but part of the non-linearity of my career is I’ve been a CTO for over half of it because I tried a bunch of things and then I went and started some companies and I was like, “Well, there’s no one else here to be the CTO, so I guess I’ll just do that.” And it wasn’t the same CTO job that I have now, but in particular, if you want the full spectrum and I guess a crucible of learning, starting a business and being responsible for other people and trying to build a product and trying to find product market fit, all of those things is a great way to get that learning. But it’s not for everybody. So there’s other ways you could get that learning, but really for me, I kind of ignored the path, not because I was brilliant, but because I didn’t really have a plan and I believe it paid off for me.

Shane Hastie: Circling around, why is the culture at CircleCI good?

What makes the Circle CI culture good? [12:54]

Rob Zuber: Well, I think there’s a few things that play into it. I mean, one, company was founded by people. So I’m not a founder of CircleCI. When I say we, there was three of us that were acquired in and one is now the CEO. So we stepped into an environment where we were fortunate to inherit some things. And the company was founded over 10 years ago, but culture lasts. And so we stepped into a group of people who were genuinely curious about a problem, cared deeply about their customers, and were building a product that people were excited about. The people that worked there were excited about, the customers were excited about. So there was a lot of connection between customer and the engineers building every day. Of course, in a 14-person company, which is how big it was, there’s always going to be that kind of connection.

Might be possible to not have it, but I mean, we did our own support calls and everything else, you’re much closer. But being able to tap into that and build on it and then make tweaks as you go, I think is a great thing to start from. We weren’t coming in and saying, “Oh, my gosh, we got to turn 180 degrees. And I think one of the things that I reflect on sometimes is, there were great goals culturally that we had to reimplement as we grew, but we appreciated the goals. So one of those is transparency. I think this was common at the time, I don’t know if this still happens, but it was an organization where every piece of information was shared with everybody. Everybody was on every email distribution list. Everything was just wide open. And we’ve never been concerned with that level of transparency other than the overhead.

If I give you A, the reams of data, you will selectively choose whether it’s emails or anything else, you’ll selectively choose which parts to look at because they’re interesting to you. And if you and I look at it, even exactly the same data, we’ll draw different conclusions. So it’s hard to keep alignment with raw data. It’s important for leaders, others in the organization to say, “Hey, I looked at this and this is the conclusion I drew.” Here’s the data if you’re interested in challenging my conclusions, but let’s come to agreement on the conclusions because that’s what’s going to drive how we behave now. So I think that openness and with that, the willingness to say, “You might have something really interesting to add here.” I’m not saying I have all the answers, please just take my answers. I want to build that context and share the context.

But again, if I just give you the raw data, you’re going to go do something totally different because we have different interpretations of what really matters. So let’s get together and align on that, but not because I’m just telling you, “Here’s the answer,” because I actually really value people around me challenging my interpretation. I don’t have all the answers. I’m open to asking the questions, and if no one has any suggestions, I’ll seed the conversation or try to ask better questions to get people to think a little bit more deeply. There’s always someone who knows more than me. And I mean I’m sort of making this about me, but I think the leadership team that we have built is reflective of… So Jim is our CEO, he and I worked together before CircleCI, but our perspective of how we want to work, and we’re always looking for people that will challenge us comfortably.

Like, “Oh, that’s interesting, but maybe you didn’t consider this. Because when I looked at it, I saw that this other way.” “Oh, okay, tell me about that.” There’s always someone who’s closer to the problem. There’s always someone who knows more or there’s someone coming in from the outside with a different set of experiences, maybe more experience in a particular area or just a, “Hey, we tried this at my other organization, have you considered that?” Right?

So I think that willingness and openness to that and then to go experiment, right? Say, “Cool, we have a lot of ideas, let’s try something. Now let’s not sit here and talk about all our ideas, but let’s try something and let’s know that we’re trying something.” Right? When everyone frames it that way. And again, we’re not perfect, but I think that this is a thing that we really strive for is let’s try and we might be wrong, we might be right, but when we try, we’ll learn something. We’ll have new information and then we can try something that we know to be closer to the ultimate goal or the ultimate answer. Whether that’s product design, whether it’s how we run our organization, could be anything. But I’ll happily sit down and have an open conversation with you about what I’m seeing. I’ll share the context from where I’m seeing it, and then let’s kind of see how your information and knowledge can combine with mine to come up with a great answer. That would be my favorite part. Call it that.

Shane Hastie: So when you’re bringing new people into the organization, what do you look for?

Bringing new people into the organization [17:06]

Rob Zuber: There’s a couple big things. What I’ve just described in terms of open, comfortable, bringing different perspectives to extend our view of a particular problem set or in a particular area, whatever that might be. This is me personally, but also belief that we would have in the organization. Not a huge fan of you’ve done this thing, this exact thing before, because the length of time for which that thing is going to be our problem is probably measured in hours or days. And we don’t measure hires in hours or days. If I need to understand how to do a very, very specific thing, I can hire a consultant for a week who’s going to tell me how to do it. And I got the knowledge that I need. I want to know how did you learn to do that thing? What were the circumstances in which you were trying to do something and you realized this was a great solution to that problem, and how did you quickly learn how to take advantage of this thing and make it work?

On the flip side, I guess this is the balance. If there is a known solution, I don’t want you to quickly learn a new thing just because that would be fun for you. I want you to be focused on the customer problem and I want to scope down what we do to solving the problems of our customers. If there’s a thing we’re doing that has been solved a million times before, let’s take one of those million and focus on the thing that we do that’s unique and valuable in what our customers pay us for.

But in solving that problem where we do have unique, interesting challenges, I want to know that you’re comfortable not just doing the thing that you did before, but seeing the customer problem, identifying with it, and then finding a path to learn as quickly as possible, get there, solve it, we’ll implement something new if we have to, and being able to see the difference. So I think that balance is really important. And then perspective, if everybody thinks the same way, if everybody comes from the same back, I mean, known issue but not solved, then you end up in this group think and you get the solution you’re going to get instead of actually seeing it from a bunch of different angles and being able to get usually a really novel and exciting new perspective.

Shane Hastie: So how do you deliberately get different perspectives, the diversity of viewpoints?

Deliberately bring in diverse perspectives and backgrounds [19:06]

Rob Zuber: I don’t have a great answer to this, but I’ll tell you what I’ve been thinking about lately. Because if I’m not going to be honest, then who’s going to be honest with me? So one of the things that I’ve been thinking about a lot lately after wrapping up a season on failure, now we’re talking about teams. We’re building up a season, is building teams. In software, and this is true in other departments. Obviously, I’m from an engineering background and in a company delivering products to engineers, but this is true in other departments for sure. But we deliver software as a team, right? We work as a team every day. Or I say, right, if you’re a solo open source developer, not true. But for most organizations you’re building teams and then teams of teams. And then we often hire, and by we, I mean in the industry, but we’re imperfect as well, as if every person is being measured individually, as if every person needs to come with a set of skills and capabilities and whatever that would cover the whole thing.

And so that makes it not only not okay to be an imbalanced… And I’m saying I’m totally imbalanced. I’ve already said I’m really good at some things and I’m absolutely garbage at other things. And then there’s spots in between. And if you know that and can say, “Hey, I’m really good at this. I have gaps over here. It would be great to have someone else on the team who’s strong over here.” And this isn’t, not getting to all of the ways that we could talk about diversity, but just, I am actually trying to build the strength of the team. And I have junior people on the team. I probably need a senior person on the team or two or whatever, however you think about the makeup of your team. But it’s great to have energy and enthusiasm and be super smart and whatever. But there’s pathways you don’t have to go down that someone can say, “Oh, yeah, I did that before. Here’s some additional context to consider. Would you still go that way or would you try something else?”

You could save a lot of time when someone has experienced something, balanced against we never try anything new because we just do whatever they say. And so I think that team unit is the thing that’s really, I’m trying to pick apart and think better about how to build for, because again, that’s how we do all of our work. And not everyone is into sports analogies, but I think it’s really easy to make them in this regard. You build a team of players with different strength. And I think about any team that I’m structuring in that way, meaning not everyone has to be able to do everything. The team needs to be able to perform with all of their responsibilities.

And that might be like, I understand databases and you understand front end or whatever the full scope of our responsibility is. And it might be I’m super extroverted and I love presenting our work to the rest of the organization. You’re really quiet, but you spot the avenues that we were about to go down that are just going to ruin us. We have very, very different perspectives, but as a team, we achieve everything that we… We save ourselves some really big problems and we make sure everyone knows what we’re working on. If we just hired extroverts, that would be a really weird challenge in software engineering, but we would have a whole bunch of people who were clamouring and then would be fighting over who got to do the presentation. It’s usually not the case, but I need someone or someone for whom that’s a goal, and that might be the way that I fill it in.

It’s like, oh, we don’t necessarily have all the skills here, but the manager is really good at that and we’re hiring someone who wants to grow into that and we see the potential in them. So let’s try to summarize this. The way we prevent ourselves from diversifying and getting a lot of different perspectives and different backgrounds and all of the other forms is we fashion one archetype and say all of our team members need to be this, but it’s solving the wrong problem. It’s the team that I want to be great and so I need some coverage in this area and some coverage in this area and some coverage in this area. And I can have people who are great at each of those things if I allow for them to be not as great in other areas because there are people who are great in those other areas. And the team as a unit is great.

Shane Hastie: Thank you very much. Some really interesting thoughts there. I’d like to end with, what’s the biggest challenge facing our industry today and what do we do about it?

Complexity is a big problem for software development [23:04]