Month: March 2023

Podcast: Becoming a Great Engineering Manager and Balancing Synchronous and Asynchronous Work

MMS • James Stainer

Subscribe on:

Transcript:

Shane Hastie: Good day folks, this is Shane Hastie for the InfoQ Engineering Culture podcast. Today I’m sitting down across about 13 time zones with James Stanier. James, welcome. Thanks for taking the time to talk to us today.

James Stanier: No, no worries. Just before we started recording, talking about time zone difference and how you are in a beautiful mid-summer weather at 9:00 PM and I’m 8:00 AM in the morning in freezing cold. So, the magic of the internet, huh?

Shane Hastie: It is indeed. We can be together remotely and I can experience my summer. You are the Director of Engineering at Shopify. You were also the track host at the recent QCon San Francisco conference, looking at what’s next in terms of remote and hybrid work. But before we delve into either of those, I’d like to just explore who’s James?

Introductions 01:04

James Stanier: That’s a very good question. I asked myself that on a regular basis. So, you’ve said my job title already. I work for Shopify. I’m Director of Engineering. I run a bunch of initiatives there in engineering. Also, I guess I could call myself an author. I’ve written a couple of books. The first one was called Become an Effective Software Engineering Manager and it’s with Pragmatic Programmers and that book’s done really well.

And it’s all about getting into leading a team for the first time and what are the tools that you need to do so. And then I wrote a follow-up to that book about a year ago now called Effective Remote Work, which is very similar template of how do you get into something for the first time and what are the tools that you need. But this time turning towards remote work as the thing that people are learning. And really both of those, I wanted to be field guides to that particular area.

It’s super practical, super hands-on. And I guess moving away from work even further, leave the work at the side, I’m a pretty normal human being. I like music, I like playing music, I like taking photos, I like being outside. I like going on hikes and runs and cycles and all those nice things.

Shane Hastie: Thank you very much. So, let’s start by maybe exploring the two books. What does it take to be an effective software engineering manager?

What it takes to be an effective software engineering manager 02:09

James Stanier: It’s a good question. And I think where I went with the book, where did it come from? That’s probably a better question to ask myself. So, I probably in about 2013, a while ago now, became a team lead for the first time, engineering manager, whatever you want to call it. The landscape for material is better now for sure. There’s other authors as well as myself that has written really good field guides. But back then there wasn’t a huge amount of really good material as to how to run teams practically in a modern way for software engineering folks.

So, when I started that and I was part of the startup at the time, I joined at the seed round and then we did a few venture rounds and we grew very, very quickly. I had the opportunity to step up and lead the team, great opportunity and I just didn’t know how to do it. And I think I found myself surrounded by lots of startuppy people who had never done it either. So, I didn’t have good mentors and I didn’t have lots of people in my network who were great managers, because I was fairly fresh out my PhD at the time. So, I just wrote a blog and I wrote every week on my blog stuff that I was learning, things that I was thinking about on that management topic.

And I wrote that blog for four years and that kind of manifested into the book. And really what the book was, to come back to your initial question as to what does it take? It really was writing every single week my thoughts and then those thoughts coalescing over a longer period of time into what I thought were really good tools for people to use. And I use the word tools very specifically there, because I think there’s a lot of advice out there for managers, programmers, authors, whatever, any kind of industry, that’s extremely prescriptive.

There’s lots of articles that say, “Here are the top five things you need to do to do this thing.” And often that’s very shallow. So, I was very careful to write the book in such a way that was super practical and hands-on, but didn’t prescribe too much. Instead it introduced techniques, tools, frameworks, and then kind of gave that to the reader to say, “Hey, go try this out. It could go this way, it could go that way, but here’s like the core of what I think the job is and here’s the scaffolding you should build it upon.” And as a follow on to that, I think that’s very much the framework I went with with effective remote work too.

It’s not, “You have to do this, because this is the way you do remote work.” Instead it was, “Here’s the toolbox. Go and learn how to become a carpenter with the tools,” rather than here’s how to do it.

Shane Hastie: What’s a piece of practical advice for a new software engineering manager, a new team lead in that space?

Practical advice for new managers 04:35

James Stanier: There’s a couple of things. So, one of them, which I think is probably the core principle, is that it’s fundamentally a different job and you have to get into that mindset very quickly in that it’s a new set of skills, your output is defined differently. And the quicker you can get into that mindset, the better that you will do. What I mean by that is when you’re leading a team, when you’re managing a team, your output is the output of the team for the first time. Whereas when you’re an individual contributor, you are very much focused on your output. So, how can you be as efficient as possible? How can you ship the most code or build the best architecture? But you’re very much judged by your own personal output in the context of the team. But when you are a manager, your output is the output of the team.

So, what that might mean is that from one week writing some code might be the most impactful thing that you could do for the team, because it may move them forward. But on another week, coaching people for most of your time and not writing any code whatsoever might be the thing that makes the output of the team the best. So, getting into that mindset early, and I guess as a follow on from that, being very mindful that most people who get into technology and become individual contributors have spent an awful lot of time practicing that thing. So, maybe they went to university, they went to college, maybe they hacked around with programming languages as a teenager growing up, they’ve had a lot of hours of practice into becoming the craftsmen. And often when people step into a management role for the first time at work, they’ve never really done any formal training whatsoever.

Taking a management role should not be a one-way door 06:06

So, they might not even know if they’re going to be good at it. And this isn’t necessarily just for the individual, but for the person who is the new manager and the contract that they have with their manager to know that, “Hey, you’ve never done this before.” Obviously that person doing that job for the first time should get some training, some support, some continual coaching, yes. But also having a safety net I think is super important to say, “Hey, can I do this for a certain period of time with a two-way gate where I can go backwards and go back to individual contributor if it doesn’t work out?” And that being a perfectly acceptable outcome.

And I think that’s something that I’ve done a lot with my reports over time who wanted to become managers, is try and give a safe environment to say, “Hey, this is totally new in terms of a job. So, if you want to try it for a bit and then go back, that’s cool. Not a problem whatsoever,” because there is no formal training.

Shane Hastie: Thank you very much. And let’s jump to the effective remote. Obviously that’s become so much a thing since COVID, but it was around before, but when we were chatting earlier you made the point, it’s a hygiene factor now. Organizations don’t have a choice. People want to work remotely or have a hybrid work environment. There’s still a lot of, I would say leaders in organizations, who are struggling with this.

The ability to work remotely work is a hygiene factor in technology organisations now 07:39

James Stanier: It’s true, it’s true. I mean one thing before I start talking about this that I have to catch myself or my own biases. I work in technology, you work in technology. So, when we talk about everybody, we usually mean everybody in technology. And I’m going to scope my answer to that to begin with, because often it can get a little bit tricky to answer, but there is a lot of inertia. Why I don’t know. Now I’m biased in my opinions towards remote work, because I work for a fully remote company and that was a choice of mine. It was a culture that I wanted. In terms of those that are struggling, I can’t answer why people may want to go back to the office. There are many reasons. Community, some people really like that home life separation in the physical space, all of that’s great.

Hybrid is hard and should be avoided 08:24

But the thing that’s hard is hybrid. And I think hybrid is way harder than being one or the other. Being in office, being remote, you are just doing one thing and you can focus on doing that one thing well. So, if you want to go all in on the office, have great offices, make sure that they’re always filled with people so that when you go into work, there’s lots of people to talk to and interact with, you get that office environment and that’s great. If you want to go fully remote, then that’s also great because you can focus all of your time, your effort and your money into making the remote experience really good. So, if you’re not paying for any offices which are very expensive, then you can probably afford to have a stipend that kits everyone’s home office out really well.

So, it works if you’re doing one or the other. But if you’re doing hybrid, it’s hard because how do you do two things well? And that’s not just in terms of allocation of time and money, i.e. how do you have amazing offices that are full of people, because it really sucks going into an office when there’s only three people in out of a potential 250. It just feels dead. It’s not the same. But how do you provide a world-class office experience and a world-class remote experience? And laid on top of that is that the environment that you’re in, whether you like it or not, and even if you are the most disciplined practitioner of asynchronous working and using all the tools that we have primarily rather than synchronous in person stuff, you just can’t fight the office when you’re in it.

It’s so easy to have conversations with people in the physical space and then forget that three quarters of the team just haven’t heard what you’ve talked about because you’ve just done it in person. So, it’s that layer of hybrid that’s super hard. And personally I am curious to see over the next say five years how it works out. Will those on the fence drift back to their preference and choose one only, or will we still be in this middle ground? It’s really tricky actually at the moment, isn’t it, with the economy and the recession and certainly the layoffs that we’ve seen in tech. Companies are trying to save money, and doing hybrid well and saving money at the same time is even harder. So, I’m curious as to what you think as well. Is hybrid something that you can see happening forever, or will people choose?

Shane Hastie: Hoisted on my own petard. My personal feeling, and I will confess a bias as well, I’ve worked remotely for the last seven years and loved it. And I find the opportunity to get together in person occasionally very valuable, but I wouldn’t particularly want the once a week. I would want this to be once a month, maybe every couple of months, and then have that together time, very, very focused on collaboration and building relationships and so forth. But going into an office to spend seven hours on Zoom calls with people who are not there just doesn’t work.

James Stanier: Yeah. And I think that’s the taste that people got during the pandemic that I think almost lifted the curtain on what was happening, so I can fully understand it. And I have been part of companies where everyone is in the office and there’s nobody else anywhere else around the world. I’ve been part of a small startup just in one building, one room, and that is a nice magical experience. But the reality is that most companies will grow, they’ll be successful and someone will be remote in some way. You’ll have another office in another location. It might even be on the same time zone. But you certainly, as you say, begin to find that each meeting that you’re having in a physical space starts to get more and more people who are on the end of a video call.

And the normalizing effect of the pandemic, which was that everyone started to experience those meetings with their own camera, their own microphone. Okay, we can’t fix time zones, that’s still a difficult thing, but when that communication playing field was completely leveled and everyone experienced what was a form of digital equality in some way, that the whole office thing seems like a bit of a strange charade after that, didn’t it? I mean I agree with you, at Shopify we do a few times a year have teams meet up very intentionally for things that are just easier to do in person. If you’re going to try and think about your next six-month roadmap and just jam loads of ideas together for a couple of days, yeah, it’s way easier to do that if you’re in the same room. But a couple of times a year is enough for me. Meet people, get the energy from that, but then bring it back and then focus on building things at home.

Shane Hastie: So, let’s talk about Shopify. You mentioned to me earlier 14,000 people fully remote. How do you build a great culture with 14,000 people in 14,000 locations?

Building a great culture with 14000 remote workers 12:41

James Stanier: That’s a good question. So, obviously I can’t take personal credit for everything they’ve done now. I joined in 2021, Shopify, so I’ve been there for a year and a quarter now. I think the first thing that comes to mind is intentionality. So, when Shopify went fully remote, it didn’t do so half-heartedly. It was very much, we are now fully remote, and that is how everyone is all the way from the CEO down to the individual contributor in every team. There is no physical center of gravity of the company anymore. Everyone is remote completely. And the money that was being spent on offices, a few of them remained open but were converted to meeting spaces. And I can always talk to that a bit more in a sec.

There was no office desk for anybody anymore. There was nowhere to go. You had to work from home and the money was reinvested into making sure that people had remote setups that work for them, being able to get super good quality standing desks, chairs, monitors, all the computer set up at home that you need. So, one was definitely intentionality. And then two was the tools. So, really shifting everything so that it was async friendly. And I think this was before Shopify went remote, but centralizing all the company documentation in a place that’s effectively an internal wiki and there’s a newsfeed where everything important flows by, making sure that all important meetings are streamed in such a way that you can play them back later async if they’re outside of your time zone, that they’re rebroadcast in different time zones for the important town halls so that different communities of people in different time zones can watch them together.

So, really just going all in I think was the main thing. And embracing it, seeing is exciting. And I think also mapping the experience of working there to the experience of the people that we are serving as well. So, it’s an eCommerce company, we have millions of merchants that use us around the world of all sizes of business and they are globally distributed and we are able to serve them. So, surely we can also work together collaboratively to build that product while not being in an office also.

Shane Hastie: Where does Conway’s law come into play?

Structure teams deliberately to take advantage of Conway’s law 14:38

James Stanier: I would say that in my experience, I guess for listeners that aren’t familiar with Conway’s law, it’s that the shape of the organization or the way the organization’s communication is structured reflects the way in which the software is organized and shipped. The classic sort of siloing thing. I think Conway’s law, in my experience, I mean one, it still happens when you’re remote but not so much from geographical clustering, more so on the way you organize your teams for sure. You see teams organized around a particular product and they focus completely building on that product and maybe they haven’t had the chance to stick their head up above where they’re working to go, “Oh, actually we could maybe reuse this piece of work over here that this other team’s doing.” So, the problems I think are the same whether you are remote or not remote.

And I think good team structure is the first thing you try and solve. And that’s not just individual teams, but also good group structures that are not necessarily siloed around building particular products, but instead have important metrics and missions that they contribute towards. That’s the way that we try to break it. So, we are very intentional with each team having KPIs that are meaningful. And the nice thing about working for an e-Commerce company is that everything that happens with our product is people running their business and using it to make money and to be successful. And that does make KPIs easier to ladder up because you can look at user adoption, you can look at the GMV, you can look at revenue of our merchants. So, we make sure that every team has a north star that they can go towards fairly autonomously in a way that doesn’t solve the problem by building things in a silo, and then structuring those groups so that everything ladders up to larger goals and encourages collaboration.

So, if you have a wider group which contains say eight or nine teams and they’re all working towards the same metrics, that naturally facilitates collaboration because you find the teams look to each other to go, “Hey, how can we all work together? Maybe if we are building this thing in this quarter, you could then fast follow in your team next quarter using what we’ve built so that it’s a multiplicative effect.” So, Conway’s law does happen, but I think you can fix it with metrics and team structure. And to address the siloing thing, I think communication is key there. So, this is something that we are still trying lots of different ways, trying to get better at. I don’t think there’s one solution to it, but how do you have teams communicate in such a way that you just naturally in your day-to-day get the sort of smell and the sight of other things that are interesting to you in your area?

The impact of removing all meetings from everyone’s calendars 16:39

And one thing we’ve been trying in the last few weeks was in the news that we had this kind of Shopify refactor thing in January where we had what was called the chaos monkey that was an automated script that went round and killed all the meetings in everyone’s calendar that were over three people. And this isn’t us saying that you should never have meetings, but I think there’s a leadership aspect of Shopify that says fight against silos, fight against wasting time, make sure you are spending your time on building things and then sharing what you’re building. And our teams over the last few weeks have been trying out, they’re not sprints, I’m not a huge fan of the word sprints, but sort of weekly heartbeats of teams where on Monday the teams really think about what they’d like to achieve in that week and then they think about how could that be demonstrated on Friday by recording a demo and sharing it more widely asynchronously.

And then that kind of bubbles up in the internal systems that we are using in order to share information. So, you sort of fight it by team structure, good metrics and then also just lots and lots of sharing. I can’t say that we’ve solved it, I’m not going to say that we’ve solved, it will always exist, but we’re fighting it.

Shane Hastie: So, asynchronous and your example there of removing all the meetings that are more than three people, pushing much more towards that asynchronous work. How do we keep relationships and bonds while still working very asynchronously?

Balancing synchronous and asynchronous work 17:54

James Stanier: I guess I’ll append onto your description of the meeting killing thing there, that we again haven’t said that you can’t ever have a meeting ever again, but I think there is great power in maybe once a year just setting fire to your calendar because it just accumulates cruft and you accumulate status meetings or group meetings that maybe once were relevant, but then you’ve just kept doing them forever because they’re just in your calendar. So, meetings are being booked back in, but we are asking people to be very, very intentional as to why they exist and also to just kill them when that importance does diminish and just kill them. The asynchronous meaning collaboration is difficult and personal bonds is difficult, is just truth. You can’t change that. And when we work asynchronously, typically the unit that is synchronous is still the team at Shopify.

So, we do, at least in my area, in the areas around me, we do structure teams so that they have a lot of time zone overlap. So, if you are on a team, your manager, your peers, usually only one to two hours difference. So, if you’re thinking about that in terms of continents, East Coast, West Coast of the US and Canada, Europe shares a fairly wide overlap in time zone. So, we still have teams so that they can be online at the same time most of the day. And that’s great, because there’s things that we do a lot of like pair programming, collaborative design, all the general facilitated group activities. It’s just way easier synchronous. But within a larger division of many teams, the boundaries between those synchronous teams is asynchronous, and that’s where it comes in as to sort of the information sharing between teams. If you are a manager, you don’t need as much overlap as your own manager or the other peer managers and your team, because you’re mostly writing to each other anyway. So, within teams is synchronous, between teams, those edges that the communication flows along are asynchronous.

Shane Hastie: How do you tackle re-teaming? How often do you reform teams? How do you move people around?

Be deliberate about reteaming 19:49

James Stanier:There’s a few things there. So, typically we find that people don’t want to move team too much themselves. And I can go onto my reasoning behind that in a second. Usually, and this isn’t actually a Shopify thing, this is now my own personal opinion. I think that reorgs are healthy because the business changes, the business needs change and you want to align teams to the business needs. So, you have to change the teams. It happens. Making sure that every team and area has clear metrics makes re-teaming way easier, because the purpose of changing the teams then is self-evident. Where I’ve optimized in the past at previous companies is the managers of teams stay fairly solid. So, if there’s some particular product, we try and keep the same manager if they want to be on it for a long period of time, but optimize so that people can self-select onto different teams if they want to over time.

Recently we went through an exercise where we sort of effectively just sent out a survey and said, “Are you happy on your current team? Would you prefer to work somewhere else?” And then we can get all that data in and then you can stack it up side by side with where is the business need for this year? And then you can take the overlap of those two and you go, “Okay, well, we need to rejig the teams a little bit, but also we have a whole bunch of people who would very willingly do something different,” which just makes the whole thing a lot easier. And I say every 18 months or so, it’s fairly healthy to re-team. And I think if you have structured your teams previously, by no means am I saying that we do things perfectly, but if you have structured teams well in the first place that they are very metrics driven rather than very siloed around particular parts of the architecture or particular products, then re-teaming is way easier.

Because I think people just naturally gravitate towards making an impact as opposed to naturally gravitating towards clinging onto their products. But I think a good mixture is every 18 months, try and do it intentionally, rip the bandaid off, be very clear in your communication, be bold, get it done. Do it in such a way that you think will last for at least 18 months, and also add in an element of self selection if you can, because I think that also has a really positive effect on people’s retention is that if you have the ability to sort of say, “Hey, I’d love to go and work in a different team,” and you can actually go and do it, is a net win for everybody.

Shane Hastie: And onboarding, this is one of the things that we hear of the horror stories about onboarding in person is tough, onboarding in remote is fraught with difficulty, or is it?

Onboarding new people in remote teams 22:13

James Stanier: It’s still challenging for sure. Onboarding is just hard full stop. Remote onboarding is challenging, but I think it has some benefits in the sense that what you really want in a good onboarding experience is some structure and some space and to make it really clear what you need to learn, and then have a really good handoff with your team at the end. I think the Shopify onboarding that I did in the program that we still run is excellent. And I’ve written about this and spoken about it before, which means I can tell you about it. And effectively when people join the company, it’s a four-week period of onboarding where we are very open and say, “Hey, this is your onboarding time.” That removes the stress of thinking that on day one you’re going to join the company and then we’re going to throw you into a team, give you a hazing with a high priority bug and then you stress out.

Instead we realize that onboarding somebody well, you only get one chance and that chance is when they join. So, take advantage of it, design a really good program, think about the funnel of the general things you need to know about the company and the mission and the values and then taper it down to their role over time. And you said remote? Well, Shopify is reasonably large now and we do fortunately have the staff that can facilitate these things, and our program is facilitated by people in our knowledge management team and they start in week one with what is quite passive learning for the new joiner, where in your cohort because everyone joins at the same time every month. So, you’ll have a cohort of people who are also new, which is quite nice because you can all be on Slack together, you can all be new together, you can sort of be protected together in a safe space.

And the first week is all about the company and the mission and the values, and you pretty much in your cohort receive information. So, you watch some videos together, you start using the product together, you start understanding what it is that we do. You start understand who are the people that use our software and what makes them successful. And then in your first week you also ship something into production, which is quite fun. You get to use our tooling and get something out there, but as the onboarding progresses, it becomes more active and it becomes more specialized. So, week one, you could have any employee whatsoever go through that week in any department.

So, you get to, if you think about sort of reuse that, that’s a really good part of your program. So, for everyone that week one funnel is super relevant. The week two funnel is also super relevant for everybody, because week two is all about understanding what the periphery of the business looks like. So, answering support tickets paired up with support agents, listening in to support calls and understanding, “Okay, so when someone has a problem and when someone has a difficulty, what actually happens and what kind of things do they have problems with?” Also in week two you build your own store, like you’re given a brief to pretend you are a merchant who is in Mexico selling beer and you have to work out how to sell it in different jurisdictions using different taxes and real life scenarios where you get to not just see the happy path through the product, but also the things that are really challenging for some people.

And you really get into a merchant’s mindset in week two. So, the end of week two, you’ve understood the company in week one, week two, you know how to use the product at a high level when you’ve started to experience like what works really well, what’s really challenging for merchants. Just in general, taxes is very challenging. And then we taper off in week three and four to more craft specific things. So, if you’re in engineering, in week three you get access to a whole bunch of learning materials around our architecture, you do group work, you look through the code, you get more hands on and you understand how everything is put together. And then week four is purposefully light, because in week four we effectively have a whole bunch of self-serve learning that you can do.

So, we know that everyone that we hire might not have coded in Ruby, or maybe hasn’t done a particular React framework before. So, we make it so that if you want, you can then spend half a day and go into a crash course in any of those things. And we have some internal materials, internal courses, so that really at the end of that fourth week you should be up to speed on what we do, our mission and values, our culture, understand what our merchants do, you understand the big box and arrow diagram of how things are put together, and you also managed to get your hands a little bit dirty in a crash course as to anything that you want to skill up on.

And then there’s that manager handoff, and that’s where you exit the formal program and your manager, and every team will be different here, will have put together here’s the welcome to our team. Then you go into the Slack channels. And that’s one thing that’s worth noting, is that we purposefully make it so that our onboarding staff don’t go into their teams Slack channels and emailing lists and rituals immediately. That’s sort of a thing that happens in the fourth week gradually. So, yes, you’ll meet your manager in the first week, you’ll have a quick one-to-one, just to say hi. But really that one-to-one is all about, “Hey, I’m here, we can talk, let me know what you need at any time, but the most important thing is that you do the onboarding and you focus your attention there and you enjoy it.”

Because it is a sort of a special fun time where the people who were in my onboarding cohort when I joined the company, I still talk to them every day. You make a really nice bond with that cohort that you go through in the first month, and that gives you some hooks into different parts of the business just by default as well, which is really nice.

Shane Hastie: It’s a really, really important topic, and that’s a great model. Thank you very much for sharing that. Reflecting back to Qcon San Francisco, you were the track host for the Hybrid and Remote: What Next track. What stood out for you from that track?

Where is hybrid and remote work heading in the future? 27:41

James Stanier: One is it’s an area where there still is a huge amount of uncertainty, not just uncertainty about what the future holds for somebody as a worker in the industry to think where is this going? But I think also a huge amount of uncertainty from leaders as to what they should be doing. Not just how they implement remote or how they implement hybrid, but just where is this going. I think we’ve all seen how quick to react humans can be to different situations and I think it’s extremely hard to predict what people are going to want in the next five years and also how to prepare. And as we said at the beginning of this conversation, it’s so much easier going fully remote or fully office-based. You can imagine in the future that in the same way that remote was niche for a particular type of worker, say 15 years ago, and there was only a tiny handful of companies that did it, but those that did attracted a certain type of person who really thrived.

You wonder whether physical office-based work may become the niche of the future where there are just some people who love working in offices and companies may design themselves in such a way that actually in-person collaboration is all they really do. That’s the most important thing. And if that’s for you, then you will find one of these companies to work for. But I think the reality is that any company succeeding means growing geographically to a point where you have to deal with different locations, different time zones, different cultures, and you begin to butt up against the challenges of remote, whether you like it or not. So, I think also the other thing that people are trying to think about at the moment is what are the core principles of remote work that still also apply to office work, to hybrid work? And that that’s partially what I got into with the book was like, yes, it was pitched as a book about doing remote work well, but I think what I did was write it in such a way that everyone is remote to each other in some way, even if you are in the same time zone, even if you’re in the same building, you might be on a different floor.

And the primary way in which we are communicating with each other is digital in terms of digital artifacts, digital communication, and all of our code is in GitHub, isn’t it? It’s all asynchronously, contributable towards and work on-able. So, what are the core tenets of doing distributed work well? I think is what is on everyone’s mind at the moment and whether or not that manifests in the future as remote, hybrid, not remote, I think we’ll see where people vote with their feet. But maybe we can use remote as an opportunity to really think about the way in which we communicate intentionally in a way that’s going to work now and into the future and just reset our habits a bit. Because I think doing remote well and using our tools well benefits you regardless of where you are working. And I think that’s what we’re trying to extract from it at the moment. What are the best practices that mean that we could be smarter and more efficient together in the future?

Shane Hastie: James, thank you very, very much for taking the time to talk to us. If people want to continue the conversation, where do they find you?

James Stanier: Twitter is probably the easiest way to contact me and it’s @jstanier as one string. And if you want to read my blog, it’s theengineeringmanager.com and that’s a many year archive of thoughts about remote, management, software, so on. So, those two places are probably the two main vectors that you can get me on. And please let me know if you have any thoughts, opinions, if you disagree with me, I’d love to talk. So, do get in touch.

Shane Hastie: Thank you so much.

Mentioned

.

From this page you also have access to our recorded show notes. They all have clickable links that will take you directly to that part of the audio.

MMS • Steef-Jan Wiggers

Recently, the Dapr maintainers released V1.10 of Distributed Application Runtime (Dapr), a developer framework for building cloud-native applications, making it easier to run multiple microservices on Kubernetes and interact with external state stores/databases, secret stores, pub/sub-brokers, and other cloud services and self-hosted solutions.

Microsoft introduced Dapr in October 2019 and has had several releases since its production-ready release V1.0 in February 2021. Since the V1.0 release, Dapr has been approved by Cloud Native Computing Foundation (CNCF) as an incubation project, received approximately 43 new components, and has over 2500 community contributors.

The Dapr project continued releasing new versions, with the V1.10 release introducing several new features, improvements, bug fixes, and performance enhancements such as:

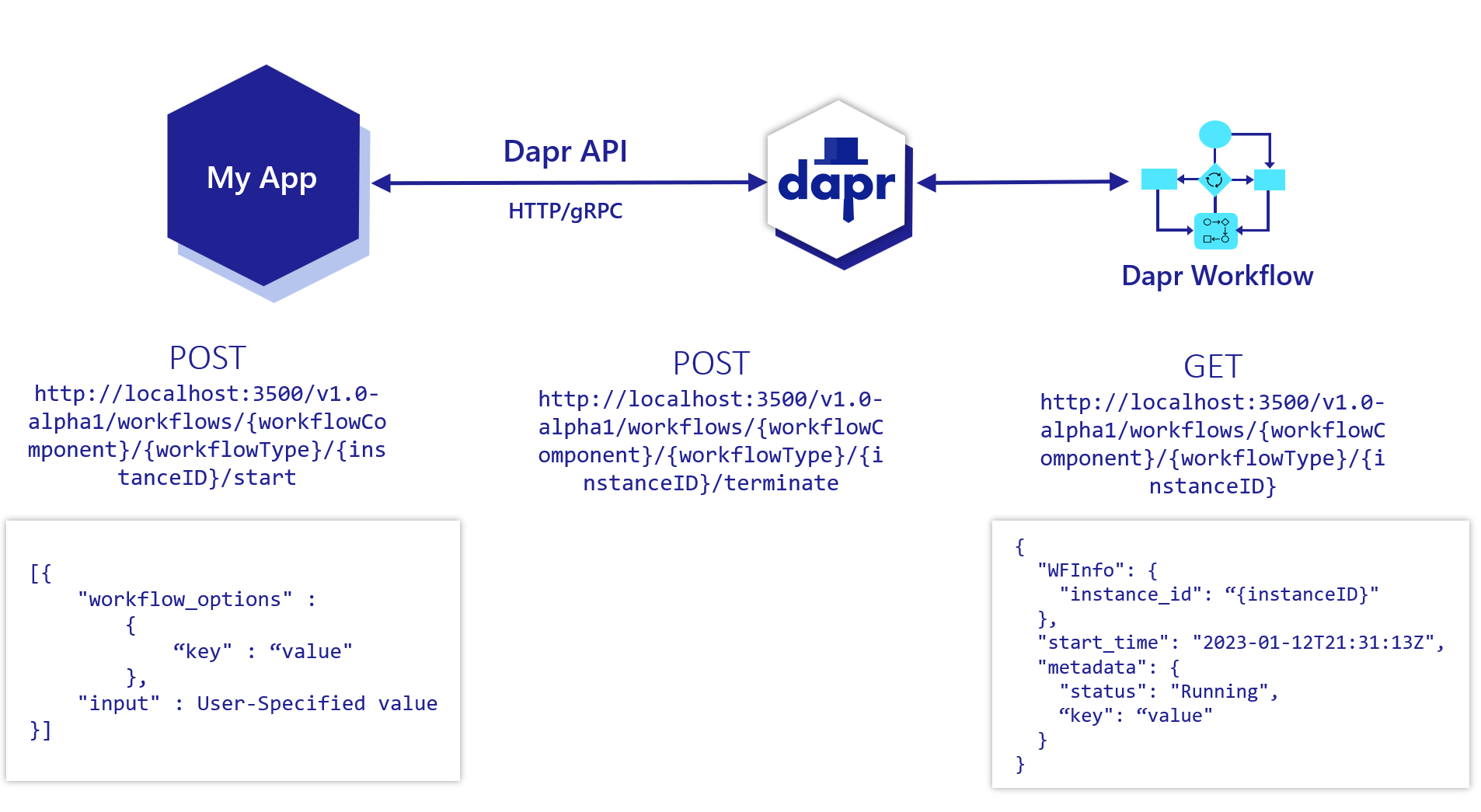

- Dapr Workflow: a new alpha feature that allows developers to orchestrate complex workflows using Dapr building blocks and components. For example, a workflow can provide task chaining – chain together successive application operations where outputs of one step are passed as the input to the next step. In addition, a quickstart for workflows is available.

Source: https://v1-10.docs.dapr.io/developing-applications/building-blocks/workflow/workflow-overview

- Stable resiliency policies: Dapr provides a capability for defining and applying fault tolerance resiliency policies (retries/back-offs, timeouts, and circuit breakers). The resiliency policies, first introduced in the v1.7.0 release, are now stable and production ready and apply to all building block APIs, such as Pub/Sub, State Management, Bindings, and Actors.

- Multi-App Run template: a new template for the Dapr CLI that allows developers to run multiple Dapr applications with a single command, simplifying the local development and testing experience.

- Pluggable component SDK: a new SDK that allows developers to create custom components for Dapr, such as state stores, pub/sub systems, and bindings.

- Publish and subscribe to bulk messages: a new feature that enables Dapr applications to publish or subscribe to multiple messages in a single request, improving the throughput and efficiency of pub/sub scenarios.

In a stack overflow thread, a respondent, nedad, commented on the performance of the Bulk-Publish API:

It turns out that the Dapr team is already working on a Bulk-Publish API, which gives much better performance. I was able to increase it to 9000 messages/sec with a batch-size of 100.

The Dapr 1.10.0 release also includes many fixes and improvements in the core runtime and components, such as support for multiple namespaces in Kubernetes, gRPC reflection in Dapr sidecar, custom headers in HTTP bindings, Azure Key Vault with managed identities and improved logging and error handling.

Marc Duiker, a Microsoft MVP and senior developer advocate at Diagrid, told InfoQ:

Dapr is excellent when you need to build event-driven applications. It provides a standardized set of API building blocks that allow developers to focus on their business logic. With release 1.10, many components have graduated to the stable level, ready to be used in production workloads. In addition, new features and components have been added that expand the functionality of Dapr.

In addition, he added:

Dapr Workflow is a new building block allowing developers to write long-running and resilient workflows in code. Improvements to the local development experience have also been made, like Multi-App Run, which simplifies running microservice-based applications locally by starting multiple apps with just one command. Both Workflow and Multi-App Run are alpha/preview features. Wait to use them in production, but try them out, either locally or in a test environment, and give feedback to the Dapr team, so they can keep improving the project.

InfoQ also spoke to Nick Greenfield, a Dapr maintainer who was able to comment on the project roadmap decided by project maintainers and the STC (Steering Technical Committee):

Dapr will continue investing in building blocks that help developers implement and solve common distributed system challenges. Furthermore, two open proposals for introducing two new Dapr building blocks are Cryptography API and Document Store API. Additionally, the Dapr project will continue to invest in building the community and look to expand the STC, as well as project maintainers and approvers.

Lastly, the Dapr 1.10.0 release is available for download from the Dapr GitHub repository or via the Dapr CLI. Furthermore, the Dapr documentation provides detailed instructions on how to install, upgrade, and use Dapr.

MMS • Renato Losio

AWS recently introduced global condition context keys to restrict the usage of EC2 instance credentials to the instance itself. The new keys allow the creation of policies that can limit the use of role credentials to only the location from where they originated, reducing the risk of credential exfiltration.

The two new keys are aws:EC2InstanceSourceVPC, a condition key that contains the VPC ID to which an EC2 instance is deployed, and aws:EC2InstanceSourcePrivateIPv4, a condition key that contains the primary IPv4 address of the EC2 instance.

IAM roles for EC2 are used extensively on AWS, allowing applications to make API requests without managing the security credentials but the temporary credentials were at risk of credential sprawls. Sébastien Stormacq, principal developer advocate at AWS, recently explained the risk and showed how to use GuardDuty to detect EC2 credential exfiltration:

Imagine that your application running on the EC2 instance is compromised and a malicious actor managed to access the instance’s metadata service. The malicious actor would extract the credentials. These credentials have the permissions you defined in the IAM role attached to the instance. Depending on your application, attackers might have the possibility to exfiltrate data from S3 or DynamoDB, start or terminate EC2 instances, or even create new IAM users or roles.

Until now, developers had to hard-code the VPC IDs and/or IP addresses of the roles in the role policy or VPC Endpoint policy to restrict the network location where these credentials could be used. Liam Wadman, solutions architect at AWS, and Josh Levinson, senior product manager at AWS, explain:

By using the two new credential-relative condition keys with the existing network path-relative aws:SourceVPC and aws:VpcSourceIP condition keys, you can create SCPs to help ensure that credentials for EC2 instances are only used from the EC2 instances to which they were issued. By writing policies that compare the two sets of dynamic values, you can configure your environment such that requests signed with an EC2 instance credential are denied if they are used anywhere other than the EC2 instance to which they were issued.

{

"Statement": [

{

"Effect": "Deny",

"Action": "*",

"Resource": "*",

"Condition": {

"StringNotEquals": {

"aws:ec2InstanceSourceVPC": "${aws:SourceVpc}"

},

"Null": {

"ec2:SourceInstanceARN": "false"

},

"BoolIfExists": {

"aws:ViaAWSService": "false"

}

}

}

]

}

Example of a deny policy using the ec2InstanceSourceVPC key.

While many developers like the new context keys, Liz Fong-Jones, field CTO at Honeycomb.io, comments:

I’m shocked this wasn’t automatically default-on enforced before and now looking at how quickly, exactly, we can do the opt-in for this across all our IAM policies.

In the “fixing AWS temporary credential sprawl the messy way” article, Seshubabu Pasam, CTO at Ariksa, agrees:

Unless you are on top of every new announcement from AWS, this is not going to surface to common users for a while. Completely misses the mark on secure by default. Like a lot of new security-related announcements, this and other security features are completely useless even in a new account because it is not on by default.

The new condition keys are available in all AWS regions.

Global NoSQL Database Market Size Business Growth Statistics and Key Players Insights 2023-2030

MMS • RSS

New Jersey, United States – Our report on the Global NoSQL Database market provides a comprehensive overview of the industry, with detailed information on the current market trends, market size, and forecasts. It contains market-leading insight into the key drivers of the segment, and provides an in-depth examination of the most important factors influencing the performance of major companies in the space, including market entry and exit strategies, key acquisitions and divestitures, technological advancements, and regulatory changes.

Furthermore, the NoSQL Database market report provides a thorough analysis of the competitive landscape, including detailed company profiles and market share analysis. It also covers the regional and segment-specific growth prospects, comprehensive information on the latest product and service launches, extensive and insightful insights into the current and future market trends, and much more. Thanks to our reliable and comprehensive research, companies can make informed decisions about the best investments to maximize the growth potential of their portfolios in the coming years.

Get Full PDF Sample Copy of Report: (Including Full TOC, List of Tables & Figures, Chart) @ https://www.verifiedmarketresearch.com/download-sample/?rid=129411

Key Players Mentioned in the Global NoSQL Database Market Research Report:

In this section of the report, the Global NoSQL Database Market focuses on the major players that are operating in the market and the competitive landscape present in the market. The Global NoSQL Database report includes a list of initiatives taken by the companies in the past years along with the ones, which are likely to happen in the coming years. Analysts have also made a note of their expansion plans for the near future, financial analysis of these companies, and their research and development activities. This research report includes a complete dashboard view of the Global NoSQL Database market, which helps the readers to view in-depth knowledge about the report.

Objectivity Inc, Neo Technology Inc, MongoDB Inc, MarkLogic Corporation, Google LLC, Couchbase Inc, Microsoft Corporation, DataStax Inc, Amazon Web Services Inc & Aerospike Inc.

Global NoSQL Database Market Segmentation:

NoSQL Database Market, By Type

• Graph Database

• Column Based Store

• Document Database

• Key-Value Store

NoSQL Database Market, By Application

• Web Apps

• Data Analytics

• Mobile Apps

• Metadata Store

• Cache Memory

• Others

NoSQL Database Market, By Industry Vertical

• Retail

• Gaming

• IT

• Others

For a better understanding of the market, analysts have segmented the Global NoSQL Database market based on application, type, and region. Each segment provides a clear picture of the aspects that are likely to drive it and the ones expected to restrain it. The segment-wise explanation allows the reader to get access to particular updates about the Global NoSQL Database market. Evolving environmental concerns, changing political scenarios, and differing approaches by the government towards regulatory reforms have also been mentioned in the Global NoSQL Database research report.

In this chapter of the Global NoSQL Database Market report, the researchers have explored the various regions that are expected to witness fruitful developments and make serious contributions to the market’s burgeoning growth. Along with general statistical information, the Global NoSQL Database Market report has provided data of each region with respect to its revenue, productions, and presence of major manufacturers. The major regions which are covered in the Global NoSQL Database Market report includes North America, Europe, Central and South America, Asia Pacific, South Asia, the Middle East and Africa, GCC countries, and others.

Inquire for a Discount on this Premium Report @ https://www.verifiedmarketresearch.com/ask-for-discount/?rid=129411

What to Expect in Our Report?

(1) A complete section of the Global NoSQL Database market report is dedicated for market dynamics, which include influence factors, market drivers, challenges, opportunities, and trends.

(2) Another broad section of the research study is reserved for regional analysis of the Global NoSQL Database market where important regions and countries are assessed for their growth potential, consumption, market share, and other vital factors indicating their market growth.

(3) Players can use the competitive analysis provided in the report to build new strategies or fine-tune their existing ones to rise above market challenges and increase their share of the Global NoSQL Database market.

(4) The report also discusses competitive situation and trends and sheds light on company expansions and merger and acquisition taking place in the Global NoSQL Database market. Moreover, it brings to light the market concentration rate and market shares of top three and five players.

(5) Readers are provided with findings and conclusion of the research study provided in the Global NoSQL Database Market report.

Key Questions Answered in the Report:

(1) What are the growth opportunities for the new entrants in the Global NoSQL Database industry?

(2) Who are the leading players functioning in the Global NoSQL Database marketplace?

(3) What are the key strategies participants are likely to adopt to increase their share in the Global NoSQL Database industry?

(4) What is the competitive situation in the Global NoSQL Database market?

(5) What are the emerging trends that may influence the Global NoSQL Database market growth?

(6) Which product type segment will exhibit high CAGR in future?

(7) Which application segment will grab a handsome share in the Global NoSQL Database industry?

(8) Which region is lucrative for the manufacturers?

For More Information or Query or Customization Before Buying, Visit @ https://www.verifiedmarketresearch.com/product/nosql-database-market/

About Us: Verified Market Research®

Verified Market Research® is a leading Global Research and Consulting firm that has been providing advanced analytical research solutions, custom consulting and in-depth data analysis for 10+ years to individuals and companies alike that are looking for accurate, reliable and up to date research data and technical consulting. We offer insights into strategic and growth analyses, Data necessary to achieve corporate goals and help make critical revenue decisions.

Our research studies help our clients make superior data-driven decisions, understand market forecast, capitalize on future opportunities and optimize efficiency by working as their partner to deliver accurate and valuable information. The industries we cover span over a large spectrum including Technology, Chemicals, Manufacturing, Energy, Food and Beverages, Automotive, Robotics, Packaging, Construction, Mining & Gas. Etc.

We, at Verified Market Research, assist in understanding holistic market indicating factors and most current and future market trends. Our analysts, with their high expertise in data gathering and governance, utilize industry techniques to collate and examine data at all stages. They are trained to combine modern data collection techniques, superior research methodology, subject expertise and years of collective experience to produce informative and accurate research.

Having serviced over 5000+ clients, we have provided reliable market research services to more than 100 Global Fortune 500 companies such as Amazon, Dell, IBM, Shell, Exxon Mobil, General Electric, Siemens, Microsoft, Sony and Hitachi. We have co-consulted with some of the world’s leading consulting firms like McKinsey & Company, Boston Consulting Group, Bain and Company for custom research and consulting projects for businesses worldwide.

Contact us:

Mr. Edwyne Fernandes

Verified Market Research®

US: +1 (650)-781-4080

UK: +44 (753)-715-0008

APAC: +61 (488)-85-9400

US Toll-Free: +1 (800)-782-1768

Email: sales@verifiedmarketresearch.com

Website:- https://www.verifiedmarketresearch.com/

MMS • Oghenevwede Emeni

Microsoft has released the TypeScript 5.0 beta version, which aims to simplify, speed up and reduce the size of TypeScript. The beta release incorporates new decorators standards that enable users to customize classes and their members in a reusable manner.

One of the key highlights of this beta release is the incorporation of new decorators standards that enable users to customize classes and their members in a reusable manner. Daniel Rosenwasser, program manager of TypeScript, wrote in a recent post on the Microsoft blog that these experimental decorators have been incredibly useful, but they modeled an older version of the decorators proposal and always required an opt-in compiler flag called --experimentalDecorators. Rosenwasser stated that developers who have been using “--experimentalDecorators” are already aware that, in the past, any attempt to utilize decorators in TypeScript without enabling this flag would result in an error message.

Rosenwasser described the long-standing oddities around enums in TypeScript and how the beta release of TypeScript 5.0 has cleaned up some of these problems while reducing the number of concepts needed to understand the various kinds of enums one can declare.

TypeScript is an open-source programming language and a superset of JavaScript, which means it builds upon and extends the functionality of JavaScript. It was developed and is maintained by Microsoft.

The new decorators proposal in TypeScript 5.0 allows developers to write cleaner and more maintainable code with the added benefit of being able to customize classes and their members in a reusable manner. While the new decorators proposal is incompatible with --emitDecoratorMetadata and does not support parameter decoration, Microsoft anticipates that future ECMAScript proposals may be able to address these limitations.

In addition to the new decorators proposal, TypeScript 5.0 includes several improvements such as more precise type-checking for parameter decorators in constructors, const annotations, and the ability to allow the extends field to take multiple entries. It also includes a new module resolution option in TS, performance enhancements, and exhaustive switch/case completions.

TypeScript targets ECMAScript 2018, which means that Node.js users must have a minimum version of Node.js 10.

To start using the beta version, users can obtain it through NuGet or use the npm command:

npm install typescript@beta

MMS • Steef-Jan Wiggers

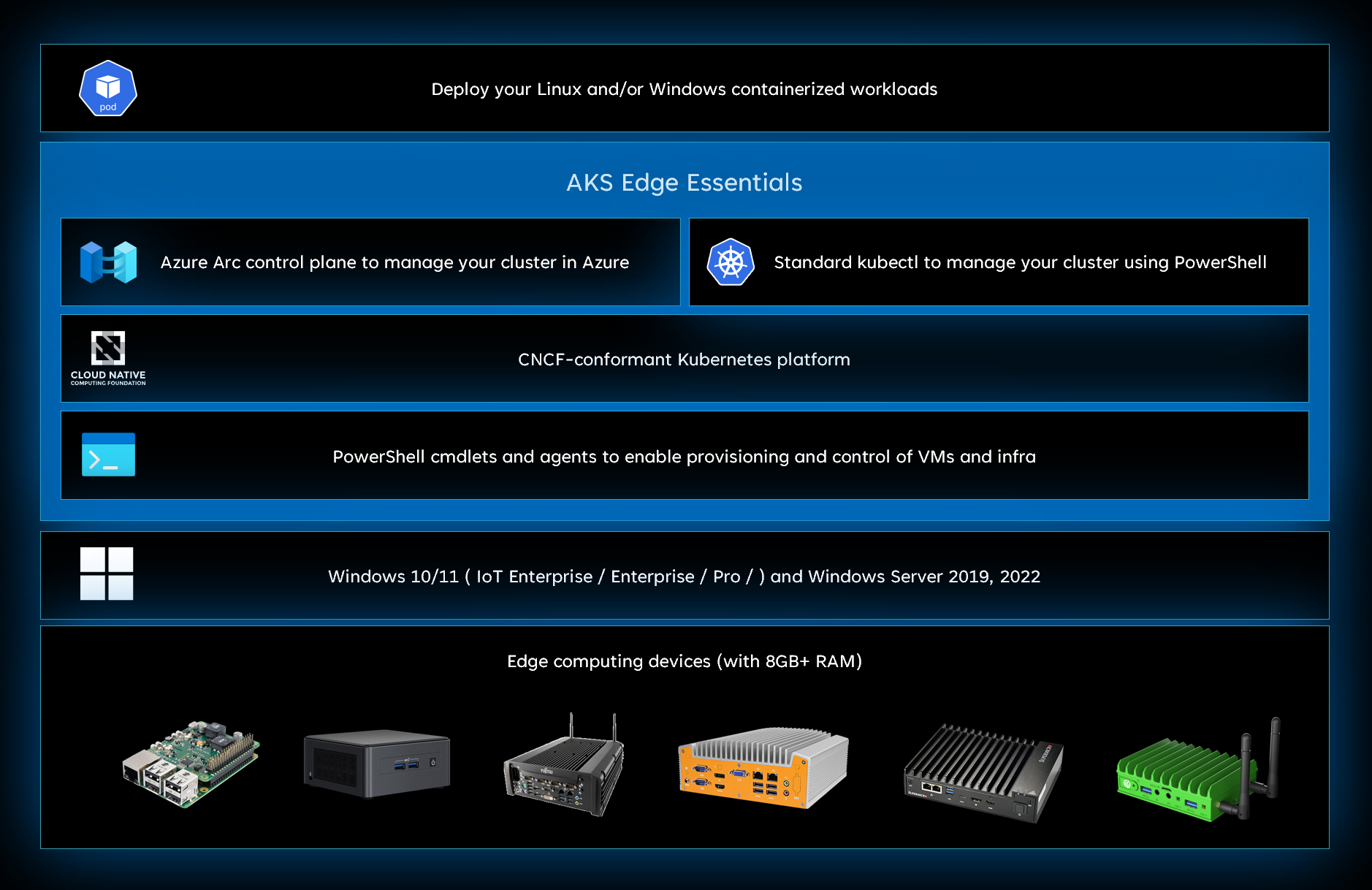

Microsoft recently announced the general availability release of AKS Edge Essentials, a new Azure Kubernetes Service (AKS) offering designed to simplify edge computing for developers and IT professionals.

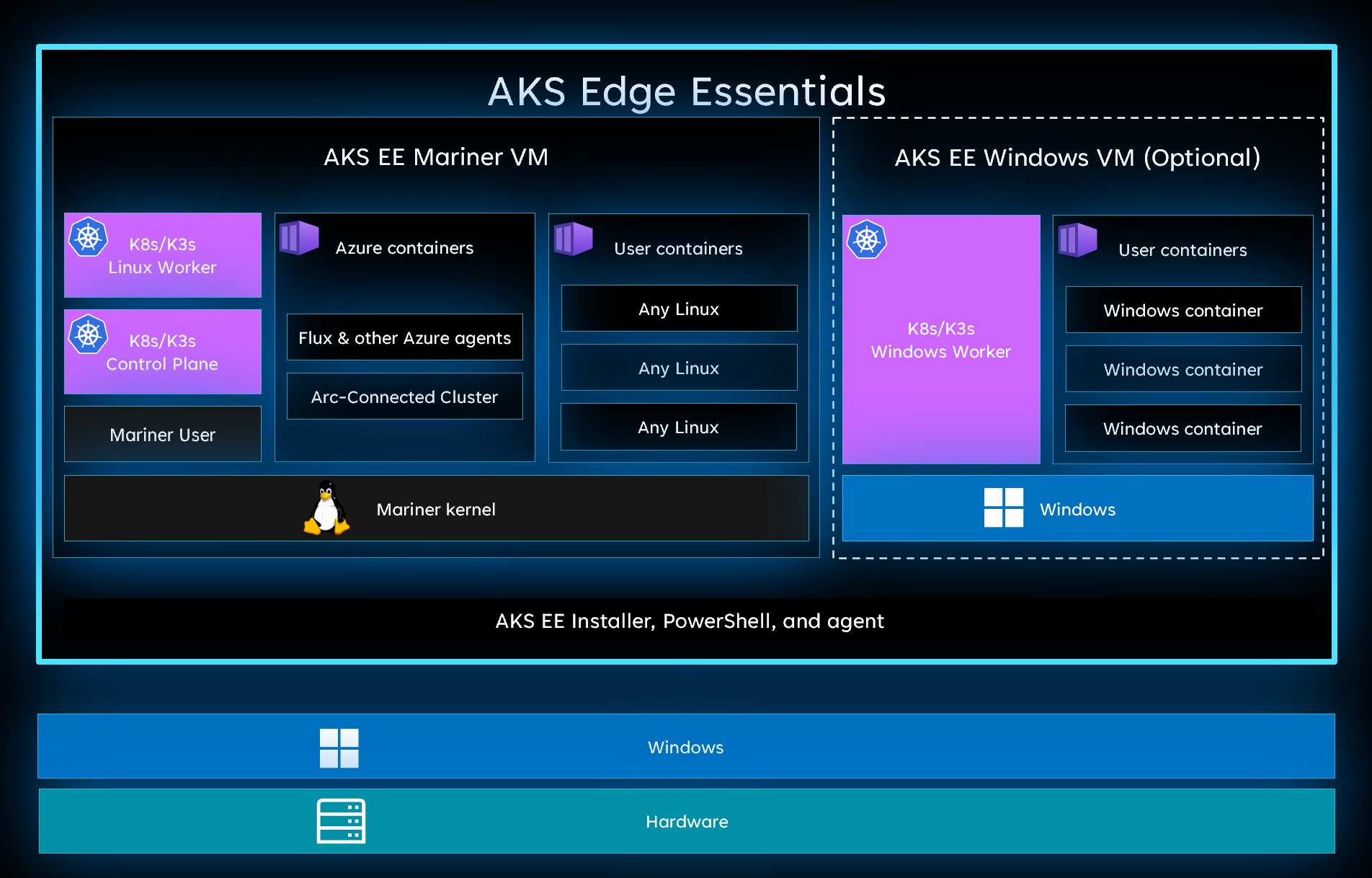

AKS Edge Essentials is a lightweight, CNCF-conformant K8S (Kubernetes) and K3S (Lightweight Kubernetes) distribution supported and managed by Microsoft. It simplifies the process of Kubernetes setup by providing PowerShell scripts and cmdlets to set up Kubernetes and create single or multi-node Kubernetes clusters. In addition, it fully supports both Linux-based and Windows-based containers that can be easily deployed at the edge on any Windows PC class device with Windows 10 and 11 IoT Enterprise, Enterprise, and Pro.

After setting up on-premises Kubernetes using AKS Edge Essentials and creating a cluster, customers can manage their infrastructure through the Azure portal. In addition, various Azure Arc-enabled services like Azure policy, Azure monitor, and Azure ML services enable them to ensure compliance, monitor their clusters, and run cloud services on edge clusters.

Source: https://learn.microsoft.com/en-us/azure/aks/hybrid/aks-edge-overview

When customers create an AKS Edge Essentials deployment, AKS Edge Essentials creates a virtual machine for each deployed node. In addition, ASK Edge Essentials manages the virtual machines’ lifecycle, configuration, and updates. Deployments can only create one Linux VM on a given host machine, acting as both the control plane node and as a worker node based on the customers’ deployment needs. Optionally, a Windows node can be created if customers need to deploy Windows containers.

Source: https://learn.microsoft.com/en-us/azure/aks/hybrid/aks-edge-concept-clusters-nodes

With AKS Edge Essentials, Microsoft will support and manage the entire stack, from hardware drivers to cloud services and everything in between. In addition, customers can choose the 10-year Long-Term Servicing Channel (LTSC) version of the Windows IoT OS, ensuring long-term stability with critical and security fixes.

Furthermore, Microsoft has partnered with Lenovo, Scalers AI, Arrow, Anicca Data, and Tata Consultancy Services (TCS) to enable AKS Edge Essentials across more devices and develop new solutions that allow customers to get started with AKS Edge Essentials quickly.

Jason Farmer, principal program manager, Azure Edge + Platform, told InfoQ:

With this release, customers can now run a fully Microsoft-supported, simple, and managed Kubernetes distribution on Windows. Now, any workload built for Kubernetes can be run and securely maintained by Microsoft across the ecosystem of Azure-connected devices built on the Windows OS.

In addition, Kevin Viera, a cloud infrastructure engineer, tweeted:

Microsoft is making it really easy to get started with k8s with Azure Kubernetes Service Edge Essentials “AKS Edge Essentials” which is basically an on-prem Kubernetes with cloud mgmt via Azure Arc.

More service details are available on the documentation pages, including a QuickStart.

Lastly, the company will update the product with new capabilities like commercial support for multi-machine deployment, Windows containers, and additional Azure Arc-enabled services on AKS Edge Essentials clusters.

MMS • Alex Porcelli

Transcript

Porcelli: We are living in a world, almost post-pandemic. We have an unprecedented shortage of software engineers, especially the most senior ones. One very hot topic in the recent times has been the staff-plus career. In this talk, I will cover the staff-plus career, from the lens of open source, more specific, how open source engagement can accelerate and solidify your staff-plus career. Our staff-plus engineers that I know, they create their own unique path to reach that level. It’s hard to find a set of guidelines, or receipts, or steps that you follow to reach that point, and especially when you reach there, how to stay there. I don’t think we have much understanding on that. However, we as engineers, we always try to look and identify patterns across a population. Looking into the staff-plus engineers, we can have a similar approach, try to find what’s common in terms of skills, achievements, and how they do their work as staff-plus engineers. This is exactly the great work that these two particular books have done. The Staff Engineers by Will Larson. Then, Staff Engineer’s Path from Tanya Reilly. These books cover a lot of information, from skills, achievements, and daily work of staff-plus engineers. Of course, that’s not the scope of this talk.

Skills and Achievements

However, the scope of this talk is looking specifically to skills and achievement, that you can sharpen and gain through the open source engagement. I have this list of seven items, four them are skills, and three of those are achievements. The first skill that we’ll cover is the written communication. As you’ll see later, open source software is all based on communication, written communication. Open source, long ago, has been a completely async and fully remote execution. Then, we’ll tackle, manage technical quality. In open source you have exposure to a lot of source code from different perspectives, and you definitely contributed to your manage technical quality. Then we go to the last two skills. One is the leadership, that to lead you have to follow. I love this aspect of the Will Larson book. Last, the art of influence. How you influence open source communities. That’s very interesting, because in the world of open source, there is no exact hierarchy, there is no power that you do, you are just a community member. That’s very related to how staff-plus engineers have to influence their organization. Then we have here the achievements, how you can expand your network, and create visibility. Last, the staff-plus project.

Community Members: Growth

Before diving into those skills and achievements, let’s look at what’s the common growth path community members take. When you join an open source community, usually you start as a user. You’re using that software, you’re probably not sure about many things. You started to use the communication channels of that particular community to ask questions, in the beginning, probably beginner’s questions: how you set up, how you use. As you advance, you probably ask, what’s the best practice to use that? You interact and start to create relationships in that mailing list while you are evolving your knowledge about that particular software. Over time, it’s natural that you progress if you continue engaging on that community, you progress to become an expert. That’s the point that you contribute back to the community. You start to help new users, new people that start to struggle with that technology, you are able to help them. That’s a very big thing for the community, that empowers that community. That’s very welcome and it’s very common to happen for the majority of engineers engaged in open source software.

Then, you can jump to the next session, the next step of the growth is to become a contributor. Not necessarily code yet, but you can start promoting that technology. You may start blogging about that, writing articles, that will somehow help new users or experienced users to better take advantage of that software. Depending on how engaged you are, creating content for that particular piece of software in that community, you may spark the need to get more deep on the code base once you understand the internals. Why not start contributing to code? Then you open the world of possibilities. Again, that’s not mandatory, that you take this approach, there are many users that stay as experts. It’s up to you to define your path. If you are interested in the open source space, I think that’s the common path. You get into the code. You start contributing. You start contributing in a regular pace. I think you become a community member, a very engaged community member. That’s fantastic. From here, you already benefited your career at this point. If you are really into open source, if you get into it like I did, you’ll have the opportunity from here to maybe become a maintainer of a component of that open source software, and over time, to become a leader of that open source community.

Background

My name is Alex Porcelli. I’m a Senior Principal Software Engineer at Red Hat. I’m an engineer leader for Red Hat Business Automation product line. I’ve been a staff-plus engineer for the last 10-plus years. I have 25 years of experience, 15-plus years dedicated to open source. My passions are open source, business automation, leadership, and my family. Back to that growth path, and I will relate my personal path to that. I’m not looking from when I became a Red Hat employee forward, because I think that’s unfair comparison, because my daily job is to write code in the open source community. I will relate to how I got into the open source world. That’s the story.

I started as a user of a framework called ANTLR, that is a technology to help you build parsers. I’ve always been fascinated by building parsers. I built a lot of parsers in my free time in the past. I started to engage in open source ANTLR community, asking questions, how this works, why this is not working. I progressed in that community, and became an expert. In a point in time, I became well known in that community, because I was providing a lot of instructions to new members. In a point in time, another person joined the community, and started also asking questions about the grammar, the structure of grammar, what the errors that they’re facing was like. That’s why I start to exchange a lot of emails as an expert. That was the Drools team member asking questions related to the Drools language, that we have a rules engine. The Drools project has a rule language that has the parser, written in ANTLR. I got very engaged. Moving a little bit forward, I ended up being hired as a Red Hat employee to work in that particular compiler and the parser. Today, I’ve become an open source leader. I all started that in the past as a user, as a beginner. Like me there are many other people that I know in the open source community that follow a little bit of this path. I had some contribution to the ANTLR project as well. Where I am today, we’re more towards my Drools contribution.

Contributing to Open Source

This is not a talk about how to contribute to open source. There’s a lot of material out there about that. However, I think it’s important to level set. I’ll cover how to get started contributing to open source. The first important thing to do is find your community. To find that community, you take a few things into account. First, try to invest your time in something that you are already a user. It will help a lot to understand how that technology is used and the context of that technology. Try to look at something that is related to work. This will help especially if you’re looking at the staff-plus career, and have the impact in your career, something that is related to your work will benefit in the long run. If possible, look for something that’s an important piece and part of your work infrastructure. Today, it’s very common. Majority of data center infrastructure is built on top of open source. It’s not that hard to find a piece of software that your business runs on top of. After you find this community, join the communication channels, the mailing lists, the IRC, Slack, Zulip, Discord. Each community has their particular way to communicate. Join all these communication channels. Start to ask questions there, or at least observe how they interact. Because that will give you a sense, what’s the pulse of that community. In parallel, you can start having your hands dirty. It’s time to try and build it. I think it’s a very important aspect, try to build the project by yourself. Sometimes that may not be as easy as you would expect. Some configuration is going to be missing. Some projects will not have detailed configuration instructions for you. Take the opportunity to go back to the communication channel, ask the questions, iterate until you get the first build in your environment.

At this point, you have it built. You are now almost ready to focus on the code contribution. Almost ready, because before you define what you’re going to implement, I think you need to keep in mind to narrow the scope. Some open source projects are huge, complex modules. There are others that are not that big, but it’s still a complex code base. Focus on something, again, back to what you understand. If you are already a user of a particular module of that software, try to focus on that area, because it’s going to easy for you to understand the context of the code, and also understand the real use cases associated with that. Also, it will help you test and implement. Look for something small. Don’t try to shoot for the stars at the beginning. Try to avoid a little bit of snacking. Snacking in this context means that something that is low value to the product. For example, changing typos. Those changes are important, but it’s not what you’re looking to have the impact in your career. Try to look for something simple, but still provides additional value. A good starting point also is bug fixing. There’s also lots of open source projects that publish in their issue tracking a label specifically for the first time contributors. I think if there is such a list, starting there is also a great opportunity to get your first contribution.

At this point, you are almost ready to jump in the code. Almost ready because before working on something, unless you already have an issue defined, open the issue, discuss in the community. Even if there is an issue, and you have defined that you want to tackle that issue, is this the issue? Try to engage in the community and ask feedback for a proposed solution. If there’s no issue open, open the issue. Focus on the problem, and try to engage again with the community to get the feedback to build the solution together. Don’t jump directly into the solution. At this point, if you follow that, you are almost ready to start coding. You open an IDE, but before typing, take a look at the community standards. Try to follow the naming conventions, the project structure, and project format. This is important. This seems to be a silly advice, but many times external contributors follow different formats that ended up frustrating them because their code wasn’t merged. Don’t expect that maintainers or project leads will be able to do it for you. They have a busy life as everyone has, and the backlog and adding some adjustments to the code base of external contributors is not exactly on their top priority. Try to follow the community standard. Many projects have these published somewhere. Some even have predefined configuration that you can import in an IDE.

You start to code, and you get engaged, but try also to publish a draft pull request early in the development cycle. Because if you invest too much of your time on something and just try to collect the feedback, in the end, the community may nudge you to a different path. If you collect this feedback early on, instead, do this, go to that, there’s other references in other parts of the code that you can check. These will help you to be more effective, and your time also in a way that’s more effective. Once you’re done, you are ready to open your pull request. Good title, good description, and link to the original issues are a good starting point. Also, it’s mandatory to look to automated tests. These days, I don’t know any open source software that would accept external contribution without automation tests.

Engagement for Career Impact

At this point, if you follow all these instructions, it’s almost certain that you have your first contribution merged in open source. You already check-boxed that item in your bucket list. It’s a fact that you celebrate. However, it may not create the impact that you are looking for in your career. If you’re looking through the eyes that you want to land or establish your staff-plus career, you need more than that. You need engagement for that impact. What do I mean by engagement? The first thing that means is present. Try to be present on those communication channels that I mentioned. This is important, because in there, you build a relationship with other community members, not only build the relationship, but you’ll be able to support new users, communicate and discuss things through the channels. Be present. It gives you in the long run, also, visibility to that project. Another thing is, try to provide feedback, try new features, suggest new features. If those features are implemented, or you have new features available, try to provide meaningful feedback for the community. Try it in your company environment. Of course, try it in a sandbox safe environment, but bring back that input to the maintainers. They’ll always be happy to listen more of your experience with that new feature. Then, of course, you have the contribution. You can contribute also beyond the code. Promoting the technology is also a great way to contribute. Blog about it. Publish articles about that technology. This is very helpful. It keeps you engaged in that community. Share it on the communication channels, when you write something.

Of course, code contribution. Expected contribution on the code is also expected on the staff-plus perspective. You have to contribute code to grow on your path. Try to build a pace that is constant. Do a contribution every x weeks, because then you become an active member of that community. Again, you will strengthen your relationships over time, and you become a more integral part of that community. Of course, this demands your time investment on that. I think it’s majority of the things that you want to benefit in the long run. The investments on open source and engagement in open source is no different. This will pay off in the future. For that payoff, you need to invest a lot of your time. Again, if you connect it with your work, you’re probably able to manage a little bit better how you invest, instead use just personal time, you connect with the work somehow. Try to be smart on your choices. That’s the content of engagement.

Written Communication

Let’s jump on the skills, and then followed by achievements that you can get by contributing to open source. Written communication is key in open source. It’s the foundation. It’s very important. Open source software is built async and remote, way before the pandemic where a majority of the people were working remote. Writing is the best form for async communication, and a critical skill for all staff-plus engineers. There’s also the fact that the best way to get better in writing is writing. I don’t know another way to practice this particular skill than practicing. The written communication, after the pandemic, in a world that remote work is more common, became part of some interview loops of some companies to ask for engineers to write an essay that you highlight your writing skills. Sharpening this skill will further your career, for sure. This is the foundation. Why is it the foundation? Because as everything is done async and remote, all the other skills you have or the achievements that you are looking for in the open source engagement will happen through the written communication. That is a fundamental, important skill to have.

What are the writing opportunities that you see when you contribute to open source? One of them is blog posts. Others are interacting with the mailing lists, submitting feature requests, and pull requests. You have these four items. You have more, but these are basically a few items that can sharpen your writing skills. Let’s talk about the blog post. That’s the lower bar here to contribution first. It is a great way to promote technology. That’s the strongest way to contribute to open source software without coding. It’s also the best way to learn a new technology. One of the best ways I have when I want to learn something, is try to teach the same technology to someone else. This gives me a better understanding of that technology by creating my own mental models about that software. Again, practice makes improvement: write.

Here, I will talk about a friend of mine, Mauricio Salatino, also known by Salaboy. He started writing about open source software around 2008, on his personal blog, and he was a constant blogger. He still is. He blogs a lot. He still blogs a lot. He’s originally from Argentina. He started to blog in Spanish. He started to post every week or more than once a week, blog posts about open source technology. One in particular was JBPM. He was blogging a lot about JBPM. He started to share the blog posts in the open source community channels to collect feedback. That’s the point that he noticed that his audience started to increase, and the audience were more than just Spanish speakers. He could use the analysis and could notice a lot of people from other countries that don’t necessarily have and probably were using resources like Google Translate. He ended up changing his approach. He started to blog just in English. This was around 2009. He continued to blog about the technology, JBPM specifically, many times. He got involved in the community, and as naturally, it happens to engineers, wanted to get involved in the code base. He started to contribute to the JBPM project. That was fantastic for him. Not only that, that created an opportunity that later on he was hired as a Red Hat engineer to maintain and to work on JBPM. Today, Mauricio is a staff engineer at VMware working on Knative. He’s still blogging. He’s a very active open source advocate, and blogging about technologies.

Let’s go over the mailing list. We have the users’ mailing list. There are multiple ways to engage your mailing list. I will talk about the developer mailing list. The developer mailing listing, a parallel that you can do with the staff-plus daily work, is that dev mailing lists sometimes look like the architectural review lists that you may have in your company. That you go through the RFCs, being architecture reviews, architecture documents, or design documents that you have to review or write to. It’s very similar to the approach that you can take with developer mailing lists. Maybe one difference that you see is that in that mailing list, you have a little bit more diversity. Open source projects are usually in the global scale, multiple contributors from different parts of the world, what will reach you to have all this exposure to different cultures and backgrounds. Here’s one example from OpenJDK mailing list that one engineer is sending a draft of a proposal to collect feedback. Again, that’s what you’re looking for in this engagement. You want to collect feedback. You want to interact with other engineers across the globe, so you collect and improve whatever proposal for a code or whatever you’re looking for.