Month: April 2023

HashiCorp Policy-as-Code Framework Sentinel Adds Multiple Developer Experience Improvements

MMS • Matt Campbell

HashiCorp has released a number of improvements to Sentinel, their policy-as-code framework. The new features include an improved import configuration syntax, a new static import feature, support for named functions, and per-policy parameter values. There are also new helper functions to determine if a value is undefined.

The 0.19 release introduced an improved import configuration system. This provides a standardized naming convention and a more consistent import configuration that makes use of HCL syntax. The import block also now allows for overriding the default configuration for the imports and plugins that are used within a policy. This new syntax is shown below:

import "plugin" "time" {

config = {

timezone = "Australia/Brisbane"

}

}

import "module" "reporter" {

source = "./reporter.sentinel"

}

Version 0.19 also introduced a new static import feature. This allows for importing static, structured JSON data into policies. The block takes two configuration attributes: source representing the path to the data; and format which only supports JSON at this time.

import "static" "people" {

source = "./data/people.json"

format = "json"

}

Once imported, the data can be leveraged within the policy. Assuming the JSON has a key called names, the length of that object could be found using length(people.names). HashiCorp has indicated that support for additional data formats will be added in a later release.

Named functions were introduced in version 0.20. This functionality allows for defining functions that cannot be reassigned or reused. Note that anonymous functions can still be re-assigned, potentially causing the policy to fail if that function is called after. The syntax for named functions looks like this:

func sum(a, b) {

a + b

}

Version 0.21 added two helper functions to determine if a value is defined. In previous releases, policy authors had to use the else expression to recover from undefined values and provide an alternative value.

foo = undefined

// In versions prior to 0.21

foo else false is false // false

foo else true is true // true

// In version 0.21+

foo is defined // false

foo is not defined // true

This release also added per-policy parameter values. These are supplied once per policy and take precedence over globally supplied values. Previously, parameter values were supplied once within a config and shared across all policies.

policy "restrict-s3" {

source = "./deny-resource.sentinel"

params = {

resource_kind = "aws_s3_bucket"

}

}

Recent versions of Terraform Cloud have also added support for Open Policy Agent (OPA) as an alternative policy-as-code framework. OPA is an open-source policy engine that makes use of a high-level declarative language known as Rego.

Sentinel is available for download from the HashiCorp site. More details on these releases can be found on the HashiCorp blog or within the changelog.

Java News Roundup: String Templates, Quarkus, Open Liberty, PrimeFaces, JobRunr, Devnexus 2023

MMS • Michael Redlich

This week’s Java roundup for April 3rd, 2023 features news from OpenJDK, JDK 21, Quarkus 3.0.0.CR2 and 2.16.6.Final, Open Liberty 23.0.0.3, Apache Camel 3.18.6, PrimeFaces 12.0.4, JHipster Lite 0.31.0, JobRunr 6.1.3, Gradle 8.1-RC3 and Devnexus 2023.

OpenJDK

JEP 430, String Templates (Preview), has been promoted from Candidate to Proposed to Target status for JDK 21. This preview JEP, under the auspices of Project Amber, proposes to enhance the Java programming language with string templates, string literals containing embedded expressions, that are interpreted at runtime where the embedded expressions are evaluated and verified. The review is expected to conclude on April 13, 2023.

Gavin Bierman, consulting member of technical staff at Oracle, has published the first draft of the joint specification change document for JEP 440, Record Patterns, and JEP 441, Pattern Matching for switch, for review by the Java community.

JDK 21

Build 17 of the JDK 21 early-access builds was also made available this past week featuring updates from Build 16 that include fixes to various issues. Further details on this build may be found in the release notes.

For JDK 21, developers are encouraged to report bugs via the Java Bug Database.

Quarkus

The second release candidate of Quarkus 3.0.0 delivers new features: a quarkusUpdate Gradle task for updating Quarkus to a new version; Dev UI 2 is now default via the /q/dev or /q/dev-ui endpoints (Dev UI 1 is accessible via the /q/dev-v1 endpoint); and a new HTTP security policy mapping between roles and permissions. More details on this release may be found in the changelog.

Quarkus 2.16.6.Final, the sixth maintenance release, provides notable changes such as: a removal of the session cookie if ID token verification has failed; allow the use of null in the REST Client request body; support for repeatable @Incoming annotations in reactive messaging; and dependency upgrades to GraphQL Java 19.4, Wildfly Elytron 1.20.3.Final and Keycloak 21.0.1. Further details on this release may be found in the changelog.

Open Liberty

IBM has released Open Liberty 23.0.0.3 featuring bug fixes and support for: JDK 20; the Jakarta EE 10 Platform, Web and Core profiles; and the core MicroProfile 6.0 specifications.

Apache Camel

The release of Apache Camel 3.18.6 delivers big fixes, dependency upgrades and improvements such as: allow HTTP response headers with empty values to be returned to support applications that require this; improved handling of allowing or disallowing an HTTP request body; and a fix for the potential to block the Vert.x event loop if the route processing coming after the vertx-websocket consumer executes blocking operations. More details on this release may be found in the release notes.

PrimeFaces

The release of PrimeFaces 12.0.4 ships with bug fixes and new features: a restoration of the getExcelPattern() and validate() methods defined in the CurrencyValidator class;

Further details on this release may be found in the list of issues.

JHipster

The JHipster team has released version 0.31.0 of JHipster Lite with many dependency upgrades and notable changes such as: a fix for generating the same customConversions beans for use in MongoDB and Redis; a fix for the Apache Kafka producer and consumer; and a removal of the Jest testing framework dependency as it was only used for the optional-typescript module. More details on this release may be found in the release notes.

JobRunr

JobRunr 6.1.3 has been released to deliver a fix for the high number of calls to jobrunr_job_stats view that allows developers to disable Java Management Extensions (JMX) for the JobStats class.

Gradle

The third release candidate of Gradle 8.1 features: continued improvements to the configuration cache; support for dependency verification; improved error reporting for Groovy closures; support for Java lambdas; and support for building projects with JDK 20. Further details on this release may be found in the release notes.

Devnexus

Devnexus 2023 was held at the Georgia World Congress Center in Atlanta, Georgia this past week featuring speakers from the Java community who delivered workshops and talks on topics such as: Jakarta EE, Java Platform, Core Java, Architecture, Cloud Infrastructure and Security.

Devnexus, hosted by the Atlanta Java Users Group (AJUG), has a history that dates back to 2004 when the conference was originally called DevCon. The Devnexus name was introduced in 2010.

Developers can learn more about Devnexus and AJUG from this Foojay.io podcast hosted by Frank Delporte, senior technical writer at Azul, who interviewed Pratik Patel, Vice President of Developer Advocacy at Azul and AJUG president, and Vince Mayers, developer relations at Gradle and AJUG treasurer.

Visual Studio Extensibility SDK Preview 3: New Features for Building Productivity Extensions

MMS • Almir Vuk

Microsoft has released the third public preview of their VisualStudio.Extensibility SDK, introducing new features that enhance the productivity, customization, and debugging capabilities for developers who are developing Visual Studio Extensions. VisualStudio.Extensibility is a new framework for developing Visual Studio extensions.

With Preview 3, developers can leverage the Debugger Visualizers to simplify the debugging experience, Custom Dialogs to create tailored user interfaces, Query the Project System to access and interact with the project system, Editor Margin Extensions to add custom components to the margin of the code editor, and Extension Configuration options to give users more control over their extensions. These new features provide developers with more efficient and intuitive ways to create powerful extensions for Visual Studio, improving the overall development experience and productivity. The official GitHub repository contains the sample for creating the custom dialogs.

Preview 3 of VisualStudio.Extensibility now offers the ability to create custom debugger visualizers using Remote UI features, which enhance the debugging experience by allowing developers to create custom views of complex data types. Furthermore, the Preview 3 release introduces more customizable and visually appealing dialog features, allowing developers to interact with users in a more engaging manner.

These features are implemented using VisualStudio.Extensibility SDK, and provide developers with greater flexibility in creating custom dialogs that reflect their brand and improve the user experience. The use of WPF enables the creation of interactive and visually-rich dialog visuals, while Remote UI features to ensure the reliability and performance of the dialogs.

In addition to the debugger visualizers and custom dialogs, VisualStudio.Extensibility Preview 3 also includes the ability to query the project system for projects and solutions. This new feature lets developers obtain information about projects and solutions that match specific conditions, providing users with relevant experiences to their current code. The sample for this feature is available on the official GitHub project repository.

To enhance the development experience even further, VisualStudio.Extensibility Preview 3 now offers editor margin extensions. This feature allows developers to create front-and-center experiences in the editor margin, leveraging Remote UI to boost productivity. With editor margin extensions, developers can offer simple features such as word count or document encoding, or create custom navigation bars and headers to improve workflow. By utilizing this feature, developers can increase the visibility of their work and offer greater functionality to users, improving the overall development experience.

Lastly, VisualStudio.Extensibility Preview 3 makes configuring your extensions easier than ever before! Many components defined in extensions require configuration to determine how or when they appear in the IDE. With this release, Microsoft has listened to community and user feedback and completely overhauled the extension configurations for better usability and discoverability. You can now configure your extension with strongly-typed classes and properties, and easily discover predefined options with the help of IntelliSense. Configuration properties allow developers to place their commands in newly created menus and toolbars they have full control over.

The original release blog post provided a lot of code samples and explanations for all the new features and implementations. One of those is the sample code for setting a keyboard shortcut for a command using simple sets of keys and modifiers:

public override CommandConfiguration CommandConfiguration => new("%MyCommand.DisplayName%")

{

Shortcuts = new CommandShortcutConfiguration[]

{

new(ModifierKey.ControlShift, Key.G),

},

};

In addition to an original release blog post, and as part of the development process, the development team calls on developers to test the new release and share feedback through the issue tracker. Users are also invited to sign up for future user studies to help shape the future of this software development kit, visit the official GitHub project repository, and learn more about this project.

MMS • Daniel Dominguez

Bloomberg has released BloombergGPT, a new large language model (LLM) that has been trained on enormous amounts of financial data and can help with a range of natural language processing (NLP) activities for the financial sector. BlooombergGPT is a cutting-edge AI that can evaluate financial data quickly to help with risk assessments, gauge financial sentiment, and possibly even automate accounting and auditing activities.

According to a statement from Bloomberg, an AI that is specifically trained with financial information is necessary due to the complexity and distinctive vocabulary of the financial business. The Bloomberg Terminal, a computer software platform used by investors and financial professionals to access real-time market data, breaking news, financial research, and advanced analytics, will be accessible to BloombergGPT.

BloombergGPT represents the first step in the development and application of this new technology for the financial industry. With the use of this model, Bloomberg will be able to enhance its current financial NLP capabilities, including sentiment analysis, named entity recognition, news classification, and question-answering, among others.

Additionally, BloombergGPT will open up fresh possibilities for organizing the enormous amounts of data available on the Bloomberg Terminal. This will allow it to better serve the company’s clients and bring AI’s full potential to the financial industry.

According to the Bloomberg research paper, general models eliminate the requirement for specialization during training because they are capable of performing well across a wide range of jobs. Results from existing domain-specific models, however, demonstrate that general models cannot take their place.

While the vast majority of applications at Bloomberg are in the financial area and are best served by a specific model, they support a very big and diversified collection of jobs that are well serviced by a general model. Bloomberg’s aim was to create a model that could not only perform well on general-purpose LLM benchmarks, but also excel in producing superior outcomes on financial benchmarks. When compared to GPT-3 and other LLMs, BloombergGPT demonstrates competitive performance on general tasks and surpasses them in several finance-specific tasks.

The field of financial technology has numerous applications for Natural Language Processing (NLP), including tasks such as named entity recognition, sentiment analysis, and question answering. The introduction of BloombergGPT marks a significant milestone in AI-based financial analysis, furthering the potential of NLP in the financial industry.

MMS • Bruno Couriol

With Safari 16.4 recently adding support for import maps, JavaScript developers can now use import maps in all modern browsers. Older browsers can use a polyfill. Import maps bring better module resolution for JavaScript applications.

An import map allows resolving module specifiers in ad-hoc ways. With import maps, developers can separate the referencing of module dependencies from their location (on disk or a remote server). Developers can use bare module specifiers to import dependencies (e.g., lodash in import { pluck } from "lodash";). Dedicated tooling can handle the mapping between dependencies and their location without touching the application code.

As an immediate result of cross-browser support, the JSPM CLI has been relaunched a few days ago as an import map package management tool. The JSPM CLI for instance automatically outputs the following import map for the lit library:

<script type="importmap">

{

"imports": {

"lit": "https://ga.jspm.io/npm:lit@2.7.0/index.js"

},

"scopes": {

"https://ga.jspm.io/": {

"@lit/reactive-element": "https://ga.jspm.io/npm:@lit/reactive-element@1.6.1/reactive-element.js",

"lit-element/lit-element.js": "https://ga.jspm.io/npm:lit-element@3.3.0/lit-element.js",

"lit-html": "https://ga.jspm.io/npm:lit-html@2.7.0/lit-html.js",

"lit-html/is-server.js": "https://ga.jspm.io/npm:lit-html@2.7.0/is-server.js"

}

}

}

</script>

Guy Bedford, core open source contributor to the JSPM CLI and maintainer of the import maps polyfill, explained how import maps can improve tooling and developer experience when using JavaScript modules:

JSPM respects

package.jsonversion ranges and supports all the features of Node.js module resolution in a browser-compatible way. It supports arbitrary module URLs and CDN providers e.g. by just adding--provider unpkgto the install command (or even localnode_modulesmappings via--provider nodemodules).

Better apps are written when there are fewer steps between the developer and their tools, fewer steps between development and production, and fewer steps between applications and end-users.

Alternatively, developers can provide the import maps manually and remove the need for build tools in some cases. The Lit team provided such a use case on Twitter (see online playground). One Twitter user commented:

Frontend without needing to install npm is something I’ve dreamed of for years.

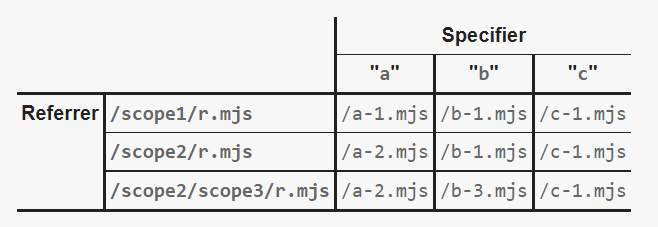

The import map WhatWG specification details a mechanism to resolve module specifiers based on the content of two fields (imports and scopes) passed as a JSON object. The following import map

{

"imports": {

"a": "/a-1.mjs",

"b": "/b-1.mjs",

"c": "/c-1.mjs"

},

"scopes": {

"/scope2/": {

"a": "/a-2.mjs"

},

"/scope2/scope3/": {

"b": "/b-3.mjs"

}

}

}

results in the following module resolutions (using relative URLs for brevity):

The previous table illustrates that when a script (the referrer) located in /scope2/scope3 imports code from module a (the specifier), that module will be loaded from the /a-2.mjs location. As there is no map configured under the scope /scope2/scope3 for module a, the resolution algorithm uses the closest available (i.e., the one for /scope2).

Import maps are included in HTML files with a tag in the section of the document:

<script type="importmap">

... JSON import map here

</script>

Older browsers will benefit from the es-module-shims polyfill. Support of import maps in browsers can be detected as follows:

if (HTMLScriptElement.supports('importmap')) {

Developers can refer to the full import maps specification for additional technical details.

MMS • Aditya Kulkarni

Recently, the open-source Git project released its latest version 2.40, bringing some new features and bug fixes. Highlights of this release include updates to the git jump tool, enhancements to cat-file tool, and faster response on Windows.

Taylor Blau, Staff Software Engineer at GitHub provided a detailed walkthrough of the updates in Git 2.40. git jump, an optional tool in Git’s contrib directory, now supports Emacs and Vim. The git jump tool works by wrapping Git commands, such as git grep, and feeding their results into Vim’s quickfix list.

If you are using Emacs, git jump can be used to generate a list of locations using the command M-x grepgit jump --stdout grep foo. The command will then show all matches of “foo” in your project, enabling easy navigation. Git jump also works with diff and merge.

Git’s cat-file tool is commonly used to print out the contents of arbitrary objects in Git repositories. With Git version 2.38.0, cat-file tool got support to apply Git’s mailmap rules when printing out the contents of a commit. To understand the size of a particular object, --batch-check and -s options were used with cat-file tool. However, the previous versions of Git had an issue causing incorrect results when using the --use-mailmap option with the cat-file tool in combination with the --batch-check and -s options. With Git 2.40, this has been corrected, and the --batch-check and -s options will now report the object size correctly.

The git check-attr command is used to determine which gitattributes are set for a given path. These attributes are defined and set by one or more .gitattributes files in a repository. For complex rules or multiple .gitattributes files, check-attr git command was used:

$ git check-attr -a git.c

git.c: diff: cpp

git.c: whitespace: indent,trail,space

Previously, check-attr required an index to be present, making it challenging to use in bare repositories. A bare repository is a directory with a .git suffix without a locally checked-out copy of any of the files under revision control. Now in Git 2.40 and newer versions, there is a support for --source= option to scan for .gitattributes in, making it easier to use in bare repositories.

GitHub’s Twitter handle posted the highlights, which caught the attention of the tech community on Twitter. One of the Twitter users Andrew retweeted the announcement with a quote, “git jump seems pretty useful! https://github.com/git/git/tree/v2.19.0/contrib/git-jump“

Git 2.40 also includes improvements to rewrite old parts of Git from Perl or Shell to modern C equivalents. This allows Git commands to run faster on platforms like Windows. Now, git bisect is now implemented in C as a native builtin and the legacy implementation git add --interactive has been retired.

There are some improvements to Git’s CI infrastructure in version 2.40. Some long-running Windows-specific CI builds have been disabled, resulting in faster and more resource-efficient CI runs for Git developers.

MMS • A N M Bazlur Rahman

JEP 444, Virtual Threads, was promoted from Candidate to Proposed to Target status for JDK 21. This feature provides virtual threads, lightweight threads that dramatically reduce the effort of writing, maintaining, and observing high-throughput concurrent applications on the Java platform. This JEP intends to finalize this feature based on feedback from the previous two rounds of preview: JEP 436, Virtual Threads (Second Preview), delivered in JDK 20; and JEP 425, Virtual Threads (Preview), delivered in JDK 19.

With this JEP, Java now has two types of threads: traditional threads, also called platform threads, and virtual threads. Platform threads are a one-to-one wrapper over operating system threads, while virtual threads are lightweight implementations provided by the JDK that can run many virtual threads on the same OS thread. Virtual threads offer a more efficient alternative to platform threads, allowing developers to handle a large number of tasks with significantly lower overhead. These threads offer compatibility with existing Java code and a seamless migration path to benefit from enhanced performance and resource utilization. Consider the following example:

try (var executor = Executors.newVirtualThreadPerTaskExecutor()) {

IntStream.range(0, 10_000).forEach(i -> {

executor.submit(() -> {

Thread.sleep(Duration.ofSeconds(1));

return i;

});

});

}

The JDK can now run up to 10,000 concurrent virtual threads on a small number of operating system (OS) threads, as little as one, to execute the simple code above that involves sleeping for one second.

Virtual threads are designed to work with thread-local variables and inheritable thread-local variables, just like platform threads. However, due to the large number of virtual threads that can be created, developers should use thread-local variables with caution. To assist with the migration to virtual threads, the JDK provides a system property, jdk.traceVirtualThreadLocals, that triggers a stack trace when a virtual thread sets the value of any thread-local variable.

The java.util.concurrent package now includes support for virtual threads. The LockSupport API has been updated to gracefully park and unpark virtual threads, enabling APIs that use LockSupport, such as Locks, Semaphores, and blocking queues, to function seamlessly with virtual threads. The Executors.newThreadPerTaskExecutor(ThreadFactory) and Executors.newVirtualThreadPerTaskExecutor() methods provide an ExecutorService that creates a new thread for each task, facilitating the migration and interoperability with existing code that uses thread pools and ExecutorService.

Networking APIs in the java.net and java.nio.channels packages now support virtual threads, enhancing efficiency in concurrent applications. Blocking operations on a virtual thread free up the underlying platform thread, while I/O methods in the Socket, ServerSocket and DatagramSocket classes have been made interruptible. This update promotes consistent behavior and improved performance for Java developers working with concurrent applications.

The java.io package, which provides APIs for streams of bytes and characters, has been updated to avoid pinning when used in virtual threads. Pinning in virtual threads refers to a lightweight thread being “stuck” to a specific platform thread, limiting concurrency and flexibility due to blocking operations. BufferedInputStream, BufferedOutputStream, BufferedReader, BufferedWriter, PrintStream, and PrintWriter now use an explicit lock instead of a monitor when used directly. The stream decoders and encoders used by InputStreamReader and OutputStreamWriter now use the same lock as their enclosing InputStreamReader or OutputStreamWriter.

Java Native Interface (JNI) has introduced a new function, IsVirtualThread, to test if an object is a virtual thread. The JNI specification otherwise remains unchanged.

The debugging architecture, consisting of the JVM Tool Interface (JVM TI), the Java Debug Wire Protocol (JDWP), and the Java Debug Interface (JDI), has been updated to support virtual threads. All three interfaces now support virtual threads, with new capabilities and methods added to handle thread start and end events and bulk suspension and resumption of virtual threads.

JDK Flight Recorder (JFR) now supports virtual threads with new events such as jdk.VirtualThreadStart, jdk.VirtualThreadEnd, jdk.VirtualThreadPinned, and jdk.VirtualThreadSubmitFailed. These events provide insight into the behavior of virtual threads in the application.

Java Management Extensions (JMX) will continue to support only platform threads through the ThreadMXBean interface. A new method in the HotSpotDiagnosticsMXBean interface generates the new-style thread dump to support virtual threads.

While virtual threads bring significant performance improvements, developers should be aware of compatibility risks due to changes in existing APIs and their implementations. Some of these risks include revisions to the internal locking protocol in the java.io package and source and binary incompatible changes that may impact code that extends the Thread class.

Virtual threads mark a significant milestone in Java’s journey to support highly concurrent and scalable applications. With a more efficient and lightweight threading model, developers can now handle millions of tasks with ease and better utilize system resources. Developers can leverage more details on JEP 425, which can be found in this InfoQ news story and this JEP Café screen cast by José Paumard, Java developer advocate with the Java Platform Group at Oracle.

MMS • Steef-Jan Wiggers

Microsoft recently announced a new offering for learning Azure with Learn Rooms, a part of the Microsoft Learn community designed to allow learners to connect with other learners and technical experts with similar interests in Azure.

The learning rooms are open and available to anyone who wants to be part of a supportive and interactive community to enhance their learning experience. They are designed for group learning and facilitated by an expert in the field. The learning rooms offer synchronous and asynchronous conversations and office hours, and many primarily focus on Microsoft Azure.

With learn rooms, the company offers a new experience combined with the Tech community learning hub and Microsoft Q&A.

Lanna Teh, product marketing manager, Azure Marketing, explains in an Azure blog post:

Learning rooms focus across several technology areas. They include Microsoft Cloud and Azure subjects, such as Azure Infrastructure, Data and AI, and Digital and Application Innovation, and their small size ensures that you get exactly the support you need. Each room is led by Microsoft Learn experts, who are validated technical subject matter experts present throughout our community resources with experience in technical skilling, community support, and a deep knowledge in the room’s specific topic area.

In addition, John Deardurff, Azure SQL Microsoft Certified Trainer, tweeted:

Have you heard about the NEW Microsoft Learning Rooms to assist in your certification journey? Find connections with peers and engage with experts to dive into topic-specific questions via discussions and virtual sessions.

Learners who like to join a learning room can check the learning rooms directory. Furthermore, they must have a Microsoft account and fill out a form to join a room. Experts, such as Microsoft Most Valuable Professionals (MVPs), Microsoft Certified Trainers (MCTs), and Microsoft Technical and Trainers (MTTs), on the other hand, are selected by invitation only.

Microsoft’s competitors in the cloud space, AWS, and Google, have similar learning offerings. AWS provides anyone interested in learning their platform with AWS Training, while Google offers Cloud Skill Boost.

Lastly, Microsoft also offers other programs for learning with Azure Skills Navigator guides and Connected Learning Experience (CLX).

MMS • Renato Losio

Azure recently announced the preview of the ND H100 v5, virtual machines that integrate the latest Nvidia H100 Tensor Core GPUs and support Quantum-2 InfiniBand networking. According to Microsoft, the new option will offer AI developers improved performance and scaling across thousands of GPUs.

With generative AI solutions like ChatGPT accelerating demand for cloud services that can handle the large training sets, Matt Vegas, principal product manager at Azure, writes:

For Microsoft and organizations like Inflection, Nvidia, and OpenAI that have committed to large-scale deployments, this offering will enable a new class of large-scale AI models.

Designed for conversational AI projects and ranging in sizes from eight to thousands of Nvidia H100 Tensor Core GPUs, the new servers are powered by 4th Gen Intel Xeon Scalable processors and provide interconnected 400 Gb/s Nvidia Quantum-2 CX7 InfiniBand per GPU. According to the manufacturer of graphics processing units, the new H100 v5 can speed up large language models (LLMs) by 30x over the previous generation Ampere architecture. In a separate article, John Roach, features writer and content strategist, summarises how “Microsoft’s bet on Azure unlocked an AI revolution“:

The system-level optimization includes software that enables effective utilization of the GPUs and networking equipment. Over the past several years, Microsoft has developed software techniques that have grown the ability to train models with tens of trillions of parameters, while simultaneously driving down the resource requirements and time to train and serve them in production.

But the new instances do not target only the growing requirements of Microsoft and other enterprises implementing large-scale AI training deployments. Vegas adds:

We’re now bringing supercomputing capabilities to startups and companies of all sizes, without requiring the capital for massive physical hardware or software investments.

Azure is not the only cloud provider partnering with Nvidia to develop a highly scalable on-demand AI infrastructure. As reported recently on InfoQ, AWS announced its forthcoming EC2 UltraClusters of P5 instances, which can scale in size up to 20000 interconnected H100 GPUs. Nvidia recently announced as wellthe H100 NVL, a memory server card for large language models.

Due to the massive spike in demand for conversational AI, some analysts think there is a huge supply shortage of Nvidia GPUs, suggesting some firms might be turning to AMD GPUs and Cerebras WSE to supplement a shortage of hardware.

The preview (early access) of the Azure ND H100 v5 VMs is only available to approved participants who must submit a request.

MMS • RSS

Businesses are 24/7. This includes everything from the website, back office, supply chain, and beyond. At another time, everything ran in batches. Even a few years ago, operational systems would be paused so that data could be loaded into a data warehouse and reports would be run. Now reports are about where things are right now. There is no time for ETL.

Much of IT architecture is still based on a hub-and-spoke system. Operational systems feed a data warehouse, which then feeds other systems. Specialized visualization software creates reports and dashboards based on “the warehouse.” However, this is changing, and these changes in business require both databases and system architecture to adapt.

Fewer copies, better databases

Part of the great cloud migration and the scalability efforts of the last decade resulted in the use of many purpose-built databases. In many companies, the website is backed by a NoSQL database, while critical systems involving money are on a mainframe or relational database. That is just the surface of the issue. For many problems, even more specialized databases are used. Often times, this architecture requires moving a lot of data around using traditional batch processes. The operational complexity leads not only to latency but faults. This architecture was not made to scale, but was patched together to stop the bleeding.

Databases are changing. Relational databases are now able to handle unstructured, document, and JSON data. NoSQL databases now have at least some transactional support. Meanwhile distributed SQL databases enable data integrity, relational data, and extreme scalability while maintaining compatibility with existing SQL databases and tools.

However, that in itself is not enough. The line between transactional or operational systems and analytical systems cannot be a border. A database needs to handle both lots of users and long-running queries, at least most of the time. To that end, transactional/operational databases are adding analytical capabilities in the form of columnar indexes or MPP (massively parallel processing) capabilities. It is now possible to run analytical queries on some distributed operational databases, such as MariaDB Xpand (distributed SQL) or Couchbase (distributed NoSQL).

Never extract

This is not to say that technology is at a place where no specialized databases are needed. No operational database is presently capable of doing petabyte-scale analytics. There are edge cases where nothing but a time series or other specialized database will work. The trick to keeping things simpler or achieving real-time analytics is to avoid extracts.

In many cases, the answer is how data is captured in the first place. Rather than sending data to one database and then pulling data from another, the transaction can be applied to both. Modern tools like Apache Kafka or Amazon Kinesis enable this kind of data streaming. While this approach ensures that data make it to both places without delay, it requires more complex development to ensure data integrity. By avoiding the push-pull of data, both transactional and analytical databases can be updated at the same time, enabling real-time analytics when a specialized database is required.

Some analytical databases just cannot take this. In that case more regular batched loads can be used as a stopgap. However, doing this efficiently requires the source operational database to take on more long-running queries, potentially during peak ours. This necessitates a built-in columnar index or MPP.

Databases old and new

Client-server databases were amazing in their era. They evolved to make good use of lots of CPUs and controllers to deliver performance to a wide variety of applications. However, client-server databases were designed for employees, workgroups, and internal systems, not the internet. They have become absolutely untenable in the modern age of web-scale systems and data omnipresence.

Lots of applications use lots of different stove-pipe databases. The advantage is a small blast radius if one goes down. The disadvantage is there is something broken all of the time. Combining fewer databases into a distributed data fabric allows IT departments to create a more reliable data infrastructure that handles varying amounts of data and traffic with less downtime. It also means less pushing data around when it is time to analyze it.

Supporting new business models and real-time operational analytics are just two advantages of a distributed database architecture. Another is that with fewer copies of data around, understanding data lineage and ensuring data integrity become simpler. Storing more copies of data in different systems creates a larger opportunity for something to not match up. Sometimes the mismatch is just different time indexes and other times it is genuine error. Combining data into fewer and more capable systems, you reduce the number of copies and have less to check.

A new real-time architecture

By relying mostly on general-purpose distributed databases that can handle both transactions and analytics, and using streaming for those larger analytics cases, you can support the kind of real-time operational analytics that modern businesses require. These databases and tools are readily available in the cloud and on-premises and already widely deployed in production.

Change is hard and it takes time. It is not just a technical problem but a personnel and logistical issue. Many applications have been deployed with stovepipe architectures, and live apart from the development cycle of the rest of the data infrastructure. However, economic pressure, growing competition, and new business models are pushing this change in even the most conservative and stalwart companies.

Meanwhile, many organizations are using migration to the cloud to refresh their IT architecture. Regardless of how or why, business is now real-time. Data architecture must match it.

Andrew C. Oliver is senior director of product marketing at MariaDB.

—

New Tech Forum provides a venue to explore and discuss emerging enterprise technology in unprecedented depth and breadth. The selection is subjective, based on our pick of the technologies we believe to be important and of greatest interest to InfoWorld readers. InfoWorld does not accept marketing collateral for publication and reserves the right to edit all contributed content. Send all inquiries to newtechforum@infoworld.com.