Month: May 2023

MMS • Aviran Mordo

Transcript

Shane Hastie: Good day, folks. This is Shane Hastie for the InfoQ Engineering Culture podcast. Today I’m sitting down with Aviran Mordo, who is the VP of Engineering of Wix. Aviran, welcome. Thanks for taking the time to talk to us today.

Aviran Mordo: Hello and good evening.

Shane Hastie: Thank you, and good morning to you. I’m in New Zealand and Aviran is in Israel, so through the miracles of modern technology, we are able to communicate in real time. Aviran, the place I’d like to start with our guests is who’s Aviran?

Aviran Mordo: I’m VP of Engineering for Wix. I’ve been at the company for 12 years. Before that, I worked at a lot of big companies like Lockheed Martin. I had my own startups. So I’ve been around for quite a while. I’ve been a very vocal advocate of the DevOps culture at Wix. We call it the developer-centric culture where we put the developers in the middle and then the organization helps developers to ship software faster, and that’s our goal, to try to figure out ways of how to ship software faster.

Shane Hastie: So what does a developer-centric culture look like and feel like?

In a developer-centric culture, the whole organisation is optimised to help ship software faster [01:24]

Aviran Mordo: If we think about the development lifecycle like an assembly line for cars, for instance, the developers are the assembly line because they build the product. So the software is the product, and they know the product the best. They know it better than QA and testing, and they know it better than the product managers who try to define it, because they actually coded the actual product. So the whole developer-centric idea is that the entire organization is a support circle of specialized professions that support developers and help developers basically ship software faster.

Shane Hastie: And for the individual developer, how do we prevent this from feeling just like, “Work harder?”

Aviran Mordo: Because it’s not work harder, it’s work smarter. You do more things in the same amount of time than you could before this whole continuous delivery movement, by finding ways to do things smarter. Think about platform engineering or low code development, where you codify a lot of the things ahead of time, so you basically have to write less code and achieve much more in less time with fewer people. We know developers are very scarce and hard to recruit, so if you can do more, you basically need fewer developers.

Shane Hastie: So one of the things you mentioned before we started recording was the concept of platform as runtime. What do you mean by that?

Platform as runtime [02:52]

Aviran Mordo: Platform as a runtime, this is a new concept that we have been internally working on at Wix. It relates to platform engineering. However, if you hear about all the talks about platform engineers and a lot of companies are talking with regard to platform engineering on the CI, Kubernetes, all those areas of the platform and Wix are basically past that thing because we built this platform many years ago and we took the platform engineering to the next level to codify basically a lot of the concerns that developers have in their day-to-day life.

But if we take our own areas of business, which is basically business models, we build e-commerce platforms, we build blogs, we build events platforms or booking platforms. So those are all business applications. And for all business applications, you have basically the same concepts or the same concerns that you need to handle, like throwing domain events, modeling your entities in a way that is multi-tenant, GDPR concerns, gRPC communication, and how all those things work together.

So usually, like most companies, we built our own frameworks and libraries, the common libraries that developers just build into their microservices deployables. And that creates a problem because while we build those libraries, we also build tons of documentation that developers need to understand to use those libraries. Okay, for GDPR, you have to be an expert in privacy. And how does the A/B testing system work? Each company has its own A/B testing or feature flag system. And how do you communicate via gRPC? What are the headers that you need?

So tons of documentations that developers need to learn and understand. And what we did is that we codified a lot of these concepts into our platform. So basically we looked at the amount of lines of code that developers have to write, and by analyzing them, we saw that 80% of the lines of code are basically wiring stuff and configuring things and not actually writing the business logic that you have to write, the business value, that this is what we get paid for, to bring business value to the business, not to wire things.

And that’s 80% of our work, wiring stuff. So what we did is we coded it into a very robust framework or platform. We took this framework, and instead of having developers compile this loaded framework into their code, where basically 80% of your deployable is the framework, we built a serverless cloud, our own serverless infrastructure, and we put the framework on the serverless cloud. And now developers don’t need to compile this code themselves. They just build against an interface of some sort, and they deploy their deployable into the cloud where they get the framework.
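As a rough illustration of "building against an interface" while the runtime owns the wiring, here is a minimal sketch. It is not Wix's platform: `PlatformRuntime`, `RequestContext`, the `x-tenant-id` header, and the `create_booking` handler are all hypothetical names chosen for the example.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class RequestContext:
    """Cross-cutting data the runtime resolves so business code does not have to."""
    tenant_id: str
    events: List[dict] = field(default_factory=list)

    def emit(self, name: str, payload: dict) -> None:
        # The service only declares the event; the runtime decides how to publish it.
        self.events.append({"event": name, "tenant": self.tenant_id, **payload})


Handler = Callable[[RequestContext, dict], dict]


class PlatformRuntime:
    """Hypothetical runtime that hosts business handlers and owns the wiring."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}
        self.published: List[dict] = []

    def register(self, name: str) -> Callable[[Handler], Handler]:
        def decorator(fn: Handler) -> Handler:
            self._handlers[name] = fn
            return fn
        return decorator

    def invoke(self, name: str, headers: dict, body: dict) -> dict:
        # Wiring lives here: tenancy resolution and event publishing.
        ctx = RequestContext(tenant_id=headers["x-tenant-id"])
        result = self._handlers[name](ctx, body)
        self.published.extend(ctx.events)
        return result


runtime = PlatformRuntime()


@runtime.register("create_booking")
def create_booking(ctx: RequestContext, body: dict) -> dict:
    # Business logic only: no header parsing, no event-bus client.
    booking = {"id": f"{ctx.tenant_id}-1", "slot": body["slot"]}
    ctx.emit("BookingCreated", {"booking_id": booking["id"]})
    return booking
```

Because the handler depends only on `RequestContext`, the code inside `PlatformRuntime` can change (for example, to patch a dependency) without the handler being rebuilt, which is the property described above.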

Benefits from platform as runtime [05:58]

Aviran Mordo: So the platform is basically their runtime. It runs their business logic for them. What that actually also gives us is the ability to have a choke point and to update. If you think about it, Wix has about 3000 unique microservices clusters. If we think about the Log4j security vulnerability that was discovered a few months ago, what we had to do before we had the platform as a runtime was to ask all the teams in Wix to rebuild 3000 microservices: “Okay, we updated the framework, we updated the dependency. Now everybody has to rebuild, recompile and redeploy everything into the cloud.”

And that takes a lot of time. And of course, a lot of things get missed on the way. So by taking the platform, the framework, out of the microservice itself and putting it outside of the microservices as a runtime, we can control these common libraries that appear in all the microservices, and control them from a central place as the runtime. And that gives us a huge velocity gain. And because we codified all this logic, developers now don’t need to read dozens of documents just to understand how things work. Things just work for them.

And during this process, we were able to eliminate between 60 and 80% of the lines of code that developers have to write to achieve the same goal, and reduce the time for development tremendously. It used to take us two to three weeks for a new microservice that doesn’t really do much, basically basic CRUD operations, because you really have to work hard to do all this wiring. We brought that down to a matter of hours. So now, in a matter of two or three hours, developers can have a new microservice running on the cloud with all the legal requirements, the business requirements, the infrastructure, and the ecosystem requirements that are out there.

Shane Hastie: Who are the team that maintain this platform?

Maintaining the platform [07:59]

Aviran Mordo: So we have an infrastructure team and they work just like any other product team. So we look at our platform as a product and we have technical product managers who go into the different teams at the company. They sit with them, they analyze their code, they build code with them, and they try to extract and find commonalities between different business units. They say, “Okay, this is something that is common to a lot of developers, so it’s common for Wix’s business areas.” And we extract those things and we create a team of people from different parts of the company. We try to understand, “Okay, what is the common code?” We put that into the platform and have that as a product. Before we kind of did this mind shift, infrastructure teams tended to build things that are cool, saying, “Okay, this is what developers should know and understand.”

And then a lot of times the products that the infrastructure teams are building are not being used, or are being used in very small parts of the organization. So what we did in our platform engineering team is we set one main KPI for our infrastructure team: when you do something, you commit on adoption. You’re not succeeding just by finishing this new shiny infrastructure. It’s just like when you develop a business product, you want customers to come and buy your product. So it’s the same for infrastructure teams. We commit on adoption. Our success is how many developers in the organization actually use this new infrastructure. And if they don’t use it, it means that we didn’t succeed in building the right product for them.

Shane Hastie: How do you prevent this from becoming a single point of failure? How do you keep quality in this platform?

Maintaining quality in the platform [09:52]

Aviran Mordo: There is always a single point of failure in every system. At Wix, we put a lot of emphasis, and especially in the infrastructure team, on quality. We follow the best practices of test-driven development and constantly monitor things. But the single point of failure is also not really single, because it is a distributed system. There are hundreds of servers running. So unless there is a bug, and then you have to fix it. But for that, you’ve got tests, you’ve got gradual rollout, you’ve got A/B testing, feature flags.

So all the best practices in the industry to prevent it. But if a service goes down, then that’s just one cluster out of hundreds or thousands of clusters that is going down. It happens rarely, but it does happen. But we look at this runtime as a critical infrastructure, just like you would look at an API gateway. Just a critical infrastructure that essentially is a single point of failure. So we look at it just as another layer, just like a load balancer or a gateway server. This is the platform, this is the runtime, it has to be at the highest quality and the best performance that we can get.
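The gradual rollout and feature flags mentioned above are commonly implemented by hashing a stable identifier into a bucket and enabling the change for a growing percentage of buckets. The sketch below shows that general technique only; it is an assumption for illustration, not a description of Wix's system, and `in_rollout` and its parameters are hypothetical names.

```python
import hashlib


def in_rollout(user_id: str, feature: str, percent: int) -> bool:
    """Return True if this user falls inside the first `percent` buckets (0-100)."""
    # Hash feature and user together so each feature gets an independent split.
    digest = hashlib.sha256(f"{feature}:{user_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100
    return bucket < percent
```

Because the bucket is derived from a hash rather than a random draw, a given user sees a consistent answer, and raising `percent` only ever adds users to the rollout.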

Shane Hastie: Another thing that you spoke about before we started recording was shifting left in the design space. What are you doing there? It sounds pretty interesting.

Shifting left in design [11:13]

Aviran Mordo: We talked about how we are constantly trying to find ways of improving our development velocity. So up until now we talked about the backend side. So let’s talk about the frontend side. Since Wix is a website building platform, we employ the largest number of frontend developers in Israel and have the largest design studio in the country. So we have a lot of experience seeing how frontend developers and designers actually work together.

And what we saw is that they don’t really work together, they work alongside each other. So in most cases, when you want to design a new web application or a website, you have the designer design in whatever design tool they want, be it Photoshop or Figma or any design tool that they feel comfortable with. And then they hand over the designs to the developers, who try to copy them, to the best of their ability and their tools, into HTML and CSS.

It’s a web browser, so these are two different tools that are not always compatible in their capabilities. And there is always this back and forth ping-pong between designers and developers. And then for every change, even during the life cycle of a product, for every change that marketing wants to do or the business product wants to do, they have to go back to the developers: “Hey, I need to move this button from here to there. Please change this color, or we want to do a test,” or something like that. And that is kind of wasting the time and talent of developers, because if I need to move something on the screen, it’s something that you don’t really need a developer to do. So we want developers and designers to work on the same tools. So this is where the movement of low code comes into place.

So Wix is one of the players in this area. We have our own product, it’s called Editor X, where designers and developers basically can work on the same tools. While designers move components around and put them on the screen, developers go into the same IDE, which is basically a visual IDE, and codify their components. So instead of having developers … I wouldn’t call it wasting their time, but investing their time in trying to move things around or doing the pixel-perfect work, messing around with CSS and browser compatibilities, developers and designers work together as one team.

So the designer becomes just another developer with their own responsibility, and developers codify the actual business logic that runs behind the component. So we created a holistic team that can actually work faster and better. And if product managers want to change something on the screen, they don’t need to go to developers, who are basically the most expensive resource in the organization. They can have the designers or the UX experts just play around with the design and change the design without affecting the code, because they are working in the same environment.

Shane Hastie: So this is a tighter collaboration. These are often people with very different skill sets, but also perspectives. How do you bring them together into this one team? How do you create the one team culture?

Enabling one-team culture [14:50]

Aviran Mordo: So this is something that is ingrained in the way that Wix is working. Our organizational structure is basically defined as we call them companies and guilds. So companies think about it as a group of people that are responsible for business domains. So they actually build the product and they have their own 1, 2, 3, how many teams that they need to work. So in this team, you have developers, you have QA, you have product managers, you also have UX experts and designers, and they’re all part of the same team.

And then you have the guilds, the professional guilds that are responsible for having the standards and the professional expertise and building the tools for each profession. So having them working on the same team, having the same business goals, creates a holistic team. This ties into the whole DevOps, continuous delivery culture: instead of throwing the work over the fence and passing on the responsibility, “Okay, I’m done with my work now. Okay, product manager is done with the work, now go developers, develop. Now QA, test it,” we create this whole, again, dev-centric, developer-centric culture where it’s one team with subject matter experts, all working together as a team to help the developers ship the product. So designers just become an integral part of the development team.

Shane Hastie: That structure makes sense with the companies and the guilds, what you’re calling the company there, I would possibly have called it a value stream. How are these companies incentivized?

Incentivizing internal companies [16:33]

Aviran Mordo: Well, they have their own business KPIs, and it’s not a P&L, especially at Wix, because we have companies whose products earn money. For instance, the e-commerce platform: this is a product that makes money. You have people going and buying and stuff. But we have another company, for instance, the blog company. The blog is an add-on. It’s a necessity for the whole Wix ecosystem because most websites need a blog. But the blog is a free product. So each company has its own KPI. So the blog KPI will be adoption or number of customers, and they’re not paying customers.

But we see that there is adoption of the product. A lot of websites have installed blogs, while, let’s say, the ecom platform or the payments company that is responsible for receiving and processing payments and stuff, they have their own KPIs. So payments is not something that you sell, but it’s something that you process. So the payments KPI will be the number of transactions that we processed, or how many customers decided to install, for instance, Wix Payments as opposed to just using PayPal or any alternative payment provider. So they have their own KPIs and the ecom platform has its own KPIs, right? Number of stores, or within the stores, the number of transactions or the GPV. Each company has its own metrics.

Shane Hastie: And how do you keep those aligned with the overall organizational goals?

Aviran Mordo: That comes to our organizational structure. We specifically call them companies and not value streams because we treat them as startups within the big organization. So each company has its own quote unquote CEO. We call them head of company, and they have their own engineering manager. And basically they get funded by Wix as a whole. And while they have their own KPIs, if you think about startups, startups have a board of directors that aligns them with strategic goals. So each company has what we call a chairman, which is basically the board of directors.

The chairman is one of Wix’s senior management. And between the head of company and the chairman, they can decide like 95% of the things together without involving all of Wix’s management. So the chairman, who serves as the board of directors, aligns the company with the needs of Wix and also considers the needs of this mini-company itself within Wix. So it’s just like any other startup.

Shane Hastie: Interesting structure and sounds like an interesting place to work. Thank you very much. Some great examples of tools, techniques to improve velocity, and I think there’s a lot that our audience will take away from this. If people want to continue the conversation, where do they find you?

Aviran Mordo: They can find me on LinkedIn mostly. I’m also on Twitter, but mostly on LinkedIn.

Shane Hastie: Wonderful. Thank you so much.

Aviran Mordo: Thank you very much. It’s been a pleasure.

MMS • Tapabrata Pal

Transcript

Pal: I’m Topo Pal. Currently, I’m with Fidelity Investments. I’m one of the vice presidents in enterprise architecture. I’m responsible for DevOps domain architecture and open source program office. Essentially, I’m a developer, open source contributor, and a DevOps enthusiast. Recently, I authored a book called, “Investments Unlimited.” It is a story about a bank undergoing DevOps transformation, and suddenly hit by a series of findings by the regulators. The book is published by IT Revolution and available on all the major bookselling sites and stores, so go check it out.

Interesting Numbers

Let’s look at some interesting numbers. By 2024, 63% of the world’s running software will be open source software. In AI/ML specifically, more than 70% of software is open source. The top four OSS ecosystems have seen a 73% increase in downloads. Python library downloads have increased by 90%. A typical microservice written in Java has more than 98% open source code. If you look at the numbers, they’re quite fascinating. They’re encouraging, they’re interesting, and scary, all at the same time. Interesting is just the numbers: 63%, 70%, these are all really interesting numbers. Encouraging is the big numbers, just seeing how far open source has come. The scary part is, again, those big numbers from an enterprise risk standpoint, which basically means that most of the software that any enterprise operates and manages is out of their hands, out of their control. It is actually written by people outside of the enterprise. However, about 90% of IT leaders see open source as more secure. There’s an argument against this, the bystander effect. Essentially, everyone thinks that someone else is making sure that it is secure. The perception is that it is more secure, but if everybody thinks like that, then it may not be the reality. Whatever it is, just the fact that 90% of leaders trust open source is huge. Until this happens: everything goes up in smoke when a little thing called Log4j brings down the whole modern digital infrastructure.

Log4j Timeline

Then, the next thing to look at is the timeline of Log4Shell. I took this screenshot from Aquasec’s blog. This is one of the nicest timeline representations of Log4j. This is just for reference. Nothing was officially public until the 9th of December 2021. Then, over the next three weeks, there are three new releases and correspondingly new CVEs against those, and more. It was a complete mess. It continued to the end of December, and then bled into next year, January, which is this year. Then February, and maybe it’s still continuing. We’ll see.

Stories About Log4j Response

Let’s start with the response patterns. I’ll call them stories. As a part of writing this paper, we interviewed or surveyed a handful of enterprises across financial, retail, and healthcare companies of various sizes. These companies will remain anonymous. The survey is not scientific by any means. Our goal was not to create a scientific survey, we just wanted to get a feel for what the industry and the people in the industry experienced during this fiasco, and how they were impacted. I think most companies will fit into one of these stories. These stories are not individual enterprises that we picked from our sample. We collected all the stories and grouped them into three different buckets, and that’s how we are presenting them. Even though it may sound like I’m talking about one enterprise in each of these stories, I’m not. I also thought about naming the stories the good, the bad, and the ugly. I don’t think that’s the right way to put it. We’ll use number one, number two, and number three, as you will see that there is nothing bad or ugly about it. It’s just about these enterprises’ maturity. The stories are important here, because you’ll see why things are the way they are.

The question is, what did we actually look at when we built the stories and created these response patterns? These are the things that we looked at. Number one, the detection, or when someone in a particular enterprise found out about this, and then, what did they do? Number two is declaration. At what point was there a formal declaration across the enterprise saying that there is a problem? Typically, this is from a cyber group or information security department, or from the CISO’s desk. Next is the impact analysis and mitigation. How did these enterprises actually go about impact analysis and mitigation? Mitigation as in stop the bleed. Then the remediation cycles. We call it cycles intentionally, because there was not just one single remediation, there were multiple, because as I said, multiple versions came along in a period of roughly three weeks. Some cycles were also generated by the enterprise risk management processes, which go after one set of applications first, then the next set, then the third set. We’ll talk about those. The last one is the people. How did the people react? What did they feel? How did they collaborate? How did they communicate with each other? Overall, how did they behave during this period of chaos? We tried to study that. Based on these, we formed the three patterns, which I’m calling three stories.

Detection

With that, let’s look at the first news of the Log4j vulnerability, the power of social media. It came out at around 6:48 p.m. on December 9, 2021. I still remember that time because I was actually reading when it popped up on my Twitter feed. I actually called our InfoSec people. That’s the start of the Log4j timeline. With that, let’s talk about detection on December 9th, 2021. Note that InfoSec was not the first to know about it. Maybe they knew about it, but they were not the first. Right from the get-go, there’s a clear difference between the three with regards to just handling the tweet. Number one, cyber engineering: some engineers in the cyber security department noticed the tweet and alerted their SCA, or software composition analysis, platform team immediately. Then other engineers noticed it too in their internal Slack channel. The SCA platform team sent an inquiry email to the SCA vendor that same evening.

Number two, similarly, some engineers noticed that tweet and sends the Twitter link to the team lead. Then the team lead notifies the information security officer, and it stops there. We don’t know what happened after that, in most of the cases. Number three, on the other hand, there’s no activities to report, nobody knows if anybody has seen that, or if they did anything. Nothing to track. Notice the difference between number one and number two. Both of them are reacting, their engineers are not waiting for direction. We don’t know what the ISOs did after number two, actually. The team lead in number two enterprise notified the ISO, and we don’t know what the ISO did after that. Let me now try to represent this progress in terms of some status so that we can visualize it and understand what’s going on here. In this case, number one is on green status on zero day. Number two is also green. Number three is kind of red because we don’t know what happened. Nobody knows.

Declaration

With that, let’s go to the next stage, which is declaration, or in other words, when InfoSec or cyber called it a fire and said that we have a problem. Number one: on the morning of December 10th, the SCA vendor confirms the zero day. Almost at the same time, engineers start seeing alerts in their GitHub repositories. Then the cyber team declares a zero day and informs the senior leadership team, and the mass emails go out. This is all in the morning. I think they are green on day one. They reacted in a swift manner, which put them in green status. Number two: the ISO who was informed about this on the previous day notifies the internal red team. The red team discusses it with some third-party vendors, and they confirm that it is a zero day. Then the CISO is informed by midday. Then there is a senior leadership meeting during the late afternoon, where they discuss the action plan. They’re on yellow because it is late in the day by the time the senior leadership team is actually discussing the action plan. They should have done it early in the morning, I think, like number one. Number three, on the other hand: the red team receives the news in the morning in their daily security briefing feed. Then the Enterprise Risk Office is notified before noon. Then the risk team meets with the cyber team and various “DevOps” teams. The first thing they do is enter the risk in their risk management system. That’s why they’re yellow.

Impact Analysis and Mitigation

On the impact analysis and mitigation process, this is about, first try to stop the bleed. Second, where the impact is, which application, which platform, which source code? Then, measure the blast radius and find out what the fix should be, and then go about it. Number one, before noon on December 10th itself, right after they send out that mass email, they actually implemented the new firewall rule to stop the hackers coming in. By noon, the impacted repositories were identified by the SCA platform team, so they have a full list of repositories that needed to be looked at or at least impacted from this Log4j usage. By afternoon, external facing applications start deploying the fix via CI/CD. Note that these are all applications that are developed internally. These are not vendor applications, not vendor supplied or vendor hosted applications. Overall, I think number one has done a good job. They responded really quickly. On the same day, they started deploying the changes to external facing applications. Number two, on the other hand, they implemented the firewall rule at night. You may ask, why did they do that, at night or wait until the night? I do not know that answer. Probably, they’re not used to changing production during the day, or maybe they needed more time to test it out.

Number two, however, struggled to create a full impact list. They had to implement custom scripts to detect all Log4j library usage in their source control repositories. I think they are a little bit behind, because they took a couple of extra days to create the full impact list. They really did not have a good one to start with, so all those custom scripts had to be created. Number three, on the other hand, went to their CMDB system to create a list of Java applications. There are two assumptions in that. One is that they assumed all Java applications are impacted, whether or not they used that particular version of Log4j. The second is that they assumed their CMDB system is accurate. The question is, how does the CMDB system know which applications are Java applications? Essentially, developers manually tag their CMDB entries with Java as the programming language. Based on that CMDB entry list, emails were sent out to all the application owners, and then a project management structure was established to track the progress. Nothing is done yet. Nothing is changed. It’s just about how to manage the project and getting a list. I think they are red on the fourth day.
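A custom script of the kind described for number two might look roughly like the sketch below, which walks checked-out repositories and reports `log4j-core` versions declared in Maven `pom.xml` files. This is an assumption about what such a script could do, not what any surveyed enterprise ran; the function names are hypothetical. It only sees direct declarations with literal versions, so transitive dependencies, Gradle builds, shaded jars, and property placeholders such as `${log4j.version}` are not resolved, which is part of why a software composition analysis tool produces a more complete list.

```python
import xml.etree.ElementTree as ET
from pathlib import Path
from typing import Iterator, Tuple, Union


def _strip_ns(tag: str) -> str:
    # Maven POMs usually carry an XML namespace: "{namespace}dependency".
    return tag.rsplit("}", 1)[-1]


def find_log4j_in_pom(pom_xml: Union[str, bytes]) -> Iterator[str]:
    """Yield the declared version of every log4j-core dependency in one POM."""
    root = ET.fromstring(pom_xml)
    for elem in root.iter():
        if _strip_ns(elem.tag) != "dependency":
            continue
        fields = {_strip_ns(c.tag): (c.text or "").strip() for c in elem}
        if (fields.get("groupId") == "org.apache.logging.log4j"
                and fields.get("artifactId") == "log4j-core"):
            # Version may be inherited from a parent POM and absent here.
            yield fields.get("version", "unspecified")


def scan_repos(root_dir: Path) -> Iterator[Tuple[Path, str]]:
    """Yield (pom path, declared version) for every hit under root_dir."""
    for pom in root_dir.rglob("pom.xml"):
        try:
            for version in find_log4j_in_pom(pom.read_bytes()):
                yield pom, version
        except ET.ParseError:
            # Skip malformed files rather than abort the whole scan.
            continue
```

Calling `scan_repos(Path("/path/to/checkouts"))` (a placeholder path) would produce the starting point for an impact list, keyed by repository file rather than by CMDB entry.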

Remediation Cycle

Let’s look at the start of the remediation cycle. Of course, there are multiple rounds of remediation. One thing I’ll call out is that in all the enterprises that we spoke to, they all had the notion of internal facing application versus external facing application. Any customer facing application is external facing application, the rest are internal facing application. Every enterprise seemed to have a problem in identifying these applications and actually mapping them with a code repository or a set of code repositories, or an artifact in their artifact repository. It is more of a problem if you use some shared library or shared code base all across your applications across the whole enterprise. Then, we also notice that all enterprises have a vulnerability tracking and reporting mechanism that is fully based on these application IDs in their IT Service Management System, where, actually, the applications are marked as internal versus external, they have to fully rely on that. We may or may not agree with this approach, but this is a common approach across all the big enterprises that we talked to. I think it’s a common struggle.

Back to the comparison. Number one: during December 11th and 12th, they fixed all their external facing applications, and then they started fixing their internal facing applications on December 13th. I think they’re a little bit yellow on that side, because I think they struggled a bit in identifying these two sets of applications, internal versus external, and spent some cycles there. Number two, on the other hand, reacted to the new vulnerability on the 13th. They were in the middle of fixing the first one when the second one came in, and then there was confusion. On December 14th, they opened virtual war rooms, all on Zoom, because people were still remote at that point. 50% of external facing applications had fixed their code; some of them were in production, and most of them were not. Number three, on the other hand, was manually fixing and semi-manually deploying over the period of December 14th to 16th. They had incorrect impact analysis data, so basically, they were fixing whatever they could and whatever they knew about. The project is of course in red status, and we don’t dispute that, so we put a red status on this remediation cycle.

Remediation cycle continues. Number one, through the period of 13th to 19th, they even fixed two new vulnerabilities, two new versions, Log4j 2.15 and then 2.16. That’s two more cycles of fixes, all automated, and then all external facing applications are fixed. Number two, the war room continues through the whole month of December, with external facing applications fixed multiple times via a semi-automated pipeline. Internal facing applications must be fixed by the end of January 2022. They did actually determine that with the firewall mitigation and external facing applications being fixed, they don’t have that much risk with internal facing. Based on that, they decided to give the developers one month to fix all the internal facing applications. Number three, some applications are actually shut down due to fear. This is quite hard to believe, but this is true: they got so scared that they actually shut down some customer facing applications so that no hacker from outside can come in. At that time, they basically did not have a clear path in sight to fix everything that was really impacted. At this point, I’d say number one is green, and number two, on the 20th day, is still red, because they’re not done yet. Number three, who knows? They’re red anyway.

Aftershock Continues

Aftershock continues. Vendors start sending their fixes starting January 2022 for number one, but I think overall, within 40 days they fixed everything. I think they’re green. For number two, it took double the time, but I think they reached, so that’s why they’re green. They at least finished it off. Number three, most known applications are fixed. The unknowns are not of course fixed. As I said, at least number one and number two, they claimed to have fixed everything. The interesting part, maybe it’s because of the number three, is that from Maven Central standpoint, Log4j 2.14 and 2.15 are still getting downloaded as I speak. Which enterprises are downloading? Why? Nobody knows, but they are, which basically tells me that not all Java applications that were impacted by Log4j 2.14 actually got remediated, so they’re still there. Maybe they have custom configurations to take care of that, or they’re not fixed. I don’t know. I couldn’t tell. At this point, if you look at the status, as I said, it’s number one, green, number two, green, and number three is red.

Effect on People

Let’s talk about the effect on people. Most of us, the Java developers, and people around the Java applications, want to forget these few weeks and months. Actually, it was the winter vacation that got totally messed up. Everyone saw some level of frustration and burnout, and had to change their winter vacation plan. Number one, the frustration was mostly around these multiple cycles of fixes: you fixed it and you thought you were done, and you were packing up for your vacation. Then, your boss called you and said that, no, you need to delay a little bit. A few had to delay their vacation for a few days, but overall there was no impact on any planned vacation. Everybody who had planned for vacation actually went for vacation happily. Number two, people got burnt out. Most Java developers had to cancel vacation. Many others around the Java development teams, like management, scrum masters, and release engineers, canceled their vacations too. The other impact of this was that for most of these enterprises, January and February actually became their vacation months, which basically means that the full year 2022’s feature delivery planning got totally messed up because of that. Number three, people really got burnt out. Then, their leadership basically said that, ok, this is the time where we should consider DevSecOps, as a formal kickoff of their DevOps transformation. We don’t know, we did not keep track as to what happened after that.

Learnings – The Good

That’s the story. We wrapped up the story, or the response patterns. Let’s go through the learnings. The learnings have two sides to it. One is, let’s understand first, the key characteristics of these three groups that we just talked about. Then, from those characteristics, we will try to form the key takeaways. Let’s look at the characteristics for the good one, or the number one. They had software composition analysis tool integrated with all of their code repository, so every pull request got scanned. If any vulnerabilities were detected in that repository, they got feedback through their GitHub Issues. They had complete software bill of material for all repositories. I’m not talking about SPDX or CycloneDX form of SBOM, these are just pure simple dependency lists, and they have metadata for every repository. They also had fully automated CI/CD pipeline. As you noticed here, that unless a team had a fully automated CI/CD pipeline, manually fixing and deploying three changes in a row within two weeks is not easy all across the enterprise.

They had mature release engineering and change management process that are integrated with their CI/CD pipeline that helped them actually to carry out this massive amount of changes within a short timeframe, across the whole enterprise. They also had good engineering culture collaboration, as you have seen during the declaration and detection phases. The developers were empowered to make decisions by themselves. As I mentioned, during the declaration phase, the developers knew that it is coming and it is bad, and it’s going to be bad. They did not actually wait for the mass email that came out from the CISO’s desk. They actually were trying to fix right from the get-go. They noticed it first and then they took it upon themselves to fix that thing. They did not wait for anyone. That’s called developer’s empowerment. Also, the culture that the developers can make a decision by themselves, whether to fix it or not, and actually not wait for InfoSec. Then, of course, this resulted in good DevOps practices, good automation all the way through. Most of these applications in these enterprises, they resided on cloud, so no issue there.

Learnings – The Not-So-Good

On the other hand, the not-so-good, or number two. They had SCA coverage, but limited. It was not shifted left. Most of these enterprises, for most of these applications, scanned right before the release, which was too late. They are on the right side of the process, not on the left side. They did not have the full insight into the software bill of materials or simple dependency tree. They had some. They had a semi-automated CI/CD pipeline, which basically means complete CI automation, but not so much on the CD side or the deployment side. They did have a good change management process. What I’m saying is that these enterprises are actually very mature enterprises. It’s just that they got hit with Log4j in a bad way. They do have good team collaboration. The cyber engineers saw that, then immediately the engineer passed it on to the team’s lead who passed it on to the ISO. That’s a good communication mechanism and team collaboration. They did have a bit of hero culture. Since they did not have full SCA coverage, some of their developers stood up and said that they were going to write a script, scrape the whole heck out of their internal GitHub, and report out on all the repositories that had a notion of Log4j in their dependency file, or pom.xml, or Gradle file. With that, they struggled quite a bit.
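The kind of ad hoc script described here can be sketched in a few lines. This is only an illustration, not the script those developers wrote: it assumes the repositories are already cloned side by side under one local directory, and the names `find_log4j_references`, `BUILD_FILE_NAMES`, and `demo` are made up for this example.

```python
import re
import tempfile
from pathlib import Path

# Build files named in the talk: pom.xml and Gradle files.
BUILD_FILE_NAMES = {"pom.xml", "build.gradle", "build.gradle.kts"}
LOG4J_PATTERN = re.compile(r"log4j", re.IGNORECASE)


def find_log4j_references(root):
    """Map each repository under `root` to the build files that mention log4j.

    Assumption: every immediate subdirectory of `root` is one cloned repository.
    """
    hits = {}
    for repo in sorted(p for p in Path(root).iterdir() if p.is_dir()):
        for path in sorted(repo.rglob("*")):
            if not (path.is_file() and path.name in BUILD_FILE_NAMES):
                continue
            text = path.read_text(encoding="utf-8", errors="ignore")
            if LOG4J_PATTERN.search(text):
                hits.setdefault(repo.name, []).append(
                    path.relative_to(repo).as_posix()
                )
    return hits


def demo():
    """Build a tiny throwaway tree of fake repositories and scan it."""
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "billing" / "service").mkdir(parents=True)
        (root / "billing" / "service" / "pom.xml").write_text(
            "<artifactId>log4j-core</artifactId>", encoding="utf-8"
        )
        (root / "portal").mkdir()
        (root / "portal" / "build.gradle").write_text(
            "implementation 'org.slf4j:slf4j-api:1.7.32'", encoding="utf-8"
        )
        return find_log4j_references(root)
```

A text search like this only sees Log4j where a build file names it directly. It misses transitive dependencies, which is one reason a script of this kind is a stopgap rather than a substitute for full SCA coverage.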

Learnings – The Bad

Let’s talk about number three, they did not have anything. No SCA, no tooling. As I said, classic CMDB driven risk management. It’s basically, look at your CMDB, look at all the Java applications and just inform the owner, and it’s their responsibility. We’re just going to track from a risk management perspective. They did have DevOps teams, but they were not so effective. They’re just called DevOps teams, probably, they’re just renamed Ops team and not so much DevOps. Of course, as you can tell, that they have a weak engineering culture and silos. Basically, even if somebody saw something, they would not call cyber, because they don’t know that they’re supposed to do that, or they’re empowered to do that, or anybody would entertain anybody’s communication that way. We don’t know, so let’s not make any judgment call. I think, overall, what we found is that they had weak engineering culture across the enterprise.

Key Takeaways

The key takeaways from these. I would like to take a step back and try to answer these four questions. Number one, what are the different types of open source software? How do you bring them in, in your enterprise? Then, once we bring them in, how should we keep track of their usages? Then the last question is, what will we do when the next Log4Shell happens? Are we going to see the same struggle or are things going to improve? Let’s go through one by one. The first thing, open source comes in many forms. Some are standalone executables. Some are development tools, utilities. Some are dependencies, that’s the Log4j’s. These are the things that get downloaded from artifact repositories and get packaged with the actual application. There is even source code that gets downloaded from github.com, and then gets into the internal source code repositories or source code management system. They come in different formats too, like jar, tar, zip, Docker image. These are the different forms of open source. Log4j is just the third bullet point; it falls into the dependencies. Most of the time, we don’t look at the other types. They also come in via many paths. First, developers could directly download from public sites. Some enterprises have restrictions around that, but that creates a lot of friction between the proxy team and the developer or the engineering team. Some enterprises chose not to stop that, which basically means developers can download any open source from public sites.

Pipelines can download build dependencies from their continuous integration pipeline. Most of these build dependencies, in all the enterprises that we saw, in number one and number two group, they actually downloaded from an internal artifact repository like Artifactory, or Nexus. For standalone software tools and utilities, many enterprises have an internal software download site. Then production systems sometimes directly download from public sites, which is pretty risky, but it happens. Then, open source code gets into internal source code. We don’t usually pay attention to all of these paths, we just think about the CI pipeline downloading Log4j and all that, but the other risks are already there. All of these are known, if we narrow it down, we could write down more actually. Then, what are the risks involved with that? We need to do the risk mitigation or risk analysis on that anyway.

To track how they’re used or if you want to manage this usage, you firstly need to know what to manage. What to manage comes from what we have. First thing is for all applications, whether they are internally developed applications, or vendor software, or standalone, another open source software, we need to gather all of them and their bill of materials, and store them in a single SBOM repository. This could be, as I said, internally developed application, runtime components, middleware, databases, tools, utilities, anything that is used in the whole enterprise. Then we need to continuously monitor that software bill of materials, because without the continuous monitoring of the software bill of materials, it’s hard to tell whether there is a problem or not, or when there is a problem. With continuous SBOM monitoring, we need to also establish a process to assess the impact. If that continuous monitoring detects something and creates an alert, what is going to happen across the enterprise? What is the process of analyzing that impact? Who is going to be in charge of that? All this process needs to be documented. Then, establish process to declare vulnerability. At which point, who is going to declare that we have a big problem and what is the scope of the problem? Who is going to get notified and how? All this needs to be written down, so that we do not have to manufacture these, every time there is a Log4Shell. Without these, we will run into communication problems, communication breakdown. It will unnecessarily cause a lot of pain or friction, to a lot of folks, that we do not want.
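The impact question above ("which applications ship the affected component?") can be illustrated as a lookup over a central dependency inventory. The sketch below is a minimal one under stated assumptions: the inventory is an in-memory list, versions are purely numeric, and the names `Component`, `AppRecord`, `affected_apps`, and the `APP-00x` IDs are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Component:
    name: str
    version: str


@dataclass
class AppRecord:
    app_id: str
    components: list = field(default_factory=list)


def parse_version(version):
    """Turn '2.14.1' into (2, 14, 1), padding so '2.15' equals '2.15.0'.

    Assumption: purely numeric dotted versions (no '-rc1' style qualifiers).
    """
    parts = [int(p) for p in version.split(".")]
    parts += [0] * (3 - len(parts))
    return tuple(parts)


def affected_apps(inventory, component_name, fixed_in):
    """List (app_id, version) pairs that ship the component below `fixed_in`."""
    threshold = parse_version(fixed_in)
    found = []
    for app in inventory:
        for comp in app.components:
            if comp.name == component_name and parse_version(comp.version) < threshold:
                found.append((app.app_id, comp.version))
    return found


# Hypothetical inventory, standing in for a single SBOM repository.
INVENTORY = [
    AppRecord("APP-001", [Component("log4j-core", "2.14.1"), Component("guava", "31.0")]),
    AppRecord("APP-002", [Component("log4j-core", "2.16.0")]),
    AppRecord("APP-003", [Component("log4j-core", "2.15")]),
]
```

Calling `affected_apps(INVENTORY, "log4j-core", "2.16.0")` returns the two applications still below that version. Which version counts as fixed is an input here, since that answer changed more than once during the Log4j remediation cycles.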

In terms of mitigation and remediation, we need to decide whether we can mitigate only, or we need to remediate or fix the root cause as well. In some cases, just mitigation is good enough. Next, do we need to manually remediate or do we need to automate that? It depends upon what it is, how big it is, and whether we have the other things to support us in this automated mitigation process. Prioritization is another big thing, because as I said, external versus internal, or what kind of open source it is, the prioritization will depend upon that, and its risk profile. That prioritization itself can be a complex one and a time-consuming one too. Then, for deploying the remediation, automation is the only way to go.
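As a rough illustration of the external versus internal prioritization, the affected applications can be sorted into a remediation order. This is a sketch only: the exposure labels, the `EXPOSURE_RANK` table, and the choice to rank unclassified applications alongside external ones are assumptions of this example, not something the talk prescribes.

```python
# Lower rank is fixed first. Unknown exposure is ranked with external apps
# on purpose: an unclassified app cannot be assumed to be safe (assumption).
EXPOSURE_RANK = {"external": 0, "unknown": 0, "internal": 1}


def remediation_order(apps):
    """Sort (app_id, exposure) pairs: external and unknown first, then internal."""
    return sorted(apps, key=lambda app: (EXPOSURE_RANK.get(app[1], 0), app[0]))


# Hypothetical list of affected applications and their exposure labels.
AFFECTED = [
    ("APP-010", "internal"),
    ("APP-003", "external"),
    ("APP-007", "unknown"),
    ("APP-001", "internal"),
]
```

A real risk profile would weigh more than one field, but even this single ranking depends on the application inventory carrying a reliable internal or external label.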

The last thing, probably the most important tool that we have is the organizational culture. I’m convinced that the Log4Shell response within an enterprise says a lot about the enterprise’s DevOps and DevSecOps technical maturity and culture, the engineering culture, leadership style, organizational structure, how people react and collaborate, and their overall business mindset. Do they have a blameless culture within the organization? That is also something that comes out of these kinds of events. We often use the term shift left security. We most likely mean that having some tools in our delivery pipeline or code will solve the problem, but it is more than that. We need to make sure we empower the developers to make the right decisions while interacting with these tools. Each of these tools will generate more work for the developers. How do we want the developers to prioritize these security issues over feature delivery? Can they decide? Are they empowered to decide themselves? Lastly, practicing chaos. Just like all other chaos testing, we need to start thinking about practicing CVE testing, chaos testing. Practice makes us better. Otherwise, every time there is a Log4Shell, we will suffer. We will spend months chasing this, and we will have demotivated associates, and that will not be good. Because the question is not if; the question is, when will there be another Log4Shell?

Questions and Answers

Tucker: Were there any questions in your survey that asked about the complete list of indicators of compromise analysis?

Pal: Yes, partially, but not a whole lot. We just wanted to know the reaction more than the action. We did ask about, how do you make sure that everything is covered? We did ask about whether you completely mitigated, or remediated, or both. That was not our intent of the questions.

Tucker: As a result of all the research that you’ve done, what would you prioritize first to prepare for future vulnerabilities, and why?

Pal: First, we have to know what we have. The problem is that we don’t know what we have. That is because, first of all, we don’t have the tools. Neither do we have a collective process of identifying the risks where we need to focus on. There are many forms of open source that we don’t have control over. There are many ways that we can get them in our environment. We only focus on the CI/CD part, and that too in a limited way. We have a lot more way to go. The first thing is, know what you have first before you start anywhere else.

Tucker: Are you aware of any good open source tools or products for helping with that, managing your inventory and doing that impact analysis?

Pal: There are some good commercial tools. In fact, on GitHub itself, you have Dependabot easily available and a few other tools. Yes, start using that. I think we do have some good tools available right away, you should just pick one and go.

Tucker: Once you know what you have that you need to deploy, and you’re able to identify it, I know that you talked about automation for delivery and enabling automated deployment release. What technical foundations do you think are really important to enable that?

Pal: In general, to enable CD, continuous delivery in a nice fashion, I think the foundation block is the architectural thing. Because if it is a monolithic architecture, and we all know that if it is a big monolithic application, yes, we can get to some automated build and automated deployment, but not so much on the continuous integration in the classic sense, and in the continuous delivery in the classic sense. That can be automation, but that’s about it. To achieve full CI and CD, and I’m talking about continuous delivery, not continuous deployment, we actually need to pay attention to what our application architecture looks like, because some architecture will allow you to do that, some architecture will not allow you to do that, period.

Tucker: What are some other patterns that you would recommend to allow you to do that?

Pal: For good CI/CD, decoupling is number one, so that I can decouple my application with respect to anything else, so that I can independently deploy my changes, and I don’t have to depend upon anything else, or anybody else around me. Number two is, as soon as I do that, then the size of my thing comes into picture. The ideal case is, of course, the minimal size, which is the microservice, I’m going towards that term without using that term. Essentially, keep your sizes low, be lean and agile, and deliver as fast as possible without impacting anybody else.

Tucker: One of the things that I love that you brought up in your talk was the idea of practicing chaos. In this context, what ideas do you have for how that would look?

Pal: If we have the tools developed, and we have an inventory of things that we have in terms of open source, then, just like chaos, let’s find out how many different ways we can actually generate a problem, such as declaring a dummy CVE as a zero-day vulnerability with a score of 10, and see what happens in the enterprise. Those kinds of things, so that you can formulate a few scenarios like that, and see what happens. You can do that at multiple levels. You can do it at the level where it’s just the developer tools on somebody’s laptop, versus a library like Log4j that is used everywhere, almost. There are various levels that you can do that.

Tucker: I love that idea, because I think with these things that we don’t practice very often that could give us a muscle to improve and measure our response over time.
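One way to picture measuring the response over time is to record a timeline for each drill and compare drills against each other. The sketch below is only a sketch: the `DrillAdvisory` and `DrillTimeline` types, the drill ID, and all dates are invented for illustration, and a real drill would capture far more than three timestamps.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class DrillAdvisory:
    advisory_id: str  # made-up drill id, deliberately not a real CVE number
    component: str
    score: float      # severity score announced for the drill


@dataclass(frozen=True)
class DrillTimeline:
    declared_at: datetime
    impact_known_at: datetime
    remediated_at: datetime

    def time_to_impact_analysis(self):
        return self.impact_known_at - self.declared_at

    def time_to_remediation(self):
        return self.remediated_at - self.declared_at


def improved(previous, current):
    """True if the current drill reached remediation faster than the previous one."""
    return current.time_to_remediation() < previous.time_to_remediation()


# Hypothetical drill: a dummy zero-day declared against a widely used library.
ADVISORY = DrillAdvisory("DRILL-0001", "log4j-core", 10.0)

# Invented timelines for two runs of the same drill.
FIRST_DRILL = DrillTimeline(
    datetime(2023, 3, 1, 9, 0), datetime(2023, 3, 1, 15, 0), datetime(2023, 3, 3, 9, 0)
)
SECOND_DRILL = DrillTimeline(
    datetime(2023, 6, 1, 9, 0), datetime(2023, 6, 1, 11, 0), datetime(2023, 6, 2, 9, 0)
)
```

Comparing `FIRST_DRILL` with `SECOND_DRILL` through `improved` gives a simple yes or no on whether the practice is paying off, and the two durations show which phase, impact analysis or remediation, took the time.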

MMS • RSS

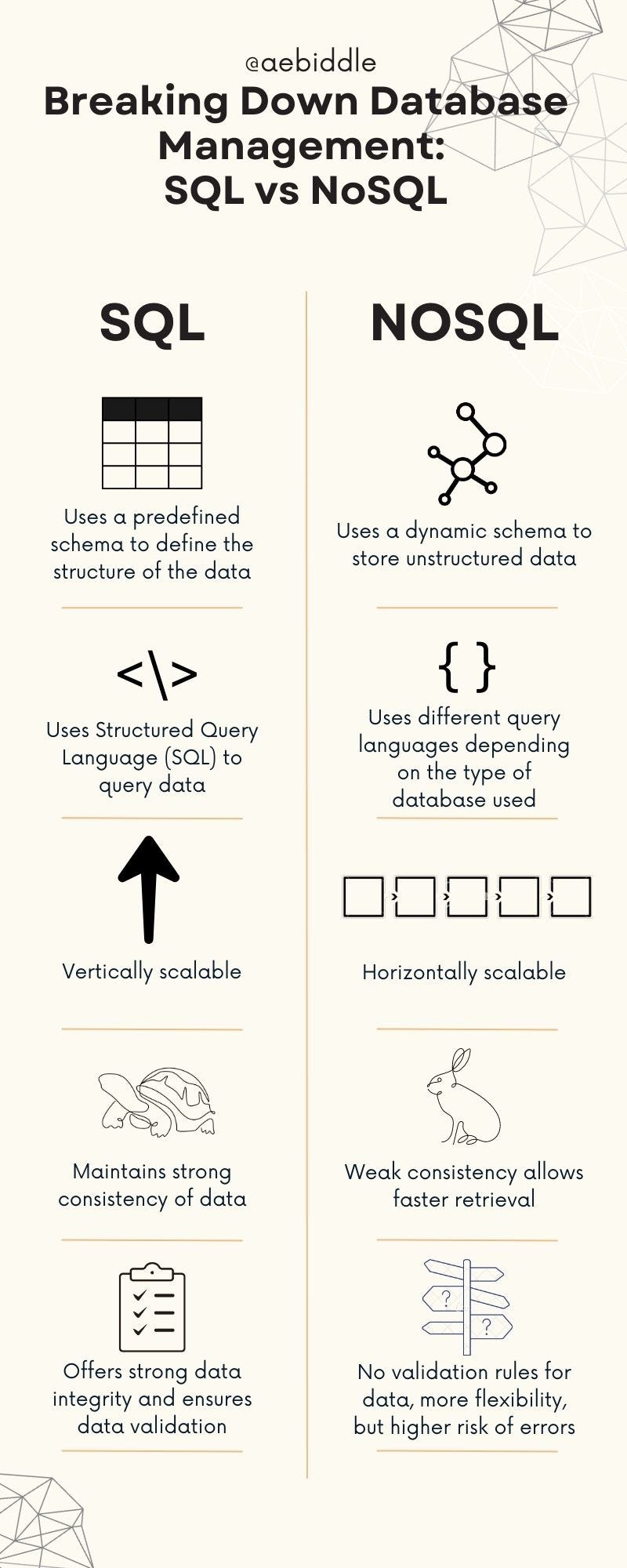

New Jersey, United States – The Global NoSQL Database Market is comprehensively and accurately detailed in the report, taking into consideration various factors such as competition, regional growth, segmentation, and market size by value and volume. This is an excellent research study specially compiled to provide the latest insights into critical aspects of the Global NoSQL Database market. The report includes different market forecasts related to market size, production, revenue, consumption, CAGR, gross margin, price, and other key factors. It is prepared with the use of industry-best primary and secondary research methodologies and tools. It includes several research studies such as manufacturing cost analysis, absolute dollar opportunity, pricing analysis, company profiling, production and consumption analysis, and market dynamics.

The competitive landscape is a critical aspect every key player needs to be familiar with. The report throws light on the competitive scenario of the Global NoSQL Database market to know the competition at both the domestic and global levels. Market experts have also offered the outline of every leading player of the Global NoSQL Database market, considering the key aspects such as areas of operation, production, and product portfolio. Additionally, companies in the report are studied based on key factors such as company size, market share, market growth, revenue, production volume, and profits.

Get Full PDF Sample Copy of Report: (Including Full TOC, List of Tables & Figures, Chart) @ https://www.verifiedmarketresearch.com/download-sample/?rid=129411

Key Players Mentioned in the Global NoSQL Database Market Research Report:

Objectivity Inc, Neo Technology Inc, MongoDB Inc, MarkLogic Corporation, Google LLC, Couchbase Inc, Microsoft Corporation, DataStax Inc, Amazon Web Services Inc & Aerospike Inc.

Global NoSQL Database Market Segmentation:

NoSQL Database Market, By Type

• Graph Database

• Column Based Store

• Document Database

• Key-Value Store

NoSQL Database Market, By Application

• Web Apps

• Data Analytics

• Mobile Apps

• Metadata Store

• Cache Memory

• Others

NoSQL Database Market, By Industry Vertical

• Retail

• Gaming

• IT

• Others

The report comes out as an accurate and highly detailed resource for gaining significant insights into the growth of different product and application segments of the Global NoSQL Database market. Each segment covered in the report is exhaustively researched about on the basis of market share, growth potential, drivers, and other crucial factors. The segmental analysis provided in the report will help market players to know when and where to invest in the Global NoSQL Database market. Moreover, it will help them to identify key growth pockets of the Global NoSQL Database market.

The geographical analysis of the Global NoSQL Database market provided in the report is just the right tool that competitors can use to discover untapped sales and business expansion opportunities in different regions and countries. Each regional and country-wise Global NoSQL Database market considered for research and analysis has been thoroughly studied based on market share, future growth potential, CAGR, market size, and other important parameters. Every regional market has a different trend or not all regional markets are impacted by the same trend. Taking this into consideration, the analysts authoring the report have provided an exhaustive analysis of specific trends of each regional Global NoSQL Database market.

Inquire for a Discount on this Premium Report @ https://www.verifiedmarketresearch.com/ask-for-discount/?rid=129411

What to Expect in Our Report?

(1) A complete section of the Global NoSQL Database market report is dedicated to market dynamics, which include influence factors, market drivers, challenges, opportunities, and trends.

(2) Another broad section of the research study is reserved for regional analysis of the Global NoSQL Database market where important regions and countries are assessed for their growth potential, consumption, market share, and other vital factors indicating their market growth.

(3) Players can use the competitive analysis provided in the report to build new strategies or fine-tune their existing ones to rise above market challenges and increase their share of the Global NoSQL Database market.

(4) The report also discusses the competitive situation and trends and sheds light on company expansions and mergers and acquisitions taking place in the Global NoSQL Database market. Moreover, it brings to light the market concentration rate and market shares of the top three and five players.

(5) Readers are provided with findings and conclusion of the research study provided in the Global NoSQL Database Market report.

Key Questions Answered in the Report:

(1) What are the growth opportunities for the new entrants in the Global NoSQL Database industry?

(2) Who are the leading players functioning in the Global NoSQL Database marketplace?

(3) What are the key strategies participants are likely to adopt to increase their share in the Global NoSQL Database industry?

(4) What is the competitive situation in the Global NoSQL Database market?

(5) What are the emerging trends that may influence the Global NoSQL Database market growth?

(6) Which product type segment will exhibit high CAGR in future?

(7) Which application segment will grab a handsome share in the Global NoSQL Database industry?

(8) Which region is lucrative for the manufacturers?

For More Information or Query or Customization Before Buying, Visit @ https://www.verifiedmarketresearch.com/product/nosql-database-market/

About Us: Verified Market Research®

Verified Market Research® is a leading Global Research and Consulting firm that has been providing advanced analytical research solutions, custom consulting and in-depth data analysis for 10+ years to individuals and companies alike that are looking for accurate, reliable and up to date research data and technical consulting. We offer insights into strategic and growth analyses, Data necessary to achieve corporate goals and help make critical revenue decisions.

Our research studies help our clients make superior data-driven decisions, understand market forecast, capitalize on future opportunities and optimize efficiency by working as their partner to deliver accurate and valuable information. The industries we cover span over a large spectrum including Technology, Chemicals, Manufacturing, Energy, Food and Beverages, Automotive, Robotics, Packaging, Construction, Mining & Gas, etc.

We, at Verified Market Research, assist in understanding holistic market indicating factors and most current and future market trends. Our analysts, with their high expertise in data gathering and governance, utilize industry techniques to collate and examine data at all stages. They are trained to combine modern data collection techniques, superior research methodology, subject expertise and years of collective experience to produce informative and accurate research.

Having serviced over 5000 clients, we have provided reliable market research services to more than 100 Global Fortune 500 companies such as Amazon, Dell, IBM, Shell, Exxon Mobil, General Electric, Siemens, Microsoft, Sony and Hitachi. We have co-consulted with some of the world’s leading consulting firms like McKinsey & Company, Boston Consulting Group, Bain and Company for custom research and consulting projects for businesses worldwide.

Contact us:

Mr. Edwyne Fernandes

Verified Market Research®

US: +1 (650)-781-4080

UK: +44 (753)-715-0008

APAC: +61 (488)-85-9400

US Toll-Free: +1 (800)-782-1768

Email: sales@verifiedmarketresearch.com

Website:- https://www.verifiedmarketresearch.com/

MMS • RSS

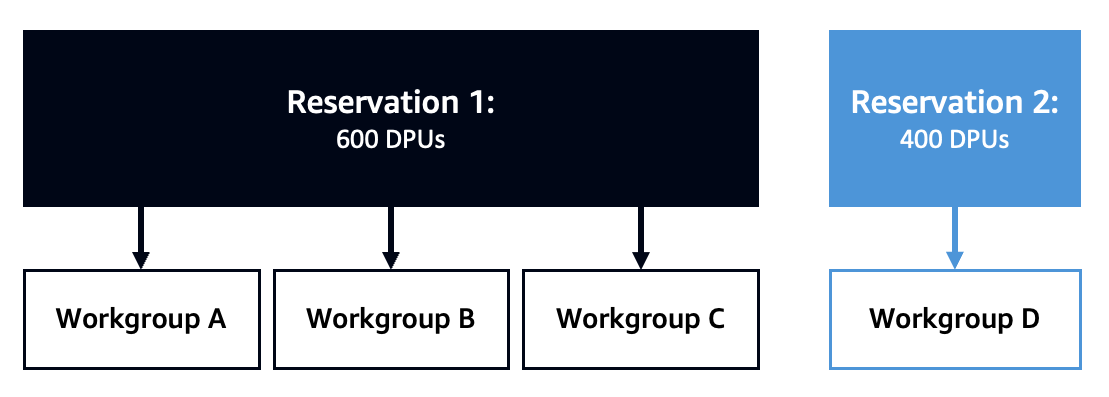

Database-as-a-service (DBaaS) provider DataStax is releasing a new support service for its open-source based unified events processing engine, Kaskada, that is aimed at helping enterprises build real-time machine learning applications.

Dubbed LunaML, the new service will provide customers with “mission-critical support and offer options for incident response time as low as 15 minutes,” the company said, adding that enterprises will also have the ability to escalate issues to the core Kaskada engineering team for further review and troubleshooting.

The company is offering two packages for raising tickets, named LunaML Standard and LunaML Premium, which promise a 4-hour and a 1-hour response time respectively, the company said in a blog post published on Thursday.

Under the standard plan, enterprises can raise 18 tickets annually. The Premium plan offers the option to raise 52 tickets in one year. Plan pricing was not immediately available.

DataStax acquired Kaskada in January for an undisclosed amount with the intent of adding Kaskada’s abilities into its offerings, such as its serverless, NoSQL database-as-a-service AstraDB and Astra Streaming.

DataStax’s acquisition of Kaskada was based on expected demand for machine learning applications.

The company believes that Kaskada’s capabilities can solve challenges of cost and scaling around machine learning applications, as the technology is designed to process large amounts of event data that is either streamed or stored in databases, and its time-based capabilities can be used to create and update features for machine learning models based on sequences of events, or over time.

MMS • RSS

Which one suits your needs?

Global NoSQL Software Market Size, Analysis, Industry Trends, Top Suppliers and COVID …

MMS • RSS

New Jersey, United States – In a recently published report by Verified Market Research, titled, “Global NoSQL Software Market Report 2030“, the analysts have provided an in-depth overview of Global NoSQL Software Market. The report is an all-inclusive research study of the Global NoSQL Software market taking into account the growth factors, recent trends, developments, opportunities, and competitive landscape. The market analysts and researchers have done extensive analysis of the Global NoSQL Software market with the help of research methodologies such as PESTLE and Porter’s Five Forces analysis. They have provided accurate and reliable market data and useful recommendations with an aim to help the players gain an insight into the overall present and future market scenario. The report comprises in-depth study of the potential segments including product type, application, and end user and their contribution to the overall market size.

In addition, market revenues based on region and country are provided in the report. The authors of the report have also shed light on the common business tactics adopted by players. The leading players of the Global NoSQL Software market and their complete profiles are included in the report. Besides that, investment opportunities, recommendations, and trends that are trending at present in the Global NoSQL Software market are mapped by the report. With the help of this report, the key players of the Global NoSQL Software market will be able to make sound decisions and plan their strategies accordingly to stay ahead of the curve.

Get Full PDF Sample Copy of Report: (Including Full TOC, List of Tables & Figures, Chart) @ https://www.verifiedmarketresearch.com/download-sample/?rid=153255

Key Players Mentioned in the Global NoSQL Software Market Research Report:

Amazon, Couchbase, MongoDB Inc., Microsoft, Marklogic, OrientDB, ArangoDB, Redis, CouchDB, DataStax.

Key companies operating in the Global NoSQL Software market are also comprehensively studied in the report. The Global NoSQL Software report offers definite understanding into the vendor landscape and development plans, which are likely to take place in the coming future. This report as a whole will act as an effective tool for the market players to understand the competitive scenario in the Global NoSQL Software market and accordingly plan their strategic activities.

Global NoSQL Software Market Segmentation:

NoSQL Software Market, By Type

• Document Databases

• Key-value Databases

• Wide-column Store

• Graph Databases

• Others

NoSQL Market, By Application

• Social Networking

• Web Applications

• E-Commerce

• Data Analytics

• Data Storage

• Others

Competitive landscape is a critical aspect every key player needs to be familiar with. The report throws light on the competitive scenario of the Global NoSQL Software market to know the competition at both the domestic and global levels. Market experts have also offered the outline of every leading player of the Global NoSQL Software market, considering the key aspects such as areas of operation, production, and product portfolio. Additionally, companies in the report are studied based on the key factors such as company size, market share, market growth, revenue, production volume, and profits. This research report is aimed at equipping readers with the all necessary information that will help them operate efficiently across the global spectrum of the market and derive fruitful results.

The report has been segregated based on distinct categories, such as product type, application, end user, and region. Each and every segment is evaluated on the basis of CAGR, share, and growth potential. In the regional analysis, the report highlights the prospective region, which is estimated to generate opportunities in the Global NoSQL Software market in the forthcoming years. This segmental analysis will surely turn out to be a useful tool for the readers, stakeholders, and market participants to get a complete picture of the Global NoSQL Software market and its potential to grow in the years to come. The key regions covered in the report are North America, Europe, Asia Pacific, the Middle East and Africa, South Asia, Latin America, Central and South America, and others. The Global NoSQL Software report offers an in-depth assessment of the growth rate of these regions and comprehensive review of the countries that will be leading the regional growth.

Inquire for a Discount on this Premium Report @ https://www.verifiedmarketresearch.com/ask-for-discount/?rid=153255

What to Expect in Our Report?

(1) A complete section of the Global NoSQL Software market report is dedicated to market dynamics, which include influencing factors, market drivers, challenges, opportunities, and trends.

(2) Another broad section of the research study is reserved for regional analysis of the Global NoSQL Software market where important regions and countries are assessed for their growth potential, consumption, market share, and other vital factors indicating their market growth.

(3) Players can use the competitive analysis provided in the report to build new strategies or fine-tune their existing ones to rise above market challenges and increase their share of the Global NoSQL Software market.

(4) The report also discusses the competitive situation and trends and sheds light on company expansions and mergers and acquisitions taking place in the Global NoSQL Software market. Moreover, it brings to light the market concentration rate and the market shares of the top three and top five players.

(5) Readers are provided with the findings and conclusions of the research study in the Global NoSQL Software Market report.

Key Questions Answered in the Report:

(1) What are the growth opportunities for the new entrants in the Global NoSQL Software industry?

(2) Who are the leading players functioning in the Global NoSQL Software marketplace?

(3) What are the key strategies participants are likely to adopt to increase their share in the Global NoSQL Software industry?

(4) What is the competitive situation in the Global NoSQL Software market?

(5) What are the emerging trends that may influence the Global NoSQL Software market growth?

(6) Which product type segment will exhibit high CAGR in future?

(7) Which application segment will grab a handsome share in the Global NoSQL Software industry?

(8) Which region is lucrative for the manufacturers?

For More Information or Query or Customization Before Buying, Visit @ https://www.verifiedmarketresearch.com/product/nosql-software-market/

About Us: Verified Market Research®

Verified Market Research® is a leading global research and consulting firm that has been providing advanced analytical research solutions, custom consulting, and in-depth data analysis for 10+ years to individuals and companies looking for accurate, reliable, and up-to-date research data and technical consulting. We offer insights into strategic and growth analyses and the data necessary to achieve corporate goals and make critical revenue decisions.

Our research studies help our clients make superior data-driven decisions, understand market forecasts, capitalize on future opportunities, and optimize efficiency by working as their partner to deliver accurate and valuable information. The industries we cover span a large spectrum, including Technology, Chemicals, Manufacturing, Energy, Food and Beverages, Automotive, Robotics, Packaging, Construction, Mining & Gas, etc.

We, at Verified Market Research, assist in understanding holistic market indicating factors and most current and future market trends. Our analysts, with their high expertise in data gathering and governance, utilize industry techniques to collate and examine data at all stages. They are trained to combine modern data collection techniques, superior research methodology, subject expertise and years of collective experience to produce informative and accurate research.

Having serviced more than 5000 clients, we have provided reliable market research services to more than 100 Global Fortune 500 companies such as Amazon, Dell, IBM, Shell, Exxon Mobil, General Electric, Siemens, Microsoft, Sony and Hitachi. We have co-consulted with some of the world’s leading consulting firms like McKinsey & Company, Boston Consulting Group, and Bain and Company for custom research and consulting projects for businesses worldwide.

Contact us:

Mr. Edwyne Fernandes

Verified Market Research®

US: +1 (650)-781-4080

UK: +44 (753)-715-0008

APAC: +61 (488)-85-9400

US Toll-Free: +1 (800)-782-1768

Email: sales@verifiedmarketresearch.com

Website:- https://www.verifiedmarketresearch.com/

NoSQL Databases Software Market 2031 Insights with Key Innovations Analysis – Fylladey

MMS • RSS

Mr Accuracy Reports recently introduced a new title on the NoSQL Databases Software Market 2023, with a forecast to 2031, from its database. The NoSQL Databases Software report provides an in-depth overview, describing the product/industry scope and elaborating on the market outlook and status (2023-2031). The report is curated after in-depth research and analysis by experts. It provides comprehensive insights into the global NoSQL Databases Software market: development activities demonstrated by industry players, growth opportunities, and market sizing with analysis by key segments, leading and emerging players, and geographies.

Following are the key-players covered in the report: – MongoDB, Amazon, ArangoDB, Azure Cosmos DB, Couchbase, MarkLogic, RethinkDB, CouchDB, SQL-RD, OrientDB, RavenDB, Redis

Get a free sample copy of the NoSQL Databases Software report: – https://www.mraccuracyreports.com/report-sample/204170

The NoSQL Databases Software report contains a methodical explanation of current NoSQL Databases Software market trends to assist users with an in-depth market analysis. The study helps in identifying and tracking emerging players in the global NoSQL Databases Software market and their portfolios, to enhance decision-making capabilities. Basic market factors covered in this report include a market summary, definitions, classifications, and a business chain summary. The report predicts future market orientation for the forecast period from 2022 to 2031 with the help of past and current market values.

NoSQL Databases Software Report Objectives:

- To examine the global NoSQL Databases Software market size by value and size.

- To calculate the NoSQL Databases Software market segments, consumption, and other dynamic factors of the various units of the market.

- To determine the key dynamics of the NoSQL Databases Software market.

- To highlight key trends in the NoSQL Databases Software market in terms of manufacturing, revenue, and sales.

- To summarize the top players of the NoSQL Databases Software industry.

- To showcase the performance of different regions and countries in the global NoSQL Databases Software market.

Global NoSQL Databases Software Market Segmentation:

Market Segmentation: By Type

Cloud Based, Web Based

Market Segmentation: By Application

Large Enterprises, SMEs

The NoSQL Databases Software report encompasses a comprehensive assessment of different strategies, such as mergers & acquisitions, product developments, and research & development, adopted by prominent market leaders to stay at the forefront of the global NoSQL Databases Software market. The research identifies the most important aspects, such as drivers, restraints, business development patterns, and scope, and assesses strengths, weaknesses, opportunities, and threats using a SWOT analysis.

Flat 30% discount to buy the full study: https://www.mraccuracyreports.com/check-discount/204170

The NoSQL Databases Software market can be divided into:

North America (U.S., Canada, Mexico), Europe (Germany, France, U.K., Italy, Spain, Rest of Europe), Asia-Pacific (China, Japan, India, Rest of APAC), South America (Brazil and Rest of South America), Middle East and Africa (UAE, South Africa, Rest of MEA).

Recent trends and the growth opportunities in the market over the coming period are highlighted. The report covers major players and suppliers worldwide and market share by region, with company and product introductions and each player's position in the global NoSQL Databases Software market, including market status and development trends by type and application, price and profit status, marketing status, and market growth drivers and challenges. This latest report provides worldwide NoSQL Databases Software market predictions for the forthcoming years.

Direct Purchase this Market Research Report Now @ https://www.mraccuracyreports.com/checkout/204170

If you have any special requirements, please contact our sales professional (sales@mraccuracyreports.com). Limited additional research is available at no additional cost. We will make sure you get the report that meets your needs.

ABOUT US:

Mr Accuracy Reports is an ESOMAR-certified business consulting & market research firm, a member of the Greater New York Chamber of Commerce, and is headquartered in Canada. A recipient of the Clutch Leaders Award 2022 on account of a high client score (4.9/5), we have been collaborating with global enterprises in their business transformation journey and helping them deliver on their business ambitions. 90% of the largest Forbes 1000 enterprises are our clients. We serve global clients across all leading & niche market segments across all major industries.

Mr Accuracy Reports is a global front-runner in the research industry, offering customers contextual and data-driven research services. The organization supports customers in creating business plans and attaining long-term success in their respective marketplaces. The firm provides consulting services, research studies, and customized research reports.

MMS • Ben Linders

Three toxic behaviors that open-source maintainers experience are entitlement, people venting their frustration, and outright attacks. Growing a thick skin and ignoring the behavior can lead to a negative spiral of angriness and sadness. Instead, we should call out the behavior and remind people that open source means collaboration and cooperation.

Gina Häußge spoke about dealing with toxic people as an open-source maintainer at OOP 2023 Digital.

There are three toxic behaviors that maintainers experience all the time, Häußge mentioned. The most common one is entitlement. Quite a number of users out there are of the opinion that because you already gave them something, you owe them even more, and will become outright aggressive when you don’t meet their demands.

Then there are people venting their frustration at something not working the way they expect, Häußge said, who then can become abusive in the process.

The third toxic behavior is attacks, mostly from people who either don’t see their entitled demands met or who can’t cope with their own frustration, sometimes from trolls, as Häußge explained:

That has reached from expletives to suggestions to end my own life.

Häußge mentioned that she tried to deal with toxic behavior by growing a thick skin and ignoring the behavior. She thought that getting worked up over it was a personal flaw of hers. It turned out that she was trying to ignore human nature and the stress response cycle, as Häußge explained:

Trying to ignore things just meant they’d circle endlessly in my head, often for days, sometimes for weeks, and make me spiral into being angrier and angrier, or sadder and sadder. And that in turn would influence the way I communicate, often only escalating things further, or causing issues elsewhere.

Häußge mentioned that when she’s faced with entitlement or venting, she often reminds people of the realities at work. “Open Source means collaboration and cooperation, not demands,” she said. If people want to see something implemented, they should help get it done – with code, but also with things like documentation and bug analysis:

Anything I don’t have to do myself means more time for coding work to solve other people’s problems.

It shouldn’t just fall to the maintainer, Häußge said. We all can identify bad behavior when we see it and can call it out as such. We don’t have to leave it to the maintainers to also constantly defend their own boundaries or take abuse silently, she stated.

Häußge also advised that we always look at ourselves in the mirror and make sure we don’t become offenders ourselves:

Remember the human on the other end at all times.

InfoQ interviewed Gina Häußge about toxic behavior towards open-source maintainers.

InfoQ: What impact of toxic behavior on both maintainers and OSS communities have you observed?

Gina Häußge: Over the years I’ve talked to a bunch of fellow OSS maintainers, and the general consensus also reflects my own experience: these experiences can ruin your day, they can ruin your whole week, and sometimes they make you seriously question why you even continue to maintain a project. They certainly contribute to maintainer burnout, and thus pose a risk to the project as a whole. It’s death by a thousand papercuts. And they turn whole communities toxic when left standing unopposed.

InfoQ: How have you learned to cope with toxic behavior?

Häußge: A solution for the stress response cycle is physical activity. I have a punching bag in my office, and even just 30 seconds on this thing get my heart going! This signals to my brain that I have acknowledged the threat and am doing something against it, completing the stress response cycle. Once I’ve done that, I’m in control again and can take appropriate next steps.