Month: July 2023

MMS • RSS

Data estates are expansive. Organizations in all business verticals operate data stacks that mix legacy technologies, which work effectively but aren’t always easy to move or manipulate, with modernised platforms, services and data layers that form the new age of cloud-native. On the face of it, that proposition sounds fine: the world is a multi-application, multi-platform and multi-cloud place, and diversity matters.

While many businesses may continue to operate like this for many years to come, the younger forces within the data industry are working hard to convince us that a quiet revolution is well underway.

The end of cheap capital

Some point to world economic forces such as the end of cheap capital and the continuing spectre of infection, inflation and invasion that still dogs us right now. We know that there’s a huge drive to build applications more quickly, a concerted push to operate data services more effectively and to get enterprise applications themselves to do more, so what happens next?

Peter Guagenti, chief marketing officer at Cockroach Labs, the company behind the cloud-native, distributed SQL database CockroachDB, asserts that these combined forces will drive a migration away from core transactional database infrastructures. Well, he would: his firm is from the other (newer) side of the tracks, i.e. it is not one of the database stalwarts that dominated pre-millennial times and still do in many areas.

For technical clarification, databases come in two flavors: relational and non-relational. Relational (SQL) databases store data in tables with strictly-defined relationships between tables. Non-relational (NoSQL) databases are more flexible and allow for less organized and less regimented data entry.

Homing in on relational databases: they are typically preferred for processing transactions because the relational model enforces data relationship ‘rules’. For example, an order can’t be entered into the database if there’s no user associated with it. Modern relational databases need to handle multiple transactions simultaneously (a workload known as Online Transaction Processing – OLTP). Increasingly, databases need to handle concurrent global transactions, potentially written to different machines. This is where legacy databases are argued to struggle – and it’s the problem that next-gen distributed SQL databases were built to solve.
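The order-without-a-user rule can be illustrated with a minimal sketch. It uses Python's built-in `sqlite3` module purely as a convenient stand-in for a relational database; the `users` and `orders` tables and the `place_order` helper are hypothetical names invented for this example, not anything taken from Cockroach Labs.

```python
import sqlite3

# In-memory database used only for illustration.
conn = sqlite3.connect(":memory:")
# SQLite enforces foreign keys only when this pragma is switched on.
conn.execute("PRAGMA foreign_keys = ON")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute(
    "CREATE TABLE orders ("
    " id INTEGER PRIMARY KEY,"
    " user_id INTEGER NOT NULL REFERENCES users(id),"
    " item TEXT NOT NULL)"
)
conn.execute("INSERT INTO users (id, name) VALUES (1, 'alice')")
conn.commit()


def place_order(user_id, item):
    """Try to insert an order; return False if the database rejects it."""
    try:
        with conn:  # commits on success, rolls back on error
            conn.execute(
                "INSERT INTO orders (user_id, item) VALUES (?, ?)",
                (user_id, item),
            )
        return True
    except sqlite3.IntegrityError:
        # Raised when user_id does not reference an existing user.
        return False


accepted = place_order(1, "keyboard")  # user 1 exists
rejected = place_order(99, "mouse")    # user 99 does not exist
order_count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
```

The point of the sketch is that the rule lives in the schema rather than in application code: the second insert is refused by the database itself.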

A big shift, really?

Taking the legacy-but-it-still-works database argument by the horns (yet again, it seems): will any sort of major migration really happen, and how does the Cockroach team think it can justify or validate its propositions here?

“Such shifts have happened before,” said Guagenti. “Ten years ago, no one thought cloud would be mainstream – but now, everyone is doing it. No one thought Application Programming Interfaces (APIs) and containers would be mainstream – they thought they were just for Amazon or Apple and the like – and that’s proved not to be the case.”

Guagenti and team think we are witnessing change as a result of both technical evolution and market revolution. They say that before and shortly after the last recession, CIOs were focused on cutting costs. That flipped on a dime a couple of years later when they began to focus, instead, on opportunity costs and accelerating digital transformation. Every Fortune 500 company worried that a dot-com would eat their lunch. Digital initiatives were accelerated by a flood of investor dollars.

But how did that work? With money at zero percent interest, the only way to make money was to go for riskier investments, so it made sense to put money into the parent company instead. If it cost three dollars to capture a dollar of growth, companies did so, knowing that they’d scale that one dollar eventually. When things are booming, capital is cheap. You are focused on growth and winning market share, so this may lead to scenarios where firms are willing to accept inefficiencies in terms of how they get there.

“As such, companies had no problem leaving core transactional databases alone,” said Guagenti. “Out of fear and comfort, they were never touched. Because they thought doing so was to do open heart surgery on their infrastructure. For example, if it was the transactional system of the bank, would you really want to change it? If money were cheap, you’d likely leave it be.”

But now of course, money is not cheap. So the data industry is telling us that as access to capital dries up, people will again want efficiencies and economies of scale. We’re back to a place where CIOs are being asked, “How do you maintain this growth rate but with a lot less spend?”

The need for automation

After companies trim their fat, modern efficiencies today often come from automation. As described above, most relational databases – the ones that power the business and the transaction side of the house – are running on technology that’s been around for 25 to 40 years. These systems require a good amount of manual intervention and management just to keep them operating. It’s perhaps no surprise then to find that the data behemoths of Silicon Valley and elsewhere are championing the autonomous management message pretty heavily right now.

The data science team at Cockroach Labs reminds us that for many companies, data is the single highest category of IT spend, thus it represents one of the most significant opportunities to reduce costs. According to 2022 research from International Data Corporation (IDC), data management makes up the largest share of IT infrastructure budgets (more than 20%).

Human effort can make up 75% of data management spend for traditional relational databases. That includes the cost to architect and deploy them at scale, to manage operations and maintain business continuity. These costs represent lost hours that should go to delivering better customer service and acquiring market share instead.

“Add to the need to drive efficiencies, a doubling of app requirements – data volumes that are growing exponentially, user volumes that are growing exponentially, and all that we’re trying to achieve through AI and other developments. Intensity is soaring,” said Guagenti. “Fortunately, database technology has been advancing relentlessly. Innovators have applied everything they learned about software – including the power of distributed systems and core cloud infrastructure – to databases.”

Simpler management, please

As the rise of modern, cloud-native distributed relational databases from cloud providers or independents continues to grow, what can we expect next? We can see that distributed technology is converging with the consistency and SQL compliance of a traditional relational database.

“What companies already experience with Amazon Redshift [data warehouse] and Snowflake [data cloud services] for analytical, they’ll get for relational, too – and they will be able to consume these as a fully managed offering,” said Guagenti, in conclusion. “The aggregate benefits of migrating to (or building new applications on) distributed SQL databases have been demonstrated to reduce overall costs for customers by nearly 70%. The majority of these savings are tied to labor efficiencies gained by the dramatically simpler management and operations of distributed SQL underpinning applications that are data intensive or run at massive scale.”

The database flavor selection argument won’t be going away this year, or perhaps even this decade. This is an ongoing debate in which both the old and the new will continue to jostle across the transactional, the relational, the operational, the analytical and the otherwise functional ends of the data spectrum.

Whether we might look forward to a future with one type of database also seems fanciful as we stand today. What we can say is that cloud-native scale and data management autonomy will continue to win – you can bet your bug on it.

MMS • RSS

Banque Cantonale Vaudoise raised its holdings in shares of MongoDB, Inc. (NASDAQ:MDB – Free Report) by 6.5% during the first quarter, according to its most recent filing with the Securities and Exchange Commission (SEC). The firm owned 1,385 shares of the company’s stock after acquiring an additional 85 shares during the quarter. Banque Cantonale Vaudoise’s holdings in MongoDB were worth $323,000 as of its most recent filing with the Securities and Exchange Commission (SEC).

A number of other hedge funds and other institutional investors have also bought and sold shares of the business. Proficio Capital Partners LLC bought a new position in shares of MongoDB in the first quarter worth $39,000. Nordea Investment Management AB increased its holdings in shares of MongoDB by 8.2% in the first quarter. Nordea Investment Management AB now owns 4,174 shares of the company’s stock worth $927,000 after purchasing an additional 318 shares during the period. Clarius Group LLC increased its holdings in shares of MongoDB by 7.7% in the first quarter. Clarius Group LLC now owns 1,362 shares of the company’s stock worth $318,000 after purchasing an additional 97 shares during the period. Dakota Wealth Management bought a new position in shares of MongoDB in the first quarter worth $372,000. Finally, Raymond James Financial Services Advisors Inc. increased its holdings in shares of MongoDB by 10.5% in the first quarter. Raymond James Financial Services Advisors Inc. now owns 1,863 shares of the company’s stock worth $434,000 after purchasing an additional 177 shares during the period. Institutional investors own 89.22% of the company’s stock.

Wall Street Analysts Forecast Growth

A number of research firms recently commented on MDB. Wedbush reduced their target price on MongoDB from $240.00 to $230.00 in a research note on Thursday, March 9th. Needham & Company LLC increased their target price on MongoDB from $250.00 to $430.00 in a research note on Friday, June 2nd. Oppenheimer increased their target price on MongoDB from $270.00 to $430.00 in a research note on Friday, June 2nd. Sanford C. Bernstein increased their target price on MongoDB from $257.00 to $424.00 in a research note on Monday, June 5th. Finally, KeyCorp increased their target price on MongoDB from $229.00 to $264.00 and gave the company an “overweight” rating in a research note on Thursday, April 20th. One analyst has rated the stock with a sell rating, three have given a hold rating and twenty-one have issued a buy rating to the company’s stock. According to data from MarketBeat.com, the stock has an average rating of “Moderate Buy” and a consensus target price of $366.30.

Insider Buying and Selling at MongoDB

In related news, Director Dwight A. Merriman sold 2,000 shares of the firm’s stock in a transaction that occurred on Thursday, May 4th. The shares were sold at an average price of $240.00, for a total transaction of $480,000.00. Following the completion of the sale, the director now owns 1,223,954 shares of the company’s stock, valued at $293,748,960. Also, CEO Dev Ittycheria sold 20,000 shares of the firm’s stock in a transaction that occurred on Thursday, June 1st. The shares were sold at an average price of $287.32, for a total value of $5,746,400.00. Following the sale, the chief executive officer now owns 262,311 shares in the company, valued at $75,367,196.52. Both sales were disclosed in filings with the Securities & Exchange Commission. Over the last quarter, insiders sold 50,050 shares of company stock valued at $13,942,694. Corporate insiders own 4.80% of the company’s stock.

MongoDB Trading Down 0.4%

Shares of NASDAQ:MDB opened at $409.57 on Thursday. MongoDB, Inc. has a fifty-two week low of $135.15 and a fifty-two week high of $418.70. The company has a 50-day simple moving average of $323.50 and a two-hundred day simple moving average of $250.14. The company has a quick ratio of 4.19, a current ratio of 4.19 and a debt-to-equity ratio of 1.44.

MongoDB (NASDAQ:MDB – Free Report) last announced its earnings results on Thursday, June 1st. The company reported $0.56 earnings per share for the quarter, beating analysts’ consensus estimates of $0.18 by $0.38. MongoDB had a negative net margin of 23.58% and a negative return on equity of 43.25%. The business had revenue of $368.28 million during the quarter, compared to the consensus estimate of $347.77 million. During the same quarter last year, the firm reported a loss of $1.15 per share. The business’s revenue for the quarter was up 29.0% on a year-over-year basis. On average, analysts forecast that MongoDB, Inc. will post EPS of -2.8 for the current year.

MongoDB Company Profile

MongoDB, Inc. provides a general-purpose database platform worldwide. The company offers MongoDB Atlas, a hosted multi-cloud database-as-a-service solution; MongoDB Enterprise Advanced, a commercial database server for enterprise customers to run in the cloud, on-premise, or in a hybrid environment; and Community Server, a free-to-download version of its database, which includes the functionality that developers need to get started with MongoDB.

MMS • Ben Linders

Everyone can be an agent of change, even with small contributions. You can also be an agent of change for yourself by focusing on what you can control. Knowing why to change matters, and by exploring it you may find that it’s not yet time to make a change.

Hilary Weaver gave a keynote about embracing change at the Romanian Testing Conference 2023.

You don’t have to be doing huge things to make changes, Weaver said. You can make small contributions to the world (volunteering, teaching kids to code, etc) that can ultimately have a huge effect, she explained:

I didn’t think I was being an agent of change when I started my meetup group (Motor City Software Testers), but I realized I had helped create opportunities for people with the group, and that can change lives.

Weaver mentioned that you can also be an agent of change for yourself. Changes that happen to you can be anything from very easy to the worst thing you could ever imagine. Weaver found that, even with incredibly difficult changes like the death of a close loved one, she needed to embrace the change: to sit with how she felt about it, while knowing that focusing on “what ifs” is useless and causes more trouble and pain:

I focus on what I can control (even if it’s just making sure I eat), and use my support network. The testing community is such an incredible group of people and I’ve always felt supported when I needed it.

When we make, or want to make, a change, we have a reason for that change. Especially for longer term changes, we should find our “why”, Weaver argued. Your “why” is the deep-in-your-heart reason you want to make this change.

When we feel not-quite-right about something, Weaver suggested making a list of the pros and cons of keeping things as they are: what would life be like if we didn’t change anything, and what would it be like if we did make this change? From this, we may determine that it’s not quite time yet.

InfoQ interviewed Hilary Weaver about embracing change.

InfoQ: What’s your approach to enacting change?

Hilary Weaver: It’s a multi-step process: you need to determine what you want to change, why you want to make the change, when to change it, and then determine how you’ll make the change. Each of these steps requires some introspection and self-reflection, and some outside support. Change can be simple, but it’s often not easy!

InfoQ: How can we know that it’s time to make a change?

Weaver: As humans, we’re pretty resilient and can deal with a lot, and sometimes don’t feel like we deserve to be happy or fulfilled! To help with this, I’ll create a “red flags” list – specific scenarios that will tell me it’s really time to make the change. And I’ll share this list with a trusted friend or my therapist, to make sure I’m accountable but also I don’t just continue in a bad situation.

InfoQ: What’s the difference between shorter term changes and longer term changes?

Weaver: Shorter term changes you kind of “just do” – such as quitting your job. Longer term changes, however, require consistent small changes over time – such as gaining a new skill so that you can get a better job in the future. Building habits that incorporate those smaller changes, which are sustainable for the time it takes to make that change.

Focusing on consistency and removing choice really helps me with these – for instance, I walk at least 1 mile (1.6km) every day. No matter the weather, there is no choice for me – if it’s raining, that just changes what I’m wearing for the walk, not whether or not I’ll walk.

InfoQ: What can we do when change gets difficult?

Weaver: Your “why” will help you through when motivation isn’t there.

For instance, if my “why” to walk every day was to be healthier, that’s not going to stand up to days when motivation is low. My “why” is to increase my endurance to keep up with my nieces and nephew, and to not take movement for granted.

So when change gets difficult, we can remember our “why” and focus on the feeling we have at the root of us when we think of it. We can also modify rather than quitting altogether – if it’s too difficult, we can still continue but not do as much. Maybe our initial small changes weren’t small enough – that’s ok! It’s not failure to learn, as long as you continue.

MMS • RSS

Banque Cantonale Vaudoise lifted its holdings in MongoDB, Inc. (NASDAQ:MDB – Free Report) by 6.5% during the 1st quarter, according to the company in its most recent Form 13F filing with the Securities and Exchange Commission. The institutional investor owned 1,385 shares of the company’s stock after buying an additional 85 shares during the period. Banque Cantonale Vaudoise’s holdings in MongoDB were worth $323,000 as of its most recent filing with the Securities and Exchange Commission.

Other hedge funds and other institutional investors have also recently modified their holdings of the company. Proficio Capital Partners LLC acquired a new stake in MongoDB during the first quarter worth about $39,000. Nordea Investment Management AB increased its position in MongoDB by 8.2% during the first quarter. Nordea Investment Management AB now owns 4,174 shares of the company’s stock worth $927,000 after purchasing an additional 318 shares during the last quarter. Clarius Group LLC increased its position in MongoDB by 7.7% during the first quarter. Clarius Group LLC now owns 1,362 shares of the company’s stock worth $318,000 after purchasing an additional 97 shares during the last quarter. Dakota Wealth Management acquired a new stake in MongoDB during the first quarter worth about $372,000. Finally, Raymond James Financial Services Advisors Inc. increased its position in MongoDB by 10.5% during the first quarter. Raymond James Financial Services Advisors Inc. now owns 1,863 shares of the company’s stock worth $434,000 after purchasing an additional 177 shares during the last quarter. 89.22% of the stock is owned by hedge funds and other institutional investors.

Insiders Place Their Bets

In other MongoDB news, CRO Cedric Pech sold 15,534 shares of MongoDB stock in a transaction dated Tuesday, May 9th. The stock was sold at an average price of $250.00, for a total transaction of $3,883,500.00. Following the completion of the sale, the executive now directly owns 37,516 shares of the company’s stock, valued at approximately $9,379,000. Also, Director Hope F. Cochran sold 2,174 shares of the business’s stock in a transaction dated Thursday, June 15th. The shares were sold at an average price of $373.19, for a total value of $811,315.06. Following the completion of the sale, the director now directly owns 8,200 shares of the company’s stock, valued at $3,060,158. Both transactions were disclosed in documents filed with the Securities & Exchange Commission. Insiders sold 50,050 shares of company stock valued at $13,942,694 in the last ninety days. Insiders own 4.80% of the company’s stock.

MongoDB Stock Down 0.4%

Shares of NASDAQ:MDB opened at $409.57 on Thursday. The company has a quick ratio of 4.19, a current ratio of 4.19 and a debt-to-equity ratio of 1.44. The business has a 50-day moving average of $323.50 and a two-hundred day moving average of $250.14. MongoDB, Inc. has a 1 year low of $135.15 and a 1 year high of $418.70.

MongoDB (NASDAQ:MDB – Free Report) last announced its quarterly earnings results on Thursday, June 1st. The company reported $0.56 earnings per share (EPS) for the quarter, topping analysts’ consensus estimates of $0.18 by $0.38. The company had revenue of $368.28 million during the quarter, compared to the consensus estimate of $347.77 million. MongoDB had a negative return on equity of 43.25% and a negative net margin of 23.58%. MongoDB’s revenue for the quarter was up 29.0% on a year-over-year basis. During the same period in the previous year, the business reported a loss of $1.15 per share. Research analysts anticipate that MongoDB, Inc. will post EPS of -2.8 for the current fiscal year.

Wall Street Analysts Weigh In

Several research firms recently issued reports on MDB. Capital One Financial began coverage on shares of MongoDB in a research report on Monday, June 26th. They set an “equal weight” rating and a $396.00 price objective for the company. Needham & Company LLC lifted their price target on shares of MongoDB from $250.00 to $430.00 in a research report on Friday, June 2nd. Piper Sandler lifted their price target on shares of MongoDB from $270.00 to $400.00 in a research report on Friday, June 2nd. Morgan Stanley lifted their price target on shares of MongoDB from $270.00 to $440.00 in a research report on Friday, June 23rd. Finally, Mizuho lifted their price target on shares of MongoDB from $220.00 to $240.00 in a research report on Friday, June 23rd. One analyst has rated the stock with a sell rating, three have assigned a hold rating and twenty-one have given a buy rating to the company’s stock. Based on data from MarketBeat.com, the stock currently has a consensus rating of “Moderate Buy” and a consensus price target of $366.30.

MongoDB Company Profile

MongoDB, Inc. provides a general-purpose database platform worldwide. The company offers MongoDB Atlas, a hosted multi-cloud database-as-a-service solution; MongoDB Enterprise Advanced, a commercial database server for enterprise customers to run in the cloud, on-premise, or in a hybrid environment; and Community Server, a free-to-download version of its database, which includes the functionality that developers need to get started with MongoDB.

MMS • Steef-Jan Wiggers

Recently, AWS announced the availability of versions and aliases in Step Functions to improve resiliency for deployments of serverless workflows.

AWS Step Functions is a serverless workflow service that allows developers to orchestrate multiple AWS services into serverless workflows using visual workflows and state machines. It has received several enhancements over the years, with workflow collections, additional observability features, and intrinsic functions among the more recent ones. The latest addition, versions and aliases, provides enhanced workflow deployment capabilities, offering developers a more resilient deployment experience.

Previously, when an updated workflow definition introduced a problem, developers needed either another Amazon States Language (ASL) update to fix the definition or an explicit action to revert the state machine to a previous definition.

With the versions and aliases feature, developers can manage multiple workflow versions, track their usage in each execution, and create aliases to route traffic between versions efficiently. By leveraging industry-standard techniques like blue-green, linear, and canary-style deployments, developers can gradually deploy workflows with swift rollbacks, enhancing deployment safety, minimizing downtime, and mitigating risks associated with Step Functions workflows.

Developers can leverage the AWS Step Functions Versions and Aliases feature in the AWS console, AWS CloudFormation, the AWS Command Line Interface (CLI), or the AWS Cloud Development Kit (CDK).

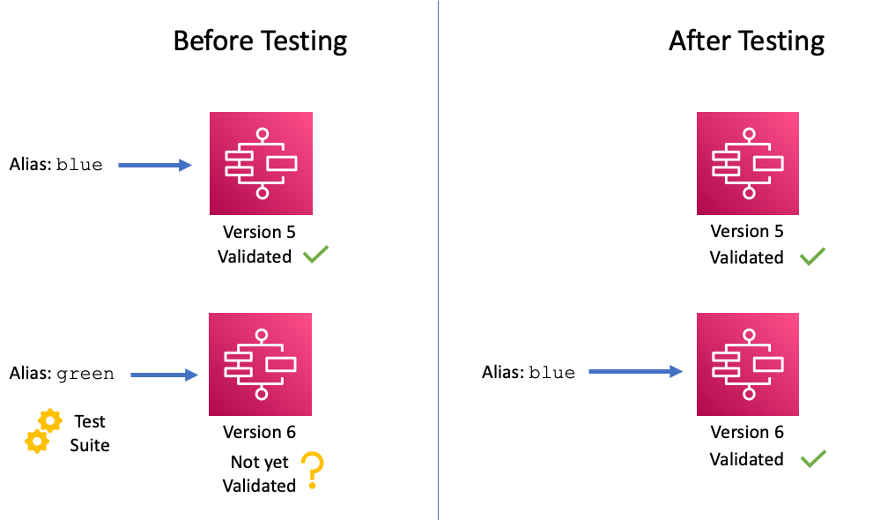

Peter Smith, a principal engineer for AWS Step Functions, outlines several scenarios for deploying state machines incrementally with versions and aliases in an AWS Compute blog post. One example is the blue-green deployment:

In this approach, the existing state machine version (currently used in production) is the “blue” version, whereas a newly deployed state machine is the “green” version. As a rule, you should deploy the blue version in production while testing the newer green version in a separate environment. Once the green version is validated, use it in production (it becomes the new blue version). If version 6 causes issues in production, roll back the “blue” alias to the previous value so that executions revert to version 5.
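The routing and rollback ideas above can be pictured with a small toy model. This is a sketch under stated assumptions, not the Step Functions API: the `Alias` class, its `update`, `rollback` and `pick_version` methods, and the 90/10 split are all hypothetical and exist only to show how an alias that maps to weighted versions allows a canary rollout followed by a quick revert to version 5.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Alias:
    """Toy model of an alias: a name mapped to weighted version numbers."""
    name: str
    routes: dict = field(default_factory=dict)   # version -> weight in percent
    history: list = field(default_factory=list)  # earlier routing configurations

    def update(self, routes):
        """Point the alias at a new weighted set of versions."""
        if sum(routes.values()) != 100:
            raise ValueError("weights must sum to 100")
        self.history.append(dict(self.routes))
        self.routes = dict(routes)

    def rollback(self):
        """Restore the routing configuration that was active before the last update."""
        self.routes = self.history.pop()

    def pick_version(self, rng):
        """Choose the version for one execution according to the weights."""
        versions = list(self.routes)
        weights = [self.routes[v] for v in versions]
        return rng.choices(versions, weights=weights, k=1)[0]


prod = Alias("prod")
prod.update({5: 100})        # all executions run version 5
prod.update({5: 90, 6: 10})  # canary: send roughly 10% to version 6

rng = random.Random(0)       # fixed seed so the sample is repeatable
sample = [prod.pick_version(rng) for _ in range(1000)]
canary_share = sample.count(6) / len(sample)

prod.rollback()              # version 6 misbehaves: revert to version 5 only
```

Because callers only ever reference the alias name, the rollback changes which version new executions use without any caller being redeployed.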

Benjamen Pyle, an AWS Community Builder in Serverless, concluded in a blog post:

My first thoughts when using this capability were, how have I been using Step Functions without Versions and Aliases the whole time? But then, as I stepped back, Lambda went through the same type of transition where you just always overwrote the existing function and didn’t do much canary or linear type rollouts. I have a feeling that I’m not going to use this technique for everything but for mission-critical workflows, it’s going to be a requirement.

In addition, Mario Bittencourt, a principal software architect, concluded in a Medium blog post:

With this latest release of AWS you can bridge the gap for your step function-based processes as their support of aliases and versions give you enough built-in capabilities to address your needs.

Developers are not charged any extra fees for using Versions and Aliases; they only pay for what they use as per existing AWS Step Functions pricing.

MMS • A N M Bazlur Rahman

JEP 439, Generational ZGC, has been promoted from Targeted to Completed for JDK 21. This JEP proposes to improve application performance by extending the Z Garbage Collector (ZGC) to maintain separate generations for young and old objects. This will allow ZGC to collect young objects, which tend to die young, more frequently.

The Z Garbage Collector, available for production use since JDK 15, is designed for low latency and high scalability. It performs the majority of its work while application threads are running, pausing those threads only briefly. ZGC’s pause times are consistently measured in microseconds, making it a preferred choice for workloads that require low latency and high scalability.

The new generational ZGC aims to lower the risks of allocation stalls, reduce the required heap memory overhead, and decrease garbage collection CPU overhead. These benefits are expected to come without a significant reduction in throughput compared to non-generational ZGC. The essential properties of non-generational ZGC, such as pause times not exceeding one millisecond and support for heap sizes from a few hundred megabytes up to many terabytes, will be preserved.

The generational ZGC is based on the weak generational hypothesis, which states that young objects tend to die young, while old objects tend to stick around. By collecting young objects more frequently, ZGC can improve the performance of applications.

Generational ZGC will initially be available alongside non-generational ZGC. Users can select Generational ZGC by adding the -XX:+ZGenerational option to the -XX:+UseZGC command-line option. In future releases, Generational ZGC will become the default, and eventually, non-generational ZGC will be removed.

$ java -XX:+UseZGC -XX:+ZGenerational ...

The new generational ZGC splits the heap into two logical generations: the young generation for recently allocated objects and the old generation for long-lived objects. Each generation is collected independently of the other, allowing ZGC to focus on collecting the profitable young objects.
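To make the benefit of separate generations concrete, here is a deliberately crude toy model. It is not how ZGC works internally: it ignores concurrency, barriers, remembered sets and old-generation collections, and simply counts how many objects a collector would have to look at. The `simulate` function and its parameters are hypothetical, and the assumption that one in twenty objects is long-lived is made up for illustration.

```python
def simulate(cycles=50, allocs_per_cycle=1000, long_lived_every=20):
    """Compare objects examined by a whole-heap model and a young-only model.

    Each object is represented by a boolean: True means it is long-lived and
    survives collection, False means it dies before the next collection.
    """
    whole_heap = []        # single-generation model
    young, old = [], []    # two-generation model
    scanned_whole = 0
    scanned_young = 0

    for _ in range(cycles):
        # Most new objects die young; every Nth one is long-lived.
        new_objects = [i % long_lived_every == 0 for i in range(allocs_per_cycle)]
        whole_heap.extend(new_objects)
        young.extend(new_objects)

        # Single generation: each collection examines the entire heap,
        # including long-lived objects that survived earlier cycles.
        scanned_whole += len(whole_heap)
        whole_heap = [obj for obj in whole_heap if obj]

        # Two generations: examine only the young generation, then promote
        # the survivors to the old generation and start a fresh young one.
        scanned_young += len(young)
        old.extend(obj for obj in young if obj)
        young = []

    return scanned_whole, scanned_young, len(old)


scanned_whole, scanned_young, promoted = simulate()
```

With the default parameters the young-only model examines 50,000 objects against 111,250 for the whole-heap model, and the gap widens as more long-lived objects accumulate. A real collector still has to collect the old generation occasionally, so the numbers only indicate the direction of the effect.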

Generational ZGC introduces several design concepts that distinguish it from non-generational ZGC and other garbage collectors. These include no multi-mapped memory, optimized barriers, double-buffered remembered sets, relocations without additional heap memory, dense heap regions, large objects, and full garbage collections.

The introduction of Generational ZGC is a significant step forward in improving the performance of applications running on the Java platform. By focusing on collecting young objects more frequently, Generational ZGC can provide lower latency, reduced memory overhead, and improved CPU utilization, making it a better solution for most use cases than non-generational ZGC.

Generational ZGC introduces a more complex system that uses explicit code in load and store barriers and concurrently runs two garbage collectors. The new system eliminates the use of multi-mapped memory, making it easier for users to measure heap memory usage and potentially increasing the maximum heap size beyond the 16-terabyte limit of non-generational ZGC. The load and store barriers are optimized using techniques such as fast paths and slow paths, remembered-set barriers, SATB marking barriers, fused store barrier checks, and store barrier buffers. Generational ZGC also introduces double-buffered remembered sets for precise tracking of inter-generational pointers and allows relocations without additional heap memory, thus enabling efficient young generation collection. The system also handles large objects well, allowing them to be allocated to the young generation and promoting them to the old generation if they are long-lived. The full garbage collections consider pointers from objects in the young generation to objects in the old generation as roots of the old-generation object graph.

In conclusion, the implementation of Generational ZGC in OpenJDK introduces a more complex system, running two garbage collectors concurrently and utilizing more intricate barriers and colored pointers. Despite the complexity, the long-term goal is to fully replace non-generational ZGC with the generational version to minimize maintenance costs. While most use cases are expected to benefit from Generational ZGC, some workloads that are non-generational by nature might experience slight performance degradation. However, the potential overhead is believed to be offset by the benefits of not having to frequently collect objects in the old generation. Future improvements and optimizations of Generational ZGC will be driven by benchmarks and user feedback.

MMS • Aditya Kulkarni

Sysdig recently unveiled the industry’s first Cloud Native Application Protection Platform (CNAPP) with end-to-end detection and response capabilities. This platform combines cloud detection and response (CDR) with CNAPP, integrating the power of open-source Falco for both agent and agentless deployment models.

As organizations expand their cloud environments, they often face the challenge of managing numerous applications, services, and identities, leaving them susceptible to potential vulnerabilities. Traditional cloud security tools may be slow to identify suspicious behavior, and once alerted, it can take significant time to piece together the details of an incident. Sysdig’s CNAPP aims to address these shortcomings by offering instant and continuous understanding of the entire cloud environment, empowering security teams with real-time insights and the ability to stop breaches instantly.

One of the important features of Sysdig CNAPP is its agentless cloud detection powered by Falco, an open-source solution widely adopted for cloud threat detection. Traditionally, organizations had to deploy Falco agents on their infrastructure to utilize its power within Sysdig. However, Sysdig has now introduced agentless cloud detection, enabling organizations to process cloud logs and detect threats across the cloud, identity, and the software supply chain without the need for additional agent deployments. This streamlined approach saves time and resources while enhancing the platform’s threat-detection capabilities.

Earlier this year, Google Cybersecurity Action Team (GCAT) published the State of Cloud Detection and Response Report, which surveyed 400 security leaders and SecOps practitioners in North America. Based on the survey results, it was found that the majority of organizations now perform a significant portion of their computing operations in the cloud. Additionally, four out of every ten organizations transitioned to the cloud within the last year.

Consequently, cyber attackers are adapting their strategies to focus on cloud customers. This shift in the threat landscape has led to 84% of the surveyed respondents expressing the need to increase automation in their security measures to combat these evolving security threats effectively.

To address identity attacks and protect against multifactor authentication fatigue and account takeover, Sysdig introduces Okta detections as part of its CNAPP. By integrating real-time cloud and container activity with Okta events, security teams gain insights into potential identity threats and can take proactive measures to safeguard their cloud environment. Additionally, Sysdig’s CNAPP incorporates GitHub detections, enabling organizations to receive real-time alerts when critical events, such as the unauthorized pushing of secrets into repositories, occur in the software supply chain.

Sysdig’s approach with CNAPP provides threat detection anywhere in the cloud, offering 360-degree visibility and correlation across workloads, identities, cloud services, and third-party applications. Interested readers can learn more about the features in the Sysdig blog.

MMS • RSS

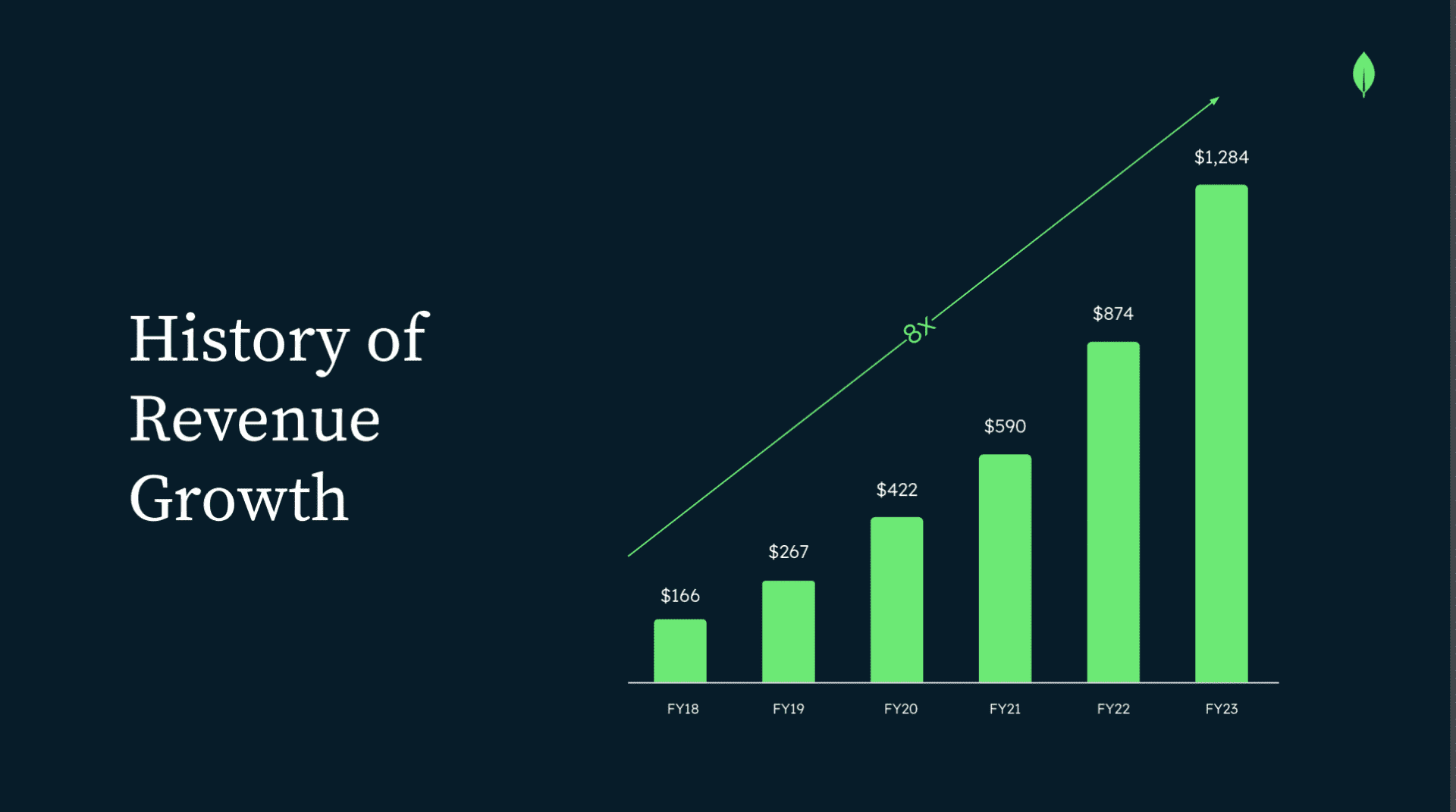

So some SaaS and Cloud leaders have seen big impacts from the post-Covid hangover, but others have just kept accelerating. MongoDB is one of those that just has never stopped, although as we’ll see below, even they have seen more cautious buying from their customers. But even macro challenges haven’t slowed Mongo much.

It’s now at a stunning $1.5 Billion in ARR, growing 29% overall, and it’s worth a stunning $29 Billion. That’s about as good as it gets in SaaS and Cloud!

Let’s dig in:

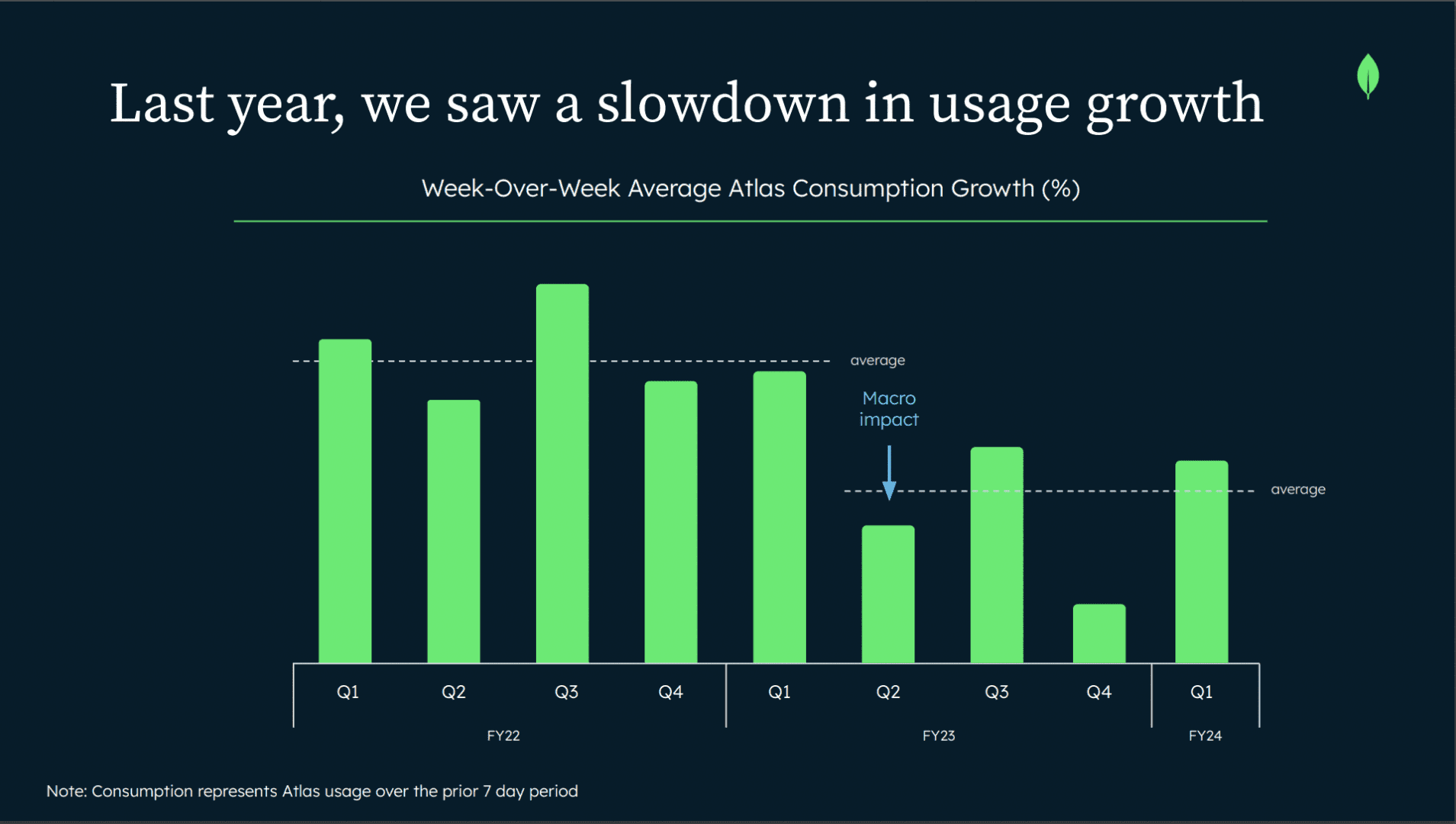

#1. 2022 Saw a Slowdown in Usage Growth, But 2023 Saw a Potential Bounce Back. While MongoDB overall has grown at epic rates, it hasn’t been immune from folks being more careful about how much compute they use. Mongo saw this show up in consumption growth materially decelerating about a year ago. The start of 2023 (FY ’24) may have seen a partial bounce back, however. Are we near or past the lows in SaaS and Cloud buying patterns? We’ll see.

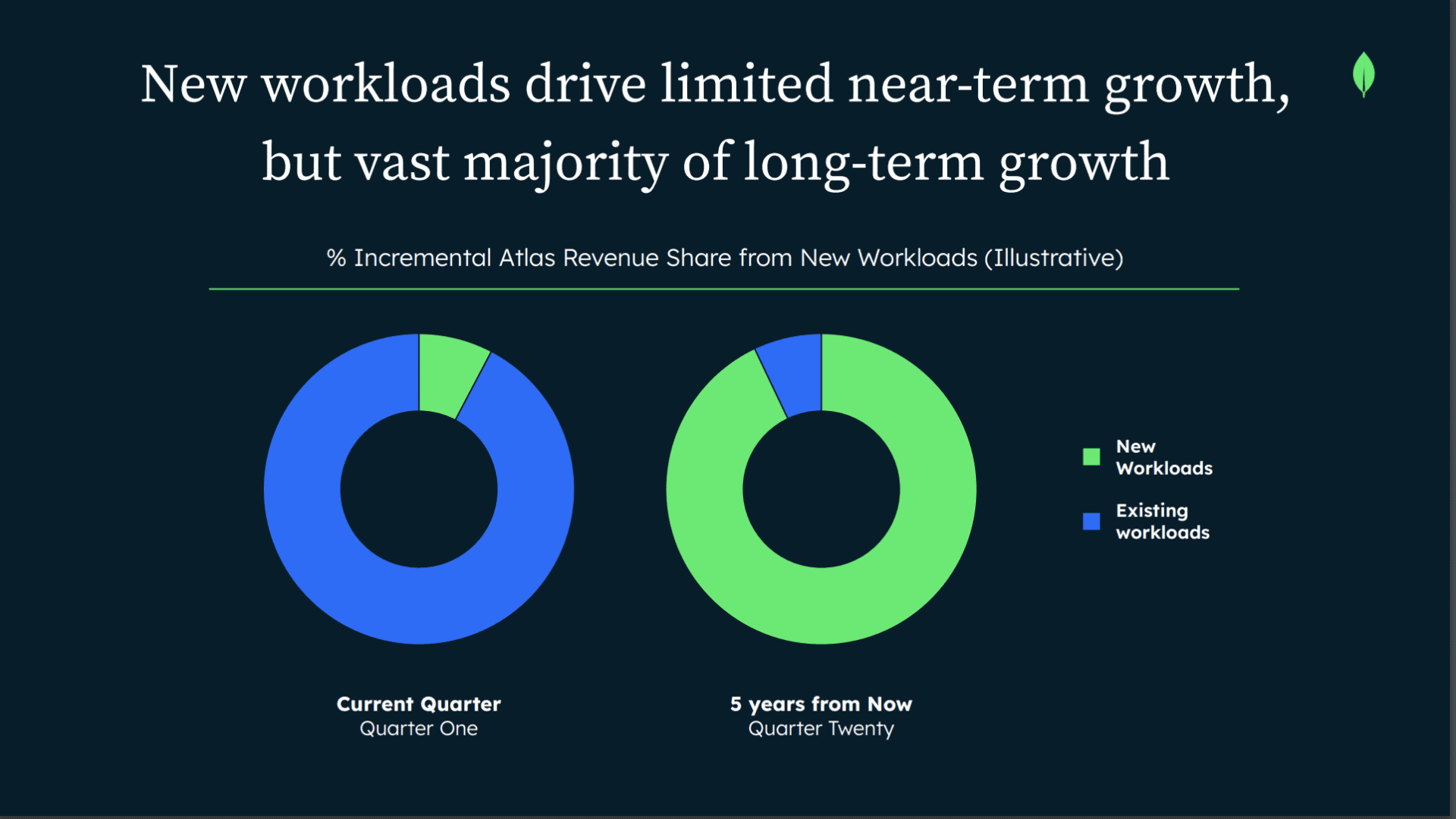

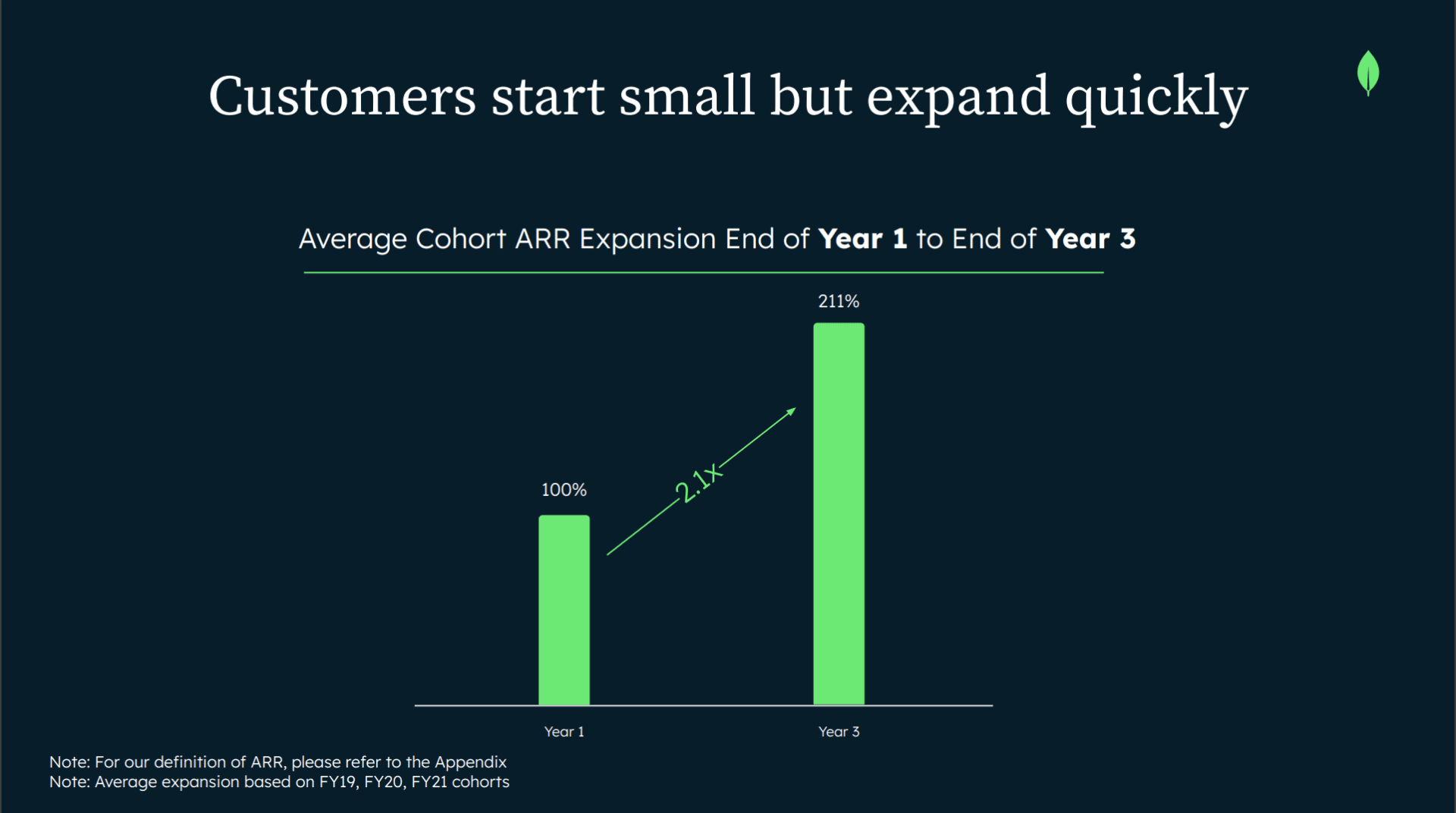

#2. New Workloads Are Only a Small Percent of Today’s Revenue, But Are The Majority of Tomorrow’s. I like this chart a lot showing both the awesome power of high NRR, and also how you have to invest in customers that scale with you over time. New workloads, new uses of Mongo are actually just a small percent of revenue. But those accounts grow dramatically over time, and don’t really churn. That leads to an awesome force of nature years down the road:

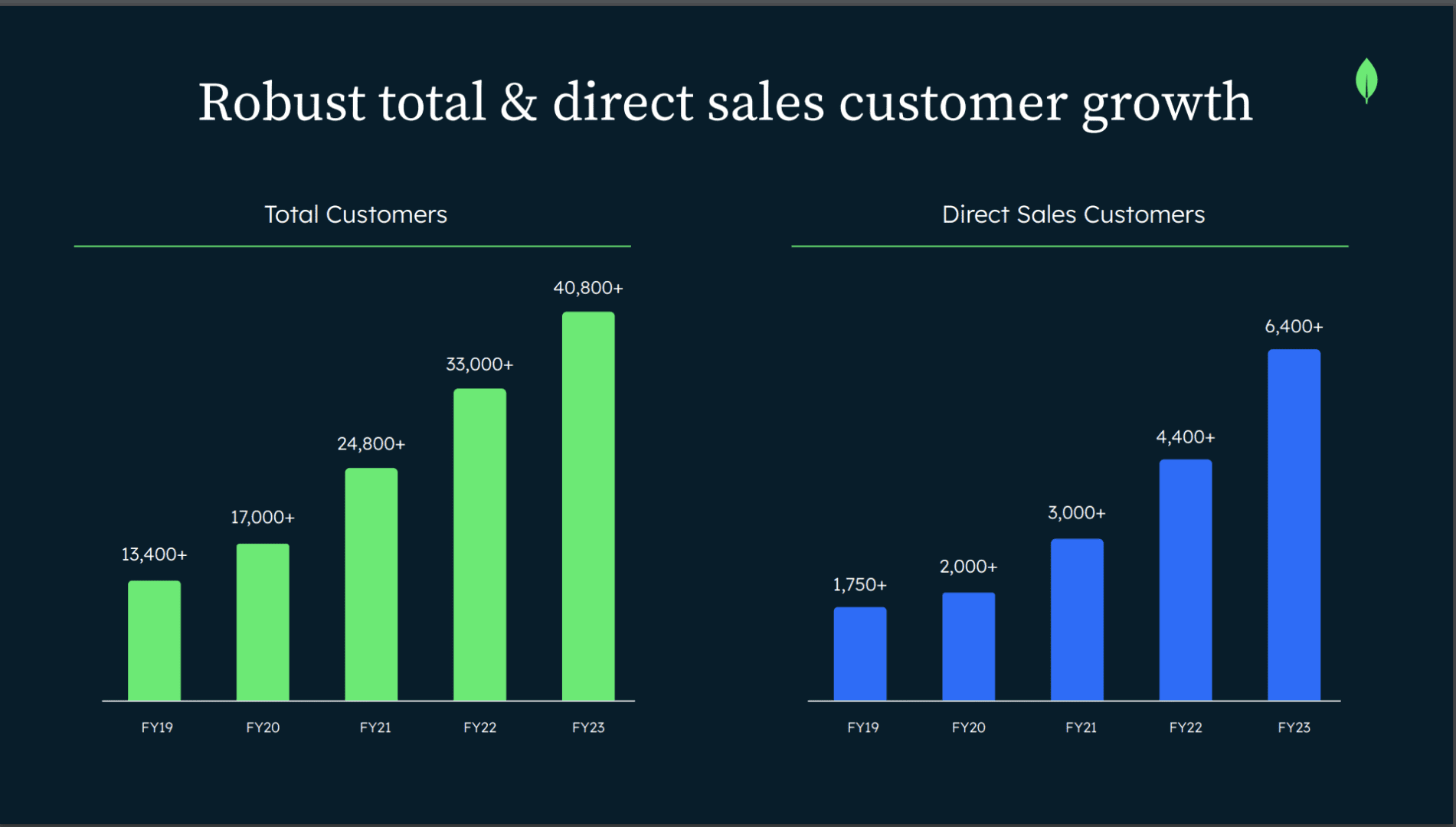

#3. New Customer Count Up 24%. A Very Good Sign. These days many are relying on upselling their existing base and raising prices to keep top-line growth going. That’s OK, but it doesn’t add real long-term value. Adding new customers does, ideally with customer count growing at least half as fast as revenue. Mongo’s doing better than that, adding +24% customers while growing +29% overall. A very bullish sign for the future.

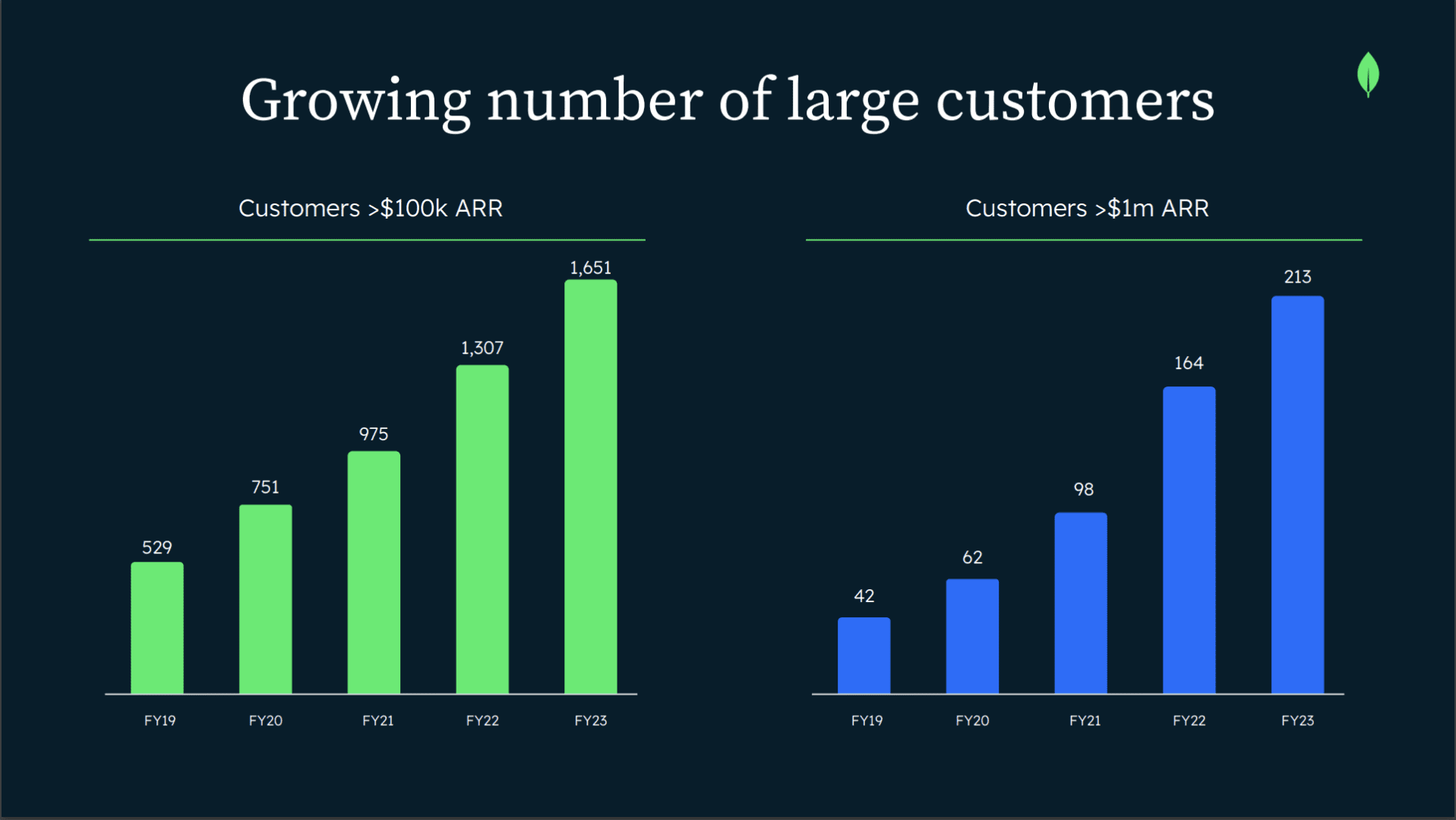

#4. $1M+ Customers Up 30%. Even with economic headwinds, $1M+ customers still fuel Mongo’s growth.

#5. Average Customer Spend More Than Doubles After 2 Years. High NRR is magical, but NRR as a metric isn’t a GAAP metric. It can be gamed a bit, and is subject to interpretation. But you know what isn’t? Revenue growth. That’s what really matters. And here you can see the magic in Mongo’s business model. After 24 months, the average customer has grown 2.1x. And that includes its biggest customers. They still grow 2x in spend over the first 24 months. Wow.
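To put that 2.1x figure in annual terms, here is a quick sketch that converts a cumulative spend multiple into an equivalent yearly growth rate. It assumes spend compounds smoothly across the 24 months, which is a simplification the numbers above don't confirm, and the class and method names are just for illustration.

```java
public class ExpansionMath {
    // Converts a cumulative spend multiple over `months` into an
    // equivalent compound annual growth rate (0.10 means 10% a year).
    // Assumes smooth compounding, which real customer spend may not follow.
    static double annualizedGrowth(double multiple, int months) {
        return Math.pow(multiple, 12.0 / months) - 1.0;
    }

    public static void main(String[] args) {
        double rate = annualizedGrowth(2.1, 24);
        System.out.printf("Implied annual expansion: %.1f%%%n", rate * 100);
    }
}
```

Under that assumption, growing 2.1x in 24 months works out to roughly 45% a year from the average existing customer.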

And a few other interesting notes:

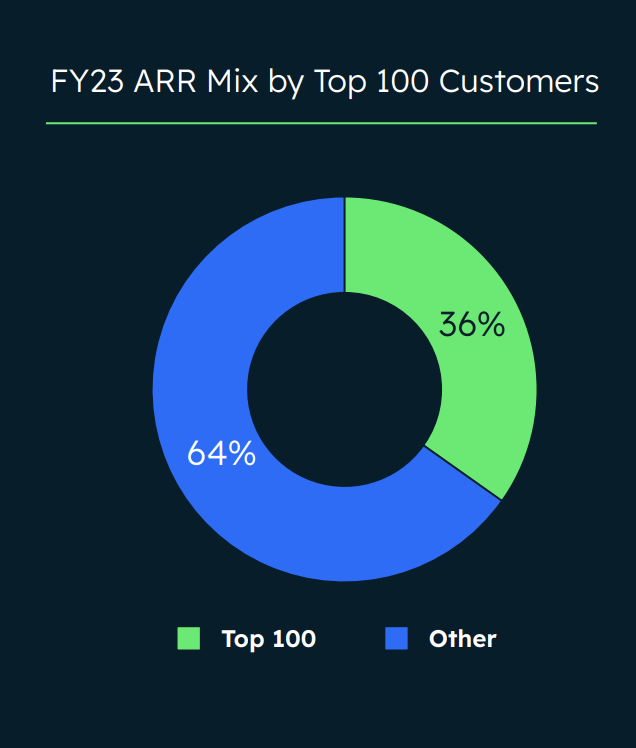

#6. Top 100 Customers are 33% of Revenue, and Enterprise Customers are 75% of Revenue. Anyone can start with Mongo, but the really big guys do … spend a lot! 75% of its revenue is Enterprise, even though that’s a small percent of total customer count. Yes, Mongo is PLG, at least in part. But it leans in big with a sales-driven motion for big customers as their accounts scale.

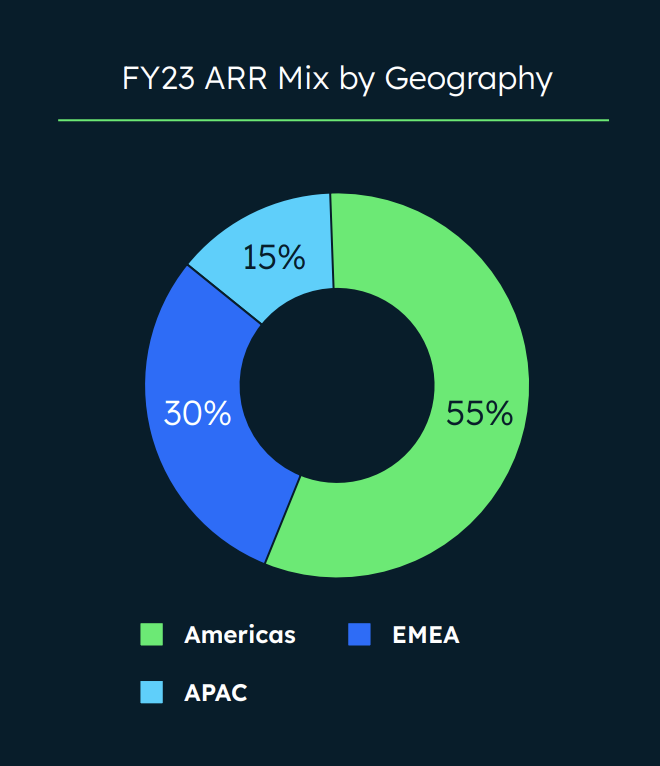

#7. 45% of Revenue Outside North America. Go global if you can, folks!

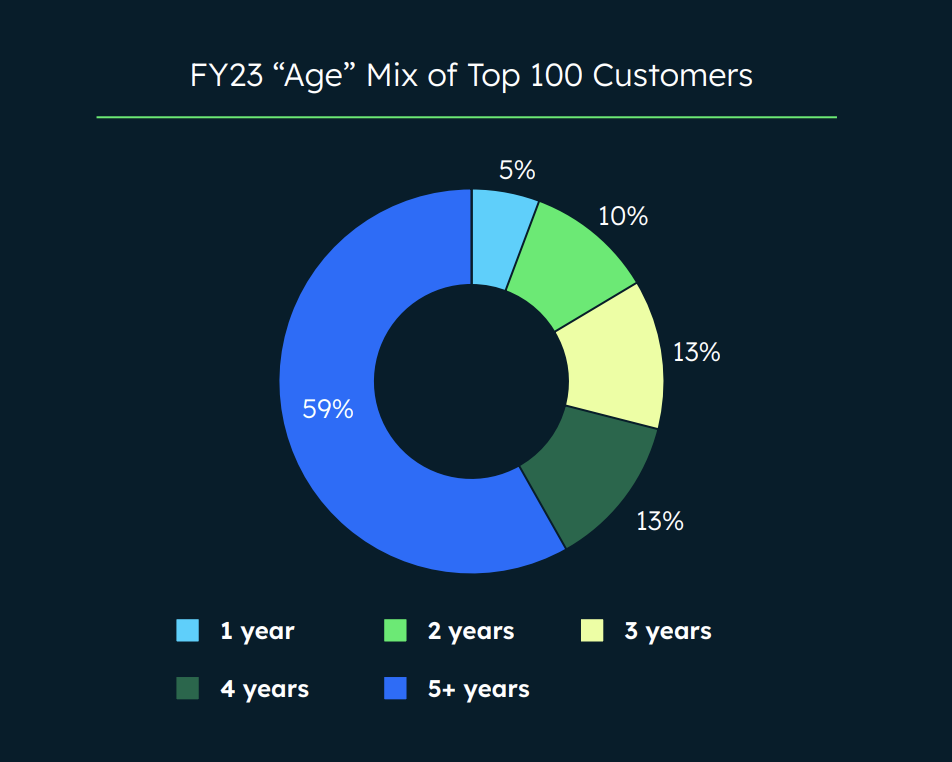

#8. Most of Its Top 100 Customers Have Been Customers for 5+ Years. What you’d expect from the account expansion math above, but very interesting to see. Go long, invest long. Keep them happy. You don’t always have to close a $1M deal … upfront. You can grow into them, too. Mongo does. It earns it.

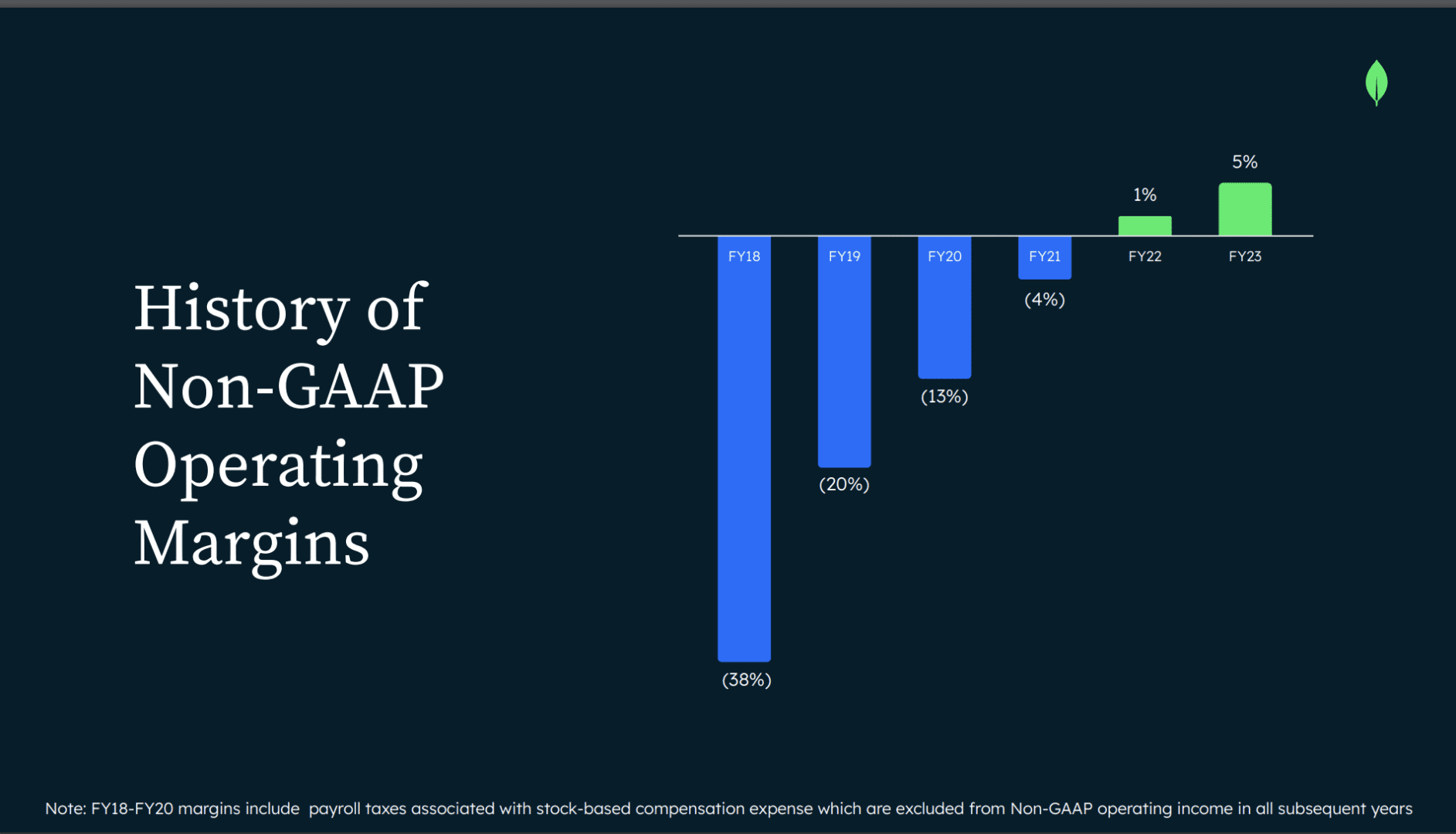

#9. MongoDB, Too, Has Gotten More Efficient. It finally has positive non-GAAP operating margins. How efficient should you be in SaaS? How efficient can you be? Despite having a partial PLG motion and very high NRR, Mongo has invested in growth. And it wasn’t “profitable” until 2023. Until, well, probably until it had to be. But then it got there fast, like everyone from Monday to PagerDuty to HubSpot. Still, they waited, and they have one of the highest public multiples in SaaS and Cloud. Efficiency is a complicated metric. Mongo still isn’t wildly efficient, even at $1.5 Billion in ARR. But it’s wildly successful.

Wow, just an epic product, CEO, business model, and success story.

And there’s a great deep dive from SaaStr Annual with Mongo’s CEO Dev Ittycheria and Jyoti Bansal, founder of AppDynamics and CEO of Harness.io.

MMS • RSS

We released a new market study on the Global NoSQL Market with 100+ market data tables, pie charts, graphs and figures, along with easy-to-understand detailed analysis. At present, the market is developing its presence. The research report presents a complete assessment of the market and contains future trends, current growth factors, attentive opinions, facts, and industry-validated market data. The research study provides estimates for the Global NoSQL forecast till 2030*. Some of the key players covered in this study are IBM Corporation, Aerospike Inc, MarkLogic Corporation, Hibernate, MariaDB, Oracle Database, Neo technology, MongoDB, Basho Technologies, Couchbase, PostgreSQL.

Click to get Global NoSQL Market Research Sample PDF Copy Here @: marketreports.info/sample/45470/NoSQL

Important Features that are under offering & key highlights of the report:

1) Which companies are currently profiled in the report?

The following players are currently profiled in the report: “IBM Corporation, Aerospike Inc, MarkLogic Corporation, Hibernate, MariaDB, Oracle Database, Neo technology, MongoDB, Basho Technologies, Couchbase, PostgreSQL”

** List of companies mentioned may vary in the final report subject to Name Change / Merger etc.

2) Can we add or profile a new company as per our need?

Yes, we can add or profile a new company in the report as per client need. Final confirmation will be provided by the research team depending upon the difficulty of the survey.

** Data availability will be confirmed by the research team in the case of a privately held company. Up to 3 players can be added at no added cost.

3) Which regional segments are covered? Can a specific country of interest be added?

Currently, the research report gives special attention to and focuses on the following regions:

North America, Europe, Asia-Pacific etc

** One country of specific interest can be included at no added cost. For inclusion of more regional segments, the quote may vary.

4) Is inclusion of additional segmentation / market breakdown possible?

Yes, inclusion of additional segmentation / market breakdown is possible subject to data availability and difficulty of survey. However, a detailed requirement needs to be shared with our research team before final confirmation is given to the client.

** Depending upon the requirement, the deliverable time and quote will vary.

To capture Global NoSQL market dynamics, the worldwide NoSQL market is analysed across major global regions. HTF MI also provides customized regional and country-level reports for the following areas.

• North America: United States, Canada, and Mexico.

• South & Central America: Argentina, Chile, and Brazil.

• Middle East & Africa: Saudi Arabia, UAE, Turkey, Egypt and South Africa.

• Europe: UK, France, Italy, Germany, Spain, and Russia.

• Asia-Pacific: India, China, Japan, South Korea, Indonesia, Singapore, and Australia.

2-page profiles for 10+ leading manufacturers and 10+ leading retailers are included, along with 3 years of financial history to illustrate the recent performance of the market. A revised and updated discussion for 2022 of the key macro and micro market influences impacting the sector is provided, with thought-provoking qualitative comment on future opportunities and threats. This report combines statistically relevant quantitative data from the industry with relevant and insightful qualitative comment and analysis.

By Type

– Key-Value Store

– Document Databases

– Column Based Stores

– Graph Database

By Application

– Retail

– Online Game Development

– IT

– Social Network Development

– Web Applications Management

– Others

Geographical Analysis: North America, Europe, Asia-Pacific etc

To give a deeper view of market size, the competitive landscape is provided, i.e. Revenue (Million USD) by Players (2013-2022) and Revenue Market Share (%) by Players (2013-2022), followed by a qualitative analysis of market concentration rate, product/service differences, new entrants and future technological trends.

Competitive Analysis:

The key players are focusing heavily on innovation in production technologies to improve efficiency and shelf life. The best long-term growth opportunities for this sector can be captured by ensuring ongoing process improvements and the financial flexibility to invest in the optimal strategies. The company profile section for players such as IBM Corporation, Aerospike Inc, MarkLogic Corporation, Hibernate, MariaDB, Oracle Database, Neo technology, MongoDB, Basho Technologies, Couchbase, PostgreSQL includes basic information such as legal name, website, headquarters, market position, historical background and top 5 closest competitors by market capitalization / revenue, along with contact information. Each player’s / manufacturer’s revenue figures, growth rate and gross profit margin are provided in an easy-to-understand tabular format for the past 5 years, with a separate section on recent developments such as mergers, acquisitions or new product/service launches.

Buy Full Copy Global NoSQL Report 2022 @ marketreports.info/checkout?buynow=45470/NoSQL

In this study, the years considered to estimate the market size of Global NoSQL are as follows:

History Year: 2013-2021

Base Year: 2021

Estimated Year: 2022

Forecast Year: 2022 to 2030

Key Stakeholders/Global Reports:

NoSQL Manufacturers

NoSQL Distributors/Traders/Wholesalers

NoSQL Subcomponent Manufacturers

Industry Association

Downstream Vendors

Browse the Full Report @: marketreports.info/industry-report/45470/NoSQL

Actual Numbers & In-Depth Analysis, Business Opportunities, Market Size Estimation Available in Full Report.

Thanks for reading this article. You can also get individual chapter-wise sections or region-wise report versions for North America, Europe or Asia.

About Us:

Marketreports.info is the Credible Source for Gaining the Market Reports that will provide you with the Lead Your Business Needs. The market is changing rapidly with the ongoing expansion of the industry. Advancement in technology has provided today’s businesses with multifaceted advantages resulting in daily economic shifts. Thus, it is very important for a company to comprehend the patterns of the market movements in order to strategize better. An efficient strategy offers the companies a head start in planning and an edge over the competitors.

Contact Us

Market Reports

Phone (UK): +44 141 628 5998

Email: sales@marketreports.info

Web: https://www.marketreports.info