Month: August 2023

2023 Global NoSQL Market Emerging Trends and Opportunities: A Comprehensive Research Report

MMS • RSS

PRESS RELEASE

Published August 22, 2023

“Final Report will add the analysis of the impact of COVID-19 on this industry.”

The Global “NoSQL Market” Research, as presented in the 115-page report, provides comprehensive insights into the market based on types, applications, and geographical locations. These categories are further subdivided to offer a deeper understanding of NoSQL market dynamics. The study highlights the key players in the industry, outlining the top businesses based on market share, market size, growth rate, and other relevant factors, contributing to a comprehensive competitive landscape analysis. Additionally, the report takes into consideration the impact of COVID-19, emerging market trends, mergers and acquisitions, and regional conflicts, offering a holistic view of the market’s current state and future prospects.

Get a Sample Copy of the report: https://www.absolutereports.com/enquiry/request-sample/22376195

The global NoSQL market size was valued at USD 7520.13 million in 2022 and is expected to expand at a CAGR of 31.08% during the forecast period, reaching USD 38144.35 million by 2028.
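The three figures quoted above (2022 value, CAGR, 2028 value) can be checked against each other with the standard compound-growth formula. The sketch below is only a consistency check, assuming six annual compounding periods from 2022 to 2028; the function names are illustrative and do not come from the report.

```python
# Consistency check of the quoted market figures using the standard
# compound annual growth rate (CAGR) formula. Assumption: growth compounds
# once per year over the six years from 2022 to 2028.

def project_value(start_value: float, cagr: float, years: int) -> float:
    """Value after `years` periods of compound growth at rate `cagr`."""
    return start_value * (1 + cagr) ** years


def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Annual growth rate that takes start_value to end_value in `years` periods."""
    return (end_value / start_value) ** (1 / years) - 1


START_2022 = 7520.13   # USD million, as quoted in the release
END_2028 = 38144.35    # USD million, as quoted in the release
CAGR = 0.3108          # 31.08%, as quoted in the release
YEARS = 2028 - 2022

projected_2028 = project_value(START_2022, CAGR, YEARS)
recovered_cagr = implied_cagr(START_2022, END_2028, YEARS)

print(f"Projected 2028 value: USD {projected_2028:,.2f} million")
print(f"CAGR implied by the two endpoints: {recovered_cagr:.2%}")
```

Under that assumption the projected value lands within a small rounding margin of the quoted 2028 figure, so the three numbers are mutually consistent.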

Major Players in NoSQL market are:

- Microsoft Corporation

- Neo Technology, Inc.

- MarkLogic Corporation

- Aerospike, Inc.

- DataStax, Inc.

- Google LLC

- Amazon Web Services, Inc.

- PostgreSQL

- Couchbase, Inc.

- Objectivity, Inc.

- MongoDB, Inc.

NoSQL Market by Types:

- Key-Value Store

- Document Databases

- Column Based Stores

- Graph Database

- DDDD

NoSQL Market by Applications:

- Retail

- Gaming

- IT

- Others

Key Factors Considered

COVID-19

Amid the COVID-19 crisis, the NoSQL market has definitely taken a hit. The report describes the market scenario during and after the pandemic from the perspective of upstream raw materials, major market participants, downstream major customers, etc. Other aspects, such as changes in consumer behavior, demand, transport capacity, and trade flow under COVID-19, were also taken into consideration during the research.

Regional Conflict / Russia-Ukraine War

The report also presents the impact of regional conflict on this market to help readers understand how the market has been adversely affected and how it is going to evolve in the years to come.

Challenges and Opportunities

The report reveals factors that may create opportunities and boost profits for market players, as well as challenges that may restrain or even threaten their development, which can shed light on strategic decisions and implementation.

To Understand How Covid-19 Impact Is Covered in This Report – https://www.absolutereports.com/enquiry/request-covid19/22376195

Geographically, the detailed analysis of consumption, revenue, market share and growth rate, historical data and forecast:

- United States

- Europe

- China

- Japan

- India

- Southeast Asia

- Latin America

- Middle East and Africa

Years considered for this report:

- Historical Years: 2018-2022

- Base Year: 2022

- Estimated Year: 2023

- Forecast Period: 2022-2029

Client Focus

- Does this report consider the impact of COVID-19 and the Russia-Ukraine war on the NoSQL market?

Yes. As COVID-19 and the Russia-Ukraine war are profoundly affecting global supply chain relationships and the raw material price system, we have taken them into consideration throughout the research, and in Chapters 1.7, 2.7, 4.X.1, 7.5, and 8.7 we elaborate at full length on the impact of the pandemic and the war on the NoSQL industry.

- How do you determine the list of the key players included in the report?

With the aim of clearly revealing the competitive situation of the industry, we concretely analyze not only the leading enterprises that have a voice on a global scale, but also the regional small and medium-sized companies that play key roles and have plenty of potential growth.

Please find the key player list in Summary.

- What are your main data sources?

Both Primary and Secondary data sources are being used while compiling the report.

Primary sources include extensive interviews of key opinion leaders and industry experts (such as experienced front-line staff, directors, CEOs, and marketing executives), downstream distributors, as well as end-users.

Secondary sources include the research of the annual and financial reports of the top companies, public files, new journals, etc. We also cooperate with some third-party databases.

Please find a more complete list of data sources in Chapters 11.2.1 and 11.2.2.

- Can I modify the scope of the report and customize it to suit my requirements?

Yes. Multi-dimensional, in-depth, high-quality customization can help our customers precisely grasp market opportunities, confront market challenges, formulate sound market strategies, and act promptly, giving them sufficient time and room for market competition.

Inquire or share your questions, if any, before purchasing this report – https://www.absolutereports.com/enquiry/pre-order-enquiry/22376195

Chapters included in the NoSQL market report:

- Chapter 1 mainly defines the market scope and introduces the macro overview of the industry, with an executive summary of different market segments (by type, application, region, etc.), including the definition, market size, and trend of each market segment.

- Chapter 2 provides a qualitative analysis of the current status and future trends of the market. Industry Entry Barriers, market drivers, market challenges, emerging markets, consumer preference analysis, together with the impact of the COVID-19 outbreak will all be thoroughly explained.

- Chapter 3 analyzes the current competitive situation of the market by providing data regarding the players, including their sales volume and revenue with corresponding market shares, price and gross margin. In addition, information about market concentration ratio, mergers, acquisitions, and expansion plans will also be covered.

- Chapter 4 focuses on the regional market, presenting detailed data (i.e., sales volume, revenue, price, gross margin) of the most representative regions and countries in the world.

- Chapter 5 provides the analysis of various market segments according to product types, covering sales volume, revenue along with market share and growth rate, plus the price analysis of each type.

- Chapter 6 shows the breakdown data of different applications, including the consumption and revenue with market share and growth rate, with the aim of helping the readers to take a close-up look at the downstream market.

- Chapter 7 provides a combination of quantitative and qualitative analyses of the market size and development trends in the next five years. The forecast information of the whole, as well as the breakdown market, offers the readers a chance to look into the future of the industry.

- Chapter 8 is the analysis of the whole market industrial chain, covering key raw materials suppliers and price analysis, manufacturing cost structure analysis, alternative product analysis, also providing information on major distributors, downstream buyers, and the impact of COVID-19 pandemic.

- Chapter 9 shares a list of the key players in the market, together with their basic information, product profiles, market performance (i.e., sales volume, price, revenue, gross margin), recent development, SWOT analysis, etc.

- Chapter 10 is the conclusion of the report which helps the readers to sum up the main findings and points.

- Chapter 11 introduces the market research methods and data sources.

Some of the key questions answered in this report:

- What will the NoSQL market growth rate be during the forecast period?

- Which are the key factors driving the NoSQL market?

- What will the size of the NoSQL market be by 2029?

- Which region is expected to hold the highest market share in the NoSQL market?

- What trends, challenges and barriers will impact the development and sizing of the Global NoSQL market?

- What are the NoSQL market opportunities in the global NoSQL Industry?

Purchase this Report (Price 3250 USD for a Single-User License) – https://www.absolutereports.com/purchase/22376195

Detailed TOC of Global NoSQL Industry Research Report

1 NoSQL Market Overview

1.1 Product Overview and Scope of NoSQL Market

1.2 NoSQL Market Segment by Type

1.2.1 Global NoSQL Market Sales Volume and CAGR (%) Comparison by Type

1.3 Global NoSQL Market Segment by Application

1.3.1 NoSQL Market Consumption (Sales Volume) Comparison by Application

1.4 Global NoSQL Market, Region Wise

1.4.1 Global NoSQL Market Size (Revenue) and CAGR (%) Comparison by Region

1.4.2 United States NoSQL Market Status and Prospect

1.4.3 Europe NoSQL Market Status and Prospect

1.4.4 China NoSQL Market Status and Prospect

1.4.5 Japan NoSQL Market Status and Prospect

1.4.6 India NoSQL Market Status and Prospect

1.4.7 Southeast Asia NoSQL Market Status and Prospect

1.4.8 Latin America NoSQL Market Status and Prospect

1.4.9 Middle East and Africa NoSQL Market Status and Prospect

1.5 Global Market Size of NoSQL

1.5.1 Global NoSQL Market Revenue Status and Outlook

1.5.2 Global NoSQL Market Sales Volume Status and Outlook

1.6 Global Macroeconomic Analysis

1.7 The impact of the Russia-Ukraine war on the NoSQL Market

2 Industry Outlook

2.1 NoSQL Industry Technology Status and Trends

2.2 Industry Entry Barriers

2.2.1 Analysis of Financial Barriers

2.2.2 Analysis of Technical Barriers

2.2.3 Analysis of Talent Barriers

2.2.4 Analysis of Brand Barrier

2.3 NoSQL Market Drivers Analysis

2.4 NoSQL Market Challenges Analysis

2.5 Emerging Market Trends

2.6 Consumer Preference Analysis

2.7 NoSQL Industry Development Trends under COVID-19 Outbreak

2.7.1 Global COVID-19 Status Overview

2.7.2 Influence of COVID-19 Outbreak on NoSQL Industry Development

3 Global NoSQL Market Landscape by Player

3.1 Global NoSQL Sales Volume and Share by Player (2018-2023)

3.2 Global NoSQL Revenue and Market Share by Player (2018-2023)

3.3 Global NoSQL Average Price by Player (2018-2023)

3.4 Global NoSQL Gross Margin by Player (2018-2023)

3.5 NoSQL Market Competitive Situation and Trends

3.5.1 NoSQL Market Concentration Rate

3.5.2 NoSQL Market Share of Top 3 and Top 6 Players

3.5.3 Mergers and Acquisitions, Expansion

4 Global NoSQL Sales Volume and Revenue Region Wise (2018-2023)

4.1 Global NoSQL Sales Volume and Market Share, Region Wise (2018-2023)

4.2 Global NoSQL Revenue and Market Share, Region Wise (2018-2023)

4.3 Global NoSQL Sales Volume, Revenue, Price and Gross Margin (2018-2023)

4.4 United States NoSQL Sales Volume, Revenue, Price and Gross Margin (2018-2023)

4.4.1 United States NoSQL Market Under COVID-19

4.5 Europe NoSQL Sales Volume, Revenue, Price and Gross Margin (2018-2023)

4.5.1 Europe NoSQL Market Under COVID-19

4.6 China NoSQL Sales Volume, Revenue, Price and Gross Margin (2018-2023)

4.6.1 China NoSQL Market Under COVID-19

4.7 Japan NoSQL Sales Volume, Revenue, Price and Gross Margin (2018-2023)

4.7.1 Japan NoSQL Market Under COVID-19

4.8 India NoSQL Sales Volume, Revenue, Price and Gross Margin (2018-2023)

4.8.1 India NoSQL Market Under COVID-19

4.9 Southeast Asia NoSQL Sales Volume, Revenue, Price and Gross Margin (2018-2023)

4.9.1 Southeast Asia NoSQL Market Under COVID-19

4.10 Latin America NoSQL Sales Volume, Revenue, Price and Gross Margin (2018-2023)

4.10.1 Latin America NoSQL Market Under COVID-19

4.11 Middle East and Africa NoSQL Sales Volume, Revenue, Price and Gross Margin (2018-2023)

4.11.1 Middle East and Africa NoSQL Market Under COVID-19

5 Global NoSQL Sales Volume, Revenue, Price Trend by Type

5.1 Global NoSQL Sales Volume and Market Share by Type (2018-2023)

5.2 Global NoSQL Revenue and Market Share by Type (2018-2023)

5.3 Global NoSQL Price by Type (2018-2023)

5.4 Global NoSQL Sales Volume, Revenue and Growth Rate by Type (2018-2023)

6 Global NoSQL Market Analysis by Application

6.1 Global NoSQL Consumption and Market Share by Application (2018-2023)

6.2 Global NoSQL Consumption Revenue and Market Share by Application (2018-2023)

6.3 Global NoSQL Consumption and Growth Rate by Application (2018-2023)

7 Global NoSQL Market Forecast (2023-2028)

7.1 Global NoSQL Sales Volume, Revenue Forecast (2023-2028)

7.1.1 Global NoSQL Sales Volume and Growth Rate Forecast (2023-2028)

7.1.2 Global NoSQL Revenue and Growth Rate Forecast (2023-2028)

7.1.3 Global NoSQL Price and Trend Forecast (2023-2028)

7.2 Global NoSQL Sales Volume and Revenue Forecast, Region Wise (2023-2028)

7.2.1 United States NoSQL Sales Volume and Revenue Forecast (2023-2028)

7.2.2 Europe NoSQL Sales Volume and Revenue Forecast (2023-2028)

7.2.3 China NoSQL Sales Volume and Revenue Forecast (2023-2028)

7.2.4 Japan NoSQL Sales Volume and Revenue Forecast (2023-2028)

7.2.5 India NoSQL Sales Volume and Revenue Forecast (2023-2028)

7.2.6 Southeast Asia NoSQL Sales Volume and Revenue Forecast (2023-2028)

7.2.7 Latin America NoSQL Sales Volume and Revenue Forecast (2023-2028)

7.2.8 Middle East and Africa NoSQL Sales Volume and Revenue Forecast (2023-2028)

7.3 Global NoSQL Sales Volume, Revenue and Price Forecast by Type (2023-2028)

7.3.1 Global NoSQL Revenue and Growth Rate of Photo (2023-2028)

7.3.2 Global NoSQL Revenue and Growth Rate of Video (2023-2028)

7.4 Global NoSQL Consumption Forecast by Application (2023-2028)

7.4.1 Global NoSQL Consumption Value and Growth Rate of Commercial (2023-2028)

7.4.2 Global NoSQL Consumption Value and Growth Rate of Editorial

7.5 NoSQL Market Forecast Under COVID-19

8 NoSQL Market Upstream and Downstream Analysis

8.1 NoSQL Industrial Chain Analysis

8.2 Key Raw Materials Suppliers and Price Analysis

8.3 Manufacturing Cost Structure Analysis

8.3.1 Labor Cost Analysis

8.3.2 Energy Costs Analysis

8.3.3 R&D Costs Analysis

8.4 Alternative Product Analysis

8.5 Major Distributors of NoSQL Analysis

8.6 Major Downstream Buyers of NoSQL Analysis

8.7 Impact of COVID-19 and the Russia-Ukraine war on the Upstream and Downstream in the NoSQL Industry

9 Players Profiles

9.1 Manufacture 1

9.1.1 Manufacture 1 Basic Information, Manufacturing Base, Sales Region and Competitors

9.1.2 NoSQL Product Profiles, Application and Specification

9.1.3 Manufacture 1 Market Performance (2018-2023)

9.1.4 Recent Development

9.1.5 SWOT Analysis

10 Research Findings and Conclusion

11 Appendix

11.1 Methodology

11.2 Research Data Source

For Detailed TOC – https://www.absolutereports.com/TOC/22376195#TOC

Contact Us:

Absolute Reports

Phone: US +1 424 253 0807

UK +44 203 239 8187

Email: [email protected]

Web: https://www.absolutereports.com

Press Release Distributed by The Express Wire

To view the original version on The Express Wire visit 2023 Global NoSQL Market Emerging Trends and Opportunities: A Comprehensive Research Report

TheExpressWire

MMS • RSS

The worldwide “Distributed Database Management Systems Market” 2023 Research Report presents a professional and complete analysis of the Global Distributed Database Management Systems Market in its current state. The report covers development plans and policies along with Distributed Database Management Systems manufacturing processes and price structures. It offers an analytical view of the industry by studying factors such as Distributed Database Management Systems Market growth, consumption volume, market size, revenue, market share, market trends, and industry cost structures during the forecast period from 2023 to 2030. It includes in-depth research on the state of the Distributed Database Management Systems Market and the global competitive landscape, and analyzes the market's present potential and future prospects from various angles.

The global Distributed Database Management Systems market report covers international markets as well as development trends, competitive landscape analysis, and the development status of key regions. Development policies and plans are discussed, and manufacturing processes and cost structures are analyzed. The report also states import/export consumption, supply and demand figures, cost, price, revenue, and gross margins. The Global Distributed Database Management Systems market 2023 research provides a basic overview of the industry, including definitions, classifications, applications, and industry chain structure.

Get the Sample Copy of the Report at: https://www.worldwidemarketreports.com/sample/973647

Our sample report includes:

➤ Introduction, overview, and in-depth industry analysis updated for 2030.

➤ A research report spanning over 115+ pages, including updated research.

➤ Chapter-wise guidance on specific requests.

➤ Updated regional analysis for 2023 with the graphical representation of size, share, and trends.

➤ An updated list of tables and figures.

➤ Top market players with their business strategy, sales volume, and revenue analysis.

Scope of the Distributed Database Management Systems Market:

The Global Distributed Database Management Systems market is anticipated to rise at a considerable rate during the forecast period, between 2023 and 2030. In 2023, the market is growing at a steady rate, and with the rising adoption of strategies by key players, the market is expected to rise over the projected horizon.

The report also gives a 360-degree overview of the competitive landscape of the industry, covering:

- Oracle

- MongoDB

- Microsoft

- IBM

- Amazon Web Services (AWS)

- Cassandra

- Couchbase

- Redis

- YugabyteDB

By Types:

- Relational Distributed Databases

- NoSQL Distributed Databases

By Applications:

- Big Data Analytics

- IoT Data Management

- Financial Services

- E-commerce and Retail

- Healthcare

- Social Media and Content Management

- Others

Key Market Segmentation:

WMR provides an analysis of the key trends in each sub-segment of the global Distributed Database Management Systems market, along with forecasts at the global, regional, and country levels from 2023 to 2030. Our report has categorized the market based on type, offering, technology, system, and end-use industry. The biggest highlight of the report is that it provides companies in the industry with a strategic analysis of the impact of COVID-19. The report also analyzes the markets of the leading 20 countries and introduces the market potential of these countries.

Market Drivers: A few important variables, including rising consumer demand for the product, effective marketing tactics in new markets, and significant financial investments in product development, are the primary drivers of the Distributed Database Management Systems market.

Market Challenges: Easy availability to rivals is one of the challenges in the market for Distributed Database Management Systems. Another barrier in the market is the low cost of alternatives. However, firms intend to overcome this obstacle by using cutting-edge technology and managing prices, which will subsequently boost product demand. Researchers have also highlighted major hurdles so that market participants can avoid risks, alter their plans, and carry on with operations. By doing this, producers will be able to properly manage their resources without sacrificing product quality or timely market delivery.

Opportunities: Businesses can take advantage of market opportunities by putting the proper plans in place. The prospects described in the report assist stakeholders and report buyers in properly planning their investments and obtaining the most return on investment.

Market Trends: The market sees a few developments that assist businesses in developing more successful tactics. The report discusses the current trends using the most recent data. Customers can get an idea of the upcoming offerings on the market, and businesses can use this information to plan greatly improved solutions.

Geographically, the detailed analysis of consumption, revenue, market share, and growth rate of the following regions:

➳ North America (United States, Canada, Mexico)

➳ Europe (Germany, UK, France, Italy, Spain, Others)

➳ Asia-Pacific (China, Japan, India, South Korea, Southeast Asia, Others)

➳ The Middle East and Africa (Saudi Arabia, UAE, South Africa, Others)

➳ South America (Brazil, Others)

Global Distributed Database Management Systems Market Development Strategy Pre and Post COVID-19, by Corporate Strategy Analysis, Landscape, Type, Application, and Leading 20 Countries covers and analyzes the potential of the global Distributed Database Management Systems industry, providing statistical information about market dynamics, growth factors, major challenges, PEST analysis, and market entry strategy Analysis, opportunities and forecasts.

Have a query about this report? Click here: https://www.worldwidemarketreports.com/quiry/973647

Valuable Points from Distributed Database Management Systems Market Research Report 2023-2030:

➼ Significant changes in Market dynamics.

➼ Reporting and assessment of recent industry developments.

➼ A complete background analysis, which includes a valuation of the parent Distributed Database Management Systems Market.

➼ Current, Historical, and projected size of the Distributed Database Management Systems Market from the viewpoint of both value and volume.

➼ Distributed Database Management Systems Market segmentation according to Top Regions.

➼ Distributed Database Management Systems Market shares and strategies of key Manufacturers.

➼ Emerging Specific segments and regions for Distributed Database Management Systems Market.

➼ An objective valuation of the trajectory of the Market.

➼ Recommendations to Top Companies for reinforcement of their foothold in the market.

FAQs:

[1] Who are the global manufacturers of Distributed Database Management Systems, what are their share, price, volume, competitive landscape, SWOT analysis, and future growth plans?

[2] What are the key drivers, growth/restraining factors, and challenges of Distributed Database Management Systems?

[3] How is the Distributed Database Management Systems industry expected to grow in the projected period?

[4] How has COVID-19 affected the Distributed Database Management Systems industry and is there any change in the regulatory policy framework?

[5] What are the key areas of applications and product types of the Distributed Database Management Systems industry that can expect huge demand during the forecast period?

[6] What are the key offerings and new strategies adopted by Distributed Database Management Systems players?

Reasons to Buy:

- Save and reduce time carrying out entry-level research by identifying the growth, size, leading players, and segments in the global Distributed Database Management Systems Market.

- Highlights key business priorities in order to guide the companies to reform their business strategies and establish themselves in the wide geography.

- The key findings and recommendations highlight crucial progressive industry trends in the Distributed Database Management Systems Market, thereby allowing players to develop effective long-term strategies in order to garner their market revenue.

- Develop/modify business expansion plans by using substantial growth offerings in developed and emerging markets.

- Scrutinize in-depth global market trends and outlook coupled with the factors driving the market, as well as those restraining the growth to a certain extent.

- Enhance the decision-making process by understanding the strategies that underpin commercial interest with respect to products, segmentation, and industry verticals.

Buy this report and Get Up to 70% Discount At: https://www.worldwidemarketreports.com/promobuy/973647

About WMR:

Worldwide Market Reports is your one-stop repository of detailed and in-depth market research reports compiled by an extensive list of publishers from across the globe. We offer reports across virtually all domains and an exhaustive list of sub-domains under the sun. The in-depth market analysis by some of the most vastly experienced analysts provides our diverse range of clients from across all industries with vital decision-making insights to plan and align their market strategies in line with current market trends.

Contact Us:

Mr. Shah

Worldwide Market Reports,

Tel: U.S. +1-415-871-0703

U.K. +44-203-289-4040

Japan +81-50-5539-1737

Email: [email protected]

Website: https://www.worldwidemarketreports.com/

MMS • RSS

Keybank National Association OH grew its holdings in MongoDB, Inc. (NASDAQ:MDB) by 20.9% in the 1st quarter, according to its most recent filing with the SEC. The firm owned 1,915 shares of the company’s stock after buying an additional 331 shares during the quarter. Keybank National Association OH’s holdings in MongoDB were worth $446,000 at the end of the most recent quarter.

Several other institutional investors have also recently made changes to their positions in MDB. Raymond James & Associates boosted its position in shares of MongoDB by 32.0% during the first quarter. Raymond James & Associates now owns 4,922 shares of the company’s stock valued at $2,183,000 after purchasing an additional 1,192 shares in the last quarter. PNC Financial Services Group Inc. raised its stake in MongoDB by 19.1% during the first quarter. PNC Financial Services Group Inc. now owns 1,282 shares of the company’s stock valued at $569,000 after buying an additional 206 shares during the last quarter. MetLife Investment Management LLC purchased a new position in MongoDB during the first quarter valued at $1,823,000. Panagora Asset Management Inc. raised its stake in MongoDB by 9.8% during the first quarter. Panagora Asset Management Inc. now owns 1,977 shares of the company’s stock valued at $877,000 after buying an additional 176 shares during the last quarter. Finally, Vontobel Holding Ltd. raised its stake in MongoDB by 100.3% during the first quarter. Vontobel Holding Ltd. now owns 2,873 shares of the company’s stock valued at $1,236,000 after buying an additional 1,439 shares during the last quarter. Institutional investors own 89.22% of the company’s stock.
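The percentage changes quoted for each investor follow from the share counts: the increase is the number of shares added divided by the position held before the purchase. The sketch below reproduces that arithmetic for the figures in this article; the helper name `pct_increase` is illustrative, and the assumption is that each "now owns" figure already includes the additional shares.

```python
# Reproduce the reported position increases from the share counts.
# Assumption: "now owns N shares after buying an additional K" means the
# prior position was N - K, so the increase is K / (N - K).

def pct_increase(shares_now: int, shares_added: int) -> float:
    """Percentage growth of a position, relative to the pre-purchase holding."""
    shares_before = shares_now - shares_added
    return shares_added / shares_before * 100


# (shares now owned, additional shares bought), as stated in the article
positions = {
    "Keybank National Association OH": (1915, 331),
    "Raymond James & Associates": (4922, 1192),
    "PNC Financial Services Group Inc.": (1282, 206),
    "Panagora Asset Management Inc.": (1977, 176),
    "Vontobel Holding Ltd.": (2873, 1439),
}

for investor, (now, added) in positions.items():
    print(f"{investor}: {pct_increase(now, added):.1f}%")
```

Each computed value matches the percentage the article reports to one decimal place.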

MongoDB Stock Up 1.0%

Shares of MDB stock opened at $362.04 on Wednesday. MongoDB, Inc. has a 1 year low of $135.15 and a 1 year high of $439.00. The company has a debt-to-equity ratio of 1.44, a quick ratio of 4.19 and a current ratio of 4.19. The stock’s 50 day simple moving average is $391.67 and its two-hundred day simple moving average is $296.73.

MongoDB (NASDAQ:MDB) last issued its quarterly earnings data on Thursday, June 1st. The company reported $0.56 earnings per share (EPS) for the quarter, beating analysts’ consensus estimates of $0.18 by $0.38. MongoDB had a negative return on equity of 43.25% and a negative net margin of 23.58%. The business had revenue of $368.28 million for the quarter, compared to the consensus estimate of $347.77 million. During the same period in the prior year, the business posted ($1.15) earnings per share. The company’s quarterly revenue was up 29.0% compared to the same quarter last year. Equities research analysts anticipate that MongoDB, Inc. will post -2.8 earnings per share for the current year.

Analyst Ratings Changes

A number of research analysts have issued reports on MDB shares. Royal Bank of Canada lifted their price objective on MongoDB from $400.00 to $445.00 in a research note on Friday, June 23rd. 58.com restated a “maintains” rating on shares of MongoDB in a research note on Monday, June 26th. KeyCorp boosted their price target on MongoDB from $372.00 to $462.00 and gave the stock an “overweight” rating in a research note on Friday, July 21st. Guggenheim cut MongoDB from a “neutral” rating to a “sell” rating and boosted their price target for the stock from $205.00 to $210.00 in a research note on Thursday, May 25th. They noted that the move was a valuation call. Finally, The Goldman Sachs Group boosted their price target on MongoDB from $420.00 to $440.00 in a research note on Friday, June 23rd. One research analyst has rated the stock with a sell rating, three have assigned a hold rating and twenty have assigned a buy rating to the company. Based on data from MarketBeat, the company has a consensus rating of “Moderate Buy” and an average target price of $378.09.

Insider Transactions at MongoDB

In other news, CRO Cedric Pech sold 360 shares of the stock in a transaction that occurred on Monday, July 3rd. The stock was sold at an average price of $406.79, for a total value of $146,444.40. Following the sale, the executive now directly owns 37,156 shares in the company, valued at $15,114,689.24. The sale was disclosed in a document filed with the SEC. Also, CAO Thomas Bull sold 516 shares of the stock in a transaction that occurred on Monday, July 3rd. The stock was sold at an average price of $406.78, for a total value of $209,898.48. Following the completion of the transaction, the chief accounting officer now directly owns 17,190 shares in the company, valued at approximately $6,992,548.20. This sale was also disclosed. Over the last three months, insiders have sold 102,220 shares of company stock worth $38,763,571. Company insiders currently own 4.80% of the stock.

MongoDB Profile

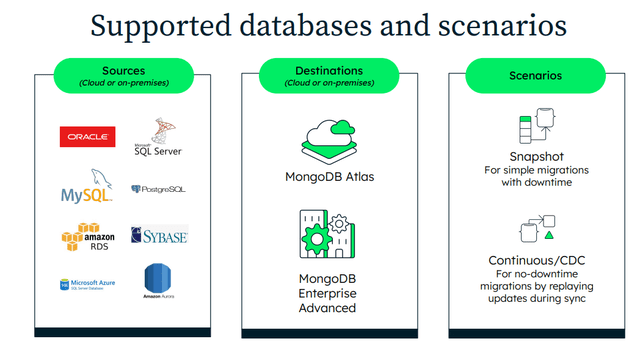

MongoDB, Inc provides general purpose database platform worldwide. The company offers MongoDB Atlas, a hosted multi-cloud database-as-a-service solution; MongoDB Enterprise Advanced, a commercial database server for enterprise customers to run in the cloud, on-premise, or in a hybrid environment; and Community Server, a free-to-download version of its database, which includes the functionality that developers need to get started with MongoDB.


MMS • Adrian Cockcroft

Transcript

Cockcroft: I’m Adrian Cockcroft. I’m going to talk to you about cloud provider sustainability, the current status and future directions. I also call this DevSusOps, so adding sustainability concerns to development and operations. Although, I’m focusing very much on what the developer concerns are in this space. You can find me at OrionX.net, which is a consulting firm that I work for. Also, on mastodon.social and DevSusOps@hachyderm.io. I just want to talk about, why does sustainability matter? Why is this hard? Getting the terminology and mental models right is actually much more difficult than you might expect. I’m going to talk about cloud provider similarities and differences. What are the measurements? Then, the interesting part at the end, things developers need to build and what we need to get so that we can build them.

Why Does Sustainability Matter?

Let’s start with, why does sustainability matter? Fundamentally, we want to leave the world habitable for future generations. There’s also regulatory compliance coming down through board level from governmental regulations. There’s market transition risks, as the markets move to electric cars from gas powered cars. There’s physical risks to business assets, like flooding, and high temperatures. Green market positioning for your products. There’s employee enthusiasm. People may not want to work for you if you don’t have a green perception in the market. There’s potentially reduced cost now or in the future. It turns out, renewable energy is now the cheapest form of energy. That’s driving quite a lot of the work here. Then, some companies, it’s your social license to operate. There’s things we can do now. There’s development. You can optimize your code, just make things more efficient so you’re using less resources to get your job done. Operations, you want to deploy this code with high utilization, more automation. Just be careful about cleaning everything up as well.

Measuring Carbon

I’m going to focus mostly on measuring carbon, and why is this hard. Because, fundamentally, we’re just multiplying two numbers together. We need to figure out how much energy some code uses, and we have to figure out the carbon content of the machine it’s running on. That’s pretty much it. It turns out that there’s a lot more to this. What I just mentioned is called scope 2, which is the energy use. What actually matters also is scope 3, which is the energy used, and the carbon emissions of everything it took to make that computer, and deliver it, and put it in a building, and the cost of creating the building, and all of that stuff. That’s the supply chain. Then there’s also if you recycle it at the end, then, what’s the energy used to recycle things? This is from a Microsoft document that gives a good summary of what’s going on, https://learn.microsoft.com/en-us/industry/sustainability/api-calculation-method. You can see from Microsoft’s point of view, the scope 3 is their upstream supply chain. The operations of their services is scope 1 and 2. Scope 3 is also the downstream disposition or recycling.

Then, if you’re a cloud customer, though, whatever the cloud provider reports to you is reported by you as your scope 3. As the carbon content of energy tends to zero, as we get more renewables, the scope 3 is dominating, because the shipping and manufacturing is not coming down as fast as the carbon content of energy. It’s taking much longer to decarbonize silicon production, and shipping, for example. It will get there in the end, but probably more like 2040, 2050, rather than 2025 to 2030, which is what we’re looking at for carbon. However, in Europe and the U.S., current energy for the cloud providers is almost completely clean. They have a very high percentage of renewable energy. It’s really only in Asia, which is where the silicon is manufactured, that the electricity is particularly high carbon. If you’re trying to build APIs with your developers for supply chain automation and pulling in all the data from your suppliers, this Pathfinder Framework is a good place to look.

Cloud Provider Scope 3 Differences, and Methodology

There are some differences across the different cloud providers for scope 3. AWS doesn’t provide any scope 3 information yet. I wrote a blog. There was a Computer Weekly story. There was a LinkedIn page with a response to that story. Fundamentally, they are working on it but they’re taking their time. If you really need scope 3 from AWS, and you’re a big enough customer, you can escalate, and they are providing estimates to people under NDA. That’s true of pretty much anything you want custom from AWS. If you’re big enough and you ask the right people, they will usually give you things under NDA, information that isn’t publicly available. What they’re working on is the APIs and all the infrastructure needed to provide more information to everybody, down to the individual. However, Microsoft has had this for a few years, they have a detailed paper and API. It’s a good paper. They include recycling, but they don’t include transport and buildings yet. Numbers are going to go up a bit when those are added. Google also has detailed scope 3, which I think includes transport and buildings, but doesn’t seem to include end of life recycling. They claim that that’s estimated to be immaterial. There’s going to be differences from provider to provider, but hopefully not too much. Most of the time, what we really care about is the silicon and the steel in the machine itself. Then, probably a bit of the building it’s in.

Then we look at the methodology. This is another diagram from Microsoft. The question is, how do you allocate the building and all of these other scope 3 things to the workload? What they do, as far as I can tell, is they take the proportion of the energy used by the different workloads or customers in the building, and then they apportion all the scope 3 proportionately to the energy use. Ideally, you’d want to say this machine uses this much rack, and it’s using this much space in the building. That’s a little too complicated. They just do a proportional allocation. You tend to find these kinds of simplifying assumptions quite a lot when you’re looking at carbon calculations. This isn’t bad, it’s just you have to dig in and find out, because it may be that another cloud provider is using a different methodology to allocate scope 3 to workloads. That’s one of the problems I’m going to highlight. It’s just there are differences here.
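The proportional allocation described above can be sketched in a few lines. This is only an illustration of the idea as the talk describes it, not Microsoft's actual implementation; the function name, the customer names, and all the numbers are hypothetical.

```python
def allocate_scope3(total_scope3_kg: float,
                    energy_kwh_by_customer: dict[str, float]) -> dict[str, float]:
    """Split a building's scope 3 total across customers in proportion to energy use."""
    total_energy = sum(energy_kwh_by_customer.values())
    if total_energy <= 0:
        raise ValueError("total energy must be positive")
    return {
        name: total_scope3_kg * kwh / total_energy
        for name, kwh in energy_kwh_by_customer.items()
    }


# Hypothetical inputs: 1000 kg of scope 3 emissions shared by three customers.
shares = allocate_scope3(1000.0, {"a": 50.0, "b": 150.0, "c": 300.0})
print(shares)
```

A different provider could allocate by rack space or floor area instead, which is why the same workload can get a different scope 3 number depending on the methodology.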

There’s some interesting information here from one of the AWS sustainability leads, Cyril. This is across Europe. What you can see here, if you just look at the columns for scope 1, 2, and 3 here. Scope 1 he’s saying is the same. They have the same data center in different countries around Europe. This isn’t cloud, this is just a data center. Scope 3 he’s estimating is the same. Scope 1’s the same, which is mostly the fuel used for backup generators. Scope 2 is all over the place. Like Poland has obviously a lot of fossil fuel in its energy, and it really dominates. If you’re in Sweden or Norway, then your footprint is dominated by scope 3. This is just looking at it from the basis of grid mix. What the cloud providers do is they buy additional renewable energy to offset that. We’re developers, and scope 3 is about hardware, it’s an Ops problem. Let’s just concentrate on the energy, on the carbon content, because that’s something that we can control by using our machines more efficiently. It’s a number that we might want to manipulate.

Energy

Let’s look at energy. How much energy does a specific line of code use? There’s a few things out there. There’s JoularJX, which uses the JVM instrumentation to try and come up with some estimates for energy monitoring, by the line of code almost. There’s also, how much energy does a transaction use? There was a talk about the Green Software Foundation and SCI, which is, if you can figure out the energy you’re using and the transactions you’re doing with it in a workload, now you can start normalizing energy per customer, per transaction, per those kinds of things. That’s good, but you still need to know the underlying energy in order to calculate SCI. What I’m focusing on is the underlying information you would need to go and do SCI at all, from a cloud provider. How much energy does a workload use, would actually be interesting. Maybe you need to get down to an individual container. What does that use? How much energy does a specific cloud instance use? This one I’m running on right now, how much is that currently using? Unfortunately, cloud providers, none of them tell you any of those things in enough detail for you to answer that question. It’s a bit of a problem.
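For reference, the Green Software Foundation's SCI (Software Carbon Intensity) score is defined as `((E * I) + M) per R`: energy times carbon intensity, plus embodied emissions, divided by a functional unit such as transactions. A minimal sketch follows, with hypothetical input values; the hard part the talk is pointing at is obtaining `E` from a cloud provider in the first place.

```python
def sci(energy_kwh: float,
        intensity_g_per_kwh: float,
        embodied_g: float,
        functional_units: float) -> float:
    """SCI = ((E * I) + M) per R, in grams of CO2e per functional unit."""
    if functional_units <= 0:
        raise ValueError("functional_units must be positive")
    return (energy_kwh * intensity_g_per_kwh + embodied_g) / functional_units


# Hypothetical workload: 2 kWh at 300 g/kWh, 400 g embodied share, 1000 transactions.
score = sci(energy_kwh=2.0, intensity_g_per_kwh=300.0,
            embodied_g=400.0, functional_units=1000.0)
print(f"{score:.2f} gCO2e per transaction")
```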

How could you measure it directly? If you have a physical access to a machine, you can put a monitor on it. This is a little box that you can get for 20 bucks that you can run your laptop or your desktop machine on and you can go and try and measure energy usage when you run different workloads and see if you can figure out how much energy they’re using. You’ll find, it’s very unrepeatable and unreproducible. If you’re trying to do this data for real and use the data, then I think you should understand how to do statistical analysis to make sure you’re not reporting noise. That’s what Gage R&R does. It basically extracts how much repeatability, reproducibility you really have in your data. Energy is a pain, we’re just going to have an average hourly rate, which we’ll get from somewhere.

Carbon Content

Let’s try and figure out now where the carbon content comes from. That depends. What’s the grid mix for energy generation in that location? Where did you get the measures of the mix? When did you get them? It turns out, the grid mix isn’t really available accurately until a month later when you get your bill from the energy supplier, so maybe a month or two. Can you get hourly? GCP has an hourly 24 by 7 measure that they use for generating their estimates, so they’re calculating based on an hourly rate, but they don’t tell you what the actual hourly rate is, as far as I can tell. Unfortunately, these numbers also change after you use them for up to a year, for reasons that I’ll get into later. The grid mix isn’t just a thing you can depend on. Then, what really matters for the cloud is how much private provider energy was used. They don’t actually tell you that. There’s something called a bundled REC, you want to know how much that was used. You have to know how much unbundled REC was used. These are all things that matter. The private energy is the entity they contract with directly. Then the RECs are things that are traded.

Grid mix, you get it from the bill, a month or so in arrears, along with power purchase agreements. This is the Google wind farm, the Amazon solar farm, the battery farms that go with them, those kinds of things that are basically built under contract by the cloud providers. There’s a lot of that happening. Then renewable energy credits are more tradable things. We also care about the power usage efficiency. It depends where it’s measured, but that’s the energy coming into the building. How much of it actually gets all the way to the computer and is running that CPU? The overhead is typically somewhere between 10% and 100%: in some parts of the world, maybe twice as much energy is coming into the building as is actually being used for your computer. For the cloud providers, it’s typically in the 10% to 20% range of overhead. What we have then is, to get the carbon, we need to take the power mix. We need to know the power usage efficiency. We need to know how much capacity of something we’re using, and we need to know how much emissions factor is per that capacity.
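One way to read the four inputs listed above is as a simple product. The sketch below is an interpretation, not a formula published by any provider: it treats "capacity" as instance hours, the "emissions factor per capacity" as energy per instance hour, and the "power mix" as a carbon intensity in grams per kWh. All names and values are hypothetical.

```python
def workload_carbon_g(instance_hours: float,
                      kwh_per_instance_hour: float,
                      pue: float,
                      mix_intensity_g_per_kwh: float) -> float:
    """Estimate operational carbon for a workload, in grams of CO2e."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    it_energy_kwh = instance_hours * kwh_per_instance_hour  # energy at the server
    facility_energy_kwh = it_energy_kwh * pue               # add building overhead
    return facility_energy_kwh * mix_intensity_g_per_kwh


# Hypothetical: 100 instance hours at 0.05 kWh each, PUE 1.2, 400 g/kWh.
estimate = workload_carbon_g(100.0, 0.05, 1.2, 400.0)
print(f"about {estimate:.0f} g CO2e")
```

Every one of these inputs is uncertain in practice, which is the point of the sections that follow: the mix changes over time, PUE depends on where it is measured, and per-instance energy is not published.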

There’s two completely different ways of reporting this, location and market based. Location based uses this utility grid mix, 24 by 7, this is what Google is using. They’re saying every hour how much energy is coming into the building, and what was the grid mix at that time. They’re not taking into account the fact that Google has a bunch of wind farms and solar farms out there. Market based accounts for these dedicated power purchases and the renewable energy credits that you bought in that same market. Market based means that the electricity is connected. You don’t account for a power station in another country that’s not connected to you. You can’t use that. You have to use the ones that are connected in the grid to the same company so the electricity could flow. Not all countries are fully connected. In the U.S., there’s multiple grids and there are interconnects. The interconnects don’t have enough capacity to power everything across them.

Why It Matters

Why does it matter? AWS and Azure use regional market-based data. Google, originally, they have this claim that since 2017, they’ve been 100% renewable. That’s global market. Effectively, generation in Europe and U.S. was being counted against Singapore, which is a bit. It was a good idea in 2017. It’s not such a great idea now. The current data that Google has is location based. You can’t really compare the data between location and regionally based, but it’s probably better for tuning work to use the location-based data if you can get it. Really, what we want is every cloud provider to give us both. Here’s that formula, the power mix, what are the problems? The utility bill that tells you your power mix is going to be a month or more depending on where in the world you are. It takes longer the further out into the developing world you go. Power purchase agreements, these are contracts to build and consume power generation. Amazon has over 20 gigawatts of power purchase agreements, I think solar, wind, and increasingly battery power so that they can use power during the day from solar, and run it overnight when the wind isn’t blowing, and things like that.

The cloud providers are now amongst the largest energy producers in the world. They have energy on a similar scale to a lot of utility providers that you would normally buy energy from. In Europe, renewable energy credits are called guarantees of origin, but they’re slightly different. Renewable energy credits are purchases of renewable capacity on the open market from existing generators. You can only claim that energy once, but you can claim it later on. You don’t have to claim it exactly at the time it was generated, which causes other problems I’ll get to later. RECs can top up on top of PPAs. What you typically do as a cloud provider, you buy lots of PPAs, and then a little bit extra here and there, so you buy some RECs. Maybe you’ve contracted for a wind farm, but it hasn’t finished being built yet, so you might buy some RECs to cover for that. You’ve got the commitment. You know you’re going to get it, but you’re waiting for them to finish building it, those kinds of things. Or just to get your percentage to where you want it to be. What’s good is that you buy as many PPAs as possible, and then you buy some RECs to top that up.

Guarantee of origin, renewable energy credit, fairly similar, a little bit different in how they’re regulated and traded. A local market REC in the same grid, that’s good. When you go cross-border or non-local market, that’s energy that can’t flow to you. You’re assuming that the carbon you’re saving in one part of the world is offsetting carbon being emitted somewhere else. You’re connecting the two places over the carbon cycle, rather than over the electricity grid. This is also often used as a cheap carbon offset and greenwashing. This has got RECs a bad name. It’s really the cross-border and non-local market RECs, which people are buying too cheaply, that have got a bad name. Local market RECs actually make much more sense and are actually reasonably valuable. There’s some links down below if you want to dig into this a bit more.

You get grid mix. It changes every month. There’s hourly data for 24/7 starting to appear. Google and Microsoft are publicly working towards 24/7. AWS hasn’t said anything about this. The cloudcarbonfootprint.org open source tool doesn’t include these PPAs or RECs. It just works off the grid mix, which is ok in some places, but it’s increasingly becoming inaccurate. Then there’s an interesting startup called FlexiDAO working in the energy trading space.

Power Mix Problems and Misconceptions

Here’s some problems and misconceptions. I try to illustrate this a bit. The problem is, let’s look at the bottom two things: you’ve got fossil fuel energy and renewable energy. That is the power mix reported as the grid mix used by the Cloud Carbon Footprint. Then on top of that, you’ve got some renewable energy purchase agreements by dedicated capacity and a few credits. The power mix used by a cloud provider in a more emerging market space is some mixture of these things. This is where the energy from the PPAs is never more than the cloud provider needs. Here, you’ve got a wind farm, you’re going to use every bit that that wind farm can possibly make all the time, there’s none left over. That’s what I mean by an emerging market. As we develop this, one of the problems here is that the RECs on top, the blue area, this is where it gets complicated. The more RECs you have, it makes the grid mix worse for everyone else. RECs take away from the grid mix. The other problem is RECs are traded for up to a year. You generate energy 1 month for up to 12 months. Twelve months later you could say, no, that was not green energy going into the grid mix, that is green energy I’m going to sell to somebody because they wanted to top up what they were doing a year ago. It may sound a bit bogus, but this is the rules. This is how it works. What that fundamentally means is the grid mix is not a stable metric for 12 months, and at various points in time it can decrease and look worse over time, just to get that out there. There’s explanation in this URL, https://www.ecocostsvalue.com/lca/gos-and-recs-in-lca/. It basically goes into this. That’s in emerging markets. What I mean by that is you’re still at a deficit, you’re still depending very heavily on the grid.

Then, if we get into a more mature market where the cloud provider is generating so much renewable energy that they have excess left over, what are they going to do with that excess energy that they can’t actually use because they’re generating more during the day than they can consume? They sell it as credits. Some of the renewable energy credits are now being put back into the market, and sold from cloud providers to anyone else that wants them. Then, any credits that aren’t sold, that excess renewable energy goes into the grid mix and it makes the grid mix look better than it was before. Now it’s not clear what’s going on, these boundaries are getting very blurry. It gets pretty complicated to understand. This is why this is difficult.

Then there’s this thing, if you follow this URL, the top one and that’s shown below, we get what I’m calling the Norway problem. In Norway, it is 2% fossil electricity, it’s 98% green. Great, let’s move our data center to Norway and get green energy. Unfortunately, Norway sells 86% of the RECs for its green energy to other countries in Europe. The effect of that is if you actually set up in Norway and run your data center or your company, for that 86% that’s sold to Europe, you actually get the residual mix from Europe back into that to compensate, and that ends up at about 50%. The net of this is even though almost all of the energy in Norway is hydroelectric, if you operate in Norway, you’ll have to report about 50% renewable and 50% non-renewable unless you go out and buy those RECs from the Norwegian energy companies that basically say, yes, you are really buying renewable energy. This may sound stupid, whatever, but this is the rule. This is fundamentally because selling these across borders is allowed. This is considered a bad practice nowadays, but this is what happens. This is complicated stuff.
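The arithmetic behind the Norway problem can be sketched as follows. The 98% and 86% figures come from the talk; the renewable share of the European residual mix is not given, so the 43% used here is a hypothetical value chosen only because it reproduces the "about 50%" result. The function name is also made up for illustration.

```python
def reported_renewable_share(local_renewable: float,
                             recs_sold_fraction: float,
                             residual_mix_renewable: float) -> float:
    """Renewable share a company must report if it buys no RECs itself."""
    kept = local_renewable * (1.0 - recs_sold_fraction)  # green energy still claimable locally
    replaced = local_renewable * recs_sold_fraction      # swapped for the residual mix
    return kept + replaced * residual_mix_renewable


# 98% green generation, 86% of RECs sold abroad (from the talk);
# 43% renewable residual mix is an assumption, not a published figure.
share = reported_renewable_share(0.98, 0.86, 0.43)
print(f"reported renewable share: {share:.1%}")
```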

Let’s look at power usage efficiency. It’s not that well standardized, but you can compare across similar data center designs. AWS doesn’t publish PUE. People tend to quickly point at this blog by James Hamilton that explains why it’s complicated. Azure and Google do publish PUE. They have numbers for it. Azure’s numbers are about 1.2, Google’s are about 1.1. I wouldn’t compare them across that much, because the methodology is all different. At least you can see that they are a lot better than most public data centers, which are 1.5 to 2. We’re trying to figure out the capacity that we’re using. We’ve got dedicated, capacity is relatively easy to account for, but then you’ve got the shared instances, network equipment, it’s pretty difficult. This is hard for the cloud providers to report to you. If you are a multi-tenant SaaS provider, you have the same problem, you’ve got to figure out the capacity you use in your business. How do you provide common information to the people that consume your product? Everyone’s got this problem. This allocation problem is a big, complicated area. There’s a lot of interesting algorithms needed here. This is a developer problem. Some of you maybe end up working on it. Then the other thing we need to know is how much power an instance type, storage class, or service uses. This depends on utilization overheads, and all of this data isn’t available.

Takeaways

What can you do today? Fundamentally, if you use less stuff, it makes the biggest difference. It reduces your energy use, but also it reduces your footprint in terms of scope 3. The best thing you can do is just use fewer computers, and use them for less time, just be efficient about it. High utilization and more efficient systems is just going to be the best thing you can do. If you’re looking at cloud providers, all the cloud providers are really pretty similar. The numbers, different people might argue that one is better than another. In fact, they are all pretty much in the same place, there isn’t that much difference. They’re all buying enough renewables to cover most of their energy usage in U.S. and Europe. They all have the same problems in Asia. Scope 3 is dominated by the same chip suppliers for everybody. They’re buying the same SSDs, the same Intel chips. It doesn’t really make much difference in terms of the carbon footprint of a machine in terms of scope 3. There are probably some differences in terms of efficiency of shipping and buildings and things. The big dominant things that go up to make a machine, it’s not that different.

Use any cloud provider. There will be detailed differences, but it’s not going to be that different. Try to minimize use of Asia regions for the next few years. Most of them have a roughly two-to-three-year timeframe to try and get Asia to decarbonize and to get more renewable energy there. In two or three years’ time, we should be pretty renewable everywhere in the world. Fundamentally, they are all much better than a typical enterprise data center. There are papers from all the cloud providers claiming roughly 80% to 90% lower carbon compared to a typical enterprise data center. This illustrates that. It’s a Google figure, it shows you in the U.S. and Europe, there are some pretty good green all-day numbers. If you look at Singapore, it’s 4% renewable, and Taiwan is 17%. That’s what I’m talking about with Asia being a problem. That is the fundamental problem. There just isn’t renewable energy, and there isn’t the ability to put renewable energy in Singapore. Everyone’s got that problem. This isn’t a Google problem. This is an everyone problem, and everyone’s working on it in different ways.

Measuring – Compare APIs and Schemas Across AWS, Azure, and GCP

Let’s look at the APIs and schemas, I said, developer talk. Let’s go and look at what data you can actually get across AWS, Azure, and GCP. This is the AWS data. It was released about a year ago. Customer Carbon Footprint tool is part of the billing console in an AWS account, and you get a monthly summary. The location, you get it for the three different continents, you don’t get it down to the region. You get accounts, EC2 per account. Account by account, you can get how much was EC2, how much was S3, and everything else is in others. It’s pretty low specificity. Then, you get down to 0.1 of a metric ton of CO2e for resolution. A lot of you, when you run these reports in Europe will just get zero. That’s actually expected, pretty much. You’ve got less than a 10th of a metric ton of CO2e per month, because you’re just not big enough to make it move off of that. This is scope 1 and market-based scope 2. This doesn’t include the scope 3, and there’s some criticism around that, but they’re working on it. It’ll get there eventually.

Azure actually have an OData API. There’s a tool. Let’s look at it through the API. There’s a bunch of queries there, but, fundamentally, what you get out of this query is, again, a monthly summary. The day is always going to be one, when you get a date. It’s going to tell you which month it is. This time, they have regions as well as countries. You have services as well as accounts. Every individual service, you can say, what is this service, and it will tell you the carbon for that service. The resolution is a kilogram instead of 100 kilograms. It’s 100 times better resolution than AWS from looking at the summaries of the data and figuring this out. They also include scope 3. It’s a market-based scope 2. Reasonable API. OData is a fun thing, you can write interesting queries with.

The way Google works is you export your data into BigQuery, and then use the BigQuery API on it. This is the export schema. Again, monthly summary. This time, they go down below regions to each zone. You get data per zone. You have projects on Google. If you wrap a workload into a project, you can get project specific data, at least at the monthly level. That’s a nice feature. I think AWS never had the concept of a project, and just very recently came out with something that is a bit like a project. I’m hoping that more AWS support starts to do projects. Resolution, a 10th of a kilogram. That’s like 10 times smaller than the Microsoft data that I’ve seen. They do scope 1, location-based scope 2 with placeholders in here for market based. They’re stating they’re going to have both, and scope 3. This is in some decent schema, and then some good examples of what the numbers might look like.
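To see why the reporting resolution matters, here is a small sketch that applies the three resolutions mentioned above (0.1 metric ton for AWS, 1 kg for Azure, 0.1 kg for GCP) to the same monthly footprint. The footprint value is hypothetical, and rounding to the nearest step is an assumption; the providers may round differently.

```python
# Reporting resolution per provider in grams of CO2e, as described in the talk.
RESOLUTION_G = {"AWS": 100_000, "Azure": 1_000, "GCP": 100}


def reported_grams(actual_grams: int, resolution_g: int) -> int:
    """Round to the nearest multiple of the resolution (rounding rule is assumed)."""
    return (actual_grams + resolution_g // 2) // resolution_g * resolution_g


actual = 37_460  # hypothetical monthly footprint: 37.46 kg CO2e
report = {provider: reported_grams(actual, res) for provider, res in RESOLUTION_G.items()}
print(report)  # {'AWS': 0, 'Azure': 37000, 'GCP': 37500}
```

A workload of this size shows up as zero in the coarsest report, which matches the observation that many smaller accounts just see zero.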

Workload Carbon Footprint Standard (Proposal)

Those are the measurements we can get, but what do we actually want? What I want is real-time carbon metrics for optimization. I want it to be just another metric like CPU utilization. I want it to be reported by the same tools we already use. I want whatever tool you’re using to monitor your machines to report carbon as well. This is what I’d like to see. This is me proposing something I’ve made up. This is a proposal. It’s not really been vetted by anybody. I’m launching that here. What I want to see is the same data for all cloud providers, but also data center automation tools, VMware, whatever, things like that. I’d like to see resolution, not monthly data, I want minutes, seconds, maybe. I want it to come out in CloudWatch, once a minute. I want to have a carbon number in CloudWatch as well as the CPU utilization. Country, region, and zone, I think that makes sense. I want to go down to containers, file systems, basically anything CloudWatch measures. Anything I can get a metric from that makes sense, I want to be able to get a carbon number for it. Actually, I don’t really just want carbon, what I actually want is the energy usage, pretty high-resolution energy usage. I think millijoules or milliwatt seconds is probably good, because some of these things we might want to measure might be battery powered devices at the edge, which use very little energy. If you have millions of them around the world, they add up, so you want to understand that.

I want to have energy and carbon, because the carbon is going to be an estimate. I want location and market-based scope. What I mean by this is energy is final. We could figure out the energy, we’re not going to revise that. Carbon would have to be an initial estimate, because it’s going to be a guess of what the next hour or what that hour looks like. The data is there to do an estimate, but the numbers are going to move. What I’d want is to have an initial estimate, just so the numbers are there, that’s roughly in the right ballpark. Perfectly good enough for tools and optimization algorithms, things like that. Then the audit report quality that the cloud providers currently give us takes a couple of months to produce. A couple of months is fine. Then, as I said, the RECs settle for a grid, so you really want to have another update after 12 months to say, this is the final number that we’re never going to change again, potentially, that might be useful for some cases. If we compare across all these, this is what I’d really like to have to develop some cool tools on this in the first column, and then AWS, Azure, and GCP in the other columns, just to get it all onto one table.
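A record for this proposal might look something like the sketch below: energy is recorded once and never revised, while the carbon numbers move through an initial estimate, an audited value, and a final value after RECs settle. None of this is an existing API; the class, field names, and sample values are all hypothetical.

```python
from dataclasses import dataclass, replace
from enum import IntEnum


class CarbonStage(IntEnum):
    INITIAL_ESTIMATE = 1  # available in near real time
    AUDITED = 2           # after a couple of months
    FINAL = 3             # after RECs settle, about 12 months


@dataclass(frozen=True)
class CarbonSample:
    resource_id: str
    zone: str
    minute_utc: str
    energy_millijoules: int   # final once measured
    location_based_g: float   # carbon estimates, subject to revision
    market_based_g: float
    stage: CarbonStage = CarbonStage.INITIAL_ESTIMATE

    def revised(self, location_based_g: float, market_based_g: float,
                stage: CarbonStage) -> "CarbonSample":
        """Return a copy with updated carbon; energy is deliberately untouched."""
        if stage <= self.stage:
            raise ValueError("revisions must move to a later stage")
        return replace(self, location_based_g=location_based_g,
                       market_based_g=market_based_g, stage=stage)


first = CarbonSample("container-42", "zone-a", "2023-08-22T10:15Z",
                     1_800_000, 0.004, 0.001)
audited = first.revised(0.005, 0.001, CarbonStage.AUDITED)
print(audited.stage.name, audited.energy_millijoules)
```

Keeping energy immutable and carbon versioned lets optimization tools act on the early estimate while audit reports wait for the later stages.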

What Tools Could We Build?

What could you build with this? I think the first thing is there’s a lot of cost optimization tooling out there, which looks at the utilization of systems, it looks at the billing data, and it combines them together. Cloud Carbon Footprint is probably the most common one that’s out there. This should be in all the tooling. Any tool that does cost optimization or performance optimization should be able to optimize for carbon as a metric, as well as latency or utilization, or dollars, or euros, or whatever. Then, SaaS providers, we need attribution and allocation tools. This is a big, complicated problem. We’re going to be building lots of tools here. There should be tools off-the-shelf that can plug in onto any provider and say, here’s how you do allocation. Then I think it’d be interesting to have architecture planning tools. Like, what’s the carbon footprint difference if you’re trying to decide, should you run your own Kafka on EC2? Should you run AWS MSK, which is a Kafka as a service, or should you go to Confluent, or Redpanda, which is a Kafka compatible clone? Or AWS Kinesis, which isn’t the same as Kafka, but it might be lower carbon? It’s got a shared control plane, so it possibly is, but it depends. The question is, could we get all of these different things to either publish or have a way to calculate what that comparison would look like? I really open this up to ideas. I’m really looking for input. What would you want to build if you could get this fine-grained data and do something interesting with it?

Measuring Climate Impact and Risk

Finally, there’s climate impact and risk. The OS-Climate.org, I was involved in helping get them started while I was at AWS. A lot of open source software here. If you’re trying to do transition analysis, there’s economic modeling for business transitions. Then the other thing they look at is physical risk and resilience, things like trying to understand where your customers are. Like if your customers can’t get to you, if you’re a retail chain, and there’s floods, what happens? People can’t get to you, you’ve shut down. What’s the impact to your business of increasing probability of bad weather effects? This is an area. It’s all open source. If you want to go contribute to something, go take a look at it. Lots of interesting data science, climate science, and the transition analysis models are pretty sophisticated. Some cool software here.

Things Devs Need to Build

These are the things I think developers need to build: energy usage instrumentation for applications; attribution and allocation algorithms; data lakes to collect energy and carbon measurements; instrumentation at the edge, IoT, mobile, whatever; energy usage dashboards and reports; supply chain carbon interchange protocols; energy-to-carbon models; and climate impact and risk models.
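As a rough illustration of what an attribution and allocation algorithm combined with an energy-to-carbon model might look like, here is a minimal sketch. It assumes a shared host whose energy use has been measured, splits that energy across tenants in proportion to CPU time, and multiplies by a grid carbon intensity. The `TenantUsage` type, the function names, the tenant names, and all of the numbers are hypothetical; real allocation would likely need to account for memory, storage, network, and idle capacity as well.

```python
from dataclasses import dataclass


@dataclass
class TenantUsage:
    tenant: str
    cpu_seconds: float  # CPU time consumed on the shared host in the period


def allocate_energy_kwh(host_energy_kwh: float, usages: list[TenantUsage]) -> dict[str, float]:
    """Split a host's measured energy across tenants in proportion to CPU time."""
    total_cpu = sum(u.cpu_seconds for u in usages)
    if total_cpu == 0:
        # Nothing ran: split the idle energy evenly instead of dividing by zero.
        share = host_energy_kwh / len(usages) if usages else 0.0
        return {u.tenant: share for u in usages}
    return {u.tenant: host_energy_kwh * u.cpu_seconds / total_cpu for u in usages}


def energy_to_carbon_g(energy_kwh: float, grid_intensity_g_per_kwh: float) -> float:
    """Convert energy to grams of CO2e using an assumed grid carbon intensity."""
    return energy_kwh * grid_intensity_g_per_kwh


# Hypothetical inputs: 2 kWh measured on the host, 400 gCO2e/kWh grid intensity.
usages = [TenantUsage("checkout", 600.0), TenantUsage("search", 200.0)]
allocation = allocate_energy_kwh(2.0, usages)
carbon = {tenant: energy_to_carbon_g(kwh, 400.0) for tenant, kwh in allocation.items()}
print(carbon)
```

Proportional-to-CPU allocation is only one possible policy; the point of the sketch is that the allocation rule and the energy-to-carbon conversion are separate steps that can be swapped independently.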


MMS • RSS


MongoDB (NASDAQ:MDB) is a document-based database provider. The global database landscape is currently dominated by legacy relational databases such as Oracle (ORCL), SQL Server, and DB2, which were designed decades ago. Document-based databases belong to the NoSQL family, and I believe this newer database structure is better suited to the demands of the emerging AI and big data era. It offers greater flexibility and scalability at a much lower cost. I envision MongoDB gaining a more significant foothold in the expansive database management market, with significant growth potential ahead.

Relational Database versus Document-Based Database

To understand MongoDB’s business, it is essential to grasp the distinctions between traditional relational databases and document-based databases.

All these existing relational databases were designed decades ago, and they utilize rigid rows and columns to store data. In these databases, every record must adhere to the same structure or fields. For instance, suppose you have one table that records basic employee information, such as first name, last name, gender, age, and ID. If you wish to include information about each employee’s hobbies, you need to create another table to accommodate multiple hobbies for each employee, linking the two tables via employee ID.

In contrast, a document-based database offers several advantages over a relational database:

Flexibility: Document-based databases do not rely on rows and columns, so they can manage both structured and unstructured data. Using the same employee example, a document-based database can store all employee information in a single document, encompassing basic details and multiple hobbies. Records do not need to adhere to a rigid structure; instead, hobby information can be stored within a single field in each employee’s document. This simplifies database management for administrators and facilitates future record revisions. Moreover, since there are no connections between different documents, making changes to any document is straightforward, offering superior flexibility for enterprises.

Scalability: Expanding a relational database necessitates adding more database hardware and purchasing additional software licenses, resulting in significant costs. Document-based databases, on the other hand, store data in various documents, allowing for distribution across multiple servers or server clusters. Document-based databases offer cost-effective scalability.

Massive Data Processing: When applications require processing massive datasets with varying structures, document-based databases prove more suitable. They can store both structured and unstructured data. For instance, a large machine learning algorithm may require vast datasets from diverse sources, including server logs, website visit records, and social media data, all of which may have dissimilar data structures. A document-based database can support this type of data processing.
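To make the employee example concrete, the following sketch contrasts the two layouts. It is only an illustration under stated assumptions: the relational side uses Python’s built-in `sqlite3` module as a stand-in for any relational database, and the document side uses plain dictionaries shaped like JSON documents rather than a live MongoDB connection. The table names, field names, and sample values are hypothetical.

```python
import json
import sqlite3

# Relational layout: hobbies live in a second table linked by employee ID.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE employee (
        id INTEGER PRIMARY KEY,
        first_name TEXT, last_name TEXT, gender TEXT, age INTEGER
    );
    CREATE TABLE hobby (
        employee_id INTEGER REFERENCES employee(id),
        name TEXT
    );
    """
)
conn.execute("INSERT INTO employee VALUES (1, 'Ada', 'Lopez', 'F', 34)")
conn.executemany("INSERT INTO hobby VALUES (?, ?)", [(1, "chess"), (1, "cycling")])

# Reading an employee's hobbies requires a join across both tables.
rows = conn.execute(
    "SELECT h.name FROM employee e JOIN hobby h ON h.employee_id = e.id "
    "WHERE e.id = ? ORDER BY h.name",
    (1,),
).fetchall()
relational_hobbies = [name for (name,) in rows]

# Document layout: one self-contained record with hobbies embedded as an array.
employee_doc = {
    "_id": 1,
    "first_name": "Ada",
    "last_name": "Lopez",
    "gender": "F",
    "age": 34,
    "hobbies": ["chess", "cycling"],
}
# Another document in the same collection may carry different fields.
contractor_doc = {"_id": 2, "first_name": "Sam", "agency": "Acme Staffing"}

document_hobbies = employee_doc["hobbies"]
print(json.dumps(employee_doc, indent=2))
```

Both layouts return the same hobbies; the difference is that the relational version needs a second table and a join, while the document version keeps everything about the employee in one record.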

In summary, MongoDB’s document-based database is better suited for big data processing, offering greater flexibility and scalability compared to traditional relational database technologies.

Market Growth Potential

According to Gartner’s report published on August 2, 2023, the global database market is projected to grow at a compound annual growth rate of 16.8% from 2022 to 2027, reaching a total value of $203.6 billion by 2027. Currently, the NoSQL database sector holds a relatively small market share, with legacy relational databases dominating the market.
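The compound growth arithmetic behind such a projection can be sketched as follows. This assumes five compounding periods between 2022 and 2027; the 2022 base it derives (roughly $94 billion) is only what the two quoted figures imply together, not a number taken from the report. The function names are illustrative.

```python
def implied_base_value(end_value: float, cagr: float, years: int) -> float:
    """Back out the starting value implied by an end value and a CAGR."""
    return end_value / (1 + cagr) ** years


def project(base_value: float, cagr: float, years: int) -> float:
    """Compound a starting value forward at a constant annual growth rate."""
    return base_value * (1 + cagr) ** years


# Figures quoted above: 16.8% CAGR, $203.6 billion in 2027.
base_2022 = implied_base_value(203.6, 0.168, 5)
print(f"Implied 2022 market size: ${base_2022:.1f} billion")

# Year-by-year path under the constant-growth assumption.
for offset in range(6):
    print(2022 + offset, round(project(base_2022, 0.168, offset), 1))
```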

As MongoDB’s management indicated, migrating from a traditional database to a newer database structure is a time-consuming process, as it requires rewriting application code and then migrating the database. Consequently, the majority of NoSQL database growth is currently driven by new workloads and applications. MongoDB has recently introduced its Relational Migrator tool, which enables enterprises to migrate data from legacy vendors to MongoDB. The tool can also help generate sample code for data queries, and it is expected to speed up the migration of legacy systems. Once the migration is complete and enterprises have moved to the newer database structure, they are likely to see cost savings and more modern data structures.

MongoDB 2023 Investor Day

If MongoDB can increasingly replace traditional databases, it stands to benefit from both the growth in new workloads and the substitution of existing workloads.

Low-Code Speeds up Application Creation

The growth of MongoDB is closely linked to the number of new applications developed, as each application necessitates a database. Therefore, as more applications are created, there is a higher demand for MongoDB’s databases. The emergence of generative AI technology is making low-code development a reality, as AI can automatically generate substantial portions of code for engineers.

According to Microsoft’s forecast, the next five years are expected to witness the creation of 500 million more applications, surpassing the total number built in the past 40 years. Moreover, modern applications increasingly require newer database structures like MongoDB’s, particularly as these applications often incorporate AI functionality and process unstructured datasets. MongoDB’s document-based databases let engineers write code in a manner that aligns with how data is stored in the database. Consequently, I believe MongoDB stands to gain significantly from the surge in application development anticipated over the next five years.

Growth Driven by AI and Big Data

I firmly believe that the most critical factor for AI and machine learning is the availability of high-quality datasets, especially when it comes to large algorithmic machine learning models that require vast volumes of data. Additionally, the foundation of data analytics and big data lies in the utilization of unique, unstructured data. Consequently, I am highly optimistic about MongoDB’s growth potential, driven by the ongoing trends in AI and big data.

Furthermore, I see AI and Big Data adoption as catalysts for accelerating cloud migration and application modernization. To engage in AI and machine learning, enterprises are compelled to shift their workloads to the cloud. Simultaneously, they must redesign their existing applications to harness the capabilities of AI technologies. These types of application modernization efforts present MongoDB with substantial growth opportunities.

Recent Financial Results and Outlook

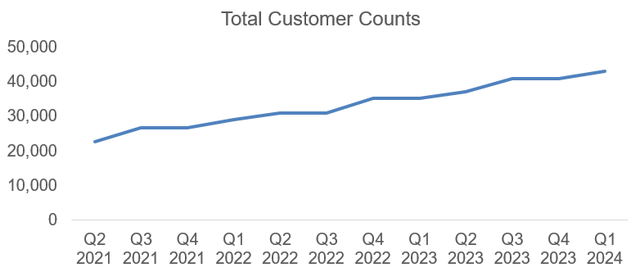

On June 1, 2023, MongoDB announced its Q1 FY24 earnings, showcasing robust growth figures. Their revenue surged by 29% year-over-year, while adjusted gross profits saw an impressive increase of 30.6%. Furthermore, the total number of their customers grew by 22.4% compared to the previous year.

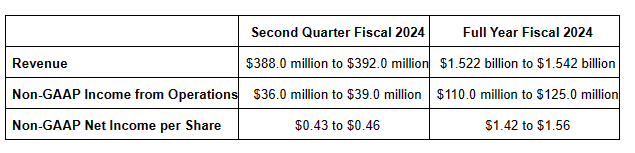

Looking forward, MongoDB has provided guidance for FY24, projecting a revenue growth rate of 18.5% to 20% and anticipating non-GAAP income from operations within the range of $110 million to $125 million.

MongoDB Q1 FY24 Earnings Release

In my assessment, their revenue guidance for FY24 appears rather conservative, taking into account the potential deceleration in database consumption resulting from macroeconomic factors.

Risk Assessment

Competition: I believe MongoDB faces competition from two primary categories: legacy vendors and emerging NoSQL databases. Legacy vendors may have difficulty introducing disruptive technologies that could jeopardize their established revenue streams, so they pose less concern. In contrast, the landscape includes numerous new NoSQL databases like Couchbase (BASE) and Astra DB. MongoDB enjoys a first-mover advantage and maintains a robust growth trajectory. For instance, in Q1 FY24, MongoDB recorded 28% year-over-year growth in its six-figure customer count, underscoring its strong market presence.

MongoDB Quarterly Results

Macro Uncertainties: I believe this is a common risk shared by cloud-based software companies. During periods of macroeconomic uncertainty, enterprises often consider reducing or postponing their cloud investments and projects, which could, in turn, impact application usage. MongoDB’s revenue model is consumption-based, and any deceleration in the consumption of existing customers could potentially dampen their revenue growth.

However, it’s worth noting that MongoDB’s full-year guidance may already factor in the potential slowdown in consumption.

Negative Profitability: MongoDB is currently in the early investment stage and has not yet generated profits. Many enterprises are likely to begin adopting MongoDB’s products for non-mission-critical workloads. As they become more familiar with MongoDB’s technologies and experience their benefits, I believe these enterprises will gradually increase their usage of existing applications or extend MongoDB’s products to a broader range of applications.

As previously mentioned, the entire NoSQL database sector is still in its infancy, with relational databases continuing to dominate the market. This suggests that MongoDB and similar NoSQL databases have ample room for growth and market expansion.

Valuations

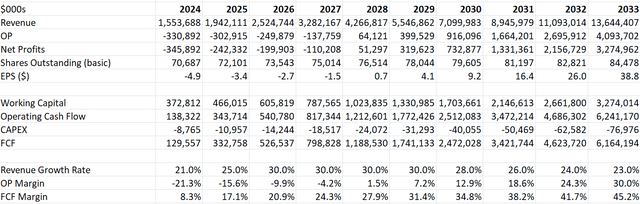

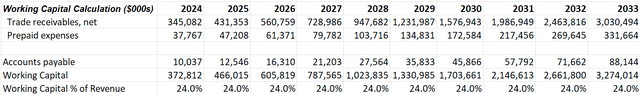

I am using a DCF model to estimate the fair value of MongoDB. My assumptions include a 21% revenue growth rate in FY24, and I anticipate that MongoDB could become a company with revenue exceeding $13 billion by FY33. On the margin side, I expect gradual margin expansion, aiming for a 30% margin by FY33, which is a typical figure for most software companies.

MongoDB DCF Model-Author’s Calculation

With these assumptions, the model forecasts a free cash flow margin of 45.2% in FY33. The working capital as a percentage of revenue is maintained at a stable 24% according to my estimates.

MongoDB DCF Model-Author’s Calculation

To calculate the discount rate, I am using the following assumptions:

Beta: 1.12. Data Source: Yahoo Finance 5-year monthly data.

Risk-Free Rate of Return: 4%. I am using 10-year US government bond yield.

Expected Market Return Premium: 7%. I am using the same assumption across my models.

Cost of debt: 10%. I am using the same assumption across my models.

Equity/(Equity + Gross Debt): 42.4% using my projected figure in FY24.

As a result, the discount rate is estimated to be 9.6% in my model. Applying this WACC, the fair value of MongoDB’s equity is estimated to be $33 billion, or $460 per share as per my estimates.
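The discount rate arithmetic can be reproduced with the standard CAPM and WACC formulas, as in the sketch below. Two points are assumptions rather than figures from the article: the 7% “expected market return premium” is treated as an equity risk premium added on top of the risk-free rate, and a 21% tax rate is applied to the cost of debt. The article does not state a tax rate; 21% is chosen here because it reproduces the quoted 9.6%.

```python
def cost_of_equity(risk_free: float, beta: float, market_premium: float) -> float:
    """CAPM: risk-free rate plus beta times the equity risk premium."""
    return risk_free + beta * market_premium


def wacc(equity_weight: float, cost_equity: float, cost_debt: float, tax_rate: float) -> float:
    """Weighted average cost of capital with an after-tax cost of debt."""
    debt_weight = 1 - equity_weight
    return equity_weight * cost_equity + debt_weight * cost_debt * (1 - tax_rate)


# Inputs quoted in the list above.
BETA = 1.12
RISK_FREE = 0.04
MARKET_PREMIUM = 0.07
COST_OF_DEBT = 0.10
EQUITY_WEIGHT = 0.424

# Assumption, not from the article: tax rate applied to the cost of debt.
ASSUMED_TAX_RATE = 0.21

ke = cost_of_equity(RISK_FREE, BETA, MARKET_PREMIUM)
rate = wacc(EQUITY_WEIGHT, ke, COST_OF_DEBT, ASSUMED_TAX_RATE)
print(f"Cost of equity: {ke:.2%}, WACC: {rate:.1%}")

# Share count implied by the quoted $33 billion equity value and $460 per share.
implied_shares = 33e9 / 460
print(f"Implied share count: {implied_shares / 1e6:.1f} million")
```

Under these assumptions the cost of equity comes to 11.84% and the blended rate rounds to 9.6%; a different tax assumption would move the result.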

Final Thoughts

I believe MongoDB’s document-based database technology has the potential to revolutionize the entire database industry, and their growth is driven by the increasing adoption of AI and big data technologies. In my view, MongoDB is positioned as a high-growth company for the next decade. Taking valuation into consideration, I would assign a ‘Strong Buy’ rating to MongoDB.

MMS • Steef-Jan Wiggers

Redis Inc recently announced the unified release of Redis 7.2, which includes several new features like auto-tiering, native triggers, and a preview of an enhanced, scalable search capability that provides increased performance for query and search scenarios, including vector similarity search (VSS).

Redis is the company’s flagship product, an open-source in-memory data store that functions as a database, cache, streaming engine, and message broker, facilitating communication between data services and applications that use different messaging protocols. With the launch of Redis 7.2, the company brings enhancements in performance, new commands, and improvements in manageability across all products and services, including open source, source-available, commercial cloud (e.g., Azure Cache for Redis, Redis Enterprise Cloud for AWS), software, and Kubernetes distributions.

The performance enhancement applies to the auto-tiering feature, formerly known as Redis on Flash, which lets developers and operators handle large, growing datasets efficiently by keeping frequently accessed data in memory and migrating less-used data to SSD. According to the company, this delivers more than double the throughput of the prior version and cuts infrastructure costs by up to 70 percent compared with managing extensive DRAM.
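The general idea of keeping hot data in memory and demoting colder data to a slower tier can be illustrated with a toy two-tier store. This is a conceptual sketch only and does not reflect how Redis implements auto-tiering; the `TieredStore` class, its least-recently-used policy, and the use of a plain dictionary as a stand-in for SSD are all assumptions made for illustration.

```python
from collections import OrderedDict


class TieredStore:
    """Toy two-tier key-value store: hot keys in RAM, cold keys in a slower tier."""

    def __init__(self, ram_capacity: int):
        if ram_capacity < 1:
            raise ValueError("ram_capacity must be at least 1")
        self.ram_capacity = ram_capacity
        self.ram = OrderedDict()  # ordered from least to most recently used
        self.flash = {}           # stand-in for an SSD-backed tier

    def set(self, key, value):
        self.flash.pop(key, None)  # a fresh write always lands in RAM
        self.ram[key] = value
        self.ram.move_to_end(key)
        self._demote_cold_keys()

    def get(self, key):
        if key in self.ram:
            self.ram.move_to_end(key)  # mark as recently used
            return self.ram[key]
        if key in self.flash:
            # Promote on access so repeated reads are served from RAM.
            value = self.flash.pop(key)
            self.ram[key] = value
            self._demote_cold_keys()
            return value
        return None

    def _demote_cold_keys(self):
        # Move least recently used keys to the slower tier when RAM is full.
        while len(self.ram) > self.ram_capacity:
            cold_key, cold_value = self.ram.popitem(last=False)
            self.flash[cold_key] = cold_value


store = TieredStore(ram_capacity=2)
store.set("a", 1)
store.set("b", 2)
store.get("a")     # "a" becomes the most recently used key
store.set("c", 3)  # RAM is full, so the coldest key ("b") is demoted
print(list(store.ram), list(store.flash))
```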

Another enhancement, made in response to the growing demands of generative AI applications, is the scalable search preview in Redis 7.2, which enables higher query throughput for workloads including VSS and full-text search. By blending sharding for seamless data expansion with efficient vertical scaling, the company claims it ensures optimal distributed processing across the cluster and improves query throughput by up to 16x compared to what was previously possible.
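For readers unfamiliar with how vector similarity search can be spread across shards, the sketch below shows a generic scatter-gather pattern: each shard computes its own top-k matches by cosine similarity, and a coordinator merges the partial results. It is not Redis code and makes no claim about how Redis implements scalable search; the function names, the CRC32-based shard assignment, and the sample vectors are hypothetical.

```python
import heapq
import math
import zlib


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def shard_for(key: str, num_shards: int) -> int:
    """Deterministically assign a key to a shard (illustrative hashing scheme)."""
    return zlib.crc32(key.encode("utf-8")) % num_shards


def search_shard(shard: dict, query, k: int):
    """Local top-k on one shard, returned as (score, key) pairs."""
    scored = ((cosine_similarity(vec, query), key) for key, vec in shard.items())
    return heapq.nlargest(k, scored)


def search_cluster(shards, query, k: int):
    """Scatter the query to every shard, then merge the partial top-k lists."""
    partials = [hit for shard in shards for hit in search_shard(shard, query, k)]
    return heapq.nlargest(k, partials)


docs = {
    "doc:1": [1.0, 0.0],
    "doc:2": [0.9, 0.1],
    "doc:3": [0.0, 1.0],
    "doc:4": [-1.0, 0.0],
}
shards = [{} for _ in range(2)]
for key, vec in docs.items():
    shards[shard_for(key, len(shards))][key] = vec

top = search_cluster(shards, [1.0, 0.0], k=2)
print([key for _, key in top])
```

Because every shard returns its own best k candidates, the merged result matches what a single-node search over all the data would return, while the scoring work is divided among the shards.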

Furthermore, Redis 7.2 includes triggers and functions, which make it possible for developers to build and maintain real-time applications by moving business logic closer to the data while remaining inside Redis, providing lower latency. In a Redis blog post on triggers and functions, the authors explain: