Month: August 2023

Visual Studio 2022 17.7 with .NET and C++ Development Features, Performance Improvements, and More

MMS • Robert Krzaczynski

Visual Studio 2022 17.7 is now generally available. Based on community feedback, it brings a range of features and improvements aimed at a better developer experience. There are new features for .NET and C++ development, as well as changes that improve overall performance. The latest version is available for download.

Visual Studio 2022 17.7 contains some productivity features. Among others, there is a convenient file comparison within the Solution Explorer, eliminating the need for external tools. Within the Solution Explorer, it is possible to effortlessly compare files using methods such as right-clicking on a file and selecting “Compare With…” to open File Explorer, or multi-selecting files with Ctrl and choosing “Compare Selected” from the context menu.

The newest Visual Studio version also enables GitHub Actions workflow creation within Solution Explorer. Supporting Azure Container Apps and Kubernetes, it allows single workflow multi-project deployment. For GitHub projects, a Solution Explorer node appears, offering easy workflow initiation.

GitHub Actions (Source: Microsoft blog)

In Visual Studio 2022 version 17.7, significant performance enhancements are introduced, targeting areas like F5 Speed, enhanced C# Light Bulb Performance, reduced memory usage in C# spell checker, optimised IntelliSense for C++ Unreal Engine, Solution Explorer, and Find in Files.

Moreover, Visual Studio 2022 boosts F5 Speed with optimised PDB opening, trimming 4 seconds from Unreal Editor project screen loading. This aids native and managed debugging, offering a 5-10% initial F5 improvement in debugger start and process launch times. In Unreal Editor tests, debugger-launched project selection was 21% faster.

(Source: Microsoft blog)

Continuing with the improvements, Visual Studio bolsters C# Light Bulb performance. Tasks like Fix Formatting and Simplify Type Name display actions swiftly. This responsiveness extends to substantial documents. Furthermore, C# spell checker’s memory usage is reduced by 90%, thanks to LSP enhancements. This translates to accelerated speed, efficiency, communication, and scalability within the spell-checking framework.

Regarding .NET development, Visual Studio introduces enhanced External Source Debugging with auto-decompilation for .NET code. The debugger displays execution points when stepping into external code, and double-clicking a stack frame navigates directly to the corresponding code, which aids call stack analysis. The CPU Usage tool provides detailed insights for specific methods such as Enum.HasFlag and String.StartsWith, aiding code optimisation. A preview feature integrates GitHub Copilot chat into the CPU Usage tool, offering explanations of issues and suggested fixes.

There were also plenty of improvements for C++ and game development, such as C++ Build Insights and step-by-step macro expansion. The IDE incorporates Build Insights for optimizing C++ build times. Capturing trace data is simplified, and new features like the Included Files and Include Tree views aid understanding. The “Open in WPA” option enables advanced profiling, while the post-compilation diagnostic report identifies expensive includes and facilitates header file navigation.

Visual Studio 17.7 also adds features for Linux and embedded development with C++. Visual Studio now offers effortless WSL acquisition: opening a CMake project prompts WSL installation through a gold bar or the Project menu. Remote File Explorer gains search functionality, facilitating file navigation on the remote machine. To access it, choose View > Remote File Explorer after downloading the Linux and Embedded Workflow.

In ASP.NET projects, Visual Studio integrates npm Dependency Management by displaying package.json packages under the Dependencies node in Solution Explorer. This centralizes solution dependencies, including .NET references and NuGet packages. Developers can add, restore, update, or uninstall npm packages via right-click actions. Additionally, project templates now use Vite instead of create-react-app and Vue CLI for faster builds and server start.

In general, Visual Studio 2022 17.7 received positive feedback from the community. However, some comments below the official announcement reported issues after upgrading, including problems with Unity and with launching the IDE.

MMS • Renato Losio

During the latest AWS Storage Day event, Amazon announced the general availability of Mountpoint for Amazon S3. The new open-source file client exposes the elastic storage and throughput of Amazon S3 through a file interface, supporting data transfer at up to 100 Gb/second between each EC2 instance and the object storage.

Announced in preview earlier this year, Mountpoint for Amazon S3 is designed for workloads like data lake applications that perform sequential writes, sequential and random reads, and do not need full POSIX semantics. Jeff Barr, vice president and chief evangelist at AWS, explains:

Many AWS customers use the S3 APIs and the AWS SDKs to build applications that can list, access, and process the contents of an S3 bucket. However, many customers have existing applications, commands, tools, and workflows that know how to access files in UNIX style: reading directories, opening & reading existing files, and creating & writing new ones. These customers have asked us for an official, enterprise-ready client that supports performant access to S3 at scale.

Other common use cases for the new open-source client are machine learning training, image rendering, autonomous vehicle simulation, and ETL. Mountpoint supports data transfer at up to 100 Gb/second between each EC2 instance and the S3 bucket, translating local file system API calls to S3 object API calls and reducing costs compared to a managed network file system (NFS). Barr adds:

Under the covers, the Linux Virtual Filesystem (VFS) translates these operations into calls to Mountpoint, which in turn translates them into calls to S3: LIST, GET, PUT, and so forth. Mountpoint strives to make good use of network bandwidth, increasing throughput and allowing you to reduce your compute costs by getting more work done in less time.

In a popular Reddit thread, different users question how the new product compares to existing open-source s3fs and commercial ObjectiveFS products. Fernando Schubert, senior cloud engineer at Human Made, comments:

Interesting this is now formally supported, but s3fs/fuse was already doing this for a while, eager for a feature comparison and benchmarks.

Jukka Forsgren, senior solutions architect at AWS, highlights one of the goals of the AWS client:

At my previous company, I was on 24/7 on-call duty for a large number of customer AWS environments. One of the common alerts was related to stuck S3 mounts on EC2 Linux instances, which we often had to fix in the middle of the night. In light of this, it was great to notice that we just GA’d our own client called Mountpoint for Amazon S3, with stability as one of its primary design goals.

Mountpoint is not a general-purpose networked file system and comes with some restrictions on file operations. Built on the AWS Common Runtime (CRT) library, the Rust-powered client can read files up to 5 TB in size, cannot modify existing files or delete directories, and does not support symbolic links or file locking.

Not all S3 storage classes are currently supported, with Mountpoint not working with the archive classes Glacier Flexible Retrieval, Glacier Deep Archive, Intelligent-Tiering Archive Access Tier, and Intelligent-Tiering Deep Archive Access Tier.

There are no specific charges for the use of Mountpoint; developers pay only for the underlying S3 operations. Mountpoint is available in RPM format and the roadmap is available on GitHub.

L & S Advisors Inc Reduces Stake in MongoDB Amidst Mixed Earnings Results – Best Stocks

MMS • RSS

On August 18, 2023, it was reported that L & S Advisors Inc has reduced its stake in shares of MongoDB, Inc. (NASDAQ:MDB) by 16.3% in the first quarter, according to a recent filing with the Securities and Exchange Commission (SEC). The institutional investor now owns 4,735 shares of the company’s stock after selling 923 shares during the quarter.

At the time of the filing, L & S Advisors Inc’s holdings in MongoDB were valued at $1,104,000. This reduction in stake suggests a lack of confidence in the company’s future prospects or a strategic decision to reallocate investments.

MongoDB announced its earnings results on June 1st. The company reported earnings per share (EPS) of $0.56 for the quarter, surpassing analysts’ consensus estimate of $0.18 by an impressive margin of $0.38. The firm generated revenue of $368.28 million during the quarter, exceeding analysts’ expectations of $347.77 million.

It is worth noting that MongoDB had a negative return on equity of 43.25% and a negative net margin of 23.58%. However, its quarterly revenue grew by 29.0% compared to the same period last year.

These figures may have influenced L & S Advisors Inc’s decision to reduce its stake in MongoDB as they reflect varying degrees of performance and potential risk associated with investing in this company.

In other news related to MongoDB, Director Dwight A. Merriman sold 6,000 shares of the business’s stock on August 4th at an average price of $415.06 per share, resulting in a total transaction value of $2,490,360. This sale was disclosed through a legal filing with the SEC and Mr. Merriman now holds 1,207,159 shares valued at approximately $501,043,414.54.

Additionally, CRO Cedric Pech sold 360 shares on July 3rd at an average price of $406.79 per share, with a total transaction value of $146,444.40. Post-transaction, Mr. Pech owns 37,156 shares of the company’s stock valued at around $15,114,689.24.

Overall, insiders have sold 102,220 shares of MongoDB stock in the last 90 days with a total value of $38,763,571. This information sheds light on the sentiments and actions of individuals with inside knowledge of the company’s operations.

Investors should consider these factors before making decisions on whether to invest or divest from MongoDB as it provides valuable insights into recent activities that may impact the stock’s performance in the near future.

Hedge Funds and Institutional Investors Show Growing Interest in MongoDB (NASDAQ: MDB)

August 18, 2023 – In recent months, various hedge funds have been actively trading shares of the company MongoDB (NASDAQ: MDB). Some have increased their holdings, while others have sold off their positions. This flurry of activity has caught the attention of investors and analysts alike.

One prominent firm, Raymond James & Associates, bolstered its stake in MongoDB by a staggering 32% during the first quarter of this year. With an additional purchase of 1,192 shares, Raymond James & Associates now owns a total of 4,922 shares valued at approximately $2,183,000. Similarly, PNC Financial Services Group Inc. augmented its investment in the company by 19.1%, acquiring an extra 206 shares worth around $569,000. MetLife Investment Management LLC decided to enter the fray as well by initiating a new stake in MongoDB with an estimated value of $1,823,000.

Panagora Asset Management Inc., another notable participant in this buying frenzy, increased its position in MongoDB by nearly 10%. Having purchased an additional 176 shares during the first quarter, Panagora Asset Management now holds a total of 1,977 shares valued at approximately $877,000. Lastly, Vontobel Holding Ltd., the Swiss multinational investment bank and financial services company, took a bold step by doubling down on its investment in MongoDB. With an impressive increase of over 100%, Vontobel Holding Ltd. now owns a stake amounting to 2,873 shares valued at around $1,236,000.

These developments contribute to the fact that currently institutional investors and hedge funds own a significant portion (89.22%) of MongoDB’s stock.

On the morning of Friday, August 18th, MDB opened on NASDAQ at $345.10. The company has seen notable price movements recently, as indicated by its moving averages: its 50-day moving average stood at $393.05, while its 200-day moving average was lower at $292.95.

Numerous research reports have shed further light on the situation by providing insight into MongoDB’s performance and future prospects. Morgan Stanley, for instance, issued a research note raising their target price from $270.00 to a more optimistic $440.00. Robert W. Baird also expressed optimism by raising their price objective from $390.00 to $430.00. Meanwhile, VNET Group maintained their recommendation on MongoDB shares, issuing a “maintains” rating in their research report.

However, not all research analysts have been as positive about the company’s outlook. Guggenheim downgraded MongoDB from a “neutral” rating to a “sell” rating and increased their price target from $205.00 to $210.00. They categorized this move as a valuation call, suggesting that the stock may be overpriced relative to its current performance.

These diverse opinions have led to various ratings assigned to MongoDB shares by different analysts — one has recommended selling the stock, three suggest holding it, while an impressive twenty analysts advocate buying it. According to data collected from Bloomberg.com, the company currently holds a consensus rating of “Moderate Buy” with an average price target of around $378.09 per share.

Overall, the recent activities surrounding MongoDB have caused quite a stir within the investment community. As investors look for opportunities in this fast-paced industry, MongoDB’s stock is definitely one worth monitoring closely.


MMS • RSS

In the latest trading session, MongoDB (MDB) closed at $350.83, marking a -0.1% move from the previous day. This change lagged the S&P 500’s 0.02% loss on the day. Meanwhile, the Dow gained 0.08%, and the Nasdaq, a tech-heavy index, lost 0.2%.

Heading into today, shares of the database platform had lost 14.9% over the past month, lagging the Computer and Technology sector’s loss of 6.18% and the S&P 500’s loss of 3.25% in that time.

Investors will be hoping for strength from MongoDB as it approaches its next earnings release, which is expected to be August 31, 2023. On that day, MongoDB is projected to report earnings of $0.45 per share, which would represent year-over-year growth of 295.65%. Meanwhile, the Zacks Consensus Estimate for revenue is projecting net sales of $389.93 million, up 28.41% from the year-ago period.

For the full year, our Zacks Consensus Estimates are projecting earnings of $1.51 per share and revenue of $1.54 billion, which would represent changes of +86.42% and +19.78%, respectively, from the prior year.

Investors should also note any recent changes to analyst estimates for MongoDB. These recent revisions tend to reflect the evolving nature of short-term business trends. With this in mind, we can consider positive estimate revisions a sign of optimism about the company’s business outlook.

Based on our research, we believe these estimate revisions are directly related to near-term stock moves. We developed the Zacks Rank to capitalize on this phenomenon. Our system takes these estimate changes into account and delivers a clear, actionable rating model.

The Zacks Rank system ranges from #1 (Strong Buy) to #5 (Strong Sell). It has a remarkable, outside-audited track record of success, with #1 stocks delivering an average annual return of +25% since 1988. Within the past 30 days, our consensus EPS projection remained stagnant. MongoDB is currently a Zacks Rank #2 (Buy).

Digging into valuation, MongoDB currently has a Forward P/E ratio of 232.76. For comparison, its industry has an average Forward P/E of 37.19, which means MongoDB is trading at a premium to the group.

The Internet – Software industry is part of the Computer and Technology sector. This industry currently has a Zacks Industry Rank of 90, which puts it in the top 36% of all 250+ industries.

The Zacks Industry Rank is listed in order from best to worst in terms of the average Zacks Rank of the individual companies within each of these sectors. Our research shows that the top 50% rated industries outperform the bottom half by a factor of 2 to 1.

To follow MDB in the coming trading sessions, be sure to utilize Zacks.com.


MMS • RSS

“This is for you”, “Suggested for you”, or “You may also like”, are phrases that have become essential in most digital businesses, particularly in e-commerce, or streaming platforms.

Although they may seem like a simple concept, they imply a new era in the way businesses interact and connect with their customers: the era of recommendations.

Let’s be honest, most of us, if not all of us, have been carried away by Netflix recommendations while looking for what to watch, or headed straight for the recommendations section on Amazon to see what to buy next.

In this article, I’m going to explain how a Real-Time Recommendation Engine can be built using Graph databases.

A recommendation engine is a toolkit that applies advanced data filtering and predictive analysis to anticipate and predict customers’ needs and wants, i.e. which content, products, or services a customer is likely to consume or engage with.

To produce these recommendations, the engine combines the following information:

- The customer’s past behaviors and history, e.g. purchased products or watched series.

- The customer’s current behaviors and relationships with other customers.

- The product’s ranking by customers.

- The business’ best sellers.

- The behaviors and history of similar or related customers.

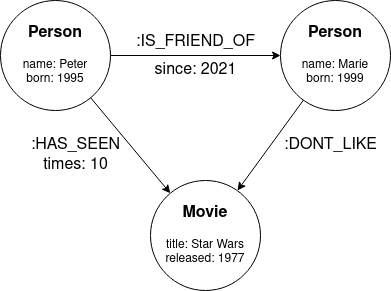

A Graph database is a NoSQL database where the data is stored in graph structures instead of tables or documents. A graph data structure consists of nodes that can be connected by relationships. Both nodes and relationships can have their own properties (key-value pairs), which further describe them.

The following image introduces the basic concepts of the graph data structure:

Example of a graph data structure

Now that we know what a recommendation engine and a graph database are, we’re ready to look at how to build a recommendation engine for a streaming platform using a graph database.

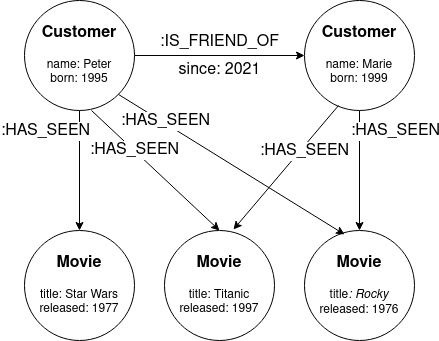

The graph below stores the movies two customers have seen and the relationship between the two customers.

Example of a graph of the streaming platform.

Having this information stored as a graph, we can now think about movie recommendations to influence the next movie to watch. The simplest strategy is to show the most viewed movies on the entire platform. This is easy to express in the Cypher query language:

MATCH (:Customer)-[:HAS_SEEN]->(movie:Movie)

RETURN movie, count(movie)

ORDER BY count(movie) DESC LIMIT 5

However, this query is very general and does not take the customer’s context into account, so it is not optimized for any particular customer. We can do much better by using the customer’s social network, querying for friends and friends-of-friends relationships. With Cypher, this is very straightforward:

MATCH (customer:Customer {name:'Marie'})

<-[:IS_FRIEND_OF*1..2]-(friend:Customer)

WHERE customer <> friend

WITH DISTINCT friend

MATCH (friend)-[:HAS_SEEN]->(movie:Movie)

RETURN movie, count(movie)

ORDER BY count(movie) DESC LIMIT 5

This query has two parts divided by the WITH clause, which allows us to pipe the results from the first part into the second.

With the first part of the query, we find the current customer ({name: 'Marie'}) and traverse the graph matching either Marie’s direct friends or their friends (her friends-of-friends) using the flexible path-length notation [:IS_FRIEND_OF*1..2], which means one or two IS_FRIEND_OF relationships deep.

We take care not to include Marie herself in the results (the WHERE clause) and not to get duplicate friends-of-friends that are also direct (the DISTINCT clause).

The second half of the query is the same as the simplest query, but now instead of taking into account all the customers on the platform, we are taking into account Marie’s friends and friends-of-friends.

And that’s it, we have just built our real-time recommendation engine for a streaming platform.

In this article, we have covered the following topics:

- What a recommendation engine is and the amount of information it uses to make recommendations.

- What a graph database is and how the data is stored as a graph instead of a table or document.

- An example of how we can build a real-time recommendation engine for streaming platforms using graph databases.

José María Sánchez Salas lives in Norway. He is a freelance data engineer from Murcia (Spain). Working between the business and development worlds, he also writes a data engineering newsletter.

MMS • Sergio De Simone

In a recent article, Google engineer Russ Cox detailed what Google does to make sure each new Go release honors Go’s backward-compatibility guarantee. This includes generalizing GODEBUG in Go 1.21 to cover even subtle incompatibility cases.

Introduced in Go 1, Go’s backward compatibility guarantee ensures all correct Go programs will continue to work with future releases of the language. As Cox explains, this goal entails two major efforts: checking that each API change does not break anything and extensive testing to catch subtler incompatibility cases.

While the idea of checking API changes to prevent any breaking change from entering a new release may sound obvious, Cox also describes a number of cases where testing is the way to go:

The most effective way to find unexpected incompatibilities is to run existing tests against the development version of the next Go release. We test the development version of Go against all of Google’s internal Go code on a rolling basis. When tests are passing, we install that commit as Google’s production Go toolchain.

The examples provided by Cox fall into a few distinct categories, including changing the precision of the time.Now() library function, output or input changes, and protocol changes. There are cases, though, where a breaking change is brought by an important new feature that must make it into the language, such as HTTP/2 support in Go 1.6 or SHA1 deprecation in Go 1.18.

To handle these kinds of cases, Go provided a specific feature in release 1.6, the GODEBUG environment variable, which could be used to disable HTTP/2 for specific modules. In Go 1.21, Cox says, the GODEBUG mechanism has been extended and formalized.

Rather than relying solely on an environment variable, Go 1.21 adds a //go:debug directive that can be specified in the source files of a program’s main package. This works in combination with the Go version listed in a module’s go.mod file: each Go version has default behaviors that ensure compatibility on a feature-by-feature basis, and //go:debug may be used to override them. For example, given that Go 1.21 changes the behavior of panic(nil), developers may want to upgrade their toolchain to Go 1.21 but keep the old behavior. This is possible by overriding the version-specific default with:

//go:debug panicnil=1

According to Cox, this lets every new version of Go be the best implementation of older versions, fixing bugs while preserving backward compatibility even in the face of important breaking changes.

Cox’s article sparked quite an interesting conversation on Hacker News, mostly on the relative merit of guaranteeing backward compatibility in a language versus introducing a new, non-backward-compatible version, as happened in Python when going from Python 2 to Python 3; and comparing the approach taken by Go with those taken by Java, C++, and .NET languages.

MMS • RSS

At Orbisresearch.com, a brand-new study titled “Global “NoSQL Professional” Market Trends and Insights” has just been released.

1. Introduction:

The primary objective of the research report focusing on the NoSQL Professional market is to furnish stakeholders with a thorough evaluation of the market scenario, covering essential elements like market size, factors driving growth, obstacles, and potential prospects. The report’s objectives are to offer a data-driven understanding of the NoSQL Professional market, evaluate the impact of the COVID-19 pandemic, present company profiles of key players, and provide a strategic overview for stakeholders to make informed decisions.

Request a pdf sample report : https://www.orbisresearch.com/contacts/request-sample/6746558

2. Objectives Covered by this Report:

a. Market Size and Growth Analysis: The report provides a comprehensive examination of the existing dimensions of the NoSQL Professional market and predicts its expansion path throughout the anticipated timeframe. This valuable information enables stakeholders to grasp potential market prospects and devise their business strategies accordingly.

b. COVID-19 Impact Assessment: The research report evaluates the impact of the COVID-19 pandemic on the NoSQL Professional market. It analyzes the disturbances in the flow of goods and services, alterations in customer preferences, and transformations in the market landscape. This analysis enables stakeholders to strategize for post-pandemic recovery and future growth.

c. Company Profiles: The report provides comprehensive profiles of key players in the NoSQL Professional market, including information about their products, technological innovations, market share, and recent strategic initiatives. Stakeholders have the ability to evaluate the competitive environment and pinpoint possible collaborators or rivals.

d. Technological Advancements: The research report explores the latest technological advancements in the NoSQL Professional market, such as innovations in energy-efficient gadgets, sustainable materials, and smart technologies. Stakeholders have the opportunity to use this information in order to improve their product offerings and strengthen their competitive edge.

e. Regulatory Considerations: The report addresses relevant regulatory considerations and policies influencing the NoSQL Professional market. Understanding the regulatory landscape is crucial for stakeholders to comply with regulations and navigate legal requirements effectively.

Buy the report at https://www.orbisresearch.com/contact/purchase-single-user/6746558

NoSQL Professional Market Segmentation:

NoSQL Professional Market by Types:

Key-Value Store

Document Databases

Column Based Stores

Graph Database

NoSQL Professional Market by Applications:

Retail

Online gaming

IT

Social network development

Web applications management

Government

BFSI

Healthcare

Education

Others

2. COVID-19 Impact:

The report evaluates the impact of the COVID-19 pandemic on the NoSQL Professional market. The global crisis disrupted supply chains, causing production delays and shortages. Additionally, lockdowns and social distancing measures affected consumer spending and purchasing behaviors. Nonetheless, the pandemic resulted in a heightened emphasis on health, wellness, and ecological consciousness, sparking a surge in the popularity of energy-efficient and environmentally-friendly devices. The report presents a comprehensive analysis of these impacts to help stakeholders adapt to the new market realities.

3. Company Profiles Covered:

The research report provides detailed profiles of leading companies operating in the NoSQL Professional market. The profiles include information about their product portfolios, market share, recent developments, and sustainability initiatives.

Leading Players in the NoSQL Professional market report:

Basho Technologies

Apache

Oracle

SAP

ArangoDB

MarkLogic

Redis

CloudDB

MapR Technologies

Couchbase

DataStax

Aerospike

Microsoft

Neo4j

MongoLab

IBM Cloudant

Amazon Web Services

MongoDB

RavenDB

4. Strategic Overview:

The strategic overview section presents a comprehensive analysis of the NoSQL Professional market’s competitive landscape, growth opportunities, and challenges. It includes a SWOT analysis of the market, identifying strengths, weaknesses, opportunities, and threats. Stakeholders can leverage this strategic overview to develop effective business strategies, capitalize on growth prospects, and address market challenges.

5. Business Strategies:

Armed with the insights from the Strategic Overview section, stakeholders can develop robust business strategies. By aligning their strengths with opportunities and proactively addressing weaknesses and threats, businesses can craft actionable plans for sustainable growth and success. This may involve product diversification, innovation, market expansion, strategic partnerships, or brand positioning strategies.

6. Market Positioning:

The Strategic Overview also aids stakeholders in positioning their products or services effectively in the market. By understanding the competitive landscape and their unique selling propositions, businesses can target specific customer segments, create compelling value propositions, and differentiate themselves from competitors. This market positioning enables stakeholders to build a strong brand image and gain a competitive advantage.

Make an inquiry before accessing the report at: https://www.orbisresearch.com/contacts/enquiry-before-buying/6746558

Conclusion:

The primary objective of the market research report focusing on the NoSQL Professional market is to furnish stakeholders with valuable information, enabling them to make well-informed choices and take advantage of the expanding and ever-changing market. Through an in-depth analysis of various factors such as market size, the influence of COVID-19, company profiles, technological progress, and strategic outlook, stakeholders will be empowered to effectively navigate the market landscape and position themselves for success amidst the competition in the NoSQL Professional market.

About Us:

Orbis Research (orbisresearch.com) is a single point aid for all your market research requirements. We have a vast database of reports from leading publishers and authors across the globe. We specialize in delivering customized reports as per the requirements of our clients. We have complete information about our publishers and hence are sure about the accuracy of the industries and verticals of their specialization. This helps our clients to map their needs and we produce the perfect required market research study for our clients.

Contact Us:

Hector Costello

Senior Manager – Client Engagements

4144N Central Expressway,

Suite 600, Dallas,

Texas – 75204, U.S.A.

Phone No.: USA: +1 (972)-591-8191 | IND: +91 895 659 5155

Email ID: sales@orbisresearch.com

MMS • RSS

A new category of analytics database has emerged that can handle massive data inflows and deliver subsecond latency on a large number of simultaneous queries. One of those real-time databases is Apache Druid, which was co-developed by former Metamarkets engineer Fangjin Yang, who is one of our People to Watch for 2023.

Datanami recently caught up with Yang, who is also the CEO and co-founder of Druid developer Imply, to discuss real-time analytics databases and the success of Apache Druid.

Datanami: What spurred you to create Apache Druid? Why couldn’t existing databases solve the needs you had at Metamarkets?

Fangjin Yang: Back in 2011, we were trying to quickly aggregate and query real-time data coming from website users across the Internet to analyze digital advertising auctions. This involved large data sets with millions to billions of rows. While we weren’t intending to build a new database for this, we tried building the application with several relational and NoSQL databases, but none were able to support the performance and scale requirements for rapid interactive queries on this high dimensional and high cardinality data.

Datanami: What is the key attribute that has made Druid so successful?

Yang: The key to Druid’s performance at scale is “don’t do it.” It means minimizing the work the computer has to do. Druid doesn’t load data from disk to memory, or from memory to CPU, when it isn’t needed for a query. It doesn’t decode data when it can operate directly on encoded data. It doesn’t read the full dataset when it can read a smaller index. It doesn’t send data unnecessarily across process boundaries or from server to server.

With this philosophy of “don’t do it,” you end up having an architecture that’s incredibly efficient at processing queries at scale and under load. And it’s why Druid can be so fast and deliver aggregations on trillions of rows at thousands of queries per second with sub-second latency.
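The "don't do it" idea can be illustrated with a small sketch. This is not Druid's code or storage format; it is a toy Python model, with hypothetical names (`build_column`, `count_where`, `sum_where`), of two of the techniques Yang mentions: answering a filter from a small index instead of scanning the full dataset, and comparing encoded values without decoding them.

```python
from collections import defaultdict


def build_column(values):
    """Dictionary-encode a column and build one bitmap per distinct value.

    Each bitmap is a Python int used as a bitset: bit i is set when row i
    holds that value. All names here are illustrative assumptions.
    """
    dictionary = {}             # value -> small integer code
    codes = []                  # the encoded column
    bitmaps = defaultdict(int)  # code -> bitset of matching row ids
    for row, value in enumerate(values):
        code = dictionary.setdefault(value, len(dictionary))
        codes.append(code)
        bitmaps[code] |= 1 << row
    return dictionary, codes, bitmaps


def count_where(dictionary, bitmaps, value):
    """Count matching rows from the index alone; no row data is read."""
    code = dictionary.get(value)
    if code is None:
        return 0  # value never occurs, so there is nothing to scan
    return bin(bitmaps[code]).count("1")


def sum_where(dictionary, bitmaps, metric, value):
    """Sum a metric over matching rows, touching only those rows."""
    code = dictionary.get(value)
    if code is None:
        return 0
    bits = bitmaps[code]
    total = 0
    while bits:
        lowest = bits & -bits              # isolate the lowest set bit
        total += metric[lowest.bit_length() - 1]
        bits ^= lowest                     # clear it and continue
    return total


country = ["US", "DE", "US", "FR", "US", "DE"]
clicks = [3, 5, 2, 7, 1, 4]
dictionary, codes, bitmaps = build_column(country)
print(count_where(dictionary, bitmaps, "US"))        # 3
print(sum_where(dictionary, bitmaps, clicks, "US"))  # 6
```

The count never touches the `clicks` column, and the sum reads only the three rows whose bits are set. A production engine would use compressed bitmaps rather than Python integers, but the work-avoidance principle is the same.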

Datanami: How do you see the market for big and fast analytics platforms evolving in 2023? Do you think we’ll continue to see the introduction of novel database engines?

Yang: We see an emergence of a new category of data infrastructure – real-time analytics databases – to address the growing demand of developer-built analytics applications built on real-time, streaming data. The need for faster query performance at scale isn’t slowing down. It’s become a game-changer as it unlocks new operational workflows for so many Druid users like Confluent, Netflix, and Salesforce. Will there be more database engines emerging over time? For sure, developers are constantly innovating and driving new workload requirements that need databases built-for-purpose.

Datanami: Outside of the professional sphere, what can you share about yourself that your colleagues might be surprised to learn – any unique hobbies or stories?

Yang: I used to play video games semi-professionally, and am still an avid eSports fan.

You can read all of the interviews with the 2023 Datanami People to Watch at this link.

MongoDB Queryable Encryption Helps Companies Meet the Strictest Data-Privacy Requirements

MMS • RSS

MongoDB, Inc. is offering MongoDB Queryable Encryption, a technology that helps organizations protect sensitive data while it is queried and in use on MongoDB.

According to the company, MongoDB Queryable Encryption significantly reduces the risk of data exposure for organizations and improves developer productivity by providing built-in encryption capabilities for highly sensitive application workflows—such as searching employee records, processing financial transactions, or analyzing medical records—with no cryptography expertise required.

“Protecting data is critical for every organization, especially as the volume of data being generated grows and the sophistication of modern applications is only increasing. Organizations also face the challenge of meeting a growing number of data privacy and customer data protection requirements,” said Sahir Azam, chief product officer at MongoDB. “Now, with MongoDB Queryable Encryption, customers can protect their data with state-of-the-art encryption and reduce operational risk—all while providing an easy-to-use capability developers can quickly build into applications to power experiences their end-users expect.”

With the general availability of MongoDB Queryable Encryption, customers can now secure sensitive workloads for use cases in highly regulated or data-sensitive industries like financial services, health care, government, and critical infrastructure services by encrypting data while it is being processed and in use.

Customers can quickly get started protecting data in use by selecting the fields in MongoDB databases that contain sensitive data that needs to be encrypted while in use.

According to the company, with MongoDB Queryable Encryption, developers can now easily implement first-of-its-kind encryption technology to ensure their applications are operating with the highest levels of data protection and that sensitive information is never exposed while it is being processed—significantly reducing the risk of data exposure.

The MongoDB Cryptography Research Group developed the underlying encryption technology behind MongoDB Queryable Encryption, which is open source.

Organizations can freely examine the cryptographic techniques and code behind the technology to help meet security and compliance requirements.

MongoDB Queryable Encryption can be used with AWS Key Management Service, Microsoft Azure Key Vault, Google Cloud Key Management Service, and other services compliant with the key management interoperability protocol (KMIP) to manage cryptographic keys.

The general availability of MongoDB Queryable Encryption includes support for equality queries, with additional query types (e.g., range, prefix, suffix, and substring) generally available in upcoming releases.
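As a rough illustration of what "selecting the fields" might look like, the sketch below builds a per-collection description of encrypted fields as a plain Python dictionary. The key names (`fields`, `path`, `bsonType`, `queries`, `queryType`) follow the shape we understand MongoDB's documentation to use for encrypted-field definitions, but treat them as an assumption and check the current driver documentation. The helper name `describe_encrypted_fields` and the sample field paths are hypothetical, and no driver call is made here.

```python
def describe_encrypted_fields(equality_fields, unqueried_fields=None):
    """Build an encrypted-fields description for one collection.

    equality_fields:  {field path: BSON type} for fields that should stay
                      searchable with equality queries while encrypted.
    unqueried_fields: {field path: BSON type} for fields that are encrypted
                      but never queried.
    The dictionary layout is an assumption, not a verified driver contract.
    """
    fields = []
    for path, bson_type in equality_fields.items():
        fields.append({
            "path": path,
            "bsonType": bson_type,
            "queries": {"queryType": "equality"},  # only equality at GA
        })
    for path, bson_type in (unqueried_fields or {}).items():
        fields.append({"path": path, "bsonType": bson_type})
    return {"fields": fields}


# Hypothetical example: an employee-records collection.
spec = describe_encrypted_fields(
    equality_fields={"ssn": "string"},
    unqueried_fields={"salary": "int"},
)
print(spec)
```

A description like this would then be handed to the driver when the collection is created; that step is omitted because it requires a running deployment and key management configuration.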

Since the release of MongoDB Queryable Encryption in preview last year, MongoDB has worked in partnership with customers including leading financial institutions and Fortune 500 companies in the healthcare, insurance, and automotive manufacturing industries to fine-tune the service for general availability.

For more information about this news, visit www.mongodb.com.

MMS • RSS

TEMPO.CO, Jakarta – The chances of Novak Djokovic and Carlos Alcaraz meeting in the final of the 2023 ATP/WTA Cincinnati Open remain alive. Both advanced to the quarter-finals on Friday, August 18, 2023, Western Indonesia Time (WIB).

Djokovic, playing his first tournament in the United States in two years, beat Gael Monfils 6-3, 6-2. The second-seeded Serb, a two-time Cincinnati champion, won his 19th match against the Frenchman without ever having lost to him.

Djokovic applied pressure early against Monfils in a battle of 36-year-old tennis veterans, denying the world No. 211 what would have been his first Cincinnati quarter-final since 2011.

Djokovic will next face American Taylor Fritz, who advanced when Dusan Lajovic retired with a toe injury while trailing 0-5.

“Gael is one of the most athletic players on the tour and he showed it in the first set. He could get every ball back,” Djokovic said, as reported by AFP on Friday.

“I served well and made him play. After the first break, I didn’t look back and raised my level.”

“I played a nearly perfect second set. Hopefully I can keep it up,” Djokovic said.

Alcaraz Also Advances

In another match, Carlos Alcaraz survived a series of rain delays to beat Tommy Paul 7-6 (8/6), 6-7 (0/7), 6-3, avenging his loss to the American in Toronto last week.

Alcaraz squandered three match points before taking the first set amid a series of rain delays that forced the players on and off the court in a match that finally ended after more than three hours.

Still, the Spaniard held on to book a quarter-final meeting with Australian Max Purcell, who beat Switzerland’s Stan Wawrinka 6-4, 6-2.

After the final rain break, Alcaraz, who was ahead in the third set, closed out the last few points and advanced with 40 winners and 61 unforced errors.

“I really wanted to win after what happened against him last week,” Alcaraz said, as reported by AFP on Friday.

Alcaraz reached his fifth Masters 1000 quarter-final of the season and his 11th overall, improving his win-loss record in the elite series to 21-3 this season and 51-5 overall.

The 20-year-old applied pressure and produced his best tennis to tame Paul. The top seed recovered after dropping the second set, which Paul took by dominating the tiebreak to level the match.

The rain breaks, which turned the match into a stop-start affair, frustrated Alcaraz. The players had to warm up again each time play resumed.

“Starting and stopping is not easy. I handled it well,” Alcaraz said.

“I’m happy with my level and happy to be in the quarter-finals. I feel my game is getting better and better.”

Meanwhile, Alexander Zverev won his battle with third seed Daniil Medvedev 6-4, 5-7, 6-4. The 16th-seeded German, who missed the second half of last season with a serious ankle injury, won his eighth straight Cincinnati match, a run dating back to his 2021 title.

Elsewhere, fourth seed Stefanos Tsitsipas also lost, 3-6, 4-6, to Poland’s Hubert Hurkacz.
