Month: August 2023

MMS • RSS

Through this partnership, CloudMile’s customers will have access to MongoDB Atlas—a cloud-native developer data platform—on Google Cloud

KUALA LUMPUR, MALAYSIA, August 15, 2023: CloudMile, a leading AI and cloud technology company based in Asia that focuses on digital transformation and growth for its corporate clients, has announced a strategic partnership with MongoDB, providing CloudMile’s corporate clients with MongoDB Atlas. Through this partnership, CloudMile’s customers will have access to MongoDB Atlas—a cloud-native developer data platform—on Google Cloud to provide developers with the flexibility and scalability required to quickly and easily build enterprise-grade applications.

CloudMile’s corporate clients can now leverage MongoDB Atlas to accelerate their product development, build scalable and secure applications, and gain detailed insights into their data. MongoDB’s fully managed developer data platform works hand-in-hand with Google Cloud’s open data cloud to provide unmatched speed, scale, and security.

Strategic Partnership Empowers Customers

Businesses today face many challenges when it comes to data management, including data silos and slow query times. The partnership between CloudMile and MongoDB tackles these challenges, providing customers with a modern, intuitive, and unified developer data platform that provides detailed insights into customers, products, and operations.

Together with Google Cloud BigQuery, MongoDB Atlas allows customers to enrich operational data and enhance end-customer experiences. MongoDB Atlas handles real-time operational applications with thousands of concurrent sessions and millisecond response times. Its curated subsets of data are then replicated to BigQuery for aggregation, complex analytics, and the application of machine learning.

Accelerating Mobile Gaming Development Efficiency

The current mobile gaming market in Asia is experiencing a 3–5% compound annual growth rate (CAGR). To better leverage the power of data and address this growth opportunity, CloudMile and MongoDB’s partnership has already helped a leading gaming company in Taiwan greatly reduce maintenance and operation costs, allowing them to focus on product development. Alongside Google Cloud services delivered by CloudMile, MongoDB Atlas is easy to deploy, fulfilling the needs of scalability with performance and high availability. MongoDB Atlas streamlines data synchronisation via ACID Transaction, saving game developers time by using a unified platform through a single API, while also offering comprehensive security and data protection features to ensure business continuity and resilience during data storage and transmission. The flexible document data model, transaction function, and Google Cloud’s built-in security provide gamers with a controlled environment and allow them to enjoy games without downtime.

Fulfilling Digital Transformation With Optimised Solutions

“Through CloudMile, enterprises in Malaysia can seamlessly deploy partner products alongside our own. For example, they can release a new deployment of MongoDB Atlas alongside BigQuery to share transactional data and run complex, real-time analytics over petabyte-scale datasets. CloudMile, MongoDB, and Google Cloud are fundamentally committed to breaking down data silos and ensuring that our customers can securely harness the power of data from any source, in any location, and on any platform. We look forward to deepening our collaboration to help businesses of all sizes succeed at every step of their data-driven digital transformation journeys,” said Patrick Wee, Country Manager, Malaysia, Google Cloud.

Commenting on the announcement, Lester Leong, Country Manager of CloudMile Malaysia, said, “We are excited to announce this strategic partnership with MongoDB. MongoDB is well known for its horizontal scaling and load-balancing capabilities, which have given application developers exceptional flexibility and scalability. The collaboration will drive further growth and create new opportunities.”

“The partnership between CloudMile and MongoDB brings together the power of AI, our developer data platform, and cloud technology to empower customers to gain detailed insights into their data, accelerate their product development, and enhance end-customer experiences,” said Simon Eid, Senior Vice President of APAC, MongoDB. “We are committed to driving growth and success together in the region.”

The strategic partnership is expected to create significant synergies and opportunities for both companies, driving further growth and success in solving industry challenges, building credibility, and establishing trust amongst their joint customers.

Do Follow: CIO News LinkedIn Account | CIO News Facebook | CIO News Youtube | CIO News Twitter

About us:

CIO News, a proprietary of Mercadeo, produces award-winning content and resources for IT leaders across any industry through print articles and recorded video interviews on topics in the technology sector such as Digital Transformation, Artificial Intelligence (AI), Machine Learning (ML), Cloud, Robotics, Cyber-security, Data, Analytics, SOC, SASE, among other technology topics

MMS • Almir Vuk

The latest release of .NET 8 Preview 7 brings significant additions and changes to ASP.NET Core. The most notable enhancements for this release of ASP.NET Core are related to the Blazor alongside the updates regarding the Native AOT, Identity, new SPA Visual Studio templates, Antiforgery middleware additions and many more.

Regarding the Blazor, the endpoints are now required to use antiforgery protection by default. As reported, from this version, the EditForm component will now add the antiforgery token automatically. Also, the developers can disable this but it is not recommended. In terms of form creation, developers can now build standard HTML forms in server-side rendering mode without relying on EditForm.

Blazor introduces a range of other notable enhancements, including the Auto interactive render mode that combines Server and WebAssembly render modes seamlessly. This mode optimizes rendering by utilizing WebAssembly if the .NET runtime loads swiftly, within 100ms. Additionally, registering root-level cascading values extends their availability throughout the component hierarchy.

Moreover, interactive components can now be added, removed, and parameterized with enhanced navigation, enhanced form handling, and a streaming rendering. Also, the Virtualize component introduces the EmptyContent property, offering a concise content definition for scenarios where items are absent or the ItemsProvider yields zero TotalItemCount.

Regarding the APIs, a new middleware has been added for validating antiforgery tokens, a key defence against cross-site request forgery attacks. This middleware activates when antiforgery services are registered via the AddAntiforgery method. Placing the antiforgery middleware after authentication and authorization middleware is vital to prevent unauthorized form data access. Also, Minimal API handling form data will now require antiforgery token validation.

Concerning Native AOT, from this Preview 7 developers can benefit from the updated Request Delegate Generator, to support new C# 12 interceptors, and compiler features to support the interception of minimal API calls of Map action methods. The original blog post shares the results of changes in the startup time, so it is highly recommended to explore it. Also, there is a new WebApplication.CreateEmptyBuilder factory method which will result in smaller apps that only contain necessary features.

As reported in the original release blog post:

Publishing this code with native AOT using .NET 8 Preview 7 on a linux-x64 machine results in a self-contained, native executable of about 8.5 MB.

This Preview also introduces a notable breaking change affecting web projects compiled with trimming enabled using PublishTrimmed=true. Previously, projects defaulted to partial TrimMode. However, starting from this release, all projects targeting .NET 8 or above will now use TrimMode=full by default.

Furthermore, new Visual Studio templates have been introduced. These templates contain Angular, React, and Vue, utilizing the new JavaScript project system (.esproj) while seamlessly integrating with ASP.NET Core backend projects.

Finally, the comments section of the original release blog post has been active with responses regarding the framework changes and enhancements. The blog post has sparked considerable engagement, with users posing numerous questions and engaging in discussions with the development team. For an insight into the various viewpoints, it is strongly advised that users look into the comment section and participate in the ongoing discussions.

MMS • RSS

Prisma is a popular data-mapping layer (ORM) for server-side JavaScript and TypeScript. Its core purpose is to simplify and automate how data moves between storage and application code. Prisma supports a wide range of datastores and provides a powerful yet flexible abstraction layer for data persistence. Get a feel for Prisma and some of its core features with this code-first tour.

An ORM layer for JavaScript

Object-relational mapping (ORM) was pioneered by the Hibernate framework in Java. The original goal of object-relational mapping was to overcome the so-called impedance mismatch between Java classes and RDBMS tables. From that idea grew the more broadly ambitious notion of a general-purpose persistence layer for applications. Prisma is a modern JavaScript-based evolution of the Java ORM layer.

Prisma supports a range of SQL databases and has expanded to include the NoSQL datastore, MongoDB. Regardless of the type of datastore, the overarching goal remains: to give applications a standardized framework for handling data persistence.

The domain model

We’ll use a simple domain model to look at several kinds of relationships in a data model: many-to-one, one-to-many, and many-to-many. (We’ll skip one-to-one, which is very similar to many-to-one.)

Prisma uses a model definition (a schema) that acts as the hinge between the application and the datastore. One approach when building an application, which we’ll take here, is to start with this definition and then build the code from it. Prisma automatically applies the schema to the datastore.

The Prisma model definition format is not hard to understand, and you can use a graphical tool, PrismaBuilder, to make one. Our model will support a collaborative idea-development application, so we’ll have User, Idea, and Tag models. A User can have many Ideas (one-to-many) and an Idea has one User, the owner (many-to-one). Ideas and Tags form a many-to-many relationship. Listing 1 shows the model definition.

Listing 1. Model definition in Prisma

datasource db {

provider = "sqlite"

url = "file:./dev.db"

}

generator client {

provider = "prisma-client-js"

}

model User {

id Int @id @default(autoincrement())

name String

email String @unique

ideas Idea[]

}

model Idea {

id Int @id @default(autoincrement())

name String

description String

owner User @relation(fields: [ownerId], references: [id])

ownerId Int

tags Tag[]

}

model Tag {

id Int @id @default(autoincrement())

name String @unique

ideas Idea[]

}

Listing 1 includes a datasource definition (a simple SQLite database that Prisma includes for development purposes) and a client definition with “generator client” set to prisma-client-js. The latter means Prisma will produce a JavaScript client the application can use for interacting with the mapping created by the definition.

As for the model definition, notice that each model has an id field, and we are using the Prisma @default(autoincrement()) annotation to get an automatically incremented integer ID.

To create the relationship from User to Idea, we reference the Idea type with array brackets: Idea[]. This says: give me a collection of Ideas for the User. On the other side of the relationship, you give Idea a single User with: owner User @relation(fields: [ownerId], references: [id]).

Besides the relationships and the key ID fields, the field definitions are straightforward; String for Strings, and so on.

Create the project

We’ll use a simple project to work with Prisma’s capabilities. The first step is to create a new Node.js project and add dependencies to it. After that, we can add the definition from Listing 1 and use it to handle data persistence with Prisma’s built-in SQLite database.

To start our application, we’ll create a new directory, init an npm project, and install the dependencies, as shown in Listing 2.

Listing 2. Create the application

mkdir iw-prisma

cd iw-prisma

npm init -y

npm install express @prisma/client body-parser

mkdir prisma

touch prisma/schema.prisma

Now, create a file at prisma/schema.prisma and add the definition from Listing 1. Next, tell Prisma to make SQLite ready with a schema, as shown in Listing 3.

Listing 3. Set up the database

npx prisma migrate dev --name init

npx prisma migrate deploy

Listing 3 tells Prisma to “migrate” the database, which means applying schema changes from the Prisma definition to the database itself. The dev flag tells Prisma to use the development profile, while --name gives an arbitrary name for the change. The deploy flag tells prisma to apply the changes.

Use the data

Now, let’s allow for creating users with a RESTful endpoint in Express.js. You can see the code for our server in Listing 4, which goes inside the iniw-prisma/server.js file. Listing 4 is vanilla Express code, but we can do a lot of work against the database with minimal effort thanks to Prisma.

Listing 4. Express code

const express = require('express');

const bodyParser = require('body-parser');

const { PrismaClient } = require('@prisma/client');

const prisma = new PrismaClient();

const app = express();

app.use(bodyParser.json());

const port = 3000;

app.listen(port, () => {

console.log(`Server is listening on port ${port}`);

});

// Fetch all users

app.get('/users', async (req, res) => {

const users = await prisma.user.findMany();

res.json(users);

});

// Create a new user

app.post('/users', async (req, res) => {

const { name, email } = req.body;

const newUser = await prisma.user.create({ data: { name, email } });

res.status(201).json(newUser);

});

Currently, there are just two endpoints, /users GET for getting a list of all the users, and /user POST for adding them. You can see how easily we can use the Prisma client to handle these use cases, by calling prisma.user.findMany() and prisma.user.create(), respectively.

The findMany() method without any arguments will return all the rows in the database. The create() method accepts an object with a data field holding the values for the new row (in this case, the name and email—remember that Prisma will auto-create a unique ID for us).

Now we can run the server with: node server.js.

Testing with CURL

Let’s test out our endpoints with CURL, as shown in Listing 5.

Listing 5. Try out the endpoints with CURL

$ curl http://localhost:3000/users

[]

$ curl -X POST -H "Content-Type: application/json" -d '{"name":"George Harrison","email":"george.harrison@example.com"}' http://localhost:3000/users

{"id":2,"name":"John Doe","email":"john.doe@example.com"}{"id":3,"name":"John Lennon","email":"john.lennon@example.com"}{"id":4,"name":"George Harrison","email":"george.harrison@example.com"}

$ curl http://localhost:3000/users

[{"id":2,"name":"John Doe","email":"john.doe@example.com"},{"id":3,"name":"John Lennon","email":"john.lennon@example.com"},{"id":4,"name":"George Harrison","email":"george.harrison@example.com"}]

Listing 5 shows us getting all users and finding an empty set, followed by adding users, then getting the populated set.

Next, let’s add an endpoint that lets us create ideas and use them in relation to users, as in Listing 6.

Listing 6. User ideas POST endpoint

app.post('/users/:userId/ideas', async (req, res) => {

const { userId } = req.params;

const { name, description } = req.body;

try {

const user = await prisma.user.findUnique({ where: { id: parseInt(userId) } });

if (!user) {

return res.status(404).json({ error: 'User not found' });

}

const idea = await prisma.idea.create({

data: {

name,

description,

owner: { connect: { id: user.id } },

},

});

res.json(idea);

} catch (error) {

console.error('Error adding idea:', error);

res.status(500).json({ error: 'An error occurred while adding the idea' });

}

});

app.get('/userideas/:id', async (req, res) => {

const { id } = req.params;

const user = await prisma.user.findUnique({

where: { id: parseInt(id) },

include: {

ideas: true,

},

});

if (!user) {

return res.status(404).json({ message: 'User not found' });

}

res.json(user);

});

In Listing 6, we have two endpoints. The first allows for adding an idea using a POST at /users/:userId/ideas. The first thing it needs to do is recover the user by ID, using prisma.user.findUnique(). This method is used for finding a single entity in the database, based on the passed-in criteria. In our case, we want the user with the ID from the request, so we use: { where: { id: parseInt(userId) } }.

Once we have the user, we use prisma.idea.create to create a new idea. This works just like when we created the user, but we now have a relationship field. Prisma lets us create the association between the new idea and user with: owner: { connect: { id: user.id } }.

The second endpoint is a GET at /userideas/:id. The purpose of this endpoint is to take the user ID and return the user including their ideas. This gives us a look at the where clause in use with the findUnique call, as well as the include modifier. The modifier is used here to tell Prisma to include the associated ideas. Without this, the ideas would not be included, because Prisma by default uses a lazy loading fetch strategy for associations.

To test the new endpoints, we can use the CURL commands shown in Listing 7.

Listing 7. CURL for testing endpoints

$ curl -X POST -H "Content-Type: application/json" -d '{"name":"New Idea", "description":"Idea description"}' http://localhost:3000/users/3/ideas

$ curl http://localhost:3000/userideas/3

{"id":3,"name":"John Lennon","email":"john.lennon@example.com","ideas":[{"id":1,"name":"New Idea","description":"Idea description","ownerId":3},{"id":2,"name":"New Idea","description":"Idea description","ownerId":3}]}

We are able to add ideas and recover users with them.

Many-to-many with tags

Now let’s add endpoints for handling tags within the many-to-many relationship. In Listing 8, we handle tag creation and associate a tag and an idea.

Listing 8. Adding and displaying tags

// create a tag

app.post('/tags', async (req, res) => {

const { name } = req.body;

try {

const tag = await prisma.tag.create({

data: {

name,

},

});

res.json(tag);

} catch (error) {

console.error('Error adding tag:', error);

res.status(500).json({ error: 'An error occurred while adding the tag' });

}

});

// Associate a tag with an idea

app.post('/ideas/:ideaId/tags/:tagId', async (req, res) => {

const { ideaId, tagId } = req.params;

try {

const idea = await prisma.idea.findUnique({ where: { id: parseInt(ideaId) } });

if (!idea) {

return res.status(404).json({ error: 'Idea not found' });

}

const tag = await prisma.tag.findUnique({ where: { id: parseInt(tagId) } });

if (!tag) {

return res.status(404).json({ error: 'Tag not found' });

}

const updatedIdea = await prisma.idea.update({

where: { id: parseInt(ideaId) },

data: {

tags: {

connect: { id: tag.id },

},

},

});

res.json(updatedIdea);

} catch (error) {

console.error('Error associating tag with idea:', error);

res.status(500).json({ error: 'An error occurred while associating the tag with the idea' });

}

});

We’ve added two endpoints. The POST endpoint, used for adding a tag, is familiar from the previous examples. In Listing 8, we’ve also added the POST endpoint for associating an idea with a tag.

To associate an idea and a tag, we utilize the many-to-many mapping from the model definition. We grab the Idea and Tag by ID and use the connect field to set them on one another. Now, the Idea has the Tag ID in its set of tags and vice versa. The many-to-many association allows up to two one-to-many relationships, with each entity pointing to the other. In the datastore, this requires creating a “lookup table” (or cross-reference table), but Prisma handles that for us. We only need to interact with the entities themselves.

The last step for our many-to-many feature is to allow finding Ideas by Tag and finding the Tags on an Idea. You can see this part of the model in Listing 9. (Note that I have removed some error handling for brevity.)

Page 2

Listing 9. Finding tags by idea and ideas by tags

// Display ideas with a given tag

app.get('/ideas/tag/:tagId', async (req, res) => {

const { tagId } = req.params;

try {

const tag = await prisma.tag.findUnique({

where: {

id: parseInt(tagId)

}

});

const ideas = await prisma.idea.findMany({

where: {

tags: {

some: {

id: tag.id

}

}

}

});

res.json(ideas);

} catch (error) {

console.error('Error retrieving ideas with tag:', error);

res.status(500).json({

error: 'An error occurred while retrieving the ideas with the tag'

});

}

});

// tags on an idea:

app.get('/ideatags/:ideaId', async (req, res) => {

const { ideaId } = req.params;

try {

const idea = await prisma.idea.findUnique({

where: {

id: parseInt(ideaId)

}

});

const tags = await prisma.tag.findMany({

where: {

ideas: {

some: {

id: idea.id

}

}

}

});

res.json(tags);

} catch (error) {

console.error('Error retrieving tags for idea:', error);

res.status(500).json({

error: 'An error occurred while retrieving the tags for the idea'

});

}

});

Here, we have two endpoints: /ideas/tag/:tagId and /ideatags/:ideaId. They work very similarly to find ideas for a given tag ID and tags on a given idea ID. Essentially, the querying works just like it would in a one-to-many relationship, and Prisma deals with walking the lookup table. For example, for finding tags on an idea, we use the tag.findMany method with a where clause looking for ideas with the relevant ID, as shown in Listing 10.

Listing 10. Testing the tag-idea many-to-many relationship

$ curl -X POST -H "Content-Type: application/json" -d '{"name":"Funny Stuff"}' http://localhost:3000/tags

$ curl -X POST http://localhost:3000/ideas/1/tags/2

{"idea":{"id":1,"name":"New Idea","description":"Idea description","ownerId":3},"tag":{"id":2,"name":"Funny Stuff"}}

$ curl localhost:3000/ideas/tag/2

[{"id":1,"name":"New Idea","description":"Idea description","ownerId":3}]

$ curl localhost:3000/ideatags/1

[{"id":1,"name":"New Tag"},{"id":2,"name":"Funny Stuff"}]

Conclusion

Although we have hit on some CRUD and relationship basics here, Prisma is capable of much more. It gives you cascading operations like cascading delete, fetching strategies that allow you to fine-tune how objects are returned from the database, transactions, a query and filter API, and more. Prisma also allows you to migrate your database schema in accord with the model. Moreover, it keeps your application database-agnostic by abstracting all database client work in the framework.

Prisma puts a lot of convenience and power at your fingertips for the cost of defining and maintaining the model definition. It’s easy to see why this ORM tool for JavaScript is a popular choice for developers.

Next read this:

Article: Becoming More Assertive: How to Express Yourself, Give Feedback, and Set Boundaries

MMS • Marta Firlej

Key Takeaways

- Assertiveness is a communication style. It is being able to express your feelings, thoughts, beliefs, and opinions in an open manner that doesn’t violate the rights of others.

- The “Bill of Assertive Rights” can guide you in making decisions and show you that it is okay to change your mind, make mistakes, or simply not know the answer.

- No one is perfect in assertive communication, but as with every skill, we can improve by practicing it.

- To communicate more assertively, you need to know communication techniques and understand how and when to use them. For example, signal flexibility by providing options that would work for you or get in touch with your personal needs and values and communicate them.

- Understanding the side effects of being unassertive will help you motivate yourself to work on the skills of assertive people. Asking for help or expressing feelings may help you in your everyday professional communication.

Do you know that feeling when you are brave enough to say “NO” and then you don’t feel comfortable about it? I know that feeling very well.

During my professional career, there have been multiple times that people around me have struggled with setting boundaries. After those experiences, I have decided to learn more about communication skills, and I want to share with you what I have mastered.

In this article, we will build a proper understanding of what an assertiveness skill is, and learn how to identify communication skills we need to work on to be more assertive. You will get information about the characteristics and skills of assertive people. In addition, I will share my personal experience when I struggle with my assertiveness skill and when I practice it. Ultimately, I hope you will be equipped to make some small changes within everyday communication.

Why have I decided to learn more about assertiveness?

In a previous company I worked for, I noticed that many of my coworkers struggled to communicate their boundaries; they were not brave enough, or they lacked the knowledge of how to be assertive. I was even more surprised when I was kind but assertive with my superiors; they simply did not know how to react.

Of course, it was not happening with everyone, but it was still noticeable. My assertive communication and reaction to it in my workplace was a new situation for many of us. I tried to mentor many of my direct colleagues about assertiveness skills, and after I decided to leave that organization, I conducted a session about assertiveness. It was very impactful for some attendees and for me as well.

After that session, I realized how essential and life-changing such sharing can be. And that maybe I should share my skills and knowledge more often.

Defining assertiveness

I have been searching for a good definition, and it was not an easy task! Every source of knowledge seems to create its own definition. If I were to pick one, I would choose the definition I found on the Center of Clinical Interventions site, which is part of the Government of Western Australia:

“Assertiveness is a communication style. It is being able to express your feelings, thoughts, beliefs, and opinions in an open manner that doesn’t violate the rights of others.”

It resonates with my experience that assertiveness is not just one but multiple sets of skills. As an assertive person, you must be able to communicate your beliefs and feelings but do so without violating others. Numerous times during my career as a Test Lead or Quality Manager, I kept receiving feedback that I was either too emotional or too “aggressive” in expressing myself.

While working on that feedback with its authors, we concluded that people I worked with were simply not used to communicating assertively in a straightforward way.

Skills that assertive people have

Have you ever thought about how many skills you should have to be assertive?

Here you can find a list of skills that assertive people have based on materials from the Center of Clinical Interventions under the Government of Western Australia:

- Saying “No”

- Giving compliments

- Expressing your opinion

- Asking for help

- Expressing anger

- Expressing affection

- Stating your right and needs

- Giving criticism

- Being criticized

- Starting and keeping a conversation going

Looking at the above list, I was surprised at the length and the versatility of skills needed to gain and practice assertiveness. I was lucky that in my childhood, my parents, teachers, and colleagues taught me how to say “no” and express my opinions or thoughts without worries.

I’m actively using those skills in everyday life – for example, I refuse to drink coffee as I am not a huge coffee fan. The reality is that I dislike the taste, and I often need to use my assertiveness arsenal when someone is offering me a coffee or coffee-themed dessert. I found it interesting how many of the skills from the list above I need to use to be assertive and avoid being a part of the “coffee cult.” My co-workers and friends ask me to taste new kinds of coffee, often arguing that “it doesn’t taste like coffee” or “maybe you will change your mind.” Thankfully since childhood, I practiced avoiding foods that I don’t like, and later I refused smoking cigarettes and drinking alcohol.

Sometimes I struggle with some parts of assertiveness skills, and sometimes I will try these coffee-themed desserts or drinks just to confirm that I do not like them. Below I will share with you some ways to communicate more assertively that I use often. I know I still need to learn a lot about how to take care of my emotions, especially when someone tries to push my boundaries and I become angry. The effects of being unassertive not only manifest situations where we agree to things we do not like, but can also cause other effects mentioned below.

The effects of people being unassertive

The clinical study I mentioned showed what skills assertive people should have and presented what adverse effects can meet you when being unassertive:

- The main effect of not being assertive is that it can lead to low self-esteem.

- If we never express ourselves openly and conceal our thoughts and feelings, that can make us feel tense, stressed, anxious, or resentful.

- It can also lead to unhealthy and uncomfortable relationships.

- We can feel as if the people closest to us would not really know us.

Do you remember situations at work when someone asked you to take on extra responsibilities or support your team for longer hours, but you already had plans, or were simply tired? If you didn’t speak up before becoming more educated in assertive communication, you probably felt uncomfortable, even more tired, and angry.

As an example of lacking assertiveness, I can recall a situation when I asked off from work to go to my grand-grand-mother’s funeral. Despite my kind request, my boss asked me to stay and support the team. I stayed at work, and for years I felt bad because I made a wrong decision regarding an important situation in my personal life, and I didn’t communicate it properly to my boss and the team I worked with.

What’s more, I discussed it later with my boss, and we both agreed it was the wrong decision. I didn’t communicate that I felt uncomfortable and I missed an important moment in my life. From that day on, I created a list of my life values, and till today, they are navigating me in communicating my decisions – that helps me to be assertive.

I faced another situation during the pandemic. We had all been working too much, feeling stressed and exhausted. Did we communicate properly? I don’t think so. Later, with huge support from our HR Business Partner, my team organized a session on how to deal with stress and recognize when we are burning out. It was an eye-opening session for many of my team members and a great start for the conversation about setting boundaries. We learned that we were stressed and we shared with each other how to recognize this feeling via online tools in a new virtual reality.

What language do we use or behavior we have when we feel stressed? The trainer shared with us some tips on reducing stress or what to do when it stays with us for too long. We could not decrease stress related to the environment or work, but we changed everyday communication and supported each other a lot more. Each of us recognized what values are the most important, and making decisions became a lot easier.

I need to mention here that when people are joining a new team, organization, or environment, there is a tendency to be less assertive; we want everyone to accept us, like us, and recognize us as professionals. It is a trap that may lead us to all the mentioned negative effects. That’s why I have a printed copy of the “Bill of Assertive Rights” next to my screen at my workplace. Let’s read below what it actually is.

The Bill of Assertive Rights

I found the “Bill of Assertive Rights” when I was reading the book When I Say No, I Feel Guilty: How to Cope – Using the Skills of Systematic Assertive Therapy by Manuel J. Smith. It is an old book, and I am surprised I found it so late in my life. I strongly recommend you read it.

I believe the “Bill of Assertive Rights” is one of the things we all should print and have in front of our eyes, especially during business meetings. As I’ve mentioned, I have it at my workplace, and I read it when I feel that something is expected from me, when someone shares their feedback with me, or when I need to change decisions I’ve made. In Polish, we say “Tylko głupi nie zmienia zdania,” which translates to “Only a fool doesn’t change his/her mind,” and sometimes it may be the proper thing to do as an assertive person!

“The Bill of Assertive Rights” by Manuel J. Smith:

- You have the right to judge your own behavior, thoughts, and emotions, and to take the responsibility for their initiation and consequences upon yourself.

- You have the right to offer no reasons or excuses for justifying your behavior.

- You have the right to judge if you are responsible for finding solutions to other people’s problems.

- You have the right to change your mind.

- You have the right to make mistakes – and be responsible for them.

- You have the right to say, “I don’t know.”

- You have the right to be independent of the goodwill of others before coping with them.

- You have the right to be illogical in making decisions.

- You have the right to say, “I don’t understand.”

- You have the right to say, “I don’t care.”

Now I want to propose one exercise for you – please remind yourself of the last situation you felt uncomfortable with your decision. Then look at the list above. Are your thoughts looking different after looking at the list? For me, often – yes.

I must mention here how shocked I was to see that the book was not translated into Polish even though it was published in 1975. In the Polish language, we have a lot of books on how to avoid manipulation, but only a few on how to communicate assertively. Hopefully, in your languages, there are more translated books like that!

We know now what assertiveness is, which skills we should have, what unassertive effects can cause, and the list of rights we have, but how should we use it in practice?

Practicing assertiveness

I believe each of us needs to find our own way to communicate more assertively with respect to others. We need to consider a variety of factors – for example, gender, cultural background, the organization we are in, and our environment.

Looking at myself compared to others within my environment, I tend to express and fight for my beliefs and opinions stronger than others – so I am currently learning to be more mindful of their opinions, thoughts, or beliefs.

It is not easy for others to express themselves and present their point of view to me or to change my mind. Despite that, I found out that I have a lot of empathy, and I often put others’ needs in front of my own, so this is another thing I have to tackle with assertiveness.

There are so many things that I need to remember when I communicate with others not to cross their or my borders. Despite being perceived as an assertive person, I still have much to learn.

Some time ago, I found a few tips on communicating more assertively. I believe the points below are valuable and useful. Let’s take a look:

- Get in touch with your own needs and values.

- Be confident if your ask is reasonable, and prepare arguments WHY you need it.

- See the other person’s point of view.

- Signal flexibility by providing options that work for you.

- Keep your delivery calm and firm.

- Make yourself the scapegoat.

- Use the broken record technique.

I would like to highlight that some tips above may not work for everyone and not on every occasion. For example – “Make yourself the scapegoat.” – will depend on the situation, your relationships, and your environment. You can’t be a scapegoat all the time, and you can’t be responsible for others’ mistakes.

Let’s get back to the topic of personal life values. Personal values-based decision-making was a key to feeling good with all things I agree to in my professional and private life.

How to do it?

You can, for example, play in your head with the scenario “What would happen if__.” For example, would you quit your current job if your family member got sick and required your full attention? What should you do?

How do I make the decisions?

During my professional career, I’ve learned not to agree on anything until I take time to think about it and prepare a list of questions to set expectations and possible timelines properly.

It was something that changed a lot in my life as I am one of those people who always tries to deliver even if I am left alone with too challenging a task. Before I learned to tackle such situations and make proper decisions, it often made me feel stressed, uncomfortable, or exhausted.

What helped me a lot was learning how to ask for help or changes within deadlines or scope. Every day, I practice preparing arguments for “why” something has to be changed and what options I see.

It saved me many times, and I strongly advise you to say NO and at least provide 2-3 options about what you can do instead. If you take your time to think things through, it’ll be easy to communicate assertively, and you will be happy with your decisions.

As you see, it is a challenging task to be assertive. It requires many skills, communication techniques, and effort, but it may benefit you in achieving your goals and preserving good relationships.

Learning more about being assertive

I’m not an expert or educated psychologist but a dedicated practitioner willing to share my experience with you. I wanted to share what helped me and the people I worked with to be happier and deliver on time.

Below you will find some materials I found helpful during my research. You will likely find a lot more after reading my article and googling a bit.

And my final recommendation to you is to look at children’s books, which may help you to understand emotions and communicate more effectively and calmly.

Other resources which I recommend:

I hope to leave you with some inspiration, an understanding of how to be more assertive, and knowledge about what to work on. Good luck, you can make it!

Homework for you – exercise your communication skills and assertiveness

Your task is to look at the communication skills listed below and analyze how comfortable you feel in communicating with people that you meet the most:

- Your partner

- Your parents

- Your child

- Your best friends

- Other friends

- Strangers

- Your boss

- Work colleague

The results will show you which communication skills you should address in your future personal development.

List of skills:

- Saying “no”

- Giving compliments

- Expressing your opinion

- Asking for help

- Expressing anger

- Expressing affection

- Stating your right and needs

- Giving criticism

- Being criticized

- Starting and keeping a conversation going

The listed skills come from a study about assertiveness made by the Centre for Clinical Interventions – Government of West Australia: What is Assertiveness?

Presentation: Speed of Apache Pinot at the Cost of Cloud Object Storage with Tiered Storage

MMS • Neha Pawar

Transcript

Neha Pawar: My name is Neha Pawar. I’m here to tell you about how we added tiered storage for Apache Pinot, enabling the speed of Apache Pinot at the cost of Cloud Object Storage. I’m going to start off by spending some time explaining why we did this. We’ll talk about the different kinds of analytics databases, the kinds of data and use cases that they can handle. We’ll dive deep into some internals of Apache Pinot. Then we will discuss why it was crucial for us to decrease the cost of Pinot while keeping the speed of Pinot. Finally, we’ll talk in depth about how we implemented this.

All Data Is Not Equal

Let’s begin by talking about time, and the value of data. Events are the most valuable when they have just happened. They tell us more about what is true in the world at the moment. The value of an event tends to decline over time, because the world changes and that one event tells us less and less about what is true as time goes on. It’s also the case that the recent real-time data tends to be queried more than the historical data. For instance, with recent data, you would build real-time analytics, anomaly detection, user facing analytics.

These are often served directly to the end users of your company, for example, profile view analytics, or article analytics, or restaurant analytics for owners, feed analytics. Now imagine, if you’re building such applications, they will typically come with a concurrency of millions of users have to serve about thousands of queries per second, and the SLAs will be stringent in just a few milliseconds. This puts pressure on those queries to be faster. It also justifies more investment in the infrastructure to support those queries.

Since recent events are more valuable, we can in effect, spend more to query them. Historical data is queried less often than real-time data. For instance, with historical data, you would typically build metrics, reporting, dashboards, use it for ad hoc analysis. You may also use it for user facing analytics. In general, your query volume will be much lower and less concurrent than the recent data. What we know for sure about historical data that it is large, and it keeps getting bigger all the time. None of this means that latency somehow becomes unimportant. We will always want our database to be fast. It’s just that with historical data, the cost becomes the dominating factor. To summarize, recent data is more valuable and extremely latency sensitive. Historical data is large, and tends to be cost sensitive.

What Analytics Infra Should I Choose?

Given you have two such kinds of data that you have to handle, and manage the use cases that come with it, if you are tasked with choosing an analytics infrastructure for your organizations, the considerations on top of your mind are going to be cost, performance, and flexibility. You need systems which will be able to service the different kinds of workloads, while maintaining query and freshness SLAs needed by these use cases. The other aspect is cost. You’ll need a solution where the cost of service is reasonable and the business value extracted justifies this cost. Lastly, you want a solution that is easy to operate, to configure, and also one that will fulfill a lot of your requirements together.

Now let’s apply this to two categories of analytics databases that exist today. Firstly, the real-time analytics or the OLAP databases. For serving real-time data and user facing analytics, you will typically pick a system like Apache Pinot. There’s also some other open source as well as proprietary systems, which can help serve real-time data, such as ClickHouse and Druid. Let’s dig a little deeper into what Apache Pinot is. Apache Pinot is a distributed OLAP datastore that can provide ultra-low latency even at extremely high throughput.

It can ingest data from batch sources such as Hadoop, S3, Azure. It can also ingest directly from streaming sources such as Kafka, Kinesis, and so on. Most importantly, it can make this data available for querying in real-time. At the heart of the system is a columnar store, along with a variety of smart indexing and pre-aggregation techniques for low latency. These optimizations make Pinot a great fit for user facing real-time analytics, and even for applications like anomaly detection, dashboarding, and ad hoc data exploration.

Pinot was originally built at LinkedIn, and it powers a wide variety of applications there. If you’ve ever been on linkedin.com website, there’s a high chance you’ve already interacted with Pinot. Pinot powers LinkedIn’s iconic, who viewed my profile application, and many other such applications, such as feed analytics, employee analytics, talent insights, and so on. Across all of LinkedIn, there’s over 80 user facing products backed by Pinot and they’re serving queries at 250,000 queries per second, while maintaining strict milliseconds and sub-seconds latency SLAs.

Another great example is Uber Eats Restaurant Manager. This is an application created by Uber to provide restaurant owners with their orders data. On this dashboard, you can see sales metrics, missed orders, inaccurate orders in a real-time fashion, along with other things such as top selling menu items, menu item feedback, and so on. As you can imagine, to load this dashboard, we need to execute multiple complex OLAP queries, all executing concurrently. Multiply this with all the restaurant owners across the globe. This leads to several thousands of queries per second for the underlying database.

Another great example of the adoption of Pinot for user facing real-time analytics is at Stripe. There, Pinot is ingesting hundreds of megabytes per second from Kafka, and petabytes of data from S3, and solving queries at 200k queries per second, while maintaining sub-second p99 latency. It’s being used to service a variety of use cases, some of them being for financial analysts, we have ledger analytics. Then there’s user facing dashboards built for merchants. There’s also internal dashboards for engineers and data scientists.

Apache Pinot Community

The Apache open-source community is very active. We have over 3000 members now, almost 3500. We’ve seen adoption from a wide variety of companies in different sectors such as retail, finance, social media, advertising, logistics, and they’re all together pushing the boundaries of Pinot in speed, and scale, and features. These are the numbers from one of the largest Pinot clusters today, where we have a million plus events per second, serving queries at 250k queries per second, while maintaining strict milliseconds query latency.

Apache Pinot Architecture

To set some more context for the rest of the talk, let’s take a brief look at Pinot’s high-level architecture. The first component is the Pinot servers. This is the component that hosts the data and serve queries of the data that they host. Data in Pinot is stored in the form of segments. Segment is a portion of the data which is packed with metadata and dictionaries, indexes in a columnar fashion. Then we have the brokers.

Brokers are the component that gets queries from the clients. They scatter them to the servers. The servers execute these queries for the portion of data that they host. They send the results back to the brokers. Then the brokers do a final merge and return the results back to the client. Finally, we have the controllers that control all the interactions and state of the cluster with the help of Zookeeper as a persistent metadata store, and Helix for state management.

Why is it that Pinot is able to support such real-time low latency milliseconds level queries? One of the main reasons is because they have tightly coupled storage and compute architecture. The compute nodes used typically have a disk or SSD attached to store the data. The disk and SSD could be on local storage, or it could be remote attached like an EBS volume. The reason that they are so fast is because for both of these, the access method is POSIX APIs.

The data is right there, so you can use techniques like m-mapping. As a result, accessing this data is really fast. It can be microseconds if you’re using instant storage and milliseconds if you’re using a remote attached, say, an EBS volume. One thing to note here, though, is that the storage that we attach in such a model tends to be only available to the single instance to which it is attached. Then, let’s assume that this storage has a cost factor of a dollar. What’s the problem, then?

Let’s see what happens when the data volume starts increasing by a lot. Say you started with just one compute node, which has 2 terabytes of storage. Assume that the monthly cost is $200 for compute, $200 for storage, so $400 in total. Let’s say that your data volume grows 5x. To accommodate that, you can’t just add only storage, there are limits on how much storage a single instance can be given. Plus, if you’re using instant storage, it often just comes pre-configured, and you don’t have much control on scaling that storage up or down for that instance. As a result, you have to provision the compute along with it. Cost will be $1,000 for storage and $1000 for compute.

If your data grows 100x, again, that’s an increase in both storage and compute. More often than not, you won’t need all the compute that you’re forcibly adding just to support the storage, as the increasing data volume doesn’t necessarily translate to a proportional increase in query workload. You will end up paying for all this extra compute which could remain underutilized. Plus, this type of storage tends to be very expensive compared to some other storage options available, such as cloud object stores. That’s because the storage comes with a very high-performance characteristic.

To summarize, in tightly coupled systems, you will have amazing latencies, but as your data volume grows, you will end up with a really high cost to serve. We have lost out on the cost aspect of our triangle of considerations.

Data Warehouses, Data Lakes, Lake Houses

Let’s look at modern data warehouses, Query Federation technologies like Spark, Presto, and Trino. These saw the problem of combining storage and compute, so they went with a decoupled architecture, wherein they put storage into a cloud object store such as Amazon S3. This is basically the cheapest way you will ever store data. This is going to be as much as one-fifth of the cost of disk or SSD storage.

On the flip side, what were POSIX file system API calls which completed in microseconds, now became network calls, which can take thousands or maybe 10,000 times longer to complete. Naturally, we cannot use this for real-time data. We cannot use this to serve use cases like real-time analytics and user facing analytics. With decoupled systems, we traded off a lot of latency to save on cost, and now we are looking not so good on the performance aspect of our triangle. We have real-time systems that are fast and expensive, and then batch systems that are slow and cheap. What we ideally want is one system that can do both, but without infrastructure that actually supports this, data teams end up adding both systems into their data ecosystem.

They will keep the recent data in the real-time system and set an aggressive retention period so that the costs stay manageable. As the data times out of the real-time database, they’ll migrate it to a storage decoupled system to manage the historical archive. With this, we’re doing everything twice. We’re maintaining two systems, often duplicating data processing logic. With that, we’ve lost on the flexibility aspect of our triangle.

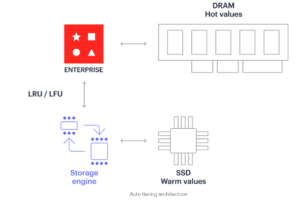

Could we somehow have a true best of both worlds, where we’ll get the speed of a tightly coupled real-time analytics system. We’ll be able to use cost effective storage like a traditionally decoupled analytic system. At the same time, have flexibility and simplicity of being able to use just one system and configure it in many ways. With this motivation in mind, we at StarTree set out to build tiered storage for Apache Beam. With tiered storage, your Pinot cluster is now not limited to just use disk or SSD storage. We are no longer strictly tightly coupled.

You can have multiple tiers of storage with support for using a cloud object storage such as S3 as one of the storage tiers. You can configure exactly which portion of your data you want to keep locally, and which is offloaded to the cloud tier storage. One popular way to split data across local versus cloud is by data age. You could configure in your table, something like, I want data less than 30 days to be on disk, and the rest of it, I want it to go on to S3. Users can then query this entire table across the local and remote data like any other Pinot dataset. With this decoupling, you can now store as much data as you want in Pinot, without worrying about the cost. This is super flexible and configurable.

The threshold is dynamic, and can be changed at any point in time, and Pinot will automatically reflect the changes. You can still operate Pinot in fully tightly coupled mode, if you want, or in completely decoupled mode. Or go for a hybrid approach, where some nodes are still dedicated for local data, some nodes for remote data, and so on. To summarize, we saw that with tiered storage in Pinot, we have the flexibility of using a single system for your real-time data, as well as historical data without worrying about the cost spiraling out of control.

Keep the Speed of Pinot

We didn’t talk much about the third aspect yet, which is performance. Now that we’re using cloud object storage, will the query latencies take a hit and enter the range of other decoupled systems? In the next few sections, we’re going to go over in great detail how we approached the performance aspect for queries accessing data on the cloud storage. Until tiered storage, Pinot has been assuming that segments stay on the local disk. It memory mapped the segments to access the data quickly. To make things work with remote segments on S3, we extended the query engine to make it agnostic to the segment location.

Under the hood, we plugged in our own buffer implementation, so that during query execution, we can read data from remote store instead of local as needed. Making the queries work is just part of the story. We want to get the best of two worlds using Pinot. From the table, you can see that the latency to access segments on cloud object storage is a lot higher, so hence, we began our pursuit to ensure we can keep the performance of Pinot in an acceptable range, so that people can keep using Pinot for their real-time user facing analytics use cases that they have been used to.

We began thinking about a few questions. Firstly, what is the data that should be read? We certainly don’t need to read all of the segments that are available for any query. We may not even need to read all of the data inside a given segment. What exactly should we be reading? Second question was, when and how to read the data during the query execution? Should we wait until the query has been executed and we’re actually processing a segment, or should we do some caching? Should we do some prefetching? What smartness can we apply there? In the following slides, I’ll try to answer these questions by explaining some of the design choices we made along the way.

Lazy Loading

The first idea that we explored was lazy loading. This is a popular technique used by some other systems to solve tiered storage. In lazy loading, all of the data segments would be on the remote store to begin with, and each server will have to have some attached storage. When the first query comes in, it will check if the local instance storage has the segments that it needs. If it does not find the segments on there, those will be downloaded from the remote store during the query execution. Your first query will be slow, of course, because it has to download a lot of segments.

The hope is that the next query will need the same segments or most of the segments that you already have, and hence reuse what’s already downloaded, making the second query execute very fast. Here, for what to fetch, we have done the entire segment. For when to fetch, we have done during the query execution. In typical OLAP workloads, your data will rarely ever be reusable across queries.

OLAP workloads come with arbitrary slice and dice point lookups across multiple time ranges and multiple user attributes. Which means that more often than not, you won’t be able to reuse the downloaded segment for the next query, which means we have to remove them to make space for the segments needed by the new query, because instance storage is going to be limited. This will cause a lot of churn and downloads. Plus, in this approach, you are fetching the whole segment.

Most of the times, your query will not need all of the columns in the segment, so you will end up fetching a lot of excessive data, which is going to be wasteful. Also, using lazy loading, the p99 or p99.9 of the query latency would be very bad, since there will always be some query that needs to download the remote segments. Because of this, lazy loading method was considered as a strict no-go for OLAP bias where consistent low latency is important. Instead of using lazy loading, or similar ideas, like caching segments on local disks, we started to think about how to solve the worst case. That is when the query has to read data from remote segments. Our hope was that by solving this, we can potentially guarantee consistent and predictable, low latency for all queries.

Pinot Segment Format

Then, to answer the question of what should we fetch, given that we know we don’t want to fetch the whole segment? We decided to take a deeper look at the Pinot segment format, to see if we could use the columnar nature of this database to our advantage. Here’s an example of a Pinot segment file. Let’s say we have columns like browser, region, country, and then some metric columns like impression, cost, and then our timestamp as well.

In Pinot, the segments are packed in a columnar fashion. One after the other, you’re going to see all these columns lined up in this segment file called columns.psf. For each column as well, you will see specific, relevant data buffers. For example, you could have forward indexes, you could have dictionaries, and then some specialized indexes like inverted index, range index, and so on.

This segment format allowed us to be a lot more selective and specific when deciding what we wanted to read from the Pinot segment. We decided we would do a selective columnar fetch, so bringing back this diagram where we have a server and we have some segments in a cloud object store. If you get a query like select sum of impressions, and a filter on the region column, we are only interested in the region and impressions. That’s all we’ll fetch.

Further, we also know from the query plan, that region is only needed to evaluate a filter, so we probably just need a dictionary and inverted index for that. Once we have the matching rows or impressions, we only need the dictionary and forward index. All other columns can be skipped. We used a range GET API, which is an API provided by S3, to just pull out these portions of the segment that we need: the specific index buffers, region dictionary, region inverted index, impressions for word index, impressions dictionary.

This worked pretty well for us. We were happy at that point with, this is the what to read part. Now that we know what data to read, next, we began thinking about when to read the data. We already saw earlier, that when a Pinot broker gets a query, it scatters the request to the servers, and then each server executes the query. Now in this figure, we are going to see what happens within a Pinot server when it gets a query. First, the server makes a segment execution plan as part of the planning phase. This is where it decides, which are the segments that it needs to process.

Then those segments are processed by multiple threads in parallel. One of the ideas that we explored was to fetch the data from S3, just as we’re about to execute this segment. In each of these segment executions, just before we would fetch the data from S3, and only then proceed to executing the query on that segment.

We quickly realized that this is not a great strategy. To demonstrate that, here’s a quick example. Let’s say you have 40 segments, and parallelism at our disposal on this particular server is 8. That means we would be processing these 40 segments in batches of 8, and that would mean that we are going to do 5 rounds to process all of them. Let’s assume that the latency to download data from S3 is 200 milliseconds.

For each batch, we are going to need 200 milliseconds, because as soon as the segment batch begins to get processed, we will first make a round trip to S3 to get that data from that segment. This is quickly going to add up. For each batch, we will need 200 milliseconds, so your total query time is going to be 1000 milliseconds overhead right there. One thing that we observed was that if you check the CPU utilization during this time, most of the time the threads are waiting for the data to become available, and the CPU cores would just stay idle.

Could we somehow decide the segments needed by the query a lot earlier, and then prefetch them so that we can pipeline the IO and data processing as much as possible? That’s exactly what we did. During the planning phase itself, we know on the server which segments are going to be needed by this query. In the planning phase itself, we began prefetching all of them. Then, just before the segment execution, the thread would wait for that data to be available, and the prefetch was already kick started. In the best-case scenario, we’re already going to have that data prefetched and ready to go.

Let’s come back to our example of the 40 segments with the 8 parallelism. In this case, instead of fetching when each batch is about to be executed, we would have launched the prefetch for all the batches in the planning phase itself. That means that maybe the first batch still has to wait 200 milliseconds for the data to be available. While that is being fetched, the data for all the batches is being fetched. For the future batches, you don’t have to spend any time waiting, and this would potentially reduce the query latency down to a single round trip of S3. That’s just 200 milliseconds overhead.

Benchmark vs. Presto

Taking these two techniques, so far, which is selective columnar fetch and prefetching during data planning with pipelining the fetch and execution, we did a simple benchmark. The benchmark was conducted in a small setup with about 200 gigabytes of data, one Pinot server. The queries were mostly aggregation queries with filters, GROUP BY and ORDER BY. We also included a baseline number with the same data on Presto to reference this with a decoupled architecture. Let’s see the numbers. Overall, Pinot with tiered storage was 5 times to 20 times faster than Presto.

What Makes Pinot Fast?

How is it that Pinot is able to achieve such blazing fast query latencies compared to other decoupled systems like Presto, even when we change the underlying design to be decoupled storage and compute? Let’s take a look at some of the core optimizations used in Pinot which help with that. Bringing back the relevant components of the architecture, we have broker, let’s say we have 3 servers, and say that each server has 4 segments. That means we have total 12 segments in this cluster.

When a query is received by the broker, it finds the servers to scatter the query to. In each server, it finds the segments it should process. Within each segment, we process certain number of documents based on the filters, then we aggregate the results on the servers. A final aggregation is done on the broker. At each of these points, we have optimizations to reduce the amount of work done. Firstly, broker side pruning is done to reduce the number of servers that we fan out to. Brokers ensure that they select the smallest subset of servers needed for a query and optimize it further using techniques like smart segment assignment strategies, partitioning, and so on.

Once the query reaches the server, more pruning is done to reduce the number of segments that it has to process on each server. Then within each segment, we scan the segment to get the documents that we need. To reduce the amount of work done and the document scan, we apply filter optimizations like indexes. Finally, we have a bunch of aggregation optimizations to calculate fast aggregations.

Let’s talk more about the pruning techniques available in Pinot, and how we’re able to use them even when segments have been moved to the tier. We have pruning based on min/max value columns, or partition-based pruning using partition info. Both of these metadata are cached locally, even if the segment is on a remote cloud object store. Using that, we are quickly able to eliminate segments where we won’t find the matching data. Another popular technique used in Pinot is Bloom filter-based pruning. These are built per segment.

We can read it to know if a value is absent from a given segment. This one is a lot more effective than the min/max based or partition-based pruning. These techniques really help us a lot because they help us really narrow down the scope of the segments that we need to process. It helps us reduce the amount of data that we are fetching and processing from S3.

Let’s take a look at the filter optimizations available in Pinot. All of these are available for use, even if the segment moves to the remote tier. We have inverted indexes where for every unique value, we keep a bitmap of matching doc IDs. We also have classic techniques like sorted index, where the column in question is sorted within the segment, so we can simply keep start and end document ID for the value. We also have range index, which helps us with range predicates such as timestamp greater than, less than, in between.

This query pattern is quite commonly found in user facing dashboards and in real-time anomaly detection. Then we have a JSON index, which is a very powerful index structure. If your data is in semi-structured form, like complex objects, nested JSON. You don’t need to invest in preprocessing your data into structured content, you can ingest it as-is, and Pinot will index every field inside your complex JSON, allowing you to query it extremely fast. Then we have the text index for free text search and RegEx b like queries, which helps with log analytics.

Then, geospatial index, so if you’re storing geo coordinates, it lets you compute geospatial queries, which can be very useful in applications like orders near you, looking for things that are 10 miles from a given location, and so on. We also have aggregation optimizations such as theta sketches, and HyperLogLog for approximate aggregations. All of these techniques we can continue using, even if the segment is moved on to a cloud object store. This is one of the major reasons why the query latency for Pinot is so much faster than traditionally decoupled storage and compute systems.

Benchmark vs. Tightly-Coupled Pinot

While these techniques did help us get better performance than traditionally decoupled systems, when compared to tightly coupled Pinot, which is our true baseline, we could see a clear slowdown. This showed that the two techniques that we implemented in our first version are not enough, they are not effective enough to hide all the data access latency from S3. To learn more from our first version, we stress tested it with a much larger workload.

We put 10 terabytes of data into a Pinot cluster with 2 servers that had a network bandwidth on each server of 1.25 gigabytes per second. Our first finding from the stress test was that the network was saturated very easily and very often. The reason is that, although we tried to reduce the amount of data to read with segment pruning and columnar fetch, we still read a lot of data unnecessarily for those columns, because we fetch the full column in the segment.

Especially, if you have high selectivity filters where you’re probably going to need just a few portions from the whole column, this entire columnar fetch is going to be wasteful. Then, this also puts pressure on the resources that we reserve for prefetching all this data. Also, once the network is saturated, all we can do from the system’s perspective, is what the instance network bandwidth will allow us. No amount of further parallelism could help us here. On the other hand, we noticed that when network was not saturated, we could have been doing a lot more work in parallel and reducing the sequential round trips we made to S3. Our two main takeaways were, reduce the amount of unnecessary data read, and increase the parallelism even more.

One of the techniques we added for reading less was an advanced configuration to define how to split the data across local versus remote. It doesn’t just have to be by data age, you can be super granular and say, I want this specific column to be local, or the specific index of this column to be local, and everything else on cloud storage. With this, you can pin lightweight data structures such as Bloom filters locally onto the instance storage, which is usually a very small fraction of the total storage, and it helps you do fast and effective pruning. Or you can also pin any other index structures that you know we’ll be using often.

Another technique we implemented is, instead of doing a whole columnar fetch all the time, we decided that we will just read relevant chunks of the data from the column. For example, bringing back our example from a few slides ago, in this query, when we are applying the region filter, after reading the inverted index, we know that we only need these few documents from the whole impressions column. Maybe we don’t need to fetch the full forward index, all we can do is just read small blocks of data during the post filter execution.

With that, our execution plan becomes, during prefetch, only fetch the region.inv_idx. Or the data that we need to read from the impressions column, we will read that on-demand, and only we will read few blocks. We tested out these optimizations on the 10-terabyte data setup. We took three queries of varying selectivity. Then we ran these queries with the old design that had only columnar fetch and prefetching and pipelining, and also with the new design where we have more granular block level fetches instead of full columnar fetch. We saw some amazing reduction in data size compared to our phase one. This data size reduction directly impacted and improved the query latency.

StarTree Index

One index that we did not talk about when we walked through the indexes in Pinot is the StarTree index. Unlike other indexes in Pinot, which are columnar, StarTree is a segment level index. It allows us to maintain pre-aggregated values for certain dimension combinations. You can choose exactly which dimensions you want to pre-aggregate, and also how many values you want to pre-aggregate at each level. For example, assume our data has columns, name, environment ID, type, and a metric column value along with a timestamp column.

We decided that we want to create a StarTree index, and only materialize the name and environment ID, and we only want to store the aggregation of sum of value. Also, that we will not keep more than 10 records unaggregated at any stage. This is how our StarTree will look. We will have a root node, which will split into all the values for the name column. In each name column, we will have again a split-up for all the values of environment ID. Finally, at every leaf node, we will store the aggregation value, sum of value.

Effectively, StarTree lets you choose between pre-aggregating everything, and doing everything on the fly. A query like this where we have a filter on name and environment ID and we are looking for sum of value, this is going to be super-fast because it’s going to be a single lookup. We didn’t even have to pre-aggregate everything for this, nor did we have to compute anything on the fly.

How did we effectively use this in tiered storage, because you can imagine that this index must be pretty big in size compared to other indexes like inverted or Bloom filter, so pinning it locally won’t work as that would be space inefficient. Prefetching it on the fly will hurt our query latency a lot. This is where all the techniques that we talked about previously came together. We pinned only the tree structure locally, which is very small, and lightweight.

As for the data at each node and aggregations, we continue to keep them in S3. When we got a query that could use this index, it quickly traversed the locally pinned tree, pointing us to the exact location of the result in the tree, which we could then get with a few very quick lookups on S3. Then we took this for a spin with some very expensive queries, and we saw a dramatic reduction in latency because the amount of data fetched had reduced.

More Parallelism – Post Filter

So far, we discussed techniques on how to reduce the amount of data read. Let’s talk about one optimization we are currently playing with to increase the parallelism. Bringing back this example where we knew from the inverted index that we’ll only need certain rows, and then we only fetch those blocks during the post filter evaluation phase. We build sparse indexes which help us get this information about which exact chunks we would need from this forward index in the planning phase itself.

Knowing this in the planning phase helps because now we’re able to identify and begin prefetching these chunks. In the planning phase, while the filter is getting evaluated, these chunks are getting prefetched in parallel so that the post filter phase is going to be much faster.

Takeaways

We saw a lot of techniques that we used in order to build tiered storage, such that we could keep the speed of Pinot, while reducing the cost. I’d like to summarize some of the key takeaways with tiered storage in Apache Pinot:

- We have unlocked flexibility and simplicity of using a single system for real-time as well as historical data.

- We’re able to use cheap cloud object storage directly in the query path instead of using disk or SSD, so we don’t have to worry about the cost spiraling out of control as our data volume increases.

- We’re able to get better performance than traditionally decoupled systems, because we’re effectively using our indexes, prefetching optimizations, pruning techniques, and so on.

- We also keep pushing the boundaries of the performance with newer optimizations to get closer to the latencies that we saw in tightly-coupled Pinot.

See more presentations with transcripts

MMS • RSS

TEMPO.CO, Jakarta – Beberapa orang, mungkin termasuk Anda kurang familiar jika mendengar nama dedaunan yang satu ini. Daun balakacida yang memiliki nama lain kirinyuh ini, sebenarnya masuk pada salah satu jenis tumbuhan pengganggu dari famili asteraceae. Umumnya balakacida banyak dijumpai di dataran dengan ketinggian sekitar 100 sampai 2800 mdpl, namun di Indonesia sendiri, balakacida justru dapat tumbuh di dataran rendah bahkan kurang dari 500 mdpl.

Apa itu Tanaman Balakacida ?

Tanaman balakacida merupakan gulma yang berbentuk serat berkayu yang dapat berkembang dengan cepat sehingga terkadang sulit dikendalikan pertumbuhannya. Ujung daun tumbuhan balakacida berbentuk runcing dimana kedua tepi daun di kanan kiri ibu tulang daun sedikit menuju keatas dan membentuk sudut lancip. Sementara itu, bentuk pangkal daun balakacida berbentuk ramping atau rata. Tepi daunnya adalah toreh dengan bentuk bergerigi dimana bentuk sinus dan angulusnya sama-sama lancip.

Batang tanaman balakacida berbentuk bulat dan tegak lurus dengan arah tumbuh batang. Pada permukaan batangnya, terdapat rambu dan permukaan berbulu seperti rambut. Tumbuhan tahunan ini, memiliki percabangan pada batang dengan menggunakan cara percabangan monopodial, dimana batang pokok tampak lebih jelas karena berukuran lebih besar dan panjang daripada cabang-cabangnya. Bentuk percabangan pada tumbuhan ini adalah tegak, dimana sudut antara batang dan cabang amat kecil, sehingga arah tubuh cabang lainnya pada pangkal sedikit serong keatas, namun selanjutnya hampir sejajar dengan batang pohonnya.

Apa Manfaat Tanaman balakacida?

Tumbuhan ini memiliki beragam manfaat bagi kesehatan manusia. Berikut beberapa manfaat dari tumbuhan balakacida bagi kesehatan.

Obat luka tanpa menimbulkan bengkak

Khasiat utama dari tanaman balakacida adalah untuk mengobati luka jaringan lunak, luka bakar, dan infeksi kulit. Daun dari tumbuhan ini dapat berkhasiat sebagai antelmintik, antimalaria, analgesik, antispasmodik, antipiretik, diuretik, antihipertensi, antibakteri, antijamur, anti inflamasi, insektisida, antioksidan, infeksi saluran kemih, serta juga mampu berperan dalam pembekuan darah. Secara tradisional, daun balakacida juga digunakan secara turun-temurun sebagai obat penyembuhan luka, obat kumur untuk sakit tenggorokan, obat batuk, obat demam, obat sakit kepala, dan anti diare.

Bahan insektisida nabati untuk mengendalikan beberapa jenis mikroorganisme