Month: August 2023

MMS • RSS

Tower Research Capital LLC TRC increased its position in shares of MongoDB, Inc. (NASDAQ:MDB – Free Report) by 116.4% in the first quarter, according to its most recent 13F filing with the Securities & Exchange Commission. The institutional investor owned 6,173 shares of the company’s stock after purchasing an additional 3,320 shares during the quarter. Tower Research Capital LLC TRC’s holdings in MongoDB were worth $1,439,000 at the end of the most recent quarter.

A number of other hedge funds have also made changes to their positions in MDB. Cherry Creek Investment Advisors Inc. increased its position in MongoDB by 1.5% in the 4th quarter. Cherry Creek Investment Advisors Inc. now owns 3,283 shares of the company’s stock valued at $646,000 after acquiring an additional 50 shares in the last quarter. CWM LLC increased its position in MongoDB by 2.4% in the 1st quarter. CWM LLC now owns 2,235 shares of the company’s stock valued at $521,000 after acquiring an additional 52 shares in the last quarter. Cetera Advisor Networks LLC boosted its stake in shares of MongoDB by 7.4% in the 2nd quarter. Cetera Advisor Networks LLC now owns 860 shares of the company’s stock valued at $223,000 after purchasing an additional 59 shares during the last quarter. First Republic Investment Management Inc. boosted its stake in shares of MongoDB by 1.0% in the 4th quarter. First Republic Investment Management Inc. now owns 6,406 shares of the company’s stock valued at $1,261,000 after purchasing an additional 61 shares during the last quarter. Finally, Janney Montgomery Scott LLC boosted its stake in shares of MongoDB by 4.5% in the 4th quarter. Janney Montgomery Scott LLC now owns 1,512 shares of the company’s stock valued at $298,000 after purchasing an additional 65 shares during the last quarter. Institutional investors and hedge funds own 89.22% of the company’s stock.

Wall Street Analysts Weigh In

MDB has been the topic of several recent research reports. Barclays raised their target price on shares of MongoDB from $374.00 to $421.00 in a research note on Monday, June 26th. 58.com reissued a “maintains” rating on shares of MongoDB in a research note on Monday, June 26th. Royal Bank of Canada lifted their price objective on shares of MongoDB from $400.00 to $445.00 in a research note on Friday, June 23rd. Tigress Financial lifted their price objective on shares of MongoDB from $365.00 to $490.00 in a research note on Wednesday, June 28th. Finally, William Blair reaffirmed an “outperform” rating on shares of MongoDB in a research note on Friday, June 2nd. One investment analyst has rated the stock with a sell rating, three have issued a hold rating and twenty have issued a buy rating to the company’s stock. According to data from MarketBeat.com, MongoDB presently has a consensus rating of “Moderate Buy” and a consensus target price of $378.09.

Insiders Place Their Bets

In other MongoDB news, Director Dwight A. Merriman sold 3,000 shares of MongoDB stock in a transaction on Thursday, June 1st. The shares were sold at an average price of $285.34, for a total transaction of $856,020.00. Following the completion of the transaction, the director now directly owns 1,219,954 shares of the company’s stock, valued at $348,101,674.36. The transaction was disclosed in a document filed with the SEC, which is available at the SEC website. Also, Director Dwight A. Merriman sold 1,000 shares of the business’s stock in a transaction dated Tuesday, July 18th. The shares were sold at an average price of $420.00, for a total transaction of $420,000.00. Following the completion of the sale, the director now directly owns 1,213,159 shares of the company’s stock, valued at approximately $509,526,780. Insiders sold a total of 102,220 shares of company stock valued at $38,763,571 over the last ninety days. 4.80% of the stock is currently owned by company insiders.

MongoDB Price Performance

Shares of MDB opened at $364.41 on Tuesday. The company has a market cap of $25.72 billion, a price-to-earnings ratio of -78.03 and a beta of 1.13. MongoDB, Inc. has a 1-year low of $135.15 and a 1-year high of $439.00. The company’s fifty day simple moving average is $394.12 and its 200 day simple moving average is $291.06. The company has a current ratio of 4.19, a quick ratio of 4.19 and a debt-to-equity ratio of 1.44.

MongoDB (NASDAQ:MDB – Get Free Report) last issued its earnings results on Thursday, June 1st. The company reported $0.56 earnings per share for the quarter, topping analysts’ consensus estimates of $0.18 by $0.38. MongoDB had a negative net margin of 23.58% and a negative return on equity of 43.25%. The business had revenue of $368.28 million for the quarter, compared to the consensus estimate of $347.77 million. During the same period in the previous year, the company earned ($1.15) earnings per share. The firm’s revenue for the quarter was up 29.0% on a year-over-year basis. On average, research analysts forecast that MongoDB, Inc. will post -2.8 earnings per share for the current fiscal year.

MongoDB Profile

MongoDB, Inc. provides a general-purpose database platform worldwide. The company offers MongoDB Atlas, a hosted multi-cloud database-as-a-service solution; MongoDB Enterprise Advanced, a commercial database server for enterprise customers to run in the cloud, on-premises, or in a hybrid environment; and Community Server, a free-to-download version of its database, which includes the functionality that developers need to get started with MongoDB.

This instant news alert was generated by narrative science technology and financial data from MarketBeat in order to provide readers with the fastest and most accurate reporting. This story was reviewed by MarketBeat’s editorial team prior to publication. Please send any questions or comments about this story to contact@marketbeat.com.

MMS • RSS

SQL often struggles when it comes to managing massive amounts of time series data, but it’s not because of the language itself. The main culprit is the architecture that SQL typically works in, namely relational databases, which quickly become inefficient because they’re not designed for analytical queries of large volumes of time series data.

Traditionally, SQL is used with relational database management systems (RDBMS) that are inherently transactional. They are structured around the concept of maintaining and updating records based on a rigid, predefined schema. For a long time, the most widespread type of database was relational, with SQL as its inseparable companion, so it’s understandable that many developers and data analysts are comfortable with it.

However, the arrival of time series data brings new challenges and complexities to the field of relational databases. Applications, sensors, and an array of devices produce a relentless stream of time series data that does not neatly fit into a fixed schema, as relational data does. This ceaseless data flow creates colossal data sets, leading to analytical workloads that demand a unique type of database. It is in these situations where developers tend to shift toward NoSQL and time series databases to handle the vast quantities of semi-structured or unstructured data generated by edge devices.

While the design of traditional SQL databases is ill-suited for handling time series, using a purpose-built time series database that accommodates SQL has offered developers a lifeline. SQL users can now utilize this familiar language to develop real-time applications, and effectively collect, store, manage, and analyze the burgeoning volumes of time series data.

However, despite this new capability, SQL users must consider certain characteristics of time series data to avoid potential issues or challenges down the road. Below I discuss four key considerations to keep in mind when diving head-first into SQL queries of time series data.

Time series data is inherently non-relational

That means it may be necessary to reorient the way we think about using time series data. For example, an individual time series data point on its own doesn’t have much use. It is the rest of the data in the series that provides the critical context for any single datum. Therefore, users look at time series observations in groups, but individual observations are all discrete. To quickly uncover insights from this data, users need to think in terms of time and be sure to define a window of time for their queries.
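A minimal sketch of what "defining a window of time" can look like in SQL. The table name, column names, and readings are hypothetical, and SQLite stands in only because it ships with Python; a time series database that supports SQL would accept a similar statement.

```python
import sqlite3

# Hypothetical sensor readings: (ISO-8601 timestamp, temperature in Celsius).
READINGS = [
    ("2023-08-01T10:00:00", 21.0),
    ("2023-08-01T10:01:00", 21.0),
    ("2023-08-01T10:02:00", 21.5),
    ("2023-08-01T10:03:00", 22.0),
    ("2023-08-01T10:04:00", 22.0),
    ("2023-08-01T10:05:00", 23.5),
]


def average_in_window(conn, start, end):
    """Return (count, average) for readings with start <= ts < end."""
    return conn.execute(
        "SELECT COUNT(*), AVG(temp_c) FROM readings WHERE ts >= ? AND ts < ?",
        (start, end),
    ).fetchone()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE readings (ts TEXT PRIMARY KEY, temp_c REAL)")
conn.executemany("INSERT INTO readings VALUES (?, ?)", READINGS)

# Only the three readings inside the half-open window contribute.
count, avg_temp = average_in_window(
    conn, "2023-08-01T10:01:00", "2023-08-01T10:04:00"
)
print(count, avg_temp)
```

The half-open window (inclusive start, exclusive end) is a design choice here so that adjacent windows never count the same reading twice.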

Since the value of each data point is directly influenced by other data points in the sequence, time series data is increasingly used to perform real-time analytics to identify trends and patterns, allowing developers and tech leaders to make informed decisions very quickly. This is much more challenging with relational data due to the time and resources it can take to query related data from multiple tables.

Scalability is of paramount importance

As we connect more and more equipment to the internet, the amount of generated data grows exponentially. Once these data workloads grow beyond trivial—in other words, when they enter a production environment—a transactional database will not be able to scale. At that point, data ingestion becomes a bottleneck and developers can’t query data efficiently. And none of this can happen in real time, because of the latency due to database reads and writes.

A time series database that supports SQL can provide sufficient scalability and speed for large data sets. Strong ingest performance allows a time series database to continuously ingest, transform, and analyze billions of time series data points per second without limitations or caps. As data volumes continue to grow at exponential rates, a database that can scale is critical to developers managing time series data. For apps, devices, and systems that create huge amounts of data, storing the data can be very expensive. Leveraging high compression reduces data storage costs and enables up to 10x more storage without sacrificing performance.

SQL can be used to query time series

A purpose-built time series database enables users to leverage SQL to query time series data. A database that uses Apache DataFusion, an extensible SQL query engine, will be even more effective. DataFusion is an open source project that allows users to efficiently query data within specific windows of time using SQL statements.

Apache DataFusion is part of the Apache Arrow ecosystem, which also includes Flight SQL, a SQL protocol built on top of Apache Arrow Flight, and Apache Parquet, a columnar storage file format. Flight SQL provides a high-performance SQL interface to work with databases using the Arrow Flight RPC framework, allowing for faster data access and lower latencies because data travels in Arrow format and does not need to be converted along the way. Engaging the Flight SQL client is necessary before data is available for queries or analytics. To provide ease of access between Flight SQL and clients, the open source community created a FlightSQL driver, a lightweight wrapper around the Flight SQL client written in Go.

Additionally, the Apache Arrow ecosystem is based on columnar formats for both the in-memory representation (Apache Arrow) and the durable file format (Apache Parquet). Columnar storage is perfect for time series data because time series data typically contains multiple identical values over time. For example, if a user is gathering weather data every minute, temperature values won’t fluctuate every minute.

These same values provide an opportunity for cheap compression, which enables high cardinality use cases. This also enables faster scan rates using the SIMD instructions found in all modern CPUs. Depending on how data is sorted, users may only need to look at the first column of data to find the maximum value of a particular field.
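To make the compression point concrete, here is a minimal run-length encoding sketch in Python. It is an illustration of why repeated values in a column compress cheaply, not the encoder Parquet or any particular database uses; the temperature values are hypothetical.

```python
from itertools import groupby


def run_length_encode(values):
    """Collapse consecutive repeats into (value, run_length) pairs."""
    return [(value, len(list(run))) for value, run in groupby(values)]


def run_length_decode(pairs):
    """Expand (value, run_length) pairs back into the original list."""
    return [value for value, length in pairs for _ in range(length)]


# Hypothetical per-minute temperature column with long runs of equal values.
temperatures = [21.0] * 5 + [21.5] * 3 + [22.0] * 4

encoded = run_length_encode(temperatures)
print(len(temperatures), "values stored as", len(encoded), "runs")
```

Twelve readings collapse into three pairs, and decoding restores the column exactly, so nothing is lost.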

Contrast this to row-oriented storage, which requires users to look at every field, tag set, and timestamp to find the maximum field value. In other words, users have to read the first row, parse the record into columns, include the field values in their result, and repeat. Apache Arrow provides a much faster and more efficient process for querying and writing time series data.
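The difference between the two layouts can be sketched with plain Python structures. The field names and values below are hypothetical, and real engines work on binary buffers rather than dictionaries and lists; the point is only which data each approach has to walk through.

```python
# Hypothetical readings in row-oriented form: every record carries all fields.
rows = [
    {"ts": 1, "sensor": "a", "temp_c": 21.0, "humidity": 40},
    {"ts": 2, "sensor": "a", "temp_c": 23.5, "humidity": 41},
    {"ts": 3, "sensor": "a", "temp_c": 22.0, "humidity": 39},
]

# The same readings in column-oriented form: one contiguous list per field.
columns = {
    "ts": [1, 2, 3],
    "sensor": ["a", "a", "a"],
    "temp_c": [21.0, 23.5, 22.0],
    "humidity": [40, 41, 39],
}


def max_from_rows(records, field):
    """Visit every record and pull one field out of each."""
    best = None
    for record in records:
        value = record[field]
        if best is None or value > best:
            best = value
    return best


def max_from_columns(table, field):
    """Scan a single column; the other fields are never touched."""
    return max(table[field])


print(max_from_rows(rows, "temp_c"), max_from_columns(columns, "temp_c"))
```

Both functions return the same answer; the columnar version simply reads one list instead of stepping through whole records.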

A language-agnostic software framework offers many benefits

The more work developers can do on data within their applications, the more efficient those applications can be. Adopting a language-agnostic framework, such as Apache Arrow, lets users work with data closer to the source. A language-agnostic framework not only eliminates or reduces the need for extract, transform, and load (ETL) processes, but also makes working on large data sets easier.

Specifically, Apache Arrow works with Apache Parquet, Apache Flight SQL, Apache Spark, NumPy, PySpark, Pandas, and other data processing libraries. It also includes native libraries in C, C++, C#, Go, Java, JavaScript, Julia, MATLAB, Python, R, Ruby, and Rust. Working in this type of framework means that all systems use the same memory format, there is no overhead when it comes to cross-system communication, and interoperable data exchange is standard.

High time for time series

Time series data include everything from events, clicks, and sensor data to logs, metrics, and traces. The sheer volume and diversity of insights that can be extracted from such data are staggering. Time series data allow for a nuanced understanding of patterns over time and open new avenues for real-time analytics, predictive analysis, IoT monitoring, application monitoring, and devops monitoring, making time series an indispensable tool for data-driven decision making.

Having the ability to use SQL to query that data removes a significant barrier to entry and adoption for developers with RDBMS experience. A time series database that supports SQL helps to close the gap between transactional and analytical workloads by providing familiar tooling to get the most out of time series data.

In addition to providing a more comfortable transition, a SQL-supported time series database built on the Apache Arrow ecosystem expands the interoperability and capabilities of time series databases. It allows developers to effectively manage and store high volumes of time series data and take advantage of several other tools to visualize and analyze that data.

The integration of SQL into time series data processing not only brings together the best of both worlds but also sets the stage for the evolution of data analysis practices—bringing us one step closer to fully harnessing the value of all the data around us.

Rick Spencer is VP of products at InfluxData.

—

New Tech Forum provides a venue to explore and discuss emerging enterprise technology in unprecedented depth and breadth. The selection is subjective, based on our pick of the technologies we believe to be important and of greatest interest to InfoWorld readers. InfoWorld does not accept marketing collateral for publication and reserves the right to edit all contributed content. Send all inquiries to newtechforum@infoworld.com.

MMS • Andrew Hoblitzell

The open-source Project Jupyter, used by millions for data science and machine learning, has released Jupyter AI, a free tool bringing powerful generative AI capabilities to Jupyter notebooks.

The tool includes %%ai magic commands for notebooks, along with a chat interface called Jupyternaut that leverages large language models like GPT-3, Claude, and Amazon Titan to generate and explain code directly within JupyterLab and Jupyter notebooks. Additionally, users can use the “/generate” command to produce entire notebooks from text prompts.

Amazon also announced an Amazon CodeWhisperer Jupyter extension. Apart from these generative AI extensions, AWS has introduced new Jupyter extensions to build, train, and deploy ML at scale. These include notebooks scheduling, SageMaker open-source distribution, and the Amazon CodeGuru Jupyter extension.

The innovative technology of Jupyter AI not only helps simplify tasks and save the developer’s time but also increases their ability to tackle new difficulties. Gleb Tsipursky wrote in Forbes, “Generative AI’s coding ability will automate repetitive tasks, increase productivity, and enhance collaboration between humans and AI, creating a symbiotic relationship that will redefine the programming landscape”.

The use of generative AI in software development has been steadily increasing. Research by McKinsey Digital shows how generative AI tools like Jupyter AI accelerate updates to existing code and provide a head start on the first draft of new code.

Jupyter senior front-end engineer Jason Weill has pointed out the intention of creating ethical, socially responsible AI with data protection in mind, and that is evident in features such as the freedom of users to select their favourite LLM, embedding model, and vector database to meet their individual needs. Jupyter AI aims to protect the privacy of user data and only communicates with LLMs upon request; data is never accessed or transmitted without the user’s consent.

To begin using Jupyter AI, users need JupyterLab (version 3 or 4), which can be installed with pip. The extension is available on GitHub.

AI, ML, Data Engineering News Roundup: Jupyter AI, AudioCraft, OverflowAI, StableCode and Tabnine

MMS • Daniel Dominguez

The latest update, which covers developments until August 7, 2023, highlights significant accomplishments and statements made in the fields of artificial intelligence, machine learning, and data science. This week’s major news involved Jupyter, Meta AI, Stack Overflow, Stability AI and Tabnine.

Jupyter Notebook Releases Generative AI Extension for JupyterLab

Jupyter introduced Jupyter AI, a project that adds generative artificial intelligence to Jupyter notebooks. It enables users to generate full notebooks from simple natural language prompts, explain and write code, correct errors, and summarize content. With support from LangChain, the program links Jupyter to large language models (LLMs) from several vendors, including AI21, Anthropic, AWS, Cohere, and OpenAI.

Jupyter AI gives users the freedom to select their favorite LLM, embedding model, and vector database to meet their individual needs because it was created with ethical AI and data protection in mind. Data transparency is guaranteed by the open source nature of the software’s core prompts, chains, and components. Additionally, it stores metadata regarding model-generated content, making it easier to follow AI-generated code throughout the workflow. Importantly, Jupyter AI protects the privacy of user data and only communicates with LLMs upon request; data is never accessed or transmitted without the user’s express authorization.

Meta Open Sources Audiocraft, a Framework for Generating Sounds and Music

Meta has open sourced its text-to-music generative AI, AudioCraft, for researchers and practitioners to train their own models and help advance the state of the art. AudioCraft is a framework to generate high-quality, realistic audio and music from short text descriptions, or prompts. AudioCraft is largely based on three distinct AI models: MusicGen, AudioGen, and EnCodec. MusicGen, which uses music samples that are owned and licensed by Meta, creates music from text-based inputs. AudioGen, which uses publicly accessible sound effects, creates audio from text-based inputs. The EnCodec decoder produces true-to-life audio outputs, enabling higher-quality music generation with fewer artifacts.

AudioCraft simplifies the processes of compression, generation, and music/sound creation. Users can extend the existing code base to develop more efficient sound generation and compression methods, which showcases the adaptability of AudioCraft. In essence, you can build upon an established framework rather than starting anew. Your starting point will be determined by the dataset’s existing limitations.

Stack Overflow Jumps Into Generative AI with OverflowAI

Stack Overflow announced its strategic plan to incorporate generative AI into its public platform and Stack Overflow for Teams. The approach also extends to new product domains, including an IDE integration designed to bring the expertise captured in 58 million community-generated questions and answers into the space where developers focus and get work done. This effort is collectively referred to as OverflowAI.

OverflowAI is not a single product but an initiative made up of a number of projects, including improved AI search on both the public and enterprise platforms. There is also a Slack integration and an OverflowAI Visual Studio Code plugin available for businesses, and OverflowAI will aid with enterprise knowledge intake for Stack Overflow for Teams. The ultimate objective is to make it simpler for businesses and developers to locate and utilize the information they require.

Stability AI Launches StableCode, an LLM for Code Generation

Stability AI announced StableCode, its very first LLM generative AI product for coding. This product is designed to assist programmers with their daily work while also providing a great learning tool for new developers ready to take their skills to the next level. Three tiers of StableCode are being offered: a base model for general use cases, an instruction model, and a long-context-window model with a maximum support of 16,000 tokens.

The StableCode model draws upon an initial set of programming language data sourced from the open-source BigCode project, further refined and fine-tuned by Stability AI. In its initial phase, StableCode supports languages such as Python, Go, Java, JavaScript, C, Markdown, and C++.

Tabnine Announced Tabnine Chat

Tabnine has recently announced the beta of Tabnine Chat, which lets users interact with Tabnine’s AI models using natural language. The chat application works inside the IDE, allows organizations to train on permissively licensed code only, and can run in isolated deployment environments.

Tabnine Chat is an enterprise-grade coding chat application that lets developers interact naturally with Tabnine’s AI models. Unlike ChatGPT, it focuses on aiding professional developers in sizable projects. It operates within the IDE, supporting both initial app creation and ongoing work, particularly in larger commercial projects. Tabnine Enterprise users can connect their repositories to benefit from internal project-specific assistance. The tool complies with security needs and offers isolated deployment options, ensuring code privacy. Tabnine Chat’s training exclusively involves open-source code with permissive licenses, preventing contamination from copyleft code.

Big Data Analytics in Telecom Market Analysis and Revenue Prediction – The Chaminade Talon

MMS • RSS

The research study “Big Data Analytics in Telecom market Insights” has been added to Orbisresearch.com

An extensive and sequential assessment of the worldwide Big Data Analytics in Telecom market produces an end-to-end, verified, and well-documented research report agglomerating the main elements of the global Big Data Analytics in Telecom market comprising the supply chain, sales, marketing, product or project development, and cost structure. The research effectively integrates a balanced qualitative and quantitative investigation of the general Big Data Analytics in Telecom market which branches out into every component element underpinned by a similar analytical technique.

Request a sample report : https://www.orbisresearch.com/contacts/request-sample/6677139

The research report concentrates on qualitative factors to ground its growth-related projections. Evaluation of the most essential driving forces and their effect on growth scales and patterns gives a credible prediction of future possibilities. On the other hand, appraisal of the principal limiting variables shows the components of the industry inhibiting the growth rate of the worldwide Big Data Analytics in Telecom market. Moreover, the report scrutinizes current industry developments and globally spreading megatrends of diverse kinds, recognizing their influence on global Big Data Analytics in Telecom market growth in terms of increased revenue and demand.

Key Players in the Big Data Analytics in Telecom market report:

Microsoft Corporation

MongoDB

United Technologies Corporation

JDA Software, Inc.

Software AG

Sensewaves

Avant

SAP

IBM Corp

Splunk

Oracle Corp.

Teradata Corp.

Amazon Web Services

Cloudera

The study extends into assessing the reaction of the worldwide Big Data Analytics in Telecom market dynamics to the consequences of the COVID-19 outbreak. The research assesses the effect of the unprepared environment and the extreme slowdown in economic activity caused by repeated lockdowns globally. The unexpected decline in market demand, together with stalled manufacturing capacity, damaged the worldwide Big Data Analytics in Telecom market substantially. The research report also captures the significant changes the pandemic caused in the business models of the worldwide Big Data Analytics in Telecom industry. It also discusses the difficulties created by the strict measures governments imposed to fight the impacts of the pandemic.

Furthermore, the worldwide Big Data Analytics in Telecom market research gives conclusive insight via a thorough study of the competitive ecology of the business. It effectively aggregates extremely essential industry data displaying the major contributions of the main market players in expanding the commercial presence of the global Big Data Analytics in Telecom market. The analysis also examines the demand-to-supply ratio of each competitor evaluating the greatest to smallest capacity. The analysis includes an in-depth review of the particular growth efforts and company development strategies together with the infrastructural capabilities scaling up the growth potential of the worldwide Big Data Analytics in Telecom industry.

Do Inquiry before Accessing Report at: https://www.orbisresearch.com/contacts/enquiry-before-buying/6677139

Big Data Analytics in Telecom Market Segmentation:

Big Data Analytics in Telecom Market by Types:

Cloud-based

On-premise

Big Data Analytics in Telecom Market by Applications:

Small and Medium-Sized Enterprises

Large Enterprises

Why Purchase This Market Research Report:

• Critical concerns and challenges the worldwide Big Data Analytics in Telecom market will encounter in the forecast years are outlined in the research to help market participants align their business choices and strategies appropriately.

• The research outlines significant trends impacting the worldwide Big Data Analytics in Telecom industry.

• Trends responsible for the global and regional economic development of the global Big Data Analytics in Telecom market are emphasized in the study to aid market participants in a critical understanding of the future of the global Big Data Analytics in Telecom market.

• The study evaluates the production and operational practices taking place in the marketplace.

• The paper shows the issues experienced by the main geographies and countries from the pandemic and their reorientation of policies to survive the market.

Buy the report at https://www.orbisresearch.com/contact/purchase-single-user/6677139

The Report Answers Some of the Following Questions:

• What will be the financial performance of North America, APAC, Europe, and Africa in the worldwide Big Data Analytics in Telecom market in 2022 and beyond?

• Which firms are expected to flourish in the worldwide Big Data Analytics in Telecom market with the support of foreign corporations, mergers and acquisitions, new product launches, and technical innovation?

• What are the strategy advice and business models for developing market players?

• Which are the worldwide Big Data Analytics in Telecom market’s biggest manufacturing enterprises and most competitive firms?

About Us:

Orbis Research (orbisresearch.com) is a single point aid for all your market research requirements. We have a vast database of reports from leading publishers and authors across the globe. We specialize in delivering customized reports as per the requirements of our clients. We have complete information about our publishers and hence are sure about the accuracy of the industries and verticals of their specialization. This helps our clients to map their needs and we produce the perfect required market research study for our clients.

Contact Us:

Hector Costello

Senior Manager – Client Engagements

4144N Central Expressway,

Suite 600, Dallas,

Texas – 75204, U.S.A.

Phone No.: USA: +1 (972)-591-8191 | IND: +91 895 659 5155

Email ID: sales@orbisresearch.com

MMS • Renato Losio

At the recent AWS Summit in New York, Amazon announced AWS HealthImaging. The new HIPAA-eligible service helps healthcare providers to store, analyze, and share medical imaging data at scale.

AWS HealthImaging optimizes medical imaging data, storing a single authoritative copy of each image for all clinical and research workflows. The service ingests data in the DICOM P10 format and provides APIs for low-latency retrieval. It creates de-identified copies of an image without copying pixel data, providing access control at the metadata level. Tehsin Syed, general manager at AWS, and Andy Schuetz, principal product manager at AWS, write:

Globally, more than 3.6 billion medical imaging procedures are performed each year, collectively generating exabytes of medical imaging data. The healthcare system is struggling to meet the growing demand for medical imaging procedures. Since 2008, the average number of imaging procedures assigned to radiologists in the US has increased from 58 per day to 100 per day. In that same period, the typical imaging study size doubled to nearly 150 MB.

The new service integrates with AWS DataSync and AWS Direct Connect to move data to the cloud. According to AWS, it is already supported by major medical imaging vendors and provides access to medical imaging data with sub-second image latency: once imported, data is immediately available for research workflows and diagnostic applications like picture archiving and communication systems (PACS). Syed and Schuetz explain how the import works:

Individual DICOM P10 files are imported as image frames and automatically organized in image sets with consistent metadata at the patient, study, and series levels. (…) The pixel data for each DICOM P10 file is encoded as High-Throughput JPEG 2000 (HTJ2K), a state-of-the-art image compression codec offering efficient lossless compression and resolution scalability.

HealthImaging validates that all data is transcoded successfully by adding checksums for each frame, so customers can verify the lossless image processing upon retrieving an image frame. Jeremy Bikman, president & CEO at Reaction Data, writes:
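The verification step described above can be sketched generically as follows. This is not the HealthImaging API: it assumes the checksum is a CRC32 value recorded at import time and that the frame has already been decoded to raw bytes. The function name `frame_matches_checksum` and the sample bytes are hypothetical.

```python
import zlib


def frame_matches_checksum(decoded_pixels: bytes, expected_crc32: int) -> bool:
    """Return True when the decoded frame bytes hash to the expected CRC32."""
    # zlib.crc32 returns an unsigned 32-bit integer in Python 3.
    return zlib.crc32(decoded_pixels) == expected_crc32


# Hypothetical stand-in for the pixel data of one decoded image frame.
original = bytes(range(256)) * 4
stored_checksum = zlib.crc32(original)  # assumed to be recorded at import time

# An untouched frame passes; a frame with one altered byte does not.
intact = frame_matches_checksum(original, stored_checksum)
corrupted = frame_matches_checksum(original[:-1] + b"\x00", stored_checksum)
```

A client would run a check like this after decoding each retrieved frame and reject any frame whose checksum does not match.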

For the past 5 years, I’ve warned every medical imaging supplier that it was a matter of when, not if, Amazon would truly enter the space beyond providing just cloud services. Well, the other shoe finally dropped. Now the question is how long before they move upstream and start displacing solutions radiologists actually use.

AWS HealthImaging is not the only managed service announced at the summit targeting the medical industry. As separately reported on InfoQ, the cloud provider also previewed AWS HealthScribe, a new HIPAA-eligible service that uses speech recognition and generative AI to generate clinical documentation. Commenting on the announcements, Corey Quinn, chief cloud economist at The Duckbill Group, writes:

This is a prime example of AWS’s industry-vertical approach: slap an industry name on a barely differentiated version of a baseline service. Trouble is, customers are sophisticated when it comes to their specific industries, and they aren’t fooled. Virtually every previous incarnation of this pattern lands well with the tech press and the analyst firms, but actual customers pan them.

HealthImaging charges according to the amount of data stored and accessed, offering a tiered storage similar to the S3 Intelligent-Tiering storage class.

MMS • RSS

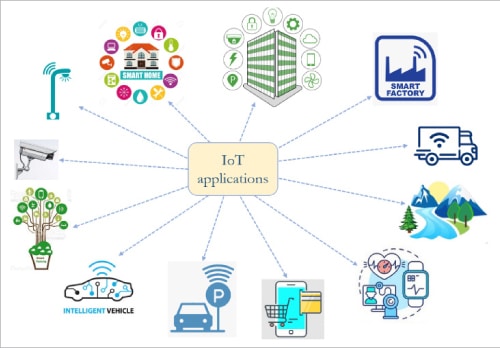

By leveraging databases for IoT, organisations can efficiently store, process, analyse, and derive insights from the massive volumes of data generated by IoT devices. We take a look at how to implement the MongoDB database in an IoT environment.

The Internet of Things (IoT) generates vast amounts of data from various sources, including sensors, devices, and applications. Databases provide a structured and organised way to store this data.

IoT applications often need to access specific subsets of data quickly and efficiently. Databases offer indexing and querying mechanisms that optimise data retrieval performance. They enable developers to define indexes on particular fields or attributes, improving the speed of data retrieval operations and reducing the overall latency in accessing IoT data.
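As a rough illustration of how an index supports this kind of lookup, the sketch below uses SQLite (one of the databases compared later in this article) because it ships with Python and needs no server. The table name `readings`, its columns, and the index name `idx_readings_sensor` are hypothetical.

```python
import sqlite3

# In-memory database, so the example leaves no files behind.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE readings (sensor_id TEXT, ts INTEGER, value REAL)")

# 1,000 synthetic readings spread evenly over five sensors (s0 .. s4).
rows = [(f"s{i % 5}", i, 20.0 + i % 7) for i in range(1000)]
conn.executemany("INSERT INTO readings VALUES (?, ?, ?)", rows)

# Index on the fields the application filters by.
conn.execute("CREATE INDEX idx_readings_sensor ON readings (sensor_id, ts)")

# Ask SQLite how it would execute the lookup; the plan should name the index.
plan = conn.execute(
    "EXPLAIN QUERY PLAN SELECT ts, value FROM readings WHERE sensor_id = ?",
    ("s3",),
).fetchall()
plan_text = " ".join(str(row[-1]) for row in plan)

# The lookup itself: count the readings that belong to one sensor.
matches = conn.execute(
    "SELECT COUNT(*) FROM readings WHERE sensor_id = ?", ("s3",)
).fetchone()[0]
```

Without the index, the same query would have to scan every row. With it, the engine can jump straight to the entries for the requested sensor.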

Thus, by leveraging databases in IoT environments, organisations can effectively manage and harness the vast amounts of data generated by IoT devices. Databases provide data storage, processing, integration, security, scalability, and historical analysis capabilities that are essential for unlocking the full potential of IoT applications and deriving actionable insights from IoT data. Figure 1 shows the various sources of data generation in the IoT environment.

Comparison of MongoDB, RethinkDB, SQLite, and Apache Cassandra

Popular databases used in IoT applications are MongoDB, RethinkDB, SQLite, and Apache Cassandra. The choice between these depends on specific project requirements. MongoDB and RethinkDB are document databases suitable for handling diverse and evolving data, with MongoDB having broader industry adoption. SQLite is a lightweight embedded database for local storage, while Cassandra excels in high scalability, availability, and distributed architectures. Table 1 compares these four databases critically.

Table 1: MongoDB vs RethinkDB vs SQLite vs Apache Cassandra

| Criteria | MongoDB | RethinkDB | SQLite | Apache Cassandra |
| --- | --- | --- | --- | --- |
| Latest version | MongoDB 6.0 | RethinkDB 2.4.2 | SQLite 3.42.0 | Apache Cassandra 4.1 |
| Released in | 2009 | 2012 | 2000 | 2008 |
| Supported programming languages | C++, Python, JavaScript | C++, Python, JavaScript | Python, Java, C#, Ruby, PHP | C++, Java, Python, Go, Node.js |
| Database model | Document model; supports JSON | Document model; supports JSON | Relational; supports SQL queries | NoSQL (wide-column) |
| Key features | Auto-sharding, indexing | Distributed database, automatic sharding, and replication | Serverless, flexible | Easy data distribution, fast linear-scale performance |
| Advantages in IoT applications | Document-oriented, flexible schema | Adaptable query language, plug-and-play functions | Small memory footprint, no dependencies | Fault-tolerant, scalable |

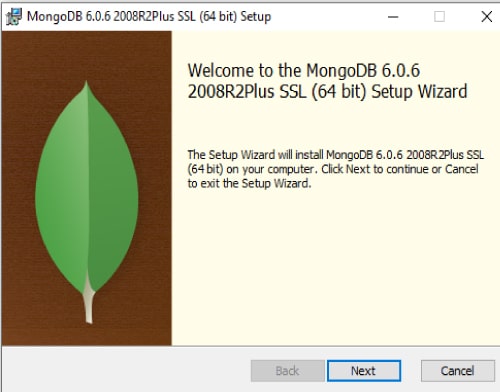

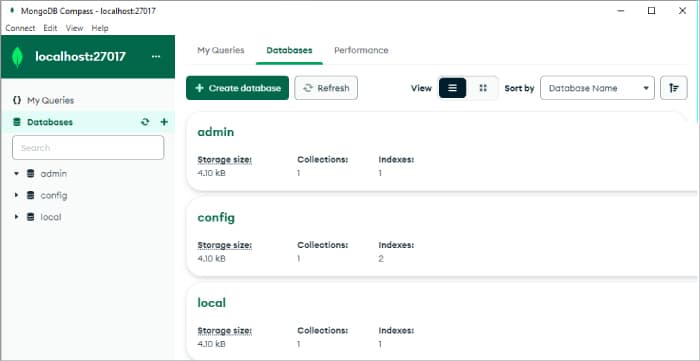

Installation of MongoDB on Windows

MongoDB 6.0 is the latest Community Edition release and can be downloaded from the official MongoDB website. Installing MongoDB on Windows requires a 64-bit system. The steps are given below.

Step 1: Download the MongoDB Community installer package (.msi) and, if it arrives as a zip archive, unpack it on your system.

Step 2: Next, navigate to the download directory, which should contain an installer file with the .msi extension. As an administrator, double-click the .msi file to launch the installation wizard, as shown in Figure 2.

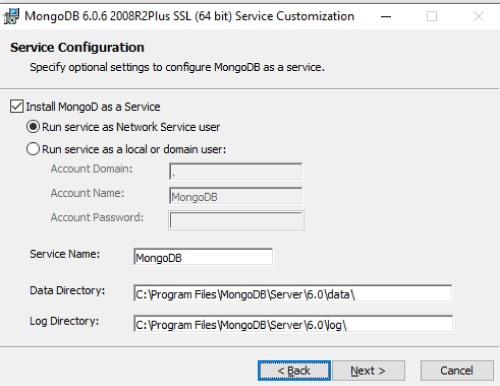

Step 3: After running the .msi installation file of MongoDB, choose the complete setup type. Configure and start MongoDB as a Windows service during the installation, and the MongoDB service will start on successful installation, as shown in Figure 3.

Step 4: Now, edit the system environment variables so that the path includes the MongoDB installation directory under ‘Program Files’ on the C drive.

Step 5: The MongoDB service can now be accessed on localhost, as shown in Figure 4.

Popular commands used in MongoDB for IoT

MongoDB offers a rich set of commands that can be useful for IoT applications. Table 2 lists some popular MongoDB commands commonly used in IoT scenarios.

Table 2: List of MongoDB commands used in IoT

| Command | Description |
| --- | --- |
| db.createCollection("sensors") | Creates a collection named ‘sensors’ to store data |
| db.sensors.insertOne() | Adds a document to the collection |
| db.sensors.insertMany() | Adds multiple documents to the collection |
| db.sensors.find({ sensorId: s }) | Retrieves sensor readings where the sensor ID is ‘s’ |
| db.sensors.aggregate() | Performs aggregation operations on the data |
| db.sensors.updateOne() | Updates the first document that matches a filter |
| db.sensors.deleteOne() | Deletes the first document that matches a filter |
| db.sensors.deleteMany() | Deletes all documents that match a filter |
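The aggregation command takes a list of stages, and that list looks the same whether it is written in the shell or built in a driver language. The sketch below builds one such pipeline in Python: the mean reading per sensor since a given time. The field names `sensorId`, `value` and `timestamp` and the helper `average_by_sensor_pipeline` are assumptions made for illustration.

```python
from datetime import datetime, timezone


def average_by_sensor_pipeline(since):
    """Build an aggregation pipeline: mean reading per sensor since a given time."""
    return [
        # Keep only readings taken at or after the cut-off.
        {"$match": {"timestamp": {"$gte": since}}},
        # Group by sensor and average the reading values.
        {"$group": {"_id": "$sensorId", "avgValue": {"$avg": "$value"}}},
        # Return the sensors in a stable order.
        {"$sort": {"_id": 1}},
    ]


pipeline = average_by_sensor_pipeline(datetime(2023, 8, 1, tzinfo=timezone.utc))
# The list is plain data. It only runs when it is handed to a collection's
# aggregate method on a live MongoDB server.
```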

Programming MongoDB using Python

To work with MongoDB in Python, you can use the PyMongo library, the official MongoDB driver for Python. PyMongo provides a simple and intuitive API for interacting with MongoDB from your Python code.

Step 1: Install PyMongo. PyMongo can be set up with the help of pip, Python’s package manager. This can be done using the following command:

$ pip install pymongo

Step 2: The PyMongo package can now be imported in Python with the following statement:

import pymongo

Step 3: To work with MongoClient, connect to the default host and port with the following statement:

client = pymongo.MongoClient("mongodb://localhost:27017/")

Step 4: MongoDB allows multiple databases to coexist on a single server. Databases in PyMongo are accessed via MongoClient instances with dictionary-style access:

db = client["mydatabase"]

Step 5: Now, access a collection within the database:

collection = db["yourcollection"]

A collection in MongoDB is a set of related documents that functions similar to a table in a traditional database. Python’s method for accessing collections is identical to that used for databases.

Step 6: In this step, you can insert a document into a collection. Data in MongoDB is represented (and stored) using JSON-style documents. In PyMongo, we use dictionaries to represent documents. As an example, the following dictionary represents a document, which insert_one() then adds to the collection:

document = {"Name": "OSFY", "Month": 7}

collection.insert_one(document)
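For an IoT workload, the steps above might be combined along the following lines. This is a minimal sketch, not a reference implementation: the helper names, the `sensors` collection and the document fields are hypothetical, and `open_collection` assumes that PyMongo is installed and a MongoDB server is listening on the default local port.

```python
from datetime import datetime, timezone


def make_reading(sensor_id, value, unit="C"):
    """Build one sensor reading as a plain dict, PyMongo's document type."""
    return {
        "sensorId": sensor_id,
        "value": value,
        "unit": unit,
        "timestamp": datetime.now(timezone.utc),
    }


def store_readings(collection, readings):
    """Insert a batch of readings and return how many were written."""
    result = collection.insert_many(readings)
    return len(result.inserted_ids)


def open_collection(uri="mongodb://localhost:27017/"):
    """Connect to a server (assumed to be running) and return a collection."""
    from pymongo import MongoClient  # imported lazily; needs `pip install pymongo`

    client = MongoClient(uri)
    return client["mydatabase"]["sensors"]


batch = [make_reading("s1", 21.5), make_reading("s2", 19.0)]
# With a MongoDB server running:
#   written = store_readings(open_collection(), batch)
```

Inserting readings in batches with insert_many costs fewer round trips to the server than calling insert_one for each reading.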

MongoDB is a NoSQL database widely used because of its document-oriented design. Built with horizontal scalability across multiple servers, it can easily handle massive amounts of data. Industries using the Internet of Things often need to process and analyse streaming data in real-time. MongoDB’s real-time data processing, querying, and aggregation capabilities facilitate prompt analysis and decision-making.

MMS • RSS

Database vendor MongoDB has completed an Information Security Registered Assessor Program (IRAP) assessment for its Atlas data platform, making the software available to Australian federal government agencies.

The assessment’s completion means Atlas has the appropriate security controls in place for the processing, storing and transmission of information classified up to, and including, the “PROTECTED” level.

Security vendor CyberCX carried out the assessment, evaluating Atlas for data workloads across AWS, Microsoft Azure and Google Cloud.

MongoDB said a number of Australian government agencies currently use its products on-premises, and the IRAP assessment now allows access to a fully managed experience in the cloud.

The vendor said government continues to be a growing focus, with the Australian IRAP assessment following the launch last month of the MongoDB Atlas for Public Sector initiative to help government agencies and public sector organisations address unique digital transformation and cloud adoption challenges.

“Government agencies are expected to offer citizens flawless and secure digital services, as well as easier ways to engage with government entities,” MongoDB APAC senior vice president Simon Eid said.

“Internally, teams are pressured to make data-driven decisions that are accurate and timely, while improving efficiency without jeopardising security.”

“Legacy database models have become a real hindrance and government agencies are looking at new ways to build and deliver the government services of tomorrow,” Eid added.

“Now, with the completion of the IRAP assessment, government agencies in Australia can empower their development teams to build new classes of applications that reimagine citizen experiences using MongoDB Atlas.”

“We think this will actively contribute to Australia’s reaching its goal of being the most cyber-secure nation in the world by 2030,” he said.

Microsoft ANZ chief technology officer Lee Hickin said the tech giant is pleased to see MongoDB Atlas successfully complete the IRAP assessment, which enables Australian government agencies to securely build modern applications in the cloud.

“This will take the partnership between Microsoft and MongoDB to a new level, creating more opportunities for our joint customers, and provide government customers with a robust and reliable platform to accelerate digital transformation efforts, enhance citizen experiences, and ensure data safety and security,” Hickin said.

MMS • RSS

COMPANY NEWS: MongoDB Atlas can now be used by Australian government agencies to securely build modern applications in the cloud, quickly and at scale.

MongoDB, Inc. announced the completion of an Information Security Registered Assessor Program (IRAP) assessment that allows federal government agencies across Australia to use MongoDB’s developer data platform MongoDB Atlas to quickly and easily build, manage, and deploy modern applications. Carried out by security firm CyberCX, the IRAP assessment evaluated MongoDB Atlas’s platform for data workloads up to the “PROTECTED” level across all three major cloud providers: AWS, Microsoft Azure, and Google Cloud Platform. Completion of this assessment provides Australian government customers with the required assurance that MongoDB Atlas has the appropriate security controls in place for the processing, storing and transmission of information classified up to and including the “PROTECTED” level.

Australian government agencies are under growing pressure to accelerate digital transformation efforts and shorten time to market for new digital government services to improve citizen experiences while ensuring data is safe and secure. Many Australian government agencies currently use MongoDB on premises but want to take advantage of a fully managed experience in the cloud. With the completion of the IRAP assessment, government agencies in Australia will now be able to use MongoDB Atlas’s multi-cloud infrastructure and take advantage of embedded encryption capabilities, text-based and generative-AI-based search, and stream processing for high-volume and high-velocity data of virtually any type to quickly build modern applications for desktop and mobile with less complexity.

Data sovereignty, resilience and data security through multi-cloud and encryption

MongoDB Atlas is the only platform today offering true multi-cloud capabilities, meaning that data can be stored and synchronised on multiple cloud providers at the same time, rather than on one single cloud provider, to ensure high levels of resilience for critical government services. MongoDB’s multi-region capability allows organisations to use multiple cloud regions within the same defined geographic area, which makes applications more resilient and makes data sovereignty easier.

Finally, security features built into MongoDB Atlas provide government agencies with the utmost levels of data protection and data privacy. In particular, Queryable Encryption introduces an industry-first fast, searchable encryption scheme developed by the pioneers in encrypted search. The feature lets organisations search and return encrypted data that becomes visible to application end-users only when decrypted with customer-controlled cryptographic keys, while remaining encrypted in use throughout the query process, in transit over networks, and at rest in storage.

Leading government agencies around the world already trust MongoDB Atlas

More than one thousand public sector customers globally rely on MongoDB to run mission-critical workloads every day and use MongoDB Atlas to transform the way they serve citizens all around the world.

For example, as the COVID-19 crisis increased demand for digital services from millions of citizens and thousands of businesses overnight, the UK’s largest government department, the Department for Work and Pensions (DWP), used MongoDB Atlas’s flexible document model and scale to offer its development team a new and more productive way to build applications, handle highly diverse data types and manage data efficiently at scale.

Government continues to be a growing focus for MongoDB, with the Australian IRAP assessment following the launch last month of the MongoDB Atlas for Public Sector initiative to help government agencies and public sector organisations address unique digital transformation and cloud adoption challenges to accelerate time-to-mission.

“Government agencies are expected to offer citizens flawless and secure digital services, as well as easier ways to engage with government entities,” said Simon Eid, Senior Vice President, APAC at MongoDB. “Internally, teams are pressured to make data-driven decisions that are accurate and timely while improving efficiency without jeopardising security. Legacy database models have become a real hindrance, and government agencies are looking at new ways to build and deliver the government services of tomorrow. Now, with the completion of the IRAP assessment, government agencies in Australia can empower their development teams to build new classes of applications that reimagine citizen experiences using MongoDB Atlas. We think this will actively contribute to Australia’s reaching its goal of being the most cyber-secure nation in the world by 2030.”

Lee Hickin, ANZ Chief Technology Officer, Microsoft, said: “We are pleased to see MongoDB Atlas successfully complete the IRAP assessment, enabling Australian government agencies to securely build modern applications in the cloud. This will take the partnership between Microsoft and MongoDB to a new level, creating more opportunities for our joint customers, and providing government customers with a robust and reliable platform to accelerate digital transformation efforts, enhance citizen experiences, and ensure data safety and security.”

MongoDB Developer Data Platform

MongoDB Atlas is the leading multi-cloud developer data platform that accelerates and simplifies building with data. MongoDB Atlas provides an integrated set of data and application services in a unified environment to enable developer teams to quickly build with the capabilities, performance, and scale modern applications require.

About MongoDB

Headquartered in New York, MongoDB’s mission is to empower innovators to create, transform, and disrupt industries by unleashing the power of software and data. Built by developers, for developers, our developer data platform is a database with an integrated set of related services that allow development teams to address the growing requirements for today’s wide variety of modern applications, all in a unified and consistent user experience. MongoDB has tens of thousands of customers in over 100 countries. The MongoDB database platform has been downloaded hundreds of millions of times since 2007, and there have been millions of builders trained through MongoDB University courses. To learn more, visit mongodb.com.

MMS • RSS

MongoDB, Inc. (NASDAQ:MDB), a leading technology company, has recently received a “Moderate Buy” rating from twenty-four brokerages, as reported by Bloomberg on August 14, 2023. This positive consensus demonstrates the confidence that analysts have in the company’s future prospects. Of these twenty-four analysts, one has given a sell recommendation, three suggest holding the stock, and twenty recommend buying it. The average 12-month price target is $378.09, based on the ratings provided by these analysts.

On Monday, MDB stock opened at $356.22. The stock has a 50-day moving average of $394.61 and a 200-day moving average of $289.96, so the opening price sits below the shorter average but well above the longer one.

Looking at other financial metrics, MongoDB’s quick ratio and current ratio both stand at 4.19, indicating its ability to meet short-term obligations. Its debt-to-equity ratio stands at 1.44.

In terms of historical performance, MongoDB has seen significant fluctuations in its stock price over the last twelve months. Its 12-month low was recorded at $135.15 while it reached a peak of $439.00 during this period.

With a market capitalization of approximately $25.14 billion, MongoDB is positioned as a major player in the technology sector. It has a price-to-earnings ratio of -76.28, which reflects negative earnings, and a beta of 1.13, a measure of the systematic risk associated with its stock.

Institutional investors have also shown great interest in MongoDB lately by making notable changes to their positions in the company’s shares.

Price T Rowe Associates Inc. MD, for instance, raised their stake in MongoDB by 13.4% during the first quarter. As a result, they now own a staggering 7,593,996 shares of the company’s stock amounting to an estimated value of $1,770,313,000.

Vanguard Group Inc., another influential institutional investor, increased its position in MongoDB by 2.1% during the same period. They currently hold 5,970,224 shares valued at approximately $2,648,332,000.

Jennison Associates LLC has also made significant moves by acquiring an additional 1,986,767 shares of MongoDB’s stock during the second quarter. Their current ownership constitutes 1,988,733 shares valued at $817,350,000.

Franklin Resources Inc., on the other hand, raised its position in MongoDB by 6.4% during the fourth quarter, which now amounts to 1,962,574 shares with an estimated value of around $386.313 million.

State Street Corp’s recent activities include lifting its stake in MDB by 1.8% during the first quarter and consequently owning approximately 1,386,773 shares worth $323.280 million.

These actions from prominent institutional investors indicate a strong belief in the future growth potential of MongoDB.

In terms of financial performance, MongoDB announced its quarterly earnings results on Thursday, June 1st. The company reported earnings per share (EPS) of $0.56 against a consensus estimate of $0.18, a beat of $0.38. For the quarter, it generated revenue of $368.28 million, topping analyst estimates of $347.77 million and representing a year-over-year revenue increase of 29%. However, the company has a negative return on equity of 43.25% and a negative net margin of 23.58%. Nevertheless, these numbers should not overshadow the overall growth trajectory that MongoDB is experiencing.

Considering the historical perspective and future outlook, MongoDB’s earnings per share for the current fiscal year are expected to be -2.8. This projection reflects the company’s steady progress in navigating challenges and boosting its financial performance.

Overall, MongoDB maintains a strong position in the technology industry, as demonstrated by its positive analyst ratings and stock price performance. With increasing interest from institutional investors and a solid financial performance in recent times, MongoDB appears to be an attractive investment opportunity for those seeking exposure to the technology sector.
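The earnings and revenue comparisons above can be reproduced with a few lines of arithmetic. The sketch below uses only the figures quoted in this article; the variable names are illustrative, and the percentage beat is derived here rather than reported by the company.

```python
from decimal import Decimal

# Earnings per share: reported figure versus consensus estimate (USD).
reported_eps = Decimal("0.56")
consensus_eps = Decimal("0.18")
eps_beat = reported_eps - consensus_eps

# Quarterly revenue versus analyst estimate (USD millions).
revenue = Decimal("368.28")
estimate = Decimal("347.77")
revenue_beat = revenue - estimate

# Revenue beat as a percentage of the estimate, rounded to one decimal place.
revenue_beat_pct = (revenue_beat / estimate * 100).quantize(Decimal("0.1"))
```

Decimal is used instead of float so that the currency amounts subtract exactly.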

MongoDB: Analyst Endorsements and Insider Transactions Indicate Continued Success in the Tech Sector

MongoDB’s Success Continues with Analyst Endorsements and Insider Transactions

Date: August 14, 2023

In the bustling world of technology and data management, MongoDB stands as one of the leading companies revolutionizing the industry. As we delve into recent news surrounding the company, we uncover a series of significant developments that affirm MongoDB’s position as a market leader. From glowing reviews by research analysts to notable insider transactions, the journey of this innovative company continues to captivate investors and industry experts alike.

Let us begin with the accolades MongoDB has received from esteemed research analysts in recent times. In their assessments, several prominent institutions expressed unwavering confidence in the company’s potential for sustained growth. One such endorsement came from 22nd Century Group, which reaffirmed its “maintains” rating on MongoDB shares in a research note issued on June 26th. Similarly, renowned online marketplace platform 58.com also reaffirmed its “maintains” rating for MongoDB on the same day.

Citigroup, another prominent financial institution, raised their target price on MongoDB shares from $363.00 to an impressive $430.00 just days prior to the release of their report on June 2nd. Such recognition underscores not only MongoDB’s outstanding performance but also its vast potential for continued success.

VNET Group also voiced their support through a “maintains” rating on MongoDB shares in their comprehensive research report published on June 26th. Their assessment further galvanizes investor confidence towards MongoDB and solidifies its standing within the highly competitive tech sector.

Moreover, esteemed investment firm Sanford C. Bernstein impressed with their bold move by boosting their price objective on MongoDB from $257.00 to $424.00 in their research report dated June 5th. This stands as further testament to analysts’ belief that MongoDB possesses tremendous value and is poised for remarkable future growth.

On another front, significant insider transactions have occurred within MongoDB, demonstrating the faith stakeholders have in the company. Director Dwight A. Merriman sold 3,000 shares of MongoDB’s stock on June 1st at an average price of $285.34, totaling an impressive $856,020.00. Following this transaction, his ownership stakes in the company amount to a whopping 1,219,954 shares valued at approximately $348,101,674.36.

Simultaneously, CEO Dev Ittycheria made headlines by selling 20,000 shares of MongoDB stock also on June 1st at an average price of $287.32. The total value of this transaction amounted to a substantial $5,746,400.00. With this sale concluded, the chief executive officer retains ownership of 262,311 shares that hold a market value of approximately $75,367,196.52.
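The transaction and holding values quoted above follow from multiplying share counts by the average sale price. The sketch below checks that arithmetic using only the figures given in this article; the helper `position_value` is a hypothetical name.

```python
from decimal import Decimal


def position_value(shares: int, price: str) -> Decimal:
    """Value of a block of shares at a given per-share price (USD)."""
    return Decimal(shares) * Decimal(price)


# Director's sale and remaining holding, both at the $285.34 average price.
merriman_sale = position_value(3_000, "285.34")
merriman_remaining = position_value(1_219_954, "285.34")

# CEO's sale and remaining holding, both at the $287.32 average price.
ittycheria_sale = position_value(20_000, "287.32")
ittycheria_remaining = position_value(262_311, "287.32")
```

The results match the quoted figures, which shows that the stated holding values are the remaining share counts priced at the sale price, not at a later market price.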

These insider transactions showcase the confidence and commitment displayed by MongoDB’s key individuals towards their own organization’s future prospects. Such trust from insiders is often taken as a positive sign by potential investors.

In conclusion, MongoDB has garnered recognition from industry experts and seen notable insider activity, both of which the company’s supporters read as signs of resilience and growth in the technology sector. With favourable ratings and increased target prices from financial institutions, MongoDB seems poised for continued prosperity relative to its competition.

As we continue to navigate through the rapidly evolving world of technology and data management services, companies like MongoDB will undoubtedly continue capturing our attention with their transformative innovations while presenting lucrative opportunities for investment and advancement.