Month: December 2023

MMS • RSS

Database management giant MongoDB says it’s investigating a security incident that has resulted in the exposure of some information about customers.

The New York-based MongoDB helps more than 46,000 companies, including Adobe, eBay, Verizon and the U.K.’s Department for Work and Pensions, manage their databases and vast stores of data, according to its website. The company’s offerings include its MongoDB self-hosted open source database and its Atlas database-as-a-service offering.

In a notice published late on Saturday, MongoDB said it was actively investigating a “security incident involving unauthorized access to certain MongoDB corporate systems, which includes exposure of customer account metadata and contact information.”

MongoDB said it first detected suspicious activity on Wednesday but noted that “unauthorized access has been going on for some period of time before discovery.” It’s not known how long hackers had access to MongoDB’s systems; MongoDB CISO Lena Smart declined to say when asked by TechCrunch.

In an update published on Sunday, MongoDB said it does not believe hackers accessed any customer data stored in MongoDB Atlas, the company’s hosted database offering.

But the company confirmed that it is “aware” that hackers accessed some of its corporate systems that contained customer names, phone numbers, email addresses and other unspecified customer account metadata.

For one customer, this included system logs, MongoDB said. System logs can include information about the running of a database or its underlying system. CISO Smart said this customer was notified, and that it has “found no evidence that any other customers’ system logs were accessed.”

It’s not clear what technical evidence — such as its own logs — MongoDB has to detect malicious activity on its network.

MongoDB declined to say how many customers may be affected by the compromise of its corporate systems. It is not yet known how and when the company was compromised, which corporate systems were accessed or whether it has notified the U.S. Securities and Exchange Commission. As of December 18, public companies must disclose “material” cybersecurity incidents to the regulator within four business days of determining that an incident is material.

MongoDB recommends that customers remain vigilant for social engineering and phishing attacks and activate phishing-resistant multi-factor authentication on their accounts, which the company does not require customers to use by default.

The company noted over the weekend that it was “experiencing a spike in login attempts resulting in issues for customers attempting to log in to Atlas and our Support Portal,” but said this was unrelated to the security incident.

It has been a busy few days on the cybersecurity front as three notable companies confirmed hacks over the last two days: MongoDB Inc., North Face and Vans owner VF Corp., and mortgage broker Mr. Cooper Group Inc.

The first hack, that of MongoDB, was confirmed over the weekend and involved its corporate systems being breached and customer data exposed. Bleeping Computer reports that MongoDB sent emails to affected customers on Dec. 13.

“MongoDB is investigating a security incident involving unauthorized access to certain MongoDB corporate systems,” the email sent to affected customers stated. “This includes exposure of customer account metadata and contact information. At this time, we are NOT aware of any exposure to the data that customers store in MongoDB Atlas.”

Although further details were unavailable, a spokesperson for MongoDB stated that it’s still investigating the breach.

The breach of VF Corp. is described by the company as involving hackers encrypting “some” systems and stealing personal data.

Although the company’s disclosure to the U.S. Securities and Exchange Commission describes the attack only in terms of “material cybersecurity incidents,” the ransomware duck rule comes into play: If it sounds like ransomware and VF Corp. says data was encrypted, it likely is ransomware.

The company said in its disclosure that it first identified hackers in its systems on Dec. 13 and that the attack is expected to affect the company’s operations in the lead-up to the holiday shopping period. The disclosure is possibly the first filing with the SEC under new rules, which state that companies must disclose material cybersecurity incidents within four business days of determining that they are material.

The largest of the three hacks, at least as known now, was that on Mr. Cooper, previously known as Nationstar Mortgage Holdings Inc. The hack has reportedly affected 14.7 million former and current customers.

Described by the company simply as a “cyber breach,” the attack involved the sensitive personal information of “substantially all of our current and former customers,” according to filings reported by ABC News. Information stolen included names, addresses, phone numbers, Social Security numbers, dates of birth and bank account numbers.

Mr. Cooper added that it shut systems to contain the incident at the time it was detected and that it was monitoring the dark web to see if any of the stolen data is released. We “have not seen any evidence that the data related to this incident has been further shared, published, or otherwise misused,” the company told those affected.

As is the case with VF Corp., if it sounds like ransomware, it probably is. Although Mr. Cooper didn’t mention data being encrypted, the fact that it’s monitoring leak sites on the dark web suggests that it’s highly likely the company was targeted in a double-extortion ransomware attack. That involves both encryption and data theft, with the ransomware gang threatening to publish stolen data if a ransom is not paid.

“The disclosure of Mr. Cooper’s affected customers highlights the need for the financial industry, particularly nonbank financial institutions, to prioritize cybersecurity,” Nick Tausek, lead security automation architect at security operations company Swimlane Inc., told SiliconANGLE. “The Federal Trade Commission’s amendment to the Safeguards Rule, making it mandatory for non-banking financial institutions to report data breaches within 30 days, came one week before Mr. Cooper’s cyberattack.”

Andrew Costis, chapter lead of the Adversary Research Team at breach and attack simulation company AttackIQ Inc., commented that “just weeks after the FTC mandated 30-day breach reporting for nonbanking financial institutions, Mr. Cooper was hit by this cyberattack, serving as a stark reminder of the vulnerability of these institutions to cybercrime and the urgency of cybersecurity measures in this sector.”

The National Security Agency has updated its software bill of materials (SBOM) guidance for its IT partners and agencies.

The updated SBOM will provide companies with guidelines for better managing vulnerability disclosures and patches.

“The dramatic increase in cyber compromises over the past five years, specifically of software supply chains, prompted intense scrutiny of measures to strengthen the resilience of supply chains for software used throughout government and critical infrastructure,” the NSA said in rolling out the new requirements.

The new SBOM will emphasize basic security principles including patch management, authentication of software being used, and incident management when a compromise or zero day flaw is detected.

The idea is to give government agencies a better footing for managing flaws and patches.

Additionally, the updated bill will place further requirements on companies that contract with the US government with regard to addressing vulnerabilities.

“Software developers must take ownership of their customers’ security outcomes rather than treating each product as if it carries an implicit caveat emptor,” the NSA said.

The exposure of vulnerabilities and the ability to address them has only become more prevalent in recent years. With supply chains opening up and suppliers becoming more open to attack, securing the middle ground is more important than ever.

“As Software Bills of Materials become more integral to Cybersecurity Supply Chain Risk Management standards, best practices will become critical to ensuring efficiency and reliability of the software supply chain,” NSA cybersecurity director Rob Joyce said in a statement provided to The Stack.

“Network owners and operators we work with count on NSA to advise them on shoring up their defenses.”

In practice, this means companies have to do more to secure the stack and address the middle ground between the supplier and the end consumer.

MongoDB disclosed in an email to users and on its website that unauthorized access to its corporate systems might have been going on for some time before its discovery. The company said that, as of now, there is no known exposure of the customer data stored in MongoDB Atlas, but noted that the incident did involve exposure of customer account metadata and contact information.

Analysts responded to the incident, highlighting MongoDB’s proactive approach in notifying customers and users about the details of the data breach, such as which systems were accessed and providing actionable steps for enhanced security, like activating multi-factor authentication. This response, according to analysts, reflects MongoDB’s commitment to responsible communication in the aftermath of an attack.

While awaiting further updates from MongoDB to disclose the full extent of the breach, analysts maintain their Outperform rating and a target price of $490 for MongoDB’s shares.

Peering Into MongoDB’s Recent Short Interest

Benzinga – by Benzinga Insights, Benzinga Staff Writer.

MongoDB’s (NYSE:MDB) short percent of float has risen 3.45% since its last report. The company recently reported that it has 3.53 million shares sold short, which is 5.69% of all regular shares that are available for trading. Based on its trading volume, it would take traders 3.1 days to cover their short positions on average.
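The three figures above are tied together by simple ratios, so two quantities the article does not state can be backed out of them. The following is a minimal sketch that assumes the usual definitions (short percent of float = shares short / float; days to cover = shares short / average daily volume). The function names are illustrative, and the results are only as precise as the rounded inputs.

```python
# Figures quoted in the article (rounded by the source).
SHARES_SHORT = 3.53e6        # shares sold short
SHORT_PCT_OF_FLOAT = 0.0569  # 5.69% of tradable shares
DAYS_TO_COVER = 3.1          # days of average volume needed to cover

def implied_float(shares_short: float, short_pct: float) -> float:
    """Float implied by: short_pct = shares_short / float."""
    return shares_short / short_pct

def implied_avg_daily_volume(shares_short: float, days_to_cover: float) -> float:
    """Volume implied by: days_to_cover = shares_short / avg_daily_volume."""
    return shares_short / days_to_cover

float_shares = implied_float(SHARES_SHORT, SHORT_PCT_OF_FLOAT)
avg_volume = implied_avg_daily_volume(SHARES_SHORT, DAYS_TO_COVER)

print(f"Implied float: {float_shares / 1e6:.1f}M shares")
print(f"Implied average daily volume: {avg_volume / 1e6:.2f}M shares")
```

Under those assumptions the quoted numbers imply a float of roughly 62 million shares and an average daily volume of a little over 1.1 million shares.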

Why Short Interest Matters

Short interest is the number of shares that have been sold short but have not yet been covered or closed out. Short selling is when a trader sells shares of a company they do not own, with the hope that the price will fall. Traders make money from short selling if the price of the stock falls and they lose if it rises.

Short interest is important to track because it can act as an indicator of market sentiment towards a particular stock. An increase in short interest can signal that investors have become more bearish, while a decrease in short interest can signal they have become more bullish.

MongoDB Short Interest Graph (3 Months)

As the chart above shows, the percentage of shares that are sold short for MongoDB has grown since its last report. This does not mean that the stock is going to fall in the near term, but traders should be aware that more shares are being shorted.

Comparing MongoDB’s Short Interest Against Its Peers

Peer comparison is a popular technique amongst analysts and investors for gauging how well a company is performing. A company’s peer is another company that has similar characteristics to it, such as industry, size, age, and financial structure. You can find a company’s peer group by reading its 10-K, proxy filing, or by doing your own similarity analysis.

According to Benzinga Pro, MongoDB’s peer group average for short interest as a percentage of float is 6.16%, which means the company has less short interest than most of its peers.

This article was generated by Benzinga’s automated content engine and was reviewed by an editor.

© 2023 Benzinga.com. Benzinga does not provide investment advice. All rights reserved.

On December 16, 2023, MongoDB, Inc. confirmed that it was the recent victim of what appears to be a cyberattack. In this notice, MongoDB explains that the incident resulted in an unauthorized party being able to access consumers’ sensitive information, which includes their metadata and contact information; however, the company’s investigation is ongoing. Upon completing its investigation, MongoDB will be required under federal law to notify any individuals whose confidential information was affected by the recent data security incident.

If you receive a data breach notification from MongoDB, Inc., it is essential you understand what is at risk and what you can do about it. While the MongoDB investigation is not yet complete, the company has confirmed that hackers were able to access customer data, which may expose affected customers to an increased risk of identity theft. A data breach lawyer can help you learn more about how to protect yourself from becoming a victim of fraud or identity theft, as well as discuss your legal options following the MongoDB data breach.

What Caused the MongoDB Cyberattack?

The MongoDB cyberattack was only recently announced, and more information is expected in the near future. However, MongoDB’s website notices provide some important information on what led up to the breach. According to this source, on December 13, 2023, MongoDB detected suspicious activity within its corporate network. In response, MongoDB informed law enforcement and began working with an outside forensics firm to assist with the company’s investigation.

Thus far, MongoDB has determined that an unauthorized actor was able to gain access to the company’s corporate network “for some period of time before discovery,” although an exact date was not provided. However, MongoDB notes that there is “no evidence of unauthorized access to MongoDB Atlas clusters,” and the company has “not identified any security vulnerability in any MongoDB product.”

On December 17, 2023, MongoDB posted an update, explaining it is aware of unauthorized access to some corporate systems that contain customer names, phone numbers, and email addresses, among other customer account metadata. If MongoDB confirms confidential information was leaked, it will be required to send data breach letters to anyone who was affected by the recent data security incident. These letters should provide victims with a list of what information belonging to them was compromised.

More Information About MongoDB, Inc.

Founded in 2007, MongoDB, Inc. is a software company based out of New York City, New York. MongoDB created a database platform called “Atlas” that is used by millions of people worldwide, making it the most widely used database platform in the world. MongoDB is publicly traded on the NASDAQ under the symbol “MDB.” MongoDB employs more than 4,626 people and generates approximately $1.5 billion in annual revenue.

Major U.S. database management firm MongoDB had some of its corporate systems infiltrated by threat actors, who were able to compromise customer metadata and contact details, reports Hackread.

Immediate action has been taken to respond to the incident, which was first identified on Dec. 13, according to a notification email from MongoDB Chief Information Security Officer Lena Smart, who also urged increased vigilance against phishing and social engineering campaigns, as well as the adoption of phishing-resistant multi-factor authentication following the intrusion. “We are still conducting an active investigation and believe that this unauthorized access has been going on for some period of time before discovery. We have also started notifying relevant authorities,” said Smart. The firm also reported significantly increased login attempts that have hindered access to MongoDB Atlas and its Support Portal, and clarified that there was no correlation between the surge in login attempts and the breach of its systems.

MMS • Fran Mendez

Intro

Thomas Betts: Hi everyone. Before we start today’s podcast, I wanted to tell you about QCon London 2024. Our international software development conference takes place in the heart of London this April 8th to the 10th. Uncover senior practitioners’ points of view on emerging trends and best practices across topics like AI software architectures, generative AI, platform engineering, and modern software security. Explore what they’ve learned, techniques they’ve discovered, and the pitfalls to avoid to validate your ideas and plans. I’ll be there hosting a track on connecting systems with speakers talking about APIs, protocols, and observability. Learn more at qconlondon.com. We hope to see you there.

Hello, and thank you for joining us for another episode of the InfoQ podcast. I’m Thomas Betts, and today I’m speaking with Fran Mendez about AsyncAPI. Fran is the founder and former project director for AsyncAPI and director of engineering at Postman. I previously spoke to him about AsyncAPI back in 2021. Version Three has just come out and I wanted to catch up and see what’s changed. Fran, welcome back to the InfoQ Podcast.

Fran Mendez: Thank you Thomas. Thank you for having me. It’s a pleasure.

What is AsyncAPI? [01:01]

Thomas Betts: So to start off, for the listeners who might not be familiar with AsyncAPI or they haven’t used it yet, I think it’d be useful to back up and say, what is AsyncAPI? What are the problems that it solves and who is it for?

Fran Mendez: Sure. So AsyncAPI, I’ll say, it’s a format, it’s a specification to define interactions in between services or applications that exchange messages, right? What I said, it’s just very generic because I always like to keep it generic because it is what it is. But you can think about it like the exchange of messages that you do through Kafka or WebSocket. And AsyncAPI helps you define this communication in between different applications, as I said, or services. So it helps you say what your application is sending and receiving and what are the messages that are expected to come through and how you can validate them. And also, it’s not just a spec, it’s a bunch of tooling that we provide.

Thomas Betts: The AsyncAPI, if I recall, it came from OpenAPI, and I think people are familiar with that. Swagger became OpenAPI and it was, here’s how I’m going to specify the contracts that my API agrees to. And so, like you said, the validation is very important of that, like here’s the actual contract. And so this is similar, but it’s meant for more the async processing, event-driven architectures.

Fran Mendez: Correct. I tend to avoid using the word contract because of the many meanings of the contract testing and the contract word itself in tech. So yeah, I said definitions because you can use it for contract testing, you can use it for documenting or generating documentation, you can use it for validating on runtime to actually deploy infrastructure, infrastructure as code, and for a myriad of possibilities here. Yeah. And if you want to think about it like this, it’s the same as OpenAPI, formerly known as Swagger, and actually it started from the OpenAPI specification, that’s how I started the spec, and it’s meant for asynchronous interactions.

Documentation is the main use case, but not the only use [02:59]

Thomas Betts: Yeah, I’m glad you mentioned documentation because I think that’s always one of the things, especially with async workflows, communicating what is being communicated and explaining to other people and sometimes just having the ability to, in a consistent way, create that documentation is really, really useful. It just lowers the barrier to entry to using those services.

Fran Mendez: I agree. And it’s actually our main use case so far. It’s also the low hanging fruit of defining your interactions because, yeah, it’s pretty easy and quick to generate documentation, right? It’s the same for OpenAPI. Even though we’re trying to escape from that messaging that says that AsyncAPI is about documentation because we’ve seen that many people are missing the good parts of AsyncAPI, which is also code generation, runtime validation, infrastructure as code, and many other things that you could be doing. So documentation is going to be there, but we want to make clear that it’s not just for documentation. It’s not the documentation framework, right?

Thomas Betts: Those other tools are where some of the power really comes in and I think people who’ve gotten invested in the OpenAPI ecosystem have learned, “Oh, I have this contract that my API publishes and I just need to generate a client and I can just go find that swagger.json or YAML file and run it through and all of a sudden I’ve got hundreds or thousands of lines of client code that I don’t need to write and support and they work.”

Breaking changes in V3 – Publish and Subscribe [04:20]

Thomas Betts: So Version 3, what were some of the major goals with coming up with a new version and is this a major version in the sense of, there are some breaking changes?

Fran Mendez: Oh yeah, there are. We follow SemVer as much as we can, of course, which is usually… I mean, we follow it. The problem is that with specifications, it’s always hard to understand what’s going to be a breaking change and what’s not going to be a breaking change. Some people argue that everything is a breaking change. So yeah, we try to, let’s say, adapt to what the majority thinks or what we think the majority thinks is a breaking change here.

So Version Three, I’m glad you asked because it’s coming pretty soon actually. By the time we are publishing this podcast, it’ll be already out. So yeah. So it comes with lots of goodies, but the major goal that we had and the reason we started working on Version 3 is because of the confusion that most people had with publish and subscribe. Let me explain a little bit what I mean.

In AsyncAPI, you have two verbs, publish and subscribe, unlike in OpenAPI that you have get, put, post and all the HTTP verbs. In AsyncAPI, you have two. Thing is that it wasn’t so much about publish and subscribe meaning, it’s more about the perspective of this AsyncAPI definition. So this AsyncAPI definition, following the same approach as OpenAPI, is defining what others can do with your application. So if you see a subscribe, it means that you can subscribe to what my application is producing, and the same for publish.

This works well for REST APIs and client-server interactions, but in messaging, most of the interactions are client to client and the server is the broker. So that was highly confusing for people saying, “What does it mean that my clients can use it or can publish to me? I don’t have clients, I don’t even know who these clients are. I’m a client, I’m not a server.”

Yes, that was highly confusing. So we changed that to send and receive instead. But it’s not just a matter of changing the names. So we not only change the names because the problem will still persist, but we changed the perspective of what the document means.

When you have now an AsyncAPI document, this is not defining what others can do with your service or with your application, it’s defining what your application is doing. So if you see a send or receive, it means that your application is sending or is receiving. And if someone else is interested, then they will probably, and I say probably, have to do the reverse operation. And I’m saying probably because in messaging, just because an application is publishing to, let’s say, a Kafka broker, that doesn’t mean that consumers can connect to Kafka directly. So we see this a lot of times. So I’m pushing something to a Kafka broker, but my customers are probably consuming this information from an HTTP API or a WebSocket connection on another URL or maybe on another broker because some messages have been forwarded, some others are not.

That doesn’t mean that you have the permissions to subscribe to that same topic on Kafka. And the opposite is actually even, let’s say, more frequent, which is, it’s common to see the pattern where consumers, if you want to call it like that, consumers publish messages to a broker using an HTTP endpoint, which has enhanced authorization mechanisms that the broker usually doesn’t have or the underlying protocol like Kafka doesn’t have for instance. But then you can subscribe to them using the Kafka protocol for instance.

I want to clarify that, that the perspective changed, so that’s really important because that means that it’s no longer from the same perspective as OpenAPI or Swagger if you want. And yeah, we thought about it for two years. We interviewed a bunch of people, we got a lot of use cases from different people, and this is the approach it seemed to make the most sense.
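The change of perspective described above can be sketched in a few lines. This is a minimal illustration, not the project's own converter: the documents are reduced to plain Python dictionaries, the channel names (`userSignedUp`, `userDelete`) and the helper `to_v3_operations` are hypothetical, and only the verb mapping is modeled. The assumption, taken from the explanation above, is that a Version 2 `subscribe` means others can subscribe to what the application produces (so the application sends), and a Version 2 `publish` means others can publish to the application (so the application receives).

```python
# Version 2 style: verbs describe what OTHERS may do with this application.
V2_DOC = {
    "asyncapi": "2.6.0",
    "channels": {
        "userSignedUp": {
            # Others can subscribe, so this application produces the message.
            "subscribe": {"message": {"payload": {"type": "object"}}},
        },
        "userDelete": {
            # Others can publish, so this application consumes the message.
            "publish": {"message": {"payload": {"type": "object"}}},
        },
    },
}

# Version 3 style: verbs describe what THIS application does.
V2_TO_V3_ACTION = {"subscribe": "send", "publish": "receive"}

def to_v3_operations(v2_doc: dict) -> dict:
    """Derive Version 3 style operations (application perspective) from V2 verbs."""
    operations = {}
    for channel_name, channel in v2_doc["channels"].items():
        for v2_verb, v3_action in V2_TO_V3_ACTION.items():
            if v2_verb in channel:
                operations[f"{v3_action}_{channel_name}"] = {
                    "action": v3_action,
                    "channel": {"$ref": f"#/channels/{channel_name}"},
                }
    return operations

for op_id, op in to_v3_operations(V2_DOC).items():
    print(op_id, "->", op["action"])
```

Reading the result, each operation now states directly whether the application itself sends or receives on a channel, which is the point of the Version 3 model.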

Benefits of the new structure [08:19]

Thomas Betts: That makes sense that it started with OpenAPI. Like if you understand the origin story that, oh, here’s a specification that is kind of defining the definitions of what my service does, and that seemed like a good place for starting with async messaging. But as you got it more evolved over time, that model started to break down. I like the explanation that everyone understands how an API web server works, like HTTP requests, you send a request, you get a response, but the fact that what you are sending and receiving from AsyncAPI, you’re not necessarily talking about that server being the thing you can connect to. And so what comes out one side is not what someone else can consume. Is there still value in saying that? Is that for other people to observe or is it more for you to be able to put that in for documentation, to put it in for tooling and infrastructure needs? What’s the benefit of having that more clearly defined in the structure now?

Fran Mendez: So now this new approach lets you do something that was impossible before. So now it lets you separately define where do you publish the messages and where do you have to subscribe to receive them, as I explained, right? So that’s the example I just was explaining. But it also lets you do something that some people are, let’s say detractors of this approach, but we’re seeing the same problem in OpenAPI and it lets you define what the client, let’s say… I hate to say client, let’s say consumer, because everything is a client here. So what the consumer is able to do, right? So that means that before you had a single AsyncAPI file, and from that AsyncAPI file, you could generate the server or the client the same as you do with OpenAPI. And in some cases, you can still do server and client. So for instance, with WebSockets, you can have a WebSocket server. It’s a proper server, it’s not a client. But you can also generate the WebSocket client. Well, that’s a broken model that we found.

That’s also a broken model in OpenAPI that many people are complaining about because there are many fields in the definition that are either meant for one or the other. So for instance, if you have a description or a summary or an operation ID, and this applies both to AsyncAPI and OpenAPI, you’re probably writing it like, “This endpoint accepts a request from the client,” Blah, blah, blah. That’s the server’s perspective, right? It’s talking about what the server is doing. If the operation ID is saying OnPostUser, imagine that the ID of the operation is called OnPostUser, you would expect that this is the server code that is handling a POST request to the Users endpoint. Let’s say if you want to do the same thing from the client and generate the client from the same AsyncAPI document or OpenAPI document, it works for both. You’ll get a code generated that says OnPostUser, and that is not “On.” That is actually sending the post request.
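The naming problem is easy to reproduce with a toy generator. The sketch below is hypothetical: the `OPERATION` dictionary and the `server_stub` and `client_stub` helpers are invented for illustration and do not correspond to any real code generator. It only shows that reusing one operation ID for both sides yields a client function whose name describes the server's behavior.

```python
# Hypothetical operation entry; the field names are simplified for illustration.
OPERATION = {"operationId": "onPostUser", "method": "POST", "path": "/users"}

def server_stub(op: dict) -> str:
    """Server side: the 'on...' name fits, because the function reacts to a request."""
    return f"def {op['operationId']}(request): ...  # handles {op['method']} {op['path']}"

def client_stub(op: dict) -> str:
    """Client side: same name, but the generated function now sends the request."""
    return f"def {op['operationId']}(payload): ...  # sends {op['method']} {op['path']}"

print(server_stub(OPERATION))
print(client_stub(OPERATION))
```

Both stubs are named `onPostUser`, yet only the first one is actually an "on" handler.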

There are many things in a spec that are multiple things, not many, but a bunch of them in the specs that tie them together to a client or a server to one of the perspectives.

What we are suggesting now with V3 is that if you’re going to have an AsyncAPI document for your, let’s say, “server,” I’m quoting here with my hands even though you cannot see it. So if you’re going to have an AsyncAPI document for your “server,” that doesn’t mean that you can reuse it for the “client.” So we tell people to create another one for the “client.” And the reason is that, like I said, just because I’m subscribing or publishing to a topic, that doesn’t mean that you will be able to do the reverse operation on that topic. That’s common. We commonly found that this is not possible.

And it’s also that just because I’m, let’s say, sending a message to three different brokers, that doesn’t mean that you should have access to these three different brokers. I might just want you to access one of them. So you anyway need a subset of my document, not the whole document.

Create AsyncAPI files for producer and consumer [12:17]

Thomas Betts: You’re saying you have two separate files, the server file and the client file, I know you said server and client is confusing, but the producer side, you’re still the one creating both of those documents?

Fran Mendez: Ideally, yes. That’s usually the case. If you’re going to publish it for public usage, for public consumption, then you should probably craft the ideal client experience there and interactions that they can do. But if it’s something internal, you might just want to have one single file, right? One single AsyncAPI file and then let the rest of the teams to figure out how to actually communicate with you. We also see it often. Like, it’s internal, we know how to do it. Where you’re publishing, I can actually subscribe, so that’s not a problem. That is usually not unusual, right?

But yeah, what I’m saying is that you should be crafting these two files if possible, and we’ll be offering tools for that. In case your main AsyncAPI file can be just simply translated to, let’s say, the client version or the outsider version if you want, by directly reversing the verbs, send and receive, then we’re going to offer a tool for you to quickly change the verbs and so you don’t have to worry about the rest. Yeah, so it will be something quick. But yeah, the spec now defines that it’ll be what a specific application is doing.

Thomas Betts: Just to extrapolate this out, and this is the naive person’s thing, but let’s just say we have two systems that are talking to each other and they’re sending messages back and forth. System A is going to have a send and receive set of documents, and if I create both of those, then the other service uses the flip side, but don’t they also have to create their own two copies? Don’t we end up with four copies of AsyncAPI documents or have I gone off the rails and that doesn’t make any sense?

Fran Mendez: What I mean, so you can have all of them in a single AsyncAPI file, right? So I’m not saying that you should be creating one for send another one for receive, but it’s instead you create an AsyncAPI document for your application, right? Whatever that means. Let’s say you have client A or system A sending messages, but also receiving messages in different channels. I’m going to create a single AsyncAPI file where I put all this information. My application is sending and receiving here and here and here. On system B, I’ll probably be doing the flip side, as you said, of system A or the flip side of some of them, not all of them because I might not be interested in all the things that you’re doing on system A, and I may also be doing some other operations on system B that are completely different from system A. So it’ll be the flip side. If it’s all internal, most likely where system A is sending, system B will be able to receive directly. So that’s why we’re going to offer that tool so that you can quickly switch the verb if you want.
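The "flip side" idea for the internal case can be sketched as follows. This is an assumption-laden illustration rather than the tool mentioned in the conversation: the operation names, channel references, and the `counterpart_operations` helper are all hypothetical, and the documents are reduced to a dictionary of operations. The optional `only` argument models system B caring about just a subset of what system A does.

```python
FLIP = {"send": "receive", "receive": "send"}

def counterpart_operations(operations: dict, only=None) -> dict:
    """Return a copy of the operations with send/receive reversed.

    `only` optionally restricts the result to a subset of operation IDs.
    The input dictionary is left unchanged.
    """
    flipped = {}
    for op_id, op in operations.items():
        if only is not None and op_id not in only:
            continue
        flipped[op_id] = {**op, "action": FLIP[op["action"]]}
    return flipped

# System A describes what it does itself.
SYSTEM_A = {
    "orderCreated": {"action": "send", "channel": {"$ref": "#/channels/orderCreated"}},
    "auditEvent": {"action": "send", "channel": {"$ref": "#/channels/auditLog"}},
    "paymentSettled": {"action": "receive", "channel": {"$ref": "#/channels/paymentSettled"}},
}

# System B mirrors only the operations it is interested in.
SYSTEM_B = counterpart_operations(SYSTEM_A, only={"orderCreated", "paymentSettled"})

for op_id, op in SYSTEM_B.items():
    print(op_id, "->", op["action"])
```

A plain flip like this only holds when the counterpart can reach the same channels. For a public or partner consumer the channel references would likely differ, which is why a hand-crafted document is suggested for that case.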

But if system B is, let’s say, a public client or a partner client, then you probably want to offer them that system B AsyncAPI file, or at least the operations that they should have in their system B AsyncAPI file, so you might want to craft that. Because like I said, maybe they cannot receive the messages directly in the same channel where they were published initially by system A. We learned over the last two years that in client-server, it’s a one-to-one relationship, so it’s always the client and the server, there’s no one else. There might be some actors in the middle, but they act transparently as the server. But in event-driven architectures, what we learned is that this is not the same. This is a many-to-many relationship. This is actually a mesh of multiple nodes talking to each other, usually through a broker. So we definitely had to adopt a different perspective when it comes to how to define these communication patterns.
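To illustrate the verb switch described above, here is a minimal sketch, not the official AsyncAPI tool. It assumes a heavily simplified document shape; the `AsyncApiDoc` type, the `flipVerbs` helper, and the channel and operation names are all hypothetical. The only parts taken from the spec are the `send` and `receive` actions and the fact that V3 operations point at channels by reference.

```typescript
// Hypothetical, simplified shapes: only the fields this sketch needs.
type Action = "send" | "receive";

interface Operation {
  action: Action;
  channel: { $ref: string };
}

interface AsyncApiDoc {
  asyncapi: string;
  info: { title: string; version: string };
  channels: Record<string, { address: string }>;
  operations: Record<string, Operation>;
}

// System A's own view: what *this* application does.
const systemA: AsyncApiDoc = {
  asyncapi: "3.0.0",
  info: { title: "System A", version: "1.0.0" },
  channels: {
    userSignedUp: { address: "user/signedup" },
    orderCreated: { address: "order/created" },
  },
  operations: {
    userSignedUpOp: { action: "send", channel: { $ref: "#/channels/userSignedUp" } },
    orderCreatedOp: { action: "receive", channel: { $ref: "#/channels/orderCreated" } },
  },
};

// flipVerbs is a hypothetical helper. It returns a copy of the document
// in which every "send" becomes "receive" and vice versa; channels are
// left untouched and the input document is not modified.
function flipVerbs(doc: AsyncApiDoc, title: string): AsyncApiDoc {
  const operations: Record<string, Operation> = {};
  for (const [name, op] of Object.entries(doc.operations)) {
    operations[name] = { ...op, action: op.action === "send" ? "receive" : "send" };
  }
  return { ...doc, info: { ...doc.info, title }, operations };
}

const systemB = flipVerbs(systemA, "System B");
console.log(systemB.operations.userSignedUpOp?.action); // receive
```

A blanket flip like this only fits the all-internal case from the conversation. For a public or partner client, the flipped document would still need hand editing, for example to drop operations the other side is not interested in.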

Thomas Betts: Like I said, it’s clearly a breaking change through Version 3?

Fran Mendez: Yes.

Other breaking changes in V3 – Request-reply [16:12]

Thomas Betts: Was that the only thing or did you take the time to add other new features that were maybe not related to that or possibly related to that, but had other benefits?

Fran Mendez: So one thing that we learned the hard way was, we thought, now that we’re going to break AsyncAPI and produce a Version 3, we cannot just ship it with that breaking change, because then people will be like, “I don’t care. This is just your problem. It’s fixing a problem of yours. I’m still fine with Version 2, so I don’t care, I’m not going to migrate.” But because we want to encourage people to keep migrating and growing with us, with the future of the spec and the direction the spec has taken, we said, okay, we’re going to add some more features, valuable features for the users, something that they will actually like to use, love to use. So we are introducing request-reply support in AsyncAPI. And to me, that is an amazing addition because, unlike OpenAPI, which has request-response over HTTP, we also have it over HTTP, but you can do different request-reply patterns over brokers as well. For instance, I can give you an example.

Over brokers, let’s say, request-reply often works like this: you publish a message on a specific topic and then you might receive the reply on a different topic. Or you publish a message on a given topic and, at the same time, you don’t know where you’re going to receive the reply, but the message that you’re publishing to a given topic will contain metadata saying, “I want you to reply to this other topic.” So this new topic will probably have to be generated on the fly, at runtime, and then the client or the consumer will have to subscribe to that other topic to receive the reply. The client is often the same, so it’s the same kind of request-reply pattern that you see over HTTP. It’s just that in many cases in event-driven architectures, it’s not over HTTP, it’s over some other protocol like AMQP, MQTT or Kafka, and then you don’t have responses as such. So yeah, you have to model them differently.

And all these cases have been considered for Version 3, and you can define these kinds of interactions now with Version 3.
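The second case described above, where the request carries the topic to reply to, can be sketched with a toy in-memory broker. This is an illustration of the pattern only, not AsyncAPI tooling and not a real Kafka, MQTT or AMQP client. The `InMemoryBroker` class, the `replyTo` header name and the topic names are assumptions made for the example.

```typescript
// Toy in-memory broker: a stand-in for Kafka, MQTT, AMQP and so on.
interface Message {
  headers: { replyTo?: string }; // hypothetical header naming the reply topic
  payload: string;
}
type Handler = (msg: Message) => void;

class InMemoryBroker {
  private handlers = new Map<string, Handler[]>();

  subscribe(topic: string, handler: Handler): void {
    const list = this.handlers.get(topic) ?? [];
    list.push(handler);
    this.handlers.set(topic, list);
  }

  publish(topic: string, msg: Message): void {
    for (const handler of this.handlers.get(topic) ?? []) handler(msg);
  }
}

const broker = new InMemoryBroker();

// Responder: receives on a well-known topic and replies to whatever
// topic the request names in its replyTo header.
broker.subscribe("price/request", (msg) => {
  const replyTopic = msg.headers.replyTo;
  if (replyTopic === undefined) return; // nowhere to reply
  broker.publish(replyTopic, { headers: {}, payload: `price of ${msg.payload}: 42` });
});

// Requester: creates a reply topic at runtime, subscribes to it first,
// then publishes the request carrying that topic as metadata.
let requestCounter = 0;
function request(payload: string): string | undefined {
  requestCounter += 1;
  const replyTopic = `price/reply/${requestCounter}`;
  const replies: string[] = [];
  broker.subscribe(replyTopic, (msg) => { replies.push(msg.payload); });
  broker.publish("price/request", { headers: { replyTo: replyTopic }, payload });
  return replies[0]; // delivery is synchronous in this toy broker
}

console.log(request("widget")); // price of widget: 42
```

With a real broker the reply arrives asynchronously, so the requester would await it, typically with a timeout, rather than read it right after publishing.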

Thomas Betts: Yeah, that seems like something that, like you said, came from OpenAPI and that wasn’t a model that existed and it wasn’t simple request-response works, it’s request and reply, and so that’s a different pattern specific to event-driven architectures. And I think that’s what makes AsyncAPI so powerful is it started from OpenAPI, but it was focused on the EDA approach and now you’re trying to bring in more and more of those patterns of here’s how you do a good event-driven architecture, and here’s the patterns we see, and now AsyncAPI is capturing more of those patterns as something you can just document as opposed to, we only have this small set of verbs and nouns we could use to describe what we wanted to do, and now request-reply is a first-class citizen. Is that accurate to describe what’s going on?

Fran Mendez: Correct. Yeah, exactly. I will just add that in AsyncAPI, we didn’t want to define, let’s say, the EDA landscape, it was more like protocol agnostic, so we were just focused on defining the interactions, whether they were over HTTP or any other protocol. So that’s why it’s still possible to document your REST API using V3. You can do it. We don’t yet, let’s say, suggest it or recommend it because we’re not yet there when we compare to OpenAPI when it comes to HTTP-specific features, but we’re getting there. We’re actually getting to a point where you’ll be able to document everything, I mean REST APIs and event-driven architectures with AsyncAPI.

Should you upgrade to V3? [19:52]

Thomas Betts: I think we grossly categorize our users as two groups, the people who are using AsyncAPI now and are probably on version 2 and people who haven’t yet used it. If you’re on version 2, what is the impetus? Why should they upgrade to Version 3 and what are the steps and what effort does it take for them to get there?

Fran Mendez: Reasons to migrate to Version 3 if you’re on Version 2? I’ll say don’t do it unless you need it, right? So let’s be pragmatic here. If you think you’re going to need request-reply, for instance, go ahead and migrate. The same goes if maintaining V2 is becoming hell for you because of publish and subscribe, or because you cannot reuse channel definitions, which is not possible in V2 but is in V3. So if all these things are becoming a pain for you, then migrate to V3. If you’re just fine with V2, then continue using V2. We’re going to keep supporting it for years, so that’s not going to be a problem.

And the best way to start, I would say, is through our tools. So just go to asyncapi.com and you’ll find Studio and many other tools, like the AsyncAPI CLI. You have a bunch of tools that will let you convert your V2 documents to V3 quickly, and you can even pass a bunch of them at the same time and they will all be converted together.

Improved maintainability [21:08]

Thomas Betts: You mentioned maintainability. So what are some of the things that people struggle with in maintaining their AsyncAPI files? If I recall, they’re just YAML, or maybe it’s JSON just like OpenAPI, but a lot of times, people don’t like editing those by hand. Are there things that are easier to do with V3 or is it just different tools? You mentioned Studio and CLI and other stuff.

Fran Mendez: So that’s the same. It remains YAML and JSON. That’s the spec. That’s the machine-readable format. I also hate to edit AsyncAPI files and OpenAPI files by hand. That sucks. And the learning curve is really steep. So one of the things that we’re going to be focusing on in 2024, at least on my team at Postman, is making that experience a little bit better. So not so much about automating everything… Actually, even though with AI we could probably automate a bunch of stuff, the thing is that nobody likes to edit AsyncAPI files by hand. So some things we’re going to be providing on the CLI, on Studio and other tools are going in that direction of giving you the tools so you don’t have to edit AsyncAPI files by hand. So you can edit them using your mouse, clicking here and there, with instructions and even AI. As I said, that’s something that we were exploring, and we have to continue in this kind of direction, so yeah. But that’s for 2024.

V3 was over two years in the making [22:28]

Thomas Betts: Always good to have something else on the roadmap. How long have you been working on Version 3? You mentioned two years?

Fran Mendez: Two years.

Thomas Betts: You were already working on this the last time we had you on the show.

Fran Mendez: Yes, we were already working on it. I even started a podcast series myself because of that, right? So I started interviewing different people so they could give me their perspective and their use cases and so on, and it resulted in a podcast series. So yeah, that was funny.

Thomas Betts: So you talked a little bit about some of the tools, and I know that’s really the benefit, especially on, I know, OpenAPI, I keep going back to that. The tooling is what makes it so useful and so powerful, and version 2 has a lot of the tools for AsyncAPI established. Are those tools going to have to be upgraded to support Version 3? And when do you expect that to take place? Are people already working on updating the tools?

Fran Mendez: It’s already there. So we got Studio, we got the AsyncAPI Parser, we got Generator, Glee, CLI, Modelina, we have a bunch of tools that let you do different things with AsyncAPI, and they’re already migrated. Even at the time we’re talking right now, it’s already migrated. We made sure that everything was working before we released V3, right?

Thomas Betts: That’s good to know.

Fran Mendez: Yes, because that’s another thing. So we postponed the release of Version 3 for almost six months because of this, because we wanted to give a really good user experience to those who are trying it, right? And yeah, we can release Version 3, but if it’s not usable, who cares?

Getting started with AsyncAPI [23:56]

Thomas Betts: Right. You need to be able to use it. So we’ve talked about the people who have to migrate from version 2 and whether they should or not. What about someone who hasn’t used this at all? Should they start with Version 3? Is there a reason to start with version 2? Is it more their needs or what should the newbies do?

Fran Mendez: No, no. Go straight to Version 3 if you don’t want to suffer the same pains we did. Yeah, yeah, definitely start with Version 3 because actually it’s the latest one, it’s stable, it’s going to be well maintained, like V2 of course, but V2 is not going to be developed anymore. It’s going to be supported, but we’re not going to make changes to V2 anymore. So everything that’s new is going to be on Version 3, 3.1, 3.2, even 4 depending on the kind of change. So if you’re starting new, starting fresh, start with Version 3 because also, the vendors that are supporting AsyncAPI are already migrating their products to support Version 3 as well. So you’re not going to encounter any kind of block because of using Version 3.

Thomas Betts: And then if someone wants to get started, what is the basic ‘hello world’ for AsyncAPI?

Fran Mendez: The best way to get a simple case working is to go to the asyncapi.com website and the docs, and you’ll find it there. You have a getting started guide there with a simple hello world example. It’s a little bit long to read here. I’ll say it’s just the AsyncAPI field and the info object with the title and version, and that’s it, actually. You don’t need anything else. We made channels optional and operations optional, so everything else is optional. The minimal document will be just the AsyncAPI field and an info field, that’s it, with title and version. But yeah, a document like that doesn’t make much sense on its own.
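The minimal document described above can be written out as a plain object. This is a sketch only: the title and version values are made up, and `hasRequiredFields` is a hypothetical check written for this example, not the official AsyncAPI parser or its schema validation.

```typescript
// The smallest document described above: the asyncapi field plus an
// info object with title and version. The values are illustrative.
const minimalDoc = {
  asyncapi: "3.0.0",
  info: { title: "Hello world application", version: "0.1.0" },
};

// Hypothetical check for this sketch only. It looks for the fields
// named in the conversation and nothing else.
function hasRequiredFields(doc: unknown): boolean {
  if (typeof doc !== "object" || doc === null) return false;
  const d = doc as { asyncapi?: unknown; info?: { title?: unknown; version?: unknown } };
  return (
    typeof d.asyncapi === "string" &&
    typeof d.info === "object" &&
    d.info !== null &&
    typeof d.info.title === "string" &&
    typeof d.info.version === "string"
  );
}

console.log(hasRequiredFields(minimalDoc)); // true
console.log(JSON.stringify(minimalDoc, null, 2));
```

Pasting the JSON output into Studio is one way to try it, with channels and operations added once there is something to describe.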

Thomas Betts: As you said, the documentation is like the low hanging fruit. Is that the best hello world is just use it to document my API, don’t use it to auto generate anything yet, just start with the documentation so you learn what it’s there for?

Fran Mendez: So actually I’ll suggest that you go to studio.asyncapi.com and try there. So start there with the examples that you see in the documentation, and on the right side of Studio, you will immediately see the documentation generated. That’s why I was saying it’s a low hanging fruit because it’s immediate. As you type, you get it, and it’s easy to see. You can download this documentation, you can generate it as HTML, Markdown or whatever you want to do, even use our React component to embed it in your website. I mean, React component and web component as well. So yeah, that is something that is really easy to get started.

But if you’re more in the code side and you don’t care so much about documentation, I’ll suggest that you give Glee an opportunity there. So we have a framework called Glee. For those who don’t know what glee means, it’s like happiness, right?

We gave it this name because that’s what we wanted developers to feel while using the framework. So it’s like, “Oh, that is really easy to get started.” So if you’re creating a WebSocket API or MQTT client or a Kafka client or something like that, give it a go, give it a spin because it’s really easy to get started. And the cool thing is that Glee will make sure that you only have to worry about your business logic, you don’t have to worry how to connect to Kafka or how to validate the messages and so on. No, no, no, that’s all handled by Glee, and you only have to write your business logic on the functions that you’ll find there. It’s really easy to get started. And if you’re not a JavaScript guy, you have TypeScript as well.

Plans for V4 [27:11]

Thomas Betts: So that covers V3. What’s on the roadmap? Is there going to be a V4? Is that a long way down the road, and do you think V3 has answered a lot of the needs for now?

Fran Mendez: There’s going to be a V4 for sure. We don’t know yet what it’s going to contain, but something we learned during the last two years is that we shall not accumulate changes. Just because it’s a major version, that doesn’t mean that we have to accumulate changes like crazy there. So most likely there’s going to be something in the upcoming year or two years, but I’m sure that in 2024, most likely something will pop up that will make us break the spec again and release V4, hopefully. I mean, hopefully. We always try to do it for the good. And we are starting to talk about adopting a more fluent way of releasing new major versions so that we don’t accumulate changes in the first place. And the fact that we release version 4 doesn’t mean that you have to adopt version 4 immediately, right? So we’ll make it case by case. So if we think it makes sense, go ahead and adopt it, if it doesn’t, then we’re not even going to suggest it.

And there is something, Thomas, if you don’t mind, there’s something I wanted to add. We are also releasing a new version of our parser. So that’s the tool that helps validate the documents and convert them to a structure, in this case in TypeScript and JavaScript, and we’re releasing a new parser with what we call an intent-driven API. So I won’t explain, because that’s for another episode, but I’ll tell the audience to have a look at it, because if you’re building tools for AsyncAPI or a product at any company, and it happens that you have to use the parser, adopt the new parser because it’s made in a way… So the API is made in a way that it’s going to save you from future breaking changes as much as possible, right? So the API of the parser no longer maps the structure of the spec one to one like it did before. So now if the structure changes, your code remains the same, you just have to upgrade to the latest version of the parser and it’ll continue working.
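The idea behind an intent-driven API can be sketched without the real parser. Everything below is hypothetical: the `DocumentModel` interface, the `fromV2` and `fromV3` adapters, and the simplified document shapes are invented for illustration and are not the actual parser API. The sketch also relies on one reading of the V2 spec, namely that a V2 `subscribe` operation describes messages the application sends.

```typescript
// Hypothetical, heavily simplified document shapes for illustration.
interface V2Doc {
  channels: Record<string, { publish?: object; subscribe?: object }>;
}
interface V3Doc {
  // Real V3 operations reference channels by $ref; a plain address
  // string is used here to keep the sketch short.
  operations: Record<string, { action: "send" | "receive"; channel: string }>;
}

// The intent-driven surface: callers ask "what does the app send?"
// instead of walking the raw structure of one spec version.
interface DocumentModel {
  sendChannels(): string[];
}

function fromV2(doc: V2Doc): DocumentModel {
  return {
    // Assumption: in V2, "subscribe" marks what the application sends.
    sendChannels: () =>
      Object.entries(doc.channels)
        .filter(([, channel]) => channel.subscribe !== undefined)
        .map(([address]) => address),
  };
}

function fromV3(doc: V3Doc): DocumentModel {
  return {
    sendChannels: () =>
      Object.values(doc.operations)
        .filter((op) => op.action === "send")
        .map((op) => op.channel),
  };
}

// Tooling code is written once against DocumentModel and does not
// change when the underlying document structure does.
function summarize(model: DocumentModel): string {
  return `sends to: ${model.sendChannels().join(", ")}`;
}

const v2Summary = summarize(fromV2({ channels: { "user/signedup": { subscribe: {} } } }));
const v3Summary = summarize(
  fromV3({ operations: { signup: { action: "send", channel: "user/signedup" } } }),
);
console.log(v2Summary); // sends to: user/signedup
console.log(v3Summary); // sends to: user/signedup
```

The point of the design is that `summarize` never touches `channels` or `operations` directly, so a structural change in the spec only requires a new adapter.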

Thomas Betts: We’ll include that and everything else in the show notes, links to everything. So I want to thank Fran Mendez for joining me today on the InfoQ Podcast.

Fran Mendez: Thank you. Thanks a lot. Really glad to join you and the InfoQ Community. It’s a pleasure.

Thomas Betts: And listeners, we hope you’ll join us again for a future episode. See you then.


From this page you also have access to our recorded show notes. They all have clickable links that will take you directly to that part of the audio.

MMS • RSS

The North Face and Vans owner VF Corporation has been hit by ransomware, making it (The Stack believes) the first company to disclose a “material” security incident under new SEC rules effective today.

MMS • RSS

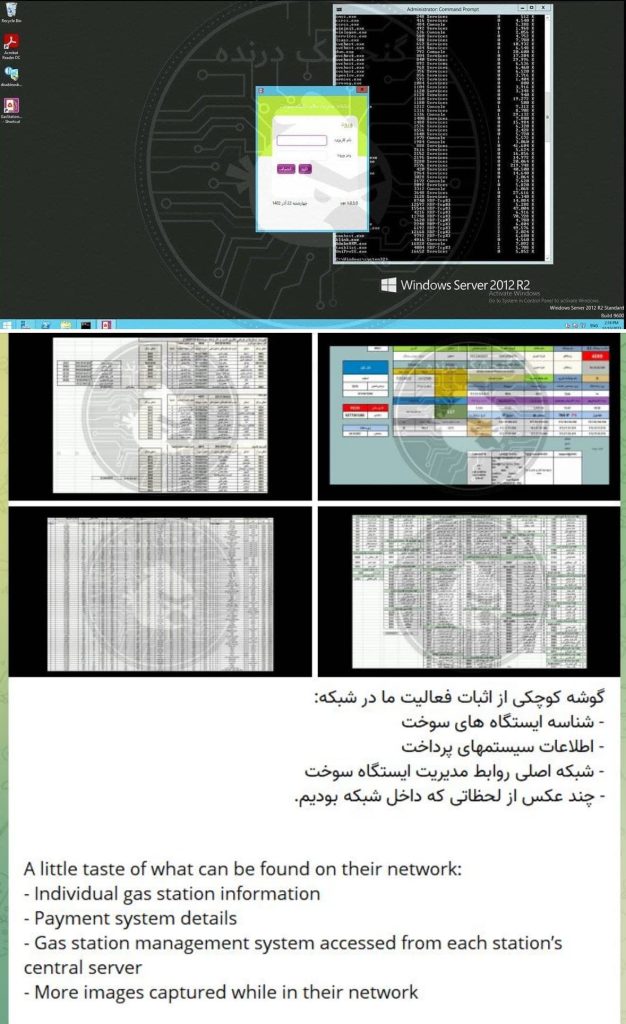

The responsibility for the attack has been claimed by “Gonjeshke Darande” (which means Predatory Sparrow in Persian), a hacker group with pro-Israeli sentiments.

A hacking group linked to Israel has claimed responsibility for a cyberattack that reportedly disrupted most gas stations in Iran, leading to long lines and angry crowds. The group, known as “Gonjeshke Darande” (which means Predatory Sparrow in Persian), said that the attack was in response to the Islamic Republic’s aggression.

In a series of posts on X (formerly Twitter) in both English and Persian, the group explained the reason behind the attack:

“We, Gonjeshke Darande, carried out another cyberattack today, taking out a majority of the gas pumps throughout Iran. This cyberattack comes in response to the aggression of the Islamic Republic and its proxies in the region.”

Addressing Iran’s leader Ayatollah Ali Khamenei, the group said he is playing with fire and will pay the price.

“Khamenei, playing with fire has a price.”

In a statement given to Iran’s state TV, the country’s Oil Minister Javed Owji said that the attack was caused by external interference and led to service disruption at 70% of Iran’s gas stations. At least 30% of Iran’s gas stations are operational, with the rest gradually resolving service disruptions.

Some petrol stations, particularly in the capital, have experienced software issues with their fuel systems, and experts are working to fix the issue. The possibility of a cyberattack is also being considered.

Gonjeshke Darande revealed that the attack was conducted in a controlled manner, limiting potential damage to emergency services and ensuring a portion of gas stations were left unharmed. The group also emphasized the precautions taken to avoid harm to civilians, claiming that these companies are subject to international sanctions and continue their operations despite restrictions.

“We delivered warnings to emergency services across the country before the operation began, and ensured a portion of the gas stations across the country were left unharmed for the same reason, despite our access and capability to completely disrupt their operation,” the attackers noted.

This isn’t the first time Gonjeshke Darande has targeted Iranian infrastructure. It claimed responsibility for a cyberattack on Iran’s major steel companies in June 2022. The attack started a fire in a steel factory, releasing top secret documents proving the companies’ affiliation with Iran’s Revolutionary Guard Corps. The plant’s CEO confirmed no harm

The country’s civil defence agency is currently investigating the Monday attack on Iran’s gas stations, and Israeli media has covered the alleged attack. It is worth noting that there has been no statement from the Israeli government about the cyberattack.
