At the recent Google Cloud Next 2025, Google Cloud announced the preview of Firestore with MongoDB compatibility, introducing support for the MongoDB API and query language. This capability allows users to store and query semi-structured JSON data within Google Cloud’s real-time document database.

Firestore with MongoDB compatibility is backed by a serverless infrastructure offering single-digit-millisecond read performance, automatic scaling, and high availability. Minh Nguyen, senior product manager at Google Cloud, and Patrick Costello, engineering manager at Google Cloud, stated:

“Firestore developers can now take advantage of MongoDB’s API portability along with Firestore’s differentiated serverless service, to enjoy multi-region replication with strong consistency, virtually unlimited scalability, industry-leading high availability of up to 99.999% SLA, and single-digit milliseconds read performance.”

The backend of Firestore automatically replicates data across availability zones and regions, ensuring no downtime or data loss. Future developments will include data interoperability between Firestore’s MongoDB compatible interface and Firestore’s real-time and offline SDKs.

.ai-rotate {position: relative;}

.ai-rotate-hidden {visibility: hidden;}

.ai-rotate-hidden-2 {position: absolute; top: 0; left: 0; width: 100%; height: 100%;}

.ai-list-data, .ai-ip-data, .ai-filter-check, .ai-fallback, .ai-list-block, .ai-list-block-ip, .ai-list-block-filter {visibility: hidden; position: absolute; width: 50%; height: 1px; top: -1000px; z-index: -9999; margin: 0px!important;}

.ai-list-data, .ai-ip-data, .ai-filter-check, .ai-fallback {min-width: 1px;}

Details about supported query and projection operators and features by MongoDB API version are available, along with limitations and behavior differences. Text search operators are currently unsupported.

For more information, explore the following links:

MongoDB announced several updates at Google Cloud Next ’25, emphasizing their collaboration with Google Cloud.

MongoDB Atlas has expanded availability to Google Cloud regions in Mexico and South Africa. The company was recognized as the 2025 Google Cloud Partner of the Year for Data & Analytics – Marketplace, marking MongoDB’s sixth consecutive year as a partner of the year.

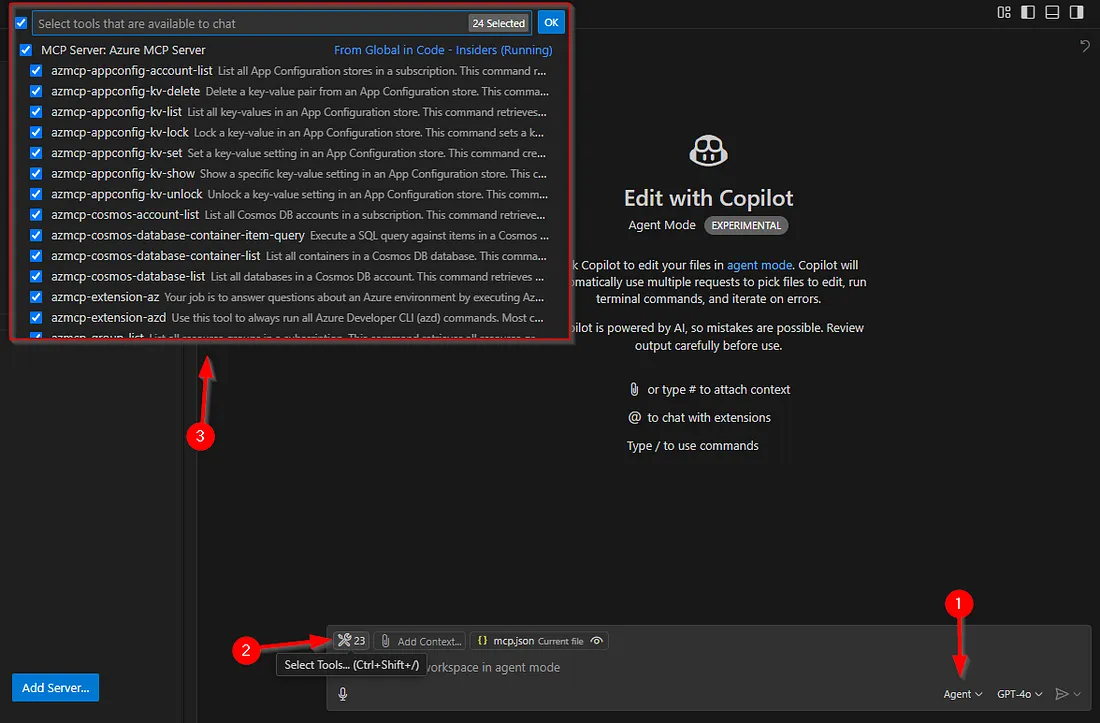

MongoDB is enhancing developer productivity through integrations with Google Cloud’s Gemini Code Assist. This tool allows developers to access MongoDB documentation and code snippets directly within their Integrated Development Environments (IDEs).

Additionally, MongoDB is now integrated with Project IDX, which helps developers set up MongoDB environments quickly. Developers can also easily integrate MongoDB Atlas with Firebase, using a new Firebase extension for MongoDB Atlas for real-time synchronization.

For more details, visit:

Google Cloud introduced numerous database enhancements at its Next 2025 conference, including new AI features in AlloyDB and a MongoDB-compliant API for Firestore. AlloyDB has incorporated the open-source pgvector extension, allowing storage and querying of vector embeddings.

In April 2024, Google Cloud added the Scalable Nearest Neighbor (ScaNN) algorithm to AlloyDB, significantly enhancing performance metrics. This was highlighted by Andi Gutmans, VP & GM for databases at Google:

“We’re seeing thousands and thousands of customers doing vector processing. Target went into production with AlloyDB for their online retail search and they have a 20% better hit rate on recommendations. That’s real revenue.”

For more on this, check:

Google Cloud Migration Center simplifies the transition from on-premises servers to Google Cloud, which significantly enhances performance and reduces operational costs. The integration of MongoDB cluster assessment into the Migration Center allows for deeper insights into MongoDB deployments.

Using Migration Center provides significant advantages, including:

- Automated discovery and inventory of MongoDB clusters.

- Enhanced understanding of configuration and resource utilization.

- A unified platform for asset discovery and migration tools.

For further insights, see:

MongoDB Atlas now supports native JSON for BigQuery, simplifying data transformations and reducing operational costs. This integration allows businesses to analyze data with better flexibility and efficiency.

MongoDB also introduced support for Data Federation and Online Archive, enabling users to manage and archive cold data seamlessly.

Discover more at:

For a secure and smooth login experience, explore passwordless authentication solutions at mojoauth. Quickly integrate passwordless authentication for web and mobile applications to enhance user experience and security. Contact us today to learn more about our services!

*** This is a Security Bloggers Network syndicated blog from MojoAuth – Go Passwordless authored by Dev Kumar. Read the original post at: https://mojoauth.com/blog/google-cloud-enhances-databases-with-firestore-and-mongodb-features/