Month: June 2025

MMS • RSS

Artificial intelligence is the greatest investment opportunity of our lifetime. The time to invest in groundbreaking AI is now, and this stock is a steal!

AI is eating the world—and the machines behind it are ravenous.

Each ChatGPT query, each model update, each robotic breakthrough consumes massive amounts of energy. In fact, AI is already pushing global power grids to the brink.

Wall Street is pouring hundreds of billions into artificial intelligence—training smarter chatbots, automating industries, and building the digital future. But there’s one urgent question few are asking:

Where will all of that energy come from?

AI is the most electricity-hungry technology ever invented. Each data center powering large language models like ChatGPT consumes as much energy as a small city. And it’s about to get worse.

Even Sam Altman, the founder of OpenAI, issued a stark warning:

“The future of AI depends on an energy breakthrough.”

Elon Musk was even more blunt:

“AI will run out of electricity by next year.”

As the world chases faster, smarter machines, a hidden crisis is emerging behind the scenes. Power grids are strained. Electricity prices are rising. Utilities are scrambling to expand capacity.

And that’s where the real opportunity lies…

One little-known company, almost entirely overlooked by most AI investors, could be the ultimate backdoor play. It’s not a chipmaker. It’s not a cloud platform. But it might be the most important AI stock in the US: it owns critical energy infrastructure assets positioned to feed the coming AI energy spike.

As demand from AI data centers explodes, this company is gearing up to profit from the most valuable commodity in the digital age: electricity.

The “Toll Booth” Operator of the AI Energy Boom

- It owns critical nuclear energy infrastructure assets, positioning it at the heart of America’s next-generation power strategy.

- It’s one of the only global companies capable of executing large-scale, complex EPC (engineering, procurement, and construction) projects across oil, gas, renewable fuels, and industrial infrastructure.

- It plays a pivotal role in U.S. LNG exportation—a sector about to explode under President Trump’s renewed “America First” energy doctrine.

Trump has made it clear: Europe and U.S. allies must buy American LNG.

And our company sits in the toll booth—collecting fees on every drop exported.

But that’s not all…

As Trump’s proposed tariffs push American manufacturers to bring their operations back home, this company will be first in line to rebuild, retrofit, and reengineer those facilities.

AI. Energy. Tariffs. Onshoring. This One Company Ties It All Together.

While the world is distracted by flashy AI tickers, a few smart investors are quietly scooping up shares of the one company powering it all from behind the scenes.

AI needs energy. Energy needs infrastructure.

And infrastructure needs a builder with experience, scale, and execution.

This company has its finger in every pie—and Wall Street is just starting to notice.

Wall Street is noticing this company also because it is quietly riding all of these tailwinds—without the sky-high valuation.

While most energy and utility firms are buried under mountains of debt and coughing up hefty interest payments just to appease bondholders…

This company is completely debt-free.

In fact, it’s sitting on a war chest of cash—equal to nearly one-third of its entire market cap.

It also owns a huge equity stake in another red-hot AI play, giving investors indirect exposure to multiple AI growth engines without paying a premium.

And here’s what the smart money has started whispering…

The Hedge Fund Secret That’s Starting to Leak Out

This stock is so off-the-radar, so absurdly undervalued, that some of the most secretive hedge fund managers in the world have begun pitching it at closed-door investment summits.

They’re sharing it quietly, away from the cameras, to rooms full of ultra-wealthy clients.

Why? Because excluding cash and investments, this company is trading at less than 7 times earnings.

And that’s for a business tied to:

- The AI infrastructure supercycle

- The onshoring boom driven by Trump-era tariffs

- A surge in U.S. LNG exports

- And a unique footprint in nuclear energy—the future of clean, reliable power

You simply won’t find another AI and energy stock this cheap… with this much upside.

This isn’t a hype stock. It’s not riding on hope.

It’s delivering real cash flows, owns critical infrastructure, and holds stakes in other major growth stories.

This is your chance to get in before the rockets take off!

Disruption is the New Name of the Game: Let’s face it, complacency breeds stagnation.

AI is the ultimate disruptor, and it’s shaking the foundations of traditional industries.

The companies that embrace AI will thrive, while the dinosaurs clinging to outdated methods will be left in the dust.

As an investor, you want to be on the side of the winners, and AI is the winning ticket.

The Talent Pool is Overflowing: The world’s brightest minds are flocking to AI.

From computer scientists to mathematicians, the next generation of innovators is pouring its energy into this field.

This influx of talent guarantees a constant stream of groundbreaking ideas and rapid advancements.

By investing in AI, you’re essentially backing the future.

The future is powered by artificial intelligence, and the time to invest is NOW.

Don’t be a spectator in this technological revolution.

Dive into the AI gold rush and watch your portfolio soar alongside the brightest minds of our generation.

This isn’t just about making money – it’s about being part of the future.

So, buckle up and get ready for the ride of your investment life!

Act Now and Unlock a Potential 100+% Return within 12 to 24 months.

We’re now offering month-to-month subscriptions with no commitments.

For a ridiculously low price of just $9.99 per month, you can unlock our in-depth investment research and exclusive insights – that’s less than a single fast food meal!

Here’s why this is a deal you can’t afford to pass up:

- Access to our Detailed Report on our AI, Tariffs, and Nuclear Energy Stock with 100+% potential upside within 12 to 24 months

- BONUS REPORT on our #1 AI-Robotics Stock with 10000% upside potential: Our in-depth report dives deep into our #1 AI/robotics stock’s groundbreaking technology and massive growth potential.

- One New Issue of Our Premium Readership Newsletter: You will also receive one new issue per month and at least one new stock pick per month from our monthly newsletter’s portfolio over the next 12 months. These stocks are handpicked by our research director, Dr. Inan Dogan.

- One free upcoming issue of our 70+ page Quarterly Newsletter: A value of $149

- Bonus Content: Premium access to members-only fund manager video interviews

- Ad-Free Browsing: Enjoy a month of investment research free from distracting banner and pop-up ads, allowing you to focus on uncovering the next big opportunity.

- Lifetime Price Guarantee: Your renewal rate will always remain the same as long as your subscription is active.

- 30-Day Money-Back Guarantee: If you’re not absolutely satisfied with our service, we’ll provide a full refund within 30 days, no questions asked.

Space is Limited! Only 1000 spots are available for this exclusive offer. Don’t let this chance slip away – subscribe to our Premium Readership Newsletter today and unlock the potential for a life-changing investment.

Here’s what to do next:

1. Head over to our website and subscribe to our Premium Readership Newsletter for just $9.99.

2. Enjoy a month of ad-free browsing, exclusive access to our in-depth report on the Trump tariff and nuclear energy company as well as the revolutionary AI-robotics company, and the upcoming issues of our Premium Readership Newsletter.

3. Sit back, relax, and know that you’re backed by our ironclad 30-day money-back guarantee.

Don’t miss out on this incredible opportunity! Subscribe now and take control of your AI investment future!

No worries about auto-renewals! Our 30-Day Money-Back Guarantee applies whether you’re joining us for the first time or renewing your subscription a month later!

MMS • RSS

Laravel has grown in popularity recently as more developers have started to adopt it. Its easy-to-understand syntax boosts productivity by letting developers focus on the core features of their application rather than on repetitive tasks like authentication and sending emails. It also has a vibrant community of users and great learning materials.

Freshly created Laravel applications are configured to use relational databases. The purpose of this tutorial is to show how to use MongoDB in a Laravel application. We will build a simple task reminder system to achieve this.

Prerequisites

The following tools and technologies are required to follow along effectively with this tutorial.

- A free MongoDB Atlas cluster

- PHP and Composer

- NPM

- Basic knowledge of the Laravel framework

- Familiarity with MongoDB and NoSQL databases

Environment setup

To work with MongoDB, we need to configure our development environment. We need to ensure that all the necessary development dependencies are installed and configured properly. Make sure you have the following installed.

- PHP and the MongoDB extension

- Composer

Project setup

To get started building our reminder system, the first thing to do is create a new Laravel project. We can do so using Composer with the commands below:

composer create-project laravel/laravel LaravelMongodbProject

cd LaravelMongodbProject

Install MongoDB Laravel package

A freshly created Laravel project comes with default configurations for relational databases like MySQL and PostgreSQL. MongoDB does not work in Laravel by default. We need to install the Laravel MongoDB package and also do a little configuration in config/database.php. Proceed to install the package using the command below:

composer require mongodb/laravel-mongodb

Configure database

Once the installation of the Laravel MongoDB package is completed, the next step is to add our MongoDB database connection to our config/database.php file to complete the configuration. Copy the code below and paste it in the connections array that contains configurations for other database types.

return [

'connections' => [

'mongodb' => [

'driver' => 'mongodb',

'dsn' => env('MONGODB_URI'),

'database' => 'YOUR_DATABASE_NAME',

],

//You can keep other existing connections

],

];

Let’s take a moment to explain. The dsn value is obtained from the .env file. In your .env file, create a value for MONGODB_URI and set it to the value of your MongoDB Atlas connection string, like below:

MONGODB_URI="<>"

DB_CONNECTION=mongodb

Authentication with Laravel Breeze

We have installed and configured our application to work with MongoDB. Let’s proceed to authentication. Laravel simplifies the implementation of authentication by providing packages like Laravel Breeze, Laravel Fortify, and Laravel Jetstream. In this tutorial, we will use Laravel Breeze for our authentication. We need to install it using Composer with the command below:

composer require laravel/breeze --dev

Once the installation is complete, proceed by running the next set of commands.

php artisan key:generate

php artisan breeze:install

php artisan migrate

php artisan db:seed

npm install

npm run dev

The breeze:install command will prompt you to choose your preferred stack and testing package, like the sample below. For the purpose of this article, we will select the first option (Blade with Alpine).

┌ Which Breeze stack would you like to install? ───────────────┐

│ > ● Blade with Alpine │

│ ○ Livewire (Volt Class API) with Alpine │

│ ○ Livewire (Volt Functional API) with Alpine │

│ ○ React with Inertia │

│ ○ Vue with Inertia │

│ ○ API only

Afterward, it will add authentication views, routes, controllers, and other related resources to your application.

At this point, let’s serve the project using Laravel’s built-in server and confirm that everything works properly. Serve your project using the command below:

php artisan serve

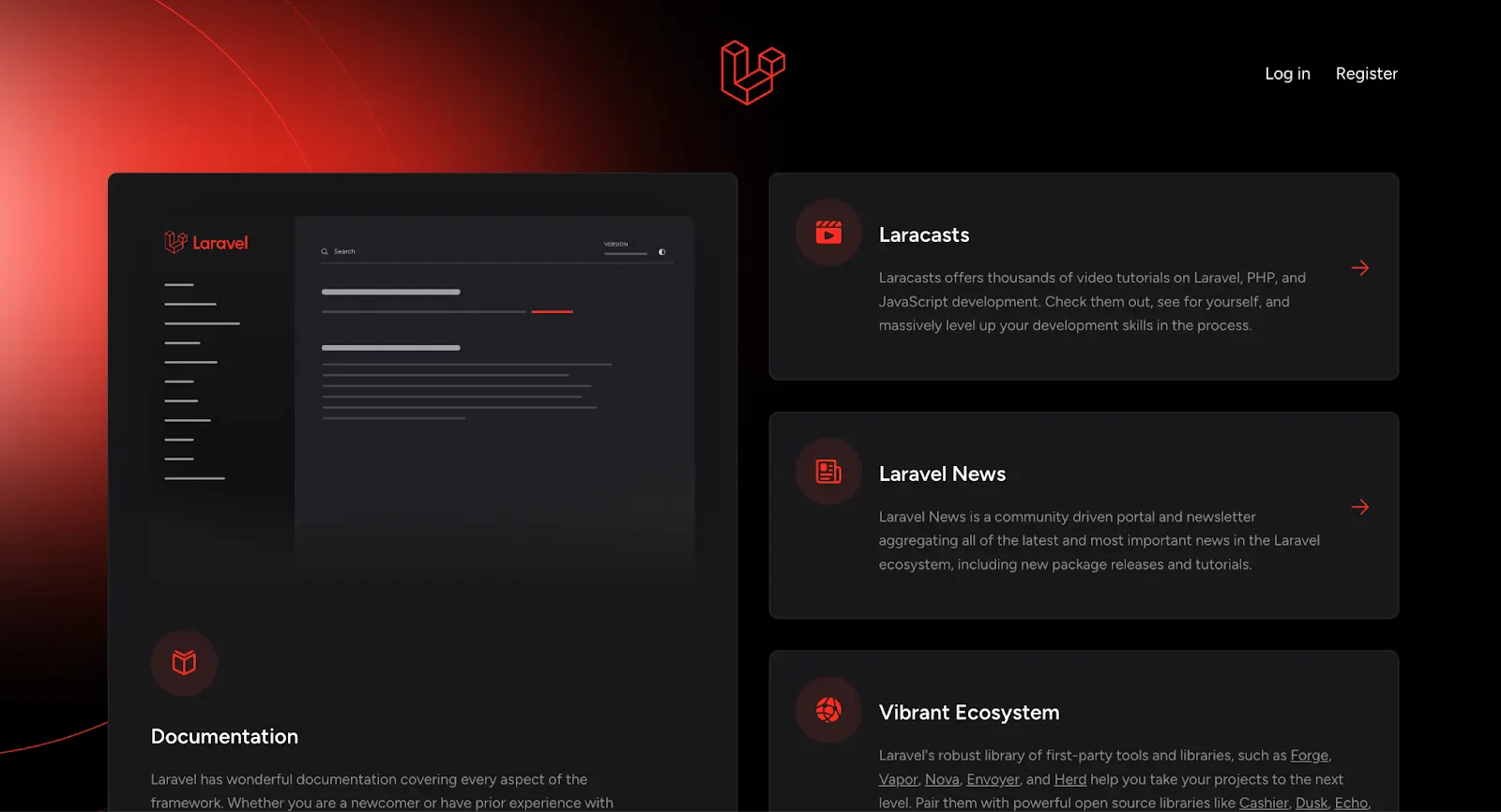

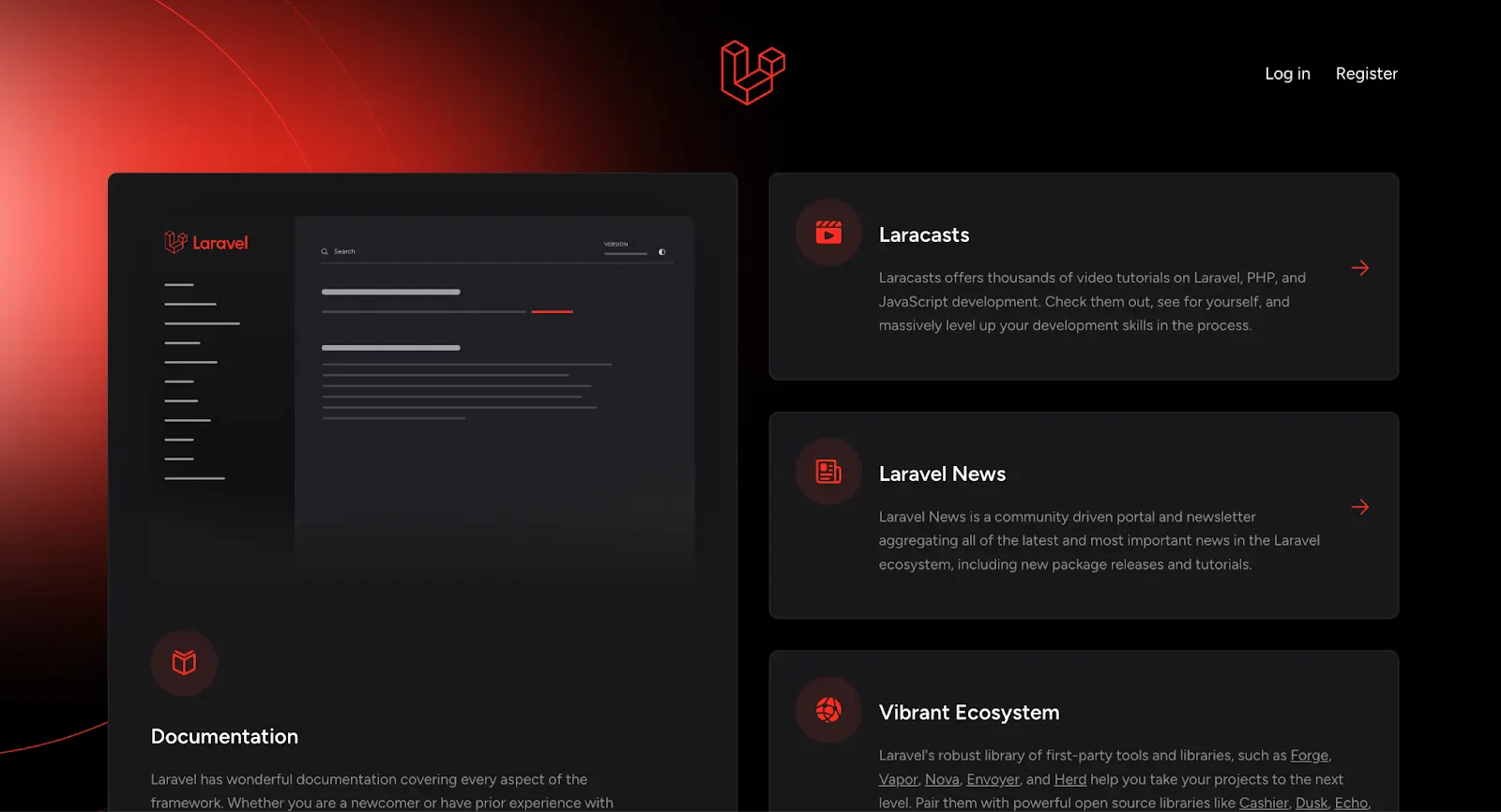

The project should be served at 127.0.0.1:8000. In case the port 8000 is already in use, Laravel will switch to a new available port. If everything was done right, your screen should look like the image below

You can log in using these credentials: email is “test@example.com” and password is “password”.

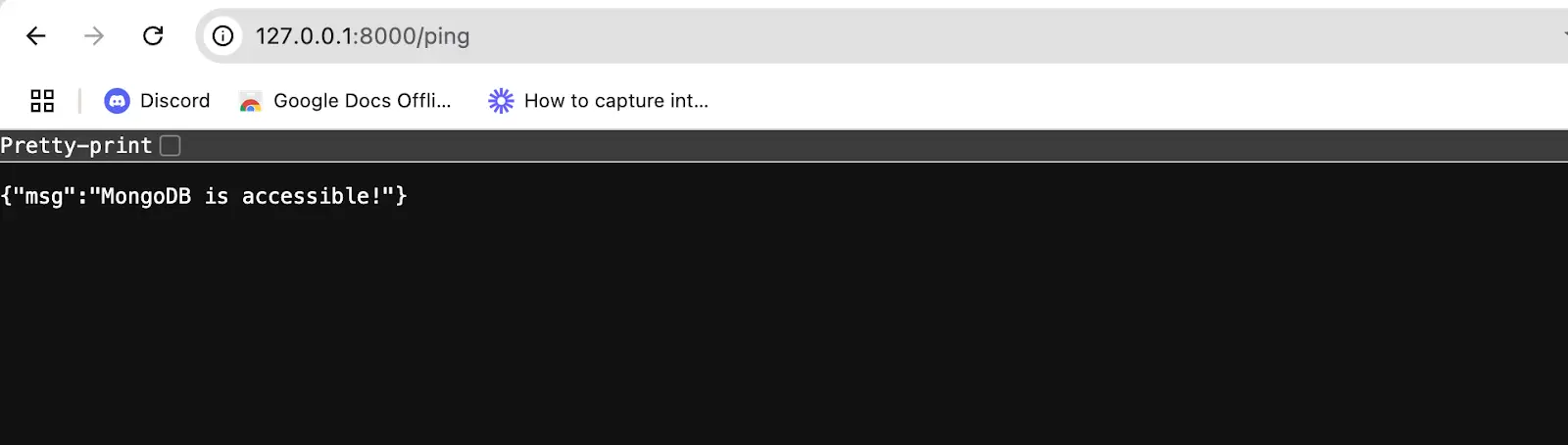

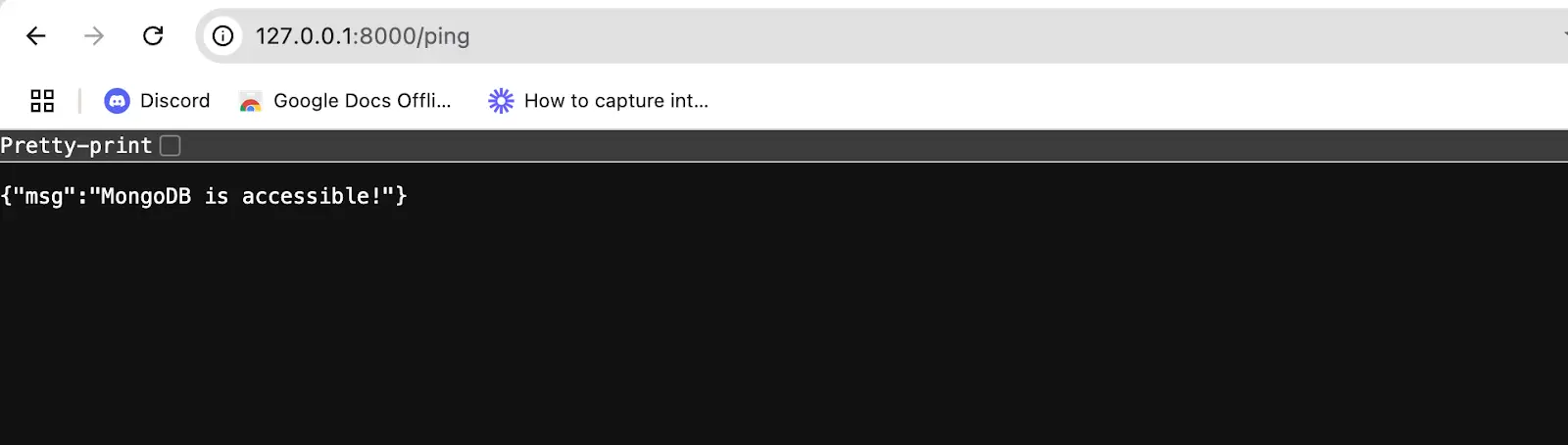

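To make sure that the Laravel MongoDB package was configured properly, let’s create a route to ping our MongoDB cluster. Add the following to routes/web.php, keeping the two use statements with the other imports at the top of the file.

use Illuminate\Http\Request;

use Illuminate\Support\Facades\DB;

Route::get('/ping', function (Request $request) {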

$connection = DB::connection('mongodb');

try {

$connection->command(['ping' => 1]);

$msg = 'MongoDB is accessible!';

} catch (Exception $e) {

$msg = 'You are not connected to MongoDB. Error: ' . $e->getMessage();

}

return ['msg' => $msg];

});

Visit this route in your browser. Your screen should look like the image below, if everything was done right:

Creating the task reminder system

Let’s create our model and controller for the task scheduling feature. Use the command below to do so:

php artisan make:model Task --resource --controller

The command above will create the Task model in the app/Models directory and the TaskController in the app/Http/Controllers directory with resource methods.

Let’s create a route for the TaskController. Navigate to routes/web.php and add the following to the file.

use App\Http\Controllers\TaskController;

Route::resource('tasks', TaskController::class)->middleware('auth');

Next, let’s modify the content of the task model to our needs. Navigate to app/Models/Task.php and replace the content with the following:

<?php

namespace App\Models;

use MongoDB\Laravel\Eloquent\Model;

class Task extends Model

{

protected $connection = 'mongodb';

protected $table = 'tasks';

protected $fillable = [

'title', 'description', 'due_date', 'email', 'reminder_time', 'last_notification_date'

];

}

The code above is our task model.

- The use MongoDB\Laravel\Eloquent\Model statement after the namespace is specific to MongoDB models. It overrides the Eloquent features implemented with SQL, using MongoDB queries.

- The protected $table = 'tasks' property is optional. It is the name of the MongoDB collection that is used to store the documents from this model.

- The protected $fillable = ['title', 'description', 'due_date', 'email', 'reminder_time', 'last_notification_date'] property specifies the mass assignable properties.

One of the unique features of MongoDB is that it doesn’t need migrations like relational databases do. This means you can add new fields directly to your documents without having to update the model or create migrations. This is particularly helpful in handling dynamic data.
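As a rough illustration of the point above, the sketch below adds a field that the model never declared. It assumes the Task model from this tutorial and is meant to be pasted into php artisan tinker inside the project; the priority field and the sample values are hypothetical and not part of the original project.

```php
use App\Models\Task;

// Create a task using only attributes listed in $fillable.
$task = Task::create([
    'title' => 'Renew domain name',
    'description' => 'Sample task for illustration only',
    'email' => 'test@example.com',
]);

// "priority" is a hypothetical field: it is not in $fillable and no
// migration defines it. Direct assignment bypasses mass-assignment
// protection, and the new field is stored on this document only.
$task->priority = 'high';
$task->save();

// Reload the document from MongoDB and read the new field back.
echo $task->fresh()->priority;
```

Documents created earlier simply lack the field, so code that reads it should be prepared for a null value.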

Next, let’s modify our controller. Navigate to app/Http/Controllers/TaskController.php and update the content with the code below:

<?php

namespace App\Http\Controllers;

use App\Models\Task;

use Carbon\Carbon;

use Illuminate\Http\Request;

class TaskController extends Controller

{

/**

* Display a listing of the resource.

*/

public function index()

{

$tasks = Task::where('email', auth()->user()->email)->get();

return view('tasks.index', compact('tasks'));

}

/**

* Show the form for creating a new resource.

*/

public function create()

{

return view('tasks.create');

}

/**

* Store a newly created resource in storage.

*/

public function store(Request $request)

{

$request->validate([

'title' => 'required|string|max:255',

'description' => 'nullable|string',

'due_date' => 'required|date',

'reminder_time' => 'required|date',

]);

$data = $request->all();

$data['due_date'] = Carbon::parse($request->due_date);

$data['reminder_time'] = Carbon::parse($request->reminder_time);

$data['email'] = auth()->user()->email;

$data['last_notification_date'] = null;

Task::create($data);

return redirect()->route('tasks.index')->with('success', 'Task created successfully.');

}

/**

* Display the specified resource.

*/

public function show(string $id)

{

//

}

/**

* Show the form for editing the specified resource.

*/

public function edit(string $id)

{

$tasks = Task::where('id', $id)->get();

return view('tasks.edit', ['tasks' => $tasks]);

}

/**

* Update the specified resource in storage.

*/

public function update(Request $request, string $id)

{

$task = Task::findOrFail($id);

$data = $request->all();

// Keep dates as Carbon instances, as in store(), so they are saved as dates rather than strings.

$data['due_date'] = Carbon::parse($request->due_date);

$data['reminder_time'] = Carbon::parse($request->reminder_time);

$task->update($data);

return redirect()->route('tasks.index')->with('success', 'Task updated successfully.');

}

/**

* Remove the specified resource from storage.

*/

public function destroy(string $id)

{

$task = Task::findOrFail($id);

$task->delete();

return redirect()->route('tasks.index')->with('success', 'Task deleted successfully.');

}

}

Our newly created TaskController above contains code that handles the CRUD operations of our task model. The index method retrieves all tasks belonging to the logged-in user and sends them to the index.blade.php file to be displayed on the front end.

The create method returns the form view for creating a new task, while the store method validates the input, assigns the logged-in user’s email to the task, and saves it to the database.

For updates, the edit method retrieves the specific task to be edited and displays it in an edit form. When this form is submitted, it calls the update method which saves the edited task in our MongoDB task collection.

The destroy method deletes a specific task. Each operation redirects back to the task’s list with a success message for user feedback.

Let’s create view files for our task scheduler. In the resources/views directory, create a folder named /tasks and create the following files in it:

- create.blade.php

- edit.blade.php

- index.blade.php

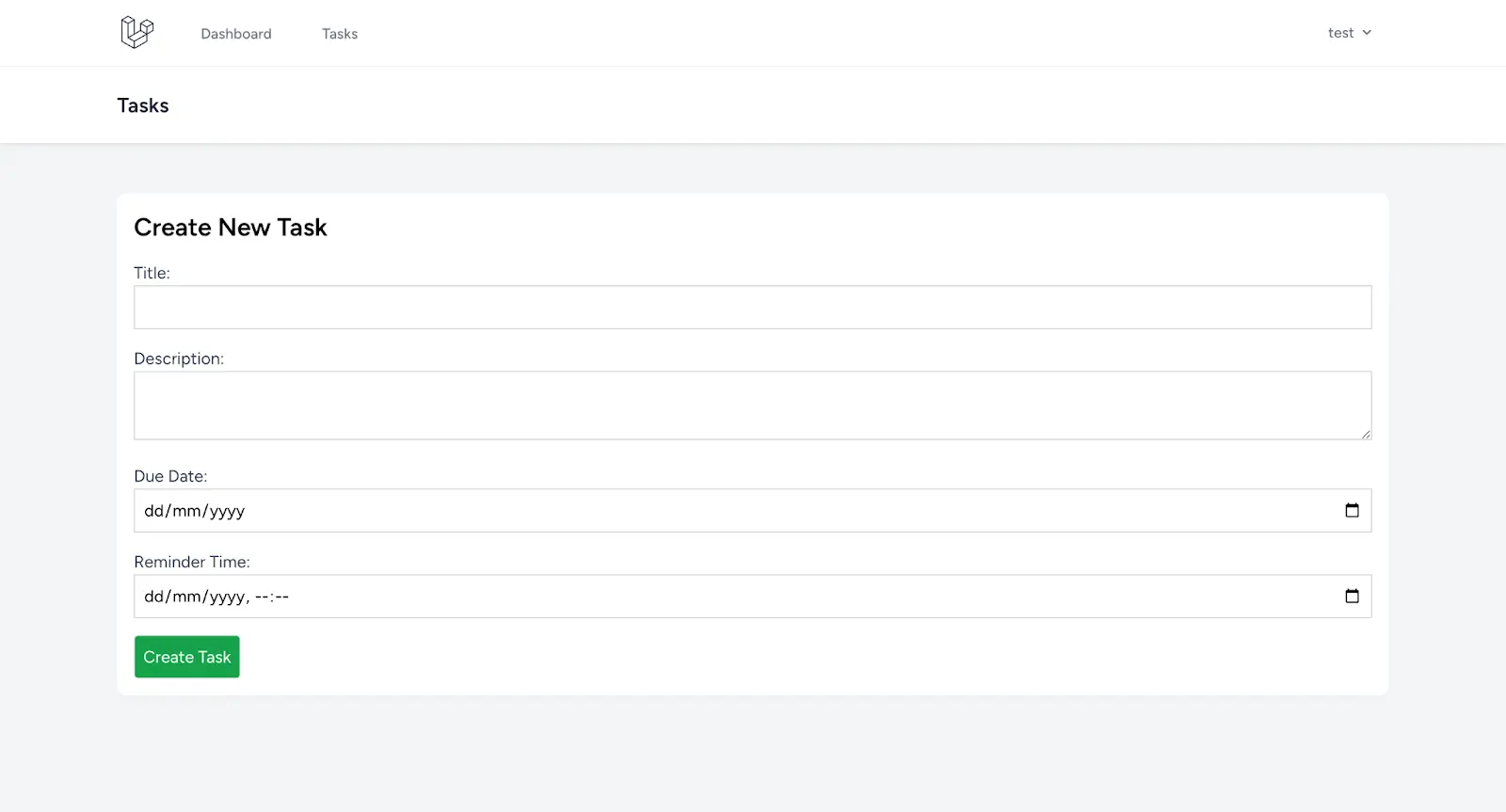

Navigate to resources/views/tasks/create.blade.php and replace the content of the page with the following:

<x-app-layout>

<x-slot name="header">

<h2 class="font-semibold text-xl text-gray-800 leading-tight">

{{ __('Tasks') }}

</h2>

</x-slot>

<div class="py-12">

<div class="max-w-7xl mx-auto sm:px-6 lg:px-8">

<div class="bg-white overflow-hidden shadow-sm sm:rounded-lg">

<div class="container mx-auto p-4">

<h2 class="text-2xl font-bold mb-4">Create New Task</h2>

<form action="{{ route('tasks.store') }}" method="POST">

@csrf

<div class="mb-4">

<label for="title" class="block text-gray-700">Title:</label>

<input type="text" name="title" id="title" required class="border border-gray-300 p-2 w-full" value="{{ old('title') }}">

@error('title')

<p class="text-red-500">{{ $message }}</p>

@enderror

</div>

<div class="mb-4">

<label for="description" class="block text-gray-700">Description:</label>

<textarea name="description" id="description" class="border border-gray-300 p-2 w-full">{{ old('description') }}</textarea>

</div>

<div class="mb-4">

<label for="due_date" class="block text-gray-700">Due Date:</label>

<input type="date" name="due_date" id="due_date" required class="border border-gray-300 p-2 w-full" value="{{ old('due_date') }}">

@error('due_date')

<p class="text-red-500">{{ $message }}</p>

@enderror

</div>

<div class="mb-4">

<label for="reminder_time" class="block text-gray-700">Reminder Time:</label>

<input type="datetime-local" name="reminder_time" id="reminder_time" class="border border-gray-300 p-2 w-full" value="{{ old('reminder_time') }}">

</div>

<button type="submit" class="bg-green-600 text-white text-gray-399 p-2 border rounded">Create Task</button>

</form>

</div>

</div>

</div>

</div>

</x-app-layout>

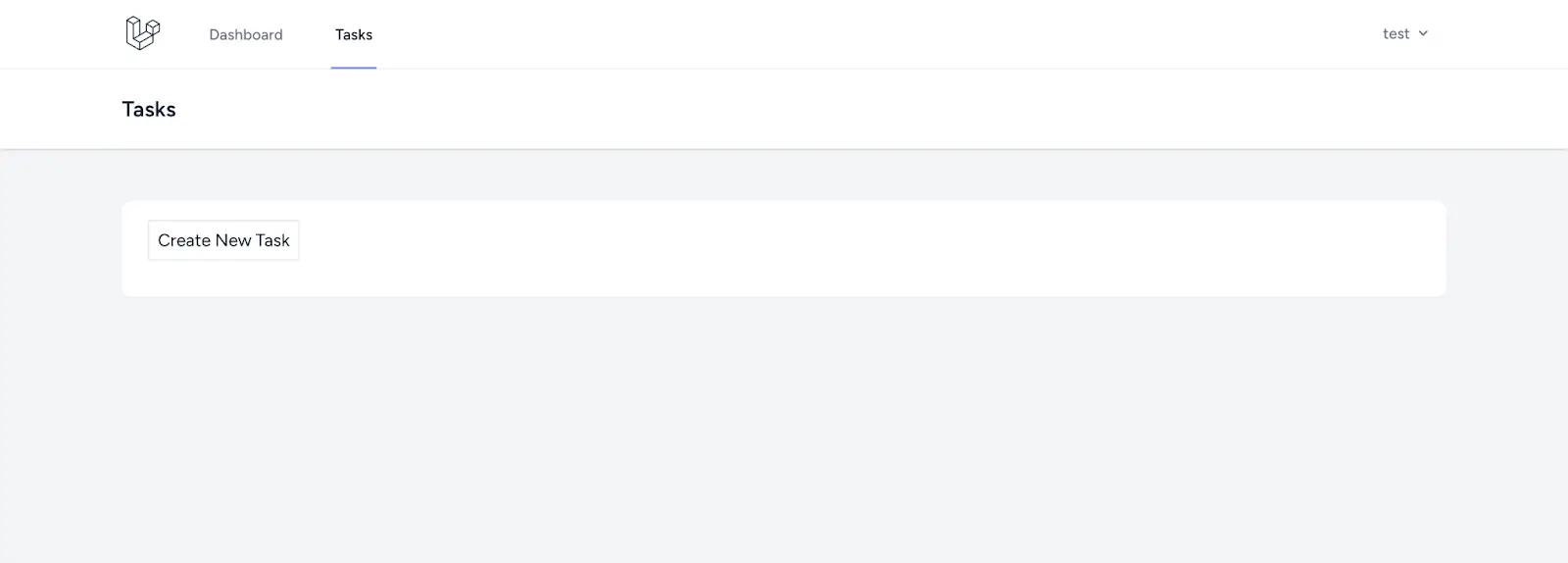

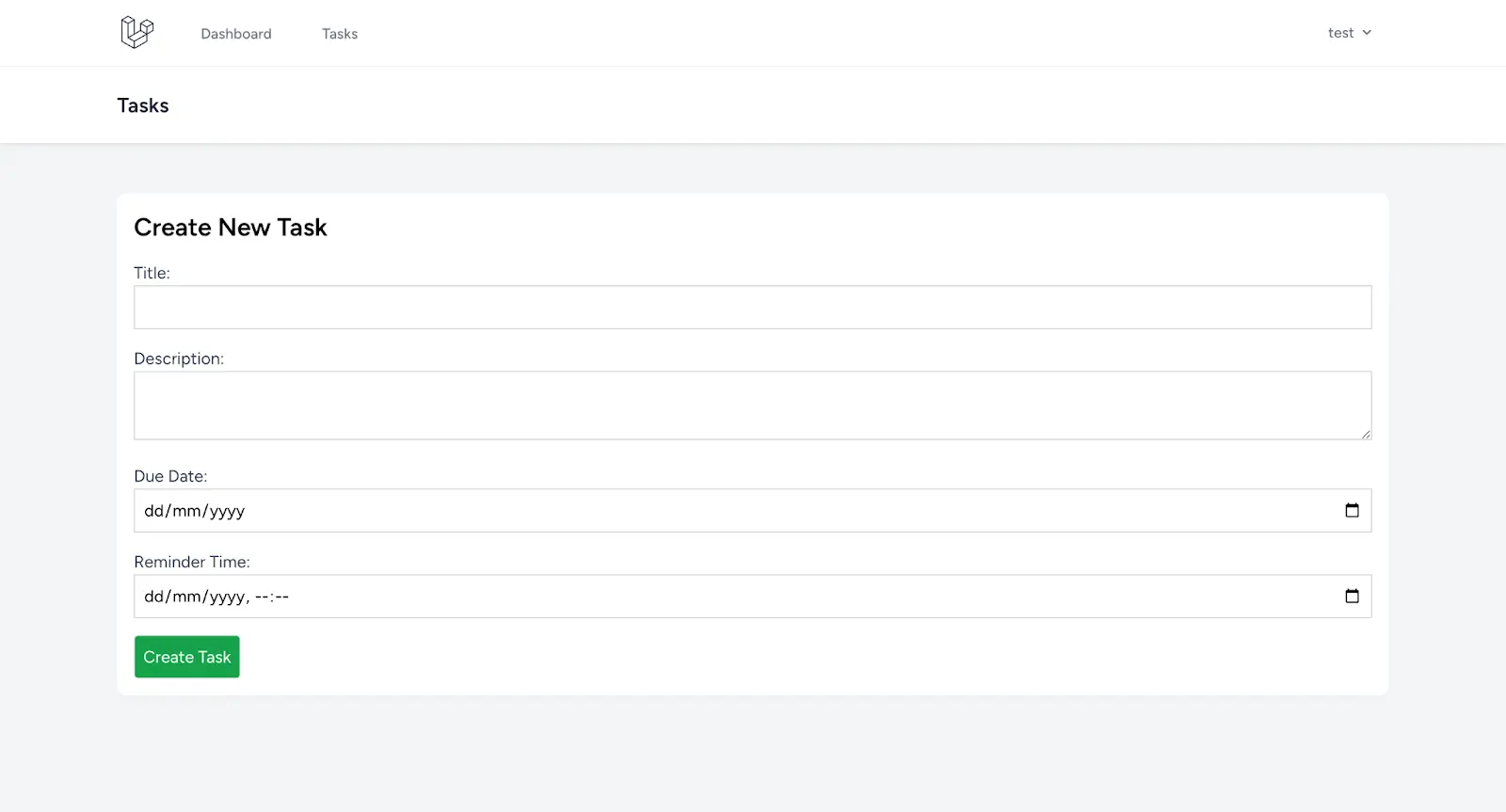

In the code above, we added HTML for the create form. The form contains text input for title and description and date and time input for due date and reminder time. If your code is right, your screen should look like the image below.

Do the same for edit.blade.php. Navigate to resources/views/tasks/edit.blade.php and add the code below to the content of the page:

<x-app-layout>

<x-slot name="header">

<h2 class="font-semibold text-xl text-gray-800 leading-tight">

{{ __('Edit Task') }}

</h2>

</x-slot>

<div class="py-12">

<div class="max-w-7xl mx-auto sm:px-6 lg:px-8">

<div class="bg-white overflow-hidden shadow-sm sm:rounded-lg">

<div class="p-6 text-gray-900">

@foreach($tasks as $task)

<form action="{{ route('tasks.update', $task->id) }}" method="POST">

@csrf

@method('PUT')

<div class="mb-4">

<label for="title" class="block text-gray-700">Title:</label>

<input type="text" name="title" id="title" required class="border border-gray-300 p-2 w-full" value="{{ old('title', $task->title) }}">

@error('title')

<p class="text-red-500">{{ $message }}</p>

@enderror

</div>

<div class="mb-4">

<label for="description" class="block text-gray-700">Description:</label>

<textarea name="description" id="description" class="border border-gray-300 p-2 w-full">{{ old('description', $task->description) }}</textarea>

</div>

<div class="mb-4">

<label for="due_date" class="block text-gray-700">Due Date:</label>

<input type="date" name="due_date" id="due_date" required class="border border-gray-300 p-2 w-full" value="{{ old('due_date', $task->due_date) }}">

@error('due_date')

<p class="text-red-500">{{ $message }}</p>

@enderror

</div>

<div class="mb-4">

<label for="reminder_time" class="block text-gray-700">Reminder Time:</label>

<input type="datetime-local" name="reminder_time" id="reminder_time" class="border border-gray-300 p-2 w-full" value="{{ old('reminder_time', $task->reminder_time) }}">

</div>

<button type="submit" class="bg-blue-500 text-white p-2 rounded">Update Task</button>

</form>

@endforeach

</div>

</div>

</div>

</div>

</x-app-layout>

The edit form contains the same input fields as the create form above. It is loaded with the data of the task currently being edited.

Lastly, navigate to resources/views/tasks/index.blade.php and replace the content with the code below:

<x-app-layout>

<x-slot name="header">

<h2 class="font-semibold text-xl text-gray-800 leading-tight">

{{ __('Tasks') }}

</h2>

</x-slot>

<div class="py-12">

<div class="max-w-7xl mx-auto sm:px-6 lg:px-8">

<div class="bg-white overflow-hidden shadow-sm sm:rounded-lg">

<div class="p-6 text-gray-900">

<div class="mb-2">

<a href="{{ route('tasks.create') }}" class="p-2 border mb-4">Create New Task</a>

</div>

<ul class="mt-4">

@foreach ($tasks as $task)

<div class="mt-2">

<hr>

</div>

<li>

<h1 class="text-2xl">

<strong>{{ $task->title }}</strong> - Due: {{ $task->due_date }}

</h1>

<p class="text-gray-600">

{{ $task->description }}

</p>

<div class="flex gap-2 mt-4">

<div class="p-2 text-white bg-gray-700">

<a href="{{ route('tasks.edit', $task->id) }}">Edit</a>

</div>

<div class="p-2 text-white bg-red-700 rounded">

<form action="{{ route('tasks.destroy', $task->id) }}" method="POST" style="display:inline;">

@csrf

@method('DELETE')

<button type="submit">Delete</button>

</form>

</div>

</div>

</li>

@endforeach

</ul>

</div>

</div>

</div>

</div>

</x-app-layout>

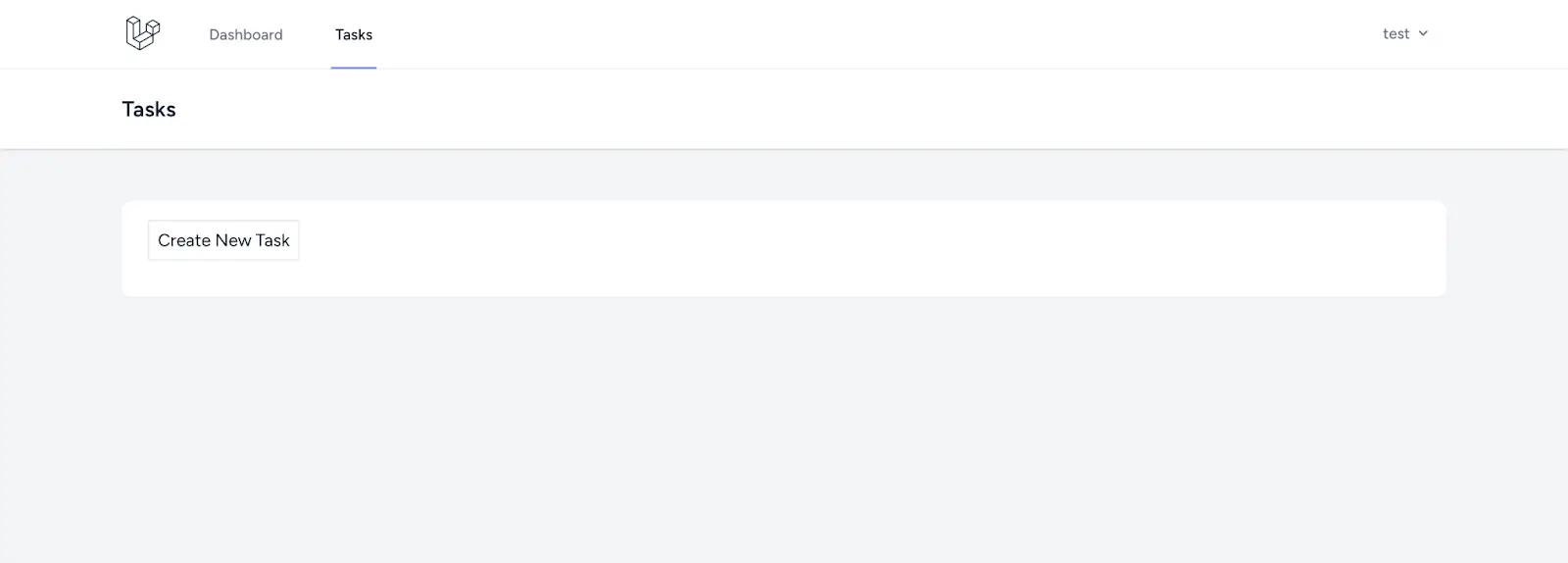

In the code above, we have a link to the create form. It also loops through all the reminders and displays those belonging to this user.

Add route

Next, we need to add a link to the task feature from our application navigation. Open resources/views/layouts/navigation.blade.php and add the following lines of code just after the dashboard navigation link.

//...existing code ...

<div class="hidden space-x-8 sm:-my-px sm:ms-10 sm:flex">

<x-nav-link :href="route('tasks.index')" :active="request()->routeIs('tasks.index')">

{{ __('Tasks') }}

</x-nav-link>

</div>

At this point, we should be able to test the CRUD operation of our task management system. Ensure that everything works correctly before moving on to the next section.

Setting reminders using Laravel task scheduling

Let’s proceed to implementing the reminder system for due tasks. To do this we will:

- Create a custom artisan command to send reminders through email.

- Register the command for automatic scheduling.

Let’s create a custom artisan command using the command below:

php artisan make:command SendTaskReminders

Once the command is completed, update the content of app/Console/Commands/SendTaskReminders.php with the code below:

<?php

namespace App\Console\Commands;

use App\Models\Task;

use Carbon\Carbon;

use Illuminate\Console\Command;

use Illuminate\Support\Facades\Mail;

class SendTaskReminders extends Command

{

/**

* The name and signature of the console command.

*

* @var string

*/

protected $signature = 'app:send-task-reminders';

/**

* The console command description.

*

* @var string

*/

protected $description = 'Send email reminders for tasks whose reminder time has arrived';

/**

* Execute the console command.

*/

public function handle()

{

$now = Carbon::now();

// Tasks not yet notified whose reminder time fell within the last 10 minutes.

$upcomingTasks = Task::where('last_notification_date', null)

->where('reminder_time', '<=', $now)

->where('reminder_time', '>=', $now->clone()->subMinutes(10))

->get();

foreach ($upcomingTasks as $task) {

$emailBody = <<<EOF

Hello,

This is a reminder for your task:

Title: {$task->title}

Description: {$task->description}

Due Date: {$task->due_date}

Please make sure to complete it on time!

Regards,

Your Task Reminder App

EOF;

Mail::raw($emailBody, function ($message) use ($task) {

$message->to($task->email)

->subject("Task Reminder: {$task->title}");

});

$task->last_notification_date = $now;

$task->save();

$this->info("Reminder email sent for task: {$task->title}");

}

return self::SUCCESS;

}

}

The main logic of our custom artisan command is written in the handle() method. From the code above, we get the current timestamp using Carbon::now().

Next, we query the database to get all the tasks where reminder_time is less than or equal to the current time (the value of $now) and where reminder_time is greater than or equal to 10 minutes before the time, with this line of code: 'reminder_time','>=',$now->clone()->subMinutes(10). In MongoDB, all dates are stored in UTC. Even if your server uses a different timezone, you don’t need to change it.
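To make the UTC point concrete, here is a minimal plain-PHP sketch that does not depend on Laravel or MongoDB. The Europe/Berlin timezone and the sample time are assumptions chosen for illustration; the idea is only that a local time and its UTC equivalent describe the same instant.

```php
<?php

declare(strict_types=1);

// A reminder entered as 17:00 local time in Berlin (hypothetical sample value).
$local = new DateTimeImmutable('2024-10-22 17:00:00', new DateTimeZone('Europe/Berlin'));

// The same instant expressed in UTC, which is how MongoDB stores dates.
$utc = $local->setTimezone(new DateTimeZone('UTC'));

echo $utc->format('Y-m-d H:i:s'), PHP_EOL; // 2024-10-22 15:00:00

// Both objects describe the same moment, so comparing them ignores the timezone label.
var_dump($local == $utc); // bool(true)
```

Because comparisons work on instants, a query against UTC-stored dates behaves the same regardless of the timezone the server is configured with.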

To illustrate, let’s assume that the current time is $now = 2024-10-22 15:00:00. The task reminder query fetches all tasks whose reminder_time falls between 2024-10-22 14:50:00 and 2024-10-22 15:00:00, that is, reminders that came due within the last 10 minutes and have not been sent yet.

Then, we loop through the result and send a reminder to the email address stored on each of those tasks.
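The 10-minute window described above can be sketched in plain PHP, independent of Laravel and MongoDB. The function name isInReminderWindow and the sample times are hypothetical and only illustrate the comparison; the actual command performs this filtering in the database query.

```php
<?php

declare(strict_types=1);

/**
 * Hypothetical helper (not part of the tutorial's code): reports whether a
 * reminder time falls inside the window [now - windowMinutes, now].
 */
function isInReminderWindow(
    DateTimeImmutable $reminderTime,
    DateTimeImmutable $now,
    int $windowMinutes = 10
): bool {
    $windowStart = $now->sub(new DateInterval("PT{$windowMinutes}M"));

    return $reminderTime >= $windowStart && $reminderTime <= $now;
}

$utc = new DateTimeZone('UTC');
$now = new DateTimeImmutable('2024-10-22 15:00:00', $utc);

// 14:55 is inside the window 14:50 to 15:00.
var_dump(isInReminderWindow(new DateTimeImmutable('2024-10-22 14:55:00', $utc), $now)); // bool(true)

// 14:45 is more than 10 minutes old, so it is skipped.
var_dump(isInReminderWindow(new DateTimeImmutable('2024-10-22 14:45:00', $utc), $now)); // bool(false)

// 15:05 is still in the future, so it is picked up by a later run.
var_dump(isInReminderWindow(new DateTimeImmutable('2024-10-22 15:05:00', $utc), $now)); // bool(false)
```

Since the command runs every minute, a reminder is picked up by the first run after its time arrives, and the last_notification_date check keeps later runs from sending it again.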

For the task scheduler to work properly, you must configure your application to send emails.

Mailtrap.io is a great tool for testing email sending. Get a detailed explanation of how to configure your application to send emails using Mailtrap.

Scheduling notifications for task reminders

To wrap it all up, we need to schedule the artisan command created above to run every minute. Think of this as a way to automatically run php artisan app:send-task-reminders every minute.

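There are different approaches to scheduling a task in Laravel. In this tutorial, we are working with Laravel version 11.9, which is the most recent version at the time of this writing. To proceed, navigate to routes/console.php and add the following. If the file does not already import the Schedule facade, also add use Illuminate\Support\Facades\Schedule; alongside the other use statements at the top: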

//...existing code ...

Schedule::command('app:send-task-reminders')->everyMinute();

To test that this works, run the command below:

php artisan schedule:run

On a production server, you will need to configure a cron job to run the php artisan schedule:run command at regular intervals.

In a Linux- or Unix-based server, you can open your cron configuration file using the command below:

crontab -e

Add the code below into the cron configuration tab:

* * * * * /usr/bin/php /path-to-your-project/artisan schedule:run >> /dev/null 2>&1

For this to work properly, replace /usr/bin/php with the path to your PHP binary and /path-to-your-project with the full path to your Laravel project on your server, then save and exit.

You can confirm that the entry was saved by listing your cron jobs with the command below:

crontab -l

Conclusion

We have come to the end of this tutorial; great job if you followed along. Now, for a recap, in this tutorial, we walked through the process of creating a task scheduler in Laravel and MongoDB. Some of the key implementations were:

- Configuring a Laravel project to work with MongoDB.

- Implementing CRUD features for our tasks scheduler.

- Creating a custom Laravel artisan command for our reminder system.

- Scheduling a task to run the artisan command in intervals.

- Walking through the steps to configure the cron job on a Linux-based server.

Find the project on GitHub. Feel free to clone it, sign up for MongoDB Atlas, and customize it to your specific needs. For more support, join the MongoDB Developer Community.

MMS • RSS

HTF MI just released the Global Backend Database Software Market Study, a comprehensive analysis of the market that spans more than 143 pages and describes the product and industry scope as well as the market prognosis and status for 2025–2032. The study also segments the market by key regions. The market is currently expanding its reach.

Major companies profiled in Backend Database Software Market are:

Oracle DB (USA), Microsoft SQL Server (USA), PostgreSQL (Open Source), MySQL (Oracle, USA), MongoDB (USA), Redis (USA), Cassandra (Apache, USA), MariaDB (USA), IBM Db2 (USA), Google Cloud Spanner (USA), AWS Aurora (USA), SAP HANA (Germany), Couchbase (USA), Firebird (Open Source), CockroachDB (USA)

Request PDF Sample Copy of Report: (Including Full TOC, List of Tables & Figures, Chart) @

👉 https://www.htfmarketinsights.com/sample-report/4361127-backend-database-software-market?utm_source=Akash_NewsTrail&utm_id=Akash

HTF Market Intelligence projects that the global Backend Database Software market will expand at a compound annual growth rate (CAGR) of 6.5% from 2025 to 2032, from USD 85 Billion in 2025 to USD 140 Billion by 2032.

Our Report Covers the Following Important Topics:

By Type:

Relational, NoSQL, Cloud-native, In-memory, Graph

By Application:

Enterprise apps, Web backends, Analytics, IoT, Mobile apps

Definition:

Backend database software is used to store, manage, and retrieve structured data for applications, typically running on servers with SQL or NoSQL architectures, providing scalability, performance, and data integrity in software systems.

Dominating Region:

North America

Fastest-Growing Region:

Asia‑Pacific

Market Trends:

- Distributed SQL/NoSQL systems, Serverless DB functions, Auto‑sharding, Graph DB support, Schema‑less engines

Market Drivers:

- Data performance scaling need, Cloud‑native app support, Multi‑tenant demand, OLAP/OLTP mix requirement

Market Challenges:

- ACID vs CAP tradeoffs, Schema evolution drift, Multi‑cloud replication latency

Have different Market Scope & Business Objectives; Enquire for customized study: https://www.htfmarketinsights.com/report/4361127-backend-database-software-market?utm_source=Akash_NewsTrail&utm_id=Akash

The titled segments and sub-section of the market are illuminated below:

In-depth analysis of Backend Database Software market segments by Types: Relational, NoSQL, Cloud-native, In-memory, Graph

Detailed analysis of Backend Database Software market segments by Applications: Enterprise apps, Web backends, Analytics, IoT, Mobile apps

Global Backend Database Software Market - Regional Analysis

• North America: United States of America (US), Canada, and Mexico.

• South & Central America: Argentina, Chile, Colombia, and Brazil.

• Middle East & Africa: Kingdom of Saudi Arabia, United Arab Emirates, Turkey, Israel, Egypt, and South Africa.

• Europe: the UK, France, Italy, Germany, Spain, Nordics, Baltic countries, Russia, Austria, and the Rest of Europe.

• Asia: India, China, Japan, South Korea, Taiwan, Southeast Asia (Singapore, Thailand, Malaysia, Indonesia, Philippines, Vietnam, etc.), and the Rest of Asia.

• Oceania: Australia & New Zealand

Buy Now Latest Edition of Backend Database Software Market Report 👉 https://www.htfmarketinsights.com/buy-now?format=1&report=4361127?utm_source=Akash_NewsTrail&utm_id=Akash

Backend Database Software Market Research Objectives:

– To focus on the key manufacturers and to define, describe, and examine the value, sales volume, market share, competitive landscape, SWOT analysis, and development plans for the next few years.

– To share comprehensive information about the key factors influencing the growth of the market (opportunities, drivers, growth potential, industry-specific challenges and risks).

– To analyze the market with respect to individual future prospects, growth trends, and their contribution to the total market.

– To analyze competitive developments such as agreements, expansions, new product launches, and acquisitions in the market.

– To strategically profile the key players and systematically examine their growth strategies.

FIVE FORCES & PESTLE ANALYSIS:

Five forces analysis (the threat of new entrants, the threat of substitutes, the threat of competition, and the bargaining power of suppliers and of buyers) is carried out to better understand market circumstances.

• Political (Political policy and stability as well as trade, fiscal, and taxation policies)

• Economic (Interest rates, employment or unemployment rates, raw material costs, and foreign exchange rates)

• Social (Changing family demographics, education levels, cultural trends, attitude changes, and changes in lifestyles)

• Technological (Changes in digital or mobile technology, automation, research, and development)

• Legal (Employment legislation, consumer law, health and safety, and international as well as trade regulations and restrictions)

• Environmental (Climate, recycling procedures, carbon footprint, waste disposal, and sustainability)

Get customized report 👉 https://www.htfmarketinsights.com/customize/4361127-backend-database-software-market?utm_source=Akash_NewsTrail&utm_id=Akash

Points Covered in Table of Content of Global Backend Database Software Market:

Chapter 01 – Backend Database Software Executive Summary

Chapter 02 – Market Overview

Chapter 03 – Key Success Factors

Chapter 04 – Global Backend Database Software Market – Pricing Analysis

Chapter 05 – Global Backend Database Software Market Background or History

Chapter 06 – Global Backend Database Software Market Segmentation (e.g. Type, Application)

Chapter 07 – Key and Emerging Countries Analysis Worldwide Backend Database Software Market

Chapter 08 – Global Backend Database Software Market Structure & Value Analysis

Chapter 09 – Global Backend Database Software Market Competitive Analysis & Challenges

Chapter 10 – Assumptions and Acronyms

Chapter 11 – Backend Database Software Market Research Methodology

Thank you for reading this post. You may also obtain report versions by area, such as North America, LATAM, Europe, Japan, Australia, or Southeast Asia, or by chapter.

About Author:

HTF Market Intelligence is a leading market research company providing end-to-end syndicated and custom market reports, consulting services, and insightful information across the globe. With more than 15,000 reports across 27 industries covering 60+ geographies, it helps clients identify opportunities, cope with their most critical business challenges, and transform their businesses. Analysts at HTF MI focus on comprehending the unique needs of each client to deliver insights that are most suited to their particular requirements.

Contact Us:

Nidhi Bhawsar (PR & Marketing Manager)

HTF Market Intelligence Consulting Private Limited

Phone: +15075562445

sales@htfmarketintelligence.com

MiniMax Releases M1: A 456B Hybrid-Attention Model for Long-Context Reasoning and Software Tasks

MMS • Robert Krzaczynski

MiniMax has introduced MiniMax-M1, an open-weight language model designed for long-context reasoning and tool use. Based on the earlier MiniMax-Text-01, M1 uses a hybrid Mixture-of-Experts (MoE) architecture and a new “lightning attention” mechanism. The model has a total capacity of 456 billion parameters, with 45.9 billion active per token, and supports context lengths of up to 1 million tokens.

M1 distinguishes itself through its efficient use of compute and support for long-context reasoning. Its lightning attention mechanism reduces test-time computation, requiring only 25% of the FLOPs used by DeepSeek R1 for sequences of 100K tokens. The model was trained using large-scale reinforcement learning across a range of domains, including mathematical problem-solving and software engineering environments.

Two versions of the model are available. The models are evaluated using a custom RL scaling approach. Notably, MiniMax introduces CISPO, a novel RL algorithm that clips importance sampling weights rather than token updates—reportedly improving stability and performance over traditional variants.
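One way to read the distinction between clipping importance sampling weights and clipping token updates is sketched below. This is a simplified, per-token illustration under stated assumptions, not MiniMax's implementation: the function names and epsilon values are hypothetical, and each function returns only the scalar coefficient that multiplies the gradient of a token's log-probability.

```python
def clip(value: float, low: float, high: float) -> float:
    """Clamp value into the closed interval [low, high]."""
    return max(low, min(high, value))


def ppo_gradient_weight(ratio: float, advantage: float, eps: float = 0.2) -> float:
    """Coefficient on grad(log pi) under a PPO-style clipped surrogate.

    When the clipped branch of min(ratio * A, clip(ratio) * A) is active,
    the token contributes no gradient at all (its update is dropped).
    """
    clipped_branch_active = (
        (advantage > 0 and ratio > 1 + eps)
        or (advantage < 0 and ratio < 1 - eps)
    )
    return 0.0 if clipped_branch_active else ratio * advantage


def cispo_style_gradient_weight(
    ratio: float, advantage: float, eps_low: float = 0.2, eps_high: float = 0.2
) -> float:
    """Coefficient when only the importance weight is clipped.

    The clipped weight is treated as a constant, so every token still
    contributes a (bounded) gradient. Epsilon values here are illustrative.
    """
    return clip(ratio, 1 - eps_low, 1 + eps_high) * advantage


# Compare a mildly off-policy token with a strongly off-policy one.
for ratio in (1.1, 1.5):
    print(
        ratio,
        ppo_gradient_weight(ratio, advantage=1.0),
        cispo_style_gradient_weight(ratio, advantage=1.0),
    )
```

In this toy comparison the strongly off-policy token is silenced entirely by the PPO-style rule, while the weight-clipping rule keeps it with a capped coefficient.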

Across benchmarks, MiniMax-M1-80K consistently ranks at or near the top among open-weight models, with strong results in:

- Long-context tasks (OpenAI-MRCR 128K: 73.4%, LongBench-v2: 61.5%)

- Software engineering (SWE-bench Verified: 56.0%)

- Tool use (TAU-bench airline: 62.0%, retail: 63.5%)

- Reasoning-heavy math benchmarks (AIME 2024: 86.0%)

One Reddit user commented on its standout capabilities:

This looks pretty great. Especially for function calling (Tau-bench) and long context, this seems like SOTA for open-weights. The latter by some big margin, which I don’t even find unbelievable because their old non-reasoning model was also great for this.

However, others pointed to limitations in practice. For example, dubesor86 shared:

It’s unusable, though. I had it play chess matches (usually takes a few minutes), and I had to have it run all night, and it still wasn’t done by the time I woke up. All the scores in the world mean nothing if the usability is zero.

MiniMax-M1 also supports structured function calling, making it suitable for agent frameworks. The model is available in two versions (40K and 80K) via HuggingFace. For deployment, the team recommends vLLM, offering optimized serving, memory management, and batching performance. Developers can also experiment via the MiniMax MCP Server, which bundles API access and capabilities such as video and image generation, speech synthesis, and voice cloning.

MMS • RSS

The popularity of Laravel has grown recently as more developers have adopted the framework. Laravel has an easy-to-understand syntax that boosts productivity by letting the developer focus on the core features of their application and not bother with repetitive tasks like authentication and sending emails. It has a very vibrant community of users and great learning materials.

Freshly created Laravel applications are configured to use relational databases. The purpose of this tutorial is to show how to use MongoDB in a Laravel application. We will build a simple task reminder system to achieve this.

Prerequisites

The following tools and technologies are required to follow along effectively with this tutorial.

- A free MongoDB Atlas cluster

- PHP and Composer

- NPM

- Basic knowledge of the Laravel framework

- Familiarity with MongoDB and NoSQL databases

Environment setup

To work with MongoDB, we need to configure our development environment. We need to ensure that all the necessary development dependencies are installed and configured properly. Make sure you have the following installed.

- PHP and the MongoDB extension

- Composer

Project setup

To get started building our reminder system, the first thing to do is create a new Laravel project. We can do so using Composer with the command below:

composer create-project laravel/laravel LaravelMongodbProject

cd LaravelMongodbProject

Install MongoDB Laravel package

A freshly created Laravel project comes with default configurations for relational databases like MySQL and PostgreSQL. MongoDB does not work in Laravel by default. We need to install the Laravel MongoDB package and also do a little configuration in config/database.php. Proceed to install the package using the command below:

composer require mongodb/laravel-mongodb

Configure database

Once the installation of the Laravel MongoDB package is completed, the next step is to add our MongoDB database connection to our config/database.php file to complete the configuration. Copy the code below and paste it in the connections array that contains configurations for other database types.

return [

    'connections' => [

        'mongodb' => [

            'driver' => 'mongodb',

            'dsn' => env('MONGODB_URI'),

            'database' => 'YOUR_DATABASE_NAME',

        ],

        // You can keep other existing connections

    ],

    // ...remaining configuration keys

];

Let’s take a moment to explain. The dsn value is obtained from the .env file. In your .env file, create a MONGODB_URI entry and set it to your MongoDB Atlas connection string, like below:

MONGODB_URI="<>"

DB_CONNECTION=mongodb

Authentication with Laravel Breeze

We have installed and configured our application to work with MongoDB. Let’s proceed to authentication. Laravel simplifies the implementation of authentication by providing packages like Laravel Breeze, Laravel Fortify, and Laravel Jetstream. In this tutorial, we will use Laravel Breeze for our authentication. We need to install it using Composer with the command below:

composer require laravel/breeze --dev

Once the installation is complete, proceed by running the next set of commands.

php artisan key:generate

php artisan breeze:install

php artisan migrate

php artisan db:seed

npm install

npm run dev

The breeze:install command will prompt you to choose your preferred stack and testing package, as in the sample below. For the purpose of this article, we will select the first option (Blade with Alpine).

┌ Which Breeze stack would you like to install? ───────────────┐

│ > ● Blade with Alpine │

│ ○ Livewire (Volt Class API) with Alpine │

│ ○ Livewire (Volt Functional API) with Alpine │

│ ○ React with Inertia │

│ ○ Vue with Inertia │

│ ○ API only

Afterward, it will add authentication views, routes, controllers, and other related resources to your application.

At this point, let’s serve the project using Laravel’s built-in server and confirm that everything works properly. Serve your project using the command below:

php artisan serve

The project should be served at 127.0.0.1:8000. If port 8000 is already in use, Laravel will switch to the next available port. If everything was done right, your screen should look like the image below.

You can log in using these credentials: email is “test@example.com” and password is “password”.

To make sure that the Laravel MongoDB package was configured properly, let’s create a route to ping our MongoDB cluster. Add the following to routes/web.php.

use Illuminate\Http\Request;

use Illuminate\Support\Facades\DB;

Route::get('/ping', function (Request $request) {

$connection = DB::connection('mongodb');

try {

$connection->command(['ping' => 1]);

$msg = 'MongoDB is accessible!';

} catch (Exception $e) {

$msg = 'You are not connected to MongoDB. Error: ' . $e->getMessage();

}

return ['msg' => $msg];

});

Visit this route in your browser. Your screen should look like the image below, if everything was done right:

Creating the task reminder system

Let’s create our model and controller for the task scheduling feature. Use the command below to do so:

php artisan make:model Task --resource --controller

The command above will create the Task model in the app/Models directory and the TaskController in the app/Http/Controllers directory with resource methods.

Let’s create a route for the TaskController. Navigate to routes/web.php and add the following to the file.

use App\Http\Controllers\TaskController;

Route::resource('tasks', TaskController::class)->middleware('auth');

Next, let’s modify the content of the task model to our needs. Navigate to app/Models/Task.php and replace the content with the following:

<?php

namespace App\Models;

use MongoDB\Laravel\Eloquent\Model;

class Task extends Model

{

protected $connection = 'mongodb';

protected $table = 'tasks';

protected $fillable = [

'title', 'description', 'due_date', 'email', 'reminder_time', 'last_notification_date'

];

}

The code above is our task model.

- The use MongoDB\Laravel\Eloquent\Model statement after the namespace is specific to MongoDB models. It overrides the Eloquent features implemented with SQL, using MongoDB queries.

- The protected $table = 'tasks' property is optional. It is the name of the MongoDB collection that is used to store the documents from this model.

- The protected $fillable = ['title', 'description', 'due_date', 'email', 'reminder_time', 'last_notification_date'] property specifies the mass-assignable attributes.

One of the unique features of MongoDB is that it doesn’t need migrations like relational databases do. This means you can add new fields directly to your documents without having to update the model or create migrations. This is particularly helpful in handling dynamic data.

Next, let’s modify our controller. Navigate to app/Http/Controllers/TaskController.php and update the content with the code below:

<?php

namespace App\Http\Controllers;

use App\Models\Task;

use Carbon\Carbon;

use Illuminate\Http\Request;

class TaskController extends Controller

{

/**

* Display a listing of the resource.

*/

public function index()

{

$tasks = Task::where('email', auth()->user()->email)->get();

return view('tasks.index', compact('tasks'));

}

/**

* Show the form for creating a new resource.

*/

public function create()

{

return view('tasks.create');

}

/**

* Store a newly created resource in storage.

*/

public function store(Request $request)

{

$request->validate([

'title' => 'required|string|max:255',

'description' => 'nullable|string',

'due_date' => 'required|date',

'reminder_time' => 'required|date',

]);

$data = $request->all();

$data['due_date'] = Carbon::parse($request->due_date);

$data['reminder_time'] = Carbon::parse($request->reminder_time);

$data['email'] = auth()->user()->email;

$data['last_notification_date'] = null;

Task::create($data);

return redirect()->route('tasks.index')->with('success', 'Task created successfully.');

}

/**

* Display the specified resource.

*/

public function show(string $id)

{

//

}

/**

* Show the form for editing the specified resource.

*/

public function edit(string $id)

{

$tasks = Task::where('id', $id)->get();

return view('tasks.edit', ['tasks' => $tasks]);

}

/**

* Update the specified resource in storage.

*/

public function update(Request $request, string $id)

{

$task = Task::findOrFail($id);

$data = $request->all();

// Keep dates as Carbon instances, as in store(), so they are saved as dates rather than strings.

$data['due_date'] = Carbon::parse($request->due_date);

$data['reminder_time'] = Carbon::parse($request->reminder_time);

$task->update($data);

return redirect()->route('tasks.index')->with('success', 'Task updated successfully.');

}

/**

* Remove the specified resource from storage.

*/

public function destroy(string $id)

{

$task = Task::findOrFail($id);

$task->delete();

return redirect()->route('tasks.index')->with('success', 'Task deleted successfully.');

}

}

Our newly created TaskController above contains code that handles the CRUD operations of our task model. The index method retrieves all tasks belonging to the logged-in user and sends them to the index.blade.php file to be displayed on the front end.

The create method returns the form view for creating a new task, while the store method validates the input, assigns the logged-in user’s email to the task, and saves it to the database.

For updates, the edit method retrieves the specific task to be edited and displays it in an edit form. When this form is submitted, it calls the update method which saves the edited task in our MongoDB task collection.

The destroy method deletes a specific task. Each operation redirects back to the task’s list with a success message for user feedback.

Let’s create view files for our task scheduler. In the resources/views directory, create a folder named tasks and create the following files in it:

- create.blade.php

- edit.blade.php

- index.blade.php

Navigate to resources/views/tasks/create.blade.php and replace the content of the page with the following:

<x-app-layout>

<x-slot name="header">

<h2 class="font-semibold text-xl text-gray-800 leading-tight">

{{ __('Tasks') }}

</h2>

</x-slot>

<div class="py-12">

<div class="max-w-7xl mx-auto sm:px-6 lg:px-8">

<div class="bg-white overflow-hidden shadow-sm sm:rounded-lg">

<div class="container mx-auto p-4">

<h2 class="text-2xl font-bold mb-4">Create New Task</h2>

<form action="{{ route('tasks.store') }}" method="POST">

@csrf

<div class="mb-4">

<label for="title" class="block text-gray-700">Title:</label>

<input type="text" name="title" id="title" required class="border border-gray-300 p-2 w-full" value="{{ old('title') }}">

@error('title')

<p class="text-red-500">{{ $message }}</p>

@enderror

</div>

<div class="mb-4">

<label for="description" class="block text-gray-700">Description:</label>

<textarea name="description" id="description" class="border border-gray-300 p-2 w-full">{{ old('description') }}</textarea>

</div>

<div class="mb-4">

<label for="due_date" class="block text-gray-700">Due Date:</label>

<input type="date" name="due_date" id="due_date" required class="border border-gray-300 p-2 w-full" value="{{ old('due_date') }}">

@error('due_date')

<p class="text-red-500">{{ $message }}</p>

@enderror

</div>

<div class="mb-4">

<label for="reminder_time" class="block text-gray-700">Reminder Time:</label>

<input type="datetime-local" name="reminder_time" id="reminder_time" class="border border-gray-300 p-2 w-full" value="{{ old('reminder_time') }}">

</div>

<button type="submit" class="bg-green-600 text-white p-2 border rounded">Create Task</button>

</form>

</div>

</div>

</div>

</div>

</x-app-layout>

In the code above, we added HTML for the create form. The form contains text input for title and description and date and time input for due date and reminder time. If your code is right, your screen should look like the image below.

Do the same for edit.blade.php. Navigate to resources/views/tasks/edit.blade.php and add the code below to the content of the page:

<x-app-layout>

<x-slot name="header">

<h2 class="font-semibold text-xl text-gray-800 leading-tight">

{{ __('Edit Task') }}

</h2>

</x-slot>

<div class="py-12">

<div class="max-w-7xl mx-auto sm:px-6 lg:px-8">

<div class="bg-white overflow-hidden shadow-sm sm:rounded-lg">

<div class="p-6 text-gray-900">

@foreach($tasks as $task)

<form action="{{ route('tasks.update', $task->id) }}" method="POST">

@csrf

@method('PUT')

<div class="mb-4">

<label for="title" class="block text-gray-700">Title:</label>

<input type="text" name="title" id="title" required class="border border-gray-300 p-2 w-full" value="{{ old('title', $task->title) }}">

@error('title')

<p class="text-red-500">{{ $message }}</p>

@enderror

</div>

<div class="mb-4">

<label for="description" class="block text-gray-700">Description:</label>

<textarea name="description" id="description" class="border border-gray-300 p-2 w-full">{{ old('description', $task->description) }}</textarea>

</div>

<div class="mb-4">

<label for="due_date" class="block text-gray-700">Due Date:</label>

<input type="date" name="due_date" id="due_date" required class="border border-gray-300 p-2 w-full" value="{{ old('due_date', $task->due_date) }}">

@error('due_date')

<p class="text-red-500">{{ $message }}</p>

@enderror

</div>

<div class="mb-4">

<label for="reminder_time" class="block text-gray-700">Reminder Time:</label>

<input type="datetime-local" name="reminder_time" id="reminder_time" class="border border-gray-300 p-2 w-full" value="{{ old('reminder_time', $task->reminder_time) }}">

</div>

<button type="submit" class="bg-blue-500 text-white p-2 rounded">Update Task</button>

</form>

@endforeach

</div>

</div>

</div>

</div>

</x-app-layout>

The edit form contains the same input fields as the create form above. It is loaded with the data of the task currently being edited.

Lastly, navigate to resources/views/tasks/index.blade.php and replace the content with the code below:

<x-app-layout>

<x-slot name="header">

<h2 class="font-semibold text-xl text-gray-800 leading-tight">

{{ __('Tasks') }}

</h2>

</x-slot>

<div class="py-12">

<div class="max-w-7xl mx-auto sm:px-6 lg:px-8">

<div class="bg-white overflow-hidden shadow-sm sm:rounded-lg">

<div class="p-6 text-gray-900">

<div class="mb-2">

<a href="{{ route('tasks.create') }}" class="p-2 border mb-4">Create New Task</a>

</div>

<ul class="mt-4">

@foreach ($tasks as $task)

<div class="mt-2">

<hr>

</div>

<li>

<h1 class="text-2xl">

<strong>{{ $task->title }}</strong> - Due: {{ $task->due_date }}

</h1>

<p class="text-gray-600">

{{ $task->description }}

</p>

<div class="flex gap-2 mt-4">

<div class="p-2 text-white bg-gray-700">

<a href="{{ route('tasks.edit', $task->id) }}">Edit</a>

</div>

<div class="p-2 text-white bg-red-700 rounded">

<form action="{{ route('tasks.destroy', $task->id) }}" method="POST" style="display:inline;">

@csrf

@method('DELETE')

<button type="submit">Delete</button>

</form>

</div>

</div>

</li>

@endforeach

</ul>

</div>

</div>

</div>

</div>

</x-app-layout>

In the code above, we have a link to the create form. It also loops through all the reminders and displays those belonging to this user.

Add navigation link

Next, we need to add a link to the task feature from our application navigation. Open resources/views/layouts/navigation.blade.php and add the following lines of code just after the dashboard navigation link.

//...existing code ...

<div class="hidden space-x-8 sm:-my-px sm:ms-10 sm:flex">

<x-nav-link :href="route('tasks.index')" :active="request()->routeIs('tasks.index')">

{{ __('Tasks') }}

</x-nav-link>

</div>

At this point, we should be able to test the CRUD operation of our task management system. Ensure that everything works correctly before moving on to the next section.

Setting reminders using Laravel task scheduling

Let’s proceed to implementing the reminder system for due tasks. To do this we will:

- Create a custom artisan command to send reminders through email.

- Register the command for automatic scheduling.

Let’s create a custom artisan command using the command below:

php artisan make:command SendTaskReminders

Once the command is completed, update the content of app/Console/Commands/SendTaskReminders.php with the code below:

<?php

namespace App\Console\Commands;

use App\Models\Task;

use Carbon\Carbon;

use Illuminate\Console\Command;

use Illuminate\Support\Facades\Mail;

class SendTaskReminders extends Command

{

/**

* The name and signature of the console command.

*

* @var string

*/

protected $signature = 'app:send-task-reminders';

/**

* The console command description.

*

* @var string

*/

protected $description = 'Send email reminders for tasks whose reminder time has arrived';

/**

* Execute the console command.

*/

public function handle()

{

$now = Carbon::now();

// Tasks that have not been notified yet and whose reminder time

// fell within the last 10 minutes.

$upcomingTasks = Task::where('last_notification_date', null)

->where('reminder_time', '>=', $now->clone()->subMinutes(10))

->where('reminder_time', '<=', $now)

->get();

foreach ($upcomingTasks as $task) {

$emailBody = <<<EOF

Hello,

This is a reminder for your task:

Title: {$task->title}

Description: {$task->description}

Due Date: {$task->due_date}

Please make sure to complete it on time!

Regards,

Your Task Reminder App

EOF;

Mail::raw($emailBody, function ($message) use ($task) {

$message->to($task->email)

->subject("Task Reminder: {$task->title}");

});

$task->last_notification_date = $now;

$task->save();

$this->info("Reminder email sent for task: {$task->title}");

}

return self::SUCCESS;

}

}

The main logic of our custom artisan command is written in the handle() method. From the code above, we get the current timestamp using Carbon::now().

Next, we query the database for all tasks that have not yet been notified (last_notification_date is null) and whose reminder_time falls between 10 minutes before the current time and the current time (the value of $now). The lower bound comes from this condition: 'reminder_time', '>=', $now->clone()->subMinutes(10). In MongoDB, all dates are stored in UTC. Even if your server uses a different timezone, you don’t need to change it.

To illustrate, let’s assume that the current time is $now = 2024-10-22 15:00:00. The task reminder query fetches all tasks whose reminder_time is between 2024-10-22 14:50:00 and 2024-10-22 15:00:00, that is, tasks whose reminder fell due within the last 10 minutes.

Then, we loop through the result and email a reminder to the owner of each matching task, recording the send time in last_notification_date so that the same reminder is not sent twice.
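The selection rule described above can also be expressed independently of Laravel. The following is a minimal sketch in Python, purely for illustration: the is_due_for_reminder function and the sample task dictionaries are hypothetical and only mirror the field names used in this tutorial.

```python
from datetime import datetime, timedelta, timezone


def is_due_for_reminder(task: dict, now: datetime, window_minutes: int = 10) -> bool:
    """True if the task has not been notified and its reminder_time
    lies in the closed window [now - window_minutes, now]."""
    if task.get("last_notification_date") is not None:
        return False
    window_start = now - timedelta(minutes=window_minutes)
    return window_start <= task["reminder_time"] <= now


# Same reference time as the example in the text, expressed in UTC.
now = datetime(2024, 10, 22, 15, 0, tzinfo=timezone.utc)

tasks = [
    {"title": "too old", "reminder_time": now - timedelta(minutes=11), "last_notification_date": None},
    {"title": "in window", "reminder_time": now - timedelta(minutes=5), "last_notification_date": None},
    {"title": "already sent", "reminder_time": now - timedelta(minutes=5), "last_notification_date": now - timedelta(minutes=4)},
    {"title": "not yet", "reminder_time": now + timedelta(minutes=1), "last_notification_date": None},
]

due = [task["title"] for task in tasks if is_due_for_reminder(task, now)]
print(due)
```

Because the scheduler runs every minute and the window is 10 minutes wide, the last_notification_date check is what prevents a task from being emailed on every run while it remains inside the window.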

For the task scheduler to work properly, you must configure your application to send emails.

Mailtrap.io is a great tool for testing email sending. Get a detailed explanation of how to configure your application to send emails using Mailtrap.

Scheduling notifications for task reminders

To wrap it all up, we need to schedule the artisan command created above to run every minute. Think of this as a way to automatically run php artisan app:send-task-reminders every minute.

There are different approaches to scheduling a task in Laravel. In this tutorial, we are working with Laravel version 11.9, which is the most recent version at the time of this writing. To proceed, navigate to routes/console.php and add the following:

use Illuminate\Support\Facades\Schedule;

//...existing code ...

Schedule::command('app:send-task-reminders')->everyMinute();

To test that this works, run the command below:

php artisan schedule:run

On a production server, you will need to configure a cron job to run the php artisan schedule:run command at regular intervals.

In a Linux- or Unix-based server, you can open your cron configuration file using the command below:

crontab -e

Add the line below to the cron configuration file:

* * * * * /usr/bin/php /path-to-your-project/artisan schedule:run >> /dev/null 2>&1

For this to work properly, replace /usr/bin/php with the path to your PHP binary and /path-to-your-project with the full path to your Laravel project on your server, then save and exit.

You can confirm that the entry was saved by listing your cron jobs with the command below:

crontab -l

Conclusion

We have come to the end of this tutorial; great job if you followed along. Now, for a recap, in this tutorial, we walked through the process of creating a task scheduler in Laravel and MongoDB. Some of the key implementations were:

- Configuring a Laravel project to work with MongoDB.

- Implementing CRUD features for our task scheduler.

- Creating a custom Laravel artisan command for our reminder system.

- Scheduling a task to run the artisan command in intervals.

- Walking through the steps to configure the cron job on a Linux-based server.

Find the project on GitHub. Feel free to clone it, sign up for MongoDB Atlas, and customize it to your specific needs. For more support, join the MongoDB Developer Community.

MMS • Renato Losio

AWS recently announced exportable public SSL/TLS certificates from AWS Certificate Manager, addressing a long-standing community request. This update allows users to export certificates along with their private keys for use beyond AWS-managed services.

Exportable public certificates enable customers to deploy ACM-issued certificates on Amazon EC2 instances, containers, and on-premises hosts, supporting AWS, hybrid, and multicloud workloads. Channy Yun, lead blogger at AWS, explains:

The exportable public certificates are valid for 395 days. There is a charge at time of issuance, and again at time of renewal. Public certificates exported from ACM are issued by Amazon Trust Services and are widely trusted by commonly used platforms such as Apple and Microsoft and popular web browsers such as Google Chrome and Mozilla Firefox.

Previously, users could only create public certificates within AWS Certificate Manager or import certificates issued by third-party certificate authorities (CAs) for use with AWS services, such as Elastic Load Balancing (ELB), CloudFront, and API Gateway. However, there was no way to export these certificates or create and manage certificates for external services or EC2 instances.

Corey Quinn, chief cloud economist at The Duckbill Group, published a long article, “AWS Certificate Manager Has Announced Exportable TLS Certificates, and I’m Mostly Okay With It,” reviewing the new feature. Quinn writes:

The pricing is quite reasonable. It costs either $15 per domain, or $149 for a wildcard certificate. That seemed a bit expensive to me, until I remembered what these things used to cost–and apparently still do. $400 per certificate isn’t at all uncommon from trustworthy vendors (…) ACM’s pricing is a comparative steal.

While many cloud practitioners question the benefits of the new solution over free options like Let’s Encrypt, Wojtek Szczepucha, solutions architect at AWS, highlights the main targets of the announcement:

Meeting regulations and compliance from a governance perspective. Simpler to manage from an operations perspective. It’s not invented out of thin air, but rather based on customers asking for it.

While the ability to export has been well received by the community and the new certificates are currently valid for over a year, the SSL/TLS certificate lifespans are expected to shrink to 200 days next year, 100 days in 2027, and only 47 days by 2029, as previously reported on InfoQ. When customers renew certificates on AWS Certificate Manager, they are charged for a new certificate issuance. Yun adds:

You can configure automatic renewal events for exportable public certificates by Amazon EventBridge to monitor certificate renewals and create automation to handle certificate deployment when renewals occur. (…) You can also renew these certificates on-demand.
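As a rough illustration of the automation described in the quote, the sketch below shows how an event handler might decide whether an incoming EventBridge event signals a renewed certificate that needs redeploying. It is a sketch under assumptions rather than AWS reference code: the function name and the sample ARN are hypothetical, and the event fields used here (source "aws.acm", detail-type "ACM Certificate Available", and an Action of "RENEWAL" in the detail) should be verified against the current ACM documentation before use.

```python
from typing import List

# Assumed event identifiers; verify against the ACM EventBridge documentation.
ACM_SOURCE = "aws.acm"
CERTIFICATE_AVAILABLE = "ACM Certificate Available"


def renewed_certificate_arns(event: dict) -> List[str]:
    """Return the certificate ARNs to redeploy, or an empty list if the
    event is not an ACM renewal notification."""
    if event.get("source") != ACM_SOURCE:
        return []
    if event.get("detail-type") != CERTIFICATE_AVAILABLE:
        return []
    if event.get("detail", {}).get("Action") != "RENEWAL":
        return []
    return list(event.get("resources", []))


# Hypothetical sample event; the account ID and certificate ID are placeholders.
sample_event = {
    "source": "aws.acm",
    "detail-type": "ACM Certificate Available",
    "resources": ["arn:aws:acm:us-east-1:123456789012:certificate/example-id"],
    "detail": {"Action": "RENEWAL"},
}

for arn in renewed_certificate_arns(sample_event):
    # A real handler would export the renewed certificate here and push it
    # to the hosts that use it; that step is omitted from this sketch.
    print(f"Redeploy needed for {arn}")
```

Keeping the filtering logic in a pure function like this makes it straightforward to unit test separately from the export and deployment steps.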

Quinn warns:

At least at launch, this feature doesn’t support ACME for automatic renewal, which means there’s likely to be an inherently manual process to renew them. While you can automate the entire certificate issuance dance via AWS APIs, I’ve worked with enough enterprise software to know how this is going to play out: it’s a manual process.
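The shrinking lifetimes mentioned earlier make the cost of a manual process easier to see with some simple arithmetic. The sketch below computes a lower bound on how many certificates must be issued to cover one year at each validity period cited in this article. The helper name is hypothetical, and real deployments typically renew before expiry, so actual counts would be higher.

```python
import math

# Validity periods (in days) cited in the article.
validity_periods = {
    "ACM exportable certificate today": 395,
    "expected limit next year": 200,
    "expected limit in 2027": 100,
    "expected limit by 2029": 47,
}


def min_issuances_per_year(validity_days: int) -> int:
    """Fewest back-to-back certificates needed to cover 365 days."""
    return math.ceil(365 / validity_days)


issuances = {label: min_issuances_per_year(days) for label, days in validity_periods.items()}

for label, count in issuances.items():
    print(f"{label}: at least {count} issuance(s) per year")
```

Moving from one issuance a year to eight or more is the kind of change that turns an occasional manual task into a recurring operational burden.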

Customers have to choose to make a certificate exportable before it’s issued, and there is no option to go back and retroactively export certificates that are already in use. This ensures that there is no default export of certificates with their private keys.

The ability to issue exportable public certificates was one of the main announcements of the recent re:Inforce conference. During the 3 days in Philadelphia, the cloud provider introduced other new security capabilities and services, including multi-party approval for logically air-gapped vaults in AWS Backup and the new AWS Security Hub for risk prioritization and response at scale.

MMS • A N M Bazlur Rahman

The University of Manchester’s Beehive Lab has released GPULlama3.java, marking the first Java-native implementation of Llama3 with automatic GPU acceleration. This project leverages TornadoVM to enable GPU-accelerated large language model inference without requiring developers to write CUDA or native code, potentially transforming how Java developers approach AI applications in enterprise environments.

At the heart of GPULlama3.java lies TornadoVM, an innovative heterogeneous programming framework that extends OpenJDK and GraalVM to automatically accelerate Java programs on GPUs, FPGAs, and multi-core CPUs. Unlike traditional GPU programming approaches that require developers to rewrite code in low-level languages like CUDA or OpenCL manually, TornadoVM enables GPU acceleration while keeping all code in pure Java.

According to the TornadoVM documentation, the system works by extending the Graal JIT compiler with specialized backends that translate Java bytecode to GPU-compatible code at runtime. When a method is submitted as a task and its loops are marked for acceleration with annotations like @Parallel, TornadoVM’s compilation pipeline converts standard Java bytecode through Graal’s Intermediate Representation, applies GPU-specific optimizations, and generates target-specific code: OpenCL C for cross-platform compatibility, PTX assembly for NVIDIA GPUs, or SPIR-V binary for Intel graphics.

// TornadoVM Task-Graph API example from documentation
TaskGraph taskGraph = new TaskGraph("computation")
    .transferToDevice(DataTransferMode.FIRST_EXECUTION, data)
    .task("process", MyClass::compute, input, output)
    .transferToHost(DataTransferMode.EVERY_EXECUTION, output);

TornadoExecutionPlan executor = new TornadoExecutionPlan(taskGraph.snapshot());
executor.execute();

The TornadoVM programming guide demonstrates how developers can utilize hardware-agnostic APIs, enabling the same Java source code to run identically on various hardware accelerators. The TornadoVM runtime handles all device-specific optimizations, memory management, and data transfers automatically.
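To make the idea of "pure Java" kernels more concrete, here is a minimal sketch of what a task method such as the `MyClass::compute` reference in the snippet above might look like. The method body, the class name `ParallelKernelSketch`, and the use of plain `float[]` arrays are assumptions for illustration. The `@Parallel` annotation is declared locally as a stand-in so the sketch compiles without TornadoVM on the classpath; a real project would import TornadoVM's own annotation and may need TornadoVM's array types instead.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.util.Arrays;

public class ParallelKernelSketch {

    // Local stand-in (assumption) so this file compiles on its own.
    // In a TornadoVM project, the framework's @Parallel annotation is used instead.
    @Target(ElementType.LOCAL_VARIABLE)
    @Retention(RetentionPolicy.SOURCE)
    @interface Parallel {}

    // Hypothetical kernel: doubles every element of the input.
    // Each iteration is independent of the others, which is what makes
    // the loop a candidate for parallel execution on an accelerator.
    public static void compute(float[] input, float[] output) {
        for (@Parallel int i = 0; i < input.length; i++) {
            output[i] = input[i] * 2.0f;
        }
    }

    public static void main(String[] args) {
        float[] input = {1.0f, 2.0f, 3.0f};
        float[] output = new float[input.length];
        // Without TornadoVM this simply runs sequentially on the JVM.
        compute(input, output);
        System.out.println(Arrays.toString(output));
    }
}
```

The point of the sketch is that the kernel stays an ordinary static Java method: the same source runs on a plain JVM, and the annotation only marks the loop as safe to parallelize.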

According to the GPULlama3.java repository, the project targets three primary GPU families through TornadoVM’s backends:

- NVIDIA GPUs: Full support through both OpenCL and PTX backends

- Intel GPUs: Including Arc discrete graphics and integrated HD Graphics through the OpenCL backend

- Apple Silicon: M1/M2/M3 support through OpenCL (though Apple has deprecated OpenCL in favour of Metal)

The repository indicates that configuration is handled through command-line flags:

# Run with GPU acceleration (from project README)
./llama-tornado --gpu --verbose-init --opencl --model beehive-llama-3.2-1b-instruct-fp16.gguf --prompt "Explain the benefits of GPU acceleration."

The GPULlama3.java implementation leverages modern Java features as documented in the repository:

- Java 21+ requirement for Vector API and Foreign Memory API support

- GGUF format support for single-file model deployment

- Quantization support for Q4_0 and Q8_0 formats to reduce memory requirements
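As a rough illustration of how 8-bit block quantization reduces memory, the sketch below quantizes a block of 32 floats to signed bytes plus one shared scale, in the spirit of the Q8_0 format. It is a simplified sketch, not GPULlama3.java's code: the class and method names are hypothetical, and the scale is kept as a 32-bit float here for simplicity, whereas GGUF files store it as a 16-bit float.

```java
public class Q8BlockSketch {

    // Number of values that share one scale in this sketch.
    static final int BLOCK_SIZE = 32;

    // Quantizes one block in place into 'quants' and returns the shared scale.
    // Each value is mapped to round(value / scale), which lies in [-127, 127].
    public static float quantizeBlock(float[] values, byte[] quants) {
        float maxAbs = 0.0f;
        for (float v : values) {
            maxAbs = Math.max(maxAbs, Math.abs(v));
        }
        float scale = maxAbs / 127.0f;
        for (int i = 0; i < values.length; i++) {
            if (scale == 0.0f) {
                quants[i] = 0; // an all-zero block stays all zero
            } else {
                quants[i] = (byte) Math.round(values[i] / scale);
            }
        }
        return scale;
    }

    // Recovers an approximation of the original value.
    public static float dequantize(byte quant, float scale) {
        return quant * scale;
    }

    public static void main(String[] args) {
        float[] values = new float[BLOCK_SIZE];
        for (int i = 0; i < BLOCK_SIZE; i++) {
            values[i] = (i - 16) * 0.1f; // made-up weights for the demo
        }
        byte[] quants = new byte[BLOCK_SIZE];
        float scale = quantizeBlock(values, quants);

        float maxError = 0.0f;
        for (int i = 0; i < BLOCK_SIZE; i++) {
            maxError = Math.max(maxError, Math.abs(values[i] - dequantize(quants[i], scale)));
        }
        System.out.println("scale=" + scale + " maxError=" + maxError);
    }
}
```

In this sketch a block shrinks from 128 bytes (32 floats) to 32 bytes of quantized values plus the scale, at the cost of a rounding error of at most about half the scale per value.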

The project builds upon Mukel’s original Llama3.java, adding GPU acceleration capabilities through TornadoVM integration.

GPULlama3.java joins other Java LLM projects, including:

- JLama: A modern LLM inference engine for Java with distributed capabilities

- Llama3.java: The original pure Java implementation focusing on CPU optimization

As noted in Quarkus’s blog on Java LLMs, the Java ecosystem is expanding its AI/ML capabilities, enabling developers to build LLM-powered applications without leaving the Java platform.

TornadoVM originated from research at the University of Manchester, aiming to make heterogeneous computing accessible to Java developers. The framework has been in development since 2013 and continues to progress with new backend support and optimizations.

GPULlama3.java is currently in beta, with ongoing performance optimization and benchmark collection. Performance on Apple Silicon remains suboptimal due to the deprecation of OpenCL. The TornadoVM team is developing a Metal backend to improve Apple Silicon support, and is also working on optimizing transformer operations and broadening model architecture compatibility.

GPULlama3.java represents a significant advancement in bringing GPU-accelerated large language model (LLM) inference to the Java ecosystem. By leveraging TornadoVM, the project demonstrates that Java developers can utilize GPU acceleration without leaving their familiar programming environment. While performance optimization continues and the project remains in active development, it opens up new possibilities for Java-based AI applications in enterprise settings, where Java’s strengths in security, scalability, and maintainability are highly valued.

For developers interested in exploring GPU-accelerated LLM inference in Java, the project is open source and accessible on GitHub, complete with documentation and examples to help get started.

Article: The State Space Solution to Hallucinations: How State Space Models are Slicing the Competition

MMS • Albert Lie

Key Takeaways

- Transformers often hallucinate because they prioritize generating statistically likely text rather than factual accuracy.

- State Space Models (SSMs) offer a more reliable alternative for maintaining factual accuracy and context.

- SSMs process information sequentially, making them more efficient and less prone to hallucinations.

- Case studies on Perplexity and RoboMamba demonstrate the practical impact of SSMs in real-world scenarios.

- Practical guidelines are provided for implementing SSMs, including architecture selection, memory optimization, and real-time data integration.

AI-powered search tools like Perplexity and Arc are quickly becoming the go-to platforms for millions of users seeking instant answers. These tools promise quick, conversational responses with cited sources, making them feel more like talking to a smart assistant than using a traditional search engine. However, there is a growing problem: these systems often hallucinate.

In other words, they confidently make up facts, misquote sources, and recycle outdated information. For users, this means you might get an answer that sounds right, but is actually wrong. For example, Air Canada’s chatbot once confidently gave a fake refund policy to a grieving customer, leading the airline to be legally ordered to compensate him.

While many people blame bad data or unclear prompts, the real issue is deeper and tied to the architecture of most AI models: the transformer. In this article, I’ll explain why transformers struggle with hallucinations, how SSMs offer a promising solution, and what this shift could mean for the future of AI search.

Why Transformers Hallucinate

Transformers are the backbone of popular AI models like GPT-4. They predict the next word in a sentence by analyzing relationships between all words in a text at once. This attention mechanism is powerful for generating fluent and coherent text, but it comes with trade-offs:

Token Prediction, Not Truth Seeking

Transformers are designed to generate text that is statistically likely, not necessarily factually correct. When the training data has gaps, noise, or ambiguity, the model fills in with plausible guesses that sound correct but may not match the context of the prompt or earlier information.
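The selection step behind "statistically likely" can be sketched in a few lines. The example below turns raw scores into probabilities with a softmax and picks the highest one (greedy decoding). The candidate strings and scores are made up for illustration, and real models sample over very large vocabularies with more elaborate strategies; the point is only that nothing in this step checks whether the chosen continuation is true.

```java
public class GreedyDecodingSketch {

    // Converts raw scores (logits) into a probability distribution.
    public static double[] softmax(double[] logits) {
        double max = Double.NEGATIVE_INFINITY;
        for (double logit : logits) {
            max = Math.max(max, logit);
        }
        double[] probs = new double[logits.length];
        double sum = 0.0;
        for (int i = 0; i < logits.length; i++) {
            probs[i] = Math.exp(logits[i] - max); // subtract max for numerical stability
            sum += probs[i];
        }
        for (int i = 0; i < probs.length; i++) {
            probs[i] /= sum;
        }
        return probs;
    }

    // Returns the index of the largest value.
    public static int argmax(double[] values) {
        int best = 0;
        for (int i = 1; i < values.length; i++) {
            if (values[i] > values[best]) {
                best = i;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        // Hypothetical continuations and scores; none of these come from a real model.
        String[] candidates = {"within 30 days", "within 90 days", "not at all"};
        double[] logits = {2.1, 1.9, 0.3};

        double[] probs = softmax(logits);
        // The most probable continuation wins, whether or not it is factually right.
        System.out.println("Chosen continuation: " + candidates[argmax(probs)]);
    }
}
```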

Computational Overload

Transformers analyze every word relationship, which becomes expensive and inefficient for long texts. As a result, they sometimes take shortcuts, losing important context and increasing the risk of errors.
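To show where the cost comes from, here is a minimal sketch of attention weights computed over a short sequence. It is deliberately simplified and rests on stated assumptions: the token vectors serve as both queries and keys (no learned projections), there is a single head, and no masking is applied. Even so, it makes the key property visible: for n tokens, the result is an n x n table of scores.

```java
public class AttentionCostSketch {

    // Computes softmax-normalized attention weights for a sequence of token vectors.
    // Row i of the result says how strongly token i attends to every token j,
    // so the work and the memory both grow with n * n.
    public static double[][] attentionWeights(double[][] tokens) {
        int n = tokens.length;
        int dim = tokens[0].length;
        double[][] weights = new double[n][n];

        for (int i = 0; i < n; i++) {
            double max = Double.NEGATIVE_INFINITY;
            for (int j = 0; j < n; j++) {
                double dot = 0.0;
                for (int k = 0; k < dim; k++) {
                    dot += tokens[i][k] * tokens[j][k];
                }
                weights[i][j] = dot / Math.sqrt(dim); // scaled dot-product score
                max = Math.max(max, weights[i][j]);
            }
            double sum = 0.0;
            for (int j = 0; j < n; j++) {
                weights[i][j] = Math.exp(weights[i][j] - max);
                sum += weights[i][j];
            }
            for (int j = 0; j < n; j++) {
                weights[i][j] /= sum; // each row becomes a probability distribution
            }
        }
        return weights;
    }

    public static void main(String[] args) {
        // Three made-up two-dimensional token vectors.
        double[][] tokens = {{1.0, 0.0}, {0.0, 1.0}, {1.0, 1.0}};
        double[][] weights = attentionWeights(tokens);
        System.out.println("Score table size: " + weights.length + " x " + weights[0].length);
    }
}
```

Doubling the sequence length in this sketch quadruples the number of scores, which is why very long inputs become expensive.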

Source Blindness

When given multiple sources, transformers can’t always tell which ones are reliable. This blindness can lead them to cite AI-generated or outdated information, as when Perplexity cited an AI-generated LinkedIn post about Kyoto festivals.

The end result is that AI search tools can act like persuasive storytellers: they are confidently wrong, giving answers that sound good but aren’t always accurate.

State Space Models: A Step Toward Context-Aware Accuracy

SSMs are emerging as a promising alternative to transformers for many sequence-based tasks. Unlike transformers, SSMs process information step by step, updating a memory bank as they go. Arguably, this resembles how humans read and retain information.

How SSMs Work

Using step-by-step analysis, SSMs read information one piece at a time, building understanding incrementally. This reduces the risk of context overload and helps the model keep track of important details.

SSMs are also more computationally efficient. Their memory and compute needs grow linearly with the length of the input, rather than quadratically as with the self-attention in transformers. As a result, SSMs can handle much longer texts or sequences without running into the performance issues found in transformers.
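The linear growth described above can be seen in a toy recurrence. The sketch below runs a scalar linear state-space model, h_t = a * h_(t-1) + b * x_t and y_t = c * h_t, over a sequence. It is a minimal illustration under stated assumptions: the state is a single number and the parameters a, b, and c are fixed constants chosen for the demo, whereas models such as Mamba use high-dimensional states and input-dependent parameters.

```java
import java.util.Arrays;

public class LinearStateSpaceSketch {

    // Runs a scalar state-space recurrence over the whole input:
    //   h_t = a * h_(t-1) + b * x_t   (update the state with the new input)
    //   y_t = c * h_t                 (read the output from the state)
    // One pass over the input, one state variable: time grows linearly with
    // sequence length and the state stays the same size throughout.
    public static double[] scan(double[] x, double a, double b, double c) {
        double[] y = new double[x.length];
        double h = 0.0; // the state starts empty
        for (int t = 0; t < x.length; t++) {
            h = a * h + b * x[t];
            y[t] = c * h;
        }
        return y;
    }

    public static void main(String[] args) {
        // A single impulse followed by silence; the state decays by half each step.
        double[] x = {1.0, 0.0, 0.0, 0.0};
        double[] y = scan(x, 0.5, 1.0, 1.0);
        System.out.println(Arrays.toString(y)); // prints [1.0, 0.5, 0.25, 0.125]
    }
}
```

The first input is never revisited directly; its influence survives only through the state, which is the sense in which an SSM reads a sequence one piece at a time.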

SSMs store key facts in a controlled state, which helps minimize errors from conflicting information. In AI dialogue systems, maintaining a consistent internal state is crucial for ensuring coherent and contextually relevant interactions. For instance, Microsoft’s research into goal-oriented dialogue systems highlights the necessity of memory in complex tasks like vacation planning. These tasks involve multiple parameters and require the system to remember user preferences and constraints across the conversation. Without memory, the system would struggle to provide consistent responses, leading to user frustration.

A technical example of this is the MemoryBank mechanism, which enhances large language models (LLMs) by incorporating long-term memory. MemoryBank allows the model to recall relevant memories, update them over time, and adapt to a user’s personality. This is achieved through a memory updating mechanism inspired by the Ebbinghaus Forgetting Curve, enabling the AI to forget and reinforce memories based on their significance and the time elapsed.
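The forgetting behavior can be sketched with the classic exponential form of the Ebbinghaus curve, R = exp(-t / S), where t is the time since a memory was last recalled and S is its strength. The code below is a simplified illustration, not MemoryBank's implementation: the class and method names are hypothetical, and the rule of adding 1 to the strength and resetting the clock on each recall is an assumption based on the mechanism as described.

```java
public class ForgettingCurveSketch {

    // Retention according to the exponential forgetting curve R = exp(-t / S).
    public static double retention(double elapsed, double strength) {
        return Math.exp(-elapsed / strength);
    }

    // A single stored memory with a strength and a last-recall timestamp.
    static final class MemoryItem {
        final String text;
        double strength = 1.0;   // assumed starting strength
        double lastRecall;       // time of the most recent recall

        MemoryItem(String text, double createdAt) {
            this.text = text;
            this.lastRecall = createdAt;
        }

        // Recalling a memory reinforces it and restarts its decay.
        void recall(double now) {
            strength += 1.0;
            lastRecall = now;
        }

        double retentionAt(double now) {
            return retention(now - lastRecall, strength);
        }
    }

    public static void main(String[] args) {
        // Hypothetical memory; time is measured in arbitrary units (for example, days).
        MemoryItem item = new MemoryItem("User prefers window seats", 0.0);
        System.out.println("Retention at t=2 without recall: " + item.retentionAt(2.0));

        item.recall(2.0); // the memory is used again at t=2
        System.out.println("Retention at t=4 after recall:   " + item.retentionAt(4.0));
    }
}
```

In this sketch, the same two units of elapsed time leave a reinforced memory with higher retention than a fresh one, which is how significant, frequently used memories outlast incidental ones.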

Recent research shows that SSMs, especially models like Mamba, can perform competitively with transformers on many language tasks, particularly those involving long sequences or the need to track information over time. While transformers still have an edge in some areas, SSMs are closing the gap and offer unique advantages for certain applications.

Case Study 1: Perplexity’s Hallucination Trap

Perplexity, one of the leading AI-powered search engines, provides a clear example of why architecture matters. Despite using retrieval-augmented generation (RAG) to pull in real-time data, Perplexity has cited non-existent Vietnamese markets and AI-generated travel guides. This happens for several reasons.

The first is trust in unreliable sources: transformers treat all retrieved data equally, even if it’s AI-generated misinformation. Transformer models, such as BERT and GPT, are designed to process and generate text based on patterns learned from large datasets. Unfortunately, they lack inherent mechanisms to assess the veracity of the information they process. This limitation means that when these models retrieve information, especially through techniques like RAG, they may treat all sources with equal weight, regardless of their reliability.