Category: Uncategorized

MMS • Steef-Jan Wiggers

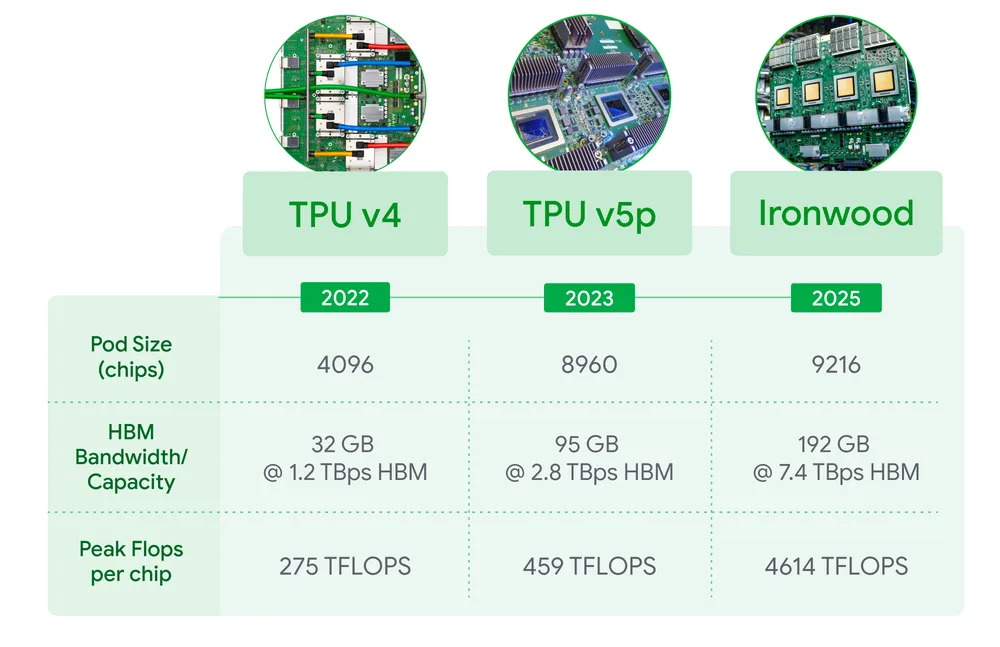

Google has unveiled its seventh-generation Tensor Processing Unit (TPU), Ironwood, at Google Cloud Next 25. Ironwood is Google’s most performant and scalable custom AI accelerator to date and the first TPU designed specifically for inference workloads.

Google emphasizes that Ironwood is designed to power what they call the “age of inference,” marking a shift from responsive AI models to proactive models that generate insights and interpretations. The company states that AI agents will use Ironwood to retrieve and generate data, delivering insights and answers.

A respondent in a Reddit thread on the announcement said:

Google has a huge advantage over OpenAI because it already has the infrastructure to do things like making its own chips. Currently, it looks like Google is running away with the game.

Ironwood scales up to 9,216 liquid-cooled chips, connected with Inter-Chip Interconnect (ICI) networking, and is a key component of Google Cloud’s AI Hypercomputer architecture. Developers can leverage Google’s own Pathways software stack to utilize the combined computing power of tens of thousands of Ironwood TPUs.

The company states, “Ironwood is our most powerful, capable, and energy-efficient TPU yet. And it’s purpose-built to power thinking, inferential AI models at scale.”

Furthermore, the company highlights that Ironwood is designed to manage the computation and communication demands of large language models (LLMs), mixture of experts (MoEs), and advanced reasoning tasks. Ironwood minimizes data movement and latency on-chip and uses a low-latency, high-bandwidth ICI network for coordinated communication at scale.

Ironwood will be available for Google Cloud customers in 256-chip and 9,216-chip configurations. The company claims that a 9,216-chip Ironwood pod delivers more than 24x the compute power of the El Capitan supercomputer, with 42.5 Exaflops compared to El Capitan’s 1.7 Exaflops per pod. Each Ironwood chip boasts a peak compute of 4,614 TFLOPS.

Ironwood also features an enhanced SparseCore, a specialized accelerator for processing ultra-large embeddings, expanding its applicability beyond traditional AI domains to finance and science.

Other key features of Ironwood include:

- 2x improvement in power efficiency compared to the previous generation, Trillium.

- 192 GB of high-bandwidth memory (HBM) per chip, 6x that of Trillium.

- 1.2 TBps bidirectional ICI bandwidth, 1.5x that of Trillium.

- 7.37 TB/s of HBM bandwidth per chip, 4.5x that of Trillium.

(Source: Google blog post)

Regarding the last feature, a respondent on another Reddit thread commented:

Tera? Terabytes? 7.4 Terabytes? And I’m over here praying that AMD gives us a Strix variant with at least 500GB of bandwidth in the next year or two…

While NVIDIA remains a dominant player in the AI accelerator market, a respondent in another Reddit thread commented:

I don’t think it will affect Nvidia much, but Google is going to be able to serve their AI at much lower cost than the competition because they are more vertically integrated, and that is pretty much already happening.

In addition, in yet another Reddit thread, a correspondent commented:

The specs are pretty absurd. Shame Google won’t sell these chips, a lot of large companies need their own hardware, but Google only offers cloud services with the hardware. Feels like this is the future, though, when somebody starts cranking out these kinds of chips for sale.

And finally, Davit tweeted:

Google just revealed Ironwood TPU v7 at Cloud Next, and nobody’s talking about the massive potential here: If Google wanted, they could spin out TPUs as a separate business and become NVIDIA’s biggest competitor overnight.

These chips are that good. The arms race in AI silicon is intensifying, but few recognize how powerful Google’s position actually is. While everyone focuses on NVIDIA’s dominance, Google has quietly built chip infrastructure that could reshape the entire AI hardware market if it decides to go all-in.

Google states that Ironwood provides increased computation power, memory capacity, ICI networking advancements, and reliability. These advancements, combined with improved power efficiency, will enable customers to handle demanding training and serving workloads with high performance and low latency. Google also notes that leading models like Gemini 2.5 and AlphaFold run on TPUs.

The announcement also highlighted that Google DeepMind has been using AI to aid in the design process for TPUs. An AI method called AlphaChip been used to accelerate and optimize chip design, resulting in what Google describes as “superhuman chip layouts” used in the last three generations of Google’s TPUs.

Earlier, Google reported that AlphaChip had also been used to design other chips across Alphabet, such as Google Axion Processors, and had been adopted by companies like MediaTek to accelerate their chip development. Google believes that AlphaChip has the potential to optimize every stage of the chip design cycle and transform chip design for custom hardware.

Podcast: Achieving Sustainable Mental Peace in Software Engineering with Help from Generative AI

MMS • John Gesimondo

Transcript

Shane Hastie: Good day, folks. This is Shane Hastie for the InfoQ Engineering Culture Podcast. Today, I’m sitting down with John Gesimondo. John, welcome. Thanks for taking the time to talk to us.

John Gesimondo: Yes. Thank you. Glad to be here.

Shane Hastie: Now, John, you were recently a QCon speaker. But before we get into that, tell us a little bit, who’s John?

Introductions [01:09]

John Gesimondo: Sure. Yes. I’m John. I’ve been a software engineer for quite a while now, I guess about 15 years. My undergrad was actually in business. I’m a self-taught engineer. But with enough time and diligence, you can do anything. I’ve been working at Netflix for the last six years, doing various things, mostly internal tools, and this will be relevant later, but I have ADHD and definitely some traits of autism. I’m super keen on software in the tooling space, and then I really enjoy optimizing life, especially thinking about mental health and how that touches on technology.

Shane Hastie: Your talk, Achieving Sustainable Mental Peace at Work, with the twist, using Gen AI… What brought that about?

Using generative AI for mental peace [02:07]

John Gesimondo: Well, organic. I basically just started using generative AI for basically everything in my life. There’s people at work that made fun of me for this. It’s actually hilarious, but I, in the early days, tried to apply Gen AI to everything I could possibly think of just as a pure explore. When things are brand new, it’s nice to know what they’re capable of. I really pushed the limits, and I found quite a lot of sticky areas with a lot of value that were actually in this work adjacent/work support, I guess, space. Maybe you could call it the socio side of sociotechnical. There’s been a lot there that has really helped me out at work, so tried to turn it into a framework and give it as a talk.

Shane Hastie: Let’s dig in first there… You’ve been using that to support the socio side of the sociotechnical systems. Why is mental such a challenge for us in the software engineering space?

Challenges of mental health in software engineering [03:20]

John Gesimondo: Oh, man. Software is just really complicated, the way that it’s actually made. Then, it’s obviously also technically challenging. There’s a lot of needs being managed and a lot of communication and organization. Then, I find that there’s a temporal nature of everything that really messes with it.

If you can manage to get the usual corporate stuff, let’s say, compared to a non-engineering situation in a corporation, it’s already hard. There’s a lot of organizing. You have cross-functional difficulty aligning goals and all this type of stuff. But then, with software, you have this added element of the temporal nature of “why are things the way they are? Who knows actually how this software works? Why did this person make this decision? Oh, they don’t work here anymore”. That creates a whole other aspect of things that makes it impossible to really keep things structured and predictable and on time.

You’ve also got incidents that happen. You have support rotation. You have this need to focus. But then, at the same time, there’s so much work that’s going to interrupt you by the nature of how this whole thing works. Quite a lot of forces going on here and most of them don’t have good answers. That leaves you with difficulty.

Shane Hastie: You said you came up with a framework. What is the framework?

Overview of the Sustainable Mental Peace Framework [04:58]

John Gesimondo: The concept that we’re trying to get to is sustainable mental peace, which I call basically a steadfast calm while you’re in the middle of work chaos.

If we accept that work chaos is inevitable based on the reasons we just gave, then there’s not much you can do to control the environment permanently. Therefore, you have to take matters into your own hands to make sure that you can deal with living in that environment in a peaceful way. That’s where the framework comes in.

The framework is specifically limited to stuff where you can use generative AI since that was the topic of the talk. The way that I framed it is… starting from the most unstable, is basically having tools to recover quickly from emotional difficulty. Then, next up is getting unstuck when you’re stuck. Then, the next is to enhance your planning and communication skills because proper planning and proper proactive communication can save you so much of the interruptions I was just mentioning. Structure often, in this area, gives you a sense of calm. That’s very helpful.

Then, after that, now, we’re in a state that’s more stable. You’d want to try to add more time doing the things that you intrinsically enjoy and attempt to shortcut the things that aren’t really bringing you any personal value, which we now have some tools to do. Then, lastly, try to do some divergent thinking to keep things interesting, and to see opportunities, and to have some fun sometimes.

Shane Hastie: We maybe work through the different stages. It feels to me almost like a Maslow Hierarchy Pyramid. If we start at that base, recovering quickly from emotional difficulties, that just… The stereotypical engineer is considered not to have emotions. But of course, we’re all emotional beings and do respond. How do we recover quickly?

Stage 1: Recovering from emotional difficulty and getting unstuck [07:24]

John Gesimondo: Yes, definitely. I think I can group the first two, so recovering from emotions and overcoming stuck points. I think they’re very similar. They’re both often emotional in nature. When I say recover from emotions, I’m thinking a more acute situation, like some trigger big or small, or sitting in a room with someone that you really don’t like, or something… Things happen. It’s just… creates these unstable mental states. For being stuck, it’s a milder version, but it’s as practically important.

How do you do this? In either case, basically, we’re looking at a AI therapy/AI coach model. With the “recover from emotions”, I recommend using prompts that actually mention some therapy techniques, so using CBT technique or using IFS, which is Internal Family Systems, can sometimes help. Especially if the person using this already goes to therapy, this is extra helpful because it’s like having the tools that your therapist may have already gone over with you available to you at any time, and you don’t have to run the whole thing yourself. You’re still having that interaction which really helps open up.

For overcoming stuck points, it’s a blend. It’s like sometimes you need a coach, which AI… Again, similarly, you can just prompt, in a way, to ask, “Be my coach. Help me through this. Get me back to taking action”. But in other times, it can start the work for you. Sometimes you’re stuck because you have blank page syndrome, for instance. You don’t have to deal with that anymore. You can generate a crappy draft that you hate, but at least you can be the editor now, instead of a person staring at a blank screen. I think those two… You’re looking at using the AI as a coach/therapist.

Shane Hastie: Then, moving into the next level, the planning and communication, getting better at that.

Stage 2: Enhancing planning and communication with AI [09:53]

John Gesimondo: In this case, now you’re looking at, again, a mix. I guess, in this one, you’re looking at actually doing things in the standard way. I think that this one touches on the neurodivergence themes a lot because this is where, at least in my experience, as someone with ADHD, my thoughts are very scattered. Actually, getting them into a structure that other people understand is not a free exercise.

Now, with copilots and such, that exercise can be actually fairly close to free, I would say: dumping a bunch of bullet points and making sure that I covered everything, and asking for a review and to expand the set of points to a proposed audience, like, “If I were this type of person, what would I say? If you were that type of person, what would you say?” Agreeing on the content and then refactoring. Put it in programming terms, the whole thing into exactly the way that the target audience would expect to see it. You can even get that down to quite local. Giving an example and a prompt, in this case, is super helpful for structure, tone, language.

As much as I love creativity, as a neurodivergent person, when it comes to planning and communication, the less creative the end result is, the more buttoned up and structured and follows-what-people-expect it is, the less people will ask you questions and interrupt you later. They’re the reason for these things. At least in my experience, the way I’m using this is as a shim. I call it the neurodivergent planning shim. You’re just taking away the code-switching that I would have to do to write this myself and delegating that work to the AI.

Shane Hastie: But it starts, of course, with your knowledge, what you’re providing it.

John Gesimondo: Yes, exactly. But that’s the fun part, at least for me. The back and forth in that middle process, when it’s about agreeing, “Is this the right content?” is really fun. Asking different personas… “What would this person say? Would that person say?” It’s a lot more efficient than actually asking those people, which, of course, we’ll do anyway later. But it’s a nice creative brainstorming at the beginning, and then just speed run me through the structuring part because my brain structure… not a natural fit, I will say.

Stage 3: Maximizing flow and enjoyment in Engineering Work [12:42]

For the add time in flow bit, this is all about… Each of us have different parts of engineering that we like to do the most. Even when you look within what I’ll call, I guess, the core loop of engineering, of actually writing code and testing it, documenting it, and getting feedback, and all of this stuff, even within that process, there’s a lot of preferences.

Even within writing the code, there are preferences. Some people like to do more theoretical stuff. Other people like to do the structuring and architecture and system design. I think that with the flow aspect here, what you’re hoping to do is spend more time on the parts you really like and less time on the other parts, and especially don’t get interrupted.

Previously, before AI was around, we all had to go to Stack Overflow and read through all kinds of different correct and incorrect answers for our problems. When you have to do that in the middle of a working session, it takes you out of the flow of everything.

Now, with copilots, we often get that information at the snap of a finger. That’s already helpful. But on top of that, I recommend tuning your overall time spent coding to be tuned towards the areas that you really deeply enjoy/that feel immersive for you and do that using any latest frontier tooling that you can find. The cool thing is that this is currently where the frontier moves the fastest, is in the copilot space. This one’s super fun to keep up to date on.

Shane Hastie: And then divergent thinking.

Stage 4: Enabling divergent thinking and creativity [14:35]

John Gesimondo: Yes. Divergent thinking is really interesting. I think this is a strength of neurodivergent people. I mean, there’s a reason why “divergent” is in the name. But, in a sense, it’s interesting because this whole framework is helping neurodivergent people and others. But for neurodivergent people, it helps them be a little more neurotypical for less cost. But in this case, this is the flip. This is like, “Help neurotypicals be more neurodivergent”.

It’s funny. My story is I’m looking for a place to live. I was considering different suburbs or, back to San Francisco, in the city. I just was stuck in… There’s trade-offs everywhere, and nothing’s capturing my attention. Sometimes when I’m in this situation, including at work, the answer is zoom out and get a little more divergent with the thinking. In this case, I asked ChatGPT for some ideas of… I asked it for some housing ideas. It gave me a normal list, and then I asked it, “Get a little more divergent with this list”. It came up with some really funny stuff.

One of them is the tethered air home. This is a living pod suspended from cables between skyscrapers, or cliffs, or large trees. Nice hammock-like structure; could be retractable, and can retract the ground level when needed. Not bad. There’s one that was really funny, which was a distributed home network, so instead of one location, your home is spread across multiple cities. But its cloud-connected lockers store your essentials, minimizing the need for luggage. I don’t think that’s how the cloud works. I don’t think that’s how physical systems work but sounds good.

Anyway, it really got me very unstuck. Now, my mind is open to… I don’t know how to explain it. That’s what happens when you do creative thinking. It just got that problem a little bit shaken up into some new directions. You can do that at work. I think especially when you look at career, or you look at people being technical leaders, you want to spend some time thinking about what could be. Sometimes, these crazy ideas turn into… They spark some actual practical ideas. It’s a fun process.

Shane Hastie: As a neurodivergent person, what has your journey been and how has the environment supported or inhibited you?

Personal journey with neurodivergence [17:40]

John Gesimondo: Well, I didn’t know I was neurodivergent until during COVID. There’s, I guess, two eras of this journey: unaware and the aware stage. I think the only time that being unaware was blissful was certain parts of school. I actually am a very, very curious person so whenever I was interested in the topic that was in that class, I did quite well. It was miserable when I wasn’t interested, but that didn’t happen that often, luckily for me. That was I will call the mostly blissful unaware period.

Where it was no longer blissful is when I started working. I think the further I’ve gone in my career, the more difficult it’s been, to be honest. As a junior who’s just expected to learn all the time, it’s school-like. It’s somewhat structured, depending on the environment. My environment was very structured, so that was great.

During mid-career, there’s a lot of learning to be done still, and a little bit more confidence, I think, in your abilities. You start to get to know your strengths, something that helps. There’s this linearish feeling/sense of learning. It’s clear that you’re getting smarter and better at this job, even if you just look at a maybe two-week basis. Even within a sprint, you’re like, “Oh, wow. That was hard. Now, I could do it again much faster”. It’s great.

Then I think it gets a little difficult after that. As a senior, it’s like the structure lines start to blur. I think that’s especially true at Netflix, but I’m sure it’s true of many places. But the structure starts to decrease, and, as you get even further past that, you’re expected to add some structure for the less tenured folks.

This is where I think the burden of planning and communicating and structuring things in ways that other people can understand and being able to manage… I don’t have an answer for this one, but being able to manage the worker… The maker schedule and the manager schedule getting mixed in your calendar is brutal when you have ADHD.

I think this is where the rigor of my systems has had to increase a lot lately because of pursuing being a technical leader. I mean, I think, for a lot of people… They just wouldn’t. But I think, in good and bad ways, my achiever side is stronger than my neurodivergence side is suffering. That means build the tools, learn the processes, add the rigor to the systems, and rely on AI so that I can have my sustainable mental peace and be able to achieve what I’m looking to achieve.

Shane Hastie: You said you got a formal recognition during COVID. What difference has that made for you?

The impact of formal neurodivergence recognition [21:03]

John Gesimondo: Oh. Yes. It helps and it hurts. I know there’s a lot of mixed feelings about finding out that you’re neurodivergent later in life. But I think, in a practical way, for me, it’s mostly been beneficial because you really have to understand that you experience things differently than the people around you to get to some point that you can do something about it.

If you don’t know that, then it feels like the world is gaslighting you all the time. Someone proposes a system, like, “Oh, just use a task list to organize your tasks”, and that system doesn’t quite work for you… Well, if you don’t know that you have ADHD, then it just makes you feel like, “Oh, something’s wrong with me, or I’m lazy, or I didn’t try hard enough”.

But if you do know you have ADHD, then you know that you should check… “Is this an ADHD problem, or is it a me problem?” You’ll find out, in this example, that it’s an ADHD problem. You just find an alternative, or you give yourself extra care and patience on the journey of trying to figure out how that works usually to find a workaround, or find support tends to work better. Definitely need patience either way.

I often see this analogy with glasses. It’s like if you don’t know that your vision is bad, and then someone’s talking to you about something that’s a little too far away, and you can’t see it, you don’t have anything to work with there. It’s a crazy feeling, like, “What are they talking about? Should I say I don’t understand what they’re talking about? Because they sound pretty confident about it. Everyone else knows what they’re talking about”. Then, it’s like, “Okay, now I wear glasses. Okay, great. Well, now I know that if I’m not wearing my glasses, then that’s the problem. I can’t see the thing because I don’t have my glasses on”.

It just gives you this sense of certainty, even though that doesn’t fix the problem, but it does make you feel better about it. You understand the problem. Then, you can start the road, I think, from there, of looking for solutions. I shouldn’t even say solutions. I think that’s a little unfair. There’s no solution per se, and there’s no… We don’t have to go fully down that road, but no need to solve the problem. But you do need to adapt if you want to work with a majority neurotypical people at work, which is usually the case.

Shane Hastie: Thank you for that. Good insights there. If we swing back around to flow, you mentioned vibe coding when we were chatting earlier. What’s it like, and how do you get into that? In the swing of that today?

Vibe coding and the future of AI in development [24:02]

John Gesimondo: Yes. Vibe coding is so fun, sometimes. Vibe coding, we’ll say, is… For those that are not familiar, I think most engineers know about copilots, like GitHub Copilot, for instance, been around for a while. But the latest frontier of this is to have an agent-based copilot, so you’re giving it one instruction.

It can go do an entire loop of work of many different back-and-forth prompts, and you can either approve each step, “Oh, I want to write to this file”, accept, “I want to write a test now”, accept, or you can just click the auto approve, and you can just sit back and watch and hope for the best. This is what we call vibe coding in the AI developer community. It’s a fun time.

For those listening that haven’t tried it, even managers, please try it because it is probably one of the most exciting yet jarring experiences I’ve had in a while, and I hear that from others as well. The jarring part is just… It’s the closest you get to that, “Are we still going to be having jobs in the next year?” But it’s just… I don’t know. It’s a mind-blowing experience.

It really flips everything that you think you know about what’s difficult, what’s easy, how I’m going to do my work from now on. When should I use this? What is it good at? But let me tell you, on the other side of it, it’s so hard to abstract any actual lessons from these experiences. What is it good at? You just try, and you find out. You’re either really delighted, or you’re basically trying to pair with a under-educated intern. One of those two, and you don’t know until you try.

Shane Hastie: Lean into the unknown and experience the flow.

John Gesimondo: Totally, totally. I see a lot of people… I guess we could combine this with divergent thinking as well because that allows you… If you’re coming up with what you think are crazy ideas, and they’re in the software space, it is easier than ever to make a prototype through vibe coding, especially that, because I think the big open question is, “What kind of code quality are we making for the maintainers of all this stuff?” But if you want to just play around and make a inspirational prototype of something, it’s never been easier. Just open up your favorite vibe coding copilot and pray.

Shane Hastie: The tester in me shutters.

John Gesimondo: Absolutely. Absolutely.

Shane Hastie: John, some great insights here, and some really interesting stuff. If people do want to continue the conversation, where can they find you?

John Gesimondo: I think LinkedIn would probably be best. My username on there is jmondo. I’m occasionally active on Twitter as well, or X, and that’s also @jmondo. I’d go with that.

Shane Hastie: Thank you so much.

John Gesimondo: Thank you.

Mentioned:

.

From this page you also have access to our recorded show notes. They all have clickable links that will take you directly to that part of the audio.

MMS • RSS

MongoDB, Inc. (NASDAQ:MDB – Get Free Report) was the target of some unusual options trading activity on Wednesday. Stock traders bought 23,831 put options on the company. This represents an increase of approximately 2,157% compared to the typical volume of 1,056 put options.

MongoDB, Inc. (NASDAQ:MDB – Get Free Report) was the target of some unusual options trading activity on Wednesday. Stock traders bought 23,831 put options on the company. This represents an increase of approximately 2,157% compared to the typical volume of 1,056 put options.

MongoDB Price Performance

Shares of NASDAQ:MDB opened at $172.19 on Friday. MongoDB has a 1-year low of $140.78 and a 1-year high of $380.94. The firm has a fifty day moving average price of $186.51 and a 200 day moving average price of $245.99. The stock has a market cap of $13.98 billion, a P/E ratio of -62.84 and a beta of 1.49.

MongoDB (NASDAQ:MDB – Get Free Report) last announced its quarterly earnings results on Wednesday, March 5th. The company reported $0.19 EPS for the quarter, missing the consensus estimate of $0.64 by ($0.45). MongoDB had a negative return on equity of 12.22% and a negative net margin of 10.46%. The firm had revenue of $548.40 million for the quarter, compared to analysts’ expectations of $519.65 million. During the same period last year, the firm earned $0.86 EPS. Sell-side analysts expect that MongoDB will post -1.78 EPS for the current fiscal year.

Wall Street Analysts Forecast Growth

MDB has been the topic of several recent research reports. Bank of America cut their price objective on MongoDB from $420.00 to $286.00 and set a “buy” rating on the stock in a report on Thursday, March 6th. Rosenblatt Securities reaffirmed a “buy” rating and set a $350.00 price objective on shares of MongoDB in a research report on Tuesday, March 4th. Scotiabank reissued a “sector perform” rating and issued a $160.00 price objective (down previously from $240.00) on shares of MongoDB in a research note on Friday, April 25th. Piper Sandler lowered their price objective on MongoDB from $280.00 to $200.00 and set an “overweight” rating for the company in a research note on Wednesday, April 23rd. Finally, China Renaissance started coverage on shares of MongoDB in a research report on Tuesday, January 21st. They set a “buy” rating and a $351.00 target price on the stock. Eight investment analysts have rated the stock with a hold rating, twenty-four have assigned a buy rating and one has issued a strong buy rating to the company’s stock. Based on data from MarketBeat.com, the company currently has an average rating of “Moderate Buy” and a consensus price target of $294.78.

Read Our Latest Analysis on MongoDB

Insider Activity at MongoDB

In related news, CFO Srdjan Tanjga sold 525 shares of the business’s stock in a transaction on Wednesday, April 2nd. The stock was sold at an average price of $173.26, for a total value of $90,961.50. Following the completion of the sale, the chief financial officer now directly owns 6,406 shares in the company, valued at $1,109,903.56. The trade was a 7.57 % decrease in their position. The transaction was disclosed in a document filed with the SEC, which is available through the SEC website. Also, CEO Dev Ittycheria sold 18,512 shares of MongoDB stock in a transaction dated Wednesday, April 2nd. The stock was sold at an average price of $173.26, for a total transaction of $3,207,389.12. Following the completion of the sale, the chief executive officer now owns 268,948 shares of the company’s stock, valued at $46,597,930.48. This trade represents a 6.44 % decrease in their position. The disclosure for this sale can be found here. Insiders sold a total of 39,345 shares of company stock worth $8,485,310 in the last quarter. Corporate insiders own 3.60% of the company’s stock.

Institutional Investors Weigh In On MongoDB

Several hedge funds have recently modified their holdings of the stock. Strategic Investment Solutions Inc. IL purchased a new position in shares of MongoDB in the 4th quarter valued at $29,000. Hilltop National Bank lifted its holdings in shares of MongoDB by 47.2% in the 4th quarter. Hilltop National Bank now owns 131 shares of the company’s stock worth $30,000 after purchasing an additional 42 shares during the last quarter. Cloud Capital Management LLC bought a new stake in shares of MongoDB during the first quarter valued at approximately $25,000. NCP Inc. bought a new position in MongoDB in the 4th quarter valued at about $35,000. Finally, Wilmington Savings Fund Society FSB acquired a new stake in MongoDB in the third quarter worth approximately $44,000. 89.29% of the stock is owned by institutional investors and hedge funds.

About MongoDB

MongoDB, Inc, together with its subsidiaries, provides general purpose database platform worldwide. The company provides MongoDB Atlas, a hosted multi-cloud database-as-a-service solution; MongoDB Enterprise Advanced, a commercial database server for enterprise customers to run in the cloud, on-premises, or in a hybrid environment; and Community Server, a free-to-download version of its database, which includes the functionality that developers need to get started with MongoDB.

Featured Articles

Receive News & Ratings for MongoDB Daily – Enter your email address below to receive a concise daily summary of the latest news and analysts’ ratings for MongoDB and related companies with MarketBeat.com’s FREE daily email newsletter.

MongoDB, Inc. (NASDAQ:MDB) Given Consensus Recommendation of “Moderate Buy” by Analysts

MMS • RSS

Shares of MongoDB, Inc. (NASDAQ:MDB – Get Free Report) have been given an average recommendation of “Moderate Buy” by the thirty-three research firms that are currently covering the company, Marketbeat Ratings reports. Eight equities research analysts have rated the stock with a hold recommendation, twenty-four have given a buy recommendation and one has issued a strong buy recommendation on the company. The average twelve-month target price among analysts that have updated their coverage on the stock in the last year is $294.78.

A number of research analysts have weighed in on the company. Rosenblatt Securities reiterated a “buy” rating and set a $350.00 target price on shares of MongoDB in a report on Tuesday, March 4th. Truist Financial decreased their price objective on MongoDB from $300.00 to $275.00 and set a “buy” rating for the company in a report on Monday, March 31st. Monness Crespi & Hardt upgraded shares of MongoDB from a “sell” rating to a “neutral” rating in a report on Monday, March 3rd. Scotiabank reiterated a “sector perform” rating and issued a $160.00 price target (down from $240.00) on shares of MongoDB in a research note on Friday. Finally, KeyCorp lowered shares of MongoDB from a “strong-buy” rating to a “hold” rating in a research note on Wednesday, March 5th.

Check Out Our Latest Report on MDB

Insider Buying and Selling

In other news, Director Dwight A. Merriman sold 3,000 shares of the business’s stock in a transaction on Monday, February 3rd. The shares were sold at an average price of $266.00, for a total transaction of $798,000.00. Following the completion of the sale, the director now owns 1,113,006 shares of the company’s stock, valued at $296,059,596. The trade was a 0.27 % decrease in their position. The sale was disclosed in a legal filing with the SEC, which is available at this link. Also, CAO Thomas Bull sold 301 shares of the firm’s stock in a transaction on Wednesday, April 2nd. The stock was sold at an average price of $173.25, for a total value of $52,148.25. Following the completion of the transaction, the chief accounting officer now directly owns 14,598 shares in the company, valued at approximately $2,529,103.50. This represents a 2.02 % decrease in their ownership of the stock. The disclosure for this sale can be found here. Insiders sold 39,345 shares of company stock valued at $8,485,310 over the last 90 days. Company insiders own 3.60% of the company’s stock.

Institutional Trading of MongoDB

Hedge funds and other institutional investors have recently bought and sold shares of the stock. Cloud Capital Management LLC bought a new stake in MongoDB during the first quarter valued at about $25,000. Strategic Investment Solutions Inc. IL purchased a new stake in shares of MongoDB during the fourth quarter worth about $29,000. Hilltop National Bank raised its stake in MongoDB by 47.2% during the 4th quarter. Hilltop National Bank now owns 131 shares of the company’s stock valued at $30,000 after purchasing an additional 42 shares during the period. NCP Inc. purchased a new position in MongoDB in the 4th quarter worth approximately $35,000. Finally, Versant Capital Management Inc boosted its stake in MongoDB by 1,100.0% in the 4th quarter. Versant Capital Management Inc now owns 180 shares of the company’s stock worth $42,000 after purchasing an additional 165 shares during the period. Hedge funds and other institutional investors own 89.29% of the company’s stock.

MongoDB Price Performance

NASDAQ MDB opened at $174.69 on Wednesday. The business’s 50-day moving average is $190.43 and its 200 day moving average is $247.31. The stock has a market capitalization of $14.18 billion, a PE ratio of -63.76 and a beta of 1.49. MongoDB has a 1 year low of $140.78 and a 1 year high of $387.19.

MongoDB (NASDAQ:MDB – Get Free Report) last issued its quarterly earnings data on Wednesday, March 5th. The company reported $0.19 earnings per share for the quarter, missing analysts’ consensus estimates of $0.64 by ($0.45). MongoDB had a negative return on equity of 12.22% and a negative net margin of 10.46%. The business had revenue of $548.40 million during the quarter, compared to the consensus estimate of $519.65 million. During the same quarter in the prior year, the business posted $0.86 earnings per share. As a group, equities research analysts anticipate that MongoDB will post -1.78 earnings per share for the current year.

MongoDB Company Profile

MongoDB, Inc, together with its subsidiaries, provides general purpose database platform worldwide. The company provides MongoDB Atlas, a hosted multi-cloud database-as-a-service solution; MongoDB Enterprise Advanced, a commercial database server for enterprise customers to run in the cloud, on-premises, or in a hybrid environment; and Community Server, a free-to-download version of its database, which includes the functionality that developers need to get started with MongoDB.

Recommended Stories

This instant news alert was generated by narrative science technology and financial data from MarketBeat in order to provide readers with the fastest and most accurate reporting. This story was reviewed by MarketBeat’s editorial team prior to publication. Please send any questions or comments about this story to contact@marketbeat.com.

Before you consider MongoDB, you’ll want to hear this.

MarketBeat keeps track of Wall Street’s top-rated and best performing research analysts and the stocks they recommend to their clients on a daily basis. MarketBeat has identified the five stocks that top analysts are quietly whispering to their clients to buy now before the broader market catches on… and MongoDB wasn’t on the list.

While MongoDB currently has a Moderate Buy rating among analysts, top-rated analysts believe these five stocks are better buys.

Explore Elon Musk’s boldest ventures yet—from AI and autonomy to space colonization—and find out how investors can ride the next wave of innovation.

MMS • RSS

MongoDB, Inc. (NASDAQ:MDB – Get Free Report) saw unusually large options trading on Wednesday. Stock investors bought 36,130 call options on the stock. This is an increase of approximately 2,077% compared to the average daily volume of 1,660 call options.

MongoDB, Inc. (NASDAQ:MDB – Get Free Report) saw unusually large options trading on Wednesday. Stock investors bought 36,130 call options on the stock. This is an increase of approximately 2,077% compared to the average daily volume of 1,660 call options.

Insider Transactions at MongoDB

In other news, CAO Thomas Bull sold 301 shares of the firm’s stock in a transaction that occurred on Wednesday, April 2nd. The shares were sold at an average price of $173.25, for a total value of $52,148.25. Following the transaction, the chief accounting officer now owns 14,598 shares in the company, valued at approximately $2,529,103.50. This trade represents a 2.02 % decrease in their ownership of the stock. The sale was disclosed in a document filed with the Securities & Exchange Commission, which is accessible through this hyperlink. Also, Director Dwight A. Merriman sold 3,000 shares of the stock in a transaction on Monday, February 3rd. The shares were sold at an average price of $266.00, for a total transaction of $798,000.00. Following the sale, the director now directly owns 1,113,006 shares of the company’s stock, valued at $296,059,596. The trade was a 0.27 % decrease in their position. The disclosure for this sale can be found here. Over the last 90 days, insiders sold 39,345 shares of company stock valued at $8,485,310. 3.60% of the stock is owned by corporate insiders.

Institutional Investors Weigh In On MongoDB

A number of institutional investors have recently bought and sold shares of MDB. Cloud Capital Management LLC purchased a new stake in MongoDB in the 1st quarter worth $25,000. Strategic Investment Solutions Inc. IL purchased a new stake in MongoDB in the 4th quarter worth $29,000. Hilltop National Bank increased its stake in MongoDB by 47.2% in the 4th quarter. Hilltop National Bank now owns 131 shares of the company’s stock worth $30,000 after buying an additional 42 shares in the last quarter. NCP Inc. purchased a new position in shares of MongoDB during the fourth quarter valued at about $35,000. Finally, Versant Capital Management Inc grew its position in shares of MongoDB by 1,100.0% during the fourth quarter. Versant Capital Management Inc now owns 180 shares of the company’s stock valued at $42,000 after purchasing an additional 165 shares in the last quarter. Hedge funds and other institutional investors own 89.29% of the company’s stock.

MongoDB Stock Performance

Shares of MongoDB stock opened at $172.19 on Friday. MongoDB has a fifty-two week low of $140.78 and a fifty-two week high of $380.94. The company has a market capitalization of $13.98 billion, a P/E ratio of -62.84 and a beta of 1.49. The company has a fifty day moving average of $186.51 and a 200 day moving average of $245.99.

MongoDB (NASDAQ:MDB – Get Free Report) last issued its quarterly earnings results on Wednesday, March 5th. The company reported $0.19 earnings per share (EPS) for the quarter, missing analysts’ consensus estimates of $0.64 by ($0.45). MongoDB had a negative return on equity of 12.22% and a negative net margin of 10.46%. The business had revenue of $548.40 million during the quarter, compared to analysts’ expectations of $519.65 million. During the same quarter in the previous year, the company posted $0.86 EPS. Analysts forecast that MongoDB will post -1.78 EPS for the current year.

Analyst Upgrades and Downgrades

Several research firms have recently commented on MDB. Royal Bank of Canada dropped their target price on shares of MongoDB from $400.00 to $320.00 and set an “outperform” rating for the company in a research report on Thursday, March 6th. Rosenblatt Securities reaffirmed a “buy” rating and issued a $350.00 target price on shares of MongoDB in a research report on Tuesday, March 4th. Truist Financial dropped their target price on shares of MongoDB from $300.00 to $275.00 and set a “buy” rating for the company in a research report on Monday, March 31st. Macquarie lowered their price target on shares of MongoDB from $300.00 to $215.00 and set a “neutral” rating for the company in a research report on Friday, March 7th. Finally, China Renaissance started coverage on shares of MongoDB in a research report on Tuesday, January 21st. They set a “buy” rating and a $351.00 price target for the company. Eight equities research analysts have rated the stock with a hold rating, twenty-four have issued a buy rating and one has issued a strong buy rating to the company. Based on data from MarketBeat, the company currently has a consensus rating of “Moderate Buy” and an average target price of $294.78.

Get Our Latest Stock Analysis on MongoDB

MongoDB Company Profile

MongoDB, Inc, together with its subsidiaries, provides general purpose database platform worldwide. The company provides MongoDB Atlas, a hosted multi-cloud database-as-a-service solution; MongoDB Enterprise Advanced, a commercial database server for enterprise customers to run in the cloud, on-premises, or in a hybrid environment; and Community Server, a free-to-download version of its database, which includes the functionality that developers need to get started with MongoDB.

Further Reading

Receive News & Ratings for MongoDB Daily – Enter your email address below to receive a concise daily summary of the latest news and analysts’ ratings for MongoDB and related companies with MarketBeat.com’s FREE daily email newsletter.

Presentation: Moving Your Bugs Forward in Time: Language Trends That Help You Catch Your Bugs at Build Time Instead of Run Time

MMS • Chris Price

Transcript

Price: I’m going to be talking about moving your bugs forward in time. This is the topic that I’ve been thinking about on and off for many years. Before we get into the meat of the talk, how many folks are up to date on the Marvel Cinematic Universe? Those of you who are not, no problem. I’m going to start with a little story related to it. In the recent movies and TV shows, they’ve been building on this concept of the multiverse, where there are all these different parallel universes that have different timelines from one another. They differ by small details along the timelines. There’s one show in particular called Loki. He’s kind of a hero/villain. In his show, there are all these different timelines where the difference in each timeline is like Loki is slightly different in each one. In one of them, for example, Loki is an alligator, which, as you might imagine, leads to all sorts of shenanigans.

In that show, there’s this concept of the sacred timeline. There’s the one timeline that they’re trying to keep everything on line with. All of the other timelines somehow diverge into some weird apocalyptic situation, so they’re working to try to keep everything on this sacred timeline. I’m going to talk about what a bug might look like on this sacred timeline. We start off with, a developer commits a bug. We don’t like for this to happen, but it’s inevitable. It happens to all of us. What’s important is what happens after that. This is a little sample bit of toy Python code where we’ve got a function called divide_by_four. It takes in an argument.

Then it just returns that argument divided by four. Somewhere else in our codebase, some well-meaning developer creates a variable that is actually a string variable, and they try to call this function and pass it as the argument. What happens in Python is that’s a runtime error where you get this error that says, unsupported operand type for the divide by operator. On the sacred timeline, we’ve got something in our CI that catches this after that commit goes in. We may have some static analysis tool that we’re running. We may have test coverage that exercises that line of code. The important thing is our CI catches it, and that prevents us from shipping this bug to production. What happens next? The developer fixes the bug.

Then the CI passes, and they’re able to successfully ship that feature to prod. This is a pretty short, pretty simple to reason about timeline. The cost of that bug was basically like one engineer, like one hour of their time, something on that order. Fixing the bug probably actually took less than an hour, but dealing with monitoring the CI, monitoring the deployment, maybe it takes an hour of their time. Still not a catastrophic expense for our business.

Now we’re going to look at that bug on an alternate timeline. We’ll refer to this as the alligator Loki timeline. In this timeline, the developer commits the bug. For whatever reason, we’re not running the static analysis tool or we don’t have test coverage, and the bug does not get caught by our CI. Then we have our continuous delivery pipeline. It goes ahead and deploys this bug to production. Say we’re a regional service that deploys to regions one at a time to reduce blast radius, so we deploy this bug to U.S.-west-1. Then, for whatever reason, this code path where the bug exists isn’t something that gets frequently exercised by all of our users, so we don’t notice it. Maybe a day passes. Then the time passes. The bug ends up getting deployed to U.S.-east. Some more time passes, we still haven’t noticed there’s a bug. Deploys to Europe. Some more time passes.

Then alligator Loki eats the original developer, or maybe something more realistic happens, like they transfer to another team, or get a promotion, or whatever. That developer is not around anymore. Our bug keeps going through the pipeline and deploys to Asia. Now we have this big problem. It turns out that in that region, there is a customer who uses that code path that we didn’t catch in the earlier regions when we deployed. Now we’ve gotten this alert from this very important customer that they’re experiencing an outage and we’ve got to do something about it. Our operator gets paged. The manager gets paged because the operator doesn’t immediately know what’s going on. Maybe some more engineers get added to a call to try to address the situation. They start going through the version control history to figure out where this bug might have come in. They identify the bad commit, so now they know where the bug came in, but this has been days.

Several other commits have probably come in since then, and now they have to spend some time thinking about whether or not it’s safe to roll back to the commit prior to that, or whether that’s going to just cause more problems. They spend some time talking about that, decide if the rollback is safe. Then, they decide it’s safe. They do the rollback in that one region, and then they confirm that that customer’s impact was remediated. That’s great. We’re taking a step in the right direction. Now we have to deal with all those other regions that we rolled it out to. Got to do rollbacks in those as well. Depending on how automated our situation is, that may be a lot of work. Then this could just keep going for a long time, but I’m going to stop here.

When we think about the cost of this bug, compared to the one on the sacred timeline, the first and most important thing is there was a visible customer outage. Depending on how big your company is and how important that customer was, that can be a catastrophic impact for your business. We also spent time and money on the on-call being engaged, the manager being engaged, additional engineers being engaged, executing all these rollbacks however much time that ended up taking. Re-engineer the feature. Now we have to assign somebody new to go figure out what that original developer was trying to achieve, redo the work in a safe way, get it fixed. They’ve also got to make sure that whatever other commits got rolled back in that process, that we figure out how to get those reintroduced safely as well.

Then the one that we don’t talk about enough is opportunity cost. Every person who was involved in this event could have spent that time on something else that was more valuable to your business, working on other features, whatever it may be. When we compare the cost of these two timelines, the first timeline looks so quaint in comparison. It looks so simple. The cost was really not that big of a deal. On the second timeline, it bubbled into this big giant mess that sucked up a whole bunch of people’s time and potentially cost us a customer. The cost is just wildly different between the two. We want to really avoid this alligator timeline. What’s the difference between those two timelines? The main difference is that in the sacred timeline, we caught that bug at build time. In the alligator timeline, we caught it at runtime. That subtle difference is the key branching factor that ends up determining where you end up between these two scenarios.

Background

That’s what my talk’s going to be about, when I say moving your bugs forward in time. I’m talking about moving them from runtime to build time. Thankfully, I think that a lot of modern programming languages have been building more features in to the language to help make sure that you can catch these bugs earlier. That’s what I want to talk about. My name is Chris Price. I am a software engineering manager/software engineer at Momento. We’re a serverless caching and messaging company. Previous to that, I worked at AWS with a lot of other folks that are at Momento now. I worked on video streaming services and some of us worked at DynamoDB. Before that, I worked at Puppet doing infrastructure as code.

Maintainability

Then zooming out before I get into the weeds on this, this phenomenon I’m talking about, about moving bugs from runtime to build time is really a subset of maintainability, which as I’ve progressed through my career as a software engineer, I’ve really found more of that maintainability is one of the most important things that you can strive for, one of the most important skills that you can have as a software engineer.

When I first got started straight out of college, I thought that the only important thing about my job was how quickly I could produce code, how fast can I get a feature out the door, how many features can I ship and how quickly. As I got more experience in the industry and worked on larger codebases with more diverse teammates, what I realized is that that’s not really the most important skill for a software engineer. It’s way more important to think about what your code can do tomorrow and how easy it’s going to be for your teammates and your future teammates that you haven’t even met yet to be able to understand and modify and have confidence in their changes that they’re making to your code. That’s going to be the central theme of this talk.

Content, and Language Trends

These are the six specific language features that I’m going to dive into. First, we’ll talk about static types and null safety. Then we’re going to talk about immutable data and persistent collections. Then we’ll wrap up by talking about errors as return types, and exhaustive pattern matching. Some of the languages that have influenced the points that I’ll be making in this talk. I spent a lot of time working in Clojure a while back, and that is where I really got the strong sense for how valuable it is to use immutable data structures, how much that improves the maintainability of your code. Rust is one of the places where I really got used to doing a lot of pattern matching statements. Go is the first language that I worked in that really espoused this pattern of treating errors as return types rather than exceptions.

Then, Kotlin is a language that I really love because I feel like it takes a lot of these ideas that come from some of these more functional programming languages, and it makes them really approachable and really accessible in a language that runs on the JVM. You can adopt it in your Java codebase without boiling the ocean. You can ease your way into some of these patterns without having to switch out of a completely object-oriented mindset overnight. It’s a really awesome, approachable language. Two engineers have had a lot of influence on my thinking, Rich Hickey, the creator of Clojure, Martin Odersky, the creator of Scala.

If you get a chance to watch any talks that these gentlemen have given in the past, I highly recommend them. They’re always really informative, and they’ve been really foundational for me. I also highly recommend if you can find a way to buy yourself some time to do a side project in a functional programming language. The time that I spent writing Clojure, I think, was more formative for me and improved my skills as an engineer more than any other time throughout my whole career, even though I haven’t written a line of Clojure code in quite some time now.

Static Types, Even in Dynamic Languages

We’ll start off with static types, and I’m saying even in dynamic languages. I realize that that may be controversial to some folks. We’re going to go back to this bug that we started off with on the sacred timeline and the alligator timeline, where we passed the wrong data type into this Python function. A lot of times when I try to talk to people about opting into static typing in some of these dynamic languages, I hear responses like this, “I can build things faster with dynamic types, and I can spend my time thinking about my business logic rather than having to battle with this complicated type system”. Or, another thing I hear is, “I can avoid those runtime type errors that you’re talking about as long as I have good test coverage that exercises all the code”. I used to believe these two things, and they’re still definitely very reasonable opinions to have, but I’ve drifted away from these.

Working at AWS was probably the place where I really started to drift away from these. Inside of AWS, there’s a lot of language and a lot of shared vocabulary that gets used to try to give people a shared context about how you’re thinking about your work. One of the ones that really stuck with me was this one, “Good intentions don’t work, mechanisms do”. This is a Jeff Bezos quote, but it’s really widely spread through a lot of AWS blogs and other literature. Mechanisms here just means some kind of automated process that takes a little bit of the error-prone decision-making stuff out of the hands of a human and makes sure that the thing just happens correctly. It takes away your reliance on the good intentions of engineers. That’s going to be another key theme of this talk is that a lot of these types of bugs that we’re talking about, they come to play when you have something in your codebase that relies on the good intentions of your engineers to stick to the best pattern.

We’ve got these beloved dynamic languages like Clojure, JavaScript, Ruby, Python. My claim is that if you opt into the static type systems in these languages, you just completely avoid shipping that class of bug to production, no if, ands, or buts about it. That particular bug that we started with on the sacred timeline and the alligator timeline, it just goes away. What’s really powerful about it is you’re taking away this reliance on the good intentions of your engineers. You may have best practices established in your engineering work that whenever you’re using a dynamic language, you better make sure you have thorough test coverage that’s going to prevent you from having one of these kinds of bugs go to production, but you’re relying on the engineers to adhere to that best practice.

Then you hire new people to your team and they don’t know the best practices yet and they’re prone to making mistakes sometimes. Putting that power in the hands of the compiler instead of the humans, it just eliminates that class of bugs. That doesn’t mean that we have to abandon our favorite dynamic languages. Pretty much all of these languages have added opt-in tools that you can use to get static analysis and static typing. Python has mypy. JavaScript, obviously TypeScript is becoming much more popular over the last five years or so. Ruby has a system called rbs. Clojure has several things including Typed Clojure. Whenever you opt into one of these, you can usually do it pretty gradually. You don’t have to boil the ocean with your codebase. It really just boils down to just adding a few little type int to the method signatures. That little action changes this bug from a runtime bug to a build time bug where mypy is going to catch this up front and say you can’t pass a string to this function. That allows us to avoid that alligator timeline.

Null Safety

Second one I’ll talk about is null safety. You’ve probably all heard the phrase about this being like the million-dollar mistake in programming. If you’ve written any Java, you’re probably really familiar with this pattern where like every time you write a new function, there’s 15 lines of boilerplate of checking all the arguments for nulls up front. Same thing in C#. These are again relying on good intentions. The first thing is you’re relying on your developers to remember to put all those null checks into place. Then, even worse, if they do put the null checks into place, it’s still a runtime error that’s getting thrown, so you’re still subject to the same kind of bugs that led us to the alligator timeline. A lot of the newer languages like Kotlin have started almost taking away support for assigning nulls to normally typed variables. In Kotlin, if you declare a variable as of type string, you just can’t assign a null to it. That won’t compile.

If you know that you need it to accept null, then you can put this special question mark operator on the type definition, and that allows you to assign a null to it. Now once you’ve done that, you can no longer call the normal string methods directly on that object. The compiler will fail right there. Instead, the compiler will enforce that you’ve either done an if-else and handled the null case, or you can use these special question mark operators to say that you’re willing to just tolerate passing the null along. In either case, the compiler has made you made an explicit decision upfront about what you’re going to do in case it’s null rather than you essentially finding out about this bug at runtime. Rust is another language where there is no null.

In Rust, the closest thing you have to null is this option type. Any option in Rust is either an instance of None or an instance of Some. This is similar to optional in Java, but in Rust, it’s much more of a first-class concept. In this code here, you can see I declared this function called foo, and I’d said its argument is a string. I cannot call that function and pass a None in. That’s a compile time error. Bar, I said it’s an option of string. I can pass a None in or I can pass a Some in, but again I’ve had to be explicit about it and make the decision upfront. Compile time null safety, most languages have some support for this these days.

The languages that have been around the longest like Python, C#, Java, those languages have to deal with a lot of backward compatibility concerns. They can’t just flip a switch and adopt this behavior. In those languages, you’ll probably have to work a little bit harder to figure out how to configure your build tools to disallow nulls, but they all have some support for it. An experiment that I suggest is just writing an intentional bug where you pass a null to something that you know should not accept a null, and then play with your build tool configuration until it catches that at build time rather than allowing it to possibly happen at runtime.

Immutable Variables, and Classes

Now we’ll move on to number three, which is immutable variables and classes. There are very few things that I’ve worked with in my career that I feel improve the maintainability of my code as much as leaning into immutable variables as much as humanly possible. The main reason for this is that they dramatically reduce the amount of information that you need to keep in your brain when you’re reading a piece of code in order to reason about it and make assertions about it. As an example of that, here’s some Java code. I’ve got this function called doSomething that takes in a foo as an argument. Foo is just a regular POJO in this case. Then it calls doSomething else and passes that foo along. Now here’s some calling code that appears in some other file where I construct an instance of the foo, and then I pass it to that doSomething function. Then imagine we have maybe 100 lines of code right here or maybe even more than that.

Then we eventually get to this line of code where we print out foo. If I’m an engineer working on a feature in this codebase and the change that I want to make is somewhere around this line that’s doing the print statement, what can I assert about the state of my foo at this point in the code? Were there any statements in between those two that might have modified my foo? It’s certainly possible, so I’m going to have to read all that code to find out. Was my foo passed by reference to any functions that might’ve mutated it? Yes, it was passed to do something and then that passed it along to do something else. Does that mean that I need to go examine the source code of all of those functions in order to be able to reason about the state that this variable is going to be in when I get to this line of code? The answer to that is basically yes. Without knowing what’s happening in every one of those pieces of code, I have no idea whether this variable got mutated in between those two points in time.

Then the situation gets infinitely more difficult if you have concurrency in your program. If potentially this doSomething else function is passing that reference to some pool of background threads that may be doing work in the background, then you can imagine a scenario where I add another print line here, just two print lines in a row printing this variable out twice. I can’t even assert that it’s going to have the same value in between those two print statements because some background thread might’ve changed it in between the two.

Again, I have to go read all of the code everywhere in my application to know what I can and can’t assume about this variable at this point in time. That just slows me down a lot. An alternate way to handle this with newer versions of Java is rather than foo being a POJO, we use this new keyword called record, which basically makes it a data class. It means that it’s going to have these two properties on it and they can’t change ever. It’s an immutable piece of data.

Then I also add this final keyword, which says that nobody can reassign this variable anywhere else in this scope here. With those two changes in place, I know that nobody can have reassigned my foo to a different foo object because that would have been a compile time error. I also know that nobody can have modified this inner property, this myString, because that also would be a compile error. I don’t care anymore that we passed a reference to this variable to the Bar.doSomething method, because no matter what it does, it can’t have modified my data. I don’t have to worry about that. Also, if there’s 100 lines of code here, I know that they can’t have modified it. I no longer have to spend any time thinking about the state that might have changed in between these lines of code.

When I get down here to this print statement, I know exactly what it’s going to print. That means I can just move on with my changes that I want to make to the code without getting distracted by having to page all of the rest of this application into my brain and think about it. Most languages have some support for this these days. Kotlin definitely has data classes. Clojure, everything’s immutable by default. Java has records and final. You can find this in pretty much any programming language. TypeScript and Rust, you have to roll your own a little bit, but it’s definitely possible to follow these patterns.

Persistent Collections, and Immutable Collections

That leads us into a related but slightly different topic, which is about collections. I also want my collections to be immutable for the same reasons, but that’s a little bit harder. You can see this line of code here where I’m constructing a Java ArrayList. I’m using this final keyword because I want this to be immutable. I want to be able to make those assumptions about the state of my list without having to spend a bunch of time reading my other code. The problem is this ArrayList provides these mutation functions, the .add, .remove, whatever else. I’m right back in the world where I was before, where these other functions that I’m calling, these other lines of code that might happen here, they can mutate that list in any number of ways.

Again, I cannot make any mental assertions about what this list has in it by the time I get to this point in my code. Recent versions of Java have added some stuff, like there’s this new list of factory function that actually does produce an immutable list, which is what I want. Now I don’t have to worry about the fact that I’ve called doSomething because I know that this list is immutable. I do, again, know that by the time I get to this print statement, I know what my list has in it. The flaw with that is you’ll notice this list.of factory function is still returning the normal list interface.

That list interface provides these mutating functions like add, remove, whatever else. Even worse, if I call those now, it’s a runtime error. The compiler won’t detect that this is a problem, but the program won’t throw an error at runtime. Now I’m back to the world of relying on good intentions. Now I’ve got this immutable list, which is what I wanted, but if I’m passing it around to all these other functions and only advertising it as a list, then they may try to call the mutation functions on it and then we get a bug at runtime.

Some of the more modern languages like Kotlin, they’ve solved this problem by, in Kotlin, collections are immutable by default. If I say listOf, then I get an immutable list and it doesn’t have any methods on it like add. Again, compile time error if somebody tried to call that. It does also have mutable variants of those collections. If I really need one, I can have one, but the key here is that it has a separate interface for the two. I can lean into the immutable interface in all the places where I want to make sure that I don’t have to worry about somebody modifying the collection underneath me.

Whenever I talk about this concept of these immutable collections, people ask me, what about performance? Your code is going to make changes to the collection over time, otherwise your code’s not doing anything interesting. Doesn’t that mean that we have to clone the whole collection every time we need to make a modification to it, and isn’t that super slow and memory intensive? The answer to that is, no, thankfully. There’s a really cool talk from QCon 2009, from Rich Hickey, the author of Clojure, about persistent data structures, which is the data structures that he built in as the defaults in the language of Clojure. They present themselves to you as a developer as immutable at all times. When you have a reference to one, it’s guaranteed to be immutable. It provides modifier functions like add, remove, but what they do is they produce a new data structure and they give you a reference to it.

Now you can have two references, one to the old one, one to the new one, and neither one of them can be modified by other code out from underneath you. The magic is, behind the scenes, they’re implemented via trees and they use structural sharing to share most of the memory that makes up the collection. It’s actually not nearly as expensive as you might fear. This was a hard thing for me to wrap my head around when I first started writing Clojure. I was like, that can’t possibly be performant. It’s a really nice solution to the problem. In practice, the way that they’re implemented, you almost never need to clone more than about four nodes in the tree in order to make a modification to it, even if there’s millions of nodes in the tree. This is a slide from Rich’s QCon talk where he talks about how these are implemented. What you can see here is two trees. The one on the left with the red outline, that’s the root node of the original collection. It has all these values in it.

On the right, he’s showing us, so we want to add a new child node to this purple node with the red outline. We’re going to try to add a new child node to it. To implement that, what we actually do is we just clone all the parent nodes that go down to that one, and we add the new child node there. Then the rest of the child nodes of all of these new nodes that we’ve created, they just point back to the same exact memory from the original data structure. We’ve cloned four tiny little objects and retained 99% of the memory that we were using from the original collection. With this pattern, you can have your cake and eat it too. You can have a collection that presents itself to you as immutable so that you know that it can’t be modified out from underneath you while you’re working on it. You don’t have to sacrifice performance when other threads, for example, need to change it.

This is hugely powerful in concurrent programming because there’s all kinds of problems that you can run into with shared collections across multiple threads in your concurrent code, where you either have to do a lot of locking to make sure that one thread doesn’t modify it while another thread is using it, or you can end up just running into these weird race conditions that cause runtime errors. With this pattern, any thread, once it grabs a reference to this collection, you know that that collection’s not going to change while you’re consuming it. After it’s done with it, it can go grab a new reference to the collection, which might have been updated somewhere else, but again, that one will be immutable, and we don’t have to worry about it being modified from underneath this either. Clojure and Scala have these kinds of collections built right into their standard library, but every other programming language that I’ve looked into has great libraries available on GitHub for this, and they’re usually pretty well-consumed and battle-tested.

Errors as Return Types – Simple, Predictable Control Flows

Now we’re going to move on to errors as return types. This one has mostly to do with control flow. When I’m talking about this one, I like to reflect on the history of Java and how at the beginning of Java, it was really common for us to have these checked exceptions versus unchecked exceptions. Method signatures would be really weird depending on whether they’re using checked or unchecked exceptions. These are trying to do exactly what I’m advocating for in this talk. They were trying to give us compile time safety to make sure that we were handling these errors that might happen.

In practice, we just collectively decided we did not like the ergonomics of how it was implemented and we drifted away from it over time. I think one of the funniest examples of that evolution is in the standard library of Java itself, the basic URI class that you use for everything that has to do with networks. It throws a checked exception called URISyntaxException whenever you call its constructor, which means you literally cannot construct one of these objects without the compiler forcing you to put this try-catch there, or without you changing your method signature to advertise that you’re going to rethrow that.

Then everybody else who’s calling your function now has to deal with the same problem. Everybody hated that because the odds that we were going to actually pass something in there that would cause one of these exceptions were really low and drove people crazy. A couple releases later in Java, they added this static factory function called create that literally all it does is call the constructor and then catch the exception and rethrow it as a runtime exception. They put that into the standard library. That was an interesting trend to observe. Likewise, all of the JVM languages that have appeared in the last 10, 15 years, Kotlin, Scala, Clojure, they’ve all basically gotten rid of these checked exceptions in favor of runtime exceptions. That means now all of our errors are runtime errors. That again is really against the grain of what I’m pitching in this talk. It means now we have to go read the docs or the code for every function we’re calling and make sure we know what kinds of exceptions it could be throwing, and handle them successfully. We’re back here, good intentions.

Go is the first language recently that I’ve tickled something in my brain for thinking about different ways to solve this problem. Go really leaned into the syntax of, if you’re going to call a function that might cause some kind of error, instead of there being an exception with weird control flow semantics and relying on this weird try-catch syntax, just returns a tuple instead. You either get your result back or your error back. One of those is going to be nil whenever you call this function. Then the compiler can force that, that you’ve done some checking on that nil, and you’ve decided how to handle it.

This is again, like the compiler is now doing this work rather than relying on good intentions. The other thing that I really like about this is we’re just using an if-else statement to interact with this error. It’s not a new special language construct that differs from how we’re dealing with all the other pieces of data in our code, like a try-catch is. It’s just like the same type of code we’d write for any other piece of data. We got more clear control flow. It allows the compiler to enforce more explicit handling, prevents us from silently swallowing types of exceptions. Yes, again, we can use our normal language constructs rather than the special try-catch stuff. Here’s a Rust equivalent of that. In Rust, there’s this type called result. Any instance of result is either error or ok.

Then it’s a generic type. If it’s a success, if it’s an ok, then the type is going to be this integer 32 bit. If it’s an error, then the value is going to be a string. Then we can use this pattern match statement and say, if it’s ok, then I’m going to do something with the success case. If it’s an error, then I’m going to do something with the error case. In these case statements, we get back the types that we declared in the result declaration.

Exhaustive Pattern Matching, and Algebraic Data Types

Errors as return types help us move our bugs from runtime to build time. I’ve shown you that they’re pretty ingrained in the languages in both Go and Rust, but can we do this in other languages? That leads me into my last topic that I want to talk about, which is about exhaustive pattern matching and algebraic data types. I’m going to explain what those are a little bit, and then I’m going to close the loop on the error handling part of this. What is an algebraic data type? It’s basically like a polymorphic class. You can imagine if you had a parent class called shape, and then you had child classes called circle, square, octagon. It’s basically just that, except for the compiler knows upfront all of the existing subtypes that can exist rather than it being open-ended. Most modern languages have some way of expressing these now.