Month: October 2022

Podcast: The Collaborative Culture and Developer Experience in the Redis Open Source Community

MMS • Yiftach Shoolman

Article originally posted on InfoQ. Visit InfoQ


Transcript

Shane Hastie: Good day, folks. This is Shane Hastie for the InfoQ Engineering Culture Podcast. Today, I’m sitting down with Yiftach Shoolman. Yiftach is the co-founder and CTO of Redis. Today I’m in Australia and he’s in Israel. Yiftach, welcome. Thank you for taking the time to talk to us today.

Yiftach Shoolman: Great to talk with you, Shane. You pronounced my name correctly. Thank you.

Shane Hastie: Thank you very much. So let’s start. Tell us about your background.

Introductions [00:48]

Yiftach Shoolman: Okay, so I’m a veteran in the high-tech area. I started my career early in 1990 and worked on ADSL systems in order to accelerate broadband. So everything in my career is somehow related to performance. After broadband became widely adopted, I realized that it was no longer the bottleneck. The bottleneck is inside the data centre. I started my first company, named Crescendo Networks, which did, I would say, application acceleration plus load-balancing capability at the front end of web servers, and actually accelerated web servers. This company was eventually sold to F5 in 2008, and one of the board members at Crescendo was Ofer Bengal, my co-founder in Redis. Back then, after we solved the bottleneck at the front end of the data centre, the web server, the application server, we realized that the main bottleneck is inside, where the data is, which is the database, and this is where we started to think about Redis. That was early 2010.

We started the company in 2011. There was a technology called Memcached, which is open source software that does caching for databases, and we found a lot of holes in Memcached. For instance, every time you scale it you lose data, or it doesn’t have replication, et cetera. We thought, “What is the best way to solve all these holes at the technology level?” Then we realized that there is a new project which is getting momentum. It is called Redis. It has replication built in. Back then it didn’t have scaling capabilities, but we managed to solve it somehow, and then we created the Redis company. Back then it had a different name. It was called Garantia Data, and we offered Memcached while the backend was the Redis infrastructure. When we launched the service in the middle of 2012, and it was the first database as a service ever, I think, all of a sudden people were asking us, “Okay, Memcached is great, but what about Redis?”
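The data loss on scaling described above can be illustrated with a small sketch. It assumes a client that picks a cache node with naive modulo hashing, which is a common strategy but not the only one; the function names `node_for` and `remapped_fraction` are hypothetical and are not part of Memcached or Redis.

```python
import hashlib


def node_for(key: str, node_count: int) -> int:
    """Pick a cache node with naive modulo hashing (an assumed client strategy)."""
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
    return int(digest, 16) % node_count


def remapped_fraction(keys: list[str], old_nodes: int, new_nodes: int) -> float:
    """Fraction of keys that land on a different node after the pool is resized."""
    moved = sum(1 for k in keys if node_for(k, old_nodes) != node_for(k, new_nodes))
    return moved / len(keys)


if __name__ == "__main__":
    keys = [f"user:{i}" for i in range(10_000)]
    # Growing the pool from 4 to 5 nodes sends most keys to a different node.
    # Without replication or resharding, those lookups miss the cache.
    print(f"{remapped_fraction(keys, 4, 5):.0%} of keys moved")
```

With a uniform hash, a key keeps its node only when its hash gives the same remainder for both pool sizes, so roughly four out of five keys move in this example. Consistent hashing or server-side resharding are the usual ways to reduce that churn.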

We said to ourselves, “The entire infrastructure is Redis. Let’s also open the interface of Redis and offer two services.” So we did it in early 2013. We offered Redis as a service and Memcached as a service. It took us one year to understand that the market wants Redis, not Memcached anymore, and this is the story, I would say. A few years afterwards we asked Salvatore Sanfilippo, who is the creator of Redis open source, to join forces and work for the Redis company, because we believed it would help the community and help Redis grow. He accepted the offer and became part of our team, and is still part of our team. As for Redis as a company, in addition to contributing to the open source, where the majority of the contributions come from our employees, we also have a commercial product, Redis as a service by Redis the company. It is called Redis Enterprise Cloud, alongside the Redis Enterprise Software that people can deploy on-prem.

A few words about the company: we are almost 800 employees distributed across multiple geo regions, and of course we continue supporting the open source in a friendly, open manner. We have a well-defined committee that is composed of people from Redis the company, a lady from AWS named Madelyn, and a guy from Alibaba, Zhao Zhao. The two leaders of the open source, Yossi and Oran, are from Redis, and another guy, Itamar, is also from Redis, so five people make up the committee of the open source. That is in addition to all the commercial products that we have. But we are here to talk about developer culture, so I’ll let you ask the questions.

Shane Hastie: Thank you. So how do you build a collaborative culture that both supports the open source community but also does bring the for-profit motive of a commercial enterprise?

Supporting open-source and commercial needs [05:08]

Yiftach Shoolman: So let’s start with giving you some numbers. I cannot expose our commercial numbers because we are a private company, but just for our audience to know, Redis is downloaded more than 5 million times a day. If you combine all the download alternatives, like going to redis.io and downloading the code, launching Docker with Redis, et cetera, this is more than all the other databases together. This is amazing, and it is mainly because of two reasons. First, Redis is very simple to work with. Second, it solves a real problem. You cannot practically guarantee any real-time application without using Redis, whether you use it as a cache or as your primary database. We as a commercial company have many, many customers who are using it as a primary database. On our cloud service, a metric that I can share with you is that we have over 1.6 million databases that were launched, which is a huge number, across all the public clouds like AWS, GCP and Azure.
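To make the "Redis as a cache" use case concrete, here is a minimal cache-aside sketch. It is an illustration under stated assumptions, not how any particular application does it: `get_profile`, `InMemoryCache` and `load_from_db` are hypothetical names, and the in-memory class is a stand-in so the example runs without a server. The stand-in mirrors only the `get(name)` and `set(name, value, ex=...)` calls of the redis-py client, so a real `redis.Redis` instance could be passed instead.

```python
import json
from typing import Callable, Optional


class InMemoryCache:
    """Dict-backed stand-in for a Redis client (hypothetical; ignores expiry)."""

    def __init__(self) -> None:
        self._data: dict[str, bytes] = {}

    def get(self, name: str) -> Optional[bytes]:
        return self._data.get(name)

    def set(self, name: str, value: str, ex: Optional[int] = None) -> bool:
        # `ex` (time to live in seconds) is accepted for API parity but not enforced.
        self._data[name] = value.encode("utf-8")
        return True


def get_profile(cache, user_id: int, load_from_db: Callable[[int], dict],
                ttl_seconds: int = 60) -> dict:
    """Cache-aside read: try the cache first, fall back to the database on a miss."""
    key = f"profile:{user_id}"
    cached = cache.get(key)
    if cached is not None:
        return json.loads(cached)
    profile = load_from_db(user_id)  # the slow path
    cache.set(key, json.dumps(profile), ex=ttl_seconds)
    return profile


if __name__ == "__main__":
    cache = InMemoryCache()
    slow_db = lambda uid: {"id": uid, "name": "Ada"}
    print(get_profile(cache, 7, slow_db))  # miss: loads from the database
    print(get_profile(cache, 7, slow_db))  # hit: served from the cache
```

The expiry passed through `ex` bounds how stale a cached entry can become when the underlying record changes. When Redis is the primary database, the fallback loader disappears and the application reads and writes Redis directly.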

This is just to show the popularity of Redis. We have tried, and I think so far we have managed, to keep the core of Redis completely open source under BSD so people can use it everywhere, even to create a competitive product to our commercial offering. You can see that all the large cloud service providers (CSPs) provide Redis as a service, which is fine. We are competing with them. We think we are better, but it’s up to the user to decide. This is a huge community of users but also contributors. If you look at the number of active contributors to Redis, I think it is close to 1,000. I think we discussed it as well in our conversation prior to the recording, just for everyone to understand what it means to manage an open source project. It’s not just going to GitHub, open sourcing it, and letting people contribute whatever they like.

When you manage a critical component such as Redis, which is the core reason for your real-time performance, you just cannot allow any PR out there to be accepted. First, some of the PRs are not relevant to the roadmap of the project. Some of them need to be rewritten because they may expose a security breach or they may contain bugs, et cetera. Someone needs to mentor everyone who is contributing, and we allocate our best people to do that, which is a huge amount of work. Think about a group of your best engineers looking, on a daily basis, at the PRs that people in the community are providing. They’re reviewing them, providing comments, and then making sure that this can be part of the next version of Redis.

By doing so, we believe that we increase the popularity of Redis, increase the usage of Redis and increase the ambition of developers to contribute to Redis. People would like to be contributors to Redis because it’s very popular, it’s well written, and it’s a great technology, and you want to show on your LinkedIn profile, or whenever you go to an interview, that you are a contributor to such a great success. So this is how we do it.

Source Available licenses [08:17]

You asked about the commercial side. There are some capabilities that we keep only under the commercial license that we provide with Redis Enterprise. I think in 2018 we were the first company to launch what is called a Source Available License, which is a new version, I would say, of open-source software. It gives you the freedom to change it, to use it for yourself without contributing back, et cetera, but it doesn’t allow you to create a competitive commercial product to Redis the company. We did it only on certain components of the open source, not the core: on the modules that extend the Redis capabilities to new use cases like Search, documents with JSON, Graph, TimeSeries, and even vector similarity search and AI. We put these under the source available license so people can use them for free in the open source wherever they want, but if you want to create a commercial product, we say you cannot use our code for that. This is how we monetize. We have three tiers of licensing: open source, source available and closed source.

Shane Hastie: You described putting your best engineers onto reviewing the open source, managing the pull request and so forth. How do you nurture and build that community and how do you, I want to say incentivize, motivate your teams?

Nurturing the community and motivating contributors [09:45]

Yiftach Shoolman: Yeah, great question. I think it is hard to maintain, to be able to be successful for such a long time. If you look at the history of Redis, almost every other database out there used to pitch against Redis, because the other database vendors found that Redis is so popular and can solve a lot of the problems that their commercial product was designed for. It actually put their business at risk.

I think the magic of Redis, with this large community of users, is that we don’t stop innovating. We don’t stop contributing code, and we built an architecture that allows people to extend it for their own use cases. By the way, as I mentioned with the modules that we decided to commercialize, at Redis the company, whenever we hire developers, we tell them, “Listen guys, if you find a problem that you think is meaningful and you want to create a product out of it, the Redis platform is the right platform for you. Just do it, and if we find it attractive in the market and we see good reactions from customers and the community, we’ll support you. We’ll create a team around you. You will lead it,” et cetera.

As a result of this, we created a lot of very good ideas and products like Search, JSON, Graph, TimeSeries, Probabilistic and AI. All these were created by developers who found real problems, and this is what we do internally. I know that there are other companies who are doing it internally for their own use cases based on the open source, so Redis serves as a platform. It’s a core platform, an open core, that allows you to extend it with your own code, and you can decide whether you want this code to be open source, source available or even closed source. The platform allows you to do that. If it were a very closed platform, with limited capabilities and without any contribution from outside, et cetera, and there are open source projects like this, the adoption would’ve been very, very limited.

Shane Hastie: And you talk about hiring developers, how do you build great teams?

Building great teams [11:56]

Yiftach Shoolman: Great question. I think culture-wise, first of all, in terms of where to hire engineers, we don’t care. If there is someone who wants to work for us as a company, or with us as part of our team, and we find a match between their desires and what we need, it can be anywhere, in any place in the world, so we are completely distributed. On the other hand, we would like to maintain the culture of Redis, which is humble design, humble people. I would say a simple design, but very, very unique in the way it was written: very clear, well documented. This is how we want our developers to act. Be very clear. Be very transparent. Expose the things that bother you and be very precise in how you document the issues that you encounter. I think if we manage to maintain this culture for a very long time… I forgot to repeat what I mentioned before: be innovative if you find a problem that you think is a real problem.

Redis was designed when it started in 2009, almost 13 years ago. The technology world has changed dramatically. The basic design of Redis is great: a simple, shared-nothing architecture that scales horizontally and vertically as you want with multiple processes, not multiple threads, et cetera. Again, it is a shared-nothing architecture, but if, for instance, there is a problem that can only be solved with multi-threading, this is fine. Let’s think together about how we can change the architecture of Redis and allow it to also scale horizontally. Okay, this is just one example.

Here is another example. Redis is written in C, and if someone wants to extend Redis with other capabilities through modules and wants to use Rust, because Rust is becoming very popular and runs almost as fast as C but protects you from memory leaks and other memory corruption bugs, we provide a way to do it. You can do it. You can extend the core with Rust functionality to solve any problem you want. The core will continue to be written in C, but if you want the extension to be in Rust or another language like Python or Java or Go or whatever, go ahead and do that.

So this diversity, I would say, of programming languages that can support the core is very good for Redis. In addition, I would say there is a huge ecosystem that the community built around clients, clients that utilize the capabilities of Redis and are an integral part of any application that people are developing today.

There are 200 different clients for Redis. Someone can say, “Listen, this is too much.” I can agree with that as well. This is why, for our customers, we recommend, I would say, a full set of clients across the different languages that we maintain together with the community. We guarantee that the bugs are fixed, et cetera. On the other hand, if someone finds that what we provide is not good enough and wants to do something with another language, et cetera, or just wants to innovate, go ahead and do that. We will support you by promoting it on the developer site of Redis, redis.io. If it is good, you will pick up stars very, very nicely and you will see adoption.

There are multiple examples in which people created additions to Redis, not only on the core server but also on the client side or in the ecosystem. These became very popular open source software, and some of them are also maintained commercially. The authors provide an open source client for some language with commercial support, and they created a small business around it. Some of them even created bigger businesses as well, which is good. This is how open source should work.

Shane Hastie: So looking back over the last 12 years and beyond, what are the significant changes that you have seen in the challenges that developers face?

The evolving challenges developers face [16:04]

Yiftach Shoolman: I think there is no question. The first and foremost is the cloud. Today there are developers who start developing in the cloud. They don’t even put the code on their laptop. I still think this is the minority, but the new wave of developers will go to one of the developer platforms out there and start developing immediately in the cloud. That was not there before, so this is one thing.

Second, I think, from the database perspective, it is no longer only relational. In fact, if you look at the trends in the market, the number of new applications that are developed with relational databases is declining. More and more applications now start with the new data models that are provided by the NoSQL world or the multi-model world, like key-value, document, graph, and time series, with search capabilities, et cetera. Unstructured data can scale linearly without all the restrictions of a schema, et cetera. This is another trend.

The third trend, I would say, is real time. A lot of applications need real-time speed today, and real time has two areas, I would say. The first, and I think the more significant one, is how fast you access the data, and this is where Redis shines. As a general concept, we want every operation in Redis, complex or simple, to be executed in less than a millisecond, because otherwise you just break the real-time experience of the end user. This is just one part. The other part is how far the application is deployed from the end user. If, for instance, the application works great and inside the data centre the experience is less than 50 milliseconds, which includes the application and the database and everything in between, but your distance from the application is 200 milliseconds, you will not get the real-time experience.
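The latency arithmetic in this example can be written out as a short sketch. It assumes the 200 milliseconds is the full network cost added on top of the in-data-centre time; the function names are hypothetical.

```python
def end_to_end_ms(in_datacentre_ms: float, network_ms: float) -> float:
    """Latency the end user perceives: processing time plus network distance."""
    return in_datacentre_ms + network_ms


def network_share(in_datacentre_ms: float, network_ms: float) -> float:
    """Fraction of the total latency spent on the network, not in the data centre."""
    return network_ms / end_to_end_ms(in_datacentre_ms, network_ms)


if __name__ == "__main__":
    total = end_to_end_ms(50, 200)
    share = network_share(50, 200)
    # prints "total: 250 ms, network share: 80%"
    print(f"total: {total:.0f} ms, network share: {share:.0%}")
```

Under these numbers, speeding up the database further changes little, because four fifths of the delay comes from distance. That is the argument for deploying the application and its data closer to the user.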

And I think the trend that we see today, specifically from the cloud providers but also from CDN vendors, is that they try to build a lot of edge data centres and data centres across regions worldwide in order to be as close as possible to the user. If you think about next-generation applications, the way they will be deployed is completely global. Between this hour and that hour, the majority of the users will be from Europe. Let’s build the clusters there. With Kubernetes it’s easy to do that, because you can scale it very nicely, then shrink it down during the night time and scale out your cluster in South America because this is where the users are. But applications cannot work without data, and the data needs to be real time, so Redis is used in these use cases and it should be globally deployed, et cetera. This is, I think, another trend that we see today in the market.

We mentioned cloud, we mentioned multi-model, we mentioned real time, we mentioned the need for global distribution. Last but not least, and you may ask about it, I think AI will be a component of every application stack soon, if not now. This may be a feature store, which is a way for you to enrich your data before you do the AI inferencing: you use the store to add more information to the data received from the user in order to be more accurate in your AI results. Or it may be vector similarity search, which is also supported by Redis. Just to make sure that we are aligned on the definition: a vector allows you to put the entire context about the user in a vector, and almost every application needs it in addition to the regular data about the user.

I don’t know, your hobbies or things that are related to context: what you like, what you prefer, et cetera. You can build a recommendation engine. You can do a lot of stuff with this. Eventually, if you look at the next generation of user profiles in any database, they will have the regular stuff, like where you live, your age, your height, and then a vector about yourself, which each application will decide how to formulate. Then, if you would like to find users with similar characteristics, you will run a vector similarity search inside your database. I truly believe this is a huge trend in next-generation applications and in the way databases should be built.
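The idea of finding similar users by comparing vectors can be sketched in a few lines. This is a brute-force illustration using cosine similarity, one common similarity measure; it is not how Redis implements vector search, and the names `cosine_similarity` and `most_similar`, along with the sample profiles, are hypothetical.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def most_similar(query: list[float], profiles: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the k user ids whose vectors are closest to the query vector."""
    scored = [(cosine_similarity(query, vec), user) for user, vec in profiles.items()]
    scored.sort(reverse=True)  # highest similarity first
    return [user for _, user in scored[:k]]


if __name__ == "__main__":
    # Each vector is an application-defined encoding of a user's preferences.
    profiles = {
        "alice": [1.0, 0.0, 0.0],
        "bob": [0.9, 0.1, 0.0],
        "carol": [0.0, 1.0, 0.0],
    }
    print(most_similar([1.0, 0.0, 0.0], profiles))  # ['alice', 'bob']
```

Scanning every profile is fine for a handful of users. A database that offers vector similarity search typically builds an index so that the lookup stays fast as the number of vectors grows.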

Shane Hastie: I can hear screams about privacy and how do we do this ethically.

Privacy challenges from vector data [20:40]

Yiftach Shoolman: You’re right. It’s a good question to ask. How do you do it without exposing private information? It’s a challenge. I totally agree it’s a challenge. By the way, from our perspective at Redis, we don’t really deal with that, because it’s up to the application to decide which vectors to put in the database. Once they are there, we make sure that you can find similar users based on the vector. No one can read a vector. It’s an array of floating-point numbers; it’s encoded. No one can understand anything about the user from it unless they wrote the application. I think in terms of privacy, this is more secure than any other characteristic that you store about the user. Given that the vector is there, and subject to all the privacy compliance, et cetera, you can search for similar vectors in Redis very, very fast. This is what I’m trying to say.

Shane Hastie: On leadership perspective, how do you grow good leaders in technology teams?

Growing leaders in technology teams [21:42]

Yiftach Shoolman: As a technology company, we always have people in technology who want to be great managers, and there is another type of people who want to be great technologists. They are less interested in managing others. They want to invest more deeply in the technology and be a kind of architect. As a company we allow people to grow in both directions. It’s up to them to decide whether they want to be a manager, which means being less close to the code and, in some cases, less close to the day-to-day technology, though not to technology in general, or whether they want to continue doing the technical work with less people management. We allow people to grow on both paths, and I think other companies are doing it as well. But even if you choose to be a manager, we want you to stay close to the technology in order to make the right decisions.

I mean, it’s a different space. It changes so quickly. One of the things that I think differentiates software programming from any other profession out there is that the gap between great developers and, I would say, mediocre developers, not even the poor ones, can be 100 times in execution. This is why we would like to hire great developers. We understand that. To manage these people, sometimes you need to be flexible, because they are smart and have their own perspective, not only on code and technology but on what is going on in the world, on how we can be better citizens in our countries and in the world in general. By the way, the spirit of open source is exactly that. Contribute to others. It’s not only yours. People can take it and do something else. Encourage people to pursue other use cases, not only the things that you thought about.

You should have the culture to accept these people, because even if they go, I don’t know, for a four-hour run in the morning, and they start work at 11:00 AM and work until 5:00 PM, they’re excellent in what they do. What they can deliver is a huge, huge benefit to the companies that they work for. This is why we try not to put limitations there. As for our leaders, our managers, of course you need to stand behind the milestones and the promises, et cetera. One of the ways to do it is to accept that some of your employees are exceptional and work differently, and that you cannot apply the same boundaries to everyone. This is a thing that we are taking care of.

Shane Hastie: Looking back over the last couple of years, there are industry statistics out there that developer experience hasn’t been great. Burnout figures are at 80 plus percent, the great resignation, all of these things happening, mental health being a challenge and general developer experience. How do we make these people’s lives better?

Supporting teams through developer experience [24:46]

Yiftach Shoolman: I think it’s a great question. I think you will also get different answers from different people, because ultimately a great developer experience could mean building your application even without writing code. You could have low code, where you don’t need to do anything. Just a few clicks here, a few clicks there, no compilation, click the button, it is running in the cloud. I have an application. Not all developers want to work like this. Some of them want to understand what is running behind the scenes and what overhead comes with abstracting so many things away, and they want to deep dive and understand the details in order to create a faster, better or cheaper application. So I think you always need to offer this balance. One thing that I didn’t mention when you asked me about the trends is that, at least in the database space, there is what is called today a serverless concept.

I must say, and you asked me not to do it, but I’ll do it, that we started with this concept in 2011 when we started the company. We said, “Why should developers, when they come to the cloud, know about instances, know about clusters, know about everything that is going on out there?” If you look at the number of instance types that each cloud provider offers today, there are hundreds, and every year there is a new generation, et cetera. Why should I, as a developer, care about it? The only things I care about when I access the database are two: how many operations per second I would like to make, and what is the memory limit or dataset size that I would like to work with. You, as a database provider, should charge me according to the combination of both: how many operations, and what is the size of my dataset.

If I run more operations, you, the database as a service, should be smart enough to scale the cluster out or in and eventually charge me according to my actual usage. We invested in this; we started with it, as I mentioned earlier. We don’t want the customer to deal with nodes, clusters, how many CPUs, et cetera. Care about your Redis, and we will charge you only for the number of requests that you make and the memory that you use. This is becoming very popular. We are not the only ones who provide a serverless solution today, and it makes the developer experience very easy, because you just have an endpoint. You do whatever you want, and eventually, if needed, you will be charged a few dollars a month, or maybe more depending on your usage. That said, other developers really like to feel the hardware, even if it is a VM, even if it’s Docker, to feel the software, to load it, to understand exactly how it uses the CPU, et cetera.
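The usage-based charging model described here, where the bill depends only on requests made and memory used, can be sketched as follows. All prices, names and the `Usage` type are hypothetical and chosen for illustration; they do not reflect any actual Redis pricing.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Usage:
    """What the customer actually consumed in a month (hypothetical meter)."""
    requests: int          # total operations executed
    avg_dataset_gb: float  # average dataset size held in memory


def monthly_charge(usage: Usage,
                   price_per_million_requests: float = 0.20,
                   price_per_gb_month: float = 5.00) -> float:
    """Bill on the two dimensions the developer cares about, not on instances."""
    request_cost = usage.requests / 1_000_000 * price_per_million_requests
    memory_cost = usage.avg_dataset_gb * price_per_gb_month
    return round(request_cost + memory_cost, 2)


if __name__ == "__main__":
    # 10 million requests and a 0.5 GB dataset under the assumed prices.
    print(monthly_charge(Usage(requests=10_000_000, avg_dataset_gb=0.5)))  # 4.5
```

The point of the design is that node counts, CPU types and cluster topology never appear in the bill or in the API. The provider is free to scale the cluster in or out behind the endpoint.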

As a company, we also provide them a way to work with our software and do that, and of course they can take the source available elements and do it themselves. I think we are trying, I would say, to give a lot of options to developers across the spectrum, not only serverless. If someone wants more details, go manage it yourself. Understand what is running behind the scenes. We will help you to run it better.

Shane Hastie: Yiftach, thank you very very much, some really interesting concepts there and some good advice. If people want to continue the conversation, where do they find you?

Yiftach Shoolman: So you can find me on Twitter, I’m @yiftachsh or you can of course find me on LinkedIn. Look for Yiftach Shoolman. Look for Redis. If you want to start developing with Redis, go to redis.io or redis.com. You will find a lot of material about this great technology and this is it.

Shane Hastie: Thank you so much.

Yiftach Shoolman: Thank you. It was great talking with you Shane.


MMS • Steef-Jan Wiggers

Article originally posted on InfoQ. Visit InfoQ

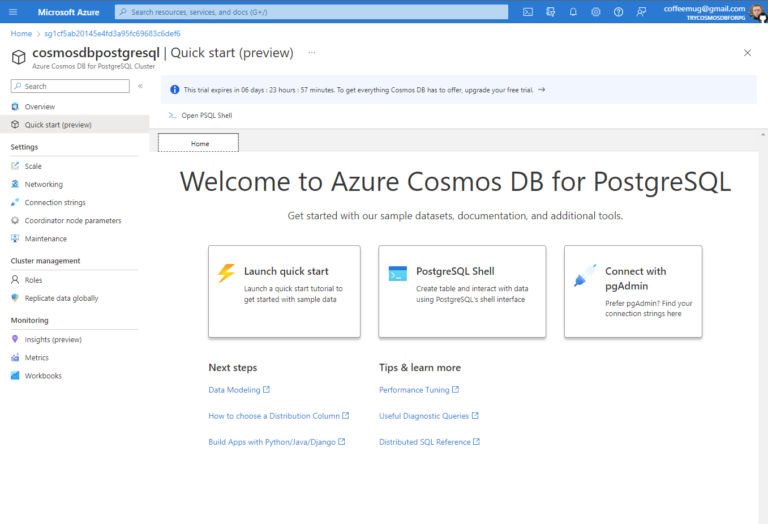

During the recent Ignite conference, Microsoft announced Azure Cosmos DB for PostgreSQL, a new generally available (GA) service to build cloud-native relational applications. It is a distributed relational database offering with the scale, flexibility, and performance of Azure Cosmos DB.

Azure Cosmos DB is a fully managed NoSQL database with various APIs targeted at NoSQL workloads, including native NoSQL and compatible APIs. With the support of PostgreSQL, the service now supports relational and NoSQL workloads. Moreover, the company states that Azure is the first cloud platform to support both relational and NoSQL options on the same service.

The PostgreSQL support includes all the native capabilities that come with PostgreSQL, including rich JSON support, powerful indexing, extensive datatypes, and full-text search. Next to being built on open-source Postgres, Microsoft enabled distributed query execution using the Citus open-source extension. Furthermore, the company also stated in a developer blog post that as PostgreSQL releases new versions, it will make those versions available to its users within two weeks.

Source: https://devblogs.microsoft.com/cosmosdb/distributed-postgresql-comes-to-azure-cosmos-db/

Developers can start building apps on a single-node cluster the same way they would with PostgreSQL. Moreover, when the app’s scalability and performance requirements grow, it can seamlessly scale to multiple nodes by transparently distributing its tables. This is a difference from Azure Database for PostgreSQL, as Jay Gordon, a Microsoft Azure Cosmos DB senior program manager, explains in a tweet:

#AzureCosmosDB for #PostgreSQL is a distributed scale-out cluster architecture that enables customers to scale a @PostgreSQL workload to run across multiple machines. Azure Database for PostgreSQL is a single-node architecture.

In addition, the product team behind Cosmos DB tweeted:

We are offering multiple relational DB options for our users across a number of Database services. Our Azure Cosmos DB offering gives you PostgreSQL extensions and support for code you may already be using with PostgreSQL.

And lastly, Charles Feddersen, a principal group program manager of Azure Cosmos DB at Microsoft, said in a Microsoft Mechanics video:

By introducing distributed Postgres in Cosmos DB, we’re now making it easier for you to build highly scalable, cloud-native apps using NoSQL and relational capabilities within a single managed service.

More service details are available through the documentation landing page, and guidance is provided in a series of YouTube videos. Furthermore, the pricing details of Azure Cosmos DB are available on the pricing page.

MMS • Steef-Jan Wiggers

Article originally posted on InfoQ. Visit InfoQ

During the recent Ignite Conference, Microsoft announced the public preview of Azure Deployment Environments. This managed service enables dev teams to quickly spin up app infrastructure with project-based templates to establish consistency and best practices while maximizing security, compliance, and cost-efficiency.

Setting up environments for applications that require multiple services and subscriptions in Azure can be challenging due to compliance, security, and possible long lead time to have it ready. Yet, with Azure Deployment Environments, organizations can eliminate the complexities of setting up and deploying environments, according to the company, which released the service in a private preview earlier this year.

Organizations can preconfigure a set of Infrastructure as Code templates with policies and subscriptions. These templates are built as ARM (and eventually Terraform and Bicep) files and kept in source control repositories with versioning, access control, and pull request processes. Furthermore, through Azure RBAC and Azure AD security authentication, organizations can establish comprehensive access controls for environments by project and user type. And finally, resource mappings ensure that environments are deployed to the correct Azure subscription, allowing for accurate cost tracking across the organization.

In a Tech Community blog post, Sagar Chandra Reddy Lankala, a senior product manager at Microsoft, explains:

By defining environment types for different stages of development, organizations make it easy for developers to deploy environments not only with the right services and resources, but also with the right security and governance policies already applied to the environment, making it easier for developers to focus on their code instead of their infrastructure.

Environments can be deployed manually through a custom developer portal, CLI, or pipelines.

Azure Deployment Environments is an addition to existing services, such as Codespaces and Microsoft Dev Box, that the company made available earlier to enhance developer productivity and coding environments. Codespaces allows developers to get a VM with VS Code quickly, and similarly, with Microsoft Dev Box, they can get an entire preconfigured developer workstation in the cloud.

Amanda Silver, CVP of the Product Developer Division at Microsoft, tweeted:

Minimize environment setup time and maximize environment security and compliance with Azure Deployment Environments. Now in public preview! Game changer for platform engineering teams.

More details of Azure Deployment Environments are available on the documentation landing page. Pricing-wise, the service is free during the preview period, and customers will only be charged for other Azure resources like compute, storage, and networking created in environments.

MMS • Daniel Mangum

Article originally posted on InfoQ. Visit InfoQ

Transcript

Mangum: My name is Dan Mangum. I’m a Crossplane maintainer. I’m a staff software engineer at Upbound, which is the company behind the initial launch of the Crossplane project, which is now a CNCF incubating project. I’m going to be talking to you about Kubernetes as a foundation for infrastructure control planes. I’m going to cheat a little bit, I did put an asterisk on infrastructure, because we’re going to be talking about control planes in general. It’s going to expand a little bit beyond infrastructure specifically. That is going to serve as a great use case for control planes here.

Outline

When I give talks, I like to be really specific about what we’re going to cover, so you can know if there’s going to be value for you in this talk. I usually go through three stages, motivation. That’s going to be, what is a control plane, and why do I need one? Then explanation. Why Kubernetes as a foundation. That’s the title of our talk here. Why does Kubernetes serve as a good foundation for building control planes on top of? Then finally, inspiration. Where do I start? Now that I’ve heard about what control planes are, why Kubernetes is useful for them, how do I actually go about implementing one within my organization with some tangible steps?

What Is a Control Plane?

What is a control plane? In the Crossplane community, we like to describe a control plane as follows: a declarative orchestration API for really anything. We’re going to be specifically talking about two use cases for control planes. The first one is infrastructure. Infrastructure is likely the entry point that many folks have had to a declarative orchestration API. What does that look like? The most common use case is with the large cloud providers, so AWS, Azure, and GCP. There’s countless others. Essentially, what you’re doing is you’re telling them, I would like this state to be a reality. I’d like a database to exist, or a VM to exist, or something like that. They are reconciling that and making sure that that is a state that’s actually reflected, and then giving you access to that. You don’t actually worry about the implementation details or the imperative steps to make that state a reality. There’s also a bunch of other platforms that offer maybe specialized offerings, or something that has a niche take on what a product offering should look like. Here we have examples of bare metal, we have specific databases, we have a message bus solution, and also CDN. There’s a lot of smaller cloud providers and platforms that you may also use within your organization.

The other type of control plane that you may be familiar with, and that is used as a declarative orchestration API, is for applications. What are the platforms that offer declarative orchestration APIs for applications? Once again, the large cloud providers have higher level primitives that give you the ability to declare that you want an application to run. Whether that’s Lambda on AWS, or Cloud Run on GCP. These are higher level abstractions that they’ve chosen and defined for you, that you can interact with to be able to run an application and perhaps consume some of that infrastructure that you provisioned. There’s also layer-2 versions of this for applications as well. Examples here include static site generators, runtimes like Deno or something, and a quite interesting platform like Fly.io that puts your app close to users. These are basically opinionated offerings for application control planes, where you’re telling them you want an application to run, and they’re taking care of making that happen.

Why Do I Need a Control Plane?

Why do I need a control plane? We’ve already made the case that you have a control plane. These various platforms, I would hazard a guess that most people have had some exposure or are currently using at least one of these product offerings. Let’s take it for granted that you do have a control plane. The other part we need to focus on is I, or maybe we, what are the attributes about us that make us good candidates for a control plane? QCon likes to call its attendees and speakers, practitioners. I like to use a term similar to that, builders. In my mind, within an organization, there’s a spectrum between two types of builders. One is platform, and the other is product. On the platform side, you have builders who are building the thing that builds the thing. They’re giving you a foundation that the product folks build on top of to actually create the business value that the organization is interested in. When we talk about these two types of personas, we frequently view it as a split down the middle. There’s a single interface where platform teams give a set of APIs or a set of access to product folks. In some organizations that may look like a ticketing system. In a more mature DevOps organization, there may be some self-service workflow for product engineers.

In reality, the spectrum looks a lot more like this. There’s folks that exist at various parts of this. You may have someone in the marketing team, for instance, who wants to create a static site, and they have no interest in the underlying infrastructure. They’re very far to the product side. You may have someone who is a developer who knows a lot about databases, and wants to tune all the parameters. Furthermore, you may have an organization that grows and evolves over time. This is something I’m personally experiencing, working at a startup that’s growing rather quickly. We’ve gone from having a small number of engineers to many different teams of engineers, and our spectrum and where developers on the product and platform side sit on that, has changed quite a bit over time. Those abstractions that you may be getting from those cloud providers or platforms that you’re using may not fit your organization in perpetuity.

Control Plane Ownership

We said that you already have a control plane. What’s the issue? You just don’t own it yet. All of these control planes that you’re using are owned by someone else. Why do they have really great businesses around them? Why would you want to actually have some ownership over your control plane? Before we get into the benefits of ownership, I want to make it very clear that I’m not saying that we shouldn’t use these platforms. Folks at AWS, and GCP, and Azure, and all of those other layer-2 offerings, they’ve done a lot of work to give you very compelling offerings, and remove a lot of the operational burden that you would otherwise have to have yourself. We absolutely want to take advantage of all that work and the great products that they offer, but we want to bring that ownership within your organization.

Benefits to Control Plane Ownership

Let’s get to the benefits. The first one is inspection, or owning the pipe. I’ve heard this used when folks are referring to AWS. I like to use Cloudflare actually as a great example of this. It’s a business that’s built on having all of the traffic of the internet flow through it, and being able to build incredible products on top of that. You as an organization currently probably have someone else who has the inspection on your pipe. That may be AWS, for instance. They can give you additional product offerings. They can see how you’re using the product. They can grow and change it over time. If you move beyond a single platform, you lose the insight and you may not be the priority of the person who owns a pipe that you’re currently using. When you have that inspection, you can actually understand how folks within your organization are using infrastructure, deploying it, consuming it. Everything goes through a central place, even if you’re using multiple different platforms.

The next is access management. I know folks who are familiar with IAM on AWS or really any other platform, know how important this is and how complicated it can be. From a control plane perspective that sits in front of these platforms, you have a single entry point for all actors. You’re not saying anymore, I want to give permission for a single developer to AWS, even if you’re giving them the ability to create a database on AWS. We’ll actually define a higher level concept that even if it maps one to one as an abstraction on top of a concrete implementation, you’re going to have a single entry point where you can define all access for users, no matter what resources they’re accessing behind the scenes.

Next is the right abstractions. Unless you’re the largest customer of a cloud platform, you are not going to be the priority. There may be really great abstractions that they offer you. For instance, in my approximation, Lambda on AWS has been very successful, lots of folks are building great products on top of it. However, if your need for abstraction changes, and maybe your spectrum between platform and product folks evolves over time, you need to be able to change the different knobs, and maybe even for a specific project offer a different abstraction to developers, and not be locked into whatever another platform defines as the best for you.

The last one is evolution. Your organization is not going to be stagnant, and so the API that you present to developers may change over time. Your business needs may change over time, so the implementations behind the API of your control plane can also evolve over time without the developers actually even needing to know.

Why Kubernetes as a Foundation?

We’ve given some motivation for why you would need a control plane within an organization. We’re going to make that really concrete here by looking at an explanation of why Kubernetes serves as a strong foundation for a control plane. Really, what I’m talking about here is the Kubernetes API. Kubernetes has come about as a de facto container orchestration API. In reality, it’s really just an API for orchestrating anything. Some may even refer to it as a distributed systems framework. When I gave this presentation at QCon in London, I referred to it as the POSIX for distributed systems. While that may have some different abstractions than something like an operating system, it is critical to start to think about Kubernetes and distributed systems as something we program just like the things that an operating system offers us, the APIs it gives us. What Kubernetes allows us to do is extend APIs and offer our own. Let’s get into how that actually works.

Functional Requirements of a Control Plane

What are the functional requirements for a control plane? Then let’s try to map those back to what Kubernetes gives us. First up is reliable. I don’t mean that this is an assertion that a pod never restarts or something like that. What I’m saying is we can’t tolerate unexpected deletion of critical infrastructure. If you’re using ephemeral workloads on a container orchestration platform, this isn’t as big of a deal. You can stand for nodes to be lost, and that sort of thing. We can still stand for that to happen when dealing with infrastructure. What we can’t have happen is for the control plane to arbitrarily go and delete some of that infrastructure. We can withstand it not reconciling it for a period of time.

The next is scalable, so you have more value at higher adoption of a control plane. We talked about that inspection and the value that you get from that. You must be able to scale to meet the increased load that comes from having higher adoption within your organization, because that higher adoption is what brings the real value to your control plane. The next is extendable. I’ve already mentioned how Kubernetes does this a little bit, and we’ll go into the technical details. You must be able to evolve that API over time to match your evolving organization. It needs to be active and responsive to change. We’re not just saying that we want this to exist, and after it exists, we’re done. We’re going to have requirements that are changing over time. Our state that we desire is going to evolve over time. There’s also going to be external factors that impact that. I said earlier that we don’t want to give up all of the great work that those cloud providers and platforms have done, so we want to take advantage of those. You may have a rogue developer come in and edit a database outside of your control plane, which you don’t want to do, because that’s going outside of the pipe. Or you may just have an outage from the underlying infrastructure, and you want to know about that. You need a control plane that constantly reconciles your state, and makes sure what’s represented externally to the cluster matches the state that you’ve put inside of it.
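The "constantly reconciles" requirement can be sketched in a few lines of Python. This is a minimal illustration, not how Kubernetes or Crossplane is implemented: `ExternalAPI` is a hypothetical in-memory stand-in for a cloud provider, and `reconcile` is an assumed name. It shows drift from an out-of-band edit being corrected, and it never deletes resources it was not asked to manage, which matches the reliability requirement above.

```python
from dataclasses import dataclass, field


@dataclass
class ExternalAPI:
    """Hypothetical stand-in for a cloud provider's API (not a real SDK)."""
    resources: dict = field(default_factory=dict)


def reconcile(desired: dict, external: ExternalAPI) -> list:
    """Drive external state toward desired state; return the actions taken."""
    actions = []
    for name, spec in desired.items():
        actual = external.resources.get(name)
        if actual is None:
            external.resources[name] = dict(spec)
            actions.append(f"create {name}")
        elif actual != spec:
            # Drift: someone changed the resource outside the control plane.
            external.resources[name] = dict(spec)
            actions.append(f"update {name}")
    # Resources unknown to the control plane are deliberately left alone.
    return actions


if __name__ == "__main__":
    desired = {"orders-db": {"size": "small", "region": "us-east-1"}}
    cloud = ExternalAPI()
    print(reconcile(desired, cloud))                 # first pass creates
    cloud.resources["orders-db"]["size"] = "large"   # out-of-band edit
    print(reconcile(desired, cloud))                 # drift is corrected
    print(reconcile(desired, cloud))                 # steady state
```

In a real control plane this function would run repeatedly, triggered by events or on a timer, rather than being called by hand.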

If you’ve seen this before, it’s because I took most of these directly from the Kubernetes website. These are the qualities that Kubernetes says it provides for container orchestration. It also offers them for really any other distributed system you want to build on top. There’s a number of others that we’re not going to talk specifically about, but are also useful. One is secrets management, which is useful when you need to connect to these external cloud providers, as well as potentially manage connection details to infrastructure you provision. There’s network primitives. There’s access control, which we touched on a little bit already. Last and certainly not least, is a thriving open source ecosystem. You have a foundation that if you need to actually change the foundation itself, you can go in and be a part of driving change. You can have a temporary fork where you have your own changes. You can actually be part of making it a sustainable project over time, which lots of organizations as well as individuals do today.

You Can Get a Kubernetes

Last on the list of things that is really useful for Kubernetes, is what we like to say, you can get a Kubernetes. What I mean by this is Kubernetes is available. There is a way to consume Kubernetes that will fit your organization. In fact, there’s 60-plus distributions listed on the CNCF website that you can use on your own infrastructure, you can run yourself, or pay a vendor to do it for you. There’s 50-plus managed services where you can actually just get the API without worrying about any of the underlying infrastructure. Kubernetes, despite what platforms you use, is going to be there for you, and you can always consume upstream as well.

What’s Missing?

What’s missing from this picture? If Kubernetes gives us everything that we need, why do we need to build on top of it? There’s two things that Kubernetes is missing out on, and I like to describe them as primitives and abstraction mechanisms. Kubernetes does offer quite a few primitives. You’ve probably heard of pods, deployments, ingress. There’s lots of different primitives that make it very easy to run containerized workloads. What we need to do is actually start to take external APIs and represent them in the Kubernetes cluster. For example, an AWS EC2 instance, we want to be able to create a VM on AWS alongside something like a pod or a deployment, or maybe just in a vacuum. There’s also things outside of infrastructure, though. At Upbound, we’re experimenting with onboarding employees by actually creating an employee abstraction. We’ll talk about how we do that. That is backed by things like a GitHub team that automatically makes sure an employee is part of a GitHub organization. There can also be higher level abstractions, like a Vercel deployment that you may want to create from your Kubernetes cluster for something like a Next.js site.

The other side is abstraction mechanisms. How do I compose those primitives in a way that makes sense for my organization? Within your organization, you may have a concept of a database, which is really just an API that you define that has the configuration power that you want to give to developers. That may map to something like an RDS instance, or a GCP Cloud SQL instance. What you offer to developers is your own abstraction, meaning you as a platform team have the power to swap out implementations behind the scenes. I already mentioned the use for an employee, and a website maps to a Vercel deployment. The point is, you can define how this maps to concrete infrastructure, and you can actually change it without changing the API that you give to developers within the organization. Lastly, how do I manage the lifecycle of these primitives and abstraction mechanisms within the cluster? I’m going to potentially need multiple control planes. I’m going to need to be able to install them reliably. I might even want to share them with other organizations.

Crossplane – The Control Plane Tool Chain

I believe Crossplane is the way to go to add these abstractions on top of Kubernetes, and build control planes using the powerful foundation that it gives us. If you’re one of the Crossplaners, it’s essentially the control plane tool chain. It takes those primitives from Kubernetes and it gives you nice tooling to be able to build your control plane on top of it by adding those primitives and abstraction mechanisms that you need. Let’s try and take a shot at actually mapping those things that we said are missing from Kubernetes to concepts that Crossplane offers.

Primitives – Providers

First up is providers. They map to primitives that we want to add to the cluster. A provider brings external API entities into your Kubernetes cluster as new resource types. Kubernetes allows you to do this via custom resource definitions, which are actually instances of objects you create in the cluster that define a schema for a new type. The easiest way to conceptualize this is thinking of a REST API and adding a new endpoint to it. After you add a new endpoint in a REST API, you need some business logic to actually handle taking action when someone creates, updates, or deletes at that endpoint. A provider brings along that logic that knows how to respond to state change and drift in those types that you’ve added to the cluster. For example, if you add an RDS instance to the cluster, you need a controller that knows how to respond to the create, update, or delete of an RDS instance within your Kubernetes cluster.
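The REST analogy can be made concrete with a toy model. In this sketch, which assumes nothing about the real Kubernetes API machinery, `Cluster`, `install_provider`, and `rds_controller` are all hypothetical names. Registering a schema plays the role of the custom resource definition, and the registered callable plays the role of the controller the provider ships.

```python
from typing import Callable, Dict


class Cluster:
    """Toy API server: stores objects by (kind, name) and calls controllers."""

    def __init__(self) -> None:
        self.schemas: Dict[str, set] = {}
        self.controllers: Dict[str, Callable] = {}
        self.objects: Dict[tuple, dict] = {}

    def install_provider(self, kind: str, required_fields, controller: Callable) -> None:
        # The schema is the analogue of a CRD (a new "endpoint");
        # the controller is the business logic behind that endpoint.
        self.schemas[kind] = set(required_fields)
        self.controllers[kind] = controller

    def apply(self, kind: str, name: str, spec: dict) -> str:
        if kind not in self.schemas:
            raise KeyError(f"unknown kind: {kind}")
        missing = self.schemas[kind] - set(spec)
        if missing:
            raise ValueError(f"missing fields: {sorted(missing)}")
        event = "update" if (kind, name) in self.objects else "create"
        self.objects[(kind, name)] = spec
        return self.controllers[kind](event, name, spec)

    def delete(self, kind: str, name: str) -> str:
        spec = self.objects.pop((kind, name))
        return self.controllers[kind]("delete", name, spec)


def rds_controller(event: str, name: str, spec: dict) -> str:
    # A real provider would call the cloud API here; this only reports intent.
    return f"{event} RDSInstance {name} ({spec['allocatedStorage']} GB)"


if __name__ == "__main__":
    cluster = Cluster()
    cluster.install_provider("RDSInstance", ["allocatedStorage"], rds_controller)
    print(cluster.apply("RDSInstance", "orders", {"allocatedStorage": 20}))
    print(cluster.apply("RDSInstance", "orders", {"allocatedStorage": 40}))
    print(cluster.delete("RDSInstance", "orders"))
```

Before the provider is installed, `apply` rejects the kind outright, much as an API server rejects a resource type it has no definition for.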

The important part here is that these are packaged up as an OCI image for ease of distribution and consumption. All of these things are possible without doing this, but taking the OCI image path for being able to distribute these means that they are readily available. OCI images have become ubiquitous within organizations, and every cloud provider has their own registry solution as well. These are really easy to share and consume. You can also compose the packages themselves (providers are one type of Crossplane package) and start to build a control plane hierarchy out of these packages, which we’ll talk a little bit about when we get to composition.

Abstraction Mechanisms – Composition

Abstraction mechanisms are the second thing that we said is missing from Kubernetes. Crossplane offers abstraction mechanisms via composition. Composition allows you to define abstract types that can be satisfied by one or more implementations. This is done via a composite resource definition. This is different from the custom resource definition that Kubernetes offers. Crossplane brings along a composite resource definition, or as we like to call it, an XRD, as opposed to the Kubernetes CRD. The XRD allows you to define a schema just like a CRD, but it doesn’t have a specific controller that watches it. Instead, it has a generic controller, which we call the composition engine, which takes instances of the type defined by the XRD and maps them to composition types. A composition takes those primitives, and basically tells you how the input on the XRD maps to the primitives behind the scenes.

Let’s take an example, and we’ll keep using that same one that’s come up a number of times in this talk, a database. In your organization, you have a database abstraction. Really, the only fields a developer needs to care about, let’s say, are the size of the database, and the region that it’s deployed in. On your XRD, you define those two fields, and you might have some constraints on the values that could be provided to them. Then you author, let’s say, three compositions here. One is for AWS, one is for GCP, and one is for Azure. On the AWS one, let’s say you have some subnets, a DB subnet group and an RDS instance. On the GCP one, you have, let’s say a network and a Cloud SQL instance. On Azure, you have something like a resource group, and an Azure SQL instance. When a developer creates a database, it may be satisfied by any one of these compositions, which will result in the actual primitives getting rendered and eventually reconciled by the controllers. Then the relevant state gets propagated back to the developer in a way that is only applicable to what they care about. They care about that their database is provisioned. They don’t care about if there is a DB subnet group and a DB behind the scenes.
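A rough sketch of this database example follows, written as plain Python rather than Crossplane manifests. The kind names in `COMPOSITIONS`, the allowed sizes, and the function names are illustrative assumptions, not real Crossplane resource kinds. The point is that one two-field abstraction can be rendered into different sets of primitives, while the developer only sees a summarized status.

```python
# Constraint the platform team places on the abstraction (assumed values).
ALLOWED_SIZES = {"small", "medium", "large"}

# One composition per cloud; each lists the primitives backing a database.
# Kind names are illustrative, not real Crossplane kinds.
COMPOSITIONS = {
    "aws": ["Subnet", "DBSubnetGroup", "RDSInstance"],
    "gcp": ["Network", "CloudSQLInstance"],
    "azure": ["ResourceGroup", "AzureSQLInstance"],
}


def render(database: dict, provider: str) -> list:
    """Expand an abstract database into the primitives of one composition."""
    if database["size"] not in ALLOWED_SIZES:
        raise ValueError(f"size must be one of {sorted(ALLOWED_SIZES)}")
    return [
        {
            "kind": kind,
            "name": f"{database['name']}-{kind.lower()}",
            "size": database["size"],
            "region": database["region"],
        }
        for kind in COMPOSITIONS[provider]
    ]


def developer_status(primitive_ready: dict) -> dict:
    """Report only what the developer cares about: is the database ready?"""
    return {"ready": all(primitive_ready.values())}


if __name__ == "__main__":
    db = {"name": "orders", "size": "small", "region": "us-east-1"}
    for provider in COMPOSITIONS:
        print(provider, [r["kind"] for r in render(db, provider)])
    print(developer_status({"DBSubnetGroup": True, "RDSInstance": False}))
```

Swapping the provider changes the rendered primitives but not the `db` object, which is the property that lets a platform team change implementations behind the scenes.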

These are actually also packaged as OCI images, that’s the second type of Crossplane package. A really powerful ability you have with these packages is to say what you depend on. You can reference other images as dependencies, which means that if you install a configuration package with the XRD and compositions that we just talked about, you can declare dependencies on the AWS, GCP, and Azure providers, and Crossplane will make sure those are present. If not, will install them for you with appropriate versions within the constraints that you define. This allows you to start to build a DAG of packages that define your control plane and make it really easy to define a consistent API and also build them from various modular components.
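The DAG of packages can be illustrated with a topological sort. This sketch uses Python's standard `graphlib` module. The package names are hypothetical, and version constraints, which Crossplane also resolves, are deliberately not modeled.

```python
from graphlib import CycleError, TopologicalSorter


def install_order(dependencies: dict) -> list:
    """Return an order in which each package follows all of its dependencies.

    `dependencies` maps a package name to the packages it depends on.
    """
    try:
        return list(TopologicalSorter(dependencies).static_order())
    except CycleError as err:
        # err.args[1] holds the list of nodes forming the detected cycle.
        raise ValueError(f"circular dependency: {err.args[1]}") from err


if __name__ == "__main__":
    # Hypothetical package names, for illustration only.
    packages = {
        "database-configuration": ["provider-aws", "provider-gcp", "provider-azure"],
        "platform-configuration": ["database-configuration"],
    }
    print(install_order(packages))
```

Providers appear first because nothing else can be installed until the types they define exist in the cluster.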

Making this a little more concrete, I know this is difficult to see. If you want this example it’s from the actual Crossplane documentation. Here we’re on the left side defining an XRD that is very similar to the one that I just described. This one just takes a storage size. On the right side, we’re defining a Postgres instance implementation that is just an RDS instance that maps that storage size to the allocated storage field in the AWS API. Something that’s really important here is that we strive and always do have high fidelity representations of the external API. That means that if you use the AWS REST API, and there’s a field that’s available for you, you’re going to see it represented in the same way as a Kubernetes object in the cluster. This is a nice abstraction here, but it’s quite simplistic. You’ll see that something like the publicly accessible field is set to true, which basically means it’s public on the internet. Not a common production use case.
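Since the slide is not reproduced in the transcript, the mapping it describes can be approximated as follows. This is a sketch of the idea of copying one field from the abstract resource into the concrete one. The field paths (`spec.parameters.storageGB`, `spec.forProvider.allocatedStorage`) and the `fromFieldPath`/`toFieldPath` keys are modeled on the Crossplane documentation example and should be checked against it. The helper functions are hypothetical.

```python
import copy


def get_path(obj: dict, path: str):
    """Read a value at a dotted path such as 'spec.parameters.storageGB'."""
    for key in path.split("."):
        obj = obj[key]
    return obj


def set_path(obj: dict, path: str, value) -> None:
    """Write a value at a dotted path, creating intermediate dicts as needed."""
    *parents, last = path.split(".")
    for key in parents:
        obj = obj.setdefault(key, {})
    obj[last] = value


def apply_patches(composite: dict, base: dict, patches: list) -> dict:
    """Copy fields from the composite resource into a copy of the base."""
    resource = copy.deepcopy(base)
    for patch in patches:
        value = get_path(composite, patch["fromFieldPath"])
        set_path(resource, patch["toFieldPath"], value)
    return resource


if __name__ == "__main__":
    composite = {"spec": {"parameters": {"storageGB": 20}}}
    base = {"kind": "RDSInstance",
            "spec": {"forProvider": {"publiclyAccessible": True}}}
    patches = [{"fromFieldPath": "spec.parameters.storageGB",
                "toFieldPath": "spec.forProvider.allocatedStorage"}]
    print(apply_patches(composite, base, patches))
```

Everything in `base` that is not patched, such as the public accessibility flag, is fixed by the platform team and invisible to the developer.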

Without actually changing the XRD or the API that the developer is going to interact with, we can have a much more complex example. This example here is creating a VPC, three subnets, a DB subnet group, some firewall rules, and the RDS instance, which is a much more robust deployment setup here. The developer doesn’t have to take on this increased complexity. This really goes to show that you may have lots of different implementations for an abstraction, whether on the same platform or on multiple, and you can, just like you do in programming, define interfaces, and then implementations of that interface. Because what we’re doing here is programming the Kubernetes API and building a distributed system, building a control plane on top of it.

This is what the actual thing the developer created would look like. They could, as it’s a Kubernetes object, include it in something like a Helm chart, or Kustomize, or whatever your tooling of choice is for creating Kubernetes objects. They can specify not only the configuration that’s exposed to them, but potentially also select the actual composition that it maps to, based on schemes that you’ve defined, such as the provider and VPC fields here. They can also define where the connection secret gets written to. Here, they’re writing it to a Kubernetes secret called db-conn. We also allow for writing to External Secret Stores, like Vault, or KMS, or things like that. Really, you get to choose what the developer has access to, and they get a simple API without having to worry about all the details behind the scenes.
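The object on the slide is not included in the transcript, so the following is an approximation written as a Python dictionary. The API group, kind, label values, and composition names are assumptions for illustration. The `compositionSelector` and `writeConnectionSecretToRef` keys follow the shape used in the Crossplane documentation and should be verified there. The `select_composition` function is a hypothetical sketch of label-based selection, not Crossplane's actual algorithm.

```python
import json
from typing import Optional

# What the developer submits. Names and API group are illustrative.
CLAIM = {
    "apiVersion": "database.example.org/v1alpha1",
    "kind": "PostgreSQLInstance",
    "metadata": {"name": "my-db", "namespace": "default"},
    "spec": {
        "parameters": {"storageGB": 20},
        "compositionSelector": {"matchLabels": {"provider": "aws", "vpc": "new"}},
        "writeConnectionSecretToRef": {"name": "db-conn"},
    },
}

# Compositions published by the platform team, identified by labels.
COMPOSITIONS = [
    {"metadata": {"name": "aws-default-vpc",
                  "labels": {"provider": "aws", "vpc": "default"}}},
    {"metadata": {"name": "aws-new-vpc",
                  "labels": {"provider": "aws", "vpc": "new"}}},
    {"metadata": {"name": "gcp", "labels": {"provider": "gcp"}}},
]


def select_composition(claim: dict, compositions: list) -> Optional[str]:
    """Return the first composition whose labels satisfy the claim's selector."""
    wanted = claim["spec"]["compositionSelector"]["matchLabels"]
    for composition in compositions:
        labels = composition["metadata"]["labels"]
        if all(labels.get(key) == value for key, value in wanted.items()):
            return composition["metadata"]["name"]
    return None


if __name__ == "__main__":
    print(select_composition(CLAIM, COMPOSITIONS))
    print(json.dumps(CLAIM, indent=2))
```

The developer picks between the simple and the more robust AWS setup by changing one label value, without seeing the VPC, subnets, or firewall rules behind it.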

Where to Start

Where do I start? How do we get to this inspiration? There’s two different options. One is pick a primitive. You can take a commonly used infrastructure primitive that you use in your architecture, maybe it’s a database we’ve been talking about, maybe it’s a Redis Cache, maybe it’s a network. Everyone has things that have to communicate over the network. If you identify the provider that supplies it, and go and install that provider, without actually moving to the abstraction mechanisms, you can create the instances of those types directly. You can create your RDS instance directly, or your Memcached instance directly, and include that in your Kubernetes manifest, and have that deployed and managed by Crossplane.

The next option is picking an abstraction. This is the more advanced case that we just walked through. You can choose a commonly used pattern in your architecture, you can author an XRD with the required configurable attributes. You can author compositions for the set of primitives that support it, or maybe multiple sets of primitives that support it. You can specify those dependencies. You can build and publish your configuration, and then install your configuration. Crossplane is going to make sure all those dependencies are present in the cluster. The last step then is just creating an instance, like that Postgres database we saw.

These are some of the examples of some of the abstractions that the community has created. Some of these are really great examples here. Platform-ref-multi-k8s, for example, allows you to go ahead and create a cluster that’s backed by either GKE, EKS, or AKS. There’s lots of different options already. I hope this serves as inspiration as well, because you can actually take these and publish these publicly for other folks to install, just like you would go and install your npm package. Maybe that’s not everyone’s favorite example, but install your favorite library from GitHub. You can start to actually install modules for building your control plane via Crossplane packages, and you can create high level abstractions on top of them. You can create different implementations, but you don’t have to reinvent the wheel every time you want to bring along an abstraction. A great example we’ve seen folks do with this is create things like a standard library for a cloud provider. For example, if there is some common configuration that is always used for AWS, someone can create an abstraction in front of an RDS instance that basically has sane defaults. Then folks who want to consume RDS instances without worrying about setting those sane defaults themselves can just have the ones exposed to them that make sense.

Questions and Answers

Reisz: This is one of those projects that got me really excited. I always think about like Joe Beda, one of the founders of Kubernetes, talking about defining like a cloud operating model, self-service, elastic, and API driven. I think Crossplane proves that definition of building a platform on top of a platform. I think it’s so impressive. When people look at this, talk about the providers that are out there and talk about the community. Obviously, people that are getting started are dependent at least initially on the community that’s out there. Hopefully always. What’s that look like? How are you seeing people adopting this?

Mangum: The Crossplane community is made up of core Crossplane, which is the engine which provides the package manager and composition, which allows you to create those abstractions. Then from a provider perspective, those are all their individual projects with their own maintainers, but they’re part of the Crossplane community. In practice, over time, there’s been a huge emphasis on the large cloud providers, as you might expect, for either AWS, GCP, Azure, Alibaba. Those are more mature providers. However, about five months ago or so, the Crossplane community came up with some new tooling called Terrajet, which essentially takes Terraform providers for which there’s already a rich ecosystem, and generates Crossplane providers from the logic that they offer. That allowed us and the community to have a large increase in the coverage of all clouds. At this point, it’s, number one, very easy to find the provider that already exists that has full coverage. Number two, if it doesn’t exist, to actually just generate your own. We’ve actually seen an explosion of folks coming in generating new providers, and in some cases putting their own spin on it to make it fit their use case, and then publishing that for other folks to consume.

Reisz: You already got all these Terraform scripts that are out there. Is it a problem to be able to use those? It’s not Greenfield. We have to deal with what already exists. What’s that look like?

Mangum: With the cloud native ecosystem, and the cloud in general, having existed for quite a while, almost no one who comes to Crossplane is coming in with a Greenfield thing. There’s always some legacy infrastructure. There’s a couple of different migration patterns, and there’s two kind of really important concepts. One is just taking control of resources that already exist on a cloud provider. That’s fairly straightforward to do. Cloud providers always have a concept of identity of any entity in their information architecture. If you have a database, for instance, the cloud may allow you to name that deterministically, or it may be non-deterministic, where you create the thing and they give you back a unique identifier, which you use to check in on it. Crossplane uses a feature called the external-name, which is an annotation you put on all of your resources, or if you’re creating a fresh one, Crossplane will put it on there automatically. That basically says, this is the unique identifier of this resource on whatever cloud or whatever platform it actually exists on. As long as you have those unique identifiers, let’s say like a VPC ID or something like that, you could just create your resource with that external-name already there. The first thing the provider will do is go and say, does this already exist? If it does, it’ll just reconcile from that point forward. It won’t create something new. That’s how you can take control of resources on a cloud provider.
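The "does this already exist?" check can be sketched as below. The annotation key `crossplane.io/external-name` is the one Crossplane uses. Everything else is a simplifying assumption: the cloud is a plain dictionary keyed by identifier, and `new_id` is a hypothetical callback standing in for a provider that assigns non-deterministic identifiers.

```python
from typing import Callable

EXTERNAL_NAME = "crossplane.io/external-name"


def reconcile_once(resource: dict, cloud: dict, new_id: Callable[[], str]) -> str:
    """Observe first; create only if nothing with that identity exists."""
    annotations = resource.setdefault("metadata", {}).setdefault("annotations", {})
    external_name = annotations.get(EXTERNAL_NAME)

    if external_name is not None and external_name in cloud:
        # Already exists: take control of it, do not create a duplicate.
        return "observed"

    if external_name is None:
        # Fresh resource: the cloud hands back an identifier, which is
        # recorded on the object so later passes find the same resource.
        external_name = new_id()
        annotations[EXTERNAL_NAME] = external_name

    cloud[external_name] = dict(resource.get("spec", {}))
    return "created"


if __name__ == "__main__":
    cloud = {"vpc-0abc": {"cidr": "10.0.0.0/16"}}  # pre-existing infrastructure
    imported = {"metadata": {"annotations": {EXTERNAL_NAME: "vpc-0abc"}},
                "spec": {"cidr": "10.0.0.0/16"}}
    fresh = {"spec": {"cidr": "10.1.0.0/16"}}
    print(reconcile_once(imported, cloud, lambda: "vpc-generated"))
    print(reconcile_once(fresh, cloud, lambda: "vpc-generated"))
    print(fresh["metadata"]["annotations"])
```

Running the function a second time on `fresh` returns "observed", because the identifier written on the first pass now matches an existing resource.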

If you have Terraform scripts and that sort of thing, there are a couple of different migration patterns. A very simple one, but one we don’t recommend long term, is we actually have a provider Terraform, where you can essentially put your Terraform script into a Kubernetes manifest, and it’ll run Terraform for you, giving you that active reconciliation. There’s also some tooling to translate Terraform HCL into YAML, to be more native in Crossplane’s world.

Reisz: What are you all thinking out there, putting these XRDs, putting these CRDs onto Kubernetes clusters to maintain on and off-cluster resources. I’m curious, the questions that everybody has out there in the audience. For me, like the operator pattern is so popular with Kubernetes, and applying it on and off-cluster just seems so powerful to me. This is one of the reasons why I’m so excited about the project and the work that you all are doing.

Mangum: One of the benefits of that model is just the standardization on the Kubernetes API. One thing we like to point out, I’m sure folks are familiar with policy engines like Kyverno, or OPA, or that sort of thing, as well as like GitOps tooling, like Argo, or Flux, because everything is a Kubernetes manifest, you actually get to just integrate with all of those tools more or less for free. That’s a really great benefit of that standardization as well.

Reisz: Talk about security and state, particularly off-cluster. On-cluster is one thing, you have access to the Kubernetes API. You can understand what’s happening there. What about off-cluster? Is that like a lot of polling, what does that look like? How do you think about both managing state and also security when you’re off-cluster?

Mangum: Generally, we talk about security through configuration. Setting up security in a way where you’re creating a type system that doesn’t allow a developer to access something they’re not supposed to. That can look like never actually giving developers access to AWS credentials, for example. You actually just give them Kubernetes access, that single access control plane I was talking about, and they interact through that. There’s never any direct interaction with AWS. The platform is in charge of interacting with AWS.

In terms of how we actually get state about the external resources and report on them, there is no restriction on how a provider can be implemented. In theory, let’s say that some cloud provider offered some eventing API on resources, you could write a provider that consumed that. Frequently, that’s not an option, so we do use polling. In that case, what we really try to do is offer the most granular configuration you need to poll at a rate that is within your API rate limits, and also matches the criticality of that resource. For instance, in a dev environment, you may say, I just want you to check in on it once a day. It’s not critical infrastructure, I don’t need you to be taking action that often. If it’s production, maybe you want it more frequently.

Reisz: What do you want people to leave this talk with?

Mangum: I really encourage folks to go in and try authoring their own API abstractions. Think about the interface that you would like to have. If you could design your own Heroku or your own layer-2 cloud provider, what would that look like to you? Look at the docs for inspiration, and then think about writing your own API, maybe mapping that to some managed resources from cloud providers. Then offering that to developers in your organization to consume.


MMS • Sergio De Simone

Article originally posted on InfoQ. Visit InfoQ

Developed at Fox-IT, part of NCC Group, Dissect is a recently open-sourced toolset that aims to enable incident response on thousands of systems at a time by analyzing large volumes of forensic data at high speed, says Fox-IT.

Dissect comprises various parsers and file format implementations that power its target-query and target-shell tools, providing access to forensic artifacts such as Runkeys, Prefetch files, Windows Event Logs, etc. Using Dissect you can, for example, build an incident timeline based on event logs, identify anomalies in services, perform incident response, and more.

Before being open-sourced, Dissect had been used for over 10 years at Fox-IT for a number of large organizations. This explains its focus on analyzing complex IT infrastructures:

Incident response increasingly involves large, complex and hybrid IT infrastructures that must be carefully examined for so-called Indicators of Compromise (IOCs). At the same time, victims of an attack need to find out as quickly as possible what exactly happened and what actions should be taken in response.

At its core, Dissect is built on top of several abstractions, including containers, volumes, filesystems, and OSes. This layered architecture, where each layer can operate independently from the others, provides the foundations for analysis plugins, which include OS-specific plugins such as Windows event logs or Linux bash, as well as more generic ones, like browser history or filesystem timelining.

An important detail is that, by default, we only target the “known locations” of artifacts. That means that we don’t try to parse every file on a disk, but instead only look for data in the known or configured locations.

Dissect’s main benefits, according to Fox-IT, are speed, which makes it possible to reduce data acquisition that previously required two weeks down to an hour, and flexibility, which makes it almost agnostic to data format and OS. Indeed, Dissect aims to simplify the task of accessing a container, extracting files, and using a specific tool to parse them for forensic evidence by providing a single tool covering all of these uses.

Dissect’s workhorse is the already mentioned target-query tool, which makes it possible to retrieve information from a target, including basic attributes like its hostname, OS, and users, as well as more in-depth information like file caches, registry, shellbags, runkeys, USB devices, and more. If you prefer a more interactive approach, you can use target-shell, which is able to launch a shell running on your target to quickly browse an image or access the Python API provided by Dissect.

Dissect can be installed by running pip install dissect, or run using Docker. If you want to have some data to play around with, you can use the NIST Hacking Case images.

Presentation: Project Loom: Revolution in Java Concurrency or Obscure Implementation Detail?

MMS • Tomasz Nurkiewicz

Article originally posted on InfoQ. Visit InfoQ

Transcript

Nurkiewicz: I’d like to talk about Project Loom, a very new and exciting initiative that will land eventually in the Java Virtual Machine. Most importantly, I would like to briefly explain whether it’s going to be a revolution in the way we write concurrent software, or maybe it’s just some implementation detail that’s going to be important for framework or library developers, but we won’t really see it in real life. The first question is, what is Project Loom? The question I give you in the subtitle is whether it’s going to be a revolution or just an obscure implementation detail. My name is Tomasz Nurkiewicz.

Outline

First of all, we would like to understand how we can create millions of threads using Project Loom. This is an overstatement. In general, this will be possible with Project Loom. As you probably know, these days, it’s only possible to create hundreds, maybe thousands of threads, definitely not millions. This is what Project Loom unlocks in the Java Virtual Machine. This is mainly possible by allowing you to block and sleep everywhere, without paying too much attention to it. Blocking, sleeping, or any other locking mechanisms were typically quite expensive, in terms of the number of threads we could create. These days, it’s probably going to be very safe and easy. The last but the most important question is, how is it going to impact us developers? Is it actually so worthwhile, or maybe it’s just something that is buried deeply in the virtual machine, and it’s not really that much needed?

User Threads and Kernel Threads

Before we actually explain what Project Loom is, we must understand what a thread in Java is. I know it sounds really basic, but it turns out there’s much more to it. First of all, a thread in Java is called a user thread. Essentially, what we do is that we just create an object of type Thread, and we pass in a piece of code. When we start such a thread here on line two, this thread will run somewhere in the background. The virtual machine will make sure that our current flow of execution can continue, but this separate thread actually runs somewhere. At this point in time, we have two separate execution paths running at the same time, concurrently. The last line is joining. It essentially means that we are waiting for this background task to finish. This is not typically what we do. Typically, we want two things to run concurrently.
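The slide being described is not reproduced in this transcript. What follows is only a minimal sketch of the create, start, join sequence, not the speaker's original code. The class name ThreadBasics and the method computeInBackground are hypothetical names chosen for illustration.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical illustration, not the code from the original slide.
class ThreadBasics {

    // Runs a small task on a separate thread and waits for its result.
    static int computeInBackground() {
        AtomicInteger result = new AtomicInteger();

        // Create an object of type Thread and pass in a piece of code.
        Thread thread = new Thread(() -> result.set(6 * 7));

        // From here on, the task runs concurrently with the calling thread.
        thread.start();

        try {
            // Joining: wait for the background task to finish.
            thread.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt flag
            throw new IllegalStateException("interrupted while waiting", e);
        }
        return result.get();
    }

    public static void main(String[] args) {
        System.out.println(computeInBackground());
    }
}
```

Joining immediately after starting, as here, leaves the calling thread idle while it waits, which is why the talk notes that this is not what we typically do.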

This is a user thread, but there’s also the concept of a kernel thread. A kernel thread is something that is actually scheduled by your operating system. I will stick to Linux, because that’s probably what you use in production. With the Linux operating system, when you start a kernel thread, it is actually the operating system’s responsibility to make sure all kernel threads can run concurrently, and that they are nicely sharing system resources like memory and CPU. For example, when a kernel thread runs for too long, it will be preempted so that other threads can take over. It can also more or less voluntarily give up the CPU so that other threads may use it. It’s much easier when you have multiple CPUs, but almost always, you will not have as many CPUs as there are kernel threads running. There has to be some coordination mechanism. This mechanism lives at the operating system level.

User threads and kernel threads aren’t actually the same thing. User threads are created by the JVM every time you say new Thread().start(). Kernel threads are created and managed by the kernel. That’s obvious. This is not the same thing. In the very prehistoric days, in the very beginning of the Java platform, there used to be this mechanism called the many-to-one model. In the many-to-one model, the JVM was actually creating user threads, so every time you said new Thread().start(), the JVM was creating a new user thread. However, all of these threads were actually mapped to a single kernel thread, meaning that the JVM was only utilizing a single thread in your operating system. It was doing all the scheduling, making sure your user threads were effectively using the CPU. All of this was done inside the JVM. From the outside, the JVM was only using a single kernel thread, which means only a single CPU. Internally, it was doing all this back and forth switching between threads, also known as context switching, for us.

There was also this rather obscure many-to-many model, in which case you had multiple user threads, typically a smaller number of kernel threads, and the JVM was doing the mapping between all of these. However, luckily, the Java Virtual Machine engineers realized that there’s not much point in duplicating the scheduling mechanism, because an operating system like Linux already has all the facilities to share CPUs between threads. They came up with a one-to-one model. With that model, every single time you create a user thread in your JVM, it actually creates a kernel thread. There is a one-to-one mapping, which effectively means that if you create 100 threads in the JVM, you create 100 kernel resources, 100 kernel threads that are managed by the kernel itself. This has some other interesting side effects. For example, thread priorities in the JVM are effectively ignored, because the priorities are actually handled by the operating system, and you cannot do much about them.

It turns out that user threads are actually kernel threads these days. To prove that that’s the case, just check, for example, the jstack utility, which shows you the stack traces of your JVM. Besides the actual stack, it shows quite a few interesting properties of your threads. For example, it shows you the thread ID and the so-called native ID. It turns out these IDs are actually known by the operating system. If you know the operating system’s built-in utility called top, it has a switch, -H. With the -H switch, it actually shows individual threads rather than processes. This might be a little bit surprising. After all, why does this top utility, which is supposed to show which processes are consuming your CPU, have a switch to show you the actual threads? It doesn’t seem to make much sense.

However, it turns out, first of all, that it’s very easy with that tool to show the actual Java threads. Rather than showing a single Java process, you see all Java threads in the output. More importantly, you can actually see the amount of CPU consumed by each and every one of these threads. This is useful. Why is that the case? Does it mean that Linux has some special support for Java? Definitely not. It turns out that not only are user threads on your JVM seen as kernel threads by your operating system; on newer Java versions, even thread names are visible to your Linux operating system. Even more interestingly, from the kernel’s point of view, there is no such thing as a thread versus a process. Actually, all of these are called tasks. This is just a basic unit of scheduling in the operating system. The only difference between them is a single flag that you set when creating a thread rather than a process. When you’re creating a new thread, it shares the same memory with the parent thread. When you’re creating a new process, it does not. It’s just a matter of a single bit when choosing between them. From the operating system’s perspective, every time you create a Java thread, you are creating a kernel thread, which means that, in some sense, you’re actually creating a new process. This may give you some idea of how heavyweight Java threads actually are.

First of all, they are kernel resources. More importantly, every thread you create in your Java Virtual Machine consumes around 1 megabyte of memory, and it’s outside of the heap. No matter how much heap you allocate, you have to factor in the extra memory consumed by your threads. This is actually a significant cost every time you create a thread, and that’s why we have thread pools. That’s why we were taught not to create too many threads on our JVM, because the context switching and memory consumption will kill us.
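To make the thread pool point concrete, here is a minimal sketch, assuming a fixed-size pool from java.util.concurrent, in which many small tasks share a handful of threads instead of each getting its own. The class name ThreadPoolSketch and the method sumOfSquares are hypothetical, and the pool size is an arbitrary choice for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical illustration of reusing a few threads for many tasks.
class ThreadPoolSketch {

    // Submits `tasks` small jobs to a pool of `poolSize` threads and sums the results.
    static int sumOfSquares(int tasks, int poolSize) {
        ExecutorService pool = Executors.newFixedThreadPool(poolSize);
        try {
            List<Future<Integer>> futures = new ArrayList<>();
            for (int i = 1; i <= tasks; i++) {
                final int n = i;
                // Each task is queued; only poolSize threads ever exist.
                futures.add(pool.submit(() -> n * n));
            }
            int sum = 0;
            for (Future<Integer> future : futures) {
                sum += future.get(); // wait for each task's result
            }
            return sum;
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt(); // preserve the interrupt flag
            throw new IllegalStateException("interrupted while waiting", e);
        } catch (ExecutionException e) {
            throw new IllegalStateException("task failed", e);
        } finally {
            pool.shutdown(); // release the pooled threads
        }
    }

    public static void main(String[] args) {
        // 100 tasks run on just 4 threads.
        System.out.println(sumOfSquares(100, 4));
    }
}
```

With this shape, the number of threads (and the per-thread memory cost described above) is bounded by the pool size rather than by the number of tasks.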

Project Loom – Goal