Month: November 2022

Omni Faces 4.0 Changes Minimal Dependency to Java 11, While Removing Deprecated Classes

MMS • Olimpiu Pop

Article originally posted on InfoQ. Visit InfoQ

Almost five years since its previous major release, OmniFaces 4.0 has been released after a long series of milestones that included a “Jakartified version of 3.14 with a couple of breaking changes” following the release of Jakarta EE 10. Besides the minimum requirements and breaking changes, new utility methods have been added and omnifaces.js is now sourced by Typescript rather than vanilla JavaScript.

The biggest considered advantage of the change to TypeScript is its ability to transpile the exact same source code to an older EcmaScript version such as ES5 or even ES3 for ancient web browsers. The intent is to do the same for Jakarta Faces’ own faces.js. Targeted at ES5, omnifaces.js is compatible with all major web browsers.

OmniFaces 4.0 is compatible with the Jakarta Faces 4.0 and Jakarta Faces 3.0 specifications from Jakarta EE 10 and Jakarta EE 9.1, respectively, with the minimum required version of Java 11. The Components#addFacesScriptResource() method was added for component library authors who’d like to be compatible with both Faces 3.0 and 4.0. It will allow the component developer to support both Faces 3.0 jakarta.faces:jsf.js and Faces 4.0 jakarta.faces:faces.js as resource dependencies. The method exists because it is technically not possible to use a variable as an attribute of an annotation such as @ResourceDependency.

Besides the increase in the minimal version of Java, other minimum dependencies have changed as well: Java 11, Jakarta Server Faces 3.0, Jakarta Expression Language 4.0, Jakarta Servlet 5.0, Jakarta Contexts and Dependency Injection 3.0, Jakarta RESTful Web Services 2.0 and Jakarta Bean Validation 3.0. In other words, OmniFaces 4.0 is not backwards compatible with previous versions of these dependencies because of the compiler-incompatible rename of the javax.* package to the jakarta.* package.

In the current version, everything that had been marked under the @Deprecated annotation in version 3.x has been physically removed. For instance: the tag ; and the WebXml.INSTANCE enumeration has been replaced with the WebXml.instance() and FacesConfigXml.instance() methods.

The built-in managed beans, #{now} and #{startup}, will now return an instance of the Temporal interface rather than the Java Date class. The framework still supports the time property, as in #{now.time} and #{startup.time}, in a backwards-compatible manner. Additionally, it provides two new convenience properties: instant and zonedDateTime as in #{now.instant}, #{now.zonedDateTime}, #{startup.instant} and #{startup.zonedDateTime}.

The Callback interfaces, dating back to Java 1.7, which had replacements available in Java 1.8, are now annotated under @Deprecated. For example, Callback.Void has been replaced by Runnable, Callback.Returning has been replaced by Supplier, Callback.WithArgument has been replaced by Consumer and Callback.ReturningWithArgument has been replaced by Function. Utility methods in Components and Events have been adjusted to use the new types.

Even if the changes in OmniFaces 4.0 are not that revolutionary, this has been a long-awaited version after the stretched milestone releases. Having a more current minimum dependency and changing to TypeScript, might provide interesting options to component builders relying on it.

Java News Roundup: WildFly 27, Spring Release Candidates, JEPs for JDK 20, Project Reactor

MMS • Michael Redlich

Article originally posted on InfoQ. Visit InfoQ

This week’s Java roundup for November 7th, 2022 features news from OpenJDK, JDK 20, OpenSSL CVEs, Build 20-loom+20-40, Spring Framework 6.0-RC4, Spring Boot 3.0-RC2, Spring Security 6.0-RC2, Spring Cloud 2021.0.5, WildFly 27, WildFly Bootable JAR 8.1, Quarkus 2.14.0 and 2.13.4, Project Reactor 2022.0, Micrometer Metrics 1.10 and Tracing 1.0, JHipster Lite 0.22.0 and Camel Quarkus 2.14 and 2.13.1.

OpenJDK

JEP 432, Record Patterns (Second Preview), was promoted from Candidate to Proposed to Target status for JDK 20. This JEP updates since JEP 405, Record Patterns (Preview), to include: added support for inference of type arguments of generic record patterns; added support for record patterns to appear in the header of an enhanced for statement; and remove support for named record patterns.

JEP 433, Pattern Matching for switch (Fourth Preview), was promoted from Candidate to Proposed to Target status for JDK 20. This JEP updates since JEP 427, Pattern Matching for switch (Third Preview), to include: a simplified grammar for switch labels; and inference of type arguments for generic type patterns and record patterns is now supported in switch expressions and statements along with the other constructs that support patterns.

The next step in a long history of addressing the inherently unsafe stop() and stop(Throwable) methods defined in the Thread and ThreadGroup classes , has been defined in JDK-8289610, Degrade Thread.stop. This proposes to degrade the stop() method in the Thread class to unconditionally throw an UnsupportedOperationException and deprecate the ThreadDeath class for removal. This will require updates to section 11.1.3 of the Java Language Specification and section 2.10 of the Java Virtual Machine Specification where asynchronous exceptions are defined.

JDK 20

Build 23 of the JDK 20 early-access builds was also made available this past week, featuring updates from Build 22 that include fixes to various issues. Further details on this build may be found in the release notes.

For JDK 20, developers are encouraged to report bugs via the Java Bug Database.

OpenSSL

OpenSSL, a commercial-grade, full-featured toolkit for general-purpose cryptography and secure communication project, has published two Common Vulnerabilities and Exposures (CVE) reports that affect OpenSSL versions 3.0.0 through 3.0.6 that may lead to a Denial of Service or Remote Code Execution.

CVE-2022-3602, X.509 Email Address 4-byte Buffer Overflow, would allow an attacker to use a specifically crafted email address that can overflow four bytes on the stack.

CVE-2022-3786, X.509 Email Address Variable Length Buffer Overflow, would allow an attacker to create a buffer overflow caused by a malicious email address abusing an arbitrary number of bytes containing the “.” character (decimal 46) on the stack.

BellSoft has reported that OpenJDK distributions, that include Liberica JDK, are not affected by these vulnerabilities as they use their own implementation of TLS. Developers are encouraged to upgrade to OpenSSL version 3.0.7.

Project Loom

Build 20-loom+20-40 of the Project Loom early-access builds was made available to the Java community and is based on Build 22 of JDK 20 early-access builds. This build also includes a snapshot of the ScopedValue API, currently being developed in JEP 429, Scoped Values (Incubator). It is important to note that JEP 429, originally named Extent-Local Variables (Incubator), was renamed in mid-October 2022.

Spring Framework

The fourth release candidate of Spring Framework 6.0.0 ships with new features such as: support for the Jakarta WebSocket 2.1 specification; introduce the DataFieldMaxValueIncrementer interface for SQL Server sequences; and introduce a variant of the findAllAnnotationsOnBean() method on the ListableBeanFactory interface for maintenance and potential reuse in retrieving annotations. There were also dependency upgrades to Micrometer 1.10.0, Micrometer Context Propagation 1.0.0 and Jackson 2.14.0. More details on this release may be found in the release notes.

The second release candidate of Spring Boot 3.0.0 features changes to /actuator endpoints and dependency upgrades to Jakarta EE specifications such as: Jakarta Persistence 3.1, Jakarta Servlet 6.0.0, Jakarta WebSocket 2.1, Jakarta Annotations 2.1, Jakarta JSON Binding 3.0, and Jakarta JSON Processing 2.1. Further details on this release may be found in the release notes.

The second release candidate of Spring Security 6.0.0 delivers: a new addFilter() method to the SpringTestContext class which allows a Spring Security test to specify a filter; the createDefaultAssertionValidator() method in the OpenSaml4AuthenticationProvider class should make it easier to add static parameters for the ValidationContext class; and numerous improvements in documentation. More details on this release may be found in the release notes.

Spring Cloud 2021.0.5, codenamed Jubilee, has been released featuring upgrades to the sub-projects such as: Spring Cloud Kubernetes 2.1.5, Spring Cloud Config 3.1.5, Spring Cloud Function 3.2.8, Spring Cloud Config 3.1.5 andSpring Cloud Openfeign 3.1.5. Further details on this release may be found in the release notes.

Red Hat

Red Hat has provided major and point releases to WildFly and Quarkus.

The release of WildFly 27 delivers support for Jakarta EE 10, MicroProfile 5.0, JDK 11 and JDK 17. There are also dependency upgrades to Hibernate ORM 6.1, Hibernate Search 6.1, Infinispan 14, JGroups 5.2, RESTEasy 6.2 and Weld 5. WildFly 27 is a compatible implementation for Jakarta EE 10 having passed the TCKs in the Platform, Web and Core profiles. Jakarta EE 8 and Jakarta EE 9.1 will no longer be supported. InfoQ will follow up with a more detailed news story.

WildFly Bootable JAR 8.1 has been released featuring support for JDK 11, examples having been upgraded to use Jakarta EE 10, and a remote dev-watch. More details on Bootable JAR may be found in the documentation.

Red Hat has released Quarkus 2.14.0.Final that ships with: support for Jandex 3, the class and annotation indexer; new Redis commands that support JSON, graph and probabilistic data structures; and caching annotations for Infinispan. Further details on this release may be found in the changelog.

Red Hat has also released Quarkus 2.13.4.Final featuring: a minimum version of GraalVM 22.3; dependency upgrades to JReleaser 1.3.0 and Mockito 4.8.1; and improvements such as support programmatic multipart/form-data responses. More details on this release may be found in the changelog.

On the road to Quarkus 3.0, Red Hat plans to support: Jakarta EE 10; MicroProfile 6.0; Hibernate ORM 6.0; HTTP/3; improved virtual threads and structured concurrency support based on their initial integration; a new gRPC server; and a revamped Dev UI. InfoQ will follow up with a more detailed news story.

Project Reactor

Project Reactor 2022.0.0 has been released featuring upgrades to subprojects: Reactor Core 3.5.0, Reactor Addons 3.5.0, Reactor Pool 1.0.0, Reactor Netty 1.1.0, Reactor Kafka 1.3.13 and Reactor Kotlin Extensions 1.2.0.

Micrometer

The release of Micrometer Metrics 1.10.0 features support for: Jetty 11; creating instances of the KeyValues class from any iterable; Kotlin Coroutines, allow for different metric prefixes in the StackdriverMeterRegistry class; and a message supplier in the WarnThenDebugLogger class to reduce String instance creation when the debug level is not enabled.

The release of Micrometer Tracing 1.0.0 features: establishing the Context Propagation library as a compile-time dependency to avoid explicitly having to define it in the classpath; support for RemoteServiceAddress in Sender/Receiver contexts; a handler that allows tracing data available for metrics; and setting an error status on an OpenTelemetery span when recording an exception.

JHipster Lite

JHipster Lite 0.22.0 has been released featuring an upgrade to Spring Boot 3.0, a new PostgreSQL dialect module; a refactor of the AsyncSpringLiquibaseTest class; fix the dependency declaration of the database drivers and developer tools; and remove the JPA properties that do not alter defaults.

Apache Software Foundation

Maintaining alignment with Quarkus, version 2.14.0 of Camel Quarkus was released that aligns with Camel 3.19.0 and Quarkus 2.14.0.Final. It delivers full support for new extensions, CloudEvents and Knative, and brings JVM support to the DSL Modeline. Further details on this release may be found in the list of issues.

Similarly, Camel Quarkus 2.13.1 was released that ships with Camel 3.18.3, Quarkus 2.13.3.Final and several bug fixes.

MMS • Sergio De Simone

Article originally posted on InfoQ. Visit InfoQ

Meta has been at work to port their Android codebase from Java to Kotlin. In the process, they have learned a number of lessons of general interest and developed a few useful approaches, explains Meta engineer Omer Strulovich.

Meta’s decision to adopt Kotlin for their Android apps was motivated by Kotlin advantages over Java, including nullability and functional programming support, shorter code, and the possibility of creating domain specific languages. It was also clear to Kotlin engineers that they had to port to Kotlin as much of their Java codebase as possible, mostly to prevent Java null pointers from creeping into the Kotlin codebase and to reduce the remaining Java code requiring maintenance. This was no easy task and required quite some investigation at start.

A first obstacle Meta engineers had to overcome came from several internal optimization tools in use at Meta that did not work properly with Kotlin. For example, Meta had to update the ReDex Android bytecode optimizer and the lexer component of syntax highlighter Pygments, and built a Kotlin symbol processing (KSP) API, used to create Kotlin compiler plugins.

On the front of code conversion proper, Meta engineers opted to use Kotlin official converter J2K, available as a compiler plugin. This worked quite well except for a number of specific frameworks, including JUnit, for which the tool lacks sufficient knowledge to be able to produce correct conversions.

We have found many instances of these small fixes. Some are easy to do (such as replacing isEmpty), some require research to figure out the first time (as in the case of JUnit rules), and a few are workarounds for actual J2K bugs that can result in anything from a build error to different runtime behavior.

The right approach to handle this cases consisted in a three-step pipeline to first prepare Java code, then automatically run J2K in a headless Android Studio instance, and finally postprocess the generated files to apply all required refactoring and fixes. Meta has open sourced a number of those refactorings to help other developers to accomplish the same tasks.

These automations do not resolve all the problems, but we are able to prioritize the most common ones. We run our conversion script (aptly named Kotlinator) on modules, prioritizing active and simpler modules first. We then observe the resulting commit: Does it compile? Does it pass our continuous integration smoothly? If it does, we commit it. And if not, we look at the issues and devise new automatic refactors to fix them.

Thanks to this approach, Meta has been able to port over 10 million lines of Kotlin code, allowing thus the majority of Meta Android engineers to switch to Kotlin for their daily tasks. The process also confirmed a number of expected outcomes, including shorter generated code and unchanged execution speed. On the negative side, though, Kotlin compiler proved significantly slower than Java’s. This opened up a new front for optimization by using KSP for annotation processing and improving Java stub generation and compile times, which is still an ongoing effort.

Do not miss the original article about Meta’s journey to adopt Kotlin if you are interested in the full detail.

Kubecost Open Sources OpenCost: An Open Source Standard for Kubernetes Cost Monitoring

MMS • Mostafa Radwan

Article originally posted on InfoQ. Visit InfoQ

Kubecost recently open sourced OpenCost, an open source cost standard for Kubernetes workloads. OpenCost enables teams to operate with a single model for real-time monitoring, measuring, and managing Kubernetes costs across different environments.

OpenCost introduces a new specification and an implementation to monitor and manage the costs in Kubernetes environments above version 1.8.

InfoQ sat with Webb Brown, CEO of Kubecost, at KubeCon+CloudNativeCon NA 2022 and talked about OpenCost, its relevance to developers, and the state of Kubernetes cost management.

InfoQ: Can you tell us more about OpenCost and its significance?

Webb Brown: We’ve come together with a group of contributors to build the first open standard or open spec for Kubernetes or container-based cost allocation and cost monitoring. We’ve worked with teams at Red Hat, AWS, Google, New Relic, and others. We open sourced it earlier this year and contributed it to the CNCF. OpenCost was accepted by CNCF and is a sandbox project.

We think this is so important because there’s no agreed-upon common language or definition for determining the cost of a namespace or a pod or a deployment, ..etc. We see a growth in the amount of support with this community-built standard to converge on one set of common definitions.

Today, We have a growing number of integrations. We just had the fourth integration launch this week and have a lot more in the works. It’s been amazing to see the community come together and see this as a commonly agreed-upon definition.

InfoQ: What OpenCost enables for this ecosystem and what integrations are delivering for end-users?

Webb Brown: We see cost metrics going to a lot of different products whether it is Grafana or other FinOps platforms such as Vantage which recently launched OpenCost cost monitoring support for EKS.

We have seen lots of adoption and gotten positive feedback. There’s a lot more we want to do. I think that’s just representative of what open source allows us to do. We have lots of integrations and data and we’re ready to take it to new and exciting places.

InfoQ: What is the state of Kubernetes cost management today and where do you think we are heading?

Webb Brown: I think it’s helpful to go over the history. When we started the Kubecost open source project in 2019, more than 90% of the teams we surveyed reported not having accurate cost visibility into Kubernetes clusters.

Last year, CNCF did a study and that number was about 70%. Today we think we’re closer to 50%. Now We see more and more teams have visibility, whereas again, a year or two ago, most teams were in a black box. Today, I think it is about giving teams accurate and real-time cost visibility on all their infrastructure.

Now we’re moving into what we believe is phase two, In which, we have this great visibility, how do we make sure that we are running infrastructure efficiently? How do we optimize for the right balance of performance, reliability, and cost with applications and organizational goals in mind?

That is super exciting for us. Again, we think about cost as an important metric on its own, but one that’s deeply tied to all these other things. We are seeing more and more teams enter that phase two and we are working closely with thousands of them at this point.

InfoQ: Are there any plans to integrate with other cloud providers or vendors?

Webb Brown: Today, we have support for AWS, Azure, and GCP, as well as on-prem clusters and air-gapped environments. We plan to add support for a couple of other cloud providers soon. I believe support for Alibaba is going to be next and I expect it will be available this year. And we’re in talks with a handful of other vendors to support OpenCost as well as Kubecost.

InfoQ: You recently announced that Kubecost is going to be free for unlimited clusters, Can you tell us more about that?

Webb Brown: When we started Kubecost, soon after leaving Google five years ago, we expected that the number of clusters for a small team would tend to be pretty small, three, four, or maybe fewer. That number of clusters has grown way faster than we expected. What we saw were small teams that were saying we’ve twenty-five clusters.

We thought KubeCost’s original free product that could be installed on one cluster would be sufficient for small teams. Recently, we’ve decided we want to bring our product free to an unlimited number of clusters so that teams of all shapes and sizes can get cost visibility and management solutions.

Kubecost builds real-time cost monitoring and management tools for Kubernetes. OpenCost is a vendor-neutral open source project for measuring and allocating infrastructure and container costs.

Users can get started with Kubecost for unlimited individual clusters free of charge through the company’s website.

MMS • Andrew Lau

Article originally posted on InfoQ. Visit InfoQ

Subscribe on:

Transcript

Shane Hastie: Hey, folks. Before starting today’s podcast, I wanted to share that QCon Plus, our online software development conference, returns from November 30 to December 8. Learn from senior software leaders at early adopter companies, as they share their real world technical and leadership experiences, to help you adopt the right patterns and practices. Learn more at qconplus.com. We hope to see you there.

Shane Hastie: Good day, folks. This is Shane Hastie for the InfoQ Engineering Culture podcast. Today, I have the privilege of sitting down, across many miles, with Andrew Lau. Andrew, you are the CEO of Jellyfish, and probably a good starting point is, who’s Andrew, and what’s Jellyfish?

Introductions [00:51]

Andrew Lau: Shane, thank you for having me here. It’s great to talk to you and your community, and thanks for letting us share my story around. Okay, so who am I? Gee. So, I’m hailing from Cambridge, Massachusetts, right across the water from Boston. I’m actually a Bay Area, California native, who’s been in the more volatile weather for the last 25 years.

But to know me, I was trained as a software engineer, I came to the East Coast of the US for school, and I had no intention of staying. It wasn’t the cold winters that I sought, but life happened, is the actual answer. Transit engineer… Put my finger, almost by accident, into the startup scene. Met my current co-founders of Jellyfish 22 years ago, and I can talk to that in a second. But that’s what actually got me to both be in the startup scene, and stay here, geographically, where I am.

And so, I’ve gone on the journey from being an engineering manager, learning how to do pre-sales, pro services, engineering management, to find myself running big engineering teams, to eventually, entrepreneuring, and finding my way, and growing teams and businesses along the way. I probably wear multiple hats in life, doing all those things. And for fun, to know me personally, gee, I play tennis. And the last few years, a lot of people have been baking bread. I’ve been working on my, well prior life I was a barbecue judge, so I’ve actually been working on my Texas brisket game. So some people have been sitting at home watching bread rise. I’ve been sitting at home watching my smoker run. So that’s knowing me a little bit on this.

And to your second question, what is Jellyfish? Well, Jellyfish is a software company, five years old. Jellyfish makes an engineering management platform to really help leaders of engineering teams kind of help their teams get more successful and connected to the business.

We’ve been really lucky and during the last few years and I say that lucky in the sense that, look, it’s been a hard few years for all of us and I come here today with empathy. Shane, I hope you and your family are doing well. I hope listeners, your families are as well. It’s just been a tumultuous last few years, but the silver lining for us is just the growth that we’ve seen. We were just a handful of people before the pre Covid times and today we’re almost couple hundred. And so that change has been really a positive part of it, but it’s also been hectic, amazing and crazy to get the chance to work with such amazing people along the way.

Shane Hastie: An interesting background there and definitely we’ll probably delve into some of those things, but let’s start with something that came out of Jellyfish is this state of engineering management report. Tell us a little bit about that.

State of Engineering Management Report [03:25]

Andrew Lau: So, we have this unique seat from where we’re sitting where we have the privilege, and I say privilege, by working with hundreds of companies, the leading edge, the upper echelon of teams that are really defining the way that we do engineering at scale as an industry. And we have the privilege of working and helping with them and learning from them. But also that because of this we actually can see trends move through the actual teams, right?. And because of this earlier this spring, we put out an annual report, a state of engineering management and we’re really able to get a feel of what’s changing in these teams and what’s relevant along the way. And so those are… That really is the nature of our report and it’s because we’re sitting in this place where we can actually see what’s actually happening across the teams.

Shane Hastie: You can see what happens across the teams. So what are some of those insights? What are the things that you were seeing in this report?

Andrew Lau: Shane, with the state of engineering manage report, we actually look across the hundreds of companies we’re actually talking to, which allows us to see how hundreds of thousands of engineers are doing their work. And in doing so, we can actually see the trends in the world of engineering management leadership. And so the things that are actually in that report tie into how we can look at data driven teams actually outpace like data deficient teams, those that actually are focusing on innovation, quality and speed to market. Those are all areas where we actually can see the correlation moving that those actually focus on those things can actually accelerate those pieces.

If you cared a lot about making sure you invest in innovation, we can see how teams that actually focus on that are able to drive that up. We actually see teams that actually are focused on quality and actually how the implications of quality. Where poor quality actually influences the amount of time being spent actually repairing and maintaining and how you can actually manage that going forward once you start looking at it.

And the last area that I would actually say is actually even things are speed to market. So the time it takes from the time you conceive something to the time you actually can get it out there and you can see how teams, when they actually start looking at this data and how they behave differently across there. The report’s available, you’re welcome to actually pull it and take a look. A lot of great insights there. And I think part of it is because it’s a sampling of being able to see what trends play out in these kind of contemporary organizations that are really are driving the way forward for all of us. And so we’re privileged to actually get a chance to actually both see that and learn from these folks marching forward.

Shane Hastie: So what does it really mean to be data driven as an engineering manager today?

What does being data driven really mean? [05:57]

Andrew Lau: Great question. So, look, I grew up as an engineer, eventually made my way to engineering management. I would say that in my experience, a lot of it is what makes one successful. Well you get the knows of what’s actually happening on your team. You get the knows of when things are stuck, who actually needs help when a project’s actually in trouble and how can you actually nudge it forward. You also find out the knows of actually what’s happening from the business side and what they need.

I actually think after years of that, those are the things that you instinctually learn, which is great. However, I think instinct alone often isn’t sufficient because a few things at scale as you get bigger, as your team gets bigger, instinct alone can be dangerous. And look, we all know we’re wrong sometimes. We have gut feels. We have a quick read. I think we should feel that muscle. I think that’s an asset for all of us.

But sometimes you should also double check with data in that stuff. And I think especially in this day and age, I think we have to be careful of actually not realizing that we might actually have biases if we’re not careful. We all think we know who the best engineer is that helps save these projects. But sometimes we should lift the covers a little bit and realize is someone’s stuck? Are they not able to get through something because the PR’s are not getting helped? Is someone not actually getting the actual ramp up that they actually need?

And so when I come back to data driven, I think I’m going to paraphrase it wrong, but there’s an old analogy which is you can’t improve what you don’t measure, right? And I think data is part of your tool belt.

So what do we all bring as leaders? We bring, well we’re in technology space. We bring our technology chops forward and our own experiences when we’re actually doing it. We bring our own war stories and experiences that we’ve been through on the good days and the bad days and team members and friends that have had done well or actually hit walls. We bring all those with us. Those who alone are the kind of historical tool belts. I think what we should bring forward too is actually bring some data to validate your gut feel and assumptions along the way. Because in some ways as we scale, all of us… Data brings that kind of the bionic tool belt to help us make decisions better, right? Either because it validates that your gut is actually right or sometimes when we’re lost in not knowing where our team is needing help to bring kind of insight into figuring out, hey where should we turn? Where should we actually dive into? Or actually where should we actually correct the business? Because it doesn’t understand what’s actually happening.

And so to me data is actually just part of contemporary leadership, which is because we’re awash in it, we actually have to integrate in the way that we do things today. So that’s what data driven means to me.

Shane Hastie: You make the point there that we are awash in data making sense of those floods.

Making sense of the floods of data available to us [08:35]

Andrew Lau: Yeah, I was talking to someone recently, we have KPIs, we have all the KPIs, all the KPIs. We have all the data and I’m like well if you have all the data, the problem is then you almost have nothing because we’re so awash in it today, right? It’s easy to say you have all the data. I think it’s actually important to figure out what it is to look at. And I think when you actually think about this actually you should…

The area that we all can help each other on is actually understanding what to look at. Because today you could open any charting package, you could open any data stream, we’re awash in it and the question is what do you pay attention to? And I think for us there’s a few things. We’ve learned from some experienced individuals, people that we work with that like which things they pay attention to and we aim to actually make those data streams more accessible to those that are actually earlier in their pathway that don’t know those things.

We also are able to look at the corpus of all the things that are happening and kind of bring some data science to actually highlight what what’s correlating in these different factors, which things are actually the pieces that actually you should pay attention to. And so when I think about our role in this and all of our roles, it’s not that we need more data for data’s sake. We need to figure out which data pieces we should be looking at, which things we need to actually refine and which things we actually need to focus on. And then last bit is to make it easy to get to .but I think that’s the journey that we’re all on together to actually evolve this industry forward

Shane Hastie: As an engineering manager, which are the data gems, the things that really can help me understand what’s going on with my team and how do we make this data safe?

Andrew Lau: Okay, so my turn to actually turn the tables, Shane. What does safe mean to you? Because I feel like that’s a leading question. I want to make sure I get from you what safe means to you? ‘Cause you’re teaching us here,

Shane Hastie: How do we use the data in a way that the insights are useful and the people who the data is from have trust that it’s being used in a way that’s not going to be used against me. We go back to the 1940’s and before. W. Edwards Deming give a manager a numeric target and he will meet it even if it means killing the organization to do so.

Andrew Lau: You’re right. I would say a couple things to that. So this is fun by the way. Look, I think we can be terrible leaders with or without data. I’ll start with there first. So it is not because of data that actually makes us terrible leaders. And I actually think of this first and foremost when we think about leadership, core leadership, data’s an enabler, data’s not the thing.

Good leadership is not dependent on data [11:08]

I often talk about, before we talk about anything when it comes to data, we as a leader we need to talk about culture. And culture can be this soft, squishy word. Sometimes you can talk about enduring, it’s the unwritten rules. But I think when it comes to technology teams, it’s like actually culture actually implies what matters to us. And when I say us as a team, us as people delivering and you actually phrased it as against, I mean look frankly any company that actually is talking about against probably isn’t in the right place anyways because why are we all here at the companies we’re at? I don’t know anybody that actually wants their company to fail or at least I hope not.

So when I look at this, it’s all about making sure that if there is conflict it’s because we’re not understanding each other. We’re not rowing in the same direction proverbially.

The importance of alignment and clarity of purpose [11:57]

And so go back to culture. I actually think the most important part in leadership is to make clear what we care about and why. When I see disagreements actually happening and technology companies. I first started, is there a misalignment and lack of understanding because who the hell actually wants to sink their ship? I don’t know, that’s a crazy person in my view. More than likely I look at this and I see teams where the interns are working hard, jamming late into the night on a legacy code base fixing bugs of something they didn’t even make. And all the while the business is screaming, why isn’t this new feature delivered because we promised it yesterday.

Okay, well let me pause on that part of it. There’s a fundamental misalignment there. These guys and gals are staying late at night fixing a piece of software they didn’t actually fix. The business is yelling about delivering this other thing. Both of those are valuable for the company clearly, but somehow both parties doesn’t know what the other one wants and why. They’re not actually having a conversation. And so back to culture, I think it’s important to actually align on what matters to us. So Shane, you and I, we could care like, hey we need to just get things on the floor out as fast as possible. We might care about lead time and velocity like nobody’s business because all we want is get stuff out there. That’s all that matters. So if that were the case then I would say we should agree on that and we should actually make sure that we’re on the same page around why lead time and velocity of things that we actually look at and we care about.

Okay, it could be that you and I are like, hey we really got to spend time on innovation, this new thing. We need to put away everything else we’re doing. We need to agree on that. And so we should make sure that we’re protecting our time to work on those things. And so we should measure to make sure what else are we doing and let’s make sure we get it off the plate.

We should have this conversation first before we talk about any data because you’re right, we are awash with data in the world and data can take you many places if you don’t talk where you’re going first, right? It is but a tool to accomplish what you’re doing. I come back to it’s part of your Swiss army knife. It’s part of what you do once you’ve decided as a team, as a leader where you want to go. It is not magic on its own but I think it’s actually just a superpower to enhance what you’re already going in.

Shane Hastie: Shifting gear a tiny bit. Looking back at your career, you’ve gone from as you said, engineer to engineering manager to business person to entrepreneur. What’s that journey and what are the different perspectives that you take at each step in that journey?

The journey to engineering manager and entrepreneur [14:27]

Andrew Lau: I appreciate you asking because you and I were kibitzing a second ago about the languages we all started in. I started with C and you said you’re a COBOL guy, right? And so both of these things are probably lost annals of time. I don’t even know if any of my team members today actually can even talk about a Malik or a Calic anymore in these things. But who knows? I did start as an engineer. I was a systems engineer. I lived in kind of network stacks and the good old days of making sure my memory was free and rolled out. And look, I really enjoyed those days. Probably if I find some moment of retirement that probably is the life of me that can go back to that kind of hands on bit of it. I think that there’s something cathartic about actually working there in that way.

But I actually then eventually moved on to engineering management and I found that role just different. I actually think that when people go into engineering management, I think people think that’s a step forward. I don’t think it’s a step forward for everybody. I think there are times when it’s not fun and I actually look at this like, I’m a dad now of two girls and I kind of think of actually management as parenting. You don’t know you’re going to love it till you’re in it. We can intellectualize it all day long but you have to learn whether you find derivative satisfaction in leading others or when you’re coding yourself, you make a thing, you do a thing in that part of it. And so you kind of learn your way through that.

And then as I kind of progressed in my career, I learned around kind of large scale management, more executive management and I learned that the tillage of actually needing how to translate to business people that actually don’t understand how engineering actually works and that actually becomes the role.

Earlier my career I used to think that oh, you get into these roles and then you get to tell people what to do. Well you know, don’t tell anyone what to do actually in reality. You spend a lot of time bridging is the role and you’re trying to connect the dots and making two pieces actually fit together.

And then entrepreneuring on the other side, on the pure business side, having been through the other journey, I’m actually seeing that, look, there are business implications in this stuff and I empathize with not understanding on the other side. And I guess the benefit of going through all those journeys is I get to look at my other self and actually realize I didn’t actually understand the perspective from the other side of the table at the time. And I try the best I can to realize, to speak to myself back then to understand two sides of it. This is what I need from the other side of the table.And I think to me that’s actually, I joke with my engineer leader either the best job or the worst job. The worst job is that I know the challenges he’s facing and I could tell him, did you do this? Did you didn’t do that? Okay, I know I can be a pain in the ass.

On the flip side, I come here with empathy on it and I understand that your stereotypical business CEO is a pain in the butt yelling this, this, this. And at the very least I try to come back and I should contextualize it. Hey, this is the why. This is what I saw when I was in that seat the other side and I empathize with you. It is a thing but this is a business reality and our job together is to connect those pieces, right?

And I think at the same time I look back to my old engineer self and I would say, hey, there were days that I hated too. I hated working on somebody else’s code that was horrible and then we needed to ship a date on this thing and I hated time commitments that someone else made. But at the same time with that empathy I can actually say, well if a time commitment was made it was because of this and this is why the business needs this and if we don’t do this, this is what’s actually happening and dear engineer tell me what else could we actually do differently to make sure that we get this or tell me what’s going to give. And the engineer that’s stuck late at night working on bugs, I see you and I understand that sucks and that wasn’t your code and that’s not your fault. And so please bring it up to me because the business would rather actually have you working on new features and great things.

So surface that for me so that we can actually talk about what we can actually put away so you can get to those other things. I think that to me being in all these seats has actually helped me actually see the other side. And I hope and you can go connect with my engineering team to figure out, am I the good or bad version of me? But I try to show up to actually figure out can I speak in a way that I actually can bridge both sides of it. At least to both contextualize why I’m saying what I’m saying and try to elicit what’s holding us back from doing those things. And I think being in multiple seats helps us see the constraints of each party along the way.

Shane Hastie: Thank you for that. So culture. It’s a wide ranging term. With Jellyfish with the organizations that you have been a part of and being a founder for, how do you define the culture and maintain the culture?

Understanding culture [18:54]

Andrew Lau: First of all, culture’s a hard word to define. You called it out. On the worst of times people think of it as foosball tables and yoga balls. That’s not culture. We can actually talk about the artifacts and outcomes that it is the unwritten rules. It’s the fabric that holds us together. These are analogies but they’re not actually defining it. So if you ever find a way to describe to me culture in succinct words, Shane, please email me and teach me how to talk about it.

I take it for granted that culture’s vividly important. It is not the foosball tables and yoga balls. I think that’s a media cleansed way of what culture actually is. I actually think of it as the way that we behave. It’s the norms that we actually do things. I do like that it’s the unwritten rules. So we don’t actually have lots of process. It’s process without process. It’s the values, it’s all of those things.

I do think it’s really important. It’s what keeps high performing teams heading in the same direction without a lot of direction and berating. And I actually think that I am definitely a student of it more than anything I know it’s what kept me at companies over time saying that we actually have to develop. So to me personal philosophy, I don’t think culture can be mandated. And we’re definitely in the middle of learning this over the last couple years. So maybe we were actually have a handful of people. Maybe you could pretend that one could mandate culture like dear team, have fun, right? Okay, well guess what? That doesn’t work. Last I checked, no one has fun when you say to have fun. And this is extra true actually at the couple hundred people that we’re actually at now. And so the other thing to recognize that culture can be stagnant, it actually has to evolve because the thing that’s stuck in the past is never going to come with a new team. And teams have to own their culture. And so for me philosophically, culture has to be refreshed and built by the teams. It can’t be proclaimed. It actually has to be organically developed at the team level. And my role in this is to actually shepherd it, to nudge it to be the guardrails. I can tamp down things that are bad but I can still click a fire in things that are good but the kernel of it actually has to start on the team first.

Leadership’s role is to spot things that are good and give them fuel [20:52]

And so my role in this is to guide to spot things that are good and give it fuel. And fuel can be attention, time could be money, encouragement, teaching. Those are all things that I look for because I actually think that’s the most important part of actually that culture does evolve. Culture is present and culture is owned by the team themselves, not proclaimed from somebody from far away.

And so to make it super tangible, I’ll tell a silly story. Really early on in the company someone came in and I don’t know if you’ve ever seen, there’s a YouTube show called Hot Ones about eating chicken wings. And I know this is going to take your podcast in funny directions here, but it’s about chicken wings. And I remember a woman, I’m not going to out her ’cause she’s awesome. She just joined the team and she came to work one day. She’s like, I saw this thing about the show of Hot Ones. I think we should do it at work. And then the conversation was like, no I don’t think we should, I don’t think I’m going to do any work. I think you should do this. What I didn’t mean by that is, it is not like it’s on her, it’s actually it’s about her. And so the encouragement was actually like you should do it.

And so behind the scenes, yes we make sure that we’re buying the hot sauces. We make sure we’re picking up the wings at the grocery store for her and Falafels in her case because she’s vegetarian too and we make sure that she’s sending out the emails to nudge people to show up and to remind them. But she shows up and she throws this amazing party. Everyone had a great time, burnt their faces off, remembers it for years to come. And she did this amazing and trivia. But the key part is it’s about her. It’s about that culture. It’s about the initiative there. And that’s a very minor, silly story. It’s about chicken wings but it’s actually about how we play a role, all of us and actually encouraging each other to bring it forward, to be yourself and to bring it to the team and let it blossom in that way. And I know most of your content on your podcast is actually about engineering and teams and that stuff and we’re talking about chicken wings. But I actually think culture is a big part of what we do as leaders and teams. That’s the way we think about it at our house.

Shane Hastie: Another part of culture is rituals. How do we define build and allow these rituals to be positive experiences for our teams?

Rituals as a part of culture [22:56]

Andrew Lau: I think rituals go part of culture. It’s part of the lore of being there, part of why you’re there. It also makes you feel at home, makes you feel part of the team. It’s part of the identity along the way. I think they are important. And I go back to my matching point around culture, which is I think they evolve organically.

Rituals are almost the best things that we remember from our past that we keep doing forward. I think can’t pre-think a ritual before it actually happens. So you can’t proclaim culture from on high, it doesn’t work. Rituals are the things that happen to survive because people like them. I’ll share a fun story. One of our rituals at our team is that if you look from the outside you would say, oh you guys do an all meeting every Thursday at 4:00 PM. I was like, yeah, we kind of do but we don’t call it that. It has a strange name. It’s called demos. Okay, you’re like demos, what is that?

So it’s an outgrowth. It’s an outgrowth of when we were under 10 people, we used to sit around a big red table. We were probably seven folks we’re all working on different things and we’d sit around a table and we used to demonstrate what we’re actually working on with each other just every Thursday. So we sit down, sit around table, grab a beer maybe and just pass the screen share around and just show what we’re actually working on. And back then it was probably more about code, more about product. And that evolved as we grew too as we actually added a sales team, a finance team, marketing team. And it evolved to being something that we actually, I took turns sharing what are we doing in different departments. I remember actually saying, hey, here’s a new legal contract we actually showed and my co-founder, David, used to make fun of me. You just showed a word document that doesn’t count. But it was actually good.

People actually found value in actually learning what each of us actually working on. And there are times when the world is tough that we take a moment to actually share that hey, here’s our bank account balance, we’re going to be okay team. But either way it became a thing that we rotated. And so we still continue that ritual today at 200 people and we now go department to department and we actually pass the mic and each week we describe what we’re actually working on. And for my turn when I get to do it, we still have tradition which is every board meeting we do, which is every quarter. We then run the Thursday after a board meeting. We run the same slides with the whole team and for two reasons. One because I believe it’s deeply aligning to actually understand what we’re marching towards and why as a team. We execute better for it.

But also back to the term culture. I think we have a culture of curiosity in our team. We have a culture of actually curiosity, especially around entrepreneurship. Not everybody wants to be an entrepreneur tomorrow, but they’re all really curious about it. And so we’re there to demystify what actually happens. When I was earlier in my career, it’s like what happens in these boards? It’s really scary, complicated. It’s not. We have to approve things. We have to legal stamp things. But it is I think valuable to actually unfurl those things. And so that’s an example of a ritual that we took from when we were smaller to when we’re actually bigger because people still wanted it. We still check in every Thursday. It’s like, do you still want this? We still keep doing that, right? You made me think of recently I’ve been unfurling, where did this ritual come from?

And so my co-founders and I met 23 years ago. They hired me in 1999 and I realized that this whole demos concept actually goes back to a thing we used to do. That ritual back at that company we used to call dogs and demos. We would do hot dogs and demos, same concept. And I realized that that actually concept itself was actually born in a company that I was actually with when I crossed paths. My co-founder, Dave, in 1999 called Inc. to me, which became Yahoo’s search when they used to demos on a monthly basis, similar thing, grab a beer and watch a demo.

And I learned as I pulled that thread, that ritual actually goes all the way back to the mid nineties to SGI. So silicon graphics, so Shane, you and I can date ourselves that we can nod at each other and actually know what SGI was. Those were the super coolest workstations back in the day. It is super cool to, for me to actually realize that these rituals do carry on from generation to generation just because when things work, you keep doing them right? And we learn from that part of it. And that’s how rituals actually established because we propagate forward what worked. And you can’t proclaim that beforehand. You have to do it post facto.

Shane Hastie: Andrew, some really interesting conversations and great stuff here. If people want to continue the conversation, where do they find you?

Andrew Lau: Well, you can find me on LinkedIn. You can find me on Twitter. I guess the handles amlau, A-M-L-A-U and please come check us out jellyfish.co. We’re hiring. Anyone actually that has contributions around culture or metrics or thinking of engineering leadership and how that actually makes a dent in the world. We’d love to meet and trade notes. We’re here as students of all of us and love to learn from you. So, Shane would love the chance to actually meet some of your crew here and really appreciate you having us here.

Shane Hastie: Thank you so much.

Mentioned

.

From this page you also have access to our recorded show notes. They all have clickable links that will take you directly to that part of the audio.

MMS • Steef-Jan Wiggers

Article originally posted on InfoQ. Visit InfoQ

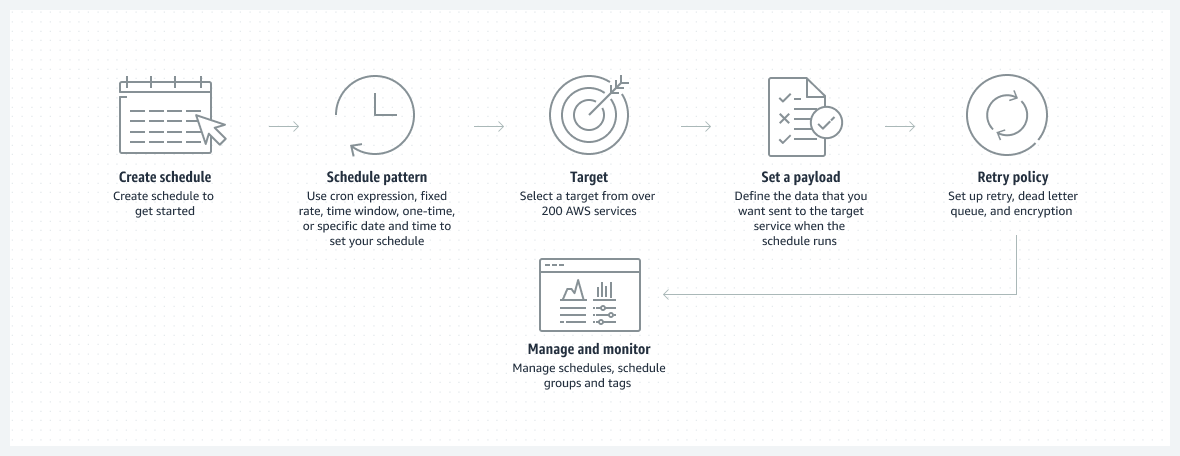

AWS recently introduced Amazon EventBridge Scheduler, a new capability in Amazon EventBridge that allows organizations to create, run, and manage scheduled tasks at scale.

Amazon EventBridge is a serverless event bus that allows AWS services, Software-as-a-Service (SaaS), and custom applications to communicate with each other using events. Since its inception, the scheduler is the latest addition to the service, which has received several updates, such as the schema registry and event replay.

Marcia Villalba, a senior developer advocate for Amazon Web Services, explains in an AWS Compute blog post:

With EventBridge Scheduler, you can schedule one-time or recurrently tens of millions of tasks across many AWS services without provisioning or managing the underlying infrastructure.

Source: https://aws.amazon.com/eventbridge/scheduler/

Developers can create schedules using the AWS CLI that specify when events and actions are triggered to automate IT and business processes and provide scheduling capabilities within their applications. EventBridge Scheduler allows developers to configure schedules with a minimum granularity of one minute.

Yan Cui, an AWS serverless hero, tweeted:

It’s great to finally see an ad-hoc scheduling service in AWS. My only nitpick is that it’s granular to the minute, not an issue in most cases, though.

The capability provides at least once event delivery to targets, and you can create schedules that adjust to different delivery patterns by setting the window of delivery, the number of retries, the time for the event to be retained, and the dead letter queue (DLQ). An example is:

$ aws scheduler create-schedule --name SendEmailOnce

--schedule-expression "at(2022-11-01T11:00:00)"

--schedule-expression-timezone "Europe/Helsinki"

--flexible-time-window "{"Mode": "OFF"}"

--target "{"Arn": "arn:aws:sns:us-east-1:xxx:test-chronos-send-email", "RoleArn": " arn:aws:iam::xxxx:role/sam_scheduler_role" }"

More specifics on the configuration are available in the user guide.

Developers in the past have used other means. A respondent in a Reddit thread mentions:

I was already using AWS Rules for a scheduler, but this scheduler they’ve made doesn’t seem like it can do everything that the rules can.

However, Mustafa Akın, an AWS community builder, expressed in a tweet:

I have waited for this service a long time. Many of us had to write custom schedulers because of this, we use SQS with time delays, it works but not ideal. Now we can delete some code and rejoice in peace.

Yet received a response from Zafer Gençkaya, an engineering manager, OpsGenie at Atlassian, stating:

One more lovely vendor-lock use case- that’s we have been waiting for for so long. It makes lots of backend logics redundant! I’d love to see exact-once delivery support, too — of course with additional fee.

Amazon EventBridge Scheduler is currently generally available in the following AWS Regions: US East (N. Virginia), US West (Oregon), US East (Ohio), Europe (Ireland), Europe (Frankfurt), Europe (Stockholm), Asia Pacific (Sydney), Asia Pacific (Tokyo), and Asia Pacific (Singapore). Pricing details of the service are available on the pricing page.

MMS • Audun Fauchald Strand Truls Jorgensen

Article originally posted on InfoQ. Visit InfoQ

Transcript

Strand: Autonomy is the cornerstone of team based work. Teams should include all the competence and all the capabilities they need to solve the problems they own. When they have that, they should have the freedom and trust to make the decisions about their domain, without asking others for permission. We work at NAV, the largest bureaucracy in Norway. When you’re that big, it’s also valuable to have alignment across these teams. Most things should be done in mostly the same way.

Background

Jørgensen: My name is Truls, and the guy that just started talking is Audun. We are principal engineers at NAV. We have been working here for five and six years. Both of us have been working as software developers for over 15 years. Maybe it’s because of that, or maybe it’s because we are often seen together, colleagues tend to compare us to Statler and Waldorf, the silly old Muppets on the balcony of The Muppet Show.

Strand: Here we are, we are programmers working in IT. In this talk, we’re going to talk about how we achieved alignment in the technology dimension. We think that the tools, techniques we’ve used can be useful for others in other dimensions as well.

Jørgensen: Let’s start with a really short introduction of NAV. It’s Norwegian Labor and Welfare Administration. We got 20,000 employees across the country, making it the largest bureaucracy in Norway. The mission for NAV is to assist people back into work. We also provide benefits to Norwegians from before they are born until after they’re dead. In total, it sums up to one-third of the state budget.

IT at NAV (Norwegian Labor and Welfare Administration)

Let’s talk about IT at NAV. Since NAV was established in 2006, IT was always slow. By slow, I’m talking four huge coordinated releases a year slow. As you know, slow means risky. By risky, I mean 103,000 development hours in a single release risky. It doesn’t get more risky than that. That has changed. That changed in 2016, and what a change that has been. Instead of releasing four times a year, we now release over 1300 times per week. This has been a massive transformation. This transformation is the context for this talk. This graph is saying something about speed, but perhaps not much on flow. We have more graphs for you.

What happened in 2016, was that we got a new CTO, and he insisted that NAV should reclaim technical ownership, and start to insource. As you see, this graph correlates with the previous one. NAV used to think of IT as a support function, and as a consequence, NAV didn’t have any in-house developers at all. Instead, NAV tended to lean on consultants to get that boring IT stuff done. In fact, I started as the very first developer back in 2016. Today, we have almost 450 internal developers. We think it’s worth making a point there. If you want to optimize for speed and fast flow, you must leave behind the mindset that IT is a support function. In order to succeed with the digitalization, NAV had to consider IT as a core domain. This third graph shows a very similar curve, so absolutely no surprise at all. We didn’t have any product teams at all before 2016. We used to be organizing projects, but today teams are considered the most important part of our organization, and by Conway’s Law, the architecture.

What We Left Behind

Strand: These changes mean that we have left something behind. Basically, before 2016, NAV was organized by function. That is organized by function where each function is specialized and solves a very specific task. Such a design yields a busy organization but the flow suffers because of all the internal handovers. An organization that optimizes for flow should further aim to reduce the handovers and need to accept the cost of duplicating functions, reducing the handover risk by domain instead in the cross-functional teams.

What We Adopt and Prefer

Jørgensen: What we adopt and prefer when optimizing for fast flow. That’s really the topic for the rest of this talk. As we have seen, we have adopted a team first mindset at NAV, but as we shall see, the surrounding organization struggles to figure out how we can set those teams up for success. While high performing cross-functional teams demand autonomy, it is also valuable for NAV to have alignment across these teams, but getting alignment in the age of autonomy, that’s pretty hard work. We have come up with some techniques to achieve that alignment that we’re going to share with you. First, let us start with the teams and why they demand autonomy in NAV. As we have seen, we have over 100 teams, and each team is playing a part directly or indirectly in the lives of the people of Norway.

Strand: We have one team working on the unemployment benefit, another with the parent key benefits. We have teams owning and coding the front page on nav.no, and the application platform that everything runs on. One team we worked a lot with lately is a team behind the sickness benefit. This doesn’t really translate to relevant English, but the team is called team Bamble. Bamble is an island off the west coast of Norway, and one of the central team members of the team comes from that island. That’s why they chose that name. Team Bamble is a proper interdisciplinary team. There’s programmers, legal experts, communication experts, and designers, and other kinds of members. They’re mature, having worked again for a few years, having practiced continuous learning for a long period of time. In theory, they know everything they need to know to figure out how to do this, how to solve all the problems that need to be solved to create a system that automates the full chapter of Norwegian law, controlling the payment of the sickness benefit to our users.

Jørgensen: People come to this team with different perspectives, with different voices. One voice, the developer voice suggests that the most important thing the team should deliver is a sustainable system. Because the system are modeling an important part of a welfare state that is constantly changing. The software we create should be adaptive to change. Another voice argues that the most important thing the team should deliver is a system that makes the case handling more efficient. Then we have a third voice that wants the team to focus on a more user friendly service for the sick. Finally, the fourth voice claiming that the most important thing that the team could do is to create a system that does a better job with compliance than the old system for sickness benefit. Coming to think of it, all of these voices make sense. Ideally, we want all of those perspectives implemented in the new system. These voices don’t always form a beautiful choir. When push comes to shove, which perspective wins, which voice is the loudest? To top it off, when the team funding comes from one of the voices alone, that makes it even harder to balance their perspectives. Getting their perspectives is really important.

Let’s run quick examples on why. Providing support for the sick. That’s nothing new. The previous system is over 40 years old and tailored for a human caseworker. This new system is instead tailored for computers, and aims to do a fully automated calculation of the benefits. In some ways, this is where the human brain meets the machine. It’s also where theory meets practice. Coming to think about it, the rule set is the theory, but those rules has been put to test by caseworkers for over 40 years. That raises some really interesting questions, such as the practice has in some cases served as a Band-Aid for inconsistencies in the rule set. How do we transform that practice to a new system? Also, during that time, different practices throughout the country has emerged. Which of those practices should be considered to be the correct one? What does that mean for the practices that we now consider wrong? These are indeed complex questions to answer. It doesn’t matter how good you are in Kotlin. In order to be able to come up with answers to these kinds of questions, the team must be cross-functional: developers, designers, compliance experts, and legal experts must work together.

Strand: With all these people in the team, the team requires autonomy to function well. The essence of what Truls just said is that this is basically the job, but NAV wants to automate the sickness benefit. This is what this means. Navigating a long line of problems without a clear solution, but many different forces trying to shape the solution to the problem. This isn’t really about how fast a programmer you are or how fast the team can produce code. It’s about how fast the team can learn about the problems and the forces affecting them. Trying to find solutions that are sustainable, understandable, legal, and sound technical. For the team to be able to do that they need to work together over time. They need trust and psychological safety, and good relationships between the team members. Seldom has this quote by our hero, Alberto Brandolini, been more correct, “Software development is a learning process, working code is a side effect.” After thinking about this for a little while, if you believe this code, how does that affect how you work? What’s important if learning is more important than creating code? How should you change how you work? You need to put structures in place outside of teams as well as inside, that help the teams to learn as efficiently as possible. In such a team, with all these different team members from all these different departments, each team brings a different perspective to the table. The departments need to find ways to structure the learnings being done in the teams, and finding ways to get this knowledge back into all the teams that work at NAV.

The Surrounding Organization

Jørgensen: Let’s talk about the surrounding organization. We had just briefly touched upon it. Remember those graphs earlier? What does the organization behind this change look like? Cross-functional teams demand not only autonomy, but they need that the surrounding organization operate as support. That is not how it really is at NAV. NAV is not just a system of teams. NAV is also Norway’s largest bureaucracy, and we have two competing organization designs. Both are struggling to find a way to coexist. There’s obviously a lot of tension between the cross-functional teams, and the traditional hierarchy, which is very much alive. Everyone agrees to some extent that cross-functional problems are best solved in cross-functional teams. In order to be responsible, teams need autonomy. Empowering the teams means that the different departments such as the IT department, legal, and the benefit departments are losing influence. Everybody wants to go to heaven, but nobody really wants to die. In NAV, changing the formal organization to be a better fit for a system of teams is a really long running process. We cannot sit and wait it out, although this process is currently running. This is the reality. We have over 100 teams that is deploying to production all the time, and they are cross-functional, and they are competent. We have also this large bureaucracy surrounding those teams struggling with the question, what could the departments do to set the teams up for success? Now we will discuss what IT at NAV has done to set the teams up for success to increase the speed and flow.

Alignment in the Age of Autonomy

Strand: We really think that the other departments both in NAV and maybe even other places, can learn from what we have done. First, we need to talk a little bit about how alignment and autonomy comes together. Most of you have probably seen this drawing. It’s from one of our software development process heroes, Henrik Kniberg. There’s always a Kniberg drawing to visualize the concept you want to explain to someone else. It’s easy from seeing this drawing where you want to be. It’s a quadrant so we want to be in the upper right-hand quadrant, also there’s a green circle showing the desired place to be. What is Kniberg saying here? He’s saying that you need teams that have a clear understanding of what problems to solve, and the constraints and opportunities to connect it to that problem. You also want to give the teams a large amount of freedom on how to solve this problem. This is very easy to say, we need aligned autonomy, but getting there isn’t necessarily as easy as just saying those words. How do you get there? What do you do differently tomorrow to get closer to the desired state and the best working? You really need a plan. You need people to work on the measures of that plan. Now we’re going to talk about our measures, what our plan looked like. I probably like to say that we planned all the history before we started. We have tried things and we’ve tried to keep doing what worked and stopped doing things that didn’t work.

Jørgensen: Something that didn’t work was NAV’s strategy for getting alignment in the years before 2016. That used to be, to write principles on the company wiki. In the age of autonomy, that’s some crazy definition of done. You will write that principle on the wiki and then you can get frustrated over lazy employees not following what you just wrote. We have seen this strong indication of sloppy leadership repeating at several organizations, not just NAV. The message is the same. This isn’t good enough. If you aim for speed and fast flow in your organization, writing stuff on the company wiki is not enough, if you want to direct the flow somewhere. Alignment in the age of autonomy needs another approach. You need to find each team and talk with them with respect for their autonomy. Make the team want to do it, and provide helpful guidance along the way. Always adapt it to their context and their abilities, and be aware to not exceed their cognitive capacity. You do this for each team.

Strand: What you’re saying is you can just put this principle on the company wiki and then we’ll be done.

Jørgensen: I guess we are done. No, we should discuss some examples instead. First, we will discuss two descriptive techniques, and then two normative techniques that we have seen work good together.

Descriptive Technique – The Technology Radar

Strand: The most basic technique that we have is the technology radar. This is an internal technology radar just for NAV. We want the teams at NAV to use this to share the technologies they’ve tried, and the conclusions or the learnings they’ve made from those choices. The radar has traditional categories, just like the Thoughtworks radar, of assess, trial, adopt, and hold, and also another one called disputed. It’s implemented with a simple Slack channel, where anyone can make suggestions and attribute the discussions, and add that up in a database to show the conclusions and the data for what happened. If you really want to summarize our strategy with the technology radar in a tabloid way, you can say that when it comes to technology, everything is loud, as long as you broadcast your choices to the organization. This is basically our implementation of that principle.

It’s sometimes misunderstood as a list of allowed technologies. We think it is a curated list of the technologies used. We have a small group of editors that collect the suggestions and moderate the discussions. Sometimes the discussions are basically as good as the conclusions. One of my hobbies at NAV is to, for instance, say that we need Gradle in adopt and Maven in hold, or the other way around, just to observe all the interesting discussion that comes afterwards. Or maybe just say something about the Spring Framework, it works the same way. Basically, our radar shows the breadth of the technology landscape, but it also gives teams insight into what other teams are doing. This insight has a real effect on alignment. Because when one team can see what another team does, it’s easier for them to choose the same thing if the first team is happy with what they’ve been doing.

At the same time, this radar is very descriptive. It doesn’t have any normal development. It doesn’t say what’s allowed and not. That has a really interesting and helpful side effect of reducing or maybe even removing shadow IT. Because when teams can publish the choices they made without being yelled at for doing something illegal, it’s much easier for them to say what they have, and there’s no reason to hide choices that I think others will not like. All in all, this has been a really good thing for NAV. Sometimes the radar isn’t enough, we need to go into more depth on the topic.

Descriptive Technique – Weekly Technical Demo

Jørgensen: For this, we have the weekly technical deep dive. The weekly technical deep dive started small, back when we were less than 20 in-house developers. Now this arena serves as a weekly technical pulse throughout the organization, perhaps even more so during the pandemic. We’re now using Zoom, and it attracts over 400 people each Tuesday, and the recipe, that’s still the same. One team takes the mic and presents something they’re working on, something they’re proud of to all the other teams. The weekly demo is as the technology radar a descriptive technique for creating alignment. It’s really efficient for spreading good ideas across teams. It’s relatively low effort. It needs a dedicated and motivated group of people to arrange this every week. It’s low effort, but really high impact. That group is doing an incredible important job. They’re really changing the culture at NAV towards more openness, and more kindness, and more curiosity, and more learning each week. These are two descriptive techniques, time to discuss some more normative ones. This is the technical direction presentation, and we should spend a little more time on this one.

Technical Direction

Strand: This presentation is basically a complement to the more descriptive techniques that we talked about already. For those, the goal was to create more transparency and more discussion. With this presentation, technical direction, we want to be more normative and say what we as principal engineers believe to be the best way forward with different teams. This is perhaps a little more concrete things we do during [inaudible 00:22:21]. We spend a lot of time between the teams writing the architecture layer and translating between the layers. We also make it a target to spend some time coding in teams. Doing that makes it possible for us to aggregate and refine the experiences that teams have, as an input to our presentation. We also try to talk to and learn from other organizations, and have learnt a lot from people outside NAV as well.

Jørgensen: The presentation itself is a big Miro board, and each team gets their own copy of this, meaning that there are now over 100 copies of this somewhere. We usually start by telling why this presentation exists, and why we need a technical direction in NAV. It’s basically the gist of what we have been talking about so far, starting with this graph. Then we move on to tell each team our plan. We have held this presentation for over 100 times now. It is in Norwegian. The presentation exists to serve as a discussion material for each team. If the team needs some time after this session to think, we are more than happy to come back to discuss more. We can also provide training material for each topic and provide courses or time with subject matter experts, if the team wants. If the team wants part, it’s really important, because this is pull, not push.

Strand: NAV is very heterogeneous. We have teams with mostly new code, they’ve written all by themselves. We have teams that maintains almost 40-year-old code. It’s quite challenging to create a document or an opinion even that’s relevant for all of these different teams. Something that’s been a part of the solution from the beginning for some teams requires an incredible effort and is almost impossible to implement for other teams. Our way around this has been to try to describe what we want to happen as arrows. What should the teams move away from and what should they move towards? Then we can use that set of arrows as a background for discussion with the teams. Do they need to start to move away from the left side or should they aim to get all the way to the right side? Also, by being concrete and talking about what the team should stop doing, it makes it much more useful for the teams. It’s easier for people like us to create a list of good things, but actually saying what’s bad and what we want the team to stop doing is more difficult, but also more helpful for the teams.

Jørgensen: There are four categories of topics. It is data driven, culture, techniques, and sociotechnical.

Data Driven

Strand: NAV has a really unique position when it comes to data. We have so much data about our users but we still have a great potential in how we actually use this data. We want to become more data driven, both in how the teams work and operate, but also in the products and services we provide to our users. To achieve this, we need to think differently when it comes to data. How can we keep sharing data between our large product areas in NAV without creating the tight coupling that makes it impossible for teams to change independently? We are basically saying that sharing of data between teams should be done using streams instead of APIs or even the database. For analytical data, we are heavily influenced by the data mesh concept by Zhamak Dehghani. We have created a new data platform, and try to encourage teams to see analytical data as a product instead of a byproduct of the application they own.

Culture

Jørgensen: We consider the team to be the most important component in our system architecture. The culture category is also really important. As Audun mentioned on the technology radar part, we don’t want technology choices to lay in the shadows, we want transparency, and we want openness. We challenge the teams to try pair programming for internal knowledge sharing in order to not be dependent on the most experienced developers. Our point is that the whole team should benefit from knowledge sharing, even the most experienced developers. This is just our suggestion, and the team can come up with other ways to achieve that knowledge sharing goal, which is really the outcome. Also, we code in the open after great inspiration from Anna Shipman and government of UK. The last arrow is about sustainability. We have left a project mindset behind, and now we think that our projects are never done. Therefore, we shouldn’t race to a finish line that does not exist. Instead we should pace ourselves for the long run, and do health checks in the teams on a regular basis.

Techniques