Article originally posted on InfoQ.

Transcript

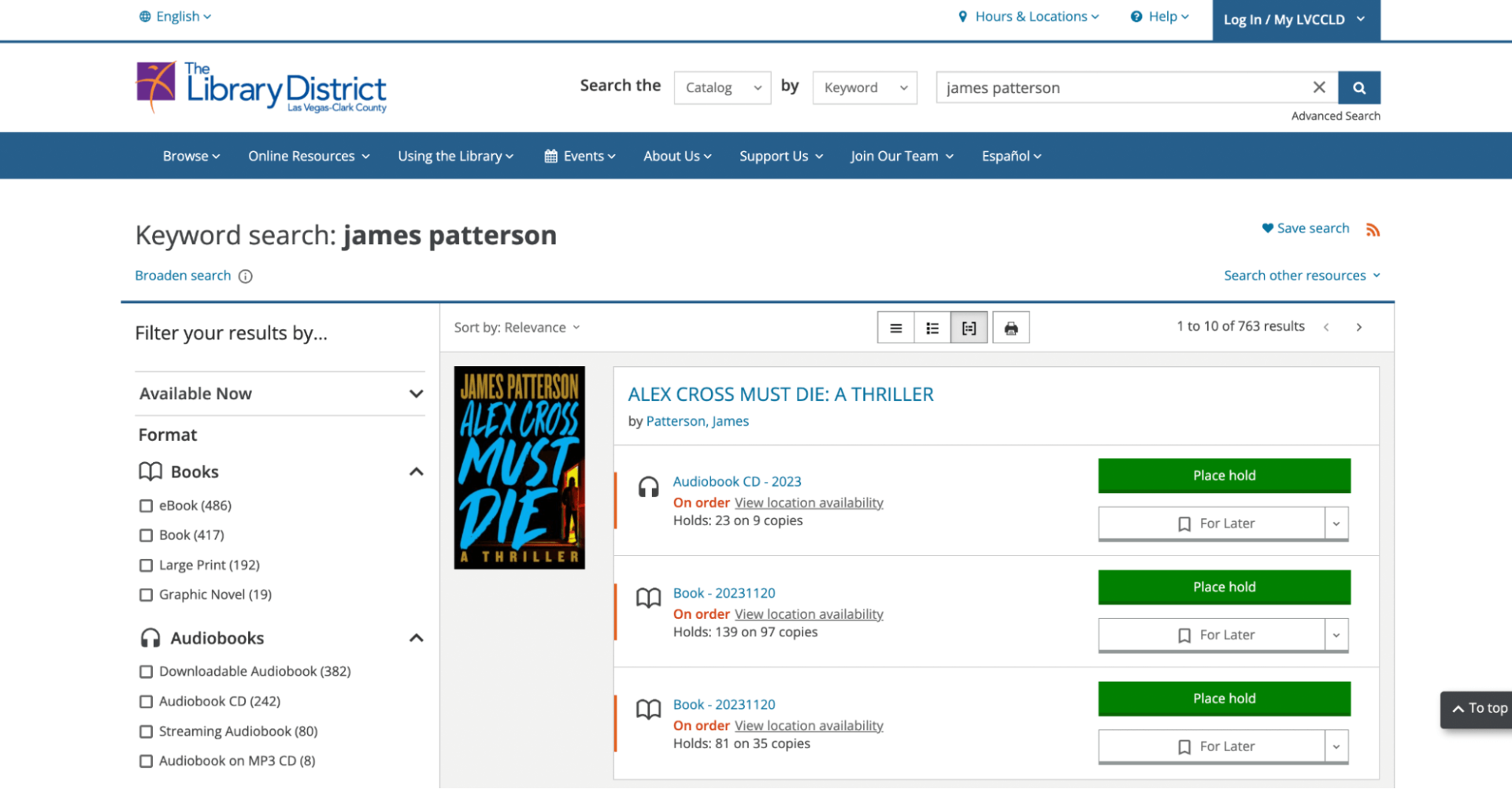

Michel: This is a classroom in 2020. Basically, a bunch of white names on a black background as a teacher is speaking into the void. This was a practical session, even though it wasn’t that practical, and the students were working on a computer graphics assignment. You see the people raising hands, these are Mac users. They all have a problem here. They have a problem because when I wrote the code base, students were all using the university computers, which were not Macs. That’s when I realized that I actually had to backport the whole code base, live, to an older version of OpenGL because macOS does not support the latest version. It’s actually even deprecating OpenGL altogether. That was a hard way to learn that portability is important. Portability is also not really easy when it comes to graphics programming, even for native graphics programming. OpenGL was for a long time a good API for doing portable graphics, but that’s no longer the case. We’ll first see how WebGPU is actually a good candidate to replace it, even for native GPU programming, not just for the web. Then I’m going to show a more detailed and more practical introduction to how to get started using WebGPU for native development, using C++ in this case. I’m going to finish with a point about whether it’s too early or not to adopt WebGPU, because the design process is still ongoing and unfinished. I think it’s ok, but we’ll see this in more detail.

Background

I do a lot of prototypes. I’m a researcher in computer graphics. In a lot of cases, I have to write little applications from scratch using real-time graphics, using GPU programming, so I often restart and always wonder whether the solution I adopted the last time is still the most relevant one. There’s a need to iterate really fast, so it’s not always a good option to go too low level, but I still need a lot of control over what I’m doing. I think this is quite representative of a lot of needs. The other interesting part is that I also teach computer graphics. For this, I’m always wondering, what’s the best way to get started with GPU programming, either for 3D graphics or for all the other things that a GPU can actually do nowadays, since it’s not limited to 3D graphics. For both of those reasons, I got interested in WebGPU and started writing this guide on how to use WebGPU, not especially for the web, but more focused on desktop programming. That’s what I was mainly focused on, even though as a side effect it will enable you to use this on the web once the WebGPU API is officially released in web browsers.

Graphics APIs

Our first point, graphics APIs. With graphics APIs like OpenGL or WebGPU, the first question is actually, why do we need such a thing to make 3D or to access the GPU? Then, what are the options? What is the problem? Why do we need a graphics API? I’m first going to assume that we actually need to use the GPU, because otherwise we don’t need a graphics API. Why could we need to use the GPU? Because it’s a massively parallel computing device and a lot of problems are actually massively parallelizable. That’s the case of 3D graphics. That’s also the case of a lot of simulations, physics simulations. That’s the case of neural network training and evaluation. That’s the case for some cryptographic problems as well. The other hypothesis I’m making is that we intend to have our code portable to multiple platforms, because if we don’t, we’ll make different choices and focus only on the target platform.

Why do we need a graphics API? Because the GPU is like another machine in the machine. It has its own memory. It’s a completely different device, and it actually communicates with the CPU just through a PCI Express connection, but each of them has its own life. It’s really like a remote machine. Think of it as if it was a server and the CPU is the client, if you’re used to the web, for instance. They’re far away and communicating between them takes time. Everything we do when we program in languages like C++ here, but also in most other languages, is describing the behavior of the CPU. If we want to run things on the GPU, we actually have to talk with the driver to communicate to the GPU what we expect it to be doing. It’s to handle this communication that we need a graphics API.

What are the options? There are a lot of different ways of doing this. There are some options provided directly by the manufacturers of the GPU, of the device itself, some other are provided by the operating system. Last, we’ll see portable APIs that try to be standard implemented by multiple drivers, multiple OSes. The vendor specific APIs, I’m not going to focus too much on these. For example, there is CUDA that is an NVIDIA specific API for general-purpose GPU programming. I’m also briefly mentioning Mantle, it’s no longer a thing, but it was at the base of Vulkan. When it started, it was an AMD specific API. For OS specific APIs, the well-known one is Direct3D. That’s been around for a lifetime, even though each different version of Direct3D is really different from the previous one. Direct3D 12 is really different from Direct3D 11, for instance, that was also different from the previous ones. That’s what is used. That’s the native graphics API for Windows and for the Xbox as well. If you target only those platforms, then just go for the DirectX API.

There is Metal for macOS and iOS. Same thing, but for Apple devices. It’s not that old, actually. It’s much more recent than DirectX. I’m briefly mentioning the fact that if you go to other kinds of devices, especially gaming consoles, you will also have some specific APIs for these that are provided by the platform. I’m mostly going to focus on desktop, because, often, we want something to be portable so that we don’t want to rewrite for those different APIs. We need portable APIs. For a long time, the portable API for 3D was OpenGL. OpenGL that also had some variants. One is OpenGL ES that was to target low-end devices like phones in particular. WebGL, which is basically a mapping of OpenGL ES to be exposed as a JavaScript API. There is also OpenCL, that was more focused on general-purpose GPU programming. OpenGL was focused on 3D graphics, OpenCL on non-3D related things. Actually, since with time both of those, the compute pipeline and the render pipeline actually got much closer, it’s really common to use both in the same application. It no longer made sense to have two different APIs, in my opinion. The new version of OpenGL or what was supposed to be the new version of OpenGL, it’s really different from where it was because OpenGL was always backward compatible with the previous versions. The new API that totally broke this and went much more low level is Vulkan. Vulkan is supposed to be as portable as OpenGL, but the thing is that Apple didn’t really adopt it because they wanted to focus on Metal and they didn’t want to support Vulkan. That’s why actually it becomes a bit complicated now to have a future-proof, portable way of communicating with the GPU. That’s where WebGPU, which is also a portable API, because it’s an API developed for the web, that must be portable, becomes really promising.

How do we get a truly portable API? That’s what I was just mentioning. If you look at some compatibility table, you see there’s actually no silver bullet. No API that will run on everything. Assuming we’re not using WebGPU yet, because that’s something that the developers of WebGPUs themselves were wondering, could we do like for WebGL, just map an existing API and have it exposed in JavaScript? Couldn’t really decide on one. The choice that had been made for WebGL was to take the common denominator, but that limits to the capabilities of the lowest end device. If we want access to more performance and more advanced features of the GPU, we need something different. It was a question of whatever the draft of WebGPU is, how exactly will the browser developers implement it in practice? That’s actually a similar question to the one we’re wondering if we do desktop GPU programming, how do we develop something that is compatible with all the platforms?

What they ended up deciding for the case of the browser was to actually duplicate the development efforts. On top, there is this WebGPU abstraction layer, this WebGPU API that has multiple backends. That’s valid both for the Firefox implementation and the Chrome implementation. They all have different low-level APIs behind, depending on the platform they’re running on. Since this is some effort, they actually thought about sharing this effort, making it reusable, even when what we’re doing is not JavaScript programming. If we’re doing just native programming, we can actually use WebGPU, because the different implementations of WebGPU are in the process of agreeing on a common header, webgpu.h, that will be a way to expose the WebGPU backend to native code, and then indirectly to use either Metal, DirectX, Vulkan, or others, depending on the platform. As a user of the WebGPU API, you don’t have to care that much about it. That’s what is really interesting here with WebGPU, and why we can really consider it as a desktop API for graphics as well. This model of having a layer that abstracts the differences between the backends is not something new, it’s actually something that is done in other programs, because a lot of real-life applications are facing the problem that we were facing at the beginning. Just taking a few examples, here for Unreal Engine, there is this render hardware interface (RHI) that abstracts the different possible backends. Here is another example in Qt. Qt is doing user interfaces so it also needs to talk with different low-level graphics APIs, so it also has such an RHI. One last example is a library developed by NVIDIA, even though I don’t know how much it’s used in practice, but it was also facing the same need and also proposing for others to reuse it.

A question is, among all those different render hardware interfaces, which one should we use? Would it be WebGPU, or this NVIDIA version, or the Unreal version, or the Qt version? A lot of them have been developed with a specific application in mind. Thinking of Qt and Unreal, for instance, they will have some bias. They will not be fully agnostic in the application. They’re really good for developing a game engine or for developing a UI framework. If you’re not sure, if you want to learn an interface that will be reusable in a lot of different scenarios, then it should be something that is more agnostic. Then, WebGPU seems to be a really good choice because it will be used by a lot of people since it will be the web API as well. There will be a lot of documentation and a powerful user base. That’s why I think it’s interesting to focus on WebGPU for this even though there exist other render hardware interfaces.

I’m just going to finish this part with a slight note about how things changed. If we compare it to the case of OpenGL, the driver had a lot of responsibility. That’s why actually, at some point, OpenGL became intractable. It was asking too much of the driver. Especially to ensure backward compatibility, the driver still has to support OpenGL 1.0, which is really old and no longer in line with the current architecture of modern GPUs. With this model of developing an RHI, the thing is that we decouple the low-level driver part from the user-facing interface, and they are potentially developed by different teams, because the driver here would be developed by AMD or NVIDIA, while the library behind the render hardware interface is developed by the Firefox team or the Chrome team. It’s a powerful way of sharing the development effort. A lot of modern choices for GPU programming will go in this direction.

If I recap, if we need portability and performance at the same time, we need to use some kind of RHI because, otherwise, we have to code the same thing multiple times. WebGPU is a good candidate for this because it is itself such an RHI. Since it’s domain agnostic, it has a lot of potential of being something that you can learn and reuse in a lot of different contexts. It’s likely future proof because it’s developed to last and it’s developed to be maintained for a long time by powerful actors, namely the web browsers. I think it’s a good bet. Plus, you get some bonuses with this. The first one is that I find it a reasonable level of abstraction. It’s organized in a more modern way than OpenGL. If you compare it to Vulkan, for instance, it is higher level, so a Hello Triangle doesn’t take thousands of lines of code. I really like using it so far. Also, since there are two concurrent implementations of this same API, we can expect some healthy competition here. Chrome and Firefox are challenging each other, and they will likely get something with really low overhead in the end.

WebGPU Native – How to Get Started

We can go now to the second part, if you want to do development with WebGPU now, how do we get started? First thing is to build a really small Hello World. Then I’m going to show the application skeleton. I’m not going to go into details of all the parts because there is the guide I’ve started writing to go further. Instead, I’m going to focus in a bit on how to debug things, because that’s also really important to be able to debug what we’re doing when we start with a new tool, especially since there is this dual timeline with the CPU on one hand, the GPU on the other hand, it can become tricky. How do we get started? It starts by just including this WebGPU header, of course. Then we can create the WebGPU instance. We don’t need any context. We don’t need a window for instance, to create this. One thing that we can see already is that when we create objects in WebGPU, it always looks the same. There is this create something function that takes a descriptor that is a structure that holds all the possible arguments for this object creation, because there can be a lot in some cases. Instead of having a lot of arguments in the create instance function, it’s all hidden in the descriptor for which you can set some default values in the utility function for instance. Then, you can check for errors and just display, that’s really minimal Hello World.

Here, we see two things. As I was saying, there is the descriptor. Keep in mind that descriptors always have this nextInChain field that is dedicated to the extension mechanism and that you have to set to a null pointer. The create instance function returns a blind handle, it’s a pointer basically. It’s really something that you can copy around without worrying about the cost of copying it because it’s just a number. There are also two other kinds of types that are manipulated through the API, some enum values and some structs that are just used to detail the descriptor, so to organize the things in the descriptor. It’s really simple. There are descriptors, handles, and just enums and structs that are details actually. All the handles represent objects that are created with a create something function, and there is always a descriptor associated. Simple.
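To make the descriptor and handle pattern concrete, here is a minimal sketch that imitates its shape without depending on any WebGPU implementation. In the real header the instance version of this pattern uses `WGPUInstanceDescriptor`, `wgpuCreateInstance` and `WGPUInstance`; everything named below (`ThingDescriptor`, `createThing`, `Thing` and so on) is a hypothetical stand-in written for illustration only.

```cpp
#include <cassert>
#include <cstdint>

// Hypothetical stand-ins that only mimic the shape of webgpu.h types.
// None of these names come from the real header.
struct ChainedStruct {
    const ChainedStruct* next;
    uint32_t sType;
};

// Descriptor: one struct that carries every creation argument.
struct ThingDescriptor {
    const ChainedStruct* nextInChain; // extension chain, nullptr when unused
    const char* label;                // optional debug label
    uint32_t size;                    // example creation parameter
};

// Opaque handle: copying it only copies a pointer.
typedef struct ThingImpl* Thing;

struct ThingImpl {
    uint32_t size;
};

// Utility function that fills a descriptor with default values.
inline ThingDescriptor defaultThingDescriptor() {
    ThingDescriptor desc = {};
    desc.nextInChain = nullptr;
    desc.label = "unnamed";
    desc.size = 1;
    return desc;
}

// "Create something" function: returns a null handle on failure.
inline Thing createThing(const ThingDescriptor* desc) {
    if (desc == nullptr || desc->size == 0) return nullptr;
    return new ThingImpl{desc->size};
}

inline void destroyThing(Thing thing) { delete thing; }
```

A caller fills the descriptor, calls the create function, and checks the handle against null, which is the same check the Hello World does on the instance.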

How do we build this in practice? That’s where it becomes tricky. Not that tricky, but not obvious, because the first question is, where is this webgpu.h header? Also, if we have a header that declares symbols, they must be defined somewhere, so how do we link to an implementation of WebGPU? As I was mentioning, contrary to other APIs, those are not provided by the driver. We have multiple choices. Either we use wgpu-native, which is based on the Firefox backend, or we use Dawn, which is the Chrome backend. The last possibility is a bit different, but we could also just use Emscripten, which provides the webgpu.h header but doesn’t provide any symbols because it doesn’t need to. It will just map the calls to the WebGPU API to JavaScript calls once it compiles the code to WebAssembly. I’m going to rule out Emscripten, because it’s a bit different, but keep in mind that it’s always a possibility. Something that I wanted when I started working on this was an experience similar to what I have when I do OpenGL, when I use something like Glad. It’s a really small tool. I can just download something that is not too big, and I link my application. It doesn’t take time to build. It doesn’t require a complicated setup. It doesn’t invade my build system. I was looking for this and I started comparing wgpu-native and Dawn. First, wgpu is Rust based, which is great, but not easy to build from scratch if I’m doing a C++ project. That was a bit of a concern for me at this point. Then I looked at Dawn. The thing is Dawn doesn’t have a standard build system either, it really needs some extra tools. It also needs Python, because it autogenerates some parts of the code. It was not obvious either how to build it from scratch. I’m not saying it’s not possible, but it’s not really easy to do. From the perspective of sharing this with students, for instance, or with people who are not experts in build systems and don’t want to have something complicated, that was also a concern.

I started working on stripping down a build of Dawn after generating the Python-based part. It wasn’t so straightforward. In the end, I provide a version that I’m satisfied with because it doesn’t need any extra tool besides CMake and Python. There’s still this extra Python dependency, but no longer the need for depot_tools, and it can just be included using FetchContent, for instance, or as a submodule. Still, it was taking time to build because that’s actually a big project. There are a lot of lines of code because it’s a complicated thing to have all those different backends. I kept on looking for ways to make this initial build much faster for the end user. One possibility was to provide a prebuilt version. I was thinking maybe of a build based on Zig that already had some scripts set up to prebuild static libraries. There was no MSVC version in this. I’m just providing the link in case you’re interested, https://github.com/hexops/mach-gpu-dawn. Since I started thinking about using a prebuilt version, I actually realized that the fact that wgpu is Rust based is no longer an issue in this scenario. I was happy to see that this project provides autogenerated builds at a regular pace.

The only thing was that there were some issues with naming and some binaries were missing sometimes, so I had to curate it a little bit. That’s why I ended up actually repackaging the distributions of WebGPU. I did two. One based on wgpu-native, the other one on Dawn, so that we can compare them on an equal footing. The goal was to have a clean CMake integration, something where I add the distribution, and then I just link my application to the WebGPU target and it just works. I also wanted this integration to be compatible with emcmake, to use Emscripten. I didn’t want too many dependencies. I didn’t want depot_tools to be a dependency for the end user. I wanted those distributions to be really interchangeable, so that at any point in time I can switch from the wgpu-native backend to the Dawn backend, because I want to compare them for benchmarking reasons, for instance, or because they’re not fully in line and I prefer the debug messages from Dawn but it was faster to develop at first with wgpu-native. That’s always something we can do when both are packaged in the same way. I did this for both. I also added a define to be able to tell the difference, because the WebGPU specification is still a work in progress and some things are still different. In the long run, I know this will not be needed, I will not have to share a repackaged version myself. For the time being, before it’s fully solved, this is something that can be quite helpful.

If we come back to this initial question of how to build this tool, this Hello World, we’re going to just download one of the distributions, any one of them, could be next to our main.cpp and add this really simple CMakeLists file, and we can build. Nothing crazy here, it’s just showing the address of the instance pointer. Since it’s not null, it means it worked. We’re able to use WebGPU, so what will the application look like? There will first be the initialization of the device, I’m going to focus a bit on this afterwards. Then we typically load the resources. This is allocating memory and copying things from the CPU to the GPU memory, textures, buffers, also shaders, which are programs executed on the GPU. Then we can initialize the set of the bindings that tells the GPU how to access the different resources because there are really different natures of memory in the GPU memory, so that it can be accessed through optimized path, depending on the usage we’re doing. This is something quite different with the way that CPU memory works. That plays an important role in the performance of the GPU programming.

Then you set up pipelines. This is something also specific to the GPU, because the GPU has this weird mix of fixed stages, where some operations are really hard coded in the hardware itself. You can only tune those by changing some options, but not really program them. There are also stages that are programmable, like the vertex shader, the fragment shader, and the compute shader if you’re using a compute pipeline. The pipeline object is something that basically saves the state of all the options that we set up for both fixed and programmable stages. It’s important to note that the shaders use a new shading language, WGSL, which is neither GLSL nor HLSL nor SPIR-V, even though for now it is still possible to use SPIR-V shaders even in the web browser. In the long run, this will be dropped, so make the move to learn WGSL. It’s not that different conceptually, even though it’s using a different syntax from the traditional shader languages.

Then comes the core of the application. You submit commands to the GPU, and the GPU executes shaders and runs pipelines. You can eventually fetch back some data from the GPU. I’m also going to detail this later. This is asynchronous, because, again, the communication between the VRAM and the RAM takes time. All the other operations that are issued from the CPU towards the GPU are fire and forget. You say, do something, and when the function returns on the CPU side, it doesn’t mean that it’s done, it just means that the driver received the instruction and will eventually forward it to the GPU. This is not a problem until you actually want to get some results back from the GPU, and that’s where some asynchronicity is needed. At the end, of course, you clean up, which is still a problem now, because there is a key difference between the wgpu and Dawn implementations when it comes to cleaning up resources. Optionally, if you’re doing graphics, there is also the swap chain to set up, which basically tells how the GPU communicates with the screen by presenting the frame buffer.
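The fire-and-forget behavior and the need to wait for results can be sketched with a toy model. The `FakeGpuQueue` class below is purely hypothetical and is not part of webgpu.h; it only assumes that submitted work runs later, when the backend gets a chance to make progress, and that a completion callback is the way to learn that a result is ready.

```cpp
#include <cassert>
#include <functional>
#include <queue>
#include <utility>

// Hypothetical model of a GPU queue, written for illustration only.
class FakeGpuQueue {
public:
    // Fire and forget: returns immediately, the work only runs later.
    void submit(std::function<void()> work) { pending_.push(std::move(work)); }

    // Asks to be notified once everything submitted so far has run.
    void onWorkDone(std::function<void()> callback) { pending_.push(std::move(callback)); }

    // Stand-in for the backend making progress: runs one pending item.
    // Returns false when nothing is left to process.
    bool tick() {
        if (pending_.empty()) return false;
        std::function<void()> item = std::move(pending_.front());
        pending_.pop();
        item();
        return true;
    }

private:
    std::queue<std::function<void()>> pending_;
};

// Submits work, then polls until the completion callback has fired.
inline int computeAndReadBack(FakeGpuQueue& queue) {
    int gpuResult = 0;
    bool done = false;
    queue.submit([&] { gpuResult = 42; });
    // Here gpuResult is still 0: submit() returned but nothing has executed.
    queue.onWorkDone([&] { done = true; });
    while (!done) {
        queue.tick(); // give the backend a chance to progress
    }
    return gpuResult;
}
```

The polling loop at the end is the part that a real application has to replace with whatever progress mechanism its backend offers.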

With all this, you can have this kind of application. Here is just an extract from the guide, where you load a 3D model and turn it around and have some really basic UI. There’s absolutely nothing here, but just to play with. Just for the case of graphics programming, really 3D rendering, I also had to develop some small libraries, one to communicate with GLFW, which is the library typically used to open a window. It’s just one file to add to your project. Also, here, I’m using the ImGui library for the user interface, which is really handy, but I had some issues with the WebGPU backend. There is this branch of mine that you can use. On all this skeleton, I’m going to focus on the device creation, because if you’re interested in targeting various types of devices and still benefit from their performance, there is something interesting going on here.

The device creation is split into two parts. One is the creation of the adapter, and then the creation of the device. The adapter is not really created. We get the adapter, which holds the information about the context. From this we can know the hard limits and the capabilities of the physical device. From this, we can set up the virtual limits of the device object that we’re using to abstract the adapter. This mechanism is important, because if we don’t set any limits, the device will use the same ones as the adapter, which is really easy. You don’t have to do anything. But it means that your application might fail anywhere in the code because you don’t really know about the limits. Suddenly, you’re maybe creating a new texture, and this texture is exceeding the maximum number of textures, and the code will fail. It might work on your machine because your adapter has different limits than the machine of your colleague that has a different GPU, for instance. This is really not convenient and something really to avoid.

Instead, you really should specify limits when creating the device. This means the creation of the device might fail, but it will fail right away. You will know that the device just doesn’t support the requirements of your program. Stating the limits is stating the minimum requirements of a program. If you don’t want to fail, you can actually define multiple quality tiers with different limit presets. When you get the adapter, you look at the supported limits. You stop at the first preset that is fully supported. Then you set up the device according to this, and you have maybe a global variable or an application attribute that says at any time which quality tier you are in, so that your application can adapt without breaking. A quick note for the initialization of the device in the case of the web target: it is handled on the JavaScript side, so instead of doing all this you will just use the emscripten_webgpu_get_device function that is provided by Emscripten, and do the initialization on the JavaScript side. Something to keep in mind for portability, if you want to target the web of course.
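The quality tier selection can be sketched as follows. This is a minimal illustration under assumptions: `LimitSet` is a hypothetical struct that only borrows three field names from the limits structure of webgpu.h, and `isSupported` and `pickQualityTier` are helper names invented here, not API functions.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical subset of limits, defined locally for illustration.
struct LimitSet {
    uint32_t maxTextureDimension2D;
    uint32_t maxBindGroups;
    uint64_t maxBufferSize;
};

// True when the adapter meets or exceeds every required limit.
inline bool isSupported(const LimitSet& adapter, const LimitSet& required) {
    return required.maxTextureDimension2D <= adapter.maxTextureDimension2D
        && required.maxBindGroups <= adapter.maxBindGroups
        && required.maxBufferSize <= adapter.maxBufferSize;
}

// Tiers are ordered from highest to lowest quality.
// Returns the index of the first fully supported preset, or -1 if none fits.
inline int pickQualityTier(const LimitSet& adapter, const std::vector<LimitSet>& tiers) {
    for (std::size_t i = 0; i < tiers.size(); ++i) {
        if (isSupported(adapter, tiers[i])) return static_cast<int>(i);
    }
    return -1;
}
```

The selected preset would then be passed as the required limits when requesting the device, and the returned index stored where the rest of the application can read it. A result of -1 corresponds to failing right away because no tier is supported.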

Debugging

Last practical point, debugging. How do we debug applications using WebGPU? The first thing to go for is the error callbacks. There are two error callbacks that you can set up right after creating the device. Maybe the most important one for native programming is the UncapturedErrorCallback. This callback is basically the one through which all the errors will be sent. I recommend that you put a breakpoint in it so that your program will stop whenever it encounters an error. You can first have a message and also inspect the stack. The thing is that the different implementations have really different error messages. Here, I’m just showing an example for wgpu-native. We can understand it, there’s no problem. Compare it with the Dawn version, which is actually detailing all the steps through which it went before reaching this issue. Also, it’s using the labels, because for all the objects that you create, in all descriptors, you can actually specify a label for the object so that it can be used in messages like this. There are real differences. Here is another example on shader validation. Tint is the shader compiler for Dawn. Naga is the one for wgpu. They present errors in different ways. That could be a reason to choose Dawn over wgpu, even though the Dawn distribution takes time to compile because it’s not prebuilt.
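As a rough illustration of the error callback mechanism, the sketch below wires a callback into a fake device. At the time of the talk the native header exposed this through `wgpuDeviceSetUncapturedErrorCallback`; the types and the `FakeDevice` used here are hypothetical stand-ins so that the example stays self-contained, and the error message format is invented.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical stand-ins for the error type and callback signature.
enum class ErrorType { Validation, OutOfMemory, Internal };
using ErrorCallback = void (*)(ErrorType type, const char* message, void* userdata);

struct ErrorLog {
    std::vector<std::string> messages;
};

// Central error sink: a good place to put a breakpoint.
inline void onUncapturedError(ErrorType type, const char* message, void* userdata) {
    ErrorLog* log = static_cast<ErrorLog*>(userdata);
    std::string prefix = (type == ErrorType::Validation) ? "[validation] " : "[other] ";
    log->messages.push_back(prefix + (message ? message : "(no message)"));
}

// Minimal fake device that reports invalid calls through the callback.
struct FakeDevice {
    ErrorCallback callback = nullptr;
    void* userdata = nullptr;

    void setUncapturedErrorCallback(ErrorCallback cb, void* data) {
        callback = cb;
        userdata = data;
    }

    // The label is what makes the message readable, as with real descriptors.
    void createBuffer(const char* label, unsigned long long size) {
        if (size == 0 && callback != nullptr) {
            std::string msg = std::string("buffer '") + label + "' has size 0";
            callback(ErrorType::Validation, msg.c_str(), userdata);
        }
    }
};
```

Registering the callback right after device creation means that every later mistake goes through one function, whichever backend produced the message.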

Another thing that is really useful is graphics debuggers. That’s something that is not specific to WebGPU at all, but something that you actually need to use when you want to talk with the GPU. Two key examples are RenderDoc and NVIDIA Nsight. The only thing about the graphics debugger is that it will actually show commands from the underlying low-level API and not commands from WebGPU. It doesn’t know about WebGPU because it intercepts what’s happening between the CPU and the GPU. At this stage, it’s all low-level API commands. In a lot of cases, just looking at the order of things, you can guess which command corresponds to which one in your code, for simple enough applications. If your application becomes really complex, that might become an issue. Something that is more WebGPU aware is the dev tools of the browser itself, even though it’s not as full featured as a graphics debugger when it comes to GPU programming, of course. It sounds stupid, but I’m just mentioning that when you debug, something that is important to look at is the documentation, the JavaScript spec. Even though you’re doing native, there’s not really a specification for the native API, so I end up using the JavaScript spec a lot to figure out how the native one works. Actually, if you’re interested in the specificities of the native API, just basically look at the header, and I’ll have a more advanced tip for this.

The last thing for debugging is profiling. This is something that is not obvious, because, again, when you’re doing GPU programming you have different timelines. The CPU is living on one side, the GPU on the other side. When the CPU issues commands for the GPU, it doesn’t really know when they start and when they stop. You cannot use CPU-side tools to measure performance on the GPU. In theory, WebGPU provides something for this, which is timestamp queries. You create queries and you ask the GPU to measure things and then send the result back to you. These are not implemented, and there is even a concern actually about whether they will be implemented, because timestamp queries can leak a lot of private information. Since the web context needs to be safe and not leak too much information about the clients, this might be a real issue. In the long run, they would still be usable for native programming, but be disabled in the JavaScript API. Something you can use though, which is handy, is debug groups. They have no effect on your program itself, but they will actually make you see, here in Nsight, some segments of performance, some timings that you can basically name in your C++ code. That’s really helpful for measuring things.
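Debug groups come in push and pop pairs, so one convenient way to keep them balanced in C++ is a small scope guard. The sketch below is an assumption-laden illustration: `FakeEncoder` is a hypothetical recorder standing in for a real command or pass encoder, and `ScopedDebugGroup` is a helper invented here, not something provided by WebGPU.

```cpp
#include <cassert>
#include <string>
#include <vector>

// Hypothetical encoder that just records what it is asked to do.
struct FakeEncoder {
    std::vector<std::string> events;
    void pushDebugGroup(const std::string& label) { events.push_back("push " + label); }
    void popDebugGroup() { events.push_back("pop"); }
    void draw() { events.push_back("draw"); }
};

// Scope guard: pushes a named group on construction, pops it on destruction.
class ScopedDebugGroup {
public:
    ScopedDebugGroup(FakeEncoder& encoder, const std::string& label) : encoder_(encoder) {
        encoder_.pushDebugGroup(label);
    }
    ~ScopedDebugGroup() { encoder_.popDebugGroup(); }
    ScopedDebugGroup(const ScopedDebugGroup&) = delete;
    ScopedDebugGroup& operator=(const ScopedDebugGroup&) = delete;

private:
    FakeEncoder& encoder_;
};

// Records a frame with a nested, named section.
inline void recordFrame(FakeEncoder& encoder) {
    ScopedDebugGroup frame(encoder, "frame");
    {
        ScopedDebugGroup shadows(encoder, "shadows");
        encoder.draw();
    }
    encoder.draw();
}
```

With a real encoder, the names given to the groups are what would show up as labeled segments in a tool such as Nsight.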

Just taking a little example here, since I’m talking about figures, I’m going to show some for comparing two algorithms for contouring a signed distance field. I’m comparing both algorithms, and I’m also comparing the wgpu-native and the Dawn versions. I’d be careful with those figures. I didn’t carry out intensive benchmarking with a lot of different cases. It’s just one example. What we can see is that Dawn is a little bit slower, but it might be possible to accelerate it by disabling robustness features. I haven’t stress tested this. What’s interesting is actually that those figures are quite similar, which shows that whether it’s wgpu-native or Dawn, they both talk with the GPU with such low-level access that they can actually reach similar performance, and that there is not so much overhead. That’s something that should be measured by actually comparing with a non-WebGPU implementation. I’m optimistic about this.

Is WebGPU Ready Enough (For Native Applications)?

To finish this talk, maybe the key question that remains is, is it ready enough to be used now for native applications, as far as I’m concerned? First thing is, backend support is rather good, whether it’s for wgpu or Dawn. This is not a detailed table, but it at least shows that for modern desktops, it’s well supported. There are a few pain points that I should mention. One of them is the asynchronous operations that I talked about for getting data back from the GPU. I still end up needing to wait in a loop for some time, submitting empty queues just for the backend to check whether the async operation is finished. There might be something unified to do this in a later version of the standard. Another thing is, I miss shader module introspection a bit, because here is the typical beginning of a shader. You define some variables and you tell by numbers, like here binding 0 and binding 1, how they will be filled in from the CPU side. Communicating with numbers instead of just using the variable names makes the C++ side of the code a bit unclear sometimes. For the JavaScript version, there is an automatic layout that is able to infer what the binding layout should look like by looking at the shader code. This has raised some issues, so it’s been removed from the native version for some good reasons.
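One possible way to soften the binding-number problem on the C++ side, offered here only as a suggestion and not as something the talk prescribes, is to name the indices once and reuse those names both when emitting the shader header and when filling in bind group entries. The `Binding` enum, the helper names, and the variable and struct names in the WGSL text are all hypothetical.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

// Hypothetical convention: each binding index gets a name, declared once.
enum class Binding : uint32_t {
    Uniforms = 0,
    BaseColorTexture = 1
};

// Builds the WGSL attribute prefix for a named binding in group 0.
inline std::string bindingAttribute(Binding b) {
    return "@group(0) @binding(" + std::to_string(static_cast<uint32_t>(b)) + ")";
}

// Emits the beginning of a shader using the named indices.
// MyUniforms is assumed to be a struct declared elsewhere in the WGSL source.
inline std::string makeShaderHeader() {
    return bindingAttribute(Binding::Uniforms) + " var<uniform> uMyUniforms: MyUniforms;\n"
         + bindingAttribute(Binding::BaseColorTexture) + " var baseColorTexture: texture_2d<f32>;\n";
}
```

The same enum values can then be cast to integers wherever the C++ code needs a binding index, so the number appears in only one place.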

Then, there are still a lot of differences between the implementations. I could give a lot of examples here. I’m just focusing on one key difference, which is that there is no agreement yet on the mechanism for releasing, freeing the memory after use: wgpu uses Drop semantics, and Dawn uses Release. What’s the difference? The difference is that Drop means this object will never be used by anything, neither me nor anybody else, just destroy it. In the case of Dawn, the call to Release means I’m done with this, but if somebody else actually still uses it, then don’t free it. To go with this mechanism, there is also a Reference call that does the opposite of this, which is, ok, I’m going to use this so don’t free it until I release. That means Dawn actually exposes a reference counting feature that wgpu prefers to hide. Which one should be adopted is still an ongoing debate.
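The difference between the two lifetime models can be sketched with a toy object. The names below (`FakeBuffer`, `bufferDrop`, `bufferReference`, `bufferRelease`) are hypothetical and only mimic the semantics described above; they are not the actual wgpu-native or Dawn entry points.

```cpp
#include <cassert>

// Hypothetical resource, NOT a real wgpu-native or Dawn type.
struct FakeBuffer {
    int refCount = 1;       // the creator holds the first reference
    bool destroyed = false;
};

// Drop-style: the caller states that nobody will use the object anymore,
// so it is destroyed immediately, whatever other users may exist.
void bufferDrop(FakeBuffer& buffer) {
    buffer.destroyed = true;
}

// Reference: "I'm going to use this, so don't free it until I release."
void bufferReference(FakeBuffer& buffer) {
    assert(!buffer.destroyed);
    ++buffer.refCount;
}

// Release: "I'm done with this." The object is only destroyed once the
// last user has released it.
void bufferRelease(FakeBuffer& buffer) {
    assert(buffer.refCount > 0);
    if (--buffer.refCount == 0) {
        buffer.destroyed = true;
    }
}
```

With the Release model, a buffer that was referenced once more survives the first release and is destroyed on the second, whereas a single drop destroys it regardless of who else might still hold it.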

Lastly, some limitations that might remain limitations even in the longer run. Here are a few questions. First, the timestamp queries. Again, on desktop, it should be possible to enable them. Maybe it’s even possible already. For the JavaScript side, that will be an issue. If the logic of your program is relying on timestamp queries to optimize some execution path, it will be a problem. Another question is, how does shader compilation time get handled? Because in a complex application, compiling the shaders can take multiple seconds, tens of seconds or even minutes. In a desktop application, you would cache the compiled version, or the driver will do it for you. The question is, do we have some control over this cache, or how can we avoid the user experience where they come back to the page, to the web application they were used to, and suddenly it has to rebuild all the shaders for some reason, and it’s taking too much time to start? Another open question, I didn’t look a lot into this, but I didn’t see anything related to tiled rendering, which is something specific to low-end devices that have a low memory bandwidth, and that might require some specific care. The question is, are we able to detect it in WebGPU so that we can adapt the code for this kind of low-consumption device? I haven’t found anything related to this so far. I’m just providing a link to some other debates that are really interesting with all the arguments that can be used, https://kvark.github.io/webgpu-debate. One last thing, the extension mechanism could be used if you’re not targeting the web. Here’s an example.
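To make the caching idea concrete, here is a small sketch of what an application-side cache of compiled shaders could look like on desktop. It is an assumption for illustration only: `CompiledShader` and `ShaderCache` are hypothetical names, the "compiler" is whatever function the application passes in, and nothing here is part of the WebGPU API. A real cache would more likely key on a hash of the source and persist to disk.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <unordered_map>
#include <utility>

// Hypothetical compiled shader blob, NOT a WebGPU type.
struct CompiledShader {
    std::string binary;
};

// In-memory cache keyed by the shader source text.
class ShaderCache {
public:
    using Compiler = std::function<CompiledShader(const std::string&)>;

    explicit ShaderCache(Compiler compile) : compile_(std::move(compile)) {
        assert(compile_ != nullptr);
    }

    // Returns the cached result, compiling only the first time a given
    // source is requested.
    const CompiledShader& get(const std::string& source) {
        auto it = cache_.find(source);
        if (it == cache_.end()) {
            ++compileCount_;
            it = cache_.emplace(source, compile_(source)).first;
        }
        return it->second;
    }

    // How many times the (potentially slow) compiler was actually invoked.
    int compileCount() const { return compileCount_; }

private:
    Compiler compile_;
    std::unordered_map<std::string, CompiledShader> cache_;
    int compileCount_ = 0;
};
```

The open question raised above is precisely whether a web application gets this level of control, or whether it has to rely on whatever the browser and driver decide to cache.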

Conclusion

The key question is, should I use WebGPU? In my context where I look for portability and a future-proof API, and I was using OpenGL for this and it’s becoming problematic, then I would suggest, yes, go for it. You can already start looking at it. You can already start using it. Be ready to change some things in the coming months because maybe some issues will be fixed, some slight changes will occur, and it would break the existing code. At this point, no major change should be expected. Learning it right now should be representative of what it will be in the end. I think it’s a good domain-agnostic render hardware interface. It’s not too low level, but it’s more in line with the way things are underneath, so it can be efficient even without being too low level. It’s future oriented, because it’s going to be the API of the web so there will be a huge user base behind it. My bet is that it will become the most used graphics API, even for desktop because of this. Even though it’s unfinished, it’s in very active development, so you can expect it to be ready, maybe not by the end of the year, but it’s really going fast. It’s always nice to work with something that is dynamic, where you can talk with the people.

Of course, you get a web-ready code base for free. In my case, I was never targeting the web, because I wasn’t interested in downgrading my application just for it to be compatible with the web. Now, I don’t have to care about this, I can just do things as I was doing for my desktop experiment and then share it on the web, without too much concern, as long as people use a WebGPU-ready browser, which is not so many people for now. I’m just showing an example that is not 3D, something that is really recent, the demo where people used WebGPU for neural network inference, here for Stable Diffusion, so image generation. It’s a really relevant example of how WebGPU will enable things on the web that are using the GPU but not related to 3D at all. There are likely a lot of other applications in a lot of other domains. To finish, just a link to this guide that I’ve started working on with much more details about all the things I was mentioning here, https://eliemichel.github.io/LearnWebGPU. I’ve opened a Discord to support this guide, but there are also existing communities centered around wgpu and around Dawn that I invite you to join, because that’s where most of the developers are currently.

