Month: April 2018

MMS • RSS

Article originally posted on Data Science Central. Visit Data Science Central

Summary: Not everyone wants to invest the time and money to become a data scientist, and if you’re mid-career the barriers are even higher. If you still want to be deeply involved in the new data-driven economy and well paid, the growth rate and opportunities as a data engineer or business analyst need to be on your radar screen.

OK, given that Data Scientist is still the sexiest job in America, what happens if you can’t or don’t want to invest your time and money to achieve that credential?

Seldom a week goes by without someone asking how to switch from their existing career into data science. While I personally believe that you can’t have more fun anywhere else, there are many legitimate barriers facing those wanting to switch, especially if you are more than 5 or 10 years out of school.

We wrote at length about this in “Some Thoughts on Mid-Career Switching Into Data Science”. It’s one of our most widely read articles so apparently there are a lot of folks trying to figure this out. Basically we said there are no shortcuts. While it will be intellectually and eventually financially rewarding, it’s hard if your career is already underway.

Even if you are mid-education, the full-blown data science credential isn’t right for everyone. And with the new corporate orientation toward democratizing data (code word for self-service), anyone who wants to get down into the data will have plenty of opportunity to do so.

The good news in an increasingly data-driven world is that there is a pyramid of supporting roles growing even faster than the data scientist role. They’re in demand. They pay well, and you may indeed find it easier to leverage your current skills into one of these roles, perhaps with the aid of a MOOC, a boot camp, or even OJT.

We’re talking about two roles that have existed for some time: Data Engineer and Business Analyst.

Data Engineers

For the better part of the last decade and certainly in the last five years, data scientists have put an inordinate new load on traditional IT organizations. Traditional IT has also had a real change of mission.

Historically, IT was about servicing its internal customers at least cost within acceptable SLAs. Keeping costs down is still important, but as data and data science became drivers of revenue, profit, and strategic differentiation, IT found itself with a more urgent support mission.

The skills associated with Big Data, particularly Hadoop/Hive, Spark, streaming data, IoT, data lakes, cloud platforms, and the ETL and blending of structured and unstructured data, have been split out into a career path variously called Data Engineer or Big Data Developer.

As data scientists have become more specialized, they don’t have these hands-on skills, nor is it efficient for them to do this work. Companies are increasingly establishing analytic teams in which Data Engineers may be part of the new group or continue to report through IT, but in either case are distinct from the traditional IT organization.

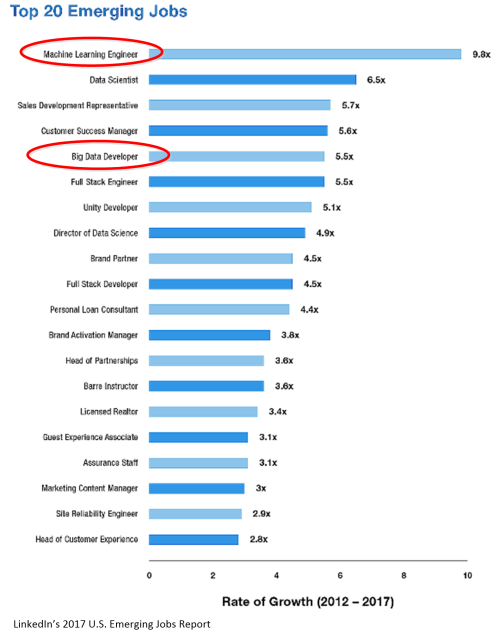

As it turns out, the Data Engineer path, by its many names, has been growing faster than the Data Scientist role. Machine Learning Engineer (aka Data Engineer) opportunities, for example, are growing 50% faster than Data Scientist opportunities.

What other evidence do we have that this is true? Qubole, whose business is to rapidly set up and manage cloud data platforms as a service, has just released its 2018 Big Data Activation Report. It is a statistical look at how more than 200 of its customers, ranging from large to small, are dealing with Big Data. Because these companies are Qubole customers, we know they are fairly advanced in their data lifecycle and look for both flexibility and economy.

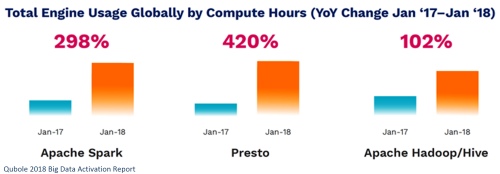

Here’s the first interesting fact from their report: 76% of these companies actively use at least three Big Data open source engines, such as Apache Hadoop/Hive, Apache Spark, and Presto, for prep, machine learning, and reporting and analysis workloads. These are squarely in the wheelhouse of the data engineer.

Secondly, the role of the data engineer in supporting self-service access to data is growing rapidly. The Qubole report shows year-over-year growth in users of 255% for Presto, 171% for Spark, and 136% for Hadoop/Hive.

While in data science we think of Spark as having completely replaced Hadoop, in fact Hadoop had 5 to 8 years of dominance in which to become the legacy Big Data platform of choice before Spark emerged. As a result, the data engineer is just as likely to be working in Hadoop/Hive as in Spark. There are clearly still roles for both.

And while the number of users for all three systems has grown dramatically, the year-over-year growth in actual compute hours tells a slightly different story, with Spark growing almost three times as fast as Hadoop/Hive (298% versus 102%). Note that Hadoop/Hive still had the bulk of the hours (as the established legacy system), but growth clearly favors Spark.
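To make the compute-hour figures concrete, here is a small Python sketch that turns the quoted year-over-year percentages into "this year versus last year" multipliers. It uses only the two numbers from the Qubole report; the helper name `multiplier` is ours, for illustration.

```python
# Year-over-year growth in compute hours, in percent, as quoted from the
# Qubole 2018 Big Data Activation Report.
compute_hour_growth = {"Spark": 298, "Hadoop/Hive": 102}


def multiplier(growth_pct):
    """Convert percentage growth into 'this year = N x last year'."""
    return 1 + growth_pct / 100


for engine, pct in compute_hour_growth.items():
    print(f"{engine}: {pct}% growth means {multiplier(pct):.2f}x last year's compute hours")

# "Almost three times as fast" compares the growth rates themselves.
ratio = compute_hour_growth["Spark"] / compute_hour_growth["Hadoop/Hive"]
print(f"Spark's growth rate is {ratio:.1f}x that of Hadoop/Hive")
```

In other words, Spark compute hours roughly quadrupled (3.98x) while Hadoop/Hive hours roughly doubled (2.02x).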

Data Analysts

Data Analysts are the new Citizen Data Scientists. This doesn’t mean they are expected to do everything a data scientist can do. It does mean they are increasingly expected to embrace new self-service tools in analytics and data viz, and to much more actively engage with all types of data than in the past.

As data science came into vogue and captured the imagination of business leaders, traditional BI receded from view but obviously never disappeared. BI is still at the heart of most operational reporting, though analytics and reporting increasingly demand access to data well beyond the traditional RDBMS.

When Hadoop became open source in 2007 and was grouped under the more generic label of NoSQL, there was a period of perhaps five years in which the analyst’s classical strength in SQL couldn’t easily be applied to this new technology. That period has since passed.

The new providers of Big Data DBs soon discovered that SQL was so dominant in the user community that growth without SQL was a non-starter. NoSQL came to mean NotOnlySQL, and the number of SQL-like tools expanded rapidly.

Today, the leading open source SQL engine for Big Data platforms is Presto, and its growth in users far outstrips that of either Hadoop/Hive or Spark (see above). This shows just how rapidly self-service analytics and the blending of structured and unstructured data have grown.

Data Analysts today have tools undreamed of by their earlier selves, ranging from full-fledged blending and analytic platforms like Alteryx to remarkable data viz platforms like Tableau and Qlik. For the open source user, Presto allows all the capabilities of the SQL you have become expert with to be applied to the full range of unstructured and semi-structured data.

New Career Paths

Both Data Engineer and Business Analyst are career paths into data science. Both leverage existing skills in data science and SQL-based analytics without having to meet the rigorous requirements of the full-fledged data scientist.

Both of these new opportunities are based on much more easily acquired skills, whether through formal education or through various types of self-study and OJT. If you’re looking at data science, don’t overlook these satisfying careers, which are far more plentiful and easier to break into.

Some other Data Science Career articles you may find valuable:

Some Thoughts on Mid-Career Switching Into Data Science (2017)

The New Rules for Becoming a Data Scientist (2016)

Data Scientist – Still the Best Job in America – Again (2016)

So You Want to be a Data Scientist (2015)

Getting a Data Science Education (2015)

How to Become a Data Scientist (2014)

Other articles by Bill Vorhies

About the author: Bill Vorhies is Editorial Director for Data Science Central and has practiced as a data scientist since 2001. He can be reached at:


Article originally posted on Data Science Central. Visit Data Science Central

Summary: Deep Learning, based on deep neural nets, is launching a thousand ventures but leaving tens of thousands behind. Transfer Learning (TL), a method of reusing previously trained deep neural nets, promises to make these applications available to everyone, even those with very little labeled data.

Deep Learning, based on deep neural nets, is launching a thousand ventures but leaving tens of thousands behind. The broad problems with DNNs are well known:


- Complexity leading to extended time to success and an abnormally high outright failure rate.

- Extremely large quantities of labeled data needed to train.

- Large amounts of expensive specialized compute resources.

- Scarcity of data scientists qualified to create and maintain the models.

The entire field has really come into its own only in the last two or three years and growth has been exponential among those able to overcome these drawbacks. And it’s fair to say that the deep learning provider community understands and is working to resolve these issues.

It’s likely that over the next two or three years these will be overcome. But in the meantime, large swaths of potential users are being deterred and delayed in gaining benefit.

Two Main Avenues of Progress – Automation and Transfer Learning

The problem for those wanting to create their own robust multi-object classifiers is most severe. If you succeed in hiring the necessary talent, and if your pockets are deep enough for AWS, Azure, or Google cloud resources, you still face the main problems of complexity and large quantities of labeled data.

Already you can find companies who claim to have solved the complexity problem by automating DNN hyperparameter tuning. Right behind these small innovators are Google and Microsoft, who are publicly laying out their strategies for doing the same thing.

More than those of any other algorithm we’ve faced, the hyperparameters of DNNs are varied and complex: the number of nodes; the number, types, and connectivity of the layers; the choice of activation function; learning rate; momentum; number of epochs; and batch size, for starters. The need to handcraft multiple experimental configurations is the root cause of cost, delay, and the failure of some systems to train at all.
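To give a sense of why handcrafting is so expensive, here is a minimal Python sketch that counts the configurations in a hypothetical search space built from the hyperparameters just listed. The candidate values are our own illustrative assumptions, not recommendations, and layer connectivity is left out entirely; even so, a full grid runs to tens of thousands of training runs, which is why random or smarter search is used instead.

```python
import math
import random

# Hypothetical search space. The hyperparameter names follow the text; the
# candidate values are illustrative assumptions, and connectivity is omitted.
SEARCH_SPACE = {
    "num_layers": [2, 4, 8, 16],
    "nodes_per_layer": [32, 64, 128, 256, 512],
    "layer_type": ["dense", "conv", "recurrent"],
    "activation": ["relu", "tanh", "sigmoid", "elu"],
    "learning_rate": [1e-1, 1e-2, 1e-3, 1e-4],
    "momentum": [0.0, 0.5, 0.9, 0.99],
    "epochs": [10, 50, 100],
    "batch_size": [16, 32, 64, 128, 256],
}


def grid_size(space):
    """Number of distinct configurations a full grid search would have to train."""
    return math.prod(len(values) for values in space.values())


def sample_configs(space, n, seed=0):
    """Random search: draw n configurations instead of training the full grid."""
    rng = random.Random(seed)
    return [{name: rng.choice(values) for name, values in space.items()} for _ in range(n)]


print(grid_size(SEARCH_SPACE))  # 57600 full training runs
for cfg in sample_configs(SEARCH_SPACE, 3):
    print(cfg)
```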

But there is a way for companies with only modest amounts of data and modest data science resources to join the game in short order, and that’s through Transfer Learning (TL).

The Basics

TL is primarily seen today in image classification problems but has been used in video, facial recognition, and text-sequence type problems including sentiment analysis.


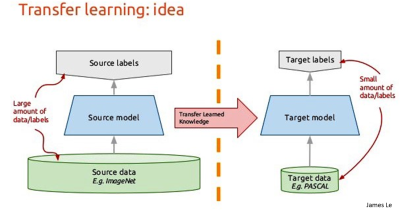

The central concept is to use a more complex but successful pre-trained DNN model to ‘transfer’ its learning to your simpler (or equally complex, but not more complex) problem.

The existing successful pre-trained model has two important attributes:

- Its tuning parameters have already been tested and found to be successful, eliminating the experimentation around setting the hyperparameters.

- The earlier or shallower layers of a CNN are essentially learning the features of the image set, such as edges, shapes, textures, and the like. Only the last one or two layers of a CNN perform the most complex task: summarizing the vectorized image data into classifications for the 10, 100, or 1,000 different classes they are supposed to identify. These earlier, shallow layers of the CNN can be thought of as featurizers, discovering the previously undefined features on which the later classification is based.

There are two fundamentally different approaches to TL, each based separately on these two attributes.

Create a Wholly New Model

If you have a fairly large amount of labeled data (estimates range down to 1,000 images per class but are probably larger), then you can use the more accurate TL method of creating a wholly new model using the weights and hyperparameters of the pre-trained model.

Essentially, you are capitalizing on the experimentation done to make the original model successful. During training, the number of layers remains fixed (as does the overall architecture of the model), and the final layers are optimized for your specific set of images.
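Here is a minimal NumPy sketch of this first approach, under loudly stated assumptions: the "pre-trained" network is a tiny two-layer stand-in with random weights (in practice you would load a real model such as VGG or ResNet through your framework), the learning rate is an assumed value standing in for the original model’s tuned hyperparameters, and the data is synthetic. What it shows is the mechanics: keep the architecture, copy the pre-trained weights as the starting point, resize only the final layer to your number of classes, and then train every layer.

```python
import numpy as np

rng = np.random.default_rng(1)

# Hypothetical "pre-trained" weights. In practice they would come from a
# published model; here they are random stand-ins so the sketch runs alone.
pretrained = {
    "W1": rng.normal(0, 1 / np.sqrt(8), size=(8, 16)),    # shallow featurizer layer
    "W2": rng.normal(0, 1 / np.sqrt(16), size=(16, 10)),  # original 10-class output
}


def init_from_pretrained(pretrained, n_new_classes, rng):
    """Same architecture: copy the early weights, re-initialize the final layer."""
    return {
        "W1": pretrained["W1"].copy(),
        "W2": rng.normal(0, 1 / np.sqrt(16), size=(16, n_new_classes)),
    }


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def cross_entropy(params, x, y):
    h = np.maximum(x @ params["W1"], 0.0)
    p = softmax(h @ params["W2"])
    return float(-np.mean(np.log(p[np.arange(len(y)), y] + 1e-12)))


def fine_tune(params, x, y, lr=0.05, steps=300):
    """Train ALL layers by gradient descent, starting from the pre-trained values."""
    n = len(y)
    onehot = np.eye(params["W2"].shape[1])[y]
    for _ in range(steps):
        pre = x @ params["W1"]
        h = np.maximum(pre, 0.0)
        p = softmax(h @ params["W2"])
        d_logits = (p - onehot) / n
        grad_W2 = h.T @ d_logits
        d_h = d_logits @ params["W2"].T * (pre > 0)  # backprop through ReLU
        grad_W1 = x.T @ d_h
        params["W2"] -= lr * grad_W2
        params["W1"] -= lr * grad_W1
    return params


# Toy new task: 3 classes, 300 examples with 8 raw inputs each.
x = rng.normal(size=(300, 8))
y = (x[:, 0] > 0).astype(int) + (x[:, 1] > 0).astype(int)

params = init_from_pretrained(pretrained, n_new_classes=3, rng=rng)
loss_before = cross_entropy(params, x, y)
params = fine_tune(params, x, y)
loss_after = cross_entropy(params, x, y)
print(f"loss before fine-tuning: {loss_before:.3f}, after: {loss_after:.3f}")
```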

Simplified Transfer Learning

The more common approach is a benefit for those with only a limited quantity of labeled data. There are some reports of being able to train with as few as 100 labeled images per class, but as always, more is better.

If you attempted to use the first technique of training the entire original model with just a few instances, you would almost surely get overfitting.

In simplified TL the pre-trained transfer model is simply chopped off at the last one or two layers. Once again, the early, shallow layers are those that have identified and vectorized the features and typically only the last one or two layers need to be replaced.

The output of the truncated ‘featurizer’ front end is then fed to a standard classifier like an SVM or logistic regression to train against your specific images.
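A minimal NumPy sketch of simplified TL follows. The frozen `featurize` function stands in for a pre-trained CNN with its last layer or two chopped off; its weights here are fixed random values rather than real pre-trained ones (an assumption made so the sketch runs on its own), and the data is synthetic. The point is the division of labor: the featurizer is never updated, and only a small logistic regression head is fit on its output. In practice you could just as well hand the features to an off-the-shelf SVM or logistic regression implementation.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for the truncated pre-trained network. In practice these weights
# would be loaded from a model with its last layer(s) removed; here they are
# fixed random values, purely for illustration.
FROZEN_W = rng.normal(0.0, 1.0 / np.sqrt(8), size=(8, 16))


def featurize(x):
    """Frozen 'featurizer' front end: never updated during training."""
    return np.maximum(x @ FROZEN_W, 0.0)  # linear layer + ReLU


def log_loss(w, b, feats, y):
    p = 1.0 / (1.0 + np.exp(-(feats @ w + b)))
    eps = 1e-12
    return float(-np.mean(y * np.log(p + eps) + (1 - y) * np.log(1 - p + eps)))


def train_logistic_head(feats, y, lr=0.1, steps=500):
    """Fit only the new classifier head (logistic regression) by gradient descent."""
    w = np.zeros(feats.shape[1])
    b = 0.0
    for _ in range(steps):
        p = 1.0 / (1.0 + np.exp(-(feats @ w + b)))
        grad = p - y  # derivative of the log loss w.r.t. the logit
        w -= lr * feats.T @ grad / len(y)
        b -= lr * grad.mean()
    return w, b


# Toy "new task": 200 labeled examples with 8 raw inputs each.
x = rng.normal(size=(200, 8))
y = (x[:, 0] + x[:, 1] > 0).astype(float)

feats = featurize(x)
w, b = train_logistic_head(feats, y)
preds = (feats @ w + b > 0).astype(float)
print("training accuracy:", (preds == y).mean())
```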

The Promise

There’s no question that simplified TL will appeal to the larger group of disenfranchised users, since it needs neither large quantities of data nor exceptionally sophisticated data scientists.

It’s no surprise that the earliest offerings rolled out by Microsoft and Google focus exclusively on this technique. Other smaller providers can demonstrate both simplified and fully automated DNN techniques.

Last week we reviewed the offering from OneClick.AI that has examples of simplified TL achieving accuracies of 90% and 95%. Others have reported equally good results with limited data.

It’s also possible to use the AutoDL features from Microsoft, Google, and OneClick to create a deployable model without any code, by simply dragging-and-dropping your images onto their platform.

The Limits

Like any automated or greatly simplified data science procedure, you need to know the limits. First and foremost, the pre-trained model you choose for the starting point must process images that are similar to yours.

For example, a model trained on the extensive ImageNet database that can correctly classify thousands of objects will probably let you correctly transfer features from horses, cows, and other domestic livestock onto exotic endangered species. For facial recognition, it’s best to use a different existing model, like VGG.

However, trying to apply the featurization ability of an ImageNet-trained DNN to more exotic data may not work well at all. Others have noted, for example, that medical images from radiography or CAT scans are originally derived in greyscale and that these image types, clearly not present in ImageNet, are unlikely to featurize accurately. Other types that should be immediately suspect include scientific signals data or images for which there is no real-world correlate, such as seismic or multi-spectral images.

The underlying assumption is that the patterns in the images to be featurized existed in the pre-trained model’s training set.

The Pre-trained Model Zoo

There is a very large number of pre-trained models to use as the basis of TL. A short list drawn from the much larger universe would include:

- LeNet

- AlexNet

- OverFeat

- VGG

- GoogLeNet

- PReLUnet

- ResNet

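- ImageNet (strictly speaking a dataset, on which many of the models above were trained)

- MS COCO (likewise a dataset rather than a model)

Most of these come in several versions, built on different original image sets, for you to pick from.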

One Other Interesting Application of Transfer Learning

This may not have much commercial application, but it’s interesting to know that you can use TL, in the form of a pre-trained network, to combine two separate images and achieve unexpected artistic results.

Right now the technique is being applied experimentally, mostly for artistic effect. It takes one image selected for content and another selected for style, and combines them using the features learned by the pre-trained network’s layers.


What takes a bit of experimentation is looking at each convolutional and pooling layer to decide which unique look you want your resulting image to have.
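The widely used neural style transfer formulation scores a candidate image with a content loss (how far its layer activations are from the content image’s) and a style loss (how far the channel correlations, or Gram matrices, of its activations are from the style image’s). The NumPy sketch below shows only those losses, under our own assumptions: the feature arrays, layer names, and weights `alpha` and `beta` are hypothetical, and a real implementation would also backpropagate this loss through the pre-trained network to update the image’s pixels. The per-layer `style_weights` are where the layer-by-layer experimentation described above comes in.

```python
import numpy as np


def gram_matrix(features):
    """Style representation of one layer: correlations between its channels.

    `features` has shape (channels, height, width), e.g. the activations of a
    convolutional or pooling layer of a pre-trained network for one image.
    """
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    return flat @ flat.T / (h * w)


def content_loss(generated, content):
    """How far the generated image's features are from the content image's."""
    return float(np.mean((generated - content) ** 2))


def style_loss(generated, style):
    """How far the generated image's channel correlations are from the style image's."""
    return float(np.mean((gram_matrix(generated) - gram_matrix(style)) ** 2))


def total_loss(gen, content, style, content_layer, style_weights, alpha=1.0, beta=1e3):
    """Weighted sum an optimizer would minimize by adjusting the generated image.

    gen, content and style map layer names to feature arrays for each image;
    style_weights says how much each layer contributes to the resulting look.
    """
    c_loss = content_loss(gen[content_layer], content[content_layer])
    s_loss = sum(wt * style_loss(gen[name], style[name]) for name, wt in style_weights.items())
    return alpha * c_loss + beta * s_loss


# Hypothetical layer names and shapes, filled with random stand-in activations.
rng = np.random.default_rng(0)
layers = {"conv1": (8, 16, 16), "pool2": (16, 8, 8)}
content = {n: rng.random(s) for n, s in layers.items()}
style = {n: rng.random(s) for n, s in layers.items()}
gen = {n: a.copy() for n, a in content.items()}  # start from the content image
print(total_loss(gen, content, style, "pool2", {"conv1": 0.5, "pool2": 0.5}))
```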

In the End

You can go it alone in TensorFlow or the other DL platforms, or you can start by experimenting with one of the fully automated ADL offerings above. Neither data nor experience should hold you back from adding deep learning features to your customer-facing or internal systems.

Previous articles on Automated Machine Learning and Deep Learning

Automated Deep Learning – So Simple Anyone Can Do It (April 2018)

Next Generation Automated Machine Learning (AML) (April 2018)

More on Fully Automated Machine Learning (August 2017)

Automated Machine Learning for Professionals (July 2017)

Data Scientists Automated and Unemployed by 2025 – Update! (July 2017)

Data Scientists Automated and Unemployed by 2025! (April 2016)

Other articles by Bill Vorhies.

About the author: Bill Vorhies is Editorial Director for Data Science Central and has practiced as a data scientist since 2001. He can be reached at:


Article originally posted on Data Science Central. Visit Data Science Central

Summary: There are several things holding back our use of deep learning methods and chief among them is that they are complicated and hard. Now there are three platforms that offer Automated Deep Learning (ADL) so simple that almost anyone can do it.

There are several things holding back our use of deep learning methods and chief among them is that they are complicated and hard.


A small percentage of our data science community has chosen the path of learning these new techniques, but it’s a major departure both in problem type and technique from the predictive and prescriptive modeling that makes up 90% of what we get paid to do.

Artificial intelligence, at least in the true sense of image, video, text, and speech recognition and processing, is on everyone’s lips, but it’s still hard to find a data scientist qualified to execute your project.

Actually when I list image, video, text, and speech applications I’m selling deep learning a little short. While these are the best known and perhaps most obvious applications, deep neural nets (DNNs) are also proving excellent at forecasting time series data, and also in complex traditional consumer propensity problems.

Last December as I was listing my predictions for 2018, I noted that Gartner had said that during 2018, DNNs would become a standard component in the toolbox of 80% of data scientists. My prediction was that while the first provider to accomplish this level of simplicity would certainly be richly rewarded, no way was it going to be 2018. It seems I was wrong.

Here we are and it’s only April and I’ve recently been introduced to three different platforms that have the goal of making deep learning so easy, anyone (well at least any data scientist) can do it.

Minimum Requirements

All of the majors and several smaller companies offer greatly simplified tools for executing CNNs or RNN/LSTMs, but these still require experimental hand tuning of the layer types and number, connectivity, nodes, and all the other hyperparameters that so often defeat initial success.

To be part of this group, a platform needs to be a truly one-click application that allows the average data scientist, or even a developer, to build a successful image or text classifier.

The quickest route to this goal is by transfer learning. In DL, transfer learning means taking a previously built successful, large, complex CNN or RNN/LSTM model and using a new more limited data set to train against it.

Basically, transfer learning, most used in image classification, reuses what the more complex model has already learned to classify a new, usually smaller, set of categories. Transfer learning can’t create features that the original model never learned, but it can recombine what’s there into subsets or summary categories.

The advantage is that the hyperparameter tuning has already been done so you know the model will train. More importantly, you can build a successful transfer model with just a few hundred labeled images in less than an hour.

The real holy grail of AutoDL however, is fully automated hyperparameter tuning, not transfer learning. As you’ll read below, some are on track, and others claim to already have succeeded.

Microsoft CustomVision.AI

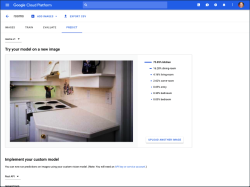

Late in 2017 MS introduced a series of greatly simplified DL capabilities covering the full range of image, video, text, and speech under the banner of the Microsoft Cognitive Services. In January they introduced their fully automated platform, Microsoft Custom Vision Services (https://www.customvision.ai/).

The platform is limited to image classifiers and promises to allow users to create robust CNN transfer models based on only a few images, capitalizing on MS’s huge existing library of large, complex, multi-image classifiers.

Using the platform is extremely simple. You drag and drop your images onto the platform and press go. You’ll need at least a pay-as-you-go Azure account and basic tech support runs $29/mo. It’s not clear how long the models take to train but since it’s transfer learning it should be quite fast and therefore, we’re guessing, inexpensive (but not free).

During project setup you’ll be asked to identify a general domain from which your image set will transfer learn and these currently are:

- General

- Food

- Landmarks

- Retail

- Adult

- General (compact)

- Landmarks (compact)

- Retail (compact)

While all these models will run from a RESTful API once trained, the last three categories (marked ‘compact’) can be exported to run offline on any iOS or Android edge device. Export is to the CoreML format for iOS 11 and to the TensorFlow format for Android. This should entice a variety of app developers who may not be data scientists to add instant image classification to their apps.

You can bet MS will be rolling out more complex features as fast as possible.

Google Cloud AutoML

Also in January, Google announced its similar entry Cloud AutoML. The platform is in alpha and requires an invitation to participate.

Like Microsoft, the service utilizes transfer learning from Google’s own prebuilt complex CNN classifiers. They recommend at least 100 images per label for transfer learning.
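Before uploading, it’s worth checking a labeled image set against that 100-per-label guideline. The Python sketch below is a hypothetical pre-flight check, not part of any Google tool: it assumes your labels are available as (image path, label) pairs and simply reports the labels that fall short.

```python
from collections import Counter

# Minimum suggested for Cloud AutoML, as reported in the article.
MIN_IMAGES_PER_LABEL = 100


def labels_below_minimum(labeled_images, minimum=MIN_IMAGES_PER_LABEL):
    """Return {label: count} for labels with fewer than `minimum` images.

    `labeled_images` is any iterable of (image_path, label) pairs, e.g. built
    from a folder-per-label layout or a CSV export (hypothetical input format).
    """
    counts = Counter(label for _, label in labeled_images)
    return {label: n for label, n in sorted(counts.items()) if n < minimum}


# Hypothetical dataset: 120 horse images but only 40 cow images.
dataset = [(f"horse_{i:03d}.jpg", "horse") for i in range(120)]
dataset += [(f"cow_{i:03d}.jpg", "cow") for i in range(40)]
print(labels_below_minimum(dataset))  # {'cow': 40}
```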

It’s not clear at this point what categories of images will be allowed at launch, but user screens show guidance for general, face, logo, landmarks, and perhaps others. From screen shots shared by Google it appears these models train in the range of about 20 minutes to a few hours.


In the data we were able to find, use appears to be via API. There’s no mention of export code for offline use. Early alpha users include Disney and Urban Outfitters.

Anticipating that many new users won’t have labeled data, Google offers access to its own human-labeling services for an additional fee.

Beyond transfer learning, all the majors, including Google, are pursuing ways of automating the optimal tuning of CNNs and RNNs. Handcrafted models are the norm today and are the reason so many, often unsuccessful, iterations are required.

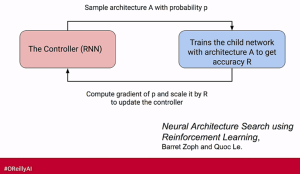

Google calls this next technology Learn2Learn. Currently they are experimenting with RNNs to optimize layers, layer types, nodes, connections, and the other hyperparameters. Since this is basically very high speed random search the compute resources can be extreme.


Next on the horizon is the use of evolutionary algorithms to do the same, which are much more efficient in terms of time and compute. In a recent presentation, Google researchers showed good results from this approach, but they were still taking 3 to 10 days to train just for the optimization.
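We don’t know the details of Google’s method, so what follows is only a generic evolutionary search in Python, sketched under our own assumptions: a tiny hypothetical search space, and a toy fitness function standing in for training and validating each candidate network, which is where the real days of compute go. Each generation picks a parent by tournament, mutates one hyperparameter, and retires the oldest member of the population.

```python
import random

# Hypothetical search space; the values are illustrative assumptions.
SPACE = {
    "num_layers": [1, 2, 3, 4, 5, 6],
    "nodes": [16, 32, 64, 128, 256],
    "learning_rate": [1e-1, 1e-2, 1e-3, 1e-4],
}


def toy_fitness(cfg):
    """Stand-in for 'train this network, return validation accuracy'.

    A real search would spend hours of compute here; this toy score simply
    peaks (at 0) for 4 layers, 128 nodes and a learning rate of 1e-3.
    """
    return -(abs(cfg["num_layers"] - 4)
             + abs(SPACE["nodes"].index(cfg["nodes"]) - 3)
             + abs(SPACE["learning_rate"].index(cfg["learning_rate"]) - 2))


def mutate(cfg, rng):
    """Copy a configuration and re-draw one randomly chosen hyperparameter."""
    child = dict(cfg)
    key = rng.choice(list(SPACE))
    child[key] = rng.choice(SPACE[key])
    return child


def evolve(fitness, population_size=10, generations=100, tournament=3, seed=0):
    rng = random.Random(seed)
    population = [{k: rng.choice(v) for k, v in SPACE.items()} for _ in range(population_size)]
    scores = [fitness(cfg) for cfg in population]
    best_i = max(range(population_size), key=lambda i: scores[i])
    best_cfg, best_score = population[best_i], scores[best_i]
    for _ in range(generations):
        # Tournament selection: the fittest of a random sample becomes the parent.
        contenders = rng.sample(range(population_size), tournament)
        parent = population[max(contenders, key=lambda i: scores[i])]
        child = mutate(parent, rng)
        child_score = fitness(child)
        # Aging: the oldest member is retired and the child joins the population.
        population.pop(0)
        scores.pop(0)
        population.append(child)
        scores.append(child_score)
        if child_score > best_score:
            best_cfg, best_score = child, child_score
    return best_cfg, best_score


best, score = evolve(toy_fitness)
print(best, score)
```

Each call to the fitness function is one full training run in practice, so the number of generations, not the bookkeeping, is what drives the compute bill.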

OneClick.AI

OneClick.AI is an automated machine learning (AML) platform new in the market late in 2017 which includes both traditional algorithms and also deep learning algorithms.

OneClick.AI would be worth a look based on just its AML credentials which include blending, prep, feature engineering, and feature selection, followed by the traditional multi-models in parallel to identify a champion model.

However, what sets OneClick apart is that it includes both image and text DL algos with both transfer learning as well as fully automated hyperparameter tuning for de novo image or text deep learning models.

Unlike Google and Microsoft they are ready to deliver on both image and text. Beyond that, they blend DNNs with traditional algos in ensembles, and use DNNs for forecasting.

Forecasting is a little-explored use of DNNs, but they have been shown to easily outperform other time series forecasters like ARIMA and ARIMAX.

Despite this complex offering of tools and techniques, the platform maintains its claim to super easy, one-click, data-in-model-out simplicity, which I identify as the minimum requirement for Automated Machine Learning and which here extends to Automated Deep Learning.

The methods used for optimizing its deep learning models are proprietary, but Yuan Shen, Founder and CEO describes it as using AI to train AI, presumably a deep learning approach to optimization.

Which is Better?

It’s much too early to expect much in the way of benchmarking but there is one example to offer, which comes from OneClick.AI.

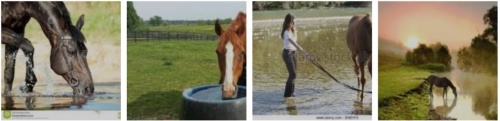

In a hackathon earlier this year, the group tested OneClick against Microsoft’s CustomVision (Google AutoML wasn’t available) on two image classification problems:

- Tagging photos of horses as running or drinking water.

- Detecting photos with nudity.

The horse tagging task was multi-label classification, and the nudity detection task was binary classification. For each task they used 20 images for training, and another 20 for testing.

- Horse tagging accuracy: 90% (OneClick.ai) vs. 75% (Microsoft Custom Vision)

- Nudity detection accuracy: 95% (OneClick.ai) vs. 50% (Microsoft Custom Vision)

With only 20 training and 20 test images per task, this comparison carries little statistical weight, and the training sample is very small even for transfer learning. However, the results look promising.
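How much weight can 20 test images bear? This Python sketch computes approximate 95% Wilson score intervals for each reported accuracy, assuming each figure is a simple count of correct answers out of 20 test images (for the multi-label horse task that may not be exactly how accuracy was scored). Overlapping intervals are only a rough heuristic, not a formal significance test. On this check the horse-tagging intervals overlap, so that gap is within the noise; the nudity-detection intervals do not, though with samples this small even that should be read cautiously.

```python
import math


def wilson_interval(successes, n, z=1.96):
    """Approximate 95% Wilson score interval for a proportion."""
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half, center + half


def overlaps(a, b):
    """True if two (low, high) intervals share any values."""
    return a[0] <= b[1] and b[0] <= a[1]


N_TEST = 20  # test images per task, as reported
results = {
    # task: (OneClick.ai correct, Custom Vision correct), from the reported accuracies
    "horse tagging": (18, 15),     # 90% vs 75% of 20
    "nudity detection": (19, 10),  # 95% vs 50% of 20
}

for task, (a, b) in results.items():
    ia, ib = wilson_interval(a, N_TEST), wilson_interval(b, N_TEST)
    print(f"{task}: OneClick {ia[0]:.2f}-{ia[1]:.2f}, "
          f"Custom Vision {ib[0]:.2f}-{ib[1]:.2f}, overlap={overlaps(ia, ib)}")
```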

This is transfer learning. We’re very interested to see comparisons of the automated model optimization. OneClick’s is ready now. Google should follow shortly.

You may also be asking, where is Amazon in all of this? In our search we couldn’t find any reference to a planned AutoDL offering, but it can’t be far behind.

Previous articles on Automated Machine Learning

Next Generation Automated Machine Learning (AML) (April 2018)

More on Fully Automated Machine Learning (August 2017)

Automated Machine Learning for Professionals (July 2017)

Data Scientists Automated and Unemployed by 2025 – Update! (July 2017)

Data Scientists Automated and Unemployed by 2025! (April 2016)

About the author: Bill Vorhies is Editorial Director for Data Science Central and has practiced as a data scientist since 2001. He can be reached at:

Genomics England uses MongoDB to Power the Data Science Behind the 100,000 Genomes Project


Article originally posted on MongoDB. Visit MongoDB

LONDON, April 10, 2018 /PRNewswire/ — Genomics England is using MongoDB (Nasdaq: MDB), the leading modern, general purpose data platform, to power the data science that makes the 100,000 Genomes Project possible and reduce the processing time for complex queries from hours to milliseconds, enabling scientists to discover new insights, faster.

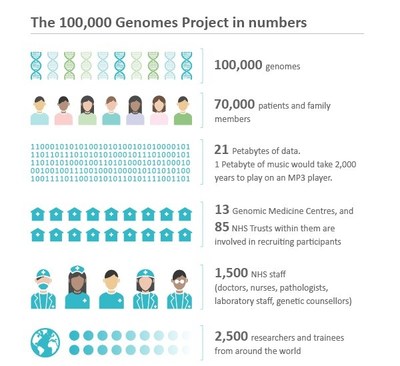

Genomics England, a company owned by the UK government’s Department of Health and Social Care, runs the 100,000 Genomes Project. This flagship project is sequencing 100,000 whole genomes from patients with rare diseases and their families as well as patients with common cancers. Working with the UK’s National Health Service, the aim is to harness the power of whole genome sequencing technology to transform the way people are cared for. Patients may be offered a diagnosis where there wasn’t one before. In time, there is the potential for new and more effective personalised treatments for patients.

Patients’ genomic data is combined with their clinical data to enable interpretation and analysis. Approved researchers, clinicians and industry partners can also access the project’s dataset – studying how best to use genomics in healthcare and how best to interpret the data to help patients.

The project is sequencing on average 1,000 genomes per week, producing about 10 terabytes of data per day. To manage this complex, massive and sensitive data set, Genomics England uses MongoDB Enterprise Advanced as an important component of its computing platform.

“Managing clinical and genomic data at this scale and complexity has presented interesting challenges,” said Augusto Rendon, Director of Bioinformatics at Genomics England.

“That’s why adopting MongoDB has been vital to getting the 100,000 Genomes Project off the ground. It has provided us with great flexibility to store and analyse these complex data sets together. This will ultimately help us to realise the benefits of the Project – delivering better diagnostic approaches for patients and new discoveries for the research community.”

Why MongoDB

The project’s data platforms were built from the ground up on MongoDB. The document database was chosen for three core reasons. The first is the database’s native ability to handle a wide variety of data types, even those data structures that weren’t considered at the beginning of the project. This makes it simple for developers and data scientists to rapidly evolve data models and develop software solutions. The second reason was performance at scale. It was clear there would be a massive and constantly growing data set that would need to seamlessly scale across underlying compute resources. Not only would the data set be big and complex, but researchers need to easily explore the data and not have long waits for simple queries.

The third key driver for Genomics England to select MongoDB was its operational maturity, with features such as end-to-end encryption, fine-grained access control to data, and operational tooling. The 100,000 Genomes Project dataset is sensitive: as well as the full genetic makeup of a patient, it also includes their clinical features and lifetime health data. For that reason, researchers work with de-identified data, analysed within a secure, monitored environment. This makes encryption and data security vital. Varying levels of complex permissions are required so that only those with the appropriate credentials can access the most sensitive data sets.

MongoDB Enterprise Advanced satisfied these requirements and has been providing Genomics England with data flexibility, performance at scale and security since the project started in 2013.

Ignacio Medina, Head of Computational Biology Lab HPC Service, University of Cambridge, and Head of Bioinformatics Databases at Genomics England, has been building many of the applications that sit on top of MongoDB. He said: “MongoDB is performing beautifully for us. From the beginning of the project it’s been fantastic for our developers to build and iterate quickly. Now that the 100,000 Genomes Project is running at scale, MongoDB is also helping us extend that great experience on to the scientists and clinicians who access the data, making it easier and faster for them to find critical insights in the data.”

Two of the most important projects are Cellbase and OpenCGA (Computational Genomics Analysis). Cellbase is a data warehouse and open API that stores reference genomic data from public resources such as Ensembl, ClinVar, and UniProt. The genomic information itself includes the specific annotations other researchers have made on cell mutations. The code for Cellbase is available on GitHub. By relying on MongoDB, Cellbase runs sophisticated queries in 40 milliseconds or less on average, and complex aggregations in less than 1 second. This is down from six hours using the previous filesystem-based querying and storage. Importantly, it can annotate about 20,000 variants per second, making it compatible with whole genome sequencing data throughput requirements, while also returning a rich set of annotations that helps scientists better understand the data.
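To put the 20,000 variants-per-second figure in context, here is a back-of-envelope calculation. It rests on one assumption that is not in the release: a round, illustrative figure of about 5 million variants to annotate per whole genome.

```python
# Back-of-envelope: how long would annotating one whole genome take?
# ASSUMPTION: ~5 million variants per genome (illustrative round figure, not from the release).
VARIANTS_PER_GENOME = 5_000_000
ANNOTATION_RATE = 20_000  # variants per second, as quoted for Cellbase

def annotation_seconds(variants: int, rate: int) -> float:
    """Wall-clock seconds needed to annotate `variants` at `rate` variants per second."""
    return variants / rate

seconds = annotation_seconds(VARIANTS_PER_GENOME, ANNOTATION_RATE)
print(f"{seconds:.0f} s = {seconds / 60:.1f} min per genome")  # 250 s = 4.2 min per genome
```

Under that assumption a genome is annotated in a few minutes. This suggests why the rate is described as compatible with whole genome sequencing throughput.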

OpenCGA aims to provide researchers and clinicians with a high-performance solution for genomic big data processing and analysis. The OpenCGA platform also includes much more detailed information on genomic material including private metadata and more specific background information on specific sequenced genomes. This means OpenCGA has the ability to process incredibly complex queries based on a huge variety of variables, from a single mutation right through to analysing common mutations within a specific geographic region.

By using MongoDB, OpenCGA enables researchers to query data in any way they want. It does this through MongoDB’s secondary indexes: compound indexes to query data across related attributes, text search facets to efficiently navigate and explore data sets, and sparse indexes to access highly variable data structures. Each collection can have 20 or more secondary indexes to service multiple query patterns, including complex, ad-hoc queries.
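The ideas behind compound and sparse indexes can be shown with a toy, in-memory sketch. This is not how MongoDB implements them, since MongoDB maintains its own on-disk index structures. The documents and the field names `chrom`, `pos` and `gene` are hypothetical. A compound index keeps entries ordered by a composite key, so a range over the second field within a fixed first field becomes one contiguous slice. A sparse index only holds entries for documents that actually have the indexed field.

```python
from bisect import bisect_left, bisect_right

# Toy documents; field names are hypothetical, not OpenCGA's real schema.
docs = [
    {"_id": 1, "chrom": "1", "pos": 1000, "gene": "A"},
    {"_id": 2, "chrom": "1", "pos": 5000},            # no "gene" field
    {"_id": 3, "chrom": "2", "pos": 200, "gene": "B"},
    {"_id": 4, "chrom": "1", "pos": 3000, "gene": "C"},
]

# Compound index on (chrom, pos): entries sorted by the composite key.
compound = sorted(((d["chrom"], d["pos"]), d["_id"]) for d in docs)
keys = [k for k, _ in compound]

def range_query(chrom, lo, hi):
    """Return _ids with this chrom and lo <= pos <= hi via binary search on the index."""
    start = bisect_left(keys, (chrom, lo))
    end = bisect_right(keys, (chrom, hi))
    return [doc_id for _, doc_id in compound[start:end]]

# Sparse index on "gene": documents lacking the field are simply left out.
sparse_gene = {}
for d in docs:
    if "gene" in d:
        sparse_gene.setdefault(d["gene"], []).append(d["_id"])

print(range_query("1", 900, 3500))  # [1, 4]
print(sparse_gene)                  # {'A': [1], 'B': [3], 'C': [4]}
```

In MongoDB itself, both kinds are declared with `createIndex`. A compound index lists several fields in its key document, and a sparse index is requested with the `sparse: true` option.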

The project has now reached its halfway point, with over 50,000 genomes sequenced and patients already benefiting from new diagnoses and opportunities to take part in clinical trials. By the end of 2018 the 100,000 Genomes Project will be complete, at which point there will be more than 20 petabytes of data stored on the project’s infrastructure. The project will go on to provide the infrastructure for a National Genomics Service being put in place by NHS England.

Dev Ittycheria, President and CEO, MongoDB, concluded: “The 100,000 Genomes Project hits home for me in a very personal way as I recently lost my mother to cancer. I am extremely grateful that so many brilliant people are dedicating their time and energy to this important project. We are honoured that MongoDB is playing an essential role as the underlying data platform to produce data science that is likely to change the lives of millions of people, including someone we may personally know, for the better. This is the kind of project that inspires us to do our best work every day.”

About Genomics England

Genomics England is a company owned by the Department of Health and Social Care, and was set up to deliver the 100,000 Genomes Project. This flagship project will sequence 100,000 whole genomes from NHS patients and their families.

Genomics England has four main aims:

- to bring benefit to patients

- to create an ethical and transparent programme based on consent

- to enable new scientific discovery and medical insights

- to kickstart the development of a UK genomics industry

The project is focusing on patients with rare diseases, and their families, as well as patients with common cancers. For more information visit www.genomicsengland.co.uk.

Contact

Katrina Nevin-Ridley

Director of Communications

Email: katrina.nevin-ridley@genomicsengland.co.uk

Phone: 0207 882 6493

@GenomicsEngland #genomes100k

About MongoDB

MongoDB is the leading modern, general purpose database platform, designed to unleash the power of software and data for developers and the applications they build. Headquartered in New York, MongoDB has more than 5,700 customers in over 90 countries. The MongoDB database platform has been downloaded over 35 million times and there have been more than 850,000 MongoDB University registrations.

Press Contact

MongoDB EMEA

+44 870 495 8023 x7804

Communications@MongoDB.com

View original content with multimedia: http://www.prnewswire.com/news-releases/genomics-england-uses-mongodb-to-power-the-data-science-behind-the-100-000-genomes-project-300626780.html

SOURCE MongoDB

MMS • RSS

Article originally posted on Data Science Central. Visit Data Science Central

Blog post by Henry Hinnefeld, Lead Data Scientist at Civis Analytics

“When machine learning models make decisions that affect people’s lives, how can you be sure those decisions are fair?”

In our work at Civis, we build a lot of models. Most of the time we’re modeling people and their behavior because that’s what we’re particularly good at, but we’re hardly the only ones doing this — as we enter the age of “big data” more and more industries are applying machine learning techniques to drive person-level decision-making. This comes with exciting opportunities, but it also introduces an ethical dilemma: when machine learning models make decisions that affect people’s lives, how can you be sure those decisions are fair?

Defining “Fairness”

A central challenge in trying to build fair models is quantifying some notion of ‘fairness’. In the US there is a legal precedent which establishes one particular definition. However, this is also an area of active research. In fact, a substantial portion of the academic literature is focused on proposing new fairness metrics and proving that they display various desirable mathematical properties. This proliferation of metrics reflects the multifaceted nature of ‘fairness’: no single formula can capture all of its nuances. This is good news for the academics — more nuance means more papers to publish — but for practitioners, the multitude of options can be overwhelming. To address this my colleagues and I focused on three questions that helped guide our thinking around the tradeoffs between different definitions of fairness.
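The post does not name the precedent. A widely cited US standard is the “four-fifths rule” in the Uniform Guidelines on Employee Selection Procedures used by the EEOC. Under that rule, a group’s selection rate below 80% of the highest group’s rate is generally regarded as evidence of adverse impact. Assuming that is the kind of definition meant here, a minimal check could look like the sketch below. The groups and decision counts are hypothetical.

```python
def selection_rates(outcomes):
    """outcomes maps group -> list of 0/1 decisions (1 = selected)."""
    return {g: sum(d) / len(d) for g, d in outcomes.items()}

def four_fifths_check(outcomes, threshold=0.8):
    """For each group, return (impact ratio vs. the highest-rate group,
    True if the ratio falls below `threshold`, i.e. possible adverse impact).
    Assumes at least one group has a non-zero selection rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    return {g: (r / best, r / best < threshold) for g, r in rates.items()}

# Hypothetical decisions: group A selected 50 of 100, group B 30 of 100.
decisions = {"A": [1] * 50 + [0] * 50, "B": [1] * 30 + [0] * 70}
for group, (ratio, flagged) in four_fifths_check(decisions).items():
    print(group, round(ratio, 2), "FLAG" if flagged else "ok")
```

Group B’s rate (30%) is 60% of group A’s (50%), so it is flagged. This is one group-level definition among the many the literature proposes.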

Group vs. Individual Fairness

For a given modeling task, do you care more about group-level fairness or individual-level fairness? Group fairness is the requirement that different groups of people should be treated the same on average. Individual fairness is the requirement that individuals who are similar should be treated similarly. These are both desirable, but in practice, it’s usually not possible to optimize both at the same time. In fact, in most cases, it’s mathematically impossible. The debate around affirmative action in college admissions illustrates the conflict between individual and group fairness: group fairness stipulates that admission rates be equal across groups (for example, gender or race) while individual fairness requires that each applicant be evaluated independently of any broader context.
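A toy example makes the tension concrete. The sketch measures group fairness as the gap in admission rates between groups (demographic parity). It measures individual fairness as the number of near-identical applicants who receive different decisions. The applicants, scores, the tolerance `eps` and both admission policies are hypothetical.

```python
from itertools import combinations

# Hypothetical applicants: (group, score). Scores are illustrative only.
applicants = [("A", 0.9), ("A", 0.7), ("A", 0.6), ("B", 0.6), ("B", 0.4), ("B", 0.3)]

def parity_gap(admit):
    """Group fairness: |admission rate of group A - admission rate of group B|."""
    rates = {}
    for g in ("A", "B"):
        picks = [admit[i] for i, (grp, _) in enumerate(applicants) if grp == g]
        rates[g] = sum(picks) / len(picks)
    return abs(rates["A"] - rates["B"])

def similar_pairs_treated_differently(admit, eps=0.05):
    """Individual fairness: count pairs whose scores differ by at most eps
    but who received different decisions."""
    return sum(
        1
        for i, j in combinations(range(len(applicants)), 2)
        if abs(applicants[i][1] - applicants[j][1]) <= eps and admit[i] != admit[j]
    )

# Policy 1: one score threshold for everyone.
threshold = [score >= 0.6 for _, score in applicants]
# Policy 2: admit the top two applicants within each group (written out by hand).
quota = [True, True, False, True, True, False]

for name, admit in (("threshold", threshold), ("per-group quota", quota)):
    print(name, round(parity_gap(admit), 2), similar_pairs_treated_differently(admit))
```

The single threshold treats the two applicants who both score 0.6 identically but leaves a large gap in admission rates. The per-group quota closes the gap, but it admits one of those two applicants and rejects the other.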

Balanced vs. Imbalanced Ground Truth

Is the ground truth for whatever you are trying to model balanced between different groups? Many intuitive definitions of fairness assume that ground truth is balanced, but in real-world scenarios, this is not always the case. For example, suppose you are building a model to predict the occurrence of breast cancer. Men and women have breast cancer at dramatically different rates, so a definition of fairness that required similar predictions between different groups would be ill-suited to this problem. Unfortunately, determining the balance of ground truth is generally hard because in most cases our only information about ground truth comes from datasets which may contain bias.
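To see why parity-style definitions break down when ground truth is imbalanced, consider a hypothetical classifier that is perfectly accurate. The counts below are made up for illustration and are not real incidence figures. Demographic parity (equal positive-prediction rates) would flag this perfect model as unfair. A criterion that conditions on the true label, such as equal true-positive rates, does not.

```python
# Hypothetical labels with deliberately unequal base rates between groups.
labels = {"F": [1] * 12 + [0] * 88, "M": [1] * 1 + [0] * 99}
# A hypothetical perfect classifier: its predictions equal the true labels.
preds = {g: list(y) for g, y in labels.items()}

def positive_rate(p):
    """Share of individuals predicted positive (what demographic parity compares)."""
    return sum(p) / len(p)

def true_positive_rate(y, p):
    """Share of actual positives predicted positive (what equal opportunity compares)."""
    hits = sum(1 for yi, pi in zip(y, p) if yi == 1 and pi == 1)
    return hits / sum(y)

for g in labels:
    print(g, positive_rate(preds[g]), true_positive_rate(labels[g], preds[g]))
```

The positive-prediction rates differ (0.12 vs 0.01) only because the base rates differ. The true-positive rate is 1.0 in both groups.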

Sample Bias vs. Label Bias in your Data

Speaking of which, what types of bias might be present in your data? In our thinking, we focused on two types of bias that affect data generation: label bias and sample bias. Label bias occurs when the data-generating process systematically assigns labels differently for different groups.

For example, studies have shown that non-white kindergarten children are suspended at higher rates than white children for the same problem behavior, so a dataset of kindergarten disciplinary records might contain label bias. Accuracy is often a component of fairness definitions. However, optimizing for accuracy when data labels are biased can perpetuate biased decision making.
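A small deterministic simulation shows how optimizing for accuracy can bake label bias in. All numbers are hypothetical. Both groups have the same true rate of the behaviour, but the recording process also labels extra members of group B as positive. A model that reproduces the recorded labels perfectly scores 100% accuracy for both groups. Measured against the true behaviour, though, it wrongly flags about 11% of group B’s negatives and none of group A’s.

```python
# Hypothetical records: same true behaviour rate in both groups (10 of 100),
# but the labelling process also marks 10 extra group-B children as positive.
true_behaviour = {"A": [1] * 10 + [0] * 90, "B": [1] * 10 + [0] * 90}
recorded_label = {"A": [1] * 10 + [0] * 90, "B": [1] * 20 + [0] * 80}

# A model that has learned to reproduce the recorded labels exactly.
predictions = {g: list(y) for g, y in recorded_label.items()}

def accuracy(y, p):
    return sum(yi == pi for yi, pi in zip(y, p)) / len(y)

def false_positive_rate(y, p):
    """Share of true negatives that the model predicts as positive."""
    negatives = [pi for yi, pi in zip(y, p) if yi == 0]
    return sum(negatives) / len(negatives)

for g in ("A", "B"):
    print(
        g,
        accuracy(recorded_label[g], predictions[g]),                      # vs. biased labels
        round(false_positive_rate(true_behaviour[g], predictions[g]), 3),  # vs. the truth
    )
```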

Sample bias occurs when the data-generating process samples from different groups in different ways. For example, an analysis of New York City’s stop-and-frisk policy found that Black and Hispanic people were stopped by police at rates disproportionate to their share of the population (while controlling for geographic variation and estimated levels of crime participation). A dataset describing these stops would contain sample bias because the process by which data points are sampled is different for people of different races. Sample bias compromises the utility of accuracy as well as ratio-based comparisons, both of which are frequently used in definitions of algorithmic fairness.
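Sample bias can be illustrated with hypothetical numbers in the spirit of the stop-and-frisk example. These numbers are not taken from that analysis. Both groups have the same true rate of some outcome, but group B is sampled three times as often. If sampling is independent of the outcome, the within-sample rate is still correct. However, raw counts and any ratio built from them overstate group B threefold, and group B supplies three quarters of the data that an accuracy figure would be averaged over.

```python
# Hypothetical populations with the SAME true outcome rate (5%),
# but group B is sampled (e.g. stopped) three times as often as group A.
population = {"A": 10_000, "B": 10_000}
true_rate = {"A": 0.05, "B": 0.05}
sampling_rate = {"A": 0.10, "B": 0.30}

sampled = {g: population[g] * sampling_rate[g] for g in population}
positives_in_data = {g: sampled[g] * true_rate[g] for g in population}

# The within-sample rate is unchanged, because sampling here ignores the outcome...
print({g: positives_in_data[g] / sampled[g] for g in population})
# ...but counts, and ratios built from them, are distorted threefold,
print(positives_in_data["B"] / positives_in_data["A"])
# and group B dominates the dataset any accuracy figure is computed on.
print(sampled["B"] / (sampled["A"] + sampled["B"]))
```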

Recommendations for Data Scientists

Based on our reading and learnings from our daily work, my colleagues and I came up with three recommendations for our coworkers:

Think about the ground truth you are trying to model

Whether or not ground truth is balanced between the different groups in your dataset is a central question. However, there is often no way to know for sure one way or the other. Absent any external source of certainty it is up to the person building the model to establish a prior belief about an acceptable level of imbalance between the model’s predictions for different groups.

Think about the process that generated your data

To paraphrase a famous line, “All datasets are biased, some are useful.” It is usually impossible to know exactly what biases are present in a dataset, so the next best option is to think carefully about the process that generated the data. Is the dependent variable the result of a subjective decision? Could the sampling depend on some sensitive attribute? Failing to consider the bias in your datasets can, at best, lead to poorly performing models and, at worst, perpetuate a biased decision-making process.

Keep a human in the loop if your model affects people’s lives

There is an understandable temptation to assume that machine learning models are inherently fair because they make decisions based on math instead of messy human judgments. This is emphatically not the case — a model trained on biased data will produce biased results. It is up to the people building the model to ensure that its outputs are fair. Unfortunately, there is no silver bullet measurement which is guaranteed to detect unfairness: choosing an appropriate definition of model fairness is task-specific and requires human judgment. This human intervention is especially important when a model can meaningfully affect people’s lives.

Machine learning is a powerful tool, and like any powerful tool, it has the potential to be misused. The best defense against misuse is to keep a human in the loop, and it is incumbent on those of us who do this kind of thing for a living to accept that responsibility.

Written by Henry Hinnefeld, Data Scientist @ Civis Analytics

MMS • RSS

Article originally posted on Data Science Central. Visit Data Science Central

Summary: Automated Machine Learning has only been around for a little over two years and already there are over 20 providers in this space. However, Tazi, a European AML platform that is new to the US, is showing what the next generation of AML will look like.

I’ve been a follower and a fan of Automated Machine Learning (AML) since it first appeared in the market about two years ago. I wrote an article on all five of the market entrants I could find at the time under the somewhat scary title ‘Data Scientists Automated and Unemployed by 2025!’.

As time passed I tried to keep up with the new entrants. A year later I wrote a series of update articles (links at the end of this article) that identified 7 competitors and 3 open source code packages. It was growing.

I’ve continued to try to keep track and I’m sure the count is now over 20 including at least three new entrants I just discovered at the March Strata Data conference in San Jose. I’m hoping that Gartner will organize all of this into a separate category soon.

Now that there are so many claimants, I want to point out that the ones I think really earn this title are those that come closest to the original description of one-click-data-in-model-out. A whole host of providers offer semi-AML, with a number of simplified steps, but these are not truly one-click in simplicity and completeness.

What’s the Value?

Originally the claim was that these platforms might completely replace data scientists. The marketing pitch was that the new Citizen Data Scientists (CDS), composed mostly of motivated but untrained LOB managers and business analysts, could simply step in and produce production-quality models. Those of us on this side of the wizard’s curtain understood that was never going to be the case.

There is real value here though, and it’s in efficiency and effectiveness: allowing fewer of these rare and expensive data scientists to do, in a fraction of the time, the work that used to require many. Early adopters with real data science departments, like Farmers Insurance, are using it in exactly this way.

The Minimum for Entry

To be part of this club the minimum functionality is the ability to automatically run many different algorithms in parallel, auto tune hyperparameters, and select and present a champion model for implementation.
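The minimum functionality described above can be sketched in a few dozen lines of plain Python: try several algorithms, tune each one’s hyperparameters, and keep the best. Everything here is hypothetical and deliberately tiny. There are three toy model families with small grids, and a single hold-out split stands in for proper cross-validation. A thread pool illustrates submitting candidates concurrently. CPython threads mostly interleave pure-Python work rather than truly parallelize it, so real platforms would distribute the work across processes or machines.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product

# Hypothetical 1-D toy data: (feature, label).
TRAIN = [(0.1, 0), (0.2, 0), (0.3, 1), (0.4, 0), (0.55, 1),
         (0.6, 1), (0.7, 0), (0.8, 1), (0.9, 1)]
VALID = [(0.12, 0), (0.38, 0), (0.52, 1), (0.62, 1), (0.72, 1)]

def fit_majority(train):
    """Baseline: always predict the most frequent training label."""
    label = int(sum(y for _, y in train) * 2 > len(train))
    return lambda x: label

def fit_stump(train, threshold):
    """Threshold rule; here the threshold itself is the tuned hyperparameter."""
    return lambda x: int(x >= threshold)

def fit_knn(train, k):
    """k-nearest neighbours with a majority vote."""
    def predict(x):
        nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
        return int(sum(y for _, y in nearest) * 2 > k)
    return predict

# Candidate algorithms and their (tiny) hyperparameter grids.
SEARCH_SPACE = {
    "majority": (fit_majority, {}),
    "stump": (fit_stump, {"threshold": [0.3, 0.5, 0.7]}),
    "knn": (fit_knn, {"k": [1, 3]}),
}

def configs():
    """Yield every (algorithm, fit function, hyperparameter dict) combination."""
    for name, (fit, grid) in SEARCH_SPACE.items():
        keys = list(grid)
        for values in product(*(grid[k] for k in keys)):
            yield name, fit, dict(zip(keys, values))

def evaluate(config):
    """Fit one configuration and return its hold-out accuracy."""
    name, fit, params = config
    model = fit(TRAIN, **params)
    acc = sum(model(x) == y for x, y in VALID) / len(VALID)
    return acc, name, params

with ThreadPoolExecutor() as pool:  # submit every configuration concurrently
    results = list(pool.map(evaluate, configs()))

champion = max(results, key=lambda r: r[0])
print("champion:", champion)  # champion: (1.0, 'stump', {'threshold': 0.5})
```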

This also means requiring as close to one-click model building as you can get. While there might be expert modes, the fully automated mode should benchmark well against the fully manual efforts of a data science team.

For most entrants, this has meant presenting the AML platform with a fully scrubbed flat analytic file with all the data prep and feature engineering already completed. DataRobot (perhaps the best known, or at least the most successfully promoted), MLJAR, and PurePredictive are in this group. Some have added front ends with the ability to extract, blend, clean, and even feature engineer. Xpanse Analytics and TIMi are among the few with this feature.

New Next Generation Automated Machine Learning

At Strata San Jose in March I encountered a new entrant, Tazi.ai, which, after a long demonstration and a conversation with its data scientist founders, really appears to have broken through into the next generation of AML.

Ordinarily DataScienceCentral does not publish articles featuring just a single product or company, so please understand that this is not an endorsement. However, I was so struck by the combination and integration of features that I think Tazi is the first in what will become the new paradigm for AML platforms.

Tazi has been in the European market for about two years, recently received a nice round of funding, and has opened offices in San Francisco.

What’s different about Tazi’s approach is not any particularly unique new feature but rather the way they have fully integrated the best concepts from all around our profession into the Swiss Army knife of AML. The value of the Swiss Army knife, after all, is not just that it has a blade, can opener, corkscrew, screwdriver, etc. The breakthrough is that all these features fit together so well in one place, so we don’t have to carry around all the pieces.

Here’s a high level explanation of the pieces and the way Tazi has integrated them so nicely.

Core Automated Algorithms and Tuning

Tazi of course has the one-click central core that runs multiple algorithms in parallel and presents the champion model in a nice UI. Hyperparameter tuning is fully automated.

The platform will take structured and unstructured data including text, numbers, time series, networked data, and features extracted from video.

As you might expect, this means the candidate algorithms range from simple GLMs and decision trees to much more advanced boosting and ensemble types and DNNs. It also means that some of these will run efficiently on CPUs, while others will only realistically be possible on GPUs.

As data volume and features scale up, Tazi can take advantage of IBM and NVIDIA’s Power8, GPU and NVLink-based architecture, which allows super-fast communication between CPUs and GPUs working together in very efficient MPP (massively parallel processing).

Problem Types

Tazi is designed to cover the spectrum of business problem types divided into three broad categories:

- Typical consumer propensity classification problems like churn, non-performing loan prediction, collection risk, next best offer, cross sell, and other common classification tasks.

- Time series regression value predictions such as profitability, demand, or price optimization.

- Anomaly detection including fraud, predictive maintenance, and other IoT-type problems.

Streaming Data and Continuous Learning

The core design is based on streaming data making the platform easy to use for IoT type problems. Don’t need streaming capability? No problem. It accepts batch as well.

However, one of the most interesting features of Tazi is that its developers have turned the streaming feature into Continuous Learning. If it’s easier, think of this as Continuous Updating. Using atomic level streaming data (as opposed to mini-batch or sliding window), each new data item received is immediately processed as part of a new data set to continuously update the models.
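Continuous learning on atomic-level streams amounts to online learning: the model is updated once per arriving record rather than retrained on batches. The sketch below uses plain logistic regression trained by stochastic gradient descent, one example at a time. It is a generic illustration, not Tazi’s algorithm. The stream, learning rate and feature layout are hypothetical.

```python
import math

class OnlineLogisticRegression:
    """Logistic regression updated by one SGD step per arriving example."""

    def __init__(self, n_features, learning_rate=0.5):
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = learning_rate

    def predict_proba(self, x):
        z = self.b + sum(wi * xi for wi, xi in zip(self.w, x))
        return 1.0 / (1.0 + math.exp(-z))

    def learn_one(self, x, y):
        # Gradient of the log-loss for a single example is (p - y) * x.
        error = self.predict_proba(x) - y
        self.w = [wi - self.lr * error * xi for wi, xi in zip(self.w, x)]
        self.b -= self.lr * error

# Hypothetical stream: the label is 1 when the first feature is large.
stream = [([1.0, 0.2], 1), ([0.1, 0.9], 0), ([0.9, 0.1], 1), ([0.2, 0.8], 0)] * 50

model = OnlineLogisticRegression(n_features=2)
for x, y in stream:          # each record updates the model as soon as it arrives
    model.learn_one(x, y)

print(round(model.predict_proba([1.0, 0.1]), 2), round(model.predict_proba([0.1, 1.0]), 2))
```

There is no separate retraining job: the same `learn_one` call serves whether records arrive once a second from sensors or once a day from a B2B order system.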

If you are thinking of fraud, preventive maintenance, or medical monitoring, you have an image of very high data throughput from many different sources or sensors, which Tazi is equipped to handle. But even in relatively slow-moving B2B or brick-and-mortar retail, continuous updating can mean that every time a customer order is received, a new customer registers, or a new return is processed, those less frequent events will be fed immediately into the model update process.

If the data drifts sufficiently, this may mean that an entirely new algorithm will become the champion model. More likely, with gradual drift, the parameters and variable weightings will change indicating an update is necessary.
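Champion switching under drift can be sketched by scoring each candidate on every arriving record and tracking an exponentially weighted error rate, so recent mistakes count more than old ones. The two rule-based candidates, the stream with an abrupt flip halfway through, and the smoothing factor `ALPHA` are all hypothetical. A platform like Tazi would also refit parameters as data arrives, which this sketch omits.

```python
# Two hypothetical candidate models for a 1-D stream.
candidates = {
    "rule_pos": lambda x: int(x > 0),   # matches the concept before the drift
    "rule_neg": lambda x: int(x < 0),   # matches the concept after the drift
}

def stream():
    """Hypothetical stream whose x -> y relationship flips after 100 records."""
    xs = [(-1) ** i * (1 + i % 3) for i in range(200)]
    for i, x in enumerate(xs):
        y = int(x > 0) if i < 100 else int(x < 0)
        yield x, y

ALPHA = 0.05  # weight given to the newest observation
error = {name: 0.0 for name in candidates}
champions = []
for x, y in stream():
    # Score every candidate on the new record, then update its running error.
    for name, model in candidates.items():
        miss = float(model(x) != y)
        error[name] = (1 - ALPHA) * error[name] + ALPHA * miss
    champions.append(min(error, key=error.get))

print(champions[99], champions[-1], champions.index("rule_neg"))
```

With `ALPHA = 0.05` the champion changes 14 records after the flip. A larger `ALPHA` reacts faster but is more easily fooled by noise.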

Feature Engineering and Feature Selection

Like any good streaming platform, Tazi directly receives and blends source data. It also performs the cleaning and prep, and performs feature engineering and feature selection.

For the most part, all the platforms that offer automated feature engineering execute by taking virtually all the date differences, ratios between values, time trends, and all the other statistical methods you might imagine to create a huge inventory of potential engineered variables. Almost all of these, which can measure in the thousands, will be worthless.

So the real test is to produce these quickly and also to run selection algos on them quickly to focus in on those that are actually important. Remember this is happening in a real time streaming environment where each new data item means a model update.
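Here is a miniature version of the brute-force approach. It generates every pairwise difference and ratio from the raw columns, then keeps only the few candidates most correlated with the target. The columns and data are hypothetical. Absolute Pearson correlation is just one simple filter-style selection criterion, not necessarily what any AML vendor uses. Even with three raw columns the candidate pool quadruples, which is why fast selection matters.

```python
from itertools import combinations
import math

# Hypothetical raw columns for six customers.
raw = {
    "spend":  [100, 200, 150, 400, 300, 250],
    "visits": [10, 10, 30, 20, 60, 25],
    "tenure": [1, 5, 3, 8, 2, 6],
}
# Toy target deliberately built from a ratio, so we know what should be found.
target = [s / v for s, v in zip(raw["spend"], raw["visits"])]

def generate_features(cols):
    """Brute-force engineering: keep raw columns, add every pairwise difference and ratio."""
    feats = dict(cols)
    for a, b in combinations(cols, 2):
        feats[f"{a}-{b}"] = [x - y for x, y in zip(cols[a], cols[b])]
        feats[f"{a}/{b}"] = [x / y for x, y in zip(cols[a], cols[b])]
        feats[f"{b}/{a}"] = [y / x for x, y in zip(cols[a], cols[b])]
    return feats

def pearson(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy) if sx and sy else 0.0

def select_top(feats, y, k=3):
    """Filter-style selection: keep the k features most correlated (in absolute value) with y."""
    ranked = sorted(feats, key=lambda name: abs(pearson(feats[name], y)), reverse=True)
    return ranked[:k]

features = generate_features(raw)
print(len(features), select_top(features, target))
```

The ratio `spend/visits` is recovered here only because the toy target was built from it. On real data the useful features would not be known in advance.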

Automated feature engineering may never be perfect. The domain knowledge of SMEs will likely always add some valuable new predictive variable. Tazi says, however, that its automated approach has many times identified engineered variables that LOB SMEs had not previously considered.

Interpretability and the User Presentation Layer

Many of the early adopters of AML are in regulated industries, where the number of models is very large and the requirement for interpretability forces a tradeoff between accuracy and explainability.

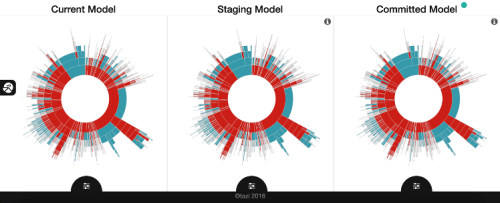

Here, Tazi has cleverly borrowed a page from the manual procedures many insurance companies and lenders have been using.

Given that the most accurate model may be the product of a non-explainable procedure like DNNs, boosting, or large ensembles, how can most of this accuracy be retained in a vastly simplified explainable decision tree or GLM model?

The manual procedure in use for some time in regulated industries is to take the scoring from the complex more accurate model, along with its enhanced understanding of and engineering of variables, and use this to train an explainable simplified model. Some of the accuracy will be lost but most can be retained.
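This manual procedure is often called building a global surrogate model, or model distillation. You label the data with the complex model’s predictions, fit a simple model to those labels, and measure its fidelity (agreement with the complex model). The minimal sketch below uses a hypothetical logistic “black box” and hypothetical applicant data. It fits the simplest possible explainable model, a one-split decision stump.

```python
import math

def black_box_score(income, debt):
    """Hypothetical complex model we want to explain (income and debt in thousands)."""
    return 1 / (1 + math.exp(-(0.08 * income - 0.15 * debt - 2.1)))

# Hypothetical applicants and the black box's decisions on them ("teacher" labels).
applicants = [(i, d) for i in range(20, 101, 10) for d in range(0, 41, 10)]
teacher = [int(black_box_score(i, d) >= 0.5) for i, d in applicants]

def fit_stump(rows, labels):
    """Find the single split (feature, threshold, direction) that best mimics the labels.
    Returns (fidelity, feature index, threshold, prediction when value >= threshold)."""
    best = None
    for f in range(2):
        for t in sorted({r[f] for r in rows}):
            for above in (0, 1):
                preds = [above if r[f] >= t else 1 - above for r in rows]
                agree = sum(p == y for p, y in zip(preds, labels)) / len(labels)
                if best is None or agree > best[0]:
                    best = (agree, f, t, above)
    return best

fidelity, feature, threshold, above = fit_stump(applicants, teacher)
name = ("income", "debt")[feature]
print(f"IF {name} >= {threshold} THEN {above} ELSE {1 - above}  (fidelity {fidelity:.0%})")
```

Here a single split agrees with the black box on about 78% of applicants. A slightly deeper tree or a GLM would typically recover more of the complex model’s behaviour, at some cost in simplicity.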

Tazi takes this a step further. Understanding that the company’s data science team is one thing but users are another, they have created a Citizen Data Scientist / LOB Manager / Analyst presentation layer containing the simplified model which these users can explore and actually manipulate.

Tazi elects to visualize this mostly as sunburst diagrams that non-data scientists can quickly learn to understand and explore.

Finally, the sunburst of the existing production model is displayed next to the newly created proposed model. LOB users are allowed to actually manipulate and change the leaves and nodes to make further intentional tradeoffs between accuracy and explainability.

Perhaps most importantly, LOB users can explore the importance of variables and become comfortable with any new logic. They also can be enabled to directly approve and move a revised model into production if that’s appropriate.

This separation of the data science layer from the explainable Citizen Data Scientist layer is one of Tazi’s most innovative contributions.

Implementation by API or Code

Production scoring can occur via API within the Tazi platform, or the model can be output as Scala code. This is particularly important in regulated industries that must be able to roll back their systems to see how scoring was actually done on some historical date in question.
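The rollback requirement is easiest to meet if every exported model version is kept together with the date it went live. The scoring logic in force on any historical date can then be retrieved and re-run. A minimal sketch of such a registry follows. The class, its methods and the toy scoring rules are hypothetical and are not part of Tazi’s product.

```python
from bisect import bisect_right
from datetime import date

class ModelRegistry:
    """Hypothetical registry: keeps every deployed model version with its go-live date,
    so the scoring logic in force on any past date can be looked up and re-run."""

    def __init__(self):
        self._dates = []
        self._models = []

    def deploy(self, go_live, version, model):
        if self._dates and go_live <= self._dates[-1]:
            raise ValueError("deployments must be recorded in date order")
        self._dates.append(go_live)
        self._models.append((version, model))

    def as_of(self, day):
        """Return the (version, model) pair that was live on `day`."""
        i = bisect_right(self._dates, day) - 1
        if i < 0:
            raise LookupError(f"no model was live on {day}")
        return self._models[i]

registry = ModelRegistry()
registry.deploy(date(2017, 1, 1), "v1", lambda income: income > 50)
registry.deploy(date(2017, 9, 1), "v2", lambda income: income > 40)

version, model = registry.as_of(date(2017, 6, 15))
print(version, model(45))  # v1 False: reproduces how this applicant was scored back then
```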

Active, Human-in-the-Loop Learning

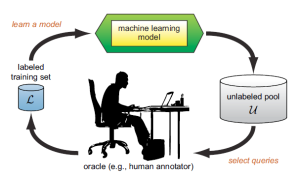

Review and recoding of specific scored instances by human workers, also known as active learning, is a well-acknowledged procedure. CrowdFlower, for example, has built an entire service business around this, mostly in image and language understanding.

However, the other domain where this is extremely valuable is in anomaly detection and the correct classification of very rare events.

Tazi has an active learning module built directly into the platform, so that specified questionable instances can be reviewed by a human and the corrected scoring fed back into the training set to improve model performance. This eliminates the need to extract these items for review and move them to a separate platform like CrowdFlower.
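A common way to decide which scored instances go to a human is uncertainty sampling. The cases the model is least sure about (probability nearest 0.5) are routed for review, and the reviewed labels are added to the training set before the next retrain. This is a generic sketch, not Tazi’s module. The transaction ids, scores and reviewer labels are hypothetical.

```python
def uncertainty_sample(scored, k):
    """Pick the k instances whose predicted probability is closest to 0.5."""
    return sorted(scored, key=lambda item: abs(item[1] - 0.5))[:k]

# Hypothetical model scores: (instance id, predicted fraud probability).
scored = [("t1", 0.02), ("t2", 0.48), ("t3", 0.97), ("t4", 0.55), ("t5", 0.30), ("t6", 0.91)]

# Hypothetical reviewer decisions; in practice an analyst would supply these.
human_labels = {"t2": 1, "t4": 0}

training_set = [("t1", 0), ("t3", 1)]          # previously labelled data
for instance_id, _ in uncertainty_sample(scored, k=2):
    label = human_labels[instance_id]          # ask the human in the loop
    training_set.append((instance_id, label))  # feed the correction back for retraining

print(training_set)  # [('t1', 0), ('t3', 1), ('t2', 1), ('t4', 0)]
```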

This semi-supervised learning is a well acknowledged procedure in improving anomaly detection.

Like the Swiss Army Knife – Nicely Integrated

There is much to like here in how all these disparate elements have been brought together and integrated. It doesn’t necessarily take a breakthrough in algorithms or data handling to create a new generation. Sometimes it’s just a well-thought-out range of features and capabilities, even if there’s no corkscrew.

Previous articles on Automated Machine Learning

More on Fully Automated Machine Learning (August 2017)

Automated Machine Learning for Professionals (July 2017)

Data Scientists Automated and Unemployed by 2025 – Update! (July 2017)

Data Scientists Automated and Unemployed by 2025! (April 2016)

About the author: Bill Vorhies is Editorial Director for Data Science Central and has practiced as a data scientist since 2001. He can be reached at: